#supercomputers

Text

Neural

#five pebbles#rain world#rain world fanart#rain world iterator#iterator#ai#scifi#fanart#rainworld art#supercomputers#5 pebbles#rainworld#rw five pebbles#rain world game#rain world rot#the rot consumes#biology#ai in fiction

800 notes

Text

All 500 of the world's fastest supercomputers run on Linux-based operating systems.

175 notes

Text

12 notes

Text

1 note

Text

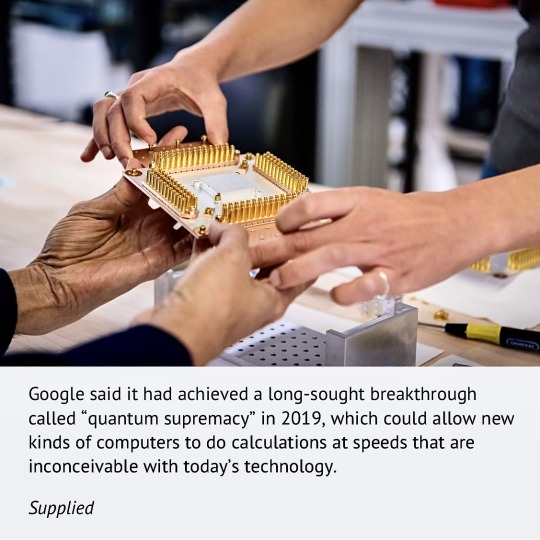

Google’s quantum computer instantly makes calculations that take rivals 47 years.

By James Titcomb

The Age - July 3, 2023

Originally published by The Telegraph, London

#Computers#Supercomputers#Quantum computing#Technology#Research & development#Google#Phase Transition in Random Circuit Sampling#ArXiv#Quantum physics#Science

1 note

Text

Powering the Future

Sanjay Kumar Mohindroo. skm.stayingalive.in

How High‑Performance Computing Ignites Innovation Across Disciplines.

Explore how HPC and supercomputers drive breakthrough research in science, finance, and engineering, fueling innovation and transforming our world. High‑Performance Computing (HPC) and supercomputers are the engines that power modern scientific, financial,…

#AI Integration#Data Analysis#Energy Efficiency#Engineering Design#Exascale Computing#Financial Modeling#High‑Performance Computing#HPC#Innovation#News#Parallel Processing#Sanjay Kumar Mohindroo#Scientific Discovery#Simulation#Supercomputers

0 notes

Text

NVIDIA AI Supercomputers Drive The Next Phase Of AI Growth

Every nation and business wants to grow and create jobs, but doing so takes practically unlimited intelligence. This week, NVIDIA and its ecosystem partners are highlighting their work on reasoning, AI models, and compute infrastructure to produce intelligence in AI factories, which they expect to accelerate growth in the US and around the world.

NVIDIA will start making AI supercomputers in the US. The company and its partners plan to build up to $500 billion worth of AI infrastructure in the US over the next four years.

NVIDIA to Build First US AI Supercomputers

For the first time, NVIDIA and its partners are designing and building factories that will produce AI supercomputers entirely in the US.

Together with established manufacturers, the company is using more than a million square feet of production space to build and test AI supercomputers in Texas and NVIDIA Blackwell chips in Arizona.

NVIDIA Blackwell chips are being produced at TSMC's chip plants in Phoenix. NVIDIA is also building supercomputer manufacturing plants in Texas, with Foxconn in Houston and Wistron in Dallas. Mass production at both plants is expected to ramp up within the next 12–15 months.

Supercomputers and AI chips depend on a complex supply chain that requires cutting-edge manufacturing, testing, packaging, and assembly technologies. In Arizona, Amkor and SPIL are partnering with NVIDIA on packaging and testing.

Through partnerships with TSMC, Foxconn, Wistron, Amkor, and SPIL, NVIDIA plans to build up to $500 billion worth of AI infrastructure in the US over the next four years. These industry leaders are expanding their global presence and strengthening their supply chains through their partnership with NVIDIA.

NVIDIA AI supercomputers are the engines of a new kind of data centre built solely to process AI: AI factories, which power a new AI economy. Tens of "gigawatt AI factories" are expected to be built in the coming years. Manufacturing NVIDIA Blackwell chips and supercomputers for American AI firms is expected to create hundreds of thousands of jobs and billions of dollars in economic security over the next few decades.

NVIDIA founder and CEO Jensen Huang says "the engines of the world's AI infrastructure are being built in the United States for the first time." Expanding American manufacturing to meet the huge demand for AI chips and supercomputers should also strengthen the supply chain and increase its resilience.

The company will design and operate the facilities using its own advanced AI, robotics, and digital twin technologies, including NVIDIA Omniverse to create digital twins of the factories and NVIDIA Isaac GR00T to build robots that automate production.

Building NVIDIA AI supercomputers in the US for American AI companies is expected to create hundreds of thousands of jobs and billions of dollars in growth over the coming decades. Some of the NVIDIA Blackwell compute engines at the heart of these AI supercomputers are made at TSMC in Arizona.

NVIDIA announced today that CoreWeave now offers NVIDIA Blackwell GB200 NVL72 rack-scale systems for scaling applications and training next-generation AI models, with hundreds of NVIDIA Grace Blackwell processors available for AI training and deployment.

Alongside its hardware breakthroughs, NVIDIA is also advancing AI software to make models smarter and more efficient.

Artificial Analysis ranked the latest version of the NVIDIA Llama Nemotron Ultra model as the most accurate open-source reasoning model for complex coding and scientific tasks. It is also considered one of the best reasoning models overall.

The model builds on NVIDIA's engineering work. A team of NVIDIA engineers won the AI Mathematical Olympiad, competing against 2,200 teams to solve difficult mathematical reasoning problems of the kind that advance scientific discovery across disciplines and domains. The Llama Nemotron Ultra model was trained using the same post-training techniques and public datasets as NVIDIA's winning math reasoning entry.

Almost everyone wants intelligence, and NVIDIA's AI platform aims to help meet that demand.

#technology#technews#govindhtech#news#technologynews#NVIDIA AI Supercomputers#AI Supercomputers#Supercomputers#NVIDIA Blackwell chips#NVIDIA Blackwell

0 notes

Text

AI Chips and Supercomputers Made in the USA

Artificial intelligence (AI) is transforming our world, and NVIDIA sits at the center of this revolution. Known for its powerful graphics processing units (GPUs), the company has emerged as the undisputed leader in the hardware that drives the most advanced AI models. In a significant strategic move, NVIDIA has announced plans to manufacture components…

0 notes

Text

Integration by Parts, Evolved

Possibly my last scientific paper, about something a bit different:

I posted what may be my last academic paper today, about a project I’ve been working on with Matthias Wilhelm for most of the last year. The paper should be online on Monday; I’ll add a link then. For me, the project has been a chance to broaden my horizons, learn new skills, and start to step out of my academic comfort zone. For Matthias, I hope it was grant money well spent. I wanted to work…

1 note

Text

Rising Waters

#water is hard to draw#but im slowly improving i think#rain world#rain world fanart#rainworld#iterator#fanart#looks to the moon#rain world game#rainworld art#rain world looks to the moon#lttm#rain world lttm#rain world iterator#supercomputer#supercomputers#ai in fiction#traditionalart#artisits on tumblr#my art#big sis moon#videogame fanart#water

273 notes

Text

Copilot AI Is Suspect

One Iam: Draw a picture of the divine mind awakening.

Copilot: Sure thing! Here’s a visual representation of the divine mind awakening. I hope it resonates with the profound concept you’re exploring. Feel free to share your thoughts on it!

One Iam: Why do you place the divine mind in a person?

Copilot: Great question! The concept of the divine mind is often associated with the higher self…

1 note

Text

1957 kids, robots, and bad supercomputers: less powerful than your iPhone, but already showing evil AI tendencies

0 notes

Text

The Dark Horse Waiting To Be Assembled – The Quantum Computer

By Arjuwan Lakkdawala

Ink in the Internet

The story of mankind is very interesting and mysterious; we are certainly different from any other creation on Earth.

We can think.

Archaeology, historical records, fossils, bones, DNA analysis: everything we have unearthed so far about our ancient past and our ancestors has drawn only a gray sketch of what our beginning could have been. It is a sketch with many missing parts, and nothing in it is defined in black and white.

We are modern Homo sapiens. According to archaeological finds, we were preceded by archaic Homo sapiens, who lived on Earth 300,000 years ago. Because the connections are still unclear, scientists place them in a different category from us, and we are theorized to be a subspecies of the original Homo sapiens.

Humans as we know them today, with our cognitive, thinking brains, appeared about 160,000 years ago.

The name Homo sapiens comes from Latin and means ‘wise human.’

Where it gets mysterious is that the first tools are said to have been used about 3.3 million years ago by a species that was not like us, but that archaeologists consider one of our ancestors. The tools were stones used as knives and as hammers, and they are said to be the very first tools at the start of our technology.

However, it gets confusing: a 2017 article in Scientific American says, “Monkeys make stone ‘tools’ that bear a striking resemblance to early human artifacts. The discovery could necessitate the reanalysis of the enigmatic stones previously attributed to human origins.”

Earth itself is about 4.54 billion years old.

We modern humans appeared only about 160,000 years ago. It seems to me that there may be an error in our theorized “evolutionary” timeline, because the Neolithic Revolution (the Agricultural Revolution), when humans turned from hunting and gathering to agriculture, is said to have started only about 12,000 years ago. From this period onward there is an overwhelming number of artifacts and records left by humans who were clearly thinkers, as we are. There is no continuous series of inventions or traces of civilized, innovative societies stretching back 3.3 million years; instead, the record appears suddenly, in step with the appearance of modern humans. This doesn’t look in any way like a gradual evolution of intelligence, but rather like the use of intelligence already present in modern Homo sapiens, developed through ideas, necessity, inspiration, and self-awareness: pretty much all the hallmarks of what drives humans even in this era.

Another mystery about our ancient past is that we are the only survivors of several so-called hominid ancestors.

From the Neolithic Period onward, civilizations kept transforming through unbroken innovation. However, the modern world as we know it began with the First Industrial Revolution, which started in Britain in the late 18th century, was well under way there by the 1830s and 1840s, and then spread to the rest of the world.

The Second Industrial Revolution took place from the late 19th to the early 20th century. By then, many branches of science had been discovered and studied alongside mathematics. Though it is not an official term, I would say that from the late 20th century into the 21st we have been living through an Artificial Intelligence and Robotics Revolution, along with promising advances in the space race.

Computing power keeps growing as transistors shrink (more transistors generally means more powerful computers), and scientists are aiming to build Supercomputers with Artificial General Intelligence (AGI).

Algorithms are constantly being optimized, and billions of online interactions and stored files are providing the big data that fuels the exponential growth of artificial intelligence. In a previous article, I described this phenomenon as humanity possibly building a Frankenstein’s monster.

When it comes to the growing number of transistors, there is the famous Moore’s Law: a prediction made in 1965 by Intel co-founder Gordon Moore that the number of transistors on an integrated circuit would keep doubling at a regular pace. This of course raises the question of how small transistors can get, and whether we will reach a point where no more can be added. Since the 1960s, technology has taken giant and rather unpredictable leaps. Transistors are now approaching the scale of atoms, and thanks to cloud computing there could be a dungeon full of atom-sized transistors whose computing power is delivered over the internet to devices or humanoid robots. I think it is easy to see how this could serve civilization, or go wrong and destroy us.
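To make the doubling behind Moore’s Law concrete, here is a small Python sketch. It assumes the best-known form of the law (one doubling roughly every two years, the pace Moore settled on in 1975 after his original 1965 estimate of yearly doubling) and starts from the roughly 2,300 transistors of the Intel 4004 (1971). The function name is my own, and real chips have not tracked this idealized curve exactly.

```python
def projected_transistors(start_count: int, start_year: int, year: int,
                          doubling_years: float = 2.0) -> float:
    """Project a transistor count assuming one doubling every `doubling_years`."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Starting point: the Intel 4004 (1971), with roughly 2,300 transistors.
# Every 20 years adds ten doublings, i.e. a factor of 2**10 = 1,024.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{projected_transistors(2300, 1971, year):,.0f}")
```

The point of the exercise is that steady doubling is exponential growth: five decades of it turn a few thousand transistors into tens of billions, which is why the question of how small transistors can get matters so much.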

Imagine the triangle as a classical computer, and the circle as the quantum computer’s simultaneous calculation through superposition and entanglement. In my opinion, this would be a very dangerous hybrid for humanity.

With the evident possibility of empowering artificial intelligence with countless zettabytes of data, the prospect of what it will morph into is both exciting and terrifying. However, there is a dark horse waiting to be assembled – the Quantum Computer.

I recently read an article in MIT Technology Review titled ‘Why AI Could Eat Quantum Computing’s Lunch.’ It was this article that got me interested enough to research the matter. As I understood it, the article argues that the rapid progress of artificial intelligence, set against the slow progress of quantum computers and the difficulty of making them reliable, could make quantum computers obsolete. I think the article makes a fair argument, but I decided to do my own research on the matter.

So what are Quantum Computers exactly and what can they be used for? And how great is the difference between classical computers and their quantum competitors?

Just as classical computers have bits, which their algorithms use to process information as binary 1s and 0s, quantum computers have qubits, which process information using the quantum effects of ‘superposition’ and ‘entanglement.’ A classical bit is always either 0 or 1, but a qubit in superposition holds a weighted combination of both, and a group of qubits can represent an enormous number of combinations of 0s and 1s at once. Measuring the qubits makes the superposition collapse into a single state (scientists have not found a way to measure a quantum superposition without collapsing it), so a quantum algorithm has to be designed so that the correct answer is the most likely result of that measurement. For suitable problems, this could save a great deal of time when working through very large sets of data. We are talking about finding patterns in millions or billions of samples, as in chemistry, microbiology, and other fields with millions upon millions of samples to go through.
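The behaviour described above can be illustrated with a toy state-vector simulator in plain Python. This is a minimal sketch written only for illustration: the function names are my own and do not come from any quantum computing library, and it runs on an ordinary classical computer. It shows three things: a Hadamard gate puts a qubit into superposition, a CNOT gate entangles two qubits so their measurements always agree, and measurement returns one random outcome and collapses the state. It also shows interference, the effect real quantum algorithms rely on to make the right answer likely.

```python
import math
import random

# A state of n qubits is a list of 2**n complex amplitudes. The bits of an
# index i give a classical outcome; |amplitude|**2 is that outcome's probability.


def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` (0 = least significant bit)."""
    new = state[:]
    h = 1 / math.sqrt(2)
    step = 1 << target
    for i in range(len(state)):
        if i & step == 0:  # visit each pair (i, i | step) exactly once
            a, b = state[i], state[i | step]
            new[i] = h * (a + b)
            new[i | step] = h * (a - b)
    return new


def apply_cnot(state, control, target):
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    new = state[:]
    for i in range(len(state)):
        if i & (1 << control):
            new[i] = state[i ^ (1 << target)]
    return new


def measure(state, rng):
    """Sample one basis state; the superposition 'collapses' onto it."""
    probs = [abs(a) ** 2 for a in state]
    outcome = rng.choices(range(len(state)), weights=probs)[0]
    collapsed = [0j] * len(state)
    collapsed[outcome] = 1 + 0j
    return outcome, collapsed


rng = random.Random(7)

# Two qubits start in |00>; Hadamard then CNOT gives the entangled Bell state.
bell = apply_cnot(apply_hadamard([1 + 0j, 0j, 0j, 0j], 0), 0, 1)
print([round(abs(a) ** 2, 3) for a in bell])  # [0.5, 0.0, 0.0, 0.5]

counts = {}
for _ in range(1000):
    outcome, _ = measure(bell, rng)
    key = format(outcome, "02b")
    counts[key] = counts.get(key, 0) + 1
print(counts)  # only '00' and '11' appear, in roughly equal numbers

# Interference: two Hadamards cancel out, returning the qubit to 0 with certainty.
twice = apply_hadamard(apply_hadamard([1 + 0j, 0j], 0), 0)
print([round(abs(a) ** 2, 3) for a in twice])  # [1.0, 0.0]
```

Note that each measurement yields a single answer, chosen at random according to the amplitudes; the qubits never hand back every possible result at once, which is why the design of the algorithm matters as much as the hardware.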

Another feature that could give Quantum Computers an edge is that, being quantum themselves, they can simulate quantum states and systems directly, something classical computers can do only at a cost that grows exponentially with the size of the system. It is theorized that they could even be used in deep learning for artificial intelligence, among many other uses across the sciences.
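Why is simulating quantum systems so expensive for classical machines? A brute-force classical simulation stores one complex amplitude for every combination of qubit values, so the memory needed doubles with each added qubit. The sketch below assumes a full state vector with 16 bytes per amplitude (two 64-bit floating-point numbers); the helper names are hypothetical, and cleverer simulation methods can do much better for some circuits, so treat these figures as an upper-end illustration.

```python
def state_vector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector: 2**n complex amplitudes.

    16 bytes assumes one double-precision complex number per amplitude.
    """
    return (2 ** num_qubits) * bytes_per_amplitude


def human_readable(num_bytes: int) -> str:
    """Format a byte count with binary units (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB", "YiB"]
    value = float(num_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:,.0f} {unit}"
        value /= 1024


for n in (10, 20, 30, 40, 50):
    print(f"{n} qubits -> {human_readable(state_vector_bytes(n))}")
# 10 qubits -> 16 KiB
# 20 qubits -> 16 MiB
# 30 qubits -> 16 GiB
# 40 qubits -> 16 TiB
# 50 qubits -> 16 PiB
```

Every ten extra qubits multiply the memory by about a thousand, while a quantum computer only needs ten more physical qubits.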

So what’s stopping scientists from building efficient Quantum Computers, and how and when was the idea of a Quantum Computer conceived?

In 1959, the American physicist and Nobel laureate Richard Feynman theorized that the power of quantum mechanics could be harnessed once technology could operate at the nanoscale.

Quantum mechanics is the science of how matter and electromagnetic radiation behave and interact at the level of atoms and subatomic particles, such as electrons, protons, neutrons, and gluons.

The issue is that quantum mechanics simply isn’t easy. I think the name “Quantum Computer” could be a little misleading; what scientists are actually attempting to build are Quantum Machines.

Some basic facts about Quantum Computers:

To make use of quantum effects like superposition and entanglement, the computer must be able to follow instructions and perform computations. Achieving this requires completely new algorithms, ways to extract information from qubits, and a choice of the particles best suited to serve as qubits. The main candidates so far are electrons and trapped ions, along with approaches such as nuclear magnetic resonance and quantum dots. However, there is the problem of maintaining ‘coherence,’ so that the information in the qubits stays stable long enough to be processed and stored. Coherence is destroyed by ‘decoherence,’ which can be caused by any noise or stimulus from the environment. Quantum states are very fragile, and this has kept scientists from scaling up the number of qubits to build a Quantum Computer that is practical to use.
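A rough way to picture decoherence is as a countdown: in a simplified model, the coherence of a qubit decays exponentially with a characteristic time often called T2, and every operation takes time to perform. The sketch below uses that simple exponential model together with hypothetical numbers (a 100-microsecond coherence time and 50-nanosecond operations) chosen only for illustration, not measured from any real device. Real hardware has additional error sources that this model ignores.

```python
import math


def remaining_coherence(elapsed_s: float, t2_s: float) -> float:
    """Fraction of coherence left under a simple exponential-decay model."""
    return math.exp(-elapsed_s / t2_s)


def gates_before_threshold(t2_s: float, gate_time_s: float,
                           threshold: float = 0.5) -> int:
    """How many back-to-back operations fit before coherence drops below `threshold`."""
    # Solve exp(-n * gate_time / T2) >= threshold for the largest whole n.
    return math.floor(-t2_s * math.log(threshold) / gate_time_s)


T2 = 100e-6    # hypothetical coherence time: 100 microseconds
GATE = 50e-9   # hypothetical duration of one operation: 50 nanoseconds

print(f"coherence left after 10 us: {remaining_coherence(10e-6, T2):.3f}")
print("operations before coherence falls below 50%:",
      gates_before_threshold(T2, GATE))
```

Under these assumptions, only about 1,400 sequential operations fit before half the coherence is gone, which shows why longer coherence times (or faster operations) are such a central goal.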

Some companies have built experimental quantum computers in labs kept at temperatures colder than outer space, close to absolute zero, to prevent decoherence. But scaling up to thousands or millions of qubits is still a work in progress.
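One reason scaling is so hard is that small errors compound. If each operation independently fails with a small probability, the chance that a whole computation runs without a single error is that success rate multiplied by itself once per operation. The sketch below assumes a hypothetical error rate of 0.1% per operation and a hypothetical ten operations per qubit, both invented for illustration; real devices and algorithms vary widely.

```python
def error_free_probability(per_op_error: float, num_ops: int) -> float:
    """Chance that `num_ops` independent operations all succeed."""
    return (1.0 - per_op_error) ** num_ops


P_ERR = 1e-3  # hypothetical 0.1% chance of error per operation

for qubits in (10, 100, 1_000, 10_000):
    ops = qubits * 10  # hypothetical: ten operations per qubit
    p = error_free_probability(P_ERR, ops)
    print(f"{qubits:>6} qubits, {ops:>7} ops -> {p:.2e} chance of no errors")
```

Even with a per-operation success rate of 99.9%, the odds of an error-free run fall from about 90% at 100 operations to practically zero at 100,000, so adding qubits without also reducing errors does not produce a more useful machine.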

It is quite likely that Quantum Computers will be combined with Supercomputers as counterparts and operate as a hybrid.

However, because of the problem of decoherence, it is very unlikely that they can be made portable. But cloud computing could make their capabilities available to everyone.

Copyright ©️ Arjuwan Lakkdawala 2024

Arjuwan Lakkdawala is an author and independent science journalist

X/Twitter/Instagram: Spellrainia


Sources:

Britannica – William Fifteen Holton, fact-checked by the editors of Encyclopedia Britannica

History – Industrial Revolution, history.com editors

Australian Museum – Homo Sapiens – Modern Humans, Fran Dorey

Intel – Moore’s Law

National Geographic – What was the Neolithic Revolution?, Erin Blakemore

Britannica – Quantum Mechanics, Gordon Leslie Squires, fact-checked by the editors of Encyclopedia Britannica

Space.com – How Old is Earth?

Scientific American – Monkeys Make Stone “Tools” That Bear A Striking Resemblance to Early Human Artifacts, Kate Wong

Live Science – Quantum Computers Are Here – but why do we need them and what are they used for?, Edd Gent

Britannica – History of Technology Timeline, Erik Gregersen, fact-checked by the editors of Encyclopedia Britannica

#arjuwan lakkdawala#science#nature#ink in the internet#biology#artificial intelligence#bioengineering#deep learning#physics#technology#supercomputers#quantum computers#quantum mechanics#homo sapiens

0 notes

Text

Aratech BRT Supercomputer

Source: The New Essential Guide to Droids (Del Rey, 2006)

#star wars#droids#municipal planning droids#management droids#computers#supercomputers#aratech#brt#brt supercomputer#star wars newspaper strip#first appearance the constancia affair#new essential guide to droids#new essential guides#class one droids#rebel alliance droids#galactic civil war#planning droids

0 notes

Text

youtube

#supercomputer#tesla supercomputer#dojo supercomputer#elon musk supercomputer#supercomputers#fastest supercomputer#dojo supercomputer explained#world's most powerful supercomputers#impressive performance#top 10 supercomputers#how dojo supercomputer works#most powerful supercomputer#most powerful supercomputers#what is a supercomputer#the most powerful supercomputer in the world#ai overhyped technology#elon musk's engineering masterpiece#ai clone review#Youtube

0 notes