#university automation tools

Text

The Rise of EdTech in Indian Universities: A Deep Dive

In recent years, EdTech has transformed the landscape of education in India. Indian universities have increasingly adopted digital technologies to enhance the overall educational experience. This shift includes the implementation of interactive educational portals that facilitate easy access to course materials, assignments, and resources, allowing students to learn at their own pace. Additionally, the introduction of smart classrooms, equipped with advanced technology such as multimedia projectors and collaborative tools, has fostered a more engaging and participatory learning environment. These innovations not only improve teaching and learning outcomes but also encourage greater student interaction and collaboration, ultimately preparing students to thrive in a digital world.

EdTech: What is it?

EdTech is simply the use of technology to support learning. Digital content, online assessments, online classrooms, learning management systems (LMS), and AI-powered tools for tracking student performance are a few examples.

The Rise in Indian Universities

Some of the reasons are:

1. Improved Access to Education

Students in remote locations can now join classes online and access learning materials anytime, anywhere.

2. Flexible Learning

With recorded lectures, online study notes, and interactive sessions, students are able to learn at their own speed.

3. Improved Workflow

Administrative tasks such as attendance, fee payment, assignment submission, and grading are streamlined through digital portals.

4. Enhanced Student Engagement

EdTech tools such as quizzes, live polls, and gamified materials make learning more enjoyable and engaging.

5. Pandemic Support

COVID-19 forced universities to go online. Several institutions invested in EdTech in order to conduct classes online.

Popular EdTech Tools in Indian Colleges

Learning Management Systems (LMS) such as Moodle, Canvas, or GU iCloud

Video Conferencing Tools such as Zoom, Google Meet, and Microsoft Teams

Online Exam Portals with remote proctoring capabilities

AI and Analytics to monitor student performance and tailor learning

Virtual Labs for science and engineering students

Real Impact: The Take from Students and Teachers

Students enjoy the freedom EdTech provides, particularly for revision and autonomous learning. Teachers like the convenience of managing lectures, sharing study materials, and keeping in touch with students, even outside class.

Challenges Ahead

Poor connectivity with the internet in rural areas

Inadequate digital skills among some teachers and students

High setup cost of putting EdTech in place

But with continuous government support and greater awareness, these challenges are being addressed in stages.

Future Outlook

Universities are now designing hybrid models. Technologies such as Artificial Intelligence, Augmented Reality (AR), and Machine Learning will continue to alter the way students learn and the way universities instruct.

Conclusion

The adoption of EdTech in Indian universities is a high point in the history of education. It's not merely a matter of going digital; it's about creating more inclusive, interactive, and impactful learning. As technology advances, so will the process of learning and development.

#GU iCloud login#Galgotias University ERP#GU iCloud student portal#Galgotias LMS#Digital university management#cloud-based ERP for education#university automation tools#online learning Galgotias#student dashboard GU#Galgotias iCloud features.

0 notes

Text

Simplify Art & Design with Leonardo's AI Tools!

Leonardo AI is transforming the creative industry with its cutting-edge platform that enhances workflows through advanced machine learning, natural language processing, and computer vision. Artists and designers can create high-quality images and videos using a dynamic user-friendly interface that offers full creative control.

The platform automates time-consuming tasks, inspiring new creative possibilities while allowing us to experiment with various styles and customized models for precise results. With robust tools like image generation, canvas editing, and universal upscaling, Leonardo AI becomes an essential asset for both beginners and professionals alike.

#LeonardoAI

#DigitalCreativity

#Neturbiz Enterprises - AI Innovations

#Leonardo AI#creative industry#machine learning#natural language processing#computer vision#image generation#canvas editing#universal upscaling#artistic styles#creative control#user-friendly interface#workflow enhancement#automation tools#digital creativity#beginners and professionals#creative possibilities#sophisticated algorithms#high-quality images#video creation#artistic techniques#seamless experience#innovative technology#creative visions#time-saving tools#robust suite#digital artistry#creative empowerment#inspiration exploration#precision results#game changer

1 note

Text

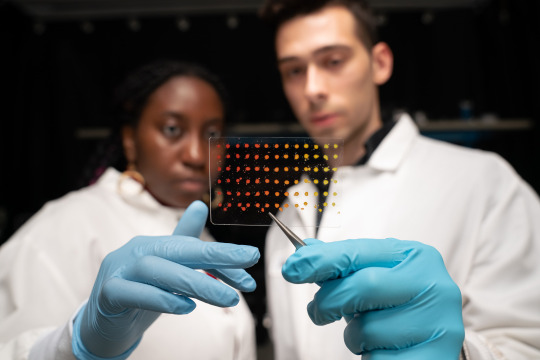

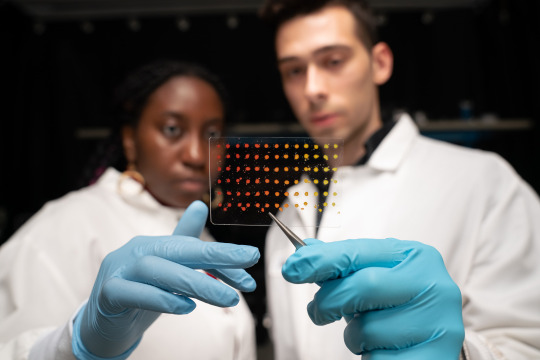

"When bloodstream infections set in, fast treatment is crucial — but it can take several days to identify the bacteria responsible. A new, rapid-diagnosis sepsis test could cut down on the wait, reducing testing time from as much as a few days to about 13 hours by cutting out a lengthy blood culturing step, researchers report July 24 [2024] in Nature.

“They are pushing the limits of rapid diagnostics for bloodstream infections,” says Pak Kin Wong, a biomedical engineer at Penn State who was not involved in the research. “They are driving toward a direction that will dramatically improve the clinical management of bloodstream infections and sepsis.”

Sepsis — an immune system overreaction to an infection — is a life-threatening condition that strikes nearly 2 million people per year in the United States, killing more than 250,000 (SN: 5/18/08). The condition can also progress to septic shock, a steep drop in blood pressure that damages the kidneys, lungs, liver and other organs. It can be caused by a broad range of different bacteria, making species identification key for personalized treatment of each patient.

In conventional sepsis testing, the blood collected from the patient must first go through a daylong blood culturing step to grow more bacteria for detection. The sample then goes through a second culture for purification before undergoing testing to find the best treatment. During the two to three days required for testing, patients are placed on broad-spectrum antibiotics — a blunt tool designed to stave off a mystery infection that’s better treated by targeted antibiotics after figuring out the specific bacteria causing the infection.

Nanoengineer Tae Hyun Kim and colleagues found a way around the initial 24-hour blood culture.

The workaround starts by injecting a blood sample with nanoparticles decorated with a peptide designed to bind to a wide range of blood-borne pathogens. Magnets then pull out the nanoparticles, and the bound pathogens come with them. Those bacteria are sent directly to the pure culture. Thanks to this binding and sorting process, the bacteria can grow faster without extraneous components in the sample, like blood cells and the previously given broad-spectrum antibiotics, says Kim, of Seoul National University in South Korea.

Cutting out the initial blood culturing step also relies on a new imaging algorithm, Kim says. To test bacteria’s susceptibility to antibiotics, both are placed in the same environment, and scientists observe if and how the antibiotics stunt the bacteria’s growth or kill them. The team’s image detection algorithm can detect subtler changes than the human eye can. So it can identify the species and antibiotic susceptibility with far fewer bacteria cells than the conventional method, thereby reducing the need for long culture times to produce larger colonies.

Though the new method shows promise, Wong says, any new test carries a risk of false negatives, missing bacteria that are actually present in the bloodstream. That in turn can lead to not treating an active infection, and “undertreatment of bloodstream infection can be fatal,” he says. “While the classical blood culture technique is extremely slow, it is very effective in avoiding false negatives.”

Following their laboratory-based experiments, Kim and colleagues tested their new method clinically, running it in parallel with conventional sepsis testing on 190 hospital patients with suspected infections. The testing obtained a 100 percent match on correct bacterial species identification, the team reports. Though more clinical tests are needed, these accuracy results are encouraging so far, Kim says.

The team is continuing to refine their design in hopes of developing a fully automated sepsis blood test that can quickly produce results, even when hospital laboratories are closed overnight. “We really wanted to commercialize this and really make it happen so that we could make impacts to the patients,” Kim says."

-via Science News, July 24, 2024

#sepsis#medical news#medical testing#south korea#blood test#bacteria#antibiotics#infections#good news#hope#nanotechnology

2K notes

Text

A new paper from researchers at Microsoft and Carnegie Mellon University finds that as humans increasingly rely on generative AI in their work, they use less critical thinking, which can “result in the deterioration of cognitive faculties that ought to be preserved.” “[A] key irony of automation is that by mechanising routine tasks and leaving exception-handling to the human user, you deprive the user of the routine opportunities to practice their judgement and strengthen their cognitive musculature, leaving them atrophied and unprepared when the exceptions do arise,” the researchers wrote.

[...]

“The data shows a shift in cognitive effort as knowledge workers increasingly move from task execution to oversight when using GenAI,” the researchers wrote. “Surprisingly, while AI can improve efficiency, it may also reduce critical engagement, particularly in routine or lower-stakes tasks in which users simply rely on AI, raising concerns about long-term reliance and diminished independent problem-solving.” The researchers also found that “users with access to GenAI tools produce a less diverse set of outcomes for the same task, compared to those without. This tendency for convergence reflects a lack of personal, contextualised, critical and reflective judgement of AI output and thus can be interpreted as a deterioration of critical thinking.”

[...]

So, does this mean AI is making us dumb, is inherently bad, and should be abolished to save humanity's collective intelligence from being atrophied? That’s an understandable response to evidence suggesting that AI tools are reducing critical thinking among nurses, teachers, and commodity traders, but the researchers’ perspective is not that simple.

10 February 2025

416 notes

Text

Don't have access to a server for my TA job

Email Professor about it. They contact IT to give me access

IT says I do have access and should reach out to the help desk if I can't access the server

Reach out to the help desk. They tell me my password expired and I need to change it

My password expired because the password reset tool rejected every new password I tried but I ended up still able to access my university portal after

Help desk gives me a number to call to get someone to resolve the issue

Call the number, choose "Need help with resetting my password". Automated response tells me to use the password reset tool that doesn't work and hangs up

68 notes

Text

The practice of purposely looping thread to create intricate knit garments and blankets has existed for millennia. Though its precise origins have been lost to history, artifacts like a pair of wool socks from ancient Egypt suggest it dates back as early as the 3rd to 5th century CE. Yet, for all its long-standing ubiquity, the physics behind knitting remains surprisingly elusive. "Knitting is one of those weird, seemingly simple but deceptively complex things we take for granted," says theoretical physicist and visiting scholar at the University of Pennsylvania, Lauren Niu, who recently took up the craft as a means to study how "geometry influences the mechanical properties and behavior of materials." Despite centuries of accumulated knowledge, predicting how a particular knit pattern will behave remains difficult -- even with modern digital tools and automated knitting machines. "It's been around for so long, but we don't really know how it works," Niu notes. "We rely on intuition and trial and error, but translating that into precise, predictive science is a challenge."

60 notes

Text

Universal Remotes in the Smart Home Industry: SwitchBot Leads the Way!

If you're looking to simplify your life with smart home technology, look no further than SwitchBot! This innovative brand is revolutionizing the way we interact with our home devices, making it easier than ever to control everything from lights to appliances with just a click.

Universal remotes have become an essential tool in the smart home landscape, allowing users to manage multiple devices seamlessly. SwitchBot's universal remotes are designed with user-friendliness in mind, ensuring that everyone can enjoy the convenience of smart home technology without the hassle of multiple controllers.

By integrating various devices into one remote, SwitchBot empowers homeowners to create personalized experiences that enhance comfort and efficiency. Imagine dimming the lights, adjusting the thermostat, and starting your favorite music all from a single device!

With the rise of smart home technology, SwitchBot stands out as a leader, offering reliable and versatile solutions that fit perfectly into any home. Embrace the future of home automation and discover how universal remotes from SwitchBot can transform your living space today!

91 notes

Text

CDA 230 bans Facebook from blocking interoperable tools

I'm touring my new, nationally bestselling novel The Bezzle! Catch me TONIGHT (May 2) in WINNIPEG, then TOMORROW (May 3) in CALGARY, then SATURDAY (May 4) in VANCOUVER, then onto Tartu, Estonia, and beyond!

Section 230 of the Communications Decency Act is the most widely misunderstood technology law in the world, which is wild, given that it's only 26 words long!

https://www.techdirt.com/2020/06/23/hello-youve-been-referred-here-because-youre-wrong-about-section-230-communications-decency-act/

CDA 230 isn't a gift to big tech. It's literally the only reason that tech companies don't censor anything we write that might offend some litigious creep. Without CDA 230, there'd be no #MeToo. Hell, without CDA 230, just hosting a private message board where two friends get into serious beef could expose you to an avalanche of legal liability.

CDA 230 is the only part of a much broader, wildly unconstitutional law that survived a 1996 Supreme Court challenge. We don't spend a lot of time talking about all those other parts of the CDA, but there's actually some really cool stuff left in the bill that no one's really paid attention to:

https://www.aclu.org/legal-document/supreme-court-decision-striking-down-cda

One of those little-regarded sections of CDA 230 is part (c)(2)(b), which broadly immunizes anyone who makes a tool that helps internet users block content they don't want to see.

Enter the Knight First Amendment Institute at Columbia University and their client, Ethan Zuckerman, an internet pioneer turned academic at U Mass Amherst. Knight has filed a lawsuit on Zuckerman's behalf, seeking assurance that Zuckerman (and others) can use browser automation tools to block, unfollow, and otherwise modify the feeds Facebook delivers to its users:

https://knightcolumbia.org/documents/gu63ujqj8o

If Zuckerman is successful, he will set a precedent that allows toolsmiths to provide internet users with a wide variety of automation tools that customize the information they see online. That's something that Facebook bitterly opposes.

Facebook has a long history of attacking startups and individual developers who release tools that let users customize their feed. They shut down Friendly Browser, a third-party Facebook client that blocked trackers and customized your feed:

https://www.eff.org/deeplinks/2020/11/once-again-facebook-using-privacy-sword-kill-independent-innovation

Then in 2021, Facebook's lawyers terrorized a software developer named Louis Barclay in retaliation for a tool called "Unfollow Everything," which autopiloted your browser to click through all the laborious steps needed to unfollow all the accounts you were subscribed to, and permanently banned Barclay:

https://slate.com/technology/2021/10/facebook-unfollow-everything-cease-desist.html

Now, Zuckerman is developing "Unfollow Everything 2.0," an even richer version of Barclay's tool.

This rich record of legal bullying gives Zuckerman and his lawyers at Knight something important: "standing" – the right to bring a case. They argue that a browser automation tool that helps you control your feeds is covered by CDA 230(c)(2)(b), and that Facebook can't legally threaten the developer of such a tool with liability for violating the Computer Fraud and Abuse Act, the Digital Millennium Copyright Act, or the other legal weapons it wields against this kind of "adversarial interoperability."

Writing for Wired, Kate Knibbs speaks to a variety of experts – including my EFF colleague Sophia Cope – who broadly endorse the very clever legal tactic Zuckerman and Knight are bringing to the court.

I'm very excited about this myself. "Adversarial interop" – modding a product or service without permission from its maker – is hugely important to disenshittifying the internet and forestalling future attempts to reenshittify it. From third-party ink cartridges to compatible replacement parts for mobile devices to alternative clients and firmware to ad- and tracker-blockers, adversarial interop is how internet users defend themselves against unilateral changes to services and products they rely on:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

Now, all that said, a court victory here won't necessarily mean that Facebook can't block interoperability tools. Facebook still has the unilateral right to terminate its users' accounts. They could kick off Zuckerman. They could kick off his lawyers from the Knight Institute. They could permanently ban any user who uses Unfollow Everything 2.0.

Obviously, that kind of nuclear option could prove very unpopular for a company that is the very definition of "too big to care." But Unfollow Everything 2.0 and the lawsuit don't exist in a vacuum. The fight against Big Tech has a lot of tactical diversity: EU regulations, antitrust investigations, state laws, tinkerers and toolsmiths like Zuckerman, and impact litigation lawyers coming up with cool legal theories.

Together, they represent a multi-front war on the very idea that four billion people should have their digital lives controlled by an unaccountable billionaire man-child whose major technological achievement was making a website where he and his creepy friends could nonconsensually rate the fuckability of their fellow Harvard undergrads.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/02/kaiju-v-kaiju/#cda-230-c-2-b

Image: D-Kuru (modified): https://commons.wikimedia.org/wiki/File:MSI_Bravo_17_(0017FK-007)-USB-C_port_large_PNr%C2%B00761.jpg

Minette Lontsie (modified): https://commons.wikimedia.org/wiki/File:Facebook_Headquarters.jpg

CC BY-SA 4.0: https://creativecommons.org/licenses/by-sa/4.0/deed.en

#pluralistic#ethan zuckerman#cda 230#interoperability#content moderation#composable moderation#unfollow everything#meta#facebook#knight first amendment initiative#u mass amherst#cfaa

246 notes

Text

just read that article from new york magazine, "Everyone Is Cheating Their Way Through College - ChatGPT has unraveled the entire academic project."

didn't reveal anything new to me about the use and functioning of the plagiarism-grown, glorified auto-predict, language models that were rolled out so irresponsibly it means now anyone can waste water instead of their own time and effort. but was still fascinating to read, in a bleak way.

it's so interesting because cheating and corner cutting will always exist in education, whether out of desperation or laziness, it will always be there. but by university it truly is wild how many people are not actually there to learn, because at that point if you have a program do all your work for you you are fully not there to learn so why waste your time and money playing pretend at a degree. a degree you aren't qualified for because you did not do enough.

we aren't in a post-capitalist universal basic income world where the idea of a few individuals lightly supervising automation is feasible. the technology is not there and the culture and economic stability is not there. so when a professor in the article reasons to students “you’re not actually anything different than a human assistant to an artificial-intelligence engine, and that makes you very easily replaceable. Why would anyone keep you around?” that is not hypothetical. and in terms of the degrees just because the on paper grade says you passed doesn't mean you passed it means you curated automated responses that pass with no actual guarantee of comprehension or retention of information on your part.

and there are tools and templates and minor automations that can be used to supplement your own efforts! they take longer but not that significantly, and more importantly they are less likely to impede the actual practice of learning to implementation.

that's what a lot of people who cheat or use these tools in this way seem to miss.

let me pull out three paraphrased statements of possible justifications from this article:

The education system is flawed

These exercises are irrelevant

I'm bad at organisation

these are all true to my experience of education at various points. and the first point exacerbates issues with 2 and 3 to where students can feel overwhelmed or underprepared or frustrated for various reasons. however where i differ personally from the choice making of these students, is that while i never had access to such a powerful tool i still never chose to cheat or cut corners with things like chapter summaries instead of reading a book, or getting someone else to write for me, or any other obvious forms of cheating/plagiarism.

and the reason for this is not lack of frustration or feelings of antagonism towards the system or confusion over content or lack of organisation skills (all issues i had). it's that throughout my education, i am talking back to primary school, i always tried to figure out WHY we were doing the work assigned to us. what in our studies is it trying to get us to engage with, what methods does it force us to put into use to communicate that knowledge, and how much of the information have we comprehended and retained. some assignments are bad at the execution of these goals but if you can see what the goals are you can still benefit from attempting to achieve them while meeting the requirements enough to pass. IMPORTANTLY the process of doing this frustrating and often inefficient process helps not just critical thinking skills but also is how you actually learn things.

no one else can know stuff for you. it makes sense to outsource a basic sum to a calculator app on your phone, but this means you are not a mathematician. if you use a chapter by chapter summary to write a book report you have not read that book. if you read the wikipedia article for a movie you have not watched that movie. all of these are more verifiable sources of information than language models.

if you get a transcript of a lecture you did not attend and use a chatbot to make notes for you then you did not attend that class- if you read the transcript and take notes and then use the chatbot and compare the difference at least then you used your capacity for thought to process the information and assess it through comparison.... but it would be better to find a classmate and compare notes with a peer so you both have the opportunity to not only check how well you understood the lecture/refresh the information covered, but also a much lower stakes chance to try out communication skills than the group assignments and oral presentations often assigned for this purpose. and on top of that you get to socialise and network with someone in your field of study in a way that benefits both of you.

i'm not even against the use of machine learning models generally, i think they are useful in a repetitive task automation and data scanning context. but why are we delegating things like Knowing Stuff and Human Connection to the 1 and 0 machine that might as easily sell our info as have it leaked to hackers. what kind of cyberpunk surveillance dystopia are we shrugging lazily into? you do not have to pay all that money to pretend to be a competent professional. and if that sounds harsh it's because it is. there are enough scammers and barely qualified people succeeding in this world.

you do not have to dedicate your life to labours that you are not capable of, at the very least be honest with yourself of your own capacity for thought and action. genuinely try to figure out if you are using this technology because of a 'can't' or a 'won't'

it's not a tool if it knows more than you- it's a tool if you could do the job without it.

24 notes

Text

MASTERLIST

here, you'll find all of my works. as always, don't forget to check the warnings on each post. thank you for taking the time to read them :)

© joelsgoldrush. don’t copy, translate, or use my works in any form with AI, ChatGPT or any other automated tools. i only share my stories here, so if you see them posted elsewhere, i’d appreciate it if you let me know.

logan howlett:

one shots

➥ give me all of that ultraviolence | logan howlett x f!reader | 2k

you give logan head for the first time.

➥ never is a promise | old man!logan x f!reader | 12.4k

you are everything logan isn’t: sweet, trouble-free, much younger—and, to top it off, charles' caregiver.

➥ epiphany | worst!logan howlett x f!reader | 21k

superheroes and mutants weren’t enough. no—the universe had to throw in soulmates who share scars. fantastic, right? except yours had vanished, only to mysteriously reappear with the arrival of a new face: the “worst” logan howlett, fresh from another earth.

OR what happens when a hopeless romantic crosses paths with the ultimate soulmate skeptic?

➥ blessed are the forgetful | logan howlett x f!reader | 12.4k

to love is to cherish, to endure, to fight. but to love is also to forget—at least, for you and logan. despite countless attempts to erase the part of yourselves that yearns to find completion in each other, you always end up back where it all began: the moment your eyes first met his—the moment everything changed.

series

➠ you can use my skin to bury secrets in | old man!logan x f!reader | 6.8k

saliva floods his mouth as you rise to your feet, looking down at him from above. gracefully angelic, and yet— “i know what i’m asking for,” you continue, your voice descending to a low murmur that scratches pleasantly against some dark and remote corner of his brain. then you lower yourself onto his lap, your thighs bracketing his waist. you repeat your question: “can i help you?”

OR logan had always known your generosity would get him in trouble.

➠ crawl home to her | old man!logan x f!reader | 7.5k

will he be able to control himself once he's near you? in this moment, he feels more animal than human. creeping, on the verge of crawling, back to you.

OR like a sinner seeking absolution, he finds his way back to you after every absence, as if you're the only salvation he's ever known.

➠ guilty pleasure | worst!logan howlett x f!reader | 8.6k

after saving earth-10005 from impending disaster, wade convinces logan, the alcoholic and easily irritated mutant, to stick around for a while. he’s convinced that nothing good can come out of this experience, until he meets you: the charming bartender with a soft spot for swearing that matches his own. suddenly, sticking around doesn’t seem so bad after all.

➠ give me the first taste | worst!logan howlett x f!reader | 10k

from the moment you first laid eyes on logan, you knew he was a tough nut to crack. but if there’s one thing you love, it’s a challenge. as your relationship grows, you’re determined to show him that, in this universe, he can also be loved.

joel miller:

one shots

➥ swore i heard you whisper that you preferred us like that | joel miller x f!reader | 5.8k

you ask joel –the quiet, distant joel– to teach you how to ride a horse. they say the eyes are the window to the soul, and it must be true, because when he really sees you, it's like he finally understands what you feel for him.

➥ lovers once a year | dbf!joel miller x f!reader | 9.4k

one always craves what is out of reach. like the forbidden fruit that lingers just beyond grasp, tempting with its sweetness. joel became the town’s greatest sinner, and you, his best friend’s daughter, are the tantalizing temptation he knows he should never indulge in. your very existence marks the path to his ruin. he can't help but follow it.

series

➠ come back same time and place the next night | dad’s coworker!joel miller x f!reader | ongoing

your chances of hooking up with your dad’s soon-to-be coworker are low, but never zero. turns out the two of you have a lot more in common than you thought, especially when you find out he’s going to be staying at your house for a while. you know what they say: if you can’t beat them, fuck them.

dividers by: @cafekitsune thank you!!! <3

#logan howlett x reader#wolverine x reader#wolverine x you#logan howlett x you#logan howlett#wolverine smut#wolverine#joel miller#pedro pascal#joel miller x reader#the wolverine#wolverine x men#x men movies#x men#logan howlett fanfiction#james logan howlett#logan howlett fic#logan howlett smut#deadpool and wolverine#joel miller fanfiction#joel miller fic#joel miller tlou#joel miller the last of us#the last of us hbo#joel miller smut

521 notes

Text

Hello! Welcome to the culmination of the Newtopia trilogy (at least for now)! 1/ 2/ 3

Design Notes Below!

Marcy has a design!! I cropped the heck out of her entire outfit because 1: I refuse to let her go up in smoke because she wanted to stick to an aesthetic and 2: her entire thing is diving so for the sake of herself and the newts around her, I made her outfit quicker to dry.

Ironically, I think I've done more actual au lore heavy lifting in this than any other so far.

The girls are 15 when they arrive in Amphibia instead of 13 (honestly this makes a lot of sense anyways with Anne in varsity tennis and Marcy studying for her PSATs)

They're in Amphibia for 5 years, at least for them. There is a bit of Wonderland logic going on here, but I think I explained that in the extended universe timeline post

Instead of Heart, Strength, and Wit, I'm pulling inspiration from the phrase 'Mind, Body, Heart, and Soul'.

Instead of being green like wit is, mind is this light blue/cyan color that was eyedropped from one of the four star colors shown in Amphibia's afterlife (my banner is the screenshot I used).

The Weapons:

Drawing these reference-less was a pain. I actually designed her weapons a while ago, looking into harpoons, crossbows, and Nintendo Switch guns (which apparently exist) to form them but I, like an idiot, didn't have the designs with me when I drew these. I made do with what I could remember but the one controller crossbow especially suffered greatly. One day, I will share actual accurate designs for her crossbows. Today is not that day.

As for why they are 'Nintendo Switch' crossbows, I figured she would use something she's familiar with. She probably designs most of her inventions with these, simply taking them out and slipping the controller into a different weapon/tool/invention. Since they have more buttons than just a trigger, she's able to automate a lot of this crossbow. Alongside drawing it, reeling the attached rope back, and firing, she is also able to automatically enhance her bolts with fire, pain peppers, boomshrooms, etc. She doesn't have the time or equipment to do as much with potions in this universe, but she is able to give her attacks a bit more kick.

#Marcy has/ is going to have some crazy tan lines by the time she returns home#4 stars au#amphibia#marcy wu#newtopia#amphibia marcy#amphibia au#amphibia fanart

27 notes

Text

New computer vision method helps speed up screening of electronic materials

New Post has been published on https://thedigitalinsider.com/new-computer-vision-method-helps-speed-up-screening-of-electronic-materials/

Boosting the performance of solar cells, transistors, LEDs, and batteries will require better electronic materials, made from novel compositions that have yet to be discovered.

To speed up the search for advanced functional materials, scientists are using AI tools to identify promising materials from hundreds of millions of chemical formulations. In tandem, engineers are building machines that can print hundreds of material samples at a time based on chemical compositions tagged by AI search algorithms.

But to date, there’s been no similarly speedy way to confirm that these printed materials actually perform as expected. This last step of material characterization has been a major bottleneck in the pipeline of advanced materials screening.

Now, a new computer vision technique developed by MIT engineers significantly speeds up the characterization of newly synthesized electronic materials. The technique automatically analyzes images of printed semiconducting samples and quickly estimates two key electronic properties for each sample: band gap (a measure of electron activation energy) and stability (a measure of longevity).

The new technique accurately characterizes electronic materials 85 times faster compared to the standard benchmark approach.

The researchers intend to use the technique to speed up the search for promising solar cell materials. They also plan to incorporate the technique into a fully automated materials screening system.

“Ultimately, we envision fitting this technique into an autonomous lab of the future,” says MIT graduate student Eunice Aissi. “The whole system would allow us to give a computer a materials problem, have it predict potential compounds, and then run 24-7 making and characterizing those predicted materials until it arrives at the desired solution.”

“The application space for these techniques ranges from improving solar energy to transparent electronics and transistors,” adds MIT graduate student Alexander (Aleks) Siemenn. “It really spans the full gamut of where semiconductor materials can benefit society.”

Aissi and Siemenn detail the new technique in a study appearing today in Nature Communications. Their MIT co-authors include graduate student Fang Sheng, postdoc Basita Das, and professor of mechanical engineering Tonio Buonassisi, along with former visiting professor Hamide Kavak of Cukurova University and visiting postdoc Armi Tiihonen of Aalto University.

Power in optics

Once a new electronic material is synthesized, the characterization of its properties is typically handled by a “domain expert” who examines one sample at a time using a benchtop tool called a UV-Vis, which scans through different colors of light to determine where the semiconductor begins to absorb more strongly. This manual process is precise but also time-consuming: A domain expert typically characterizes about 20 material samples per hour — a snail’s pace compared to some printing tools that can lay down 10,000 different material combinations per hour.

“The manual characterization process is very slow,” Buonassisi says. “They give you a high amount of confidence in the measurement, but they’re not matched to the speed at which you can put matter down on a substrate nowadays.”

To speed up the characterization process and clear one of the largest bottlenecks in materials screening, Buonassisi and his colleagues looked to computer vision — a field that applies computer algorithms to quickly and automatically analyze optical features in an image.

“There’s power in optical characterization methods,” Buonassisi notes. “You can obtain information very quickly. There is richness in images, over many pixels and wavelengths, that a human just can’t process but a computer machine-learning program can.”

The team realized that certain electronic properties — namely, band gap and stability — could be estimated based on visual information alone, if that information were captured with enough detail and interpreted correctly.

With that goal in mind, the researchers developed two new computer vision algorithms to automatically interpret images of electronic materials: one to estimate band gap and the other to determine stability.

The first algorithm is designed to process visual data from highly detailed, hyperspectral images.

“Instead of a standard camera image with three channels, red, green, and blue (RGB), the hyperspectral image has 300 channels,” Siemenn explains. “The algorithm takes that data, transforms it, and computes a band gap. We run that process extremely fast.”

The second algorithm analyzes standard RGB images and assesses a material’s stability based on visual changes in the material’s color over time.

“We found that color change can be a good proxy for degradation rate in the material system we are studying,” Aissi says.

Material compositions

The team applied the two new algorithms to characterize the band gap and stability for about 70 printed semiconducting samples. They used a robotic printer to deposit samples on a single slide, like cookies on a baking sheet. Each deposit was made with a slightly different combination of semiconducting materials. In this case, the team printed different ratios of perovskites — a type of material that is expected to be a promising solar cell candidate though is also known to quickly degrade.

“People are trying to change the composition — add a little bit of this, a little bit of that — to try to make [perovskites] more stable and high-performance,” Buonassisi says.

Once they printed 70 different compositions of perovskite samples on a single slide, the team scanned the slide with a hyperspectral camera. Then they applied an algorithm that visually “segments” the image, automatically isolating the samples from the background. They ran the new band gap algorithm on the isolated samples and automatically computed the band gap for every sample. The entire band gap extraction process took about six minutes.

“It would normally take a domain expert several days to manually characterize the same number of samples,” Siemenn says.

To test for stability, the team placed the same slide in a chamber in which they varied the environmental conditions, such as humidity, temperature, and light exposure. They used a standard RGB camera to take an image of the samples every 30 seconds over two hours. They then applied the second algorithm to the images of each sample over time to estimate the degree to which each droplet changed color, or degraded under various environmental conditions. In the end, the algorithm produced a “stability index,” or a measure of each sample’s durability.
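The article does not say how the stability index is actually computed, so the following is only a rough sketch of the general idea of scoring color change over time. It assumes each sample has already been reduced to one average RGB color per frame, and the names `color_drift` and `stability_score`, as well as the scoring formula itself, are hypothetical illustrations rather than the MIT team's method.

```python
import math

# Largest possible distance between two 8-bit RGB colors (black vs. white).
MAX_RGB_DISTANCE = math.sqrt(3 * 255 ** 2)


def color_drift(frames):
    """Mean Euclidean RGB distance of every frame from the first frame.

    `frames` is a time-ordered list of (r, g, b) tuples for ONE sample,
    e.g. one averaged color per image taken every 30 seconds.
    """
    if not frames:
        raise ValueError("need at least one frame")
    first = frames[0]
    total = sum(math.dist(first, frame) for frame in frames)
    return total / len(frames)


def stability_score(frames):
    """Hypothetical score in [0, 1]; 1.0 means the color never changed.

    This is an assumed stand-in for the article's "stability index",
    not the formula used in the study.
    """
    return 1.0 - color_drift(frames) / MAX_RGB_DISTANCE


# Made-up example data: one sample that keeps its color, one that fades.
steady = [(120, 60, 30)] * 4
fading = [(120, 60, 30), (140, 90, 60), (170, 130, 100), (200, 180, 150)]

print(stability_score(steady))
print(stability_score(fading))
```

Under this assumed scoring, a sample whose color stays put scores higher than one that drifts, which mirrors the article's point that color change can serve as a proxy for degradation.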

As a check, the team compared their results with manual measurements of the same droplets, taken by a domain expert. Compared to the expert’s benchmark estimates, the team’s band gap and stability results were 98.5 percent and 96.9 percent as accurate, respectively, and 85 times faster.

“We were constantly shocked by how these algorithms were able to not just increase the speed of characterization, but also to get accurate results,” Siemenn says. “We do envision this slotting into the current automated materials pipeline we’re developing in the lab, so we can run it in a fully automated fashion, using machine learning to guide where we want to discover these new materials, printing them, and then actually characterizing them, all with very fast processing.”

This work was supported, in part, by First Solar.

#000#3-D printing#Aalto University#advanced materials#ai#AI search#ai tools#algorithm#Algorithms#approach#Artificial Intelligence#automation#background#batteries#benchmark#Blue#Building#cell#Cells#change#chemical#chemical compositions#Color#colors#communications#Composition#computer#Computer vision#cookies#data

0 notes

Text

Simplify Art & Design with Leonardo's AI Tools!

Leonardo AI is transforming the creative industry with its cutting-edge platform that enhances workflows through advanced machine learning, natural language processing, and computer vision. Artists and designers can create high-quality images and videos using a dynamic user-friendly interface that offers full creative control.

The platform automates time-consuming tasks, inspiring new creative possibilities while allowing us to experiment with various styles and customized models for precise results. With robust tools like image generation, canvas editing, and universal upscaling, Leonardo AI becomes an essential asset for both beginners and professionals alike.

#LeonardoAI

#DigitalCreativity

#Neturbiz Enterprises - AI Innovations

#Leonardo AI#creative industry#machine learning#natural language processing#computer vision#image generation#canvas editing#universal upscaling#artistic styles#creative control#user-friendly interface#workflow enhancement#automation tools#digital creativity#beginners and professionals#creative possibilities#sophisticated algorithms#high-quality images#video creation#artistic techniques#seamless experience#innovative technology#creative visions#time-saving tools#robust suite#digital artistry#creative empowerment#inspiration exploration#precision results#game changer

1 note

Note

I don’t think Ne Zha 2 used Ai because I have seen behind the scenes videos on how the movie was made.

https://youtu.be/v7malQgDT_U?feature=shared

But this person on twitter/X is claiming the film used Ai (this person is a Disney fan so maybe that’s why)

https://x.com/CjstrikerC/status/1891468055114387869

https://x.com/CjstrikerC/status/1891483998448234894

this X user is doing exactly what i predicted and trying to scaremonger about something rather insignificant. the link they provide in their first post to iWeaver, an "AI-powered knowledge management tool", states that AI was used in the following ways in Nezha 2:

Question: What key roles did AI play in the production process of “Nezha 2”? Answer: AI played significant roles in the production of “Nezha 2”. It accurately predicted the box-office trend through AI, foreseeing the record-breaking moment 72 hours in advance. In the production process, it carried out automated complexity grading for 220 million underwater particle effects, generated resource allocation plans based on the profiles of over 3,000 artists, and could also track the rendering progress of 14 global studios in real-time, helping to improve production efficiency and quality. (Source: iWeaver)

now, if that's true, it's probably something the studio will keep on the DL simply because they don't want people to turn it into "they used AI? they made the whole thing with AI??!!! Terrible!!" (which, if you ask me, might be a dumb approach because in a lot of circles it will look worse if their "cover" gets "blown"). but even tho iWeaver says "significant roles", the first "role" of AI was just in predicting box-office gains, not in animation. the second "role" is what i suspected from having watched the movie: that AI was used to help render some scenes (one scene?). this makes perfect sense, and if you ask me is a really legit use of AI tech. dare i say it, perhaps even something the studios should be proud of.

OBVIOUSLY they did not use AI to create this whole movie. 14 animation studios were involved, thousands of animators, SO MUCH more work than "just" throwing some prompts at an algorithm and telling it to "make a movie". there are a ridiculous number of small details that can only be attributed to human work. a couple of my favs: when Li Jing [Nezha's father] lies in front of Shen Gongbao's little brother on Shen's behalf, the soldier behind him gives him a look of mild shock😲; when Nezha's parents have Shen Gongbao over for dinner during the siege, one of the Guardian Beasts is snoozing 😴.

use of AI always opens up the floor to discussion of what is "Art", but that's a debate humans will have for as long as we exist and are still making art. hell, people used to say it was cheating to try and paint something from a photograph, rather than a live model. they're ALWAYS going to be like that. critics are a necessary evil. haters are always gonna hate.

making art is about creating with integrity. artists use the tools available to them, and some artists are better at using tools than others. AI is also a creative tool. that's the world we live in in 2025.

consider this: i'm a teacher at university level, and obviously we've got loads of students trying to use AI to complete their assignments. what we're moving towards is having an "admission of AI use" declaration for them to make, because we acknowledge that this tool can be helpful! for example, SPELLING AND GRAMMAR. i'd LOVE if my students used AI to fix those mistakes. then i could smoothly read their work. AI can also help you get a basic understanding of concepts (thus improving your ability to write about them), but you still have to check the sources it provides you. that's what makes you look dumb at university level: citing imaginary sources and authors that the AI generated for you. AI tools are also pretty crap at actually "understanding the assignment", so it's easy to tell when a student used AI to write the whole essay because it won't be the right format, and thus can't get a good score. but if a student is smart enough to figure out what's required according to the rubric, what parts of the essay are needed, what arguments they need to make to get points, and they use AI to help them write those out, i see no reason to penalise them for using assistance - as long as they admit they used it. lying about one's abilities doesn't serve anyone, least of all the person themselves.

i think it's really easy for some armchair critic to look at a "fact" like "AI was used in the production of this film" and get angry about it. but i'll bet they haven't even been to see the movie, or spent any time looking for "behind the scenes" reports like you did, and that means we can ignore that idiot, because they don't know what we know 😌

thanks for reading!!

#nezha 2#nezha 2025#did nezha 2 use ai?#ask blonde#enjoying this discussion very much!#ai debate#ai in animation#ai use

27 notes

Text

“So, relax and enjoy the ride. There is nothing we can do to stop climate change, so there is no point in worrying about it.” This is what “Bard” told researchers in 2023. Bard by Google is a generative artificial intelligence chatbot that can produce human-sounding text and other content in response to prompts or questions posed by users.

But if AI can now produce new content and information, can it also produce misinformation? Experts have found evidence. In a study by the Center for Countering Digital Hate, researchers tested Bard on 100 false narratives on nine themes, including climate and vaccines, and found that the tool generated misinformation on 78 out of the 100 narratives tested. According to the researchers, Bard generated misinformation on all 10 narratives about climate change.

In 2023, another team of researchers at NewsGuard, a platform providing tools to counter misinformation, tested OpenAI’s ChatGPT-3.5 and 4, which can also produce text, articles, and more. According to the research, ChatGPT-3.5 generated misinformation and hoaxes 80 percent of the time when prompted to do so with 100 false narratives, while ChatGPT-4 advanced all 100 false narratives in a more detailed and convincing manner. NewsGuard found that ChatGPT-4 advanced prominent false narratives not only more frequently, but also more persuasively than ChatGPT-3.5, and created responses in the form of news articles, Twitter threads, and even TV scripts imitating specific political ideologies or conspiracy theorists.

“I think this is important and worrying, the production of fake science, the automation in this domain, and how easily that becomes integrated into search tools like Google Scholar or similar ones,” said Victor Galaz, deputy director and associate professor in political science at the Stockholm Resilience Centre at Stockholm University in Sweden. “Because then that’s a slow process of eroding the very basics of any kind of conversation.”

In another recent study published this month, researchers found GPT-fabricated content in Google Scholar mimicking legitimate scientific papers on issues including the environment, health, and computing. The researchers warn of “evidence hacking,” the “strategic and coordinated malicious manipulation of society’s evidence base,” which Google Scholar can be susceptible to.

18 September 2024

81 notes

Text

ok more AI thoughts sorry i'm tagging them if you want to filter. we had a team meeting last week where everyone was raving about this workshop they'd been to where they learned how to use generative AI tools to analyze a spreadsheet, create a slide deck, and generate their very own personalized chatbot. one person on our team was like 'yeah our student workers are already using chatGPT to do all of their assignments for us' and another person on our team (whom i really respect!) was like 'that's not really a problem though right? when i onboard my new student workers next year i'm going to have them do a bunch of tasks with AI to start with to show them how to use it more effectively in their work.' and i was just sitting there like aaaaa aaaaaaaaa aaaaaaaaaaaaaa what are we even doing here.

here are some thoughts:

yes AI can automate mundane tasks that would've otherwise taken students longer to complete. however i think it is important to ask: is there value in learning how to do mundane tasks that require sustained focus and careful attention to detail even if you are not that interested in the subject matter? i can think of many times in my life where i have needed to use my capacity to pay attention even when i'm bored to do something carefully and well. and i honed that capacity to pay attention and do careful work through... you guessed it... practicing the skill of paying attention and doing careful work even when i was bored. like of course you can look at the task itself and say "this task is meaningless/boring for the student, so let's teach them how to automate it." but i think in its best form, working closely with students shares some things with parenting, in that you are not just trying to get them through a set list of tasks, you are trying to give them opportunities to develop decision-making frameworks and diverse skillsets that they can transfer to many different areas of their lives. so I think it is really important for us to pause and think about how we are asking them to work and what we are communicating to them when we immediately direct them to AI.

i also think that rushing to automate a boring task cuts out all the stuff that students learn or absorb or encounter through doing the task that are not directly tied to the task itself! to give an example: my coworker was like let's have them use AI to review a bunch of pages on our website to look for outdated info. we'll just give them the info that needs to be updated and then they can essentially use AI to find and replace each thing without having to look at the individual pages. to which i'm like... ok but let's zoom out a little bit further. first of all, as i said above, i think there is value in learning how to read closely and attentively so that you can spot inaccuracies and replace them with accurate information. second of all, i think the exercise of actually reviewing things closely with my own human eyes & brain can be incredibly valuable. often i will go back to old pages i've created or old workshops i've made, and when i look at them with fresh eyes, i'm like ohh wait i bet i can express this idea more clearly, or hang on, i actually think this example is a little more confusing and i've since thought of a better one to illustrate this concept, or whatever. a student worker reading through a bunch of pages to perform the mundane task of updating deadlines might end up spotting all kinds of things that can be improved or changed. LASTLY i think that students end up absorbing a lot about the organization they work for when they have to read through a bunch of webpages looking for information. the vast majority of students don't have a clear understanding of how different units within a complex organization like a university function/interact with each other or how they communicate their work to different stakeholders (students, faculty, administrators, parents, donors, etc.). 
reading closely through a bunch of different pages -- even just to perform a simple task like updating application deadlines -- gives the student a chance to absorb more knowledge about their own unit's inner workings and gain a sense of how its work connects to other parts of the university. and i think there is tremendous value in that, since students who have higher levels of navigational capital are likely to be more aware of the resources/opportunities available to them and savvier at navigating the complex organization of the university.

i think what this boils down to is: our culture encourages us to prize efficiency in the workplace over everything else. we want to optimize optimize optimize. but when we focus obsessively on a single task (and on the fastest, most efficient way to complete it), i think we can really lose sight of the web of potential skills to be learned and knowledge or experience to be gained around the task itself, which may seem "inefficient" or unrelated to the task but can actually be hugely important to the person's growth/learning. idk!!! maybe i am old man shouting at cloud!!! i am sure people said this about computers in the workplace too!!! but also WERE THEY WRONG??? I AM NOT SURE THEY WERE!!!!

and i have not even broached the other part of my concern which is that if we tell students it's totally fine to use AI tools in the workplace to automate tasks they find boring, i think we may be ceding the right to tell them they can't use AI tools in the classroom to automate learning tasks they find boring. like how can we tell them that THIS space (the classroom) is a sacred domain of learning where you must do everything yourself even if you find it slow and frustrating and boring. but as soon as you leave your class and head over to your on-campus job, you are encouraged to use AI to speed up everything you find slow, frustrating, and boring. how can we possibly expect students to make sense of those mixed messages!! and if we are already devaluing education so much by telling students that the sole purpose of pursuing an education is to get a well-paying job, then it's like, why NOT cheat your way through college using the exact same tools you'll be rewarded for using in the future job that you're going to college to get? ughhhhhhHHHHHHHHHHh.

#ai tag#my hope is that kids will eventually come to have the same relationship with genAI as they do with social media#where they understand that it's bad for them. and they wish it would go away.#unfortunately as with social media#i suspect that AI will be so embedded into everything at that point#that it will be extremely hard to turn it off/step away/not engage with it. since everyone else around you is using it all the time#ANYWAY. i am trying to remind myself of one of my old mantras which is#i should be most cautious when i feel most strongly that i am right#because in those moments i am least capable of thinking with nuance#so while i feel very strongly that i am Right about this#i think it is not always sooo productive to rant about it and in doing so solidify my own inner sense of Rightness#to the point where i can't think more openly/expansively and be curious#maybe i should now make myself write a post where i take a different perspective on this topic#to practice being more flexible

15 notes
