#web scraping and data analytics


#quick-commerce platforms#web scraping services#Tracking real-time stock#web scraping and data analytics


Restaurant Data Analytics Services - Restaurant Business Data Analytics

Our restaurant data analytics services turn raw restaurant data into actionable insights, so you can make data-driven decisions and grow your business in today’s competitive culinary landscape. Our comprehensive restaurant data analytics solutions empower you to optimize operations, enhance customer experiences, and boost profitability. Our team of seasoned data analysts works hard to deliver actionable insights that drive tangible results.

#Restaurant data analytics services#Data Analytics#restaurant data analytics solutions#Scraping restaurant data#food delivery service#Food Data Scraping Services#web scraping services#web scraping#web data extraction#Restaurant Data Scraper#price monitoring services#monitor competitor’s prices


https://www.webrobot.eu/travel-data-scraper-benefits-hospitality-tourism

The travel industry faces several challenges when using travel data. Discover how web scraping technology can help your tourism business solve these issues.

#travel#tourism#big data#web scraping tools#data extraction#hospitality#data analytics#datasets#webrobot#data mining#no code#ai tools


#proxies#proxy#proxyserver#residential proxy#automation#ecommerce#data insights#data integration challenges#web scraping techniques#web scraping services#web scraping tools#industry data#datascience#data analytics#market analysis#shopping


Web scraping is a technique for extracting information from unstructured HTML web pages and organizing it into a structured format, such as a spreadsheet or dataset.
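A minimal sketch of that idea, using only Python's standard-library `html.parser`: it pulls the cells of an HTML table into a list of records that could then be sorted, filtered, or written to a spreadsheet. The sample page, the `TableExtractor` class, and `table_to_records` are hypothetical illustrations (not part of NewsData.io's API), and real pages are rarely this tidy.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect the text of every <th>/<td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []            # finished rows, each a list of cell strings
        self.current_row = None   # cells of the <tr> being parsed, if any
        self.current_cell = None  # text fragments of the cell being parsed, if any

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.current_row = []
        elif tag in ("td", "th") and self.current_row is not None:
            self.current_cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self.current_cell is not None:
            self.current_row.append("".join(self.current_cell).strip())
            self.current_cell = None
        elif tag == "tr" and self.current_row is not None:
            self.rows.append(self.current_row)
            self.current_row = None

    def handle_data(self, data):
        # Keep text only while inside a cell; nested tags such as <b> are flattened.
        if self.current_cell is not None:
            self.current_cell.append(data)


def table_to_records(html):
    """Use the first table row as column names and return the other rows as dicts."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


# Hypothetical page fragment standing in for a scraped news listing.
page = """
<table>
  <tr><th>Outlet</th><th>Headline</th></tr>
  <tr><td>Example Times</td><td>Rates <b>rise</b> again</td></tr>
  <tr><td>Sample Post</td><td>Markets steady</td></tr>
</table>
"""
records = table_to_records(page)
print(records)
```

Each record is a plain dict keyed by the header row, which is the "structured data" that can be filtered or sorted without further scraping.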

A data extraction solution, such as NewsData.io's news API, can generate an organized database that can be analyzed and turned into news stories. This not only speeds up and simplifies data journalism but can also help journalists make better sense of the data, because structured data can be filtered, sorted, and processed in a variety of ways without the journalist having to scrape it manually. For more detail, read our blog: https://newsdata.io/blog/web-scraping-in-data-journalism/

#data science#programming#web scraping#news api#web developers#data analytics#data journalism#python#data visualization#coding#marketing#api


In late January, a warning spread through the London-based Facebook group Are We Dating the Same Guy?—but this post wasn’t about a bad date or a cheating ex. A connected network of male-dominated Telegram groups had surfaced, sharing and circulating nonconsensual intimate images of women. Their justification? Retaliation.

On January 23, users in the AWDTSG Facebook group began warning about hidden Telegram groups. Screenshots and TikTok videos surfaced, revealing public Telegram channels where users were sharing nonconsensual intimate images. Further investigation by WIRED identified additional channels linked to the network. By scraping thousands of messages from these groups, it became possible to analyze their content and the patterns of abuse.

AWDTSG, a sprawling web of over 150 regional forums across Facebook alone, with roughly 3 million members worldwide, was designed by Paolo Sanchez in 2022 in New York as a space for women to share warnings about predatory men. But its rapid growth made it a target. Critics argue that the format allows unverified accusations to spiral. Some men have responded with at least three defamation lawsuits filed in recent years against members, administrators, and even Meta, Facebook’s parent company. Others took a different route: organized digital harassment.

Primarily using Telegram group data made available through Telemetr.io, a Telegram analytics tool, WIRED analyzed more than 3,500 messages from a Telegram group linked to a larger misogynistic revenge network. Over 24 hours, WIRED observed users systematically tracking, doxing, and degrading women from AWDTSG, circulating nonconsensual images, phone numbers, usernames, and location data.

From January 26 to 27, the chats became a breeding ground for misogynistic, racist, sexual digital abuse of women, with women of color bearing the brunt of the targeted harassment and abuse. Thousands of users encouraged each other to share nonconsensual intimate images, often referred to as “revenge porn,” and requested and circulated women’s phone numbers, usernames, locations, and other personal identifiers.

As women from AWDTSG began infiltrating the Telegram group, at least one user grew suspicious: “These lot just tryna get back at us for exposing them.”

When women on Facebook tried to alert others of the risk of doxing and leaks of their intimate content, AWDTSG moderators removed their posts. (The group’s moderators did not respond to multiple requests for comment.) Meanwhile, men who had previously coordinated through their own Facebook groups like “Are We Dating the Same Girl” shifted their operations in late January to Telegram's more permissive environment. Their message was clear: If they can do it, so can we.

"In the eyes of some of these men, this is a necessary act of defense against a kind of hostile feminism that they believe is out to ruin their lives," says Carl Miller, cofounder of the Center for the Analysis of Social Media and host of the podcast Kill List.

The dozen Telegram groups that WIRED has identified are part of a broader digital ecosystem often referred to as the manosphere, an online network of forums, influencers, and communities that perpetuate misogynistic ideologies.

“Highly isolated online spaces start reinforcing their own worldviews, pulling further and further from the mainstream, and in doing so, legitimizing things that would be unthinkable offline,” Miller says. “Eventually, what was once unthinkable becomes the norm.”

This cycle of reinforcement plays out across multiple platforms. Facebook forums act as the first point of contact, TikTok amplifies the rhetoric in publicly available videos, and Telegram is used to enable illicit activity. The result? A self-sustaining network of harassment that thrives on digital anonymity.

TikTok amplified discussions around the Telegram groups. WIRED reviewed 12 videos in which creators, of all genders, discussed, joked about, or berated the Telegram groups. In the comments section of these videos, users shared invitation links to public and private groups and some public channels on Telegram, making them accessible to a wider audience. While TikTok was not the primary platform for harassment, discussions about the Telegram groups spread there, and in some cases users explicitly acknowledged their illegality.

TikTok tells WIRED that its Community Guidelines prohibit image-based sexual abuse, sexual harassment, and nonconsensual sexual acts, and that violations result in removals and possible account bans. The company also said that it removes links directing people to content that violates its policies and that it continues to invest in Trust and Safety operations.

Intentionally or not, the algorithms powering social media platforms like Facebook can amplify misogynistic content. Hate-driven engagement fuels growth, pulling new users into these communities through viral trends, suggested content, and comment-section recruitment.

As people took notice on Facebook and TikTok and started reporting the Telegram groups, the groups didn’t disappear; they simply rebranded. Reactionary groups quickly emerged, signaling that members knew they were being watched but had no intention of stopping. Inside, messages revealed a clear awareness of the risks: Users knew they were breaking the law. They just didn’t care, according to chat logs reviewed by WIRED. To absolve themselves, one user wrote, “I do not condone im [simply] here to regulate rules,” while another shared a link to a statement that said: “I am here for only entertainment purposes only and I don’t support any illegal activities.”

Meta did not respond to a request for comment.

Messages from the Telegram group WIRED analyzed show that some chats became hyper-localized, dividing London into four regions to make harassment even more targeted. Members casually sought access to other city-based groups: “Who’s got brum link?” and “Manny link tho?”—British slang referring to Birmingham and Manchester. They weren’t just looking for gossip. “Any info from west?” one user asked, while another requested, “What’s her @?”— hunting for a woman’s social media handle, a first step to tracking her online activity.

The chat logs further reveal how women were discussed as commodities. “She a freak, I’ll give her that,” one user wrote. Another added, “Beautiful. Hide her from me.” Others encouraged sharing explicit material: “Sharing is caring, don’t be greedy.”

Members also bragged about sexual exploits, using coded language to reference encounters in specific locations, and spread degrading, racial abuse, predominantly targeting Black women.

Once a woman was mentioned, her privacy was permanently compromised. Users frequently shared social media handles, which led other members to contact her—soliciting intimate images or sending disparaging texts.

Anonymity can be a protective tool for women navigating online harassment. But it can also be embraced by bad actors who use the same structures to evade accountability.

"It’s ironic," Miller says. "The very privacy structures that women use to protect themselves are being turned against them."

The rise of unmoderated spaces like the abusive Telegram groups makes it nearly impossible to trace perpetrators, exposing a systemic failure in law enforcement and regulation. Without clear jurisdiction or oversight, platforms are able to sidestep accountability.

Sophie Mortimer, manager of the UK-based Revenge Porn Helpline, warned that Telegram has become one of the biggest threats to online safety. She says that the UK charity’s reports to Telegram of nonconsensual intimate image abuse are ignored. “We would consider them to be noncompliant to our requests,” she says. Telegram, however, says it received only “about 10 piece of content” from the Revenge Porn Helpline, “all of which were removed.” Mortimer has not yet responded to WIRED’s questions about the veracity of Telegram’s claims.

Despite recent updates to the UK’s Online Safety Act, legal enforcement of online abuse remains weak. An October 2024 report from the UK-based charity The Cyber Helpline shows that cybercrime victims face significant barriers in reporting abuse, and justice for online crimes is seven times less likely than for offline crimes.

"There’s still this long-standing idea that cybercrime doesn’t have real consequences," says Charlotte Hooper, head of operations of The Cyber Helpline, which helps support victims of cybercrime. "But if you look at victim studies, cybercrime is just as—if not more—psychologically damaging than physical crime."

A Telegram spokesperson tells WIRED that its moderators use “custom AI and machine learning tools” to remove content that violates the platform's rules, “including nonconsensual pornography and doxing.”

“As a result of Telegram's proactive moderation and response to reports, moderators remove millions of pieces of harmful content each day,” the spokesperson says.

Hooper says that survivors of digital harassment often change jobs, move cities, or even retreat from public life due to the trauma of being targeted online. The systemic failure to recognize these cases as serious crimes allows perpetrators to continue operating with impunity.

Yet, as these networks grow more interwoven, social media companies have failed to adequately address gaps in moderation.

Telegram, despite its estimated 950 million monthly active users worldwide, claims it’s too small to qualify as a “Very Large Online Platform” under the European Union’s Digital Services Act, allowing it to sidestep certain regulatory scrutiny. “Telegram takes its responsibilities under the DSA seriously and is in constant communication with the European Commission,” a company spokesperson said.

In the UK, several civil society groups have expressed concern about the use of large private Telegram groups, which allow up to 200,000 members. These groups exploit a loophole by operating under the guise of “private” communication to circumvent legal requirements for removing illegal content, including nonconsensual intimate images.

Without stronger regulation, online abuse will continue to evolve, adapting to new platforms and evading scrutiny.

The digital spaces meant to safeguard privacy are now incubating its most invasive violations. These networks aren’t just growing—they’re adapting, spreading across platforms, and learning how to evade accountability.


Mythbusting Generative AI: The Ethical ChatGPT Is Out There

I've been hyperfixating on (okay, learning a lot about) Generative AI recently, and here's what I've found: genAI doesn’t just apply to ChatGPT or other large language models.

Small Language Models (specialised and more efficient versions of the large models)

are also generative

can perform in a similar way to large models for many writing and reasoning tasks

can be community-trained on ethical data

and can run on your laptop.

"But isn't analytical AI good and generative AI bad?"

Fact: Generative AI creates stuff and is also used for analysis

In the past, before recent generative AI developments, most analytical AI relied on traditional machine learning models. But now the two are becoming more intertwined. Gen AI is being used to perform analytical tasks – they are no longer two distinct, separate categories. The models are being used synergistically.

For example, Oxford University in the UK is partnering with OpenAI to use generative AI (ChatGPT Edu) to support analytical work in areas like health research and climate change.

"But Generative AI stole fanfic. That makes any use of it inherently wrong."

Fact: there are Generative AI models developed on ethical data sets

Yes, many large language models scraped sites like AO3 without consent, incorporating these into their datasets to train on. That’s not okay.

But there are Small Language Models (compact, less powerful versions of LLMs) being built on transparent, opt-in, community-curated datasets, and they can still perform generative AI functions in the same way that LLMs do (just not as powerfully). You can even build one yourself.

No, it's actually really cool! Some real-life examples:

Dolly (Databricks): Trained on open, crowd-sourced instructions

RedPajama (Together.ai): Focused on creative-commons licensed and public domain data

There are plenty more examples out there.

(A word of warning: some SLMs, like Microsoft’s Phi-3, have likely been trained on some of the datasets hosted on the Hugging Face platform (which include scraped web content, such as from AO3), and these big companies are being deliberately sketchy about where their datasets came from. So the key is to check the dataset. All SLMs should be transparent about which datasets they use.)

"But AI harms the environment, so any use is unethical."

Fact: There are small language models that don't use massive centralised data centres.

SLMs use less energy, don’t require cloud servers or data centres to run, and can be used on laptops, phones, and Raspberry Pis (basically, running AI locally on your own device instead of relying on remote data centres).

If you're interested, you can build your own SLM and even train it on your own data.

Let's recap

Generative AI doesn't just include the big tools like ChatGPT; it includes the Small Language Models that you can run ethically and locally

Some LLMs are trained on fanfic scraped from AO3 without consent. That's not okay

But ethical SLMs exist, which are developed on open, community-curated data that aims to avoid bias and misinformation - and you can even train your own models

These models can run on laptops and phones, using less energy

AI is a tool; it's up to humans to wield it responsibly

It means everything – and nothing

Everything – in the sense that it might remove some of the barriers and concerns people have which makes them reluctant to use AI. This may lead to more people using it - which will raise more questions on how to use it well.

It also means that nothing's changed – because even these ethical Small Language Models should be used in the same way as the other AI tools - ethically, transparently and responsibly.

So now what? Now, more than ever, we need to be having an open, respectful and curious discussion on how to use AI well in writing.

In the area of creative writing, it has the potential to be an awesome and insightful tool: a psychological mirror for analysing yourself through your stories, a narrative experimentation device (e.g. in the form of RPGs), a way to identify themes or emotional patterns in your fics, and a brainstorming partner when you get stuck -

but it also has capacity for great darkness too. It can steal your voice (and the voice of others), damage fandom community spirit, foster tech dependency and shortcut the whole creative process.

Just to add my two pence at the end - I don't think it has to be so all-or-nothing. AI shouldn't replace elements we love about fandom community; rather it can help fill the gaps and pick up the slack when people aren't available, or to help writers who, for whatever reason, struggle or don't have access to fan communities.

People who use AI as a tool are also part of fandom community. Let's keep talking about how to use AI well.

Feel free to push back on this, DM me or leave me an ask (the anon function is on for people who need it to be). You can also read more on my FAQ for an AI-using fanfic writer Master Post in which I reflect on AI transparency, ethics and something I call 'McWriting'.

#fandom#fanfiction#ethical ai#ai discourse#writing#writers#writing process#writing with ai#generative ai#my ai posts


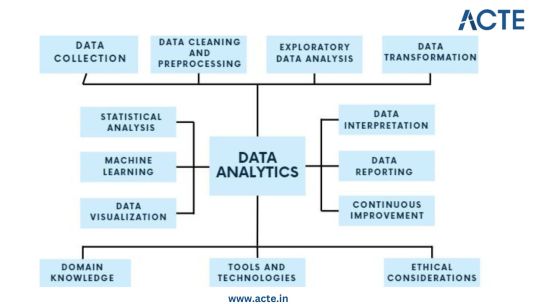

When it comes to data analytics, this is the most important concept everyone needs to understand. The ability to draw insightful conclusions from data is a highly sought-after skill in today's data-driven environment, and data analytics gives businesses a competitive edge by enabling them to find hidden patterns, make informed decisions, and gain insight. Whether you're a business professional trying to improve your decision-making or a data enthusiast eager to explore the world of analytics, this guide will take you step by step through the fundamentals.

Step 1: Data Collection - Building the Foundation

Identify Data Sources: Begin by pinpointing the relevant sources of data, which could include databases, surveys, web scraping, or IoT devices, aligning them with your analysis objectives.

Define Clear Objectives: Clearly articulate the goals and objectives of your analysis to ensure that the collected data serves a specific purpose.

Include Structured and Unstructured Data: Collect both structured data, such as databases and spreadsheets, and unstructured data like text documents or images to gain a comprehensive view.

Establish Data Collection Protocols: Develop protocols and procedures for data collection to maintain consistency and reliability.

Ensure Data Quality and Integrity: Implement measures to ensure the quality and integrity of your data throughout the collection process.
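One way to make a collection protocol concrete is to write the expected fields down as a schema and check every incoming record against it before it enters the dataset. This is a minimal sketch assuming a hypothetical three-field `SCHEMA`; real projects often use a dedicated validation library instead.

```python
# Hypothetical collection protocol: every record needs these fields and types.
SCHEMA = {"store_id": str, "date": str, "sales": float}


def validate(record, schema=SCHEMA):
    """Return a list of problems found in one collected record (empty if valid)."""
    problems = []
    for field, expected in schema.items():
        if field not in record or record[field] is None:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected):
            problems.append(f"{field} should be {expected.__name__}")
    # Fields outside the protocol are reported rather than silently kept.
    extra = set(record) - set(schema)
    if extra:
        problems.append(f"unexpected fields: {sorted(extra)}")
    return problems


# Hypothetical batch as it might arrive from a collection job.
batch = [
    {"store_id": "A1", "date": "2024-01-05", "sales": 1250.0},
    {"store_id": "A2", "date": "2024-01-05"},
    {"store_id": "A3", "date": "2024-01-05", "sales": "n/a", "note": "manual"},
]
for rec in batch:
    print(rec.get("store_id"), validate(rec) or "ok")
```

Note that `isinstance(1250, float)` is `False`, so integer sales figures would be flagged under this schema; loosen the type to `(int, float)` if that matters for your data.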

Step 2: Data Cleaning and Preprocessing - Purifying the Raw Material

Handle Missing Values: Address missing data through techniques like imputation to ensure your dataset is complete.

Remove Duplicates: Identify and eliminate duplicate entries to maintain data accuracy.

Address Outliers: Detect and manage outliers using statistical methods to prevent them from skewing your analysis.

Standardize and Normalize Data: Bring data to a common scale, making it easier to compare and analyze.

Ensure Data Integrity: Ensure that data remains accurate and consistent during the cleaning and preprocessing phase.
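The cleaning steps above can be sketched on a toy list of records with Python's standard library alone: exact duplicates are dropped, the missing price is filled with the median (one imputation choice among many), values outside the 1.5 × IQR fences are flagged as outliers, and the remaining prices are min-max scaled to 0..1. The data and field names are hypothetical, and the 1.5 × IQR fence is a common rule of thumb rather than a universal threshold.

```python
from statistics import median, quantiles

# Hypothetical raw records with one missing value, one duplicate and one outlier.
raw = [
    {"id": 1, "price": 10.0},
    {"id": 2, "price": None},
    {"id": 3, "price": 12.0},
    {"id": 3, "price": 12.0},
    {"id": 4, "price": 11.0},
    {"id": 5, "price": 300.0},
    {"id": 6, "price": 9.0},
]

# 1. Remove exact duplicates, keeping the first occurrence and the original order.
seen, deduped = set(), []
for row in raw:
    key = tuple(sorted(row.items()))
    if key not in seen:
        seen.add(key)
        deduped.append(row)

# 2. Impute missing prices with the median of the observed prices.
fill = median([r["price"] for r in deduped if r["price"] is not None])
cleaned = [{**r, "price": fill if r["price"] is None else r["price"]} for r in deduped]

# 3. Flag values outside the 1.5 * IQR fences as outliers.
q1, _, q3 = quantiles([r["price"] for r in cleaned], n=4, method="inclusive")
low, high = q1 - 1.5 * (q3 - q1), q3 + 1.5 * (q3 - q1)
kept = [r for r in cleaned if low <= r["price"] <= high]
outliers = [r for r in cleaned if not low <= r["price"] <= high]

# 4. Min-max normalise the kept prices to the range 0..1.
lo_price = min(r["price"] for r in kept)
hi_price = max(r["price"] for r in kept)
span = (hi_price - lo_price) or 1.0  # avoid dividing by zero if all prices are equal
for r in kept:
    r["price_scaled"] = (r["price"] - lo_price) / span

print("outliers:", [r["id"] for r in outliers])
print("scaled:", [(r["id"], round(r["price_scaled"], 2)) for r in kept])
```

The median is used for imputation because, unlike the mean, it is barely moved by the 300.0 outlier; `quantiles(..., method="inclusive")` needs Python 3.8 or later.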

Step 3: Exploratory Data Analysis (EDA) - Understanding the Data

Visualize Data with Histograms, Scatter Plots, etc.: Use visualization tools like histograms, scatter plots, and box plots to gain insights into data distributions and patterns.

Calculate Summary Statistics: Compute summary statistics such as means, medians, and standard deviations to understand central tendency and spread.

Identify Patterns and Trends: Uncover underlying patterns, trends, or anomalies that can inform subsequent analysis.

Explore Relationships Between Variables: Investigate correlations and dependencies between variables to inform hypothesis testing.

Guide Subsequent Analysis Steps: The insights gained from EDA serve as a foundation for guiding the remainder of your analytical journey.
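A quick first pass at EDA can be done with Python's `statistics` module: summary statistics for central tendency and spread, plus a crude text histogram standing in for a plotted one. The daily order counts are invented for illustration, and the bin width of 10 is an arbitrary choice.

```python
from collections import Counter
from statistics import mean, median, stdev

# Hypothetical daily order counts for one restaurant over two weeks.
orders = [42, 38, 45, 51, 60, 75, 70, 40, 39, 47, 52, 58, 80, 72]

# Central tendency (mean, median) and spread (standard deviation, range).
summary = {
    "count": len(orders),
    "mean": round(mean(orders), 2),
    "median": median(orders),
    "stdev": round(stdev(orders), 2),
    "min": min(orders),
    "max": max(orders),
}
print(summary)

# A text histogram: count values in bins of width 10 (30-39, 40-49, ...).
bins = Counter((x // 10) * 10 for x in orders)
for start in sorted(bins):
    print(f"{start:>3}-{start + 9:<3} {'#' * bins[start]}")
```

A mean noticeably above the median, as here, hints at a right-skewed distribution, which the histogram then lets you confirm by eye.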

Step 4: Data Transformation - Shaping the Data for Analysis

Aggregate Data (e.g., Averages, Sums): Aggregate data points to create higher-level summaries, such as calculating averages or sums.

Create New Features: Generate new features or variables that provide additional context or insights.

Encode Categorical Variables: Convert categorical variables into numerical representations to make them compatible with analytical techniques.

Maintain Data Relevance: Ensure that data transformations align with your analysis objectives and domain knowledge.
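Two of these transformations, aggregation and categorical encoding, can be sketched in a few lines. This example assumes hypothetical order-level records and uses one-hot encoding, which is only one of several ways to turn categories into numbers.

```python
from collections import defaultdict

# Hypothetical order-level records.
orders = [
    {"city": "Leeds", "channel": "app", "amount": 20.0},
    {"city": "Leeds", "channel": "web", "amount": 30.0},
    {"city": "York", "channel": "app", "amount": 15.0},
    {"city": "York", "channel": "phone", "amount": 25.0},
]

# Aggregate: total and average order value per city.
totals, counts = defaultdict(float), defaultdict(int)
for o in orders:
    totals[o["city"]] += o["amount"]
    counts[o["city"]] += 1
by_city = {c: {"sum": totals[c], "avg": totals[c] / counts[c]} for c in totals}

# Encode: one 0/1 indicator column per distinct channel (one-hot encoding).
channels = sorted({o["channel"] for o in orders})
encoded = [
    {**o, **{f"channel_{ch}": int(o["channel"] == ch) for ch in channels}}
    for o in orders
]

print(by_city)
print(encoded[3])
```

One-hot columns avoid implying an order between categories (an integer code like app=0, phone=1, web=2 would suggest "web" is somehow larger than "app").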

Step 5: Statistical Analysis - Quantifying Relationships

Hypothesis Testing: Conduct hypothesis tests to determine the significance of relationships or differences within the data.

Correlation Analysis: Measure correlations between variables to identify how they are related.

Regression Analysis: Apply regression techniques to model and predict relationships between variables.

Descriptive Statistics: Employ descriptive statistics to summarize data and provide context for your analysis.

Inferential Statistics: Make inferences about populations based on sample data to draw meaningful conclusions.
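Correlation, regression, and a hypothesis test can be tied together on a tiny example. The sketch below computes Pearson's r and an ordinary least-squares line by hand, then runs an exact permutation test of the null hypothesis that the two variables are unrelated. The permutation test is a swapped-in choice (the guide does not prescribe a test), and the ad-spend and order figures are hypothetical.

```python
from itertools import permutations
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return my - slope * mx, slope


def permutation_p_value(xs, ys):
    """Exact two-sided permutation test of 'no correlation' (tiny samples only)."""
    observed = abs(pearson_r(xs, ys))
    perms = list(permutations(ys))
    # Count reorderings of ys that correlate with xs at least as strongly.
    hits = sum(abs(pearson_r(xs, p)) >= observed - 1e-12 for p in perms)
    return hits / len(perms)


spend = [1, 2, 3, 4, 5]         # hypothetical weekly ad spend, in hundreds
orders = [12, 15, 21, 24, 28]   # hypothetical weekly orders

r = pearson_r(spend, orders)
intercept, slope = fit_line(spend, orders)
p = permutation_p_value(spend, orders)
print(f"r={r:.3f}  orders = {intercept:.1f} + {slope:.1f} * spend  p={p:.3f}")
```

Enumerating every reordering costs n! evaluations, so this only makes sense for a handful of points; larger datasets would typically use random permutations or a parametric test such as the t-test for r.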

Step 6: Machine Learning - Predictive Analytics

Algorithm Selection: Choose suitable machine learning algorithms based on your analysis goals and data characteristics.

Model Training: Train machine learning models using historical data to learn patterns.

Validation and Testing: Evaluate model performance using validation and testing datasets to ensure reliability.

Prediction and Classification: Apply trained models to make predictions or classify new data.

Model Interpretation: Understand and interpret machine learning model outputs to extract insights.
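The train / test / predict cycle can be shown end to end with a deliberately simple classifier. This sketch assumes synthetic, well-separated customer data and uses a nearest-centroid model only because it fits in a few lines; it stands in for whatever algorithm you actually select, and the labels and feature names are hypothetical.

```python
import random
from math import dist
from statistics import mean


def make_data(rng, n_per_class=50):
    """Synthetic points: (basket value, visits per month) -> customer label."""
    data = []
    for _ in range(n_per_class):
        data.append(((rng.gauss(20, 2), rng.gauss(8, 1)), "loyal"))
        data.append(((rng.gauss(60, 2), rng.gauss(1, 1)), "occasional"))
    return data


def train_test_split(data, test_fraction, rng):
    """Shuffle a copy of the data and hold out a fraction for testing."""
    shuffled = data[:]
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def fit_centroids(train):
    """'Training' here means averaging the feature vectors of each class."""
    by_label = {}
    for features, label in train:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(col) for col in zip(*points))
        for label, points in by_label.items()
    }


def predict(centroids, features):
    """Classify a point by its nearest class centroid (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


rng = random.Random(42)  # fixed seed so the split is reproducible
train, test = train_test_split(make_data(rng), test_fraction=0.3, rng=rng)
centroids = fit_centroids(train)
accuracy = sum(predict(centroids, f) == label for f, label in test) / len(test)
print(f"train={len(train)} test={len(test)} accuracy={accuracy:.2f}")
```

Accuracy is measured only on the held-out 30%, which the model never saw during fitting; on real, overlapping data you would expect it to be well below the near-perfect score this toy example produces.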

Step 7: Data Visualization - Communicating Insights

Chart and Graph Creation: Create various types of charts, graphs, and visualizations to represent data effectively.

Dashboard Development: Build interactive dashboards to provide stakeholders with dynamic views of insights.

Visual Storytelling: Use data visualization to tell a compelling and coherent story that communicates findings clearly.

Audience Consideration: Tailor visualizations to suit the needs of both technical and non-technical stakeholders.

Enhance Decision-Making: Visualization aids decision-makers in understanding complex data and making informed choices.

Step 8: Data Interpretation - Drawing Conclusions and Recommendations

Recommendations: Provide actionable recommendations based on your conclusions and their implications.

Stakeholder Communication: Communicate analysis results effectively to decision-makers and stakeholders.

Domain Expertise: Apply domain knowledge to ensure that conclusions align with the context of the problem.

Step 9: Continuous Improvement - The Iterative Process

Monitoring Outcomes: Continuously monitor the real-world outcomes of your decisions and predictions.

Model Refinement: Adapt and refine models based on new data and changing circumstances.

Iterative Analysis: Embrace an iterative approach to data analysis to maintain relevance and effectiveness.

Feedback Loop: Incorporate feedback from stakeholders and users to improve analytical processes and models.

Step 10: Ethical Considerations - Data Integrity and Responsibility

Data Privacy: Ensure that data handling respects individuals' privacy rights and complies with data protection regulations.

Bias Detection and Mitigation: Identify and mitigate bias in data and algorithms to ensure fairness.

Fairness: Strive for fairness and equitable outcomes in decision-making processes influenced by data.

Ethical Guidelines: Adhere to ethical and legal guidelines in all aspects of data analytics to maintain trust and credibility.
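A simple first check for bias is to compare how often a model's decisions favour each group. This sketch assumes hypothetical approve/reject decisions for two groups and computes the disparate impact ratio (lowest selection rate divided by highest); the 0.8 threshold is the "four-fifths" rule of thumb from US employment-selection guidance, not a universal fairness standard.

```python
from collections import defaultdict

# Hypothetical model decisions: (group, approved?)
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]


def selection_rates(decisions):
    """Share of positive decisions per group."""
    approved, total = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        total[group] += 1
        approved[group] += ok  # True counts as 1, False as 0
    return {g: approved[g] / total[g] for g in total}


rates = selection_rates(decisions)
ratio = min(rates.values()) / max(rates.values())
print(rates, f"disparate impact ratio = {ratio:.2f}")
if ratio < 0.8:  # four-fifths rule of thumb
    print("Possible adverse impact: review the features and training data.")
```

A low ratio does not prove unfairness on its own (group base rates may genuinely differ), but it is a cheap signal that the data or model deserves a closer look.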

Data analytics is an exciting and profitable field that enables people and companies to use data to make sound decisions. Understanding the fundamentals described in this guide will prepare you to start your data analytics journey, but to become a skilled data analyst, keep in mind that practice and ongoing learning are essential. If you need help implementing data analytics in your organization or want to learn more, consult professionals or sign up for specialized courses. The ACTE Institute offers comprehensive data analytics training courses, with job placement and certification, that can provide you with the knowledge and skills necessary to excel in this field. So put on your work boots, explore the resources, and start transforming your data into insight.


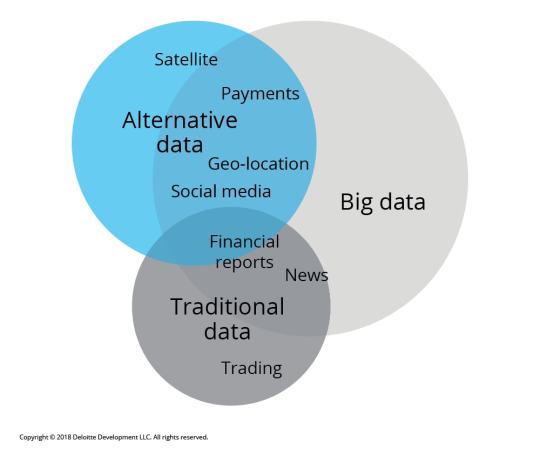

📊 Unlocking Trading Potential: The Power of Alternative Data 📊

In the fast-paced world of trading, traditional data sources—like financial statements and market reports—are no longer enough. Enter alternative data: a game-changing resource that can provide unique insights and an edge in the market. 🌐

What is Alternative Data?

Alternative data refers to non-traditional data sources that can inform trading decisions. These include:

Social Media Sentiment: Analyzing trends and sentiments on platforms like Twitter and Reddit can offer insights into public perception of stocks or market movements. 📈

Satellite Imagery: Observing traffic patterns in retail store parking lots can indicate sales performance before official reports are released. 🛰️

Web Scraping: Gathering data from e-commerce websites to track product availability and pricing trends can highlight shifts in consumer behavior. 🛒

Sensor Data: Utilizing IoT devices to track activity in real-time can give traders insights into manufacturing output and supply chain efficiency. 📡

How GPT Enhances Data Analysis

With tools like GPT, traders can sift through vast amounts of alternative data efficiently. Here’s how:

Natural Language Processing (NLP): GPT can analyze news articles, earnings calls, and social media posts to extract key insights and gauge sentiment. This allows traders to react swiftly to market changes.

Predictive Analytics: By training GPT on historical data and alternative data sources, traders can build models to forecast price movements and market trends. 📊

Automated Reporting: GPT can generate concise reports summarizing alternative data findings, saving traders time and enabling faster decision-making.

Why It Matters

Incorporating alternative data into trading strategies can lead to more informed decisions, improved risk management, and ultimately, better returns. As the market evolves, staying ahead of the curve with innovative data strategies is essential. 🚀

Join the Conversation!

What alternative data sources have you found most valuable in your trading strategy? Share your thoughts in the comments! 💬

#Trading #AlternativeData #GPT #Investing #Finance #DataAnalytics #MarketInsights


Best data extraction services in USA

In today's fiercely competitive business landscape, the strategic selection of a web data extraction services provider becomes crucial. Outsource Bigdata stands out by offering access to high-quality data through a meticulously crafted automated, AI-augmented process designed to extract valuable insights from websites. Our team ensures data precision and reliability, facilitating decision-making processes.

For more details, visit: https://outsourcebigdata.com/data-automation/web-scraping-services/web-data-extraction-services/.

About AIMLEAP

Outsource Bigdata is a division of Aimleap. AIMLEAP is an ISO 9001:2015 and ISO/IEC 27001:2013 certified global technology consulting and service provider offering AI-augmented Data Solutions, Data Engineering, Automation, IT Services, and Digital Marketing Services. AIMLEAP has been recognized as a ‘Great Place to Work®’.

With a special focus on AI and automation, we have built many AI and ML solutions, including AI-driven web scraping solutions, AI data labeling, AI-Data-Hub, and self-serve BI solutions. Since starting in 2012, we have successfully delivered IT and digital transformation projects, automation-driven data solutions, on-demand data, and digital marketing for more than 750 fast-growing companies in the USA, Europe, New Zealand, Australia, Canada, and more.

-ISO 9001:2015 and ISO/IEC 27001:2013 certified

-Served 750+ customers

-11+ years of industry experience

-98% client retention

-Great Place to Work® certified

-Global delivery centers in the USA, Canada, India & Australia

Our Data Solutions

APISCRAPY: AI driven web scraping & workflow automation platform

APISCRAPY is an AI driven web scraping and automation platform that converts any web data into ready-to-use data. The platform can extract data from websites, process data, automate workflows, classify data, and integrate ready-to-consume data into a database or deliver it in any desired format.

AI-Labeler: AI augmented annotation & labeling solution

AI-Labeler is an AI augmented data annotation platform that combines the power of artificial intelligence with human involvement to label, annotate, and classify data, allowing faster development of robust and accurate models.

AI-Data-Hub: On-demand data for building AI products & services

An on-demand AI data hub for curated, pre-annotated, and pre-classified data, allowing enterprises to obtain high-quality data easily and efficiently and exploit it for training and developing AI models.

PRICESCRAPY: AI enabled real-time pricing solution

An AI and automation driven pricing solution that provides real-time price monitoring, pricing analytics, and dynamic pricing for companies across the world.

APIKART: AI driven data API solution hub

APIKART is a data API hub that allows businesses and developers to access and integrate large volumes of data from various sources through APIs. It is a data solution hub for accessing data through APIs, allowing companies to leverage data and integrate APIs into their systems and applications.

Locations:

USA: 1-30235 14656

Canada: +1 4378 370 063

India: +91 810 527 1615

Australia: +61 402 576 615


Next-Gen B2B Lead Generation Software Platforms to Boost ROI in 2025

In 2025, precision is everything in B2B marketing. With buyers conducting extensive research before engaging with vendors, companies can no longer afford to rely on outdated or generic tools. This is why the adoption of next-gen Lead Generation Software has surged across industries. These tools are now smarter, faster, and more predictive than ever, making them central to any modern sales and marketing strategy.

Why B2B Teams Prioritize Lead Generation Software

Today’s Lead Generation Software offers more than just contact databases or form builders. It acts as a full-scale prospecting engine, equipped with:

Advanced intent analytics to identify high-interest accounts

AI-powered outreach automation that mimics human engagement

Behavioral insights to guide nurturing workflows

CRM and MAP integrations for seamless data movement

Let’s explore the top Lead Generation Software platforms driving results for B2B companies in 2025.

1. LeadIQ

LeadIQ helps B2B sales teams prospect faster and smarter. As a cloud-based Lead Generation Software, it focuses on streamlining contact capture, enrichment, and syncing to CRM platforms.

Key Features:

Real-time prospecting from LinkedIn

AI-generated email personalization

Team collaboration and task tracking

Syncs with Salesforce, Outreach, and Salesloft

2. Demandbase

Demandbase combines account intelligence with intent data, making it a powerful Lead Generation Software for enterprise-level ABM strategies. In 2025, its AI engine aims to predict purchase readiness.

Key Features:

Account-based targeting and engagement

Real-time intent signals and analytics

Predictive scoring and segmentation

Integration with MAP and CRM systems

3. AeroLeads

AeroLeads is ideal for SMBs and B2B startups looking for affordable yet effective Lead Generation Software. It enables users to find business emails and phone numbers from LinkedIn and other platforms in real time.

Key Features:

Chrome extension for live data scraping

Verified contact details with export options

Data enrichment and lead tracking

Integrates with Zapier, Salesforce, and Pipedrive

4. Prospect.io

Prospect.io provides automation-first Lead Generation Software for modern sales teams. It excels in outbound workflows that blend email and calls with analytics.

Key Features:

Multi-step email and task sequences

Lead activity tracking

Lead scoring and pipeline metrics

Gmail and CRM compatibility

5. LeadSquared

LeadSquared has become a go-to Lead Generation Software in sectors like edtech, healthcare, and finance. It combines lead acquisition, nurturing, and sales automation in a single platform.

Key Features:

Landing pages and lead capture forms

Workflow automation based on behavior

Lead distribution and scoring

Built-in calling and email tools

6. CallPage

CallPage converts website traffic into inbound calls, making it a unique Lead Generation Software tool. In 2025, businesses use it to instantly connect leads to sales reps through intelligent callback pop-ups.

Key Features:

Instant callback widgets for websites

Call tracking and lead scoring

Integration with CRMs and analytics tools

VoIP and real-time routing

7. Reply.io

Reply.io automates cold outreach across email, LinkedIn, SMS, and more. It has positioned itself as a top Lead Generation Software solution for teams focused on multichannel engagement.

Key Features:

AI-powered email writing and A/B testing

Task and call management

Real-time analytics and campaign tracking

Integration with CRMs and Zapier

8. Leadzen.ai

Leadzen.ai offers AI-enriched B2B leads through web intelligence. As a newer player in the Lead Generation Software space, it’s earning attention for delivering verified leads with context.

Key Features:

Fresh business leads with smart filters

Enriched data with social profiles and web signals

API support for real-time data syncing

GDPR-compliant lead sourcing

9. Instantly.ai

Instantly.ai is focused on scaling email outreach for demand generation. It positions itself as a self-optimizing Lead Generation Software platform using inbox rotation and performance tracking.

Key Features:

Unlimited email sending with smart rotation

Real-time inbox health and deliverability checks

AI copy testing and reply detection

CRM syncing and reporting dashboards

10. SalesBlink

SalesBlink streamlines the entire sales outreach workflow. As a holistic Lead Generation Software, it covers lead sourcing, outreach automation, and pipeline management under one roof.

Key Features:

Cold email + call + LinkedIn integration

Visual sales sequence builder

Email finder and verifier

Real-time metrics and team tracking

How to Evaluate Lead Generation Software in 2025

Selecting the right Lead Generation Software is not just about feature lists—it’s about alignment with your business model and sales process. Consider these questions:

Is your strategy inbound, outbound, or hybrid?

Do you need global data compliance (e.g., GDPR, CCPA)?

How scalable is the tool for larger teams or markets?

Does it support integration with your existing stack?

A platform that integrates seamlessly, provides enriched data, and enables multi-touch engagement can significantly accelerate your pipeline growth in 2025.

Read Full Article: https://acceligize.com/featured-blogs/best-b2b-lead-generation-software-to-use-in-2025/

About Us:

Acceligize is a leader in end-to-end global B2B demand generation solutions and performance marketing services that help technology companies identify, activate, engage, and qualify their precise target audience at the buying stage they want. We offer turnkey, full-funnel lead generation using our first-party data and an advanced audience intelligence platform that can target data sets using demographic, firmographic, intent, install-based, account-based, and lookalike models, giving our customers a competitive targeting advantage for their B2B marketing campaigns. With our combined strengths in content marketing, lead generation, data science, and home-grown industry-focused technology, we deliver over 100,000 qualified leads every month to some of the world’s leading publishers, advertisers, and media agencies for a variety of B2B targeted marketing campaigns.

Read more about our Services:

Content Syndication Leads

Marketing Qualified Leads

Sales Qualified Leads

Ifood Restaurant Data Scraping | Scrape Ifood Restaurant Data

Foodspark provides the best Ifood restaurant data scraping services in the USA, UK, Spain, and China, extracting Ifood restaurant menus and competitive pricing. Get the best Ifood Restaurant Data Scraping API at affordable prices.

#ifood restaurant data scraping#ifoods data scraping#web scraping tools#food data scraping#food data scraping services#zomato api#restaurant data scraping#grocerydatascrapingapi#fooddatascrapingservices#web scraping services#restaurant data analytics#seamless api#data scraping#data scraping services#restaurantdataextraction

Top 10 AI SDR Platforms in California to Supercharge Your Sales Pipeline

In today’s rapidly evolving sales landscape, integrating artificial intelligence into your sales development process is no longer optional—it’s essential. Sales Development Representatives (SDRs) are the backbone of B2B pipeline generation, and AI-driven SDR platforms are revolutionizing how companies in California generate leads, qualify prospects, and close deals.

Here’s a deep dive into the top 10 AI SDR platforms in California that are helping businesses streamline sales outreach, boost efficiency, and significantly increase conversion rates.

Landbase – AI-Powered Lead Discovery and Outreach

Headquartered in California, Landbase is leading the AI SDR revolution with its data-enriched platform tailored for outbound prospecting. It intelligently combines real-time data with machine learning to identify high-value leads, craft personalized messages, and engage prospects at the right moment.

Key Features:

Dynamic lead scoring

AI-personalized email sequences

CRM integrations

Smart outreach timing

Perfect for B2B sales teams looking to optimize every touchpoint, Landbase turns raw data into real opportunities.

Apollo.io – Intelligent Prospecting and Sales Automation

Based in San Francisco, Apollo.io is one of the most trusted platforms for AI sales engagement. It offers a comprehensive B2B database, AI-assisted messaging, and real-time sales analytics. Its automation features help SDRs reduce manual work and spend more time closing.

Top Tools:

Smart email templates

Data enrichment

Predictive lead scoring

Workflow automation

Apollo.io is a go-to choice for tech startups and enterprises alike.

Outreach – AI Sales Engagement That Converts

Outreach.io, a Seattle-headquartered company with a strong presence in California, provides one of the most powerful AI SDR platforms. It transforms how sales teams operate by offering AI-driven recommendations, sentiment analysis, and performance insights.

What Sets It Apart:

AI-guided selling

Multichannel engagement (email, calls, LinkedIn)

Machine learning-powered insights

Cadence optimization

Outreach is ideal for scaling sales organizations needing data-driven performance tracking.

Cognism – AI Lead Generation with Global Reach

Though originally based in the UK, Cognism has made a strong mark in the California tech ecosystem. Its AI SDR tool helps teams identify ICP (ideal customer profile) leads, comply with global data regulations, and execute personalized outreach.

Highlighted Features:

AI-enhanced contact data

Intent-based targeting

GDPR and CCPA compliance

Integrated sales intelligence

Cognism is perfect for international sales development teams based in California.

Clay – No-Code Platform for AI Sales Automation

Clay enables SDRs to build custom workflows using a no-code approach. The platform empowers sales teams to automate prospecting, research, and outreach with AI scraping and enrichment tools.

Noteworthy Tools:

LinkedIn automation

Web scraping + lead enrichment

AI content generation

Zapier and API integrations

California-based startups that value flexibility and custom workflows gravitate toward Clay.

Lavender – AI-Powered Sales Email Assistant

Lavender isn’t a full-stack SDR platform but is one of the most innovative tools on the market. It acts as an AI email coach, helping SDRs write better-performing sales emails in real time.

Key Features:

Real-time writing feedback

Personalization suggestions

Email scoring and A/B testing

AI grammar and tone check

Sales reps using Lavender have reported higher open and reply rates—a game-changer for outreach campaigns.

Regie.ai – AI Content Generation for Sales Campaigns

California-based Regie.ai blends copywriting and sales strategy into one AI platform. It allows SDRs to create personalized multichannel sequences, from cold emails to LinkedIn messages, aligned with the buyer’s journey.

Top Capabilities:

AI sales sequence builder

Persona-based content creation

A/B testing

CRM and outreach tool integrations

Regie.ai helps your SDR team speak directly to prospects’ pain points with tailored messaging.

Exceed.ai – AI Chatbot and Email Assistant for SDRs

Exceed.ai uses conversational AI to engage leads via email and chat, qualify them, and book meetings—all without human intervention. It’s a great tool for teams with high inbound traffic or looking to scale outbound efficiency.

Standout Features:

Conversational AI chatbot

Lead nurturing via email

Calendar integration

Salesforce/HubSpot compatibility

California companies use Exceed.ai to support their SDRs with 24/7 lead engagement.

Drift – AI Conversational Marketing and Sales Platform

Drift combines sales enablement and marketing automation through conversational AI. Ideal for SDRs focused on inbound sales, Drift captures site visitors and guides them through intelligent chat funnels to qualify and schedule calls.

Core Tools:

AI chatbots with lead routing

Website visitor tracking

Personalized playbooks

Real-time conversation data

Drift’s AI makes the customer journey frictionless, especially for SaaS companies in Silicon Valley.

Seamless.AI – Real-Time Lead Intelligence Platform

Seamless.AI uses real-time data scraping and AI enrichment to build verified B2B contact lists. With its Chrome extension and integration capabilities, SDRs can access lead insights while browsing LinkedIn or corporate sites.

Essential Features:

Verified contact emails and numbers

Real-time search filters

AI-powered enrichment

CRM syncing

Its ease of use and data accuracy make it a must-have for SDRs targeting California’s competitive tech market.

How to Choose the Right AI SDR Platform for Your Business

With numerous AI SDR tools available, selecting the right one depends on your business size, target market, tech stack, and sales strategy. Here are some quick tips:

Define your goals: Are you looking to scale outbound outreach, improve response rates, or automate email campaigns?

Assess integrations: Ensure the platform integrates seamlessly with your existing CRM and sales tools.

Consider customization: Choose a platform that allows flexibility for custom workflows and sequences.

Look at analytics: Prioritize platforms that offer robust data and insights to refine your strategy.

Final Thoughts

Adopting an AI SDR platform isn’t just a competitive advantage—it’s a necessity in California’s high-stakes, fast-moving sales environment. Whether you’re a startup in Palo Alto or an enterprise in Los Angeles, leveraging these AI tools can dramatically enhance your pipeline growth and sales performance.

Take the next step in modernizing your sales process by choosing the AI SDR platform that best aligns with your business needs. Let technology do the heavy lifting so your team can focus on what they do best—closing deals.

#proxies#proxy#proxyserver#residential proxy#captcha#web scraping techniques#web scraping tools#web scraping services#datascience#data analytics#automation#algorithm

Scraping Grocery Apps for Nutritional and Ingredient Data

Introduction

With health trends becoming more widespread, consumers are paying close attention to nutrition and to accurate ingredient and nutritional information. Grocery apps provide detailed information on food products, but collecting and comparing this data manually can take an inordinate amount of time. Scraping grocery apps for nutritional and ingredient data therefore offers an automated, fast way for stakeholders, whether customers, businesses, or researchers, to obtain that information.

This blog discusses why scraping nutritional data from grocery apps matters, how it works technically, the major challenges involved, and best practices for extracting reliable information. Whether for tracking diets, meeting regulatory requirements, or personalizing shopping, nutritional data scraping is extremely valuable.

Why Scrape Nutritional and Ingredient Data from Grocery Apps?

1. Health and Dietary Awareness

Consumers rely on scraped nutritional and ingredient data to monitor calorie intake, macronutrients, and allergen warnings.

2. Product Comparison and Selection

Web scraping nutritional and ingredient data helps consumers compare similar products and make informed decisions based on their dietary needs.

3. Regulatory & Compliance Requirements

Companies use nutritional and ingredient data extraction to stay compliant with food labeling regulations and to ensure fair marketing.

4. E-commerce & Grocery Retail Optimization

Web scraping nutritional and ingredient data is used by retailers for better filtering, recommendations, and comparative analysis of similar products.

5. Scientific Research and Analytics

Nutritionists and health professionals use scraped nutritional data to research diet planning, food safety, and trends in consumer behavior.

How Web Scraping Works for Nutritional and Ingredient Data

1. Identifying Target Grocery Apps

Popular grocery apps with extensive product details include:

Instacart

Amazon Fresh

Walmart Grocery

Kroger

Target Grocery

Whole Foods Market

2. Extracting Product and Nutritional Information

Scraping grocery apps involves making HTTP requests to retrieve HTML data containing nutritional facts and ingredient lists.
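As a rough illustration of this request step, the sketch below uses only Python's standard-library `urllib.request`. The product URL and the `USER_AGENT` string are hypothetical placeholders. Real grocery apps may require a login, may serve product data through a JSON API rather than HTML, or may prohibit automated access in their terms of service, so treat this as a starting point under those assumptions.

```python
import urllib.request

# Hypothetical identifier for the scraper; a descriptive User-Agent with
# contact details is a common courtesy, not a requirement of any given site.
USER_AGENT = "NutritionResearchBot/0.1 (contact: admin@example.com)"


def build_request(url: str) -> urllib.request.Request:
    """Build a GET request for a product page with an explicit User-Agent."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a product page and decode it as text.

    Requires network access; HTTPError and URLError propagate to the caller.
    """
    req = build_request(url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # Use the charset declared by the server, falling back to UTF-8.
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

For example, `fetch_html("https://grocery.example.com/product/12345")` would return that page's HTML as a string. The URL is made up. Building the request separately from sending it keeps the header logic testable without network access.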

3. Parsing and Structuring Data

Python tools such as BeautifulSoup, Scrapy, or Selenium are then used to extract the relevant fields and organize them into structured, categorized data.
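Here is a minimal sketch of the parsing step. It uses Python's built-in `html.parser` instead of BeautifulSoup, so it has no third-party dependencies. It assumes a hypothetical page layout where nutrition facts sit in a `<table class="nutrition-facts">` with one `<th>`/`<td>` pair per row. Every real grocery site uses its own markup, so the tag and class names would need to be adapted.

```python
from html.parser import HTMLParser


class NutritionTableParser(HTMLParser):
    """Collect <th>label</th><td>value</td> pairs from a nutrition table.

    Assumes hypothetical markup: <table class="nutrition-facts"> with one
    nutrient per row. Real grocery pages differ and need their own rules.
    """

    def __init__(self) -> None:
        super().__init__()
        self.in_table = False    # True while inside the nutrition table
        self.current_tag = None  # "th" or "td" while inside a cell
        self.label = None        # last header text, waiting for its value
        self.facts = {}

    def handle_starttag(self, tag, attrs):
        # Boolean attributes have value None, hence the `or ""`.
        classes = (dict(attrs).get("class") or "").split()
        if tag == "table" and "nutrition-facts" in classes:
            self.in_table = True
        elif self.in_table and tag in ("th", "td"):
            self.current_tag = tag

    def handle_endtag(self, tag):
        if tag == "table":
            self.in_table = False
        elif tag in ("th", "td"):
            self.current_tag = None

    def handle_data(self, data):
        text = data.strip()
        if not text or not self.in_table or self.current_tag is None:
            return
        if self.current_tag == "th":
            self.label = text
        elif self.label is not None:
            self.facts[self.label] = text
            self.label = None


def parse_nutrition(html: str) -> dict:
    """Return a {nutrient label: raw value string} dict for one page."""
    parser = NutritionTableParser()
    parser.feed(html)
    parser.close()
    return parser.facts
```

Feeding it a page that contains such a table returns a plain dictionary like `{"Calories": "120 kcal", "Protein": "15 g"}`. A later cleaning step can then normalize those raw strings.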

4. Storing and Analyzing Data

The cleaned data is stored in JSON, CSV, or databases for easy access and analysis.
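One way to sketch this storage step is with Python's standard library: the same records are written as JSON, as CSV, and into an SQLite table. The field names (`product_name`, `brand`, `calories_kcal`, `protein_g`) are illustrative assumptions, not a fixed schema. PostgreSQL or MongoDB would follow the same pattern with their own client libraries.

```python
import csv
import io
import json
import sqlite3

# Illustrative flat schema; adjust to the fields actually scraped.
FIELDS = ["product_name", "brand", "calories_kcal", "protein_g"]


def to_json(records: list) -> str:
    """Serialize records as a pretty-printed JSON array."""
    return json.dumps(records, indent=2, ensure_ascii=False)


def to_csv(records: list) -> str:
    """Write records as CSV text; keys outside FIELDS are ignored."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def to_sqlite(records: list, conn: sqlite3.Connection) -> None:
    """Insert records into a `products` table, creating it if needed."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        "product_name TEXT, brand TEXT, calories_kcal REAL, protein_g REAL)"
    )
    # Named placeholders read the matching keys from each dict.
    conn.executemany(
        "INSERT INTO products VALUES "
        "(:product_name, :brand, :calories_kcal, :protein_g)",
        records,
    )
    conn.commit()
```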

5. Displaying Information for End Users

Extracted nutritional and ingredient data can be displayed in dashboards, diet tracking apps, or regulatory compliance tools.

Essential Data Fields for Nutritional Data Scraping

1. Product Details

Product Name

Brand

Category (e.g., dairy, beverages, snacks)

Packaging Information

2. Nutritional Information

Calories

Macronutrients (Carbs, Proteins, Fats)

Sugar and Sodium Content

Fiber and Vitamins

3. Ingredient Data

Full Ingredient List

Organic/Non-Organic Label

Preservatives and Additives

Allergen Warnings

4. Additional Attributes

Expiry Date

Certifications (Non-GMO, Gluten-Free, Vegan)

Serving Size and Portions

Cooking Instructions
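The field groups above map naturally onto a typed record. This sketch uses Python dataclasses. The attribute names and units (grams, milligrams for sodium, kcal for energy) are assumptions chosen for illustration. Optional fields default to `None` because many listings omit some of them.

```python
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class NutritionFacts:
    calories_kcal: Optional[float] = None
    carbs_g: Optional[float] = None
    protein_g: Optional[float] = None
    fat_g: Optional[float] = None
    sugar_g: Optional[float] = None
    sodium_mg: Optional[float] = None
    fiber_g: Optional[float] = None
    vitamins: dict = field(default_factory=dict)  # e.g. {"Vitamin D": "2 mcg"}


@dataclass
class ProductRecord:
    # 1. Product details
    product_name: str
    brand: str
    category: str  # e.g. "dairy", "beverages", "snacks"
    packaging: Optional[str] = None
    # 2. Nutritional information
    nutrition: NutritionFacts = field(default_factory=NutritionFacts)
    # 3. Ingredient data
    ingredients: list = field(default_factory=list)
    organic: Optional[bool] = None
    additives: list = field(default_factory=list)
    allergens: list = field(default_factory=list)
    # 4. Additional attributes
    expiry_date: Optional[str] = None  # ISO 8601 string, e.g. "2025-06-30"
    certifications: list = field(default_factory=list)  # "Non-GMO", "Vegan"
    serving_size: Optional[str] = None
    cooking_instructions: Optional[str] = None
```

`asdict(record)` turns a record, including the nested `NutritionFacts`, into plain dictionaries ready for JSON or CSV export.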

Challenges in Scraping Nutritional and Ingredient Data

1. Anti-Scraping Measures

Many grocery apps implement CAPTCHAs, IP bans, and bot detection mechanisms to prevent automated data extraction.

2. Dynamic Webpage Content

JavaScript-based content loading complicates extraction without using tools like Selenium or Puppeteer.

3. Data Inconsistency and Formatting Issues

Different brands and retailers display nutritional information in varied formats, requiring extensive data normalization.
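To make the normalization problem concrete, here is a small sketch that converts label strings such as `"1200 mg"`, `"15g"`, or `"500 kJ"` into canonical units: grams for mass and kcal for energy. The unit table and the regular expression are assumptions that cover only a few common formats. For example, the sketch rejects thousands separators like `"1,200 mg"` with a `ValueError` rather than guessing.

```python
import re

# Divisors that convert each unit to a canonical one (assumptions for this sketch).
# Mass nutrients -> grams.
_MASS_DIVISOR = {"g": 1, "mg": 1_000, "mcg": 1_000_000, "µg": 1_000_000}
# Energy -> kcal. On US food labels "Calories"/"cal" already means kcal.
_ENERGY_DIVISOR = {"kcal": 1, "cal": 1, "calories": 1, "kj": 4.184}

# Optional "<" (as in "<1 g"), a number, then an optional unit.
_QUANTITY = re.compile(r"^\s*(?:<\s*)?(\d+(?:\.\d+)?)\s*([a-zµ]+)?\s*$", re.IGNORECASE)


def normalize_quantity(raw: str, default_unit: str = "g") -> tuple:
    """Convert a label string such as "1200 mg" to (value, canonical_unit)."""
    m = _QUANTITY.match(raw)
    if not m:
        raise ValueError(f"unrecognized quantity: {raw!r}")
    value = float(m.group(1))
    unit = (m.group(2) or default_unit).lower()
    if unit in _MASS_DIVISOR:
        return value / _MASS_DIVISOR[unit], "g"
    if unit in _ENERGY_DIVISOR:
        return value / _ENERGY_DIVISOR[unit], "kcal"
    raise ValueError(f"unknown unit: {unit!r}")
```

Treating `"<1 g"` as 1 g is itself a simplifying assumption. A stricter pipeline might store the bound separately.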

4. Legal and Ethical Considerations

Ensuring compliance with data privacy regulations and robots.txt policies is essential to avoid legal risks.

Best Practices for Scraping Grocery Apps for Nutritional Data

1. Use Rotating Proxies and Headers

Changing IP addresses and user-agent strings reduces the likelihood of detection and blocking.

2. Implement Headless Browsing for Dynamic Content

Headless browsers driven by Selenium or Puppeteer can render and interact with JavaScript-loaded nutritional data.

3. Schedule Automated Scraping Jobs

Regularly scheduled scraping keeps nutritional information up to date and accurate for comparisons.
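Here is a minimal sketch of a recurring scrape job in plain Python. In production, cron or a task scheduler usually fills this role. The `run_periodically` helper here is hypothetical. It waits `interval_s` seconds between run starts and keeps going when a run fails. It also accepts injectable `sleep` and `clock` functions, so it can be tested without actually waiting.

```python
import time
from typing import Callable


def run_periodically(job: Callable[[], None], interval_s: float, runs: int,
                     sleep: Callable[[float], None] = time.sleep,
                     clock: Callable[[], float] = time.monotonic) -> int:
    """Run `job` `runs` times, starting each run `interval_s` after the last start.

    Returns the number of runs that raised an exception; failures do not stop
    the loop, so one bad scrape does not cancel the schedule.
    """
    failures = 0
    for i in range(runs):
        started = clock()
        try:
            job()
        except Exception:
            failures += 1  # in practice, log the error here
        if i < runs - 1:
            # Subtract the time the job took so runs stay roughly on schedule.
            elapsed = clock() - started
            sleep(max(0.0, interval_s - elapsed))
    return failures
```

A daily refresh might look like `run_periodically(scrape_all_products, interval_s=24 * 3600, runs=7)`, where `scrape_all_products` is a hypothetical function wrapping the fetch, parse, and store steps.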

4. Clean and Standardize Data

Using data cleaning and NLP techniques helps resolve inconsistencies in ingredient naming and formatting.
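A small rule-based sketch of ingredient cleanup follows; it stands in for the heavier NLP approaches mentioned above. It splits an ingredient list on commas that are not inside parentheses and lowercases each entry. It then drops parenthetical sub-ingredients and stray punctuation, and maps a few synonyms. The `SYNONYMS` table is a hypothetical example; a real system would maintain a much larger one.

```python
import re

# Hypothetical synonym table mapping label variants to one canonical name.
SYNONYMS = {
    "cane sugar": "sugar",
    "sucrose": "sugar",
    "sodium chloride": "salt",
    "sea salt": "salt",
}


def split_ingredients(text: str) -> list:
    """Split on commas/semicolons that are not inside parentheses or brackets."""
    parts, current, depth = [], [], 0
    for ch in text:
        if ch in "([":
            depth += 1
        elif ch in ")]" and depth > 0:
            depth -= 1
        if ch in ",;" and depth == 0:
            parts.append("".join(current))
            current = []
        else:
            current.append(ch)
    parts.append("".join(current))
    return [p.strip() for p in parts if p.strip()]


def normalize_ingredient(name: str) -> str:
    """Lowercase, drop sub-ingredient lists and punctuation, map synonyms."""
    name = name.lower()
    name = re.sub(r"\s*[(\[].*?[)\]]", "", name)     # drop "(...)" / "[...]"
    name = re.sub(r"^ingredients?\s*:\s*", "", name)  # drop a leading label
    name = re.sub(r"[^\w\s-]", "", name)              # drop '*', '.', etc.
    name = re.sub(r"\s+", " ", name).strip()
    return SYNONYMS.get(name, name)
```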

5. Comply with Ethical Web Scraping Standards

Respecting robots.txt directives and seeking permission where necessary ensures responsible data extraction.
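Python's standard library includes `urllib.robotparser` for checking robots.txt rules. This sketch parses a made-up robots.txt from a string so it runs offline. Against a real site, you would call `set_url()` with the site's robots.txt URL and then `read()` instead. The bot name and paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt for illustration; real sites publish their own.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""


def make_robot_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text (use set_url() + read() online)."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp
```

Before each request, a scraper would call `rp.can_fetch(bot_name, url)`. It can also honor `rp.crawl_delay(bot_name)` by waiting that many seconds between requests when a value is set.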

Building a Nutritional Data Extractor Using Web Scraping APIs

1. Choosing the Right Tech Stack

Programming Language: Python or JavaScript

Scraping Libraries: Scrapy, BeautifulSoup, Selenium

Storage Solutions: PostgreSQL, MongoDB, Google Sheets

APIs for Automation: CrawlXpert, Apify, Scrapy Cloud

2. Developing the Web Scraper

A Python-based scraper using Scrapy or Selenium can fetch and structure nutritional and ingredient data effectively.

3. Creating a Dashboard for Data Visualization

A user-friendly web interface built with React.js or Flask can display comparative nutritional data.

4. Implementing API-Based Data Retrieval

Using APIs ensures real-time access to structured and up-to-date ingredient and nutritional data.
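Below is a sketch of API-based retrieval over a paginated JSON endpoint. The response shape is an assumption for illustration, not the format of any particular provider: an `items` list plus a `next_page` number that is `null` on the last page. `fetch_page` is injected, so in practice the same loop can wrap an HTTP client, and in tests a dictionary of canned responses.

```python
import json
from typing import Callable, Iterator, Optional


def iter_products(fetch_page: Callable[[int], str], start_page: int = 1,
                  max_pages: int = 100) -> Iterator[dict]:
    """Yield product dicts from a paginated JSON API.

    Assumes a hypothetical response shape:
      {"items": [{"name": ..., "nutrition": {...}}, ...], "next_page": 2}
    where "next_page" is null on the last page. `max_pages` guards against
    an endpoint that never stops paginating.
    """
    page: Optional[int] = start_page
    pages_seen = 0
    while page is not None and pages_seen < max_pages:
        payload = json.loads(fetch_page(page))
        yield from payload.get("items", [])
        page = payload.get("next_page")
        pages_seen += 1
```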

Future of Nutritional Data Scraping with AI and Automation

1. AI-Enhanced Data Normalization

Machine learning models can standardize nutritional data for accurate comparisons and predictions.

2. Blockchain for Data Transparency

Decentralized food data storage could improve trust and traceability in ingredient sourcing.

3. Integration with Wearable Health Devices

Future innovations may allow direct nutritional tracking from grocery apps to smart health monitors.

4. Customized Nutrition Recommendations

With the help of AI, grocery apps could offer personalized meal planning based on nutritional and ingredient data collected from the web.

Conclusion

Automated web scraping of grocery apps for nutritional and ingredient data provides consumers, businesses, and researchers with accurate dietary information. More than a tool for price checking, web scraping now supports many aspects of modern nutritional analytics.

If you are looking for an advanced nutritional data scraping solution, CrawlXpert is your trusted partner. We provide web scraping services that scrape, process, and analyze grocery nutritional data. Work with CrawlXpert today and let web scraping turn your nutritional and ingredient data into better decisions and business insights!

Know More: https://www.crawlxpert.com/blog/scraping-grocery-apps-for-nutritional-and-ingredient-data

#scrapingnutritionaldatafromgrocery#ScrapeNutritionalDatafromGroceryApps#NutritionalDataScraping#NutritionalDataScrapingwithAI

Adaptive Web Scraping: The Future of Reliable Data Extraction from Dynamic Websites

In today’s fast-paced digital world, #websites are constantly evolving — layouts shift, #HTML structures change, and #dynamic content is rendered differently across platforms. Traditional #scraping techniques often break under these changes, leading to missed #data, system failures, and costly delays.

At #iWebDataScraping, we’ve built #adaptive scraping solutions that are designed to keep up. Our cutting-edge technology uses #intelligentSelectors and #AI-drivenModels that seamlessly adjust to even the smallest HTML or structural changes, ensuring uninterrupted, high-accuracy #dataDelivery — no matter how frequently the site updates.

💡 Whether you're extracting #pricingData from #eCommerce platforms, tracking #reviews across marketplaces, or pulling listings from real-time #booking or #foodDelivery sites — adaptive scraping ensures you never miss a beat.

🔧 Key Features:

#AI-powered selectors that automatically adapt to changing layouts

#RealTime monitoring to avoid #dataDowntime

Seamless integration with your #analytics stack

#Scalable solutions for large #dataPipelines

Support for complex, #JavaScript-heavy websites
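The adaptive idea can be approximated by trying several extraction rules in order and recording which one matched. This is much simpler than the AI-driven approach described in this post: it is a rule-based fallback sketch, not iWebDataScraping's method. The page layouts and patterns are hypothetical, and regular expressions stand in for a proper HTML parser to keep it short.

```python
import re
from typing import Callable, Optional

Extractor = Callable[[str], Optional[str]]


def regex_extractor(pattern: str) -> Extractor:
    """Wrap a regex with one capture group as an extractor function."""
    compiled = re.compile(pattern, re.IGNORECASE | re.DOTALL)

    def extract(html: str) -> Optional[str]:
        m = compiled.search(html)
        return m.group(1).strip() if m else None

    return extract


# Hypothetical price rules for an imagined product page, newest layout first.
PRICE_EXTRACTORS = [
    regex_extractor(r'data-testid="price"[^>]*>\s*([^<]+)<'),
    regex_extractor(r'class="product-price"[^>]*>\s*([^<]+)<'),
    regex_extractor(r'"price"\s*:\s*"?([\d.]+)'),  # embedded JSON fallback
]


def extract_with_fallback(html: str, extractors: list) -> tuple:
    """Return (value, index of the rule that matched), or (None, -1).

    Tracking which rule matched lets a monitor alert when the primary rule
    stops working, which usually means the site layout has changed.
    """
    for i, extractor in enumerate(extractors):
        value = extractor(html)
        if value:
            return value, i
    return None, -1
```

If the matched index starts drifting away from `0`, that is a cheap signal that the primary layout has changed and the rules need review.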

When your business relies on accurate, real-time insights, don't let unstable scrapers hold you back. Upgrade to #adaptiveWebScraping and extract with confidence.
