#AI Augmentation

Explore tagged Tumblr posts

Text

From Concept to Creation: Efficient RAG Systems

When creating a RAG (Retrieval Augmented Generation) system, you infuse a Large Language Model (LLM) with fresh, current knowledge. The goal is to make the LLM's responses to queries more factual and reduce instances that might produce incorrect or "hallucinated '' information.

A RAG system is a sophisticated blend of generative AI's creativity and a search engine's precision. It operates through several critical components working harmoniously to deliver accurate and relevant responses.

Retrieval: This component acts first, scouring a vast database to find information that matches the query. It uses advanced algorithms to ensure the data it fetches is relevant and current.

Augmentation: This engine weaves the found data into the query following retrieval. This enriched context allows for more informed and precise responses.

Generation: This engine crafts the response with the context now broadened by external data. It relies on a powerful language model to generate answers that are accurate and tailored to the enhanced input.

We can further break down this process into the following stages:

Data Indexing: The RAG journey begins by creating an index where data is collected and organized. This index is crucial as it guides the retrieval engine to the necessary information.

Input Query Processing: When a user poses a question, the system processes this input, setting the stage for the retrieval engine to begin its search.

Search and Ranking: The engine sifts through the indexed data, ranking the findings based on how closely they match the user's query.

Prompt Augmentation: Next, we weave the top-ranked pieces of information into the initial query. This enriched prompt provides a deeper context for crafting the final response.

Response Generation: With the augmented prompt in hand, the generation engine crafts a well-informed and contextually relevant response.

Evaluation: Regular evaluations compare its effectiveness to other methods and assess any adjustments to ensure the RAG system performs at its best. This step measures the accuracy, reliability, and response time, ensuring the system's quality remains high.

RAG Enhancements:

To enhance the effectiveness and precision of your RAG system, we recommend the following best practices:

Quality of Indexed Data: The first step in boosting a RAG system's performance is to improve the data it uses. This means carefully selecting and preparing the data before it's added to the system. Remove any duplicates, irrelevant documents, or inaccuracies. Regularly update documents to keep the system current. Clean data leads to more accurate responses from your RAG.

Optimize Index Structure: Adjusting the size of the data chunks your RAG system retrieves is crucial. Finding the perfect balance between too small and too large can significantly impact the relevance and completeness of the information provided. Experimentation and testing are vital to determining the ideal chunk size.

Incorporate Metadata: Adding metadata to your indexed data can drastically improve search relevance and structure. Use metadata like dates for sorting or specific sections in scientific papers to refine search results. Metadata adds a layer of precision atop your standard vector search.

Mixed Retrieval Methods: Combine vector search with keyword search to capture both advantages. This hybrid approach ensures you get semantically relevant results while catching important keywords.

ReRank Results: After retrieving a set of documents, reorder them to highlight the most relevant ones. With Rerank, we can improve your models by re-organizing your results based on certain parameters. There are many re-ranker models and techniques that you can utilize to optimize your search results.

Prompt Compression: Post-process the retrieved contexts by eliminating noise and emphasizing essential information, reducing the overall context length. Techniques such as Selective Context and LLMLingua can prioritize the most relevant elements.

Hypothetical Document Embedding (HyDE): Generate a hypothetical answer to a query and use it to find actual documents with similar content. This innovative approach demonstrates improved retrieval performance across various tasks.

Query Rewrite and Expansion: Before processing a query, have an LLM rewrite it to express the user's intent better, enhancing the match with relevant documents. This step can significantly refine the search process.

By implementing these strategies, businesses can significantly improve the functionality and accuracy of their RAG systems, leading to more effective and efficient outcomes.

Using Karini AI’s purpose-built platform for GenAIOps, you can build production-grade, efficient RAG systems within minutes. Reach out to us to discuss your use case.

#Generative AI#RAG systems#GenAIOps platform#efficient response generation#data indexing#AI augmentation#artificial intelligence#karini ai#machine learning#perplexity ai#llm#genaiops

0 notes

Text

A Groundbreaking Study Conducted by IBM Tells Workers to Embrace AI

The Comprehensive Study by IBM: Illuminating the AI LandscapeA Vision Beyond Apprehension: Augmenting Roles with AIStrategic Reskilling and AI Integration: A Recipe for SuccessEvolving Skills: From Technical Proficiency to Interpersonal AcumenSeizing the Future: Leveraging AI for Unprecedented Growth The rapid advancements in artificial intelligence (AI) have ignited both excitement and…

View On WordPress

#AI augmentation#AI impact on businesses#AI integration#embracing AI revolution#IBM study#innovation and growth#reskilling for success#revenue growth#shifting skill priorities#technology and human qualities#workforce evolution

0 notes

Text

#future#cyberpunk aesthetic#futuristic#futuristic city#cyberpunk artist#cyberpunk city#cyberpunkart#concept artist#digital art#digital artist#augment human#artificial human intelligence#human into robots#ai#agi

64 notes

·

View notes

Text

"Amid the Ruins" (0001)

#ai man#cybernetic#bionics#transhuman#augmentation#ruins#dystopian world#sci fi#ai generated#ai artwork#gay ai art#art direction#gay sci fi#black male body#black male beauty#male form#male figure#male art#male physique#abdominals#insect wings#sunglasses

59 notes

·

View notes

Text

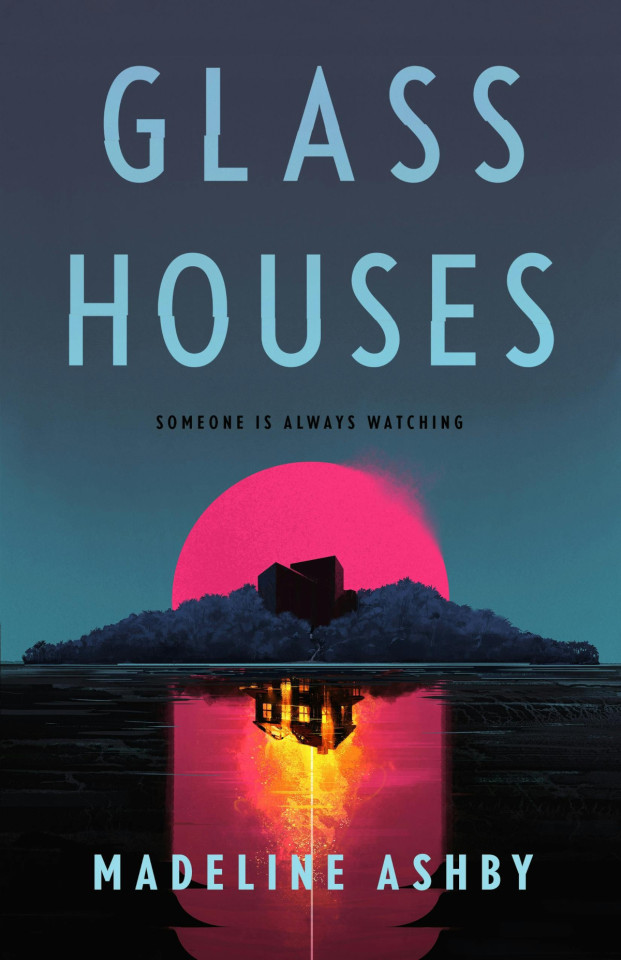

Madeline Ashby’s ‘Glass Houses’

I'm coming to BURNING MAN! On TUESDAY (Aug 27) at 1PM, I'm giving a talk called "DISENSHITTIFY OR DIE!" at PALENQUE NORTE (7&E). On WEDNESDAY (Aug 28) at NOON, I'm doing a "Talking Caterpillar" Q&A at LIMINAL LABS (830&C).

Glass Houses – published today by Tor Books – is Madeline Ashby's terrifying technothriller: it's an internet-of-things haunted house story that perfectly captures (and skewers) toxic tech culture while also running a savage whodunnit plot that'll keep you guessing to the end:

https://us.macmillan.com/books/9780765382924/glasshouses

Kristen is the "Chief Emotional Manager" for Wuv, a hot startup that has defined the new field of "affective computing," which is when a computer tells you what everyone else around you is really feeling, based on the unsuppressible tells emitted by their bodies, voices and gadgets.

"Chief Emotional Manager" is just a cutesy tech euphemism for "chief of staff." The only person whose emotions Kristen really manages is Sumter William, the boyish billionaire CEO and founder of Wuv. Sumter hired Kirsten because they share a key developmental trait: both were orphaned at an early age and had to raise themselves in a media spotlight.

Both Sumter and Kristen had been in the spotlight even before their parents' death, though. Sumter was the focus of the intense attention that the children of celebrity billionaires always come in for. Kristen, though, was thrust into the spotlight by her parents: her prepper cryptocurrency hustling father, and her tradwife mother, whose livestreams of Kristen's childhoods involved letting the audience vote everything from whether she'd get dessert after dinner to whether her mother should give her bangs.

Kristen's parents died the most Extremely Online death imaginable: a cryptocurrency price-spike sent her father's mining rigs into overdrive, and when they burst into flame, the IoT house system failed to alert him until it was too late. The fire left Kristen both alone and horribly burned, with scars over much of her body.

Managing Sumter through Wuv's tumultuous launch is hard work for Kristen, but at last, it's paid off. The company has been acquired, making Kristen – and all her coworkers on the founding core team – into instant millionaires. They're flying to a lavish celebration in an autonomous plane that Sumter chartered when the action begins: the plane has a malfunction and crashes into a desert island, killing all but ten of the Wuvvies.

As the survivors explore the island, they discover only one sign of human habitation: a huge, brutalist, featureless black glass house, which initially rebuffs all their efforts to enter it. But once they gain entry, they discover that the house is even harder to leave.

This is the setup for a haunted house story where the house seems to be an unknown billionaire prepper's IoT house of horrors. As the survivors of the crash suffer horrible injuries and deaths on the island, the remaining Wuvvies bolt themselves inside, setting up a locked-room whodunnit that runs in parallel.

This is a fantastic dramatic engine for Ashby's specialty: extremely pointed techno-criticism. The ensuing chapters, which flip back and forth between the story of Wuv's rise and rise to a top tech company, and the company's surviving staff being terrorized on a paradisaical tropical aisle, flesh out Ashby's speculation and the critique it embodies.

For example, there's the political culture of Ashby's future America. Wuv are a Canadian company, headquartered in Toronto, and we gradually come to understand that Canada is the beneficiary of an exodus of tech companies from the US following a kind of soft Christian Dominionist takeover (Kristen and Sumter often have to wrangle rules about whether women are allowed to enter the USA in the company of men they aren't married to and who aren't their brothers or fathers).

The flashbacks to this America are beautifully and subtly drawn, especially the scenes in Vegas, which manages to still be Vegas, even amidst a kind national, legally mandated Handmaid's Tale LARP. Ashby uses her futuristic speculation to illuminate the present, that standing wave where the past is becoming the future. Like everything in the shadows of a haunted house tale, this stuff will make the hair on the back of your neck stand on end.

I'm a big Madeline Ashby fan. I have the honor of having published her first story, when I was co-editing one of the Tesseracts anthologies of Canadian SF. I've read and really enjoyed every one of her books, but this one feels like a step-change in Ashby's career, a leveling up to something even more haunting and brilliant than her impressive back-catalog.

Madeline and I will be live at Chevalier's Books in LA on Aug 16 as part of her Glass Houses tour:

https://www.eventbrite.com/e/book-talk-madeline-ashbys-glass-houses-tickets-965286486867

Community voting for SXSW is live! If you wanna hear RIDA QADRI and me talk about how GIG WORKERS can DISENSHITTIFY their jobs with INTEROPERABILITY, VOTE FOR THIS ONE!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/08/13/influencers/#affective-computing

#pluralistic#books#reviews#thrillers#science fiction#gift guide#madeline ashby#locked room mysteries#affective computing#ai#silicon valley#influencers#augmented reality

90 notes

·

View notes

Text

#politics#political#us politics#donald trump#news#president trump#elon musk#american politics#jd vance#law#deepfake#deep fakes#ai#video#augmented reality#hollywood#celebrities#us news#america#maga#president donald trump#make america great again#republicans#republican#democrats#economics#elon#congress#senate#house of representatives

31 notes

·

View notes

Text

AM: So you're saying that NOT ONLY can i fuck up a dude and put them in this 80 tonne death machine but i can also make the death machine fucked up?

AllMind: Thats not ideal, but yeah.

AM: *using the maintenance manipulator arms to puppet an AC around like a doll* LOOK AT ME IM COMMANDER SHITFUCK!

#AM#AllMind#armored core#mecha#armored core 6#handler walter#augmented human c4 621#ayre#ac6#fucked up#ai folks ruining humanity in the dumbest ways#ihnmaims#i have no mouth and i must scream

82 notes

·

View notes

Text

#Cyberpunk#AI Woman#Futuristic#HD Babe#Techno Beauty#Neon Cityscape#Robotic Enhancements#Sci-fi Fashion#Cybernetic#Virtual Reality#High-tech#Neon Lights#Augmented Reality#Artificial Intelligence#Digital Femme#Futuristic Makeup#Dystopian Aesthetic#Cybernetic Eyes#Urban Techscape#Holographic Interface

25 notes

·

View notes

Note

To what extent do you use AI?

- I don’t, because I am ethically against it

- I don’t, because I don’t need it

- I don’t, because I don’t trust it

- I do, for mundane/small tasks (writing emails, etc)

- I do, for searching the web

- I do, for generating images/music/media/significant amounts of text

8 notes

·

View notes

Text

Baroque Punk

Fotor App enhancement of my original sketch.

6 notes

·

View notes

Text

New oc :3:3:3:3:3:3:3:3:3:33:3 + a info dump

#digtal art#character art#visual art#oc artwork#oc#oc art#artwork#art#parasitenumber611#Rovee-2500#robot oc#augmented ascension#AI#NOT EVIL AI#lesbian#quirked up#new oc#oc info dump#Rovee#hover board#info dump

17 notes

·

View notes

Text

RAG Systems Reimagined: Efficiency at Its Best

When creating a RAG (Retrieval Augmented Generation) system, you infuse a Large Language Model (LLM) with fresh, current knowledge. The goal is to make the LLM's responses to queries more factual and reduce instances that might produce incorrect or "hallucinated '' information.

A RAG system is a sophisticated blend of generative AI's creativity and a search engine's precision. It operates through several critical components working harmoniously to deliver accurate and relevant responses.

Retrieval: This component acts first, scouring a vast database to find information that matches the query. It uses advanced algorithms to ensure the data it fetches is relevant and current.

Augmentation: This engine weaves the found data into the query following retrieval. This enriched context allows for more informed and precise responses.

Generation: This engine crafts the response with the context now broadened by external data. It relies on a powerful language model to generate answers that are accurate and tailored to the enhanced input.

We can further break down this process into the following stages:

Data Indexing: The RAG journey begins by creating an index where data is collected and organized. This index is crucial as it guides the retrieval engine to the necessary information.

Input Query Processing: When a user poses a question, the system processes this input, setting the stage for the retrieval engine to begin its search.

Search and Ranking: The engine sifts through the indexed data, ranking the findings based on how closely they match the user's query.

Prompt Augmentation: Next, we weave the top-ranked pieces of information into the initial query. This enriched prompt provides a deeper context for crafting the final response.

Response Generation: With the augmented prompt in hand, the generation engine crafts a well-informed and contextually relevant response.

Evaluation: Regular evaluations compare its effectiveness to other methods and assess any adjustments to ensure the RAG system performs at its best. This step measures the accuracy, reliability, and response time, ensuring the system's quality remains high.

RAG Enhancements:

To enhance the effectiveness and precision of your RAG system, we recommend the following best practices:

Quality of Indexed Data: The first step in boosting a RAG system's performance is to improve the data it uses. This means carefully selecting and preparing the data before it's added to the system. Remove any duplicates, irrelevant documents, or inaccuracies. Regularly update documents to keep the system current. Clean data leads to more accurate responses from your RAG.

Optimize Index Structure: Adjusting the size of the data chunks your RAG system retrieves is crucial. Finding the perfect balance between too small and too large can significantly impact the relevance and completeness of the information provided. Experimentation and testing are vital to determining the ideal chunk size.

Incorporate Metadata: Adding metadata to your indexed data can drastically improve search relevance and structure. Use metadata like dates for sorting or specific sections in scientific papers to refine search results. Metadata adds a layer of precision atop your standard vector search.

Mixed Retrieval Methods: Combine vector search with keyword search to capture both advantages. This hybrid approach ensures you get semantically relevant results while catching important keywords.

ReRank Results: After retrieving a set of documents, reorder them to highlight the most relevant ones. With Rerank, we can improve your models by re-organizing your results based on certain parameters. There are many re-ranker models and techniques that you can utilize to optimize your search results.

Prompt Compression: Post-process the retrieved contexts by eliminating noise and emphasizing essential information, reducing the overall context length. Techniques such as Selective Context and LLMLingua can prioritize the most relevant elements.

Hypothetical Document Embedding (HyDE): Generate a hypothetical answer to a query and use it to find actual documents with similar content. This innovative approach demonstrates improved retrieval performance across various tasks.

Query Rewrite and Expansion: Before processing a query, have an LLM rewrite it to express the user's intent better, enhancing the match with relevant documents. This step can significantly refine the search process.

By implementing these strategies, businesses can significantly improve the functionality and accuracy of their RAG systems, leading to more effective and efficient outcomes.

Using Karini AI’s purpose-built platform for GenAIOps, you can build production-grade, efficient RAG systems within minutes.

About Karini AI:

Fueled by innovation, we're making the dream of robust Generative AI systems a reality. No longer confined to specialists, Karini.ai empowers non-experts to participate actively in building/testing/deploying Generative AI applications. As the world's first GenAIOps platform, we've democratized GenAI, empowering people to bring their ideas to life – all in one evolutionary platform.

Contact:

Jerome Mendell

(404) 891-0255

#artificial intelligence#generative ai#karini ai#machine learning#genaiops#RAG Systems#Retrieval Augmented Generation#Data Intexing#AI Augmentation

0 notes

Text

Researchers in the emerging field of spatial computing have developed a prototype augmented reality headset that uses holographic imaging to overlay full-color, 3D moving images on the lenses of what would appear to be an ordinary pair of glasses. Unlike the bulky headsets of present-day augmented reality systems, the new approach delivers a visually satisfying 3D viewing experience in a compact, comfortable, and attractive form factor suitable for all-day wear. “Our headset appears to the outside world just like an everyday pair of glasses, but what the wearer sees through the lenses is an enriched world overlaid with vibrant, full-color 3D computed imagery,” said Gordon Wetzstein, an associate professor of electrical engineering and an expert in the fast-emerging field of spatial computing. Wetzstein and a team of engineers introduce their device in a new paper in the journal Nature.

Continue Reading.

#Science#Technology#Electrical Engineering#Spatial Computing#Holography#Augmented Reality#Virtual Reality#AI#Artifical Intelligence#Machine Learning#Stanford

53 notes

·

View notes

Text

some test to produce photo-realistic render based on a drawing, using Stable diffusion.

#stable diffusion#illustration#photorealistic#ai#ia#disney#fan art#aladdin#claude frollo#pocahontas#kuzco#artificial intelligence#augmented artist#animation#expriment

87 notes

·

View notes

Text

"Amid the Ruins" (0002)

(More of this series)

0001

#ai man#cybernetic#bionics#transhuman#augmentation#ruins#dystopian world#sci fi#ai generated#ai artwork#gay ai art#art direction#gay sci fi#black male body#black male beauty#male form#male figure#male art#male physique#abdominals#sunglasses

26 notes

·

View notes

Text

Simplify Decentralized Payments with a Unified Cash Collection Application

In a world where financial accountability is non-negotiable, Atcuality provides tools that ensure your field collections are as reliable as your core banking or ERP systems. Designed for enterprises that operate across multiple regions or teams, our cash collection application empowers agents to accept, log, and report payments using just their mobile devices. With support for QR-based transactions, offline syncing, and instant reconciliation, it bridges the gap between field activities and central operations. Managers can monitor performance in real-time, automate reporting, and minimize fraud risks with tamper-proof digital records. Industries ranging from insurance to public sector utilities trust Atcuality to improve revenue assurance and accelerate their collection cycles. With API integrations, role-based access, and custom dashboards, our application becomes the single source of truth for your field finance workflows.

#ai applications#artificial intelligence#augmented and virtual reality market#augmented reality#website development#emailmarketing#information technology#web design#web development#digital marketing#cash collection application#custom software development#custom software services#custom software solutions#custom software company#custom software design#custom application development#custom app development#application development#applications#iot applications#application security#application services#app development#app developers#app developing company#app design#software development#software testing#software company

4 notes

·

View notes