#AI solution architecture platform



#AI Factory#AI Cost Optimize#Responsible AI#AI Security#AI in Security#AI Integration Services#AI Proof of Concept#AI Pilot Deployment#AI Production Solutions#AI Innovation Services#AI Implementation Strategy#AI Workflow Automation#AI Operational Efficiency#AI Business Growth Solutions#AI Compliance Services#AI Governance Tools#Ethical AI Implementation#AI Risk Management#AI Regulatory Compliance#AI Model Security#AI Data Privacy#AI Threat Detection#AI Vulnerability Assessment#AI proof of concept tools#End-to-end AI use case platform#AI solution architecture platform#AI POC for medical imaging#AI POC for demand forecasting#Generative AI in product design#AI in construction safety monitoring


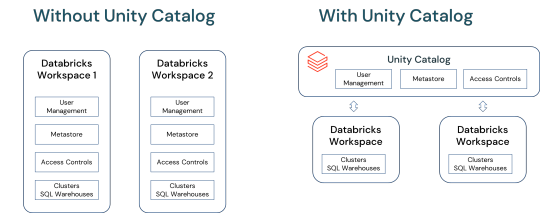

Unlocking Full Potential: The Compelling Reasons to Migrate to Databricks Unity Catalog

In a world overwhelmed by data complexities and AI advancements, Databricks Unity Catalog emerges as a game-changer. This blog delves into how Unity Catalog revolutionizes data and AI governance, offering a unified, agile solution.


#Access Control in Data Platforms#Advanced User Management#AI and ML Data Governance#AI Data Management#Big Data Solutions#Centralized Metadata Management#Cloud Data Management#Data Collaboration Tools#Data Ecosystem Integration#Data Governance Solutions#Data Lakehouse Architecture#Data Platform Modernization#Data Security and Compliance#Databricks for Data Scientists#Databricks Unity catalog#Enterprise Data Strategy#Migrating to Unity Catalog#Scalable Data Architecture#Unity Catalog Features


Cloud Computing: Definition, Benefits, Types, and Real-World Applications

In the fast-changing digital world, companies require software that matches their specific ways of working, aims and what their customers require. That’s when you need custom software development services. Custom software is made just for your organization, so it is more flexible, scalable and efficient than generic software.

What does Custom Software Development mean?

Custom software development means designing, building, deploying and maintaining software that is tailored to a specific user, company or task. It is built to meet specific business needs, which off-the-shelf software usually cannot do.

The main advantages of custom software development are listed below.

1. Personalized Fit

Custom software is built to address the specific needs of your business. Everything is designed to fit your workflow, whether you need it for customers, internal tasks or industry-specific functions.

2. Scalability

When your business expands, your software can also expand. You can add more features, users and integrations as needed without being bound by strict licensing rules.

3. Increased Efficiency

Use tools that are designed to work well with your processes. Custom software usually automates tasks, cuts down on repetition and helps people work more efficiently.

4. Better Integration

Many companies rely on different tools and platforms. You can have custom software made to work smoothly with your CRMs, ERPs and third-party APIs.

5. Improved Security

You can set up security measures more effectively in a custom solution. It is particularly important for industries that handle confidential information, such as finance, healthcare or legal services.

Types of Custom Software Solutions That Are Popular

CRM Systems

Inventory and Order Management

Custom-made ERP Solutions

Mobile and Web Apps

eCommerce Platforms

AI and Data Analytics Tools

SaaS Products

The Process of Custom Development

Requirement Analysis

Understanding your business goals, what users require and the difficulties you face in running the business.

Design & Architecture

Designing a software architecture that can grow, is safe and fits your requirements.

Development & Testing

Writing code that is easy to maintain and testing for errors, speed and compatibility.

Deployment and Support

Making the software available and offering support and updates over time.

What Makes Niotechone a Good Choice?

Our team at Niotechone focuses on providing custom software that helps businesses grow. Our team of experts works with you throughout the process, from the initial idea to the final deployment, to make sure the product is what you require.

Successful experience in various industries

An agile development process

Support after the launch and options for scaling

Affordable rates and different ways to work together

Final Thoughts

Creating custom software is not only about making an app; it’s about building a tool that helps your business grow. A customized solution can give you the advantage you require in the busy digital market, no matter if you are a startup or an enterprise.

#software development company#development company software#software design and development services#software development services#custom software development outsourcing#outsource custom software development#software development and services#custom software development companies#custom software development#custom software development agency#custom software development firms#software development custom software development#custom software design companies#custom software#custom application development#custom mobile application development#custom mobile software development#custom software development services#custom healthcare software development company#bespoke software development service#custom software solution#custom software outsourcing#outsourcing custom software#application development outsourcing#healthcare software development


Powering the Next Wave of Digital Transformation

In an era defined by rapid technological disruption and ever-evolving customer expectations, innovation is not just a strategy—it’s a necessity. At Frandzzo, we’ve embraced this mindset wholeheartedly, scaling our innovation across every layer of our SaaS ecosystem with next-gen AI-powered insights and cloud-native architecture. But how exactly did we make it happen?

Building the Foundation of Innovation

Frandzzo was born from a bold vision: to empower businesses to digitally transform with intelligence, agility, and speed. Our approach from day one has been to integrate AI, automation, and cloud technology into our SaaS solutions, making them not only scalable but also deeply insightful.

By embedding machine learning and predictive analytics into our platforms, we help organizations move from reactive decision-making to proactive, data-driven strategies. Whether it’s optimizing operations, enhancing customer experiences, or identifying untapped revenue streams, our tools provide real-time, actionable insights that fuel business growth.

A Cloud-Native, AI-First Ecosystem

Our SaaS ecosystem is powered by a cloud-native core, enabling seamless deployment, continuous delivery, and effortless scalability. This flexible infrastructure allows us to rapidly adapt to changing market needs while ensuring our clients receive cutting-edge features with zero downtime.

We doubled down on AI by integrating next-gen technologies that can learn, adapt, and evolve alongside our users. From intelligent process automation to advanced behavior analytics, AI is the engine behind every Frandzzo innovation.

Driving Digital Agility for Customers

Innovation at Frandzzo is not just about building smart tech—it’s about delivering real-world value. Our solutions are designed to help organizations become more agile, make smarter decisions, and unlock new growth opportunities faster than ever before.

We partner closely with our clients to understand their pain points and opportunities. This collaboration fuels our product roadmap and ensures we’re always solving the right problems at the right time.

A Culture of Relentless Innovation

At the heart of Frandzzo’s success is a culture deeply rooted in curiosity, experimentation, and improvement. Our teams are empowered to think big, challenge assumptions, and continuously explore new ways to solve complex business problems. Innovation isn’t a department—it’s embedded in our DNA.

We invest heavily in R&D, conduct regular innovation sprints, and stay ahead of tech trends to ensure our customers benefit from the latest advancements. This mindset has allowed us to scale innovation quickly and sustainably.

Staying Ahead in a Fast-Paced Digital World

The digital landscape is changing faster than ever, and businesses need partners that help them not just keep up, but lead. Frandzzo's persistent pursuit of innovation ensures our customers stay ahead, ready to seize new opportunities and thrive in any environment. We’re not just building products; we’re engineering the future of business.


Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA: Best Price Guarantee


Introduction

Artificial Intelligence (AI) continues to evolve, demanding powerful computing resources to train and deploy complex models. In the United States, where AI research and development are booming, access to high-end GPUs like the RTX 4090 and RTX 5090 has become crucial. However, owning these GPUs is expensive and not practical for everyone, especially startups, researchers, and small teams. That’s where GPU rentals come in.

If you're looking for Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA, you’re in the right place. With services like NeuralRack.ai, you can rent cutting-edge hardware at competitive rates, backed by a best price guarantee. Whether you’re building a machine learning model, training a generative AI system, or running high-intensity simulations, rental GPUs are the smartest way to go.

Read on to discover how RTX 4090 and RTX 5090 rentals can save you time and money while maximizing performance.

Why Renting GPUs Makes Sense for AI Projects

Owning a high-performance GPU comes with a significant upfront cost. For AI developers and researchers, this can become a financial hurdle, especially when models change frequently and need more powerful hardware. Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA offer a smarter solution.

Renting provides flexibility—you only pay for what you use. Services like NeuralRack.ai Configuration let you customize your GPU rental to your exact needs. With no long-term commitments, renting is perfect for quick experiments or extended research periods.

You get access to enterprise-grade GPUs, excellent customer support, and scalable options—all without the need for in-house maintenance. This makes GPU rentals ideal for AI startups, freelance developers, educational institutions, and tech enthusiasts across the USA.

RTX 4090 vs. RTX 5090 – A Quick Comparison

Choosing between the RTX 4090 and RTX 5090 depends on your AI project requirements. The RTX 4090 is already a powerhouse with over 16,000 CUDA cores, 24GB GDDR6X memory, and superior ray-tracing capabilities. It's excellent for deep learning, natural language processing, and 3D rendering.

On the other hand, the newer RTX 5090 outperforms the 4090 in almost every way. With enhanced architecture, more CUDA cores, and optimized AI acceleration features, it’s the ultimate choice for next-gen AI applications.

Whether you choose to rent the RTX 4090 or RTX 5090, you’ll benefit from top-tier GPU performance. At NeuralRack Pricing, both GPUs are available at unbeatable rates. The key is to align your project requirements with the right hardware.

If your workload involves complex computations and massive datasets, opt for the RTX 5090. For efficient performance at a lower cost, the RTX 4090 remains an excellent option. Both are available under our Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA offering.

Benefits of Renting RTX 4090 and RTX 5090 for AI in the USA

AI projects require massive computational power, and not everyone can afford the hardware upfront. Renting GPUs solves that problem. The Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA offer:

High-end Performance: RTX 4090 and 5090 GPUs deliver lightning-fast training times and high accuracy for AI models.

Cost-Effective Solution: Eliminate capital expenditure and pay only for what you use.

Quick Setup: Platforms like NeuralRack Configuration provide instant access.

Scalability: Increase or decrease resources as your workload changes.

Support: Dedicated customer service via NeuralRack Contact Us ensures smooth operation.

You also gain flexibility in testing different models and architectures. Renting GPUs gives you freedom without locking your budget or technical roadmap.

If you're based in the USA and looking for high-performance AI development without the hardware investment, renting from NeuralRack.ai is your best bet.

Who Should Consider GPU Rentals in the USA?

GPU rentals aren’t just for large enterprises. They’re a great fit for:

AI researchers working on time-sensitive projects.

Data scientists training machine learning models.

Universities and institutions running large-scale simulations.

Freelancers and startups with limited hardware budgets.

Developers testing generative AI, NLP, and deep learning tools.

The Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA model is perfect for all these groups. You get premium resources without draining your capital. Plus, services like NeuralRack About assure you’re working with experts in the field.

Instead of wasting time with outdated hardware or bottlenecked cloud services, switch to a tailored GPU rental experience.

How to Choose the Right GPU Rental Service

When selecting a rental service for RTX GPUs, consider these:

Transparent Pricing – Check NeuralRack Pricing for honest rates.

Hardware Options – Ensure RTX 4090 and 5090 models are available.

Support – Look for responsive teams like at NeuralRack Contact Us.

Ease of Use – Simple dashboard, fast deployment, easy scaling.

Best Price Guarantee – A promise you get with NeuralRack’s rentals.

The right service will align with your performance needs, budget, and project timelines. That’s why the Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA offered by NeuralRack are highly rated among developers nationwide.

Pricing Overview: What Makes It “Affordable”?

Affordability is key when choosing GPU rentals. Buying a new RTX 5090 can cost over $2,000, while renting from NeuralRack Pricing gives you access at a fraction of the cost.

Rent by the hour, day, or month depending on your needs. Bulk rentals also come with discounted packages. With NeuralRack’s Best Price Guarantee, you’re assured of the lowest possible rate for premium GPUs.

There are no hidden fees or forced commitments. Just clear pricing and instant setup. Visit NeuralRack.ai to explore more.
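To make the rent-versus-buy comparison concrete, here is a minimal sketch of the break-even arithmetic. The $2,000 purchase price comes from the figure quoted above; the hourly rate is a purely hypothetical placeholder, not a NeuralRack price, and the calculation ignores electricity, resale value, and discounts.

```python
import math

# Purchase price taken from the "over $2,000" figure quoted in the text.
PURCHASE_PRICE_USD = 2000.0
# Hypothetical hourly rental rate, assumed only for illustration.
HYPOTHETICAL_HOURLY_RATE_USD = 0.50


def rental_cost(hours: float, hourly_rate: float) -> float:
    """Total cost of renting for the given number of hours."""
    return hours * hourly_rate


def break_even_hours(purchase_price: float, hourly_rate: float) -> int:
    """Whole rental hours after which renting has cost at least the purchase price."""
    if purchase_price < 0 or hourly_rate <= 0:
        raise ValueError("purchase_price must be >= 0 and hourly_rate must be > 0")
    return math.ceil(purchase_price / hourly_rate)


if __name__ == "__main__":
    hours = break_even_hours(PURCHASE_PRICE_USD, HYPOTHETICAL_HOURLY_RATE_USD)
    print(f"Renting stays cheaper than buying for roughly the first {hours} hours.")
```

If a project needs far fewer GPU hours than the break-even figure, renting is likely the cheaper route; sustained, round-the-clock workloads shift the balance back toward owning.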

Where to Find Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA

Finding reliable and budget-friendly GPU rentals is easy with NeuralRack. As a trusted provider of Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA, they deliver enterprise-grade hardware, best price guarantee, and 24/7 support.

Simply go to NeuralRack.ai and view the available configurations on the Configuration page. Have questions? Contact the support team through NeuralRack Contact Us.

Whether you’re based in California, New York, Texas, or anywhere else in the USA—NeuralRack has you covered.

Future-Proofing with RTX 5090 Rentals

The RTX 5090 is designed for the future of AI. With faster processing, more CUDA cores, and higher bandwidth, it supports next-gen AI models and applications. Renting the 5090 from NeuralRack.ai gives you access to bleeding-edge performance without the upfront cost.

It’s perfect for generative AI, LLMs, 3D modeling, and more. Make your project future-ready with Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA.

Final Thoughts: Why You Should Go for Affordable GPU Rentals

If you want performance, flexibility, and affordability all in one package, go with GPU rentals. The Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA from NeuralRack.ai are trusted by developers and researchers across the country.

You get high-end GPUs, unbeatable prices, and expert support—all with zero commitment. Explore the pricing at NeuralRack Pricing and get started today.

FAQs

What’s the best way to rent an RTX 4090 or 5090 in the USA? Use NeuralRack.ai for affordable, high-performance GPU rentals.

How much does it cost to rent an RTX 5090? Visit NeuralRack Pricing for updated rates.

Is there a minimum rental duration? No, NeuralRack offers flexible hourly, daily, and monthly options.

Can I rent GPUs for AI and deep learning? Yes, both RTX 4090 and 5090 are optimized for AI workloads.

Are there any discounts for long-term rentals? Yes, NeuralRack offers bulk and long-term discounts.

Is setup assistance provided? Absolutely. Use the Contact Us page to get help.

What if I need multiple GPUs? You can configure your rental on the Configuration page.

Is the hardware reliable? Yes, NeuralRack guarantees high-quality, well-maintained GPUs.

Do you support cloud access? Yes, NeuralRack supports remote GPU access for AI workloads.

Where can I learn more about NeuralRack? Visit the About page for the full company profile.


Google Cloud’s BigQuery Autonomous Data To AI Platform

BigQuery automates data analysis, transformation, and insight generation using AI. AI and natural language interaction simplify difficult operations.

The fast-paced world needs data access and a real-time data activation flywheel. Artificial intelligence that integrates directly into the data environment and works with intelligent agents is emerging. These catalysts open doors and enable self-directed, rapid action, which is vital for success. This flywheel uses Google's Data & AI Cloud to activate data in real time. Because of this emphasis, BigQuery has five times as many customer organisations as the two leading cloud providers that offer only data science and data warehousing solutions.

Examples of top companies:

With BigQuery, Radisson Hotel Group enhanced campaign productivity by 50% and revenue by over 20% by fine-tuning the Gemini model.

By connecting over 170 data sources with BigQuery, Gordon Food Service established a scalable, modern, AI-ready data architecture. This improved real-time response to critical business demands, enabled complete analytics, boosted client usage of their ordering systems, and offered staff rapid insights while cutting costs and boosting market share.

J.B. Hunt is revolutionising logistics for shippers and carriers by integrating Databricks into BigQuery.

General Mills saves over $100 million using BigQuery and Vertex AI to give workers secure access to LLMs for structured and unstructured data searches.

Google Cloud is unveiling many new features with its autonomous data to AI platform powered by BigQuery and Looker, a unified, trustworthy, and conversational BI platform:

New assistive and agentic experiences based on your trusted data and available through BigQuery and Looker will make data scientists, data engineers, analysts, and business users' jobs simpler and faster.

Advanced analytics and data science acceleration: Along with seamless integration with real-time and open-source technologies, BigQuery AI-assisted notebooks improve data science workflows and BigQuery AI Query Engine provides fresh insights.

Autonomous data foundation: BigQuery can collect, manage, and orchestrate any data with its new autonomous features, which include native support for unstructured data processing and open data formats like Iceberg.

Look at each change in detail.

User-specific agents

Google Cloud believes everyone should have access to AI. AI-powered assistive experiences in BigQuery and Looker are already generally available, and Google Cloud now offers specialised agents for all data tasks, such as:

Data engineering agents integrated with BigQuery pipelines help create data pipelines, transform and enrich data, detect anomalies, and automate metadata generation. Data engineers traditionally spend hours cleaning, processing, and validating data. These agents provide trustworthy data and take over time-consuming, repetitive tasks, enhancing data team productivity.

The data science agent in Google's Colab notebook enables model development at every step. Scalable training, intelligent model selection, automated feature engineering, and faster iteration are possible. This agent lets data science teams focus on complex methods rather than data and infrastructure.

Looker conversational analytics lets everyone use natural language with data. Expanded capabilities developed with DeepMind let the agent perform advanced analysis and explain its reasoning, so all users can understand its actions and easily resolve ambiguities. Looker's semantic layer boosts accuracy by two-thirds. The agent understands business language like “revenue” and “segments” and can compute metrics in real time, ensuring trustworthy, accurate, and relevant results. An API for conversational analytics is also being introduced to help developers integrate it into processes and apps.

In the BigQuery autonomous data to AI platform, Google Cloud introduced the BigQuery knowledge engine to power assistive and agentic experiences. It uses Gemini to analyse table descriptions, query histories, and schema relationships, and from these it models data relationships, suggests business glossary terms, and generates metadata on the fly. This knowledge engine grounds AI and agents in business context, enabling semantic search across BigQuery and AI-powered data insights.

All customers may access Gemini-powered agentic and assistive experiences in BigQuery and Looker without add-ons in the existing price model tiers!

Accelerating data science and advanced analytics

BigQuery autonomous data to AI platform is revolutionising data science and analytics by enabling new AI-driven data science experiences and engines to manage complex data and provide real-time analytics.

First, AI improves BigQuery notebooks. It adds intelligent SQL cells to your notebook that can merge data sources, comprehend data context, and make code-writing suggestions. It also uses native exploratory analysis and visualisation capabilities for data exploration and peer collaboration. Data scientists can also schedule analyses and update insights. Google Cloud also lets you build notebook-driven, dynamic, user-friendly, interactive data apps to share insights across the organisation.

This enhanced notebook experience is complemented by the BigQuery AI query engine for AI-driven analytics. This engine lets data scientists easily work with structured and unstructured data and add real-world context, not simply retrieve it. The BigQuery AI query engine co-processes SQL with Gemini, adding runtime natural-language understanding, reasoning skills, and real-world knowledge. For example, the new engine can process unstructured images and match them to your product catalogue. It supports several use cases, including model enhancement, sophisticated segmentation, and new insights.

Additionally, it provides users with the most cloud-optimized open-source environment. Google Cloud for Apache Kafka enables real-time data pipelines for event sourcing, model scoring, messaging, and analytics, and BigQuery provides serverless Apache Spark execution. Customers have almost doubled their serverless Spark use in the last year, and Google Cloud has upgraded this engine to process data 2.7 times faster.

BigQuery lets data scientists utilise SQL, Spark, or foundation models on Google's serverless and scalable architecture to innovate faster without the challenges of traditional infrastructure.

An autonomous data foundation across the data lifecycle

An autonomous data foundation built for modern data complexity supports these advanced analytics engines and specialised agents. BigQuery is transforming the environment by making unstructured data a first-class citizen. New platform features, such as orchestration for a variety of data workloads, autonomous and invisible governance, and open formats for flexibility, ensure that your data is always ready for data science or artificial intelligence use cases. It does this while offering the best cost and reducing operational overhead.

For many companies, unstructured data is their biggest untapped potential. While structured data provides analytical avenues, the unique insights in text, audio, video, and images are often underutilised and locked in siloed systems. BigQuery tackles this issue directly by making unstructured data a first-class citizen using multimodal tables (preview), which integrate structured data with rich, complex data types for unified querying and storage.

To manage this large data estate efficiently, Google Cloud's expanded BigQuery governance gives data stewards and professionals a single view for managing discovery, classification, curation, quality, usage, and sharing, including automatic cataloguing and metadata generation. BigQuery continuous queries use SQL to analyse and act on streaming data regardless of format, ensuring timely insights from all your data streams.

Customers use Google's AI models in BigQuery for multimodal analysis 16 times more than last year, driven by advanced support for structured and unstructured multimodal data. BigQuery with Vertex AI is 8–16 times cheaper than independent data warehouse and AI solutions.

Google Cloud remains committed to an open ecosystem. BigQuery tables for Apache Iceberg combine BigQuery's performance and integrated capabilities with the flexibility of an open data lakehouse to link Iceberg data to SQL, Spark, AI, and third-party engines in an open and interoperable fashion. This service provides adaptive and autonomous table management, high-performance streaming, auto-AI-generated insights, practically infinite serverless scalability, and improved governance. Cloud storage enables fail-safe features and centralised fine-grained access control management in the managed solution.

Finally, the autonomous data to AI platform optimises itself. It scales resources, manages workloads, and ensures cost-effectiveness. The new BigQuery spend commit unifies spending across the BigQuery platform and allows flexibility in shifting spend across streaming, governance, data processing engines, and more, making purchasing easier.

Start your data and AI adventure with BigQuery data migration. Google Cloud wants to know how you innovate with data.

#technology#technews#govindhtech#news#technologynews#BigQuery autonomous data to AI platform#BigQuery#autonomous data to AI platform#BigQuery platform#autonomous data#BigQuery AI Query Engine


Why Python Will Thrive: Future Trends and Applications

Python has already made a significant impact in the tech world, and its trajectory for the future is even more promising. From its simplicity and versatility to its widespread use in cutting-edge technologies, Python is expected to continue thriving in the coming years. With the support of a Python Course in Chennai, learning Python becomes much more fun, whatever your level of experience or reason for switching from another programming language.

Let's explore why Python will remain at the forefront of software development and what trends and applications will contribute to its ongoing dominance.

1. Artificial Intelligence and Machine Learning

Python is already the go-to language for AI and machine learning, and its role in these fields is set to expand further. With powerful libraries such as TensorFlow, PyTorch, and Scikit-learn, Python simplifies the development of machine learning models and artificial intelligence applications. As more industries integrate AI for automation, personalization, and predictive analytics, Python will remain a core language for developing intelligent systems.

2. Data Science and Big Data

Data science is one of the most significant areas where Python has excelled. Libraries like Pandas, NumPy, and Matplotlib make data manipulation and visualization simple and efficient. As companies and organizations continue to generate and analyze vast amounts of data, Python’s ability to process, clean, and visualize big data will only become more critical. Additionally, Python’s compatibility with big data platforms like Hadoop and Apache Spark ensures that it will remain a major player in data-driven decision-making.

3. Web Development

Python’s role in web development is growing thanks to frameworks like Django and Flask, which provide robust, scalable, and secure solutions for building web applications. With the increasing demand for interactive websites and APIs, Python is well-positioned to continue serving as a top language for backend development. Its integration with cloud computing platforms will also fuel its growth in building modern web applications that scale efficiently.

4. Automation and Scripting

Automation is another area where Python excels. Developers use Python to automate tasks ranging from system administration to testing and deployment. With the rise of DevOps practices and the growing demand for workflow automation, Python’s role in streamlining repetitive processes will continue to grow. Businesses across industries will rely on Python to boost productivity, reduce errors, and optimize performance. With the aid of Best Online Training & Placement Programs, which offer comprehensive training and job placement support to anyone looking to develop their talents, it’s easier to learn this tool and advance your career.
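As a small illustration of the kind of repetitive task this section has in mind, the sketch below tidies a folder by moving each file into a subfolder named after its extension. It uses only the standard library; the function name `sort_by_extension` and the `_files` folder suffix are hypothetical choices made for this example, and a production script would also need to handle name collisions.

```python
import shutil
import tempfile
from pathlib import Path


def sort_by_extension(folder: Path) -> dict[str, int]:
    """Move each file in `folder` into a '<extension>_files' subfolder.

    Returns a count of moved files per extension.
    """
    moved: dict[str, int] = {}
    # sorted() snapshots the listing first, so folders created below are not revisited.
    for item in sorted(folder.iterdir()):
        if not item.is_file():
            continue
        ext = item.suffix.lower().lstrip(".") or "no_extension"
        target_dir = folder / f"{ext}_files"
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[ext] = moved.get(ext, 0) + 1
    return moved


if __name__ == "__main__":
    # Demonstrate on a throwaway directory so no real files are touched.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for name in ("report.pdf", "notes.txt", "data.csv", "todo.txt"):
            (root / name).write_text("example")
        print(sort_by_extension(root))
```

The same pattern (list, classify, act) carries over to log rotation, report generation, and other chores that are tedious to do by hand.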

5. Cybersecurity and Ethical Hacking

With cyber threats becoming increasingly sophisticated, cybersecurity is a critical concern for businesses worldwide. Python is widely used for penetration testing, vulnerability scanning, and threat detection due to its simplicity and effectiveness. Libraries like Scapy and PyCrypto make Python an excellent choice for ethical hacking and security professionals. As the need for robust cybersecurity measures increases, Python’s role in safeguarding digital assets will continue to thrive.

6. Internet of Things (IoT)

Python’s compatibility with microcontrollers and embedded systems makes it a strong contender in the growing field of IoT. Python implementations like MicroPython and CircuitPython enable developers to build IoT applications efficiently, whether for home automation, smart cities, or industrial systems. As the number of connected devices continues to rise, Python will remain a dominant language for creating scalable and reliable IoT solutions.

7. Cloud Computing and Serverless Architectures

The rise of cloud computing and serverless architectures has created new opportunities for Python. Cloud platforms like AWS, Google Cloud, and Microsoft Azure all support Python, allowing developers to build scalable and cost-efficient applications. With its flexibility and integration capabilities, Python is perfectly suited for developing cloud-based applications, serverless functions, and microservices.
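To show what a serverless function can look like in Python, here is a minimal sketch of a handler in the `(event, context)` shape that AWS Lambda uses for Python functions. It assumes the function sits behind an HTTP proxy-style trigger that supplies `queryStringParameters`; the greeting logic and the `name` parameter are invented for illustration.

```python
import json


def lambda_handler(event, context):
    """Return a JSON greeting built from an optional 'name' query parameter."""
    # The query parameters may be missing or None when the request has none.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-written event; no cloud account is needed.
    sample_event = {"queryStringParameters": {"name": "Ada"}}
    print(lambda_handler(sample_event, None))
```

Because the handler is an ordinary function, it can be exercised locally with a dictionary before it is ever deployed, which keeps the feedback loop short.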

8. Gaming and Virtual Reality

Python has long been used in game development, with libraries such as Pygame offering simple tools to create 2D games. However, as gaming and virtual reality (VR) technologies evolve, Python’s role in developing immersive experiences will grow. The language’s ease of use and integration with game engines will make it a popular choice for building gaming platforms, VR applications, and simulations.

9. Expanding Job Market

As Python’s applications continue to grow, so does the demand for Python developers. From startups to tech giants like Google, Facebook, and Amazon, companies across industries are seeking professionals who are proficient in Python. The increasing adoption of Python in various fields, including data science, AI, cybersecurity, and cloud computing, ensures a thriving job market for Python developers in the future.

10. Constant Evolution and Community Support

Python’s open-source nature means that it’s constantly evolving with new libraries, frameworks, and features. Its vibrant community of developers contributes to its growth and ensures that Python stays relevant to emerging trends and technologies. Whether it’s a new tool for AI or a breakthrough in web development, Python’s community is always working to improve the language and make it more efficient for developers.

Conclusion

Python’s future is bright, with its presence continuing to grow in AI, data science, automation, web development, and beyond. As industries become increasingly data-driven, automated, and connected, Python’s simplicity, versatility, and strong community support make it an ideal choice for developers. Whether you are a beginner looking to start your coding journey or a seasoned professional exploring new career opportunities, learning Python offers long-term benefits in a rapidly evolving tech landscape.

#python course#python training#python#technology#tech#python programming#python online training#python online course#python online classes#python certification

Best 10 Blockchain Development Companies in India 2025

Blockchain technology is transforming industries by enhancing security, transparency, and efficiency. With India's growing IT ecosystem, several companies specialize in blockchain development services, catering to industries like finance, healthcare, supply chain, and gaming. If you're looking for a trusted blockchain development company in India, here are the top 10 companies in 2025 that are leading the way with cutting-edge blockchain solutions.

1. Comfygen

Comfygen is a leading blockchain development company in India, offering comprehensive blockchain solutions for businesses worldwide. Their expertise includes smart contract development, dApps, DeFi platforms, NFT marketplaces, and enterprise blockchain solutions. With a strong focus on security and scalability, Comfygen delivers top-tier blockchain applications tailored to business needs.

Key Services:

Smart contract development

Blockchain consulting & integration

NFT marketplace development

DeFi solutions & decentralized exchanges (DEX)

2. Infosys

Infosys, a globally recognized IT giant, offers advanced blockchain solutions to enterprises looking to integrate distributed ledger technology (DLT) into their operations. Their blockchain services focus on supply chain, finance, and identity management.

Key Services:

Enterprise blockchain solutions

Smart contracts & decentralized apps

Blockchain security & auditing

3. Wipro

Wipro is known for its extensive research and development in blockchain technology. They help businesses integrate blockchain into their financial systems, healthcare, and logistics for better transparency and efficiency.

Key Services:

Blockchain consulting & strategy

Supply chain blockchain solutions

Smart contract development

4. Tata Consultancy Services (TCS)

TCS is a pioneer in the Indian IT industry and provides robust blockchain solutions, helping enterprises optimize business processes with secure and scalable decentralized applications.

Key Services:

Enterprise blockchain development

Tokenization & digital asset solutions

Decentralized finance (DeFi) applications

5. Hyperlink InfoSystem

Hyperlink InfoSystem is a well-established blockchain development company in India, specializing in building customized blockchain solutions for industries like finance, gaming, and supply chain.

Key Services:

Blockchain-based mobile app development

Smart contract auditing & security

NFT marketplace & DeFi solutions

6. Tech Mahindra

Tech Mahindra provides blockchain-as-a-service (BaaS) solutions, ensuring that businesses leverage blockchain for improved transparency and automation. They focus on finance, telecom, and supply chain industries.

Key Services:

Blockchain implementation & consulting

dApp development & smart contracts

Digital identity management solutions

7. Antier Solutions

Antier Solutions is a specialized blockchain development firm offering DeFi solutions, cryptocurrency exchange development, and metaverse applications. They provide custom blockchain solutions for startups and enterprises.

Key Services:

DeFi platform development

NFT & metaverse development

White-label crypto exchange development

8. HCL Technologies

HCL Technologies offers enterprise blockchain development services, focusing on improving security, efficiency, and automation across multiple sectors.

Key Services:

Blockchain-based digital payments

Hyperledger & Ethereum development

Secure blockchain network architecture

9. SoluLab

SoluLab is a trusted blockchain development company working on Ethereum, Binance Smart Chain, and Solana-based solutions for businesses across industries.

Key Services:

Smart contract & token development

Decentralized application (dApp) development

AI & blockchain integration

10. Mphasis

Mphasis provides custom blockchain solutions to enterprises, ensuring secure transactions and seamless business operations.

Key Services:

Blockchain for banking & financial services

Smart contract development & deployment

Blockchain security & risk management

Conclusion

India is emerging as a global hub for blockchain technology, with companies specializing in secure, scalable, and efficient blockchain development services. Whether you're a startup or an enterprise looking for custom blockchain solutions, these top 10 blockchain development companies in India provide world-class expertise and innovation.

Looking for the best blockchain development partner? Comfygen offers cutting-edge blockchain solutions to help your business thrive in the decentralized era. Contact us today to start your blockchain journey!

Adobe Experience Manager Services USA: Empowering Digital Transformation

Introduction

In today's digital-first world, Adobe Experience Manager (AEM) Services USA have become a key driver for businesses looking to optimize their digital experiences, streamline content management, and enhance customer engagement. AEM is a powerful content management system (CMS) that integrates with AI and cloud technologies to provide scalable, secure, and personalized digital solutions.

With the rapid evolution of online platforms, enterprises across industries such as e-commerce, healthcare, finance, and media are leveraging AEM to deliver seamless and engaging digital experiences. In this blog, we explore how AEM services in the USA are revolutionizing digital content management and highlight the leading AEM service providers offering cutting-edge solutions.

Why Adobe Experience Manager Services Are Essential for Enterprises

AEM is an advanced digital experience platform that enables businesses to create, manage, and optimize digital content efficiently. Companies that implement AEM services in the USA benefit from:

Unified Content Management: Manage web, mobile, and digital assets seamlessly from a centralized platform.

Omnichannel Content Delivery: Deliver personalized experiences across multiple touchpoints, including websites, mobile apps, and IoT devices.

Enhanced User Experience: Leverage AI-driven insights and automation to create engaging and personalized customer interactions.

Scalability & Flexibility: AEM’s cloud-based architecture allows businesses to scale their content strategies efficiently.

Security & Compliance: Ensure data security and regulatory compliance with enterprise-grade security features.

Key AEM Services Driving Digital Transformation in the USA

Leading AEM service providers in the USA offer a comprehensive range of solutions tailored to enterprise needs:

AEM Sites Development: Build and manage responsive, high-performance websites with AEM’s powerful CMS capabilities.

AEM Assets Management: Store, organize, and distribute digital assets effectively with AI-driven automation.

AEM Headless CMS Implementation: Deliver content seamlessly across web, mobile, and digital channels through API-driven content delivery.

AEM Cloud Migration: Migrate to Adobe Experience Manager as a Cloud Service for improved agility, security, and scalability.

AEM Personalization & AI Integration: Utilize Adobe Sensei AI to deliver real-time personalized experiences.

AEM Consulting & Support: Get expert guidance, training, and support to optimize AEM performance and efficiency.

Key Factors Defining Top AEM Service Providers in the USA

Choosing the right AEM partner is crucial for successful AEM implementation in the USA. The best AEM service providers excel in:

Expertise in AEM Development & Customization

Leading AEM companies specialize in custom AEM development, ensuring tailored solutions that align with business goals.

Cloud-Based AEM Solutions

Cloud-native AEM services enable businesses to scale and manage content efficiently with Adobe Experience Manager as a Cloud Service.

Industry-Specific AEM Applications

Customized AEM solutions cater to specific industry needs, from e-commerce personalization to financial services automation.

Seamless AEM Integration

Top providers ensure smooth integration of AEM with existing enterprise tools such as CRM, ERP, and marketing automation platforms.

End-to-End AEM Support & Optimization

Comprehensive support services include AEM migration, upgrades, maintenance, and performance optimization.

Top AEM Service Providers in the USA

Leading AEM service providers offer a range of solutions to help businesses optimize their Adobe Experience Manager implementations. These services include:

AEM Strategy & Consulting

Expert guidance on AEM implementation, cloud migration, and content strategy.

AEM Cloud Migration & Integration

Seamless migration from on-premise to AEM as a Cloud Service, ensuring scalability and security.

AEM Development & Customization

Tailored solutions for AEM components, templates, workflows, and third-party integrations.

AEM Performance Optimization

Enhancing site speed, caching, and content delivery for improved user experiences.

AEM Managed Services & Support

Ongoing maintenance, upgrades, and security monitoring for optimal AEM performance.

The Future of AEM Services in the USA

The future of AEM services in the USA is driven by advancements in AI, machine learning, and cloud computing. Key trends shaping AEM’s evolution include:

AI-Powered Content Automation: AEM’s AI capabilities, such as Adobe Sensei, enhance content personalization and automation.

Headless CMS for Omnichannel Delivery: AEM’s headless CMS capabilities enable seamless content delivery across web, mobile, and IoT.

Cloud-First AEM Deployments: The shift towards Adobe Experience Manager as a Cloud Service is enabling businesses to achieve better performance and scalability.

Enhanced Data Security & Compliance: With growing concerns about data privacy, AEM service providers focus on GDPR and HIPAA-compliant solutions.

Conclusion: Elevate Your Digital Experience with AEM Services USA

As businesses embrace digital transformation, Adobe Experience Manager services in the USA provide a powerful, scalable, and AI-driven solution to enhance content management and customer engagement. Choosing the right AEM partner ensures seamless implementation, personalized experiences, and improved operational efficiency.

🚀 Transform your digital strategy today by partnering with a top AEM service provider in the USA. The future of digital experience management starts with AEM—empowering businesses to deliver exceptional content and customer experiences!

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Python Is a Preferred Choice for Cloud Computing

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

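Automation and DevOps Friendly: Python supports infrastructure automation through tools like Ansible (itself written in Python) and Boto3, and it is commonly used to script around tools such as Terraform, which is not a Python tool.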

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Python Enables Scalable Cloud Solutions

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.
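To make the automation point concrete, here is a minimal sketch that lists stopped EC2 instances. It assumes the Boto3 EC2 client's `describe_instances` call with an `instance-state-name` filter; the `stopped_instance_ids` helper and the `FakeEC2Client` stand-in are hypothetical names introduced so the example runs without AWS credentials.

```python
def stopped_instance_ids(ec2_client):
    """Return the IDs of stopped EC2 instances using an injected EC2 client."""
    response = ec2_client.describe_instances(
        Filters=[{"Name": "instance-state-name", "Values": ["stopped"]}]
    )
    return [
        instance["InstanceId"]
        for reservation in response["Reservations"]
        for instance in reservation["Instances"]
    ]


class FakeEC2Client:
    """Hypothetical stand-in that mimics the shape of the real response."""

    def describe_instances(self, Filters):
        return {
            "Reservations": [
                {"Instances": [{"InstanceId": "i-0abc"}, {"InstanceId": "i-0def"}]}
            ]
        }


print(stopped_instance_ids(FakeEC2Client()))  # ['i-0abc', 'i-0def']

# With AWS credentials configured, the real client would be passed instead:
#   import boto3
#   ids = stopped_instance_ids(boto3.client("ec2"))
```

Passing the client in as a parameter keeps the function easy to test. A production script would also page through results, since a single `describe_instances` response may not include every instance.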

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.
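The shape of a small microservice can be sketched with nothing but the standard library. The example below is a plain WSGI application, the interface that Flask and Django build on (FastAPI uses the related ASGI interface); it is a stand-in for those frameworks, not a replacement. The `/health` route and the `call_app` helper are assumptions made for illustration.

```python
import json


def health_app(environ, start_response):
    """A tiny WSGI application exposing a single /health endpoint."""
    if environ.get("PATH_INFO") == "/health":
        status, payload = "200 OK", {"status": "ok"}
    else:
        status, payload = "404 Not Found", {"error": "not found"}
    body = json.dumps(payload).encode("utf-8")
    start_response(
        status,
        [("Content-Type", "application/json"), ("Content-Length", str(len(body)))],
    )
    return [body]


def call_app(app, path):
    """Invoke a WSGI app in-process and return (status, decoded JSON body)."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    environ = {"PATH_INFO": path, "REQUEST_METHOD": "GET"}
    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


print(call_app(health_app, "/health"))  # ('200 OK', {'status': 'ok'})
```

To serve it locally, `wsgiref.simple_server.make_server("", 8000, health_app).serve_forever()` would be enough; a framework adds routing, validation, and middleware on top of this same contract.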

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
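A serverless function in Python is usually just a handler that takes an event and returns a response. The sketch below follows the AWS Lambda handler convention of `(event, context)` and assumes an API Gateway proxy-style event carrying `queryStringParameters`; the greeting logic is purely illustrative.

```python
import json


def lambda_handler(event, context):
    """Handle a proxy-style HTTP event and return an HTTP-like response."""
    # queryStringParameters may be missing or None when no query string is sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation with a hand-written event; no cloud account needed.
response = lambda_handler({"queryStringParameters": {"name": "cloud"}}, None)
print(response["body"])  # {"message": "Hello, cloud!"}
```

Because the handler is an ordinary function, it can be unit-tested locally before deployment; the platform takes care of running as many copies as incoming traffic requires.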

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it a natural fit for cloud-based AI/ML services such as Amazon SageMaker, Google Cloud Vertex AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies helps keep cloud costs in line with actual usage.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.
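The security point in the list above can be illustrated with the standard library alone. This sketch signs a message with HMAC-SHA256 and verifies it with a constant-time comparison, a common way to authenticate webhooks or service-to-service requests. The secret and the payload are placeholders; a real deployment would load the secret from a secrets manager.

```python
import hashlib
import hmac


def sign(payload: bytes, secret: bytes) -> str:
    """Return the hex HMAC-SHA256 signature of the payload."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, secret: bytes, signature: str) -> bool:
    """Check a signature using a constant-time comparison."""
    return hmac.compare_digest(sign(payload, secret), signature)


secret = b"demo-secret-not-for-production"  # placeholder value
payload = b'{"action": "scale", "replicas": 3}'
signature = sign(payload, secret)

print(verify(payload, secret, signature))  # True
print(verify(b'{"action": "scale", "replicas": 30}', secret, signature))  # False
```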

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: The standard CPython interpreter compiles code to bytecode and interprets it, so CPU-bound Python code is typically slower than equivalent code in JIT-compiled Java or natively compiled C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.
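Before reaching for another language, it helps to measure where the time goes. The sketch below times two equivalent implementations with the standard `timeit` module; the function names and input size are arbitrary choices for illustration, and the timings will vary by machine and Python version.

```python
import timeit


def sum_squares_loop(n):
    """Sum of squares using an explicit Python loop."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def sum_squares_builtin(n):
    """Sum of squares using the built-in sum() over a generator."""
    return sum(i * i for i in range(n))


# Both versions must agree before their speed is compared.
assert sum_squares_loop(1000) == sum_squares_builtin(1000)

for func in (sum_squares_loop, sum_squares_builtin):
    seconds = timeit.timeit(lambda: func(50_000), number=10)
    print(f"{func.__name__}: {seconds:.4f}s for 10 runs")
```

If a measured hot spot still dominates, common next steps are vectorized libraries such as NumPy or moving that one function into a compiled extension.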

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: A low-code machine learning library that simplifies training models and deploying them to cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries such as PyCryptodome, cryptography, and pyOpenSSL (Python bindings to the OpenSSL C library), supporting encryption, authentication, and monitoring for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing through Apache Spark (via PySpark) for distributed workloads and through Pandas and NumPy for data that fits on a single machine, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.
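One way to picture edge processing is a device that summarizes its own readings and sends only the summary upstream. In the sketch below, the `summarize_readings` helper, the temperature values, and the alert threshold are all assumptions made for illustration.

```python
from statistics import mean


def summarize_readings(readings, alert_threshold):
    """Reduce raw sensor readings to a compact summary computed on the device."""
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alert": max(readings) > alert_threshold,
    }


# Hypothetical temperature samples collected locally by one device.
temperatures = [21.4, 21.9, 22.1, 35.2, 22.0]
print(summarize_readings(temperatures, alert_threshold=30.0))
```

Only the small summary dictionary needs to cross the network, and the alert decision is made locally without waiting on a round trip to the cloud.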

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain will play a pivotal role in creating transparent, tamper-proof systems.
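The tamper-evidence behind these systems comes from chaining hashes: each block stores the hash of the one before it, so changing any record invalidates everything after it. The sketch below shows only that core idea, with no consensus, signatures, or networking. The block layout and the `build_chain` and `is_valid` helpers are assumptions made for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link records into a list of blocks, each pointing at its predecessor."""
    chain, prev_hash = [], GENESIS_HASH
    for index, data in enumerate(records):
        current = block_hash(index, data, prev_hash)
        chain.append(
            {"index": index, "data": data, "prev_hash": prev_hash, "hash": current}
        )
        prev_hash = current
    return chain


def is_valid(chain):
    """Recompute every hash and check that the links are intact."""
    prev_hash = GENESIS_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["data"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


chain = build_chain(["shipment created", "shipment dispatched", "shipment delivered"])
print(is_valid(chain))  # True

chain[1]["data"] = "shipment lost"  # tamper with a stored record
print(is_valid(chain))  # False
```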

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware

Industry First: UCIe Optical Chiplet Unveiled by Ayar Labs

New Post has been published on https://thedigitalinsider.com/industry-first-ucie-optical-chiplet-unveiled-by-ayar-labs/

Ayar Labs has unveiled the industry’s first Universal Chiplet Interconnect Express (UCIe) optical interconnect chiplet, designed specifically to maximize AI infrastructure performance and efficiency while reducing latency and power consumption for large-scale AI workloads.

This breakthrough will help address the increasing demands of advanced computing architectures, especially as AI systems continue to scale. By incorporating a UCIe electrical interface, the new chiplet is designed to eliminate data bottlenecks while enabling seamless integration with chips from different vendors, fostering a more accessible and cost-effective ecosystem for adopting advanced optical technologies.

The chiplet, named TeraPHY™, achieves 8 Tbps bandwidth and is powered by Ayar Labs’ 16-wavelength SuperNova™ light source. This optical interconnect technology aims to overcome the limitations of traditional copper interconnects, particularly for data-intensive AI applications.

“Optical interconnects are needed to solve power density challenges in scale-up AI fabrics,” said Mark Wade, CEO of Ayar Labs.

The integration with the UCIe standard is particularly significant as it allows chiplets from different manufacturers to work together seamlessly. This interoperability is critical for the future of chip design, which is increasingly moving toward multi-vendor, modular approaches.

The UCIe Standard: Creating an Open Chiplet Ecosystem

The UCIe Consortium, which developed the standard, aims to build “an open ecosystem of chiplets for on-package innovations.” Their Universal Chiplet Interconnect Express specification addresses industry demands for more customizable, package-level integration by combining high-performance die-to-die interconnect technology with multi-vendor interoperability.

“The advancement of the UCIe standard marks significant progress toward creating more integrated and efficient AI infrastructure thanks to an ecosystem of interoperable chiplets,” said Dr. Debendra Das Sharma, Chair of the UCIe Consortium.

The standard establishes a universal interconnect at the package level, enabling chip designers to mix and match components from different vendors to create more specialized and efficient systems. The UCIe Consortium recently announced its UCIe 2.0 Specification release, indicating the standard’s continued development and refinement.

Industry Support and Implications

The announcement has garnered strong endorsements from major players in the semiconductor and AI industries, all members of the UCIe Consortium.

Mark Papermaster from AMD emphasized the importance of open standards: “The robust, open and vendor neutral chiplet ecosystem provided by UCIe is critical to meeting the challenge of scaling networking solutions to deliver on the full potential of AI. We’re excited that Ayar Labs is one of the first deployments that leverages the UCIe platform to its full extent.”

This sentiment was echoed by Kevin Soukup from GlobalFoundries, who noted, “As the industry transitions to a chiplet-based approach to system partitioning, the UCIe interface for chiplet-to-chiplet communication is rapidly becoming a de facto standard. We are excited to see Ayar Labs demonstrating the UCIe standard over an optical interface, a pivotal technology for scale-up networks.”

Technical Advantages and Future Applications

The convergence of UCIe and optical interconnects represents a paradigm shift in computing architecture. By combining silicon photonics in a chiplet form factor with the UCIe standard, the technology allows GPUs and other accelerators to “communicate across a wide range of distances, from millimeters to kilometers, while effectively functioning as a single, giant GPU.”

The technology also facilitates Co-Packaged Optics (CPO), with multinational manufacturing company Jabil already showcasing a model featuring Ayar Labs’ light sources capable of “up to a petabit per second of bi-directional bandwidth.” This approach promises greater compute density per rack, enhanced cooling efficiency, and support for hot-swap capability.

“Co-packaged optical (CPO) chiplets are set to transform the way we address data bottlenecks in large-scale AI computing,” said Lucas Tsai from Taiwan Semiconductor Manufacturing Company (TSMC). “The availability of UCIe optical chiplets will foster a strong ecosystem, ultimately driving both broader adoption and continued innovation across the industry.”

Transforming the Future of Computing

As AI workloads continue to grow in complexity and scale, the semiconductor industry is increasingly looking toward chiplet-based architectures as a more flexible and collaborative approach to chip design. Ayar Labs’ introduction of the first UCIe optical chiplet addresses the bandwidth and power consumption challenges that have become bottlenecks for high-performance computing and AI workloads.

The combination of the open UCIe standard with advanced optical interconnect technology promises to revolutionize system-level integration and drive the future of scalable, efficient computing infrastructure, particularly for the demanding requirements of next-generation AI systems.

The strong industry support for this development indicates the potential for a rapidly expanding ecosystem of UCIe-compatible technologies, which could accelerate innovation across the semiconductor industry while making advanced optical interconnect solutions more widely available and cost-effective.

#accelerators#adoption#ai#AI chips#AI Infrastructure#AI systems#amd#Announcements#applications#approach#architecture#bi#CEO#challenge#chip#Chip Design#chips#collaborative#communication#complexity#computing#cooling#data#Design#designers#development#driving#efficiency#express#factor


Text

How Web3 Revolutionizes Traditional Search Engines

The emergence of Web3 marks a significant paradigm shift in the digital landscape, transforming how information is accessed, shared, and verified. To fully comprehend Web3's impact on search technology, it's essential to examine the limitations of traditional search engines and explore how Web3 addresses these challenges.

Problems of Traditional Search Engines

Centralization of Data: Traditional search engines rely on centralized databases, controlled by a single entity, raising concerns about data privacy, censorship, and manipulation.

Privacy Concerns: Users' search data is often collected, analyzed, and used for targeted advertising, sparking serious privacy concerns and a growing desire for anonymity.

Algorithmic Bias: Search algorithms can perpetuate biases, influencing information visibility and compromising neutrality.

Data Authenticity and Quality: Information authenticity and quality can be questionable, facilitating the spread of misinformation and fake news.

Dependence on Internet Giants: A few dominant companies control the search engine market, leading to concentrated power and potential monopolistic practices.

Limited Customization and Personalization: While some personalization exists, it's primarily driven by engagement goals rather than relevance or unbiased information.

Web3: A Solution to Traditional Search Engine Limitations

Web3, built on blockchain technology, decentralized applications (dApps), and a user-centric web, offers innovative solutions:

Decentralization of Data: Web3 distributes data across networks, reducing risks associated with centralized control.

Enhanced Privacy and Anonymity: Decentralized search can encrypt queries and user data, preserving anonymity and limiting the exploitation of personal data.

Reduced Algorithmic Bias: Decentralized search engines employ transparent algorithms, minimizing bias and allowing community involvement.

Improved Data Authenticity: Blockchain's immutability and transparency enhance information authenticity, verifying sources and accuracy.

Diversification of Search Engines: Web3 encourages diverse search engines, breaking monopolies and fostering innovation.

Customization and Personalization: Web3 offers personalized search experiences while respecting user privacy, using smart contracts and decentralized storage.

Tokenization and Incentives: Web3 introduces models for incentivizing content creation and curation, rewarding users and creators with tokens.

Interoperability and Integration: Web3's architecture promotes seamless integration among services and platforms.
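The immutability claim in the list above can be illustrated with a small hash chain. This is a minimal sketch, not how any particular blockchain or Web3 search engine is implemented: the function names (`entry_hash`, `build_chain`, `verify_chain`) and the record layout are assumptions made for illustration. Each record stores the hash of the record before it, so editing any earlier record invalidates every later link.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def entry_hash(content: str, prev_hash: str) -> str:
    """Hash a record's content together with the previous record's hash."""
    payload = json.dumps({"content": content, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(contents):
    """Return a list of records, each linked to its predecessor by hash."""
    chain = []
    prev = GENESIS
    for content in contents:
        digest = entry_hash(content, prev)
        chain.append({"content": content, "prev": prev, "hash": digest})
        prev = digest
    return chain


def verify_chain(chain) -> bool:
    """Recompute every hash; any edited record breaks the chain."""
    prev = GENESIS
    for record in chain:
        if record["prev"] != prev:
            return False
        if entry_hash(record["content"], prev) != record["hash"]:
            return False
        prev = record["hash"]
    return True


if __name__ == "__main__":
    chain = build_chain(["page A indexed", "page B indexed", "page C indexed"])
    print(verify_chain(chain))            # untouched chain verifies
    chain[1]["content"] = "page B (edited)"
    print(verify_chain(chain))            # tampering is detected
```

Note that a check like this only shows that stored records have not been altered after the fact. It says nothing about whether the original content was accurate.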

Challenges

While Web3 offers solutions, challenges persist:

Technical Complexity: Blockchain and decentralized technologies pose adoption barriers.

User Adoption: Transitioning from traditional to decentralized search engines requires behavioral shifts.

Adot: A Web3 Search Engine Pioneer

Adot exemplifies Web3's potential in reshaping the search engine landscape:

Empowering AI with Web3: Adot optimizes AI for Web3, enhancing logical reasoning and knowledge integration.

Mission of Open Accessibility: Adot aims to make high-quality data universally accessible, surpassing traditional search engines.

User and Developer Empowerment: Adot rewards users for contributions and encourages developers to create customized search engines.

Conclusion

Web3 revolutionizes traditional search engines by addressing centralization, privacy concerns, and algorithmic biases. While challenges remain, Web3's potential for a decentralized, transparent, and user-empowered web is vast. As Web3 technologies evolve, we can expect a significant shift in how we interact with online information.

About Adot

Adot is building a Web3 search engine for the AI era, providing users with real-time, intelligent decision support.

Join Adot's revolutionary journey:

1. Website: https://a.xyz

2. X (Twitter): https://x.com/Adot_web3?t=UWoRjunsR7iM1ueOKLfIZg&s=09

3. Telegram: https://t.me/+McB7Gs2I-qoxMDM1


Text

BIM Careers: Building Your Future in the Digital AEC Arena

The construction industry is undergoing a digital revolution, and BIM (Building Information Modeling) is at the forefront. It's no longer just a fancy 3D modeling tool; BIM is a collaborative platform that integrates data-rich models with workflows across the entire building lifecycle. This translates to exciting career opportunities for those who can harness the power of BIM.

Are you ready to step into the octagon of the digital construction arena? (Yes, we're keeping the fighting metaphor alive!) Here's a breakdown of the in-demand skills, salary ranges, and future prospects for BIM professionals:

The In-Demand Skillset: Your BIM Arsenal

Think of your BIM skills as your tools in the digital construction toolbox. Here are the top weapons you'll need:

BIM Software Proficiency: Mastering software like Revit, ArchiCAD, or Navisworks is crucial. Understanding their functionalities allows you to create, manipulate, and analyze BIM models.

Building Science Fundamentals: A solid grasp of architectural, structural, and MEP (mechanical, electrical, and plumbing) principles is essential for creating BIM models that reflect real-world construction realities.

Collaboration & Communication: BIM thrives on teamwork. The ability to collaborate effectively with architects, engineers, and other stakeholders is paramount.

Data Management & Analysis: BIM models are data-rich. Being adept at data extraction, analysis, and interpretation unlocks the true potential of BIM for informed decision-making.

Problem-Solving & Critical Thinking: BIM projects are complex. The ability to identify and solve problems creatively, while thinking critically about the design and construction process, is invaluable.

Salary Showdown: The BIM Payday Punch

Now, let's talk about the real knock-out factor – salaries! According to Indeed, BIM professionals in the US can expect an average annual salary of around $85,000. This number can vary depending on experience, location, and specific BIM expertise. Entry-level BIM roles might start around $60,000, while BIM Managers and BIM Specialists with extensive experience can command salaries exceeding $100,000.

Future Forecast: A Bright BIM Horizon

The future of BIM is bright. The global BIM market is projected to reach a staggering $8.8 billion by 2025 (Grand View Research). This translates to a continuous rise in demand for skilled BIM professionals. Here are some exciting trends shaping the future of BIM careers:

BIM for Specialty Trades: BIM is no longer just for architects and engineers. We'll see increased adoption by specialty trades like HVAC technicians and fire protection specialists.

Integration with AI and Machine Learning: Imagine BIM models that can predict potential issues or suggest optimal design solutions. AI and machine learning will revolutionize BIM capabilities.

VR and AR for Enhanced Collaboration: Virtual Reality (VR) and Augmented Reality (AR) will allow for immersive BIM model walkthroughs, facilitating better collaboration and design communication.

Ready to Join the BIM Revolution?

The BIM landscape offers a dynamic and rewarding career path for those with the right skills. If you're passionate about technology, construction, and shaping the future of the built environment, then BIM might be your perfect career match. So, hone your skills, embrace the digital revolution, and step into the exciting world of BIM with Capstone Engineering!

#tumblr blogs#bim#careers#buildings#bim consulting services#bim consultants#construction#aec#architecture#3d modeling#bim coordination#consulting#3d model#bimclashdetectionservices#engineering#MEP engineers#building information modeling#oil and gas#manufacturing#virtual reality#collaboration#bim services#uaejobs


Text

10 Mind-Blowing Ways Cloudflare is Transforming the Tech Industry

It is crucial to ensure your website has strong security and optimal performance in the ever-changing IT world. Here's where Cloudflare becomes a revolutionary solution. Here we will explore Cloudflare, its many uses, how it is revolutionizing the tech industry, and how you can use its features to improve your online visibility.

The Background of Cloudflare

The goal behind starting Cloudflare was to assist website owners in improving the speed and security of their sites. When it first started, its primary function was to use its content delivery network (CDN) to speed up websites and defend them from cyber threats. To put it simply, Cloudflare is a leading provider of performance and security solutions.

What is Cloudflare?

Cloudflare is a leading CDN provider that makes connections to your website secure, private, and fast. It provides internet infrastructure and website security services. At its simplest, a content delivery network (CDN) is a group of servers distributed around the world that work together to speed up the delivery of web content.

Websites and web applications are now everywhere, and there is one for almost any task. Most are meant to be private and secure, but many small businesses and non-technical site owners have limited access to security expertise. These are the sites that benefit most from Cloudflare's performance and security features. Cloudflare is an internet infrastructure company whose goal is to improve the security, performance, and reliability of anything connected to the Internet. Most of its core features are available for free, and setup is straightforward. Cloudflare offers both a UI and an API for administering your website.

How Cloudflare is revolutionizing the tech sector

IT and security teams will have to deal with emerging technology, risks, laws, and growing expenses in 2024. Organizations cannot afford to ignore AI in particular. Those who can't immediately and drastically change will find themselves falling behind their rivals. However, given that 39% of IT and security decision-makers feel that their companies need more control over their digital environments, it is more difficult to innovate swiftly.

How can you regain control and make sure your company has the efficiency and agility it needs to grow while staying secure? A connectivity cloud is a unified platform that connects all of your domains and consolidates resources, helping you take back control, cut costs, and reduce the risks of protecting a larger network environment.

Cloudflare is a leading connectivity cloud provider. It helps businesses reduce complexity and cost while making employees, applications, and networks faster and more secure everywhere. With a feature-rich, unified platform of cloud-native products and developer tools, Cloudflare's connectivity cloud gives organizations the control they need to operate, grow, and accelerate their business.

How does Cloudflare work?

Cloudflare improves website speed and security by sitting between visitors and the website. It offers a content delivery network (CDN), DDoS protection, and firewall services, which together shield websites from online threats such as malware and DDoS attacks. Because its network is global, Cloudflare can serve content from locations close to visitors and absorb large volumes of traffic while keeping the site secure.
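The caching idea behind a CDN can be sketched in a few lines. This is a toy model and not Cloudflare's actual routing or caching logic: the `EdgeServer` class, the `nearest_edge` helper, the region names, and the distance table are all hypothetical. It only shows why a second request for the same page does not have to travel back to the origin server.

```python
class EdgeServer:
    """Toy edge node: serves cached content, falls back to the origin on a miss."""

    def __init__(self, region, origin):
        self.region = region
        self.origin = origin        # dict of path -> content, standing in for the origin server
        self.cache = {}
        self.origin_fetches = 0     # how many times this edge had to go back to the origin

    def get(self, path):
        if path in self.cache:
            return self.cache[path], "HIT"
        content = self.origin[path]  # slow trip to the origin (simulated)
        self.origin_fetches += 1
        self.cache[path] = content
        return content, "MISS"


def nearest_edge(edges, user_region, distances):
    """Pick the edge with the smallest (hypothetical) distance to the user."""
    return min(edges, key=lambda edge: distances[(user_region, edge.region)])


if __name__ == "__main__":
    origin = {"/index.html": "<h1>Hello</h1>"}
    edges = [EdgeServer("eu", origin), EdgeServer("us", origin)]
    distances = {("paris", "eu"): 1, ("paris", "us"): 8}

    edge = nearest_edge(edges, "paris", distances)
    print(edge.region, edge.get("/index.html"))  # first request goes to the origin
    print(edge.region, edge.get("/index.html"))  # repeat request is served from cache
```

A real CDN also handles cache expiry, invalidation, and traffic filtering, none of which this sketch attempts.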

How to Use Cloudflare?

To use Cloudflare, website owners create an account and add their websites to the Cloudflare dashboard. Cloudflare then scans the site's existing DNS (Domain Name System) records and automatically configures its CDN and security features. Once setup is complete, website owners can use the dashboard to monitor and adjust Cloudflare's settings.
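The same DNS records shown in the dashboard can also be read through Cloudflare's API. The sketch below only builds the HTTP request for listing a zone's DNS records and does not send it. It assumes the v4 REST endpoint `/zones/{zone_id}/dns_records` with bearer-token authentication. The helper name `build_dns_records_request` and the placeholder zone ID and token are illustrative, so check the current API documentation before relying on it.

```python
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4"


def build_dns_records_request(zone_id: str, api_token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists a zone's DNS records."""
    url = f"{API_BASE}/zones/{zone_id}/dns_records"
    headers = {
        "Authorization": f"Bearer {api_token}",  # API token created in the dashboard
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, headers=headers, method="GET")


if __name__ == "__main__":
    # Placeholder values; substitute a real zone ID and API token.
    request = build_dns_records_request("YOUR_ZONE_ID", "YOUR_API_TOKEN")
    print(request.get_method(), request.full_url)
    # To actually send it:
    #   with urllib.request.urlopen(request) as response:
    #       print(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it easy to inspect the URL and headers before any credentials leave your machine.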