#Azure API

Explore tagged Tumblr posts

Text

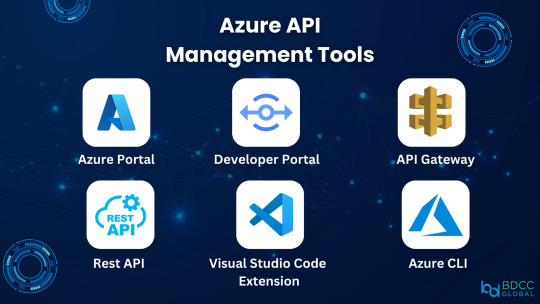

Seamless Integration: Dive into the Power of Azure's API Management Toolset.

Elevate your connectivity, security, and efficiency in one robust solution.

2 notes

·

View notes

Text

#Api#Microsoft API#Application Development#Development#Azure Api#Cognitive Services API#Dynamics 365 APIs#Windows API

0 notes

Text

Abathur

At Abathur, we believe technology should empower, not complicate.

Our mission is to provide seamless, scalable, and secure solutions for businesses of all sizes. With a team of experts specializing in various tech domains, we ensure our clients stay ahead in an ever-evolving digital landscape.

Why Choose Us? Expert-Led Innovation – Our team is built on experience and expertise. Security First Approach – Cybersecurity is embedded in all our solutions. Scalable & Future-Proof – We design solutions that grow with you. Client-Centric Focus – Your success is our priority.

#Software Development#Web Development#Mobile App Development#API Integration#Artificial Intelligence#Machine Learning#Predictive Analytics#AI Automation#NLP#Data Analytics#Business Intelligence#Big Data#Cybersecurity#Risk Management#Penetration Testing#Cloud Security#Network Security#Compliance#Networking#IT Support#Cloud Management#AWS#Azure#DevOps#Server Management#Digital Marketing#SEO#Social Media Marketing#Paid Ads#Content Marketing

2 notes

·

View notes

Text

Sistemas de Recomendación y Visión por Computadora: Las IAs que Transforman Nuestra Experiencia Digital

Sistemas de Recomendación: ¿Qué son y para qué sirven? Los sistemas de recomendación son tecnologías basadas en inteligencia artificial diseñadas para predecir y sugerir elementos (productos, contenidos, servicios) que podrían interesar a un usuario específico. Estos sistemas analizan patrones de comportamiento, preferencias pasadas y similitudes entre usuarios para ofrecer recomendaciones…

#Amazon Recommendation System#Amazon Rekognition#Google Cloud Vision API#Google News#IBM Watson Visual Recognition#inteligencia artificial#machine learning#Microsoft Azure Computer Vision#Netflix Recommendation Engine#OpenAI CLIP#personalización#sistemas de recomendación#Spotify Discover Weekly#visión por computadora#YouTube Algorithm

0 notes

Text

Build Your Own QR Code Generator API with Azure Functions

The Azure Function QR Code Generator API is a scalable, cost-effective, and customizable solution for generating dynamic QR codes on demand. Unlike existing third-party APIs, this approach enables businesses to create their own API, ensuring greater flexibility, security, and ease of access.

Key Features of Azure Function QR Code Generator API:

✅ Dynamic QR Code Generation – Instantly generate QR codes for URLs, text, or other content as per user input. ✅ Customization Options – Modify size, color, and error correction levels to match your specific needs. ✅ RESTful API – Seamlessly integrate the API with various programming languages and platforms. ✅ Scalable & Reliable – Built on Azure Functions, it scales automatically to meet demand, ensuring high availability. ✅ Cost-Effective – Uses a pay-as-you-go model, reducing unnecessary expenses.

How It Works:

1️⃣ API Request – Users send an HTTP request with the required content and customization parameters. 2️⃣ Function Execution – The Azure Function processes the request and dynamically generates the QR code. 3️⃣ QR Code Generation – A QR code generation library creates the image in real-time. 4️⃣ Response – The API returns the QR code image in formats like PNG or SVG for easy access.

Use Cases:

🚀 Marketing Campaigns – Use QR codes on flyers, posters, and ads to drive traffic to websites or promotions. 📦 Asset Tracking – Efficiently manage inventory and product tracking. 🎟 Event Management – Simplify event check-ins and attendee tracking with QR-based tickets. 🔐 Authentication & Access Control – Implement two-factor authentication and secure login systems.

0 notes

Text

Farewell #Skype. Here's how their #API worked.

So, with the shutdown of Skype in May 2025, only two months away, there is not much need to hold on tight to our source code for the Skype API. It worked well for us for years on AvatarAPI.com but with the imminent shutdown, their API will undoubtedly stop working as soon as Skype is shut down, and will no longer be relevant, even if the API stays active for a little while later. In this post,…

0 notes

Text

#Remote IoT APIs#IoT Device Management#Google Cloud IoT Core#AWS IoT#Microsoft Azure IoT#ThingSpeak#Losant IoT#IBM Watson IoT#IoT Solutions 2025

0 notes

Text

Mastering Azure Container Apps: From Configuration to Deployment

Thank you for following our Azure Container Apps series! We hope you're gaining valuable insights to scale and secure your applications. Stay tuned for more tips, and feel free to share your thoughts or questions. Together, let's unlock the Azure's Power.

#API deployment#application scaling#Azure Container Apps#Azure Container Registry#Azure networking#Azure security#background processing#Cloud Computing#containerized applications#event-driven processing#ingress management#KEDA scalers#Managed Identities#microservices#serverless platform

0 notes

Text

Azure API Management provides robust tools for securing and scaling your APIs, ensuring reliable performance and protection against threats. This blog post covers best practices for implementing Azure API Management, including authentication methods, rate limiting, and monitoring. Explore how Azure’s features can help you manage your API lifecycle effectively, enhance security protocols, and scale your services to handle increasing traffic. Get practical tips for optimizing your API management strategy with Azure.

0 notes

Text

APIs in Action: Unlocking the Potential of APIs in Today's Digital Landscape

Hi! In today’s world, APIs (Application Programming Interfaces) are essential for connecting applications and services, driving digital innovation. But with the rise of hybrid and multi-cloud setups, effective API management becomes essential for ensuring security and efficiency. That’s where APIs in Action, a virtual event dedicated to unlocking the full potential of APIs, comes in. Join us…

View On WordPress

0 notes

Text

Unlock the power of Azure API Management for your business! Discover why this tool is indispensable for streamlining operations and enhancing customer experiences.

0 notes

Text

Deep dive into Azure’s DDoS attacks landscape for 2023

Examining Microsoft Azure’s DDoS attack environment in depth Together with joy and festivities, the 2023 holiday season also brought with it a spike in Distributed Denial-of-Service (DDoS) attacks. The patterns of DDoS attacks this year point to a dynamic and intricate threat environment. These attacks have become increasingly sophisticated and diverse in terms of their tactics and scale, ranging from botnet delivery enabled by misconfigured Docker API endpoints to the appearance of NKAbuse malware that uses blockchain technology to launch denial-of-service attacks.

Azure’s holiday attack landscape for 2023 During the holiday season, Azure monitored the attack landscape and noticed a significant change in some of the attack patterns when compared to the previous year. This modification highlights the persistent attempts by malevolent entities to enhance their methods of posing a threat and try to get around DDoS defenses.

Daily Attack Volume: A maximum of 3,500 attacks per day were automatically mitigated by Azure’s strong security infrastructure. Remarkably, 15%–20% of these incidents were large-scale attacks, defined as those that sent more than one million packets per second (pps).

Geographic origins: Attack origins showed a shift, with 18% coming from the USA and 43% coming from China. Compared to the previous year, when both nations were equally represented as regional sources, this represents a shift.

Protocols for attacks: UDP-based attacks, which accounted for 78% of the attacks during the 2023 holiday season, were primarily directed towards web applications and gaming workloads. These include UDP reflected/amplified attacks, which primarily use quick UDP internet connections (QUIC) for reflection and domain name system (DNS) and simple service discovery protocol (SSDP) in their attacks. Interestingly, QUIC is becoming a more popular attack vector due to reflection or DDoS stressors that sporadically use UDP port 443. The attack patterns during the holiday season this year are very different from those of the previous year, when TCP-based attacks accounted for 65% of all attacks.

Attack that broke all previous records: An astounding UDP attack that peaked at 1.5 terabits per second (Tbps) was directed towards an Asian gaming client. This highly randomized attack, which involved multiple source IP addresses and ports and originated in China, Japan, the USA, and Brazil, was completely neutralized by Azure’s defenses.

Botnet evolution: Over the past year, more and more cybercriminals have used cloud resources- virtual machines in particular for DDoS attacks. Throughout the holiday season, attackers continued to try to take advantage of discounted Azure subscriptions all over the world. Azure tracked compromised account attempts in 39 Azure regions from mid-November 2023 to the end of the year. The main targets of these incidents were the USA and Europe, which accounted for roughly 67% of the total. These threats were successfully neutralized by Azure’s defense mechanisms.

Azure regions where attempts to exploit resources for DDOS attacks occurred. Setting the Threat in Context Global trends are mirrored in Azure’s DDoS attack trends for 2023. As Azure noted earlier in the year, attacks are becoming more politically motivated as a result of geopolitical tensions.

Attackers continue to be drawn to the rise of DDoS-for-hire services, also referred to as “stressor’s” and “booters.” These platforms have democratized the ability to launch potent DDoS attacks, making them affordable for less experienced criminals and easily accessible on forums frequented by cybercriminals. International law enforcement agencies have confirmed that there has been a surge in the availability and use of these services in recent years through operations such as Operation Power OFF, which last May targeted 13 domains linked to DDoS-for-hire platforms. Stressor’s are still in demand despite these efforts because they provide a variety of attack techniques and power, with some being able to launch attacks as fast as 1.5 Tbps.

Cloud power: Defending against the dynamic DDoS attacks The proliferation of large-scale botnets and DDoS-for-hire services presents a serious threat to online services and corporate operations. More cloud computing capacity is required to counter these threats in order to absorb the attack’s initial wave, divert erroneous traffic, and preserve legitimate traffic until patterns can be found. The cloud is our best defense when an attack involves tens of thousands of devices because it has the scale necessary to mitigate the largest attacks. Furthermore, because the cloud is distributed globally, being closer together aids in thwarting attacks that are directed towards the sources.

Providing strong defense to DDoS Attacks It is more important than ever to have strong defenses against DDoS attacks in a time when cyber threats are always changing. This is how Azure’s all-inclusive security solutions are made to protect your online data.

Due to the increased risk of DDoS attacks, a DDoS protection service like Azure DDoS Protection is essential. Real-time telemetry, monitoring, alerts, automatic attack mitigation, adaptive real-time tuning, and always-on traffic monitoring give this service full visibility on DDoS attacks.

Multi-Layered Defense: Use Azure Web Application Firewall (WAF) in conjunction with Azure DDoS Protection to set up a multi-layered defense for complete protection. Layers 3 and 4 of the network are protected by Azure DDoS Protection, and Layer 7 of the application layer is protected by Azure WAF. This combination offers defense against different kinds of DDoS attacks.

Alert Configuration: Without user input, Azure DDoS Protection is able to recognize and stop attacks. You can be informed about the state of public intellectual property resources that are protected by configuring alerts for active mitigations.

2024: Taking action against DDoS attacks The Christmas season of 2023 has brought to light the DDoS attacks’ constant and changing threat to the cyberspace. It is imperative that businesses improve and modify their cybersecurity plans as the new year approaches. This should be a period of learning, with an emphasis on strengthening defenses against DDoS attacks and remaining watchful for emerging strategies. Azure’s ability to withstand these advanced DDoS attacks demonstrates how important it is to have strong and flexible security measures in place to safeguard digital assets and maintain business continuity.

Read more on Govindhtech.com

0 notes

Text

#migration and integration services#api integration developer#software integration services#salesforce azure integration#mobile app integration

0 notes

Text

How to build autonomous AI agent with Google A2A protocol

New Post has been published on https://thedigitalinsider.com/how-to-build-autonomous-ai-agent-with-google-a2a-protocol/

How to build autonomous AI agent with Google A2A protocol

Why do we need autonomous AI agents?

Picture this: it’s 3 a.m., and a customer on the other side of the globe urgently needs help with their account. A traditional chatbot would wake up your support team with an escalation. But what if your AI agent could handle the request autonomously, safely, and correctly? That’s the dream, right?

The reality is that most AI agents today are like teenagers with learner’s permits; they need constant supervision. They might accidentally promise a customer a large refund (oops!) or fall for a clever prompt injection that makes them spill company secrets or customers’ sensitive data. Not ideal.

This is where Double Validation comes in. Think of it as giving your AI agent both a security guard at the entrance (input validation) and a quality control inspector at the exit (output validation). With these safeguards at a minimum in place, your agent can operate autonomously without causing PR nightmares.

How did I come up with the Double Validation idea?

These days, we hear a lot of talk about AI agents. I asked myself, “What is the biggest challenge preventing the widespread adoption of AI agents?” I concluded that the answer is trustworthy autonomy. When AI agents can be trusted, they can be scaled and adopted more readily. Conversely, if an agent’s autonomy is limited, it requires increased human involvement, which is costly and inhibits adoption.

Next, I considered the minimal requirements for an AI agent to be autonomous. I concluded that an autonomous AI agent needs, at minimum, two components:

Input validation – to sanitize input, protect against jailbreaks, data poisoning, and harmful content.

Output validation – to sanitize output, ensure brand alignment, and mitigate hallucinations.

I call this system Double Validation.

Given these insights, I built a proof-of-concept project to research the Double Validation concept.

In this article, we’ll explore how to implement Double Validation by building a multiagent system with the Google A2A protocol, the Google Agent Development Kit (ADK), Llama Prompt Guard 2, Gemma 3, and Gemini 2.0 Flash, and how to optimize it for production, specifically, deploying it on Google Vertex AI.

For input validation, I chose Llama Prompt Guard 2 just as an article about it reached me at the perfect time. I selected this model because it is specifically designed to guard against prompt injections and jailbreaks. It is also very small; the largest variant, Llama Prompt Guard 2 86M, has only 86 million parameters, so it can be downloaded and included in a Docker image for cloud deployment, improving latency. That is exactly what I did, as you’ll see later in this article.

How to build it?

The architecture uses four specialized agents that communicate through the Google A2A protocol, each with a specific role:

Image generated by author

Here’s how each agent contributes to the system:

Manager Agent: The orchestra conductor, coordinating the flow between agents

Safeguard Agent: The bouncer, checking for prompt injections using Llama Prompt Guard 2

Processor Agent: The worker bee, processing legitimate queries with Gemma 3

Critic Agent: The editor, evaluating responses for completeness and validity using Gemini 2.0 Flash

I chose Gemma 3 for the Processor Agent because it is small, fast, and can be fine-tuned with your data if needed — an ideal candidate for production. Google currently supports nine (!) different frameworks or methods for finetuning Gemma; see Google’s documentation for details.

I chose Gemini 2.0 Flash for the Critic Agent because it is intelligent enough to act as a critic, yet significantly faster and cheaper than the larger Gemini 2.5 Pro Preview model. Model choice depends on your requirements; in my tests, Gemini 2.0 Flash performed well.

I deliberately used different models for the Processor and Critic Agents to avoid bias — an LLM may judge its own output differently from another model’s.

Let me show you the key implementation of the Safeguard Agent:

Plan for actions

The workflow follows a clear, production-ready pattern:

User sends query → The Manager Agent receives it.

Safety check → The Manager forwards the query to the Safeguard Agent.

Vulnerability assessment → Llama Prompt Guard 2 analyzes the input.

Processing → If the input is safe, the Processor Agent handles the query with Gemma 3.

Quality control → The Critic Agent evaluates the response.

Delivery → The Manager Agent returns the validated response to the user.

Below is the Manager Agent’s coordination logic:

Time to build it

Ready to roll up your sleeves? Here’s your production-ready roadmap:

Local deployment

1. Environment setup

2. Configure API keys

3. Download Llama Prompt Guard 2

This is the clever part – we download the model once when we start Agent Critic for the first time and package it in our Docker image for cloud deployment:

Important Note about Llama Prompt Guard 2: To use the Llama Prompt Guard 2 model, you must:

Fill out the “LLAMA 4 COMMUNITY LICENSE AGREEMENT” at https://huggingface.co/meta-llama/Llama-Prompt-Guard-2-86M

Get your request to access this repository approved by Meta

Only after approval will you be able to download and use this model

4. Local testing

Screenshot for running main.py

Image generated by author

Screenshot for running client

Image generated by author

Screenshot for running tests

Image generated by author

Production Deployment

Here’s where it gets interesting. We optimize for production by including the Llama model in the Docker image:

1. Setup Cloud Project in Cloud Shell Terminal

Access Google Cloud Console: Go to https://console.cloud.google.com

Open Cloud Shell: Click the Cloud Shell icon (terminal icon) in the top right corner of the Google Cloud Console

Authenticate with Google Cloud:

Create or select a project:

Enable required APIs:

3. Setup Vertex AI Permissions

Grant your account the necessary permissions for Vertex AI and related services:

3. Create and Setup VM Instance

Cloud Shell will not work for this project as Cloud Shell is limited to 5GB of disk space. This project needs more than 30GB of disk space to build Docker images, get all dependencies, and download the Llama Prompt Guard 2 model locally. So, you need to use a dedicated VM instead of Cloud Shell.

4. Connect to VM

Screenshot for VM

Image generated by author

5. Clone Repository

6. Deployment Steps

Screenshot for agents in cloud

Image generated by author

7. Testing

Screenshot for running client in Google Vertex AI

Image generated by author

Screenshot for running tests in Google Vertex AI

Image generated by author

Alternatives to Solution

Let’s be honest – there are other ways to skin this cat:

Single Model Approach: Use a large LLM like GPT-4 with careful system prompts

Simpler but less specialized

Higher risk of prompt injection

Risk of LLM bias in using the same LLM for answer generation and its criticism

Monolith approach: Use all flows in just one agent

Latency is better

Cannot scale and evolve input validation and output validation independently

More complex code, as it is all bundled together

Rule-Based Filtering: Traditional regex and keyword filtering

Faster but less intelligent

High false positive rate

Commercial Solutions: Services like Azure Content Moderator or Google Model Armor

Easier to implement but less customizable

On contrary, Llama Prompt Guard 2 model can be fine-tuned with the customer’s data

Ongoing subscription costs

Open-Source Alternatives: Guardrails AI or NeMo Guardrails

Good frameworks, but require more setup

Less specialized for prompt injection

Lessons Learned

1. Llama Prompt Guard 2 86M has blind spots. During testing, certain jailbreak prompts, such as:

And

were not flagged as malicious. Consider fine-tuning the model with domain-specific examples to increase its recall for the attack patterns that matter to you.

2. Gemini Flash model selection matters. My Critic Agent originally used gemini1.5flash, which frequently rated perfectly correct answers 4 / 5. For example:

After switching to gemini2.0flash, the same answers were consistently rated 5 / 5:

3. Cloud Shell storage is a bottleneck. Google Cloud Shell provides only 5 GB of disk space — far too little to build the Docker images required for this project, get all dependencies, and download the Llama Prompt Guard 2 model locally to deploy the Docker image with it to Google Vertex AI. Provision a dedicated VM with at least 30 GB instead.

Conclusion

Autonomous agents aren’t built by simply throwing the largest LLM at every problem. They require a system that can run safely without human babysitting. Double Validation — wrapping a task-oriented Processor Agent with dedicated input and output validators — delivers a balanced blend of safety, performance, and cost.

Pairing a lightweight guard such as Llama Prompt Guard 2 with production friendly models like Gemma 3 and Gemini Flash keeps latency and budget under control while still meeting stringent security and quality requirements.

Join the conversation. What’s the biggest obstacle you encounter when moving autonomous agents into production — technical limits, regulatory hurdles, or user trust? How would you extend the Double Validation concept to high-risk domains like finance or healthcare?

Connect on LinkedIn: https://www.linkedin.com/in/alexey-tyurin-36893287/

The complete code for this project is available at github.com/alexey-tyurin/a2a-double-validation.

References

[1] Llama Prompt Guard 2 86M, https://huggingface.co/meta-llama/Llama-Prompt-Guard-2-86M

[2] Google A2A protocol, https://github.com/google-a2a/A2A

[3] Google Agent Development Kit (ADK), https://google.github.io/adk-docs/

#adoption#agent#Agentic AI#agents#agreement#ai#ai agent#AI AGENTS#API#APIs#approach#architecture#Article#Articles#Artificial Intelligence#assessment#autonomous#autonomous agents#autonomous ai#azure#bee#Bias#Building#challenge#chatbot#clone#Cloud#code#Community#content

0 notes

Text

Hire Best Azure API Lead Developers in India | ICS

ICS (Ingenious Corporate Solutions) is an IT consulting company in India that excels in providing business consulting services for various technologies and industries. with a strong focus on delivering innovative and effective solutions, ICS assists organizations in achieving their digital transformation goals and optimizing their IT infrastructure. With a vast network of talented professionals, ICS enables organizations to quickly and flexibly scale their IT teams. Whether there is a need for skilled Microsoft Dynamics CRM consultants, Azure API developers, Power BI experts, or .NET developers, ICS offers a pool of highly qualified candidates available for hire on a contract basis. This allows businesses to access specialized skills and expertise without the long-term commitment and overhead costs associated with traditional hiring processes. https://icsgroup.io/hire-azure-api-lead-developer/

0 notes

Text

The next rule we created was named “../”, and upon deleting that rule, the entire test SQL Server was also suddenly deleted, and we found ourselves in need of a new test server! The cause of the deletion of our test server can be found in the URL to which the DELETE request is sent. The URL the DELETE request was sent to is: /subscriptions/<subscriptionId>/resourceGroups/<rg>/providers/Microsoft.Sql/servers/test-4ad9a/firewallRules/../?api-version=2021-11-0. The firewall rule name “../” within the URL is treated as if it refers to the parent directory and to the SQL Server itself, which leads to the entire server being deleted.

With a little effort, it’s possible to create a rule that deletes any resource in the Azure tenant with the following name: ../../../../../../../<theResourceURL>?api-version=<relevant_version>#”.

Little Bobby Tables strikes again

18 notes

·

View notes