#Data Collection in Machine Learning


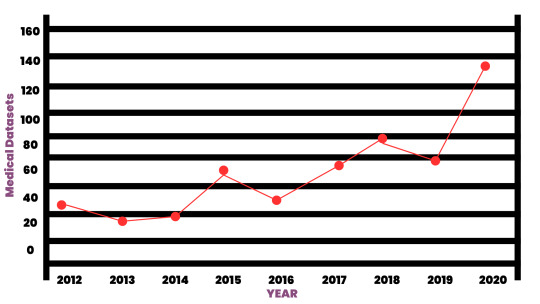

Medical datasets are a cornerstone of healthcare innovation in the AI era. Through careful analysis and interpretation, these datasets help healthcare professionals deliver more accurate diagnoses, personalized treatments, and proactive interventions. At Globose Technology Solutions, we are committed to harnessing the transformative power of medical datasets, pushing the boundaries of healthcare excellence, and working toward a future where every patient receives the care they deserve.

#Medical Datasets#Healthcare datasets#Healthcare AI Data Collection#Data Collection in Machine Learning#data collection company#data collection services#data collection


Navigating the Landscape of Data Collection in Machine Learning: Balancing Innovation and Ethical Considerations

Introduction:

As machine learning continues to propel advancements across various domains, the process of data collection plays a pivotal role in shaping the efficacy and ethical considerations of these intelligent systems. This article delves into the intricate landscape of data collection in machine learning, exploring its significance, challenges, and the ethical frameworks required to ensure responsible and unbiased AI development.

The Foundation of Machine Learning:

At the core of machine learning lies the need for vast and diverse datasets. These datasets serve as the foundation upon which machine learning models are trained, enabling them to recognize patterns, make predictions, and ultimately enhance their performance. The process of data collection becomes the initial step in this journey, demanding careful attention to ensure the quality, representativeness, and ethical sourcing of the data.

Ensuring Quality and Diversity:

Effective data collection hinges on the quality and diversity of the datasets. Gathering comprehensive and representative data ensures that machine learning models are equipped to handle a wide array of scenarios and demographics. This not only enhances the accuracy of predictions but also guards against biases that may arise from inadequate or skewed data representation.

Ethical Considerations in Data Collection:

The ethical dimension of data collection in machine learning is of paramount importance. Ensuring privacy, consent, and transparency in the acquisition of data is crucial to building trust between developers, AI systems, and the individuals contributing to these datasets. Striking a balance between innovation and ethical considerations requires a thoughtful approach, acknowledging the potential risks and implications associated with data collection processes.

Challenges and Biases:

Navigating the landscape of data collection comes with inherent challenges, including biases in datasets. Biases may arise from historical inequalities, the underrepresentation of certain groups, or algorithmic biases introduced by the data collection process itself. Understanding and addressing these biases is essential to avoid perpetuating unfair and discriminatory outcomes in machine learning applications.
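A simple first step toward spotting underrepresentation is to compare each group's share of a dataset with a reference share (for example, population statistics for the people the system is meant to serve). The sketch below is a minimal illustration under that assumption; the function name `representation_gaps`, the group labels, and the reference shares are all hypothetical.

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares):
    """Return observed share minus reference share for each group.

    Negative values mean the group is underrepresented relative to the
    (hypothetical) reference population.
    """
    counts = Counter(group_labels)
    total = len(group_labels)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        gaps[group] = observed - expected
    return gaps


# Hypothetical example: 100 records whose target population is split evenly.
labels = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
reference = {"A": 1 / 3, "B": 1 / 3, "C": 1 / 3}
for group, gap in sorted(representation_gaps(labels, reference).items()):
    print(f"{group}: {gap:+.2f}")
```

A large negative gap (group C here) suggests collecting more data for that group before training. This only measures representation; it does not detect biased labels or biased measurements.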

Technological Solutions and Responsible Practices:

Embracing technological solutions such as federated learning, differential privacy, and secure multi-party computation can contribute to responsible and privacy-preserving data collection practices. Implementing these techniques not only protects sensitive information but also empowers individuals to have more control over their data, fostering a more ethical and transparent data ecosystem.
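As a concrete illustration of differential privacy, the sketch below applies the Laplace mechanism to a counting query. Adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/ε gives ε-differential privacy for that single query. The helper names (`laplace_noise`, `private_count`) and the example records are hypothetical, and this is a teaching sketch rather than a hardened implementation: Python's `random` module is not a secure randomness source, so production systems should rely on an audited differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential draws with mean `scale`
    is Laplace-distributed with location 0 and scale `scale`.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1: adding or removing one person's
    record changes the true count by at most 1, so the noise scale is
    1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical usage: how many patients are 65 or older?
patients = [{"age": a} for a in (23, 67, 45, 71, 34, 80, 59)]
noisy = private_count(patients, lambda r: r["age"] >= 65, epsilon=0.5,
                      rng=random.Random(7))
print(round(noisy, 2))
```

A smaller ε adds more noise and gives stronger privacy; answering many queries about the same records consumes more of the overall privacy budget.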

The Future of Ethical Data Collection:

Looking forward, the future of machine learning depends on the continued evolution of ethical data collection practices. Stricter regulations, industry standards, and community-driven initiatives will shape the landscape, emphasizing the importance of fairness, accountability, and transparency in data collection processes. As machine learning technologies advance, ethical considerations in data collection will be instrumental in building a trustworthy and inclusive AI future.

Conclusion:

In the ever-expanding realm of machine learning, data collection emerges as a linchpin for innovation and ethical development. Balancing the quest for knowledge with a commitment to privacy, transparency, and fairness is imperative. By navigating the complex landscape of data collection with ethical frameworks and technological solutions, the machine learning community can foster a future where intelligent systems not only excel in performance but also reflect the values and diversity of the societies they serve.


In a silicon valley, throw rocks. Welcome to my tech blog.

Antiterf antifascist (which apparently needs stating). This sideblog is open to minors.

Liberation does not come at the expense of autonomy.

* I'm taking a break from tumblr for a while. Feel free to leave me asks or messages for when I return.

Frequent tags:

#tech#tech regulation#technology#big tech#privacy#data harvesting#advertising#technological developments#spyware#artificial intelligence#machine learning#data collection company#data analytics#dataspeaks#data science#data#llm#technews


Revolutionize business onboarding processes in the AI era - KYB solution

New Post has been published on https://thedigitalinsider.com/revolutionize-business-onboarding-processes-in-the-ai-era-kyb-solution-2/


Manual verification, checking, and onboarding are things of the past. Nowadays, with the emergence of artificial intelligence technology, almost all operations have become streamlined and automated.

Pre-trained artificial intelligence and machine learning algorithms help organizations reduce manual effort, which is time-consuming and error-prone. Humans make mistakes when they are fatigued or under heavy workloads.

However, AI technology is free from fatigue and workload pressure, and automated checks perform quick actions with just a single click. Therefore, companies have now replaced manual processes with automated ones and are moving toward a streamlined process for all operations.

Artificial intelligence has revolutionized the business onboarding process and enables organizations to streamline their operations regarding partnerships, investments, and other kinds of collaborations with other entities.

This blog post will highlight the role of AI technology in business onboarding and will explain how it has revolutionized the process.

How can AI revolutionize the onboarding process?

Companies deal with customers, employees, and other organizations for various purposes, so they need a streamlined onboarding process. Before onboarding an entity, a company must verify its authenticity and legitimacy; this verification is a major part of the onboarding process.

Traditionally, companies verify entities manually and perform every step of the onboarding process by hand. Employees collect various documents, analyze them, verify them, and then onboard the entities.

However, this manual work is no longer necessary: companies can now verify entities remotely and use artificial intelligence to streamline verification and onboarding.

Many companies offer advanced solutions that involve AI technology in their operation and provide a streamlined onboarding process for customers and businesses.

Companies that onboard other organizations as customers or partners can use a Know Your Business (KYB) service, which applies AI technology throughout its operations, protects against fraud, and streamlines onboarding.

A KYB solution uses AI checks to verify entities quickly and assess their risk potential, helping companies make well-informed onboarding decisions.

Role of automation in business verification and onboarding

Artificial intelligence (AI) enables automated business verification, customer authentication, and onboarding. Companies that employ AI technology in these operations can cut the time needed to onboard new entities by 50%.

Pretrained algorithms within the business verification and onboarding process provide thorough screening, cross-checking and verifying information in one click. With the help of artificial intelligence, companies have devised all-in-one solutions for streamlined onboarding, such as Know Your Business (KYB).

In an automated process, companies verify collected documents through automated checks, which highlight risk potential in case the documents are forged or fake.
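The post does not describe how these automated checks work internally, and commercial KYB products use their own proprietary methods. As a hypothetical illustration only, the sketch below shows one simple rule-based building block of automated business verification: cross-checking the details a business submits against a record already retrieved from a company registry and emitting a risk flag for each mismatch. The classes, field names, and status values are assumptions; the registry lookup itself is not shown, and no machine learning is involved.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BusinessSubmission:
    """Details a business provides during onboarding (hypothetical fields)."""
    legal_name: str
    registration_number: str
    registered_address: str


@dataclass
class RegistryRecord:
    """A record already fetched from a company registry (lookup not shown)."""
    legal_name: str
    registration_number: str
    registered_address: str
    status: str  # hypothetical values such as "active" or "dissolved"


def normalize(text: str) -> str:
    """Lower-case, turn punctuation into spaces, and collapse whitespace."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in text)
    return " ".join(cleaned.split())


def risk_flags(submission: BusinessSubmission, record: Optional[RegistryRecord]) -> list:
    """Return human-readable risk flags; an empty list means no mismatch was found."""
    if record is None:
        return ["no matching registry record"]
    flags = []
    for field in ("legal_name", "registration_number", "registered_address"):
        if normalize(getattr(submission, field)) != normalize(getattr(record, field)):
            flags.append(f"{field} mismatch")
    if record.status != "active":
        flags.append(f"registry status is {record.status!r}")
    return flags


# Hypothetical usage: formatting differences alone should not raise flags.
sub = BusinessSubmission("Acme Ltd.", "12-345", "1 Main St")
rec = RegistryRecord("ACME LTD", "12 345", "1 Main St.", "active")
print(risk_flags(sub, rec))
```

In this sketch, a non-empty list would route the application to a human reviewer rather than reject it outright.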

Fraudsters produce fake identity papers that are difficult to spot manually with the human eye, so companies need sharp AI-based detectors to identify fraudsters' tactics. Along with a streamlined onboarding process, automated AI checks therefore offer strong protection against fraudsters.

Businesses that have to deal with other business entities can easily identify shell companies through advanced verification solutions.

How does a streamlined business onboarding process contribute to growth and success?

A streamlined onboarding process is a major draw for customers. Nobody prefers time-consuming, complex verification and onboarding processes.

Artificial intelligence offers a streamlined onboarding process that enables organizations to increase their users' interest and satisfaction. Through remote, AI-assisted digital verification, companies let their users and clients get verified from home, which makes the organizations more reliable and credible.

Moreover, because automated services can deliver more accurate results, they make a business more credible and trustworthy, helping it attract more clients and win business opportunities.

Therefore, a streamlined verification and onboarding process contributes to business growth and success as it enables organizations to onboard more and more clients and become partners with highly successful businesses.

Organizations that do not have a streamlined business onboarding process may lose great clients and partners. People always prefer to have simplified operations, which artificial intelligence technology offers in the form of a streamlined business onboarding system.

Final words

Companies can utilize artificial intelligence technology to streamline the process to onboard a business. Automated checks of artificial intelligence within the streamlined business onboarding process enable organizations to become partners with successful organizations and attract more clients.

Users prefer a streamlined onboarding and verification process, which artificial intelligence makes possible. AI has revolutionized the onboarding process and verification protocols for organizations.

Manual verification and data collection are now things of the past, and businesses utilize remote services, digital means, and automated techniques to streamline all operations, such as document collection, analysis, and verification.

#ai#AI technology#Algorithms#Analysis#artificial#Artificial Intelligence#automation#Blog#board#Business#Companies#data#data collection#deal#employees#era#eye#fatigue#form#fraud#fraudsters#growth#how#human#identity#intelligence#investments#it#learning#Machine Learning


Video Annotation Services: Transforming Autonomous Vehicle Training

Introduction:

As autonomous vehicles (AVs) progressively shape the future of transportation, the underlying technology depends heavily on precise and comprehensive datasets. A pivotal element enabling this progress is video annotation services. These services enable machine learning models to accurately perceive, interpret, and react to their environment, making them essential for training autonomous vehicles.

The Importance of Video Annotation in Autonomous Vehicles

Autonomous vehicles utilize sophisticated computer vision systems to analyze real-world data. These systems must be capable of recognizing and responding to a variety of road situations, including the identification of pedestrians, vehicles, traffic signals, road signs, lane markings, and potential hazards. Video annotation services play a crucial role in converting raw video footage into labeled datasets, allowing AI models to effectively "learn" from visual information.

The contributions of video annotation to AV training include:

Object Detection and Classification: Video annotation facilitates the identification and labeling of objects such as cars, bicycles, pedestrians, and streetlights. These labels assist the AI model in comprehending various objects and their relevance on the road.

Lane and Boundary Detection: By annotating road lanes and boundaries, autonomous vehicles can maintain their designated paths and execute accurate turns, thereby improving safety and navigation.

Tracking Moving Objects: Frame-by-frame annotation allows AI models to monitor the movement of objects, enabling them to predict trajectories and avoid collisions.

Semantic Segmentation: Annotating each pixel within a frame offers a comprehensive understanding of road environments, including sidewalks, crosswalks, and off-road areas.

Scenario-Based Training: Annotated videos that encompass a range of driving scenarios (such as urban traffic, highways, and challenging weather conditions) aid in training AVs to navigate real-world complexities.
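To make frame-by-frame annotation and object tracking concrete, the sketch below uses a hypothetical minimal schema (not COCO, KITTI, or any vendor's format): each bounding box carries a frame index and a track ID, and grouping boxes by track ID turns per-frame labels into trajectories. The class and function names are illustrative, and the velocity estimate is a deliberately naive first-to-last average.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """One labeled bounding box in one video frame (hypothetical schema)."""
    frame: int      # frame index within the video
    track_id: int   # same ID across frames means the same physical object
    label: str      # e.g. "pedestrian", "car", "traffic_light"
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def center(self):
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)


def trajectories(annotations):
    """Group boxes by track ID; return each object's (frame, center) path in frame order."""
    paths = defaultdict(list)
    for ann in sorted(annotations, key=lambda a: a.frame):
        paths[ann.track_id].append((ann.frame, ann.center()))
    return dict(paths)


def velocity(path):
    """Naive average center displacement per frame, first to last observation."""
    (f0, (x0, y0)), (f1, (x1, y1)) = path[0], path[-1]
    if f1 == f0:
        return (0.0, 0.0)
    return ((x1 - x0) / (f1 - f0), (y1 - y0) / (f1 - f0))


# Hypothetical labels: a pedestrian moving right, and a car seen in one frame.
anns = [
    BoxAnnotation(0, 7, "pedestrian", 10, 20, 30, 60),
    BoxAnnotation(2, 7, "pedestrian", 14, 20, 34, 60),
    BoxAnnotation(1, 7, "pedestrian", 12, 20, 32, 60),
    BoxAnnotation(0, 3, "car", 100, 50, 200, 120),
]
for track_id, path in trajectories(anns).items():
    print(track_id, velocity(path))
```

Consistent track IDs are what let a model learn motion: without them, the same pedestrian in consecutive frames would look like unrelated objects.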

The Importance of High-Quality Video Annotation Services

The development of autonomous vehicles necessitates extensive annotated video data. The precision and dependability of these annotations significantly influence the effectiveness of AI models. Here are the reasons why collaborating with a professional video annotation service provider is essential:

Expertise in Complex Situations: Professionals possess a deep understanding of the intricacies involved in labeling complex and dynamic road environments.

Utilization of Advanced Tools and Techniques: High-quality video annotation services employ state-of-the-art tools, such as 2D and 3D annotation, bounding boxes, polygons, and semantic segmentation.

Scalability: As the development of autonomous vehicles expands, service providers are equipped to manage large volumes of data efficiently.

Consistency and Precision: Automated quality checks, along with manual reviews, guarantee that annotations adhere to the highest standards.
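One common automated quality check is to compare two annotators' boxes using intersection over union (IoU) and route low-agreement boxes to manual review. The sketch below assumes boxes are `(x_min, y_min, x_max, y_max)` tuples keyed by `(frame, track_id)`; the 0.7 threshold and the helper names are hypothetical choices, not an industry standard.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_for_review(primary, reviewer, threshold=0.7):
    """Compare two annotators' boxes, keyed by (frame, track_id).

    Returns the keys that only one annotator labeled, or whose boxes
    overlap less than `threshold`; these go to manual review.
    """
    flagged = []
    for key in sorted(set(primary) | set(reviewer)):
        if key not in primary or key not in reviewer:
            flagged.append(key)
        elif iou(primary[key], reviewer[key]) < threshold:
            flagged.append(key)
    return flagged


# Hypothetical labels from two annotators for one tracked object.
annotator_a = {(0, 1): (0, 0, 10, 10), (1, 1): (0, 0, 10, 10)}
annotator_b = {(0, 1): (0, 0, 10, 10), (1, 1): (5, 0, 15, 10), (2, 1): (0, 0, 1, 1)}
print(flag_for_review(annotator_a, annotator_b))  # -> [(1, 1), (2, 1)]
```

Automated agreement checks like this narrow down where human reviewers should look; they do not tell you which annotator was right.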

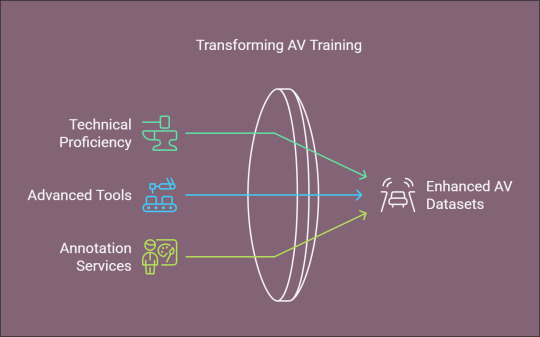

How We Transform Video Annotation

We focus on providing exceptional image and video annotation services specifically designed for training autonomous vehicles. Our team merges technical proficiency with advanced tools to generate datasets that foster innovation within the AV sector.

Key Features of Our Offerings:

Tailored annotation solutions to address specific project requirements.

Support for a variety of annotation types, including bounding boxes, 3D point clouds, and polygon annotations.

Stringent quality assurance protocols to ensure data accuracy.

Scalable solutions capable of accommodating projects of any size or complexity.

By selecting us, you secure a dependable partner dedicated to enhancing the performance of your AI models and accelerating the advancement of autonomous vehicles.

The Future of Autonomous Vehicle Training

As the demand for autonomous vehicles continues to rise, the need for accurate and diverse datasets will become increasingly critical. Video annotation services will play a pivotal role in enabling safer, smarter, and more efficient AV systems. By investing in high-quality annotation services, companies can ensure their AI models are well prepared to navigate the complexities of real-world environments. The success of your AI initiatives, whether in self-driving vehicles, drones, or other autonomous systems, relies heavily on video annotation services. Collaborating with specialists such as Globose Technology Solutions can help convert unprocessed video data into valuable insights, propelling your innovation efforts.


Video Datasets for AI: Catalyzing Advancements in Machine Learning

Introduction:

In the realm of AI, data serves as the essential catalyst for innovation. Video datasets, in particular, have become a vital element in the advancement of state-of-the-art AI applications. Spanning areas such as computer vision and natural language processing, video datasets enable machines to comprehend intricate visual and temporal data. This article explores the significance of video datasets, their diverse applications, the challenges associated with their creation, and notable resources to start your AI endeavors.

The Significance of Video Datasets in AI

Video datasets offer sequential visual data, enabling AI systems to analyze and interpret changes over time. In contrast to static image datasets, video datasets encapsulate motion, interactions, and temporal dynamics, rendering them essential for various tasks, including:

Action Recognition: Detecting human activities, such as walking, running, or dancing, from video footage.

Object Tracking: Monitoring objects as they traverse through frames in a video.

Event Detection: Identifying critical events, such as accidents, suspicious activities, or natural occurrences.

Scene Understanding: Analyzing the environment and context within video frames.
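Because full videos are long, models for tasks like action recognition usually see a fixed-length clip of frames rather than every frame. A minimal sketch of one simple strategy, uniform segment sampling, follows: split the video into equal segments and take the middle frame of each. The function name and the padding rule for short videos are assumptions made for illustration.

```python
def sample_clip_indices(num_frames, clip_len):
    """Pick `clip_len` frame indices spread evenly across a video.

    Sampling across the whole video (rather than its first frames)
    preserves the temporal context that motion-based tasks depend on.
    """
    if num_frames <= 0 or clip_len <= 0:
        raise ValueError("num_frames and clip_len must be positive")
    if clip_len >= num_frames:
        # Short video: use every frame, then repeat the last one as padding.
        return list(range(num_frames)) + [num_frames - 1] * (clip_len - num_frames)
    step = num_frames / clip_len
    # Middle frame of each of `clip_len` equal-length segments.
    return [int(step * i + step / 2) for i in range(clip_len)]


print(sample_clip_indices(300, 8))  # -> [18, 56, 93, 131, 168, 206, 243, 281]
```

During training, many pipelines instead pick a random frame within each segment, which acts as a light form of temporal augmentation.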

By training AI models with video datasets, researchers can develop systems capable of real-time decision-making, predictive analytics, and intricate pattern recognition.

Applications of Video Datasets in AI

Video datasets have opened up numerous opportunities across a variety of sectors and fields. Below are some key applications:

Autonomous Vehicles: Video datasets are essential for training autonomous vehicles to identify pedestrians, other cars, road signs, and traffic signals. Datasets such as the Waymo Open Dataset and KITTI offer labeled video data that supports research in self-driving technology.

Healthcare: In the realm of medical diagnostics, video datasets are utilized to examine endoscopic, ultrasound, and surgical videos. AI models developed using these datasets can aid healthcare professionals in identifying abnormalities and enhancing patient care.

Sports Analytics: AI-driven sports analysis depends on video datasets to monitor player movements, evaluate strategies, and derive insights. Technologies like Hawk-Eye in tennis and VAR in soccer leverage video data to improve decision-making processes.

Surveillance and Security: Video datasets are crucial for applications in facial recognition, anomaly detection, and the surveillance of extensive environments for security purposes.

Entertainment and Media: Video datasets facilitate the training of recommendation systems for streaming services, automate video editing processes, and enhance augmented reality (AR) and virtual reality (VR) experiences.

Robotics: To enable robots to engage with their surroundings, video data is analyzed to comprehend spatial and temporal dynamics, such as object manipulation and obstacle navigation.

Popular Video Datasets for AI Research

For the effective development of AI models, researchers depend on high-quality video datasets. Below are some commonly utilized resources:

YouTube-8M: This extensive dataset comprises millions of labeled videos obtained from YouTube, frequently employed for video classification tasks.

UCF101: This dataset features 13,320 video clips categorized into 101 human action types, making it particularly suitable for action recognition studies.

Kinetics: Created by DeepMind, Kinetics comprises hundreds of thousands of video clips annotated with human actions, making it a widely used resource for activity recognition tasks.

AVA (Atomic Visual Actions): AVA is dedicated to spatiotemporal action recognition, offering comprehensive annotations for actions occurring within video segments.

Sports-1M: With over one million sports videos, this dataset serves as a primary resource for sports analytics and action recognition.

Cityscapes: Although it is mainly an image dataset, Cityscapes also includes video sequences that facilitate tasks such as semantic segmentation and object detection in urban settings.

Charades: This dataset is tailored for activity recognition and human-object interaction, featuring annotated video clips that depict everyday household activities.

Challenges in Creating Video Datasets

The development and upkeep of video datasets present several notable challenges:

Data Collection: Acquiring high-quality video data necessitates considerable resources, including cameras, storage solutions, and bandwidth. Achieving diversity in scenarios, environments, and subjects can be a lengthy process.

Annotation: The labeling of video datasets is a labor-intensive endeavor. Annotators are required to identify objects, actions, and events in each frame, which can be overwhelming for extensive datasets.

Privacy Concerns: Videos frequently contain recognizable faces and sensitive information, which raises ethical considerations. Adhering to privacy regulations, such as GDPR, is essential.

Data Imbalance: Certain categories or actions may be insufficiently represented in a dataset, resulting in biased AI models.

Storage and Processing: Video data demands substantial storage capacity and computational resources for effective processing and analysis, presenting logistical challenges.
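One common corrective measure for data imbalance is random oversampling: duplicate randomly chosen samples from smaller classes until every class matches the largest one. The sketch below assumes samples are hypothetical `(clip_id, label)` pairs; the function name is also an assumption.

```python
import random
from collections import defaultdict


def oversample(samples, label_of, rng):
    """Randomly duplicate minority-class samples until every class
    has as many samples as the largest class."""
    if not samples:
        return []
    by_label = defaultdict(list)
    for sample in samples:
        by_label[label_of(sample)].append(sample)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Draw with replacement from this class's existing samples.
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    rng.shuffle(balanced)
    return balanced


# Hypothetical (clip_id, label) pairs with a rare "fall" class.
clips = [("c1", "walk"), ("c2", "walk"), ("c3", "walk"), ("c4", "fall")]
balanced = oversample(clips, label_of=lambda s: s[1], rng=random.Random(0))
print(balanced)
```

Duplicates add no new information, so heavy oversampling can make a model overfit to the repeated clips; class-weighted losses or collecting more footage of rare classes are common alternatives.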

Best Practices for Utilizing Video Datasets

To maximize the effectiveness of video datasets, it is essential to adhere to the following best practices:

Select an Appropriate Dataset: Choose a dataset that aligns with your project objectives, taking into account factors such as size, diversity, and annotation quality.

Prepare Your Data: Ensure your video data is cleaned and normalized to eliminate inconsistencies and enhance model performance. This may involve resizing frames, changing formats, and extracting keyframes.

Enhance Data: Implement data augmentation strategies, including flipping, cropping, or introducing noise, to broaden dataset diversity and strengthen model resilience.

Mitigate Bias: Examine your dataset for potential biases and implement corrective measures, such as oversampling categories that are underrepresented.

Utilize Pretrained Models: Begin with pretrained models that have been developed on similar datasets to conserve time and computational resources.
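Of the practices above, augmentation is easy to get subtly wrong for video: a transform should usually be applied identically to every frame of a clip, because flipping one frame but not the next would create motion that never happened. The sketch below draws one random horizontal flip and one random crop per clip and applies them to all frames. It assumes frames are nested lists of equal-size pixel rows; the function name is hypothetical, and real pipelines would typically operate on arrays or tensors instead.

```python
import random


def augment_clip(clip, crop_h, crop_w, rng):
    """Apply ONE random horizontal flip and ONE random crop to every frame.

    `clip` is a list of frames; each frame is a list of equal-length rows
    of pixel values. Sampling the flip and crop once per clip (not once per
    frame) keeps the motion between frames physically consistent.
    """
    height, width = len(clip[0]), len(clip[0][0])
    if crop_h > height or crop_w > width:
        raise ValueError("crop size exceeds frame size")
    flip = rng.random() < 0.5
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    augmented = []
    for frame in clip:
        rows = [row[::-1] if flip else row for row in frame]
        augmented.append([row[left:left + crop_w] for row in rows[top:top + crop_h]])
    return augmented


# Tiny hypothetical clip: 2 frames of 3x4 "pixels".
clip = [[[f * 100 + r * 10 + c for c in range(4)] for r in range(3)] for f in range(2)]
out = augment_clip(clip, crop_h=2, crop_w=2, rng=random.Random(1))
print(out)
```

Horizontal flipping is unsafe when direction carries meaning, for example in classes like "turn left" versus "turn right" or when reading road signs.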

Conclusion

Video datasets are revolutionizing the field of artificial intelligence by enabling machines to comprehend and analyze intricate visual and temporal data. Their applications span various domains, including autonomous vehicles, healthcare, and entertainment, and continue to expand. Nevertheless, the complexities involved in creating, annotating, and managing these datasets underscore the need for ethical and resource-efficient practices.

By utilizing high-quality video datasets and following best practices, researchers and developers can fully harness the potential of AI, fostering innovation and addressing real-world challenges. Whether you are an experienced AI expert or a newcomer, video datasets present limitless opportunities to advance the frontiers of artificial intelligence.


How Video Transcription Services Improve AI Training Through Annotated Datasets

Video transcription services play a crucial role in AI training by converting raw video data into structured, annotated datasets, enhancing the accuracy and performance of machine learning models.

#video transcription services#aitraining#Annotated Datasets#machine learning#ultimate sex machine#Data Collection for AI#AI Data Solutions#Video Data Annotation#Improving AI Accuracy


How Can Data Science Predict Consumer Demand in an Ever-Changing Market?

In today’s dynamic business landscape, understanding consumer demand is more crucial than ever. As market conditions fluctuate, companies must rely on data-driven insights to stay competitive. Data science has emerged as a powerful tool that enables businesses to analyze trends and predict consumer behavior effectively. For those interested in mastering these techniques, pursuing an AI course in Chennai can provide the necessary skills and knowledge.

The Importance of Predicting Consumer Demand

Predicting consumer demand involves anticipating how much of a product or service consumers will purchase in the future. Accurate demand forecasting is essential for several reasons:

Inventory Management: Understanding demand helps businesses manage inventory levels, reducing the costs associated with overstocking or stockouts.

Strategic Planning: Businesses can make informed decisions regarding production, marketing, and sales strategies by accurately predicting consumer preferences.

Enhanced Customer Satisfaction: By aligning supply with anticipated demand, companies can ensure that they meet customer needs promptly, improving overall satisfaction.

Competitive Advantage: Organizations that can accurately forecast consumer demand are better positioned to capitalize on market opportunities and outperform their competitors.

How Data Science Facilitates Demand Prediction

Data science leverages various techniques and tools to analyze vast amounts of data and uncover patterns that can inform demand forecasting. Here are some key ways data science contributes to predicting consumer demand:

1. Data Collection

The first step in demand prediction is gathering relevant data. Data scientists collect information from multiple sources, including sales records, customer feedback, social media interactions, and market trends. This comprehensive dataset forms the foundation for accurate demand forecasting.

2. Data Cleaning and Preparation

Once the data is collected, it must be cleaned and organized. This involves removing inconsistencies, handling missing values, and transforming raw data into a usable format. Proper data preparation is crucial for ensuring the accuracy of predictive models.
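As an example of handling missing values, the sketch below fills gaps in a sales series by linear interpolation between the nearest observed values and carries the nearest value outward at the edges. It assumes a daily series with `None` for days with no recorded data; the function name is hypothetical, and in practice pandas' `Series.interpolate` covers this case. Interpolation is the wrong choice when a gap actually means zero sales rather than missing data, so that distinction should be settled first.

```python
def interpolate_missing(series):
    """Fill None entries by linear interpolation between the nearest known values.

    Gaps at the start or end are filled with the nearest known value.
    """
    known = [i for i, v in enumerate(series) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled = list(series)
    for i, value in enumerate(series):
        if value is not None:
            continue
        prev = max((k for k in known if k < i), default=None)
        nxt = min((k for k in known if k > i), default=None)
        if prev is None:
            filled[i] = series[nxt]
        elif nxt is None:
            filled[i] = series[prev]
        else:
            frac = (i - prev) / (nxt - prev)
            filled[i] = series[prev] + frac * (series[nxt] - series[prev])
    return filled


# Hypothetical daily unit sales with four unrecorded days.
daily_sales = [None, 10, None, None, 40, None]
print(interpolate_missing(daily_sales))
```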

3. Exploratory Data Analysis (EDA)

Data scientists perform exploratory data analysis to identify patterns and relationships within the data. EDA techniques, such as data visualization and statistical analysis, help analysts understand consumer behavior and the factors influencing demand.

4. Machine Learning Models

Machine learning algorithms play a vital role in demand prediction. These models can analyze historical data to identify trends and make forecasts. Common algorithms used for demand forecasting include:

Linear Regression: This model estimates the relationship between dependent and independent variables, making it suitable for predicting sales based on historical trends.

Time Series Analysis: Time series models analyze data points collected over time to identify seasonal patterns and trends, which are crucial for accurate demand forecasting.

Decision Trees: These models split data into branches based on decision rules, allowing analysts to understand the factors influencing consumer demand.
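The sketch below combines the first two ideas in the simplest possible way: an ordinary least squares line fitted against the time index (linear regression on time) plus a seasonal adjustment equal to the average residual at each position in the cycle (for example, day of week with `season_length=7`). It is a hypothetical baseline for illustration, not a substitute for established time series methods such as ARIMA, and the function names are assumptions.

```python
def fit_linear_trend(y):
    """Ordinary least squares fit of y = a + b * t for t = 0, 1, ..., n - 1."""
    n = len(y)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(y) / n
    cov = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    var = sum((t - t_mean) ** 2 for t in range(n))
    b = cov / var
    a = y_mean - b * t_mean
    return a, b


def forecast(y, horizon, season_length):
    """Linear trend plus an additive seasonal offset.

    The offset for each position in the season (e.g. day of week when
    season_length=7) is the average residual, actual minus trend, observed
    at that position.
    """
    if season_length < 1:
        raise ValueError("season_length must be at least 1")
    a, b = fit_linear_trend(y)
    residuals = [v - (a + b * t) for t, v in enumerate(y)]
    seasonal = []
    for pos in range(season_length):
        vals = residuals[pos::season_length]
        seasonal.append(sum(vals) / len(vals) if vals else 0.0)
    n = len(y)
    return [a + b * t + seasonal[t % season_length] for t in range(n, n + horizon)]


# Hypothetical four weeks of daily sales with a weekend peak.
sales = [120, 130, 125, 140, 160, 210, 190] * 4
print([round(v, 1) for v in forecast(sales, horizon=7, season_length=7)])
```

Because the trend is fitted before the seasonal offsets, a strong seasonal pattern can tilt the fitted slope slightly; proper decomposition methods handle this more carefully.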

5. Real-Time Analytics

In an ever-changing market, real-time analytics becomes vital. Data science allows businesses to monitor consumer behavior continuously and adjust forecasts based on the latest data. This agility ensures that companies can respond quickly to shifts in consumer preferences.
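Updating a forecast as each new data point arrives can be as simple as simple exponential smoothing, where every observation nudges a running level: `level = alpha * y + (1 - alpha) * level`, and that level serves as the next-period forecast. The class name and the `alpha` value in the usage lines are hypothetical; a higher `alpha` reacts faster to shifts in demand but is also more sensitive to noise.

```python
class SimpleExpSmoother:
    """Simple exponential smoothing, updated one observation at a time.

    level_t = alpha * y_t + (1 - alpha) * level_(t-1); the current level is
    the forecast for the next period.
    """

    def __init__(self, alpha):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.level = None

    def update(self, observation):
        """Fold one new observation into the level and return the new level."""
        if self.level is None:
            self.level = float(observation)  # initialize with the first value
        else:
            self.level = self.alpha * observation + (1 - self.alpha) * self.level
        return self.level

    def forecast(self):
        """Forecast for the next period (flat: the current level)."""
        if self.level is None:
            raise ValueError("no observations yet")
        return self.level


# Hypothetical stream of daily orders arriving one at a time.
smoother = SimpleExpSmoother(alpha=0.3)
for orders in [200, 210, 190, 260, 270]:
    smoother.update(orders)
print(round(smoother.forecast(), 1))
```

Each update costs constant time and memory, which is why this family of methods suits streaming data; it does not model trend or seasonality on its own.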

Professionals who complete an AI course in Chennai gain insights into the latest machine learning techniques used in demand forecasting.

Why Pursue an AI Course in Chennai?

For those looking to enter the field of data science and enhance their skills in predictive analytics, enrolling in an AI course in Chennai is an excellent option. Here’s why:

1. Comprehensive Curriculum

AI courses typically cover essential topics such as machine learning, data analysis, and predictive modeling. This comprehensive curriculum equips students with the skills needed to tackle real-world data challenges.

2. Hands-On Experience

Many courses emphasize practical, hands-on learning, allowing students to work on real-world projects that involve demand forecasting. This experience is invaluable for building confidence and competence.

3. Industry-Relevant Tools

Students often learn to use industry-standard tools and software, such as Python, R, and SQL, which are essential for conducting data analysis and building predictive models.

4. Networking Opportunities

Enrolling in an AI course in Chennai allows students to connect with peers and industry professionals, fostering relationships that can lead to job opportunities and collaborations.

Challenges in Predicting Consumer Demand

While data science offers powerful tools for demand forecasting, organizations may face challenges, including:

1. Data Quality

The accuracy of demand predictions heavily relies on the quality of data. Poor data quality can lead to misleading insights and misguided decisions.

2. Complexity of Models

Developing and interpreting predictive models can be complex. Organizations must invest in training and resources to ensure their teams can effectively utilize these models.

3. Rapidly Changing Markets

Consumer preferences can shift rapidly due to various factors, such as trends, economic changes, and competitive pressures. Businesses must remain agile to adapt their forecasts accordingly.

The curriculum of an AI course in Chennai often includes hands-on projects that focus on real-world applications of predictive analytics.

Conclusion

Data science is revolutionizing how businesses predict consumer demand in an ever-changing market. By leveraging advanced analytics and machine learning techniques, organizations can make informed decisions that drive growth and enhance customer satisfaction.

For those looking to gain expertise in this field, pursuing an AI course in Chennai is a vital step. With a solid foundation in data science and AI, aspiring professionals can harness these technologies to drive innovation and success in their organizations.

#predictive analytics#predictivemodeling#predictiveanalytics#predictive programming#consumer demand#consumer behavior#demand analysis#machinelearning#machine learning#technology#data science#ai#artificial intelligence#Data science course#AI course#AI course in Chennai#Data science course in Chennai#Real-Time Analytics#Data Collection#Data Cleaning


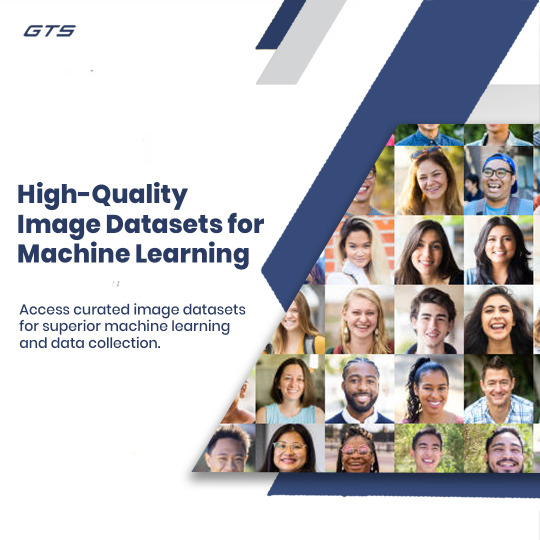

The Importance of High-Quality Image Datasets for Machine Learning: How to Optimize Data Collection for AI Success

Data is the driving force behind successful models in the ever-evolving world of artificial intelligence (AI) and machine learning (ML). Image datasets for machine learning are essential for a wide range of AI applications, from facial recognition and autonomous vehicles to healthcare diagnostics and retail automation. The quality of the dataset directly impacts the accuracy, efficiency, and overall success of any machine-learning model.

At GTS AI, we specialize in image dataset collection services designed to provide businesses with high-quality, diverse, and scalable datasets. Whether you're training a model to recognize objects, analyze medical images, or perform any other computer vision task, the right dataset is critical to achieving optimal results.

What Are Image Datasets for Machine Learning?

An image dataset is a collection of labeled images used to train, validate, and test machine learning models. These datasets are the backbone of computer vision, enabling AI systems to "see" and understand the visual world. Machine learning models are trained on these datasets to recognize patterns, classify objects, detect anomalies, and even predict future outcomes based on image data.

Each image in a dataset is typically labeled with specific information that the model uses to learn. This could be a simple object label (e.g., "dog," "cat," or "car") or more complex annotations like bounding boxes, segmentation masks, or key points. The richer and more varied the dataset, the more capable the AI model will be in understanding and processing visual information in real-world applications.
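To make these annotation types concrete, here is a minimal sketch of one labeled image stored in a COCO-style JSON layout, plus a small sanity check that every bounding box lies inside its image. The file name, ids, and the `validate_boxes` helper are hypothetical. Only the field names and the `[x, y, width, height]` box convention follow the COCO format.

```python
import json

# One labeled image in a COCO-style layout (ids and file name are hypothetical).
# COCO boxes are [x_min, y_min, width, height] in pixels.
dataset = {
    "images": [{"id": 1, "file_name": "street_001.jpg", "width": 640, "height": 480}],
    "categories": [{"id": 3, "name": "car"}],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 3,
         "bbox": [100.0, 150.0, 200.0, 120.0], "area": 24000.0, "iscrowd": 0},
    ],
}


def validate_boxes(ds):
    """Return ids of annotations whose box is empty or extends past its image."""
    images = {img["id"]: img for img in ds["images"]}
    bad = []
    for ann in ds["annotations"]:
        img = images[ann["image_id"]]
        x, y, w, h = ann["bbox"]
        if w <= 0 or h <= 0 or x < 0 or y < 0 or x + w > img["width"] or y + h > img["height"]:
            bad.append(ann["id"])
    return bad


print(json.dumps(dataset["annotations"][0]["bbox"]))  # [100.0, 150.0, 200.0, 120.0]
print(validate_boxes(dataset))  # []
```

A check like this is cheap to run after every labeling batch. Boxes that spill outside the image usually point to a tooling or coordinate-convention mistake rather than a real object.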

Why High-Quality Image Datasets Matter

The quality of your image datasets is crucial to the performance of your machine-learning models. Here’s why investing in high-quality, well-annotated datasets is essential:

Improved Model Accuracy: The accuracy of machine learning models depends on the quality of the data they are trained on. A high-quality dataset that includes diverse images, accurate annotations, and a wide range of scenarios helps models generalize better and make more precise predictions. This leads to fewer errors and more reliable performance across various tasks.

Comprehensive Data Representation: To build a model that performs well in real-world situations, the dataset must represent as many different scenarios as possible. This includes various lighting conditions, angles, object sizes, backgrounds, and even occlusions. A well-curated dataset ensures that the AI model has enough exposure to diverse situations, making it robust and adaptable.

Bias Reduction: Bias in machine learning datasets can lead to skewed or unfair results, especially in applications like facial recognition or medical diagnosis. A diverse and balanced dataset helps reduce biases, ensuring that the AI model performs equally well across different demographic groups, environments, and contexts.

Efficient Model Training: Well-structured image datasets help AI models learn faster and more efficiently. The clearer the labels and the better the data quality, the quicker the model can learn and adjust its internal parameters. This reduces training time and computational costs, allowing businesses to deploy models faster and with fewer resources.

Scalability: As your AI applications grow, so do the demands for larger and more complex datasets. A scalable image dataset allows your model to evolve and improve continuously, ensuring it can handle more challenging tasks or process higher volumes of data as your business expands.

Key Factors in Image Dataset Collection

Building or acquiring a high-quality image dataset for machine learning is no small task. It requires a strategic approach to data collection, annotation, and preparation. Here are some key factors to consider when collecting image datasets for machine learning:

Diversity of Images: To ensure that the AI model generalizes well, the dataset should include a wide variety of images. This means capturing different object sizes, angles, colors, and lighting conditions. For example, a dataset for vehicle detection should include images of cars in different environments, under various lighting conditions, and from multiple angles.

Accurate and Consistent Annotation: Image datasets must be meticulously labeled or annotated to provide the AI model with clear and reliable information. Common types of annotations include object labels, bounding boxes, segmentation masks, and key points. Consistency in annotation is crucial for ensuring the model learns from the data accurately.

Data Augmentation: Data augmentation techniques, such as rotating, flipping, cropping, or altering the brightness of images, can help artificially increase the size of the dataset. This enhances the dataset's diversity and provides the model with more varied training data, improving its ability to generalize across new and unseen images.

Ethical Data Collection: Ethical considerations should always be at the forefront of dataset collection, especially when it involves people. Ensuring consent for the use of images, particularly in areas like facial recognition, and adhering to data privacy laws are crucial for maintaining the ethical integrity of AI projects.

Data Security and Privacy: When collecting and handling large datasets, especially those that include sensitive information, it's vital to implement strict security measures. This ensures that personal or proprietary data remains secure throughout the dataset lifecycle, from collection to training and deployment.
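The augmentation techniques listed above can be sketched without any imaging library by treating a grayscale image as a 2-D list of pixel values. This is a minimal illustration under that assumption. In practice, libraries such as torchvision or Albumentations provide tested versions of these transforms for real image data. The helper names (`hflip`, `rotate90`, `random_crop`, `adjust_brightness`, `augment`) are hypothetical.

```python
import random

# A grayscale image modeled as rows of pixel values in [0, 255].
Image = list[list[int]]


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate90(img: Image) -> Image:
    """Rotate 90 degrees clockwise: transpose, then reverse each row."""
    return [list(col)[::-1] for col in zip(*img)]


def random_crop(img: Image, h: int, w: int, rng: random.Random) -> Image:
    """Cut out an h x w window at a random position."""
    if h > len(img) or w > len(img[0]):
        raise ValueError("crop is larger than the image")
    top = rng.randint(0, len(img) - h)
    left = rng.randint(0, len(img[0]) - w)
    return [row[left:left + w] for row in img[top:top + h]]


def adjust_brightness(img: Image, delta: int) -> Image:
    """Shift every pixel by delta, clamping to the valid [0, 255] range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in img]


def augment(img: Image, size: int, rng: random.Random) -> Image:
    """Random square crop, optional flip and rotation, then brightness jitter."""
    out = random_crop(img, size, size, rng)
    if rng.random() < 0.5:
        out = hflip(out)
    if rng.random() < 0.5:
        out = rotate90(out)
    return adjust_brightness(out, rng.randint(-30, 30))


img = [[1, 2], [3, 4]]
print(hflip(img))     # [[2, 1], [4, 3]]
print(rotate90(img))  # [[3, 1], [4, 2]]
print(adjust_brightness([[250, 5]], 10))  # [[255, 15]]
```

Augmentation is normally applied on the fly to the training set only, so that validation and test images stay unaltered and evaluation reflects real inputs.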

Applications of Image Datasets in Machine Learning

Image datasets are used in a wide array of machine-learning applications across various industries. Here are some key areas where high-quality image datasets are making a difference:

Healthcare: In healthcare, image datasets are used to train AI models that analyze medical images like X-rays, MRIs, and CT scans. These models assist doctors in detecting diseases, identifying abnormalities, and improving diagnostic accuracy.

Autonomous Vehicles: Self-driving cars rely on massive image datasets to detect and classify objects on the road, such as pedestrians, other vehicles, and traffic signs. These datasets are essential for ensuring the safety and reliability of autonomous systems.

Retail and E-commerce: Image datasets are widely used in e-commerce to improve product search, recommendation engines, and visual inventory management. AI models trained on product images can automatically categorize items, detect defects, or recommend similar products to shoppers.

Agriculture: In agriculture, AI models trained on image datasets help farmers monitor crop health, detect pests, and assess yields. Image datasets collected from drones or sensors enable precision agriculture, optimizing resource usage and improving crop production.

Security and Surveillance: Image datasets are critical for training AI models used in security and surveillance systems. These systems can automatically detect unusual activities, recognize faces, and monitor large areas in real-time, improving safety and response times.

Why Choose GTS AI for Image Dataset Collection?

At GTS AI, we provide customized image dataset collection services designed to meet the specific needs of your AI projects. Here’s why partnering with us ensures the success of your machine-learning models:

Diverse and High-Quality Data: We specialize in creating diverse image datasets that encompass a wide range of real-world scenarios. Our datasets include high-resolution images and detailed annotations to ensure your AI models can learn effectively.

Customizable Solutions: Whether you're building a model for healthcare, automotive, retail, or any other industry, we offer tailored dataset solutions that align with your project requirements.

Expert Annotations: Our team of experienced annotators provides accurate and consistent labeling, ensuring your dataset is ready for high-performance model training.

Scalable Data Collection: We offer scalable data collection services to meet the growing demands of your AI applications. As your needs evolve, we provide larger and more complex datasets to ensure your model continues to improve.

Data Privacy and Security: We prioritize data security, ensuring that all datasets are handled responsibly and in compliance with relevant privacy regulations.

Conclusion

High-quality image datasets for machine learning are the cornerstone of effective AI models. Whether you're developing AI for healthcare, autonomous systems, or retail, the right dataset can make all the difference. At GTS AI, we provide comprehensive image dataset collection services that empower businesses to build accurate, reliable, and scalable AI solutions.

Visit our Image Dataset Collection Services page to learn how we can help you harness the power of AI with top-tier datasets!

Text-to-speech datasets form the cornerstone of AI-powered speech synthesis applications, facilitating natural and smooth communication between humans and machines. At Globose Technology Solutions, we recognize the transformative power of TTS technology and are committed to delivering cutting-edge solutions that harness the full potential of these datasets. By understanding the importance, features, and applications of TTS datasets, we pave the way to a future where seamless speech synthesis enriches lives and drives innovation across industries.

#Text-to-speech datasets#NLP#Data Collection#Data Collection in Machine Learning#data collection company#datasets#ai#technology#globose technology solutions

Navigating the Landscape of Data Collection in Machine Learning: Balancing Innovation and Ethical Considerations

Introduction:

As machine learning continues to propel advancements across various domains, the process of data collection plays a pivotal role in shaping the efficacy and ethical considerations of these intelligent systems. This article delves into the intricate landscape of data collection in machine learning, exploring its significance, challenges, and the ethical frameworks required to ensure responsible and unbiased AI development.

The Foundation of Machine Learning:

At the core of machine learning lies the need for vast and diverse datasets. These datasets serve as the foundation upon which machine learning models are trained, enabling them to recognize patterns, make predictions, and ultimately enhance their performance. The process of data collection becomes the initial step in this journey, demanding careful attention to ensure the quality, representativeness, and ethical sourcing of the data.

Ensuring Quality and Diversity:

Effective data collection hinges on the quality and diversity of the datasets. Gathering comprehensive and representative data ensures that machine learning models are equipped to handle a wide array of scenarios and demographics. This not only enhances the accuracy of predictions but also guards against biases that may arise from inadequate or skewed data representation.

Ethical Considerations in Data Collection:

The ethical dimension of data collection in machine learning is of paramount importance. Ensuring privacy, consent, and transparency in the acquisition of data is crucial to building trust between developers, AI systems, and the individuals contributing to these datasets. Striking a balance between innovation and ethical considerations requires a thoughtful approach, acknowledging the potential risks and implications associated with data collection processes.

Challenges and Biases:

Navigating the landscape of data collection comes with inherent challenges, including the presence of biases in datasets. Biases may arise from historical inequalities, underrepresented groups, or algorithmic biases in the data collection process itself. Understanding and addressing these biases is essential to avoid perpetuating unfair and discriminatory outcomes in machine learning applications.
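A simple first step toward spotting underrepresented groups is to measure each group's share of the collected data. The sketch below assumes every sample already carries a group label (the `region_*` names are hypothetical) and flags groups that fall below an arbitrary 10% threshold. It only catches missing or thin coverage; it cannot detect biased labels or historical bias embedded in the data itself.

```python
from collections import Counter


def representation_report(group_labels, min_share=0.10):
    """Return each group's share of the dataset and the groups below min_share."""
    counts = Counter(group_labels)
    total = sum(counts.values())
    shares = {group: count / total for group, count in counts.items()}
    underrepresented = sorted(g for g, share in shares.items() if share < min_share)
    return shares, underrepresented


# Hypothetical region labels attached to 100 collected samples.
labels = ["region_a"] * 70 + ["region_b"] * 25 + ["region_c"] * 5
shares, flagged = representation_report(labels)
print(flagged)  # ['region_c']
```

The right threshold depends on the application and on the population the model will serve; a fixed 10% is only a placeholder.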

Technological Solutions and Responsible Practices:

Embracing technological solutions such as federated learning, differential privacy, and secure multi-party computation can contribute to responsible and privacy-preserving data collection practices. Implementing these techniques not only protects sensitive information but also empowers individuals to have more control over their data, fostering a more ethical and transparent data ecosystem.
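Of the techniques named above, differential privacy is the easiest to show in a few lines. The classic Laplace mechanism releases a count with random noise of scale 1/epsilon, which is enough because adding or removing one person changes a count by at most 1. This is a teaching sketch only: the survey records and the `private_count` helper are hypothetical, and production systems should rely on a vetted differential-privacy library, since naive floating-point noise generation has known weaknesses.

```python
import random


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count satisfying epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1: adding or removing one person's record
    changes the true count by at most 1, so Laplace noise of scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    # The difference of two independent Exponential(rate=epsilon) draws
    # is Laplace-distributed with mean 0 and scale 1/epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


# Hypothetical survey records: how many participants opted in to image sharing?
records = [{"opted_in": i % 3 == 0} for i in range(300)]
noisy = private_count(records, lambda r: r["opted_in"], epsilon=0.5, rng=random.Random(7))
print(round(noisy))  # a value near the true count of 100
```

Smaller epsilon values add more noise and give stronger privacy; repeated queries on the same data consume additional privacy budget.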

The Future of Ethical Data Collection:

Looking forward, the future of machine learning depends on the continued evolution of ethical data collection practices. Stricter regulations, industry standards, and community-driven initiatives will shape the landscape, emphasizing the importance of fairness, accountability, and transparency in data collection processes. As machine learning technologies advance, ethical considerations in data collection will be instrumental in building a trustworthy and inclusive AI future.

Conclusion:

In the ever-expanding realm of machine learning, data collection emerges as a linchpin for innovation and ethical development. Balancing the quest for knowledge with a commitment to privacy, transparency, and fairness is imperative. By navigating the complex landscape of data collection with ethical frameworks and technological solutions, the machine learning community can foster a future where intelligent systems not only excel in performance but also reflect the values and diversity of the societies they serve.

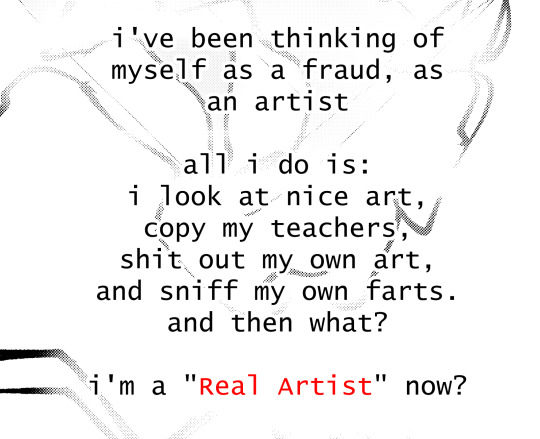

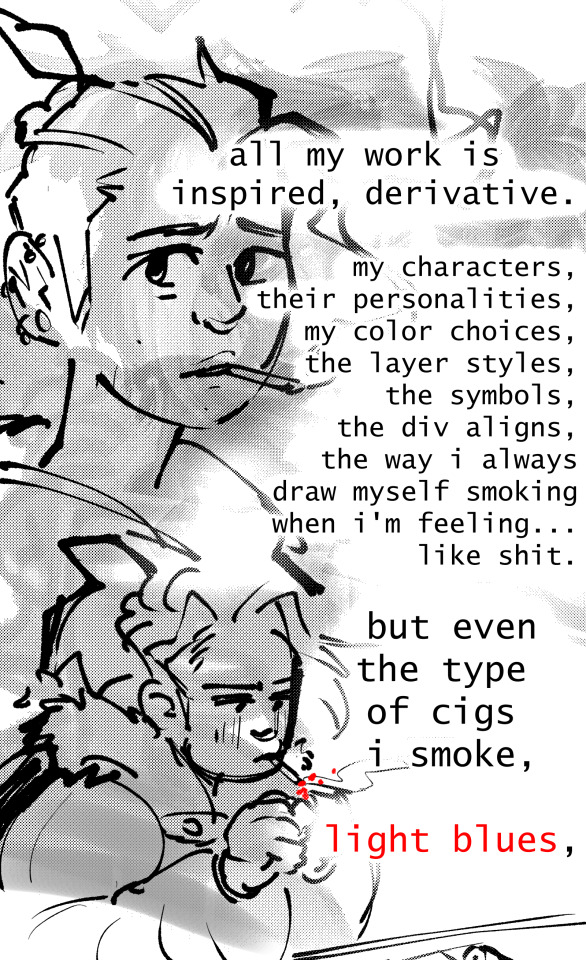

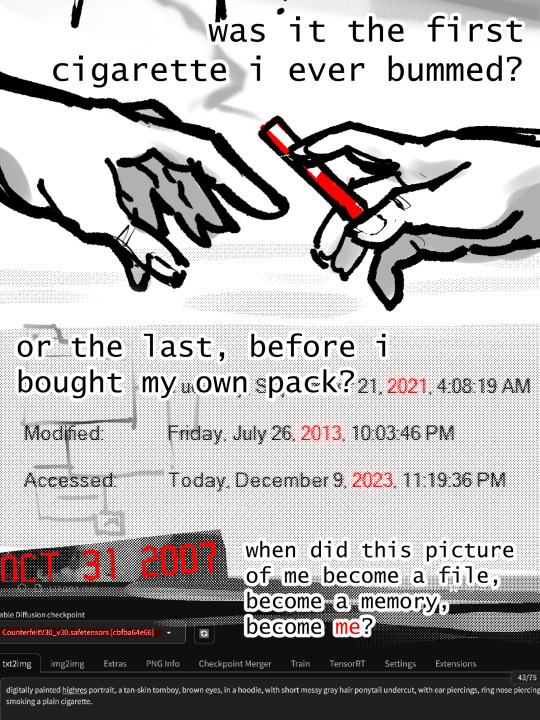

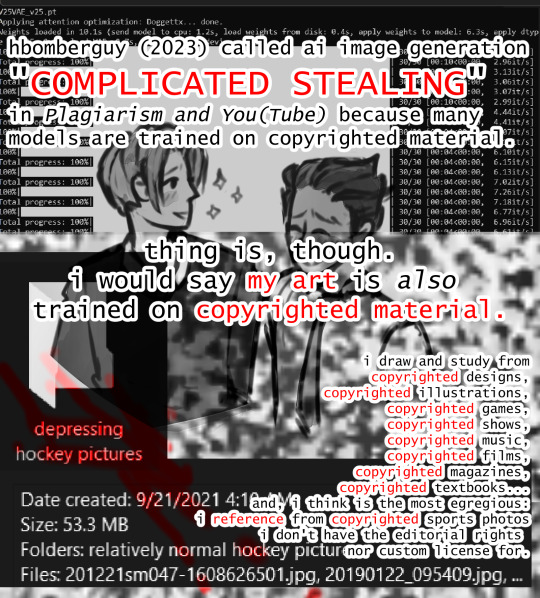

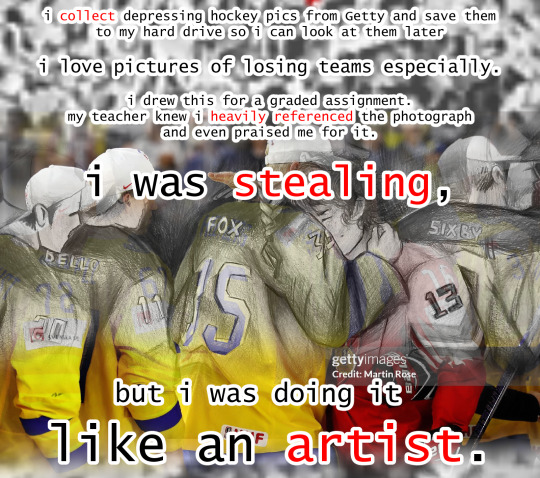

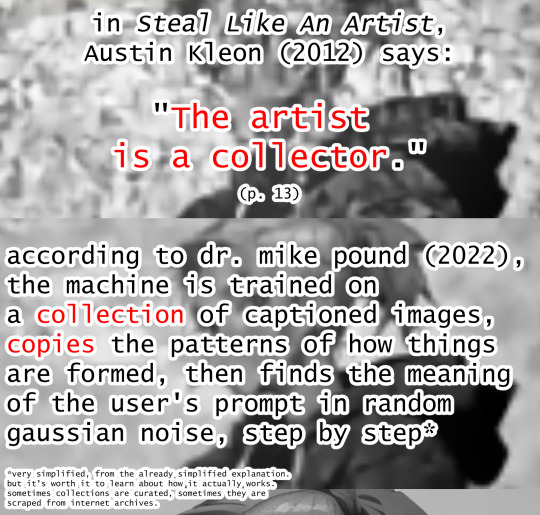

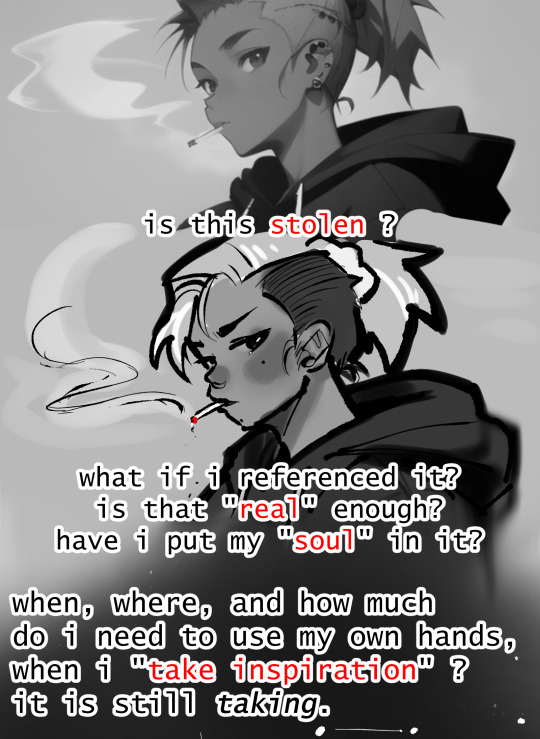

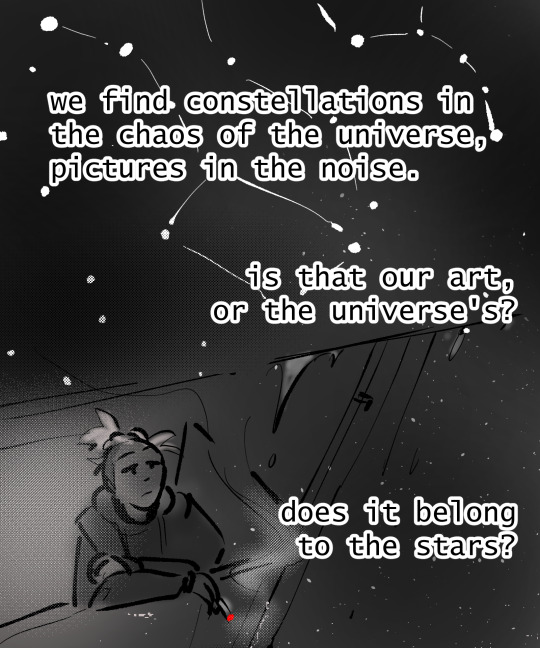

I think a lot of important nuance on the topic is missing here, but I want to share this because this comic/post does make some good points and provides a nuance that is missing from the larger discussion on AI. I'll make a post about this here.

"Original" Sin is what I've titled this piece. By me. Sorry if you don't have "collapse long posts" enabled. I have many thoughts.

Transcript - References

#ai#jacob geller is the goat#machine learning#plaigarism#copyright#Art#artists#artificial intelligence#artwork#digital art#ownership#ethical principles#data collection services#data harvesting#data#inspiration#copyright is BS

The Role Of Machine Learning In Predictive Maintenance

A machinery or equipment failure can lead to increased costs, production delays, and downtime, which in turn reduce productivity and efficiency.

Therefore, before such failures occur, it is important to foresee equipment issues and perform maintenance exactly when needed. This helps maintain productivity and leads to cost savings. By adopting predictive maintenance based on machine learning, manufacturers can reduce downtime and repair time.

Predictive maintenance with machine learning can yield substantial benefits such as minimizing the time for maintenance schedules, cutting down maintenance costs, and increasing the runtime.
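Production predictive-maintenance systems typically train models on historical sensor and failure data, but the core idea of flagging readings that drift away from normal behavior can be sketched with a rolling z-score. This is a simple statistical baseline standing in for a learned model; the vibration readings, window size, and threshold are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat history, where the z-score is undefined.
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical hourly vibration readings (mm/s) from one motor; index 7 is a spike.
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 3.5, 1.0]
print(flag_anomalies(vibration))  # [7]
```

A trained model would replace the fixed z-score rule with predictions learned from past failures, but the surrounding flow (score each new reading, alert when it looks abnormal, schedule maintenance) stays the same.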

In this blog post, we’ll be exploring everything a manufacturer should know about predictive maintenance with the help of machine learning models, its applications, and the future of predictive maintenance.

#machine learning#Predictive Maintenance#Anomalies#Asset performance#Automotive#cost savings#Data analytics#Data collection#Downtime reduction#Equipment failures#Equipment reliability#Healthcare#Historical data#IoT devices#Maintenance schedules

The Role of AI in Video Transcription Services

Introduction

In today's fast-changing digital world, the need for fast and accurate video transcription services has grown rapidly. Businesses, schools, and creators are using more video content, so getting transcriptions quickly and accurately is really important. That's where Artificial Intelligence (AI) comes in – it's changing the video transcription industry in a big way. This blog will explain how AI is crucial for video transcription services, showing how it’s transforming the industry and the many benefits it brings to users in different fields.

AI in Video Transcription: A Paradigm Shift

The use of AI in video transcription services is a big step forward for making content easier to access and manage. By using machine learning and natural language processing, AI transcription tools are becoming much faster, more accurate, and efficient. Let’s look at why AI is so important for video transcription today:

Unparalleled Speed and Efficiency

AI-powered Automation: AI transcription services can process hours of video content in a fraction of the time required by human transcribers, dramatically reducing turnaround times.

Real-time Transcription: Many AI systems offer real-time transcription capabilities, allowing for immediate access to text versions of spoken content during live events or streaming sessions.

Scalability: AI solutions can handle large volumes of video content simultaneously, making them ideal for businesses and organizations dealing with extensive media libraries.

Enhanced Accuracy and Precision

Advanced Speech Recognition: AI algorithms are trained on vast datasets, enabling them to recognize and transcribe diverse accents, dialects, and speaking styles, although accuracy still depends on how well those accents and audio conditions are represented in the training data.

Continuous Learning: The machine learning models behind AI transcription services can improve as they are retrained on more data, so accuracy tends to rise over time.

Context Understanding: Sophisticated AI systems can grasp context and nuances in speech, leading to more accurate transcriptions of complex or technical content.
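Transcription accuracy is commonly reported as word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the system's output into a reference transcript, divided by the number of words in the reference. A minimal sketch using the standard edit-distance recurrence (the example sentences are made up):

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    # d[i][j] = edit distance between the first i reference words and first j hypothesis words.
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(ref)][len(hyp)] / max(len(ref), 1)


# One substitution (sat -> sit) and one deletion ("the") over 6 reference words.
print(round(word_error_rate("the cat sat on the mat", "the cat sit on mat"), 3))  # 0.333
```

Lower is better, and WER can exceed 1.0 when the output contains many inserted words. Text is usually normalized (case, punctuation) before scoring so that formatting differences are not counted as errors.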

Multilingual Capabilities

Language Diversity: AI-driven transcription services can handle multiple languages, often offering translation capabilities alongside transcription.

Accent and Dialect Recognition: Advanced AI models can accurately transcribe various accents and regional dialects within the same language, ensuring comprehensive coverage.

Code-switching Detection: Some AI systems can detect and accurately transcribe instances of code-switching (switching between languages within a conversation), a feature particularly useful in multilingual environments.

Cost-effectiveness

Reduced Labor Costs: By automating the transcription process, AI significantly reduces the need for human transcribers, leading to substantial cost savings for businesses.

Scalable Pricing Models: Many AI transcription services offer flexible pricing based on usage, allowing businesses to scale their transcription needs without incurring prohibitive costs.

Reduced Time-to-Market: The speed of AI transcription can accelerate content production cycles, potentially leading to faster revenue generation for content creators.

Enhanced Searchability and Content Management

Keyword Extraction: AI transcription services often include features for automatic keyword extraction, making it easier to categorize and search through large video libraries.

Timestamping: AI can generate accurate timestamps for transcribed content, allowing users to quickly navigate to specific points in a video based on the transcription.

Metadata Generation: Some advanced AI systems can automatically generate metadata tags based on the transcribed content, further enhancing searchability and content organization.
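Timestamped transcripts are what make caption files and jump-to-moment navigation possible. Assuming the recognizer returns segments as `(start_seconds, end_seconds, text)` tuples (a hypothetical shape; real services differ), they can be serialized to the widely supported SubRip (`.srt`) caption format like this:

```python
def format_srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments) -> str:
    """Turn (start_seconds, end_seconds, text) tuples into numbered SRT cues."""
    cues = []
    for index, (start, end, text) in enumerate(segments, start=1):
        cues.append(f"{index}\n{format_srt_time(start)} --> {format_srt_time(end)}\n{text}\n")
    # A blank line separates consecutive cues.
    return "\n".join(cues)


segments = [(0.0, 2.5, "Welcome to the lecture."), (2.5, 61.25, "Today we cover image datasets.")]
print(format_srt_time(61.25))  # 00:01:01,250
print(to_srt(segments))
```

The same segment list can also feed a search index, so a keyword match can link straight to its start time in the video.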

Accessibility and Compliance

ADA Compliance: AI-generated transcripts and captions help content creators comply with accessibility guidelines, making their content available to a wider audience, including those with hearing impairments.

SEO Benefits: Transcripts generated by AI can significantly boost the SEO performance of video content, making it more discoverable on search engines.

Educational Applications: In educational settings, AI transcription can provide students with text versions of lectures and video materials, enhancing learning experiences for diverse learner types.

Integration with Existing Workflows

API Compatibility: Many AI transcription services offer robust APIs, allowing for seamless integration with existing content management systems and workflows.

Cloud-based Solutions: AI transcription services often leverage cloud computing, enabling easy access and collaboration across teams and locations.

Customization Options: Advanced AI systems may offer industry-specific customization options, such as specialized vocabularies for medical, legal, or technical fields.

Quality Assurance and Human-in-the-Loop Processes

Error Detection: Some AI transcription services incorporate error detection algorithms that can flag potential inaccuracies for human review.

Hybrid Approaches: Many services combine AI transcription with human proofreading to achieve the highest levels of accuracy, especially for critical or sensitive content.

User Feedback Integration: Advanced systems may allow users to provide feedback on transcriptions, which is then used to further train and improve the AI models.
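Error detection and human-in-the-loop review are often driven by per-word confidence scores. The sketch below assumes the recognizer reports a confidence between 0 and 1 for each word. The `Word` type, the 0.8 threshold, and the sample words are hypothetical. It groups consecutive low-confidence words into timestamped spans that a proofreader can jump to.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float       # seconds from the start of the video
    confidence: float  # 0.0 to 1.0, as reported by the recognizer (assumed)


def review_spans(words, threshold=0.8):
    """Group consecutive words below the threshold into (start_time, text) spans."""
    spans, current = [], []
    for word in words:
        if word.confidence < threshold:
            current.append(word)
        elif current:
            spans.append((current[0].start, " ".join(w.text for w in current)))
            current = []
    if current:  # flush a low-confidence run at the very end
        spans.append((current[0].start, " ".join(w.text for w in current)))
    return spans


words = [
    Word("the", 0.0, 0.98), Word("patient", 0.3, 0.95), Word("has", 0.8, 0.97),
    Word("dysphagia", 1.0, 0.52), Word("and", 1.6, 0.66), Word("reflux", 1.9, 0.91),
]
print(review_spans(words))  # [(1.0, 'dysphagia and')]
```

Corrections a reviewer makes on these spans are also the kind of user feedback that can later be used to retrain or adapt the model.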

Future of AI in Video Transcription: Navigating Opportunities

Looking ahead, the role of AI in video transcription services is poised for further expansion and refinement. As natural language processing technologies continue to advance, we can anticipate:

Enhanced Emotion and Sentiment Analysis: Future AI systems may be able to detect and annotate emotional tones and sentiments in speech, adding another layer of context to transcriptions.

Improved Handling of Background Noise: Advancements in audio processing may lead to AI systems that can more effectively filter out background noise and focus on primary speakers.

Real-time Language Translation: The integration of real-time translation capabilities with transcription services could break down language barriers in live international events and conferences.

Personalized AI Models: Organizations may have the opportunity to train AI models on their specific content, creating highly specialized and accurate transcription systems tailored to their needs.

Conclusion: Embracing the AI Advantage in Video Transcription

Integrating AI into video transcription services represents a major step forward in improving content accessibility, management, and utilization. AI-driven solutions provide unparalleled speed, accuracy, and cost-effectiveness, making them indispensable in today's video-centric environment. Businesses, educational institutions, and content creators can leverage AI for video transcription to boost efficiency, expand their audience reach, and derive greater value from their video content. As AI technology advances, the future of video transcription promises even more innovative capabilities, reinforcing its role as a critical component of contemporary digital content strategies.

Image Datasets for Machine Learning: An In-Depth Overview

Introduction:

In machine learning, and especially in computer vision, high-quality image datasets are of paramount importance. These datasets form the cornerstone for training algorithms, validating models, and ensuring their performance in practical applications. Whether you are a novice or a seasoned machine learning professional, understanding the importance and intricacies of image datasets is essential. This article covers the fundamentals of image datasets, why they matter, and the options for sourcing or creating them for machine learning projects.

Defining Image Datasets

An image dataset refers to an organized compilation of images, frequently accompanied by metadata or labels, utilized for training and evaluating machine learning models. Such datasets enable algorithms to discern patterns, identify objects, and generate predictions. For instance, a dataset designed for facial recognition might comprise thousands of labeled images of human faces, each annotated with specific attributes such as age, gender, or emotional expression.

The Significance of Image Datasets in Machine Learning

Model Development: Image datasets serve as the foundational data necessary for training machine learning models, allowing them to identify patterns and generate predictions.

Enhanced Performance: The effectiveness of a model is significantly influenced by the quality and variety of the dataset. A well-rounded dataset enables the model to perform effectively across a range of situations.

Algorithm Assessment: Evaluating algorithms using labeled datasets is crucial for determining their accuracy, precision, and overall reliability.

Specialization for Targeted Applications: Image datasets designed for specific sectors or applications facilitate more precise and efficient model development.

Categories of Image Datasets

Labeled Datasets: These datasets include annotations or labels that detail the content of each image (such as object names, categories, or bounding boxes), which are vital for supervised learning.

Unlabeled Datasets: These consist of images that lack annotations and are utilized in unsupervised or self-supervised learning scenarios.

Synthetic Datasets: These datasets are generated artificially, using techniques like Generative Adversarial Networks (GANs), to supplement scarce real-world data.

Domain-Specific Datasets: These datasets concentrate on particular industries or fields, including medical imaging, autonomous driving, or agriculture.

Attributes of a Quality Image Dataset

An effective image dataset should exhibit the following attributes:

Variety: The images should encompass a range of lighting conditions, angles, backgrounds, and subjects to enhance robustness.

Volume: Larger datasets typically yield improved model performance, assuming the data is both relevant and varied.

Annotation Precision: If the dataset is labeled, the annotations must be precise and uniform throughout.

Relevance: The dataset should be closely aligned with the goals of your machine learning initiative.

Equitable Distribution: The dataset should evenly represent various categories or classes to mitigate bias.

Notable Image Datasets for Machine Learning

The following are some commonly utilized image datasets:

ImageNet: A comprehensive dataset featuring over 14 million labeled images, extensively employed in object detection and classification endeavors.

COCO (Common Objects in Context): Comprises over 300,000 images annotated for object detection, segmentation, and captioning tasks.

MNIST: A beginner-friendly dataset of 70,000 grayscale images of handwritten digits, frequently used for digit recognition.

CIFAR-10 and CIFAR-100: These datasets include 60,000 color images categorized into 10 and 100 classes, respectively, for object classification purposes.

Open Images Dataset: A large-scale collection of 9 million images annotated with image-level labels and bounding boxes.

Kaggle Datasets: An extensive repository of datasets for various machine learning applications, contributed by the global data science community.

Medical Datasets: Specialized collections such as LUNA (lung nodule detection in CT scans, relevant to lung cancer screening) and ChestX-ray14 (chest X-rays labeled for 14 thoracic diseases, including pneumonia).

Creating a Custom Image Dataset

When existing datasets do not fulfill your specific requirements, you have the option to develop a personalized dataset. The following steps outline the process:

Data Collection: Acquire images from diverse sources, including cameras, online platforms, or public databases. It is essential to secure the necessary permissions for image usage.

Data Cleaning: Eliminate duplicates, low-resolution images, and irrelevant content to enhance the overall quality of the dataset.

Annotation: Assign appropriate labels and metadata to the images. Utilizing tools such as LabelImg, RectLabel, or VGG Image Annotator (VIA) can facilitate this task.

Organizing the Dataset: Arrange the dataset into structured folders or adopt standardized formats like COCO or Pascal VOC.

Data Augmentation: Improve the dataset's diversity by applying transformations such as rotation, scaling, flipping, or color modifications.
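For the data cleaning step, exact duplicates can be found by hashing file contents. This minimal sketch assumes the images are stored as `.jpg`, `.jpeg`, or `.png` files under one folder, and the function name is hypothetical. It only catches byte-identical copies; resized or re-encoded near-duplicates would need a perceptual hash instead.

```python
import hashlib
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def find_exact_duplicates(folder):
    """Return (duplicate_path, first_seen_path) pairs for byte-identical images."""
    first_seen = {}
    duplicates = []
    # Sorting makes the "first seen" file deterministic across runs.
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            if digest in first_seen:
                duplicates.append((path, first_seen[digest]))
            else:
                first_seen[digest] = path
    return duplicates
```

Calling it on a collection folder returns pairs you can review before deleting anything. Keeping the first-seen copy and logging the removed paths makes the cleaning step easy to audit later.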

Guidelines for Managing Image Datasets

Assess Your Project Requirements: Choose or create a dataset that aligns with the goals of your machine learning initiative.

Image Preprocessing: Resize, normalize, and standardize images to maintain consistency and ensure compatibility with your model.

Address Imbalanced Data: Use strategies such as oversampling, undersampling, or synthetic data generation to achieve a balanced dataset.

Dataset Partitioning: Split the dataset into training, validation, and testing subsets; a common choice is a 70:20:10 split.

Prevent Overfitting: Mitigate the risk of overfitting by ensuring the dataset encompasses a broad range of scenarios and images.
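The partitioning and imbalance guidelines can be combined in a short sketch: shuffle with a fixed seed, split 70:20:10, then randomly oversample minority classes in the training split only, so that duplicated images never leak into validation or test data. The file names, labels, and helper functions are hypothetical. For heavily imbalanced data, a stratified split that preserves class proportions in every subset is usually a better starting point.

```python
import random
from collections import Counter


def split_dataset(items, ratios=(0.7, 0.2, 0.1), seed=42):
    """Shuffle reproducibly, then cut into train/validation/test lists."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


def oversample(samples, label_of, seed=42):
    """Duplicate random minority-class samples until every class matches the largest."""
    rng = random.Random(seed)
    by_class = {}
    for sample in samples:
        by_class.setdefault(label_of(sample), []).append(sample)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    rng.shuffle(balanced)
    return balanced


# Hypothetical imbalanced dataset: 80 "cat" images and 20 "dog" images.
items = [(f"img_{i:03d}.jpg", "cat" if i < 80 else "dog") for i in range(100)]
train, val, test = split_dataset(items)
print(len(train), len(val), len(test))  # 70 20 10
balanced_train = oversample(train, label_of=lambda item: item[1])
print(Counter(label for _, label in balanced_train))  # both classes now have the same count
```

Fixing the seed makes the split reproducible, which matters when comparing models trained on the same data.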

Challenges Associated with Image Datasets

Data Privacy: Adhere to data privacy regulations when handling sensitive images.

Complexity of Annotation: Labeling extensive datasets can be labor-intensive and susceptible to inaccuracies.

Bias in Datasets: Imbalanced or unrepresentative datasets may result in biased models.

Storage and Computational Demands: Large datasets necessitate considerable storage capacity and processing power.

Conclusion

Image datasets are the foundation of machine learning applications in fields such as computer vision, medical diagnostics, and autonomous systems. Whether obtained from publicly accessible sources or developed independently, a well-organized, high-quality dataset directly shapes how well a model performs and generalizes. Understanding the various categories of image datasets and how to manage them lays solid groundwork for successful machine learning applications, and the effort spent carefully curating or choosing the appropriate dataset significantly improves a project's prospects of success. Large-scale datasets like ImageNet have propelled advances in deep learning, but small, domain-specific datasets curated by experts, such as those provided by Globose Technology Solutions, are equally vital.
