#Extract Streaming App Data


OTT Media Platform Data Scraping | Extract Streaming App Data

Unlock insights with our OTT Media Platform Data Scraping. Extract streaming app data in the USA, UK, UAE, China, India, or Spain. Optimize your strategy today.

Know more: https://www.mobileappscraping.com/ott-media-app-scraping-services.php

#OTT Media Platform Data Scraping#Extract Streaming App Data#extracting relevant data from OTT media platforms#extracting data from websites


Top 5 Selling Odoo Modules.

In the dynamic world of business, having the right tools can make all the difference. For Odoo users, certain modules stand out for their ability to enhance data management and operations, helping you optimize your Odoo implementation and use its full potential.

That's where Odoo ERP can be a lifesaver for your business. This comprehensive solution integrates various functions into one centralized platform, tailor-made for the digital economy.

Let’s dive into the five top-selling modules that can revolutionize your Odoo experience:

Dashboard Ninja with AI, Odoo Power BI Connector, Looker Studio Connector, Google Sheets Connector, and Odoo Data Model.

1. Dashboard Ninja with AI:

With this module, you can create amazing reports using the powerful and smart Dashboard Ninja app for Odoo. See your business from a 360-degree angle with an interactive, beautiful dashboard.

Some Key Features:

Real-time streaming Dashboard

Advanced data filter

Create charts from Excel and CSV files

Fluid and flexible layout

Download dashboard items

This module gives you AI suggestions for improving your operational efficiencies.

2. Odoo Power BI Connector:

This module provides a direct connection between Odoo and Power BI Desktop, a powerful data visualization tool.

Some Key features:

Secure token-based connection.

Proper schema and data type handling.

Fetch custom tables from Odoo.

Real-time data updates.

With Power BI, you can make informed decisions based on real-time data analysis and visualization.

3. Odoo Data Model:

The Odoo Data Model is the backbone of the entire system. It defines how your data is stored, structured, and related within the application.

Key Features:

Relations & fields: Developers can easily find the relations (one-to-many, many-to-many, and many-to-one) between data tables and see the fields (columns) each table defines.

Object-relational mapping: The Odoo ORM allows developers to define models (classes) that map to database tables.

The module allows you to use SQL query extensions and download data as Excel sheets.

4. Google Sheet Connector:

This connector bridges the gap between Odoo and Google Sheets.

Some Key features:

Real-time data synchronization and transfer between Odoo and Google Sheets.

One-time setup, with no need to wrestle with APIs.

Transfer multiple tables swiftly.

Helps your team’s workflow by making Odoo data accessible in a sheet format.

5. Odoo Looker Studio Connector:

The Looker Studio Connector by Techfinna easily integrates Odoo data with Looker Studio, a powerful data analytics and visualization platform.

Some Key Features:

Directly integrate Odoo data to Looker Studio with just a few clicks.

The connector automatically retrieves and maps Odoo table schemas in their native data types.

Manual and scheduled data refresh.

Execute custom SQL queries for selective data fetching.

The module helps you build detailed reports and provides deeper business intelligence.

These modules improve analytics, customization, and reporting, and setting them up can significantly enhance your operational efficiency. Embrace these modules and take your Odoo experience to the next level.

Need Help?

I hope you find the blog helpful. Please share your feedback and suggestions.

For flawless Odoo Connectors, implementation, and services contact us at

[email protected] Or www.techneith.com

#odoo#powerbi#connector#looker#studio#google#microsoft#techfinna#ksolves#odooerp#developer#web developers#integration#odooimplementation#crm#odoointegration#odooconnector


Unveiling the Inextricable Integration: How Data Science Became Ingrained in Our Daily Lives

Introduction

In the era of rapid technological evolution, the symbiotic relationship between data science and our daily lives has become increasingly profound. This article delves into the transformative journey of how data science seamlessly became an integral part of our existence.

Evolution of Data Science

From Concept to Reality

The inception of data science was marked by the convergence of statistical analysis, computer science, and domain expertise. Initially confined to research labs, data science emerged from its cocoon to cater to real-world challenges. As technology advanced, the accessibility of data and computing power facilitated the application of data science across diverse domains.

Data Science in Everyday Applications

Precision in Decision Making

In the contemporary landscape, data science is omnipresent, influencing decisions both big and small. From tailored recommendations on streaming platforms to predictive text in messaging apps, the algorithmic prowess of data science is ubiquitous. The precision with which these algorithms understand user behavior has transformed decision-making processes across industries.

Personalized Experiences

One of the notable impacts of data science is the creation of personalized experiences. Whether it’s curated content on social media feeds or personalized shopping recommendations, data science algorithms analyze vast datasets to understand individual preferences, providing an unparalleled level of personalization.

Data Science in Healthcare

Revolutionizing Patient Care

The healthcare sector has witnessed a paradigm shift with the integration of data science. Predictive analytics and machine learning algorithms are transforming patient care by enabling early diagnosis, personalized treatment plans, and efficient resource allocation. The marriage of data science and healthcare has the potential to save lives and optimize medical practices.

Disease Surveillance and Prevention

Data science plays a pivotal role in disease surveillance and prevention. Through the analysis of epidemiological data, health professionals can identify patterns, predict outbreaks, and implement preventive measures. This proactive approach is instrumental in safeguarding public health on a global scale.

Data Science in Business and Marketing

Unleashing Strategic Insights

Businesses today leverage data science to gain actionable insights into consumer behavior, market trends, and competitor strategies. The ability to extract meaningful patterns from massive datasets empowers organizations to make informed decisions, optimize operations, and stay ahead in competitive markets.

Targeted Marketing Campaigns

The era of blanket marketing is long gone. Data science enables businesses to create targeted marketing campaigns by analyzing customer demographics, preferences, and purchasing behaviors. This precision not only maximizes the impact of marketing efforts but also enhances the overall customer experience.

Data Science in Education

Tailoring Learning Experiences

In the realm of education, data science has ushered in a new era of personalized learning. Uncodemy is the best data science Institute in Delhi. Adaptive learning platforms use algorithms to understand students’ strengths and weaknesses, tailoring educational content to suit individual needs. This customized approach enhances student engagement and fosters a more effective learning experience.

Predictive Analytics for Student Success

Data science also contributes to the identification of at-risk students through predictive analytics. By analyzing historical data on student performance, institutions can intervene early, providing additional support to students who may face academic challenges. This proactive approach enhances overall student success rates.

The Future Landscape

Continuous Innovation

As technology continues to advance, the future landscape of data science promises even more innovation. From the evolution of machine learning algorithms to the integration of artificial intelligence, the journey of data science is an ongoing narrative of continuous improvement and adaptation.

Ethical Considerations

With the increasing reliance on data science, ethical considerations become paramount. Striking a balance between innovation and ethical responsibility is crucial to ensuring that the benefits of data science are harnessed responsibly and inclusively.

Conclusion

In the tapestry of modern existence, data science has woven itself seamlessly into the fabric of our lives. From personalized recommendations to revolutionary advancements in healthcare, the impact of data science is undeniable. As we navigate this data-driven landscape, understanding the intricate ways in which data science enhances our daily experiences is not just informative but essential.

Source Link: https://www.blogsocialnews.com/unveiling-the-inextricable-integration-how-data-science-became-ingrained-in-our-daily-lives/


New AI noise-canceling headphone technology lets wearers pick which sounds they hear - Technology Org

New Post has been published on https://thedigitalinsider.com/new-ai-noise-canceling-headphone-technology-lets-wearers-pick-which-sounds-they-hear-technology-org/


Most anyone who’s used noise-canceling headphones knows that hearing the right noise at the right time can be vital. Someone might want to erase car horns when working indoors but not when walking along busy streets. Yet people can’t choose what sounds their headphones cancel.

A team led by researchers at the University of Washington has developed deep-learning algorithms that let users pick which sounds filter through their headphones in real time. Pictured is co-author Malek Itani demonstrating the system. Image credit: University of Washington

Now, a team led by researchers at the University of Washington has developed deep-learning algorithms that let users pick which sounds filter through their headphones in real time. The team is calling the system “semantic hearing.” Headphones stream captured audio to a connected smartphone, which cancels all environmental sounds. Through voice commands or a smartphone app, headphone wearers can select which sounds they want to include from 20 classes, such as sirens, baby cries, speech, vacuum cleaners and bird chirps. Only the selected sounds will be played through the headphones.

The team presented its findings at UIST ’23 in San Francisco. In the future, the researchers plan to release a commercial version of the system.


“Understanding what a bird sounds like and extracting it from all other sounds in an environment requires real-time intelligence that today’s noise canceling headphones haven’t achieved,” said senior author Shyam Gollakota, a UW professor in the Paul G. Allen School of Computer Science & Engineering. “The challenge is that the sounds headphone wearers hear need to sync with their visual senses. You can’t be hearing someone’s voice two seconds after they talk to you. This means the neural algorithms must process sounds in under a hundredth of a second.”

Because of this time crunch, the semantic hearing system must process sounds on a device such as a connected smartphone, instead of on more robust cloud servers. Additionally, because sounds from different directions arrive in people’s ears at different times, the system must preserve these delays and other spatial cues so people can still meaningfully perceive sounds in their environment.
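To get a feel for how tight that budget is, here is a minimal sketch that converts the "hundredth of a second" figure into a count of audio samples. The sample rates are assumed common values and do not come from the researchers; the function name is hypothetical.

```python
def samples_in_budget(sample_rate_hz: int, budget_seconds: float) -> int:
    """Return how many audio samples arrive during the latency budget."""
    return round(sample_rate_hz * budget_seconds)


# "Under a hundredth of a second", as quoted in the article.
BUDGET_SECONDS = 0.01

# Assumed, commonly used sample rates (not stated in the article).
for rate in (16_000, 44_100, 48_000):
    print(f"{rate} Hz -> {samples_in_budget(rate, BUDGET_SECONDS)} samples per budget")
```

Whatever the actual rate, the model has to finish its work on each small chunk before the next one arrives, which is why a round trip to a cloud server is hard to fit in.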

Tested in environments such as offices, streets and parks, the system was able to extract sirens, bird chirps, alarms and other target sounds, while removing all other real-world noise. When 22 participants rated the system’s audio output for the target sound, they said that on average the quality improved compared to the original recording.

In some cases, the system struggled to distinguish between sounds that share many properties, such as vocal music and human speech. The researchers note that training the models on more real-world data might improve these outcomes.

Source: University of Washington


#A.I. & Neural Networks news#ai#Algorithms#amp#app#artificial intelligence (AI)#audio#baby#challenge#classes#Cloud#computer#Computer Science#data#ears#engineering#Environment#Environmental#filter#Future#Hardware & gadgets#headphone#headphones#hearing#human#intelligence#it#learning#LED#Link


Don't Miss Out on ViMusic - The Must-Have App for Streaming YouTube Music

Do you wish you could stream all your favorite tracks from YouTube Music without ads interrupting your flow? Want to keep listening to playlists and music even when you switch apps or turn off your phone's screen? ViMusic makes this possible and so much more! 🎧

ViMusic is a game-changing Android app that lets you stream audio from YouTube Music videos in the background for FREE. No more annoying ads or having music pause when you exit the YouTube app.

🎵 Key Features:

Stream ad-free music with screen off or in background

Create playlists and queues of YouTube music

Download videos and songs for offline listening

Listen to high quality audio

Intuitive and easy to use interface

Absolutely FREE

This app has smart audio extraction technology that removes video and gives you a seamless listening experience. It basically takes the best features from music streaming services and combines them with YouTube's huge catalog.

Stop wasting time and data streaming music videos just for the audio. Download ViMusic now and unlock unlimited, uninterrupted music streaming from YouTube Music on your Android device!

It's hands down the best app for enjoying YouTube music offline and on the go.

Visit https://vimusic-apk.com to get it now. You can thank me later! 😉

#youtube #music #android #app #free


Yes, this is due in large part to phone and tablet use, apps vs. applications, and a lack of schooling, but another significant factor is corporations' bullshit. The default user experience on a typical desktop or laptop computer is so much worse than it used to be. If you sent a Windows computer from today back in time to 1998, they'd think it was very pretty and very fast, but be horrified by pretty much everything else. The ads, the tracking, the AI fuckery—the bald-faced insult and effrontery of scanning your personal files and telling a corporation about you! They'd file lawsuits and they'd have won. These days the device you paid for does a ton of shit for a corporation's benefit, to the detriment of your experience. "Software as a service" (i.e. charging you in perpetuity to use a program, forcing you to use the newest iteration regardless of your preference, and sometimes locking your own data behind a paywall) would have made everyone apoplectic.

Both by design and by user-hostile copyright/patent/digital-rights legislation, corporations discourage you from using your device's full potential so they can extract profit from you. Before streaming services, watching or listening to media with a computing device meant you had to obtain and manage your own media library. 20 years ago, iTunes and Windows Media Player were designed for that purpose. These days it's a constant hard-sell push to Apple Music or Spotify or (saints preserve us) Amazon, and that is a rent-seeking choice made by corporations so that you don't own anything. You must pay a monthly fee to access only what they offer you, and they can arbitrarily revoke access even after you've paid them hundreds of dollars.

So computing today is more annoying and you're actively discouraged from doing things in your best interests. If you even know that the option is available. Don't judge the young ones too harshly. It's not only that they haven't been taught, it's not even that they don't have a "just try stuff" attitude, it's also that they have never experienced a time before late-stage Capitalism's dystopian techno-feudalist hellscape.

#this has been a Xennial PSA/rant#if you need to learn how to do something go to a library!#librarians are fucking awesome#if you want to give the finger to corporate bullshittery#I recommend taking back ownership by building a media collection#to do it legit you can buy from Bandcamp or similar#which is far more profitable to the artist than streaming#even borrowing and ripping CDs and DVDs from the library means more income to the artist#though they make most of their money from live concerts and merch sales#I strongly encourage the youth to turn to piracy#spend your money on merch and tickets#you aren't locked into a monthly subscription#it's less cost to you overall#you will own the media forever#and the artist gets paid more too


Singapore Restaurant Insights via Food App Dataset Analysis

Introduction

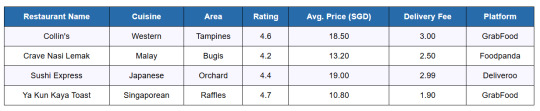

ArcTechnolabs provided a comprehensive Food Delivery Menu Dataset that helped the client extract detailed menu and pricing data from major food delivery platforms such as GrabFood, Foodpanda, and Deliveroo. This dataset included valuable insights into restaurant menus, pricing strategies, delivery fees, and popular dishes across different cuisine types in Singapore. The data also covered key factors like restaurant ratings and promotions, allowing the client to benchmark prices, identify trends, and create actionable insights for strategic decision-making. By extracting menu and pricing data at scale, ArcTechnolabs empowered the client to deliver high-impact market intelligence to the F&B industry.

Client Overview

A Singapore-based market intelligence firm partnered with ArcTechnolabs to analyze over 5,000 restaurants across the island. Their goal was to extract strategic insights from top food delivery platforms like GrabFood, Foodpanda, and Deliveroo—focusing on menu pricing, cuisine trends, delivery coverage, and customer ratings. They planned to use the insights to support restaurant chains, investors, and FMCG brands targeting the $1B+ Singapore online food delivery market.

The Challenge

The client encountered several data-related challenges. Listings were fragmented across platforms, with the same restaurant showing different menus and prices on each, and there was no unified data source for benchmarking cuisine pricing or delivery charges. Inconsistent tagging for cuisines, promotions, and outlets made standardization difficult. The client also struggled to extract food item pricing at scale and needed to perform detailed analysis by location, cuisine type, and restaurant rating. To overcome this fragmentation, they turned to ArcTechnolabs for a structured, ready-to-analyze dataset covering Singapore’s entire food delivery landscape.

ArcTechnolabs Solution:

ArcTechnolabs built a custom dataset using data scraped from:

GrabFood Singapore

Foodpanda Singapore

Deliveroo SG

The dataset captured details for 5,000+ restaurants, normalized for comparison and analytics.
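As a rough illustration of what "normalized for comparison" can mean in practice, the sketch below maps records with different shapes onto one schema. Everything in it is hypothetical: the field names, the restaurant, the prices, and the alias table are invented for the example and are not taken from the ArcTechnolabs dataset or from any platform's real output.

```python
from dataclasses import dataclass

# Hypothetical raw records: each platform is assumed to use different field names.
RAW_RECORDS = [
    {"platform": "GrabFood", "name": "Example Chicken Rice ", "cuisine": "chinese", "price": "S$5.50"},
    {"platform": "Foodpanda", "restaurant": "example chicken rice", "category": "Chinese Food", "price_cents": 580},
    {"platform": "Deliveroo", "title": "Example  Chicken Rice", "tags": ["Chinese"], "price": 6.2},
]

# Hypothetical alias table that folds inconsistent cuisine tags into one label.
CUISINE_ALIASES = {"chinese food": "chinese"}


@dataclass(frozen=True)
class MenuItem:
    platform: str
    restaurant_key: str
    cuisine: str
    price_sgd: float


def normalize(record: dict) -> MenuItem:
    """Map one platform-specific record onto the shared MenuItem schema."""
    name = record.get("name") or record.get("restaurant") or record.get("title")
    cuisine = record.get("cuisine") or record.get("category") or record["tags"][0]
    cuisine = cuisine.strip().lower()
    cuisine = CUISINE_ALIASES.get(cuisine, cuisine)
    if "price_cents" in record:
        price = record["price_cents"] / 100
    else:
        price = float(str(record["price"]).replace("S$", ""))
    # Lowercase and collapse whitespace so the same outlet matches across platforms.
    key = " ".join(name.lower().split())
    return MenuItem(record["platform"], key, cuisine, round(price, 2))


items = [normalize(r) for r in RAW_RECORDS]

# Group prices by restaurant so one outlet can be compared across platforms.
prices_by_restaurant: dict[str, dict[str, float]] = {}
for item in items:
    prices_by_restaurant.setdefault(item.restaurant_key, {})[item.platform] = item.price_sgd

print(prices_by_restaurant)
```

Once every record shares one key and one price unit, benchmarking the same outlet across platforms becomes a simple lookup.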

Sample Dataset Extract

Client Testimonial

"ArcTechnolabs delivered exactly what we needed—structured, granular, and high-quality restaurant data across Singapore’s top food delivery apps. Their ability to normalize cuisine categories, menu pricing, and delivery metrics helped us drastically cut down report turnaround time. With their support, we expanded our client base and began offering zonal insights and cuisine benchmarks no one else in the market had. The quality, speed, and support were outstanding. We now rely on their weekly datasets to power everything from investor reports to competitive pricing models."

— Director of Research & Analytics, Singapore Market Intelligence Firm

Conclusion

ArcTechnolabs enabled a market intelligence firm to transform fragmented food delivery data into structured insights—analyzing over 5,000 restaurants across Singapore. With access to a high-quality, ready-to-analyze dataset, the client unlocked new revenue streams, faster reports, and higher customer value through data-driven F&B decision-making.

Source >> https://www.arctechnolabs.com/singapore-food-app-dataset-restaurant-analysis.php

#FoodDeliveryMenuDatasets#ExtractingMenuAndPricingData#FoodDeliveryDataScraping#SingaporeFoodDeliveryDataset#ScrapeFoodAppDataSingapore#ExtractSingaporeRestaurantReviews#WebScrapingServices#ArcTechnolabs


Behind the Scenes of Google Maps – The Data Science Powering Real-Time Navigation

Whether you're finding the fastest route to your office or avoiding a traffic jam on your way to dinner, Google Maps is likely your trusted co-pilot. But have you ever stopped to wonder how this app always seems to know the best way to get you where you’re going?

Behind this everyday convenience lies a powerful blend of data science, artificial intelligence, machine learning, and geospatial analysis. In this blog, we’ll take a journey under the hood of Google Maps to explore the technologies that make real-time navigation possible.

The Core Data Pillars of Google Maps

At its heart, Google Maps relies on multiple sources of data:

Satellite Imagery

Street View Data

User-Generated Data (Crowdsourcing)

GPS and Location Data

Third-Party Data Providers (like traffic and transit systems)

All of this data is processed, cleaned, and integrated through complex data pipelines and algorithms to provide real-time insights.

Machine Learning in Route Optimization

One of the most impressive aspects of Google Maps is how it predicts the fastest and most efficient route for your journey. This is achieved using machine learning models trained on:

Historical Traffic Data: How traffic typically behaves at different times of the day.

Real-Time Traffic Conditions: Collected from users currently on the road.

Road Types and Speed Limits: Major highways vs local streets.

Events and Accidents: Derived from user reports and partner data.

These models use regression algorithms and probabilistic forecasting to estimate travel time and suggest alternative routes if necessary. The more people use Maps, the more accurate it becomes—thanks to continuous model retraining.
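To make the regression idea concrete, here is a deliberately tiny sketch: an ordinary least-squares line fitted to invented observations of traffic volume versus travel time on one road segment. It illustrates the general technique only. The numbers are made up, and Google's production models are far more elaborate than a single straight line.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Invented history: vehicles per minute on a segment vs. minutes needed to cross it.
vehicles_per_minute = [10, 20, 30, 40, 50]
travel_minutes = [4.0, 5.0, 6.0, 7.0, 8.0]

slope, intercept = fit_line(vehicles_per_minute, travel_minutes)

# Estimate travel time for a traffic level that was never observed directly.
estimate = slope * 35 + intercept
print(f"Estimated travel time at 35 vehicles/min: {estimate:.1f} minutes")
```

Retraining, in this toy setting, just means calling `fit_line` again once new observations have been appended to the lists.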

Real-Time Traffic Predictions: How Does It Work?

Google Maps uses real-time GPS data from millions of devices (anonymized) to monitor how fast vehicles are moving on specific road segments.

If a route that normally takes 10 minutes is suddenly showing delays, the system can:

Update traffic status dynamically (e.g., show red for congestion).

Reroute users automatically if a faster path is available.

Alert users with estimated delays or arrival times.

This process is powered by stream processing systems that analyze data on the fly, updating the app’s traffic layer in real time.
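A minimal sketch of that "analyze on the fly" idea, assuming a hypothetical `SegmentMonitor` class that keeps only the most recent speed readings per road segment. The window size, the 50% threshold, and the segment IDs are all assumptions chosen for illustration, not details of Google's system.

```python
from collections import defaultdict, deque


class SegmentMonitor:
    """Keep a sliding window of recent speeds for each road segment."""

    def __init__(self, window: int = 3, congested_ratio: float = 0.5):
        self.congested_ratio = congested_ratio
        # Each segment gets a fixed-size deque; old readings fall off automatically.
        self.speeds = defaultdict(lambda: deque(maxlen=window))

    def ingest(self, segment_id: str, speed_kmh: float) -> None:
        """Record one anonymized speed reading as it arrives."""
        self.speeds[segment_id].append(speed_kmh)

    def status(self, segment_id: str, free_flow_kmh: float) -> str:
        """Classify a segment by comparing recent speed to its usual speed."""
        recent = self.speeds.get(segment_id)
        if not recent:
            return "unknown"
        average = sum(recent) / len(recent)
        if average < self.congested_ratio * free_flow_kmh:
            return "congested"
        return "clear"


monitor = SegmentMonitor()
for speed in (58, 55):
    monitor.ingest("segment-1", speed)
print(monitor.status("segment-1", free_flow_kmh=60))

# Traffic slows down; the window now holds only the slow readings.
for speed in (20, 15, 12):
    monitor.ingest("segment-1", speed)
print(monitor.status("segment-1", free_flow_kmh=60))
```

The fixed-size window is the design choice that matters here: it makes the status react to what is happening now instead of being diluted by readings from earlier in the day.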

Crowdsourced Data – Powered by You

A big part of Google Maps' accuracy comes from you—the users. Here's how crowdsourcing contributes:

Waze Integration: Google owns Waze, and integrates its crowdsourced traffic reports.

User Reports: You can report accidents, road closures, or speed traps.

Map Edits: Users can suggest edits to business names, locations, or road changes.

All this data is vetted using AI and manual review before being pushed live, creating a community-driven map that evolves constantly.

Street View and Computer Vision

Google Maps' Street View isn’t just for virtual sightseeing. It plays a major role in:

Detecting road signs, lane directions, and building numbers.

Updating maps with the latest visuals.

Powering features like AR navigation (“Live View”) on mobile.

These images are processed using computer vision algorithms that extract information from photos. For example, they can identify a “One Way” sign and update the traffic flow logic in the map's backend.

Dynamic Rerouting and ETA Calculation

One of the app’s most helpful features is dynamic rerouting—recalculating your route if traffic builds up unexpectedly.

Behind the scenes, this involves:

Continuous location tracking

Comparing alternative paths using current traffic models

Balancing distance, speed, and risk of delay

ETA (Estimated Time of Arrival) is not just based on distance—it incorporates live conditions, driver behavior, and historical delay trends.
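Comparing alternative paths can be sketched with a textbook shortest-path search. The example below uses Dijkstra's algorithm on a four-node road graph whose edge weights are travel times in minutes. The graph and its numbers are invented, and this stands in for the idea of rerouting only; it is not a description of the algorithm Google Maps actually runs.

```python
import heapq


def fastest_route(graph: dict, start: str, goal: str) -> tuple[float, list[str]]:
    """Dijkstra's algorithm over edge weights expressed in minutes."""
    queue = [(0.0, start, [start])]
    best = {start: 0.0}
    while queue:
        minutes, node, path = heapq.heappop(queue)
        if node == goal:
            return minutes, path
        if minutes > best.get(node, float("inf")):
            continue  # A faster way to this node was already found.
        for neighbor, cost in graph.get(node, {}).items():
            candidate = minutes + cost
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor, path + [neighbor]))
    return float("inf"), []


# Invented road network: travel time in minutes between junctions.
roads = {
    "A": {"B": 4.0, "C": 6.0},
    "B": {"D": 5.0},
    "C": {"D": 4.0},
    "D": {},
}

eta_before, route_before = fastest_route(roads, "A", "D")

# Congestion builds on B -> D, so the live travel time for that edge goes up.
roads["B"]["D"] = 12.0
eta_after, route_after = fastest_route(roads, "A", "D")

print(route_before, eta_before)
print(route_after, eta_after)
```

Rerouting then amounts to re-running the search whenever live edge weights change and switching if the new best path differs from the current one.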

Mapping the World – At Scale

To maintain global accuracy, Google Maps uses:

Satellite Data Refreshes every 1–3 years

Local Contributor Programs in remote regions

AI-Powered Map Generation, where algorithms stitch together raw imagery into usable maps

In fact, Google uses deep learning models to automatically detect new roads and buildings from satellite photos. This accelerates map updates, especially in developing areas where manual updates are slow.

Voice and Search – NLP in Maps

Search functionality in Google Maps is driven by natural language processing (NLP) and contextual awareness.

For example:

A search for “best coffee near me” is interpreted using both your location and your intent.

Voice queries like “navigate to home” trigger saved locations and route planning.

Google Maps uses entity recognition and semantic analysis to interpret your input and return the most relevant results.

Privacy and Anonymization

With so much data collected, privacy is a major concern. Google uses techniques like:

Location anonymization

Data aggregation

Opt-in location sharing

This ensures that while Google can learn traffic patterns, it doesn’t store identifiable travel histories for individual users (unless they opt into Location History features).
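One generic way to combine aggregation with anonymization is to publish only per-segment averages, and only when enough distinct devices contributed. The sketch below shows that pattern. The function name, the threshold of three devices, and the sample pings are assumptions for illustration; the post does not say which specific techniques or thresholds Google uses.

```python
from collections import defaultdict


def aggregate_speeds(pings, min_devices: int = 3) -> dict[str, float]:
    """Return average speed per segment, dropping segments with too few devices.

    `pings` is an iterable of (device_id, segment_id, speed_kmh) tuples.
    Device IDs are used only for counting and never appear in the output.
    """
    latest_by_segment = defaultdict(dict)
    for device_id, segment_id, speed_kmh in pings:
        # Keep one reading per device so a single phone cannot dominate the average.
        latest_by_segment[segment_id][device_id] = speed_kmh

    report = {}
    for segment_id, speeds in latest_by_segment.items():
        if len(speeds) >= min_devices:
            report[segment_id] = sum(speeds.values()) / len(speeds)
    return report


# Invented pings: three devices on one segment, a single device on another.
sample_pings = [
    ("device-1", "segment-1", 30.0),
    ("device-2", "segment-1", 40.0),
    ("device-3", "segment-1", 50.0),
    ("device-4", "segment-2", 20.0),
]

print(aggregate_speeds(sample_pings))
```

The lone device on the second segment is suppressed, because publishing its speed would effectively reveal one person's movement.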

The Future: Predictive Navigation and AR

Google Maps is evolving beyond just directions. Here's what's coming next:

Predictive Navigation: Anticipating where you’re going before you enter the destination.

AR Overlays: Augmented reality directions that appear on your camera screen.

Crowd Density Estimates: Helping you avoid crowded buses or busy places.

These features combine AI, IoT, and real-time data science for smarter, more helpful navigation.

Conclusion:

From finding your favorite restaurant to getting you home faster during rush hour, Google Maps is a masterpiece of data science in action. It uses a seamless combination of:

Geospatial data

Machine learning

Real-time analytics

User feedback

…all delivered in seconds through a simple, user-friendly interface.

Next time you reach your destination effortlessly, remember—it’s not just GPS. It’s algorithms, predictions, and billions of data points working together in the background.

#nschool academy#datascience#googlemaps#machinelearning#realtimedata#navigationtech#bigdata#artificialintelligence#geospatialanalysis#maptechnology#crowdsourceddata#predictiveanalytics#techblog#smartnavigation#locationintelligence#aiapplications#trafficprediction#datadriven#dataengineering#digitalmapping#computerVision#coimbatore


How Data Monetization is Reshaping Business Value

In the digital age, companies are discovering that their most valuable assets often exist as intangible bytes rather than physical inventory. Data monetization—the process of transforming information into revenue streams—has emerged as both an art and science, creating new business models while raising important questions about privacy and value exchange.

Michael Shvartsman, an investor specializing in data-driven businesses, observes: "We've entered an era where data isn't just a byproduct of operations—it's the foundation of competitive advantage. The companies that will thrive understand how to extract meaningful insights while maintaining ethical standards and customer trust."

The Spectrum of Data Value.

Not all data holds equal worth. Raw information becomes valuable only when refined into actionable intelligence or marketable products. Businesses leading in this space have moved beyond simple data collection to developing sophisticated frameworks for assessing information quality, relevance, and potential applications.

Some organizations monetize directly, selling access to specialized datasets or analytical services. Others employ indirect approaches, using insights to optimize operations, personalize offerings, or reduce risk. The most sophisticated players create data feedback loops where information improves products, which in turn generate richer data—a virtuous cycle of increasing value.

Building Ethical Monetization Frameworks.

As consumer awareness of data's worth grows, companies face increasing pressure to establish transparent value exchanges. Successful data monetization now requires clear communication about what information gets collected, how it creates value, and what benefits customers receive in return.

Michael Shvartsman notes: "The healthiest data relationships resemble equitable partnerships rather than extraction operations. When customers understand how their information leads to better products or services, they're more likely to engage willingly and authentically."

This approach manifests in various ways—retailers offering personalized discounts in exchange for purchase history, apps providing free services supported by aggregated usage analytics, or financial institutions using transaction data to offer tailored advice. In each case, the exchange feels balanced rather than exploitative.

Emerging Monetization Models.

Innovative approaches to data value creation are disrupting traditional industries. Healthcare providers are developing anonymized datasets for medical research. Automotive companies are packaging vehicle performance information for urban planners. Smart cities are transforming municipal operations data into public-private partnerships.

These models share a common thread—they identify underserved needs that existing datasets can address, then structure mutually beneficial arrangements. The most successful avoid one-time transactions in favor of ongoing data relationships that appreciate in value over time.

"The future belongs to contextual data monetization," predicts Michael Shvartsman. "Rather than selling raw information, smart companies will sell solutions powered by insights—the difference between providing GPS coordinates and delivering turn-by-turn navigation."

Technical and Organizational Foundations.

Effective data monetization requires robust infrastructure. Companies must implement systems for clean data collection, secure storage, and flexible analysis. Perhaps more challengingly, they need to break down internal silos that prevent information from flowing to where it creates the most value.

Cultural factors prove equally important. Organizations must foster data literacy across departments, helping teams understand how information can enhance their work. They need to balance openness with appropriate governance, ensuring sensitive data receives proper protection.

The Strategic Imperative.

Looking ahead, data monetization capabilities will increasingly determine competitive positioning. Businesses that develop these competencies early will gain insights that inform better decisions, create additional revenue streams, and build deeper customer relationships.

As Michael Shvartsman concludes: "Data isn't the new oil—it's more versatile and renewable than that. The companies that will lead understand how to refine it responsibly, distribute it ethically, and deploy it strategically to solve real problems. In the coming decade, this ability will separate industry leaders from followers."

For organizations embarking on this journey, the path forward involves viewing data not as a passive resource but as an active asset—one that requires investment, stewardship, and creative thinking to realize its full potential. When approached with both technical rigor and ethical consideration, data monetization becomes more than a revenue tactic—it transforms into a cornerstone of sustainable business strategy in the digital economy.

0 notes

Text

IoT Monetization Market: Size, Share, Analysis, Forecast, and Growth Trends to 2032 – Global Smart City Projects Spur Monetization

The IoT Monetization Market was valued at USD 639.88 billion in 2023 and is expected to reach USD 27,875.59 billion by 2032, growing at a CAGR of 52.1% from 2024 to 2032.

The IoT Monetization Market is growing rapidly as companies across industries unlock new revenue opportunities through connected devices. From manufacturing to healthcare, businesses are using IoT data to create value-driven services, optimize operations, and develop new pricing models. The shift is especially strong in the USA and Europe, where smart infrastructure and enterprise digitization continue to accelerate.

IoT Monetization Market in the US Gains Momentum as Enterprises Unlock New Revenue Streams

The U.S. IoT Monetization Market was valued at USD 199.06 billion in 2023 and is projected to reach USD 7,611.49 billion by 2032, expanding at a CAGR of 49.95% from 2024 to 2032.
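The growth rates quoted for the global and U.S. markets can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below is only a check on the arithmetic, not part of the report; it assumes nine compounding periods between the 2023 base year and 2032.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` compounding years."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Figures quoted in the report, in USD billion.
# Assumption: 9 compounding periods between the 2023 base and 2032.
global_rate = cagr(639.88, 27875.59, 9)
us_rate = cagr(199.06, 7611.49, 9)

print(f"Global implied CAGR: {global_rate:.1%}")
print(f"U.S. implied CAGR:   {us_rate:.1%}")
```

Under that assumption, both implied rates come out close to the quoted 52.1% and 49.95%, so the start values, end values, and growth rates are mutually consistent.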

The IoT Monetization Market is expanding as organizations move beyond connectivity to capitalize on the data generated by billions of IoT endpoints. Whether through subscription-based models, usage analytics, or data marketplaces, monetizing IoT is becoming a strategic priority for businesses aiming to differentiate and grow in competitive markets.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6658

Key Market Players:

SAP SE (SAP Leonardo IoT, SAP Edge Services)

General Electric Co. (Predix Platform, Asset Performance Management)

Telefonaktiebolaget LM Ericsson (Ericsson IoT Accelerator, Ericsson Device Connection Platform)

Intel Corporation (Intel IoT Platform, Intel Edge Insights)

Microsoft Corporation (Azure IoT Hub, Azure Digital Twins)

Oracle Corporation (Oracle IoT Cloud, Oracle Autonomous Database for IoT)

IBM Corporation (Watson IoT Platform, IBM Maximo)

Amdocs Ltd. (Amdocs IoT Monetization Platform, Amdocs Digital Commerce Suite)

Thales Group (Thales IoT Security Solutions, Thales Sentinel Licensing)

Cisco Systems, Inc. (Cisco IoT Control Center, Cisco Kinetic for Cities)

Market Analysis

The IoT Monetization Market is driven by the exponential growth of connected devices and the demand for real-time, actionable insights. As businesses gather vast amounts of data from sensors, machines, and wearables, the focus has shifted to converting this data into profit-generating assets. The USA leads in platform innovation and enterprise IoT deployments, while Europe emphasizes regulatory-aligned monetization models with a focus on security and user consent.

Market Trends

Rise in data-as-a-service (DaaS) business models

Increased adoption of usage-based and pay-per-use pricing

Growth in digital twins for performance optimization and monetization

Emergence of IoT marketplaces for data trading and app services

Integration with AI and edge computing for faster ROI

Subscription-based services in industrial, healthcare, and automotive sectors

Expansion of monetization APIs for developer ecosystems

Market Scope

The scope of the IoT Monetization Market is broad and evolving rapidly. As more sectors digitize, businesses are discovering untapped revenue through IoT data streams and value-added services.

Cross-industry demand for real-time monetization platforms

IoT-based service models in agriculture, logistics, and smart cities

Telecom-driven IoT monetization through connectivity bundling

OEMs embedding monetization strategies into smart products

Partner ecosystems building scalable, multi-tenant monetization solutions

Forecast Outlook

The IoT Monetization Market is poised for significant growth as enterprises scale their IoT deployments and seek new paths to profitability. With advancements in cloud, AI, and 5G fueling real-time analytics and service innovation, the future of monetization lies in the ability to extract, package, and deliver data as a high-value commodity. Businesses that embrace flexible, scalable monetization frameworks will lead in both revenue generation and customer retention across the USA and Europe.

Access Complete Report: https://www.snsinsider.com/reports/iot-monetization-market-6658

Conclusion

IoT Monetization is no longer an afterthought—it’s a front-line business strategy. In a connected world, the value lies not just in gathering data, but in transforming it into services, experiences, and revenue. As global markets shift toward digital-first operations, those who invest in intelligent monetization tools today will shape the competitive landscape of tomorrow.

Related Reports:

US IoT MVNO market is rapidly expanding with new connectivity solutions

US IoT integration market shows rapid growth opportunities

About Us:

SNS Insider is one of the leading market research and consulting agencies globally. Our aim is to give clients the knowledge they need to operate in changing circumstances. To provide current, accurate market data, consumer insights, and opinions that let you make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#IoT Monetization Market#IoT Monetization Market Scope#IoT Monetization Market Share#IoT Monetization Market Growth

0 notes

Text

AI Video Analysis Solution Development Company: Build Smarter Video with AI

In today’s fast-paced digital world, video content is everywhere—on our phones, in our workplaces, and across social media. But making sense of all this video data is a challenge. That’s where AI video analysis solution development companies come in. These companies use advanced AI video analytics, AI video generator apps, and AI video creation software to help businesses unlock the full potential of their video assets. Whether you want to automate video editing, generate new content, or analyze footage for insights, AI video solutions are transforming the way we create, manage, and understand video.

What Is AI Video Analysis?

AI video analysis is the process of using artificial intelligence to automatically process, interpret, and extract valuable information from video streams. With AI video analysis software, businesses can detect objects, recognize faces, track movements, and even understand behaviors in real time. This technology is a game-changer for industries like security, healthcare, retail, sports, and manufacturing.

The Rise of AI Video Agents and Automation

AI video agents are smart software bots that monitor video feeds, detect patterns, and trigger actions automatically. Imagine having an AI video bot that watches your security cameras 24/7, identifies unusual activities, and sends instant alerts when something’s wrong. These AI video agents use advanced AI video analytics to deliver real-time insights, helping businesses stay proactive and efficient.

AI video automation goes even further. With AI video automation, you can automate repetitive video editing tasks, generate highlights, or even create entire videos from scratch using AI video generator apps and AI video creation software. This means less manual work and more time to focus on what matters.

AI Video Generator: Create Content Instantly

The AI video generator is one of the most exciting innovations in video technology. With an AI video generator app, you can turn simple text prompts into fully produced videos—complete with voiceovers, music, and visuals. AI video generators are perfect for marketers, educators, and content creators who need to produce high-quality videos quickly and at scale.

AI video generator apps are easy to use. Just type your script, select your style, and let the AI video creation software do the rest. These tools use AI-powered avatars, natural-sounding voiceovers, and smart editing features to make professional videos accessible to everyone.

AI Video Editing Software: Smarter, Faster, Easier

Traditional video editing can be time-consuming and complex. AI video editing software changes the game by automating many of the tedious tasks. With an AI video editor, you can:

Automatically cut and trim footage

Add subtitles and captions

Generate transitions and effects

Enhance audio and visuals

Summarize long videos into short clips

AI video editing software uses machine learning to understand your video content and make intelligent editing decisions. Whether you’re a beginner or a pro, AI video editors help you create polished videos in a fraction of the time.

AI Video Analytics: Unlock Actionable Insights

AI video analytics is all about turning video footage into actionable data. AI video analysis software can:

Count people or objects in a scene

Track movement patterns

Detect suspicious behavior

Analyze customer interactions in retail

Monitor patient activity in healthcare

With AI video analytics, businesses can make smarter decisions based on real-time video data. For example, retailers can use AI video analytics to optimize store layouts, while sports teams can analyze player performance and strategies.

AI Video Solutions for Every Industry

AI video solutions are not just for tech giants. Companies of all sizes and industries are using AI video analysis software, AI video generator apps, and AI video editing software to improve operations and drive results.

Security and Surveillance: AI video agents monitor CCTV feeds, detect intrusions, and flag unusual activities automatically. This helps law enforcement and security teams respond faster and prevent incidents.

Healthcare: AI video analysis software tracks patient movements, analyzes medical scans, and helps doctors diagnose diseases more accurately.

Retail: AI video analytics tracks customer movements, analyzes shopping behavior, and provides insights to boost sales and improve customer experiences.

Sports: AI video solutions analyze player movements, team strategies, and performance metrics, helping coaches and athletes gain a competitive edge.

Manufacturing: AI video analytics monitors equipment, ensures workplace safety, and enhances quality control.

How AI Video Analysis Solution Development Companies Work

AI video analysis solution development companies specialize in building custom AI video solutions tailored to your needs. Here’s how they do it:

Consultation: Understand your business goals and video challenges.

AI Model Development: Build and train AI video analytics models for tasks like object detection, facial recognition, and behavior analysis.

Integration: Deploy AI video analysis software with your existing video systems.

Customization: Fine-tune AI video generator apps and AI video editing software to match your workflow.

Support: Provide ongoing updates and technical support to ensure optimal performance.

These companies use the latest AI and deep learning techniques to deliver fast, accurate, and scalable video solutions.

The Power of AI Video Bots

AI video bots are virtual assistants that interact with your video data. They can answer questions, generate reports, and trigger actions based on what they see in the video. For example, an AI video bot can:

Alert you when a restricted area is breached

Summarize hours of footage into key highlights

Generate automatic compliance reports

With AI video bots, you get smarter video management and faster response times.
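The restricted-area alert mentioned above can be sketched in a few lines once an object detector has produced bounding boxes for a frame. Everything here is illustrative: the Detection record, the confidence threshold, and the rectangular zone are assumptions, and a real system would get its detections from a trained model rather than hand-written values.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass(frozen=True)
class Detection:
    """One object reported by a (hypothetical) detector for a single frame."""
    label: str
    confidence: float
    box: Box


def center(box: Box) -> Tuple[float, float]:
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


def in_zone(point: Tuple[float, float], zone: Box) -> bool:
    x, y = point
    x_min, y_min, x_max, y_max = zone
    return x_min <= x <= x_max and y_min <= y <= y_max


def analyze_frame(detections: List[Detection], zone: Box,
                  min_confidence: float = 0.5) -> dict:
    """Count confident 'person' detections and flag any whose center is in the zone."""
    people = [d for d in detections
              if d.label == "person" and d.confidence >= min_confidence]
    breaches = [d for d in people if in_zone(center(d.box), zone)]
    return {"people_count": len(people), "breach": bool(breaches)}


frame = [
    Detection("person", 0.92, (10, 10, 50, 110)),     # center (30, 60): outside zone
    Detection("person", 0.81, (210, 40, 250, 140)),   # center (230, 90): inside zone
    Detection("person", 0.30, (220, 50, 240, 100)),   # ignored: low confidence
    Detection("forklift", 0.88, (205, 60, 290, 150)), # ignored: not a person
]
restricted_zone = (200, 0, 300, 200)
result = analyze_frame(frame, restricted_zone)
print(result)
```

Using the box center is a deliberate simplification; a production system might instead test the bottom edge of the box or the overlap area, depending on camera angle.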

Why Choose a Leading AI Video Analysis Solution Development Company?

Partnering with a top AI video analysis solution development company gives you:

Expertise: Access to skilled AI developers and data scientists.

Customization: Solutions tailored to your industry and business needs.

Innovation: The latest AI video generator, AI video editor, and AI video analysis software.

Reliability: Proven track record of delivering high-quality AI video solutions.

Support: Ongoing maintenance and updates for your AI video analytics systems.

Real-World Examples: AI Video in Action

Retail Chain: Used AI video analytics to track customer flow, optimize product placement, and increase sales by 20%.

Hospital: Deployed AI video analysis software to monitor patient movement and prevent falls, improving patient safety.

Sports Team: Leveraged AI video generator and AI video editing software to create highlight reels and analyze game strategies.

Manufacturing Plant: Installed AI video agents to monitor equipment and detect safety hazards in real time.

The Future of AI Video Solutions

The future of video is smart, automated, and data-driven. AI video generator apps, AI video editing software, and AI video analytics are only getting better. Soon, we’ll see even more advanced AI video bots, real-time AI video automation, and seamless integration with business intelligence tools.

AI video analysis solution development companies are leading the way, helping businesses build smarter video with AI. Whether you need an AI video generator, AI video editor, or AI video analysis software, these companies deliver the tools you need to stay ahead.

Conclusion: Build Smarter Video with AI

AI video solutions are revolutionizing the way we create, edit, and analyze video. With AI video agents, AI video generator apps, and AI video analytics, businesses can automate workflows, gain valuable insights, and create engaging content faster than ever.

If you’re looking to harness the power of video, partnering with an AI video analysis solution development company is the smartest move. From AI video creation software to AI video bots and AI video automation, the possibilities are endless. Build smarter video with AI and unlock a new world of opportunities for your business.

0 notes

Text

Remote MCP server, Code Interpreter, Image Generation in API

OpenAI Responses API

Developers and organisations can now use the Responses API with Code Interpreter, image generation, and remote MCP server functionality.

OpenAI's Responses API, its interface for building agentic applications, gains several new features today. They include image generation, Code Interpreter, and improved file search, along with support for all remote Model Context Protocol (MCP) servers. These tools work with the OpenAI o-series reasoning models, GPT-4.1, and GPT-4o.

The Responses API lets o3 and o4-mini call tools and functions directly in their chain of thought, producing more relevant and contextual responses. By retaining reasoning tokens across requests and tool calls, o3 and o4-mini with the Responses API improve model intelligence and reduce developer costs and latency.

The Responses API, a core building block for agentic systems, has been improved. Since March 2025, hundreds of thousands of developers have used the API to process billions of tokens for agentic applications such as education aids, market intelligence agents, and coding agents.

The new features and built-in tools improve the functionality and dependability of agentic systems built with the Responses API.

New Tools in the Responses API

Several new tools have been added to the Responses API:

Remote MCP Server Support

The API's built-in tools can now connect to remote Model Context Protocol (MCP) servers. MCP is an open protocol that standardises how applications provide context to large language models (LLMs). With MCP servers, developers can connect OpenAI models to tools hosted by Cloudflare, HubSpot, Intercom, PayPal, Plaid, Shopify, Stripe, Square, Twilio, and Zapier with only a little code. OpenAI is also joining the MCP steering committee to help improve the ecosystem and the standard.
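As a rough illustration of the "little code" involved, the sketch below assembles the arguments for a Responses API call that attaches one remote MCP server as a tool. The tool fields (type, server_label, server_url, require_approval) follow OpenAI's published examples as far as I know and should be checked against the current API reference; the helper names, the prompt, and the server URL are hypothetical.

```python
def build_mcp_request(prompt: str, server_label: str, server_url: str,
                      model: str = "gpt-4.1") -> dict:
    """Assemble keyword arguments for a Responses API call with one remote MCP tool."""
    return {
        "model": model,
        "input": prompt,
        "tools": [
            {
                "type": "mcp",
                "server_label": server_label,
                "server_url": server_url,
                # Assumption: skip per-call approval; only sensible for trusted servers.
                "require_approval": "never",
            }
        ],
    }


def send(request: dict):
    """Send the request; needs the `openai` package and an OPENAI_API_KEY."""
    from openai import OpenAI  # imported lazily so the builder works without it
    return OpenAI().responses.create(**request)


request = build_mcp_request(
    prompt="List the tools this server exposes.",
    server_label="example",
    server_url="https://mcp.example.com/mcp",  # hypothetical server
)
print(request["tools"][0])
```

Keeping the payload builder separate from the network call makes the request easy to inspect or log before anything is sent.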

Image generation

Developers can use OpenAI's latest image generation model, gpt-image-1, as a tool in the Responses API. The tool supports real-time streaming of image previews and multi-turn edits, so an image can be refined step by step through prompts. The Images API can also generate images, but the image generation tool in the Responses API is new. Within the reasoning model series, only o3 supports this tool.

Code Interpreter

The Code Interpreter tool is now available in the Responses API. It can help with data analysis, complex mathematics and coding problems, and "thinking with images" by enabling models to understand and manipulate images. Models such as o3 and o4-mini fare better on Humanity's Last Exam when they use the Code Interpreter.

Enhancements to File Search

File search has been available in the API since March 2025, and new capabilities have now been added. The file search tool lets developers pull relevant document chunks into the model's context based on the user's query. The updates enable searches across multiple vector stores and attribute filtering with arrays.

These tools work with GPT-4o, GPT-4.1, and the OpenAI o-series reasoning models (o1, o3, o3-mini, and o4-mini; see the pricing and availability section for details). With these built-in tools, developers can build more capable agents with a single API call. On industry-standard benchmarks, models that can call more tools while reasoning perform better. Because o3 and o4-mini can invoke tools and functions directly from their reasoning, they give more contextually relevant responses.

Preserving reasoning tokens across tool calls and requests improves model intelligence and reduces latency and cost.

New Responses API Features

Along with the new tools, developers and enterprises may now use privacy, visibility, and dependability features:

Background Mode: This lets developers manage long tasks reliably and asynchronously. Background mode prevents timeouts and network issues while solving difficult problems with reasoning models, which can take minutes. Developers can stream events or poll background objects for completion to see the latest state. Agentic products like Operator, Codex, and deep research have similar functions.
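The polling pattern described for background mode can be sketched independently of any SDK. In the example below, fetch_status is a stand-in for whatever call retrieves the background response's current status; the status strings and the default interval are assumptions rather than confirmed API values.

```python
import time
from typing import Callable

# Assumed terminal states; check the API reference for the exact status values.
TERMINAL_STATES = {"completed", "failed", "cancelled", "incomplete"}


def poll_until_done(fetch_status: Callable[[], str],
                    interval_s: float = 2.0,
                    max_polls: int = 100,
                    sleep: Callable[[float], None] = time.sleep) -> str:
    """Call fetch_status repeatedly until it reports a terminal state."""
    for _ in range(max_polls):
        status = fetch_status()
        if status in TERMINAL_STATES:
            return status
        sleep(interval_s)  # wait before asking again
    raise TimeoutError(f"still running after {max_polls} polls")


# Simulated task that is queued, then running, then finished.
fake_statuses = iter(["queued", "in_progress", "in_progress", "completed"])
final = poll_until_done(lambda: next(fake_statuses), sleep=lambda _s: None)
print(final)
```

With the official Python SDK, fetch_status would typically wrap a call that retrieves the response by ID and returns its status field. Streaming events is the alternative when the client needs incremental updates rather than a final state.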

Reasoning Summaries: The API can now summarise the model's internal reasoning in natural language, similar to what ChatGPT shows. This helps developers debug, audit, and improve end-user experiences. Reasoning summaries are available at no extra cost.

Encrypted Reasoning Items: Customers who qualify for Zero Data Retention (ZDR) can reuse encrypted reasoning items across API requests, and OpenAI does not store these items. Sharing reasoning items between function calls improves intelligence, reduces token usage, and increases cache hit rates for models like o3 and o4-mini, which lowers latency and cost.

Pricing and availability

These new features and tools are available now. They are supported by the GPT-4o and GPT-4.1 series and by the OpenAI o-series reasoning models (o1, o3, o3-mini, and o4-mini). Within the reasoning series, only o3 supports image generation.

Pricing for existing tools is unchanged. The new tools are priced as follows:

Image generation costs $5.00 per 1M text input tokens, $10.00 per 1M image input tokens, and $40.00 per 1M image output tokens, with a 75% discount on cached input tokens.

Each Code Interpreter container costs $0.03.

File search costs $2.50 per 1k tool calls plus $0.10 per GB of vector storage per day.

Remote MCP server tool: there is no charge for the tool itself; developers pay only for the API output tokens.
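To see how these rates combine, here is a small cost estimator built only from the prices listed above. The function names and the sample workload are made up for illustration, and the sketch deliberately ignores the cached-input discount and the model's own token charges.

```python
PER_MILLION = 1_000_000


def image_generation_cost(text_in: int = 0, image_in: int = 0,
                          image_out: int = 0) -> float:
    """USD cost of image generation from token counts (no cache discount applied)."""
    return (text_in * 5.00 + image_in * 10.00 + image_out * 40.00) / PER_MILLION


def code_interpreter_cost(containers: int) -> float:
    """USD cost of Code Interpreter at $0.03 per container."""
    return containers * 0.03


def file_search_cost(tool_calls: int, storage_gb: float, days: int) -> float:
    """USD cost of file search: per-call fee plus daily vector storage."""
    return tool_calls * 2.50 / 1000 + storage_gb * 0.10 * days


# Hypothetical monthly workload.
total = (
    image_generation_cost(text_in=2_000, image_out=50_000)
    + code_interpreter_cost(containers=10)
    + file_search_cost(tool_calls=400, storage_gb=5, days=30)
)
print(f"Estimated tool cost: ${total:.2f}")
```

In this sample workload the storage term dominates, which suggests that trimming unused vector stores may matter more than reducing the number of tool calls.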

#remoteMCP#OpenAIResponsesAPI#OpenAI#ResponsesAPI#remoteModelContextProtocol#CodeInterpreter#technology#technews#technologyynews#news#govindhtech

0 notes

Text

How Azure Supports Big Data and Real-Time Data Processing

The explosion of digital data in recent years has pushed organizations to look for platforms that can handle massive datasets and real-time data streams efficiently. Microsoft Azure has emerged as a front-runner in this domain, offering robust services for big data analytics and real-time processing. Professionals looking to master this platform often pursue the Azure Data Engineering Certification, which helps them understand and implement data solutions that are both scalable and secure.

Azure not only offers storage and computing solutions but also integrates tools for ingestion, transformation, analytics, and visualization—making it a comprehensive platform for big data and real-time use cases.

Azure’s Approach to Big Data

Big data refers to extremely large datasets that cannot be processed using traditional data processing tools. Azure offers multiple services to manage, process, and analyze big data in a cost-effective and scalable manner.

1. Azure Data Lake Storage

Azure Data Lake Storage (ADLS) is designed specifically to handle massive amounts of structured and unstructured data. It supports high throughput and can manage petabytes of data efficiently. ADLS works seamlessly with analytics tools like Azure Synapse and Azure Databricks, making it a central storage hub for big data projects.

2. Azure Synapse Analytics

Azure Synapse combines big data and data warehousing capabilities into a single unified experience. It allows users to run complex SQL queries on large datasets and integrates with Apache Spark for more advanced analytics and machine learning workflows.

3. Azure Databricks

Built on Apache Spark, Azure Databricks provides a collaborative environment for data engineers and data scientists. It’s optimized for big data pipelines, allowing users to ingest, clean, and analyze data at scale.

Real-Time Data Processing on Azure

Real-time data processing allows businesses to make decisions instantly based on current data. Azure supports real-time analytics through a range of powerful services:

1. Azure Stream Analytics

This fully managed service processes real-time data streams from devices, sensors, applications, and social media. You can write SQL-like queries to analyze the data in real time and push results to dashboards or storage solutions.
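A typical real-time query groups events into fixed, non-overlapping time windows and aggregates each window. The sketch below shows that tumbling-window idea in plain Python so the logic is easy to follow; it is not Stream Analytics query syntax, and the event layout (timestamp in seconds, device ID, reading) is an assumption made for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[int, str, float]  # (timestamp_seconds, device_id, reading)


def tumbling_window_avg(events: Iterable[Event],
                        window_seconds: int) -> Dict[Tuple[int, str], float]:
    """Average reading per device for each fixed, non-overlapping time window."""
    totals = defaultdict(lambda: [0.0, 0])  # (window_start, device) -> [sum, count]
    for timestamp, device, reading in events:
        # Every timestamp belongs to exactly one window.
        window_start = (timestamp // window_seconds) * window_seconds
        bucket = totals[(window_start, device)]
        bucket[0] += reading
        bucket[1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


events = [
    (0, "sensor-a", 10.0),
    (5, "sensor-a", 20.0),
    (12, "sensor-a", 30.0),
    (3, "sensor-b", 4.0),
]
averages = tumbling_window_avg(events, window_seconds=10)
for (window_start, device), avg in sorted(averages.items()):
    print(window_start, device, avg)
```

A managed streaming service adds what this sketch leaves out: handling late or out-of-order events, scaling across partitions, and emitting results continuously as each window closes.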

2. Azure Event Hubs

Event Hubs can ingest millions of events per second, making it ideal for real-time analytics pipelines. It acts as the front door for event streaming and integrates with Stream Analytics, Azure Functions, and Apache Kafka clients.

3. Azure IoT Hub

For businesses working with IoT devices, Azure IoT Hub enables the secure transmission and real-time analysis of data from edge devices to the cloud. It supports bi-directional communication and can trigger workflows based on event data.

Integration and Automation Tools

Azure ensures seamless integration between services for both batch and real-time processing. Tools like Azure Data Factory and Logic Apps help automate the flow of data across the platform.

Azure Data Factory: Ideal for building ETL (Extract, Transform, Load) pipelines. It moves data from sources like SQL, Blob Storage, or even on-prem systems into processing tools like Synapse or Databricks.

Logic Apps: Allows you to automate workflows across Azure services and third-party platforms. You can create triggers based on real-time events, reducing manual intervention.

Security and Compliance in Big Data Handling

Handling big data and real-time processing comes with its share of risks, especially concerning data privacy and compliance. Azure addresses this by providing:

Data encryption at rest and in transit

Role-based access control (RBAC)

Private endpoints and network security

Compliance with standards like GDPR, HIPAA, and ISO

These features ensure that organizations can maintain the integrity and confidentiality of their data, no matter the scale.

Career Opportunities in Azure Data Engineering

With Azure’s growing dominance in cloud computing and big data, the demand for skilled professionals is at an all-time high. Those holding an Azure Data Engineering Certification are well-positioned to take advantage of job roles such as:

Azure Data Engineer

Cloud Solutions Architect

Big Data Analyst

Real-Time Data Engineer

IoT Data Specialist

The certification equips individuals with knowledge of Azure services, big data tools, and data pipeline architecture—all essential for modern data roles.

Final Thoughts

Azure offers an end-to-end ecosystem for both big data analytics and real-time data processing. Whether it’s massive historical datasets or fast-moving event streams, Azure provides scalable, secure, and integrated tools to manage them all.

Pursuing an Azure Data Engineering Certification is a great step for anyone looking to work with cutting-edge cloud technologies in today’s data-driven world. By mastering Azure’s powerful toolset, professionals can design data solutions that are future-ready and impactful.

#Azure#BigData#RealTimeAnalytics#AzureDataEngineer#DataLake#StreamAnalytics#CloudComputing#AzureSynapse#IoTHub#Databricks#CloudZone#AzureCertification#DataPipeline#DataEngineering

0 notes

Text

Emerging Technologies: How AI and ML Are Transforming Apps

The landscape of Mobile App Development is undergoing a profound transformation as artificial intelligence (AI) and machine learning (ML) technologies become increasingly accessible to developers of all skill levels. What was once the exclusive domain of specialized data scientists and researchers has now been democratized through user-friendly frameworks, cloud-based services, and on-device machine learning capabilities. This technological evolution is enabling a new generation of intelligent applications that can understand context, learn from user behavior, process natural language, recognize images, and make predictions—all capabilities that were science fiction just a decade ago. As these technologies mature, they are redefining what users expect from their mobile experiences and creating competitive advantages for organizations that effectively leverage them.

On-Device Intelligence: The New Frontier

Perhaps the most significant development in AI-powered mobile applications is the shift toward on-device processing. While early AI implementations relied heavily on cloud services—sending data to remote servers for processing and returning results to the device—modern mobile hardware now supports increasingly sophisticated machine learning models running directly on smartphones. Apple's Core ML and Google's ML Kit have made on-device inference accessible to mainstream Mobile App Development teams, offering significant advantages in performance, privacy, and offline functionality. Applications can now recognize faces, classify images, transcribe speech, and even perform complex natural language processing without an internet connection. This architectural shift reduces latency, preserves user privacy by keeping sensitive data local, and enables AI-powered features to function reliably regardless of network conditions—critical considerations for mobile experiences.

Personalization at Scale

The application of machine learning to user behavior data has revolutionized how applications adapt to individual preferences and needs. Rather than relying on broad demographic segments or explicit user settings, intelligent applications can observe interaction patterns, identify preferences, and dynamically adjust their interfaces and content recommendations. Music streaming apps analyze listening history to create personalized playlists, e-commerce applications learn which products interest specific users, and productivity tools identify usage patterns to suggest workflows or features. These personalization capabilities create virtuous cycles where increased engagement generates more behavioral data, enabling even more precise personalization. Implementing these systems effectively requires careful attention to data collection, model design, and the balance between algorithmic recommendations and user control—challenges that have become central to modern Mobile App Development practices.

Natural Language Understanding and Generation

The ability to understand and generate human language represents one of the most transformative applications of AI in mobile experiences. Natural Language Processing (NLP) technologies enable applications to extract meaning from text and speech, identifying intents, entities, and sentiment that drive intelligent responses. This capability powers increasingly sophisticated chatbots and virtual assistants that can handle customer service inquiries, schedule appointments, or provide information through conversational interfaces. More recently, large language models have demonstrated remarkable capabilities for generating human-like text, enabling applications that can summarize content, draft messages, or even create creative content. These technologies are transforming communication within applications, creating opportunities for more natural, accessible interfaces while introducing new design considerations around accuracy, transparency, and appropriate delegation of tasks to AI systems.

Computer Vision: Seeing the World Through Apps

The integration of computer vision capabilities has enabled a new category of applications that can analyze and understand visual information through device cameras. Mobile App Development now encompasses applications that can identify objects in real-time, measure physical spaces, detect text in images for translation, recognize faces for authentication or photo organization, and even diagnose skin conditions or plant diseases from photographs. Augmented reality applications build on these foundations, anchoring virtual content to the physical world through precise understanding of environments and surfaces. These capabilities are enabling entirely new categories of mobile experiences that bridge digital and physical contexts, from virtual try-on features in shopping applications to maintenance instructions that overlay equipment with interactive guides.

Predictive Features and Anticipatory Design

Perhaps the most subtle but impactful application of machine learning in mobile applications is the shift toward predictive and anticipatory experiences. By analyzing patterns in user behavior, applications can predict likely actions and surface relevant functionality proactively—suggesting replies to messages, pre-loading content likely to be accessed next, or recommending actions based on time, location, or context. These capabilities transform applications from passive tools that wait for explicit commands into proactive assistants that anticipate needs. Implementing these features effectively requires sophisticated Mobile App Development approaches that balance the value of predictions against the risk of incorrect suggestions, presenting predictive elements in ways that feel helpful rather than intrusive or presumptuous.
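One simple way to frame the trade-off between helpful and intrusive suggestions is to predict the next action from observed transitions and stay silent unless the evidence is strong. The sketch below is a toy frequency model under that assumption; the class name, thresholds, and action names are hypothetical, and production apps would normally use richer context and learned models.

```python
from collections import Counter, defaultdict
from typing import Optional


class NextActionPredictor:
    """Suggest a likely next action, but only when confidence is high enough."""

    def __init__(self, min_confidence: float = 0.6, min_observations: int = 3):
        self.min_confidence = min_confidence
        self.min_observations = min_observations
        self._transitions = defaultdict(Counter)  # action -> Counter of next actions

    def observe(self, previous_action: str, next_action: str) -> None:
        """Record that next_action followed previous_action."""
        self._transitions[previous_action][next_action] += 1

    def suggest(self, current_action: str) -> Optional[str]:
        """Return the most frequent follow-up, or None if evidence is too weak."""
        counts = self._transitions.get(current_action)
        if not counts:
            return None
        total = sum(counts.values())
        if total < self.min_observations:
            return None  # too little data: better to show nothing
        action, hits = counts.most_common(1)[0]
        return action if hits / total >= self.min_confidence else None


predictor = NextActionPredictor()
for _ in range(4):
    predictor.observe("open_inbox", "reply")
predictor.observe("open_inbox", "archive")
predictor.observe("open_photo", "share")

print(predictor.suggest("open_inbox"))  # 4 of 5 observations were "reply"
print(predictor.suggest("open_photo"))  # only one observation so far
```

Raising min_confidence trades fewer suggestions for fewer wrong ones, which is the balance between helpfulness and presumption that the paragraph above describes.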

Ethical Considerations and Responsible Implementation

The integration of AI capabilities into mobile applications introduces important ethical considerations that responsible development teams must address. Issues of bias in machine learning models can lead to applications that perform differently across demographic groups or reinforce problematic stereotypes. Privacy concerns are particularly acute when applications collect the substantial data required for personalization or behavioral modeling. Transparency about AI-powered features—helping users understand when they're interacting with automated systems and how their data influences recommendations—builds trust and sets appropriate expectations. Leading organizations in Mobile App Development are establishing ethical frameworks and review processes to ensure AI features are implemented responsibly, considering potential impacts across diverse user populations.

Development Approaches and Technical Considerations

For development teams looking to incorporate AI capabilities, several implementation paths offer different tradeoffs in terms of development complexity, customization, and resource requirements. Cloud-based AI services from providers like Google, Amazon, and Microsoft offer pre-trained models for common tasks like language processing, image recognition, and recommendation systems—accessible through APIs without requiring deep AI expertise. These services enable rapid implementation but may involve ongoing costs and privacy considerations around data transmission. On-device machine learning frameworks like TensorFlow Lite and Core ML support deploying custom or pre-trained models directly on mobile devices, offering better performance and privacy at the cost of more complex implementation. For teams with specialized needs and deeper technical resources, custom model development using frameworks like TensorFlow or PyTorch enables highly tailored capabilities that differentiate applications in competitive markets.

The Future: Multimodal AI and Ambient Intelligence

As AI technologies continue evolving, the next frontier in Mobile App Development involves multimodal systems that combine different forms of intelligence—understanding both visual and textual information, for example, or integrating location data with natural language understanding to provide contextually relevant responses. These capabilities will enable more sophisticated applications that understand context in ways that feel increasingly natural to users. Looking further ahead, the combination of ubiquitous sensors, on-device intelligence, and advanced interfaces points toward ambient computing experiences where intelligence extends beyond individual applications to create cohesive ecosystems of devices that collaboratively support user needs. This evolution will likely blur the boundaries of traditional application development, requiring new approaches to design, development, and user experience that consider intelligence as a fundamental system property rather than a feature added to conventional applications.

The integration of artificial intelligence and machine learning into mobile applications represents not just a set of new features but a fundamental shift in how applications function and what they can offer users. By understanding context, learning from experience, and anticipating needs, these intelligent applications are setting new standards for user experience that will quickly become expectations rather than differentiators. For organizations involved in Mobile App Development, the ability to effectively leverage these technologies—balancing technical possibilities with ethical considerations and meaningful user benefits—will increasingly define competitive advantage in the mobile ecosystem. As these technologies continue maturing and becoming more accessible to developers of all skill levels, their impact on mobile experiences will only accelerate, creating opportunities for applications that are not just tools but truly intelligent partners in users' digital lives.

Learn to Use SQL, MongoDB, and Big Data in Data Science

In today’s data-driven world, understanding the right tools is as important as understanding the data. If you plan to pursue a data science certification in Pune, knowing SQL, MongoDB, and Big Data technologies isn’t just a bonus — it’s essential. These tools form the backbone of modern data ecosystems and are widely used in real-world projects to extract insights, build models, and make data-driven decisions.

Whether you are updating your resume, looking for a job in analytics, or simply curious about how businesses apply data, learning to work with both structured and unstructured datasets should be a goal.

Let's look at the role SQL, MongoDB, and Big Data technologies play in data science, and how they can shape your career if you are pursuing data science classes in Pune.

Why Do These Tools Matter in Data Science?

The data that data scientists work with today ranges from transactional records in SQL databases, to social network data stored in NoSQL systems such as MongoDB, to datasets too large for conventional tools, which have to go through Big Data frameworks. That is why it is crucial to master these tools:

1. SQL: The Language of Structured Data

SQL (Structured Query Language) is the standard language for querying and managing relational databases. Today, organisations in almost every industry, including healthcare, finance, and retail, rely on SQL to run and analyse their operations.

How Is It Used in Real Life?

Imagine you work for a retail store in Pune and need to find out which products are popular during the festive season. You can use SQL to query the company’s sales database, select the data for each product, group it by category, and measure how sales velocity changes from season to season. For structured data of this kind, a SQL query is usually the fastest and most direct route to an answer.
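The retail scenario above can be sketched in a few lines. This is a minimal illustration, not a real store schema: the products and sales tables, their columns, and the sample rows are all made up for the example, and Python's built-in sqlite3 module stands in for the company database.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with made-up products and sales rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE products (id INTEGER PRIMARY KEY, category TEXT);
        CREATE TABLE sales (product_id INTEGER, season TEXT, units INTEGER);
        INSERT INTO products VALUES (1, 'Sweets'), (2, 'Lamps');
        INSERT INTO sales VALUES
            (1, 'festive', 120), (1, 'festive', 30), (1, 'regular', 40),
            (2, 'festive', 80), (2, 'regular', 10);
        """
    )
    return conn


def units_by_category_and_season(conn):
    """Total units sold per product category and season (JOIN + GROUP BY)."""
    query = """
        SELECT p.category, s.season, SUM(s.units) AS units_sold
        FROM sales AS s
        JOIN products AS p ON p.id = s.product_id
        GROUP BY p.category, s.season
        ORDER BY p.category, s.season
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    for row in units_by_category_and_season(build_demo_db()):
        print(row)
```

Comparing the festive and regular totals for each category gives a rough picture of seasonal demand. The same query would work against a production database once the table and column names are adjusted.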

Key SQL Concepts to Learn:

SELECT, JOIN, GROUP BY, and WHERE clauses

Window functions for advanced analytics

Indexing for query optimisation

Creating stored procedures and views

Whether you're a beginner or brushing up your skills during a data science course in Pune, SQL remains a non-negotiable part of the toolkit.

2. MongoDB: Managing Flexible and Semi-Structured Data

As businesses increasingly collect varied forms of data, like user reviews, logs, and IoT sensor readings, relational databases fall short. Enter MongoDB, a powerful NoSQL database that allows you to store and manage data in JSON-like documents.

Real-Life Example:

Suppose you're analysing customer feedback for a local e-commerce startup in Pune. The feedback varies in length, structure, and language. MongoDB lets you store this inconsistent data without defining a rigid schema upfront. With tools like MongoDB’s aggregation pipeline, you can quickly extract insights and categorise sentiment.
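As a rough sketch of what such an aggregation pipeline might look like, the snippet below builds one as plain Python data. It assumes each feedback document carries a product_id field and, where sentiment has already been labelled, a sentiment field; both field names are hypothetical. The function takes any object with an aggregate method, such as a PyMongo collection, so the pipeline itself can be inspected without a running server.

```python
def sentiment_pipeline(min_reviews=1):
    """Build a pipeline that counts feedback per product and sentiment label."""
    return [
        # Keep only documents that have a sentiment label (assumed field name).
        {"$match": {"sentiment": {"$exists": True}}},
        # Count documents for each (product, sentiment) pair.
        {
            "$group": {
                "_id": {"product": "$product_id", "sentiment": "$sentiment"},
                "reviews": {"$sum": 1},
            }
        },
        # Drop pairs with too few reviews to be meaningful.
        {"$match": {"reviews": {"$gte": min_reviews}}},
        # Largest groups first.
        {"$sort": {"reviews": -1}},
    ]


def summarise_feedback(collection, min_reviews=1):
    """Run the pipeline on a collection-like object and return a list of results."""
    return list(collection.aggregate(sentiment_pipeline(min_reviews)))
```

Documents that lack the sentiment field are simply skipped by the first stage, which is how the flexible schema is handled here without any upfront table definition.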

What to Focus On?

CRUD operations in MongoDB

Aggregation pipelines for analysis

Schema design and performance optimisation

Working with nested documents and arrays

Learning MongoDB is especially valuable during your data science certification in Pune, as it prepares you for working with diverse data sources common in real-world applications.

3. Big Data: Scaling Your Skills to Handle Volume

As your datasets grow, traditional tools may no longer suffice. Big Data technologies like Hadoop and Spark allow you to efficiently process terabytes or even petabytes of data.

Real-Life Use Case:

Think about a logistics company in Pune tracking thousands of deliveries daily. Data streams in from GPS devices, traffic sensors, and delivery apps. Using Big Data tools, you can process this information in real time to optimise routes, reduce fuel costs, and improve delivery times.

What to Learn?

Hadoop’s HDFS for distributed storage

MapReduce programming model

Apache Spark for real-time and batch processing

Integrating Big Data with Python and machine learning pipelines
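To make the MapReduce idea from the list above concrete, here is a toy, single-machine sketch in plain Python. It only illustrates the map, shuffle, and reduce phases; it is not Hadoop or Spark code, and the zone names and delivery IDs are invented sample data.

```python
from collections import defaultdict


def map_phase(records):
    """Map: emit a (zone, 1) pair for every delivery record."""
    for zone, _delivery_id in records:
        yield zone, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: collapse each key's values into a single total."""
    return {key: sum(values) for key, values in grouped.items()}


def count_deliveries(records):
    """Count deliveries per zone by chaining the three phases."""
    return reduce_phase(shuffle_phase(map_phase(records)))


if __name__ == "__main__":
    sample = [("Kothrud", "d1"), ("Baner", "d2"), ("Kothrud", "d3")]
    print(count_deliveries(sample))
```

In a real cluster the map and reduce steps run in parallel on many machines and the shuffle moves data between them, but the shape of the computation is the same.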

Understanding how Big Data integrates with ML workflows is a career-boosting advantage for those enrolled in data science training in Pune.

Combining SQL, MongoDB, and Big Data in Projects

In practice, data scientists often use these tools together. Here’s a simplified example:

You're building a predictive model to understand user churn for a telecom provider.

Use SQL to fetch customer plans and billing history.

Use MongoDB to analyse customer support chat logs.

Use Spark to process massive logs from call centres in real time.

Once this data is cleaned and structured, it feeds into your machine learning model. This combination showcases the power of knowing multiple tools — a vital edge you gain during a well-rounded data science course in Pune.
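The final step of the churn example, bringing the three sources together, might look like the sketch below. It assumes the SQL query, the MongoDB analysis, and the Spark job have each already been reduced to simple per-customer values; the function name, field names, and input shapes are all hypothetical.

```python
def build_feature_rows(billing_rows, chat_counts, dropped_call_counts):
    """Join per-customer values from three sources into one row per customer.

    billing_rows: iterable of (customer_id, plan, monthly_bill), e.g. from SQL
    chat_counts: dict of customer_id -> support chats, e.g. from MongoDB
    dropped_call_counts: dict of customer_id -> dropped calls, e.g. from Spark
    """
    rows = []
    for customer_id, plan, monthly_bill in billing_rows:
        rows.append(
            {
                "customer_id": customer_id,
                "plan": plan,
                "monthly_bill": monthly_bill,
                # Customers missing from a source get 0 rather than an error.
                "support_chats": chat_counts.get(customer_id, 0),
                "dropped_calls": dropped_call_counts.get(customer_id, 0),
            }
        )
    return rows
```

Each resulting dictionary is one training example, ready to be turned into a feature table for whichever machine learning library the project uses.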

How Do These Tools Impact Your Career?

Recruiters look for professionals who can navigate relational and non-relational databases and handle large-scale processing tasks. Mastering these tools not only boosts your credibility but also opens up job roles like:

Data Analyst

Machine Learning Engineer

Big Data Engineer

Data Scientist

If you're taking a data science certification in Pune, expect practical exposure to SQL and NoSQL tools, plus the chance to work on capstone projects involving Big Data. Employers value candidates who’ve worked with diverse datasets and understand how to optimise data workflows from start to finish.

Tips to Maximise Your Learning

Work on Projects: Try building a mini data pipeline using public datasets. For instance, analyse COVID-19 data using SQL, store news updates in MongoDB, and run trend analysis using Spark.

Use Cloud Platforms: Tools like Google BigQuery or MongoDB Atlas are great for practising in real-world environments.

Collaborate and Network: Connect with other learners in Pune. Attend meetups, webinars, or contribute to open-source projects.

Final Thoughts

SQL, MongoDB, and Big Data are no longer optional in the data science world — they’re essential. Whether you're just starting or upgrading your skills, mastering these technologies will make you future-ready.

If you plan to enroll in a data science certification in Pune, look for programs that emphasise hands-on training with these tools. They are the bridge between theory and real-world application, and mastering them will give you the confidence to tackle any data challenge.

Whether you’re from a tech background or switching careers, comprehensive data science training in Pune can help you unlock your potential. Embrace the learning curve, and soon, you'll be building data solutions that make a real impact, right from the heart of Pune.

Step-by-Step Breakdown of AI Video Analytics Software Development: Tools, Frameworks, and Best Practices for Scalable Deployment

AI Video Analytics is revolutionizing how businesses analyze visual data. From enhancing security systems to optimizing retail experiences and managing traffic, AI-powered video analytics software has become a game-changer. But how exactly is such a solution developed? Let’s break it down step by step—covering the tools, frameworks, and best practices that go into building scalable AI video analytics software.

Introduction: The Rise of AI in Video Analytics

The explosion of video data, from surveillance cameras to drones and smart cities, has outpaced the human capacity to monitor and interpret visual content in real time. This is where AI Video Analytics Software Development steps in. Using computer vision, machine learning, and deep neural networks, these systems analyze live or recorded video streams to detect events, recognize patterns, and trigger automated responses.

Step 1: Define the Use Case and Scope

Every AI video analytics solution starts with a clear business goal. Common use cases include:

Real-time threat detection in surveillance

Customer behavior analysis in retail

Traffic management in smart cities

Industrial safety monitoring

License plate recognition

Key Deliverables:

Problem statement

Target environment (edge, cloud, or hybrid)

Required analytics (object detection, tracking, counting, etc.)

Step 2: Data Collection and Annotation

AI models require massive amounts of high-quality, annotated video data. Without clean data, the model's accuracy will suffer.

Tools for Data Collection:

Surveillance cameras

Drones

Mobile apps and edge devices

Tools for Annotation:

CVAT (Computer Vision Annotation Tool)

Labelbox

Supervisely

Tip: Use diverse datasets (different lighting, angles, environments) to improve model generalization.

Step 3: Model Selection and Training

This is where the real AI work begins. The model learns to recognize specific objects, actions, or anomalies.

Popular AI Models for Video Analytics:

YOLOv8 (You Only Look Once)

OpenPose (for human pose estimation, often used as input to activity recognition)

DeepSORT (for multi-object tracking)

3D CNNs for spatiotemporal activity analysis

Frameworks:

TensorFlow

PyTorch

OpenCV (for pre/post-processing)

ONNX (for interoperability)

Best Practice: Start with pre-trained models and fine-tune them on your domain-specific dataset to save time and improve accuracy.
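To give a feel for what the tracking models in this step do under the hood, here is a small sketch of intersection-over-union (IoU) matching, the bounding-box overlap measure that trackers in the SORT family use when linking detections across frames. It is a deliberate simplification: the matching below is greedy, whereas production trackers such as DeepSORT combine optimal assignment with motion and appearance cues. Boxes are assumed to be (x1, y1, x2, y2) tuples, and the function names are illustrative.

```python
def iou(box_a, box_b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def match_detections(tracks, detections, threshold=0.3):
    """Greedily assign each track to its best-overlapping unused detection.

    tracks: dict of track_id -> last known box
    detections: list of boxes found in the current frame
    Returns a dict of track_id -> index into detections.
    """
    matches = {}
    used = set()
    for track_id, track_box in tracks.items():
        best_index, best_score = None, threshold
        for index, det_box in enumerate(detections):
            if index in used:
                continue
            score = iou(track_box, det_box)
            if score >= best_score:
                best_index, best_score = index, score
        if best_index is not None:
            matches[track_id] = best_index
            used.add(best_index)
    return matches
```

Tracks that find no detection above the threshold are left unmatched, which a full tracker would treat as a possibly occluded or departed object.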

Step 4: Edge vs. Cloud Deployment Strategy

AI video analytics can run in the cloud, on-premises, or at the edge, depending on latency, bandwidth, and privacy needs.

Cloud:

Scalable and easier to manage

Good for post-event analysis

Edge:

Low latency

Ideal for real-time alerts and privacy-sensitive applications

Hybrid:

Initial processing on edge devices, deeper analysis in the cloud

Popular Platforms:

NVIDIA Jetson for edge

AWS Panorama

Azure Video Indexer

Google Cloud Video AI

Step 5: Real-Time Inference Pipeline Design

The pipeline architecture must handle:

Video stream ingestion

Frame extraction

Model inference

Alert/visualization output
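The four stages above can be sketched as a single generator. This is a minimal outline under stated assumptions: frames is any iterable of decoded frames (in practice it might be fed by OpenCV or GStreamer), detect is a placeholder callable standing in for a real model that returns (label, score) pairs, and the parameter names are illustrative.

```python
def run_pipeline(frames, detect, every_n=5, min_score=0.5):
    """Yield (frame_index, label, score) alerts from a stream of frames.

    frames: iterable of frames (stream ingestion)
    detect: callable returning a list of (label, score) for one frame
    every_n: run inference on every n-th frame only (frame extraction)
    min_score: confidence needed before an alert is emitted
    """
    for index, frame in enumerate(frames):
        if index % every_n != 0:
            continue  # skip frames between samples to save compute
        for label, score in detect(frame):  # model inference
            if score >= min_score:
                yield index, label, score  # alert output


if __name__ == "__main__":
    # Stand-in "frames" and detector, purely for demonstration.
    def fake_detect(frame):
        return [("person", 0.9)] if frame == 10 else [("person", 0.2)]

    for alert in run_pipeline(range(12), fake_detect):
        print(alert)
```

Because the function is a generator, alerts are produced as frames arrive rather than after the whole stream has been read, which suits live feeds. Sampling every n-th frame is one simple way to trade detection latency for throughput.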

Tools & Libraries:

GStreamer for video streaming

FFmpeg for frame manipulation

Flask/FastAPI for inference APIs

Kafka/MQTT for real-time event streaming

Pro Tip: Use GPU acceleration with TensorRT or OpenVINO for faster inference speeds.

Step 6: Integration with Dashboards and APIs