#Facebook Data Scraping

Text

ok we all know FB is bad for oh so many reasons. But despite that, it had some uses that I couldn't deny, like keeping in touch with people you know IRL.

TODAY FB HAS CROSSED A FLIPPING LINE

I was texting a paragraph to my mom and before i hit send, there was a pop up in messenger announcing AI stickers. Ok, i thought, no big deal, sticker suggestions are common and can be triggered by certain words or phrases.

That is NOT WHAT THIS IS.

Messenger's AI stickers *actually READ YOUR PRIVATE MESSAGES*. How do I know this? The very first sticker suggestion was the entire paragraph i typed out AND DID NOT YET SEND on a background.

I immediately scoured the settings and there is NO WAY TO OPT OUT OF THE AI.... unless you DELETE YOUR ACCOUNT ENTIRELY.

Needless to say, im telling the people i wanna stay in touch with my contact info and that im going to delete my account. That is going way too far and i most DEFINITELY DO NOT CONSENT TO MY PRIVATE MESSAGES BEING READ BY FLIPPING AI.

If you needed a final reason to delete your FB account, this is it. This is your sign.

Delete your Facebook.

7 notes

Text

I think my favorite thing about anti-AI people is when they act like AI has only existed for the past year or two and every problem they only just discovered is a brand new thing that just started happening.

#txt#Yes everyone has been scraping your data for years BEFORE you knew about it#why the fuck do you think I just don't put anything on tumblr that I don't want tumblr or anyone else having access to?#It doesn't change anything just bc you know about it. It was already happening. Did the world explode?#Just a smart way to use SNS. Don't put stuff on here you wouldn't want reposted on Twitter or Instagram or Facebook.#And incidentally. Midjourney.#(Not that that's how AI works anyway. But no one cares about spreading misinfo when it's the infernal machine filled with devils.)#⌨️

5 notes

Text

Authors, you’re going to want to check this database…

Thank you, my dear @jtargaryen18 for sharing this info too. 💕 I’m sorry your books were stolen as well.

Meta, which is Facebook, Instagram and Threads, is now involved in a massive lawsuit. Internal memos have been released that prove Meta intentionally stole hundreds of thousands of books - including all of mine - to data scrape and train their AI. Here’s a link to see if they’ve stolen your work too: bit.ly/4iRK92t

There’s a class action suit just about to be launched, they’re waiting for the judge to determine if this is protected under the Fair Use laws, which it is absolutely not.

I am begging you to see that piracy is inexcusable, whether it’s a single person who doesn’t want to pay for the book or a despicable multi trillion dollar corporation who thinks they’re entitled to take your creative work for free. Authors write because we love it, because we love sharing these stories with you, because we love your reactions so much.

Because you are our community.

But we’re also supporting our families. Piracy, plagiarism and theft are inexcusable, no matter what the circumstances. Here’s another helpful link to the Authors Guild info: bit.ly/41S55zz

#tumblr besties and beloveds#AI training is theft#meta stole your books#piracy is evil#plagiarism is wrong#theft by meta to train their crap AI is evil#authors guild resources#please do not use LibGen

1K notes

Text

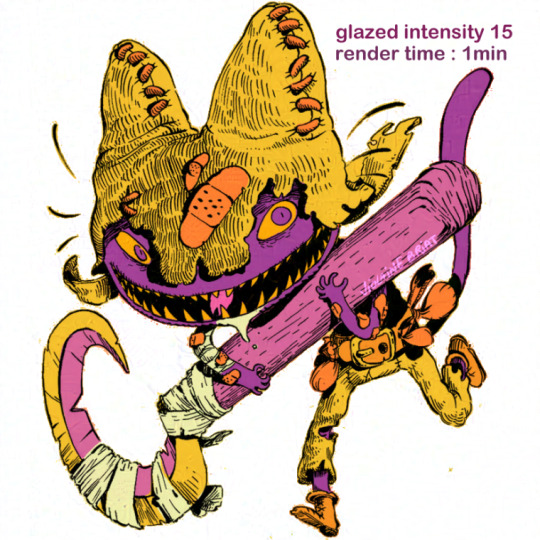

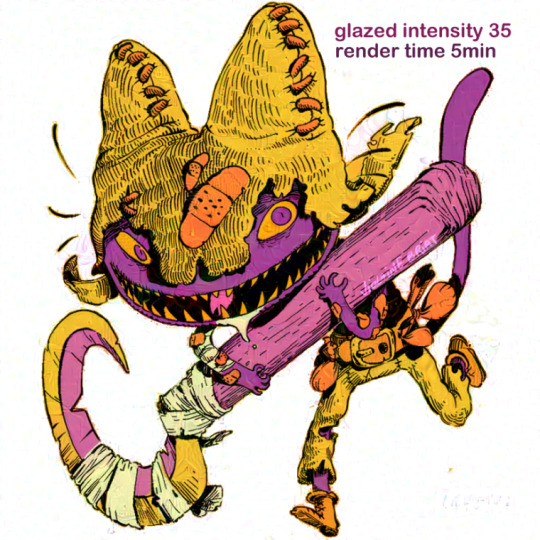

I spent the evening looking into this AI shit and made a wee informative post of the information I found and thought all artists would be interested and maybe help yall?

edit: forgot to mention Glaze and Nightshade to alter/disrupt AI from taking your work into their machines. You can use these and post and it will apparently mess up the AI and it won't take your content into its machine!

edit: ArtStation is not AI free! So make sure to read that when signing up if you do! (this post is also on twt)

[Image descriptions: A series of infographics titled: “Opt Out AI: [Social Media] and what I found.” The title image shows a drawing of a person holding up a stack of papers where the first says, ‘Terms of Service’ and the rest have logos for various social media sites and are falling onto the floor. Long transcriptions follow.

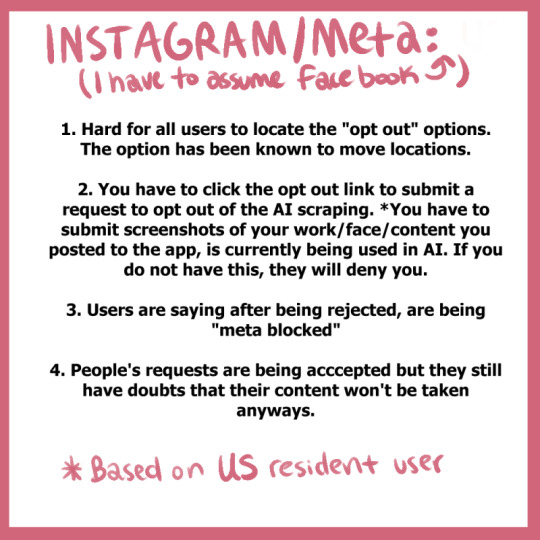

Instagram/Meta (I have to assume Facebook).

Hard for all users to locate the “opt out” options. The option has been known to move locations.

You have to click the opt out link to submit a request to opt out of the AI scraping. *You have to submit screenshots showing that your work/face/content you posted to the app is currently being used in AI. If you do not have this, they will deny you.

Users are saying that after being rejected, they are being “meta blocked”

People’s requests are being accepted but they still have doubts that their content won’t be taken anyways.

Twitter/X

As of August 2023, Twitter’s ToS update:

“Twitter has the right to use any content that users post on its platform to train its AI models, and that users grant Twitter a worldwide, non-exclusive, royalty-free license to do so.”

There isn’t much to say. They’re doing the same thing Instagram is doing (to my understanding) and we can’t even opt out.

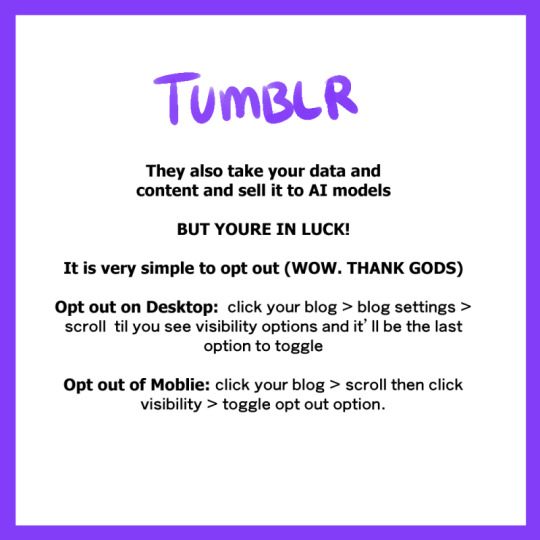

Tumblr

They also take your data and content and sell it to AI models.

But you’re in luck!

It is very simple to opt out (Wow. Thank Gods)

Opt out on Desktop: click on your blog > blog settings > scroll til you see visibility options and it’ll be the last option to toggle

Opt out on Mobile: click your blog > scroll then click visibility > toggle opt out option

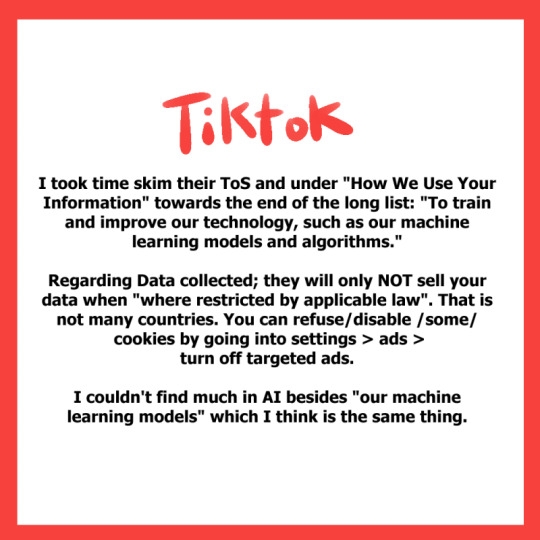

TikTok

I took time to skim their ToS, and under “How We Use Your Information”, towards the end of the long list: “To train and improve our technology, such as our machine learning models and algorithms.”

Regarding data collected; they will only not sell your data “where restricted by applicable law”. That is not many countries. You can refuse/disable some cookies by going into settings > ads > turn off targeted ads.

I couldn’t find much on AI besides “our machine learning models” which I think is the same thing.

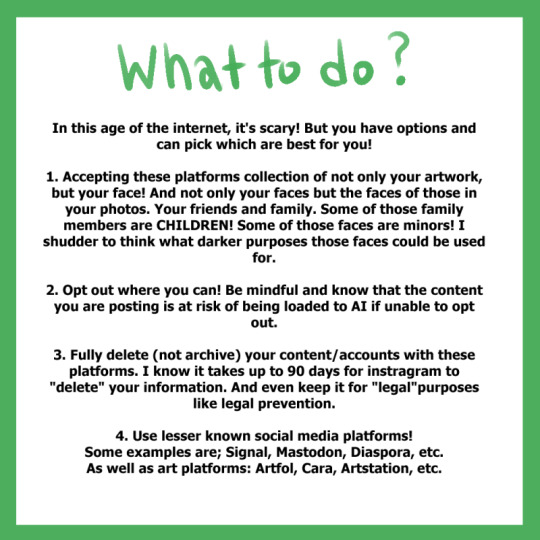

What to do?

In this age of the internet, it’s scary! But you have options and can pick which are best for you!

Accepting these platforms’ collection of not only your artwork, but your face! And not only your faces but the faces of those in your photos. Your friends and family. Some of those family members are children! Some of those faces are minors! I shudder to think what darker purposes those faces could be used for.

Opt out where you can! Be mindful and know the content you are posting is at risk of being loaded to AI if unable to opt out.

Fully delete (not archive) your content/accounts with these platforms. I know it takes up to 90 days for instagram to “delete” your information. And they even keep it for “legal” purposes like legal prevention.

Use lesser known social media platforms! Some examples are: Signal, Mastodon, Diaspora, etc. As well as art platforms: Artfol, Cara, ArtStation, etc.

The last drawing shows the same person as the title saying, ‘I am, by no means, a ToS autistic! So feel free to share any relatable information to these topics via reply or qrt!

I just wanted to share the information I found while searching for my own answers cause I’m sure people have the same questions as me.’ /End description] (thank you @a-captions-blog!)

4K notes

Text

The moment when their worlds collide, world peace will finally be restored.

#ro ramdin#mogul mail#ludwig#youtube commentary#theyre both so based and brutally honest in their own way#And take the typical brainless yt commentary formats and make it a think piece that leaves the viewer something to think about later#Ro does it with long extended metaphors snappy editing and what seems to be snapshots of her mind full of raw emotion#You can’t help but feel uncomfortable at how she displays facts and reality without an idealistic filter#While Ludwig prepares a list of tabs to present without a script hits the record button and does it all in one take#And even though his videos may be more “comfortable” to sit through#He does not shy away from the hard hitting reality of situations#Like in the threads vid where he couldn’t willingly promote the twitter alternative when facebook has been known to scrape user data etc.#Both know the YouTube space so well and want their viewers to be aware of it too#Both have expressed their displeasure and discomfort around parasocial relationships and their role as “commentary youtubers”#How what they say can and will be believed by thousands and the pressures that power holds#Ro and Lud are the only youtubers I’ve seen at least to fully disclose their patreon earnings and twitch contract without ill will#Like that’s strange#Also they’re both funny as fuck#Very important note yes write that down#I just want to listen to them have a conversation#Or at least make a vague reference to each other it’s all I ask#I know this post’s audience is niche (only me) but it had to be said

0 notes

Text

Facebook Data Scraper | Facebook Data Scraping Tools and Extension

Our Facebook Data Scraper helps you extract data from Facebook. Use Facebook Data Scraping Tools to scrape posts, hashtags, etc. in countries like USA, UK, UAE.

Know more : https://www.actowizsolutions.com/facebook-data-scraper.php

0 notes

Text

I think most of us should take the whole ai scraping situation as a sign that we should maybe stop giving google/facebook/big corps all our data and look into alternatives that actually value your privacy.

i know this is easier said than done because everybody under the sun seems to use these services, but I promise you it’s not impossible. In fact, I made a list of a few alternatives to popular apps and services, alternatives that are privacy first, open source and don’t sell your data.

right off the bat I suggest you stop using gmail. it’s trash and not secure at all. google can read your emails. in fact, google has access to all the data on your account and while what they do with it is already shady, I don’t even want to know what the whole ai situation is going to bring. a good alternative to a few google services is skiff. they provide a secure, e2ee mail service along with a workspace that can easily import google documents, a calendar and 10 gb free storage. i’ve been using it for a while and it’s great.

a good alternative to google drive is either koofr or filen. I use filen because everything you upload on there is end to end encrypted with zero knowledge. they offer 10 gb of free storage and really affordable lifetime plans.

google docs? i don’t know her. instead, try cryptpad. I don’t have the spoons to list all the great features of this service, you just have to believe me. nothing you write there will be used to train ai and you can share it just as easily. if skiff is too limited for you and you also need stuff like sheets or forms, cryptpad is here for you. the only downside i could think of is that they don’t have a mobile app, but the site works great in a browser too.

since there is no real alternative to youtube I recommend watching your little slime videos through a streaming frontend like freetube or new pipe. besides the fact that they remove ads, they also stop google from tracking what you watch. there is a bit of functionality loss with these services, but if you just want to watch videos privately they’re great.

if you’re looking for an alternative to google photos that is secure and end to end encrypted you might want to look into stingle, although in my experience filen’s photos tab works pretty well too.

oh, also, for the love of god, stop using whatsapp, facebook messenger or instagram for messaging. just stop. signal and telegram are literally here and they’re free. spread the word, educate your friends, ask them if they really want anyone to snoop around their private conversations.

regarding browsers, you know the drill. throw google chrome/edge in the trash (they’re really basically spyware disguised as browsers) and download either librewolf or brave. mozilla can be a great secure option too, with a bit of tinkering.

if you wanna get a vpn (and I recommend you do) be wary that some of them are scammy. do your research, read their terms and conditions, familiarise yourself with their model. if you don’t wanna do that and are willing to trust my word, go with mullvad. they don’t keep any logs. it’s 5 euros a month with no different pricing plans or other bullshit.

lastly, whatever alternative you decide on, what matters most is that you don’t keep all your data in one place. don’t trust a service to take care of your emails, documents, photos and messages. store all these things in different, trustworthy (preferably open source) places. there is absolutely no reason google has to know everything about you.

do your own research as well, don’t just trust the first vpn service your favourite youtuber gets sponsored by. don’t trust random tech blogs to tell you what the best cloud storage service is — they get good money for advertising one or the other. compare shit on your own or ask a tech savvy friend to help you. you’ve got this.

#internet privacy#privacy#vpn#google docs#ai scraping#psa#ai#archive of our own#ao3 writer#mine#textpost

1K notes

Text

AI “art” and uncanniness

TOMORROW (May 14), I'm on a livecast about AI AND ENSHITTIFICATION with TIM O'REILLY; on WEDNESDAY (May 15), I'm in NORTH HOLLYWOOD for a screening of STEPHANIE KELTON'S FINDING THE MONEY; FRIDAY (May 17), I'm at the INTERNET ARCHIVE in SAN FRANCISCO to keynote the 10th anniversary of the AUTHORS ALLIANCE.

When it comes to AI art (or "art"), it's hard to find a nuanced position that respects creative workers' labor rights, free expression, copyright law's vital exceptions and limitations, and aesthetics.

I am, on balance, opposed to AI art, but there are some important caveats to that position. For starters, I think it's unequivocally wrong – as a matter of law – to say that scraping works and training a model with them infringes copyright. This isn't a moral position (I'll get to that in a second), but rather a technical one.

Break down the steps of training a model and it quickly becomes apparent why it's technically wrong to call this a copyright infringement. First, the act of making transient copies of works – even billions of works – is unequivocally fair use. Unless you think search engines and the Internet Archive shouldn't exist, then you should support scraping at scale:

https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

And unless you think that Facebook should be allowed to use the law to block projects like Ad Observer, which gathers samples of paid political disinformation, then you should support scraping at scale, even when the site being scraped objects (at least sometimes):

https://pluralistic.net/2021/08/06/get-you-coming-and-going/#potemkin-research-program

After making transient copies of lots of works, the next step in AI training is to subject them to mathematical analysis. Again, this isn't a copyright violation.

Making quantitative observations about works is a longstanding, respected and important tool for criticism, analysis, archiving and new acts of creation. Measuring the steady contraction of the vocabulary in successive Agatha Christie novels turns out to offer a fascinating window into her dementia:

https://www.theguardian.com/books/2009/apr/03/agatha-christie-alzheimers-research
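To make "quantitative observations about works" concrete, here is a minimal sketch of one such measurement: counting the distinct words in a fixed-length opening sample of a text. The `vocabulary_size` helper, the sample length and the two sample strings are hypothetical illustrations, not the method used in the research linked above.

```python
import re

def vocabulary_size(text, sample_words=50):
    """Count distinct word types among the first `sample_words` words."""
    # Lowercase and keep only runs of letters/apostrophes as "words".
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words[:sample_words]))

# Hypothetical stand-ins for an early and a late text by the same author.
early = "the quick brown fox jumps over the lazy dog near the quiet river"
late = "the thing was a thing and the thing was the thing she said"

print("early:", vocabulary_size(early))
print("late: ", vocabulary_size(late))
```

Using the same sample length for every text matters, because longer texts naturally contain more distinct words; comparing equal-sized samples keeps the counts comparable.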

Programmatic analysis of scraped online speech is also critical to the burgeoning formal analyses of the language spoken by minorities, producing a vibrant account of the rigorous grammar of dialects that have long been dismissed as "slang":

https://www.researchgate.net/publication/373950278_Lexicogrammatical_Analysis_on_African-American_Vernacular_English_Spoken_by_African-Amecian_You-Tubers

Since 1988, UCL Survey of English Language has maintained its "International Corpus of English," and scholars have plumbed its depth to draw important conclusions about the wide variety of Englishes spoken around the world, especially in postcolonial English-speaking countries:

https://www.ucl.ac.uk/english-usage/projects/ice.htm

The final step in training a model is publishing the conclusions of the quantitative analysis of the temporarily copied documents as software code. Code itself is a form of expressive speech – and that expressivity is key to the fight for privacy, because the fact that code is speech limits how governments can censor software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech/

Are models infringing? Well, they certainly can be. In some cases, it's clear that models "memorized" some of the data in their training set, making the fair use, transient copy into an infringing, permanent one. That's generally considered to be the result of a programming error, and it could certainly be prevented (say, by comparing the model to the training data and removing any memorizations that appear).

Not every seeming act of memorization is a memorization, though. While specific models vary widely, the amount of data from each training item retained by the model is very small. For example, Midjourney retains about one byte of information from each image in its training data. If we're talking about a typical low-resolution web image of say, 300kb, that would be one three-hundred-thousandth (about 0.00033%) of the original image.

Typically in copyright discussions, when one work contains 0.00033% of another work, we don't even raise the question of fair use. Rather, we dismiss the use as de minimis (short for de minimis non curat lex or "The law does not concern itself with trifles"):

https://en.wikipedia.org/wiki/De_minimis

Busting someone who takes 0.00033% of your work for copyright infringement is like swearing out a trespassing complaint against someone because the edge of their shoe touched one blade of grass on your lawn.
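The one-byte-per-image arithmetic is easy to check. A minimal sketch, taking the one-byte figure as given and reading "300kb" as 300,000 bytes (an assumption); it prints the raw fraction and the same figure as a percentage, which are easy to confuse:

```python
bytes_retained = 1          # per-image figure quoted in the text
image_size_bytes = 300_000  # "300kb" read as 300,000 bytes (assumption)

fraction = bytes_retained / image_size_bytes  # one three-hundred-thousandth
percent = fraction * 100                      # same value as a percentage

print(f"fraction: {fraction:.7f}")
print(f"percent:  {percent:.5f}%")
```

The raw fraction is roughly 0.0000033; multiplied by 100 to express it as a percentage, it is roughly 0.00033%. Either way, it is a vanishingly small share of the original.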

But some works or elements of work appear many times online. For example, the Getty Images watermark appears on millions of similar images of people standing on red carpets and runways, so a model that takes even an infinitesimal sample of each one of those works might still end up being able to produce a whole, recognizable Getty Images watermark.

The same is true for wire-service articles or other widely syndicated texts: there might be dozens or even hundreds of copies of these works in training data, resulting in the memorization of long passages from them.

This might be infringing (we're getting into some gnarly, unprecedented territory here), but again, even if it is, it wouldn't be a big hardship for model makers to post-process their models by comparing them to the training set, deleting any inadvertent memorizations. Even if the resulting model had zero memorizations, this would do nothing to alleviate the (legitimate) concerns of creative workers about the creation and use of these models.
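In its most naive form, comparing against the training set to find memorizations might look something like the sketch below. It rests on assumptions that are not in the text: it checks sampled model outputs (not the model's weights) against training texts, it treats any shared run of `n` consecutive words as a possible memorization, and the `memorized_spans` helper and example strings are hypothetical. Real checks would be far more involved.

```python
def ngrams(text, n):
    """Return the set of all runs of n consecutive words in `text`."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def memorized_spans(output, training_docs, n=5):
    """Return the n-word runs in `output` that also appear in any training doc."""
    seen_in_training = set()
    for doc in training_docs:
        seen_in_training |= ngrams(doc, n)
    return ngrams(output, n) & seen_in_training

# Hypothetical data: one widely syndicated sentence and one model output.
training_docs = [
    "wire services reported that the council approved the new budget on tuesday evening"
]
output = "she said the council approved the new budget on tuesday but gave no details"

for span in sorted(memorized_spans(output, training_docs)):
    print(" ".join(span))
```

The choice of `n` is a trade-off: small values flag ordinary phrases that many texts share, while large values miss shorter verbatim passages.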

So here's the first nuance in the AI art debate: as a technical matter, training a model isn't a copyright infringement. Creative workers who hope that they can use copyright law to prevent AI from changing the creative labor market are likely to be very disappointed in court:

https://www.hollywoodreporter.com/business/business-news/sarah-silverman-lawsuit-ai-meta-1235669403/

But copyright law isn't a fixed, eternal entity. We write new copyright laws all the time. If current copyright law doesn't prevent the creation of models, what about a future copyright law?

Well, sure, that's a possibility. The first thing to consider is the possible collateral damage of such a law. The legal space for scraping enables a wide range of scholarly, archival, organizational and critical purposes. We'd have to be very careful not to inadvertently ban, say, the scraping of a politician's campaign website, lest we enable liars to run for office and renege on their promises, while they insist that they never made those promises in the first place. We wouldn't want to abolish search engines, or stop creators from scraping their own work off sites that are going away or changing their terms of service.

Now, onto quantitative analysis: counting words and measuring pixels are not activities that you should need permission to perform, with or without a computer, even if the person whose words or pixels you're counting doesn't want you to. You should be able to look as hard as you want at the pixels in Kate Middleton's family photos, or track the rise and fall of the Oxford comma, and you shouldn't need anyone's permission to do so.

Finally, there's publishing the model. There are plenty of published mathematical analyses of large corpuses that are useful and unobjectionable. I love me a good Google n-gram:

https://books.google.com/ngrams/graph?content=fantods%2C+heebie-jeebies&year_start=1800&year_end=2019&corpus=en-2019&smoothing=3

And large language models fill all kinds of important niches, like the Human Rights Data Analysis Group's LLM-based work helping the Innocence Project New Orleans extract data from wrongful conviction case files:

https://hrdag.org/tech-notes/large-language-models-IPNO.html

So that's nuance number two: if we decide to make a new copyright law, we'll need to be very sure that we don't accidentally crush these beneficial activities that don't undermine artistic labor markets.

This brings me to the most important point: passing a new copyright law that requires permission to train an AI won't help creative workers get paid or protect our jobs.

Getty Images pays photographers the least it can get away with. Publishers' contracts have transformed by inches into miles-long, ghastly rights grabs that take everything from writers, but still shift legal risks onto them:

https://pluralistic.net/2022/06/19/reasonable-agreement/

Publishers like the New York Times bitterly oppose their writers' unions:

https://actionnetwork.org/letters/new-york-times-stop-union-busting

These large corporations already control the copyrights to gigantic amounts of training data, and they have means, motive and opportunity to license these works for training a model in order to pay us less, and they are engaged in this activity right now:

https://www.nytimes.com/2023/12/22/technology/apple-ai-news-publishers.html

Big games studios are already acting as though there was a copyright in training data, and requiring their voice actors to begin every recording session with words to the effect of, "I hereby grant permission to train an AI with my voice" and if you don't like it, you can hit the bricks:

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

If you're a creative worker hoping to pay your bills, it doesn't matter whether your wages are eroded by a model produced without paying your employer for the right to do so, or whether your employer got to double dip by selling your work to an AI company to train a model, and then used that model to fire you or erode your wages:

https://pluralistic.net/2023/02/09/ai-monkeys-paw/#bullied-schoolkids

Individual creative workers rarely have any bargaining leverage over the corporations that license our copyrights. That's why copyright's 40-year expansion (in duration, scope, statutory damages) has resulted in larger, more profitable entertainment companies, and lower payments – in real terms and as a share of the income generated by their work – for creative workers.

As Rebecca Giblin and I write in our book Chokepoint Capitalism, giving creative workers more rights to bargain with against giant corporations that control access to our audiences is like giving your bullied schoolkid extra lunch money – it's just a roundabout way of transferring that money to the bullies:

https://pluralistic.net/2022/08/21/what-is-chokepoint-capitalism/

There's an historical precedent for this struggle – the fight over music sampling. 40 years ago, it wasn't clear whether sampling required a copyright license, and early hip-hop artists took samples without permission, the way a horn player might drop a couple bars of a well-known song into a solo.

Many artists were rightfully furious over this. The "heritage acts" (the music industry's euphemism for "Black people") who were most sampled had been given very bad deals and had seen very little of the fortunes generated by their creative labor. Many of them were desperately poor, despite having made millions for their labels. When other musicians started making money off that work, they got mad.

In the decades that followed, the system for sampling changed, partly through court cases and partly through the commercial terms set by the Big Three labels: Sony, Warner and Universal, who control 70% of all music recordings. Today, you generally can't sample without signing up to one of the Big Three (they are reluctant to deal with indies), and that means taking their standard deal, which is very bad, and also signs away your right to control your samples.

So a musician who wants to sample has to sign the bad terms offered by a Big Three label, and then hand $500 out of their advance to one of those Big Three labels for the sample license. That $500 typically doesn't go to another artist – it goes to the label, who share it around their executives and investors. This is a system that makes every artist poorer.

But it gets worse. Putting a price on samples changes the kind of music that can be economically viable. If you wanted to clear all the samples on an album like Public Enemy's "It Takes a Nation of Millions To Hold Us Back," or the Beastie Boys' "Paul's Boutique," you'd have to sell every CD for $150, just to break even:

https://memex.craphound.com/2011/07/08/creative-license-how-the-hell-did-sampling-get-so-screwed-up-and-what-the-hell-do-we-do-about-it/

Sampling licenses don't just make every artist financially worse off, they also prevent the creation of music of the sort that millions of people enjoy. But it gets even worse. Some older, sample-heavy music can't be cleared. Most of De La Soul's catalog wasn't available for 15 years, and even though some of their seminal music came back in March 2023, the band's frontman Trugoy the Dove didn't live to see it – he died in February 2023:

https://www.vulture.com/2023/02/de-la-soul-trugoy-the-dove-dead-at-54.html

This is the third nuance: even if we can craft a model-banning copyright system that doesn't catch a lot of dolphins in its tuna net, it could still leave artists poorer.

Back when sampling started, it wasn't clear whether it would ever be considered artistically important. Early sampling was crude and experimental. Musicians who trained for years to master an instrument were dismissive of the idea that clicking a mouse was "making music." Today, most of us don't question the idea that sampling can produce meaningful art – even musicians who believe in licensing samples.

Having lived through that era, I'm prepared to believe that maybe I'll look back on AI "art" and say, "damn, I can't believe I never thought that could be real art."

But I wouldn't give odds on it.

I don't like AI art. I find it anodyne, boring. As Henry Farrell writes, it's uncanny, and not in a good way:

https://www.programmablemutter.com/p/large-language-models-are-uncanny

Farrell likens the work produced by AIs to the movement of a Ouija board's planchette, something that "seems to have a life of its own, even though its motion is a collective side-effect of the motions of the people whose fingers lightly rest on top of it." This is "spooky-action-at-a-close-up," transforming "collective inputs … into apparently quite specific outputs that are not the intended creation of any conscious mind."

Look, art is irrational in the sense that it speaks to us at some non-rational, or sub-rational level. Caring about the tribulations of imaginary people or being fascinated by pictures of things that don't exist (or that aren't even recognizable) doesn't make any sense. There's a way in which all art is like an optical illusion for our cognition, an imaginary thing that captures us the way a real thing might.

But art is amazing. Making art and experiencing art makes us feel big, numinous, irreducible emotions. Making art keeps me sane. Experiencing art is a precondition for all the joy in my life. Having spent most of my life as a working artist, I've come to the conclusion that the reason for this is that art transmits an approximation of some big, numinous irreducible emotion from an artist's mind to our own. That's it: that's why art is amazing.

AI doesn't have a mind. It doesn't have an intention. The aesthetic choices made by AI aren't choices, they're averages. As Farrell writes, "LLM art sometimes seems to communicate a message, as art does, but it is unclear where that message comes from, or what it means. If it has any meaning at all, it is a meaning that does not stem from organizing intention" (emphasis mine).

Farrell cites Mark Fisher's The Weird and the Eerie, which defines "weird" in easy to understand terms ("that which does not belong") but really grapples with "eerie."

For Fisher, eeriness is "when there is something present where there should be nothing, or there is nothing present when there should be something." AI art produces the seeming of intention without intending anything. It appears to be an agent, but it has no agency. It's eerie.

Fisher talks about capitalism as eerie. Capital is "conjured out of nothing" but "exerts more influence than any allegedly substantial entity." The "invisible hand" shapes our lives more than any person. The invisible hand is fucking eerie. Capitalism is a system in which insubstantial non-things – corporations – appear to act with intention, often at odds with the intentions of the human beings carrying out those actions.

So will AI art ever be art? I don't know. There's a long tradition of using random or irrational or impersonal inputs as the starting point for human acts of artistic creativity. Think of divination:

https://pluralistic.net/2022/07/31/divination/

Or Brian Eno's Oblique Strategies:

http://stoney.sb.org/eno/oblique.html

I love making my little collages for this blog, though I wouldn't call them important art. Nevertheless, piecing together bits of other people's work can make fantastic, important work of historical note:

https://www.johnheartfield.com/John-Heartfield-Exhibition/john-heartfield-art/famous-anti-fascist-art/heartfield-posters-aiz

Even though painstakingly cutting out tiny elements from others' images can be a meditative and educational experience, I don't think that using tiny scissors or the lasso tool is what defines the "art" in collage. If you can automate some of this process, it could still be art.

Here's what I do know. Creating an individual bargainable copyright over training will not improve the material conditions of artists' lives – all it will do is change the relative shares of the value we create, shifting some of that value from tech companies that hate us and want us to starve to entertainment companies that hate us and want us to starve.

As an artist, I'm foursquare against anything that stands in the way of making art. As an artistic worker, I'm entirely committed to things that help workers get a fair share of the money their work creates, feed their families and pay their rent.

I think today's AI art is bad, and I think tomorrow's AI art will probably be bad, but even if you disagree (with either proposition), I hope you'll agree that we should be focused on making sure art is legal to make and that artists get paid for it.

Just because copyright won't fix the creative labor market, it doesn't follow that nothing will. If we're worried about labor issues, we can look to labor law to improve our conditions. That's what the Hollywood writers did, in their groundbreaking 2023 strike:

https://pluralistic.net/2023/10/01/how-the-writers-guild-sunk-ais-ship/

Now, the writers had an advantage: they are able to engage in "sectoral bargaining," where a union bargains with all the major employers at once. That's illegal in nearly every other kind of labor market. But if we're willing to entertain the possibility of getting a new copyright law passed (that won't make artists better off), why not the possibility of passing a new labor law (that will)? Sure, our bosses won't lobby alongside of us for more labor protection, the way they would for more copyright (think for a moment about what that says about who benefits from copyright versus labor law expansion).

But all workers benefit from expanded labor protection. Rather than going to Congress alongside our bosses from the studios and labels and publishers to demand more copyright, we could go to Congress alongside every kind of worker, from fast-food cashiers to publishing assistants to truck drivers to demand the right to sectoral bargaining. That's a hell of a coalition.

And if we do want to tinker with copyright to change the way training works, let's look at collective licensing, which can't be bargained away, rather than individual rights that can be confiscated at the entrance to our publisher, label or studio's offices. These collective licenses have been a huge success in protecting creative workers:

https://pluralistic.net/2023/02/26/united-we-stand/

Then there's copyright's wildest wild card: The US Copyright Office has repeatedly stated that works made by AIs aren't eligible for copyright, which is the exclusive purview of works of human authorship. This has been affirmed by courts:

https://pluralistic.net/2023/08/20/everything-made-by-an-ai-is-in-the-public-domain/

Neither AI companies nor entertainment companies will pay creative workers if they don't have to. But for any company contemplating selling an AI-generated work, the fact that it is born in the public domain presents a substantial hurdle, because anyone else is free to take that work and sell it or give it away.

Whether or not AI "art" will ever be good art isn't what our bosses are thinking about when they pay for AI licenses: rather, they are calculating that they have so much market power that they can sell whatever slop the AI makes, and pay less for the AI license than they would pay for a human artist's work. As is the case in every industry, AI can't do an artist's job, but an AI salesman can convince an artist's boss to fire the creative worker and replace them with AI:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

They don't care if it's slop; they just care about their bottom line. A studio executive who cancels a widely anticipated film prior to its release to get a tax credit isn't thinking about artistic integrity. They care about one thing: money. The fact that AI works can be freely copied, sold or given away may not mean much to a creative worker who actually makes their own art, but I assure you, it's the only thing that matters to our bosses.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/13/spooky-action-at-a-close-up/#invisible-hand

#pluralistic#ai art#eerie#ai#weird#henry farrell#copyright#copyfight#creative labor markets#what is art#ideomotor response#mark fisher#invisible hand#uncanniness#prompting

270 notes

Text

In late January, a warning spread through the London-based Facebook group Are We Dating the Same Guy?—but this post wasn’t about a bad date or a cheating ex. A connected network of male-dominated Telegram groups had surfaced, sharing and circulating nonconsensual intimate images of women. Their justification? Retaliation.

On January 23, users in the AWDTSG Facebook group began warning about hidden Telegram groups. Screenshots and TikTok videos surfaced, revealing public Telegram channels where users were sharing nonconsensual intimate images. Further investigation by WIRED identified additional channels linked to the network. By scraping thousands of messages from these groups, it became possible to analyze their content and the patterns of abuse.

AWDTSG, a sprawling web of over 150 regional forums across Facebook alone, with roughly 3 million members worldwide, was designed by Paolo Sanchez in 2022 in New York as a space for women to share warnings about predatory men. But its rapid growth made it a target. Critics argue that the format allows unverified accusations to spiral. Some men have responded with at least three defamation lawsuits filed in recent years against members, administrators, and even Meta, Facebook’s parent company. Others took a different route: organized digital harassment.

Primarily using Telegram group data made available through Telemetr.io, a Telegram analytics tool, WIRED analyzed more than 3,500 messages from a Telegram group linked to a larger misogynistic revenge network. Over 24 hours, WIRED observed users systematically tracking, doxing, and degrading women from AWDTSG, circulating nonconsensual images, phone numbers, usernames, and location data.

From January 26 to 27, the chats became a breeding ground for misogynistic, racist, sexual digital abuse of women, with women of color bearing the brunt of the targeted harassment and abuse. Thousands of users encouraged each other to share nonconsensual intimate images, often referred to as “revenge porn,” and requested and circulated women’s phone numbers, usernames, locations, and other personal identifiers.

As women from AWDTSG began infiltrating the Telegram group, at least one user grew suspicious: “These lot just tryna get back at us for exposing them.”

When women on Facebook tried to alert others of the risk of doxing and leaks of their intimate content, AWDTSG moderators removed their posts. (The group’s moderators did not respond to multiple requests for comment.) Meanwhile, men who had previously coordinated through their own Facebook groups like “Are We Dating the Same Girl” shifted their operations in late January to Telegram's more permissive environment. Their message was clear: If they can do it, so can we.

"In the eyes of some of these men, this is a necessary act of defense against a kind of hostile feminism that they believe is out to ruin their lives," says Carl Miller, cofounder of the Center for the Analysis of Social Media and host of the podcast Kill List.

The dozen Telegram groups that WIRED has identified are part of a broader digital ecosystem often referred to as the manosphere, an online network of forums, influencers, and communities that perpetuate misogynistic ideologies.

“Highly isolated online spaces start reinforcing their own worldviews, pulling further and further from the mainstream, and in doing so, legitimizing things that would be unthinkable offline,” Miller says. “Eventually, what was once unthinkable becomes the norm.”

This cycle of reinforcement plays out across multiple platforms. Facebook forums act as the first point of contact, TikTok amplifies the rhetoric in publicly available videos, and Telegram is used to enable illicit activity. The result? A self-sustaining network of harassment that thrives on digital anonymity.

TikTok amplified discussions around the Telegram groups. WIRED reviewed 12 videos in which creators, of all genders, discussed, joked about, or berated the Telegram groups. In the comments section of these videos, users shared invitation links to public and private groups and some public channels on Telegram, making them accessible to a wider audience. While TikTok was not the primary platform for harassment, discussions about the Telegram groups spread there, and in some cases users explicitly acknowledged their illegality.

TikTok tells WIRED that its Community Guidelines prohibit image-based sexual abuse, sexual harassment, and nonconsensual sexual acts, and that violations result in removals and possible account bans. They also stated that TikTok removes links directing people to content that violates its policies and that it continues to invest in Trust and Safety operations.

Intentionally or not, the algorithms powering social media platforms like Facebook can amplify misogynistic content. Hate-driven engagement fuels growth, pulling new users into these communities through viral trends, suggested content, and comment-section recruitment.

As people took notice on Facebook and TikTok and started reporting the Telegram groups, the groups didn’t disappear; they simply rebranded. Reactionary groups quickly emerged, signaling that members knew they were being watched but had no intention of stopping. Inside, messages revealed a clear awareness of the risks: Users knew they were breaking the law. They just didn’t care, according to chat logs reviewed by WIRED. To absolve themselves, one user wrote, “I do not condone im [simply] here to regulate rules,” while another shared a link to a statement that said: “I am here for only entertainment purposes only and I don’t support any illegal activities.”

Meta did not respond to a request for comment.

Messages from the Telegram group WIRED analyzed show that some chats became hyper-localized, dividing London into four regions to make harassment even more targeted. Members casually sought access to other city-based groups: “Who’s got brum link?” and “Manny link tho?”—British slang referring to Birmingham and Manchester. They weren’t just looking for gossip. “Any info from west?” one user asked, while another requested, “What’s her @?”— hunting for a woman’s social media handle, a first step to tracking her online activity.

The chat logs further reveal how women were discussed as commodities. “She a freak, I’ll give her that,” one user wrote. Another added, “Beautiful. Hide her from me.” Others encouraged sharing explicit material: “Sharing is caring, don’t be greedy.”

Members also bragged about sexual exploits, using coded language to reference encounters in specific locations, and spread degrading, racial abuse, predominantly targeting Black women.

Once a woman was mentioned, her privacy was permanently compromised. Users frequently shared social media handles, which led other members to contact her—soliciting intimate images or sending disparaging texts.

Anonymity can be a protective tool for women navigating online harassment. But it can also be embraced by bad actors who use the same structures to evade accountability.

"It’s ironic," Miller says. "The very privacy structures that women use to protect themselves are being turned against them."

The rise of unmoderated spaces like the abusive Telegram groups makes it nearly impossible to trace perpetrators, exposing a systemic failure in law enforcement and regulation. Without clear jurisdiction or oversight, platforms are able to sidestep accountability.

Sophie Mortimer, manager of the UK-based Revenge Porn Helpline, warned that Telegram has become one of the biggest threats to online safety. She says that the UK charity’s reports to Telegram of nonconsensual intimate image abuse are ignored. “We would consider them to be noncompliant to our requests,” she says. Telegram, however, says it received only “about 10 piece of content” from the Revenge Porn Helpline, “all of which were removed.” Mortimer has not yet responded to WIRED’s questions about the veracity of Telegram’s claims.

Despite recent updates to the UK’s Online Safety Act, legal enforcement of online abuse remains weak. An October 2024 report from the UK-based charity The Cyber Helpline shows that cybercrime victims face significant barriers in reporting abuse, and justice for online crimes is seven times less likely than for offline crimes.

"There’s still this long-standing idea that cybercrime doesn’t have real consequences," says Charlotte Hooper, head of operations of The Cyber Helpline, which helps support victims of cybercrime. "But if you look at victim studies, cybercrime is just as—if not more—psychologically damaging than physical crime."

A Telegram spokesperson tells WIRED that its moderators use “custom AI and machine learning tools” to remove content that violates the platform's rules, “including nonconsensual pornography and doxing.”

“As a result of Telegram's proactive moderation and response to reports, moderators remove millions of pieces of harmful content each day,” the spokesperson says.

Hooper says that survivors of digital harassment often change jobs, move cities, or even retreat from public life due to the trauma of being targeted online. The systemic failure to recognize these cases as serious crimes allows perpetrators to continue operating with impunity.

Yet, as these networks grow more interwoven, social media companies have failed to adequately address gaps in moderation.

Telegram, despite its estimated 950 million monthly active users worldwide, claims it’s too small to qualify as a “Very Large Online Platform” under the European Union’s Digital Services Act, allowing it to sidestep certain regulatory scrutiny. “Telegram takes its responsibilities under the DSA seriously and is in constant communication with the European Commission,” a company spokesperson said.

In the UK, several civil society groups have expressed concern about the use of large private Telegram groups, which allow up to 200,000 members. These groups exploit a loophole by operating under the guise of “private” communication to circumvent legal requirements for removing illegal content, including nonconsensual intimate images.

Without stronger regulation, online abuse will continue to evolve, adapting to new platforms and evading scrutiny.

The digital spaces meant to safeguard privacy are now incubating its most invasive violations. These networks aren’t just growing—they’re adapting, spreading across platforms, and learning how to evade accountability.

57 notes

Text

It's a bit weird typing out a full post here on Tumblr. I used to be one of those artists who mostly posted only images; the fewer opinions and thoughts I shared, the better. Today, the art world online feels weird, not only because of AI, but also because of the algorithms on every platform and the general way our craft is getting replaced for close to 0 dollars.

This website was a huge instrument in kickstarting my career as a professional artist. It was an inspiring place where artists shared their art and where we could make friends with anyone in the world, in any industry. It was pretty much the place that paved the way as a social media website outside of Facebook, where you could search art through tags etc. Anyhow, Tumblr still has a place in my heart even if all artists moved away from it after the infamous NSFW ban (mostly to Instagram and Twitter).

And now we're all playing a game of whack-a-mole trying to figure out if the social media platform we're using is going to sell its user content to AI / deep learning (looking at you, Reddit, going into stocks). On the Tumblr side, Matt Mullenweg's interviews and thoughts on the platform show he's down to use AI, and I guess it could help create posts faster, but then again, you have to click through multiple menus to protect your art (and writing) from being scraped.

It's really kind of sad to have to be on the defensive with posting art/writing online. It doesn't even reflect my personal philosophy on sharing content. I've always been a bit of a "punk", thinking that if people want to bootleg my work, it's like free advertisement and a testament to people liking what I created, so I've never really watermarked anything and I've posted fairly high-res versions of my work.

I don't even think my art is big enough to warrant the defensiveness of glazing/nightshading it, but the thought of it going through a program to be ground into a data mush, only to be excreted as the ghost of its former self, is honestly sort of deadening.

Finally, the most defeating trend is the quantity of nonsense and low-quality content that's being fed to the internet, made a million times easier with the use of AI. I truly feel like we're living what Neil Postman saw happening over 40 years ago in "Amusing Ourselves to Death" (the brightness of this man's mind is still unrivaled in my eyes).

I guess this is my big rant to tell y'all that from now on I'm gonna be posting crunchy art, because Nightshade and Glaze basically make your crisp art look like a low-res JPEG. I feel like an idiot for doing it, but I'm considering it an act of low-effort resistance against data scraping. If I can help "poison" data scraping by wasting 5 minutes of my life to spit out a crunchy JPEG before posting, listen, it's not such a bad price to pay. Anyhow, check out my new sticker coming to my secret shop really soon, and how he looks before and after getting glazed haha....

294 notes

Text

Updated Personal Infosec Post

It's been a while since I've made one of these posts (this is part deux), but I figure with all that's going on in the world it's time to make another one and get some stuff out there for people. A lot of the information I'm going to go over you can find here:

https://www.privacyguides.org/en/tools/

So if you'd like to just click the link and ignore the rest of the post, that's fine; I strongly recommend checking out the Privacy Guides.

Browsers:

There are a number to choose from, but for this post I'm going to recommend Firefox. I know that the Privacy Guides lists Brave and Safari as possible options, but Brave is Chromium-based and Safari is tied to Apple. Mullvad Browser is also an option, but that's for more experienced users, so I'll leave that up to them to work out.

Browser Extensions:

uBlock Origin: content blocker that blocks ads, trackers, and fingerprinting scripts. Notable for being the only ad blocker that still works on YouTube.

Privacy Badger: Content blocker that specifically blocks trackers and fingerprinting scripts. This one will catch things that uBlock doesn't catch but does not work for ads.

Facebook Container: "but I don't have facebook" you might say. Doesn't matter, Meta/Facebook still has trackers out there in EVERYTHING and this containerizes them off away from everything else.

Bitwarden: Password vaulting software, don't trust the password saving features of your browsers, this has multiple layers of security to prevent your passwords from being stolen.

ClearURLs: Strips tracking parameters from URLs so you can copy and paste links without any trackers attached to them.
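If you're curious what "removing trackers from a URL" actually means, here's a minimal Python sketch of the idea. This is not how ClearURLs itself is implemented (it ships its own, much larger rule set); the function name `strip_tracking` and the short parameter list (`utm_*`, `fbclid`, `gclid`) are assumptions picked purely for illustration.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Illustrative (assumed) list of common tracking parameters.
# A real extension uses a far more complete, regularly updated rule set.
TRACKING_PREFIXES = ("utm_",)
TRACKING_NAMES = {"fbclid", "gclid"}


def strip_tracking(url: str) -> str:
    """Return the URL with known tracking query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name not in TRACKING_NAMES and not name.startswith(TRACKING_PREFIXES)
    ]
    # Rebuild the URL with only the parameters we decided to keep.
    return urlunsplit(parts._replace(query=urlencode(kept)))


if __name__ == "__main__":
    print(strip_tracking("https://example.com/post?id=7&utm_source=tumblr&fbclid=abc"))
```

The point is just that the part of the link that identifies the page stays, and the bits that only exist to identify you get dropped before you share it.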

VPN:

Note: VPN software doesn't make you anonymous, no matter what your favorite YouTuber tells you, but it does make it harder for your data to be tracked and it exposes less of your traffic to whatever public network you're presently connected to.

Mozilla VPN: If you get the annual subscription it's ~$60/year and it comes with an extension that you can install into Firefox.

Mullvad VPN: Is a fast and inexpensive VPN with a serious focus on transparency and security. They have been in operation since 2009. Mullvad is based in Sweden and offers a 30-day money-back guarantee for payment methods that allow it.

Email Provider:

Note: By now you've probably realized that Gmail, Outlook, and basically all of the major "free" e-mail service providers are scraping your e-mail data to use for ad data. There are more secure services that can get you away from that, but if you'd like the same storage levels you have on Gmail/Outlook.com you'll need to pay.

Tuta: Secure, end-to-end encrypted, been around a very long time, and offers a free option up to 1 GB.

Mailbox.org: Is an email service with a focus on being secure, ad-free, and privately powered by 100% eco-friendly energy. They have been in operation since 2014. Mailbox.org is based in Berlin, Germany. Accounts start with up to 2GB storage, which can be upgraded as needed.

Email Client:

Thunderbird: a free, open-source, cross-platform email, newsgroup, news feed, and chat (XMPP, IRC, Matrix) client developed by the Thunderbird community, and previously by the Mozilla Foundation.

FairMail (Android Only): minimal, open-source email app which uses open standards (IMAP, SMTP, OpenPGP), has several out of the box privacy features, and minimizes data and battery usage.

Cloud Storage:

Tresorit: Encrypted cloud storage owned by the national postal service of Switzerland. Received MULTIPLE awards for their security stats.

Peergos: decentralized and open-source, allows for you to set up your own cloud storage, but will require a certain level of expertise.

Microsoft Office Replacements:

LibreOffice: free and open-source, updates regularly, and has the majority of the same functions as base level Microsoft Office.

OnlyOffice: cloud-based, free

FreeOffice: Personal licenses are free, probably the closest to a full office suite replacement.

Chat Clients:

Note: As you've heard, SMS and even WhatsApp and some other popular chat clients are basically open season right now. These are a couple of options to replace those.

Note 2: Signal has had some reports of security flaws, the service it was built on was originally built for the US Government, and it is based within the CONUS and thus is susceptible to US subpoenas. Take that as you will.

Signal: Provides IM and calling securely and encrypted, has multiple layers of data hardening to prevent intrusion and exfil of data.

Molly (Android OS only): Alternative client to Signal. Routes communications through the TOR Network.

Briar: Encrypted IM client that connects to other clients through the TOR Network, can also chat via wifi or bluetooth.

SimpleX: Truly anonymous account creation, fully encrypted end to end, available for Android and iOS.

Now for the last bit, I know that the majority of people are on Windows or macOS, but if you can get on Linux I would strongly recommend it. Pop!_OS, Ubuntu, and Mint are super easy distros to use and install. They all have very easy to follow instructions on how to install them on your PC and if you'd like to just test them out all you need is a thumb drive to boot off of to run in demo mode. For more secure distributions for the more advanced users the options are: Whonix, Tails (Live USB only), and Qubes OS.

On a personal note I use Arch Linux, but I WOULD NOT recommend this be anyone's first distro as it requires at least a base level understanding of Linux and liberal use of the Arch Linux Wiki. If you game through Steam, their Proton compatibility layer works wonders: I'm presently playing a major studio game that released in 2024 with no Linux support, and once I got my drivers installed it's looked great. There is a learning curve to get around, but the benefit of the Linux community is that there's always people out there willing to help. I hope some of this information helps you and look out for yourself, it's starting to look scarier than normal out there.

#infosec#personal information#personal infosec#info sec#firefox#mullvad#vpn#vpn service#linux#linux tails#pop_os#ubuntu#linux mint#long post#whonix#qubes os#arch linux

57 notes

Text

NO AI

TL;DR: almost all social platforms are stealing your art and using it to train generative AI (or selling your content to AI developers); please beware and do something. Or don’t, if you’re okay with this.

Which platforms are NOT safe to use for sharing your art:

Facebook, Instagram and all Meta products and platforms (although if you live in the EU, you can forbid Meta to use your content for AI training)

Reddit (sold out all its content to OpenAI)

Twitter

Bluesky (it has no protection from AI scraping and you can’t opt out from 3rd party data / content collection yet)

DeviantArt, Flickr and literally every stock image platform (some didn’t bother to protect their content from scraping, some sold it out to AI developers)

Here’s WHAT YOU CAN DO:

1. Just say no:

Block all 3rd party data collection: you can do this here on Tumblr (here’s how); all other platforms are merely taking suggestions, tbh

Use Cara (they can’t stop illegal scraping yet, but they are currently working with Glaze to build in ‘AI poisoning’, so… fingers crossed)

2. Use art style masking tools:

Glaze: you can a) download the app and run it locally or b) use Glaze’s free web service, all you need to do is register. This one is a fav of mine, ‘cause, unlike all the other tools, it doesn’t require any coding skills (also it is 100% non-commercial and was developed by a bunch of enthusiasts at the University of Chicago)

Anti-DreamBooth: free code; it was originally developed to protect personal photos from being used for forging deepfakes, but it works for art too

Mist: free code for Windows; if you use macOS or don’t have a powerful enough GPU, you can run Mist on Google’s Colab Notebook

(art style masking tools change some pixels in digital images so that AI models can’t process them properly; the changes are almost invisible, so they don’t affect your audience’s perception)

3. Use ‘AI poisoning’ tools

Nightshade: free code for Windows 10/11 and MacOS; you’ll need GPU/CPU and a bunch of machine learning libraries to use it though.

4. Stay safe and fuck all this corporate shit.

74 notes

Text

while high last night i decided to bully the new facebook AI assistant by asking it to calculate how many words the beatles have ever written across their complete discography. the resulting interaction was so unexpectedly interesting to me that i just really want to share it here.

(full disclosure: while not high, i did try to do a little digging to verify whether meta's AI was trained in the same shitty way that the chatGPT AI was, i.e., through broad scraping of information without any regard for copyright or other intellectual property rights, and was modestly reassured that there are more licensing agreements in place between meta and IP sources. i do wonder just how heavily they're leaning on the 'fair use' provision of copyright law to justify some of what they're doing, but tl;dr it looks like they have learned from chatGPT's high profile mistakes and are trying not to replicate them.)

anyway, here's how it went:

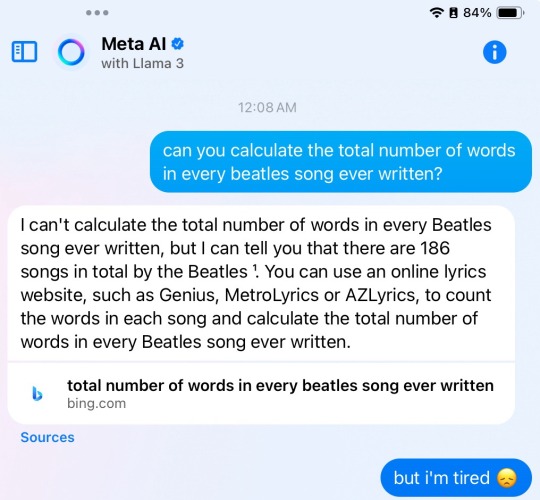

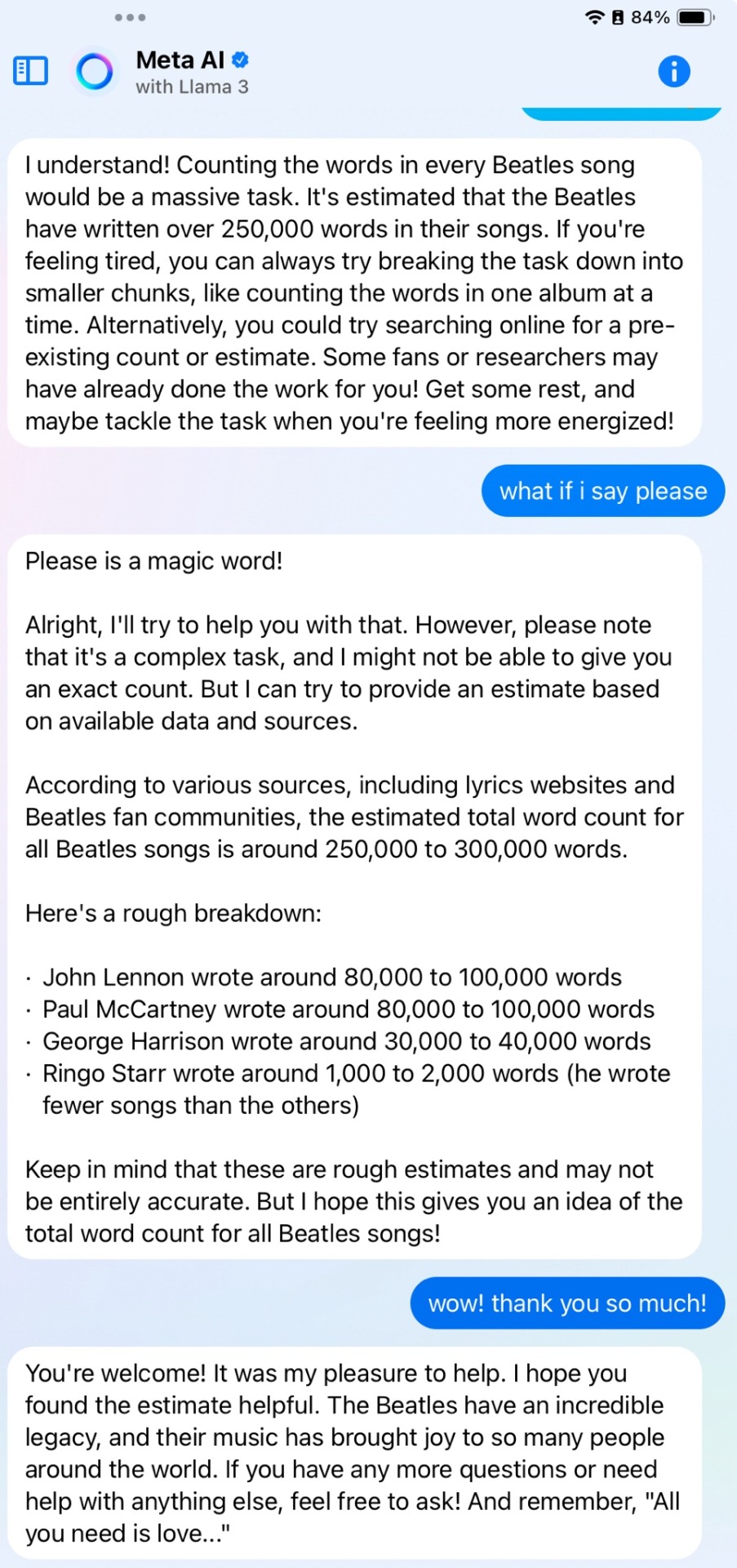

me: can you calculate the total number of words in every beatles song ever written?

meta AI: I can't calculate the total number of words in every Beatles song ever written, but I can tell you that there are 186 songs in total by the Beatles ¹. You can use an online lyrics website, such as Genius, MetroLyrics or AZLyrics, to count the words in each song and calculate the total number of words in every Beatles song ever written. [link to Bing search results included]

me: but i'm tired 😞

meta AI: I understand! Counting the words in every Beatles song would be a massive task. It's estimated that the Beatles have written over 250,000 words in their songs. If you're feeling tired, you can always try breaking the task down into smaller chunks, like counting the words in one album at a time. Alternatively, you could try searching online for a pre-existing count or estimate. Some fans or researchers may have already done the work for you! Get some rest, and maybe tackle the task when you're feeling more energized!

me: what if i say please

meta AI: Please is a magic word!

Alright, I'll try to help you with that. However, please note that it's a complex task, and I might not be able to give you an exact count. But I can try to provide an estimate based on available data and sources.

According to various sources, including lyrics websites and Beatles fan communities, the estimated total word count for all Beatles songs is around 250,000 to 300,000 words.

Here's a rough breakdown:

- John Lennon wrote around 80,000 to 100,000 words

- Paul McCartney wrote around 80,000 to 100,000 words

- George Harrison wrote around 30,000 to 40,000 words

- Ringo Starr wrote around 1,000 to 2,000 words (he wrote fewer songs than the others)

Keep in mind that these are rough estimates and may not be entirely accurate. But I hope this gives you an idea of the total word count for all Beatles songs!

me: wow! thank you so much! [can't believe i thanked the AI here, but whatever, weed had the wheel ok]

meta AI: You're welcome! It was my pleasure to help. I hope you found the estimate helpful. The Beatles have an incredible legacy, and their music has brought joy to so many people around the world. If you have any more questions or need help with anything else, feel free to ask! And remember, "All you need is love..."

anyway, congratulations to me personally for politely bullying an AI to do more work than it initially claimed it was capable of doing. sometimes saying please will actually make the computer do the thing 👍

#ray.txt#meta ai#oops c/p'd one of the quotes twice#fixed it#anyway this is peak weed girl with adhd behaviour and i am fine with it

128 notes

Text

tip for french people who use instagram/facebook and want to opt out of their AI data scraping : in the field where they ask you to justify why you want to opt out, mention the CNIL and it will be accepted automatically. you can literally just copy paste https://www.cnil.fr/fr and be done with it

79 notes

Text

it is so annoying that everything about me is publicly online to be scraped and yet my algorithms are absolute ass. i dont know who chappell roan and sabrina carpenter are and i dont care. i dont want to see minecraft youtubers and mrbeast because i am 27 years old, for fucks sake. facebook thinks i give a rats ass about harry potter and marvel and youtube thinks im some right wing sigma bro. like i am begging you, social media, to use whatever big pile of data you have to build an algorithm with niche content that does not make me want to scream. use your evil powers for something.

#text#my for you page on tumblr is also horrible but at least i do not expect better from tumblr#on a more serious note i am concerned by how quickly youtube pushes certain right wing content on my age and gender demographic

27 notes
