#Kubernetes Solution


Ensuring Data Resilience: Top 10 Kubernetes Backup Solutions

In the dynamic landscape of container orchestration, Kubernetes has emerged as a leading platform for managing and deploying containerized applications. As organizations increasingly rely on Kubernetes for their containerized workloads, the need for robust data resilience strategies becomes paramount. One crucial aspect of ensuring data resilience in Kubernetes environments is implementing reliable backup solutions. In this article, we will explore the top 10 Kubernetes backup solutions that organizations can leverage to safeguard their critical data.

1. Velero

Velero, an open-source backup and restore tool, is designed specifically for Kubernetes clusters. It provides snapshot and restore capabilities, allowing users to create backups of their entire cluster or selected namespaces.
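Velero backups can be requested either with the `velero backup create` CLI or by applying a `Backup` custom resource to the cluster. The sketch below builds such a resource as a plain Python dict, assuming Velero is installed in its default `velero` namespace; the backup name `nightly-app-backup` and the `app` namespace are hypothetical placeholders.

```python
import json


def velero_backup_manifest(name, namespaces, ttl="720h0m0s"):
    """Build a Velero Backup custom resource (velero.io/v1) as a dict.

    Assumes Velero runs in the "velero" namespace; the ttl controls how
    long the backup is retained (720h, i.e. 30 days, is Velero's default).
    """
    return {
        "apiVersion": "velero.io/v1",
        "kind": "Backup",
        "metadata": {"name": name, "namespace": "velero"},
        "spec": {
            # Only resources in these namespaces are included in the backup.
            "includedNamespaces": list(namespaces),
            "ttl": ttl,
        },
    }


if __name__ == "__main__":
    manifest = velero_backup_manifest("nightly-app-backup", ["app"])
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be applied with `kubectl apply -f`; the roughly equivalent CLI call is `velero backup create nightly-app-backup --include-namespaces app`.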

2. Kasten K10

Kasten K10 is a data management platform for Kubernetes that offers backup, disaster recovery, and mobility functionalities. It supports various cloud providers and on-premises deployments, ensuring flexibility for diverse Kubernetes environments.

3. Stash

Stash, another open-source project, focuses on backing up Kubernetes volumes and custom resources. It supports scheduled backups, retention policies, and encryption, providing a comprehensive solution for data protection.
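A retention policy decides which old snapshots to keep and which to prune. The sketch below illustrates the idea with two common rules, "keep the last N snapshots" and "keep the newest snapshot from each of the last N days"; it is a simplified, hypothetical model of such a policy, not Stash's actual implementation.

```python
from datetime import datetime


def apply_retention(snapshots, keep_last=3, keep_daily=7):
    """Return the set of snapshot timestamps to keep.

    keep_last:  always keep the N most recent snapshots.
    keep_daily: keep the newest snapshot of each of the N most recent
                days that have at least one snapshot.
    """
    ordered = sorted(snapshots, reverse=True)  # newest first
    keep = set(ordered[:keep_last])
    kept_days = set()
    for snap in ordered:
        day = snap.date()
        if day in kept_days:
            continue  # already kept a newer snapshot from this day
        if len(kept_days) >= keep_daily:
            break
        kept_days.add(day)
        keep.add(snap)
    return keep


if __name__ == "__main__":
    # Hypothetical snapshots: two per day (01:00 and 13:00) for five days.
    snaps = [datetime(2024, 1, d, h) for d in range(1, 6) for h in (1, 13)]
    for s in sorted(apply_retention(snaps, keep_last=2, keep_daily=3)):
        print(s.isoformat())
```

Everything not in the returned set would be pruned.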

4. TrilioVault

TrilioVault specializes in protecting stateful applications in Kubernetes environments. With features like incremental backups and point-in-time recovery, it ensures that organizations can recover their applications quickly and efficiently.

5. Ark

Heptio Ark is the original name of Velero (entry 1): after VMware acquired Heptio, the project was renamed Velero in 2019, so it is effectively the same tool. It supports both on-premises and cloud-based storage, providing flexibility for diverse storage architectures.

6. KubeBackup

KubeBackup is a lightweight and easy-to-use backup solution that supports scheduled backups and incremental backups. It is designed to be simple yet effective in ensuring data resilience for Kubernetes applications.

7. Rook

Rook extends Kubernetes to provide a cloud-native storage orchestrator. While not a backup solution per se, it enables the creation of distributed storage systems that can be leveraged for reliable data storage and retrieval.

8. Backupify

Backupify focuses on protecting cloud-native applications, including those running on Kubernetes. It provides automated backups, encryption, and a user-friendly interface for managing backup and recovery processes.

9. StashAway

StashAway is an open-source project that offers both backup and restore capabilities for Kubernetes applications. It supports volume backups, making it a suitable choice for organizations with complex storage requirements.

10. Duplicity

Duplicity, though not Kubernetes-specific, is a versatile backup tool that can be integrated into Kubernetes environments. It supports encryption and incremental backups, providing an additional layer of data protection.
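Incremental backups only copy what changed since the previous run. The sketch below shows the idea at whole-file granularity by comparing content digests between two manifests; the function names are hypothetical, and Duplicity itself works at a finer, rsync-style block level.

```python
import hashlib
from pathlib import Path


def build_manifest(root):
    """Map each file path (relative to root) to the SHA-256 of its contents."""
    root = Path(root)
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(root).as_posix()] = digest
    return manifest


def incremental_changes(previous, current):
    """Return (files to copy in this increment, files deleted since last run)."""
    changed = sorted(p for p, d in current.items() if previous.get(p) != d)
    deleted = sorted(p for p in previous if p not in current)
    return changed, deleted
```

A backup run would store `build_manifest(...)` alongside each backup and pass the stored and fresh manifests to `incremental_changes` on the next run.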

In conclusion, selecting the right Kubernetes backup solution is crucial for ensuring data resilience in containerized environments. The options mentioned here offer a range of features and capabilities, allowing organizations to choose the solution that best fits their specific needs. By incorporating these backup solutions into their Kubernetes strategy, organizations can mitigate risks and ensure the availability and integrity of their critical data.


Top 5 Open Source Kubernetes Storage Solutions


Historically, Kubernetes storage has been challenging to configure and required specialized knowledge to get up and running. However, the landscape of K8s data storage has evolved considerably, and there are now many good options that are relatively easy to implement for data stored in Kubernetes clusters.

Those who are running Kubernetes in the home lab as well will benefit from the free and open-source…


How can you automate Kubernetes clusters with ArgoCD?

"Automate your Kubernetes cluster with ArgoCD & MHM Digitale Lösungen UG!"

Automation, application development, and Infrastructure-as-Code are the keys to successful DevOps projects. Use Continuous Deployment and Continuous Integration to automate your #Kubernetes cluster with #ArgoCD. Learn more at #MHMDigitalSolutionsUG! #DevOps #ApplicationDevelopment #InfrastructureAsCode

With ArgoCD and MHM Digitale Lösungen UG, you can automate your Kubernetes cluster easily and quickly. ArgoCD is an open-source continuous delivery tool that automates changes to Kubernetes applications and tracks them in real time. It enables developers to ensure that their changes are delivered according to their preferences and policies.
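Argo CD tracks each deployed application through an `Application` custom resource that points at a Git repository and a target cluster. The sketch below builds such a resource as a dict, assuming Argo CD runs in its default `argocd` namespace and deploys to the cluster it runs in; the repository URL, path, and target namespace are hypothetical.

```python
import json


def argocd_application(name, repo_url, path, namespace, revision="HEAD"):
    """Build an Argo CD Application (argoproj.io/v1alpha1) as a dict."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            # Git is the source of truth for the desired state.
            "source": {"repoURL": repo_url, "targetRevision": revision, "path": path},
            # Deploy into the same cluster Argo CD is running in.
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": namespace,
            },
            # Sync automatically, delete removed resources, undo manual drift.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


if __name__ == "__main__":
    app = argocd_application(
        "example-app", "https://github.com/example-org/example-app.git", "k8s", "example"
    )
    print(json.dumps(app, indent=2))
```

With `selfHeal` enabled, Argo CD reverts changes made directly in the cluster so that it keeps matching what is committed in Git.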

MHM…


The Great Container Debate: Kubernetes vs. Docker

Containerization has become a major force in the software development world. It allows developers to make better use of time and resources by creating isolated environments for applications. Kubernetes and Docker are two popular container solutions that have been dominating the industry as of late.

Kubernetes, an open-source system for automating deployment, scaling, and management of containerized applications, is currently the most popular choice for organizations that require flexibility when it comes to their cloud architecture. Kubernetes makes it easy to deploy and manage multiple containers across a variety of different cloud platforms. Kubernetes also provides many advanced features such as automated self-healing capabilities, service discovery, and custom resource definitions.

Docker, on the other hand, is a container platform that provides fast and reliable deployment of applications. Docker containers are lightweight and can be quickly spun up to run applications or services. It also allows developers to package applications with all their dependencies, making them easier to deploy across different platforms. Docker has been around for longer than Kubernetes and is much more established in the industry.

Ultimately, the decision of which container solution to use depends primarily on an organization's needs and goals. Kubernetes offers many advanced features but requires more technical expertise than Docker. Therefore, organizations that prioritize flexibility should consider Kubernetes, while those looking for something simpler might opt for Docker instead.

It’s important for companies to weigh the pros and cons of Kubernetes and Docker before making a decision. Both solutions can enable fast deployment of applications, but Kubernetes provides more advanced features for complex architectures and container orchestration. Ultimately, it is up to businesses to decide which solution best fits their needs.

The Kubernetes vs. Docker debate will remain ongoing as long as organizations require container solutions for their cloud applications. It’s important to remember that both Kubernetes and Docker have their own strengths and weaknesses so companies need to weigh the pros and cons before committing to one or the other.

Difference between Kubernetes and Docker:

Kubernetes is an open-source platform for automating the deployment, scaling, and management of containerized applications. Kubernetes makes it easy to deploy and manage multiple containers across a variety of different cloud platforms. Kubernetes offers many advanced features such as automated self-healing capabilities, service discovery, custom resource definitions, and more. Kubernetes also has the ability to dynamically scale applications based on usage or other metrics.
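The metric-based scaling mentioned above is performed by the Horizontal Pod Autoscaler, which computes `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)` and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). A simplified sketch of that rule follows; the min/max bounds are hypothetical defaults, and the real controller also applies stabilization windows and other behaviour not modelled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified Horizontal Pod Autoscaler scaling rule."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 2 pods averaging 90% CPU against a 50% target -> scale out.
    print(desired_replicas(2, 90, 50))
```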

Docker is a container platform that enables fast and reliable deployment of applications. It allows developers to package applications with all their dependencies, making them easier to deploy across different platforms. Docker Compose lets developers define and run multi-container applications, and Docker Swarm provides orchestration across multiple hosts. Docker also has its own ecosystem of tools and services, making it easy to manage containerized applications.

In conclusion, Kubernetes and Docker are both popular container solutions that offer different levels of complexity and features. Kubernetes is best suited for large organizations that require high scalability while Docker provides an easier way to deploy lightweight applications or services with minimal overhead. Ultimately, the choice between Kubernetes and Docker will come down to an organization's specific needs and goals.


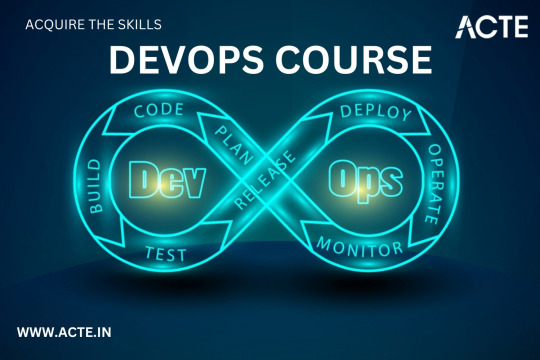

Level Up Your Software Development Skills: Join Our Unique DevOps Course

Would you like to increase your knowledge of software development? Look no further! Our unique DevOps course is the perfect opportunity to upgrade your skillset and pave the way for accelerated career growth in the tech industry. In this article, we will explore the key components of our course, reasons why you should choose it, the remarkable placement opportunities it offers, and the numerous benefits you can expect to gain from joining us.

Key Components of Our DevOps Course

Our DevOps course is meticulously designed to provide you with a comprehensive understanding of the DevOps methodology and equip you with the necessary tools and techniques to excel in the field. Here are the key components you can expect to delve into during the course:

1. Understanding DevOps Fundamentals

Learn the core principles and concepts of DevOps, including continuous integration, continuous delivery, infrastructure automation, and collaboration techniques. Gain insights into how DevOps practices can enhance software development efficiency and communication within cross-functional teams.

2. Mastering Cloud Computing Technologies

Immerse yourself in cloud computing platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform. Acquire hands-on experience in deploying applications, managing serverless architectures, and leveraging containerization technologies such as Docker and Kubernetes for scalable and efficient deployment.

3. Automating Infrastructure as Code

Discover the power of infrastructure automation through tools like Ansible, Terraform, and Puppet. Automate the provisioning, configuration, and management of infrastructure resources, enabling rapid scalability, agility, and error-free deployments.

4. Monitoring and Performance Optimization

Explore various monitoring and observability tools, including Elasticsearch, Grafana, and Prometheus, to ensure your applications are running smoothly and performing optimally. Learn how to diagnose and resolve performance bottlenecks, conduct efficient log analysis, and implement effective alerting mechanisms.

5. Embracing Continuous Integration and Delivery

Dive into the world of continuous integration and delivery (CI/CD) pipelines using popular tools like Jenkins, GitLab CI/CD, and CircleCI. Gain a deep understanding of how to automate build processes, run tests, and deploy applications seamlessly to achieve faster and more reliable software releases.

Reasons to Choose Our DevOps Course

There are numerous reasons why our DevOps course stands out from the rest. Here are some compelling factors that make it the ideal choice for aspiring software developers:

Expert Instructors: Learn from industry professionals who possess extensive experience in the field of DevOps and have a genuine passion for teaching. Benefit from their wealth of knowledge and practical insights gained from working on real-world projects.

Hands-On Approach: Our course emphasizes hands-on learning to ensure you develop the practical skills necessary to thrive in a DevOps environment. Through immersive lab sessions, you will have opportunities to apply the concepts learned and gain valuable experience working with industry-standard tools and technologies.

Tailored Curriculum: We understand that every learner is unique, so our curriculum is strategically designed to cater to individuals of varying proficiency levels. Whether you are a beginner or an experienced professional, our course will be tailored to suit your needs and help you achieve your desired goals.

Industry-Relevant Projects: Gain practical exposure to real-world scenarios by working on industry-relevant projects. Apply your newly acquired skills to solve complex problems and build innovative solutions that mirror the challenges faced by DevOps practitioners in the industry today.

Benefits of Joining Our DevOps Course

By joining our DevOps course, you open up a world of benefits that will enhance your software development career. Here are some notable advantages you can expect to gain:

Enhanced Employability: Acquire sought-after skills that are in high demand in the software development industry. Stand out from the crowd and increase your employability prospects by showcasing your proficiency in DevOps methodologies and tools.

Higher Earning Potential: With the rise of DevOps practices, organizations are willing to offer competitive remuneration packages to skilled professionals. By mastering DevOps through our course, you can significantly increase your earning potential in the tech industry.

Streamlined Software Development Processes: Gain the ability to streamline software development workflows by effectively integrating development and operations. With DevOps expertise, you will be capable of accelerating software deployment, reducing errors, and improving the overall efficiency of the development lifecycle.

Continuous Learning and Growth: DevOps is a rapidly evolving field, and by joining our course, you become a part of a community committed to continuous learning and growth. Stay updated with the latest industry trends, technologies, and best practices to ensure your skills remain relevant in an ever-changing tech landscape.

In conclusion, our unique DevOps course at ACTE institute offers unparalleled opportunities for software developers to level up their skills and propel their careers forward. With a comprehensive curriculum, remarkable placement opportunities, and a host of benefits, joining our course is undoubtedly a wise investment in your future success. Don't miss out on this incredible chance to become a proficient DevOps practitioner and unlock new horizons in the world of software development. Enroll today and embark on an exciting journey towards professional growth and achievement!


Full Stack Development: Using DevOps and Agile Practices for Success

In today’s fast-paced and highly competitive tech industry, the demand for Full Stack Developers is steadily on the rise. These versatile professionals possess a unique blend of skills that enable them to handle both the front-end and back-end aspects of software development. However, to excel in this role and meet the ever-evolving demands of modern software development, Full Stack Developers are increasingly turning to DevOps and Agile practices. In this comprehensive guide, we will explore how the combination of Full Stack Development with DevOps and Agile methodologies can lead to unparalleled success in the world of software development.

Full Stack Development: A Brief Overview

Full Stack Development refers to the practice of working on all aspects of a software application, from the user interface (UI) and user experience (UX) on the front end to server-side scripting, databases, and infrastructure on the back end. It requires a broad skill set and the ability to handle various technologies and programming languages.

The Significance of DevOps and Agile Practices

The environment for software development has changed significantly in recent years. The adoption of DevOps and Agile practices has become a cornerstone of modern software development. DevOps focuses on automating and streamlining the development and deployment processes, while Agile methodologies promote collaboration, flexibility, and iterative development. Together, they offer a powerful approach to software development that enhances efficiency, quality, and project success. In this blog, we will delve into the following key areas:

Understanding Full Stack Development

Defining Full Stack Development

We will start by defining Full Stack Development and elucidating its pivotal role in creating end-to-end solutions. Full Stack Developers are akin to the Swiss Army knives of the development world, capable of handling every aspect of a project.

Key Responsibilities of a Full Stack Developer

We will explore the multifaceted responsibilities of Full Stack Developers, from designing user interfaces to managing databases and everything in between. Understanding these responsibilities is crucial to grasping the challenges they face.

The Importance of DevOps in Full Stack Development

Unpacking DevOps

DevOps is a collection of principles that aims to eliminate the divide between development and operations teams. We will delve into what DevOps entails and why it matters in Full Stack Development, and discuss the benefits of embracing DevOps principles.

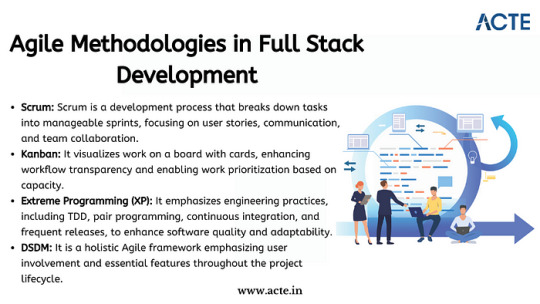

Agile Methodologies in Full Stack Development

Introducing Agile Methodologies

Agile methodologies like Scrum and Kanban have gained immense popularity due to their effectiveness in fostering collaboration and adaptability. We will introduce these methodologies and explain how they enhance project management and teamwork in Full Stack Development.

Synergy Between DevOps and Agile

The Power of Collaboration

We will highlight how DevOps and Agile practices complement each other, creating a synergy that streamlines the entire development process. By aligning development, testing, and deployment, this synergy results in faster delivery and higher-quality software.

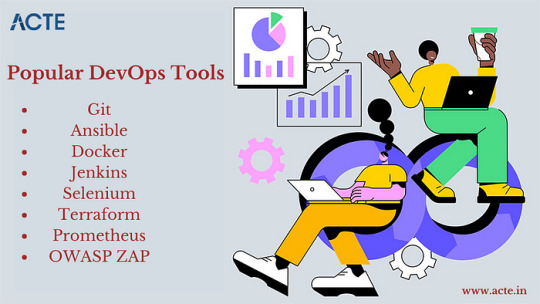

Tools and Technologies for DevOps in Full Stack Development

Essential DevOps Tools

DevOps relies on a suite of tools and technologies, such as Jenkins, Docker, and Kubernetes, to automate and manage various aspects of the development pipeline. We will provide an overview of these tools and explain how they can be harnessed in Full Stack Development projects.

Implementing Agile in Full Stack Projects

Agile Implementation Strategies

We will delve into practical strategies for implementing Agile methodologies in Full Stack projects. Topics will include sprint planning, backlog management, and conducting effective stand-up meetings.

Best Practices for Agile Integration

We will share best practices for incorporating Agile principles into Full Stack Development, ensuring that projects are nimble, adaptable, and responsive to changing requirements.

Learning Resources and Real-World Examples

To help you gain a deeper understanding, ACTE Institute presents case studies and real-world examples of successful Full Stack Development projects that leveraged DevOps and Agile practices. These stories offer valuable insights into best practices and lessons learned. Consider enrolling in an accredited full stack developer training course to increase your full stack proficiency.

Challenges and Solutions

Addressing Common Challenges

No journey is without its obstacles, and Full Stack Developers using DevOps and Agile practices may encounter challenges. We will identify these common roadblocks and provide practical solutions and tips for overcoming them.

Benefits and Outcomes

The Fruits of Collaboration

In this section, we will discuss the tangible benefits and outcomes of integrating DevOps and Agile practices in Full Stack projects. Faster development cycles, improved product quality, and enhanced customer satisfaction are among the rewards.

In conclusion, this blog has explored the dynamic world of Full Stack Development and the pivotal role that DevOps and Agile practices play in achieving success in this field. Full Stack Developers are at the forefront of innovation, and by embracing these methodologies, they can enhance their efficiency, drive project success, and stay ahead in the ever-evolving tech landscape. We emphasize the importance of continuous learning and adaptation, as the tech industry continually evolves. DevOps and Agile practices provide a foundation for success, and we encourage readers to explore further resources, courses, and communities to foster their growth as Full Stack Developers. By doing so, they can contribute to the development of cutting-edge solutions and make a lasting impact in the world of software development.


AEM aaCS aka Adobe Experience Manager as a Cloud Service

Adobe Experience Manager, the industry standard for digital experience management, is being improved once again: Adobe is finally moving Adobe Experience Manager (AEM), its final on-premises product, to the cloud.

AEM aaCS is a modern, cloud-native application that accelerates the delivery of omnichannel applications.

The AEM Cloud Service introduces the next generation of the AEM product line, moving away from versioned releases like AEM 6.4, AEM 6.5, etc. to a continuous, versionless release model called "AEM as a Cloud Service."

AEM Cloud Service adopts all the benefits of modern cloud-based services:

Availability

The ability for all services to be always on, ensuring that our clients do not suffer any downtime, is one of the major advantages of switching to AEM Cloud Service. In the past, there was a requirement to regularly halt the service for various maintenance operations, including updates, patches, upgrades, and certain standard maintenance activities, notably on the author side.

Scalability

All AEM Cloud Service instances are created with the same default size. AEM Cloud Service is built on an orchestration engine (Kubernetes) that dynamically scales up and down, both horizontally and vertically, according to our clients' demands without requiring their involvement. Scaling can be done manually or automatically.

Updated Code Base

This might be the most beneficial and much anticipated function that AEM Cloud Service offers to consumers. With the AEM Cloud Service, Adobe will handle upgrading all instances to the most recent code base. No downtime will be experienced throughout the update process.

Self Evolving

AEM Cloud Service continually improves by learning from the projects our clients deploy. We regularly examine and validate content, code, and settings against best practices to help our clients understand how to accomplish their business objectives. AEM Cloud Service components that include health checks can self-heal.

AEM as a Cloud Service: Changes and Challenges

When you begin working with it, you will notice many changes in the AEM Cloud Service jar. Here are a few significant changes that might affect how we work with AEM:

1)The major performance bottleneck that most large enterprise DAM customers face is bulk uploading of assets on the author instance, after which the DAM Update Asset workflow degrades the performance of the entire author instance. To solve this, AEM Cloud Service introduces Asset Microservices for serverless asset processing powered by Adobe I/O. Now, when an author uploads an asset, it goes directly to cloud binary storage, and Adobe I/O is then triggered to handle further processing using the renditions and other properties that have been configured.

2)Due to Adobe's complete management of AEM cloud service, developers and operations personnel may not be able to directly access logs. As of right now, the only way I know of to request access, error, dispatcher, and other logs will be via a cloud manager download link.

3)The only way for AEM Leads to deploy is through cloud manager, which is subject to stringent CI/CD pipeline quality checks. At this point, you should concentrate on test-driven development with greater than 50% test coverage. Go to https://docs.adobe.com/content/help/en/experience-manager-cloud-manager/using/how-to-use/understand-your-test-results.html for additional information.

4)AEM as a cloud service does not currently support AEM screens or AEM Adaptive forms.

5)Continuous updates will be pushed to the cloud-based AEM baseline image to support versionless releases. Consequently, customizations of the Assets UI console or of internal /libs/granite nodes, which up to AEM 6.5 could be used as a workaround to meet customer requirements, are no longer possible, because those nodes are replaced with each baseline image update.

6)A local SonarQube setup cannot use the code quality rules that Cloud Manager applies before code is pushed to Git, which I believe will increase development time and the number of Git commits. Once the code is pushed to the Git repository and the build starts, Cloud Manager runs the Sonar checks and reports what is wrong. As a precaution, I recommend making sure your code passes the default rules in your local environment, and updating your local rules whenever you encounter a new violation while pushing code to the Cloud Manager Git repository.
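Because Cloud Manager's coverage gate is only evaluated after a push, it can help to estimate coverage locally. SonarQube defines overall coverage as `(CT + CF + LC) / (2*B + EL)`, where CT and CF are the conditions evaluated to true and to false at least once, LC is the covered lines, B is the total number of conditions, and EL is the number of executable lines. A minimal sketch, assuming the 50% threshold mentioned in item 3 and that Cloud Manager uses this SonarQube definition:

```python
def sonar_coverage(ct, cf, lc, b, el):
    """Overall coverage as SonarQube defines it: (CT + CF + LC) / (2*B + EL)."""
    denominator = 2 * b + el
    if denominator == 0:
        raise ValueError("no conditions or executable lines to cover")
    return (ct + cf + lc) / denominator


def passes_coverage_gate(coverage, threshold=0.5):
    """Check coverage against an assumed 50% quality-gate threshold."""
    return coverage >= threshold


if __name__ == "__main__":
    # Hypothetical numbers from a local test run.
    cov = sonar_coverage(ct=8, cf=6, lc=70, b=10, el=100)
    print(f"coverage={cov:.1%} passes={passes_coverage_gate(cov)}")
```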

AEM Cloud Service Does Not Support These Features

1. AEM Sites Commerce add-on

2. Screens add-on

3. Communities add-on

4. AEM Forms

5. Access to Classic UI

6. Page Editor in Developer Mode

7. /apps or /libs are read-only in dev/stage/prod environments – changes need to come in via the CI/CD pipeline that builds the code from the Git repo.

8. OSGi bundles and settings: the dev, stage, and production environments do not support the web console.

If you encounter any difficulties or observe any issues, please let me know. It will be useful for the AEM community.


Microsoft Azure Fundamentals AI-900 (Part 5)

Microsoft Azure AI Fundamentals: Explore visual tools for machine learning

What is machine learning? A technique that uses math and statistics to create models that predict unknown values

Types of Machine learning

Regression - predict a continuous value, like a price, a sales total, a measure, etc

Classification - determine a class label.

Clustering - determine labels by grouping similar information into label groups

x = features

y = label

Azure Machine Learning Studio

You can use the workspace to develop solutions with the Azure ML service on the web portal or with developer tools

Web portal for ML solutions in Azure

Capabilities for preparing data, training models, publishing and monitoring a service.

The first step is to assign a workspace to the studio.

Compute targets are cloud-based resources which can run model training and data exploration processes

Compute Instances - Development workstations that data scientists can use to work with data and models

Compute Clusters - Scalable clusters of VMs for on demand processing of experiment code

Inference Clusters - Deployment targets for predictive services that use your trained models

Attached Compute - Links to existing Azure compute resources like VMs or Azure Databricks clusters

What is Azure Automated Machine Learning

Jobs have multiple settings

Provide information needed to specify your training scripts, compute target and Azure ML environment and run a training job

Understand the AutoML Process

An ML model must be trained with existing data

Data scientists spend lots of time pre-processing and selecting data

This is time consuming and often makes inefficient use of expensive compute hardware

In Azure ML data for model training and other operations are encapsulated in a data set.

You create your own dataset.

Classification (predicting categories or classes)

Regression (predicting numeric values)

Time series forecasting (predicting numeric values at a future point in time)

After part of the data is used to train a model, the rest of the data is used to iteratively test, or cross-validate, the model
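Azure AutoML performs the splitting and cross-validation for you; the sketch below only illustrates the two ideas in plain Python (a shuffled hold-out split and k-fold validation indices) and is not Azure's implementation. The function names and the 30% test fraction are illustrative assumptions.

```python
import random


def train_test_split(rows, test_fraction=0.3, seed=0):
    """Shuffle rows, then hold back test_fraction of them for validation."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def k_fold_indices(n, k):
    """Yield (train_indices, validation_indices) for k-fold cross-validation.

    Each index appears in exactly one validation fold; the first n % k
    folds get one extra item so fold sizes differ by at most one.
    """
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, validation
        start += size
```

In k-fold cross-validation the model is trained k times, each time validated on a different fold, and the k scores are averaged.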

The metric is calculated by comparing the actual known label or value with the predicted one

The difference between the actual known value and the predicted value is known as the residual; residuals indicate the amount of error in the model.

Root Mean Squared Error (RMSE) is a performance metric. The smaller the value, the more accurate the model's predictions are

Normalized root mean squared error (NRMSE) standardizes the metric to be used between models which have different scales.
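To make residuals, RMSE, and NRMSE concrete, here is a small sketch in plain Python. It assumes NRMSE is RMSE divided by the range (max minus min) of the true values, which is one common normalization; the sample numbers are made up.

```python
import math


def residuals(actual, predicted):
    """Residual = actual value minus predicted value."""
    return [a - p for a, p in zip(actual, predicted)]


def rmse(actual, predicted):
    """Square root of the mean squared residual, in the label's units."""
    res = residuals(actual, predicted)
    return math.sqrt(sum(r * r for r in res) / len(res))


def nrmse(actual, predicted):
    """RMSE normalized by the range of the true values (unitless)."""
    value_range = max(actual) - min(actual)
    return rmse(actual, predicted) / value_range


if __name__ == "__main__":
    y_true = [3, 5, 7, 9]
    y_pred = [2, 5, 8, 9]
    print(residuals(y_true, y_pred), rmse(y_true, y_pred), nrmse(y_true, y_pred))
```

Because NRMSE is unitless, it can compare models whose labels are on different scales, for example prices in dollars versus temperatures in degrees.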

Residual histogram: shows the frequency of residual value ranges.

Residuals represent the variance between predicted and true values that can't be explained by the model, in other words, errors.

Most frequently occurring residual values (errors) should be clustered around zero.

You want small errors, with fewer errors at the extreme ends of the scale.
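A residual histogram just counts how many residuals fall into each value range. A minimal sketch, assuming fixed-width bins keyed by their lower edge (the bin width and sample residuals are arbitrary):

```python
import math
from collections import Counter


def residual_histogram(residuals, bin_width=1.0):
    """Count residuals per bin; each key is the bin's lower edge."""
    counts = Counter(math.floor(r / bin_width) * bin_width for r in residuals)
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    errors = [-0.5, 0.2, 0.4, 1.5, -2.1, 0.1, -0.3]
    for lower, count in residual_histogram(errors).items():
        print(f"[{lower:+.1f}, {lower + 1.0:+.1f}): {'#' * count}")
```

For a good model, the tallest bars sit in the bins around zero and the outer bins are nearly empty.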

Predicted vs. True chart: should show a diagonal trend where the predicted value correlates closely with the true value

The dotted line shows a perfect model's performance

The closer your model's average predicted values are to the dotted line, the better.

Services can be deployed as an Azure Container Instance (ACI) or to an Azure Kubernetes Service (AKS) cluster

For production AKS is recommended.

Identify regression machine learning scenarios

Regression is a form of ML

Understands the relationships between variables to predict a desired outcome

Predicts a numeric label or outcome based on variables (features)

Regression is an example of supervised ML
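As a concrete example of supervised regression using the notes' notation (x = feature, y = label), here is ordinary least squares with a single feature in plain Python. It is an illustrative sketch, not one of the designer's regression components specifically.

```python
def fit_simple_linear_regression(x, y):
    """Fit y ≈ slope * x + intercept by ordinary least squares."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Predict labels for new feature values."""
    return [slope * xi + intercept for xi in x]


if __name__ == "__main__":
    # Training data with known labels (historic features and label values).
    slope, intercept = fit_simple_linear_regression([1, 2, 3, 4], [3, 5, 7, 9])
    print(slope, intercept, predict(slope, intercept, [5]))
```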

What is Azure Machine Learning designer

Pipelines allow you to organize, manage, and reuse complex ML workflows across projects and users

Pipelines start with the dataset you want to use to train the model

Each time you run a pipeline, the context (history) is stored as a pipeline job

Components encapsulate one step in a machine learning pipeline.

Like a function in programming

In a pipeline project, you access data assets and components from the Asset Library tab

You can create data assets on the Data tab from local files, web files, open datasets, and a datastore

Data assets appear in the Asset Library

Azure ML job executes a task against a specified compute target.

Jobs allow systematic tracking of your ML experiments and workflows.

Understand steps for regression

To train a regression model, your data set needs to include historic features and known label values.

Use the designer's Score Model component to generate the predicted label values

Connect all the components that will run in the experiment

Mean Absolute Error (MAE): the average absolute difference between predicted and true values

It is based on the same unit as the label

The lower the value is, the better the model is predicting

Root Mean Squared Error (RMSE): the square root of the mean squared difference between predicted and true values

Metric based on the same unit as the label.

A larger difference between MAE and RMSE indicates greater variance in the individual label errors

Relative Squared Error (RSE): relative metric between 0 and 1 based on the square of the differences between predicted and true values

The closer to 0, the better the model is performing.

Since the value is relative, it can compare different models with different label units

Relative Absolute Error (RAE): relative metric between 0 and 1 based on the absolute differences between predicted and true values

The closer to 0, the better the model is performing.

Can be used to compare models where the labels are in different units

Coefficient of Determination, also known as R-squared

Summarizes how much of the variance between predicted and true values is explained by the model

The closer to 1, the better the model is performing
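The metrics above can be computed by hand as a check on what the Evaluate Model output reports. This sketch uses the standard textbook definitions (relative errors are normalized by the error of always predicting the mean of the true values); it is an assumption that the designer uses exactly these formulas. Under these definitions RSE and RAE can exceed 1 for a model that is worse than predicting the mean.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MAE, RMSE, RSE, RAE, and R-squared for numeric predictions."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n

    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)

    # Baselines: total error of a "model" that always predicts the mean label.
    sq_baseline = sum((t - mean_true) ** 2 for t in y_true)
    abs_baseline = sum(abs(t - mean_true) for t in y_true)

    rse = sum(e * e for e in errors) / sq_baseline
    rae = sum(abs(e) for e in errors) / abs_baseline
    r2 = 1 - rse  # coefficient of determination
    return {"MAE": mae, "RMSE": rmse, "RSE": rse, "RAE": rae, "R2": r2}


# Hypothetical true labels and predictions.
metrics = regression_metrics([2, 4, 6, 8], [3, 4, 5, 8])
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because RMSE squares errors before averaging, a few large misses raise it more than MAE, which is why the gap between the two hints at how uneven the individual errors are.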

Remove the training components from your pipeline and replace them with web service inputs and outputs to handle web requests

It performs the same data transformations as the training pipeline on new data

It then uses the trained model to infer (predict) label values based on the features.
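The key point of an inference pipeline is that transformation parameters learned during training are reused unchanged on new data. A minimal sketch, assuming a min-max scaling step and a stand-in linear "trained model"; the class, function names, and numbers are hypothetical.

```python
class MinMaxScaler:
    """Hypothetical transformation: learns min/max once, then reuses them."""

    def fit(self, values):
        self.lo, self.hi = min(values), max(values)
        return self

    def transform(self, values):
        span = (self.hi - self.lo) or 1
        return [(v - self.lo) / span for v in values]


# Training pipeline: learn the scaling from the training features.
train_x = [10, 20, 30]
scaler = MinMaxScaler().fit(train_x)


def trained_model(scaled_x):
    # Stand-in for a model produced by training: a fixed linear function.
    return 5 + 10 * scaled_x


def inference(new_x):
    """Inference pipeline: same transformation (same lo/hi), then the model."""
    return [trained_model(x) for x in scaler.transform(new_x)]


print(inference([20, 40]))  # [10.0, 20.0]
```

Refitting the scaler on incoming requests would shift the feature values the model sees, so the learned `lo`/`hi` are carried over rather than recomputed.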

Create a classification model with Azure ML designer

Classification is a form of ML used to predict which category an item belongs to

Like regression, this is a supervised ML technique.

Understand steps for classification

True Positive - The model predicts the positive label, and the data actually has the label

False Positive - The model predicts the positive label, but the data does not have the label

False Negative - The model predicts the negative label, but the data does have the label

True Negative - The model predicts the negative label, and the data does not have the label

For multi-class classification, the same approach is used: a model with 3 possible classes would have a 3x3 matrix.

The diagonal line of cells is where the predicted and actual labels match
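A multi-class confusion matrix is just a count of (actual, predicted) pairs. This sketch assumes rows are actual classes and columns are predicted classes (tools differ on this orientation); the class names and data are hypothetical.

```python
def confusion_matrix(actual, predicted, classes):
    """Count predictions: rows = actual class, columns = predicted class."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1
    return matrix


classes = ["cat", "dog", "bird"]
actual = ["cat", "cat", "dog", "dog", "bird", "bird"]
predicted = ["cat", "dog", "dog", "dog", "bird", "cat"]

m = confusion_matrix(actual, predicted, classes)
for name, row in zip(classes, m):
    print(name, row)

# Cells on the diagonal are the cases where prediction and actual label match.
correct = sum(m[i][i] for i in range(len(classes)))
print("correct:", correct)  # correct: 4
```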

Precision: the fraction of cases classified as positive that are actually positive

True positives divided by (true positives + false positives)

Recall: the fraction of positive cases correctly identified

True positives divided by (true positives + false negatives)

F1-score: an overall metric that combines precision and recall (their harmonic mean)
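The formulas above can be checked with a few lines of Python on binary labels (1 = positive, 0 = negative). The data is hypothetical, and the zero-division fallbacks to 0.0 are an assumption for illustration.

```python
def binary_metrics(actual, predicted):
    """Confusion counts plus precision, recall, and F1 for 0/1 labels."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if p == 1 and a == 1)
    fp = sum(1 for a, p in pairs if p == 1 and a == 0)
    fn = sum(1 for a, p in pairs if p == 0 and a == 1)
    tn = sum(1 for a, p in pairs if p == 0 and a == 0)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall, "f1": f1}


result = binary_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 1, 0, 1, 0])
print(result)
```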

Classification models predict a probability for each possible class

For binary classification models, the probability is between 0 and 1

Setting the threshold defines when a probability is interpreted as 1 or 0. If it's set to 0.5, probabilities from 0.5 to 1.0 are interpreted as 1 and those below 0.5 as 0
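Applying a threshold is a one-line comparison. This sketch assumes that a probability exactly equal to the threshold counts as class 1, matching the 0.5 to 1.0 range described above; the probabilities are hypothetical.

```python
def apply_threshold(probabilities, threshold=0.5):
    """Map predicted probabilities to class 1 (>= threshold) or class 0."""
    return [1 if p >= threshold else 0 for p in probabilities]


probs = [0.1, 0.45, 0.5, 0.9]
print(apply_threshold(probs))       # [0, 0, 1, 1]
print(apply_threshold(probs, 0.3))  # [0, 1, 1, 1]
```

Lowering the threshold labels more cases as positive, which tends to raise recall at the cost of precision.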

Recall is also known as the True Positive Rate

It has a corresponding False Positive Rate

Plotting these two rates against each other for every threshold value between 0 and 1 produces a curve

This curve is the Receiver Operating Characteristic (ROC) curve.

In a perfect model, the curve would go straight up the left side to the top-left corner

The area under the curve (AUC) summarizes the curve in a single number; the closer to 1, the better the model
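The ROC curve can be traced by sweeping the threshold and recording (False Positive Rate, True Positive Rate) at each step; AUC is then the area under those points, here computed with the trapezoid rule. A plain-Python sketch with hypothetical labels and scores; it assumes both classes are present.

```python
def roc_points(actual, scores):
    """Return (FPR, TPR) pairs for thresholds from high to low."""
    positives = sum(actual)
    negatives = len(actual) - positives
    # Start above the highest score (nothing predicted positive) so the curve begins at (0, 0).
    thresholds = [float("inf")] + sorted(set(scores), reverse=True)
    points = []
    for t in thresholds:
        tp = sum(1 for a, s in zip(actual, scores) if s >= t and a == 1)
        fp = sum(1 for a, s in zip(actual, scores) if s >= t and a == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the curve by the trapezoid rule (points sorted by FPR)."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


perfect = roc_points([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9])
mixed = roc_points([0, 1, 0, 1], [0.2, 0.4, 0.6, 0.8])
print(auc(perfect), auc(mixed))  # 1.0 0.75
```

The perfect model reaches a True Positive Rate of 1 before any false positives appear, which is the "top-left corner" shape.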

Remove the training components from your pipeline and replace them with web service inputs and outputs to handle web requests

It performs the same data transformations as the training pipeline on new data

It then uses the trained model to infer (predict) label values based on the features.

Create a Clustering model with Azure ML designer

Clustering is used to group similar objects together based on features.

Clustering is an example of unsupervised learning: you train a model to separate items into clusters based only on their features, without known labels.

Understand steps for clustering

Prebuilt components exist that allow you to clean the data, normalize it, join tables and more

Requires a dataset that includes multiple observations of the items you want to cluster

Requires numeric features that can be used to determine similarities between individual cases

The K-Means algorithm works by:

Initializing K coordinates as randomly selected points, called centroids, in an n-dimensional space (n is the number of dimensions in the feature vectors)

Plotting the feature vectors as points in the same space and assigning each point to its closest centroid

Moving each centroid to the middle (mean) of the points allocated to it

Reassigning each point to its closest centroid after the move

Repeating the last two steps until the cluster allocations stop changing (or a maximum number of iterations is reached)
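The K-Means steps above map directly onto a short loop. This is a minimal plain-Python sketch, not the designer's K-Means Clustering component; the seed, iteration cap, empty-cluster handling, and data are assumptions for illustration.

```python
import math
import random


def kmeans(points, k, max_iterations=100, seed=0):
    """Cluster points (tuples of numbers) into k groups with Lloyd's K-Means."""
    rng = random.Random(seed)
    # Step 1: initialize k centroids as randomly selected data points.
    centroids = rng.sample(points, k)
    assignments = None
    for _ in range(max_iterations):
        # Step 2: assign each point to its closest centroid.
        new_assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Stop once the cluster allocations no longer change.
        if new_assignments == assignments:
            break
        assignments = new_assignments
        # Step 3: move each centroid to the mean of the points allocated to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments


# Hypothetical numeric feature vectors forming two obvious groups.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, assignments = kmeans(points, k=2)
print(centroids, assignments)
```

The cluster numbers themselves are arbitrary; only which points share a cluster is meaningful.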

Maximal Distance to Cluster Center: the maximum of the distances between each point and the centroid of that point’s cluster.

If the value is high, it can mean that the cluster is widely dispersed.

With the Average Distance to Cluster Center, we can determine how spread out the cluster is
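Both cluster metrics come from the distances between each point and its own cluster's centroid. A sketch assuming per-cluster averages and maxima of Euclidean distance (the exact formula Azure ML uses is an assumption here); the points, centroids, and assignments are hypothetical.

```python
import math


def cluster_distance_metrics(points, centroids, assignments):
    """Average and maximum distance to the centroid, per cluster."""
    metrics = {}
    for c, centroid in enumerate(centroids):
        dists = [math.dist(p, centroid) for p, a in zip(points, assignments) if a == c]
        if not dists:  # skip clusters with no members
            continue
        metrics[c] = {"average": sum(dists) / len(dists), "maximum": max(dists)}
    return metrics


points = [(0, 0), (2, 0), (4, 0), (10, 0)]
centroids = [(2, 0), (10, 0)]
assignments = [0, 0, 0, 1]
print(cluster_distance_metrics(points, centroids, assignments))
```

A maximum well above the average suggests a few outlying points stretch the cluster.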

Remove the training components from your pipeline and replace them with web service inputs and outputs to handle web requests

It performs the same data transformations as the training pipeline on new data

It then uses the trained model to infer (predict) label values based on the features.


Join the Future of IT with Ievision’s Advanced DevOps Master Diploma

Advance Your Career with the DevOps Master Diploma from Ievision

In today’s technology-driven landscape, DevOps has become a critical framework for enhancing collaboration between development and operations teams, enabling faster and more efficient software delivery. The DevOps Master Diploma offered by Ievision is a comprehensive program designed to equip professionals with advanced knowledge, tools, and practices essential for success in the rapidly evolving IT industry.

Why Enroll in Ievision’s DevOps Master Diploma?

Our DevOps Master Diploma stands out for several reasons:

Comprehensive Curriculum:

The program covers all key aspects of DevOps, including automation, continuous integration/continuous delivery (CI/CD), containerization with Docker and Kubernetes, and infrastructure as code (IaC). You will gain deep knowledge of popular DevOps tools such as Jenkins, Git, and Terraform.

Hands-On Training:

At Ievision, we emphasize practical learning. You’ll engage in real-world simulations and hands-on labs that allow you to apply the theory in live environments. This immersive approach ensures that you’re prepared to implement DevOps solutions effectively.

Expert Instructors:

Learn from industry veterans who bring extensive experience in implementing DevOps strategies across diverse industries. Their expertise provides you with the latest trends and actionable insights needed to excel.

Globally Recognized Certification:

Earning the DevOps Master Diploma from Ievision will enhance your professional credentials. The certification is recognized globally, making you a desirable candidate for top-tier jobs in DevOps, automation, and cloud management.

Key Benefits of the DevOps Master Diploma

By completing the DevOps Master Diploma, you’ll gain:

Proficiency in setting up CI/CD pipelines for fast, reliable software releases.

Expertise in using cloud-native technologies and managing infrastructure with AWS, Azure, and GCP.

Hands-on experience with Docker and Kubernetes for containerization and orchestration.

Skills to implement and maintain infrastructure using automation tools like Ansible, Chef, and Puppet.

Career Opportunities

With a DevOps Master Diploma, you can explore lucrative career paths, including:

DevOps Engineer

Cloud Architect

Automation Engineer

Site Reliability Engineer (SRE)

DevOps is a growing field, and companies are constantly on the lookout for certified professionals who can help streamline their development processes and boost efficiency.

Conclusion

The DevOps Master Diploma from Ievision is your gateway to mastering the key skills and technologies that drive innovation in today’s IT landscape. Whether you're looking to boost your career, enhance your expertise, or lead digital transformation in your organization, this comprehensive program equips you with the advanced knowledge and practical experience required to excel. By becoming a certified DevOps professional, you'll be in a prime position to take advantage of the growing demand for skilled experts in cloud computing, automation, and IT operations. Take the next step in your career and become a DevOps leader with Ievision’s globally recognized certification.


Transform Your Business with HawkStack’s Cloud-Native Services

In today’s fast-paced digital landscape, businesses are seeking innovative ways to enhance their performance and stay ahead of the competition. One of the most effective solutions is leveraging cloud-native services, which enable organizations to build and run scalable applications in modern, dynamic environments such as public, private, or hybrid clouds.

At HawkStack, we provide cutting-edge cloud-native services that are designed to help you transform your applications, ensuring superior performance and scalability. Here’s how HawkStack’s cloud-native approach can optimize your business solutions and drive growth.

1. What Are Cloud-Native Services?

Cloud-native services involve using a combination of modern technologies and practices to design, develop, and manage applications that take full advantage of cloud computing frameworks. These services allow businesses to:

Build scalable, resilient applications

Automate deployment processes

Improve performance and security

With cloud-native solutions, companies can respond to changing market demands rapidly, innovate continuously, and reduce time-to-market for new products and services.

2. Key Features of Cloud-Native Architecture

By adopting a cloud-native architecture, your business can benefit from:

Microservices: Decompose monolithic applications into small, independent services that are easier to manage and update.

Containerization: Use Docker to package applications into lightweight containers and Kubernetes to orchestrate them, so they run consistently across any environment.

Automation: Streamline the entire development lifecycle, from build to deployment, with automated CI/CD pipelines.

Scalability: Automatically scale resources up or down to meet demand without manual intervention, ensuring optimized performance.

Serverless Computing: Only pay for the resources you use by leveraging serverless technologies that allocate computing power on an as-needed basis.

3. Why Partner with HawkStack for Cloud-Native Transformation?

HawkStack offers a comprehensive suite of cloud-native services tailored to your business needs. Here’s how we help you achieve optimal performance and agility:

Custom Cloud Solutions: Whether you need to migrate existing applications to the cloud or develop new ones, HawkStack provides custom solutions designed to fit your specific requirements.

End-to-End Support: Our expert team of cloud engineers will guide you through the entire process, from consultation to deployment and beyond.

Performance Optimization: With a focus on performance, we ensure your applications run smoothly and efficiently in any environment, be it public, private, or hybrid cloud.

4. The Benefits of Cloud-Native Services for Your Business

Faster Time-to-Market: Deploy applications quicker and innovate faster with streamlined development processes.

Cost Efficiency: Reduce overhead costs by only paying for the resources you use and leveraging cloud automation.

Improved Reliability: Build resilient applications that recover quickly from failures, ensuring seamless user experiences.

Agility: Respond to business changes with greater agility by scaling applications on-demand.

5. Case Study: Success with Cloud-Native

One of our clients, a fast-growing e-commerce platform, was struggling with the limitations of their legacy system. By partnering with HawkStack, they transformed their monolithic application into a cloud-native, microservices-based architecture. The result? They experienced a 50% improvement in application performance and a 35% reduction in operational costs within just six months.

6. Future-Proof Your Business with HawkStack

The shift to cloud-native architecture is more than just a trend—it’s a necessity for businesses looking to stay competitive in the digital era. By partnering with HawkStack, you’re not only optimizing your current operations but also future-proofing your business for ongoing innovation and success.

Ready to transform your applications and achieve superior performance? Contact HawkStack today and let us help you navigate the future of cloud-native services!

For more details, visit www.hawkstack.com


DevOps for Cloud Native Applications: Best Practices and Challenges

Cloud native applications offer significant benefits over traditional applications, but building and managing them requires adopting DevOps practices to ensure fast and reliable delivery. In this blog, we have discussed the best practices and challenges for DevOps in cloud native applications and how CloudZenix can help you achieve your goals.

Best practices for DevOps in cloud native applications include automation, microservices architecture, CI/CD, and IaC. Automation reduces human error, microservices architecture enables faster delivery and easier maintenance, CI/CD pipelines ensure faster and more reliable delivery, and IaC makes it easier to provision and manage cloud native applications.

Challenges for DevOps in cloud native applications include container orchestration, security, and scalability. Container orchestration can be complex and challenging, security threats are prevalent, and cloud native applications can scale quickly, requiring proper load testing and capacity planning.

At CloudZenix, we provide DevOps consulting, implementation, and management services to help organizations build and manage cloud native applications. Our team of experts can help you identify the right tools and practices for DevOps in cloud native applications and provide services including Kubernetes consulting, CI/CD pipeline consulting, IaC consulting, and security consulting. By partnering with us, you can ensure faster and more reliable delivery of high-quality software while mitigating potential risks and challenges.


Best Kubernetes Management Tools in 2023


Kubernetes is everywhere these days. It is used in the enterprise and even in many home labs. It’s a skill that’s sought after, especially with today’s push for app modernization. Many tools help you manage things in Kubernetes, like clusters, pods, services, and apps. Here’s my list of the best Kubernetes management tools in 2023.


Cloud Automation is crucial for optimizing IT operations in multi-cloud environments. Tools such as Ansible, Terraform, Kubernetes, and AWS CloudFormation enable businesses to streamline workflows, automate repetitive tasks, and enhance infrastructure management. These solutions provide efficiency and scalability, making them indispensable for modern cloud management.


Sify’s DevSecOps Services: Integrating Security into Every Step of Development

In today’s fast-paced digital landscape, businesses require agility, speed, and security to stay competitive. Traditional approaches to application development often treated security as an afterthought, leaving vulnerabilities that could be exploited. Sify’s DevSecOps Services address this challenge by embedding security into every stage of the development process, ensuring businesses can innovate quickly without compromising on safety.

What is DevSecOps?

DevSecOps is a natural evolution of DevOps, a methodology that combines software development (Dev) and IT operations (Ops) to shorten the development lifecycle and deliver high-quality software continuously. DevSecOps integrates security (Sec) into this process, ensuring that security protocols are applied from the initial stages of development, rather than at the end.

Sify’s DevSecOps Services provide a comprehensive solution that brings development, operations, and security teams together, allowing businesses to accelerate their release cycles while maintaining high security standards.

Key Benefits of Sify’s DevSecOps Services

End-to-End Security Integration

Sify’s DevSecOps approach ensures security is woven into every phase of the software development lifecycle (SDLC). From design and coding to testing and deployment, security is treated as a critical component. This proactive approach reduces the chances of security breaches and mitigates risks before they become significant issues.

Automated Security Testing

One of the key features of Sify’s DevSecOps services is the automation of security testing. By embedding automated security tools into the CI/CD (Continuous Integration/Continuous Delivery) pipeline, Sify enables early detection of vulnerabilities. Automated scanning tools help detect code issues, vulnerabilities, and configuration errors as soon as they arise, leading to faster resolutions.

Continuous Monitoring and Threat Detection

Sify’s DevSecOps services include continuous monitoring of applications and infrastructure, ensuring real-time threat detection and response. Security operations are integrated with development cycles, providing instant feedback and minimizing the time it takes to address vulnerabilities. This continuous feedback loop creates a security-first culture within the organization.

Compliance and Governance

Sify ensures that all development practices adhere to industry standards and compliance requirements, such as GDPR, HIPAA, and PCI-DSS. The DevSecOps model ensures that organizations meet regulatory mandates while maintaining security without slowing down the development process.

Seamless Collaboration

With Sify’s DevSecOps services, businesses can foster a culture of collaboration between development, operations, and security teams. This alignment leads to better communication, faster issue resolution, and a shared responsibility for security. This cultural shift helps organizations maintain agility without compromising security or compliance.

Scalable and Flexible Solutions

Whether an enterprise is running small-scale projects or large, complex applications, Sify’s DevSecOps services are designed to scale according to business needs. The infrastructure can be tailored to fit specific workloads, ensuring that security processes are optimized for both large and small teams.

DevSecOps for Cloud-Native Environments

As businesses increasingly adopt cloud-native technologies like microservices and containerization, Sify’s DevSecOps services are built to support these modern architectures. Security is embedded within containerized environments, and tools like Kubernetes and Docker are integrated to ensure security across the cloud-native landscape.

The Sify Advantage

Sify’s DevSecOps services stand out for their comprehensive approach to security, seamless integration with modern development practices, and deep expertise in managing complex IT environments. The team of experts at Sify works closely with organizations to understand their unique needs, tailor DevSecOps solutions, and ensure smooth implementation without disrupting ongoing projects.

By adopting Sify’s DevSecOps services, businesses can accelerate their software development processes while ensuring the highest levels of security. With automated testing, continuous monitoring, and a proactive approach to vulnerability management, Sify empowers enterprises to innovate with confidence.

In a world where security breaches can cost millions and damage a company’s reputation, Sify’s DevSecOps services provide a critical layer of protection that doesn’t slow down innovation. By integrating security into every step of the development process, businesses can remain agile, competitive, and secure. Embrace Sify’s DevSecOps services and take control of your security posture while delivering top-notch software at speed.


Embarking on a Digital Journey: Your Guide to Learning Coding

In today's fast-paced and ever-evolving technology landscape, DevOps has emerged as a crucial and transformative field that bridges the gap between software development and IT operations. The term "DevOps" is a portmanteau of "Development" and "Operations," emphasizing the importance of collaboration, automation, and efficiency in the software delivery process. DevOps practices have gained widespread adoption across industries, revolutionizing the way organizations develop, deploy, and maintain software. This paradigm shift has led to a surging demand for skilled DevOps professionals who can navigate the complex and multifaceted DevOps landscape.

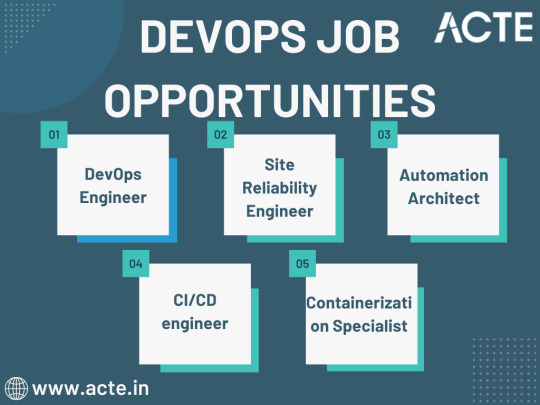

Exploring DevOps Job Opportunities

DevOps has given rise to a spectrum of job opportunities, each with its unique focus and responsibilities. Let's delve into some of the key DevOps roles that are in high demand:

1. DevOps Engineer

At the heart of DevOps lies the DevOps engineer, responsible for automating and streamlining IT operations and processes. DevOps engineers are the architects of efficient software delivery pipelines, collaborating closely with development and IT teams. Their mission is to accelerate the software delivery process while ensuring the reliability and stability of systems.

2. Site Reliability Engineer (SRE)

Site Reliability Engineers, or SREs, are a subset of DevOps engineers who specialize in maintaining large-scale, highly reliable software systems. They focus on critical aspects such as availability, latency, performance, efficiency, change management, monitoring, emergency response, and capacity planning. SREs play a pivotal role in ensuring that applications and services remain dependable and performant.

3. Automation Architect

Automation is a cornerstone of DevOps, and automation architects are experts in this domain. These professionals design and implement automation solutions that optimize software development and delivery processes. By automating repetitive and manual tasks, they enhance efficiency and reduce the risk of human error.

4. Continuous Integration/Continuous Deployment (CI/CD) Engineer

CI/CD engineers specialize in creating, maintaining, and optimizing CI/CD pipelines. The CI/CD pipeline is the backbone of DevOps, enabling the automated building, testing, and deployment of code. CI/CD engineers ensure that the pipeline operates seamlessly, enabling rapid and reliable software delivery.

5. Containerization Specialist

The rise of containerization technologies like Docker and orchestration tools such as Kubernetes has revolutionized software deployment. Containerization specialists focus on managing and scaling containerized applications, making them an integral part of DevOps teams.

Navigating the DevOps Learning Journey

To embark on a successful DevOps career, individuals often turn to comprehensive training programs and courses that equip them with the necessary skills and knowledge. The DevOps learning journey typically involves the following courses:

1. DevOps Foundation

A foundational DevOps course covers the basics of DevOps practices, principles, and tools. It serves as an excellent starting point for beginners, providing a solid understanding of the DevOps mindset and practices.

2. DevOps Certification

Advanced certification courses are designed for those who wish to delve deeper into DevOps methodologies, CI/CD pipelines, and various tools like Jenkins, Ansible, and Terraform. These certifications validate your expertise and enhance your job prospects.

3. Docker and Kubernetes Training

Containerization and container orchestration are two essential skills in the DevOps toolkit. Courses focused on Docker and Kubernetes provide in-depth knowledge of these technologies, enabling professionals to effectively manage containerized applications.

4. AWS or Azure DevOps Training

Specialized DevOps courses tailored to cloud platforms like AWS or Azure are essential for those working in a cloud-centric environment. These courses teach how to leverage cloud services in a DevOps context, further streamlining software development and deployment.

5. Advanced DevOps Courses

For those looking to specialize in specific areas, advanced DevOps courses cover topics like DevOps security, DevOps practices for mobile app development, and more. These courses cater to professionals who seek to expand their expertise in specific domains.

As the DevOps landscape continues to evolve, the need for high-quality training and education becomes increasingly critical. This is where ACTE Technologies steps into the spotlight as a reputable choice for comprehensive DevOps training.

They offer carefully designed courses intended to impart both foundational knowledge and practical, real-world experience. Under the direction of knowledgeable instructors, students can quickly progress toward becoming skilled DevOps engineers. The courses go beyond theory to provide practical insight into industry practices and challenges.

Your journey toward mastering DevOps practices and pursuing a successful career begins here. In the digital realm, where possibilities are limitless and innovation knows no bounds, ACTE Technologies serves as a gateway to a thriving DevOps career. With a diverse array of courses and expert instruction, you'll find the resources you need to thrive in this ever-evolving domain.
