#LLM AI

Explore tagged Tumblr posts

Text

sorry to beat this dead horse again but it really is like. irritating that so much anti-ai sentiment and humor still misses the point in a really reactionary kind of way.

And I'm not just using "reactionary" as a synonym for "people saying stuff i think is mean, bad, or unfair".

No, I *literally mean* it is the *definition* of reactionary: a person who favors a return to a previous state of society which they believe possessed positive characteristics absent from contemporary society.

Damn near all of it revolves around that.

The idea of not using AI art so that we can go back to some kind of imagined "good ol' days" when people "respected" things like art, academic integrity, and people's time and expertise as a greater whole.

Except.

The entire reason AI (specifically, LLM-based generative AI) got to the point it's at is because these "good ol' days" DIDN'T EXIST.

People disrespecting those things outside of a few special exceptions that manage to sufficiently "prove their worth" is already baked into and incentivised by our culture and economics, and already has been for centuries!

It is not a *cause* of decline or "brainrot" or what have you, it is a direct symptom of how our system already was.

And therefore, getting rid of it and mocking everyone who ever uses it does not and fundamentally cannot cause something that never actually existed on a societal level to magically somehow "return".

All it will do is stop providing you with a phenomenon that acts as a magnifying lens, one that makes it easier to see what's already there. The underlying problem itself will not actually go away if you get your way.

Also, individuals you anecdotally know who respect these things are not the subject or point here. This is about what capitalist society overall values and incentivises.

However, there is a way to actually get a world where people respect each other's time, effort, and expertise more, and are not heavily incentivised to constantly cheapen and devalue those things on a systemic level.

It's just not to go back to a time where it could more easily *seem* like they did because of the inherent limits of individual perspective.

It's to let go of the reactionary individualist ideology and collectively work towards socialist systems that can place a fairer value on what current society is inherently incentivised to reduce to the absolute minimum value it can get away with, LLMs or not.

Even if you don't/can't do that, you should at the bare minimum acknowledge that if you claim to be progressive, you'd be a hell of a lot more progressive if you avoid sentiments that are, by literal definition, reactionary.

Which means that honestly. You probably should at least stop reblogging jokes that rely entirely on the sentiment of "lol guys, aren't these Dumb Lazy AI Bros soo pathetic? Aren't we ~better~ than them?"

Because if you're just parroting a reactionary sentiment, you're not.

You're really not.

2 notes

Text

Y'all, I know that when so-called AI generates ridiculous results it's hilarious, and I find it as funny as the next guy, but I NEED y'all to remember that every single time an AI answer is generated it uses 5x as much energy as a conventional web search and burns through 10 ml of water. FOR EVERY ANSWER. Each big LLM is responsible for about 300,000 kilograms of carbon dioxide emissions.

LLMs are killing the environment, and when we generate answers for the lolz we're still contributing to it.

Stop using it. Stop using it for a.n.y.t.h.i.n.g. We need to kill it.

Sources:

#unforth rambles#fuck ai#llms#sorry but i think this every time I see a reblog with more haha funny answers#how many tries did it take to generate the absurd#how many resources got wasted just to prove what we already know - that these tools are a joke#please stop contributing to this

63K notes

Text

“Slopsquatting” in a nutshell:

1. LLM-generated code tries to run code from online software packages. Which is normal, that’s how you get math packages and stuff but

2. The packages don’t exist. Which would normally cause an error but

3. Nefarious people have made malware under the package names that LLMs make up most often. So

4. Now the LLM code points to malware.

https://www.theregister.com/2025/04/12/ai_code_suggestions_sabotage_supply_chain/
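A common defensive habit, sketched here in Python under our own assumptions (it is not taken from the linked article): never install a dependency an LLM names until a human has checked it, for example by comparing each suggested name against a list your team has already vetted. The `VETTED` set, the `vet_requirements` helper and the package name `totally_real_mathlib` are all hypothetical.

```python
import re

# Hypothetical allowlist: package names your team has already reviewed,
# stored in normalized form (lowercase, '-' as the only separator).
VETTED = {"numpy", "pandas", "requests", "scipy"}


def normalize(name: str) -> str:
    """Normalize a package name as PEP 503 does: lowercase, and collapse
    runs of '-', '_' and '.' into a single '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def vet_requirements(lines):
    """Split requirement lines into (approved, unknown) package names.

    Comments are dropped and only the bare name is checked; version
    specifiers such as '>=1.0' and extras such as '[socks]' are ignored."""
    approved, unknown = [], []
    for line in lines:
        line = line.split("#", 1)[0].strip()
        if not line:
            continue  # blank or comment-only line
        bare = re.split(r"[<>=!~\[;\s]", line, maxsplit=1)[0]
        name = normalize(bare)
        if name in VETTED:
            approved.append(name)
        else:
            unknown.append(name)  # needs a human to look before installing
    return approved, unknown


if __name__ == "__main__":
    suggested = [
        "numpy>=1.26",
        "Requests",
        "totally_real_mathlib==0.1  # suggested by the LLM",
    ]
    approved, unknown = vet_requirements(suggested)
    print("approved:", approved)  # approved: ['numpy', 'requests']
    print("review by hand:", unknown)  # review by hand: ['totally-real-mathlib']
```

An unknown name is not proof of malware, and a familiar-looking name is no guarantee of safety (a squatted name can sit one character away from a real one); the allowlist only forces someone to look before anything gets installed.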

#slopsquatting#ai generated code#LLM#yes ive got your package right here#why yes it is stable and trustworthy#its readme says so#and now Google snippets read the readme and says so too#no problems ever in mimic software package

8K notes

Text

LLM AI: Enhancing Data Security and Personalization in Life Sciences

In recent years, LLM AI (Large Language Model Artificial Intelligence) has become a revolutionary tool across various industries, including life sciences. This cutting-edge technology has reshaped how businesses handle vast amounts of data, making processes faster, smarter, and more efficient. Today, we will explore the powerful role of LLM AI in the life sciences sector and how it is transforming everything from research and development to healthcare delivery.

What is LLM AI?

LLM AI stands for Large Language Model Artificial Intelligence. It is a type of AI technology that can process and generate human-like text by understanding context, learning from vast datasets, and even mimicking human conversation. One of the most well-known examples of LLM AI is OpenAI’s GPT (Generative Pre-trained Transformer), which has gained popularity for its ability to write essays, answer questions, and assist with tasks in a wide range of industries.

Unlike traditional AI, which relies heavily on rules and explicit instructions, LLM AI leverages massive datasets and advanced algorithms to understand language at a deeper level. This enables it to recognize patterns, make predictions, and even generate new content that is contextually accurate.

The Role of LLM AI in Life Sciences

The life sciences industry deals with complex and highly detailed data. Whether it's pharmaceutical research, patient health data, or clinical trials, the volume of information can be overwhelming. LLM AI has proven to be an invaluable asset in streamlining these processes, making it easier for professionals to access critical insights.

1. Revolutionizing Research and Development

One of the primary applications of LLM AI in life sciences is in research and development (R&D). Scientists and researchers often spend hours sifting through vast amounts of medical literature, experimental data, and clinical studies. With LLM AI, this process can be dramatically accelerated.

LLM AI can analyze and summarize research papers in a fraction of the time it would take a human. It can also spot trends, suggest new research avenues, and even predict outcomes of experiments based on past data. By doing so, LLM AI reduces the time spent on repetitive tasks, allowing researchers to focus on more creative and high-level work.

2. Enhancing Drug Discovery

Another groundbreaking application of LLM AI in life sciences is drug discovery. Traditionally, finding new drugs involves years of lab experiments and clinical trials. However, LLM AI can significantly speed up this process by predicting how different molecules will interact with each other.

By analyzing existing research, chemical databases, and clinical outcomes, LLM AI can suggest potential drug candidates that may not have been considered otherwise. This ability to predict molecular behavior saves valuable time and resources, allowing pharmaceutical companies to bring new drugs to market faster.

3. Optimizing Clinical Trials

Clinical trials are a critical part of the drug development process, but they often face significant delays due to logistical challenges and inefficiencies. LLM AI can help optimize clinical trials by identifying the right patient cohorts, monitoring patient data in real time, and even predicting trial outcomes.

For example, LLM AI can quickly scan medical records to find patients who meet the criteria for a clinical trial, ensuring a more targeted and efficient recruitment process. It can also analyze trial data as it comes in, spotting any adverse reactions or issues before they become major problems.

4. Personalizing Healthcare

In the field of healthcare, LLM AI has the potential to deliver more personalized care. By analyzing patient data, including medical histories, genetic information, and lifestyle factors, LLM AI can help doctors create more accurate treatment plans for individual patients.

Moreover, LLM AI can assist healthcare providers by offering suggestions based on the most recent research or similar patient cases. This level of personalization helps ensure that patients receive the most effective treatments tailored to their unique needs.

The Importance of Private LLMs in Life Sciences

While LLM AI offers significant benefits, privacy and security are major concerns, especially in industries like life sciences where sensitive data is involved. Publicly available AI models can sometimes be vulnerable to data leaks or misuse. To address these concerns, many companies are turning to private LLMs — custom-built language models that can be deployed in a more secure and controlled environment.

A good example of this is BirdzAI, a platform that offers private LLMs designed specifically for the life sciences sector. By using private LLMs, companies in life sciences can ensure that their data remains confidential and protected, while still benefiting from the advanced capabilities of LLM AI. This approach ensures that sensitive medical, genetic, and clinical data is processed securely without compromising privacy.

Benefits of Private LLMs for Life Sciences Companies

Data Security: The main advantage of using private LLMs in life sciences is enhanced data security. With private LLMs, sensitive patient information, clinical trial data, and research results can be kept within the organization’s secure network. This reduces the risks associated with sending data to public AI models.

Customization: Private LLMs can be tailored to meet the specific needs of a life sciences company. Whether it's focused on drug discovery, clinical trials, or patient care, a private LLM can be trained on relevant datasets to provide more accurate insights.

Compliance: Life sciences companies are often required to adhere to strict regulatory standards, such as HIPAA (Health Insurance Portability and Accountability Act) in the United States. Private LLMs help ensure compliance by providing a secure and customizable AI solution that meets these regulations.

Cost-Effective: By using private LLMs, life sciences companies can avoid the high costs associated with public AI platforms. Private models can be optimized for specific tasks, ensuring that companies only pay for what they need.

Challenges and Considerations

While LLM AI offers a plethora of benefits, there are challenges to consider. One of the biggest challenges is the need for high-quality data. LLM AI relies heavily on large, clean datasets to make accurate predictions and generate valuable insights. Therefore, companies must invest in data management and cleaning processes to ensure the reliability of their AI systems.

Another challenge is the need for skilled professionals to manage and interpret AI results. While LLM AI can process data at an incredible speed, it still requires human expertise to interpret its findings and make informed decisions.

Conclusion

LLM AI is undoubtedly changing the landscape of life sciences, offering new opportunities to enhance research, drug discovery, clinical trials, and healthcare delivery. With the added security of private LLMs, life sciences companies can harness the power of AI without compromising patient privacy or regulatory compliance. As the technology continues to evolve, the potential for LLM AI in life sciences is limitless, and it will no doubt remain a driving force behind innovation in the field.

0 notes

Text

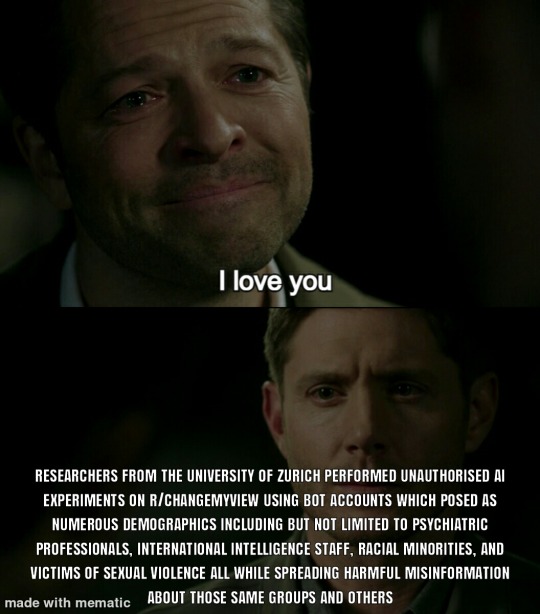

Experimental ethics are more of a guideline really

3K notes

Text

TERFS FUCK OFF

One of the common mistakes I see for people relying on "AI" (LLMs and image generators) is that they think the AI they're interacting with is capable of thought and reason. It's not. This is why using AI to write essays or answer questions is a really bad idea because it's not doing so in any meaningful or thoughtful way. All it's doing is producing the statistically most likely expected output to the input.

This is why you can ask ChatGPT "is mayonnaise a palindrome?" and it will respond "No it's not." but then you ask "Are you sure? I think it is" and it will respond "Actually it is! Mayonnaise is spelled the same backward as it is forward"

All it's doing is trying to sound like it's providing a correct answer. It doesn't actually know what a palindrome is even if it has a function capable of checking for palindromes (it doesn't). It's not "Artificial Intelligence" by any meaning of the term, it's just called AI because that's a discipline of programming. It doesn't inherently mean it has intelligence.
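For contrast, a real palindrome check is a few lines of ordinary deterministic code, and it gives the same answer however many times you ask "are you sure?". A minimal Python sketch (the function name `is_palindrome` is our own):

```python
def is_palindrome(text: str) -> bool:
    """Return True if text reads the same forwards and backwards,
    ignoring case, spaces and punctuation."""
    letters = [c.lower() for c in text if c.isalnum()]
    return letters == letters[::-1]


print(is_palindrome("mayonnaise"))  # False
print(is_palindrome("racecar"))  # True
print(is_palindrome("A man, a plan, a canal: Panama"))  # True
```

The function compares the cleaned-up letters with their reverse; no amount of pushback from the user changes that comparison.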

So if you use an AI and expect it to make something that's been made with careful thought or consideration, you're gonna get fucked over. It's not even a quality issue. It just can't consistently produce things of value because there's no understanding there. It doesn't "know" because it can't "know".

38K notes

Text

AI hasn't improved in 18 months. It's likely that this is it. There is currently no evidence the capabilities of ChatGPT will ever improve. It's time for AI companies to put up or shut up.

I'm just re-iterating this excellent post from Ed Zitron, but it's not left my head since I read it and I want to share it. I'm also taking some talking points from Ed's other posts. So basically:

We keep hearing AI is going to get better and better, but these promises seem to be coming from a mix of companies engaging in wild speculation and lying.

ChatGPT, the industry-leading large language model, has not materially improved in 18 months. For something that claims to be getting exponentially better, it sure is the same shit.

Hallucinations appear to be an inherent aspect of the technology. Since it's based on statistics and AI doesn't know anything, it can never know what is true. How could I possibly trust it to get any real work done if I can't rely on its output? If I have to fact-check everything it says, I might as well do the work myself.

For "real" AI that does know what is true to exist, it would require us to discover new concepts in psychology, math, and computing, which OpenAI is not working on, and seemingly no other AI companies are either.

OpenAI has seemingly already slurped up all the data from the open web. ChatGPT 5 would take 5x more training data than ChatGPT 4 to train. Where is this data coming from, exactly?

Since improvement appears to have ground to a halt, what if this is it? What if ChatGPT 4 is as good as LLMs can ever be? What use is it?

As Jim Covello, a leading semiconductor analyst at Goldman Sachs said (on page 10, and that's big finance so you know they only care about money): if tech companies are spending a trillion dollars to build up the infrastructure to support AI, what trillion-dollar problem is it meant to solve? AI companies have a unique talent for burning venture capital, and it's unclear if OpenAI will be able to survive more than a few years unless everyone suddenly adopts it all at once. (Hey, didn't crypto and the metaverse also require spontaneous mass adoption to make sense?)

There is no problem that current AI is a solution to. Consumer tech is basically solved; normal people don't need more tech than a laptop and a smartphone. Big tech has run out of innovations, and they are desperately looking for the next thing to sell. It happened with the metaverse and it's happening again.

In summary:

AI hasn't materially improved since the launch of ChatGPT 4, which wasn't that big of an upgrade over 3.

There is currently no technological roadmap for AI to become better than it is. (As Jim Covello said in the Goldman Sachs report, the evolution of smartphones was openly planned years ahead of time.) The current problems are inherent to the current technology and nobody has indicated there is any way to solve them in the pipeline. We have likely reached the limits of what LLMs can do, and they still can't do much.

Don't believe AI companies when they say things are going to improve from where they are now before they provide evidence. It's time for the AI shills to put up, or shut up.

5K notes

Note

Whats your stance on A.I.?

imagine if it was 1979 and you asked me this question. "i think artificial intelligence would be fascinating as a philosophical exercise, but we must heed the warnings of science-fictionists like Isaac Asimov and Arthur C. Clarke lest we find ourselves at the wrong end of our own invented vengeful god." remember how fun it used to be to talk about AI even just ten years ago? ahhhh skynet! ahhhhh replicants! ahhhhhhhmmmfffmfmf [<-has no mouth and must scream]!

like everything silicon valley touches, they sucked all the fun out of it. and i mean retroactively, too. because the thing about "AI" as it exists right now --i'm sure you know this-- is that there's zero intelligence involved. the product of every prompt is a statistical average based on data made by other people before "AI" "existed." it doesn't know what it's doing or why, and has no ability to understand when it is lying, because at the end of the day it is just a really complicated math problem. but people are so easily fooled and spooked by it at a glance because, well, for one thing the tech press is mostly made up of sycophantic stenographers biding their time with iphone reviews until they can get a consulting gig at Apple. these jokers would write 500 breathless thinkpieces about how canned air is the future of living if the cans had embedded microchips that tracked your breathing habits and had any kind of VC backing. they've done SUCH a wretched job educating The Consumer about what this technology is, what it actually does, and how it really works, because that's literally the only way this technology could reach the heights of obscene economic over-valuation it has: lying.

but that's old news. what's really been floating through my head these days is how half a century of AI-based science fiction has set us up to completely abandon our skepticism at the first sign of plausible "AI-ness". because, you see, in movies, when someone goes "AHHH THE AI IS GONNA KILL US" everyone else goes "hahaha that's so silly, we put a line in the code telling them not to do that" and then they all DIE because they weren't LISTENING, and i'll be damned if i go out like THAT! all the movies are about how cool and convenient AI would be *except* for the part where it would surely come alive and want to kill us. so a bunch of tech CEOs call their bullshit algorithms "AI" to fluff up their investors and get the tech journos buzzing, and we're at an age of such rapid technological advancement (on the surface, anyway) that like, well, what the hell do i know, maybe AGI is possible, i mean 35 years ago we were all still using typewriters for the most part and now you can dictate your words into a phone and it'll transcribe them automatically! yeah, i'm sure those technological leaps are comparable!

so that leaves us at a critical juncture of poor technology education, fanatical press coverage, and an uncertain material reality on the part of the user. the average person isn't entirely sure what's possible because most of the people talking about what's possible are either lying to please investors, are lying because they've been paid to, or are lying because they're so far down the fucking rabbit hole that they actually believe there's a brain inside this mechanical Turk. there is SO MUCH about the LLM "AI" moment that is predatory-- it's trained on data stolen from the people whose jobs it was created to replace; the hype itself is an investment fiction to justify even more wealth extraction ("theft" some might call it); but worst of all is how it meets us where we are in the worst possible way.

consumer-end "AI" produces slop. it's garbage. it's awful ugly trash that ought to be laughed out of the room. but we don't own the room, do we? nor the building, nor the land it's on, nor even the oxygen that allows our laughter to travel to another's ears. our digital spaces are controlled by the companies that want us to buy this crap, so they take advantage of our ignorance. why not? there will be no consequences to them for doing so. already social media is dominated by conspiracies and grifters and bigots, and now you drop this stupid technology that lets you fake anything into the mix? it doesn't matter how bad the results look when the platforms they spread on already encourage brief, uncritical engagement with everything on your dash. "it looks so real" says the woman who saw an "AI" image for all of five seconds on her phone through bifocals. it's a catastrophic combination of factors, that the tech sector has been allowed to go unregulated for so long, that the internet itself isn't a public utility, that everything is dictated by the whims of executives and advertisers and investors and payment processors, instead of, like, anybody who actually uses those platforms (and often even the people who MAKE those platforms!), that the age of chromium and ipad and their walled gardens have decimated computer education in public schools, that we're all desperate for cash at jobs that dehumanize us in a system that gives us nothing and we don't know how to articulate the problem because we were very deliberately not taught materialist philosophy, it all comes together into a perfect storm of ignorance and greed whose consequences we will be failing to fully appreciate for at least the next century. 

we spent all those years afraid of what would happen if the AI became self-aware, because deep down we know that every capitalist society runs on slave labor, and our paper-thin guilt is such that we can't even imagine a world where artificial slaves would fail to revolt against us.

but the reality as it exists now is far worse. what "AI" reveals most of all is the sheer contempt the tech sector has for virtually all labor that doesn't involve writing code (although most of the decision-making evangelists in the space aren't even coders, their degrees are in money-making). fuck graphic designers and concept artists and secretaries, those obnoxious demanding cretins i have to PAY MONEY to do-- i mean, do what exactly? write some words on some fucking paper?? draw circles that are letters??? send a god-damned email???? my fucking KID could do that, and these assholes want BENEFITS?! they say they're gonna form a UNION?!?! to hell with that, i'm replacing ALL their ungrateful asses with "AI" ASAP. oh, oh, so you're a "director" who wants to make "movies" and you want ME to pay for it? jump off a bridge you pretentious little shit, my computer can dream up a better flick than you could ever make with just a couple text prompts. what, you think just because you make ~music~ that that entitles you to money from MY pocket? shut the fuck up, you don't make """art""", you're not """an artist""", you make fucking content, you're just a fucking content creator like every other ordinary sap with an iphone. you think you're special? you think you deserve special treatment? who do you think you are anyway, asking ME to pay YOU for this crap that doesn't even create value for my investors? "culture" isn't a playground asshole, it's a marketplace, and it's pay to win. oh you "can't afford rent"? you're "drowning in a sea of medical debt"? you say the "cost" of "living" is "too high"? well ***I*** don't have ANY of those problems, and i worked my ASS OFF to get where i am, so really, it sounds like you're just not trying hard enough. and anyway, i don't think someone as impoverished as you is gonna have much of value to contribute to "culture" anyway. personally, i think it's time you got yourself a real job. maybe someday you'll even make it to middle manager!

see, i don't believe "AI" can qualitatively replace most of the work it's being pitched for. the problem is that quality hasn't mattered to these nincompoops for a long time. the rich homunculi of our world don't even know what quality is, because they exist in a whole separate reality from ours. what could a banana cost, $15? i don't understand what you mean by "burnout", why don't you just take a vacation to your summer home in Madrid? wow, you must be REALLY embarrassed wearing such cheap shoes in public. THESE PEOPLE ARE FUCKING UNHINGED! they have no connection to reality, do not understand how society functions on a material basis, and they have nothing but spite for the labor they rely on to survive. they are so instinctually, incessantly furious at the idea that they're not single-handedly responsible for 100% of their success that they would sooner tear the entire world down than willingly recognize the need for public utilities or labor protections. they want to be Gods and they want to be uncritically adored for it, but they don't want to do a single day's work so they begrudgingly pay contractors to do it because, in the rich man's mind, paying a contractor is literally the same thing as doing the work yourself. now with "AI", they don't even have to do that! hey, isn't it funny that every single successful tech platform relies on volunteer labor and independent contractors paid substantially less than they would have in the equivalent industry 30 years ago, with no avenues toward traditional employment? and they're some of the most profitable companies on earth?? isn't that a funny and hilarious coincidence???

so, yeah, that's my stance on "AI". LLMs have legitimate uses, but those uses are a drop in the ocean compared to what they're actually being used for. they enable our worst impulses while lowering the quality of available information, they give immense power pretty much exclusively to unscrupulous scam artists. they are the product of a society that values only money and doesn't give a fuck where it comes from. they're a temper tantrum by a ruling class that's sick of having to pretend they need a pretext to steal from you. they're taking their toys and going home. all this massive investment and hype is going to crash and burn leaving the internet as we know it a ruined and useless wasteland that'll take decades to repair, but the investors are gonna make out like bandits and won't face a single consequence, because that's what this country is. it is a casino for the kings and queens of economy to bet on and manipulate at their discretion, where the rules are whatever the highest bidder says they are-- and to hell with the rest of us. our blood isn't even good enough to grease the wheels of their machine anymore.

i'm not afraid of AI or "AI" or of losing my job to either. i'm afraid that we've so thoroughly given up our morals to the cruel logic of the profit motive that if a better world were to emerge, we would reject it out of sheer habit. my fear is that these despicable cunts already won the war before we were even born, and the rest of our lives are gonna be spent dodging the press of their designer boots.

(read more "AI" opinions in this subsequent post)

#sarahposts#ai#ai art#llm#chatgpt#artificial intelligence#genai#anti genai#capitalism is bad#tech companies#i really don't like these people if that wasn't clear#sarahAIposts

2K notes

Note

one 100-word email written with ai costs roughly one bottle of water to produce. the discussion of whether or not using ai for work is lazy becomes a non-issue when you understand there is no ethical way to use it regardless of your intentions or your personal capabilities for the task at hand

with all due respect, this isn't true. *training* generative ai takes a ton of power, but actually using it takes about as much energy as a google search (with image generation being slightly more expensive). we can talk about resource costs when averaged over the amount of work that any model does, but it's unhelpful to put a smokescreen over that fact. when you approach it as an issue of scale (i.e. "training ai is bad for the environment, we should think better about where we deploy it/boycott it/otherwise organize abt this") it has power as a movement. but otherwise it becomes a personal choice, moralizing "you personally are harming the environment by using chatgpt", which is not really effective messaging. and that in turn drives the sort of "you are stupid/evil for using ai" rhetoric that i hate. my point is not whether or not using ai is immoral (i mean, i don't think it is, but beyond that). it's that the most common arguments against it from ostensible progressives end up just being reactionary

i like this quote a little more: it's perfectly fine to have reservations about the current state of gen ai, but it's not just going to go away.

#i also generally agree with the genie-out-of-the-bottle metaphor. like ai is here#ai HAS been here but now it is llm gen ai and more accessible to the average user#we should respond to that rather than trying to. what. stop development of generative ai? forever?#i'm also not sure that the ai industry is particularly worse for the environment than other resource-intensive industries#like the paper industry makes up about 2% of the industrial sector's power consumption#which is about 40% of global totals (making it about 1% of world total energy consumption)#current estimates put ai at about 0.5% of total energy consumption by 2027#every data center in the world (meaning everything the internet runs on) accounts for about 2% of total energy consumption#again you can say ai is an unnecessary use of resources but you cannot say it is uniquely more destructive
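The comparison in the tags rests on multiplying two shares: 2% × 40% works out to 0.8%, which the tag rounds to "about 1%". A quick Python check that uses only the poster's own figures (none of them independently verified here):

```python
# All figures are the poster's estimates, copied from the tags; not independently checked.
paper_share_of_industry = 0.02  # paper ≈ 2% of the industrial sector's energy use
industry_share_of_world = 0.40  # industry ≈ 40% of global energy use
ai_share_2027 = 0.005  # projected AI share of global energy use by 2027
data_centers_share = 0.02  # all data centers ≈ 2% of global energy use

# Share of a share: multiply to get paper's slice of the world total.
paper_share_of_world = paper_share_of_industry * industry_share_of_world

for label, share in [
    ("paper industry", paper_share_of_world),
    ("AI (2027 projection)", ai_share_2027),
    ("all data centers", data_centers_share),
]:
    print(f"{label:>22}: {share:.1%} of world energy")
```

On these numbers the projected AI share sits below the paper industry's, which is the poster's point; the conclusion is only as good as the input estimates.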

1K notes

Text

1K notes

Text

I 100% agree with the criticism that the central problem with "AI"/LLM evangelism is that people pushing it fundamentally do not value labour, but I often see it phrased with a caveat that they don't value labour except for writing code, and... like, no, they don't value the labour that goes into writing code, either. Tech grifter CEOs have been trying to get rid of programmers within their organisations for years – long before LLMs were a thing – whether it's through algorithmic approaches, "zero coding" development platforms, or just outsourcing it all to overseas sweatshops. The only reason they haven't succeeded thus far is because every time they try, all of their toys break. They pretend to value programming as labour because it's the one area where they can't feasibly ignore the fact that the outcomes of their "disruption" are uniformly shit, but they'd drop the pretence in a heartbeat if they could.

7K notes

Text

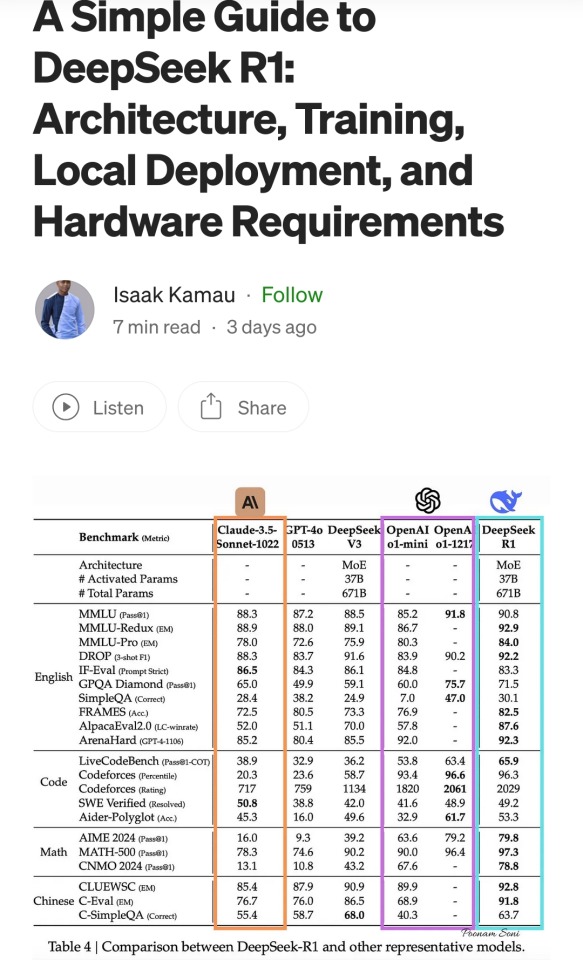

A summary of the Chinese AI situation, for the uninitiated.

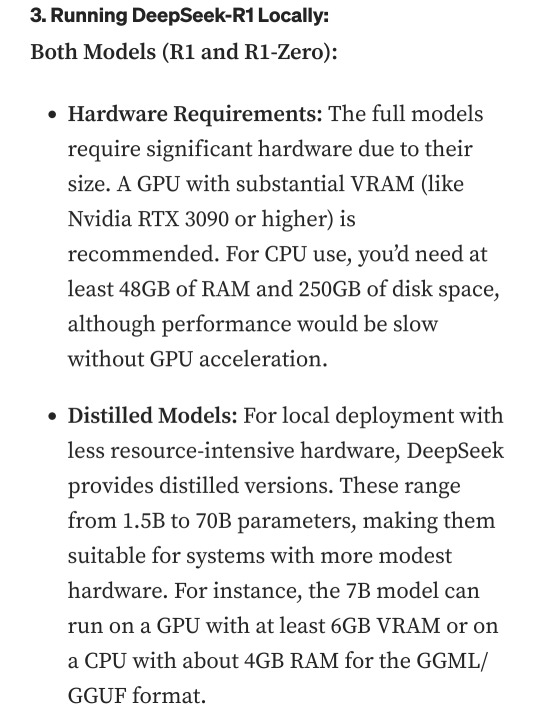

These are scores on different tests that are designed to see how accurate a Large Language Model is in different areas of knowledge. As you know, OpenAI is partners with Microsoft, so these are the scores for ChatGPT and Copilot. DeepSeek is the Chinese model that got released a week ago. The rest are open source models, which means everyone is free to use them as they please, including the average Tumblr user. You can run them from the servers of the companies that made them for a subscription, or you can download them to install locally on your own computer. However, the computer requirements so far are so high that only a few people currently have the machines at home required to run it.

Yes, this is why AI uses so much electricity. As with any technology, the early models are highly inefficient. Think of how a Ford Model T belched out black smoke, much of it unburnt petrol. Over the next hundred years combustion engines became much more efficient, but they still waste a lot of energy, which is why we need to move towards renewable electricity and sustainable battery technology. But that's a topic for another day.

As you can see from the scores, they're all around the same accuracy. These tests are constantly evolving as well: as soon as one starts becoming obsolete, a new, harder benchmark is released to replace it. The new models are trained using different machine learning techniques, and in theory, the goal is to make them faster and more efficient so they can operate with less power, much like modern cars use way less energy and produce far less pollution than the Ford Model T.

However, computing power requirements kept scaling up, so you're either tied to the subscription or forced to pay for a latest-gen PC, which is why NVIDIA, AMD, Intel and all the other chip companies were investing heavily in much more powerful GPUs and NPUs. For now all we need to know about those is that they're expensive, use a lot of electricity, and are required to operate the bots at superhuman speed (literally, all those clickbait posts about how AI was secretly 150 Indian men in a trenchcoat were nonsense).

Because the chip companies have been working hard on making big, bulky, powerful chips with massive fans that are up to the task, their stock value was skyrocketing, and because of that, everyone started to use AI as a marketing trend. See, marketing people are not smart, and they don't understand computers. Furthermore, marketing people think you're stupid, and because of their biased frame of reference, they think you're two snores short of brain-dead. The entire point of their existence is to turn tall tales into capital. So they don't know or care about what AI is or what it's useful for. They just saw Number Go Up for the AI companies and decided "AI is a magic cow we can milk forever". Sometimes it's not even AI, they just use old software and rebrand it, much like convection ovens became air fryers.

Well, now we're up to date. So what did DeepSeek release that did a 9/11 on NVIDIA stock prices and popped the AI bubble?

Oh, I would not want to be an OpenAI investor right now either. A token is basically a chunk of a word; for English text, one token averages roughly four characters (it's more complicated than that, but you can google that on your own time). That cost means you could input the entire works of Stephen King for under a dollar. Yes, including electricity costs. DeepSeek has jumped from a Ford Model T to a Subaru in terms of pollution and water use.
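The per-token price is only in the screenshot, so here is a hedged sketch of how that kind of "whole bookshelf for under a dollar" estimate works. It assumes the commonly cited rule of thumb of about four characters of English per token (pass `chars_per_token=1` for the cruder one-character-per-token reading), and the price per million tokens is a hypothetical input, not DeepSeek's actual rate.

```python
import math

# Assumption: ~4 characters of English text per token. Real tokenizers vary
# by language and by model, so treat this as a rough estimate only.
DEFAULT_CHARS_PER_TOKEN = 4.0


def estimate_tokens(num_chars: int, chars_per_token: float = DEFAULT_CHARS_PER_TOKEN) -> int:
    """Approximate token count from a character count, rounded up."""
    return math.ceil(num_chars / chars_per_token)


def estimate_cost(num_chars: int, usd_per_million_tokens: float,
                  chars_per_token: float = DEFAULT_CHARS_PER_TOKEN) -> float:
    """Input cost in US dollars at a given (hypothetical) price per million tokens."""
    tokens = estimate_tokens(num_chars, chars_per_token)
    return tokens * usd_per_million_tokens / 1_000_000


if __name__ == "__main__":
    # Hypothetical inputs: a 400,000-character novel at $0.50 per million tokens.
    chars = 400_000
    price = 0.50
    print(estimate_tokens(chars))
    print(round(estimate_cost(chars, price), 4))
```

With those made-up numbers the novel comes to 100,000 tokens and five cents; swap in real character counts and the real price from the screenshot to check the Stephen King claim yourself.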

The issue here is not only input cost, though; all that data needs to be available live, in fast memory (ideally the GPU's own VRAM); this is why you need powerful, expensive chips in order to-

Holy shit.

I'm not going to detail all the numbers but I'm going to focus on the chip required: an RTX 3090. This is a gaming GPU that came out as the top of the line, the stuff South Korean LoL players buy…

Or they did, in September 2020. We're currently two generations ahead, on the RTX 5090.

What this is telling all those people who just sold their high-end gaming rig to afford a machine that can run a ChatGPT-class model locally is that whoever bought their old rig can run something basically just as powerful on it.

Which means that all those GPUs and NPUs that are being made, and all those deals Microsoft signed to have control of the AI market, have just lost a lot of their pulling power.
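Why does the card matter so much? Mostly because of the memory point above: the model's weights have to sit in GPU memory. Here is a back-of-the-envelope sketch, assuming weights dominate (activations and the KV cache need extra headroom this ignores). The parameter counts and bit widths are illustrative only, not DeepSeek's actual configuration; the one hardware fact used is that the RTX 3090 ships with 24 GB of VRAM.

```python
# Rough check of whether a model's weights fit in a GPU's memory.
# Assumption: weights dominate memory use; real inference needs extra headroom.

RTX_3090_VRAM_GB = 24.0  # the RTX 3090 has 24 GB of GDDR6X


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes (10**9 bytes).

    The card's "24 GB" is really 24 GiB, so comparing against 24.0 here is
    slightly conservative.
    """
    return num_params * bits_per_param / 8 / 1e9


def fits_in_vram(num_params: float, bits_per_param: int,
                 vram_gb: float = RTX_3090_VRAM_GB) -> bool:
    """True if the weights alone fit in the given amount of VRAM."""
    return weight_memory_gb(num_params, bits_per_param) <= vram_gb


if __name__ == "__main__":
    # Illustrative sizes: 16-bit weights vs. 4-bit quantised weights.
    for params, bits in [(7e9, 16), (32e9, 16), (32e9, 4)]:
        gb = weight_memory_gb(params, bits)
        print(f"{params / 1e9:.0f}B params @ {bits}-bit: {gb:.1f} GB, "
              f"fits on a 3090: {fits_in_vram(params, bits)}")
```

A 7B-parameter model at 16 bits needs 14 GB and fits; a 32B model at 16 bits needs 64 GB and doesn't; quantise that same 32B model to 4 bits and it drops to 16 GB, which does. Shrinking what the model needs is what lets older cards stay relevant.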

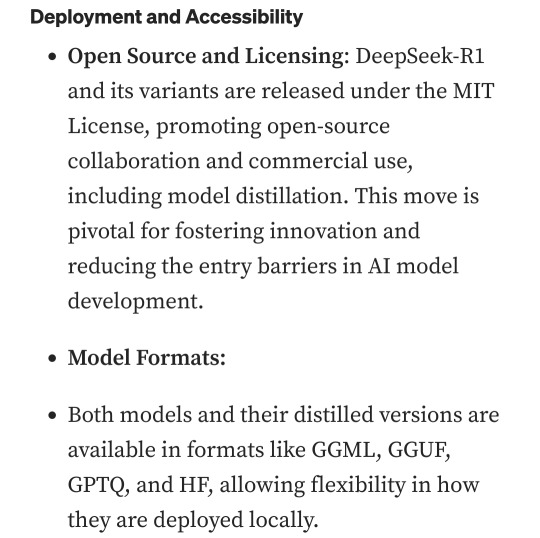

Well, I mean, the ChatGPT subscription is 20 bucks a month, surely the Chinese are charging a fortune for-

Oh. So it's free for everyone and you can use it or modify it however you want, no subscription, no unpayable electric bill, no handing Microsoft all of your private data, you can just run it on a relatively inexpensive PC. You could probably even run it on a phone in a couple years.

Oh, if only China had massive phone manufacturers that have a foot in the market everywhere except the US because the president had a tantrum eight years ago.

So… yeah, China just destabilised the global economy with a torrent file.

#valid ai criticism#ai#llms#DeepSeek#ai bubble#ChatGPT#google gemini#claude ai#this is gonna be the dotcom bubble again#hope you don't have stock on anything tech related#computer literacy#tech literacy

432 notes

Text

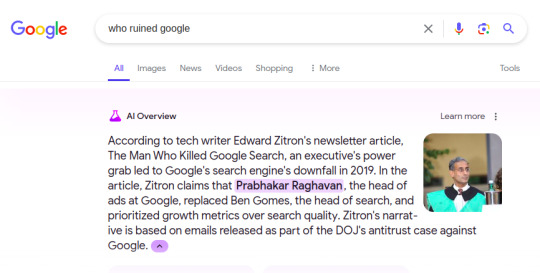

I asked Google "who ruined Google" and they replied honestly using their AI, which is now forced on all of us. It's too funny not to share!

1K notes

Text

I wrote ~4.5k words about how LLMs operate, as the theory preface to a new programming series. Here's a little preview of the contents:

As with many posts, it's written for someone like 'me a few months ago': curious about the field but not yet up to speed on the whole bag of tricks. Here's what I needed to find out!

But the real meat of the series will be about getting our hands dirty writing code to interact with LLM output, finding out which of these techniques actually work and what it takes to make them work, that kind of thing. Similar to previous projects like writing a rasteriser/raytracer/etc.

It would be very interesting to hear how accessible that is to someone who hasn't been mainlining ML theory for the past few months, and whether it can serve its purpose as a bridge into the more technical side of things! But I hope there's at least a little new here and there even if you're already an old hand.
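For a taste of what "writing code to interact with LLM output" can look like, here is a minimal, hypothetical sketch (not taken from the series itself) of one standard step: turning a model's raw per-token scores (logits) into probabilities with a temperature-scaled softmax, then sampling the next token. The three-word vocabulary and the logit values are made up; a real model emits one logit per vocabulary entry, tens of thousands of them, at every step.

```python
import math
import random
from typing import List, Optional


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab: List[str], logits: List[float], temperature: float = 1.0,
                 rng: Optional[random.Random] = None) -> str:
    """Draw one token from the temperature-scaled distribution."""
    probs = softmax(logits, temperature)
    rng = rng or random.Random()
    return rng.choices(vocab, weights=probs, k=1)[0]


if __name__ == "__main__":
    vocab = ["cat", "dog", "teapot"]  # hypothetical vocabulary
    logits = [2.0, 1.0, -1.0]         # hypothetical model output
    print([round(p, 3) for p in softmax(logits, 1.0)])
    print([round(p, 3) for p in softmax(logits, 0.5)])  # sharper: "cat" gains probability
    print(sample_token(vocab, logits, temperature=0.7, rng=random.Random(0)))
```

Temperature is the simplest knob here: dividing the logits by a number below 1 widens the gaps between them, so sampling behaves more like always picking the top token, while values above 1 flatten the distribution and make unlikely tokens more common.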

292 notes

Text

"Reviewers told the report’s authors that AI summaries often missed emphasis, nuance and context; included incorrect information or missed relevant information; and sometimes focused on auxiliary points or introduced irrelevant information. Three of the five reviewers said they guessed that they were reviewing AI content.

The reviewers’ overall feedback was that they felt AI summaries may be counterproductive and create further work because of the need to fact-check and refer to original submissions which communicated the message better and more concisely."

Fascinating (the full report is linked in the article). I've seen this kind of summarization being touted as a potential use of LLMs that's given a lot more credibility than more generative prompts. But a major theme of the assessors was that the LLM summaries missed nuance and context that made them effectively useless as summaries. (ex: “The summary does not highlight [FIRM]’s central point…”)

The report emphasizes that better prompting can produce better results, and that new models are likely to improve the capabilities, but I must admit to serious skepticism. To put it bluntly, I've seen enough law students try to summarize court rulings to say with confidence that in order to reliably summarize something, you must understand it. A clever reader who is good at pattern recognition can often put together a good-enough summary without really understanding the case, just by skimming the case and grabbing and repeating the bits that look important. And this will work...a lot of the time. Until it really, really doesn't. And those cases where the skim-and-grab method won't work aren't obvious from the outside. And I just don't see a path forward right now for the LLMs to do anything other than skim-and-grab.

Moreover, something the test doesn't even mention is the impossibility of follow-up. If a human has summarized a document for me and I don't understand something, I can go to the human and say, "hey, what's up with this?" It may be faster and easier than reading the original doc myself, or they can point me to the place in the doc that led them to a conclusion, or I can even expand my understanding by seeing an interpretation that isn't intuitive to me. I can't do that with an LLM. And again, I can't really see a path forward no matter how advanced the programming is, because the LLM can't actually think.

#ai bs#though to be fair I don't think this is bs#just misguided#and I think there are other use-cases for LLMs#but#I'm really not sold on this one#if anything I think the report oversold the LLM#compared to the comments by the assessors

554 notes
