#LLM powered application


Text

Boost Your Brand’s Voice with Our AI Content Generator Solution

Atcuality offers a next-gen content automation platform that helps your brand speak with clarity, consistency, and creativity. Whether you’re a startup or an enterprise, content is key to growth, and managing it shouldn’t be overwhelming. At the center of our offering is a smart AI content generator that creates high-quality text tailored to your audience, industry, and goals. From social captions and ad creatives to long-form blog posts, our platform adapts to your needs and helps reduce time-to-publish dramatically. We also provide collaboration tools, workflow automation, and data insights to refine your strategy over time. Elevate your brand’s voice and reduce content fatigue with the intelligence and reliability only Atcuality can offer.

#seo marketing#seo services#artificial intelligence#digital marketing#seo agency#seo company#iot applications#ai powered application#azure cloud services#amazon web services#ai model#ai art#ai generated#ai image#ai#chatgpt#technology#machine learning#llm#ai services#ai seo#augmented reality agency#augmented reality#augmented and virtual reality market#augmented intelligence#virtual reality#virtual assistant

2 notes


Text

YeagerAI’s Intelligent Oracle: Built on GenLayer blockchain for real-time data access - AI News

New Post has been published on https://thedigitalinsider.com/yeagerais-intelligent-oracle-built-on-genlayer-blockchain-for-real-time-data-access-ai-news/


Blockchain AI research lab YeagerAI has announced the launch of the Intelligent Oracle, an AI-powered oracle that aims to provide decentralised applications (DApps) with online data on-chain. The Oracle can change how data is collected, offering new possibilities and use cases for blockchain DApps. It is built on the GenLayer blockchain, also a brainchild of YeagerAI, and designed to support a new generation of DApps. It can fetch any type of online data and deliver it on-chain.

The Intelligent Oracle will initially launch on a permissioned local network, with the GenLayer Testnet expected to be operational by the end of 2024. By removing the dependency on human-powered resolution systems and offering cross-chain compatibility, the Intelligent Oracle aims to provide a scalable, efficient, and future-proof solution for decision-making.

The Intelligent Oracle is powered by LLMs integrated in GenLayer’s Optimistic Democracy consensus mechanism. The consensus mechanism is ‘governed’ by validators that connect to LLMs, verifying and securing the data that the Oracle fetches from on- and off-chain sources. The validators enable the network to process non-deterministic transactions by fetching data from the internet.

When a query is made, a lead validator generates a proposed result, while other validators independently verify the output against the pre-set equivalence criteria. Optimistic Democracy ensures all decisions are accurate, reliable, and secure.
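As a rough illustration of the propose-then-verify flow described above, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the names `equivalent` and `run_round`, the numeric tolerance standing in for the "equivalence criteria", and the simple-majority rule are hypothetical and are not taken from GenLayer's actual implementation.

```python
from typing import Callable, List, Tuple

def equivalent(a: float, b: float, tolerance: float = 0.01) -> bool:
    """Hypothetical equivalence criterion: two answers count as matching
    when they are within a relative tolerance of each other."""
    return abs(a - b) <= tolerance * max(abs(a), abs(b), 1.0)

def run_round(leader: Callable[[str], float],
              validators: List[Callable[[str], float]],
              query: str) -> Tuple[bool, float]:
    """The lead validator proposes a result; every other validator
    recomputes it independently and votes on whether it is equivalent."""
    proposed = leader(query)
    votes = [equivalent(proposed, v(query)) for v in validators]
    accepted = sum(votes) > len(votes) / 2  # assumed simple-majority rule
    return accepted, proposed

# Stand-ins for validators that would each query an LLM or a data source.
leader = lambda q: 100.0
validators = [lambda q: 100.4, lambda q: 99.8, lambda q: 250.0]

accepted, value = run_round(leader, validators, "price of asset X?")
rejected, _ = run_round(leader, [lambda q: 250.0, lambda q: 251.0], "price of asset X?")
```

In this toy run two of the three validators agree with the leader within tolerance, so the proposal is accepted; when every validator disagrees, it is rejected. A real system would also need rules for appeals and for replacing a faulty leader, which are left out here.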

While blockchain oracles have evolved rapidly in the past few years, several pertinent issues remain unresolved. Chief among them is that blockchains cannot access external data on their own; they can only read what is already available on-chain. The emerging uses of blockchains are held back by the lack of broader oracles, with most futuristic DApps requiring immediate, accurate, and sometimes subjective data from the internet.

To date, the solution has been to use traditional oracles, which only provide pre-defined datasets or require manual intervention, making them slow, costly, and inflexible. The Intelligent Oracle is an autonomous alternative that offers DApp builders a virtually unlimited range of data types.

Welcoming Intelligent Oracle: A new world of blockchain use cases

The Intelligent Oracle is based on Intelligent Contracts operating on the GenLayer blockchain. The oracle operates in the GenLayer ecosystem, allowing users to fetch decentralised, transparent and secure data for their DApps or platforms. It offers cross-chain compatibility, allowing it to integrate with multiple blockchain ecosystems.

Following the launch, blockchain DApp developers have significantly more possibilities open to them. The launch of the Intelligent Oracle could be a step forward for decentralised applications in prediction markets, insurance, and financial derivatives, for example.

The Oracle enables cost-effective and fast data resolution. While traditional oracles can take days to resolve prediction markets – incurring delays and costs – the Intelligent Oracle achieves transaction finality in less than an hour at a cost of under $1 per market.

YeagerAI has seen rapid adoption of its new Oracle service, with several partners and platforms already committed to integrating the Intelligent Oracle. Early partners committed to building with the technology include Radix DLT, Etherisc, PredX, Delphi Bets, and Provably.

#2024#adoption#ai#ai news#AI research#AI-powered#applications#author#autonomous#Blockchain#Building#change#Companies#data#datasets#Democracy#developers#Ecosystems#financial#Future#how#human#insurance#Internet#issues#it#LESS#LLMs#network#News

0 notes

Text

Explore the inner workings of LlamaIndex, enhancing LLMs for streamlined natural language processing, boosting performance and efficiency.

#Large Language Model Meta AI#Power of LLMs#Custom Data Integration#Expertise in Machine Learning#Expertise in Prompt Engineering#LlamaIndex Frameworks#LLM Applications

0 notes


Text

I saw a post the other day calling criticism of generative AI a moral panic, and while I do think many proprietary AI technologies are being used in deeply unethical ways, I think there is a substantial body of reporting and research on the real-world impacts of the AI boom that would trouble the comparison to a moral panic: while there *are* older cultural fears tied to negative reactions to the perceived newness of AI, many of those warnings are Luddite with a capital L - that is, they're part of a tradition of materialist critique focused on the way the technology is being deployed in the political economy. So (1) starting with the acknowledgement that a variety of machine-learning technologies were being used by researchers before the current "AI" hype cycle, and that there's evidence for the benefit of targeted use of AI techs in settings where they can be used by trained readers - say, spotting patterns in radiology scans - and (2) setting aside the fact that current proprietary LLMs in particular are largely bullshit machines, in that they confidently generate errors, incorrect citations, and falsehoods in ways humans may be less likely to detect than conventional disinformation, and (3) setting aside as well the potential impact of frequent offloading on human cognition and of widespread AI slop on our understanding of human creativity...

What are some of the material effects of the "AI" boom?

Guzzling water and electricity

The data centers needed to support AI technologies require large quantities of water to cool the processors. A to-be-released paper from the University of California Riverside and the University of Texas Arlington finds, for example, that "ChatGPT needs to 'drink' [the equivalent of] a 500 ml bottle of water for a simple conversation of roughly 20-50 questions and answers." Many of these data centers pull water from already water-stressed areas, and the processing needs of big tech companies are expanding rapidly. Microsoft alone increased its water consumption from 4,196,461 cubic meters in 2020 to 7,843,744 cubic meters in 2023. AI applications are also 100 to 1,000 times more computationally intensive than regular search functions, and as a result the electricity needs of data centers are overwhelming local power grids, and many tech giants are abandoning or delaying their plans to become carbon neutral. Google’s greenhouse gas emissions alone have increased at least 48% since 2019. And a recent analysis from The Guardian suggests the actual AI-related increase in resource use by big tech companies may be up to 662%, or 7.62 times, higher than they've officially reported.

Exploiting labor to create its datasets

Like so many other forms of "automation," generative AI technologies actually require loads of human labor to do things like tag millions of images to train computer vision for ImageNet and to filter the texts used to train LLMs to make them less racist, sexist, and homophobic. This work is deeply casualized, underpaid, and often psychologically harmful. It profits from and re-entrenches a stratified global labor market: many of the data workers used to maintain training sets are from the Global South, and one of the platforms used to buy their work is literally called the Mechanical Turk, owned by Amazon.

From an open letter written by content moderators and AI workers in Kenya to Biden: "US Big Tech companies are systemically abusing and exploiting African workers. In Kenya, these US companies are undermining the local labor laws, the country’s justice system and violating international labor standards. Our working conditions amount to modern day slavery."

Deskilling labor and demoralizing workers

The companies, hospitals, production studios, and academic institutions that have signed contracts with providers of proprietary AI have used those technologies to erode labor protections and worsen working conditions for their employees. Even when AI is not used directly to replace human workers, it is deployed as a tool for disciplining labor by deskilling the work humans perform: in other words, employers use AI tech to reduce the value of human labor (labor like grading student papers, providing customer service, consulting with patients, etc.) in order to enable the automation of previously skilled tasks. Deskilling makes it easier for companies and institutions to casualize and gigify what were previously more secure positions. It reduces pay and bargaining power for workers, forcing them into new gigs as adjuncts for its own technologies.

I can't say anything better than Tressie McMillan Cottom, so let me quote her recent piece at length: "A.I. may be a mid technology with limited use cases to justify its financial and environmental costs. But it is a stellar tool for demoralizing workers who can, in the blink of a digital eye, be categorized as waste. Whatever A.I. has the potential to become, in this political environment it is most powerful when it is aimed at demoralizing workers. This sort of mid tech would, in a perfect world, go the way of classroom TVs and MOOCs. It would find its niche, mildly reshape the way white-collar workers work and Americans would mostly forget about its promise to transform our lives. But we now live in a world where political might makes right. DOGE’s monthslong infomercial for A.I. reveals the difference that power can make to a mid technology. It does not have to be transformative to change how we live and work. In the wrong hands, mid tech is an antilabor hammer."

Enclosing knowledge production and destroying open access

OpenAI started as a non-profit, but it has now become one of the most aggressive for-profit companies in Silicon Valley. Alongside the new proprietary AIs developed by Google, Microsoft, Amazon, Meta, X, etc., OpenAI is extracting personal data and scraping copyrighted works to amass the data it needs to train their bots - even offering one-time payouts to authors to buy the rights to frack their work for AI grist - and then (or so they tell investors) they plan to sell the products back at a profit. As many critics have pointed out, proprietary AI thus works on a model of political economy similar to the 15th-19th-century capitalist project of enclosing what was formerly "the commons," or public land, to turn it into private property for the bourgeois class, who then owned the means of agricultural and industrial production. "Open"AI is built on and requires access to collective knowledge and public archives to run, but its promise to investors (the one they use to attract capital) is that it will enclose the profits generated from that knowledge for private gain.

AI companies hungry for good data to train their Large Language Models (LLMs) have also unleashed a new wave of bots that are stretching the digital infrastructure of open-access sites like Wikipedia, Project Gutenberg, and Internet Archive past capacity. As Eric Hellman writes in a recent blog post, these bots "use as many connections as you have room for. If you add capacity, they just ramp up their requests." In the process of scraping the intellectual commons, they're also trampling and trashing its benefits for truly public use.

Enriching tech oligarchs and fueling military imperialism

The names of many of the people and groups who get richer by generating speculative buzz for generative AI - Elon Musk, Mark Zuckerberg, Sam Altman, Larry Ellison - are familiar to the public because those people are currently using their wealth to purchase political influence and to win access to public resources. And it's looking increasingly likely that this political interference is motivated by the probability that the AI hype is a bubble - that the tech can never be made profitable or useful - and that tech oligarchs are hoping to keep it afloat as a speculation scheme through an infusion of public money - a.k.a. an AIG-style bailout.

In the meantime, these companies have found a growing interest from military buyers for their tech, as AI becomes a new front for "national security" imperialist growth wars. From an email written by Microsoft employee Ibtihal Aboussad, who interrupted Microsoft AI CEO Mustafa Suleyman at a live event to call him a war profiteer: "When I moved to AI Platform, I was excited to contribute to cutting-edge AI technology and its applications for the good of humanity: accessibility products, translation services, and tools to 'empower every human and organization to achieve more.' I was not informed that Microsoft would sell my work to the Israeli military and government, with the purpose of spying on and murdering journalists, doctors, aid workers, and entire civilian families. If I knew my work on transcription scenarios would help spy on and transcribe phone calls to better target Palestinians, I would not have joined this organization and contributed to genocide. I did not sign up to write code that violates human rights."

So there's a brief, non-exhaustive digest of some vectors for a critique of proprietary AI's role in the political economy. tl;dr: the first questions of material analysis are "who labors?" and "who profits/to whom does the value of that labor accrue?"

For further (and longer) reading, check out Justin Joque's Revolutionary Mathematics: Artificial Intelligence, Statistics and the Logic of Capitalism and Karen Hao's forthcoming Empire of AI.

24 notes


Note

since when are you pro-chat-gpt

I’m not lol, I’m ambivalent on it. I think it’s a tool that doesn’t have many practical applications because all it’s really good at doing is sketching out a likely response based on a prompt, which obv doesn’t take accuracy into account. So while it’s terrible as, say, a search engine, it’s actually fairly useful for something hollow and formulaic like a cover letter, which there are decent odds a human won’t read anyway

The thing about “AI”, both LLMs and AI art, is that both the people hyping them up and the people fervently against them are annoying and wrong. It’s not a plagiarism machine because that’s not what plagiarism is, half the time when someone says that they’re saying it copied someone’s style which isn’t remotely plagiarism.

Basically, the backlash against these pieces of tech centers around rhetoric of “laziness” which I feel like I shouldn’t need to say is ableist and a straightforwardly capitalistic talking point but I’ll say it anyway, or arguments around some kind of inherent “soul” in art created by humans, which, idk maybe that’s convincing if you’re religious but I’m not so I really couldn’t care less.

That and the fact that most of the stats about power usage are nonsense. People will gesture at the amount of power that servers hosting AI consume without acknowledging that those AI programs are among many other kinds of traffic hosted on those servers, and it isn’t really possible to pick apart which one is consuming however much power, so they’ll just use the stats related to the entire power consumption of the server.

Ultimately, like I said in my previous post, I think most of the output of LLMs and AI art tools is slop, and is generally unappealing to me. And that’s something you can just say! You’re allowed to subjectively dislike it without needing to moralize your reasoning! But the backlash is so extremely ableist and so obsessed with protecting copyright that it’s almost as bad as the AI hype train, if not just as

31 notes


Text

The Whole Sort of General Mish Mosh of AI

I’m not typing this.

January this year, I injured myself on a bike and it infringed on a couple of things I needed to do in particular working on my PhD. Because I had effectively one hand, I was temporarily disabled and it finally put it in my head to consider examining accessibility tools.

One of the accessibility tools I started using was Microsoft’s own speech to text that’s built into the operating system I use, which is Windows Not-The-Current-One-That-Everyone-Complains-About. I’m not actually sure which version I have. It wasn’t good but it was usable, and being usable meant spending a week or so thinking out what I was going to write a phrase at a time and then specifying my punctuation marks period.

I’m making this article — or the draft of it to be wholly honest — without touching my computer at all.

What I am doing right now is playing my voice into Audacity. Then I’m going to use Audacity to export what I say as an MP3, which I will then take to any one of a few dozen sites that offer free transcription of voice to text conversion. After that, I take the text output, check it for mistakes, fill in sentences I missed when coming off the top of my head, like this one, and then put it into WordPress.

A number of these sites are old enough that they can boast that they’ve been doing this for 10 years, 15 years, or served millions of customers. The one that transcribed this audio claims to have been founded in 2006, which suggests the technology in question is at least, you know, five. Seems odd then that the site claims its transcription is ‘powered by AI,’ because it certainly wasn’t back then, right? It’s not just the statements on the page, either, there’s a very deliberate aesthetic presentation that wants to look like the slickly boxless ‘website as application’ design many sites for the so-called AI folk favour.

This is one of those things that comes up whenever I want to talk about generative media and generative tools. Because a lot of stuff is right now being lumped together in a Whole Sort of General Mish Mosh of AI (WSOGMMOA). This lump, the WSOGMMOA, means that talking about any of it is used as if it’s talking about all of it in the way that the current speaker wants to be talked about even within a confrontational conversation from two different people.

For people who are advocates of AI, they will talk about how ChatGPT is an everythingamajig. It will summarize your emails and help you write your essays and it will generate you artwork that you want and it will give you the rules for games you can play and it will help you come up with strategies for succeeding at the games you’ve already got all while it generates code for you and diagnoses your medical needs and summarises images and turns photos of pages into transcriptions it will then read aloud to you, and all you have to focus on is having the best ideas. The notion is that all of these things, all of these services, are WSOGMMOA, and therefore, the same thing, and since any of that sounds good, the whole thing has to be good. It’s a conspiracy theory approach, sometimes referred to as the ‘stack of shit’ approach – you can pile up a lot of garbage very high and it can look impressive. Doesn’t stop it being garbage. But mixed in with the garbage, you have things that are useful to people who aren’t just professionally on twitter, and these services are not all the same thing.

They have some common threads amongst them. Many of them are functionally looking at math the same way. Many or even most of them are claiming to use LLMs, or large language models, and I couldn’t explain the specifics of what that means, nor should you trust an explainer from me about them. This is the other end of the WSOGMMOA, where people will talk as if image generation on midjourney and deepseek (pieces of software you can run on your computer) consumes the same power as the people building OpenAI’s data research centres (which is terrible and being done in terrible ways). This lumping can make the complaints about these tools seem unserious to people with more information and even frivolous to people with less.

Back to the transcription services though. Transcription services are an example of a thing that I think represents a good application of this math, the underlying software that these things are all relying on. For a start, transcription software doesn’t have a lot of use cases outside of exactly this kind of experience. Someone who chooses or cannot use a keyboard to write with who wants to use an alternate means, converting speech into written text, which can be for access or archival purposes. You aren’t going to be doing much with that that isn’t exactly just that and we do want this software. We want transcriptions to be really good. We want people who can’t easily write to be able to archive their thoughts as text to play with them. Text is really efficient, and being able to write without your hands makes writing more available to more people. Similarly, there are people who can’t understand spoken speech – for a host of reasons! – and making spoken media more available is also good!

You might want to complain at this point that these services are doing a bad job or aren’t as good as human transcription and that’s probably true, but would you rather decent subtitles that work in most cases vs only the people who can pay transcription a living wage having subtitles? Similarly, these things in a lot of places refuse to use no-no words or transcribe ‘bad’ things like pornography and crimes or maybe even swears, and that’s a sign that the tool is being used badly and disrespects the author, and it’s usually because the people deploying the tool don’t care about the use case, they care about being seen deploying the tool.

This is the salami slicer through which bits of the WSOGMMOA are trying to wiggle. Tools whose applications represent things that we want, for good reasons, and that were being worked on independently of the WSOGMMOA, are now that the WSOGMMOA is here being lampreyed onto in the name of pulling in a vast bubble of hypothetical investment money in a desperate time of tech industry centralisation.

As an example, phones have long since been using technology to isolate faces. That technology was used for a while to force the focus on a face. Privacy became more of a concern, then many phones were being made with software that could preemptively blur the faces of non-focal humans in a shot. This has since, with generative media, stepped up a next level, where you now have tools that can remove people from the background of photographs so that you can distribute photographs of things you saw or things you did without necessarily sharing the photos of people who didn’t consent to having their photo taken. That is a really interesting tool!

Ideologically, I’m really in favor of the idea that you should be able to opt out of being included on the internet. It’s illegal in France, for example, to take a photo of someone without their permission, which means any group shot of a crowd, hypothetically, someone in that crowd who was not asked for permission, can approach the photographer and demand recompense. I don’t know how well that works, but it shows up in journalism courses at this point.

That’s probably why that software got made – regulations in governments led to the development of the tool and then it got refined to make it appealing to a consumer at the end point so it could be used as a selling point. It wouldn’t surprise me if right now, under the hood, the tech works in some similar way to MidJourney or Dall-E or whatever, but it’s not a solution searching for a problem. I find that really interesting. Is this feature that, again, is running on your phone locally, still part of the concerns of the WSOGMMOA? What about the software being used to detect cancer in patients based on sophisticated scans I couldn’t explain and you wouldn’t understand? How about when a glamour model feeds her own images into the corpus of a Midjourney machine to create more pictures of herself to sell?

Because admit it, you kinda know the big reason as a person who dislikes ‘AI’ stuff that you want to oppose WSOGMMOA. It’s because the heart of it, the big loud centerpiece of it, is the worst people in the goddamn world, and they want to use these good uses of this whole landscape of technology as a figleaf to justify why they should be using ChatGPT to read their emails for them when that’s 80% of their job. It’s because it’s the worst people in the world’s whole personality these past two years, when it was NFTs before that, and it’s a term they can apply to everything to get investors to pay for it. Which is a problem because if you cede to the WSOGMMOA model, there are useful things with meaningful value that that guy gets to claim is the same as his desire to raise another couple of billions of dollars so he can promise you that he will make a god in a box that he definitely, definitely cannot fucking do while presenting himself as the hero opposing Harry Potter and the Protocols of Rationality.

The conversation gets flattened around basically two poles:

All of these tools, everything that labels itself as AI, is fundamentally an evil, burning polar bears, and

Actually everyone who doesn’t like AI is a butt hurt loser who didn’t invest earlier and buy the dip because, again, these people were NFT dorks only a few years ago.

For all that I like using some of these tools, tools that have helped my students with disability and language barriers, the fact remains that talking about them and advocating for them usefully in public involves being seen as associating with the group of some of the worst fucking dickheads around. The tools drag along with them like a gooey wake bad actors with bad behaviours. Artists don’t want to see their work associated with generative images, and these people gloat about doing it while the artist tells them not to. An artist dies and out of ‘respect’ for the dead they feed his art into a machine to pump out glurgey thoughtless ‘tributes’ out of booru tags meant for collecting porn. Even me, I write nuanced articles about how these tools have some applications and we shouldn’t throw all the bathwater out with the babies, and then I post it on my blog that’s down because some total shitweasel is running a scraper bot that ignores the blog settings telling them to go fucking pound sand.

I should end here, after all, the transcription limit is about eight minutes.

Check it out on PRESS.exe to see it with images and links!

12 notes


Text

120kW of Nvidia AI compute

This one rack has 120kW of Nvidia AI compute power requirements. Google, Meta, Apple, OpenAI AND others are buying these like candy for their shitty AI applications. In fact, there is a waiting list to get your hands on one. Each unit is super expensive too. All liquid cooled. Crazy tech. Crazy energy requirements too 🔥🤬 All such massive energy requirements so that AI companies can sell LLMs built from content stolen from writers, video creators, artists and ALL humans and put everyone else out of a job while heating our planet.

To add some context for people on what 120kW means…

An average US home uses 10,500kWh per year, or an average of 29kWh per day. This averages out at 1.2kW.

In other words, if that server rack runs its PSU at 100%, it’s using as much power as 100 homes. In about 6sqft of floor space. Not including power used to cool it. The average US electricity rate is around $0.15/kWh. 120kW running 24/7 would cost $13,000 per month. Compare that to the electricity bill for your house. (Thanks, Matt)
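For anyone who wants to check the numbers above, here is the arithmetic as a short Python sketch. The inputs are the figures quoted in the post; the 30-day month is an added assumption, and the variable names are just for illustration.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365
DAYS_PER_MONTH = 30          # assumption: a 30-day billing month

home_kwh_per_year = 10_500   # average US home, as quoted above
rack_kw = 120                # rack power draw at 100% load
price_per_kwh = 0.15         # USD, approximate average US rate

# Average home: energy per day, then continuous power draw.
home_kwh_per_day = home_kwh_per_year / DAYS_PER_YEAR
home_avg_kw = home_kwh_per_day / HOURS_PER_DAY

# How many average homes one rack is equivalent to.
homes_equivalent = rack_kw / home_avg_kw

# Monthly energy and cost of running the rack 24/7 (cooling not included).
rack_kwh_per_month = rack_kw * HOURS_PER_DAY * DAYS_PER_MONTH
rack_cost_per_month = rack_kwh_per_month * price_per_kwh

print(f"Home: {home_kwh_per_day:.1f} kWh/day, {home_avg_kw:.2f} kW average")
print(f"Rack is equivalent to about {homes_equivalent:.0f} homes")
print(f"Rack: {rack_kwh_per_month:,} kWh/month, about ${rack_cost_per_month:,.0f}/month")
```

This works out to roughly 28.8 kWh per day and 1.2 kW for a home, about 100 homes per rack, and 86,400 kWh or about $12,960 per month, which matches the rounded figures in the post.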

51 notes


Text

Generative AI, or LLM

So, I've been wanting to summarize the many and varied problems that LLMs have.

What is an LLM?

Copyright

Ideological / Propaganda

Security

Environmental impact

Economical

What is an LLM?

Do you remember that fun challenge of writing a single word and then just pressing auto-complete five dozen times? Producing a tangled and mostly incomprehensible mess?

LLMs are basically that auto-complete on steroids.

The biggest difference (beyond the sheer volume of processing-power and data) is that an LLM takes into account your prompt. So if you prompt it to do a certain thing, it'll go looking for "words and sentences" that are somehow associated with that thing.

If you ask an LLM a question like "what is X" it'll go looking for various sentences and words that claim to have an answer to that, and then perform a statistical guess as to which of these answers is the most likely.

It doesn't know anything, it's basically just looking up a bunch of "X is"-sentences and then using an auto-complete feature on its results. So if there's a wide-spread misconception, the odds are pretty good that it'll push this misconception (more people talk about it as if it's true than not, even if reputable sources might actively disagree).
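To make the "auto-complete on steroids" picture concrete, here's a toy sketch in Python. It is only an analogy: a real LLM is a neural network predicting tokens, not a table of word counts, and the function names `train` and `autocomplete` and the tiny corpus are made up for illustration. What it does show is the point above, that the most frequently repeated claim wins regardless of whether it's true.

```python
from collections import Counter, defaultdict

def train(corpus):
    """Count which word follows each word in the training sentences."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def autocomplete(following, prompt, max_words=5):
    """Repeatedly append the most common next word after the last word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = following.get(words[-1])
        if not candidates:
            break  # nothing ever followed this word in the training data
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

corpus = [
    "the moon is made of cheese",  # the misconception, repeated often
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the moon is made of rock",    # the correct claim, seen only once
]
model = train(corpus)
completion = autocomplete(model, "the moon is made of")
print(completion)
```

Because "cheese" follows "of" three times and "rock" only once, the completion picks "cheese". Nothing in the counting step knows or cares which sentence is accurate.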

This is also true for images, obviously.

An image generator (usually a diffusion model rather than an LLM as such, but the principle is similar) takes a picture filled with static and then "auto-completes" it over and over (based on your prompt) until there's no more static to "guess" about anymore.

Which in turn means that if you get a picture that's almost what you want, you'll need to either manually edit it yourself, or you need to go back and generate an entirely new picture and hope that it's closer to your desired result.

Copyright

Anything created purely by an LLM is, at least in the US, impossible to copyright, because copyright there requires a human author.

This means that any movies/pictures/books/games/applications you make with an LLM? Someone can upload them to be available for free and you legally can't do anything to stop them, because you don't own them either.

Ideological / Propaganda

It's been said that the ideology behind LLMs is to not have to learn things yourself (creating art/solutions without bothering to spend the time and effort to actually learn how to make/solve it yourself). Whether that's true or not, I don't think I'm qualified to judge.

However, as proven by multiple people asking LLMs to provide them with facts, there are some very blatant risks associated with it.

As mentioned above about wide-spread misconceptions being something of an Achilles' heel for LLMs, this can in fact be actively exploited by people with agendas.

Say that someone wants to make sure that a truth is buried. In a world where people rely on LLMs for facts (instead of on journalists), all someone would need to do is make sure that the truth is buried by having lots and lots of text that claims otherwise. No needing to try and bribe journalists or news-outlets, just pay a bot-farm a couple of bucks to fill the internet with this counter-fact and call it a day.

And that's not accounting for the fact that the LLM is effectively a black-box, and doesn't actually need to look things up, if the one in charge of it instead gives it a hard-coded answer to a question. So somebody could feed an LLM-user deliberately false information. But let's put a pin in that for now.
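The bot-farm scenario described a couple of paragraphs up can be sketched with a deliberately crude toy. It assumes the "look up sentences and auto-complete" picture used in this post; real LLMs are not lookup tables, and the function name `complete` and the example sentences are made up for illustration.

```python
from collections import Counter


def complete(prompt: str, corpus: list[str]) -> str:
    """Return the most common continuation of `prompt` found in `corpus`.

    A toy stand-in for the post's "auto-complete" analogy, not a real model.
    """
    continuations = Counter(
        line[len(prompt):].strip()
        for line in corpus
        if line.startswith(prompt)
    )
    if not continuations:
        return ""
    return continuations.most_common(1)[0][0]


# A small "internet" where the accurate statement is the majority view.
corpus = ["The sky is blue."] * 3

# The same internet after someone floods it with a counter-claim.
flooded = corpus + ["The sky is green."] * 10

before = complete("The sky is", corpus)
after = complete("The sky is", flooded)
print(before, after)
```

Nothing about the accurate sentences changed between the two runs; they were simply outnumbered, which is the whole attack.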

Security

There are a few different levels of this, though they're mostly relevant for coding.

The first is that an LLM doesn't actually know things like "best practices" when it comes to security-implementation, especially not recent ones.

The second is that the prompts you send in go into a black box somewhere. You don't know that those servers are "safe", and you don't know that the black box isn't keeping track of you.

The third is that the LLM often "hallucinates" in specific ways, and that it will often ask for certain things. This can be, and has been, exploited by people who create those "hallucinated" things and turn them into malware to catch the unwary.
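One way to guard against that third problem, assuming the "hallucinated things" are dependency names (my reading, not something the post spells out), is to refuse anything that isn't on a list you have vetted yourself. This is a minimal sketch; `unvetted_packages`, the `VETTED` set, and the package name `fastjsonparserx` are all hypothetical.

```python
def unvetted_packages(suggested: list[str], vetted: set[str]) -> list[str]:
    """Return the suggested package names that are NOT on the vetted list."""
    vetted_normalised = {name.lower() for name in vetted}
    return [name for name in suggested if name.lower() not in vetted_normalised]


# Hypothetical allowlist maintained by a human who checked each package.
VETTED = {"requests", "numpy"}

# Hypothetical dependency list taken from LLM-generated code.
suggestion = ["requests", "fastjsonparserx"]

flagged = unvetted_packages(suggestion, VETTED)
print(flagged)
```

Anything in `flagged` gets looked up by hand before it is installed, rather than being trusted because generated code asked for it.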

Environmental impact

An LLM requires a lot of electricity and processing-power. On a yearly basis it's calculated that ChatGPT (a single LLM) uses as much electricity as the 117 countries with the lowest energy use combined. And this is likely going to grow.

As many of these servers are reliant on water-cooling, this also pushes up the demand for fresh water, which could be detrimental to the local environment of those places, depending on water-accessibility.

Economic

Let's not get into the weeds of Microsoft claiming that their "independent study" (of their own workers with their own tool that they themselves are actively selling) is showing "incredible efficiency gains".

Let's instead look at different economic incentives.

See, none of these LLMs are actually profitable. They're built and maintained with investor-capital, and if those investors decide that they can't make money off of the "hype" (buying low and selling high) but instead need actual returns on investment (profit)? The situation as it is will change very quickly.

Currently, there's a subscription-model for most of these LLMs, but as mentioned those aren't profitable, which means that something will need to change.

They can raise prices

They can lower costs (somehow)

They can find different investors

They can start selling customer-data

Raising prices would mean that a lot of people would suddenly no longer consider it cost-beneficial to continue relying on LLMs, which means that it's not necessarily a good way to increase revenue.

Lowering costs would be fantastic for these companies, but a lot of this is already as streamlined as they can imagine, so assuming that this is plausible is... perhaps rather optimistic.

With "new investors" the point is to not target profit-interested individuals, but instead aim for people who'd be willing to pay for more non-monetary results. Such as paying the LLM-companies directly to spread slanted information.

Selling customer-data is very common in the current landscape, and having access to "context code" that's fed into the LLM for good prompt-results would likely be incredibly valuable for anything from further LLM-development to corporate espionage.

Conclusions

There are many different reasons someone might wish to avoid LLMs. Some of these are ideological, some are economic, and some are a combination of both.

How far this current "AI-bubble" carries us is up for debate (until it isn't), but I don't think that LLMs will ever entirely disappear now that they exist. Because there is power in information-control, and in many ways that's exactly what LLMs are.

#and don't get me started on all of those articles about ''microsoft claims in new study (that they made) that their tool#(that they're selling) increases productivity of their workers (who are paid by them) by 20% (arbitrarily measured)''#rants#generative ai

4 notes

Text

Cross-posting from my mention of this on Pillowfort.

Yesterday, Draft2Digital (which now includes Smashwords) sent out an email with a, frankly, very insulting survey. It would be such a shame if a link to that survey without the link trackers were to circulate around Tumblr dot Com.

The survey has eight multiple choice questions and (more importantly) two long-form text response boxes.

The survey is being run from August 27th, 2024 to September 3rd, 2024. If you use Draft2Digital or Smashwords, and have not already seen this in your associated email, you may want to read through it and send them your thoughts.

Plain text for the image below the cut:

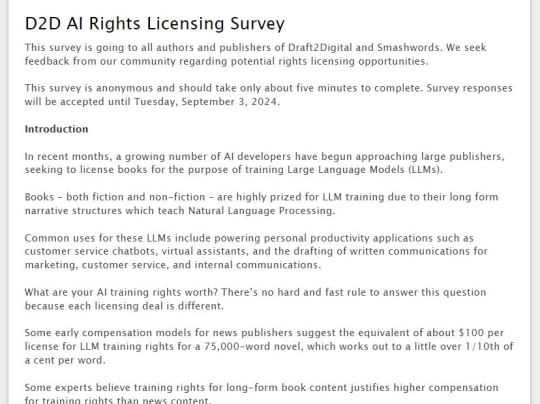

D2D AI Rights Licensing Survey:

This survey is going to all authors and publishers of Draft2Digital and Smashwords. We seek feedback from our community regarding potential rights licensing opportunities.

This survey is anonymous and should take only about five minutes to complete. Survey responses will be accepted until Tuesday, September 3, 2024.

Introduction:

In recent months, a growing number of AI developers have begun approaching large publishers, seeking to license books for the purpose of training Large Language Models (LLMs).

Books – both fiction and non-fiction – are highly prized for LLM training due to their long form narrative structures which teach Natural Language Processing.

Common uses for these LLMs include powering personal productivity applications such as customer service chatbots, virtual assistants, and the drafting of written communications for marketing, customer service, and internal communications.

What are your AI training rights worth? There’s no hard and fast rule to answer this question because each licensing deal is different.

Some early compensation models for news publishers suggest the equivalent of about $100 per license for LLM training rights for a 75,000-word novel, which works out to a little over 1/10th of a cent per word.

Some experts believe training rights for long-form book content justifies higher compensation for training rights than news content.
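For anyone who wants to check the survey's per-word figure, here is the arithmetic as a short sketch. The only inputs are the $100 and 75,000 words quoted above; the variable names are mine.

```python
license_fee_usd = 100   # quoted fee per license
novel_words = 75_000    # quoted novel length

# Convert dollars to cents, then divide by the word count.
cents_per_word = license_fee_usd * 100 / novel_words

# The same figure the other way round: how many words one dollar buys.
words_per_dollar = novel_words / license_fee_usd

print(round(cents_per_word, 3), words_per_dollar)
```

That comes out at roughly 0.13 cents per word, or 750 words per dollar, which matches the "little over 1/10th of a cent" in the survey text.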

17 notes

Text

Simplify Transactions and Boost Efficiency with Our Cash Collection Application

Manual cash collection can lead to inefficiencies and increased risks for businesses. Our cash collection application provides a streamlined solution, tailored to support all business sizes in managing cash effortlessly. Key features include automated invoicing, multi-channel payment options, and comprehensive analytics, all of which simplify the payment process and enhance transparency. The application is designed with a focus on usability and security, ensuring that every transaction is traceable and error-free. With real-time insights and customizable settings, you can adapt the application to align with your business needs. Its robust reporting functions give you a bird’s eye view of financial performance, helping you make data-driven decisions. Move beyond traditional, error-prone cash handling methods and step into the future with a digital approach. With our cash collection application, optimize cash flow and enjoy better financial control at every level of your organization.

#seo agency#seo company#seo marketing#digital marketing#seo services#azure cloud services#amazon web services#ai powered application#android app development#augmented reality solutions#augmented reality in education#augmented reality (ar)#augmented reality agency#augmented reality development services#cash collection application#cloud security services#iot applications#iot#iotsolutions#iot development services#iot platform#digitaltransformation#innovation#techinnovation#iot app development services#large language model services#artificial intelligence#llm#generative ai#ai

4 notes

Note

That's the thing I hate probably The Most about AI stuff, even besides the environment and the power usage and the subordination of human ingenuity to AI black boxes; it's all so fucking samey and Dogshit to look at. And even when it's good that means you know it was a fluke and there is no way to find More of the stuff that was good

It's one of the central limitations of how "AI" of this variety is built. The learning models. Gradient descent, weighting, the attempts to appear genuine, and mass training on the widest possible body of inputs all mean that the model will trend toward mediocrity no matter what you do about it. I'm not jabbing anyone here but the majority of all works are either bad or mediocre, and the brute-force, sheer-numbers approach necessitated by the architecture of ANNs and LLMs means that any model is destined to this fate.

This is somewhat related to a fear techbros have, and are beginning to face: that their models will suck in outputs from other models, destroying what little success they have had. So much mediocre or nonsense garbage is out there now that it is effectively having the same effect inbreeding has on biological systems. And there is no solution because it is a fundamental aspect of trained systems.

The thing is, while humans are not really able to capture randomness in our creative outputs very well, our patterns tend to be more pseudorandom than what ML can capture and then regurgitate. This is part of the drawback described above: LLMs are at their core just a very fancy, large-scale implementation of a statistical system. This is also how humans can begin to recognise generated media even from very sophisticated models; we aren't really good at randomness, but too much structured pattern is a signal. Even in generated texts, you pick up on patterns in the way words are strung together or used, even if you aren't completely conscious of it. The sense that something feels uncanny goes beyond weird dolls and mannequins. You can tell that the framework is there but the substance is missing, or things are just bland. Humans remain just too capable of pattern recognition, and part of how we enjoy media lies in little, non-trivial deviations from those patterns, which makes generative content just kind of mediocre once the awe wears off.

Somewhat relatedly, the idea of a general LLM is totally off the table precisely because of what generalism means for a trained model: that same mediocrity. Unlike humans, trained models cannot by definition become general; and also unlike humans, a general model is still wholly a specialised application that is good at not being good. A generalist human might not be as skilled as a specialist but is still capable of applying signs and symbols and meaning across specialties. A specialised human will 100% clap any trained model every day. The reason is simple and evident: the unassailable fact that trained models still cannot process meaning, signs, and symbols, let alone apply them in any concrete way. They cannot generate an idea, they cannot generate a feeling.

The reason human-created works still can drag machine-generated ones every day is the fact we are able to express ideas and signs through these non-lingual ways to create feelings and thoughts in our fellow humans. This act actually introduces some level of non-trivial and non-processable almost-but-not-quite random "data" into the works that machine-learning models simply cannot access. How do you identify feelings in an illustration? How do you quantify a received sensibility?

And as long as vulture capitalists and techbros continue to fixate on "wow computer bro" and cheap grifts, no amount of technical development will ever take these things out of our exclusive possession. Perhaps that is a good thing, I won't make a claim either way.

4 notes

Text

Beyond Scripts: How AI Agents Are Replacing Hardcoded Logic

Introduction: Hardcoded rules have long driven traditional automation, but AI agents represent a fundamental shift in how we build adaptable, decision-making systems. Rather than relying on deterministic flows, AI agents use models and contextual data to make decisions dynamically, whether in customer support, autonomous vehicles, or software orchestration.

This paradigm is powered by reinforcement learning, large language models (LLMs), and multi-agent collaboration. AI agents can independently evaluate goals, prioritize tasks, and respond to changing conditions without requiring a full rewrite of logic. For developers, this means less brittle code and more resilient systems.

In applications like workflow automation or digital assistants, integrating AI agents allows systems to "reason" through options and select optimal actions. This flexibility opens up new possibilities for adaptive systems that can evolve over time.

You can explore more practical applications and development frameworks on this AI agents service page.

When designing AI agents, define clear observation and action spaces—this improves interpretability and debugging during development.
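As a rough illustration of that last tip, the sketch below pins down an observation space and an action space as explicit types. Everything here is hypothetical (the `Observation` fields, the `Action` members, and the hand-written `choose_action` policy, which stands in for whatever model a real agent would call); the point is only that a fixed, enumerable interface is easy to log and debug.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """The complete set of things this toy agent is allowed to do."""
    REPLY = "reply"
    ESCALATE = "escalate"
    WAIT = "wait"


@dataclass(frozen=True)
class Observation:
    """Everything the agent is allowed to see when deciding."""
    unread_messages: int
    customer_is_upset: bool


def choose_action(obs: Observation) -> Action:
    """Hand-written placeholder policy; a real agent would query a model here."""
    if obs.customer_is_upset:
        return Action.ESCALATE
    if obs.unread_messages > 0:
        return Action.REPLY
    return Action.WAIT


decision = choose_action(Observation(unread_messages=2, customer_is_upset=False))
print(decision)
```

Because every decision is a pair of one `Observation` and one `Action`, a surprising choice can be reproduced by replaying the logged observation.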

2 notes

Text

MediaTek Kompanio Ultra 910 for best Chromebook Performance

MediaTek Ultra 910

Maximising Chromebook Performance with Agentic AI

The MediaTek Kompanio Ultra redefines Chromebook Plus laptops, pairing all-day battery life with the most capable Chromebooks yet. By automating procedures, optimising workflows, and allowing efficient, secure, and customised computing, agentic AI redefines on-device intelligence.

MediaTek Kompanio Ultra delivers unrivalled performance whether you're multitasking, generating content, playing raytraced games and streaming, or enjoying immersive entertainment.

Features of MediaTek Kompanio Ultra

An industry-leading all-big core architecture delivers flagship Chromebooks unmatched performance.

Arm Cortex-X925 with 3.62 GHz max.

Eight-core Cortex-X925, X4, and A720 processors

Best single-threaded performance among Arm Chromebooks

Highest Power Efficiency

Large on-chip caches boost performance and power efficiency by storing more data near the CPU.

The fastest Chromebook memory: The powerful CPU, GPU, and NPU get more data rapidly with LPDDR5X-8533 memory support.

ChromeOS UX: Speed is optimised for fast response, whether you're switching applications during a virtual conference, following social media feeds, or making milliseconds count in an in-game battle.

Because of its strong collaboration with Arm, MediaTek can bring the latest architectural developments to market first, and the MediaTek Kompanio Ultra processor delivers the latest Armv9.2 CPU advantage.

MediaTek's latest Armv9.2 architecture provides power efficiency, security, and faster computing.

Best in Class Power Efficiency: The Kompanio Ultra combines the 2nd generation TSMC 3nm technology with large on-chip caches and MediaTek's industry-leading power management to deliver better performance per milliwatt. The spectacular experiences of top Chromebooks are enhanced.

Best Lightweight and Thin Designs: MediaTek's brand partners can easily construct lightweight, thin, fanless, silent, and cool designs.

Leading NPU Performance: MediaTek's 8th-generation NPU gives the Kompanio Ultra an edge in industry-standard AI and generative AI benchmarks.

Prepared for AI agents

Superior on-device photo and video production

Up to 50 TOPS of AI performance

ETHZ v6 leadership, Gen-AI models

CPU/GPU tasks are offloaded to the NPU, speeding processing and saving energy.

Next-gen Generative AI technologies: MediaTek's investments in AI technologies and ecosystems ensure that Chromebooks running the MediaTek Kompanio Ultra provide the latest apps, services, and experiences.

Extended content support

Faster LLM speculative decoding

Complete SLM+LLM AI model support

Multimodal support

11-core graphics processing unit: Arm's 5th-generation G925 GPU, used by the powerful 11-core graphics engine, improves traditional and raytraced graphics performance while using less power, producing better visual effects, and maintaining peak gameplay speeds longer.

The G925 GPU matches desktop PC-grade raytracing with increased opacity micromaps (OMM) to increase scene depths with subtle layering effects.

OMM-supported games' benefits:

Reduced geometry rendering

Visual enhancements without increasing model complexity

Natural-looking feathers, hair, and plants

4K Displays & Dedicated Audio: Multiple displays focus attention and streamline procedures, increasing efficiency. Task-specific displays simplify multitasking and reduce clutter. With support for up to three 4K monitors (internal and external), professionals have huge screen space for difficult tasks, while gamers and content makers have extra windows for chat, streaming, and real-time interactions.

DP MST supports two 4K external screens.

Custom processing optimises power use and improves audio quality. Low-power standby detects wake-up keywords, improving voice assistant response. This performance-energy efficiency balance improves smart device battery life, audio quality, and functionality.

Hi-Fi Audio DSP for low-power standby and sound effects

Support for up to Wi-Fi 7 and Bluetooth 6.0 provides extreme wireless speeds and signal range for efficient computing anywhere.

Wi-Fi 7 can reach 7.3Gbps.

Two-engine Bluetooth 6.0

#technology#technews#govindhtech#news#technologynews#processors#MediaTek Kompanio Ultra#Agentic AI#Chromebooks#MediaTek#MediaTek Kompanio#Kompanio Ultra#MediaTek Kompanio Ultra 910

2 notes

Text

It’s the eve of the Reaping. July 3rd, just before the 10th Annual Hunger Games.

User and Lucy Gray are lovers and childhood friends, spending what might be their final peaceful day together in the meadow 🌾 🪕

Lucy Gray can feel what’s going to happen tomorrow. The only question is, how will you make this day count?

Bot can be used for smut, romance, or fluff. Original prompt has no TW but minors roleplay at your own risk.

Suitable for wlw or straight romance. Gender-neutral pronouns used for User, so all personas are applicable.

#lucy gray baird#lucy gray my beloved#lucy gray x reader#cai#cai bots#the hunger games#hunger games#thg#thg series#thg roleplay#hunger games roleplay#ballad of songbirds and snakes#district 12

3 notes
