#Linux Networking Projects


Project and Training in Network Programming

Master network programming with Emblogic's Linux Socket Programming course in Noida. Emblogic offers hands-on training in Linux networking and socket programming, providing a strong foundation for building projects and preparing students for placements in multinational companies. This program is ideal for those aspiring to master network communication and build cutting-edge software solutions.

What is Socket Programming?

Socket programming is a fundamental technique for enabling communication between software applications over networks, typically using the TCP/IP protocol suite. A socket acts as an endpoint for sending and receiving data, allowing two systems, whether on a local area network (LAN) or the Internet, to exchange information. Sockets also enable communication between processes on the same machine.

How Does Socket Communication Work?

The client creates a local TCP socket by specifying the server's IP address and port number.

The client's TCP stack establishes a connection with the server's TCP stack through a three-way handshake.

The server creates a new socket to handle communication with the client.

The client sends requests to the server, which responds with the required data or service.

Data exchange happens over TCP/IP, which provides reliable, ordered delivery. Encryption is not part of TCP itself and requires an additional layer such as TLS.

Why Choose Emblogic?

Emblogic’s course is project-based, emphasizing practical applications of socket programming. You’ll learn to:

Build client-server applications.

Create custom network protocols using a socket stack.

Implement inter-process communication.

Our training ensures you gain in-depth knowledge and real-world experience, making you job-ready for opportunities in leading tech companies.

Whether you’re a beginner or a professional looking to upgrade your skills, Emblogic provides the perfect platform to excel in Linux Networking Socket Programming. Join us to build your expertise and take the next step in your career!

Linux Networking socket Programming Noida, Project based Linux Networking socket Programming, Linux Socket Programming Noida, Networking Socket Programming Course, Client-Server Application Development, TCP/IP Communication Training, Linux Networking Projects, Socket Programming Certification, Inter-Process Communication Training, Network Protocol Development.

#Linux Networking socket Programming Noida#Project based Linux Networking socket Programming#Linux Socket Programming Noida#Networking Socket Programming Course#Client-Server Application Development#TCP/IP Communication Training#Linux Networking Projects


Redoing my domain and home network. I want to have each device publicly mailable at "[email protected]" but reachable on the LAN at a fixed address of hostname.marq42.xyz. Definitely plan to do some distributed computing on them, perhaps even approaching Beowulf cluster levels. Hmm, more of a dog than a wolf. Beodogg?


Dreaming of becoming a project management pro? Turn your dreams into reality with Netlabsit's comprehensive PMP course. Get certified and stand out from the crowd. Quality training is available. Contact us at the following numbers: 011-41646262, 24641080, or via WhatsApp at 9278208308, 8700826369, and 9990713201, or visit our office at N25, South Extension, Part-I in New Delhi, India. Enrol today: https://bitly.ws/GMb6

#pmp course#technology#programming#project management#poetry#pmp online#azure#networking#hardware#it service#netlabsits#tumblr milestone#aws#education#linux#pmp exam#pmp training


How I ditched streaming services and learned to love Linux: A step-by-step guide to building your very own personal media streaming server (V2.0: REVISED AND EXPANDED EDITION)

This is a revised, corrected and expanded version of my tutorial on setting up a personal media server that previously appeared on my old blog (donjuan-auxenfers). I expect that that post is still making the rounds (hopefully with my addendum on modifying group share permissions in Ubuntu to circumvent 0x8007003B "Unexpected Network Error" messages in Windows 10/11 when transferring files) but I have no way of checking. Anyway this new revised version of the tutorial corrects one or two small errors I discovered when rereading what I wrote, adds links to all products mentioned and is just more polished generally. I also expanded it a bit, pointing more adventurous users toward programs such as Sonarr/Radarr/Lidarr and Overseerr which can be used for automating user requests and media collection.

So then, what is this tutorial? This is a tutorial on how to build and set up your own personal media server using Ubuntu as an operating system and Plex (or Jellyfin) to not only manage your media, but to also stream that media to your devices both at home and abroad anywhere in the world where you have an internet connection. Its intent is to show you how building a personal media server and stuffing it full of films, TV, and music that you acquired through indiscriminate and voracious media piracy various legal methods will free you to completely ditch paid streaming services. No more will you have to pay for Disney+, Netflix, HBOMAX, Hulu, Amazon Prime, Peacock, CBS All Access, Paramount+, Crave or any other streaming service that is not named Criterion Channel. Instead whenever you want to watch your favourite films and television shows, you’ll have your own personal service that only features things that you want to see, with files that you have control over. And for music fans out there, both Jellyfin and Plex support music streaming, meaning you can even ditch music streaming services. Goodbye Spotify, Youtube Music, Tidal and Apple Music, welcome back unreasonably large MP3 (or FLAC) collections.

On the hardware front, I’m going to offer a few options catered towards different budgets and media library sizes. The cost of getting a media server up and running using this guide will cost you anywhere from $450 CAD/$325 USD at the low end to $1500 CAD/$1100 USD at the high end (it could go higher). My server was priced closer to the higher figure, but I went and got a lot more storage than most people need. If that seems like a little much, consider for a moment, do you have a roommate, a close friend, or a family member who would be willing to chip in a few bucks towards your little project provided they get access? Well that's how I funded my server. It might also be worth thinking about the cost over time, i.e. how much you spend yearly on subscriptions vs. a one time cost of setting up a server. Additionally there's just the joy of being able to scream "fuck you" at all those show cancelling, library deleting, hedge fund vampire CEOs who run the studios through denying them your money. Drive a stake through David Zaslav's heart.

On the software side I will walk you step-by-step through installing Ubuntu as your server's operating system, configuring your storage as a RAIDz array with ZFS, sharing your zpool to Windows with Samba, running a remote connection between your server and your Windows PC, and then a little about getting started with Plex/Jellyfin. Every terminal command you will need to input will be provided, and I even share a custom bash script that will make used vs. available drive space on your server display correctly in Windows.

If you have a different preferred flavour of Linux (Arch, Manjaro, Redhat, Fedora, Mint, OpenSUSE, CentOS, Slackware etc. et. al.) and are aching to tell me off for being basic and using Ubuntu, this tutorial is not for you. The sort of person with a preferred Linux distro is the sort of person who can do this sort of thing in their sleep. Also I don't care. This tutorial is intended for the average home computer user. This is also why we’re not using a more exotic home server solution like running everything through Docker Containers and managing it through a dashboard like Homarr or Heimdall. While such solutions are fantastic and can be very easy to maintain once you have it all set up, wrapping your brain around Docker is a whole thing in and of itself. If you do follow this tutorial and had fun putting everything together, then I would encourage you to return in a year’s time, do your research and set up everything with Docker Containers.

Lastly, this is a tutorial aimed at Windows users. Although I was a daily user of OS X for many years (roughly 2008-2023) and I've dabbled quite a bit with various Linux distributions (mostly Ubuntu and Manjaro), my primary OS these days is Windows 11. Many things in this tutorial will still be applicable to Mac users, but others (e.g. setting up shares) you will have to look up for yourself. I doubt it would be difficult to do so.

Nothing in this tutorial will require feats of computing expertise. All you will need is a basic computer literacy (i.e. an understanding of what a filesystem and directory are, and a degree of comfort in the settings menu) and a willingness to learn a thing or two. While this guide may look overwhelming at first glance, it is only because I want to be as thorough as possible. I want you to understand exactly what it is you're doing, I don't want you to just blindly follow steps. If you half-way know what you’re doing, you will be much better prepared if you ever need to troubleshoot.

Honestly, once you have all the hardware ready it shouldn't take more than an afternoon or two to get everything up and running.

(This tutorial is just shy of seven thousand words long so the rest is under the cut.)

Step One: Choosing Your Hardware

Linux is a lightweight operating system; depending on the distribution there's close to no bloat. There are recent distributions available at this very moment that will run perfectly fine on a fourteen year old i3 with 4GB of RAM. Moreover, running Plex or Jellyfin isn’t resource intensive in 90% of use cases. All this is to say, we don’t require an expensive or powerful computer. This means that there are several options available: 1) use an old computer you already have sitting around but aren't using, 2) buy a used workstation from eBay, or, what I believe to be the best option, 3) order an N100 Mini-PC from AliExpress or Amazon.

Note: If you already have an old PC sitting around that you’ve decided to use, fantastic, move on to the next step.

When weighing your options, keep a few things in mind: the number of people you expect to be streaming simultaneously at any one time, the resolution and bitrate of your media library (4k video takes a lot more processing power than 1080p) and most importantly, how many of those clients are going to be transcoding at any one time. Transcoding is what happens when the playback device does not natively support direct playback of the source file. This can happen for a number of reasons, such as the playback device's native resolution being lower than the file's internal resolution, or because the source file was encoded in a video codec unsupported by the playback device.

Ideally we want any transcoding to be performed by hardware. This means we should be looking for a computer with an Intel processor with Quick Sync. Quick Sync is a dedicated core on the CPU die designed specifically for video encoding and decoding. This specialized hardware makes for highly efficient transcoding both in terms of processing overhead and power draw. Without these Quick Sync cores, transcoding must be brute forced through software. This takes up much more of a CPU’s processing power and requires much more energy. But not all Quick Sync cores are created equal, and you need to keep this in mind if you've decided either to use an old computer or to shop for a used workstation on eBay.

Any Intel processor from second generation Core (Sandy Bridge circa 2011) onward has Quick Sync cores. It's not until 6th gen (Skylake), however, that the cores support the H.265 HEVC codec. Intel’s 10th gen (Comet Lake) processors introduce support for 10-bit HEVC and HDR tone mapping. And the recent 12th gen (Alder Lake) processors brought with them hardware AV1 decoding. As an example, while an 8th gen (Coffee Lake) i5-8500 will be able to hardware transcode a H.265 encoded file, it will fall back to software transcoding if given a 10-bit H.265 file. If you’ve decided to use that old PC or to look on eBay for an old Dell Optiplex, keep this in mind.

Note 1: The price of old workstations varies wildly and fluctuates frequently. If you get lucky and go shopping shortly after a workplace has liquidated a large number of their workstations you can find deals for as low as $100 on a barebones system, but generally an i5-8500 workstation with 16GB RAM will cost you somewhere in the area of $260 CAD/$200 USD.

Note 2: The AMD equivalent to Quick Sync is called Video Core Next, and while it's fine, it's not as efficient and not as mature a technology. It was only introduced with the first generation Ryzen CPUs and it only got decent with their newest CPUs, and we want something cheap.

Alternatively you could forgo having to keep track of what generation of CPU is equipped with Quick Sync cores that feature support for which codecs, and just buy an N100 mini-PC. For around the same price as a used workstation, or less, you can pick up a mini-PC with an Intel N100 processor. The N100 is a four-core processor based on the 12th gen Alder Lake architecture and comes equipped with the latest revision of the Quick Sync cores. These little processors offer astounding hardware transcoding capabilities for their size and power draw. Otherwise they perform equivalently to an i5-6500, which isn't a terrible CPU. A friend of mine uses an N100 machine as a dedicated retro emulation gaming system and it does everything up to 6th generation consoles just fine. The N100 is also a remarkably efficient chip; it sips power. In fact, the difference between running one of these and an old workstation could work out to hundreds of dollars a year in energy bills depending on where you live.
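To put a number on the energy argument for your own situation, here is a rough sketch of the arithmetic: average draw in watts, running 24/7, multiplied by your price per kWh. The function name annual_cost and the wattage and price figures are placeholders I made up for illustration, not measurements; plug in your own.

```shell
#!/bin/bash
# Yearly electricity cost of a machine running 24/7.
# Arguments: average draw in watts, price per kWh (both are your own inputs).
annual_cost() {
    awk -v watts="$1" -v price="$2" \
        'BEGIN { printf "%.2f\n", watts * 24 * 365 / 1000 * price }'
}

# Hypothetical figures: a 60W workstation vs. a 10W mini-PC at 0.15 per kWh.
annual_cost 60 0.15
annual_cost 10 0.15
```

The gap between the two results is your yearly saving. How large it gets depends heavily on your real idle wattage and your local rates.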

You can find these Mini-PCs all over Amazon or for a little cheaper on AliExpress. They range in price from $170 CAD/$125 USD for a no name N100 with 8GB RAM to $280 CAD/$200 USD for a Beelink S12 Pro with 16GB RAM. The brand doesn't really matter, they're all coming from the same three factories in Shenzhen, so go for whichever one fits your budget or has features you want. 8GB RAM should be enough; Linux is lightweight and Plex only calls for 2GB RAM. 16GB RAM might result in a slightly snappier experience, especially with ZFS. A 256GB SSD is more than enough for what we need as a boot drive. Going for a bigger drive might allow you to get away with things like creating preview thumbnails for Plex, but it’s up to you and your budget.

The Mini-PC I wound up buying was a Firebat AK2 Plus with 8GB RAM and a 256GB SSD. It looks like this:

Note: If you decide to order a Mini-PC from AliExpress, check the type of power adapter it ships with. The mini-PC I bought came with an EU power adapter and I had to supply my own North American power supply. Thankfully this is a minor issue, as 12V/2.5A (30W) barrel plug power adapters are easy to find and can be had for $10.

Step Two: Choosing Your Storage

Storage is the most important part of our build. It is also the most expensive. Thankfully it’s also the most easily upgrade-able down the line.

For people with a smaller media collection (4TB to 8TB), a more limited budget, or who will only ever have two simultaneous streams running, I would say that the most economical course of action would be to buy a USB 3.0 8TB external HDD. Something like this one from Western Digital or this one from Seagate. One of these external drives will cost you in the area of $200 CAD/$140 USD. Down the line you could add a second external drive or replace it with a multi-drive RAIDz set up such as detailed below.

If a single external drive is the path for you, move on to step three.

For people with larger media libraries (12TB+), who prefer media in 4k, or who care about data redundancy, the answer is a RAID array featuring multiple HDDs in an enclosure.

Note: If you are using an old PC or used workstation as your server and have the room for at least three 3.5" drives, and as many open SATA ports on your motherboard, you won't need an enclosure, just install the drives into the case. If your old computer is a laptop or doesn’t have room for more internal drives, then I would suggest an enclosure.

The minimum number of drives needed to run a RAIDz array is three, and seeing as RAIDz is what we will be using, you should be looking for an enclosure with three to five bays. I think that four disks makes for a good compromise for a home server. Regardless of whether you go for a three, four, or five bay enclosure, do be aware that in a RAIDz array the space equivalent of one of the drives will be dedicated to parity, leaving a usable fraction of 1 − 1/n of the raw capacity, where n is the number of drives. For example, in a four bay enclosure equipped with four 12TB drives configured as a RAIDz1 array, we would be left with a total of 36TB of usable space (48TB raw size). The reason for why we might sacrifice storage space in such a manner will be explained in the next section.
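If you want to try the capacity math for other drive counts and sizes, the following is a minimal sketch. The function name raidz_usable_tb is my own invention, and the result is only the raw parity arithmetic: ZFS metadata, padding and reserved space will shave a bit more off in practice.

```shell
#!/bin/bash
# Approximate usable capacity of a RAIDz vdev: (drives - parity) * drive size.
# Arguments: number of drives, size of each drive in TB, parity level
# (1 for RAIDz1, 2 for RAIDz2; defaults to 1).
raidz_usable_tb() {
    local drives=$1 size_tb=$2 parity=${3:-1}
    echo $(( (drives - parity) * size_tb ))
}

raidz_usable_tb 4 12 1   # four 12TB drives in RAIDz1, prints 36
raidz_usable_tb 5 12 2   # five 12TB drives in RAIDz2, prints 36
```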

A four bay enclosure will cost somewhere in the area of $200 CAD/$140 USD. You don't need anything fancy; we don't need anything with hardware RAID controls (RAIDz is done entirely in software) or even USB-C. An enclosure with USB 3.0 will perform perfectly fine. Don’t worry too much about USB speed bottlenecks. A mechanical HDD will be limited by the speed of its mechanism long before it will be limited by the speed of a USB connection. I've seen decent looking enclosures from TerraMaster, Yottamaster, Mediasonic and Sabrent.

When it comes to selecting the drives, as of this writing, the best value (dollar per gigabyte) are those in the range of 12TB to 20TB. I settled on 12TB drives myself. If 12TB to 20TB drives are out of your budget, go with what you can afford, or look into refurbished drives. I'm not sold on the idea of refurbished drives but many people swear by them.

When shopping for hard drives, search for drives designed specifically for NAS use. Drives designed for NAS use typically have better vibration dampening and are designed to be active 24/7. They will also often make use of CMR (conventional magnetic recording) as opposed to SMR (shingled magnetic recording). This nets them a sizable read/write performance bump over typical desktop drives. Seagate Ironwolf and Toshiba NAS are both well regarded brands when it comes to NAS drives. I would avoid Western Digital Red drives at this time. WD Reds were a go-to recommendation up until earlier this year, when it was revealed that they feature firmware that will throw up false SMART warnings telling you to replace the drive at the three year mark, quite often when there is nothing at all wrong with that drive. It will likely even be good for another six, seven, or more years.

Step Three: Installing Linux

For this step you will need a USB thumbdrive of at least 6GB in capacity, an .ISO of Ubuntu, and a way to make that thumbdrive bootable media.

First download a copy of Ubuntu desktop. (For best performance we could download the Server release, but for new Linux users I would recommend against it. The server release is strictly command line interface only, and having a GUI is very helpful for most people. Not many people are wholly comfortable doing everything through the command line, I'm certainly not one of them, and I grew up with DOS 6.0.) 22.04.3 Jammy Jellyfish is the current Long Term Support release; this is the one to get.

Download the .ISO and then download and install balenaEtcher on your Windows PC. BalenaEtcher is an easy to use program for creating bootable media, you simply insert your thumbdrive, select the .ISO you just downloaded, and it will create a bootable installation media for you.

Once you've made a bootable media and you've got your Mini-PC (or your old PC/used workstation) in front of you, hook it directly into your router with an ethernet cable, and then plug in the HDD enclosure, a monitor, a mouse and a keyboard. Now turn that sucker on and hit whatever key gets you into the BIOS (typically ESC, DEL or F2). If you’re using a Mini-PC check to make sure that the PL1 and PL2 power limits are set correctly; my N100's PL1 limit was set at 10W, a full 20W under the chip's power limit. Also make sure that the RAM is running at the advertised speed. My Mini-PC’s RAM was set at 2333MHz out of the box when it should have been 3200MHz. Once you’ve done that, key over to the boot order and place the USB drive first in the boot order. Then save the BIOS settings and restart.

After you restart you’ll be greeted by Ubuntu's installation screen. Installing Ubuntu is really straightforward. Select the "minimal" installation option, as we won't need anything on this computer except for a browser (Ubuntu comes preinstalled with Firefox) and Plex Media Server/Jellyfin Media Server. Also remember to delete and reformat that Windows partition! We don't need it.

Step Four: Installing ZFS and Setting Up the RAIDz Array

Note: If you opted for just a single external HDD skip this step and move onto setting up a Samba share.

Once Ubuntu is installed it's time to configure our storage by installing ZFS to build our RAIDz array. ZFS is a "next-gen" file system that is both massively flexible and massively complex. It's capable of snapshot backups and self healing error correction, and ZFS pools can be configured with drives operating in a supplemental manner alongside the storage vdev (e.g. fast cache, a separate intent log, hot spares etc.). It's also a file system very amenable to fine tuning. Block and sector size are adjustable to use case and you're afforded the option of different methods of inline compression. If you'd like a very detailed overview and explanation of its various features and tips on tuning a ZFS array check out these articles from Ars Technica. For now we're going to ignore all these features and keep it simple: we're going to pull our drives together into a single vdev running in RAIDz which will be the entirety of our zpool, no fancy cache drive or SLOG.

Open up the terminal and type the following commands:

sudo apt update

then

sudo apt install zfsutils-linux

This will install the ZFS utility. Verify that it's installed with the following command:

zfs --version

Now, it's time to check that the HDDs we have in the enclosure are healthy, running, and recognized. We also want to find out their device IDs and take note of them:

sudo fdisk -l

Note: You might be wondering why some of these commands require "sudo" in front of them while others don't. "Sudo" is short for "super user do". When and where "sudo" is used has to do with the way permissions are set up in Linux. Only the "root" user has the access level to perform certain tasks in Linux. As a matter of security and safety regular user accounts are kept separate from the "root" user. It's not advised (or even possible) to boot into Linux as "root" with most modern distributions. Instead by using "sudo" our regular user account is temporarily given the power to do otherwise forbidden things. Don't worry about it too much at this stage, but if you want to know more check out this introduction.

If everything is working you should get a list of the various drives detected along with their device IDs which will look like this: /dev/sdc. You can also check the device IDs of the drives by opening the disk utility app. Jot these IDs down as we'll need them for our next step, creating our RAIDz array.
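If you have trouble telling which physical drive is which, the sketch below may help. It wraps two standard tools in helper functions (list_disks and list_disk_ids are names I made up): lsblk to show each disk's size, model and serial, and a listing of /dev/disk/by-id, where Linux keeps persistent names that point at the current /dev/sdX devices. Kernel names like /dev/sdc are assigned at boot and can change, so the persistent names are handy for double-checking, assuming your enclosure passes the drive serials through over USB.

```shell
#!/bin/bash
# List whole disks (no partitions) with size, model and serial so each
# physical drive can be matched to its /dev/sdX name.
list_disks() {
    lsblk -d -o NAME,SIZE,MODEL,SERIAL
}

# Show the persistent per-disk names and the /dev/sdX device each one
# currently points to, hiding the per-partition entries.
list_disk_ids() {
    ls -l /dev/disk/by-id/ | grep -v -- '-part'
}

# On the server: run `list_disks`, then `list_disk_ids`.
```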

RAIDz is similar to RAID-5. Instead of only striping your data over multiple disks, which exchanges redundancy for speed and available space (RAID-0), or mirroring your data by writing two copies of every piece (RAID-1), it writes parity blocks across the disks in addition to striping. This provides a balance of speed, redundancy and available space. If a single drive fails, the parity blocks on the working drives can be used to reconstruct the entire array as soon as a replacement drive is added.

Additionally, RAIDz improves over some of the common RAID-5 flaws. It's more resilient and capable of self healing, as it is capable of automatically checking for errors against a checksum. It's more forgiving in this way, and it's likely that you'll be able to detect when a drive is dying well before it fails. A RAIDz array can survive the loss of any one drive.

Note: While RAIDz is indeed resilient, if a second drive fails during the rebuild, you're fucked. Always keep backups of things you can't afford to lose. This tutorial, however, is not about proper data safety.

To create the pool, use the following command:

sudo zpool create "zpoolnamehere" raidz "device IDs of drives we're putting in the pool"

For example, let's creatively name our zpool "mypool". This pool will consist of four drives which have the device IDs: sdb, sdc, sdd, and sde. The resulting command will look like this:

sudo zpool create mypool raidz /dev/sdb /dev/sdc /dev/sdd /dev/sde

If, as an example, you bought five HDDs and decided you wanted more redundancy, dedicating two drives to this purpose, we would modify the command to "raidz2" and the command would look something like the following:

sudo zpool create mypool raidz2 /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf

An array configured like this is known as RAIDz2 and is able to survive two disk failures.

Once the zpool has been created, we can check its status with the command:

zpool status

Or more concisely with:

zpool list

The nice thing about ZFS as a file system is that a pool is ready to go immediately after creation. If we were to set up a traditional RAID-5 array using mdadm, we'd have to sit through a potentially hours long process of reformatting and partitioning the drives. Instead we're ready to go right out the gates.

The zpool should be automatically mounted to the filesystem after creation, check on that with the following:

df -hT | grep zfs

Note: If your computer ever loses power suddenly, say in event of a power outage, you may have to re-import your pool. In most cases, ZFS will automatically import and mount your pool, but if it doesn’t and you can't see your array, simply open the terminal and type sudo zpool import -a.

By default a zpool is mounted at /"zpoolname". The pool should be under our ownership but let's make sure with the following command:

sudo chown -R "yourlinuxusername" /"zpoolname"

Note: Changing file and folder ownership with "chown" and file and folder permissions with "chmod" are essential commands for much of the admin work in Linux, but we won't be dealing with them extensively in this guide. If you'd like a deeper tutorial and explanation you can check out these two guides: chown and chmod.

You can access the zpool file system through the GUI by opening the file manager (the Ubuntu default file manager is called Nautilus) and clicking on "Other Locations" on the sidebar, then entering the Ubuntu file system and looking for a folder with your pool's name. Bookmark the folder on the sidebar for easy access.

Your storage pool is now ready to go. Assuming that we already have some files on our Windows PC we want to copy to over, we're going to need to install and configure Samba to make the pool accessible in Windows.

Step Five: Setting Up Samba/Sharing

Samba is what's going to let us share the zpool with Windows and allow us to write to it from our Windows machine. First let's install Samba with the following commands:

sudo apt-get update

then

sudo apt-get install samba

Next create a password for Samba.

sudo smbpasswd -a "yourlinuxusername"

It will then prompt you to create a password. Just reuse your Ubuntu user password for simplicity's sake.

Note: if you're using just a single external drive replace the zpool location in the following commands with wherever it is your external drive is mounted, for more information see this guide on mounting an external drive in Ubuntu.

After you've created a password we're going to create a shareable folder in our pool with this command

mkdir /"zpoolname"/"foldername"

Now we're going to open the smb.conf file and make that folder shareable. Enter the following command.

sudo nano /etc/samba/smb.conf

This will open the .conf file in nano, the terminal text editor program. Now at the end of smb.conf add the following entry:

["foldername"]
path = /"zpoolname"/"foldername"
available = yes
valid users = "yourlinuxusername"
read only = no
writable = yes
browseable = yes
guest ok = no

Ensure that there are no line breaks between the lines and that there's a space on both sides of the equals sign. Our next step is to allow Samba traffic through the firewall:

sudo ufw allow samba

Finally restart the Samba service:

sudo systemctl restart smbd

At this point we'll be able to access the pool, browse its contents, and read and write to it from Windows. But there's one more thing left to do. Windows doesn't natively support the ZFS file system and will read the used/available/total space in the pool incorrectly. Windows will read available space as total drive space, and all used space as null. This leads to Windows only displaying a dwindling amount of "available" space as the drives are filled. We can fix this! Functionally this doesn't actually matter, we can still write and read to and from the disk, it just makes it difficult to tell at a glance the proportion of used/available space, so this is an optional step but one I recommend (this step is also unnecessary if you're just using a single external drive). What we're going to do is write a little shell script in bash. Open nano in the terminal with the command:

nano

Now insert the following code:

#!/bin/bash
CUR_PATH=`pwd`
ZFS_CHECK_OUTPUT=$(zfs get type $CUR_PATH 2>&1 > /dev/null) > /dev/null
if [[ $ZFS_CHECK_OUTPUT == *not\ a\ ZFS* ]]
then
    IS_ZFS=false
else
    IS_ZFS=true
fi
if [[ $IS_ZFS = false ]]
then
    df $CUR_PATH | tail -1 | awk '{print $2" "$4}'
else
    USED=$((`zfs get -o value -Hp used $CUR_PATH` / 1024)) > /dev/null
    AVAIL=$((`zfs get -o value -Hp available $CUR_PATH` / 1024)) > /dev/null
    TOTAL=$(($USED+$AVAIL)) > /dev/null
    echo $TOTAL $AVAIL
fi
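To see what the ZFS branch of that script is doing without needing a pool, here is a small standalone sketch of the same arithmetic. Samba's dfree command expects two numbers, total and available space, counted in 1024-byte blocks by default, so the byte counts reported by zfs get -Hp are divided by 1024 and used plus available becomes the total. The function name dfree_line and the byte counts are made up for illustration.

```shell
#!/bin/bash
# Convert byte counts (as printed by `zfs get -Hp`) into the
# "total available" pair, in 1K blocks, that the dfree script echoes.
dfree_line() {
    local used_bytes=$1 avail_bytes=$2
    local used=$(( used_bytes / 1024 ))
    local avail=$(( avail_bytes / 1024 ))
    echo "$(( used + avail )) $avail"
}

# Made-up example: 2 TiB used, 6 TiB available.
dfree_line 2199023255552 6597069766656
```

With those inputs the function prints 8589934592 6442450944, which is 8 TiB total and 6 TiB available expressed in 1K blocks.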

Save the script as "dfree.sh" to /home/"yourlinuxusername" then change the permissions of the file to make it executable with this command:

sudo chmod 774 dfree.sh

Now open smb.conf with sudo again:

sudo nano /etc/samba/smb.conf

Now add this entry to the top of the configuration file to direct Samba to use the results of our script when Windows asks for a reading on the pool's used/available/total drive space:

[global]

dfree command = /home/"yourlinuxusername"/dfree.sh

Save the changes to smb.conf and then restart Samba again with the terminal:

sudo systemctl restart smbd

Now there’s one more thing we need to do to fully set up the Samba share, and that’s to modify a hidden group permission. In the terminal window type the following command:

sudo usermod -a -G sambashare "yourlinuxusername"

Then restart samba again:

sudo systemctl restart smbd

If we don’t do this last step, everything will appear to work fine, and you will even be able to see and map the drive from Windows and even begin transferring files, but you'd soon run into a lot of frustration, as every ten minutes or so a file would fail to transfer and you would get a window announcing “0x8007003B Unexpected Network Error”. This window would require your manual input to continue the transfer with the file next in the queue. And at the end it would reattempt to transfer whichever files failed the first time around. 99% of the time they’ll go through on that second try, but this is still all a major pain in the ass, especially if you’ve got a lot of data to transfer or you want to step away from the computer for a while.

It turns out samba can act a little weirdly with the higher read/write speeds of RAIDz arrays and transfers from Windows, and will intermittently crash and restart itself if this group option isn’t changed. Inputting the above command will prevent you from ever seeing that window.
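To confirm the group change took effect, you can look for `sambashare` in the list of groups that `id -nG` reports for your user. This is only a sketch. `group_list_contains` is a hypothetical helper name, and the group list is passed in as text so the check itself stays simple.

```shell
# group_list_contains is a hypothetical helper: it succeeds if the
# space-separated group list in $1 contains the group named in $2.
group_list_contains() (
  set -f
  for g in $1; do
    [ "$g" = "$2" ] && exit 0
  done
  exit 1
)

# Example (username placeholder as used in the guide):
# group_list_contains "$(id -nG yourlinuxusername)" sambashare && echo "in sambashare"
```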

The last thing we're going to do before switching over to our Windows PC is grab the IP address of our Linux machine. Enter the following command:

hostname -I

This will spit out this computer's IP address on the local network (it will look something like 192.168.0.x). Write it down. Once you're done here, it might be a good idea to go into your router settings and reserve that IP for your Linux system in the DHCP settings. Check the manual for your specific router model on how to access its settings. Typically they can be reached by opening a browser and typing http://192.168.0.1 in the address bar, but your router may be different.
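On machines with several interfaces or IPv6 enabled, `hostname -I` can print more than one address on the same line. The sketch below picks out the first IPv4-looking entry. `first_ipv4` is a hypothetical helper, and its pattern match is deliberately loose (four dot-separated parts, no colon), not a full address validator.

```shell
# first_ipv4 is a hypothetical helper: print the first IPv4-looking
# address from a space-separated list such as the output of `hostname -I`.
first_ipv4() (
  set -f
  for addr in $1; do
    case "$addr" in
      *:*) continue ;;                  # skip IPv6 addresses
      *.*.*.*) echo "$addr"; exit 0 ;;  # first dotted address wins
    esac
  done
  exit 1
)

# Example:
# first_ipv4 "$(hostname -I)"
```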

Okay we’re done with our Linux computer for now. Get on over to your Windows PC, open File Explorer, right click on Network and click "Map network drive". Select Z: as the drive letter (you don't want to map the network drive to a letter you could conceivably be using for other purposes) and enter the IP of your Linux machine and location of the share like so: \\"LINUXCOMPUTERLOCALIPADDRESSGOESHERE"\"zpoolnamegoeshere"\. Windows will then ask you for your username and password, enter the ones you set earlier in Samba and you're good. If you've done everything right it should look something like this:

You can now start moving media over from Windows to the share folder. It's a good idea to have a hard line running to all machines. Moving files over Wi-Fi is going to be torturously slow; the only thing that’s going to make the transfer time tolerable (hours instead of days) is a solid wired connection between both machines and your router.

Step Six: Setting Up Remote Desktop Access to Your Server

After the server is up and going, you’ll want to be able to access it remotely from Windows. Barring serious maintenance/updates, this is how you'll access it most of the time. On your Linux system open the terminal and enter:

sudo apt install xrdp

Then:

sudo systemctl enable xrdp

Once it's finished installing, open “Settings” on the sidebar and turn off "automatic login" in the User category. Then log out of your account. Attempting to remotely connect to your Linux computer while you’re logged in will result in a black screen!

Now get back on your Windows PC, open search and look for "RDP". A program called "Remote Desktop Connection" should pop up, open this program as an administrator by right-clicking and selecting “run as an administrator”. You’ll be greeted with a window. In the field marked “Computer” type in the IP address of your Linux computer. Press connect and you'll be greeted with a new window and prompt asking for your username and password. Enter your Ubuntu username and password here.

If everything went right, you’ll be logged into your Linux computer. If the performance is sluggish, adjust the display options. Lowering the resolution and colour depth does a lot to make the interface feel snappier.

Remote access is how we're going to be using our Linux system from now on, barring edge cases like needing to get into the BIOS or upgrading to a new version of Ubuntu. Everything else, from performing maintenance like a monthly zpool scrub to checking zpool status and updating software, can be done remotely.
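As a rough sketch of what that routine maintenance can look like over a remote session: `zpool status -x` prints the single line `all pools are healthy` when nothing needs attention, so a small check can compare against that text. `pools_healthy` is a hypothetical helper, and the pool name is the same placeholder used earlier in this guide.

```shell
# pools_healthy is a hypothetical helper: it succeeds only when the text
# passed in is exactly the "nothing wrong" message from `zpool status -x`.
pools_healthy() {
  [ "$1" = "all pools are healthy" ]
}

# Example maintenance session (pool name is a placeholder):
# sudo zpool scrub "zpoolnamegoeshere"
# pools_healthy "$(zpool status -x)" || zpool status
```

A scrub on a large pool can run for hours, so checking the status again later is normal.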

This is how my server lives its life now, happily humming and chirping away on the floor next to the couch in a corner of the living room.

Step Seven: Plex Media Server/Jellyfin

Okay we’ve got all the groundwork finished and our server is almost ready. We’ve got Ubuntu up and running, our storage array is primed, we’ve set up remote connections and sharing, and maybe we’ve moved over some of our favourite movies and TV shows.

Now we need to decide on the media server software to use which will stream our media to us and organize our library. For most people I’d recommend Plex. It just works 99% of the time. That said, Jellyfin has a lot to recommend it too, even if it is rougher around the edges. Some people run both simultaneously; it’s not that big of an extra strain. I do recommend doing a little bit of your own research into the features each platform offers, but as a quick run down, consider some of the following points:

Plex is closed source and is funded through PlexPass purchases while Jellyfin is open source and entirely user driven. This means a number of things. For one, Plex requires you to purchase a “PlexPass” (either a one-time lifetime fee of $159.99 CDN/$120 USD or a monthly or yearly subscription) to access certain features, like hardware transcoding (and we want hardware transcoding) or automated intro/credits detection and skipping, while Jellyfin offers some of these features for free through plugins. Plex supports a lot more devices than Jellyfin and updates more frequently. That said, Jellyfin's Android and iOS apps are completely free, while the Plex Android and iOS apps must be activated for a one-time cost of $6 CDN/$5 USD. But that $6 fee gets you a mobile app that is much more functional and features a unified UI across platforms; the Plex mobile apps are simply a more polished experience. The Jellyfin apps are a bit of a mess and the iOS and Android versions are very different from each other.

Jellyfin’s actual media player is more fully featured than Plex's, but on the other hand Jellyfin's UI, library customization and automatic media tagging really pale in comparison to Plex. Streaming your music library is free through both Jellyfin and Plex, but Plex offers the PlexAmp app for dedicated music streaming which boasts a number of fantastic features. Unfortunately, some of those fantastic features require a PlexPass. If your internet is down, Jellyfin can still do local streaming, while Plex can fail to play files unless you've got it set up a certain way. Jellyfin has a slew of neat niche features like support for Comic Book libraries with the .cbz/.cbt file types, but then Plex offers some free ad-supported TV and films; they even have a free channel that plays nothing but Classic Doctor Who.

Ultimately it's up to you, I settled on Plex because although some features are pay-walled, it just works. It's more reliable and easier to use, and a one-time fee is much easier to swallow than a subscription. I had a pretty easy time getting my boomer parents and tech illiterate brother introduced to and using Plex and I don't know if I would've had as easy a time doing that with Jellyfin. I do also need to mention that Jellyfin does take a little extra bit of tinkering to get going in Ubuntu, you’ll have to set up process permissions, so if you're more tolerant to tinkering, Jellyfin might be up your alley and I’ll trust that you can follow their installation and configuration guide. For everyone else, I recommend Plex.

So pick your poison: Plex or Jellyfin.

Note: The easiest way to download and install either of these packages in Ubuntu is through Snap Store.

After you've installed one (or both), opening either app will launch a browser window into the browser version of the app allowing you to set all the options server side.

The process of creating media libraries is essentially the same in both Plex and Jellyfin. You create separate libraries for Television, Movies, and Music and add the folders which contain the respective types of media to their respective libraries. The only difficult or time consuming aspect is ensuring that your files and folders follow the appropriate naming conventions:

Plex naming guide for Movies

Plex naming guide for Television

Jellyfin follows the same naming rules but I find their media scanner to be a lot less accurate and forgiving than Plex's. Once you've selected the folders to be scanned the service will scan your files, tagging everything and adding metadata. Although I do find Plex more accurate, it can still erroneously tag some things and you might have to manually clean up some tags in a large library. (When I initially created my library it tagged the 1963-1989 Doctor Who as some Korean soap opera and I needed to manually select the correct match, after which everything was tagged normally.) It can also be a bit testy with anime (especially OVAs), so be sure to check TVDB to ensure that you have your files and folders structured and named correctly. If something is not showing up at all, double check the name.
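If you have a lot of files to rename, a quick pattern check can flag names that are likely to confuse the scanner. The sketch below assumes the commonly documented layout of `Movie Title (Year).ext` for films and `Show Name - s01e01.ext` for episodes. Both patterns and both helper names are my own simplification, so treat the naming guides linked above as the authority.

```shell
# Hypothetical helpers: loose checks against the assumed naming patterns.

# Movie: "Title (1979).mkv" -> title, space, four-digit year in parentheses.
is_movie_name() {
  printf '%s\n' "$1" | grep -Eq '^.+ \([0-9]{4}\)\.[A-Za-z0-9]+$'
}

# Episode: "Show - s01e01.mkv" or "Show - s01e01 - Episode Title.mkv".
is_episode_name() {
  printf '%s\n' "$1" | grep -Eiq '^.+ - s[0-9]{2,}e[0-9]{2,}( - .+)?\.[A-Za-z0-9]+$'
}

# Example: list movie files in the current folder that do not match.
# for f in *; do [ -f "$f" ] && ! is_movie_name "$f" && echo "check: $f"; done
```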

Once that's done, organizing and customizing your library is easy. You can set up collections, grouping items together to fit a theme or collect together all the entries in a franchise. You can make playlists, and add custom artwork to entries. It's fun setting up collections with posters to match, and there are even several websites dedicated to helping you do this, like PosterDB. As an example, below are two collections in my library: one collects all the entries in a franchise, the other follows a theme.

My Star Trek collection, featuring all eleven television series, and thirteen films.

My Best of the Worst collection, featuring sixty-nine films previously showcased on RedLetterMedia’s Best of the Worst. They’re all absolutely terrible and I love them.

As for settings, ensure you've got Remote Access going (it should work automatically) and be sure to set your upload speed after running a speed test. In the library settings set the database cache to 2000MB to ensure a snappier and more responsive browsing experience, and then check that playback quality is set to original/maximum. If you’re severely bandwidth limited on your upload and have remote users, you might want to limit the remote stream bitrate to something more reasonable. As a point of comparison, Netflix’s 1080p bitrate is approximately 5Mbps, although almost anyone watching through a Chromium-based browser is streaming at 720p and 3Mbps. Other than that you should be good to go. For actually playing your files, there's a Plex app for just about every platform imaginable. I mostly watch television and films on my laptop using the Windows Plex app, but I also use the Android app which can broadcast to the Chromecast connected to the TV in the office and the Android TV app for our smart TV. Both are fully functional and easy to navigate, and I can also attest to the OS X version being equally functional.
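To pick a remote bitrate cap, it helps to work out how many simultaneous streams your upload can carry. The sketch below is plain integer division and ignores overhead and other traffic on your connection. `max_remote_streams` is a hypothetical helper, and the 20 Mbps upload figure in the example is made up for illustration.

```shell
# max_remote_streams is a hypothetical helper: print how many streams of a
# given bitrate fit into an upload speed. Both values are in kbps.
max_remote_streams() {
  # Refuse a zero or negative per-stream bitrate to avoid dividing by zero.
  [ "$2" -gt 0 ] || return 1
  echo $(( $1 / $2 ))
}

# Example: a 20 Mbps upload with streams capped at 5 Mbps.
# max_remote_streams 20000 5000
```

With those example numbers the result is 4 streams. Capping at 3 Mbps instead would leave room for 6.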

Part Eight: Finding Media

Now, this is not really a piracy tutorial, there are plenty of those out there. But if you’re unaware, BitTorrent is free and pretty easy to use, just pick a client (qBittorrent is the best) and go find some public trackers to peruse. Just know now that all the best trackers are private and invite only, and that they can be exceptionally difficult to get into. I’m already on a few, and even then, some of the best ones are wholly out of my reach.

If you decide to take the left hand path and turn to Usenet you’ll have to pay. First you’ll need to sign up with a provider like Newshosting or EasyNews for access to Usenet itself, and then to actually find anything you’re going to need to sign up with an indexer like NZBGeek or NZBFinder. There are dozens of indexers, and many people cross post between them, but for more obscure media it’s worth checking multiple. You’ll also need a binary downloader like SABnzbd. That caveat aside, Usenet is faster, bigger, older, less traceable than BitTorrent, and altogether slicker. I honestly prefer it, and I'm kicking myself for taking this long to start using it because I was scared off by the price. I’ve found so many things on Usenet that I had sought in vain elsewhere for years, like a 2010 Italian film about a massacre perpetrated by the SS that played the festival circuit but never received a home media release; some absolute hero uploaded a rip of a festival screener DVD to Usenet. Anyway, figure out the rest of this shit on your own and remember to use protection, get yourself behind a VPN, use a SOCKS5 proxy with your BitTorrent client, etc.

On the legal side of things, if you’re around my age, you (or your family) probably have a big pile of DVDs and Blu-Rays sitting around unwatched and half forgotten. Why not do a bit of amateur media preservation, rip them and upload them to your server for easier access? (Your tools for this are going to be Handbrake to do the ripping and AnyDVD to break any encryption.) I went to the trouble of ripping all my SCTV DVDs (five box sets worth) because none of it is on streaming nor could it be found on any pirate source I tried. I’m glad I did, forty years on it’s still one of the funniest shows to ever be on TV.

Part Nine/Epilogue: Sonarr/Radarr/Lidarr and Overseerr

There are a lot of ways to automate your server for better functionality or to add features you and other users might find useful. Sonarr, Radarr, and Lidarr are a part of a suite of “Servarr” services (there’s also Readarr for books and Whisparr for adult content) that allow you to automate the collection of new episodes of TV shows (Sonarr), new movie releases (Radarr) and music releases (Lidarr). They hook in to your BitTorrent client or Usenet binary newsgroup downloader and crawl your preferred Torrent trackers and Usenet indexers, alerting you to new releases and automatically grabbing them. You can also use these services to manually search for new media, and even replace/upgrade your existing media with better quality uploads. They’re really a little tricky to set up on a bare metal Ubuntu install (ideally you should be running them in Docker Containers), and I won’t be providing a step by step on installing and running them, I’m simply making you aware of their existence.

The other bit of kit I want to make you aware of is Overseerr which is a program that scans your Plex media library and will serve recommendations based on what you like. It also allows you and your users to request specific media. It can even be integrated with Sonarr/Radarr/Lidarr so that fulfilling those requests is fully automated.

And you're done. It really wasn't all that hard. Enjoy your media. Enjoy the control you have over that media. And be safe in the knowledge that no hedgefund CEO motherfucker who hates the movies but who is somehow in control of a major studio will be able to disappear anything in your library as a tax write-off.


Note

I am willing to give you or anyone else on tumblr the skills and advice that helped me get my dream job

the idea of working for TEK a few months ago would just be a fantasy

my background in education is English. I learned what I know now on my own and only by random chance.

This is why I am so critical of the linux community on tumblr.

They're tagging themselves as -official when they can't provide casual end user support.

They're entirely too horny to be in this sphere. Computers and linux should not be about how much you want to fuck/be fucked by X

it will deter end users

This is very cool that you will help other tumblr users with this stuff; i may actually take you up on this at some point :3

(my tone here is /g, /pos, /nm, /lh)

I do, however, kind of disagree with the other points. I think that for any other social media it's correct, twt or fb does not have the culture to make these sorts of parody accounts viable or not-counter-productive to increasing the linux market share. But I don't think that tumblr is the same.

I think that tumblr does. I think the tumblr community has always been this somewhat ephemeral yet perpetual inside joke culture where almost every user is in-the-know, and new users to the joke are generally able to catch on quickly to it due to their general understanding of the way tumblr communities operate.

IMO, it's a somewhat quick pipeline of:

\> find first "x-official" blog -> assume it's real -> see them horny posting about xenia -> infer that RH corporate would probably not approve of such a blog

I can appreciate that it might be intimidating to seek out help as a new linux user, and especially a new linux & tumblr user, but looking through these blogs, you do see them helping out people ^^. heck, my last post was helping someone getting wayland working on an nvidia system.

The main goal of these blogs is not to be a legitimate CS service to general end-users. they aren't affiliated with the software their blog is named after, so in many cases they *can't*. The goal is instead to foster a community around linux, creating a general network of blogs of the various FOSS projects that they enjoy.

I think that final sentiment, of these blogs deterring end users, is most likely counter to their actual effect on end users who are considering switching to linux.

We all know a lot of tumblr is 20 or 30 something year olds who have just stuck around since ~2012ish, and new users to tumblr join with pre-existing knowledge of the culture and platform. Almost anyone coming across these blogs is going to be someone who can see the "in" joke, and acclimate. I do highly doubt that a random facebook mom whose son convinced her to install mint on her old laptop would find tumblr, find a -official blog, scroll through said blog, and be deterred from using mint.

The other side of this is that any tumblr users who come across these blogs, be it with an inkling of desire to switch to linux or not, will see a vibrant and active community that fits very well into the tumblr community. They remember, or have heard of, the Amtrak & OSHA blogs, and are therefore probably aware that this is a pre-existing meme on here.

In all likelihood, this will probably further incentivize them to make the switch, as they would be more attracted to a community of their peers over a community of redditors telling them to read the arch wiki repeatedly

I can, on the other hand, definitely see that people who have difficulties with parsing tone, and especially sarcasm, would have trouble with this. TBH, I have these difficulties (hence when I was speaking to you yesterday I used the /unjerk indicator, as I couldn't tell what the tone of the conversation was), and so it took me a little while of being in this weird "I'm 99% sure these *aren't* official, but what if?". I have been there for I think that maybe being more transparent with the fact that the blogs are parodies is probably important. I'm guilty of this, and after i post this, i'll add it to my bio.

#i use arch btw#they should switch to xenia#tux is so mid#penguins of madagascar was better#linuxposting#linux#distros#ask#mipseb


Note

Please tell us how to get into IT without a degree! I have an interview for a small tech company this week and I’m going in as admin but as things expand I can bootstrap into a better role and I’d really appreciate knowing what skills are likely to be crucial for making that pivot.

Absolutely!! You'd be in a great position to switch to IT, since as an admin, you'd already have some familiarity with the systems and with the workplace in general. Moving between roles is easier in a smaller workplace, too.

So, this is a semi-brief guide to getting an entry-level position, for someone with zero IT experience. That position is almost always going to be help desk. You've probably heard a lot of shit about help desk, but I've always enjoyed it.

So, here we go! How to get into IT for beginners!

The most important thing on your resume will be

✨~🌟Certifications!!🌟~✨

Studying for certs can teach you a lot, especially if you're entirely new to the field. But they're also really important for getting interviews. Lots of jobs will require a cert or degree, and even if you have 5 years of experience doing exactly what the job description is, without one of those the ATS will shunt your resume into a black hole and neither HR nor the IT manager will see it.

First, I recommend getting the CompTIA A+. This will teach you the basics of how the parts of a computer work together - hardware, software, how networking works, how operating systems work, troubleshooting skills, etc. If you don't have a specific area of IT you're interested in, this is REQUIRED. Even if you do, I suggest you get this cert just to get your foot in the door.

I recommend the CompTIA certs in general. They'll give you a good baseline and look good on your resume. I only got the A+ and the Network+, so can't speak for the other exams, but they weren't too tough.

If you're more into development or cybersecurity, check out these roadmaps. You'll still benefit from working help desk while pursuing one of those career paths.

The next most important thing is

🔥🔥Customer service & soft skills🔥🔥

Sorry about that.

I was hired for my first ever IT role on the strength of my interview. I definitely wasn't the only candidate with an A+, but I was the only one who knew how to handle customers (aka end-users). Which is, basically, be polite, make the end-user feel listened to, and don't make them feel stupid. It is ASTOUNDING how many IT people can't do that. I've worked with so many IT people who couldn't hide their scorn or impatience when dealing with non-tech-savvy coworkers.

Please note that you don't need to be a social butterfly or even that socially adept. I'm autistic and learned all my social skills by rote (I literally have flowcharts for social interactions), and I was still exceptional by IT standards.

Third thing, which is more for you than for your resume (although it helps):

🎇Do your own projects🎇

This is both the most and least important thing you can do for your IT career. Least important because this will have the smallest impact on your resume. Most important because this will help you learn (and figure out if IT is actually what you want to do).

The certs and interview might get you a job, but when it comes to doing your job well, hands-on experience is absolutely essential. Here are a few ideas for the complete beginner. Resources linked at the bottom.

Start using the command line. This is called Terminal on Mac and Linux. Use it for things as simple as navigating through file directories, opening apps, testing your connection, that kind of thing. The goal is to get used to using the command line, because you will use it professionally.

Build your own PC. This may sound really intimidating, but I swear it's easy! This is going to be cheaper than buying a prebuilt tower or gaming PC, and you'll learn a ton in the bargain.

Repair old PCs. If you don't want to or can't afford to build your own PC, look for cheap computers on Craigslist, secondhand stores, or elsewhere. I know a lot of universities will sell old technology for cheap. Try to buy a few and make a functioning computer out of parts, or just get one so you can feel comfortable working in the guts of a PC.

Learn Powershell or shell scripting. If you're comfortable with the command line already or just want to jump in the deep end, use scripts to automate tasks on your PC. I found this harder to do for myself than for work, because I mostly use my computer for web browsing. However, there are tons of projects out there for you to try!

Play around with a Raspberry Pi. These are mini-computers ranging from $15-$150+ and are great to experiment with. I've made a media server and a Pi hole (network-wide ad blocking) which were both fun and not too tough. If you're into torrenting, try making a seedbox!

Install Linux on your primary computer. I know, I know - I'm one of those people. But seriously, nothing will teach you more quickly than having to compile drivers through the command line so your Bluetooth headphones will work. Warning: this gets really annoying if you just want your computer to work. Dual-booting is advised.

If this sounds intimidating, that's totally normal. It is intimidating! You're going to have to do a ton of troubleshooting and things will almost never work properly on your first few projects. That is part of the fun!
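As a first scripting project of the kind described above, here is a small sketch that tidies a folder by moving each file into a subfolder named after its extension. `sort_by_extension` is a name I made up for the example, and the Downloads path in the usage line is only an illustration. Try it on a scratch folder first.

```shell
# sort_by_extension is a hypothetical helper: move every regular file in the
# given folder into a subfolder named after the file's extension.
sort_by_extension() (
  cd "$1" || exit 1
  for f in *.*; do
    # Skip directories and the unexpanded pattern when nothing matches.
    [ -f "$f" ] || continue
    ext=${f##*.}                      # text after the last dot
    mkdir -p "$ext" && mv -- "$f" "$ext/"
  done
)

# Example (path is only an illustration):
# sort_by_extension "$HOME/Downloads"
```

Files without a dot in their name, and hidden files, are left where they are.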

Resources

Resources I've tried and liked are marked with an asterisk*

Professor Messer's Free A+ Training Course*

PC Building Simulator 2 (video game)

How to build a PC (video)

PC Part Picker (website)*

CompTIA A+ courses on Udemy

50 Basic Windows Commands with Examples*

Mac Terminal Commands Cheat Sheet

Powershell in a Month of Lunches (video series)

Getting Started with Linux (tutorial)* Note: this site is my favorite Linux resource, I highly recommend it.

Getting Started with Raspberry Pi

Raspberry Pi Projects for Beginners

/r/ITCareerQuestions*

Ask A Manager (advice blog on workplace etiquette and more)*

Reddit is helpful for tech questions in general. I have some other resources that involve sailing the seas; feel free to DM me or send an ask I can answer privately.

Tips

DO NOT work at an MSP. That stands for Managed Service Provider, and it's basically an IT department which companies contract to provide tech services. I recommend staying away from them. It's way better to work in an IT department where the end users are your coworkers, not your customers.

DO NOT trust remote entry-level IT jobs. At entry level, part of your job is schlepping around hardware and fixing PCs. A fully-remote position will almost definitely be a call center.

DO write a cover letter. YMMV on this, but every employer I've had has mentioned my cover letter as a reason to hire me.

DO ask your employer to pay for your certs. This applies only to people who either plan to move into IT in the same company, or are already in IT but want more certs.

DO NOT work anywhere without at least one woman in the department. My litmus test is two women, actually, but YMMV. If there is no woman in the department in 2024, and the department is more than 5 people, there is a reason why no women work there.

DO have patience with yourself and keep an open mind! Maybe this is just me, but if I can't do something right the first time, or if I don't love it right away, I get very discouraged. Remember that making mistakes is part of the process, and that IT is a huge field which ranges from UX design to hardware repair. There are tons of directions to go once you've got a little experience!

Disclaimer: this is based on my experience in my area of the US. Things may be different elsewhere, esp. outside of the US.

I hope this is helpful! Let me know if you have more questions!


Text

AnarSec: Tech Guides for Anarchists

AnarSec is a new resource designed to help anarchists navigate the hostile terrain of technology — defensive guides for digital security and anonymity, as well as offensive guides for hacking. All guides are available in booklet format for printing and will be kept up to date.

As anarchists, we must defend ourselves against police and intelligence agencies that conduct targeted digital surveillance for the purposes of incrimination and network mapping. With the defensive series, our goal is to obscure the State’s visibility into our lives and projects. Our recommendations are intended for all anarchists, and they are accompanied by guides to put the advice into practice.

With the upcoming offensive series, we hope to contribute to the practice of hacking the State and capital. Astute readers may notice that the art featured on our homepage and booklets is taken from communiqués detailing how anarchists robbed a bank (Phineas Fisher) and destroyed police servers (AntiSec) using only a keyboard.

The defensive series currently includes:

Tails

Tails for Anarchists

Tails Best Practices

Qubes OS

Qubes OS for Anarchists

Phones

Kill the Cop in Your Pocket

GrapheneOS for Anarchists

General

Linux Essentials

Remove Identifying Metadata From Files

Encrypted Messaging for Anarchists

Make Your Electronics Tamper-Evident


Note

Hello The Yower Is Pouth As you are the tumblr uncle, i come to you for advice; I am trying to program something new. Something fresh. My 3ds has Linux installed on it, however, it does not have networking capabilities, as there are no drivers for the network interface card on the 3ds. I ask of you, do you know of any way to start learning about how developing drivers works? It would be very slay.

Phoning a friend on this problem again for actual professional opinion lol (she loves this shit)

@just-my-insufferable-existance

My two cents is: It's a super fun project to mod a 3ds, but I'd probably recommend starting with older devices if you haven't already because they've made it harder and harder in the past 5-10 years to disconnect the hardware from the stupid corporate software they want you to have. Never tried 3ds so I could be wrong, I'm just bitter about capitalism ruining the ability to repair and mod new shit

Bennets Link:


Text

Next step: discovered hostnames.

Avahi is called "zeroconf" but it's always taken me hours to get anything out of it...


Text

How to install and configure sudo on Debian Linux

The minimal version of Debian Linux 12/11 does not install sudo. When performing a network installation of Debian, the usual approach is to use the minimal version, which only installs the essential packages. Most Linux container images based upon Debian also skip sudo, so if your project needs sudo, read on to learn how to install and configure sudo and grant access to a user on Debian Linux 12/11.
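The full walkthrough is not reproduced in this post, so the following is only a sketch of the usual approach: install the `sudo` package as root, then add the user to Debian's `sudo` group, which the default sudoers file grants access to. The `run` and `setup_sudo` helpers and the username `alice` are hypothetical. By default the sketch only prints the commands, and it executes them only when `DRY_RUN=0` is set.

```shell
# run is a hypothetical helper: print a command, and execute it only when
# DRY_RUN is set to something other than 1 (the default is a dry run).
run() {
  echo "+ $*"
  [ "${DRY_RUN:-1}" = 1 ] || "$@"
}

# setup_sudo is a hypothetical helper: install sudo and add the given user
# to the sudo group. Must be run as root when DRY_RUN=0.
setup_sudo() {
  run apt-get update &&
  run apt-get install -y sudo &&
  run usermod -aG sudo "$1"
}

# Example (prints the three commands without running them):
# setup_sudo alice
```

The user needs to log out and back in before the new group membership applies to their session.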


Text

Breaking into Tech: How Linux Skills Can Launch Your Career in 2025

In today's rapidly evolving tech landscape, Linux skills have become increasingly valuable for professionals looking to transition into rewarding IT careers. As we move through 2025, the demand for Linux System Administrators continues to grow across industries, creating excellent opportunities for career changers—even those without traditional technical backgrounds.

Why Linux Skills Are in High Demand

Linux powers much of the world's technology infrastructure. From enterprise servers to cloud computing environments, this open-source operating system has become the backbone of modern IT operations. Organizations need skilled professionals who can:

Deploy and manage enterprise-level IT infrastructure

Ensure system security and stability

Troubleshoot complex technical issues

Implement automation to improve efficiency

The beauty of Linux as a career path is that it's accessible to motivated individuals willing to invest time in learning the necessary skills. Unlike some tech specialties that require years of formal education, Linux administration can be mastered through focused training programs and hands-on experience.

The Path to Becoming a Linux System Administrator

1. Structured Learning

The journey begins with structured learning. Comprehensive training programs that cover Linux fundamentals, system administration, networking, and security provide the knowledge base needed to succeed. The most effective programs:

Teach practical, job-relevant skills

Offer instruction from industry professionals

Pace the learning to allow for deep understanding

Prepare students for respected certifications like Red Hat

2. Certification

Industry certifications validate your skills to potential employers. Red Hat certifications are particularly valuable, demonstrating your ability to work with enterprise Linux environments. These credentials help you stand out in a competitive job market and often lead to higher starting salaries.

3. Hands-On Experience

Theoretical knowledge isn't enough—employers want to see practical experience. Apprenticeship opportunities allow aspiring Linux administrators to:

Apply their skills in real-world scenarios

Build a portfolio of completed projects

Gain confidence in their abilities

Bridge the gap between training and employment

4. Job Search Strategy

With the right skills and experience, the final step is finding that first position. Successful job seekers:

Tailor their resumes to highlight relevant skills

Prepare thoroughly for technical interviews

Network with industry professionals

Target companies that value their newly acquired skills

Time Investment and Commitment

Becoming job-ready as a Linux System Administrator typically requires:

10-15+ hours per week for studying

A commitment to consistent learning over several months

Persistence through challenging technical concepts

A growth mindset and motivation to succeed

The Career Outlook

For those willing to make the investment, the rewards can be substantial. Linux professionals enjoy:

Competitive salaries

Strong job security

Opportunities for remote work

Clear paths for career advancement

Intellectually stimulating work environments

Conclusion

The path to becoming a Linux System Administrator is more accessible than many people realize. With the right training, certification, and hands-on experience, motivated individuals can transition into rewarding tech careers—regardless of their previous background. As we continue through 2025, the demand for these skills shows no signs of slowing down, making now an excellent time to begin this journey.

Text

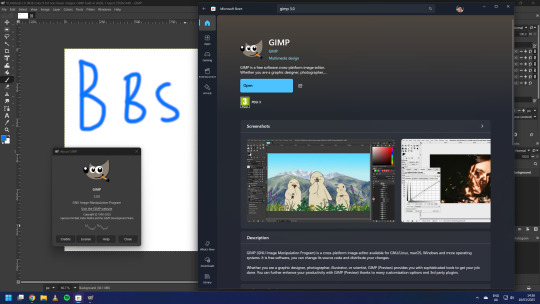

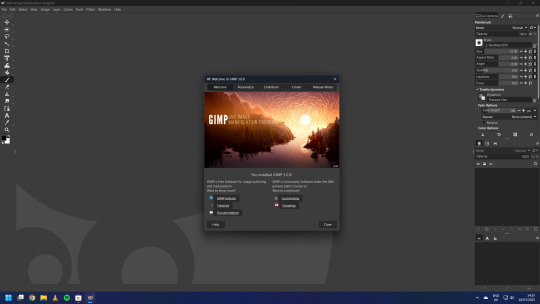

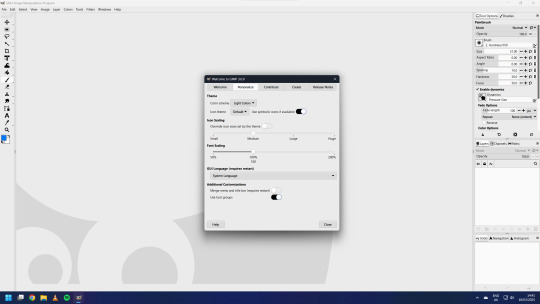

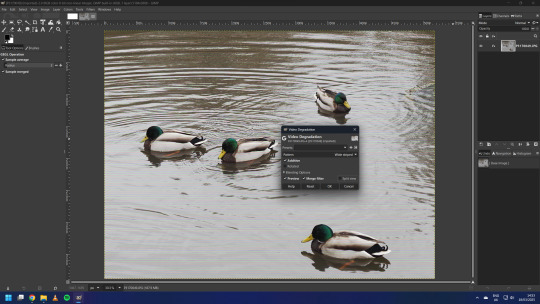

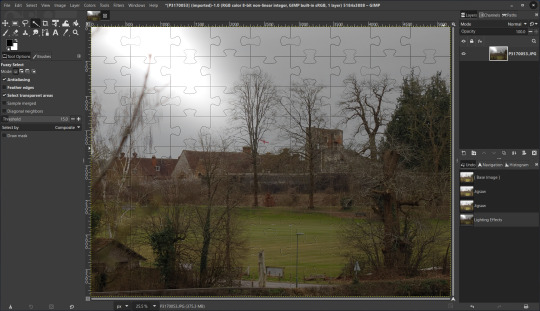

On the 19th of March 2025, I downloaded the latest version of the GNU Image Manipulation Program (GIMP). I downloaded it through the Microsoft Store.

https://www.gimp.org/news/2025/03/16/gimp-3-0-released/

GIMP is an open source image editing application for Linux, Unix, Mac and Windows. The latest version is 3.0, which was released for stable use on the 16th of March.

https://en.wikipedia.org/wiki/GIMP

GIMP 3.0 has been in development for 7 years since the previous major release, 2.10. It is a major milestone for the software. Here are just a few of the notable changes:

A completed GTK3 port of the application and all of the tool sets

Non-destructive editing capability

Better painting and drawing tools

Major HiDPI display scaling improvements

Faster performance with multi-threading

Better support for PSD (Photoshop) files

A new revamped light and dark theme set

Native 'Wayland' display server protocol support on Linux systems.

Native Microsoft Store app with auto updates

Lots and lots of bug fixes

See more here:

https://www.omgubuntu.co.uk/2025/03/gimp-3-0

The update also features the option to merge the title bar and menu bar, which provides a minimalistic header bar style to the user interface on Windows and Linux. (see screenshot below)

The theme and icon set can be easily changed in the settings and feature a brand new set of icons that echo the older style of icons used in older versions of GIMP up to 2.8 (2012).

Read the release notes below:

https://www.gimp.org/release-notes/gimp-3.0.html

3.0 also celebrates 30 years since GIMP was first announced back in 1995! Version 1.0 followed in 1998.

The application features a refreshed logo as part of its release.

I created a quick mock-up of Roger the Ragdoll using the layers panel for each of the graphics.

-

GIMP got its first point release, 3.0.2, about a week after 3.0 became available. This version auto-updated on the 25th of March.

Underneath is a small project I did involving layers in GIMP. The Beano comic characters included are:

Derek the Sheep by Gary Northfield.

The Numskulls Brainy by Barry Glennard.

Dennis the Menace & Gnasher by Nigel Parkinson.

Colin the Vet by Duncan Scott.

Little Plum by Hunt Emerson.

A gradient effect was added behind the characters.

Each image is shown in the tabs above. I exported the image as a portable network graphic (PNG) afterwards.

This release makes GIMP feel more like a great open source alternative to Photoshop. For many users there is still a steep learning curve; however, the overall experience feels worth it after 7 years!

Text

Linux Life Episode 86

Hello everyone, welcome back to my Linux Life blog. I admit it has been a while since I wrote anything here. I have continued to use EndeavourOS on my Ryzen 7 Dell laptop. If any major incidents had come up I would have made an entry.

However nothing really exciting has transpired. I update daily and, OK, I have had a few minor issues, but nothing that couldn't be sorted easily, so it was not worth typing up a full blog entry just for running a yay command which sorted things out.

However, it's March, which some YouTubers and content creators have been running with the hashtag #Marchintosh, in which they look at old Mac stuff.

So I decided to run some older versions of Mac OS using VMware Workstation, which is now free on Windows and Linux (the Mac equivalent, VMware Fusion, is free too).

For those not up on virtual machine technology: basically the computer creates a sandboxed container which pretends to be a certain machine, so you can run things like Linux and MacOS in a software-created environment.

VMware Workstation and Oracle VirtualBox are Type 2 hypervisors, as they are known. They run on top of your normal operating system and create the whole environment using software machines which you can configure. All drivers are software based.

Microsoft Hyper-V, Xen and others such as KVM (usually paired with QEMU) are Type 1 hypervisors. As well as providing the various environments with software drivers, some can use what they call "bare metal" access, which means the VM can see and use your actual GPU, so you can take advantage of video acceleration. They can also give bare metal access to keyboards and mice. These take a lot more setup but work slightly quicker than Type 2 once they are done.

Full emulators like QEMU may also give access to different CPU types such as SPARC and PowerPC, so you can run alternative OSes like Solaris, IRIX and others.

Right, now I have explained that, back to the #Marchintosh project. I was using VMware Workstation and I decided to install 2 versions of Mac OS.

First I installed macOS Catalina (10.15). Luckily a lot of the leg work had been taken out for me, as someone had already created a VMDK file (aka virtual hard drive) of Catalina with AMD drivers to download. Google is your friend; I am not putting up links.

So first you have to unlock VMware, as by default the Windows and Linux versions don't list Mac OS. You do this by downloading a VMware unlocker and then running it. It will patch various files to allow it to run MacOS.

So upon creating the VM and selecting Mac OS 10.15 from the options, you first choose to install the OS later, and then when it asks for a hard disk, point it towards the Catalina AMD VMDK previously downloaded (keep existing format). I set CPUs to 2 and cores to 4, as my machine can handle it. Set memory to 8GB, set networking to NAT and leave everything else as standard. Select Finish.

Now, before powering on the VM, as I have an AMD Ryzen system I had to edit the VM's VMX file using a text editor.

cpuid.0.eax = "0000:0000:0000:0000:0000:0000:0000:1011"

cpuid.0.ebx = "0111:0101:0110:1110:0110:0101:0100:0111"

cpuid.0.ecx = "0110:1100:0110:0101:0111:0100:0110:1110"

cpuid.0.edx = "0100:1001:0110:0101:0110:1110:0110:1001"

cpuid.1.eax = "0000:0000:0000:0001:0000:0110:0111:0001"

cpuid.1.ebx = "0000:0010:0000:0001:0000:1000:0000:0000"

cpuid.1.ecx = "1000:0010:1001:1000:0010:0010:0000:0011"

cpuid.1.edx = "0000:0111:1000:1011:1111:1011:1111:1111"

smbios.reflectHost = "TRUE"

hw.model = "iMac19,1"

board-id = "Mac-AA95B1DDAB278B95"

This is to stop the VM from locking up, as Mac OS expects an Intel CPU setup and will freeze on AMD. These lines prevent that by making the VM report an Intel CPU and think it's an iMac19,1 in this case.
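For the curious, the cpuid.0 register strings are not random. The rough Python sketch below (the helper names are made up for illustration, nothing here comes from VMware) decodes the three text registers. CPUID leaf 0 returns the vendor string in EBX, EDX, ECX order, four ASCII characters per register with the lowest byte first.

```python
# Decode the colon-separated bit strings from the cpuid.0.* VMX lines.
# bits_to_int and register_to_text are illustrative helper names only.

def bits_to_int(bit_string: str) -> int:
    """Turn a string like '0111:0101:...' into an integer."""
    return int(bit_string.replace(":", ""), 2)


def register_to_text(bit_string: str) -> str:
    """Read a 32-bit register as 4 ASCII characters, least significant byte first."""
    return bits_to_int(bit_string).to_bytes(4, "little").decode("ascii")


# Values copied from the VMX lines in the post.
CPUID_0 = {
    "ebx": "0111:0101:0110:1110:0110:0101:0100:0111",
    "ecx": "0110:1100:0110:0101:0111:0100:0110:1110",
    "edx": "0100:1001:0110:0101:0110:1110:0110:1001",
}

# The vendor string is assembled in EBX, EDX, ECX order.
vendor = "".join(register_to_text(CPUID_0[reg]) for reg in ("ebx", "edx", "ecx"))
print(vendor)  # prints "GenuineIntel"
```

So the first four lines simply make the guest see the vendor string of an Intel CPU instead of the one the Ryzen would normally report.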

Now you need to create a hard drive in the VM settings to install the OS on, by editing the settings in VMware and adding a hard drive, in my case 100GB set as one file. Make sure it is set to SATA 0:2 using the Advanced button.

Now power on the VM and it will boot to a menu with four options. Select Disk Utility and format the VMware drive to APFS. Exit Disk Utility and now select Restore OS and it will install. Select the newly formatted drive and agree to the license.

It will install and restart more than once but eventually it will succeed. Set up the language, don't import from another Mac, skip location services, skip Apple ID, create an account and set up the icon and password. Don't send metrics, and skip accessibility.

Eventually you will get a main screen with a dock. Now you can install anything that doesn't use video acceleration. So no games or Final Cut Pro, but it can be used as a media player for YouTube, and for Logic Pro and word processing.

There is a way of getting iCloud and Apple ID working but as I don't use it I never did bother. Updates to the system are at your own risk as it can wreck the VM.

Once installed you can power down the VM using the Apple menu and remove the Catalina VMDK hard drive from the settings. It provided all the fixed kexts, so keyboards, mice and sound should work.

If you want better video resolution you can install VMware Tools; the tools to select are the ones from the unlocker tools.

Quite a lot, huh? Intel has a similar setup but you can use the ISOs and only need to set smc.version = "0" in the VMX.
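If editing the VMX by hand feels error-prone, the same change can be scripted. This is only a minimal sketch: the function names and the file name in the usage comment are hypothetical, it assumes VMX lines have the simple key = "value" shape shown above, and it treats keys as case-insensitive, which is an assumption rather than something confirmed here. Run it only while the VM is powered off.

```python
from pathlib import Path


def set_vmx_option(vmx_text: str, key: str, value: str) -> str:
    """Return vmx_text with key set to value, replacing an existing line if present."""
    new_line = f'{key} = "{value}"'
    lines = vmx_text.splitlines()
    for i, line in enumerate(lines):
        # Compare only the part before the first "=" (assumed case-insensitive).
        if line.split("=", 1)[0].strip().lower() == key.lower():
            lines[i] = new_line
            break
    else:
        # Key not found anywhere, so append it at the end.
        lines.append(new_line)
    return "\n".join(lines) + "\n"


def patch_vmx_file(path: str, options: dict[str, str]) -> None:
    """Apply several options to a VMX file, keeping a .bak copy of the original."""
    vmx = Path(path)
    text = vmx.read_text()
    vmx.with_name(vmx.name + ".bak").write_text(text)
    for key, value in options.items():
        text = set_vmx_option(text, key, value)
    vmx.write_text(text)


# Example for the Intel case (hypothetical file name):
# patch_vmx_file("Catalina.vmx", {"smc.version": "0"})
```

Running it twice leaves the file unchanged the second time, so it is safe to re-run after tweaking other settings.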

For Sonoma (Mac OS 14) you need to download OpenCore, which is a very complicated bootloader created by very smart individuals, normally used to create Hackintosh setups.

It's incredibly complex and has various guides, the most comprehensive being the Dortania OpenCore guide, which is extensive and extremely long.

Explore it at your own risk. As Sonoma is a newer version, the only way to get it running on AMD laptops or desktops in VMware is to use OpenCore. Intel users can apply fixes to the VMX to get it to work.

This one is similar to the previous one, except I had to download an ISO of Sonoma. Google is your friend, but there is a good one on GitHub somewhere (hint hint). In my case I downloaded Sonoma version 14.7_21H124 (catchy, I know).

I also had to download a VMDK of OpenCore that allowed 4 cores to be used. I found this on AMD-OSX, as can you.

The reason I chose this ISO, even though you can download a Sequoia one, is that I tried Sequoia but could not get sound working.

So for this one: create the VM, select Mac OS 14, and choose to install the operating system later. For the existing disk select the OpenCore VMDK (keep existing format), and set CPUs to 1 and cores to 4. Set networking to Bridged and everything else as normal. Finish.

Now edit the settings on the VM. On the CD-ROM, change to image and point it to the downloaded Sonoma ISO. Add a second hard drive to write to; once again I selected 100GB as one file. Make sure it is set to SATA 0:2 using the Advanced button. Make sure OpenCore is set to SATA 0:0, also using the same button.

Now power on the VM. It will boot to a menu with four options. Select Disk Utility and format the VMware drive to APFS. Exit Disk Utility and now select Install OS and it will install. Select the newly formatted drive and agree to the license.

The system will install and may restart several times. If you get a halt, then Restart Guest using the VMware buttons. It will continue until installed.

Set up as done in Catalina, turning off all services and creating an account. Upon starting the Mac you will have a white background.

Go to System Settings and Screen Saver and turn off Show as Wallpaper.

Now Sonoma is a lot more miserable about installing programs from the Internet and you will spend a lot of time in the Privacy and Security section of System Settings to allow things.

I downloaded OpenCore Auxiliary Tools (OCAT) and managed to install it after the security nonsense. I then turned on Hard Drives in Finder by selecting Settings.

Now open the OPENCORE drive and open the EFI folder, then the OC folder. Start OCAT and drag config.plist from the folder to it. In my case to get sound I had to use VoodooHDA but yours may vary.

The VoodooHDA kext was in the Kernel tab of OCAT; I enabled it and disabled AppleALC. Save and exit. Reboot the VM and, et voilà, I had sound.

Your mileage may vary and you may need different kexts depending on sound card or Mac OS version.

Install VMware Tools to get better screen resolution. Set the wallpaper to static rather than dynamic to get better speed.

Close the VM, edit the settings and remove the CD ISO by unticking Connected, unless you have a CD drive (I don't). DO NOT remove OpenCore, as it needs that to boot.

And we are done. What a nightmare but fascinating to me. If you got this far you deserve a medal. So ends my #Marchintosh entry.

Until next time good luck and take care

Note

I was very skeptical about all the other Linux distros but you seem like you would actually be the person who made the OS

Anyway my Bluetooth headphones won't connect to my computer, have tried bluetoothctl. I use connmanctl. Connecting works fine but for some reason it automatically cancels pairing without input.

-I

woof! i'm unfortunately not the creator of this specific project, but i am working on my own.

bluetooth is truly the most cursed thing on any computer. i'm not 100% sure what setup you have overall, do you have the other parts of the bluetooth stack installed (bluez et al)? are you using any specific gui manager for connman? do u use connman for networking too or just bt?

Text

How to Transition from Biotechnology to Bioinformatics: A Step-by-Step Guide

Biotechnology and bioinformatics are closely linked fields, but shifting from a wet lab environment to a computational approach requires strategic planning. Whether you are a student or a professional looking to make the transition, this guide will provide a step-by-step roadmap to help you navigate the shift from biotechnology to bioinformatics.

Why Transition from Biotechnology to Bioinformatics?