#MicroProcessor

Note

Do you have suggestions for how you would anthropomorphize a microprocessor? I already have an idea for the personality, but when the base you have to work with is nothing more than a black rectangle, it's hard to come up with anything.

i had a whole big thing typed out but then tumblr deleted it all ToT anyways, it was basically that i’d lean into any super consequential connections you can make in your head. here’s how my design process worked:

and here’s the design i came up with based on all that:

and here we are! a square-ish bug microprocessor lady!

#asks answered#kewpie's art#microprocessor#i wanna keep this design though… she’s too cool#this was just to show off how i design characters

597 notes

Text

Intel Pentium ad, December 1996

101 notes

Text

Desk of Ladyada - 🍐🍒🫐🍊 Fruit Jam jam party https://youtu.be/MNbGPl67N0Y

Fruit Jam! Our new credit card-sized computer inspired by IchigoJam! Built on the Metro RP2350 with DVI & USB host, it's a retro-inspired mini PC with modern features. Plus, we're hunting for the perfect I2S DAC for high-quality audio output!

#raspberrypi#makers#electronics#coding#diy#hardware#dac#audio#engineering#microcontroller#computerscience#retrocomputing#hackaday#stem#diyelectronics#embedded#programming#opensource#technology#adafruit#innovation#prototyping#developertools#maker#pcbdesign#microprocessor#rp2350#electronics101#digitalfabrication#Youtube

5 notes

Text

A rare example of an MOS 6501 microprocessor - Vintage Computer Federation Museum, Wall, NJ

75 notes

Text

https://www.futureelectronics.com/p/electromechanical--timing-devices--crystals/ecs-250-18-5px-ckm-tr-ecs-inc.-4171530

Watch crystal, Quartz crystal oscillator, Voltage signal, quartz resonator

CSM-7X Series 7.3728 MHz ±30 ppm 20 pF -10 to +70 °C SMT Quartz Crystal
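The ±30 ppm figure is easier to picture in hertz. Below is a minimal Python sketch with hypothetical helper names (`ppm_window`, `drift_seconds_per_day`) that converts the listed tolerance into an absolute frequency window and into the worst-case drift a clock driven by this crystal could accumulate per day. It assumes ±30 ppm is the total allowed deviation; the listing does not say whether that is the initial tolerance at room temperature or the stability over the −10 to +70 °C range.

```python
from __future__ import annotations

NOMINAL_HZ = 7_372_800   # 7.3728 MHz, from the listing
TOLERANCE_PPM = 30       # ±30 ppm, from the listing (assumed: total deviation)
SECONDS_PER_DAY = 86_400


def ppm_window(nominal_hz: float, ppm: float) -> tuple[float, float]:
    """Return (min_hz, max_hz) for a symmetric ±ppm tolerance."""
    deviation_hz = nominal_hz * ppm / 1_000_000  # 1 ppm = one part per million
    return nominal_hz - deviation_hz, nominal_hz + deviation_hz


def drift_seconds_per_day(ppm: float) -> float:
    """Worst-case gain or loss per day if the oscillator sits at the window edge."""
    return SECONDS_PER_DAY * ppm / 1_000_000


low, high = ppm_window(NOMINAL_HZ, TOLERANCE_PPM)
print(f"{low:,.3f} Hz to {high:,.3f} Hz")                   # 7,372,578.816 Hz to 7,373,021.184 Hz
print(f"{drift_seconds_per_day(TOLERANCE_PPM):.3f} s/day")  # 2.592 s/day
```

At the edges of that window the frequency is off by about ±221 Hz, which for a clock amounts to roughly 2.6 seconds per day.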

#Frequency Control & Timing Devices#Crystals#ECS-250-18-5PX-CKM-TR#ECS Inc#Watch crystal#Quartz crystal oscillator#Voltage signal#quartz resonator#clock#low frequency oscillators#calculator#Microprocessor#frequency#digital watches

2 notes

Text

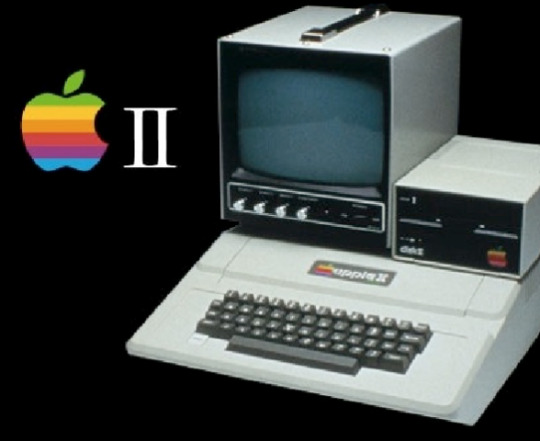

The Apple II is an early personal computer created by Apple (then Apple Computer, Inc.). It was one of the first successful mass-produced microcomputers and played a significant role in the early development of the personal computer industry. It uses an 8-bit microprocessor, the MOS Technology 6502.

Manufacturer: Apple

Date introduced: June 1977

Developer: Steve Wozniak (lead designer)

Memory: 4, 8, 12, 16, 20, 24, 32, 36, 48, or 64 KiB

Year: 1977 (si.edu)

CPU: MOS Technology 6502 @ 1.023 MHz

Discontinued: May 1979

#appleii#apple#1977#steve woznik#as old as my husband#computer#personal computer#8bit#8 bit#microprocessor#vintage#cool

4 notes

Text

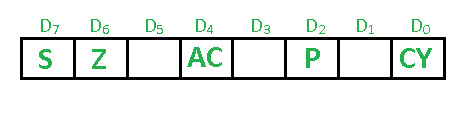

Significance of Flags in Microprocessors

#NeedToKnow: Flags in microprocessors

Flags in microprocessors are single-bit storage locations within the CPU’s register set that indicate the outcome of arithmetic and logical operations. They record information about the result of an operation so that later instructions, such as conditional branches, can make decisions based on it. The most common flags found in microprocessors include: Zero Flag (Z): Set if the result of an operation is…

View On WordPress
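The excerpt stops before it describes each flag, so what follows is only a minimal sketch, not the linked post's own material: a hypothetical Python helper, `add8`, that adds two 8-bit values and derives four common flags from the result. The names follow the 6502 status register (N, V, Z, C); x86 calls the equivalents SF, OF, ZF and CF. Real CPUs differ in which instructions update which flags, so treat this as an illustration of the idea rather than a model of any particular chip.

```python
from __future__ import annotations


def add8(a: int, b: int, carry_in: int = 0) -> tuple[int, dict[str, int]]:
    """Add two 8-bit values (plus an optional carry) and derive status flags.

    Hypothetical helper for illustration; it models no specific CPU.
    """
    if not (0 <= a <= 0xFF and 0 <= b <= 0xFF and carry_in in (0, 1)):
        raise ValueError("operands must be 8-bit values and carry_in 0 or 1")

    raw = a + b + carry_in   # full-width sum, may need a 9th bit
    result = raw & 0xFF      # what actually fits in an 8-bit register

    flags = {
        # Zero: the stored 8-bit result is exactly 0
        "Z": int(result == 0),
        # Carry: the unsigned sum did not fit in 8 bits
        "C": int(raw > 0xFF),
        # Negative/sign: copy of bit 7 of the result
        "N": (result >> 7) & 1,
        # Overflow: the result's sign differs from both operands' signs,
        # i.e. the signed (two's complement) sum is out of range
        "V": int(((a ^ result) & (b ^ result) & 0x80) != 0),
    }
    return result, flags


# 0x7F + 0x01 is fine as unsigned, but +127 + 1 overflows a signed byte
print(*add8(0x7F, 0x01))  # 128 {'Z': 0, 'C': 0, 'N': 1, 'V': 1}

# 0xFF + 0x01 wraps to 0, setting Z and C; a branch-if-zero
# instruction (such as the 6502's BEQ) would be taken here
result, flags = add8(0xFF, 0x01)
if flags["Z"]:
    print("branch taken")
```

The decision step is the point of the flags: the adder only records facts about its result, and a later conditional instruction reads one flag to choose where execution goes next.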

2 notes

Text

Creating a new processor microarchitecture.

Please donate to support an important technology project that will open new opportunities for the entire microprocessor development and manufacturing industry.

Friends, I salute you all.

I am creating a fundamentally new processor microarchitecture and new units of information measurement with a team of like-minded people.

The microarchitecture I am creating will be much faster than existing ones. It will speed up all of the processor's components, as well as the other devices the processor interacts with. The processor components will use new modules and fundamentally new operating algorithms.

Why is it important to donate to my project to create a new processor microarchitecture? Today's processors have reached their technological limit in terms of shrinking transistors.

Over the past decades, processor performance gains have been largely achieved by reducing the transistor size and, in parallel, increasing the number of transistors on the chip.

Now, further reduction of transistor size is becoming more and more technologically challenging. One way out of this situation is to create a new microprocessor architecture that will use new algorithms. The new microprocessor architecture will make it possible to increase the performance of processors without increasing the number of transistors on the chip or reducing the size of the transistors themselves.

What has been done so far, in brief:

1. Assembled a team of developers with whom I will develop a new processor microarchitecture.

2. Technical specifications of the first samples of the new processor microarchitecture have been approved.

3. A step-by-step development plan for the new processor microarchitecture has been created.

What else needs to be done, in brief:

1. Acquire lab equipment to be able to design and build a new processor microarchitecture.

2. Purchase lab equipment to test individual compute modules on the new microarchitecture.

3. Develop individual computational modules of the new processor microarchitecture.

4. Test the created computational modules for performance and compliance with the planned specifications.

5. Create fundamentally new operating algorithms for computing modules and blocks.

6. Assemble a new microprocessor architecture from the developed computational modules.

7. Test the new processor microarchitecture against the planned performance.

8. Design how the processor on the new architecture will interact with peripheral devices.

9. Test the interaction of the processor on the new architecture with peripheral devices.

... And much more. The amount of work is huge.

Friends, even a minimal donation will help us a lot.

You can donate through our fundraising platform: https://www.pledge.to/creating-a-new-processor-microarchitecture

Or send funds directly to any of the following accounts:

Bitcoin crypto wallet address:

14bBNxQ8UFtj1WY7QrrUdBwkPYFsvMt2Pw

Bitcoin cash crypto wallet address:

qqn47tcp5xytuj3sp0tkqa9xvdrh5u9lvvhvnsff0v

Ethereum crypto wallet address:

0x653C53216d76a58a3D180519891366D2e61f9985

Polygon (MATIC) crypto wallet address:

0x653C53216d76a58a3D180519891366D2e61f9985

Payer payment system account number: P1108734121

WebMoney wallet numbers:

US dollar: Z268481228605

Euro: E294954079686

#Donation#donate#donation links#fundraiser#donations#processor#microarchitecture#Creating#technology#computing#research#futurism#microprocessor#microprocessors#project

0 notes

Text

https://www.futureelectronics.com/p/semiconductors--microcontrollers--32-bit/stm32f105vct6-stmicroelectronics-1949478

32 bit embedded microcontrollers, programmable microcontrollers, microprocessor

STM32F Series 256 kB Flash 64 kB RAM 72 MHz 32-Bit Microcontroller - LQFP-100

#Microcontrollers#32 bit#STM32F105VCT6#STMicroelectronics#32 bit embedded#programmable#microprocessor#warless microcontroller manufacturers#Low power microcontroller#lcd microcontrollers#software microcontrollers

1 note

Text

Cube's Soul

Available on t-shirts, tank tops, baseball caps, iPhone cases, iPad cases, stickers, magnets, mouse pads, comforters, clocks, acrylic blocks, and many more products. Just click the link to see them all.

#cube#the cube#figure#geometry#science#square#soul#chip#technology#microprocessor#room#motherboard#crystal#glass#t shirt#iphone#ipad#clock#baseball cap#sticker#magnet#print on demand#suits#men in suits

0 notes

Text

https://www.futureelectronics.com/p/semiconductors--microcontrollers--8-bit/pic18f6520-i-pt-microchip-7337520

8-bit microprocessor, 8 bit embedded microcontroller, Low power microcontroller

PIC18F Series 32 kB Flash 2 kB RAM 40 MHz 8-Bit Microcontroller - TQFP-64

#Microchip#PIC18F6520-I/PT#Microcontrollers#8 bit#microprocessor#8 bit embedded#Low power microcontroller#What is 8 bit microprocessor#Low power microcontrollers software#8-bit computing#8-bit image#lcd#Wireless

1 note

Text

Introducing Trillium, Google Cloud’s sixth generation TPUs

Trillium TPUs

The way we engage with technology is changing due to generative AI, which is also creating substantial efficiency opportunities for business impact. However, training and optimising the most powerful models, and serving them interactively to a worldwide user base, requires ever-increasing amounts of compute, memory, and communication. Tensor Processing Units (TPUs) are custom, AI-specific hardware that Google has been developing for more than ten years to push the boundaries of efficiency and scale.

This technology made possible many of the advancements Google Cloud introduced today at Google I/O, including new models such as Gemma 2, Imagen 3, and Gemini 1.5 Flash, all of which are trained on TPUs. Google Cloud is thrilled to introduce Trillium, Google’s sixth-generation TPU and the most powerful and energy-efficient TPU to date, to power the next frontier of models and to empower you to do the same.

Trillium TPUs achieve a 4.7X increase in peak compute performance per chip compared with TPU v5e. Google Cloud doubled both the Interchip Interconnect (ICI) bandwidth over TPU v5e and the capacity and bandwidth of High Bandwidth Memory (HBM). Trillium also includes third-generation SparseCore, a dedicated accelerator for processing the ultra-large embeddings common in advanced ranking and recommendation workloads. Trillium TPUs enable faster training of the next generation of foundation models and serve those models with lower latency and cost. Crucially, Trillium TPUs are over 67% more energy-efficient than TPU v5e, making them Google’s most sustainable TPU generation to date.

Trillium can scale up to 256 TPUs in a single high-bandwidth, low-latency pod. Beyond this pod-level scalability, Trillium TPUs can scale to hundreds of pods using multislice technology and Titanium Intelligence Processing Units (IPUs), enabling building-scale supercomputers with tens of thousands of chips connected by a multi-petabit-per-second datacenter network.

The next stage of Trillium-powered AI innovation

Google realised over ten years ago that machine learning required a novel kind of microprocessor. In 2013, it began developing TPU v1, the first purpose-built AI accelerator in history, and in 2017 Google Cloud released the first Cloud TPU. Many of Google’s best-known services, including interactive language translation, photo object recognition, and real-time voice search, would not be feasible without TPUs, nor would cutting-edge foundation models like Gemma, Imagen, and Gemini. In fact, Google Research’s foundational work on Transformers, the algorithmic foundation of contemporary generative AI, was made possible by the scale and efficiency of TPUs.

Compute performance per Trillium chip increased by 4.7 times

Because TPUs were created specifically for neural networks, Google Cloud is constantly working to speed up training and serving times for AI workloads. Compared with TPU v5e, Trillium delivers 4.7X the peak compute per chip. We’ve boosted the clock speed and enlarged the matrix multiply units (MXUs) to reach this level of performance. Additionally, SparseCores accelerate embedding-heavy workloads by deliberately offloading random and fine-grained access from TensorCores.

2X High Bandwidth Memory (HBM) capacity and bandwidth, plus 2X ICI bandwidth

By doubling HBM capacity and bandwidth, Trillium can work with larger models that have more weights and larger key-value caches. Next-generation HBM enables higher memory bandwidth, improved power efficiency, and a flexible channel architecture, which together increase memory throughput. For large models, this reduces training time and serving latency: twice the model weights and key-value caches fit in memory, with faster access and more computational capacity to accelerate machine learning workloads. With double the ICI bandwidth, training and inference jobs can scale to tens of thousands of chips through a combination of 256-chip pods linked by specialised optical ICI interconnects and hundreds of pods in a cluster connected via Google Jupiter Networking.

The AI models of the future will run on Trillium

Trillium TPUs will power the next generation of AI models and agents, and Google Cloud is excited to help its customers take advantage of these cutting-edge capabilities. For instance, AI startup Essential AI aims to deepen the partnership between people and computers, and the company expects to use Trillium to transform the way organisations work. Deloitte, the Google Cloud Partner of the Year for AI, will offer Trillium to help businesses transform with generative AI.

Nuro is committed to improving everyday life through robotics and trains its models with Cloud TPUs. Deep Genomics is using AI to power the future of drug discovery and is excited about how its next foundational model, powered by Trillium, will change patients’ lives. With support for long-context, multimodal model training and serving on Trillium TPUs, Google DeepMind will also be able to train and serve future generations of Gemini models faster, more efficiently, and with lower latency.

Trillium-powered AI Hypercomputer

Trillium TPUs are part of AI Hypercomputer, Google Cloud’s revolutionary supercomputing architecture designed specifically for state-of-the-art AI workloads. It integrates performance-optimised infrastructure (including Trillium TPUs), open-source software frameworks, and flexible consumption models. Google’s commitment to open-source libraries such as JAX, PyTorch/XLA, and Keras 3 empowers developers. Thanks to support for JAX and XLA, declarative model descriptions written for any previous generation of TPUs map directly to the new hardware and network capabilities of Trillium TPUs. Google has also partnered with Hugging Face on Optimum-TPU to streamline model training and serving.

SADA (An Insight Company) has been named Google Cloud Partner of the Year every year since 2017 and provides Google Cloud services to maximise impact.

AI Hypercomputer also provides the flexible consumption models that AI/ML workloads require. Dynamic Workload Scheduler (DWS) simplifies access to AI/ML resources and helps customers optimise their spend. Its flex start mode can improve the experience of bursty workloads such as training, fine-tuning, or batch jobs by scheduling all the required accelerators simultaneously, regardless of your entry point: Vertex AI Training, Google Kubernetes Engine (GKE), or Google Cloud Compute Engine.

Lightricks is excited to gain value from the AI Hypercomputer’s increased efficiency and performance.

Read more on govindhtech.com

#trilliumtpu#generativeAI#GoogleCloud#Gemma2#Imagen3#supercomputer#microprocessor#machinelearning#Gemini#aimodels#geminimodels#Supercomputing#HyperComputer#VertexAI#googlekubernetesengine#news#technews#technology#technologynews#technologytrends

0 notes

Text

https://www.futureelectronics.com/p/semiconductors--microcontrollers--8-bit/pic16c73b-04-sp-microchip-1274299

Microcontroller 8 bit controller, lcd microcontrollers, Pic microcontrollers

PIC16 Series 192 B RAM 4 K x 14 Bit EPROM 8-Bit CMOS Microcontroller - SPDIP-28

#Microcontrollers#8 bit#PIC16C73B-04/SP#Microchip#microprocessor#Programmable#controller#lcd microcontrollers#Pic#Digital Microcontrollers#Wireless microcontroller#8 bit embedded microcontroller

1 note

Text

P2Pinfect - New Variant Targets MIPS Devices - Cado Security | Cloud Forensics & Incident Response

Summary: A new P2Pinfect variant compiled for the Microprocessor without Interlocked Pipelined Stages (MIPS) architecture has been discovered. This demonstrates increased targeting of routers, Internet of Things (IoT) devices, and other embedded devices by those behind P2Pinfect. The new sample includes updated evasion mechanisms that make it more difficult for researchers to analyse dynamically. These include…

View On WordPress

0 notes

Link

In the server segment, AMD already holds almost a quarter of the market

AMD has significantly increased its share of the processor market, according to recent statistics from Mercury Research. AMD now holds 19.4% of the overall CPU market, up from 15% a year ago and 17.3% a quarter ago, which is very significant growth. AMD is not going to ease the pressure on Intel.

The biggest contribution to the overall share comes from the server segment, where AMD has surged past 20%: the company now holds 23.3%, compared with 17.5% a year earlier! Recall that several years ago Intel said its goal was to keep AMD from reaching 10–15% of the server market. As you can see, AMD has already more than doubled the lower limit of that range.

The desktop CPU market also looks very good: AMD's share grew from 13.9% to 19.2%, although it dipped slightly quarter over quarter from 19.4% in the second quarter. Mobile processors also grew strongly, from 15.7% to 19.5%. Note too that by revenue, AMD holds 16.9% of the market as a whole, and almost 30% of the server segment!

#Advanced_Micro_Devices#Amd#AMD_Architecture#AMD_Radeon#AMD_Ryzen#chipsets#computer_hardware#computing#CPU#Epyc#Gaming#gaming_performance#GPU#graphics_cards#graphics_processing_unit#microprocessor#PC_components#Processors#Radeon#Ryzen#semiconductor#semiconductor_industry#technology

1 note
