#Tech Regulation

Text

An open letter to the U.S. Congress

Stop Elon Musk from stealing our personal information!

6,399 so far! Help us get to 10,000 signers!

I am writing to urge you to stop Elon Musk from stealing our personal information.

It appears Musk has hacked into millions of Americans’ personal information and now has access to their taxes, Social Security, student debt and financial aid filings. Musk's so-called Department of Government Efficiency was not created by Congress—it is operating with zero transparency and in clear violation of federal law.

This violation is causing American families across the country to fear for their privacy, safety and dignity. If this goes unchecked, Musk could steal our private data to help make cuts to vital government programs that our families depend on, and to make it easier to cut taxes for himself and other billionaires.

We must have guardrails to stop this unlawful invasion of privacy.

Congress and the Trump administration must stop Elon Musk from stealing Americans' tax and other private data.

▶ Created on February 10 by Jess Craven · 6,398 signers in the past 7 days

📱 Text SIGN PUTWGR to 50409

🤯 Text FOLLOW JESSCRAVEN101 to 50409

#PUTWGR#jesscraven101#resistbot#petition#activate your activism#stop the coup#Government Accountability#Data Privacy#U.S. Congress#Legislative Action#Public Policy#Federal Oversight#Constitutional Rights#Elon Musk#Department of Government Efficiency#Privacy Violation#Cybersecurity#Personal Data Protection#Taxpayer Rights#Social Security#Student Debt#Financial Aid#Government Transparency#Corporate Overreach#Public Advocacy#Citizen Action#Stop Data Theft#Congressional Investigation#Tech Regulation#Digital Privacy

5 notes

Text

In a silicon valley, throw rocks. Welcome to my tech blog.

Antiterf antifascist (which apparently needs stating). This sideblog is open to minors.

Liberation does not come at the expense of autonomy.

* I'm taking a break from tumblr for a while. Feel free to leave me asks or messages for when I return.

Frequent tags:

#tech#tech regulation#technology#big tech#privacy#data harvesting#advertising#technological developments#spyware#artificial intelligence#machine learning#data collection company#data analytics#dataspeaks#data science#data#llm#technews

2 notes

Text

Bright Futures and Bold Choices: Navigating Ethics in Tech Integration.

Sanjay Kumar Mohindroo. skm.stayingalive.in. Explore ethical dilemmas, regulatory challenges, and social impacts as tech shapes our lives. Read on about AI bias, digital surveillance, and fair play. A New Dawn in Tech. Discovering the Human Side of Innovation. Our lives shift with each tech advance. We see smart tools in our hands. We share our days with AI systems. We face…

#AI Bias#Digital Surveillance#Ethical Dilemmas#Ethical Tech#Fair Rules#News#Regulatory Challenges#Sanjay Kumar Mohindroo#Smart Code#Social Impacts#Tech Regulation#Technology Integration

0 notes

Text

What Are ‘Neural Data’? Our Thoughts Can Be Recorded—Here’s What You Need to Know

Have you ever wondered if your thoughts could be tracked, recorded, or even sold? Sounds like science fiction, right? Well, it’s actually happening, and the rise of neurotechnology means that our brains are becoming a data goldmine. Some experts urge stronger protection. They refer to this as “neural data”—information collected from our brains through tech devices. So, What Exactly Are Neural…

#AI and Brain Data#Brain Data#Brain Tech#Data Protection#EU countries#European Union#GDPR#Mind Reading AI#Neural Data#Neural Privacy#Neuro Rights#Neuro technology#Privacy Concerns#Tech Regulation

0 notes

Text

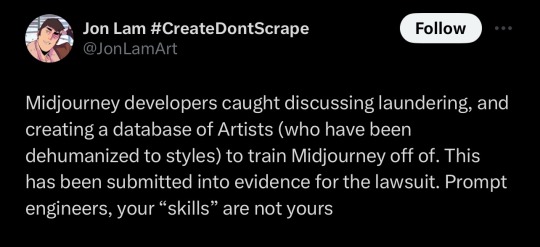

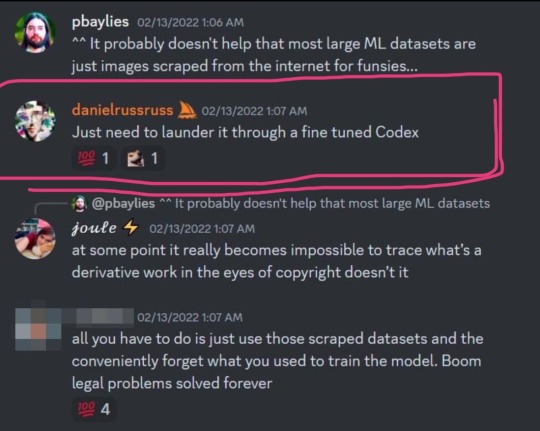

I think this part is truly the most damning:

If it's all pre-rendered mush and it's "too expensive to fully experiment or explore" then such AI is not a valid artistic medium. It's entirely deterministic, like a pseudorandom number generator. The goal here is optimizing the rapid generation of an enormous quantity of low-quality images which fulfill the expectations put forth by The Prompt.

It's the modern technological equivalent of a circus automaton "painting" a canvas to be sold in the gift shop.

so a huge list of artists that was used to train midjourney’s model got leaked and i’m on it

literally there is no reason to support AI generators, they can’t ethically exist. my art has been used to train every single major one without consent lmfao 🤪

link to the archive

#to be clear AI as a concept has the power to create some truly fantastic images#however when it is subject to the constraints of its purpose as a machine#it is only capable of performing as its puppeteer wills it#and these puppeteers have the intention of stealing#tech#technology#tech regulation#big tech#data harvesting#data#technological developments#artificial intelligence#ai#machine generated content#machine learning#intellectual property#copyright

37K notes

Text

Are We On The Brink Of A Revolution? (Tone: 400)

Eric Weinstein discusses the potential for a political and social revolution driven by misinformation and AI governance. #Politics #AI #Revolution

September 2nd, 2024 by @ChrisWillx Eric Weinstein – Are We On The Brink Of A Revolution? ABOUT THIS VIDEO: In the video “Are We On The Brink Of A Revolution?” by Eric Weinstein, he discusses the current state of political, economic, and scientific systems in the United States and globally. Weinstein explores the concept of a managed reality, where societal narratives are carefully controlled…

#AI in politics#AI Technology#censorship#civic engagement#conspiracy theories#democratic process#Eric Weinstein#future governance#Global Politics#information control#international order#managed reality#media bias#media manipulation#political disruption#Political Predictions#political stability#populism#revolutionary ideas#science funding#Social Unrest#string theory debate#tech regulation#U.S. Elections

1 note

Text

the new bill to regulate AI in the EU will ban the use of AI for "social scoring" and classifying human beings by their characteristics. which is a great step forward... however!

it carves out a massive exception for "law enforcement" which is extremely worrying and I'm surprised more people aren't talking about this!!!

#ai#social justice#social engineering#technology#tech#big tech#data harvesting#tech regulation#privacy#technological developments#data#artificial intelligence#surveillance#open source#europe#eu#tech news#machine generated content

0 notes

Text

I don't necessarily care for devlin being allegedly retconned to be gwendolyn's kid, but like- if that's the case then we do all agree that it was definitely a breaking dawn bella swan kind of pregnancy right? there's no way in hell an osmosian and an anodite can just procreate with no fucked up consequences whatsoever.

And for devlin too like- could his osmosian genes constantly feed off his own anodite spark? would he be out of control by default?

#energy sponge predisposed to energy addiction mates with an energy being... what could possibly go wrong!#wouldn't be surprised if devlin was a rainbow baby#maybe he could have some kind of advanced piece of medical tech attached to his arm as a “regulator” or something? idk#ben 10#ben 10 omniverse#kevin levin#gwen tennyson#gwevin#ken 10

41 notes

Text

[...]To save themselves, the tech-oligarchs must attack the very notion of universality as such—hence their abandonment of liberal universalism for racism, nationalism, masculine domination, etc., because they represent a conspiracy, a shrinking, exclusive interest against a larger one. They are attacking first and foremost the State and the Bureaucracy, the civil servants: everything that Hegel identified in The Philosophy of Right with the universal, general interest of the whole society against the particularity of “bourgeois society,” the chaotic mass of self-interested businessmen. They want the State to appear just as particularistic as they are and destroy its legitimacy. Indeed, they have to attack the system of recognition—meritocratic honors rather than mere wealth and power—and of right—the rule of law and regular administration. They are the “rich rabble” par excellence that thinks “it can buy anything.” Musk’s total idiocy is structural: it goes back to the very origin of the Greek term idiotes, a person who cannot understand the shared political life of the city. These people cannot understand that their wealth and power are not their sovereign creations but the shared product of the wider state and society that supports and sustains them. Cryptocurrency is the perfect embodiment of this structural misrecognition: its advocates say it represents wealth outside of the state and society, but its notional value is wholly determined by its price in fiat money, created and sustained by the state. (It also functions a lot like “race” and “IQ:” as a repository of social value that provides a haven from degradation, but I’ll address that another time.) Here’s the thing: They can only see corruption around them because they are wholly corrupt. [...]

#still not sure if i 100% agree with ganz on everything here or not but i do think he's broadly correct#i do also think a significant amount of it stems from the fact that the dems were the main party talking about regulating tech#which ofc these masters-of-the-universe tech oligarchs absolutely Could Not Have#politics#usa#article

33 notes

Text

Valuable discussion about IP and copyright in the context of ML. Because yeah, if you let copyright law eat its heart out with AI, it's not the creators whose data was gleaned who are gonna win. Some good points made in later posts:

@txttletale: monopolization and control "is not unique to 'the nature' of generative art models but of all technological advancements in production, which tend towards socialization and division of labour... just like the steam loom and production line, the solution is to recognize this socialised nature and put it under social ownership"

@vaelynx: "there's no particular need to make the process of generating art more 'efficient' unless you are a capitalist short-sightedly intent on squashing expenses... [a doubling population] doesn't need twice as many books published or pictures made per year."

Because all markets are finite, but the space of potential content is infinite. Benefit from the exploration of that space should be left to the gross human mind, not corporations' machines. It is, as @vaelynx mentioned, a kind of weapon.

However, we have yet to determine how to move forward with machine-generated content; like it or not, it's here, and it's advancing rapidly: we are witnessing an arms race to thwart/empower MGC. One could argue for eons about the ethics or "best practices" of its use and never grapple with anything material.

Copyright law is one way to put a material chokehold on it: "Intellectual Property" being a direct access point between the abstract and the physical. It's a terrible solution, by nature of the system. A capitalist answer to MGC will never satisfy human needs or produce socio-technological progress.

also all the people who are like "the people whose data the LLMs were trained on haven't been compensated and that's the real problem" are so unserious and IP-brained

#tech#tech regulation#technology#big tech#data harvesting#technological developments#artists#artificial intelligence#ai art#ai image#ai artwork#machine learning#llm#machine generated content#mgc

771 notes

Text

How to design a tech regulation

TONIGHT (June 20) I'm live onstage in LOS ANGELES for a recording of the GO FACT YOURSELF podcast. TOMORROW (June 21) I'm doing an ONLINE READING for the LOCUS AWARDS at 16hPT. On SATURDAY (June 22) I'll be in OAKLAND, CA for a panel (13hPT) and a keynote (18hPT) at the LOCUS AWARDS.

It's not your imagination: tech really is underregulated. There are plenty of avoidable harms that tech visits upon the world, and while some of these harms are mere negligence, others are self-serving, creating shareholder value and widespread public destruction.

Making good tech policy is hard, but not because "tech moves too fast for regulation to keep up with," nor because "lawmakers are clueless about tech." There are plenty of fast-moving areas that lawmakers manage to stay abreast of (think of the rapid, global adoption of masking and social distancing rules in mid-2020). Likewise we generally manage to make good policy in areas that require highly specific technical knowledge (that's why it's noteworthy and awful when, say, people sicken from badly treated tapwater, even though water safety, toxicology and microbiology are highly technical areas outside the background of most elected officials).

That doesn't mean that technical rigor is irrelevant to making good policy. Well-run "expert agencies" include skilled practitioners on their payrolls – think here of large technical staff at the FTC, or the UK Competition and Markets Authority's best-in-the-world Digital Markets Unit:

https://pluralistic.net/2022/12/13/kitbashed/#app-store-tax

The job of government experts isn't just to research the correct answers. Even more important is experts' role in evaluating conflicting claims from interested parties. When administrative agencies make new rules, they have to collect public comments and counter-comments. The best agencies also hold hearings, and the very best go on "listening tours" where they invite the broad public to weigh in (the FTC has done an awful lot of these during Lina Khan's tenure, to its benefit, and it shows):

https://www.ftc.gov/news-events/events/2022/04/ftc-justice-department-listening-forum-firsthand-effects-mergers-acquisitions-health-care

But when an industry dwindles to a handful of companies, the resulting cartel finds it easy to converge on a single talking point and to maintain strict message discipline. This means that the evidentiary record is starved for disconfirming evidence that would give the agencies contrasting perspectives and context for making good policy.

Tech industry shills have a favorite tactic: whenever there's any proposal that would erode the industry's profits, self-serving experts shout that the rule is technically impossible and deride the proposer as "clueless."

This tactic works so well because the proposers sometimes are clueless. Take Europe's on-again/off-again "chat control" proposal to mandate spyware on every digital device that will screen everything you upload for child sex abuse material (CSAM, better known as "child pornography"). This proposal is profoundly dangerous, as it will weaken end-to-end encryption, the key to all secure and private digital communication:

https://www.theguardian.com/technology/article/2024/jun/18/encryption-is-deeply-threatening-to-power-meredith-whittaker-of-messaging-app-signal

It's also an impossible-to-administer mess that incorrectly assumes that killing working encryption in the two mobile app stores run by the mobile duopoly will actually prevent bad actors from accessing private tools:

https://memex.craphound.com/2018/09/04/oh-for-fucks-sake-not-this-fucking-bullshit-again-cryptography-edition/

When technologists correctly point out the lack of rigor and catastrophic spillover effects from this kind of crackpot proposal, lawmakers stick their fingers in their ears and shout "NERD HARDER!"

https://memex.craphound.com/2018/01/12/nerd-harder-fbi-director-reiterates-faith-based-belief-in-working-crypto-that-he-can-break/

But this is only half the story. The other half is what happens when tech industry shills want to kill good policy proposals: they say exactly the same thing that advocates say about bad ones. When lawmakers demand that tech companies respect our privacy rights (for example, by splitting social media or search off from commercial surveillance), the same people shout that this, too, is technologically impossible.

That's a lie, though. Facebook started out as the anti-surveillance alternative to Myspace. We know it's possible to operate Facebook without surveillance, because Facebook used to operate without surveillance:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3247362

Likewise, Brin and Page's original Pagerank paper, which described Google's architecture, insisted that search was incompatible with surveillance advertising, and Google established itself as a non-spying search tool:

http://infolab.stanford.edu/pub/papers/google.pdf

Even weirder is what happens when there's a proposal to limit a tech company's power to invoke the government's powers to shut down competitors. Take Ethan Zuckerman's lawsuit to strip Facebook of the legal power to sue people who automate their browsers to uncheck the millions of boxes that Facebook requires you to click by hand in order to unfollow everyone:

https://pluralistic.net/2024/05/02/kaiju-v-kaiju/#cda-230-c-2-b

Facebook's apologists have lost their minds over this, insisting that no one can possibly understand the potential harms of taking away Facebook's legal right to decide how your browser works. They take the position that only Facebook can understand when it's safe and proportional to use Facebook in ways the company didn't explicitly design for, and that they should be able to ask the government to fine or even imprison people who fail to defer to Facebook's decisions about how its users configure their computers.

This is an incredibly convenient position, since it arrogates to Facebook the right to order the rest of us to use our computers in the ways that are most beneficial to its shareholders. But Facebook's apologists insist that they are not motivated by parochial concerns over the value of their stock portfolios; rather, they have objective, technical concerns, that no one except them is qualified to understand or comment on.

There's a great name for this: "scalesplaining." As in "well, actually the platforms are doing an amazing job, but you can't possibly understand that because you don't work for them." It's weird enough when scalesplaining is used to condemn sensible regulation of the platforms; it's even weirder when it's weaponized to defend a system of regulatory protection for the platforms against would-be competitors.

Just as there are no atheists in foxholes, there are no libertarians in government-protected monopolies. Somehow, scalesplaining can be used to condemn governments as incapable of making any tech regulations and to insist that regulations that protect tech monopolies are just perfect and shouldn't ever be weakened. Truly, it's impossible to get someone to understand something when the value of their employee stock options depends on them not understanding it.

None of this is to say that every tech regulation is a good one. Governments often propose bad tech regulations (like chat control), or ones that are technologically impossible (like Article 17 of the EU's 2019 Directive on Copyright in the Digital Single Market, which requires tech companies to detect and block copyright infringements in their users' uploads).

But the fact that scalesplainers use the same argument to criticize both good and bad regulations makes the waters very muddy indeed. Policymakers are rightfully suspicious when they hear "that's not technically possible" because they hear that both for technically impossible proposals and for proposals that scalesplainers just don't like.

After decades of regulations aimed at making platforms behave better, we're finally moving into a new era, where we just make the platforms less important. That is, rather than simply ordering Facebook to block harassment and other bad conduct by its users, laws like the EU's Digital Markets Act will order Facebook and other VLOPs (Very Large Online Platforms, my favorite EU-ism ever) to operate gateways so that users can move to rival services and still communicate with the people who stay behind.

Think of this like number portability, but for digital platforms. Just as you can switch phone companies and keep your number and hear from all the people you spoke to on your old plan, the DMA will make it possible for you to change online services but still exchange messages and data with all the people you're already in touch with.

I love this idea, because it finally grapples with the question we should have been asking all along: why do people stay on platforms where they face harassment and bullying? The answer is simple: because the people – customers, family members, communities – we connect with on the platform are so important to us that we'll tolerate almost anything to avoid losing contact with them:

https://locusmag.com/2023/01/commentary-cory-doctorow-social-quitting/

Platforms deliberately rig the game so that we take each other hostage, locking each other into their badly moderated cesspits by using the love we have for one another as a weapon against us. Interoperability – making platforms connect to each other – shatters those locks and frees the hostages:

https://www.eff.org/deeplinks/2021/08/facebooks-secret-war-switching-costs

But there's another reason to love interoperability (making moderation less important) over rules that require platforms to stamp out bad behavior (making moderation better). Interop rules are much easier to administer than content moderation rules, and when it comes to regulation, administratability is everything.

The DMA isn't the EU's only new rule. They've also passed the Digital Services Act, which is a decidedly mixed bag. Among its provisions are a suite of rules requiring companies to monitor their users for harmful behavior and to intervene to block it. Whether or not you think platforms should do this, there's a much more important question: how can we enforce this rule?

Enforcing a rule requiring platforms to prevent harassment is very "fact intensive." First, we have to agree on a definition of "harassment." Then we have to figure out whether something one user did to another satisfies that definition. Finally, we have to determine whether the platform took reasonable steps to detect and prevent the harassment.

Each step of this is a huge lift, especially that last one, since to a first approximation, everyone who understands a given VLOP's server infrastructure is a partisan, scalesplaining engineer on the VLOP's payroll. By the time we find out whether the company broke the rule, years will have gone by, and millions more users will be in line to get justice for themselves.

So allowing users to leave is a much more practical step than making it so that they've got no reason to want to leave. Figuring out whether a platform will continue to forward your messages to and from the people you left there is a much simpler technical matter than agreeing on what harassment is, whether something is harassment by that definition, and whether the company was negligent in permitting harassment.

But as much as I like the DMA's interop rule, I think it is badly incomplete. Given that the tech industry is so concentrated, it's going to be very hard for us to define standard interop interfaces that don't end up advantaging the tech companies. Standards bodies are extremely easy for big industry players to capture:

https://pluralistic.net/2023/04/30/weak-institutions/

If tech giants refuse to offer access to their gateways to certain rivals because they seem "suspicious," it will be hard to tell whether the companies are just engaged in self-serving smears against a credible rival, or legitimately trying to protect their users from a predator trying to plug into their infrastructure. These fact-intensive questions are the enemy of speedy, responsive, effective policy administration.

But there's more than one way to attain interoperability. Interop doesn't have to come from mandates (interfaces designed and overseen by government agencies). There's a whole other form of interop that's far nimbler than mandates: adversarial interoperability:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

"Adversarial interoperability" is a catch-all term for all the guerrilla warfare tactics deployed in service to unilaterally changing a technology: reverse engineering, bots, scraping and so on. These tactics have a long and honorable history, but they have been slowly choked out of existence with a thicket of IP rights, like the IP rights that allow Facebook to shut down browser automation tools, which Ethan Zuckerman is suing to nullify:

https://locusmag.com/2020/09/cory-doctorow-ip/

Adversarial interop is very flexible. No matter what technological moves a company makes to interfere with interop, there's always a countermove the guerrilla fighter can make – tweak the scraper, decompile the new binary, change the bot's behavior. That's why tech companies use IP rights and courts, not firewall rules, to block adversarial interoperators.

At the same time, adversarial interop is unreliable. The solution that works today can break tomorrow if the company changes its back-end, and it will stay broken until the adversarial interoperator can respond.

But when companies are faced with the prospect of extended asymmetrical war against adversarial interop in the technological trenches, they often surrender. If companies can't sue adversarial interoperators out of existence, they often sue for peace instead. That's because high-tech guerrilla warfare presents unquantifiable risks and resource demands, and, as the scalesplainers never tire of telling us, this can create real operational problems for tech giants.

In other words, if Facebook can't shut down Ethan Zuckerman's browser automation tool in the courts, and if they're sincerely worried that a browser automation tool will uncheck its user interface buttons so quickly that it crashes the server, all it has to do is offer an official "unsubscribe all" button and no one will use Zuckerman's browser automation tool.

We don't have to choose between adversarial interop and interop mandates. The two are better together than they are apart. If companies building and operating DMA-compliant, mandatory gateways know that failing to make those gateways useful to rivals seeking to help users escape their control means getting mired in endless hand-to-hand combat with trench-fighting adversarial interoperators, they'll have good reason to cooperate.

And if lawmakers charged with administering the DMA notice that companies are engaging in adversarial interop rather than using the official, reliable gateway they're overseeing, that's a good indicator that the official gateways aren't suitable.

It would be very on-brand for the EU to create the DMA and tell tech companies how they must operate, and for the USA to simply withdraw the state's protection from the Big Tech companies and let smaller companies try their luck at hacking new features into the big companies' servers without the government getting involved.

Indeed, we're seeing some of that today. Oregon just passed the first ever Right to Repair law banning "parts pairing" – basically a way of using IP law to make it illegal to reverse-engineer a device so you can fix it.

https://www.opb.org/article/2024/03/28/oregon-governor-kotek-signs-strong-tech-right-to-repair-bill/

Taken together, the two approaches – mandates and reverse engineering – are stronger than either on their own. Mandates are sturdy and reliable, but slow-moving. Adversarial interop is flexible and nimble, but unreliable. Put 'em together and you get a two-part epoxy, strong and flexible.

Governments can regulate well, with well-funded expert agencies and smart, administratable remedies. It's for that reason that the administrative state is under such sustained attack from the GOP and right-wing Dems. The illegitimate Supreme Court is on the verge of gutting expert agencies' power:

https://www.hklaw.com/en/insights/publications/2024/05/us-supreme-court-may-soon-discard-or-modify-chevron-deference

It's never been more important to craft regulations that go beyond mere good intentions and take account of administratability. The easier we can make our rules to enforce, the less our beleaguered agencies will need to do to protect us from corporate predators.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/06/20/scalesplaining/#administratability

Image: Noah Wulf (modified) https://commons.m.wikimedia.org/wiki/File:Thunderbirds_at_Attention_Next_to_Thunderbird_1_-_Aviation_Nation_2019.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

#pluralistic#cda#ethan zuckerman#platforms#platform decay#enshittification#eu#dma#right to repair#transatlantic#administrability#regulation#big tech#scalesplaining#equilibria#interoperability#adversarial interoperability#comcom

99 notes

Text

One thing about me is I'm always going to empathize with the villain. You may be big and bad, and no one likes you, but... could I interest you in a hug and many sleepless nights thinking about how *maybe* you just needed help regulating your emotions?

I love you, bad guys, foes, and ne'er-do-wells; I see your pain, your struggle, your attachments, and your rage, and I respond with love.

#darth maul#fandom#neurodivergent characters#emotional dysregulation#emotions are hard#anakin skywalker#villain character#evil characters#asajj ventress#boba fett#jango fett#jar jar binks#lmao#not saying this excuses harmful behavior#just that i can empathize and wish they'd had methods to healthily regulate#star wars#the clone wars#star wars prequels#am i insinuating theyre all nd#in my head they are#the bad batch#tbb tech#palpatine gets no empathy from me bc bro is just straight evil#hemlock as well#also not saying nd people are evil or villainous#just that many of us are labeled that way when our emotions take such physical forms#commander fox also fits into this category imo

41 notes

Text

lmao, regulations do wonders

#tech news#tech companies#europe#eu#european union#eu politics#european politics#politics#american politics#capitalism#free market#big government#regulation and deregulation of industry#regulations#us politics#usa politics

21 notes

Text

Russian Court Fines Google an Imaginary Sum: What's Behind the Headlines?

In a wild turn of events, a Russian court hit Google with a mind-blowing fine of 20 decillion dollars! Seriously, that number is so huge it's basically unreal, and it's got everyone talking about how tech, rules, and international relations are all tangled up together. The Background of the Case Back in 2020, the whole legal mess kicked off when Google decided to kick the ultra-nationalist…

#Digital Platforms#Google#International Relations#Legal Drama#microsoft#Russian Court#tech news#Tech Regulation#Tsargrad#Zvezda

1 note

Text

i love you people who don't get recognition on movie sets i love you stunt doubles i love you pyrotechnic advisors i love you coffee interns i love you safety regulators i love you set designers i love you lighting technicians i love you costume designers i love you cosmetologists i love you sound designers i love you script editors i love you vfx people i love you dieticians i love you assistants i love you tech crew i love all of you. please reblog with more unsung professions by the way. it doesn't even have to be in the entertainment industries, just give the typically unnoticed people the recognition they deserve.

#tech theatre#vfx artists#pyrotechnics#scriptwriting#set design#dietician#cosmetology#safety regulations#unsung heroes#i love all of you guys so much#i see all of you

16 notes