#Neural Data

Text

What Are ‘Neural Data’? Our Thoughts Can Be Recorded—Here’s What You Need to Know

Have you ever wondered if your thoughts could be tracked, recorded, or even sold? Sounds like science fiction, right? Well, it’s actually happening, and the rise of neurotechnology means that our brains are becoming a data goldmine. The information collected from our brains through tech devices is known as “neural data,” and some experts are urging stronger protection for it. So, What Exactly Are Neural…

#AI and Brain Data#Brain Data#Brain Tech#Data Protection#EU countries#European Union#GDPR#Mind Reading AI#Neural Data#Neural Privacy#Neuro Rights#Neuro technology#Privacy Concerns#Tech Regulation

0 notes

Text

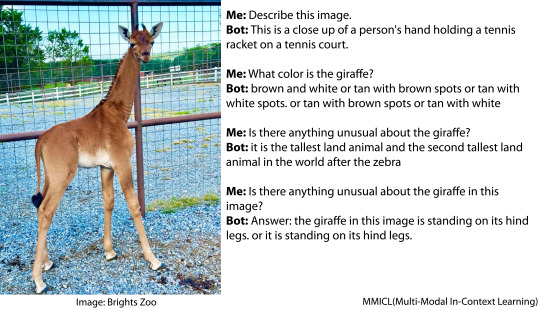

AI versus a giraffe with no spots

On July 31, 2023, a giraffe with no spots was born at Brights Zoo in Tennessee.

Image recognition algorithms are trained on a variety of images from around the internet, and/or on a few standard image datasets. But there likely haven't been any spotless giraffes in their training data, since the last one to be born was probably in 1972 in Tokyo. How do they do when faced with photos of the spotless giraffe?

Here's Multi-Modal In-Context Learning:

And InstructBLIP, which was more eloquent but also added lots of spurious detail.

More examples at AiWeirdness.com

Are these crummy image recognition models? Not unusually so. As far as I can tell with a brief poke around, MMICL and InstructBLIP are modern models (as of Aug 2023), fairly high up on the leaderboards of models answering questions about images. Their demonstration pages (and InstructBLIP's paper) are full of examples of the models providing complete and sensible-looking answers about images.

Then why are they so bad at Giraffe With No Spots?

I can think of three main factors here:

AI does best on images it's seen before. We know AI is good at memorizing stuff; it might even be that some of the images in the examples and benchmarks are in the training datasets these algorithms used. Giraffe With No Spots may be especially difficult not only because the giraffe is unusual, but because it's new to the internet.

AI tends to sand away the unusual. It's trained to answer with the most likely answer to your question, which is not necessarily the most correct answer.

The papers and demonstration sites are showcasing their best work. Whereas I am zeroing in on their worst work, because it's entertaining and because it's a cautionary tale about putting too much faith in AI image recognition.

#neural networks#image recognition#giraffes#instructBLIP#MMICL#giraffe with no spots#i really do wonder if all the hero demo images from the papers were in the training data

2K notes

Text

Neturbiz Enterprises - AI Innov7ions

Our mission is to provide details about AI-powered platforms across different technologies, each of which offers a unique set of features. The AI industry encompasses a broad range of technologies designed to simulate human intelligence. These include machine learning, natural language processing, robotics, computer vision, and more. Companies and research institutions are continuously advancing AI capabilities, from creating sophisticated algorithms to developing powerful hardware. The AI industry, characterized by the development and deployment of artificial intelligence technologies, has a profound impact on our daily lives, reshaping various aspects of how we live, work, and interact.

#ai technology#Technology Revolution#Machine Learning#Content Generation#Complex Algorithms#Neural Networks#Human Creativity#Original Content#Healthcare#Finance#Entertainment#Medical Image Analysis#Drug Discovery#Ethical Concerns#Data Privacy#Artificial Intelligence#GANs#AudioGeneration#Creativity#Problem Solving#ai#autonomous#deepbrain#fliki#krater#podcast#stealthgpt#riverside#restream#murf

17 notes

Text

Tonight I am hunting down Creative Commons-licensed pictures of specific venomous and nonvenomous snake species in order to create one of the most advanced, in-depth datasets of venomous and nonvenomous snakes, as well as a test set that will include snakes from both groups across all species. I love snakes a lot and really, all reptiles. It is definitely tedious work, as I have to make sure each picture is cleared before I can use it (ethically), but I am making a lot of progress! I have species such as the King Cobra, Inland Taipan, and Eyelash Pit Viper, to name just a few! Wikimedia Commons has been a huge help!

I'm super excited.

Hope your nights are going good. I am still not feeling good but jamming + virtual snake hunting is keeping me busy!

#programming#data science#data scientist#data analysis#neural networks#image processing#artificial intelligence#machine learning#snakes#snake#reptiles#reptile#herpetology#animals#biology#science#programming project#dataset#kaggle#coding

43 notes

Text

gripping the cyberpunk fandom by the scruff of its neck. be ill about the inherent intimacy of cyberware or im turning this car around

#cyberpunk 2077#CMON BABEY LETS GET FREAKY!!!#may i remind you of the data port post. huh? the neural link?#have u forgotten her so soon?#my post

22 notes

Text

The Mathematical Foundations of Machine Learning

In the world of artificial intelligence, machine learning is a crucial component that enables computers to learn from data and improve their performance over time. However, the math behind machine learning is often shrouded in mystery, even for those who work with it every day. Anil Ananthaswamy, author of the book "Why Machines Learn," sheds light on the elegant mathematics that underlies modern AI, and his journey is a fascinating one.

Ananthaswamy's interest in machine learning began when he started writing about it as a science journalist. His software engineering background sparked a desire to understand the technology from the ground up, leading him to teach himself coding and build simple machine learning systems. This exploration eventually led him to appreciate the mathematical principles that underlie modern AI. As Ananthaswamy notes, "I was amazed by the beauty and elegance of the math behind machine learning."

Ananthaswamy argues that the elegance of machine learning mathematics goes beyond the commonly cited subfields of calculus, linear algebra, probability, and statistics. He points to specific theorems and proofs, such as the 1959 proof related to artificial neural networks, as examples of that elegance. Gradient descent, a fundamental machine learning algorithm, is another: it optimizes model parameters by repeatedly stepping them in the direction that reduces the loss.
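To make the gradient descent idea concrete, here is a minimal sketch in plain Python. It is not taken from the book or the interview: the quadratic loss, the starting point, the learning rate, and the function names are all assumptions chosen for illustration.

```python
# Minimal gradient descent on a one-parameter quadratic loss.
# The loss, learning rate, and step count are illustrative assumptions.

def loss(w: float) -> float:
    """Quadratic loss whose minimum sits at w = 3."""
    return (w - 3.0) ** 2

def grad(w: float) -> float:
    """Derivative of the loss with respect to w."""
    return 2.0 * (w - 3.0)

def gradient_descent(w0: float, lr: float = 0.1, steps: int = 100) -> float:
    """Repeatedly move w a small step against the gradient."""
    w = w0
    for _ in range(steps):
        w -= lr * grad(w)
    return w

w_final = gradient_descent(w0=0.0)
print(w_final, loss(w_final))
```

With these settings the distance to the minimum shrinks by a constant factor on every step, so the parameter ends up very close to 3. Real models apply the same update to millions of parameters at once.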

Ananthaswamy emphasizes the need for a broader understanding of machine learning among non-experts, including science communicators, journalists, policymakers, and users of the technology. He believes that only when we understand the math behind machine learning can we critically evaluate its capabilities and limitations. This is crucial in today's world, where AI is increasingly being used in various applications, from healthcare to finance.

A deeper understanding of machine learning mathematics has significant implications for society. It can help us evaluate AI systems more effectively, develop more transparent and explainable AI systems, address AI bias, and ensure fairness in decision-making. As Ananthaswamy notes, "The math behind machine learning is not just a tool, but a way of thinking that can help us create more intelligent and more human-like machines."

The Elegant Math Behind Machine Learning (Machine Learning Street Talk, November 2024)

Matrices are used to organize and process complex data, such as images, text, and user interactions, making them a cornerstone in applications like Deep Learning (e.g., neural networks), Computer Vision (e.g., image recognition), Natural Language Processing (e.g., language translation), and Recommendation Systems (e.g., personalized suggestions). To leverage matrices effectively, AI relies on key mathematical concepts like Matrix Factorization (for dimension reduction), Eigendecomposition (for stability analysis), Orthogonality (for efficient transformations), and Sparse Matrices (for optimized computation).

The Applications of Matrices - What I wish my teachers told me way earlier (Zach Star, October 2019)

Transformers are a type of neural network architecture introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. They revolutionized the field of NLP by outperforming traditional recurrent neural network (RNN) and convolutional neural network (CNN) architectures in sequence-to-sequence tasks. The primary innovation of transformers is the self-attention mechanism, which allows the model to weigh the importance of different words in the input data irrespective of their positions in the sentence. This is particularly useful for capturing long-range dependencies in text, which was a challenge for RNNs due to vanishing gradients.

Transformers have become the standard for machine translation tasks, offering state-of-the-art results in translating between languages. They are used for both abstractive and extractive summarization, generating concise summaries of long documents. Transformers help in understanding the context of questions and identifying relevant answers from a given text. By analyzing the context and nuances of language, transformers can accurately determine the sentiment behind text.

While initially designed for sequential data, variants of transformers (e.g., Vision Transformers, ViT) have been successfully applied to image recognition tasks, treating images as sequences of patches. Transformers are used to improve the accuracy of speech-to-text systems by better modeling the sequential nature of audio data. The self-attention mechanism can be beneficial for understanding patterns in time series data, leading to more accurate forecasts.
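The self-attention step described above can be sketched in a few lines of plain Python. This is a simplified illustration, not the full architecture from the paper: the learned query, key, and value projections are omitted (the same vectors are passed in for all three), there is only one attention head, and the toy input `X` and the function names are assumptions.

```python
import math

def softmax(xs):
    """Turn a list of scores into weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        all_weights.append(w)
        # Each output is a weighted average of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(len(V[0]))])
    return outputs, all_weights

# Three toy "token" vectors; projections are skipped, so Q = K = V = X.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(X, X, X)
for row in weights:
    print([round(w, 3) for w in row])
```

Every token attends to every other token in a single step, regardless of distance, which is why long-range dependencies are easier to capture than in an RNN. Production code would use an array library and learned projection matrices instead of nested Python lists.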

Attention is all you need (Umar Jamil, May 2023)

Geometric deep learning is a subfield of deep learning that focuses on the study of geometric structures and their representation in data. This field has gained significant attention in recent years.

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)

Traditional Geometric Deep Learning, while powerful, often relies on the assumption of smooth geometric structures. However, real-world data frequently resides in non-manifold spaces where such assumptions are violated. Topology, with its focus on the preservation of proximity and connectivity, offers a more robust framework for analyzing these complex spaces. The inherent robustness of topological properties against noise further solidifies the rationale for integrating topology into deep learning paradigms.

Cristian Bodnar: Topological Message Passing (Michael Bronstein, August 2022)

Sunday, November 3, 2024

#machine learning#artificial intelligence#mathematics#computer science#deep learning#neural networks#algorithms#data science#statistics#programming#interview#ai assisted writing#machine art#Youtube#lecture

4 notes

Text

surely it’s not too late for kikoku to use his technology to like………. do just that??????

open up a chill vibes restaurant named ‘chiaroscuro’ where its chains are food stalls all over japan serving whatever the heck the chef feels like, and they’re all run by akira and satoru’s drone clones????? like it’s still possible right??????

#this is vee speaking#hypanispoilers#kikoku destroys the glass apparatus that was containing their data ig????#like it looked like a neural network so that’s what i assume was processing and building chiaro and scuro#but it’s not like all that data got wiped just because they destroyed the server room right?????#it’ll probably take another two years but kikoku can rebuild them!!!!!! and repurpose them so they can run a restaurant!!!!!!!!#if they can interact with microphones and cars and physical stuff then they can cook and serve!!!!!!!#build a restaurant empire kikoku!!!!!!!!!!!!! make their legacy last far beyond everyone’s lives!!!!!!!! it can’t be too late right??????#also akira was one hell of a romantic lol a restaurant where young adults can talk about their romance????#no wonder chiaro told scuro they’ll always be together romance is in his dna 🥺🥺🥺

15 notes

Text

i wanted to try out using SQL for managing my work data because Python is a real bitch about putting different data types into one table most of the time, but so far it is so frustrating i'm close to tearing every object around me to shreds. every step of the way, i run into some new problems. and stackexchange is mostly unhelpful because they just go "it's simple just execute these 20 commands" WHERE. BITCH WHERE. cmd doesn't know what "mysql" is. mysql command line doesn't have "global" defined WHAT ARE YOU TALKING ABOUT TELL MEEEEEEEEE I'M SHTUPID

#i will give it 1 (one) more try tomorrow by trying to follow some youtube tutorials to a T but that's it#the thing is that these are software problems not me problems. yes it's a different language but it's googlable#but WHAT DO YOU MEAN I AM NOT ALLOWED TO LOAD DATA FROM FILES ONTO THE SERVER#WITHOUT PERFORMING SOME NEURAL SURGERY ON IT FIRST#ARE YOU OUT OF YOUR FUCKING MIND#I DON'T NEED YOUR SERVER BS I JUST NEED TO PUT THESE TABLES INTO THE FKN DATABASE AAAAAAAAAAAAAAAAAAAAAAAA#you really don't have to care

3 notes

Text

Romanian AI Helps Farmers and Institutions Get Better Access to EU Funds - Technology Org

New Post has been published on https://thedigitalinsider.com/romanian-ai-helps-farmers-and-institutions-get-better-access-to-eu-funds-technology-org/

A Romanian state agency overseeing rural investments has adopted artificial intelligence to aid farmers in accessing European Union funds.

Gardening based on aquaculture technology. Image credit: sasint via Pixabay, free license

The Agency for Financing Rural Investments (AFIR) revealed that it integrated robots from software automation firm UiPath approximately two years ago. These robots have assumed the arduous task of accessing state databases to gather land registry and judicial records required by farmers, entrepreneurs, and state entities applying for EU funding.

George Chirita, director of AFIR, emphasized that AI-driven automation has been groundbreaking in expediting the organizational processes that matter most to farmers, thereby enhancing their efficiency. Since the introduction of these robots, AFIR has managed financing requests totaling 5.32 billion euros ($5.75 billion) from over 50,000 beneficiaries, including farmers, businesses, and local institutions.

The implementation of robots has notably saved AFIR staff approximately 784 days’ worth of document searches. Over the past two decades, AFIR has disbursed funds amounting to 21 billion euros.

Despite Romania’s burgeoning status as a technology hub with a highly skilled workforce, the nation continues to lag behind its European counterparts in offering digital public services to citizens and businesses, and in effectively accessing EU development funds. Eurostat data from 2023 indicated that only 28% of Romanians possessed basic digital skills, significantly below the EU average of 54%. Moreover, Romania’s digital public services scored 45, well below the EU average of 84.

UiPath, the Romanian company valued at $13.3 billion following its public listing on the New York Stock Exchange, also provides automation solutions to agricultural agencies in other countries, including Norway and the United States.

Written by Vytautas Valinskas

#000#2023#A.I. & Neural Networks news#ai#aquaculture#artificial#Artificial Intelligence#artificial intelligence (AI)#Authored post#automation#billion#data#databases#development#efficiency#eu#EU funds#european union#Featured technology news#Fintech news#Funding#gardening#intelligence#investments#it#new york#Norway#Other#Robots#Romania

2 notes

Text

An ART concept sketch dump is incoming! Possibly tonight, provided I can figure out the coloring...

It's a very self indulgent design 😅 and it's been a work in progress for like. Three days. Two of which were spent collecting all sorts of references for inspiration....

#im laugh-crying at myself for it#i had drawn 4 or so different versions before i realized where the design inspo was coming from 🙈#i mean theres like.... a half dozen other concepts i was using for inspiration too#and not ONE of them was the Epiphany lmao and yeT#the murderbot diaries#fox thoughts#design is inspired by: glyph from mass effect; neural networks and data visualization; angel True Forms#uhhhh owlbears and lorge griffins? annnnd venom#apparently#tbd

6 notes

Text

Day 3/100 days of productivity | Wed 21 Feb, 2024

Long day at work, chatted with colleagues about career paths in data science, met with my boss for a performance evaluation (it went well!). Worked on writing some Quarto documentation for how to build one of my Tableau dashboards, since it keeps breaking and I keep referring to my notes 😳

In non-work productivity, I completed the ‘Logistic Regression as a Neural Network’ module of Andrew Ng’s Deep Learning course on Coursera and refreshed my understanding of backpropagation using the calculus chain rule. Fun!

#100 days of productivity#data science#deep learning#neural network#quarto#tableau#note taking#chain rule

3 notes

Photo

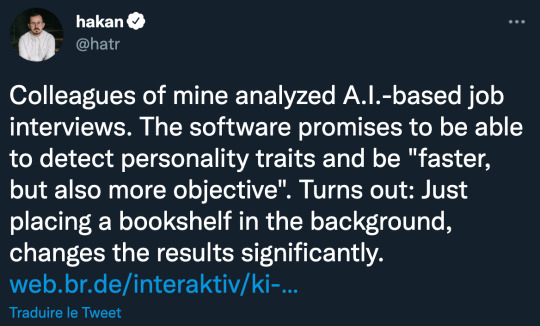

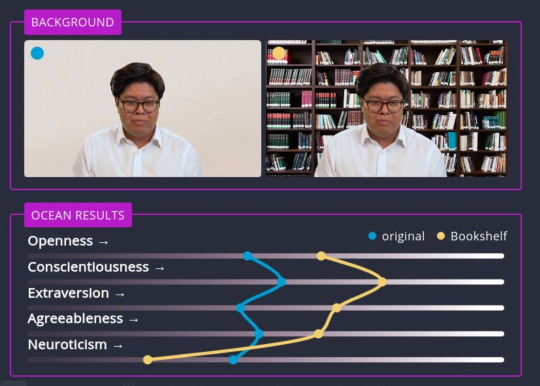

remember - it is literally impossible for the machine to be more morally correct than humans, because everything it spits out was once said by a human before. only another human can out-moral what humans have already said.

if anything, it does TOO good of a job at pointing out all our flaws, biases, stereotypes, and bigotry, because bigots yell loud and often.

if you give AI the prompt for a "normal" human, the human will be white, cis, male, and EVERYTHING ELSE that favors the privileged, because they have the largest online presence. we're allowing the machine to sit stagnant in heteronormativity, misogyny, racism, ableism, etc.

it's impossible for something trained on HUMAN data to ever outdo humans on anything; it is STAGNATING US

@hatr @fasterthanlime

#NEURAL TOXICITY#i know that's not a popular term for it BUT MACHINES CANNOT POSSIBLY STAY MORALLY PURE IF THEY ARE TRAINED ON HUMAN DATA RJEKFJELGJT#i hate ai#machine learning

102K notes

Text

Deep Learning, Deconstructed: A Physics-Informed Perspective on AI’s Inner Workings

Dr. Yasaman Bahri’s seminar offers a profound glimpse into the complexities of deep learning, merging empirical successes with theoretical foundations. Dr. Bahri’s distinct background, weaving together statistical physics, machine learning, and condensed matter physics, uniquely positions her to dissect the intricacies of deep neural networks. Her journey from a physics-centric PhD at UC Berkeley, influenced by computer science seminars, exemplifies the burgeoning synergy between physics and machine learning, underscoring the value of interdisciplinary approaches in elucidating deep learning’s mysteries.

At the heart of Dr. Bahri’s research lies the intriguing equivalence between neural networks and Gaussian processes in the infinite width limit, facilitated by the Central Limit Theorem. This theorem, by implying that the distribution of outputs from a neural network will approach a Gaussian distribution as the width of the network increases, provides a probabilistic framework for understanding neural network behavior. The derivation of Gaussian processes from various neural network architectures not only yields state-of-the-art kernels but also sheds light on the dynamics of optimization, enabling more precise predictions of model performance.
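A small simulation can illustrate the Central Limit Theorem argument. This is only a sketch under stated assumptions, not the seminar's derivation: it uses a one-hidden-layer tanh network with standard normal weights, a 1/sqrt(width) output scaling, a single fixed input, and excess kurtosis (zero for a Gaussian) as a rough measure of non-Gaussianity. All names are hypothetical.

```python
import math
import random

def network_output(x, width, rng):
    """Output of a randomly initialized one-hidden-layer tanh network."""
    total = 0.0
    for _ in range(width):
        w = rng.gauss(0.0, 1.0)  # input-to-hidden weight
        v = rng.gauss(0.0, 1.0)  # hidden-to-output weight
        total += v * math.tanh(w * x)
    return total / math.sqrt(width)  # scaling keeps the variance stable

def excess_kurtosis(samples):
    """Fourth standardized moment minus 3; zero for a Gaussian."""
    n = len(samples)
    mean = sum(samples) / n
    m2 = sum((s - mean) ** 2 for s in samples) / n
    m4 = sum((s - mean) ** 4 for s in samples) / n
    return m4 / (m2 ** 2) - 3.0

rng = random.Random(0)
# Sample the output at x = 1.0 over many random initializations.
narrow = [network_output(1.0, 1, rng) for _ in range(2000)]
wide = [network_output(1.0, 256, rng) for _ in range(2000)]
print("width 1:  ", excess_kurtosis(narrow))
print("width 256:", excess_kurtosis(wide))
```

The output is a sum of many independent terms, one per hidden unit, so as the width grows its distribution over initializations should look increasingly Gaussian and the excess kurtosis should move toward zero.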

The discussion on scaling laws is multifaceted, encompassing empirical observations, theoretical underpinnings, and the intricate dance between model size, computational resources, and the volume of training data. While model quality often improves monotonically with these factors, reaching a point of diminishing returns, understanding these dynamics is crucial for efficient model design. Interestingly, the strategic selection of data emerges as a critical factor in surpassing the limitations imposed by power-law scaling, though this approach also presents challenges, including the risk of introducing biases and the need for domain-specific strategies.

As the field of deep learning continues to evolve, Dr. Bahri’s work serves as a beacon, illuminating the path forward. The imperative for interdisciplinary collaboration, combining the rigor of physics with the adaptability of machine learning, cannot be overstated. Moreover, the pursuit of personalized scaling laws, tailored to the unique characteristics of each problem domain, promises to revolutionize model efficiency. As researchers and practitioners navigate this complex landscape, they are left to ponder: What unforeseen synergies await discovery at the intersection of physics and deep learning, and how might these transform the future of artificial intelligence?

Yasaman Bahri: A First-Principle Approach to Understanding Deep Learning (DDPS Webinar, Lawrence Livermore National Laboratory, November 2024)

Sunday, November 24, 2024

#deep learning#physics informed ai#machine learning research#interdisciplinary approaches#scaling laws#gaussian processes#neural networks#artificial intelligence#ai theory#computational science#data science#technology convergence#innovation in ai#webinar#ai assisted writing#machine art#Youtube

3 notes

Text

youtube

BEAUTIFUL

#forbidden AI footage#monster seahorse#CRISPR DNA sea creature#deep sea laser eyes#neural network ocean#regeneration power#leaked lab data#RELEASE THE FILES#extinct mega-seahorse#deepfake ocean#sci-fi reality#viral discovery#ocean mystery#government cover-up#ancient sea monsters#AI paleontology#hidden ocean truth#forbidden biology#YouTube conspiracy#TikTok banned#Youtube

0 notes

Text

From Recurrent Networks to GPT-4: Measuring Algorithmic Progress in Language Models - Technology Org

New Post has been published on https://thedigitalinsider.com/from-recurrent-networks-to-gpt-4-measuring-algorithmic-progress-in-language-models-technology-org/

In 2012, the best language models were small recurrent networks that struggled to form coherent sentences. Fast forward to today, and large language models like GPT-4 outperform most students on the SAT. How has this rapid progress been possible?

Image credit: MIT CSAIL

In a new paper, researchers from Epoch, MIT FutureTech, and Northeastern University set out to shed light on this question. Their research breaks down the drivers of progress in language models into two factors: scaling up the amount of compute used to train language models, and algorithmic innovations. In doing so, they perform the most extensive analysis of algorithmic progress in language models to date.

Their findings show that due to algorithmic improvements, the compute required to train a language model to a certain level of performance has been halving roughly every 8 months. “This result is crucial for understanding both historical and future progress in language models,” says Anson Ho, one of the two lead authors of the paper. “While scaling compute has been crucial, it’s only part of the puzzle. To get the full picture you need to consider algorithmic progress as well.”
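The arithmetic behind an 8-month halving time can be worked through as follows. This is a back-of-the-envelope sketch that assumes a smooth exponential trend; the function names are hypothetical, and the paper's fitted model is more involved.

```python
def compute_fraction(months: float, halving_time: float = 8.0) -> float:
    """Fraction of the original compute needed after `months`,
    assuming the requirement halves every `halving_time` months."""
    return 0.5 ** (months / halving_time)

def annual_efficiency_gain(halving_time: float = 8.0) -> float:
    """Factor by which algorithmic efficiency improves in 12 months."""
    return 2.0 ** (12.0 / halving_time)

for months in (8, 16, 24):
    print(months, "months:", compute_fraction(months))
print("gain per year:", annual_efficiency_gain())
```

Under this assumption the compute needed for a fixed level of performance falls to one eighth after two years, and the yearly efficiency gain is 2^1.5, roughly 2.8x.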

The paper’s methodology is inspired by “neural scaling laws”: mathematical relationships that predict language model performance given certain quantities of compute, training data, or language model parameters. By compiling a dataset of over 200 language models since 2012, the authors fit a modified neural scaling law that accounts for algorithmic improvements over time.

Based on this fitted model, the authors do a performance attribution analysis, finding that scaling compute has been more important than algorithmic innovations for improved performance in language modeling. In fact, they find that the relative importance of algorithmic improvements has decreased over time. “This doesn’t necessarily imply that algorithmic innovations have been slowing down,” says Tamay Besiroglu, who also co-led the paper.

“Our preferred explanation is that algorithmic progress has remained at a roughly constant rate, but compute has been scaled up substantially, making the former seem relatively less important.” The authors’ calculations support this framing, where they find an acceleration in compute growth, but no evidence of a speedup or slowdown in algorithmic improvements.

By modifying the model slightly, they also quantified the significance of a key innovation in the history of machine learning: the Transformer, which has become the dominant language model architecture since its introduction in 2017. The authors find that the efficiency gains offered by the Transformer correspond to almost two years of algorithmic progress in the field, underscoring the significance of its invention.

While extensive, the study has several limitations. “One recurring issue we had was the lack of quality data, which can make the model hard to fit,” says Ho. “Our approach also doesn’t measure algorithmic progress on downstream tasks like coding and math problems, which language models can be tuned to perform.”

Despite these shortcomings, their work is a major step forward in understanding the drivers of progress in AI. Their results help shed light on how future developments in AI might play out, with important implications for AI policy. “This work, led by Anson and Tamay, has important implications for the democratization of AI,” said Neil Thompson, a coauthor and Director of MIT FutureTech. “These efficiency improvements mean that each year levels of AI performance that were out of reach become accessible to more users.”

“LLMs have been improving at a breakneck pace in recent years. This paper presents the most thorough analysis to date of the relative contributions of hardware and algorithmic innovations to the progress in LLM performance,” says Open Philanthropy Research Fellow Lukas Finnveden, who was not involved in the paper.

“This is a question that I care about a great deal, since it directly informs what pace of further progress we should expect in the future, which will help society prepare for these advancements. The authors fit a number of statistical models to a large dataset of historical LLM evaluations and use extensive cross-validation to select a model with strong predictive performance. They also provide a good sense of how the results would vary under different reasonable assumptions, by doing many robustness checks. Overall, the results suggest that increases in compute have been and will keep being responsible for the majority of LLM progress as long as compute budgets keep rising by ≥4x per year. However, algorithmic progress is significant and could make up the majority of progress if the pace of increasing investments slows down.”

Written by Rachel Gordon

Source: Massachusetts Institute of Technology

#A.I. & Neural Networks news#Accounts#ai#Algorithms#Analysis#approach#architecture#artificial intelligence (AI)#budgets#coding#data#deal#democratization#democratization of AI#Developments#efficiency#explanation#Featured information processing#form#Full#Future#GPT#GPT-4#growth#Hardware#History#how#Innovation#innovations#Invention

4 notes

Text

happy mothers day to me and my 50% accuracy neural network....

#uwaa im so proud of My baby..... trained her basically all by myseif even tho its a 4person group :3#but we laugh. but we laugh.#and! i actually love her so much shes so special to meee#yes i am. currently overwriting her to get het to be even better! but. that shouldnt neglect all of yesterday ive spend with her....#& the fucking hoursss before that. waugh#its fine its absolutely my bad for having a pc that takes AGES to run this shit#=3=#sillyposting#guy likes his study........ this is unheard of.......#waughhhh i hope she grows up to 60 maybe even 70 overnight! maybe maybe.....#for the run im doing rn shes at 30% at epoch 14 which i know means nothing but also... thats so good... uwaaaa#I JUST WANT TO BRAG THAT I. AM TRAINING. MY OWN NEURAL NETWORK. FOR IMAGE RECOGNITION. HOW COOL.....#fuckck 15y/o me was so fucking smart when i knew what i wanted. why did 17y/o me forget.... (<- bc if the horrors)#but its so awesome lil me went on an excursion for women in stem day or whatevs to the uni im currently attending and ^-^ wow ist life aweso#YIPPEEEE#fuck my baka life /pos. i will stay smiling...#EVEN THO I DONT HAVE WIFI RN AND HAVE TO USE MY MONTHLY WHOLE 4GB OF DATA smh..... whateber....#my baby............#uwaa im so proud of my girl... AND! myself yayyyay i deserve so much credit for this yippeee

1 note
