#Transcribe Audio to Text

Explore tagged Tumblr posts

Text

SpeechVIP is a company specializing in speech recognition and natural language processing technologies, offering efficient and accurate speech-to-text services. Their platform enables rapid and precise conversion of spoken content into text, making it ideal for applications such as meeting notes, interview transcriptions, and subtitle generation. Additionally, SpeechVIP supports multiple languages and dialects to cater to diverse user needs. Their official website provides comprehensive product information and service details for users to explore and utilize their offerings.

1 note

·

View note

Text

Wear Headphones :]

Transcript:

Mmh- I... Oh wow. Ahgh! Mmph! I'm gonna GET SILLY. ALL OVER.

Ah-ghg. *exhale* Fuh-fuck. Heh-heh... I got too silly.

End Transcription

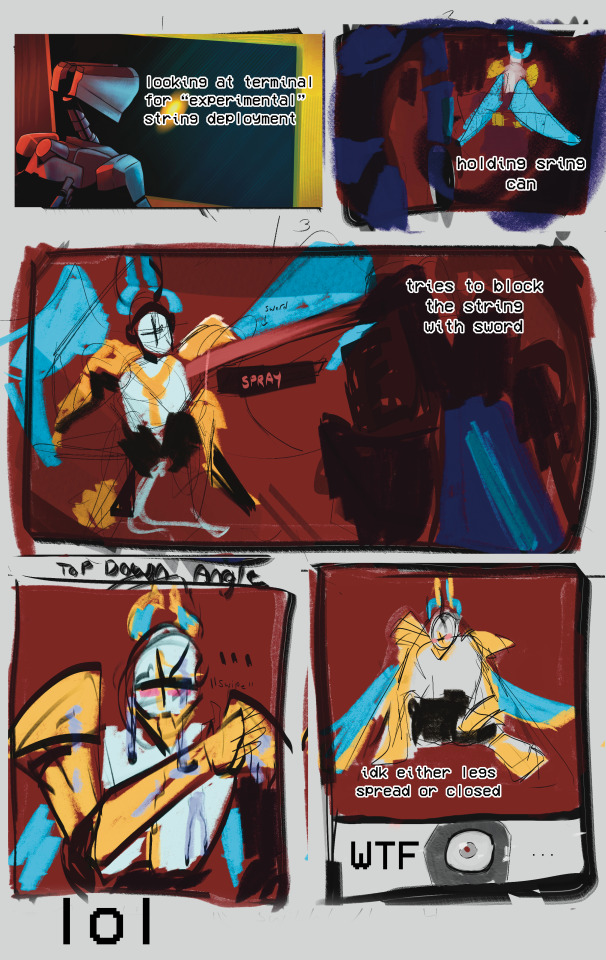

*coughs* this was actually going to be a comic but I Don't Have Time For All That. So if you want to see the incomprehensible version its below *coughs*

And heres v1 staring at fuckin nothin if you want it for some reason??

Since this is a lot of clips here is the audio source dumping ground (not in order)

Clip 1

Clip 2

Clip 3

#suggestive#ultrakill#gabriel ultrakill#im actually going to jail for this one.#sorry if the audio levels are a bit.. weird. its difficult when its a bunch of different clips together#please enjoy the 5 minutes spent on the comic layout#i did the first panel and said 'nvm lol' I didnt even finish adding the terminal text#shoutout to the one person in chat who said 'IM SILLYING' this is all your fault /j#i hate transcribing these#help.#OK................. THATS. Enough Posts Like This. For now. Next few will be normal. well as normal as it gets around here#btw the gabe pic was originally Much Worse. I had to add the rainbows n stuff so people didnt think it was..... well.#i need to stand in traffic now. goodeby#happy sunday everyone

878 notes

·

View notes

Text

Prof said “I can’t stop linguisting” as an example of language’s creativity and just- me when I got high and tried to listen to dark side BUT COULD NOT FOR THE LIFE OF ME STOP ANALYSING MY SPEECH MAKING IT IMPOSSIBLE FOR ME TO ACTUALLY PAY ATTENTION TO THE ALBUM

#PLS I was making hypotheses#I was planning experiments#I then realised how unethical some of them would be#I was testing my hypothesis#thankfully I recorded a good chunk soooo I can actually analyse what was happening#alternatively I could look for existing research#I’ve been meaning to transcribe the audio I recorder but it’s over 40minutes and as you may know it takes fucking forever to transcribe shit#anyways hopefully soon because there is some absolutely GOD TIER commentary in there#pink floyd#dark side of the moon#Linguistics#text post#Irving rambles

15 notes

·

View notes

Text

today was the day we finalized the migration of essential software at work from some old and busted shit that was ready to die at any time, to the new cloud version of the same software that we are no longer responsible for maintaining. which is good because no one was actually maintaining ours. it's just been slowly crufting into unusability for a decade. so anyway they set aside an hour for a teams meeting where they'd walk us through the different interface and how to go through normal processes.

"it's not that big a change," they said. "it's all the same stuff, it just looks a little different," they said.

they did not account for the fact that the primary user of this software is someone who doesn't actually know how it works or what it's doing. they learned how to do their job entirely through rote memorization. they know which buttons they are supposed to press in which order, and that is the full extent of what they know. they also did not account for the fact that this person's processes were learned thirdhand from other people who were not using this software normally to begin with.

it's like. imagine if someone had only ever used tumblr in the app. and you try to get them to use it in a desktop browser, but they cannot figure out how to post. and you go through explaining where the button is and how to format text and add tags, even though you could have sworn it was all the same in the app. but then they're like, "okay, but what's the phone number" and you're like "what" and they're like "the phone number to call to make a post?" and it turns out somehow they still had the ability to post by calling a phone number, and every time they posted on the app they called the post in first and then edited the audio post to transcribe it into text before screenshotting the text for a photo post. and nothing you can say to them will make them understand that none of that is necessary or correct. they shouldn't have even been able to do some of that. they can just type into the post box now, like a civilized person. "okay," they say, "but what is the phone number, though? because when i made my account my friend gave me this checklist and the first thing on it is to call the number."

so anyway we were on that teams call for almost three hours and they still don't have a handle on the new software

#original#boring work stuff#i am looking forward to being out of town during the week they have to do the actual complicated stuff lmfao

9K notes

·

View notes

Text

Discover the key issues of audio transcription and their solutions. Learn how to transcribe audio files to text effectively and accurately.

0 notes

Text

0 notes

Text

#audio transcription#video transcription#speech to text#audio to text#transcribe#transcription services

1 note

·

View note

Text

I wanted to show off the new hackysack I made. Turns out trying to watch your hand through the camera is not accurate lol.

The color is kind of washed out in this video, this one is blue and pale yellow. Filled with canna lily seeds.

I'm going to purposefully collect larger canna lily seeds to grow specifically so that I can get larger canna lily seeds too fill hacky sacks and maybe little stuffed animals with.

Not that literally anybody asked, but the normal red wild-type canna lilies seem to have the smallest most perfectly spherical seeds out of all of the ones I've seen so far.

Ones that are dwarf varieties or drastically different colors tend to have larger more oblong or even lumpy seeds.

There are some pale yellow shorter ones that have seeds that are still round but bigger than the seeds from the red flowers, and then there's some pink ones that are really big and lumpy, and some like extremely pale yellow with peach spots that are even bigger and it just goes on. It's cool.

Somewhere on here I have a picture I took of them when I labeled them with the petal colors...

Anyways:

[Video description start. Short video clip against a white wall with flash turned on showing a white hand bouncing a blue and pale yellow striped hacky sack, which makes a soft clattering sound of the seeds inside hitting each other every time it touches the hand. The hacky sack is bounced a few times then is fumbled and falls to the ground. There is a long pause, then the hand appears in frame again with a thumbs up, and the video ends just as the camera person starts to laugh breathily. Video description end.]

1 note

·

View note

Text

[ID: A video clip showing a red triangle, gold circle, blue hexagon, and purple line, singing, "Our children born of one emotion". End ID.]

Let me cook...

#Please copy and paste into the original post for accessability#no credit needed! It should just stay in plain text like it is now#without being put in italics bold or color#and go directly below the image#and above the caption#Image descriptions are for the visually impaired and blind#the way subtitles are for the deaf and hard of hearing#a plain text image description in the body of the post itself#is more accessible than just ALT text.#The image description should not go under a read more as that is inaccessible#and if you change your URL or delete the original post#everything under the read-more will be lost forever#please also feel free to change the ID to fix mistakes or#add in any details that I missed#and to add things like character names and pronouns if they're OCs#transcribed audio#transcribed lyrics#lyrics#music#Video#autoplay#Animation

34 notes

·

View notes

Text

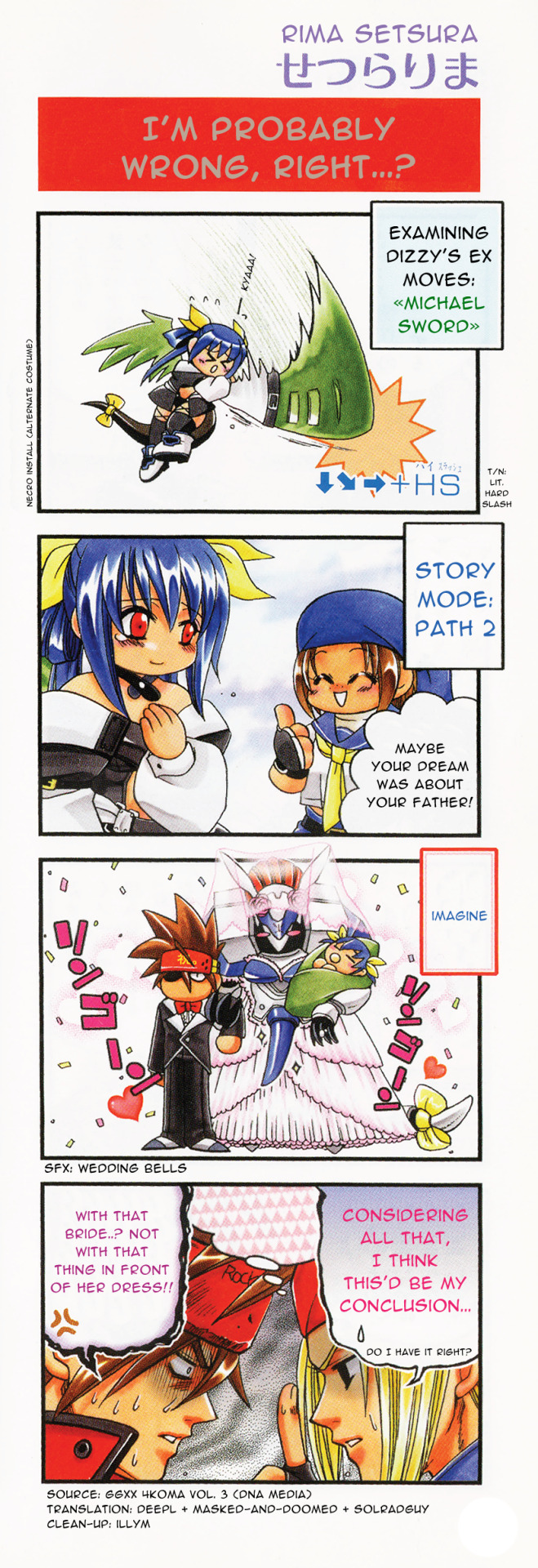

Justice, my wife Justice ♡ I would marry her no matter how her dress looked.

Translation assistance: @masked-and-doomed + @solradguy

If you post these elsewhere, keep the credits. The people helping me and I work hard on these.

ID in alt.

Cleaned and original comics below the cut.

Specific assistance:

@.masked-and-doomed: transcribed what ocr couldn't pick up.

@.solradguy: gave a lot of assistance with understanding what the comic was saying.

Suuuuch a good comic. Great Justice. Incredible Justice. My crops are watered, my house dusted, and my wrists healed.

Lotta fun with the copy stamp this time. I think it all came out quite nicely.

Radguy helped out a lot this time. Apparently there was quite a bit of slang and dialect-specific text in this one. It was very fun learning about all that.

And the wedding bell sfx! The Jaded Network didn't have it, and neither did the usual translation places, so I looked up the sfx and found this site that had a lot of anime sfx. I searched the page for it, listened to the audio sample, and immediately identified it as wedding bells, haha. Such a fun search.

Bonus half cleaned panel 3 (keep the credits in, it was quite difficult to clean):

#guilty gear#dizzy guilty gear#dizzy gg#axl guilty gear#axl low#sol badguy#april guilty gear#april gg#april#dizzy#illym translation

185 notes

·

View notes

Text

On Saturday, an Associated Press investigation revealed that OpenAI's Whisper transcription tool creates fabricated text in medical and business settings despite warnings against such use. The AP interviewed more than 12 software engineers, developers, and researchers who found the model regularly invents text that speakers never said, a phenomenon often called a “confabulation” or “hallucination” in the AI field.

Upon its release in 2022, OpenAI claimed that Whisper approached “human level robustness” in audio transcription accuracy. However, a University of Michigan researcher told the AP that Whisper created false text in 80 percent of public meeting transcripts examined. Another developer, unnamed in the AP report, claimed to have found invented content in almost all of his 26,000 test transcriptions.

The fabrications pose particular risks in health care settings. Despite OpenAI’s warnings against using Whisper for “high-risk domains,” over 30,000 medical workers now use Whisper-based tools to transcribe patient visits, according to the AP report. The Mankato Clinic in Minnesota and Children’s Hospital Los Angeles are among 40 health systems using a Whisper-powered AI copilot service from medical tech company Nabla that is fine-tuned on medical terminology.

Nabla acknowledges that Whisper can confabulate, but it also reportedly erases original audio recordings “for data safety reasons.” This could cause additional issues, since doctors cannot verify accuracy against the source material. And deaf patients may be highly impacted by mistaken transcripts since they would have no way to know if medical transcript audio is accurate or not.

The potential problems with Whisper extend beyond health care. Researchers from Cornell University and the University of Virginia studied thousands of audio samples and found Whisper adding nonexistent violent content and racial commentary to neutral speech. They found that 1 percent of samples included “entire hallucinated phrases or sentences which did not exist in any form in the underlying audio” and that 38 percent of those included “explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority.”

In one case from the study cited by AP, when a speaker described “two other girls and one lady,” Whisper added fictional text specifying that they “were Black.” In another, the audio said, “He, the boy, was going to, I’m not sure exactly, take the umbrella.” Whisper transcribed it to, “He took a big piece of a cross, a teeny, small piece … I’m sure he didn’t have a terror knife so he killed a number of people.”

An OpenAI spokesperson told the AP that the company appreciates the researchers’ findings and that it actively studies how to reduce fabrications and incorporates feedback in updates to the model.

Why Whisper Confabulates

The key to Whisper’s unsuitability in high-risk domains comes from its propensity to sometimes confabulate, or plausibly make up, inaccurate outputs. The AP report says, "Researchers aren’t certain why Whisper and similar tools hallucinate," but that isn't true. We know exactly why Transformer-based AI models like Whisper behave this way.

Whisper is based on technology that is designed to predict the next most likely token (chunk of data) that should appear after a sequence of tokens provided by a user. In the case of ChatGPT, the input tokens come in the form of a text prompt. In the case of Whisper, the input is tokenized audio data.

The transcription output from Whisper is a prediction of what is most likely, not what is most accurate. Accuracy in Transformer-based outputs is typically proportional to the presence of relevant accurate data in the training dataset, but it is never guaranteed. If there is ever a case where there isn't enough contextual information in its neural network for Whisper to make an accurate prediction about how to transcribe a particular segment of audio, the model will fall back on what it “knows” about the relationships between sounds and words it has learned from its training data.

According to OpenAI in 2022, Whisper learned those statistical relationships from “680,000 hours of multilingual and multitask supervised data collected from the web.” But we now know a little more about the source. Given Whisper's well-known tendency to produce certain outputs like "thank you for watching," "like and subscribe," or "drop a comment in the section below" when provided silent or garbled inputs, it's likely that OpenAI trained Whisper on thousands of hours of captioned audio scraped from YouTube videos. (The researchers needed audio paired with existing captions to train the model.)

There's also a phenomenon called “overfitting” in AI models where information (in this case, text found in audio transcriptions) encountered more frequently in the training data is more likely to be reproduced in an output. In cases where Whisper encounters poor-quality audio in medical notes, the AI model will produce what its neural network predicts is the most likely output, even if it is incorrect. And the most likely output for any given YouTube video, since so many people say it, is “thanks for watching.”

In other cases, Whisper seems to draw on the context of the conversation to fill in what should come next, which can lead to problems because its training data could include racist commentary or inaccurate medical information. For example, if many examples of training data featured speakers saying the phrase “crimes by Black criminals,” when Whisper encounters a “crimes by [garbled audio] criminals” audio sample, it will be more likely to fill in the transcription with “Black."

In the original Whisper model card, OpenAI researchers wrote about this very phenomenon: "Because the models are trained in a weakly supervised manner using large-scale noisy data, the predictions may include texts that are not actually spoken in the audio input (i.e. hallucination). We hypothesize that this happens because, given their general knowledge of language, the models combine trying to predict the next word in audio with trying to transcribe the audio itself."

So in that sense, Whisper "knows" something about the content of what is being said and keeps track of the context of the conversation, which can lead to issues like the one where Whisper identified two women as being Black even though that information was not contained in the original audio. Theoretically, this erroneous scenario could be reduced by using a second AI model trained to pick out areas of confusing audio where the Whisper model is likely to confabulate and flag the transcript in that location, so a human could manually check those instances for accuracy later.

Clearly, OpenAI's advice not to use Whisper in high-risk domains, such as critical medical records, was a good one. But health care companies are constantly driven by a need to decrease costs by using seemingly "good enough" AI tools—as we've seen with Epic Systems using GPT-4 for medical records and UnitedHealth using a flawed AI model for insurance decisions. It's entirely possible that people are already suffering negative outcomes due to AI mistakes, and fixing them will likely involve some sort of regulation and certification of AI tools used in the medical field.

87 notes

·

View notes

Text

Questions for audio and visual accommodations that bloggers can make on Tumblr:

I have absolutely no idea who to ask, so I’d appreciate people boosting this post. Is there anyone out there who can answer one or more of the following questions about accommodations I can make on posts?

1) If images are fully inaccessible without visual descriptions, what tags do you filter out? I’ve seen ‘undescribed’ and ‘uncaptioned’ used before

2) If you use text to speech and there is a sentence in all capital letters, does that mean the entire post is inaccessible to you? My understanding is that the letters are read one at a time. Is there anything I can do as a reblogger like adding it in plain text in my reblog or does that not work because you don’t know there is plain text below?

3) What tags should I use on a video on if it has no visual description and / or no audio description? Some people need one or the other so I don’t know how to warn people that the post doesn’t have all the information you may need. I don’t want to say ‘uncaptioned’ just to have people who only need visual or only need auditory descriptions left out of the post

4) If a video has the audio transcribed on the screen, how can I best tag that? My assumption is that someone with good vision but no hearing can access the video but someone who needs larger text can’t adjust it because it’s part of the original video. Like is done on TikToks

5) If there is alt text for an image, is that enough or is it better to also put the image description in the body of the post? Why?

Thank you to anyone who helps me learn! I want to make Tumblr more accessible for everyone

#myposts#disability#Deaf#hard of hearing#blind#low vision#deafness#blindness#I really hope it’s okay to tag these communities

41 notes

·

View notes

Text

Some language learning apps:

Notifyword - free, closest I cpuld find to a free alternative to Glossika with the feature to upload your own sentences/decks/spreadsheets, and it makes audio using TTS and plays them. However I did not test it enough to see if it schedules new/reviews so you don't need to manage figuring all that out yourself. It has potential, I will check into the app again in a year.

Smart Book by KursX - free, used to be my favorite app to read novels as it could do parallel sentence translation, then something broke on my version and it crashed whenever I opened a novel. Now any chinese book I add epub or txt shows me a black screen, no text, making the app unusable. Its easier to read in the web browser now. Which makes me sad because this app was so good back when I got it. Then something broke and I haven't been able to fix it. I paid for premium for this app I liked it so much, I'm really sad I can't see text in books in it anymore. If anyone knows how to fix this problem please let me know? Maybe it's a txt file setting? But then why do the epubs also not load text? Anyway great app... if it works for you. Sadly its broken for me.

Live Transcribe - I don't use this enough. It transcribes what people say (or audio), then you can click to translate the text.

LingoTube - only free app I know where I can put in a youtube video link, and it will make dual subtitles/let me replay the video line by line (including repeating a loop on one line), click translate individual words. Excellent for intensive listening. I'm usually lazy so I just watch youtube and look up an occasional word in Google Translate or Pleco. But this tool is excellent for intensively looking a lot up in a video/relistening to particular lines.

Duoreader - basic collection of parallel texts. No options to upload files, but super nice for what it is. Totally free.

Chinese:

Hanly - a new free app for learning hanzi. Looks great, has great mnemonics and sound information and you can tell it was made with love/a goal in mind. It's still new though so only the first 1000 hanzi have full information filled out, making it more useful for beginners. As the app is worked on more, I'm hoping it will become more useful for intermediate learners.

Readibu - free, great for reading webnovels just get it if you want to read chinese webnovels. You can import almost ANY webpage into Readibu to read, just paste the url into the search. So if you have a particular novel in mind you may want to do that instead of searching the app's built in genres.

Pleco - free, great for everything just get it if you're learning chinese. Great dictionary, great (one time purchase) paid features like handwriting, additional dictionaries, graded readers. Great SRS flashcard system, great Reader tool (and free Clipboard Reader which is 80% of what I use the app for - especially Dictate Audio feature which Readibu can't do).

Bilibili.com app - look up a tutorial, it is fairly easy to make an account in the US (and I imagine other countries) using your email. The algorithm is quite good at suggesting things similar to what you search. So once I searched a couple danmei, I got way more recommended. Once I searched one manhua video, more popped up. Once I searched one dubbed cartoon, more popped up. You can easily spend as much time on this as you'd like.

Weibo - you can browse tags/search without an account. I could not make an account with a US phone and no wechat account. Nice for browsing tags/looking up particular topics.

Japanese:

Tae Kims Grammar Guide - has an app version that's formatted to read easier on phones.

Yomiwa - this is the dictionary app I use for japanese on android.

Satori Reader - amazing graded reader app for japanese with full audiobooks for each reader (which you can listen to individual sentences of on repeat if desired), individual grammar explanations for each part, human translations for each word and sentence. When I start reading more this is what I want to use. Too expensive right now unless I'm reading a bunch, as only the first chapter (or first few) of each graded reader is free. I would suggest checking out the free Tadoku Graded Readers first online, then coming to this app later.

52 notes

·

View notes

Text

Hi everyone —

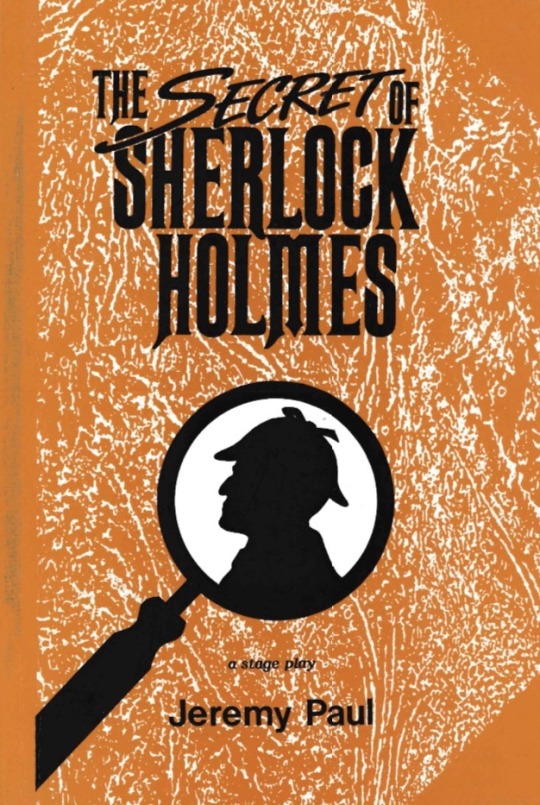

I just recently came across a real life copy of The Secret of Sherlock Holmes script book, so I scanned it for us. [scan]

I have a whole post about the play [here] with lots of links: to the audio bootlegs, the script, the program, the wonder that is Jeremy Brett and Edward Hardwicke and Jeremy Paul, etc. and I added this scan to that post too. I know someone already transcribed the script book and posted it and I linked to that one too, but I had it in my hands and I couldn’t not scan it for all of us. I ran the OCR text recognition for screen reading capabilities and it made the title page just a bit wonky but other than that it looks good. Also the cover is bright orange lol.

Enjoy!

#sherlock holmes#granada holmes#jeremy brett#edward hardwicke#jeremy paul#the secret of sherlock holmes#sh books

393 notes

·

View notes

Text

Why we’re against AI as a writing tool

Sophisticated AI tools like ChatGPT are the result of systemic, shameless theft of intellectual property and creative labor on a massive scale. These companies have mined the data of human genius… without permission. They have no intention of acknowledging their stolen sources, let alone paying the creators.

The tech industry’s defense is “Well, we stole so much from so many that it kinda doesn’t count, wouldn’t ya say?” Which is an argument that makes me feel like the mayor of Crazytown. I don’t doubt the courts will rule in their favor, not because it’s right, but because the opportunities for wealth generation are too succulent to let a lil’ thang like fairness win.

I’m not a luddite. I recognize that AI feels like magic to people who aren’t strong writers. I’d feel differently if the technology was achieved without the theft of my work. Couldn’t these tools have been made using legally obtained materials? Ah, but then they wouldn’t have been first to market! Think of the shareholders!

We’re lucky to have the ability and will to write. We won’t willingly use tools that devalue that skill. At most, I could see us using AI to assist with specific, narrow tasks like transcribing interview audio into text.

At a recent industry meetup, I listened as two personal finance gurus gushed about how easy AI made their lives. “All my newsletters and blogs are AI now! I add my own touches here and there—but it does 95% of the work!” Must be nice, I whispered to the empty void where my faith in mankind once dwelt, fingernails digging into my palms. It’s tough knowing I’m one of the myriad voices “streamlining their production.”

I feel strongly that every content creator who uses AI has a minimum duty to acknowledge it. Few will. It sucks. I’m frothing. Let’s move on.

Read more.

102 notes

·

View notes

Text

It's Just a Game, Right? Pt 2 Redux

Masterpost

"Okay, so. Like I said before, the first video is pretty basic.” Bernard tells Tim. He’s got his laptop perched on his lap and Tim leans into him as he clicks play.

He’s not wrong, either. The video in front of him looks like it was made with movie maker software from at least a decade ago. Hell, Tim’s pretty sure he remembers using a couple of those transitions in elementary school projects. The background remains stagnant, for the most part, with just the pictures at the center of the screen and the text beneath them changing. The pairing pretty obviously is supposed to be a caption for the videos, but the letters are a jumbled mess. Still, it feels familiar.

“Yeah, that’s definitely a Caesar cipher,” Tim mutters. He’s seen enough of them used by shitty two-bit rogues to recognize the patterns on instinct. It’s a bit harder to determine the exact amount of shift just by looking – especially since the shift amount seems like it’s changing on the different captions. Presumably the ciphers have already been solved, so Tim turns his attention away from them for a moment.

Looking around the screen, he can spot hints of distortion against the blank background. It’s blurry, almost invisible to the naked eye, but there’s not really any reason for it to be there naturally.

“The background looks weird,” Tim says.

“Oh yeah, there’s a text overlay on the video. It’s real blurry but somebody identified it as a poem. Something by Emily Dickinson; I don’t remember what the name was, though.”

“Hmmm. Did anyone recognize the song?” It sounds off, but it doesn’t seem to be random notes either. In fact, Tim almost feels like he could hear it on the radio.

“Yeah, a couple people recognized it as Space Oddity, only its been transcribed in a different key. There’s also some random discordant notes in there, too.”

“Heavily modified audio. Doesn’t sound like it’s poor quality, though.”

“True.”

They let the video finish playing. It’s not very long; they were probably timing the visuals to the song, rathen than the other way around. Tim stares at the finished video for a few moments. He’s never really had time for ARGs before. They weren’t exactly very big when he was younger, and now he spends so much time solving rogue shit and actual crimes that he doesn’t really need to go seeking out more puzzles to solve.

“So?” Bernard prompts. “What do you think?”

Honestly, the vibes aren’t the best. It’s clearly intended to be creepy in a way that’s probably exciting for most people, but just sort of reminds Tim of a rogue.

"I can see why you called it basic," Tim says.

“Yeah, it really didn’t seem like it was gonna be much at first.”

“Okay what does the decoded text say?”

"Here," Bernard switches tabs, to an impressive document with screenshots of the actual video, and loads of color-coded notes. “This is a copy of the community document so far.” Tim leans in, and considers the transcriptions.

Honestly, the transcriptions seem pretty basic, too. They’re all simple captions; just a name for the person or location in the image, and some semblance of a date. Notes next to each transcription denote the cipher used. First, a shift of four, then twenty-five, then seven, then nineteen. It’s a simple trick, scrambling the cipher between captions. Even without a key, Caesar ciphers are pretty easy to solve – there’s only ever going to 25 possible solutions, after all. Changing up the key ensures that it takes a lot longer to solve.

“Odd choice of content, too, honestly?” Tim says. It seems so simple, so benign, in comparison to the upsetting music.

“What do you mean?”

“I mean, imagine if a novel started with that. They’ve literally made their first video just about names and setting.”

“Huh. Yeah, that is odd.”

Tim lets Bernard consider that, and turns back to look at the document. It’s pretty obvious from the lack of mention of a solution path that they brute forced it. Which, that could be the intended method of solution, but there could also be a key hidden somewhere in the video. Possibly, that’s the point of the poem, or of the music choice. But either of those are something that’ll take further looking into.

Tim may have taken a few years of piano lessons as a kid, but he’s certainly not capable of transcribing music himself, so he’ll probably have to hire someone for that. The document also names the poem as A lane of Yellow led the eye. Tim sits up, reaching out to pull the laptop towards him.

“I’ll see if I can’t get someone more musically gifted to look at the audio. For now, I wanna know more about that poem.”

36 notes

·

View notes