#URL Inspection Tool API

Google indexing tools

Google indexing tools are essential for website owners and SEO professionals who want to ensure their content is efficiently discovered and ranked by Google. These tools help in optimizing websites for search engines, making it easier for users to find relevant information. In this article, we will explore some of the key Google indexing tools that can help improve your site's visibility and performance.

Google Search Console

Google Search Console (formerly known as Google Webmaster Tools) is one of the most powerful tools for monitoring and maintaining your site’s presence in Google search results. It provides insights into how Google sees your website and helps you identify and fix issues that might be affecting your site’s performance. Some of the key features of Google Search Console include:

Sitemap Submission: You can submit your sitemap directly to Google, ensuring that all your pages are indexed.

Index Coverage: This report shows which pages have been indexed and which ones have errors.

URL Inspection Tool: Allows you to check the status of any URL and see it as Google sees it.

Mobile-Friendly Test: Ensures your site is optimized for mobile devices.

Security Issues: Alerts you if there are any security concerns on your site.

Search Queries: Shows you the keywords people use to find your site and how they rank.

Crawl Errors: Identifies and helps you resolve crawl errors that prevent Google from accessing your content.

Core Web Vitals: Provides detailed information about the performance of your site on both desktop and mobile devices.

Rich Results Test: Helps you test and troubleshoot rich snippets and structured data.

Coverage Report: Highlights pages with indexing issues and suggests fixes.

Performance: Tracks your site’s performance in search results and provides suggestions for improvement.

Googlebot Fetch and Render

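The Googlebot Fetch and Render tool (part of Fetch as Google) showed your site as Googlebot sees it, helping you understand how Google interacts with your pages. Google retired it together with the old Search Console in 2019, and the URL Inspection Tool now fills that role.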

URL Inspection Tool: Lets you request indexing of specific URLs and see how Googlebot views them.

Google Indexing API

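The Google Indexing API lets you notify Google directly when pages are added, updated, or removed, so that they can be crawled sooner. Google's documentation currently limits it to pages with JobPosting structured data or BroadcastEvent embedded in a VideoObject; for other content, rely on sitemaps and the URL Inspection Tool.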

Related Search Console features:

URL Inspection Tool: Enables you to inspect individual URLs and request indexing of newly created or updated content.

Removals: Temporarily hides URLs from Google search results.

Live URL test (formerly Fetch as Google): Shows how Googlebot crawls and renders a page, helping you diagnose potential issues before they impact your rankings.

Google Page Experience Tool

The Google Page Experience tool assesses your site’s mobile-friendliness and page experience signals like loading time, responsiveness, and user experience metrics.

Google Robots.txt Tester

The Robots.txt Tester helps you test your robots.txt file to ensure it is correctly configured and not blocking important pages inadvertently.
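A robots.txt file can also be checked locally before it is deployed. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content and URLs are made up for illustration, and Python's parser applies rules in file order, so it may not agree with Google's own matching in every edge case.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Check one page that should stay crawlable and one that should be blocked.
for path in ("/blog/post-1", "/admin/settings"):
    url = f"https://example.com{path}"
    print(path, is_allowed(ROBOTS_TXT, "Googlebot", url))
```

Running a check like this over a list of important URLs is a quick way to catch a rule that blocks more than intended.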

Google Indexing API

For developers, the Google Indexing API can be used to request indexing of new or updated content. This is particularly useful for dynamic sites where content changes frequently.
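As a rough sketch of what such a request looks like, the snippet below builds the JSON body for the Indexing API's `urlNotifications:publish` endpoint. Authentication (an OAuth 2.0 service account) and the actual HTTP call are left out, the helper name `build_notification` is hypothetical, and Google's documentation restricts the API to job posting and livestream pages, so check eligibility first.

```python
import json

# Publish endpoint of the Indexing API (v3). Sending the request also needs
# an OAuth 2.0 access token, which this sketch does not cover.
PUBLISH_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"
VALID_TYPES = {"URL_UPDATED", "URL_DELETED"}


def build_notification(url: str, notification_type: str = "URL_UPDATED") -> str:
    """Return the JSON body for one URL notification (hypothetical helper)."""
    if notification_type not in VALID_TYPES:
        raise ValueError(f"unsupported notification type: {notification_type}")
    return json.dumps({"url": url, "type": notification_type})


# Example URL is a placeholder.
print(PUBLISH_ENDPOINT)
print(build_notification("https://example.com/jobs/1234"))
```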

Google Index Explorer

The Index Explorer allows you to check the status of your site’s pages and provides recommendations for improving your site’s mobile-friendliness and mobile usability.

Google Mobile-Friendly Test

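This tool checked whether a page was mobile-friendly and offered suggestions for improvement. Google retired it in December 2023; Lighthouse in Chrome DevTools is one way to run similar checks today. Mobile usability remains crucial for ensuring that your site is accessible and properly indexed.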

Google Index Status

Monitor your site’s index status and detect any issues that might be preventing Google from crawling and indexing your pages effectively.

Google Index Coverage Report

The Index Coverage report within Search Console provides detailed information about the indexing status of your pages. It highlights pages that are blocked by robots.txt or noindex tags.

Google URL Inspection Tool

The URL Inspection Tool lets you check the indexing status of a specific URL and request that Google re-index a page. It also provides a preview of how Googlebot sees your pages, including mobile-first indexing readiness.
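The same status check is available programmatically through the Search Console URL Inspection API. The sketch below only builds the request body and summarizes a response; the helper names and the sample response are illustrative assumptions, authentication is omitted, and as far as the public documentation indicates the API reports status only, so requesting indexing remains a Search Console action.

```python
# Endpoint of the URL Inspection API; the request is a POST with a JSON body.
INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspection_request(page_url: str, property_url: str, language: str = "en-US") -> dict:
    """Build the request body: the page to inspect and the property it belongs to."""
    return {
        "inspectionUrl": page_url,
        "siteUrl": property_url,
        "languageCode": language,
    }


def summarize(response: dict) -> dict:
    """Pull a few index-status fields out of a response, tolerating missing keys."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return {
        "verdict": status.get("verdict"),
        "coverage_state": status.get("coverageState"),
        "last_crawl_time": status.get("lastCrawlTime"),
    }


# Made-up response used only to show the shape of the data.
SAMPLE_RESPONSE = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "PASS",
            "coverageState": "Submitted and indexed",
            "lastCrawlTime": "2024-01-01T00:00:00Z",
        }
    }
}

print(build_inspection_request("https://example.com/page", "https://example.com/"))
print(summarize(SAMPLE_RESPONSE))
```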

Google Indexing Best Practices

To get the most out of these tools, follow these best practices:

1. Regular Updates: Regularly update your sitemap and resubmit URLs for faster indexing.

2. Validate Structured Data: Use the Rich Results Test, which replaced the deprecated Structured Data Testing Tool, to confirm that your structured data is correct and effective.

3. PageSpeed Insights: Analyzes the performance of your pages and offers suggestions for optimization.

Conclusion

By leveraging these tools, you can enhance the visibility of your site and ensure that Google can access and index your content efficiently. Regularly checking these reports and making the necessary adjustments can improve your ranking over time.

Final Thoughts

Using these tools effectively can significantly boost your site’s visibility and performance in search results. Regularly checking these tools and implementing the suggested changes can lead to better search engine visibility and user experience.

How to Use These Tools Effectively

1. Regular Checks: Regularly check your site’s health and performance.

2. Optimize Content: Ensure your content is optimized for both desktop and mobile devices.

3. Stay Updated: Stay updated with Google’s guidelines and updates to keep your site healthy and competitive in search results.

By integrating these tools into your SEO strategy, you can maintain a robust online presence and stay ahead in the competitive digital landscape. Stay informed about Google’s guidelines and updates to keep your site optimized for search engines. Regularly monitor your site’s health and make necessary adjustments to improve your site’s visibility and user experience.

Additional Tips

Keep Your Sitemap Up-to-Date: Regularly update your sitemap and submit it to Google Search Console.

Monitor Performance: Keep an eye on your site’s performance and make necessary adjustments.

Stay Informed: Stay informed about Google’s guidelines and updates to stay ahead in the ever-evolving world of SEO.


Common React Native Debugging Issues and How to Resolve Them

React Native is a popular framework for building mobile applications using JavaScript and React. It allows developers to create cross-platform apps with a single codebase, streamlining the development process.

However, despite its benefits, developers often face debugging challenges that can be frustrating and time-consuming. In this article, we will explore common React Native debugging issues and provide actionable solutions to help you resolve them effectively.

Understanding React Native Debugging

Debugging is an essential part of the development process. It involves identifying and fixing issues in the code to ensure that the application runs smoothly. React Native provides several tools and techniques to assist in debugging, but developers must be aware of common issues to address them efficiently.

Common React Native Debugging Issues

1. App Crashes on Startup

One of the most frustrating issues is when your app crashes immediately upon startup. This problem can stem from various sources, such as incorrect configuration or code errors.

Solution:

Check Logs: Use the React Native Debugger or console logs to identify the root cause of the crash. Logs can provide valuable insights into what went wrong.

Verify Configuration: Ensure that your development environment is correctly configured, including the necessary dependencies and packages.

Check for Syntax Errors: Review your code for syntax errors or typos that could be causing the crash.

2. Broken or Inconsistent UI

Another common issue is a broken or inconsistent user interface (UI). This can occur due to improper styling, layout issues, or component rendering problems.

Solution:

Use React DevTools: Utilize React DevTools to inspect and debug UI components. This tool allows you to view the component tree and identify rendering issues.

Inspect Styles: Check your stylesheets for any inconsistencies or errors. Ensure that styles are applied correctly to the components.

Test on Multiple Devices: Sometimes, UI issues may only appear on specific devices or screen sizes. Test your app on various devices to ensure consistent behavior.

3. Network Request Failures

Network request failures can occur when your app is unable to fetch data from an API or server. This issue can be caused by incorrect URLs, server errors, or connectivity problems.

Solution:

Check API Endpoints: Verify that the API endpoints you are using are correct and accessible. Test them using tools like Postman or cURL.

Handle Errors Gracefully: Implement error handling in your network requests to provide meaningful error messages to users.

Inspect Network Traffic: Use the network tab in Chrome DevTools or React Native Debugger to monitor network requests and responses.

4. Performance Issues

Performance issues can impact the overall user experience of your app. These issues can include slow rendering, lagging, or unresponsive UI components.

Solution:

Profile Your App: Use the React Native Performance Monitor or Chrome DevTools to profile your app and identify performance bottlenecks.

Optimize Components: Optimize your components by using techniques such as memoization, avoiding unnecessary re-renders, and minimizing the use of heavy computations.

Manage State Efficiently: Use a state management library like Redux, or React's built-in Context API, to manage your app's state and avoid unnecessary re-renders.

5. React Native Hot Reloading Issues

Hot reloading allows developers to see changes in real time without restarting the app. Since React Native 0.61 this feature is called Fast Refresh. However, it may sometimes not work as expected.

Solution:

Check Hot Reloading Settings: Ensure that Fast Refresh (hot reloading) is enabled in the in-app developer menu.

Restart the Packager: Sometimes, restarting the Metro bundler (the React Native packager) resolves hot reloading issues. Starting it with npx react-native start --reset-cache also clears its cache.

Update Dependencies: Ensure that you are using compatible versions of React Native and related dependencies.

6. Unresponsive Buttons or Touch Events

Unresponsive buttons or touch events can lead to a poor user experience. This issue can occur due to incorrect event handling or layout problems.

Solution:

Verify Event Handlers: Ensure that event handlers are correctly attached to your buttons or touchable components.

Inspect Layout: Check your component layout to ensure that touchable elements are properly positioned and not obstructed by other elements.

Test on Multiple Devices: Test touch interactions on different devices to ensure consistent behavior.

7. Dependency Conflicts

Dependency conflicts can arise when different packages or libraries in your project have incompatible versions or dependencies.

Solution:

Check for Conflicts: Use tools like npm ls or yarn list to identify dependency conflicts in your project.

Update Dependencies: Update your dependencies to compatible versions and resolve any conflicts.

Use Resolutions: In some cases, you may need to force specific versions of dependencies, using the resolutions field in package.json with Yarn or the overrides field with npm.

8. Native Module Issues

React Native relies on native modules for certain functionalities. Issues with native modules can cause various problems, such as crashes or missing features.

Solution:

Check Native Code: Review the native code for any issues or errors. Ensure that native modules are correctly integrated into your project.

Update Native Dependencies: Ensure that you are using the latest versions of native modules and dependencies.

Consult Documentation: Refer to the documentation of the native modules you are using for troubleshooting guidance.

9. Memory Leaks

Memory leaks can lead to increased memory usage and performance degradation over time. Identifying and fixing memory leaks is crucial for maintaining app performance.

Solution:

Use Memory Profilers: Utilize memory profiling tools to identify memory leaks and track memory usage.

Optimize Code: Review your code for potential memory leaks, such as timers or event listeners that are never cleared, and fix them as needed.

Manage Resources: Ensure that resources are properly managed and cleaned up when no longer needed.

10. Version Compatibility Issues

Version compatibility issues can arise when using different versions of React Native, React, or other libraries in your project.

Solution:

Check Compatibility: Ensure that all libraries and dependencies are compatible with the version of React Native you are using.

Update Libraries: Update libraries and dependencies to versions that are compatible with your React Native version.

Consult Release Notes: Review release notes and documentation for any compatibility issues or breaking changes.

Best Practices for React Native Debugging

To effectively debug React Native applications, consider the following best practices:

Keep Your Code Clean: Write clean, well-organized code to make debugging easier. Use consistent coding standards and practices.

Use Version Control: Utilize version control systems like Git to track changes and easily revert to previous states if needed.

Document Your Code: Document your code and debugging process to help you and others understand and troubleshoot issues more effectively.

Conclusion

Debugging React Native applications can be challenging, but with the right approach and tools, you can resolve common issues effectively. By understanding common problems and implementing best practices, you can improve the stability and performance of your app.

Remember to stay up-to-date with the latest React Native developments and continuously refine your debugging skills. With persistence and attention to detail, you can overcome debugging challenges and deliver a high-quality mobile application.


How Easy Is It To Scrape Google Reviews?

Have you ever wished you could gather all of a business's Google reviews in one place? Those glimpses into customer experience can inform product development and help drive sales. But combing through endless reviews by hand is tiring. What if there was a way to automate this review roundup and unlock the data in minutes? This blog looks at scraping Google Reviews and shows how to turn a tedious task into a quick one, so you can put customer feedback to work.

What is Google Review Scraping?

Google Reviews are very useful for both businesses and customers. They provide important information about customers' thoughts and can greatly affect how people see a brand.

Google review scraping is the process of automatically collecting data from Google Reviews on businesses. Instead of manual copy-and-paste work, scraping tools extract information like:

Review text

Star rating

Reviewer name (if available)

Date of review

Google Review Scraper

A Google Review scraper is a program that automatically gathers information about different businesses from Google Reviews.

Here's how Google Review Scraper works:

You provide the scraper with the URL of a specific business listing on Google Maps. This tells the scraper exactly where to find the reviews you're interested in.

The scraper acts like a web browser, but it analyses the underlying website structure instead of displaying the information for you.

The scraper finds the parts that have the review details you want, like the words in the review, the number of stars given, the name of the person who wrote it (if it's there), and the date of the review.

After the scraper collects all the important information, it puts it into a simple format, like a CSV file, which is easy to read and to work with in spreadsheets or other programs for more detailed study.

Google Review Scraping using Python

Google discourages web scraping practices that overload their servers or violate user privacy. While it's possible to scrape Google Reviews with Python, it's important to prioritize ethical practices and respect their Terms of Service (TOS). Here's an overview:

Choose a Web Scraping Library

Popular options for Python include Beautiful Soup and Selenium. Beautiful Soup excels at parsing HTML content, while Selenium allows more browser-like interaction, which can help navigate dynamic content on Google.

Understand Google's Structure

Using browser developer tools, inspect the HTML structure of a Google business listing with reviews. Identify the elements containing the review data you need (text, rating, etc.) and note the HTML tags and attributes that mark them.

Write Python Script

Import the necessary libraries (requests, Beautiful Soup).

Define a function to take a business URL as input.

Use requests to fetch the HTML content of the business listing.

Use Beautiful Soup to parse the HTML content and navigate to the sections containing review elements, using the HTML tags or CSS selectors you identified.

Extract the desired data points (text, rating, name, date) and store them in a list or dictionary.

Consider using loops to iterate through multiple pages of reviews if pagination exists.

Finally, write the extracted data to a file (CSV, JSON) for further analysis.
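The parsing and extraction steps above can be sketched as follows. This is only an illustration under stated assumptions: the HTML snippet and its class names (`review`, `author`, `rating`, `body`) are invented, not Google's real markup, and the fetching step is left out so the example runs offline on a static string.

```python
import json
from dataclasses import asdict, dataclass

from bs4 import BeautifulSoup


@dataclass
class Review:
    author: str
    rating: float
    date: str
    text: str


# Invented markup standing in for a saved page; real pages will differ.
SAMPLE_HTML = """
<div class="review">
  <span class="author">Ana</span>
  <span class="rating" data-stars="5"></span>
  <span class="date">2 weeks ago</span>
  <p class="body">Great service.</p>
</div>
<div class="review">
  <span class="author">Ben</span>
  <span class="rating" data-stars="3"></span>
  <span class="date">a month ago</span>
  <p class="body">Average experience.</p>
</div>
"""


def parse_reviews(html: str) -> list[Review]:
    """Extract one Review per review container using CSS selectors."""
    soup = BeautifulSoup(html, "html.parser")
    reviews = []
    for node in soup.select("div.review"):
        reviews.append(
            Review(
                author=node.select_one(".author").get_text(strip=True),
                rating=float(node.select_one(".rating")["data-stars"]),
                date=node.select_one(".date").get_text(strip=True),
                text=node.select_one(".body").get_text(strip=True),
            )
        )
    return reviews


reviews = parse_reviews(SAMPLE_HTML)
# Serialize to JSON for further analysis.
print(json.dumps([asdict(r) for r in reviews], indent=2))
```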

Alternate Methods to Scrape Google Reviews

Though using web scraping libraries is the most common approach, it does involve coding knowledge and is complex to maintain. Here are some of the alternative approaches with no or minimal coding knowledge.

Google Maps API (Limited Use):

Google offers a Maps Platform with APIs for authorized businesses. This might be a suitable option if you need review data for specific locations you manage. However, it requires authentication and might not be suitable for large-scale collection of public reviews.

Pre-built Scraping Tools

Several online tools offer scraping functionalities for Google Reviews. These tools often have user-friendly interfaces and handle complexities like dynamic content. Consider options with clear pricing structures and responsible scraping practices.

Cloud-Based Scraping Services

Cloud services use their own servers to collect data from the web, which spreads the request load and reduces the risk of your own IP address getting blocked. They provide tools for website navigation, data storage, and IP address rotation. Usually, you pay for these services based on how much you use them.

Google Business Profile Reviews (For Your Business):

If you want to analyze reviews for your own business, Google Business Profile (formerly Google My Business) offers built-in review management tools. This platform lets you access, respond to, and analyze reviews directly. However, this approach only works for your business and doesn't offer access to reviews of competitors.

Partner with Data Providers

Certain data providers might offer access to Google Review datasets. This can be a good option if you need historical data or a broader range of reviews. Access may be limited, and acquiring the data may involve costs. Research the data source and ensure ethical data collection practices.

Understanding the Challenges

Scraping Google reviews can be a valuable way to gather data for analysis, but it comes with its own set of hurdles. Here's a breakdown of the common challenges you might face:

Technical Challenges

Changing Layouts and Algorithms: Google frequently updates its website design and search algorithms. This can break your scraper if it relies on specific HTML elements or patterns that suddenly change.

Bot Detection Mechanisms: Google has sophisticated systems in place to identify and block automated bots. These can include CAPTCHAs, IP bans, and browser fingerprinting, making it difficult for your scraper to appear as a legitimate user.

Dynamic Content: Google loads many reviews dynamically with JavaScript. This means simply downloading the static HTML of a page might not be enough to capture all the reviews.

Data Access Challenges

Rate Limiting: Google limits the frequency of requests from a single IP address within a certain timeframe. Exceeding that limit can lead to temporary blocks that stall your scraping process.

Legality and Ethics: Google's terms of service forbid scraping their content without permission. It's important to be aware of the legal and ethical implications before scraping Google reviews.

Data Quality Challenges

Personalization: Google personalizes search results, including reviews, based on factors like location and search history. This means the data you scrape might not be representative of a broader audience.

Data Structuring: Google review pages contain a lot of information beyond the reviews themselves, like ads and related searches. Extracting the specific data points you need (reviewer name, rating, etc.) can be a challenge.

Incomplete Data: Your scraper might miss reviews that are loaded after the initial page load or hidden behind "See more" buttons.

If you decide to scrape Google reviews, be prepared to adapt your scraper to these challenges and prioritize ethical considerations. Scraping a small number of reviews might be manageable, but scaling up to scrape a large amount of data can be complex and resource-intensive.
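One common way to cope with rate limiting is to wait longer after each failed attempt. The sketch below computes an exponential backoff schedule with optional random jitter; the function name and default values are arbitrary choices for illustration, not limits published by Google.

```python
import random


def backoff_delays(max_retries: int, base: float = 1.0, cap: float = 60.0,
                   jitter: float = 0.0) -> list[float]:
    """Return the wait time (in seconds) to use before each retry.

    The delay doubles on every attempt, is capped at `cap`, and gets up to
    `jitter` seconds of random noise so parallel clients do not retry in step.
    """
    delays = []
    for attempt in range(max_retries):
        delay = min(cap, base * 2 ** attempt)
        delay += random.uniform(0, jitter)
        delays.append(delay)
    return delays


# Schedule for five retries without jitter, then eight retries hitting the cap.
print(backoff_delays(5))
print(backoff_delays(8, cap=60.0))
```

A scraper would sleep for the next delay in the schedule whenever a request is rejected, and give up once the schedule is exhausted.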

Conclusion

While Google Review scraping offers a treasure trove of customer insights, navigating its technical and ethical complexities can be a real adventure. Now, you don't have to go it alone. Third-party data providers like ReviewGators can be your knight in shining armour. They handle the scraping responsibly and efficiently, ensuring you get high-quality, compliant data.

This frees you to focus on what truly matters: understanding your customers and leveraging their feedback to take your business to the next level.

Know more https://www.reviewgators.com/how-to-scrape-google-reviews.php


How to Scrape Product Reviews from eCommerce Sites?

Know More>>https://www.datazivot.com/scrape-product-reviews-from-ecommerce-sites.php

Introduction

In the digital age, eCommerce sites have become treasure troves of data, offering insights into customer preferences, product performance, and market trends. One of the most valuable data types available on these platforms is product reviews. Scraping product review data from eCommerce sites can provide businesses with detailed customer feedback, helping them enhance their products and services. This blog will guide you through the process of scraping review data from eCommerce sites, exploring the tools, techniques, and best practices involved.

Why Scrape Product Reviews from eCommerce Sites?

Scraping product reviews from eCommerce sites is essential for several reasons:

Customer Insights: Reviews provide direct feedback from customers, offering insights into their preferences, likes, dislikes, and suggestions.

Product Improvement: By analyzing reviews, businesses can identify common issues and areas for improvement in their products.

Competitive Analysis: Scraping reviews from competitor products helps in understanding market trends and customer expectations.

Marketing Strategies: Positive reviews can be leveraged in marketing campaigns to build trust and attract more customers.

Sentiment Analysis: Understanding the overall sentiment of reviews helps in gauging customer satisfaction and brand perception.

Tools for Scraping eCommerce Sites Reviews Data

Several tools and libraries can help you scrape product reviews from eCommerce sites. Here are some popular options:

BeautifulSoup: A Python library designed to parse HTML and XML documents. It generates parse trees from page source code, enabling easy data extraction.

Scrapy: An open-source web crawling framework for Python. It provides a powerful set of tools for extracting data from websites.

Selenium: A web testing library that can be used for automating web browser interactions. It's useful for scraping JavaScript-heavy websites.

Puppeteer: A Node.js library that gives a higher-level API to control Chromium or headless Chrome browsers, making it ideal for scraping dynamic content.

Steps to Scrape Product Reviews from eCommerce Sites

Step 1: Identify Target eCommerce Sites

First, decide which eCommerce sites you want to scrape. Popular choices include Amazon, eBay, Walmart, and Alibaba. Ensure that scraping these sites complies with their terms of service.

Step 2: Inspect the Website Structure

Before scraping, inspect the webpage structure to identify the HTML elements containing the review data. Most browsers have built-in developer tools that can be accessed by right-clicking on the page and selecting "Inspect" or "Inspect Element."

Step 3: Set Up Your Scraping Environment

Install the necessary libraries and tools. For example, if you're using Python, you can install BeautifulSoup, Scrapy, and Selenium using pip:

    pip install beautifulsoup4 scrapy selenium

Step 4: Write the Scraping Script

Here's a basic example of how to scrape product reviews from an eCommerce site using BeautifulSoup and requests:
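The script itself is not reproduced here, so the following is a minimal sketch of what such a script might look like rather than the original code. It assumes each review sits in a `div` with class `review` containing an `h3` title and a `p` body; these names are hypothetical and must be adapted to the target site's actual markup.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url: str) -> str:
    """Download a page and return its HTML, raising on HTTP errors."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.text


def extract_reviews(html: str) -> list[dict]:
    """Return the title and text of every review block found in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for block in soup.find_all("div", class_="review"):
        title = block.find("h3")
        body = block.find("p")
        results.append({
            "title": title.get_text(strip=True) if title else "",
            "text": body.get_text(strip=True) if body else "",
        })
    return results


# Offline demonstration on a hand-written snippet; with a real site you would
# call extract_reviews(fetch_html(product_url)) instead.
sample = '<div class="review"><h3>Solid</h3><p>Works as described.</p></div>'
print(extract_reviews(sample))
```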

Step 5: Handle Pagination

Most eCommerce sites paginate their reviews. You'll need to handle this to scrape all reviews. This can be done by identifying the URL pattern for pagination and looping through all pages:
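As an illustration of the looping idea, the sketch below generates one URL per review page. It assumes the site exposes the page number as a `page` query parameter, which is only one of several possible patterns; the URL is a placeholder.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_urls(base_url: str, last_page: int, param: str = "page") -> list[str]:
    """Build the URL of every review page from 1 to last_page (inclusive)."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))  # keep any existing parameters
    urls = []
    for page in range(1, last_page + 1):
        query[param] = str(page)
        urls.append(urlunsplit(parts._replace(query=urlencode(query))))
    return urls


for url in page_urls("https://example.com/product/123/reviews?sort=recent", 3):
    print(url)
```

Each generated URL can then be fetched and parsed in turn, stopping early if a page comes back with no reviews.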

Step 6: Store the Extracted Data

Once you have extracted the reviews, store them in a structured format such as CSV, JSON, or a database. Here's an example of how to save the data to a CSV file:
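A minimal sketch of the CSV step, using Python's standard `csv` module, might look like this. The field names and sample rows are assumptions made for the example, and the demo writes to an in-memory buffer so it leaves no file behind.

```python
import csv
import io

# Column names are an assumption; use whatever fields your scraper extracts.
FIELDS = ["author", "rating", "date", "text"]


def write_reviews(reviews: list[dict], stream) -> None:
    """Write review dictionaries to an open text stream as CSV with a header."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(reviews)


# Made-up rows for demonstration.
reviews = [
    {"author": "Ana", "rating": 5, "date": "2024-01-05", "text": "Great product"},
    {"author": "Ben", "rating": 3, "date": "2024-01-09", "text": "Good, but slow shipping"},
]

buffer = io.StringIO()
write_reviews(reviews, buffer)
print(buffer.getvalue())

# To write a real file instead:
# with open("reviews.csv", "w", newline="", encoding="utf-8") as f:
#     write_reviews(reviews, f)
```

Passing `newline=""` when opening the file lets the `csv` module control line endings, which avoids blank rows on Windows.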

Step 7: Use a Reviews Scraping API

For more advanced needs or if you prefer not to write your own scraping logic, consider using a Reviews Scraping API. These APIs are designed to handle the complexities of scraping and provide a more reliable way to extract ecommerce sites reviews data.

Step 8: Best Practices and Legal Considerations

Respect the site's terms of service: Ensure that your scraping activities comply with the website’s terms of service.

Use polite scraping: Implement delays between requests to avoid overloading the server. This is known as "polite scraping."

Handle CAPTCHAs and anti-scraping measures: Be prepared to handle CAPTCHAs and other anti-scraping measures. Using services like ScraperAPI can help.

Monitor for changes: Websites frequently change their structure. Regularly update your scraping scripts to accommodate these changes.

Data privacy: Ensure that you are not scraping any sensitive personal information and respect user privacy.
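The "polite scraping" point above can be sketched as a small generator that pauses between requests. The `fetch` callable and the delay value are placeholders; in the demo a stand-in fetcher and a recorded sleep are used so nothing touches the network.

```python
import time
from typing import Callable, Iterable, Iterator


def polite_fetch(urls: Iterable[str], fetch: Callable[[str], str],
                 delay_seconds: float = 1.0,
                 sleep: Callable[[float], object] = time.sleep) -> Iterator[str]:
    """Fetch each URL in turn, waiting delay_seconds between requests."""
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # no wait before the very first request
        yield fetch(url)


# Demo: str.upper stands in for a real download function, and the waits are
# recorded in a list instead of actually sleeping.
waits = []
pages = list(polite_fetch(["/p1", "/p2", "/p3"], fetch=str.upper,
                          delay_seconds=2.0, sleep=waits.append))
print(pages, waits)
```

In real use, leave `sleep` at its default and pass a function that downloads the page; the right delay depends on the site and is a judgment call.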

Conclusion

Scraping product reviews from eCommerce sites can provide valuable insights into customer opinions and market trends. By using the right tools and techniques, you can efficiently extract and analyze review data to enhance your business strategies. Whether you choose to build your own scraper using libraries like BeautifulSoup and Scrapy or leverage a Reviews Scraping API, the key is to approach the task with a clear understanding of the website structure and a commitment to ethical scraping practices.

By following the steps outlined in this guide, you can successfully scrape product reviews from eCommerce sites and gain the competitive edge you need to thrive in today's digital marketplace. Trust Datazivot to help you unlock the full potential of review data and transform it into actionable insights for your business. Contact us today to learn more about our expert scraping services and start leveraging detailed customer feedback for your success.


“How To Find The Exact Time An IG post or YouTube Video Was Uploaded Step By Step

IG

1. Open Instagram on your laptop

2. Go to the profile of the post you want to check

3. Choose an older post and hover your cursor over it to see the date it was posted

4. Right-click on the date and select Inspect

5. Look for the highlighted text on the screen and find the date and time of the post

6. The time will be in 24-hour format with the hour, minute, and second displayed

This trick works on all Instagram posts, even older ones. You can see the exact time a post was made, not just the date.

YouTube:

Here are three ways of finding the upload time of a YouTube video.

Solution 1: Use A Third-Party Website

You can use YouTube Data Viewer, a web-based tool, to know the exact upload time of any YouTube video. This also shares other information that you might need. Here are the steps to follow:

Step 1: On the YouTube website, find the video you want to know the exact upload time for. Then, copy the URL of the video from your

Step 2: Open your web browser and go to the YouTube Data Viewer website. Any search engine will allow you to visit the website.

Step 3: Now paste the copied URL in the search bar of the YouTube Data Viewer.

Step 4: Click on the “Submit” or “Search” button on the website to initiate the search for the video’s data.

Now you will get the exact upload date and time, including other details like views, like counts, comments, and even the monetization status. One thing that you need to do is to convert the time into your timezone, so you know when it was uploaded according to your timezone.

Solution 2: Use An Extension

If you don’t want to use any third-party website, you can use a Chrome extension of YouTube that lets you know the exact time when a video was uploaded. To do so, you need to download the YouTube upload time extension that you can find on Google.

Using this extension, you can get information like the upload time, date, and other data that might be useful. You can simply click on the extension while watching a video to know when the video was posted. As the extension uses Google’s API, the data is mostly accurate.

Solution 3: Use Google’s Search Tool

Although it might not give you the precise time, you can still view the time when a video was posted using Google’s advanced search tool. This is the filter tool to use while you are searching for a video.

To do so, you need to click on the filters button that you will find at the top of the interface after searching for something on YouTube. Then, you need to set the time filters like last hour, today, this week, this month, or this year. The search results will appear according to your given filters.”

No miracles here.

Thanks, I guess... 😄👍


A Comprehensive Beginner's Guide to Data Scraping

In today's data-driven world, information is power. Whether you're a business looking for market insights, a researcher collecting data for a study, or simply curious about a particular topic, data scraping can be an invaluable skill. This comprehensive beginner's guide will introduce you to the world of data scraping, providing a step-by-step overview of the process and some essential tips to get you started.

What is Data Scraping?

Data scraping, also known as web scraping, is the process of extracting data from websites and saving it in a structured format, such as a spreadsheet or database. This technique allows you to automate the collection of information from the web, making it a powerful tool for a wide range of applications.

Getting Started with Data Scraping

1. Choose Your Tool

To begin your data scraping journey, you'll need a scraping tool or library. Some popular options include:

Python Libraries: Python is the most widely used language for web scraping. Libraries like BeautifulSoup and Scrapy provide robust tools for extracting data from websites.

Web Scraping Software: Tools like Octoparse, ParseHub, and Import.io offer user-friendly interfaces for scraping without extensive coding knowledge.

2. Select Your Target

Once you have your tool in place, decide on the website or web page from which you want to scrape data. Ensure that you have permission to access and scrape the data, as scraping can raise legal and ethical concerns.

3. Understand the Website Structure

Before diving into scraping, it's crucial to understand the structure of the website you're targeting. Study the HTML structure, identifying the elements that contain the data you need. You can use browser developer tools (F12 in most browsers) to inspect the HTML code.

4. Write Your Code

If you're using Python libraries like BeautifulSoup, write a script that instructs your scraper on how to navigate the website, locate the data, and extract it. Here's a simple example in Python:

    import requests
    from bs4 import BeautifulSoup

    url = 'https://example.com'
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # stop early on HTTP errors
    soup = BeautifulSoup(response.text, 'html.parser')

    # Find and print a specific element, if the page contains it
    element = soup.find('div', {'class': 'example-class'})
    if element is not None:
        print(element.text)

5. Handle Data Extraction Challenges

Web scraping can sometimes be challenging due to factors like dynamic websites, anti-scraping measures, or CAPTCHA. You may need to use techniques like handling cookies, using user-agents, or employing proxies to overcome these obstacles.
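As a rough illustration of two of these techniques (cookies and user-agents), here is a minimal sketch using only Python's standard library. The user-agent string and the function name are made up for this example, and nothing is sent over the network until `opener.open(...)` is called.

```python
import http.cookiejar
import urllib.request

# Hypothetical identifier for this example; use one that describes your own scraper.
USER_AGENT = "example-scraper/0.1"

def build_opener_with_cookies(user_agent=USER_AGENT):
    """Return an opener that sends a User-Agent header and keeps cookies between requests."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    opener.addheaders = [("User-Agent", user_agent)]
    return opener, jar

opener, jar = build_opener_with_cookies()
# opener.open("https://example.com") would send the header above and
# store any cookies the site sets in `jar` for later requests.
```

Dynamic, JavaScript-heavy pages and CAPTCHAs usually need other approaches that this sketch does not cover.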

6. Data Storage

After extracting the data, you'll want to store it in a structured format. Common options include CSV, Excel, JSON, or a database like SQLite or MySQL, depending on your project's requirements.
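To make the options concrete, the sketch below writes the same scraped rows to CSV, JSON, and an in-memory SQLite database. The rows, column names, and table name are invented for illustration.

```python
import csv
import io
import json
import sqlite3

# Hypothetical scraped rows; real data would come from your scraper.
rows = [
    {"title": "Example A", "price": 9.99},
    {"title": "Example B", "price": 14.5},
]

def to_csv(rows):
    """Serialize the rows as CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["title", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

def to_sqlite(rows, conn):
    """Insert the rows into an `items` table, creating it if needed."""
    conn.execute("CREATE TABLE IF NOT EXISTS items (title TEXT, price REAL)")
    conn.executemany("INSERT INTO items (title, price) VALUES (:title, :price)", rows)
    conn.commit()

csv_text = to_csv(rows)
json_text = json.dumps(rows, indent=2)

conn = sqlite3.connect(":memory:")  # use a file path to persist the data
to_sqlite(rows, conn)
count = conn.execute("SELECT COUNT(*) FROM items").fetchone()[0]
```

CSV and JSON suit one-off exports, while a database is easier to query and update when you scrape repeatedly.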

7. Automate the Process

If you plan to scrape data regularly, consider setting up automation using scheduling tools or integrating your scraper with other applications using APIs.

Best Practices and Ethical Considerations

As a beginner in data scraping, it's essential to follow best practices and ethical guidelines:

Respect Robots.txt: Check a website's robots.txt file to see if scraping is allowed or restricted. Always abide by these guidelines.

Rate Limiting: Avoid overwhelming a website's server by adding delays between requests. Be a responsible scraper to maintain the site's performance.

User Agents: Use user-agent headers to mimic the behavior of a real browser, making your requests look less like automated scraping.

Legal and Ethical Considerations: Ensure you have the right to scrape the data, respect copyrights, and avoid scraping sensitive or private information.

Data Privacy: Be mindful of data privacy laws like GDPR. Scrub any personal information from your scraped data.
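The first two practices can be sketched with Python's standard `urllib.robotparser` module. The robots.txt content, user-agent name, and URLs below are invented for the example, and the `fetch` argument is a stand-in for whatever download function you use.

```python
import time
import urllib.robotparser

# Hypothetical robots.txt content; normally you would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-scraper"  # made-up name for this sketch

def load_rules(robots_text):
    """Parse robots.txt text into a rules object."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser

def polite_crawl(urls, rules, fetch, sleep=time.sleep):
    """Fetch only allowed URLs, pausing between requests."""
    delay = rules.crawl_delay(USER_AGENT) or 1  # fall back to 1 second
    results = []
    for url in urls:
        if not rules.can_fetch(USER_AGENT, url):
            continue  # robots.txt disallows this path
        results.append(fetch(url))
        sleep(delay)
    return results

rules = load_rules(ROBOTS_TXT)
urls = ["https://example.com/public/page", "https://example.com/private/data"]
# A stub fetch and a no-op sleep keep this demonstration offline and instant.
fetched = polite_crawl(urls, rules, fetch=lambda u: u, sleep=lambda s: None)
```

In real use, leave `sleep` at its default so the delay actually applies.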

Conclusion

Data scraping is a valuable skill for anyone looking to gather information from the web efficiently. By choosing the right tools, understanding website structures, writing code, and adhering to best practices, beginners can become proficient data scrapers. Remember to use your scraping abilities responsibly and ethically, respecting the websites you interact with and the privacy of the data you collect. Happy scraping!

0 notes

Text

Google update: Google has released a URL Inspection Tool API

Thanks to the release of the Google Search Console URL Inspection API

Google announced this morning that the URL Inspection Tool had received a new API under the Google Search Console APIs. The new URL Inspection API allows you to access the data and reports you'd get from the URL Inspection Tool programmatically, just like any other API.

The API's responses tell you about the index status, AMP, rich results, and mobile usability of any URL in a property you've verified in Google Search Console. This is welcome news for users who wish to analyze URLs in bulk and automate page debugging regularly. Because of the usage limits described below, however, don't expect to be able to test it against your complete one-million-page website right now. You'll have to queue things up or do it on a case-by-case basis.

The Google Search Console URL Inspection API, according to Google, will aid developers in quickly debugging and optimizing websites.

The Search Console APIs allow you to access data outside of Search Console through third-party apps and solutions.

You may ask Search Console for information about an indexed URL version, and the API will provide the indexed data presently accessible in the URL Inspection tool.

If you are interested in reading this kind of technical blog post, you can also read our other articles.

What is the URL Inspection API, and how do I use it?

The URL Inspection API takes a few key request parameters. These are:

inspectionUrl

The URL of the page you wish to inspect. This is a mandatory field of type "string".

siteUrl

The property's URL as it appears in Google Search Console. This parameter is also a mandatory field of type "string".

languageCode

The language code for translated issue messages. This field is also of type "string", and you may leave it blank.

If you conduct the API request appropriately, you can successfully get a response containing all information connected to the supplied URL.
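As a sketch of what such a request might look like, the helper below only builds the JSON body and does not send it. The field names and endpoint follow Google's API reference as far as I know, so check them against the current documentation. The function name and example URLs are made up, and a real call also needs an OAuth 2.0 access token for the verified property.

```python
import json

# Endpoint as listed in Google's API reference, to the best of my knowledge.
ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"

def build_inspection_request(inspection_url, site_url, language_code=None):
    """Build the request body; the two URL fields are mandatory."""
    if not inspection_url or not site_url:
        raise ValueError("inspectionUrl and siteUrl are both required")
    body = {"inspectionUrl": inspection_url, "siteUrl": site_url}
    if language_code:  # optional, so only included when given
        body["languageCode"] = language_code
    return body

# Hypothetical property and page.
request_body = build_inspection_request(
    "https://www.example.com/blog/post-1",
    "https://www.example.com/",
    language_code="en-US",
)
request_json = json.dumps(request_body)
# The JSON would be POSTed to ENDPOINT with an Authorization: Bearer <token> header.
```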

SEO tools and agencies can provide ongoing monitoring for crucial pages as well as single-page debugging options. Examples include checking for inconsistencies between user-declared and Google-selected canonicals, or troubleshooting structured data errors across a collection of pages.

CMS and plugin developers can add insights and continuous checks for existing pages at the page or template level. Monitoring changes over time for key pages, for example, may assist in detecting problems and prioritizing solutions.
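The canonical check mentioned above could be sketched as follows. The nested field names (`inspectionResult`, `indexStatusResult`, `userCanonical`, `googleCanonical`) reflect my understanding of the documented response shape and should be verified against the API reference. The sample response is invented, not real API output.

```python
def canonical_mismatch(response):
    """Return True if the declared and Google-selected canonicals differ,
    False if they match, and None if either value is missing."""
    index_status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    user = index_status.get("userCanonical")
    google = index_status.get("googleCanonical")
    if user is None or google is None:
        return None
    return user != google

# Hypothetical response fragment for illustration only.
sample_response = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "PASS",
            "userCanonical": "https://www.example.com/shoes?color=red",
            "googleCanonical": "https://www.example.com/shoes",
        }
    }
}

mismatch = canonical_mismatch(sample_response)
```

A monitoring tool could run a check like this over its list of crucial pages and flag the ones where the result is `True`.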

API's Usage Restrictions

There are various restrictions to the URL Inspection API: In a single day, you may submit 2,000 inquiries. You may send approximately 600 inquiries each minute. So, it's not unlimited, and you won't be able to run this API daily across all of your URLs, at least not if your site has thousands of pages. As for the outcomes, the API delivers the indexed information from the URL Inspection Tool: index status, AMP, rich results, and mobile usability. You may find the whole set of replies in the API documentation.
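To see what these limits mean in practice, here is a small sketch that splits a URL list into daily batches and estimates how long a full pass would take. It takes the two quota figures quoted above at face value. The function names and URLs are made up.

```python
import math

DAILY_QUOTA = 2000       # inquiries per day, as quoted above
PER_MINUTE_QUOTA = 600   # inquiries per minute, as quoted above

def plan_batches(urls, daily_quota=DAILY_QUOTA):
    """Split the URLs into consecutive batches of at most one day's quota."""
    return [urls[i:i + daily_quota] for i in range(0, len(urls), daily_quota)]

def days_needed(url_count, daily_quota=DAILY_QUOTA):
    """Number of days required to inspect every URL once."""
    return math.ceil(url_count / daily_quota)

def min_seconds_between_requests(per_minute_quota=PER_MINUTE_QUOTA):
    """Smallest evenly spaced gap that stays within the per-minute limit."""
    return 60 / per_minute_quota

urls = [f"https://www.example.com/page-{n}" for n in range(5000)]  # hypothetical site
batches = plan_batches(urls)
print(len(batches), [len(b) for b in batches])  # 3 [2000, 2000, 1000]
print(days_needed(1_000_000))                   # 500
```

At 2,000 inquiries a day, a one-million-page site would need 500 days for a single pass, which is why prioritizing crucial pages matters.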

The Most Important Takeaway

Google Search Console's URL Inspection tool gives a wealth of information about the page. It displays the URL's discovery in sitemaps, the date and time the page was last crawled, indexing metadata such as the user-declared and Google-selected canonicals, and schemas identified by Google.

This API lets you access URL Inspection Tool data programmatically, similar to how you would interact with the tool manually in Google Search Console. As you might expect, SEOs and developers are ecstatic about this new API.

SEOs and developers can now analyze sites in bulk and build up automation to regularly monitor crucial pages thanks to the URL Inspection API. It will be fascinating to observe how programmers leverage the API to construct helpful custom scripts.

You may find more information about this API on Google's official API documentation website. The following is an example of an API response:

Why should we be concerned?

URL Inspection information may now be added programmatically to your content management system, internal tools, dashboards, and third-party tools, among other places. Expect a slew of new features from various tool providers and content management systems.

And if you have your ideas, go ahead and implement them.

References:

developers.google.com/search/blog

developers.google.com/webmaster-tools

#Google Search Console URL Inspection API#URL Inspection#URL Inspection Tool API#Google update#seo for ecommerce#googleads#communitymatesblog

0 notes

Text

Application Programming Interface (API)

What is API?

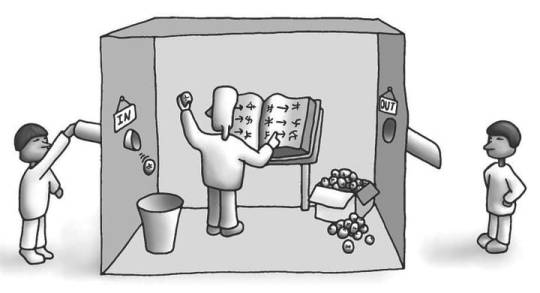

API is the acronym for Application Programming Interface, which is a software intermediary that allows two applications to talk to each other. It is a way for computers to share data or functionality, but computers need some kind of interface to talk to each other.

When you use an application on your mobile phone, the application connects to the Internet and sends data to a server. The server then retrieves that data, interprets it, performs the necessary actions and sends it back to your phone. The application then interprets that data and presents you with the information you wanted in a readable way. This is what an API is - all of this happens via API.

Building Blocks of API

There are three building blocks of an API. These are:

dataset

requests

response

Let’s elaborate on these blocks a bit.

An API needs a data source. In most cases, this will be a database like MySQL, MongoDB, or Redis, but it could also be something simpler like a text file or spreadsheet. The API’s data source can usually be updated through the API itself, but it might be updated independently if you want your API to be “read-only”.

An API needs a format for making requests. When a user wants to use an API, they make a “request”. This request usually includes a verb (eg: “GET”, “POST”, “PUT”, or “DELETE”), a path (this looks like a URL), and a payload (eg: form or JSON data). Good APIs offer rules for making these requests in their documentation.

An API needs to return a response. Once the API processes the request and gets or saves data to the data source, it should return a “response”. This response usually includes a status code (eg: “404 - Not Found”, “200 - Okay”, or “500 - Server Error”) and a payload (usually text or JSON data). This response format should also be specified in the documentation of the API so that developers know what to expect when they make a successful request.
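The three building blocks can be seen together in a toy sketch: a dictionary stands in for the data source, the function arguments for the request (verb, path, payload), and the returned pair for the response (status code, payload). The `/users` path and the data are invented, and a real API would sit behind an HTTP server or framework.

```python
import json

# Stand-in data source; a real API would usually use a database.
USERS = {1: {"id": 1, "name": "Ada"}}

def handle_request(verb, path, payload=None):
    """Process one request and return (status_code, response_payload)."""
    parts = path.strip("/").split("/")
    if parts[0] != "users" or len(parts) > 2:
        return 404, {"error": "Not Found"}
    if verb == "GET" and len(parts) == 2:
        user = USERS.get(int(parts[1])) if parts[1].isdigit() else None
        if user is None:
            return 404, {"error": "Not Found"}
        return 200, user
    if verb == "POST" and len(parts) == 1:
        data = json.loads(payload or "{}")
        new_id = max(USERS, default=0) + 1
        USERS[new_id] = {"id": new_id, "name": data.get("name", "")}
        return 201, USERS[new_id]
    return 405, {"error": "Method Not Allowed"}

status, body = handle_request("GET", "/users/1")
created_status, created = handle_request("POST", "/users", '{"name": "Grace"}')
missing_status, _ = handle_request("GET", "/users/99")
```

Here the POST request updates the data source through the API itself, while the GET requests only read from it.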

Types of API

Open APIs - Also known as Public APIs. These APIs are publicly available and there are no restrictions to access them.

Partner APIs - These APIs are not publicly available, so you need specific rights or licenses to access them.

Internal APIs - Internal or private. These APIs are developed by companies to use in their internal systems. It helps you to enhance the productivity of your teams.

Composite APIs - This type of API combines different data and service APIs.

SOAP - It defines messages in XML format used by web applications to communicate with each other.

REST - It makes use of HTTP to GET, POST, PUT or DELETE data. It is basically used to take advantage of the existing data.

JSON-RPC - It uses JSON for data transfer and is a lightweight remote procedure call protocol that defines only a few data structure types.

XML-RPC - It is based on XML and uses HTTP for data transfer. This API is widely used to exchange information between two or more networks.
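To make the difference between the last two styles tangible, the sketch below encodes the same hypothetical call, `add(2, 3)`, once as a JSON-RPC 2.0 request and once as an XML-RPC request, using Python's standard library. The method name is invented, and no request is actually sent.

```python
import json
import xmlrpc.client

# JSON-RPC 2.0: the call is a small JSON object.
json_rpc_request = json.dumps(
    {"jsonrpc": "2.0", "method": "add", "params": [2, 3], "id": 1}
)

# XML-RPC: the same call is wrapped in an XML <methodCall> document.
xml_rpc_request = xmlrpc.client.dumps((2, 3), methodname="add")

print(json_rpc_request)
print(xml_rpc_request)

# Decoding the XML again recovers the parameters and the method name.
params, method = xmlrpc.client.loads(xml_rpc_request)
```

Both payloads would normally be sent in the body of an HTTP POST request.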

Features of API

It offers a valuable service (data, function, audience).

It helps you to plan a business model.

Simple, flexible, quickly adopted.

Managed and measured.

Offers great developer support.

Examples of API

Razorpay API

Google Maps API

Spotify API

Twitter API

Weather API

PayPal API

PayTm API

HubSpot API

Youtube API

Amazon's API

Travel Booking API

Stock Chart API

API Testing Tools

Postman - Postman started as a plugin for Google Chrome and is now also available as a standalone application for testing API services. It is a powerful HTTP client to check web services. For manual or exploratory testing, Postman is a good choice for testing APIs.

Ping API - Ping API is an API testing tool that lets you write test scripts in JavaScript and CoffeeScript to test your APIs. It lets you inspect the HTTP API call with complete request and response data.

VREST - The VREST API tool provides an online solution for automated testing, mocking, automatic recording and specification of REST/HTTP APIs.

When to create an API and when not to

It's very important to know when to create an API and when not to. Let’s start with when to create an API…

You want to build a mobile app or desktop app someday

You want to use modern front-end frameworks like React or Angular

You have a data-heavy website that you need to run quickly and load data without a complete refresh

You want to access the same data in many different places or ways (eg: an internal dashboard and a customer-facing web app)

You want to allow customers or partners limited or complete access to your data

You want to upsell your customers on direct API access

Now, when not to create an API…

You just need a landing page or blog as a website

Your application is temporary and not intended to grow or change much

You never intend on expanding to other platforms (eg: mobile, desktop)

You don’t understand the technical implications of building one.

Word of advice for newbies

Please don’t wait for people to spoon-feed you with every single resource and teachings because you’re on your own in your learning path. So be wise and learn yourself.

Check out my book

I have curated a step-by-step guide not just for beginners but also for anyone who wants to come back and brush up on their skills. You will learn everything from installing the necessary tools, writing your first line of code, and building your first website to deploying it online and more advanced concepts. I have also included many online resources that are seriously spot on to help you master your way through. Grab your copy now from here. Or you can get it from this link below.

About Me

I am Ishraq Haider Chowdhury from Bangladesh, currently living in Bamberg, Germany. I am a fullstack developer mainly focusing on MERN Stack applications with JavaScript and TypeScript. I have been in this industry for about 11 years and still counting. If you want to find me, here are some of my social links....

Instagram

TikTok

YouTube

Facebook

GitHub

#programming#webdevelopment#coding#fullstackdevelopment#appdevelopment#softwaredevelopment#webdeveloper#reactjs#nodejs#vuejs#pythonprogramming#python#javascript#typescript#frontenddevelopment#backenddevelopment#learnprogramming#learn to code#api integration#api#Instagram

181 notes

Photo

Language supermodel: How GPT-3 is quietly ushering in the A.I. revolution https://ift.tt/3mAgOO1

OpenAI’s GPT-2 text-generating algorithm was once considered too dangerous to release. Then it got released — and the world kept on turning.

In retrospect, the comparatively small GPT-2 language model (a puny 1.5 billion parameters) looks paltry next to its sequel, GPT-3, which boasts a massive 175 billion parameters, was trained on 45 TB of text data, and cost a reported $12 million (at least) to build.

“Our perspective, and our take back then, was to have a staged release, which was like, initially, you release the smaller model and you wait and see what happens,” Sandhini Agarwal, an A.I. policy researcher for OpenAI told Digital Trends. “If things look good, then you release the next size of model. The reason we took that approach is because this is, honestly, [not just uncharted waters for us, but it’s also] uncharted waters for the entire world.”

Jump forward to the present day, nine months after GPT-3’s release last summer, and it’s powering upward of 300 applications while generating a massive 4.5 billion words per day. Seeded with only the first few sentences of a document, it’s able to generate seemingly endless more text in the same style — even including fictitious quotes.

Is it going to destroy the world? Based on past history, almost certainly not. But it is making some game-changing applications of A.I. possible, all while posing some very profound questions along the way.

What is it good for? Absolutely everything

Recently, Francis Jervis, the founder of a startup called Augrented, used GPT-3 to help people struggling with their rent to write letters negotiating rent discounts. “I’d describe the use case here as ‘style transfer,'” Jervis told Digital Trends. “[It takes in] bullet points, which don’t even have to be in perfect English, and [outputs] two to three sentences in formal language.”

Powered by this ultra-powerful language model, Jervis’s tool allows renters to describe their situation and the reason they need a discounted settlement. “Just enter a couple of words about why you lost income, and in a few seconds you’ll get a suggested persuasive, formal paragraph to add to your letter,” the company claims.

This is just the tip of the iceberg. When Aditya Joshi, a machine learning scientist and former Amazon Web Services engineer, first came across GPT-3, he was so blown away by what he saw that he set up a website, www.gpt3examples.com, to keep track of the best ones.

“Shortly after OpenAI announced their API, developers started tweeting impressive demos of applications built using GPT-3,” he told Digital Trends. “They were astonishingly good. I built [my website] to make it easy for the community to find these examples and discover creative ways of using GPT-3 to solve problems in their own domain.”

Fully interactive synthetic personas with GPT-3 and https://t.co/ZPdnEqR0Hn

They know who they are, where they worked, who their boss is, and so much more. This is not your father's bot… pic.twitter.com/kt4AtgYHZL

— Tyler Lastovich (@tylerlastovich) August 18, 2020

Joshi points to several demos that really made an impact on him. One, a layout generator, renders a functional layout by generating JavaScript code from a simple text description. Want a button that says “subscribe” in the shape of a watermelon? Fancy some banner text with a series of buttons the colors of the rainbow? Just explain them in basic text, and Sharif Shameem’s layout generator will write the code for you. Another, a GPT-3 based search engine created by Paras Chopra, can turn any written query into an answer and a URL link for providing more information. Another, the inverse of Francis Jervis’ by Michael Tefula, translates legal documents into plain English. Yet another, by Raphaël Millière, writes philosophical essays. And one other, by Gwern Branwen, can generate creative fiction.

“I did not expect a single language model to perform so well on such a diverse range of tasks, from language translation and generation to text summarization and entity extraction,” Joshi said. “In one of my own experiments, I used GPT-3 to predict chemical combustion reactions, and it did so surprisingly well.”

More where that came from

The transformative uses of GPT-3 don’t end there, either. Computer scientist Tyler Lastovich has used GPT-3 to create fake people, including backstory, who can then be interacted with via text. Meanwhile, Andrew Mayne has shown that GPT-3 can be used to turn movie titles into emojis. Nick Walton, chief technology officer of Latitude, the studio behind GPT-generated text adventure game AI Dungeon recently did the same to see if it could turn longer strings of text description into emoji. And Copy.ai, a startup that builds copywriting tools with GPT-3, is tapping the model for all it’s worth, with a monthly recurring revenue of $67,000 as of March — and a recent $2.9 million funding round.

“Definitely, there was surprise and a lot of awe in terms of the creativity people have used GPT-3 for,” Sandhini Agarwal, an A.I. policy researcher for OpenAI told Digital Trends. “So many use cases are just so creative, and in domains that even I had not foreseen, it would have much knowledge about. That’s interesting to see. But that being said, GPT-3 — and this whole direction of research that OpenAI pursued — was very much with the hope that this would give us an A.I. model that was more general-purpose. The whole point of a general-purpose A.I. model is [that it would be] one model that could like do all these different A.I. tasks.”

Many of the projects highlight one of the big value-adds of GPT-3: The lack of training it requires. Machine learning has been transformative in all sorts of ways over the past couple of decades. But machine learning requires a large number of training examples to be able to output correct answers. GPT-3, on the other hand, has a “few shot ability” that allows it to be taught to do something with only a small handful of examples.
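As a rough illustration of what a "few shot" setup looks like, the sketch below assembles a prompt from a handful of worked examples plus a new input. The "Input:/Output:" layout, the function name, and the movie-to-emoji pairs are assumptions made for this example, not a format taken from OpenAI's documentation. The resulting text would then be sent to the model to complete.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Join an optional instruction, worked examples, and a new query into one prompt."""
    lines = [instruction] if instruction else []
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model is expected to continue from here
    return "\n".join(lines)

# Hypothetical examples in the spirit of the movie-title-to-emoji demos.
examples = [
    ("Jaws", "🦈🌊"),
    ("Titanic", "🚢🧊"),
]
prompt = build_few_shot_prompt(examples, "Finding Nemo", "Turn movie titles into emoji.")
print(prompt)
```

No model weights change here; the examples simply become part of the text the model is asked to continue.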

Plausible bull***t

GPT-3 is highly impressive. But it poses challenges too. Some of these relate to cost: For high-volume services like chatbots, which could benefit from GPT-3’s magic, the tool might be too pricey to use. (A single message could cost 6 cents which, while not exactly bank-breaking, certainly adds up.)

Others relate to its widespread availability, meaning that it’s likely going to be tough to build a startup exclusively around since fierce competition will likely drive down margins.

Another is the lack of memory; its context window runs a little under 2,000 words at a time before, like Guy Pearce’s character in the movie Memento, its memory is reset. “This significantly limits the length of text it can generate, roughly to a short paragraph per request,” Lastovich said. “Practically speaking, this means that it is unable to generate long documents while still remembering what happened at the beginning.”

Perhaps the most notable challenge, however, also relates to its biggest strength: Its confabulation abilities. Confabulation is a term frequently used by doctors to describe the way in which some people with memory issues are able to fabricate information that appears initially convincing, but which doesn’t necessarily stand up to scrutiny upon closer inspection. GPT-3’s ability to confabulate is, depending upon the context, a strength and a weakness. For creative projects, it can be great, allowing it to riff on themes without concern for anything as mundane as truth. For other projects, it can be trickier.

Francis Jervis of Augrented refers to GPT-3’s ability to “generate plausible bullshit.” Nick Walton of AI Dungeon said: “GPT-3 is very good at writing creative text that seems like it could have been written by a human … One of its weaknesses, though, is that it can often write like it’s very confident — even if it has no idea what the answer to a question is.”

Back in the Chinese Room

In this regard, GPT-3 returns us to the familiar ground of John Searle’s Chinese Room. In 1980, Searle, a philosopher, published one of the best-known A.I. thought experiments, focused on the topic of “understanding.” The Chinese Room asks us to imagine a person locked in a room with a mass of writing in a language that they do not understand. All they recognize are abstract symbols. The room also contains a set of rules that show how one set of symbols corresponds with another. Given a series of questions to answer, the room’s occupant must match question symbols with answer symbols. After repeating this task many times, they become adept at performing it — even though they have no clue what either set of symbols means, merely that one corresponds to the other.

GPT-3 is a world away from the kinds of linguistic A.I. that existed at the time Searle was writing. However, the question of understanding is as thorny as ever.

“This is a very controversial domain of questioning, as I’m sure you’re aware, because there’s so many differing opinions on whether, in general, language models … would ever have [true] understanding,” said OpenAI’s Sandhini Agarwal. “If you ask me about GPT-3 right now, it performs very well sometimes, but not very well at other times. There is this randomness in a way about how meaningful the output might seem to you. Sometimes you might be wowed by the output, and sometimes the output will just be nonsensical. Given that, right now in my opinion … GPT-3 doesn’t appear to have understanding.”

An added twist on the Chinese Room experiment today is that GPT-3 is not programmed at every step by a small team of researchers. It’s a massive model that’s been trained on an enormous dataset consisting of, well, the internet. This means that it can pick up inferences and biases that might be encoded into text found online. You’ve heard the expression that you’re an average of the five people you surround yourself with? Well, GPT-3 was trained on almost unfathomable amounts of text data from multiple sources, including books, Wikipedia, and other articles. From this, it learns to predict the next word in any sequence by scouring its training data to see word combinations used before. This can have unintended consequences.
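The idea of predicting the next word from previously seen combinations can be shown with a deliberately tiny toy model. This is only an analogy: GPT-3 is a large neural network, not a lookup table of word pairs, and the corpus below is invented.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for previous, following in zip(words, words[1:]):
        counts[previous][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the cat sat on the mat and the cat slept"  # made-up training text
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat
```

Even this toy can only echo what its training text contains, which is the same way that skews in much larger datasets end up in a model's output.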

Feeding the stochastic parrots

This challenge with large language models was first highlighted in a groundbreaking paper on the subject of so-called stochastic parrots. A stochastic parrot — a term coined by the authors, who included among their ranks the former co-lead of Google’s ethical A.I. team, Timnit Gebru — refers to a large language model that “haphazardly [stitches] together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning.”

“Having been trained on a big portion of the internet, it’s important to acknowledge that it will carry some of its biases,” Albert Gozzi, another GPT-3 user, told Digital Trends. “I know the OpenAI team is working hard on mitigating this in a few different ways, but I’d expect this to be an issue for [some] time to come.”

OpenAI’s countermeasures to defend against bias include a toxicity filter, which filters out certain language or topics. OpenAI is also working on ways to integrate human feedback in order to be able to specify which areas not to stray into. In addition, the team controls access to the tool so that certain negative uses of the tool will not be granted access.

“One of the reasons perhaps you haven’t seen like too many of these malicious users is because we do have an intensive review process internally,” Agarwal said. “The way we work is that every time you want to use GPT-3 in a product that would actually be deployed, you have to go through a process where a team — like, a team of humans — actually reviews how you want to use it. … Then, based on making sure that it is not something malicious, you will be granted access.”

Some of this is challenging, however — not least because bias isn’t always a clear-cut case of using certain words. Jervis notes that, at times, his GPT-3 rent messages can “tend towards stereotypical gender [or] class assumptions.” Left unattended, it might assume the subject’s gender identity on a rent letter, based on their family role or job. This may not be the most grievous example of A.I. bias, but it highlights what happens when large amounts of data are ingested and then probabilistically reassembled in a language model.

“Bias and the potential for explicit returns absolutely exist and require effort from developers to avoid,” Tyler Lastovich said. “OpenAI does flag potentially toxic results, but ultimately it does add a liability customers have to think hard about before putting the model into production. A specifically difficult edge case to develop around is the model’s propensity to lie — as it has no concept of true or false information.”

Language models and the future of A.I.

Nine months after its debut, GPT-3 is certainly living up to its billing as a game changer. What once was purely potential has shown itself to be potential realized. The number of intriguing use cases for GPT-3 highlights how a text-generating A.I. is a whole lot more versatile than that description might suggest.

Not that it’s the new kid on the block these days. Earlier this year, GPT-3 was overtaken as the biggest language model. Google Brain debuted a new language model with some 1.6 trillion parameters, making it nine times the size of OpenAI’s offering. Nor is this likely to be the end of the road for language models. These are extremely powerful tools — with the potential to be transformative to society, potentially for better and for worse.

Challenges certainly exist with these technologies, and they’re ones that companies like OpenAI, independent researchers, and others, must continue to address. But taken as a whole, it’s hard to argue that language models are not turning out to be one of the most interesting and important frontiers of artificial intelligence research.

Who would’ve thought text generators could be so profoundly important? Welcome to the future of artificial intelligence.

1 note

Text

2020’s Top PHP Frameworks for Web Development

Modern websites are getting more complex and intriguing day by day! The great news is that these websites offer more value and insight than ever. Now, if you’ve consulted a couple of reputed developers to discuss which framework is right for your web development project, most of them will have ended up citing a PHP framework. Straightaway, you might wonder what this framework is and why you need to invest in a PHP framework in 2020. Well, here’s the answer! Popularly referred to as Hypertext Preprocessor, PHP is a server-side open-source scripting language that’s used to build web applications. With the use of a PHP framework, developers can simplify complicated coding procedures and develop complex apps in a jiffy. This helps developers save time, money, resources and sanity.

Apart from this, using the best PHP framework has several noted perks! Check these out!

Cross-Platform

The best feature of PHP is that it can be fitted and moulded across various platforms. Moreover, the code can be used in mobile as well as website development without making developers anxious about the OS used.

Good Speed

In the competitive realm of web and app development, nobody has time to lose. Thankfully, a PHP framework comes with a plethora of tools, code snippets and features, which make sure the web apps developed are fast.

Stability and CMS Support

PHP frameworks have been in the web development game for quite a few years now. Hence, over time, thanks to their vast community of developers, a lot of bugs and shortcomings have been fixed, thereby increasing stability. Further, PHP offers inherent support for CMSs, offering a better presentation of data. For more detailed insight on PHP and why to use it, here’s a reference link: dotcominfoway.

Moving forward, now that the reasons behind using PHP are justified, it’s time to pick the best PHP framework for your website! However, here’s the catch! With numerous frameworks available in the market, how do you find the one that fits your needs best? To decide, you have to choose based on your development time-frame and your experience with similar frameworks. Nevertheless, to make things easier and less complex, this blog has listed the top-ranking PHP frameworks of 2020, which are tailor-made to fit your needs.

Give a Read:

Laravel

One of the top-quality PHP frameworks of 2020 to select for your web development project is Laravel! It was introduced back in 2011 and is loved by most developers because of its laid-back functioning. It aids developers in creating strong web applications by simplifying complex coding procedures. Further, it reduces issues faced with authentication, caching, routing and security.

Why use Laravel?

One of the primary reasons to pick Laravel is that it comes with a plethora of features that aid in customizing complicated applications. Some of the components it simplifies are authentication, MVC structural support, security, seamless data migration, routing, etc.

Modern websites need to run with speed and security. Thankfully, Laravel fits these requirements and is right for any progressive B2B website.

If your application has complicated backend requirements, then Laravel makes work easier thanks to Homestead, its comprehensive prepackaged Vagrant box.

Currently, no specific issues are noted with Laravel. However, because it is comparatively new, developers suggest keeping an eye open for bugs. To learn more about Laravel, here’s a link: coderseye

Symfony

If you're looking for a PHP platform that has a huge community of developers, then Symfony is the best bet for you. With a family of 300,000 developers, Symfony also supplies training courses in various languages, along with regularly updated blogs to keep the community of developers active. Aside from this, the platform is coveted by developers all around for its easy-going environment and progressive features. (So it's not surprising that Symfony has surpassed 500 million downloads.) Additionally, Symfony uses PHP libraries to ease development jobs like object configuration, creation of forms, templating, routing and authentication.

Other Reasons to use Symfony?

Symfony flaunts a lot of features, with long-term support release options.

Offers certification following training.

Harbours a lot of bundles within the framework.

Need to know more? See raygun.

CodeIgniter

CodeIgniter is best suited to the creation of dynamic websites, and it's one of the best PHP frameworks because of its small footprint. It comes with a collection of prebuilt modules that assist in building robust, reusable components.

Why Trust CodeIgniter?

A primary reason to trust CodeIgniter is that, unlike other platforms, it can be installed easily. It's straightforward and simple, and it provides a proper beginner's guide for newcomers.

If you want to develop lightweight applications, then CodeIgniter is a good choice because it is faster.

Other key characteristics of CodeIgniter are solid error handling, exceptional documentation, and built-in security tools, along with scalable apps.

The only shortcomings of CodeIgniter are that it doesn't have a built-in ORM and its releases are a tad irregular. As a result, it's not well suited to projects that need a high level of security.

CakePHP

Do you want to develop a website that's visually appealing and elegant? Then think no more and invest in CakePHP! One of the older frameworks, dating back to the 2000s, it's ideal for beginners. CakePHP is built around the CRUD (create, read, update, and delete) model, which allows it to develop simple and attractive web apps.

Why CakePHP is a Winner?

Easy installation is one reason for CakePHP's popularity. One only needs a copy of the framework along with a web server to set it up.

The best characteristics of CakePHP include improved security, quick builds, proper class inheritance, improved documentation, support portals via Cake Development Corporation etc.

CakePHP is ideal for commercial applications because of its advanced security measures, such as SQL injection prevention, cross-site scripting (XSS) protection and cross-site request forgery (CSRF) protection.
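To make two of those protections concrete, here is a minimal sketch in plain PHP of what XSS escaping and CSRF token checking involve. It is not CakePHP's own API: the helper names escapeHtml, createCsrfToken and isValidCsrfToken are hypothetical and exist only for illustration. A framework wires equivalent checks into its templates and forms for you.

```php
<?php
declare(strict_types=1);

// Hypothetical helper: escape untrusted text before echoing it into HTML (XSS defence).
function escapeHtml(string $value): string
{
    return htmlspecialchars($value, ENT_QUOTES | ENT_SUBSTITUTE, 'UTF-8');
}

// Hypothetical helper: create a random token to store in the session and embed in forms.
function createCsrfToken(): string
{
    return bin2hex(random_bytes(32)); // 32 random bytes become 64 hex characters
}

// Hypothetical helper: compare the stored and submitted tokens in constant time.
function isValidCsrfToken(string $expected, string $submitted): bool
{
    return hash_equals($expected, $submitted);
}

$token = createCsrfToken();

// The script tag is rendered as harmless text instead of being executed.
echo escapeHtml('<script>alert("x")</script>'), PHP_EOL;

// A form submission is accepted only when its token matches the stored one.
var_dump(isValidCsrfToken($token, $token));
var_dump(isValidCsrfToken($token, 'forged-token'));
```

SQL injection is usually handled the same way in spirit: user input is passed to the database as bound parameters rather than concatenated into the query string.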

Yii 2

Yii2 is a unique PHP framework in that it runs without the backing of a company. Rather, it's supported by a worldwide team of developers who work to serve the web development needs of businesses. The primary reason to pick Yii2 is that it offers quick results. Its design is compatible with AJAX, and thanks to its powerful caching support, Yii2 is very popular with developers.

Other Reasons to Use Yii2

AJAX support

Yii2 enjoys the backing of an extensive community of developers.

Easy handling of tools and error monitoring.

Hassle-free integration when working with third-party components.

Zend Framework

Zend is an object-oriented framework. This means that it can be extended to let developers add the functions they need to their projects. It is developed mainly on an agile methodology that helps developers deliver high-quality apps to their clients. The best part about Zend is that it can be customized and used for project-specific needs.

Reasons why Zend is a Winner:

If you have complex enterprise-level projects, for instance in the banking or IT sectors, then Zend is the best PHP framework to use.

It allows the installation of external libraries, which lets developers use any component they like. Further, this framework flaunts good documentation along with a huge community, so bugs and other errors can be fixed quickly.

Classic features of Zend include an easy-to-understand Cloud API, session management, MVC components and data encryption.

Excellent speed

To learn more about the Zend PHP framework, see raygun.

Phalcon

If speed is what you desire from your PHP framework, then Phalcon is a perfect choice. This framework is built as a C extension and is based on MVC, which makes it very fast. Using remarkably few resources, Phalcon is quick to process HTTP requests. It includes an ORM, auto-loading, MVC, caching options and much more. Other perks of Phalcon include excellent data storage tools, such as its own SQL dialect, PHQL, and Object Document Mapping for MongoDB. Apart from this, Phalcon simplifies building applications through international language support, form builders, template engines and more. On this note, Phalcon is ideal for building comprehensive web apps, REST APIs etc. To learn more about Phalcon, see coderseye.

FuelPHP

One of the newer PHP frameworks, released in 2011, is FuelPHP. It's flexible, full-stack and supports the MVC design pattern. It also has its very own hierarchical model view controller (HMVC) implementation to prevent content duplication across pages. This keeps it from using excess memory while saving time.

Why Consider FuelPHP?

The best characteristics of FuelPHP include a RESTful implementation, an upgraded caching system, a URL routing system, vulnerability protection and the HMVC implementation.

It provides enhanced security options that go beyond regular security measures.

It offers solutions for sophisticated projects that are large and complex.
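The idea behind HMVC, a parent controller delegating to a child controller instead of repeating its markup on every page, can be sketched in plain PHP. This is only an illustration of the pattern under simplifying assumptions, not FuelPHP's actual API: the classes SidebarController and PageController are hypothetical.

```php
<?php
declare(strict_types=1);

// Hypothetical child controller: owns one reusable fragment (a sidebar).
final class SidebarController
{
    public function render(string $topic): string
    {
        $safeTopic = htmlspecialchars($topic, ENT_QUOTES, 'UTF-8');
        return "<aside>Latest on {$safeTopic}</aside>";
    }
}

// Hypothetical parent controller: delegates to the child rather than duplicating its markup.
final class PageController
{
    private SidebarController $sidebar;

    public function __construct(SidebarController $sidebar)
    {
        $this->sidebar = $sidebar;
    }

    public function render(string $title): string
    {
        $safeTitle = htmlspecialchars($title, ENT_QUOTES, 'UTF-8');
        return "<main>{$safeTitle}</main>" . $this->sidebar->render('PHP');
    }
}

// Both pages reuse the same sidebar logic; it is written once.
$page = new PageController(new SidebarController());
echo $page->render('Home'), PHP_EOL;
echo $page->render('About'), PHP_EOL;
```

In a full HMVC framework the child would be a complete model-view-controller triad reached through an internal request, but the delegation shown here is the part that avoids duplicated content.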

On that note, now that you're aware of the various PHP frameworks, find the PHP framework of 2020 that will work best for your project. All the best!
