#amazon s3 with salesforce


#Amazon S3#Integrating Salesforce with Amazon S3#Offload Salesforce File to Amazon S3#Salesforce Amazon S3 Integration#Salesforce file management#Salesforce File Storage#Store Salesforce Files in S3#Sync Salesforce File With Amazon S3


How does cloud computing enable faster business scaling?

The Cloud Computing Market was valued at USD 605.3 billion in 2023 and is expected to reach USD 2,619.2 billion by 2032, growing at a CAGR of 17.7% from 2024 to 2032.
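These figures can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. The sketch below assumes compounding over the nine annual steps from the 2023 base value to 2032. That is our reading of the stated period, not something the report spells out.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Report figures in USD billions; the 9-year span (2023 -> 2032) is an assumption.
rate = cagr(605.3, 2619.2, 9)
print(f"Implied CAGR over 9 years: {rate:.1%}")

# Projecting the 2023 base forward at the stated 17.7% should land near the 2032 figure.
projected_2032 = 605.3 * (1 + 0.177) ** 9
print(f"Projected 2032 value: {projected_2032:.1f}")
```

Under that assumption the implied rate rounds to the stated 17.7%. Compounding over only eight years would give a rate of about 20%, so the CAGR appears to be measured from the 2023 base.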

The Cloud Computing Market is witnessing unprecedented growth as businesses across sectors rapidly adopt digital infrastructure to boost agility, scalability, and cost-efficiency. From small startups to global enterprises, organizations are shifting workloads to the cloud to enhance productivity, improve collaboration, and ensure business continuity.

U.S. Market Leads Cloud Innovation with Expanding Enterprise Adoption

The Cloud Computing Market continues to expand as emerging technologies such as AI, machine learning, and edge computing become more integrated into enterprise strategies. With increased reliance on hybrid and multi-cloud environments, providers are innovating faster to deliver seamless, secure, and flexible solutions.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/2779

Market Key Players:

Amazon Web Services (AWS) (EC2, S3)

Microsoft (Azure Virtual Machines, Azure Storage)

Google Cloud (Google Compute Engine, Google Kubernetes Engine)

IBM (IBM Cloud Private, IBM Cloud Kubernetes Service)

Oracle (Oracle Cloud Infrastructure, Oracle Autonomous Database)

Alibaba Cloud (Elastic Compute Service, Object Storage Service)

Salesforce (Salesforce Sales Cloud, Salesforce Service Cloud)

SAP (SAP HANA Enterprise Cloud, SAP Business Technology Platform)

VMware (VMware vCloud, VMware Cloud on AWS)

Rackspace (Rackspace Cloud Servers, Rackspace Cloud Files)

Dell Technologies (VMware Cloud Foundation, Virtustream Enterprise Cloud)

Hewlett Packard Enterprise (HPE) (HPE GreenLake, HPE Helion)

Tencent Cloud (Tencent Cloud Compute, Tencent Cloud Object Storage)

Adobe (Adobe Creative Cloud, Adobe Document Cloud)

Red Hat (OpenShift, Red Hat Cloud Infrastructure)

Cisco Systems (Cisco Webex Cloud, Cisco Intersight)

Fujitsu (Fujitsu Cloud Service K5, Fujitsu Cloud IaaS Trusted Public S5)

Huawei (Huawei Cloud ECS, Huawei Cloud OBS)

Workday (Workday Human Capital Management, Workday Financial Management)

Market Analysis

The global cloud computing landscape is being redefined by increasing demand for on-demand IT services, software-as-a-service (SaaS) platforms, and data-intensive workloads. In the U.S., cloud adoption is accelerating due to widespread digital transformation initiatives and investments in advanced technologies. Europe is also experiencing significant growth, driven by data sovereignty concerns and regulatory frameworks like GDPR, which are encouraging localized cloud infrastructure development.

Market Trends

Surge in hybrid and multi-cloud deployments

Integration of AI and ML for intelligent workload management

Growth of edge computing reducing latency in critical applications

Expansion of industry-specific cloud solutions (e.g., healthcare, finance)

Emphasis on cybersecurity and compliance-ready infrastructure

Rise of serverless computing for agile development and scalability

Sustainability focus driving adoption of green data centers

Market Scope

Cloud computing's scope spans nearly every industry, supporting digital-first strategies, automation, and real-time analytics. Organizations are leveraging cloud platforms not just for storage, but as a foundation for innovation, resilience, and global expansion.

On-demand infrastructure scaling for startups and enterprises

Support for remote workforces with secure virtual environments

Cross-border collaboration powered by cloud-native tools

Cloud-based disaster recovery solutions

AI-as-a-Service and Data-as-a-Service models gaining traction

Regulatory-compliant cloud hosting driving European market growth

Forecast Outlook

The future of the Cloud Computing Market is driven by relentless demand for agile digital infrastructure. As cloud-native technologies become standard in enterprise IT strategies, both U.S. and European markets are expected to play pivotal roles. Advanced cloud security, integrated data services, and sustainability-focused infrastructure will be at the forefront of upcoming innovations. Strategic alliances between cloud providers and industry players will further fuel momentum, especially in AI, 5G, and IoT-powered environments.

Access Complete Report: https://www.snsinsider.com/reports/cloud-computing-market-2779

Conclusion

As the digital economy accelerates, the Cloud Computing Market stands at the core of modern enterprise transformation. It empowers businesses with the tools to scale intelligently, respond rapidly to market shifts, and innovate without limits. For leaders across the U.S. and Europe, embracing cloud technology is no longer optional: it is the strategic engine driving competitive advantage and sustainable growth.

Related Reports:

U.S.A drives innovation as Data Monetization Market gains momentum

U.S.A Wealth Management Platform Market Poised for Strategic Digital Transformation

U.S.A Trade Management Software Market Sees Surge Amid Cross-Border Trade Expansion

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44 20 3290 5010 (UK)

Mail us: [email protected]


AWS vs. Google Cloud: Which Cloud Should Your Business Choose in 2025?

Adopting cloud computing is now essential for businesses that want to innovate, scale, and optimize their costs. Among the market leaders, Amazon Web Services (AWS) and Google Cloud stand out. But how do you choose between these two giants? This article compares their strengths, weaknesses, and use cases to help you make an informed decision.

1. AWS: The Cloud Pioneer

Launched in 2006, AWS dominates the market with a 32% share (source: Synergy Research Group, 2023). Its main strengths are its comprehensive ecosystem and its maturity.

Strengths:

Broad service portfolio: More than 200 services, including solutions for compute (EC2), storage (S3), databases (RDS, DynamoDB), AI/ML (SageMaker), and IoT.

Global reach: A presence in 32 geographic regions, ideal for businesses that need ultra-low latency.

Enterprise-ready: Governance tools (AWS Organizations), compliance (HIPAA, GDPR), and a huge partner community (e.g., Salesforce, SAP).

Hybrid and edge computing: Services such as AWS Outposts bring the cloud into on-premises data centers.

Preferred use cases:

Fast-growing startups (e.g., Netflix, Airbnb).

Projects that require extensive customization.

Businesses looking for an all-in-one platform.

2. Google Cloud: The Data and AI Expert

Google Cloud, although more recent (2011), focuses on technological innovation and its expertise in big data and machine learning. With roughly 11% market share, it appeals to customers through its simplicity and competitive pricing.

Strengths:

Data analytics and AI/ML: Tools such as BigQuery (real-time data analysis) and Vertex AI (a unified ML platform) are industry benchmarks.

Native Kubernetes: Google created Kubernetes, and Google Kubernetes Engine (GKE) remains the most mature solution for orchestrating containers.

Transparent pricing: Per-second billing and automatic discounts (sustained use discounts).

Sustainability: Google Cloud aims for net-zero emissions by 2030, an asset for environmentally conscious businesses.
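Sustained use discounts are applied incrementally: each further quarter of the month that a VM runs is billed at a lower fraction of the base rate. The sketch below uses the tiers Google has published for N1 machine types (100%, 80%, 60%, then 40% of the base rate per successive quarter of the month). Other machine families use different tiers, and the $0.10/hour rate and 730-hour month are illustrative assumptions, so check current Compute Engine pricing before relying on the numbers.

```python
# N1-style sustained use tiers: multiplier applied to each successive
# quarter of the month. Treat these as illustrative; other families differ.
N1_TIERS = (1.0, 0.8, 0.6, 0.4)


def sustained_use_cost(hours_used: float, base_rate: float,
                       hours_in_month: float = 730.0) -> float:
    """Monthly cost of one VM when usage tiers are billed incrementally."""
    if not 0 <= hours_used <= hours_in_month:
        raise ValueError("hours_used must be between 0 and hours_in_month")
    tier_size = hours_in_month / len(N1_TIERS)
    cost, remaining = 0.0, hours_used
    for multiplier in N1_TIERS:
        hours_in_tier = min(remaining, tier_size)
        cost += hours_in_tier * base_rate * multiplier
        remaining -= hours_in_tier
        if remaining <= 0:
            break
    return cost


# Hypothetical base rate of $0.10/hour, VM running all month.
full_month = sustained_use_cost(730, 0.10)
list_price = 730 * 0.10
print(f"Full month: ${full_month:.2f} vs ${list_price:.2f} at list price "
      f"({1 - full_month / list_price:.0%} discount)")
```

Averaging the four tiers gives 70% of list price for a full month, which is where the often-quoted "up to 30%" sustained use discount comes from.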

Preferred use cases:

Data-driven projects (e.g., Spotify for user analytics).

Cloud-native and containerized environments.

Businesses looking to integrate generative AI (e.g., tools built on Gemini).

3. Key Comparison: AWS vs. Google Cloud

| Criterion | AWS | Google Cloud |
|---|---|---|
| Compute | EC2 (maximum flexibility) | Compute Engine (simplicity) |
| Storage | S3 (market leader) | Cloud Storage (high performance) |
| Databases | Aurora, DynamoDB | Firestore, Bigtable |
| AI/ML | SageMaker (wide range of tools) | Vertex AI + TensorFlow integration |
| Pricing | Complex (but reserved instances available) | More predictable and flexible |
| Customer support | Paid (plans from $29/month) | Support included from $300/month |

4. Which Cloud Should You Choose?

Choose AWS if:

You need an exhaustive service catalog.

Your architecture is complex or requires a hybrid setup.

Compliance and security are priorities (regulated industries).

Choose Google Cloud if:

Your projects revolve around data, AI, or containers.

You want simple pricing and the latest innovations.

Sustainability and open source are key criteria.

5. 2024 Trends: Generative AI and Serverless

Both platforms are investing heavily in generative AI:

AWS offers Bedrock (access to models such as Anthropic's Claude).

Google Cloud is betting on Duet AI (an AI coding assistant) and Gemini.

On the serverless side, AWS Lambda and Google Cloud Functions remain competitive, but Google stands out with Cloud Run (serverless containers).

Conclusion

AWS and Google Cloud address different needs. AWS is the "safe" choice for a complete infrastructure, while Google Cloud shines in innovative projects focused on data and AI. To decide, weigh your priorities: costs, technical expertise, and long-term roadmap.

What about you: which platform do you use? Share your experience in the comments!


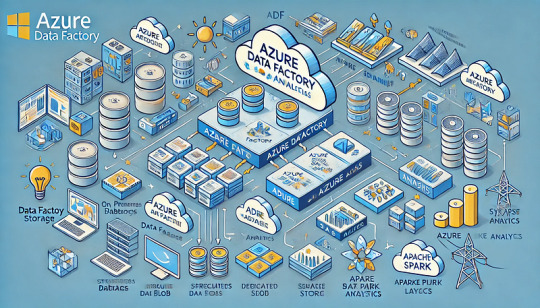

Exploring the Role of Azure Data Factory in Hybrid Cloud Data Integration

Introduction

In today’s digital landscape, organizations increasingly rely on hybrid cloud environments to manage their data. A hybrid cloud setup combines on-premises data sources, private clouds, and public cloud platforms like Azure, AWS, or Google Cloud. Managing and integrating data across these diverse environments can be complex.

This is where Azure Data Factory (ADF) plays a crucial role. ADF is a cloud-based data integration service that enables seamless movement, transformation, and orchestration of data across hybrid cloud environments.

In this blog, we’ll explore how Azure Data Factory simplifies hybrid cloud data integration, key use cases, and best practices for implementation.

1. What is Hybrid Cloud Data Integration?

Hybrid cloud data integration is the process of connecting, transforming, and synchronizing data between:

✅ On-premises data sources (e.g., SQL Server, Oracle, SAP)

✅ Cloud storage (e.g., Azure Blob Storage, Amazon S3)

✅ Databases and data warehouses (e.g., Azure SQL Database, Snowflake, BigQuery)

✅ Software-as-a-Service (SaaS) applications (e.g., Salesforce, Dynamics 365)

The goal is to create a unified data pipeline that enables real-time analytics, reporting, and AI-driven insights while ensuring data security and compliance.

2. Why Use Azure Data Factory for Hybrid Cloud Integration?

Azure Data Factory (ADF) provides a scalable, serverless solution for integrating data across hybrid environments. Some key benefits include:

✅ 1. Seamless Hybrid Connectivity

ADF supports more than 90 built-in connectors, covering on-premises, cloud, and SaaS sources.

It enables secure data movement by using the Self-Hosted Integration Runtime to access on-premises data sources.

✅ 2. ETL & ELT Capabilities

ADF allows you to design Extract, Transform, and Load (ETL) or Extract, Load, and Transform (ELT) pipelines.

Supports Azure Data Lake, Synapse Analytics, and Power BI for analytics.

✅ 3. Scalability & Performance

Being serverless, ADF automatically scales resources based on data workload.

It supports parallel data processing for better performance.

✅ 4. Low-Code & Code-Based Options

ADF provides a visual pipeline designer for easy drag-and-drop development.

It also supports custom transformations using Azure Functions, Databricks, and SQL scripts.

✅ 5. Security & Compliance

Uses Azure Key Vault for secure credential management.

Supports private endpoints, network security, and role-based access control (RBAC).

Complies with GDPR, HIPAA, and ISO security standards.

3. Key Components of Azure Data Factory for Hybrid Cloud Integration

1️⃣ Linked Services

Acts as a connection between ADF and data sources (e.g., SQL Server, Blob Storage, SFTP).

2️⃣ Integration Runtimes (IR)

Azure IR (Azure-hosted): For cloud data movement.

Self-Hosted IR: For on-premises to cloud integration.

Azure-SSIS IR: To run SQL Server Integration Services (SSIS) packages in ADF.
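A linked service is where the Self-Hosted IR and Key Vault come together. The sketch below builds a linked-service definition for an on-premises SQL Server as a Python dict and prints it as JSON. It follows the connection-string form documented for the ADF SQL Server connector: `connectVia` routes traffic through a self-hosted IR, and the password is an `AzureKeyVaultSecret` reference rather than an inline value. Every name in it (`OnPremSqlServer`, `MySelfHostedIR`, `MyKeyVault`, `sql-password`, the server, database, and user) is a hypothetical placeholder. Newer connector versions also accept separate server and database properties, so check the current connector reference before deploying.

```python
import json


def sql_server_linked_service(name: str, ir_name: str, key_vault_ls: str,
                              secret_name: str, server: str, database: str,
                              user: str) -> dict:
    """Build an ADF linked-service definition for an on-premises SQL Server."""
    connection_string = (
        f"Data Source={server};Initial Catalog={database};"
        f"Integrated Security=False;User ID={user};"
    )
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {
                "connectionString": connection_string,
                # Password is resolved from Azure Key Vault at runtime
                # instead of being stored in the linked service.
                "password": {
                    "type": "AzureKeyVaultSecret",
                    "store": {"referenceName": key_vault_ls,
                              "type": "LinkedServiceReference"},
                    "secretName": secret_name,
                },
            },
            # Route connections through the self-hosted IR installed on-premises.
            "connectVia": {"referenceName": ir_name,
                           "type": "IntegrationRuntimeReference"},
        },
    }


linked_service = sql_server_linked_service(
    "OnPremSqlServer", "MySelfHostedIR", "MyKeyVault", "sql-password",
    "sql01.corp.example", "SalesDb", "adf_reader")
print(json.dumps(linked_service, indent=2))
```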

3️⃣ Data Flows

Mapping Data Flow: No-code transformation engine.

Wrangling Data Flow: Excel-like Power Query transformation.

4️⃣ Pipelines

Orchestrate complex workflows using different activities like copy, transformation, and execution.
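Pipelines chain activities through dependencies. The Python below is a hedged sketch, not a production pipeline. It emits a definition in ADF's pipeline JSON shape: a Copy activity, then a Stored Procedure activity that runs only if the copy succeeds. All dataset, linked service, activity, and procedure names (`OnPremOrders`, `AzureSqlOrdersStaging`, `AzureSqlDb`, `dbo.usp_MergeStaging`) are hypothetical.

```python
import json


def copy_then_merge_pipeline(source_dataset: str, sink_dataset: str,
                             sql_linked_service: str) -> dict:
    """Two-step pipeline: copy on-premises rows, then run a stored procedure."""
    copy_activity = {
        "name": "CopyOnPremToAzureSql",
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "SqlServerSource"},
            "sink": {"type": "AzureSqlSink"},
        },
    }
    merge_activity = {
        "name": "MergeStagingIntoTarget",
        "type": "SqlServerStoredProcedure",
        # Runs only after the copy activity finishes successfully.
        "dependsOn": [{"activity": copy_activity["name"],
                       "dependencyConditions": ["Succeeded"]}],
        "linkedServiceName": {"referenceName": sql_linked_service,
                              "type": "LinkedServiceReference"},
        "typeProperties": {"storedProcedureName": "dbo.usp_MergeStaging"},
    }
    return {
        "name": "NightlyOnPremSync",
        "properties": {"activities": [copy_activity, merge_activity]},
    }


pipeline = copy_then_merge_pipeline("OnPremOrders", "AzureSqlOrdersStaging", "AzureSqlDb")
print(json.dumps(pipeline, indent=2))
```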

5️⃣ Triggers

Automate pipeline execution using schedule-based, event-based, or tumbling window triggers.
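Of the three trigger types, tumbling windows are the least obvious. They fire for a series of fixed-size, non-overlapping, contiguous time intervals, and each run receives its window's boundaries (in ADF, through `@trigger().outputs.windowStartTime` and `@trigger().outputs.windowEndTime`). The Python sketch below only models how such windows partition a time range. It is not ADF code, and the start time and one-hour size are arbitrary.

```python
from datetime import datetime, timedelta
from typing import Iterator, Tuple


def tumbling_windows(start: datetime, end: datetime,
                     size: timedelta) -> Iterator[Tuple[datetime, datetime]]:
    """Yield fixed-size, non-overlapping, contiguous [start, end) windows.

    Only complete windows are yielded, since a tumbling window fires
    once its interval has fully elapsed.
    """
    if size <= timedelta(0):
        raise ValueError("window size must be positive")
    window_start = start
    while window_start + size <= end:
        yield window_start, window_start + size
        window_start += size


windows = list(tumbling_windows(datetime(2024, 1, 1, 0, 0),
                                datetime(2024, 1, 1, 3, 30),
                                timedelta(hours=1)))
for window_start, window_end in windows:
    # Each pipeline run would receive this pair as parameters.
    print(window_start.isoformat(), "->", window_end.isoformat())
```

Because each window has explicit boundaries, a failed run can be rerun for exactly its interval, which is the main reason to choose tumbling windows over a plain schedule for incremental loads.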

4. Common Use Cases of Azure Data Factory in Hybrid Cloud

🔹 1. Migrating On-Premises Data to Azure

Extracts data from SQL Server, Oracle, and SAP and moves it to Azure SQL Database or Synapse Analytics.

🔹 2. Real-Time Data Synchronization

Syncs on-prem ERP, CRM, or legacy databases with cloud applications.

🔹 3. ETL for Cloud Data Warehousing

Moves structured and unstructured data to Azure Synapse or Snowflake for analytics.

🔹 4. IoT and Big Data Integration

Collects IoT sensor data, processes it in Azure Data Lake, and visualizes it in Power BI.

🔹 5. Multi-Cloud Data Movement

Transfers data between Amazon S3, Google BigQuery, and Azure Blob Storage.

5. Best Practices for Hybrid Cloud Integration Using ADF

✅ Use Self-Hosted IR for Secure On-Premises Data Access

✅ Optimize Pipeline Performance using partitioning and parallel execution

✅ Monitor Pipelines using Azure Monitor and Log Analytics

✅ Secure Data Transfers with Private Endpoints & Key Vault

✅ Automate Data Workflows with Triggers & Parameterized Pipelines
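Partitioning plus parallel execution is the idea behind the Copy activity's dynamic-range partition option for SQL sources: split a numeric key range into slices and copy the slices concurrently. The sketch below models that idea in plain Python. `copy_slice` is a hypothetical stand-in for the real data movement, and the key bounds and partition count are arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def split_range(lower: int, upper: int, parts: int) -> List[Tuple[int, int]]:
    """Split the inclusive key range [lower, upper] into up to `parts` contiguous slices."""
    if parts < 1 or upper < lower:
        raise ValueError("need parts >= 1 and upper >= lower")
    total = upper - lower + 1
    parts = min(parts, total)
    base, extra = divmod(total, parts)
    slices, start = [], lower
    for i in range(parts):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first slices
        slices.append((start, start + size - 1))
        start += size
    return slices


def copy_slice(bounds: Tuple[int, int]) -> int:
    """Hypothetical placeholder: pretend to copy rows whose key falls in `bounds`."""
    low, high = bounds
    # A real implementation might run SELECT ... WHERE id BETWEEN low AND high.
    return high - low + 1


slices = split_range(1, 1_000_000, 4)
with ThreadPoolExecutor(max_workers=4) as pool:
    copied = sum(pool.map(copy_slice, slices))
print(slices, copied)
```

Slices work best when keys are roughly evenly distributed; a skewed key column leaves some workers idle while one slice does most of the work.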

6. Conclusion

Azure Data Factory plays a critical role in hybrid cloud data integration by providing secure, scalable, and automated data pipelines. Whether you are migrating on-premises data, synchronizing real-time data, or integrating multi-cloud environments, ADF simplifies complex ETL processes with low-code and serverless capabilities.

By leveraging ADF’s integration runtimes, automation, and security features, organizations can build a resilient, high-performance hybrid cloud data ecosystem.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


Amazon QuickSight Training Course | AWS QuickSight Online Training

AWS QuickSight vs. Tableau: Which Data Visualization Tool is Right for You?

If you're considering Amazon QuickSight Training, you're likely exploring advanced business intelligence and data visualization tools to elevate your analytical capabilities. AWS QuickSight and Tableau are two leading solutions in this domain, each with unique features catering to diverse user needs. Whether you're a business looking for cost efficiency or a professional seeking robust features, choosing the right tool is crucial.

Overview of AWS QuickSight and Tableau

AWS QuickSight, Amazon's cloud-based BI solution, is designed to integrate seamlessly with other AWS services. It enables users to analyze data and share insights through interactive dashboards. On the other hand, Tableau, now part of Salesforce, is a veteran in the BI space, renowned for its user-friendly interface and extensive capabilities in data analysis.

AWS QuickSight shines with its cost-effectiveness and integration with Amazon Web Services, making it a favorite for businesses already using AWS. Tableau, however, excels in providing detailed, customizable dashboards and advanced analytics, catering to users needing more granular control.

Ease of Use

For beginners, AWS QuickSight offers a simpler, more intuitive interface, making it an excellent choice for users who prefer to avoid steep learning curves. Many users who undergo AWS QuickSight Online Training appreciate its guided learning paths and ease of implementation, especially when managing data from AWS sources. Its automated insights feature allows for faster decision-making, a key advantage for businesses with tight deadlines.

Tableau, while robust, has a steeper learning curve. Advanced users or those familiar with similar tools will find its extensive customization options invaluable. However, for new users, investing time in training is necessary to harness its full potential.

Integration Capabilities

AWS QuickSight integrates effortlessly with Amazon’s ecosystem, such as S3, Redshift, and RDS. This makes it a preferred choice for businesses already operating within the AWS framework. By enrolling in Amazon QuickSight Training, users can master these integrations, leveraging them to drive better decision-making.
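As one example of the S3 integration, QuickSight can read files directly from S3 through a manifest file that lists object URIs or URI prefixes, along with upload settings. The sketch below builds a manifest in the format QuickSight documents for S3 data sources: `fileLocations` with `URIs` or `URIPrefixes`, plus `globalUploadSettings`. The bucket and paths are hypothetical, and only a minimal subset of settings is shown.

```python
import json
from typing import Iterable


def quicksight_s3_manifest(uris: Iterable[str] = (), uri_prefixes: Iterable[str] = (),
                           file_format: str = "CSV", has_header: bool = True) -> dict:
    """Build a QuickSight manifest pointing at specific S3 objects and/or prefixes."""
    uris, uri_prefixes = list(uris), list(uri_prefixes)
    file_locations = []
    if uris:
        file_locations.append({"URIs": uris})
    if uri_prefixes:
        file_locations.append({"URIPrefixes": uri_prefixes})
    if not file_locations:
        raise ValueError("provide at least one URI or URI prefix")
    return {
        "fileLocations": file_locations,
        "globalUploadSettings": {
            "format": file_format,
            "delimiter": ",",
            "textqualifier": "\"",
            # The manifest expects the header flag as a string, not a boolean.
            "containsHeader": "true" if has_header else "false",
        },
    }


manifest = quicksight_s3_manifest(
    uris=["s3://example-sales-bucket/2024/orders.csv"],
    uri_prefixes=["s3://example-sales-bucket/2025/"])
print(json.dumps(manifest, indent=2))
```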

Tableau, on the other hand, offers broad integration capabilities beyond cloud services, supporting various databases, third-party apps, and cloud platforms like Google Cloud and Azure. This flexibility makes it ideal for companies with heterogeneous IT infrastructures.

Scalability and Performance

AWS QuickSight boasts impressive scalability, making it a go-to option for businesses experiencing rapid growth. Its pay-per-session pricing model keeps costs tied to actual usage as user demand scales, a feature highly valued by startups and SMBs, where cost management is crucial. QuickSight's serverless architecture is designed to keep performance consistent as user volume grows, a point emphasized in AWS QuickSight Online Training modules.

Tableau provides excellent performance for static environments but may require additional resources for scaling, especially in enterprise setups. Tableau’s licensing can also be cost-prohibitive for smaller teams, making AWS QuickSight a more economical alternative in such scenarios.

Customization and Advanced Features

For users seeking deep customization and advanced analytics, Tableau has the edge. Its vast library of pre-built visualizations and tools like Tableau Prep for data cleaning are unmatched. However, AWS QuickSight has been catching up with features like SPICE (Super-fast, Parallel, In-memory Calculation Engine) and ML Insights. These innovations enable QuickSight to deliver insights faster and support advanced analytical needs, which are integral to any Amazon QuickSight Training curriculum.

Cost Considerations

AWS QuickSight is known for its cost-effective pricing, particularly its pay-per-session model, which eliminates the need for upfront investments. This makes it accessible to businesses of all sizes. Tableau, while offering rich features, follows a subscription-based pricing model that can be expensive, especially for large teams or enterprise setups. For organizations looking to maximize their ROI, AWS QuickSight Online Training can help users extract maximum value from this tool.
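The difference between usage-based pricing with a per-reader cap and a flat per-user subscription comes down to simple arithmetic. The prices below are hypothetical placeholders, not current AWS or Tableau list prices. The point is only how a per-reader monthly cap bounds the cost of heavy users while occasional users cost very little.

```python
from typing import Iterable


def per_session_cost(sessions_per_reader: Iterable[int], price_per_session: float,
                     monthly_cap: float) -> float:
    """Monthly cost when each reader pays per session, capped per reader."""
    return sum(min(n * price_per_session, monthly_cap) for n in sessions_per_reader)


def flat_seat_cost(readers: int, price_per_seat: float) -> float:
    """Monthly cost of a flat per-user subscription."""
    return readers * price_per_seat


# Hypothetical month: 50 occasional readers (2 sessions each), 10 daily users (40 each).
usage = [2] * 50 + [40] * 10
session_total = per_session_cost(usage, price_per_session=0.30, monthly_cap=5.00)
seat_total = flat_seat_cost(len(usage), price_per_seat=15.00)
print(f"Per-session model: ${session_total:.2f}")
print(f"Flat per-seat model: ${seat_total:.2f}")
```

With mostly occasional viewers, the capped per-session model stays far below the per-seat model. As more readers hit the cap, the gap narrows toward cap times reader count, so the comparison depends on your real usage mix.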

Key Use Cases

AWS QuickSight: Ideal for organizations deeply integrated with AWS, looking for scalable, cost-effective BI tools.

Tableau: Best suited for businesses requiring highly detailed analytics and those with diverse IT infrastructures.

Why Is Training Essential?

For both tools, training plays a crucial role in maximizing their potential. Whether it's mastering AWS QuickSight’s seamless AWS integrations or Tableau’s intricate visualization capabilities, a structured learning path is essential. Enrolling in Amazon QuickSight Training or other specialized courses ensures users can confidently navigate features, optimize workflows, and derive actionable insights.

Conclusion

Both AWS QuickSight and Tableau have unique strengths, making them suitable for different scenarios. AWS QuickSight’s simplicity, cost-effectiveness, and integration with the AWS ecosystem make it an excellent choice for small to medium-sized businesses and startups. Tableau, with its advanced customization and broader integration capabilities, is a better fit for enterprises needing sophisticated analytics.

By enrolling in Amazon QuickSight Training or AWS QuickSight Online Training, users can develop the skills necessary to unlock the full potential of these tools. Ultimately, the choice between AWS QuickSight and Tableau depends on your specific business needs, budget, and the level of complexity required in your data visualization efforts. Both are powerful tools that can transform how businesses interact with and interpret their data, driving smarter decisions and better outcomes.

Visualpath offers AWS QuickSight Online Training for the next generation of intelligent business applications. Learn AWS QuickSight in Hyderabad from industry experts and gain hands-on experience with our interactive program, accessible globally, including in the USA, UK, Canada, Dubai, and Australia. Daily recordings and presentations are available for later review. To book a free demo session or for more information, call +91-9989971070.

Key Points: AWS, Amazon S3, Amazon Redshift, Amazon RDS, Amazon Athena, AWS Glue, Amazon DynamoDB, AWS IoT Analytics, ETL Tools.

Attend Free Demo

Call Now: +91-9989971070

Whatsapp: https://www.whatsapp.com/catalog/919989971070

Visit our Blog: https://visualpathblogs.com/

Visit: https://www.visualpath.in/online-amazon-quicksight-training.html

#Amazon QuickSight Training#AWS QuickSight Online Training#Amazon QuickSight Course Online#AWS QuickSight Training in Hyderabad#Amazon QuickSight Training Course#AWS QuickSight Training


Amazon S3: How It Works and Its Advantages

Amazon S3 is object storage designed to let you store and access any amount of data from anywhere.

What is Amazon S3?

Amazon Simple Storage Service (Amazon S3) is an object storage service offering industry-leading scalability, data availability, security, and performance. Millions of customers of all sizes and industries use it to store, manage, analyze, and protect any amount of data for virtually any use case, including data lakes, cloud-native applications, and mobile apps. Cost-effective storage classes and easy-to-use management features let you optimize costs, organize and analyze data, and configure fine-grained access controls to meet specific business and regulatory requirements.

How it works

Amazon S3 stores data as objects within buckets. An object is a file together with its metadata, and a bucket is a container for objects. To store data in Amazon S3, you first create a bucket, choosing a bucket name and an AWS Region, and then upload your data to that bucket as objects. Each object has a key (or key name) that uniquely identifies it within the bucket.
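Although keys often look like file paths, a bucket's namespace is flat: a "folder" is just a shared key prefix. When you list objects with a `Prefix` and a `Delimiter` (as the ListObjectsV2 API allows), keys that contain the delimiter after the prefix are rolled up into common prefixes. The pure-Python sketch below models that grouping on an in-memory list of keys so that it runs without AWS credentials. The keys are made up, and this is not the boto3 API.

```python
from typing import List, Tuple


def list_objects(keys: List[str], prefix: str = "",
                 delimiter: str = "") -> Tuple[List[str], List[str]]:
    """Model S3 listing: return (object keys, common prefixes) under `prefix`."""
    contents, common_prefixes = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter and delimiter in rest:
            # Everything up to and including the first delimiter becomes one "folder".
            common_prefixes.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            contents.append(key)
    return contents, sorted(common_prefixes)


keys = ["reports/2024/q1.csv", "reports/2024/q2.csv",
        "reports/2025/q1.csv", "reports/summary.pdf", "logo.png"]
contents, prefixes = list_objects(keys, prefix="reports/", delimiter="/")
print(contents, prefixes)
```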

You can configure S3 features to suit your use case. For instance, S3 Versioning keeps multiple versions of an object in the same bucket, so you can restore objects that were accidentally deleted or overwritten. Buckets and the objects in them are private by default and can be accessed only by principals that have been explicitly granted access permissions. You can manage access with S3 Access Points, bucket policies, AWS Identity and Access Management (IAM) policies, and access control lists (ACLs).
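Versioning's restore behavior is easiest to see in a toy model. In a versioning-enabled bucket, an overwrite adds a new version, and a simple DELETE adds a delete marker instead of erasing data. Removing that marker makes the previous version current again. The in-memory class below mimics those rules for illustration only. It is not the S3 API, and its sequential version IDs are a simplification, since S3 generates opaque IDs.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, List, Optional


@dataclass
class Version:
    version_id: str
    data: Optional[bytes]  # None represents a delete marker

    @property
    def is_delete_marker(self) -> bool:
        return self.data is None


@dataclass
class VersionedBucket:
    """Toy model of a versioning-enabled bucket (not the S3 API)."""
    versions: Dict[str, List[Version]] = field(default_factory=dict)
    _ids: count = field(default_factory=count)

    def put(self, key: str, data: bytes) -> str:
        version = Version(f"v{next(self._ids)}", data)
        self.versions.setdefault(key, []).append(version)
        return version.version_id

    def delete(self, key: str) -> str:
        # A simple DELETE adds a delete marker; older versions are kept.
        marker = Version(f"v{next(self._ids)}", None)
        self.versions.setdefault(key, []).append(marker)
        return marker.version_id

    def get(self, key: str, version_id: Optional[str] = None) -> Optional[bytes]:
        """Return object data, or None if missing, deleted, or a delete marker."""
        history = self.versions.get(key, [])
        if version_id is not None:
            return next((v.data for v in history if v.version_id == version_id), None)
        if not history or history[-1].is_delete_marker:
            return None
        return history[-1].data

    def delete_version(self, key: str, version_id: str) -> None:
        # Permanently removing one version; removing a delete marker "undeletes".
        self.versions[key] = [v for v in self.versions.get(key, [])
                              if v.version_id != version_id]


bucket = VersionedBucket()
first = bucket.put("report.txt", b"draft")
bucket.put("report.txt", b"final")          # overwrite keeps the draft as an older version
marker = bucket.delete("report.txt")        # accidental delete adds a marker
after_delete = bucket.get("report.txt")     # object now appears deleted
old_copy = bucket.get("report.txt", first)  # older version is still retrievable
bucket.delete_version("report.txt", marker)
restored = bucket.get("report.txt")         # latest real version is current again
print(after_delete, old_copy, restored)
```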

Advantages of Amazon S3

Amazon S3 delivers high performance and can store virtually any amount of data, up to exabytes. Because Amazon S3 is fully elastic, it automatically grows and shrinks as you add and delete data. You pay only for what you use, with no need to provision storage in advance.

Durability and availability

Amazon S3 offers industry-leading availability and highly durable storage. Backed by what AWS describes as the strongest SLAs in the cloud, S3's architecture is designed to deliver 99.99% availability and 99.999999999% (11 nines) data durability by default.
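These percentages are easier to interpret as expected values. The sketch below treats the durability figure as a per-object annual loss probability and the availability figure as a fraction of a 365-day year. Both are simplifying assumptions about design targets, not guarantees, and the S3 SLA is a separate commitment. The 10-million-object case matches the way AWS's S3 FAQ frames 11 nines.

```python
# Design targets quoted above for S3 Standard.
DURABILITY = 0.99999999999   # 11 nines
AVAILABILITY = 0.9999        # 99.99%

MINUTES_PER_YEAR = 365 * 24 * 60


def expected_annual_object_loss(num_objects: int, durability: float = DURABILITY) -> float:
    """Expected objects lost per year, treating each object's loss as independent."""
    return num_objects * (1 - durability)


def downtime_budget_minutes(availability: float = AVAILABILITY) -> float:
    """Minutes per year a service meeting `availability` may be unavailable."""
    return MINUTES_PER_YEAR * (1 - availability)


loss = expected_annual_object_loss(10_000_000)
print(f"Expected losses per year: {loss:.4f} "
      f"(about one object every {1 / loss:,.0f} years)")
print(f"Yearly downtime budget at 99.99%: {downtime_budget_minutes():.1f} minutes")
```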

Data protection and security

Protect your data with strong security, compliance, and access control features. Amazon S3 is private, secure, and encrypted by default, and it offers many auditing options to monitor requests for access to your resources.
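A common way to enforce encryption in transit on top of these defaults is a bucket policy that denies any request not made over HTTPS, using the `aws:SecureTransport` condition key. The sketch below generates such a policy. `example-bucket` is a hypothetical name.

```python
import json


def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy that rejects any request not made over TLS (HTTPS)."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


policy = deny_insecure_transport_policy("example-bucket")
print(json.dumps(policy, indent=2))
```

You could save the output as `policy.json` and apply it with `aws s3api put-bucket-policy --bucket example-bucket --policy file://policy.json`, or pass `json.dumps(policy)` to boto3's `put_bucket_policy`.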

Best performance at the lowest cost

With S3's automated data lifecycle management and a range of storage classes offering strong price performance for any application, you can cost-effectively store large volumes of data whether it is accessed frequently, infrequently, or rarely. Amazon S3 provides the throughput, latency, flexibility, and resilience to ensure that storage never limits performance.
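Lifecycle management is configured as rules that move objects to cheaper storage classes as they age and eventually expire them. The sketch below builds a configuration in the shape accepted by boto3's `put_bucket_lifecycle_configuration`. Objects under a prefix move to S3 Standard-IA after 30 days, then to S3 Glacier Flexible Retrieval (storage class `GLACIER`) after 90 days, and are deleted after a year. The prefix and day counts are illustrative assumptions.

```python
import json


def tiering_lifecycle(prefix: str, ia_after_days: int = 30,
                      glacier_after_days: int = 90, expire_after_days: int = 365) -> dict:
    """Lifecycle rule: Standard -> Standard-IA -> Glacier Flexible Retrieval -> expire."""
    # S3 requires objects to be at least 30 days old before moving to STANDARD_IA;
    # the later steps are simply kept in increasing order here.
    if not 30 <= ia_after_days < glacier_after_days < expire_after_days:
        raise ValueError("need 30 <= ia_after_days < glacier_after_days < expire_after_days")
    return {
        "Rules": [
            {
                "ID": f"tier-and-expire-{prefix.rstrip('/')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


config = tiering_lifecycle("logs/")
print(json.dumps(config, indent=2))
```

The result could be applied with `s3.put_bucket_lifecycle_configuration(Bucket="example-bucket", LifecycleConfiguration=config)`, where `example-bucket` is again a placeholder.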

Amazon S3 pricing

The S3 free tier, available for 12 months, includes 5 GB of storage in the S3 Standard storage class, 20,000 GET requests, 2,000 PUT, COPY, POST, or LIST requests, and 100 GB of data transfer out each month.

You pay only for what you actually use, with no minimum fee. Amazon S3 costs are made up of storage, requests and data retrieval, data transfer and acceleration, data management and insights, replication, and transform and query features.
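To estimate a bill, start with how much usage falls outside the free tier. The sketch below subtracts the monthly allowances listed above from a month of usage and keeps only the billable remainder. Treating each allowance as a simple monthly offset is a simplification, so check the current pricing page for how each one is applied. Per-unit prices vary by Region and aren't given here, so the sketch stops at billable quantities. The usage figures are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonthlyUsage:
    storage_gb: float       # S3 Standard storage
    get_requests: int
    put_requests: int       # PUT, COPY, POST, or LIST requests
    transfer_out_gb: float


# Monthly free-tier allowances as listed above.
FREE_TIER = MonthlyUsage(storage_gb=5, get_requests=20_000,
                         put_requests=2_000, transfer_out_gb=100)


def billable_usage(usage: MonthlyUsage, allowance: MonthlyUsage = FREE_TIER) -> MonthlyUsage:
    """Usage left after subtracting the free-tier allowance (never below zero)."""
    return MonthlyUsage(
        storage_gb=max(0.0, usage.storage_gb - allowance.storage_gb),
        get_requests=max(0, usage.get_requests - allowance.get_requests),
        put_requests=max(0, usage.put_requests - allowance.put_requests),
        transfer_out_gb=max(0.0, usage.transfer_out_gb - allowance.transfer_out_gb),
    )


result = billable_usage(MonthlyUsage(storage_gb=12, get_requests=15_000,
                                     put_requests=3_500, transfer_out_gb=40))
print(result)
```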

Use cases

Build a data lake

A data lake can hold structured and unstructured data at any scale. High-performance computing, AI, machine learning, and data analytics can then run against it to get the most value from that data.

A secure Amazon S3 data lake lets Salesforce users search, retrieve, and analyze all their data.

Back up and restore critical data

With S3's robust replication capabilities, data protection through AWS Backup, and a range of AWS Partner Network solutions, you can meet your recovery time objective (RTO), recovery point objective (RPO), and compliance requirements.

Ancestry uses the Amazon S3 Glacier storage classes to restore terabytes of images in hours rather than days.
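Replication is the S3 feature most directly tied to RPO. New objects are copied asynchronously to another bucket, so replication lag bounds how much recent data a replica might be missing. The sketch below builds a replication configuration in the shape expected by boto3's `put_bucket_replication`. The IAM role ARN, account ID, and bucket names are hypothetical. Versioning must already be enabled on both buckets, and the empty `Filter` (apply the rule to all objects) should be checked against the S3 replication configuration reference.

```python
import json


def replication_config(role_arn: str, destination_bucket: str,
                       storage_class: str = "STANDARD") -> dict:
    """Replicate every new object in the source bucket to `destination_bucket`.

    Both buckets must have versioning enabled for S3 Replication to work.
    """
    return {
        "Role": role_arn,
        "Rules": [
            {
                "ID": "replicate-all",
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {},  # empty filter: the rule applies to all objects
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {
                    "Bucket": f"arn:aws:s3:::{destination_bucket}",
                    "StorageClass": storage_class,
                },
            }
        ],
    }


replication = replication_config(
    "arn:aws:iam::123456789012:role/example-replication-role",
    "example-backup-bucket")
print(json.dumps(replication, indent=2))
```

It could be applied with `s3.put_bucket_replication(Bucket="example-source-bucket", ReplicationConfiguration=replication)`. Setting `StorageClass` to a colder class on the destination is one way to keep a cheap off-site copy.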

Data archiving at the most affordable price

To cut expenses, remove operational hassles, and obtain fresh insights, move your archives to the Amazon S3 Glacier storage classes.

Using Amazon S3 Glacier Instant Retrieval, the UK public service broadcaster BBC safely moved their 100-year-old flagship archive.

Make use of your data

Amazon S3 stores more than 350 trillion objects (exabytes of data) and averages over 100 million requests per second across virtually every use case, which makes it a natural starting point for your generative AI journey.

Grendene is employing a data lake built on Amazon web service S3 to develop a generative AI-based virtual assistant for its sales force.

Read more on Govindhtech.com

#AmazonWebServiceS3#AmazonS3#AI#AWSBackup#S3storage#dataavailability#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


Top 10 Cloud Services Providers in 2024

As we move into 2024, cloud computing continues to play a critical role in digital transformation for businesses worldwide. From startups to large enterprises, cloud services provide scalable infrastructure, cost-effective solutions, and the ability to innovate rapidly. But with so many providers in the market, it can be challenging to choose the right one.

In this article, we will explore the Top 10 Cloud Services Providers in 2024, highlighting their strengths, features, and why they stand out. Whether you're looking for public, private, or hybrid cloud solutions, these companies offer diverse options to meet your needs.

1. Amazon Web Services (AWS)

AWS remains the dominant force in the cloud services market in 2024, offering a wide array of services, including computing power, storage, and AI tools. With its global reach and continuous innovation, AWS is a preferred choice for both startups and large corporations.

Key Features:

Elastic Compute Cloud (EC2)

Simple Storage Service (S3)

Machine Learning and AI services

Serverless computing (AWS Lambda)

2. Microsoft Azure

Microsoft Azure has seen massive growth and remains a top cloud provider in 2024. Its integration with Microsoft products, especially Microsoft 365 (formerly Office 365), makes Azure highly attractive for businesses. Azure's hybrid cloud solutions are also favored by enterprises seeking flexibility.

Key Features:

Virtual Machines (VMs)

Azure SQL Database

DevOps Tools

AI and Machine Learning capabilities

3. Google Cloud Platform (GCP)

Google Cloud Platform (GCP) stands out for its big data, machine learning, and AI capabilities. GCP is a go-to provider for organizations looking to leverage AI for competitive advantage. With continuous investments in its cloud infrastructure, GCP offers high performance, reliability, and innovation.

Key Features:

BigQuery for data analytics

Kubernetes Engine for container management

AI and machine learning tools (TensorFlow)

Comprehensive security features

4. IBM Cloud

IBM Cloud focuses on hybrid and multi-cloud environments, making it a preferred provider for enterprises with complex IT needs. Its integration with IBM's Watson AI and blockchain technologies sets it apart, especially in industries like healthcare, finance, and manufacturing.

Key Features:

Hybrid cloud solutions

AI and Watson services

Blockchain as a Service (BaaS)

Secure and scalable infrastructure

5. Oracle Cloud Infrastructure (OCI)

Oracle Cloud Infrastructure (OCI) is designed for high-performance computing workloads and large-scale data operations. Oracle Cloud stands out for its database management solutions and its ability to handle critical workloads for enterprises.

Key Features:

Autonomous Database

Oracle Cloud Applications

AI-driven analytics

Hybrid and multi-cloud capabilities

6. Alibaba Cloud

Alibaba Cloud, the largest cloud provider in China, is expanding its footprint globally. With its robust e-commerce and big data capabilities, Alibaba Cloud is ideal for businesses looking to tap into the Asian market.

Key Features:

Global Content Delivery Network (CDN)

Advanced AI and data analytics

Elastic Compute Service (ECS)

Strong presence in the Asia-Pacific region

7. Salesforce Cloud

Salesforce Cloud is known for its CRM solutions but has expanded into a broader portfolio of cloud services. Salesforce's customer-centric solutions are ideal for businesses looking to enhance customer engagement through cloud-based tools.

Key Features:

Salesforce CRM

Marketing Cloud and Commerce Cloud

AI-powered Einstein analytics

Integration with third-party applications

8. VMware Cloud

VMware Cloud is a leader in virtualization and offers robust multi-cloud and hybrid cloud solutions. With its partnerships with AWS, Microsoft, and Google, VMware Cloud enables businesses to run, manage, and secure applications across cloud environments.

Key Features:

vSphere for virtualization

VMware Tanzu for Kubernetes management

CloudHealth for cost management

Extensive hybrid cloud capabilities

9. SAP Cloud Platform

SAP Cloud Platform provides specialized solutions for enterprises, particularly in the areas of ERP and business management. SAP’s cloud offerings are designed to help businesses with digital transformation by integrating cloud services into their existing SAP environments.

Key Features:

SAP S/4HANA for enterprise resource planning

Business intelligence tools

AI and machine learning for predictive analytics

Enterprise-level security and compliance

10. DigitalOcean

DigitalOcean focuses on simplicity and affordability, making it a great choice for startups, developers, and small businesses. DigitalOcean is best known for its developer-friendly cloud infrastructure and its ease of use for hosting and scaling applications.

Key Features:

Droplets (cloud servers)

Kubernetes for container orchestration

Managed databases

Affordable and easy-to-use interface

FAQs on Cloud Services Providers in 2024

Q1: What are cloud services providers?

Cloud services providers are companies that offer computing services over the internet. These services can include storage, servers, databases, networking, software, analytics, and intelligence.

Q2: How do I choose the right cloud services provider?

Choosing the right provider depends on your business needs, such as scalability, security, pricing, support, and specific tools or services required. It's important to compare providers based on your goals.

Q3: Is AWS better than Google Cloud or Microsoft Azure?

Each provider has its strengths. AWS is known for its extensive services and global reach, Azure integrates well with Microsoft products, and Google Cloud excels in AI and data analytics. The best choice depends on your specific use case.

Q4: Can small businesses benefit from cloud services?

Yes, cloud services offer scalable and cost-effective solutions for small businesses. Providers like DigitalOcean and Google Cloud offer affordable plans tailored to startups and small businesses.

Q5: What are hybrid cloud solutions?

Hybrid cloud solutions combine private and public clouds, allowing businesses to manage their data and applications across multiple environments. This offers flexibility and better control over resources.

What is Cloud Computing?

Cloud computing refers to the delivery of computing services over the internet (the cloud), enabling users to access and use data, software, and hardware remotely without having to manage physical infrastructure. Cloud computing offers scalability, flexibility, and cost efficiency, making it a key driver of modern business transformation.

Conclusion

Choosing the right cloud services provider is critical for ensuring the success of your business in 2024. Whether you're looking for AI-driven analytics, secure hybrid solutions, or cost-effective infrastructure, these top 10 providers offer reliable and innovative solutions to meet your needs. As cloud computing evolves, staying up-to-date with the latest offerings from these providers can give your business a competitive edge.


Mastering Data Migration at Quadrant: Processes, Challenges, and Strategic Approaches

Data migration is more than a technical necessity at Quadrant Technologies; it is a strategic move that can significantly influence an organization's agility and efficiency. As businesses grow and evolve, so does the need to move vast amounts of data seamlessly and securely. This exploration of data migration covers its processes, types, challenges, and best practices, providing the understanding needed to ensure successful transitions.

Storage Migration: Moving data between storage devices, often to improve performance or reduce costs. Example: Transitioning from traditional on-premises storage to cloud storage solutions like Amazon S3.

Database Migration: Transferring data between different database systems or versions. Example: Migrating from an Oracle database to MySQL.

Application Migration: Moving an application and its data from one environment to another. Example: Shifting a CRM system from an on-premises server to a cloud-based platform like Salesforce.

Cloud Migration: Moving data and applications to a cloud environment. Example: Transferring enterprise applications and data to a public cloud provider such as AWS.

Business Process Migration: Migrating data related to core business processes, such as CRM or ERP systems. Example: Consolidating ERP systems post-merger.
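Whatever the migration type, the core of a database migration (for example, Oracle to MySQL) is the same: copy the data in batches, then validate it before cutover. The sketch below illustrates that pattern under stated assumptions: two in-memory SQLite databases are hypothetical stand-ins for the source and target systems, and the `customers` table and the `migrate_table` and `table_checksum` helpers are illustrative names, not Quadrant's tooling. Validation compares row counts and a SHA-256 digest of the rows; comparing `repr` values only works when both sides return the same Python types, so a real cross-engine migration would need to normalize values first.

```python
import hashlib
import sqlite3


def table_checksum(conn: sqlite3.Connection, table: str) -> tuple:
    """Return (row_count, SHA-256 hex digest) over all rows ordered by id."""
    digest = hashlib.sha256()
    count = 0
    # `table` is interpolated directly, so it must be a trusted identifier.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY id"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def migrate_table(src: sqlite3.Connection, dst: sqlite3.Connection,
                  table: str, batch_size: int = 500) -> int:
    """Copy all rows of `table` from src to dst in batches, then validate."""
    cursor = src.execute(f"SELECT * FROM {table} ORDER BY id")
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        placeholders = ", ".join("?" for _ in batch[0])
        dst.executemany(f"INSERT INTO {table} VALUES ({placeholders})", batch)
    dst.commit()
    # Post-migration validation: row counts and content digests must match.
    src_summary = table_checksum(src, table)
    dst_summary = table_checksum(dst, table)
    if src_summary != dst_summary:
        raise RuntimeError(f"validation failed for table {table!r}")
    return dst_summary[0]


# Usage: two in-memory SQLite databases stand in for the old and new systems.
SCHEMA = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
src.execute(SCHEMA)
dst.execute(SCHEMA)
src.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(i, f"customer {i}", f"user{i}@example.com") for i in range(1, 1201)],
)
src.commit()
print(migrate_table(src, dst, "customers"))  # 1200
```

Copying in fixed-size batches keeps memory bounded on large tables, and failing loudly on a checksum mismatch stops a bad migration before users are switched over.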


Dell Boomi AWS

Dell Boomi and AWS: A Match Made in Cloud Integration Heaven

In today’s rapidly evolving digital world, businesses constantly seek ways to streamline operations, improve efficiency, and unlock data-driven insights. This is where the powerful combination of Dell Boomi and Amazon Web Services (AWS) shines, transforming the way organizations unlock the true potential of their data and applications.

What is Dell Boomi?

Dell Boomi is a leading cloud-based integration platform as a service (iPaaS). Consider it the digital glue connecting various applications, data sources, and systems, whether in the cloud or on-premises. Boomi's intuitive, low-code interface simplifies the creation of complex integration flows, empowering enterprises to break down data silos and automate business processes.

Why Partner Boomi with AWS?

AWS provides a vast range of cloud computing services, offering unparalleled scalability, reliability, and global reach. When you integrate Boomi with AWS, you gain access to:

Comprehensive Cloud Services: Effortlessly connect your Boomi integrations with services like Amazon S3 (storage), Amazon EC2 (compute), Amazon RDS (databases), and many more.

Robust Data Management: Facilitate secure and efficient data movement between AWS services and other endpoints, enabling seamless data pipelines and analytics.

Scalability: Boomi’s cloud-native architecture and AWS’s elasticity allow your integrations to scale effortlessly to meet changing business demands.

Global Availability: AWS’s global footprint ensures that your Boomi integrations can support data flows and business processes worldwide.

Critical Use Cases for Dell Boomi and AWS

Hybrid Cloud Integration: Bridge the gap between your on-premises systems and AWS cloud services, facilitating smooth data migration and seamless business processes across hybrid environments.

SaaS Application Integration: Effortlessly connect popular SaaS applications like Salesforce, NetSuite, Workday, and others to your AWS ecosystem, streamlining data flows and unlocking valuable business insights.

IoT Data Orchestration: Manage data flow from IoT devices into AWS services, enabling real-time insights, predictive analytics, and enhanced operational efficiency and decision-making.

Modern Data Warehousing: Simplify data extraction, transformation, and loading (ETL) processes into Amazon Redshift or other data warehouse solutions for in-depth business intelligence.

API Development and Management: Build and expose Boomi APIs to streamline interactions between AWS services, external systems, and partner ecosystems.

Getting Started with Boomi on AWS

Dell Boomi provides multiple options for running its iPaaS on AWS:

Boomi Marketplace: Discover pre-built integrations and connectors on the AWS Marketplace that accelerate your integration projects.

Boomi Molecule: A clustered version of Boomi's runtime engine (the Atom) that can be deployed on AWS for scalable and fault-tolerant integration processes.

Boomi Pay-As-You-Go: Explore Boomi’s capabilities with a flexible consumption model, which is ideal for starting small with your integration needs.

The Future is Integrated

The partnership between Dell Boomi and AWS empowers businesses to break down data silos, automate workflows, and drive innovation through seamless cloud integration. As organizations continue their cloud journeys, Boomi and AWS will play an increasingly critical role in shaping the future of connected, data-driven enterprises.


You can find more information about Dell Boomi in this Dell Boomi Link

Conclusion:

Unogeeks is the No.1 IT Training Institute for Dell Boomi Training. Anyone Disagree? Please drop in a comment

You can check out our other latest blogs on Dell Boomi here – Dell Boomi Blogs

You can check out our Best In Class Dell Boomi Details here – Dell Boomi Training

Follow & Connect with us:

———————————-

For Training inquiries:

Call/Whatsapp: +91 73960 33555


Our Website ➜ https://unogeeks.com

Follow us:

Instagram: https://www.instagram.com/unogeeks

Facebook: https://www.facebook.com/UnogeeksSoftwareTrainingInstitute

Twitter: https://twitter.com/unogeek


SNOWFLAKE TECH

Snowflake: Revolutionizing Data in the Cloud

In the ever-evolving world of big data, cloud-based data warehousing has become a game-changer, and Snowflake stands out for its unique architecture and innovative features. Let's explore what makes Snowflake a compelling solution for modern businesses.

What is Snowflake?

At its core, Snowflake is a fully managed, cloud-based data warehouse-as-a-service (DWaaS). Translated from tech jargon, this means:

Cloud-native: Snowflake is built from the ground up to operate within cloud infrastructure providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

SaaS: As with Gmail or Salesforce, you don't have to install or manage hardware or software; Snowflake handles everything.

Data warehouse focus: Snowflake is optimized to store and analyze vast volumes of structured and semi-structured data, making it ideal for business intelligence and data-driven decision-making.

Snowflake’s Distinctive Architecture

What sets Snowflake apart is how it separates storage, computing, and cloud services:

Storage: Your data is compressed and stored in the underlying cloud provider's object storage (think Amazon S3 on AWS).

Compute: Virtual warehouses (essentially compute clusters) spin up on-demand to process queries and workloads – you only pay when they’re active!

Cloud Services: This layer handles the coordination, authentication, metadata management, and all the logistics that make Snowflake tick.

Key Advantages of Snowflake

Elasticity: Compute resources can effortlessly scale up or down in response to changing demand. You won’t run out of horsepower during peak times or overpay for idle capacity.

Near-zero maintenance: No software patches, upgrades, or hardware headaches. Snowflake’s got it covered.

Pay-as-you-go: You're billed per second for compute usage (with a 60-second minimum each time a warehouse starts), ensuring cost-efficient operations.

Data Sharing: Securely share live, governed data sets within your organization or with external partners without costly replication.

Performance: Optimized for complex queries, Snowflake delivers lightning-fast insights on vast datasets.
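The pay-as-you-go point is easier to reason about with numbers. The sketch below estimates the credits one warehouse run consumes, assuming Snowflake's standard warehouse rates (1 credit per hour for X-Small, doubling with each size step) and the 60-second minimum per warehouse start; check Snowflake's current documentation before relying on it. It covers standard virtual-warehouse compute only: storage, serverless features, and the dollar price per credit (which varies by edition and region) are not modeled, and `credits_for_run` is a hypothetical helper name.

```python
# Credits per hour for standard virtual warehouses, by size (assumed rates).
CREDITS_PER_HOUR = {
    "X-Small": 1, "Small": 2, "Medium": 4, "Large": 8,
    "X-Large": 16, "2X-Large": 32, "3X-Large": 64, "4X-Large": 128,
}
MINIMUM_BILLED_SECONDS = 60  # minimum charged each time a warehouse starts


def credits_for_run(size: str, runtime_seconds: float) -> float:
    """Credits consumed by one warehouse run: per-second billing, 60 s minimum."""
    if runtime_seconds < 0:
        raise ValueError("runtime_seconds must be non-negative")
    billed_seconds = max(runtime_seconds, MINIMUM_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


print(credits_for_run("Medium", 1800))  # 2.0 (half an hour at 4 credits/hour)
print(credits_for_run("Medium", 30))    # billed as 60 s: 4 * 60 / 3600 credits
```

Because each size step doubles the hourly rate, a query that runs twice as fast on the next size up costs roughly the same number of credits, which is why resizing a warehouse is often a speed decision rather than a cost decision.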

Popular Use Cases

Snowflake isn’t just theoretical coolness; it’s solving real-world problems:

Centralized data hubs: Consolidate information from across the enterprise for a unified view of your business.

Advanced analytics & AI/ML: Train machine learning models on large volumes of data or power sophisticated dashboards.

Customer 360: Create comprehensive customer profiles by combining data from diverse sources.

Data Lakes: Snowflake can complement your data lake by acting as a powerful query engine.

Is Snowflake For You?

If you’re dealing with growing data volumes, need agility in your analytics, and want to minimize IT overhead, Snowflake is worth considering. However, it is essential to assess your specific requirements and compare Snowflake with other cloud data platforms.

Beyond the Buzzword

Snowflake is not a magic bullet but a mighty powerful tool for the right scenarios. By understanding its architecture, strengths, and use cases, you can decide if it can propel your data strategy into the cloud-driven future.


You can find more information about Snowflake in this Snowflake

Conclusion:

Unogeeks is the No.1 IT Training Institute for SAP Training. Anyone Disagree? Please drop in a comment

You can check out our other latest blogs on Snowflake here – Snowflake Blogs

You can check out our Best In Class Snowflake Details here – Snowflake Training


Cloud Computing Market Size, Share, Analysis, Forecast, and Growth Trends to 2032: Fintech and Cloud Integration Reshape Banking

Cloud Computing Market was valued at USD 605.3 billion in 2023 and is expected to reach USD 2619.2 billion by 2032, growing at a CAGR of 17.7% from 2024-2032.

The Cloud Computing Market is witnessing unprecedented growth as businesses across the USA continue to shift their operations to flexible, scalable, and cost-efficient digital infrastructures. Accelerated by hybrid work models, rising data demands, and evolving enterprise needs, cloud adoption is becoming central to digital transformation strategies across industries such as healthcare, finance, retail, and manufacturing.

Rapid Digital Transformation Fuels Growth in U.S. Cloud Computing Sector

U.S. Cloud Computing Market was valued at USD 178.66 billion in 2023 and is expected to reach USD 677.09 billion by 2032, growing at a CAGR of 15.95% from 2024-2032.
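The growth rates quoted above follow the standard compound annual growth rate (CAGR) formula, (end / start)^(1 / years) - 1. The short check below assumes the 2023 valuation is the base and the 2032 figure is the end point, which gives nine compounding years (2024 through 2032); `cagr` is an illustrative helper name.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# 2023 base value -> 2032 forecast is nine compounding years (2024..2032).
global_rate = cagr(605.3, 2619.2, 9)   # global market, USD billion
us_rate = cagr(178.66, 677.09, 9)      # U.S. market, USD billion
print(f"Global: {global_rate:.1%}")    # Global: 17.7%
print(f"U.S.:   {us_rate:.2%}")
```

Both results land within a few hundredths of a percentage point of the stated 17.7% and 15.95%, so the valuations and growth rates are internally consistent.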

The Cloud Computing Market is being propelled by demand for agility, real-time collaboration, and secure data management. U.S. enterprises are increasingly turning to cloud-native solutions to support AI, machine learning, and big data analytics while optimizing IT resources. This shift is enabling companies to reduce overhead, increase resilience, and innovate faster in competitive environments.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/2779

Market Key Players:

Amazon Web Services (AWS) (EC2, S3)

Microsoft (Azure Virtual Machines, Azure Storage)

Google Cloud (Google Compute Engine, Google Kubernetes Engine)

IBM (IBM Cloud Private, IBM Cloud Kubernetes Service)

Oracle (Oracle Cloud Infrastructure, Oracle Autonomous Database)

Alibaba Cloud (Elastic Compute Service, Object Storage Service)

Salesforce (Salesforce Sales Cloud, Salesforce Service Cloud)

SAP (SAP HANA Enterprise Cloud, SAP Business Technology Platform)

VMware (VMware vCloud, VMware Cloud on AWS)

Rackspace (Rackspace Cloud Servers, Rackspace Cloud Files)

Dell Technologies (VMware Cloud Foundation, Virtustream Enterprise Cloud)

Hewlett Packard Enterprise (HPE) (HPE GreenLake, HPE Helion)

Tencent Cloud (Tencent Cloud Compute, Tencent Cloud Object Storage)

Adobe (Adobe Creative Cloud, Adobe Document Cloud)

Red Hat (OpenShift, Red Hat Cloud Infrastructure)

Cisco Systems (Cisco Webex Cloud, Cisco Intersight)

Fujitsu (Fujitsu Cloud Service K5, Fujitsu Cloud IaaS Trusted Public S5)

Huawei (Huawei Cloud ECS, Huawei Cloud OBS)

Workday (Workday Human Capital Management, Workday Financial Management)

Market Analysis

The U.S. cloud computing landscape is dominated by major public cloud providers but continues to see rising interest in hybrid and multi-cloud models. The market is shaped by the need for enterprise scalability, security, and compliance with evolving federal data regulations. Growth is also influenced by increasing adoption of edge computing and the expansion of cloud services beyond storage into areas like SaaS, PaaS, and IaaS.

Surge in demand for data-driven decision-making

Expansion of digital-first business models

Growing investments in cloud security and compliance

Migration of legacy systems to modern cloud frameworks

Government and public sector embracing secure cloud infrastructure

Market Trends

Accelerated growth of hybrid and multi-cloud adoption

Edge computing gaining momentum for low-latency applications

Rise in cloud-native application development and containerization

Integration of AI and machine learning into cloud platforms

Increasing demand for zero-trust security architecture

Green cloud initiatives aimed at sustainability

Cloud-as-a-Service models driving operational flexibility

Market Scope

The Cloud Computing Market in the USA spans a wide spectrum of industries and service models, with expanding potential in both enterprise and SMB segments. Its adaptability and real-time innovation capabilities make it a core pillar of modern IT strategy.

Cloud-first strategies across public and private sectors

Rapid adoption in healthcare, finance, and education

API-driven service expansion and integration

On-demand scalability for digital product launches

High ROI for cloud migration and automation projects

Strong potential in disaster recovery and remote operations

Enhanced collaboration tools supporting distributed teams

Forecast Outlook

The outlook for the U.S. Cloud Computing Market remains highly optimistic, fueled by ongoing digital transformation and innovation. As organizations prioritize business continuity, data agility, and customer experience, cloud platforms will remain the foundation of enterprise technology. Expect continued evolution through AI-enhanced services, quantum-safe security, and industry-specific cloud solutions tailored for performance and compliance. The momentum points toward an increasingly intelligent and interconnected cloud ecosystem reshaping how U.S. businesses operate.

Access Complete Report: https://www.snsinsider.com/reports/cloud-computing-market-2779

Conclusion

In today’s fast-paced digital economy, the Cloud Computing Market is not just a trend—it’s the infrastructure of innovation. U.S. companies that harness its full potential are not only future-proofing operations but redefining how they deliver value. As agility, speed, and security become non-negotiable, cloud computing stands out as the strategic engine powering competitive advantage and sustainable growth.

Related reports:

Explore the growth of the healthcare cloud computing market in the US

Analyze trends shaping the microserver industry in the United States

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)


Amazon Announces “Amazon Q,” the Company’s Generative AI Assistant

New Post has been published on https://thedigitalinsider.com/amazon-announces-amazon-q-the-companys-generative-ai-assistant/


In a striking move within the competitive landscape of productivity software and generative AI chatbots, Amazon recently unveiled its latest innovation: “Amazon Q.” This announcement, made at the AWS re:Invent conference in Las Vegas, marks Amazon’s assertive stride into a domain where tech giants like Microsoft and Google have already established a significant presence.

The introduction of Amazon Q is not just a new product launch; it’s a statement of Amazon’s ambition and technological prowess in the rapidly evolving field of AI-driven software solutions.

The emergence of Amazon Q can be viewed in the context of the recent success of Microsoft-backed OpenAI’s ChatGPT. Since its launch, ChatGPT has revolutionized the way generative AI is perceived, offering human-like text generation based on brief inputs.

Amazon’s Q steps into this arena, signaling not only a challenge to Microsoft’s growing influence in AI but also an effort to redefine the capabilities of chatbots in the professional world. This move by Amazon could be seen as a strategic endeavor to capture a share of the market that has been intrigued and captivated by the possibilities of AI, as demonstrated by ChatGPT’s popularity.


Features and Accessibility of Amazon Q

Amazon Q emerges as a sophisticated chatbot designed to seamlessly integrate with Amazon Web Services (AWS), offering an array of functionalities tailored for the modern workplace. Central to its appeal is the ability to assist users in navigating the expansive ecosystem of AWS, offering real-time troubleshooting and guidance. This integration signifies a leap in making AWS’s complex array of services more accessible and user-friendly.

In terms of availability, Amazon has launched Amazon Q with an initial free preview, allowing users to experience its capabilities without immediate cost. This approach mirrors the common strategy in software services, where early adopters are given a chance to test and provide feedback.

Following this period, it will transition to a tiered pricing model. The standard business user tier is set at $20 per person per month, while a more feature-rich tier for developers and IT professionals will cost $25 per person per month. This pricing strategy positions the chatbot competitively against similar offerings.


Integration and Functionality

The integration of Amazon Q extends beyond AWS, encompassing popular communication applications such as Salesforce’s Slack and various text-editing tools used by software developers. This versatility underscores Amazon’s ambition to make the chatbot an indispensable part of the professional toolkit, facilitating smoother workflows and enhanced communication.

A standout feature of Amazon Q is its ability to automate modifications to source code, significantly reducing the workload for developers. This functionality is not just a time-saver; it represents a shift in how AI can be leveraged to enhance coding efficiency and accuracy.

Furthermore, it boasts the capability to connect with over 40 enterprise systems. This extensive connectivity means users can access and interact with information across various platforms like Microsoft 365, Dropbox, Salesforce, Zendesk, and AWS’s own S3 data-storage service. The ability to upload and query documents within these interactions further enhances the chatbot’s utility, making it a comprehensive tool for managing a wide array of business functions.

Amazon’s Expansion with Amazon Q

The launch of Amazon Q marks a significant milestone in Amazon’s strategic expansion into AI-assisted productivity software. Its integration with AWS, competitive pricing, and innovative features position it as a formidable player in a market dominated by tech giants like Microsoft and Google. Amazon Q’s potential to streamline AWS service navigation and enhance the efficiency of various professional tasks is notable.

Looking ahead, Amazon Q could have far-reaching implications for Amazon and the broader AI-assisted productivity software sector. It has the potential to not only diversify Amazon’s product offerings but also to redefine how businesses interact with AI tools. The success of Amazon Q could pave the way for more advanced AI applications in the workplace, further blurring the lines between human and machine-led tasks. As the AI landscape continues to evolve, Amazon Q could emerge as a key driver in shaping the future of AI integration in professional environments.

#Accessibility#ai#ai assistant#Amazon#Amazon Web Services#applications#approach#Artificial Intelligence#AWS#Business#Capture#challenge#chatbot#chatbots#chatGPT#code#coding#communication#comprehensive#conference#connectivity#data#developers#dropbox#Editing#efficiency#enterprise#Features#Future#generative


Explore how ADF integrates with Azure Synapse for big data processing.

How Azure Data Factory (ADF) Integrates with Azure Synapse for Big Data Processing

Azure Data Factory (ADF) and Azure Synapse Analytics form a powerful combination for handling big data workloads in the cloud.

ADF enables data ingestion, transformation, and orchestration, while Azure Synapse provides high-performance analytics and data warehousing. Their integration supports massive-scale data processing, making them ideal for big data applications like ETL pipelines, machine learning, and real-time analytics.

Key Aspects of ADF and Azure Synapse Integration for Big Data Processing

1. Data Ingestion at Scale

ADF acts as the ingestion layer, allowing seamless data movement into Azure Synapse from multiple structured and unstructured sources, including:

Cloud Storage: Azure Blob Storage, Amazon S3, Google Cloud Storage

On-Premises Databases: SQL Server, Oracle, MySQL, PostgreSQL

Streaming Data Sources: Azure Event Hubs, IoT Hub, Kafka

SaaS Applications: Salesforce, SAP, Google Analytics

🚀 ADF’s parallel processing capabilities and built-in connectors make ingestion highly scalable and efficient.

2. Transforming Big Data with ETL/ELT

ADF enables large-scale transformations using two primary approaches:

ETL (Extract, Transform, Load): Data is transformed in ADF’s Mapping Data Flows before loading into Synapse.

ELT (Extract, Load, Transform): Raw data is loaded into Synapse, where transformation occurs using SQL scripts or Apache Spark pools within Synapse.

🔹 Use Case: Cleaning and aggregating billions of rows from multiple sources before running machine learning models.
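To make the ETL/ELT distinction concrete, the sketch below runs the same cleanup and aggregation both ways. It is an illustration of the two patterns only, not ADF or Synapse code: an in-memory SQLite database is a hypothetical stand-in for Synapse, plain Python stands in for a Mapping Data Flow, and the sample sales rows and table names are made up.

```python
import sqlite3

# Hypothetical raw extract: amounts arrive as untrimmed text, one is missing.
RAW_ROWS = [
    ("2024-01-01", "north", " 120.50 "),
    ("2024-01-01", "south", "80"),
    ("2024-01-02", "north", None),
    ("2024-01-02", "south", "99.5"),
]


def etl(conn: sqlite3.Connection) -> None:
    """ETL: clean and aggregate in the pipeline, then load only the result."""
    totals = {}
    for _day, region, amount in RAW_ROWS:
        if amount is None:
            continue  # drop bad records before they reach the warehouse
        totals[region] = totals.get(region, 0.0) + float(amount.strip())
    conn.execute("CREATE TABLE sales_by_region_etl (region TEXT, total REAL)")
    conn.executemany("INSERT INTO sales_by_region_etl VALUES (?, ?)",
                     sorted(totals.items()))


def elt(conn: sqlite3.Connection) -> None:
    """ELT: load raw rows unchanged, then transform with SQL inside the target."""
    conn.execute("CREATE TABLE raw_sales (day TEXT, region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", RAW_ROWS)
    conn.execute("""
        CREATE TABLE sales_by_region_elt AS
        SELECT region, SUM(CAST(TRIM(amount) AS REAL)) AS total
        FROM raw_sales
        WHERE amount IS NOT NULL
        GROUP BY region
    """)


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
etl_result = conn.execute(
    "SELECT region, total FROM sales_by_region_etl ORDER BY region").fetchall()
elt_result = conn.execute(
    "SELECT region, total FROM sales_by_region_elt ORDER BY region").fetchall()
print(etl_result)  # [('north', 120.5), ('south', 179.5)]
```

Both paths produce the same table. The trade-off is where the compute runs: ETL ships only cleaned data, while ELT keeps the raw rows in the warehouse so they can be re-transformed later with SQL or Spark.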

3. Scalable Data Processing with Azure Synapse

Azure Synapse provides powerful data processing features:

Dedicated SQL Pools: Optimized for high-performance queries on structured big data.

Serverless SQL Pools: Enables ad-hoc queries without provisioning resources.

Apache Spark Pools: Runs distributed big data workloads using Spark.

💡 ADF pipelines can orchestrate Spark-based processing in Synapse for large-scale transformations.

4. Automating and Orchestrating Data Pipelines

ADF provides pipeline orchestration for complex workflows by:

Automating data movement between storage and Synapse.

Scheduling incremental or full data loads for efficiency.

Integrating with Azure Functions, Databricks, and Logic Apps for extended capabilities.

⚙️ Example: ADF can trigger data processing in Synapse when new files arrive in Azure Data Lake.

5. Real-Time Big Data Processing

ADF enables near real-time processing by:

Capturing streaming data from sources like IoT devices and event hubs.

Running incremental loads to process only new data.

Using Change Data Capture (CDC) to track updates in large datasets.

📊 Use Case: Ingesting IoT sensor data into Synapse for real-time analytics dashboards.
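A common way to run incremental loads is the high-watermark pattern: store the largest change timestamp copied so far, and on each run copy only rows newer than it. The sketch below shows that pattern with in-memory SQLite databases as hypothetical stand-ins for the source system and Synapse; the `readings` and `watermark` tables and the `incremental_load` helper are illustrative assumptions, not ADF APIs. In ADF the same steps would typically be Lookup, Copy, and Stored Procedure activities.

```python
import sqlite3

SCHEMA = ("CREATE TABLE readings (id INTEGER PRIMARY KEY, device TEXT, "
          "value REAL, modified_at TEXT)")


def incremental_load(source: sqlite3.Connection, target: sqlite3.Connection) -> int:
    """Copy rows changed since the stored watermark; return the number copied."""
    old_mark = target.execute(
        "SELECT last_value FROM watermark WHERE table_name = 'readings'"
    ).fetchone()[0]
    new_mark = source.execute("SELECT MAX(modified_at) FROM readings").fetchone()[0]
    if new_mark is None or new_mark <= old_mark:
        return 0  # nothing new since the last run
    rows = source.execute(
        "SELECT id, device, value, modified_at FROM readings "
        "WHERE modified_at > ? AND modified_at <= ?",
        (old_mark, new_mark),
    ).fetchall()
    # Upsert by primary key so rows updated at the source overwrite old copies.
    target.executemany("INSERT OR REPLACE INTO readings VALUES (?, ?, ?, ?)", rows)
    target.execute(
        "UPDATE watermark SET last_value = ? WHERE table_name = 'readings'",
        (new_mark,),
    )
    target.commit()
    return len(rows)


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute(SCHEMA)
target.execute(SCHEMA)
target.execute("CREATE TABLE watermark (table_name TEXT PRIMARY KEY, last_value TEXT)")
target.execute("INSERT INTO watermark VALUES ('readings', '1970-01-01T00:00:00')")
target.commit()

source.executemany("INSERT INTO readings VALUES (?, ?, ?, ?)", [
    (1, "sensor-a", 21.5, "2024-05-01T10:00:00"),
    (2, "sensor-b", 19.0, "2024-05-01T10:00:05"),
    (3, "sensor-a", 21.7, "2024-05-01T10:01:00"),
])
source.commit()
first_run = incremental_load(source, target)   # 3: everything is new
second_run = incremental_load(source, target)  # 0: nothing changed

source.execute("INSERT INTO readings VALUES (4, 'sensor-b', 19.4, '2024-05-01T10:02:00')")
source.commit()
third_run = incremental_load(source, target)   # 1: only the new reading
```

ISO-8601 timestamps compare correctly as text, which keeps the sketch simple. One known limitation of any timestamp watermark is that rows arriving late with an older `modified_at` are skipped; CDC avoids this by reading the change log instead.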

6. Security & Compliance in Big Data Pipelines

Data Encryption: Protects data at rest and in transit.

Private Link & VNet Integration: Restricts data movement to private networks.

Role-Based Access Control (RBAC): Manages permissions for users and applications.

🔐 Example: ADF can use managed identity to securely connect to Synapse without storing credentials.

Conclusion

The integration of Azure Data Factory with Azure Synapse Analytics provides a scalable, secure, and automated approach to big data processing.

By leveraging ADF for data ingestion and orchestration and Synapse for high-performance analytics, businesses can unlock real-time insights, streamline ETL workflows, and handle massive data volumes with ease.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


Everything as a Service To Reduce Costs, Risks & Complexity

What is Everything as a Service?

The phrase “everything as a service” (XaaS) refers to the expanding practice of providing a range of goods, equipment, and technological services via the internet. It’s basically a catch-all term for all the different “as-a-service” models that have surfaced in the field of cloud computing.

Everything as a Service examples

XaaS, or “Everything as a Service,” refers to the wide range of software and services delivered online. Many different services can fill the “X” in XaaS. Common examples include:

Software as a Service (SaaS): Software delivered over the internet with no local installation. Examples: Salesforce, Google Workspace, and Office 365.

Infrastructure as a Service (IaaS): Provides virtualized computing resources over the internet. Examples: AWS, Azure, and GCP.

Platform as a Service (PaaS): Provides hardware and software tools for application development online. Google App Engine and Azure App Service are examples.

Desktop as a Service (DaaS): Makes virtual desktops available remotely. Examples: Horizon Cloud and Amazon WorkSpaces.

Backend as a Service (BaaS): Connects web and mobile app developers to cloud storage and APIs. AWS Amplify and Firebase are examples.

Database as a Service (DBaaS): Saves users from setting up and maintaining physical databases. Examples: Google Cloud SQL and Amazon RDS.

Function as a Service (FaaS): A serverless computing service that lets customers run code in response to events without managing servers. Examples: AWS Lambda and Google Cloud Functions.

Storage as a Service (STaaS): Provides internet-based storage. Examples: Dropbox, Google Drive, and Amazon S3.

Network as a Service (NaaS): Virtualizes network services for scale and flexibility. SD-WAN is one example.
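To make the FaaS model concrete: with AWS Lambda's Python runtime, you write a handler function that Lambda calls with an `event` (the triggering payload, deserialized from JSON) and a `context` object carrying runtime metadata; there is no server code to write or manage. The handler name is configurable (`lambda_handler` is a common choice), and everything else below is a hypothetical example: the order-total logic and the `order_id`, `items`, `price`, and `quantity` fields are assumptions, and the `statusCode`/`body` return shape assumes the function sits behind an API Gateway proxy integration.

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda invokes once per event (context is unused here)."""
    items = event.get("items", [])
    # Hypothetical business logic: total up the line items in an order event.
    total = sum(item["price"] * item["quantity"] for item in items)
    return {
        "statusCode": 200,
        "body": json.dumps({"order_id": event.get("order_id"), "total": total}),
    }


# Local usage: call the handler directly with a sample event and no context.
response = lambda_handler(
    {"order_id": "A-1", "items": [{"price": 2.5, "quantity": 4},
                                  {"price": 10, "quantity": 1}]},
    None,
)
print(response["body"])  # {"order_id": "A-1", "total": 20.0}
```

Because the handler is an ordinary function, it can be tested locally like this; the platform decides when and how many copies to run, and billing is based on invocations and execution time rather than idle servers.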

By letting companies outsource parts of their IT infrastructure to third parties, XaaS helps them cut expenses, scale up, and simplify operations. Because users pay only for what they use, they can optimize both resources and costs.

IBM XaaS

Enterprises are requiring models that measure business outcomes instead of just IT results in order to spur rapid innovation. These businesses are under growing pressure to restructure their IT estates in order to cut costs, minimise risk, and simplify operations.

Everything as a Service (XaaS), which streamlines processes, lowers risk, and speeds up digital transformation, is emerging as a potential answer to these problems. By 2028, 80% of IT buyers will give priority to using Everything as a Service for critical workloads that need flexibility in order to maximise IT investment, enhance IT operations capabilities, and meet important sustainability KPIs, according to an IDC white paper sponsored by IBM.

Going forward, IBM has identified three crucial observations that will continue to influence how firms develop in the coming years.

IT should be made simpler to improve business results and ROI

Enterprises are under a lot of pressure to modernise their old IT infrastructures. The applications they are building today are the ones they will need to modernise in the future.

Businesses can include mission-critical apps into a contemporary hybrid environment using Everything as a Service options, especially for workloads and applications related to artificial intelligence.

For instance, CrushBank and IBM collaborated to restructure IT support, optimising help desk processes and giving employees better data. As a result, resolution times were cut by 45% and customer satisfaction increased significantly. According to CrushBank, customers report greater satisfaction and efficiency thanks to Watsonx on IBM Cloud, and the company can now spend more time with the people who matter most: its clients.

Rethink corporate strategies to promote quick innovation

AI is radically changing the way that business is conducted. Conventional business models are finding it difficult to provide the agility needed in an AI-driven economy since they are frequently limited by their complexity and cost-intensive nature. Recent IDC research, funded by IBM, indicates that 78% of IT organisations consider Everything as a Service to be essential to their long-term plans.

Businesses recognise the advantages of using Everything as a Service to handle the risks and expenses associated with meeting this need for rapid innovation. This paradigm focusses on producing results for increased operational effectiveness and efficiency rather than just tools. By allowing XaaS providers to concentrate on safe, dependable, and expandable services, the model frees up IT departments to allocate their valuable resources to meeting customer demands.

Prepare for today in order to anticipate tomorrow

The transition to an Everything as a Service model aims to augment IT operations skills and achieve business goals more quickly and nimbly, in addition to optimising IT spending.

CTO David Tan of CrushBank demonstrated at Think how they helped customers innovate and use data wherever it is in a seamless way, enabling them to create a comprehensive plan that addresses each customer’s particular business needs. Enabling an easier, quicker, and more cost-effective way to use AI while lowering the risk and difficulty of maintaining intricate IT architectures is still crucial for businesses functioning in the data-driven world of today.

The trend towards Everything as a Service is noteworthy since it is a strategic solution with several advantages. XaaS ought to be the mainstay of every IT strategy, as it may lower operational risks and expenses and facilitate the quick adoption of cutting-edge technologies like artificial intelligence.

Businesses can now reap those benefits with IBM’s as-a-service offering. In addition to assisting clients in achieving their goals, IBM software and infrastructure capabilities work together to keep mission-critical workloads safe and compliant.

For instance, IBM Power Virtual Server is designed to help leading firms around the world move successfully from on-premises servers to hybrid cloud infrastructure, giving executives greater visibility into their companies. The IBM team is also working with customers to modernise with AI, using products such as Watsonx Code Assistant for Java code or enterprise apps.

There is growing pressure on businesses to rebuild their legacy IT estates in order to minimise risk, expense, and complexity. With its ability to streamline processes, boost resilience, and quicken digital transformation, Everything as a Service is emerging as the answer that can take on these problems head-on. IBM aims to support its customers wherever they are in their journey of change.

Read more on govindhtech.com

#Complexity#EverythingasaService#cloudcomputing#Salesforce#GoogleCloudSQL#GoogleDrive#XaaS#IBMXaaS#ibm#rol#govindhtech#improvebusinessresults#WatsonxonIBMCloud#useai#news#artificialintelligence#WatsonxCodeAssistant#technology#technews

5 Easy Ways To Improve Your Salesforce Data Backup and Recovery Strategy

In today's data-driven world, businesses rely heavily on Customer Relationship Management (CRM) systems like Salesforce to manage their customer information, track sales, and drive growth. However, even the most robust platforms are not immune to data loss or system failures. That's why having a solid Salesforce data backup and recovery strategy is crucial for ensuring business continuity. In this blog post, we'll explore five easy ways to enhance your Salesforce data backup and recovery strategy.

Regularly Scheduled Backups:

The foundation of any effective data recovery strategy is regular data backups. Salesforce's built-in Data Export service lets you export your org's data as CSV files, packaged in .zip archives. Set up a routine schedule for these exports so that your data is backed up consistently. Depending on your organization's needs, you may want daily, weekly, or monthly backups; note that the built-in service can only be scheduled weekly or monthly (depending on your Salesforce edition), so daily backups require another tool.
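If you run exports from your own scheduler, the daily, weekly, or monthly choice boils down to computing when the next backup is due. Here is a minimal Python sketch; the helper names `next_backup_time` and `backup_is_due` are hypothetical, and the monthly rule (same day next month, clamped to that month's last day) is one assumption among several reasonable ones.

```python
import calendar
from datetime import datetime, timedelta


def next_backup_time(last_run: datetime, frequency: str) -> datetime:
    """Return when the next backup is due after `last_run` (hypothetical helper)."""
    if frequency == "daily":
        return last_run + timedelta(days=1)
    if frequency == "weekly":
        return last_run + timedelta(weeks=1)
    if frequency == "monthly":
        # Roll over to January of the next year after December.
        year = last_run.year + last_run.month // 12
        month = last_run.month % 12 + 1
        # Clamp the day so e.g. Jan 31 becomes Feb 29 (leap year) or Feb 28.
        last_day = calendar.monthrange(year, month)[1]
        return last_run.replace(year=year, month=month, day=min(last_run.day, last_day))
    raise ValueError(f"unknown frequency: {frequency!r}")


def backup_is_due(last_run: datetime, frequency: str, now: datetime) -> bool:
    """True once the scheduled time for the next backup has arrived."""
    return now >= next_backup_time(last_run, frequency)
```

A job runner would call `backup_is_due` periodically (say, hourly) and start an export when it returns `True`.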

Automate Your Backups:

Manually exporting data can be time-consuming and prone to errors. To streamline the process, consider automating your Salesforce data backups using third-party backup solutions. These tools can schedule and execute backups automatically, ensuring that your data is consistently and reliably backed up without manual intervention.
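Backup tools differ, but most automated jobs share one pattern: if an export fails transiently (a timeout, an API limit), retry it a few times with growing delays before giving up and surfacing the failure. A minimal Python sketch of that pattern follows; `run_with_retries` and its defaults are hypothetical, and the injectable `sleep` parameter exists only so the logic can be tested without actually waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    job: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `job`, retrying on any exception with exponential backoff.

    Waits base_delay, 2*base_delay, 4*base_delay, ... between attempts and
    re-raises the last error once all attempts are used up, so monitoring
    sees the failure instead of it being silently swallowed.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return job()
        except Exception:
            if attempt == attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```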

Store Backups Securely:

Backing up your Salesforce data is only half the battle. Equally important is where you store those backups. Utilize secure and redundant storage solutions, such as cloud storage platforms like Amazon S3 or Google Cloud Storage. Implement encryption and access controls to safeguard your backups from unauthorized access.
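When the destination is Amazon S3, two standard request headers cover part of this advice: `x-amz-server-side-encryption` asks S3 to encrypt the object at rest, and `Content-MD5` lets S3 reject an upload whose body was corrupted in transit. The Python sketch below only builds those header values; it assumes S3-managed keys (`AES256`, or `aws:kms` for KMS-managed keys), and the request still has to be signed and sent by your HTTP client or SDK. Access controls such as bucket policies are configured separately.

```python
import base64
import hashlib
from typing import Dict


def secure_upload_headers(body: bytes, sse: str = "AES256") -> Dict[str, str]:
    """Header values for an S3 PutObject request (signing not included).

    sse="AES256" requests S3-managed keys; "aws:kms" requests KMS-managed keys.
    """
    return {
        # S3 compares this base64-encoded MD5 digest with the body it receives
        # and rejects the upload if they differ.
        "Content-MD5": base64.b64encode(hashlib.md5(body).digest()).decode("ascii"),
        # Ask S3 to encrypt the stored object at rest.
        "x-amz-server-side-encryption": sse,
        "Content-Length": str(len(body)),
    }
```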

Test Your Recovery Process:

Having backups is essential, but they're only as good as your ability to recover data from them. Regularly test your data recovery process to ensure that it works as expected. Document the steps, and make sure that your team is familiar with the recovery procedures. Conducting mock recovery drills can help identify and address any potential issues before they become critical.
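One way to make a recovery drill objective is to compare what you exported with what you restored, record by record. The Python sketch below round-trips records through CSV and reports missing or changed records. It assumes each record is a dict of strings keyed by field name with an `Id` field; all function names are hypothetical, and a real drill would restore into a sandbox and also cover files and metadata.

```python
import csv
import hashlib
import io
from typing import Dict, List

Record = Dict[str, str]


def export_csv(records: List[Record], fields: List[str]) -> str:
    """Serialise records to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fields)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def restore_csv(data: str) -> List[Record]:
    """Parse CSV text back into records (all values come back as strings)."""
    return list(csv.DictReader(io.StringIO(data, newline="")))


def fingerprints(records: List[Record], fields: List[str]) -> Dict[str, str]:
    """Map each record's Id to a SHA-256 digest of its field values."""
    out: Dict[str, str] = {}
    for rec in records:
        # \x1f (unit separator) keeps "a"+"bc" distinct from "ab"+"c".
        joined = "\x1f".join(rec.get(f) or "" for f in fields)
        out[rec["Id"]] = hashlib.sha256(joined.encode("utf-8")).hexdigest()
    return out


def verify_restore(original: List[Record], restored: List[Record],
                   fields: List[str]) -> List[str]:
    """Return a list of problems; an empty list means the drill passed."""
    problems: List[str] = []
    if len(restored) != len(original):
        problems.append(f"row count {len(restored)} != {len(original)}")
    before = fingerprints(original, fields)
    after = fingerprints(restored, fields)
    for rec_id, digest in before.items():
        if rec_id not in after:
            problems.append(f"missing record {rec_id}")
        elif after[rec_id] != digest:
            problems.append(f"changed record {rec_id}")
    return problems
```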

Monitoring and Alerting:

Proactive monitoring is key to identifying data backup and recovery issues early. Implement monitoring and alerting systems that notify you of any backup failures or anomalies. This way, you can take immediate action to rectify issues and minimize data loss in case of a failure.
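A basic monitoring check needs only two facts: whether the latest run failed, and how long ago the last successful backup finished. The Python sketch below turns those into alert messages; the `BackupStatus` shape and the age threshold are assumptions, and delivering the alerts (email, chat, paging) is left to your tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class BackupStatus:
    last_success: Optional[datetime]  # when the last successful backup finished
    last_run_failed: bool             # did the most recent run fail?


def backup_alerts(status: BackupStatus, max_age: timedelta,
                  now: Optional[datetime] = None) -> List[str]:
    """Return alert messages; an empty list means backups look healthy."""
    if now is None:
        now = datetime.now(timezone.utc)
    alerts: List[str] = []
    if status.last_run_failed:
        alerts.append("most recent backup run failed")
    if status.last_success is None:
        alerts.append("no successful backup on record")
    elif now - status.last_success > max_age:
        age = now - status.last_success
        alerts.append(f"last successful backup is {age} old (limit {max_age})")
    return alerts
```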

Bonus: Consider Salesforce Data Archiving:

As your Salesforce database grows, it can become challenging to manage large volumes of data efficiently. Salesforce offers data archiving solutions that allow you to move older or less frequently accessed data to a separate storage location. This can help reduce storage costs and improve system performance, making your data recovery strategy more manageable.
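Deciding what to archive usually comes down to a rule over record age. The Python sketch below splits records by Salesforce's standard `LastModifiedDate` field; the two-year default retention and the function name are hypothetical. Moving the archived set to its new home, and deleting it from the org only after it is safely backed up, are separate steps.

```python
from datetime import datetime, timedelta
from typing import Any, Dict, List, Tuple

Record = Dict[str, Any]


def split_for_archive(
    records: List[Record],
    now: datetime,
    retention: timedelta = timedelta(days=730),
) -> Tuple[List[Record], List[Record]]:
    """Split records into (keep, archive) by LastModifiedDate.

    Records last modified before `now - retention` become archive candidates.
    """
    cutoff = now - retention
    keep: List[Record] = []
    archive: List[Record] = []
    for rec in records:
        target = archive if rec["LastModifiedDate"] < cutoff else keep
        target.append(rec)
    return keep, archive
```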

In conclusion, enhancing your Salesforce data backup and recovery strategy doesn't have to be complicated. By implementing these five easy steps, you can significantly improve your organization's ability to recover critical data in the event of data loss or system failures. Remember that data is the lifeblood of your business, and a robust backup and recovery strategy is your insurance policy against unforeseen disasters.

#Salesforce best practices#Data loss prevention#Backup automation#Data retention policies#Data encryption#Salesforce customization#Data recovery testing#Backup monitoring#Data recovery procedures#Data backup optimization#Salesforce data protection#Backup reliability#Data restoration#Backup storage solutions#Data backup documentation#Salesforce metadata backup#Data backup strategy evaluation#Data backup policies#Salesforce data backup best practices

How to upload files to S3 using Salesforce Apex?

Hello #Trailblazers, welcome back. In this blog post, we will learn how to upload a file to Amazon S3 using Salesforce Apex. Sending files to Amazon S3 is often difficult because Amazon's request authentication is complex. The Problem Statement: As a Salesforce developer, you need to upload every file that gets uploaded under any Account to Amazon S3 using Salesforce Apex…
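The post's own Apex code is not shown in this excerpt. For orientation, S3's current request authentication scheme is AWS Signature Version 4 (SigV4): derive a signing key from the secret key through a chain of HMAC-SHA256 operations, hash a canonical form of the request, and sign the result. The sketch below shows those steps in Python rather than Apex, assuming a single-part PUT with no query string and no session token; the credentials, bucket, and function names are placeholders. In Apex the same steps would rely on the `Crypto` and `EncodingUtil` classes, and Salesforce Named Credentials may be able to handle SigV4 signing for you.

```python
import hashlib
import hmac
from datetime import datetime, timezone
from typing import Dict
from urllib.parse import quote


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret_key: str, date_stamp: str, region: str, service: str = "s3") -> bytes:
    """Derive the SigV4 signing key: HMAC chain over date, region, service."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign_put_object(access_key: str, secret_key: str, region: str, bucket: str,
                    key: str, body: bytes, now: datetime) -> Dict[str, str]:
    """Return headers for a SigV4-signed S3 PUT (virtual-hosted-style URL).

    `now` must be timezone-aware; it is converted to UTC.
    """
    now = now.astimezone(timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    host = f"{bucket}.s3.{region}.amazonaws.com"
    payload_hash = hashlib.sha256(body).hexdigest()

    # 1. Canonical request: lowercase header names, sorted, each line ending in "\n".
    headers = {"host": host, "x-amz-content-sha256": payload_hash, "x-amz-date": amz_date}
    canonical_headers = "".join(f"{name}:{headers[name]}\n" for name in sorted(headers))
    signed_headers = ";".join(sorted(headers))
    canonical_uri = "/" + quote(key, safe="/")  # S3 encodes the key once, keeping "/"
    canonical_request = "\n".join(
        ["PUT", canonical_uri, "", canonical_headers, signed_headers, payload_hash]
    )

    # 2. String to sign: algorithm, timestamp, credential scope, hash of step 1.
    scope = f"{date_stamp}/{region}/s3/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256", amz_date, scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])

    # 3. Signature: HMAC of the string to sign with the derived key.
    signature = hmac.new(signing_key(secret_key, date_stamp, region),
                         string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
    authorization = (f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
                     f"SignedHeaders={signed_headers}, Signature={signature}")
    return {"Host": host, "x-amz-content-sha256": payload_hash,
            "x-amz-date": amz_date, "Authorization": authorization}
```

The returned headers would accompany a PUT to `https://<bucket>.s3.<region>.amazonaws.com/<key>` with the file bytes as the request body.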

#amazon s3#amazon s3 integration with salesforce#amazon s3 with salesforce#amazon with salesforce#how to upload file to amazon s3 using apex#how to upload files to amazon s3 using apex#integrate amazon s3 with salesofrce#integration of S3 with Salesforce apex#Salesforce#salesforce integration with amzaon#upload files to amazon s3
