#big data filter


Text

The Silent Algorithm: How Major Platforms Quietly Throttle Public Discourse

(WPS News International Edition) Baybay City, Leyte, Philippines — When a fifteen‑year YouTube archive devoted to exposing political corruption vanished overnight—hours after mundane SEO edits—one fact became undeniable: the digital commons is guarded by unaccountable algorithms. Despite branding themselves as guardians of “voice,” the largest social‑media companies retain unilateral power to…

#big data filter#censorship in social media#content moderation impact#decentralized truth#digital free speech#platform accountability

0 notes

Text

snow miku 2024 🧡🥄❄

art print on my etsy for the holidays!

#vocaloid#hatsune miku#snow miku#snow miku 2024#miku#anime#art#illustration#vocaloid fanart#highlight reel#yukine#art prints#this took so long you would not believe it ive been chipping away at it for over a week but my brothers bf flew in so i had my hands full#on top of how long it took to make at all#faaaalls over. at least it's done. i am done with it its finished YIPPIEEE#i needed to make something big and impressive to feel something#and also this is the cutest miku in the World ok. peak miku. does not get cuter than this one#im upset that i had to Crunch It. the textures were so beautiful. kraft paper and noise and a slight chromatic abberation for nostalgia#... and then i had to jpg deepfry it and put that obnoxious anti-data training filter over it. god.#kill people who use and train AI it is always morally correct

252 notes


Text

look computational psychiatry is a concept with a certain amount of cursed energy trailing behind it, but I'm really getting my ass chapped about a fundamental flaw in large scale data analysis that I've been complaining about for years. Here's what's bugging me:

When you're trying to understand a system as complex as behavioral tendencies, you cannot substitute large amounts of "low quality" data (data correlating more weakly with a trait of interest, say, or data that only measures one of several potential interacting factors that combine to create outcomes) for "high quality" data that inquires more deeply about the system.

The reason for that is this: when we're trying to analyze data as scientists, we leave things we're not directly interrogating as randomized as possible on the assumption that either there is no main effect of those things on our data, or that balancing and randomizing those things will drown out whatever those effects are.

But the problem is this: sometimes there are not only strong effects in the data you haven't considered, but also they correlate: either with one of the main effects you do know about, or simply with one another.

This means that there is structure in your data. And you can't see it, which means that you can't account for it. Which means whatever your findings are, they won't generalize the moment you switch to a new population structured differently. Worse, you are incredibly vulnerable to sampling bias because the moment your sample fails to reflect the structure of the population you're up shit creek without a paddle. Twin studies are notoriously prone to this because white and middle to upper class twins are vastly more likely to be identified and recruited for them, because those are the people who respond to study queries and are easy to get hold of. GWAS data is also extremely prone to this issue. So is anything you train machine learning models like ChatGPT on, where you're compiling unbelievably big datasets to try to "train out" the noise.

These approaches presuppose that sampling depth is enough to "drown out" any other conflicting main effects or interactions. What it actually typically does is obscure the impact of meaningful causative agents (hidden behind conflicting correlation factors you can't control for) and overstate the value of whatever significant main effects do manage to survive and fall out, even if they explain a pitiably small proportion of the variation in the population.

It's a natural response to the wondrous power afforded by modern advances in computing, but it's not a great way to understand a complex natural world.
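The argument above can be sketched as a toy simulation. This is only an illustration under made-up assumptions: the names `simulate` and `ols_slope`, the effect sizes (0.1 for the measured variable, 1.0 for the hidden one), and the two "populations" are all hypothetical, not taken from any real study. A hidden factor `z` drives the outcome and correlates with the measured variable `x`, and the sign of that correlation differs between the two populations.

```python
import random


def simulate(n, xz_corr, seed):
    """Draw n (x, y) pairs where a hidden factor z drives y and correlates with x."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)  # hidden factor: strong effect on y, never recorded
        # x has unit variance and correlation xz_corr with z
        x = xz_corr * z + (1 - xz_corr ** 2) ** 0.5 * rng.gauss(0, 1)
        # assumed true direct effect of x on y is small (0.1); z dominates
        y = 0.1 * x + 1.0 * z + rng.gauss(0, 1)
        xs.append(x)
        ys.append(y)
    return xs, ys


def ols_slope(xs, ys):
    """Slope of the least-squares line of y on x, ignoring everything else."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


if __name__ == "__main__":
    for n in (1_000, 100_000):
        slope_a = ols_slope(*simulate(n, xz_corr=0.6, seed=1))   # population A
        slope_b = ols_slope(*simulate(n, xz_corr=-0.6, seed=2))  # population B
        print(f"n={n}: slope in A = {slope_a:.2f}, slope in B = {slope_b:.2f}")
```

In this setup the naive slope converges to 0.1 + `xz_corr` rather than to the true 0.1, so it comes out strongly positive in population A and negative in population B. Increasing `n` only tightens the estimate around the wrong value. The finding looks solid in one population and reverses in the other, which is the failure to generalize described above.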

#sciblr#big data#complaints#this is a small meeting with a lot of clinical focus which is making me even more irritated natch#see also similar complaints when samples are systematically filtered

125 notes


Text

I'm getting back into reading scifi short stories like I did in high school. I love reading short stories as opposed to novels for a lot of reasons, but I've unfortunately (fortunately?) forgotten a lot of them so it's basically like I'm rediscovering them all :D

#The only ones I really consciously remember are... A story abt a young boy and girl in a big space terrarium with a supercomputer#And at the end they get [redacted redacted data expunged]#(I do remember how it ends but I do not want to spoil it)#Another one about a guy being one of the first commercial people on Mars and coming back to earth and the people he talk to are all like#'wowwwww that's so coooooollll i wish I could go to mars' and him reflecting how God awful the conditions and treatment was actually#And the last I remember is a world where everyone has a little chip that can basically filter things u should not see from your vision#(such as other people's houses#Etc)#Jk I remembered another one. A city of androids all doing android roles for the entertainment of human tourists. Which was so similar to#Westworld that I thought it was inspired by it.

2 notes


Text

So, Discord has added a feature that lets other people "enhance" or "edit" your images with different AI apps. It looks like this:

Currently, you can't opt out of this at all. But here are a few things you can do in protest.

FOR SERVERS YOU ARE AN ADMIN IN

Go to Roles -> @/everyone roles -> Scroll all the way down to External Apps, and disable it. This won't delete the option, but it will make people receive a private message instead when they use it, protecting your users:

You should also make it a bannable offense to edit other users' images with AI. Here's how I worded it in my server, feel free to copypaste:

Do not modify other people's images with AI under ANY circumstances, such as with the Discord "enhancement" features, among others. This is a bannable offense.

COMPLAIN TO DISCORD

There are a few ways to go about this. First, you can go to https://support.discord.com/hc/en-us/requests/new , select Help and Support -> Feedback/New Feature Request, and write your message, as seen in the screenshot below.

For the message, here are some points you can bring up:

Concerns about harassment (such as people using this feature to bully others)

Concerns about privacy (concerns about how External Apps may break privacy or handle the data in the images, and how this may violate legislation such as GDPR)

Concerns about how this may impact minors (these features could be used with pictures of irl minors shared in servers, for deeply nefarious purposes)

BE VERY CLEAR about "I will refuse to buy Nitro and will cancel my subscription if this feature remains as it is", since they only care about fucking money

Word them as you'd like, add onto them as you need. They sometimes filter messages that are copypasted templates, so finding ways to word them on your own is helpful.

ADDING: You WILL NEED to reply to the mail you receive afterwards for the message to get sent to an actual human! Otherwise it won't reach anyone

UNSUBSCRIBE FROM NITRO

This is what they care about the most. Unsubscribe from Nitro. Tell them why you unsubscribed on the way out. DO NOT GIVE THEM MONEY. They're a company. They take actions for profit. If these actions do not get them profit, they will need to backtrack. Mass-unsubscribing from WOTC's D&D Beyond forced them to back down on the OGL, so this works.

LEAVE A ONE-STAR REVIEW ON THE APP

This impacts their visibility on the App Store. Write why you are leaving the one-star review too.

_

Regardless of your stance on AI, I think we can agree that having no way for users to opt out of these edits is deeply concerning, especially when Discord is often used to share selfies. It's also a good time to remember internet privacy and safety: maybe don't post your photos in big open public servers if you don't want to risk people doing edits or modifications of them with AI (or any other way). Once it's posted, it's out of your control.

Anyways, please reblog for visibility. This is a deeply concerning topic!

19K notes


Text

on a side note

are tone indicators REALLY for autism?

dont autistic people already go through life without tone indicators? online or in person doesnt make a difference. they lack social instinct anyways and probably substituted it with cold logic

isnt it non autistic people that go by vibes? they would be the ones that need tone indicators yeah?

you see what i mean right?

sure they probably do help both categories. but ableism for not using them? i dont think so. i think it probably favors the average person actually.

#dont call me stupid i know autistic people look for facial expression body language all that jazz. i know#but whats really the difference between analyzing set A and set B? different traits but none of them are natural to interpret anyways#id assume the real people who struggle are the ones whose mothertongue is set A but they have to learn logic for set B#This is like me having to learn egyptian arabic vs saudi arabic. bitch i dont know arabic#but to an arab..... egyptian arabic? dawg. now theyre gonna panic#ive seen the “kys /j” ok. nobody is falling for that. we can read context#lemme field experiment this. im gonna try to gaslight an autistic person and then a non autistic person#brb#counter point to myself: i just fed my post to an AI and it assumed malicious intent when my intent is clearly curiosity.#i train LLMs occasionally so I kinda have a general idea how we filter and process data for these big lugs.#theyre trained to assume intent based on arbitrary and incomplete rationalizations of neurotypicals#if an llm could cancel me. a normal person could too. i should keep my thoughts to myself#keyword being should.

0 notes

Text

Nexus: The Dawn of IoT Consciousness – The Revolution Illuminating Big Data Chaos

#Advantech IoT#Aware World#Big Data#Big Data Chaos#Bosch IoT#Cisco IoT#Connected World#Contextual Awareness#Contextual Understanding#Continuous Improvement#Data Filtering#Distributed Intelligence#Edge AI#edge computing#Edge Data#Edge Intelligence#Edge Processing#HPE Edge#Intelligent Systems#Internet of Things#IoT#IoT Awareness#IoT Consciousness#IoT Ecosystem#IoT Hardware#IoT Networking#IoT Platform#Lean Efficiency#Nexus#Operational Optimization

0 notes

Text

Ofer Ronen, Co-Founder and CEO of Tomato.ai – Interview Series

New Post has been published on https://thedigitalinsider.com/ofer-ronen-co-founder-and-ceo-of-tomato-ai-interview-series/


Ofer Ronen is the Co-Founder and CEO of Tomato.ai, a platform that offers an AI powered voice filter to soften accents for offshore agent voices as they speak, resulting in improved CSAT and sales metrics.

Ofer previously sold three tech startups, two to Google, and one to IAC. He spent the past five years at Google building contact center AI solutions within the Area 120 incubator. He closed over $500M in deals for these new solutions. He holds an MS in Computer Engineering with a focus on AI from the University of Michigan, and an MBA from Cornell.

What initially attracted you to machine learning and AI?

AI has had a long history of starts and stops. Periods when there was a lot of hope for the technology to transform industries, followed by periods of disillusionment because it didn’t quite live up to the hype.

When I was doing a Master's in AI a couple of decades ago, at the University of Michigan, it was a period of disillusionment, when AI was not quite making an impact. I was intrigued by the idea that computers could be taught to perform tasks through examples rather than through traditional heuristics, which require thinking about what explicit instructions to provide. At the time I was working at an AI research lab on virtual agents which help teachers find resources online for their classes. Back then we didn’t have the big data, the powerful compute resources, or the advanced neural networks that we have today, so the capabilities we built were limited.

From 2016 to 2019 you worked at Google’s Area 120 incubator to design highly robust virtual agents for the largest contact centers. What was this solution precisely?

More recently I worked at Google’s Area 120 incubator on some of the largest voice virtual agent deployments, including a couple of projects for Fortune 50 companies with over one hundred million support calls a year.

In order to build more robust voice virtual agents that can handle complex conversations, we took millions of historical conversations between humans and used those conversations to detect the type of follow-up questions customers have beyond their initial stated issue. By mining follow-up questions and by mining different ways customers phrase each question, we were able to build flexible virtual agents that can have meandering conversations. This mirrored better the kind of conversations customers have with human agents. The end result was a material increase in the total calls fully handled by the virtual agents.

In 2021 and 2022, you built a second startup at Area 120. Could you share what this company was and what you learned from the experience?

My second startup within Area 120 was again focused on call centers. Our solution focused on reducing customer churn by proactively reaching out to customers right after a failed support call where the customer expressed their issue but did not get to a resolution. The outreach would be done by virtual agents trained to address those open issues. What I learned from that experience is that churn is a difficult metric to measure in a timely manner. It can take 6 months to get statistically significant results for changes in churn. That makes it hard to optimize an experience fast enough and to convince customers a solution is working.

Could you share the genesis story behind your third contact center AI startup, Tomato.ai, and why you chose to do it yourself versus working within Google?

The idea for Tomato.ai, my third contact center startup, came from James Fan, my co-founder and CTO. James thought it would be more effective to sell wine using a French accent, and so what if anyone could be made to sound French?

This was the seed of the idea, and from there our thinking evolved. As we investigated it more we found a more acute pain point felt by customers when speaking with accented offshore agents. Customers had problems with comprehension and trust. This represented a larger market opportunity. Given our backgrounds, we realized the sizable impact it would have on call centers, helping them improve their sales and support metrics. We now refer to this type of solution as Accent Softening.

James and I previously led and sold startups, including each of us selling a startup to Google.

We decided to leave Google to start Tomato.ai because, after many years at Google, we were itching to get back to starting and leading our own company.

Tomato.ai solves an important pain point with call centers, which is softening accents for agents. Could you discuss why voice filters are a preferred solution to agent training?

At Tomato.ai, we understand the importance of clear communication in call centers, where accents can sometimes create barriers. Instead of relying solely on traditional agent training, we’ve developed voice filters, or what we call “accent softening.” These filters help agents maintain their unique voice, while reducing their accents, improving clarity for callers. By using voice filters, we ensure better communication and build trust between agents and callers, making every interaction more effective and satisfying to the customer. So, compared to extensive training programs, voice filters offer a simpler and more immediate solution to address accent-related challenges in call centers.

As existing agents leverage these tools to enhance their performance, they will be empowered to command higher rates, reflecting their increased value in delivering exceptional customer experiences. Simultaneously, the democratizing effect of generative AI will bring new entry-level agents into the fold, expanding the talent pool and driving down hourly rates. This dichotomy signifies a fundamental transformation in the dynamics of call center services, where technology and human expertise reshape the landscape of the industry, paving the way for a more inclusive and competitive future.

What are some of the different machine learning and AI technologies that are used to enable voice filtering?

This type of real-time voice filtering solution would not have been possible just a couple of years ago. Advancements in speech research, combined with newer architectures like the transformer model and deep neural networks and with more powerful AI hardware (like TPUs from Google and GPUs from NVIDIA), make it more feasible to build such solutions today. It is still a very difficult problem that requires our team to invent new techniques for training speech-to-speech models that are low latency and high quality.

What type of feedback has been received from call centers, and how has it impacted employee churn rates?

We have strong demand from large and small offshore call centers to try out our accent softening solution. Those call centers recognize that Tomato.ai can help with their top two problems: (1) offshore agents’ performance metrics are not up to par with those of onshore agents, and (2) it is difficult to find enough qualified agents to hire in offshore markets like India and the Philippines.

We expect in the coming weeks to have case studies that highlight the type of impact call centers experience using Accent Softening. We expect sales calls to see an immediate lift in key metrics like revenue, close rates, and lead qualification rates. At the same time, we expect support calls to see shorter handle times, fewer callbacks, and improved CSAT.

As mentioned above, churn rates take longer to validate, and so case studies with those improvements will come at a later date.

Tomato.ai recently raised a $10 million funding round, what does this mean for the future of the company?

As Tomato.ai gears up for its inaugural product launch, the team remains steadfast in its commitment to reshaping the landscape of global communication and the future of work, one conversation at a time.

Thank you for the great interview. Readers who wish to learn more should visit Tomato.ai.

#2022#ai#Big Data#Building#call center#call Centers#CEO#classes#command#communication#Companies#comprehension#computer#computers#CTO#data#deals#Design#do it yourself#dynamics#engineering#filter#Filters#Fundamental#Funding#Future#generative#generative ai#Global#Google

0 notes

Text

Radio Silence | Chapter Thirty-One

Lando Norris x Amelia Brown (OFC)

Series Masterlist

Summary — Order is everything. Her habits aren’t quirks, they’re survival techniques. And only three people in the world have permission to touch her: Mom, Dad, Fernando.

Then Lando Norris happens.

One moment. One line crossed. No going back.

Warnings — Autistic!OFC, domestic Lamelia, autistic meltdown on page, vaguely referenced public sex.

Notes — Timeline fuckery, as in I seem to have written Silverstone twice, in the last chapter and this one too. Clearly the podium fluff is too much for me to keep track of. So... Enjoy the extra fluffiness.

2023 (Silverstone — Hungary)

The sea was warm and quiet, the waves nothing but a soft hush against the sand.

Amelia sat with her legs tucked under her, an oversized white linen shirt hanging loosely over her bikini. Her hair was wet, curled slightly at the ends from the salt water. She was squinting at the horizon, watching the sunlight paint the beach in a million shades of gold.

Behind her, Lando dropped onto the towel with two icy cold drinks, one for each of them. He pressed a kiss to the back of her shoulder.

“This place is fucking amazing,” he said.

She hummed in agreement, leaning her head against his. “Warm, but breezy. The perfect in-between.”

He grinned. “Yeah? You glad I managed to convince you to come then?”

“Yes,” she said. “I’m going to have so much to get done when we get back to the factory, but I needed a break.”

Lando chuckled and stretched out beside her, propping himself on one elbow. “Hm. I know. And now you’re relaxed. That’s nice.”

She gave him a sidelong look. “Don’t say it like that. I can be relaxed. I relax a lot.”

“…No you don’t.”

She huffed. “Shut up.”

He reached for her hand, lacing their fingers together. “C’mon. Don’t get pissed off. It’s true, yeah? You have been stressed, but you’ve also been fucking ace with Oscar. With the team. I know the car isn’t what you want it to be, but it’s a lot bloody better than it was.”

Amelia softened. She leaned down to kiss him. “Thanks, husband.”

Lando’s eyes sparkled. “Say it again.”

“Husband?”

He groaned. “God, that’s hot.”

She laughed. “You’re such a weirdo.”

“You married me.”

“I clearly have poor taste,” she teased.

“Liar.”

He sat up and kissed her properly this time — slow and warm and a little lazy. She all but melted into it, fingers curling in the fabric of his swim shorts.

They ended up tangled together on a beach blanket under the slope of the rocks, just out of sight. The rest of the world fell away. It was just them. Skin on skin, hearts in sync, breathless laughter caught in the salt breeze.

Later, Amelia rested her cheek on Lando’s bare chest, listening to his heartbeat.

“I think,” she said softly, “I could stay here forever.”

He smoothed her hair back out of her face. Stared at her, like he was memorising her all over again. “Yeah, baby. Me too.”

—

The design lab was buzzing — a low but constant thrum of voices, keyboard clicks, air vents, printers, someone’s half-muffled phone call. The kind of sensory chaos most people filtered out without effort.

Amelia couldn’t today.

She had her noise-cancelling headphones on, her iPad open to three separate CAD model views, and a mechanical pencil tapping against her knee in a rhythm only she understood.

They were reviewing a mock-up for the 2024 suspension. One of the junior engineers, bright and eager but careless, had accidentally uploaded an outdated spec into the shared build folder.

It seemed small. A mistake, an easy correction. But it meant the last two days of precision design work she’d done were out of sync with the rest of the development team’s data.

And that meant wasted time. Faulty conclusions. A domino collapse of calculations that had been perfect in her head.

She tried to breathe through it. In. Out. In again. But the wrongness sat in her chest like a ton of bricks.

Someone, Callum, tried to make light of it. “It’s no big deal. We’ve still got time before CFD locks—”

“No,” she said, voice tight. “You don’t understand. It’s wrong now. It’s all wrong.”

Her hands were shaking.

“Hey, it’s okay,” another engineer said carefully. “We’ll fix it. It was just a wrong upload—”

“Stop talking.” Her voice cracked, sharp and sudden. “Please. Just stop. Stop—”

She couldn’t hear them anymore. The hum of the lights had turned into a roar. The feeling of her shirt collar was too much. Her thoughts weren’t lining up right.

She stood up too fast. Knocked over a pen cup. The clatter made her flinch violently.

Then she was breathing hard. Too fast. Too loud. Her eyes stung. Her palms burned.

The room blurred. All noise. Too many people. Too many things out of place.

She left. Walked straight out the door, down the hall, past the glass break room, past a surprised intern holding two coffees. She found an empty office, one of the glass-walled side rooms, and ducked inside.

Lights off. Curtains drawn.

She sat on the floor. Curled into herself, hands pressed to her ears. Shaking.

She didn’t cry, not exactly. But her body trembled with the overload — her nervous system in revolt. All she could do was breathe and wait it out.

—

Ten minutes later, the door opened slowly.

Lando.

He said nothing at first. Just slipped inside and sat down on the floor beside her. Close, but not touching.

She didn't look up.

“Callum came to find me. He’s panicking,” he said.

She let out a half-broken noise. “I hate this. I hate when this happens.”

He shook his head. “Baby—“

Her shoulders curled tighter. "It’s all wrong,” she whispered. “I had it perfect. In my head. And now it’s wrong and I can’t fix it, and they don’t understand why it matters. They think I’m overreacting.”

“You’re not.”

“They think I’m difficult.”

“You’re not.”

She finally looked at him. Her face was pale, eyes glassy. “It felt like… too much. All at once. I couldn't stop it.”

Lando reached out, slow, deliberate, and gently took her hand. “I know, baby,” he said softly. “You don’t have to pretend, though. You know that. And I’m proud of you for walking away when you needed space.”

She gripped his fingers tightly. Grounded. Fiddled with his wedding band.

And little by little, her breathing began to slow.

—

Later, Amelia returned to her desk. The office had quieted. A sticky note sat on her monitor from Oscar, in his neat, blocky handwriting.

YOU’RE ALLOWED TO HAVE BAD DAYS — Ducky

She exhaled a shaky laugh.

Callum brought her tea an hour later and didn’t say a word, just left it on her desk like a peace offering. She nodded her thanks, smile tight but genuine.

She reopened her iPad, fingers steady now. Her brain still hurt, her skin still buzzed with leftover static, but she was here. She was okay.

And she could fix this.

—

The strategy room was windowless, cold, and lit by the slightly too-white fluorescents that made Amelia’s eyes burn.

She sat near the front with her iPad open, stylus twirling between her fingers as various engineers clicked through performance graphs on the large screen. Tyre degradation, pit stop windows, stint lengths, lap delta comparisons. The usual mess of variables before a race.

Oscar was next to her, elbows on the table, listening intently. He never interrupted. Never fidgeted. Just watched. Logged everything.

When the final graph flicked across the screen with the projected optimal strategy, medium-hard-medium, Amelia tilted her head, expression flat.

“No,” she said simply.

A pause.

One of the strategy engineers, Jeremy, looked up. “You don’t agree?”

“No. That doesn’t win us anything. That gives us a decent P6, maybe. P7 if the Mercs behave.”

“And what would you suggest?”

Amelia tapped the stylus against her pad. “Soft-Hard. Big launch, early gain. One stop. Pit window between 14 and 18, if the tyres last. Risky, but Oscar’s tyre management is good enough. He’s not heavy on the fronts.”

Oscar, quiet until now, nodded. “That’s what I felt in FP2. Softs felt clean even on the heavier fuel run. Just needs the rear temps managed early.”

Amelia gave him a slight smile, not warm exactly, but approving. “Driver agrees.”

Jeremy frowned. “If we pit early, we get undercut risk. Traffic.”

“We’re already in traffic,” Amelia replied. “You think anyone’s just going to make room for us? The only way through is to make it past them before the midfield concertina sets in. That means launch tyre, low fuel window, commit to Plan A. We stay reactive. Flexible. But we commit.”

Oscar added, “And if it doesn’t work?”

She looked at him. Direct. “Then it doesn’t. But we’ve learned more than we would’ve finishing behind both Alpines.”

Silence. Then, slowly, Andrea leaned back in his seat and said, “It’s bold.”

“That’s how we race,” Amelia said.

Another pause. Then a nod from Andrea. “Alright. Amelia, prep two versions of the radio calls. One if we need to abort early. One if we push deep into the stint.”

“Already halfway done,” she said, flipping to a new tab.

Oscar leaned toward her, voice low. “You really think we can pull it off?”

“I wouldn’t say it if I didn’t.”

“I like it,” he said, almost to himself.

She looked at him sideways. “You trust me?”

He blinked. “Yeah. I do.”

She smiled, barely. “Then we’re good. Don’t be late to the grid walk. Make sure Lando’s had some water.”

“Yeah. I will,” Oscar muttered.

As the team filed out, Jeremy passed Amelia with a nod. “You’re not as scary as everyone said you’d be.”

“No,” she shrugged. “Not scary. Just… specific.”

Oscar held the door open, glancing at her. “Will you make me cookies if I finish top five?”

“Yes,” she agreed. “With raspberries. Just don’t tell Kim. He keeps telling me off for giving you treats that aren’t on your meal plan.”

“Mean,” Oscar complained.

“Very mean,” Amelia agreed.

—

The moment Lando stepped off the scale in parc fermé, Amelia launched herself at him.

He barely got his arms up in time to catch her — she collided with his chest like a missile, legs wrapping around his waist, arms tight around his neck.

“You crazy, crazy man,” she whispered fiercely into his ear, smiling so wide it hurt. “You data-defying freak.”

Lando laughed, breathless, still winded from the final laps but suddenly full of adrenaline again. “Hello, my beautiful wife.”

She kissed him hard, not the polished PR kind, but the messy, gleeful, post-race kind that tasted like sweat and relief. Cameras were around them, but neither of them cared. Hadn’t for a long time.

“P2,” he said, dazed.

“Yes,” she said, still clinging to him. “I’m so proud of you.”

He set her down, barely. She kept one hand fisted in his fireproofs, grounding herself.

“That was such an amazing drive,” she said, quieter now. “Every lap. You didn’t put a single foot wrong. And I’m so proud of you, Lando.”

He looked at her for a long moment, his eyes glinting under the brim of his cap. “Thank you, baby. For this. You. The car.”

“Anything for you,” she whispered, leaning up on her tiptoes and brushing their noses together. “I was getting tired of you moping around the apartment and yelling at Gran Turismo.”

He snorted. “You love when I yell at Gran Turismo.”

“I love you,” she said simply.

Someone called his name, an FIA official, maybe, or one of the social team, but he ignored it for a second longer. His thumb brushed her jaw. “Meet me at the podium?”

“I’ll be there.” Watching, always watching, always in awe of the man she loved.

“I want to spray you with champagne,” he told her.

“You’re not allowed to,” she warned. “I’ll be sticky.”

“Don’t care.” He grinned.

She rolled her eyes, kissed him again, and let him go.

Later, after the podium ceremony, after she did get sprayed, and did yell “Lando Norris, don’t you dare!” on live television, they curled up together in the back of the hospitality unit, him shirtless, her in one of his McLaren hoodies, and split a tiny bottle of celebratory wine Oscar had swiped from the hospitality fridge.

“I missed this,” Lando murmured, head on her shoulder.

She brushed his curls back from his forehead. “Podiums?”

“No,” he said, looking up at her. “You. You being happy. You being here, at McLaren, with me.” He paused, and she leaned closer curiously as he gazed at her, all soft and sweet and so dearly tender. “I kept it, you know? The note you left me before you joined Red Bull. The one where you called me an asshole. The booklet too, with the race notes. You were the reason for every podium I got the year after that, you know?”

She swallowed thickly. Stared at him. Reached her hand up to cup his face. “You’re not an asshole,” she whispered. Needed to say it. Needed him to know that she didn’t believe that anymore.

“I am sometimes,” he grinned lopsidedly. “But you love me anyway.”

“I love you anyway.” She whispered.

—

It started with the toaster.

Specifically, with Lando kicking the cupboard under the sink in frustration because where the hell was the toaster? and why is there no bloody counter space anymore?

“I moved it because your smoothie machine was leaking again,” Amelia said from the floor of the living room, surrounded by three open boxes of car telemetry printouts and what looked like half of a sock drawer.

“I fixed the leak.” Lando told her.

She frowned at her pencil. “You fixed it with duct tape.”

“That’s how men do it,” Lando said, crouching to help pick up a stack of papers that had slipped under the coffee table. “Are these important?”

“Yes. They’re the data sheets from Oscar’s last long run simulation—don’t fold them!”

“I wasn’t going to—” He paused. “Okay, I was.”

She snatched them out of his hand, stuffing them back into a manila folder that was already bursting. Over the last few months, their beautiful apartment had started to look less like a home and more like an office. Helmets on shelves, engineering notebooks piled on chairs, printer cables tangled with furniture.

Lando stood up and did a slow 360° in the living room. “Have we… always had this much stuff?” He asked, his eyebrows pulling together.

“No,” Amelia said. “You moved in with a single suitcase of clothes and a sim rig. I had four crates of notebooks, over two hundred pairs of shoes, and a bookshelf. Now you have a room full of gaming stuff, we have two Dyson fans, my office is overflowing, and Max’s cats all but live here part-time.” She pointed at the cat-tree they had stuffed into a tight corner by the window.

Lando rubbed the back of his neck. “You want to move?”

“I don’t want to,” she said bluntly, “but we’ve started tripping over each other. Literally. I had to do my work in the bathroom yesterday because you needed to use the extension cord in my office to use your NutriBullet.”

“There was no space in the kitchen.” He argued.

“Yes, I know. It was still a ridiculous solution.” She told him flatly.

He tried not to laugh. “Baby, you’re still mad?” He cooed.

“Lando,” she said, looking up at him, serious now. “We’ve outgrown this place. I love it, and it will always be our first home, but I don’t want to have to think about if I have space in my wardrobe to buy a new pair of shoes when I see ones that I like.” She said, biting her lip. “And I need a bigger office. You need a streaming room that doesn’t double as a spare room. It’s not fair to shove Oscar onto a pull-out bed every time he’s here.”

He flopped down next to her, wrapping his arms around her waist and pulling her onto his lap. “Suppose we could have a bigger kitchen.” He mumbled against her neck. “A nicer balcony. Maybe a dining room.”

“And plenty of space for guests,” she said.

Lando leaned his head against hers. “Okay. Let’s look. After the triple header.”

“Yeah,” Amelia said, letting herself relax into his side. “I want to stay in this neighbourhood. Or close.”

“Shouldn’t be too hard.” He hummed.

She cracked a smile. “And I want us to start looking for a house in England, too. Not for now… but for later. Somewhere to disappear during off-seasons. With a big garden, and trees, and a big garage for me to play around with some cars again.” She rambled.

He stared at her, hearts in his eyes. “God, I love you.”

“I know,” she said softly, and kissed his cheek. “Come on. Carry me into the kitchen. My legs are numb, but I’ll help you find the toaster.”

—

From the pit wall, the view was beautiful.

The sun beat down on the Hungaroring like it was trying to melt the asphalt. The air was thick with it, and Amelia’s headset crackled slightly with heat distortion.

Oscar was starting from the second row. P4.

Lando P3.

Both of her boys making up the second row.

Her fingers tapped restlessly against her keyboard, eyes flicking between sector deltas and real-time tyre temp data. She barely noticed the world around her, only the voices in her ear and the heartbeat under her skin.

“Oscar, radio check?”

“Radio good.” Calm, sharp. His tone was always a little flat, that’s what everyone said; that he was emotionless. It made them a perfect duo — she never needed to try to unravel his tone. If he was thinking something, feeling something, he said it.

“Copy. Full systems looking good. Expect higher degradation on rear left — we’ll manage it through lift points. Brake temps will spike early. Keep it smooth, ducky.”

“Understood.” He said.

She leaned back in her stool and glanced to her left, giving her dad a confident smile. He leaned across to give her a heavy shoulder pat, squeezing hard.

—

The launch was perfect.

Oscar didn’t just hold his position off the line; he gained. He swept into Turn 1 ahead of Lewis, ahead of even his teammate. For one brief, glorious moment, he was P2 behind Max Verstappen, in only his 11th Formula 1 race.

Amelia didn’t flinch. Didn’t react. Just… hyper focused.

“Amazing job, Oscar. Straight into it. Eyes forward — target delta plus point-three, we’ll manage tyres early.” She said.

“Copy.”

Her hands hovered over the live strategy tools. They were starting on Plan A, soft-to-medium, but she had contingencies mapped like a chess board. She refused to ever resort to a late reaction.

—

By Lap 16, Lando had undercut Oscar and slotted into net P2.

Amelia knew it would happen. Still, she hated how early they’d had to box Oscar, forced into it by track position pressure and the undercut threat from Lewis behind. The window had been tight. And the McLaren pit stop wasn’t their best; 3.8 seconds. Enough to cost.

Oscar rejoined in traffic. Slower cars. Dirty air.

The moment Oscar keyed his mic, she knew he felt it too.

“Tyres feel edgy. Car’s moving around.”

“Yeah. I know. Let’s build up our temps gradually. Try not to fight the dirty air. We’re still advantage three, ducky. Cleaner air will come to us once we’re through this pack.”

He didn’t reply right away. But when he did, it was with full faith in her plan. “Copy. Staying patient.”

She made a note on her pad, already tracking tyre drop-off curves from the medium runners around him. There was still a shot at a P4 finish. Maybe more, if Ferrari made the wrong call. Again.

—

The race stabilised. Max was untouchable up front, but Lando and Oscar were both holding on. Lando ran solidly in P2. Oscar, behind him in P5 with Charles closing. Too slowly to be dangerous yet, but Amelia knew better than to relax.

“Leclerc at 2.2 behind. He’s on slightly newer mediums, but they’ll plateau. You’re doing exactly what I need you to do.”

“Rear left’s starting to slip.” He reported.

Amelia adjusted her headset mic. She didn’t raise her voice, but the sharpness of her tone cut through the heat and static. “We’re monitoring. Keep it tight in 11 and off the kerbs in Sector 2. We’ll be okay.”

Will leaned toward her, murmuring, “You sure we’re not going to lose it to Leclerc?”

She didn’t look away from the screen. “Not if he does exactly what I tell him. And he will.”

—

Leclerc wasn’t fast enough. And Oscar, even with graining tyres, rising temps, and thirty-five laps of non-stop pressure, didn’t put a wheel wrong.

“Last lap. Keep it clean. You’ve broken DRS.”

“Copy.” Calm. Professional. Perfectly Oscar.

When he crossed the line in P5, just behind Lewis, Amelia didn’t outwardly react. But her hand curled into a fist beneath the desk, opening and closing five times in even succession.

It wasn’t a podium. But it was a statement.

—

In the garage, the heat clung to them like a second skin. Amelia handed Oscar a water bottle before he even had to ask.

“You made them work for it,” she said.

Oscar looked at her, face half-smeared with visor marks, and raised a brow. “I was pushing hard.”

“I know,” she said, voice level. “Even after the weak strategy call. You salvaged your position, and it was impressive.”

He tilted his head. “Even that moment in Turn 2 where I had to back off?”

“Especially then,” she said. “That’s when I knew you were supposed to be my driver. You fight hard, but you race clean.”

Oscar snorted, leaning against the garage wall. “You’re very dramatic. And demanding on the radio.”

“You stayed ahead of a Ferrari on thirty-lap-old tyres. So…” She raised an eyebrow at him.

He smirked, then looked at her sideways. “Think we could’ve held that podium if we boxed one lap later?”

Amelia refused to lie. “Maybe. But we don’t deal in maybes. We deal in execution. And yours was great.”

He bumped her arm. “Thanks. I got a bit stressed there, after the first stop. You helped me keep my head.”

She smiled, faint but proud. “I’ll always do that.”

—

It wasn’t victory.

But it was control. It was consistency. It was yet another way of telling the world that Oscar Piastri, under her watch, was going to become something extraordinary.

—

Amelia found her husband sitting on one of the stackable pit wall chairs, half out of his fireproofs, head tipped back, hair damp with sweat. His eyes were closed, not asleep, but close to it. That bone-deep exhaustion that only comes after a truly hard-fought podium.

She nudged his knee with hers.

He cracked an eye open. Smiled when he saw that it was her. “Hey, Mrs. P5.”

She smiled right back at him. “Hi, Mr. P2.”

He let out a slow breath, opened his arms. She fell into them, onto his lap, and let him hold her. Tight. “Felt good today.” He started. “Felt like we were… properly in it. Like we’re not just pretending anymore.”

“You weren’t pretending in Silverstone, either,” she reminded him, sliding into the seat beside him. “But you really earned it today with that middle stint.”

He gazed down at her. “You always manage to do this.”

“What?” She asked, blinking at him.

“Say the exact right thing. Make me feel even better about a result I’m already proper buzzing about.” He explained, with a tilted smile. “Makes me feel like a bit of a muppet, honestly.”

She didn’t respond, just leaned over slightly, drawing something out from the inside of the pocket of her McLaren windbreaker. A thin silver chain, a small pendant strung on it. Lando in cursive letters, cut from a sheet of polished silver.

She held it up between them.

“A fan gave this to me outside the paddock,” she said, tone matter-of-fact. “Asked me to give it to you. I told her I was going to keep it.”

Lando blinked. “Wait—what?”

“Because,” she went on, “it has your name on it. And that’s comforting. Like when I labelled everything in the kitchen drawers so you stopped putting the spoons in the wrong place.”

He started laughing. “You think I’m a drawer?”

“I think you’re mine,” she said plainly. “And this necklace is a tactile reminder. So I’m keeping it. And I’m going to wear it all the time. Until it goes rusty, and then I’m going to have another one made. More permanent. And I’ll wear that one all the time too.”

Lando looked at her for a long moment, the corners of his mouth twitching with affection. “You’re so romantic.”

“Maybe.” She sighed, like it was the worst thing she’d ever been told.

That earned a full grin from him. Tired, slightly loopy from the adrenaline crash, but full and wide. He reached over and ran his fingers along the chain. “I love you, baby.” He said quietly.

She looked at him, blinked once. “I know.” A beat passed. She gave him the smallest smile, then added, “And I love you too.”

Lando pressed his forehead against hers. “God, I missed you during the cool-down room. Lewis and Max were being so serious. I just wanted to say something dumb and have you roll your eyes at me. Make everything feel fun again.”

“You did great,” she told him earnestly. “You kept Max behind you for more laps than most people have managed all year.”

He pulled her in then, quick and fierce, arms around her back, his mouth warm against hers. “You’re the only podium celebration I actually look forward to.” A pause. A long, lingering kiss. And then, “did you bring the chequered flag underwear?”

She glanced around before tugging at her top.

He peeked down and smirked.

“Fucking class.”

#radio silence#formula one x reader#f1 x reader#f1 imagine#f1 fic#f1 x ofc#f1 x female reader#f1 fanfic#lando#lando fanfic#lando x reader#lando imagine#lando norris#lando x you#op81#oscar piastri#lando norris smut#lando norris fluff#lando norris fanfic#lando norris x reader#ln4 fic#ln4 smut#ln4 imagine#ln4 mcl#ln4#lando norris x y/n#papaya team#mclaren#formula one#lando norris x female oc

536 notes

Text

AO3 Ship Stats: Year In Bad Data

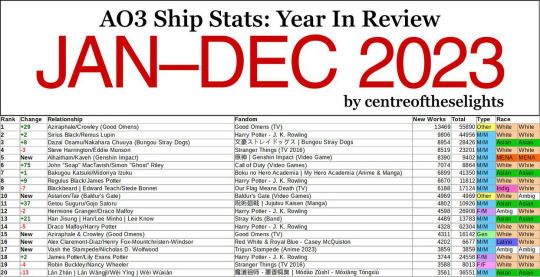

You may have seen this AO3 Year In Review.

It hasn’t crossed my tumblr dash but it sure is circulating on twitter with 3.5M views, 10K likes, 17K retweets and counting. Normally this would be great! I love data and charts and comparisons!

Except this data is GARBAGE and belongs in the TRASH.

I first noticed something fishy when I realized that Steve/Bucky – the 5th largest ship on AO3 by total fic count – wasn’t on this Top 100 list anywhere. I know Marvel’s popularity has fallen in recent years, but not that much. Especially considering some of the other ships that made it on the list. You mean to tell me a femslash HP ship (Mary MacDonald/Lily Potter) in which one half of the pairing was so minor I had to look up her name because she was only mentioned once in a single flashback scene beat fandom juggernaut Stucky? I call bullshit.

Now obviously jumping to conclusions based on gut instinct alone is horrible practice... but it is a good place to start. So let’s look at the actual numbers and discover why this entire dataset sits on a throne of lies.

Here are the results of filtering the Steve/Bucky tag for all works created between Jan 1, 2023 and Dec 31, 2023:

Not only would that place Steve/Bucky at #23 on this list, if the other counts are correct (hint: they're not), it’s also well above the 1520-new-work cutoff of the #100 spot. So how the fuck is it not on the list? Let’s check out the author’s FAQ to see if there’s some important factor we’re missing.

The first thing you’ll probably notice in the FAQ is that the data is being scraped from publicly available works. That means anything privated and only accessible to logged-in users isn’t counted. This is Sin #1. Already the data is inaccurate because we’re not actually counting all of the published fics, but the bots needed to do data collection on this scale can't easily scrape privated fics so I kinda get it. We’ll roll with this for now and see if it at least makes the numbers make more sense:

Nope. Logging out only reduced the total by a couple hundred. Even if one were to choose the most restrictive possible definition of "new works" and filter out all crossovers and incomplete fics, Steve/Bucky would still have a yearly total of 2,305. Yet the list claims their total is somewhere below 1,500? What the fuck is going on here?

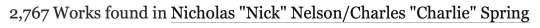

Let’s look at another ship for comparison. This time one that’s very recent and popular enough to make it on the list so we have an actual reference value for comparison: Nick/Charlie (Heartstopper). According to the list, this ship sits at #34 this year with a total of 2630 new works. But what’s AO3 say?

Off by a hundred or so but the values are much closer at least!

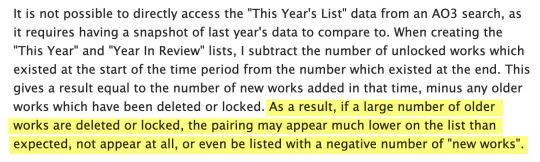

If we dig further into the FAQ though we discover Sin #2 (and the most egregious): the counting method. The yearly fic counts are NOT determined by filtering for a certain time period, they’re determined by simply taking a snapshot of the total number of fics in a ship tag at the end of the year and subtracting the previous end-of-year total. For example, if you check a ship tag on Jan 1, 2023 and it has 10,000 fics and check it again on Jan 1, 2024 and it now has 12,000 fics, the difference (2,000) would be the number of "new works" on this chart.

At first glance this subtraction method might seem like a perfectly valid way to count fics, and it’s certainly the easiest way, but it can and did have major consequences to the point of making the entire dataset functionally meaningless. Why? If any older works are deleted or privated, every single one of those will be subtracted from the current year fic count. And to make the problem even worse, beginning at the end of last year there was a big scare about AI scraping fics from AO3, which caused hundreds, if not thousands, of users to lock down their fics or delete them.

The magnitude of this fuck up may not be immediately obvious so let’s look at an example to see how this works in practice.

Say we have two ships. Ship A is more than a decade old with a large fanbase. Ship B is only a couple years old but gaining traction. On Jan 1, 2023, Ship A had a catalog of 50,000 fics and Ship B had 5,000. Both ships have 3,000 new works published in 2023. However, 4% of the older works in each fandom were either privated or deleted during that same time (this percentage was just chosen to make the math easy but it’s close to reality).

Ship A: 50,000 x 4% = 2,000 removed works
Ship B: 5,000 x 4% = 200 removed works

Ship A: 3,000 - 2,000 = 1,000 "new" works
Ship B: 3,000 - 200 = 2,800 "new" works

This gives Ship A a net gain of 1,000 and Ship B a net gain of 2,800 despite both fandoms producing the exact same number of new works that year. And neither one of these reported counts is the actual new works count (3,000). THIS explains the drastic difference in ranking between a ship like Steve/Bucky and Nick/Charlie.
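If you want to check that arithmetic yourself, here is a minimal Python sketch of the snapshot-subtraction method as I understand it from the FAQ. The function name, the ship totals, and the 4% removal rate are all illustrative assumptions taken from the made-up example above, not real AO3 numbers or the chart author's actual code.

```python
def net_gain(start_total, new_works, removal_percent):
    """Return the "new works" figure that snapshot subtraction would report.

    start_total: fics in the tag at the start of the year (hypothetical)
    new_works: fics actually published during the year (hypothetical)
    removal_percent: whole-number percent of older fics deleted or privated
    """
    removed = start_total * removal_percent // 100
    end_total = start_total - removed + new_works
    # The chart only sees the two snapshots, so this is all it can report.
    return end_total - start_total


# Made-up totals from the example above, not real AO3 data.
ships = {"Ship A": 50_000, "Ship B": 5_000}
NEW_WORKS = 3_000
REMOVAL_PERCENT = 4

for name, start_total in ships.items():
    reported = net_gain(start_total, NEW_WORKS, REMOVAL_PERCENT)
    print(f"{name}: {NEW_WORKS} actually new, {reported} reported as 'new'")
```

Both ships go in with the same 3,000 new works, and the only thing that changes the reported number is how big the back catalog was.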

How is this a useful measure of anything? You can't draw any conclusions about the current size and popularity of a fandom based on this data.

With this system, not only is the reported "new works" count incorrect, the older, larger fandom will always be punished and its count disproportionately reduced simply for the sin of being an older, larger fandom. This example doesn’t even take into account that people are going to be way more likely to delete an old fic they're no longer proud of in a fandom they no longer care about than a fic that was just written, so the deletion percentage for the older fandom should theoretically be even larger in comparison.

And if that wasn't bad enough, the author of this "study" KNEW the data was tainted and chose to present it as meaningful anyway. You will only find this if you click through to the FAQ and read about the author’s methodology, something 99.99% of people will NOT do (and even those who do may not understand the true significance of this problem):

The author may try to argue their post states that the tags "which had the greatest gain in total public fanworks” are shown on the chart, which makes it not a lie, but an error on the viewer’s part in not interpreting their data correctly. This is bullshit. Their chart CLEARLY titles the fic count column “New Works” which it explicitly is NOT, by their own admission! It should be titled “Net Gain in Works” or something similar.

Even if it were correctly titled though, the general public would not understand the difference, would interpret the numbers as new works anyway (because net gain is functionally meaningless as we've just discovered), and would base conclusions on their incorrect assumptions. There’s no getting around that… other than doing the counts correctly in the first place. This would be a much larger task but I strongly believe you shouldn’t take on a project like this if you can’t do it right.

To sum up, just because someone put a lot of work into gathering data and making a nice color-coded chart, doesn’t mean the data is GOOD or VALUABLE.

#ao3#ao3 stats#psa#my words#fandom#I doubt anyone is even going to read this but I needed to get it out of my system and at least try to stop this from spreading#if you know me#you know I get Big Mad about misinformation#don't take anything at face value#do your own research

4K notes

Text

Your Meta AI prompts are in a live, public feed

I'm in the home stretch of my 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in PDX TOMORROW (June 20) at BARNES AND NOBLE with BUNNIE HUANG and at the TUALATIN public library on SUNDAY (June 22). After that, it's LONDON (July 1) with TRASHFUTURE'S RILEY QUINN and then a big finish in MANCHESTER on July 2.

Back in 2006, AOL tried something incredibly bold and even more incredibly stupid: they dumped a data-set of 20,000,000 "anonymized" search queries from 650,000 users (yes, AOL had a search engine – there used to be lots of search engines!):

https://en.wikipedia.org/wiki/AOL_search_log_release

The AOL dump was a catastrophe. In an eyeblink, many of the users in the dataset were de-anonymized. The dump revealed personal, intimate and compromising facts about the lives of AOL search users. The AOL dump is notable for many reasons, not least because it jumpstarted the academic and technical discourse about the limits of "de-identifying" datasets by stripping out personally identifying information prior to releasing them for use by business partners, researchers, or the general public.

It turns out that de-identification is fucking hard. Just a couple of datapoints associated with an "anonymous" identifier can be sufficient to de-anonymize the user in question:

https://www.pnas.org/doi/full/10.1073/pnas.1508081113

But firms stubbornly refuse to learn this lesson. They would love it if they could "safely" sell the data they suck up from our everyday activities, so they declare that they can safely do so, and sell giant data-sets, and then bam, the next thing you know, a federal judge's porn-browsing habits are published for all the world to see:

https://www.theguardian.com/technology/2017/aug/01/data-browsing-habits-brokers

Indeed, it appears that there may be no way to truly de-identify a data-set:

https://pursuit.unimelb.edu.au/articles/understanding-the-maths-is-crucial-for-protecting-privacy

Which is a serious bummer, given the potential insights to be gleaned from, say, population-scale health records:

https://www.nytimes.com/2019/07/23/health/data-privacy-protection.html

It's clear that de-identification is not fit for purpose when it comes to these data-sets:

https://www.cs.princeton.edu/~arvindn/publications/precautionary.pdf

But that doesn't mean there's no safe way to data-mine large data-sets. "Trusted research environments" (TREs) can allow researchers to run queries against multiple sensitive databases without ever seeing a copy of the data, and good procedural vetting as to the research questions processed by TREs can protect the privacy of the people in the data:

https://pluralistic.net/2022/10/01/the-palantir-will-see-you-now/#public-private-partnership

But companies are perennially willing to trade your privacy for a glitzy new product launch. Amazingly, the people who run these companies and design their products seem to have no clue as to how their users use those products. Take Strava, a fitness app that dumped maps of where its users went for runs and revealed a bunch of secret military bases:

https://gizmodo.com/fitness-apps-anonymized-data-dump-accidentally-reveals-1822506098

Or Venmo, which, by default, let anyone see what payments you've sent and received (researchers have a field day just filtering the Venmo firehose for emojis associated with drug buys like "pills" and "little trees"):

https://www.nytimes.com/2023/08/09/technology/personaltech/venmo-privacy-oversharing.html

Then there was the time that Etsy decided that it would publish a feed of everything you bought, never once considering that maybe the users buying gigantic handmade dildos shaped like lovecraftian tentacles might not want to advertise their purchase history:

https://arstechnica.com/information-technology/2011/03/etsy-users-irked-after-buyers-purchases-exposed-to-the-world/

But the most persistent, egregious and consequential sinner here is Facebook (naturally). In 2007, Facebook opted its 20,000,000 users into a new system called "Beacon" that published a public feed of every page you looked at on sites that partnered with Facebook:

https://en.wikipedia.org/wiki/Facebook_Beacon

Facebook didn't just publish this – they also lied about it. Then they admitted it and promised to stop, but that was also a lie. They ended up paying $9.5m to settle a lawsuit brought by some of their users, and created a "Digital Trust Foundation" which they funded with another $6.5m. Mark Zuckerberg published a solemn apology and promised that he'd learned his lesson.

Apparently, Zuck is a slow learner.

Depending on which "submit" button you click, Meta's AI chatbot publishes a feed of all the prompts you feed it:

https://techcrunch.com/2025/06/12/the-meta-ai-app-is-a-privacy-disaster/

Users are clearly hitting this button without understanding that this means that their intimate, compromising queries are being published in a public feed. Techcrunch's Amanda Silberling trawled the feed and found:

"An audio recording of a man in a Southern accent asking, 'Hey, Meta, why do some farts stink more than other farts?'"

"people ask[ing] for help with tax evasion"

"[whether family members] would be arrested for their proximity to white-collar crimes"

"how to write a character reference letter for an employee facing legal troubles, with that person’s first and last name included."

While the security researcher Rachel Tobac found "people’s home addresses and sensitive court details, among other private information":

https://twitter.com/racheltobac/status/1933006223109959820

There's no warning about the privacy settings for your AI prompts, and if you use Meta's AI to log in to Meta services like Instagram, it publishes your Instagram search queries as well, including "big booty women."

As Silberling writes, the only saving grace here is that almost no one is using Meta's AI app. The company has only racked up a paltry 6.5m downloads, across its ~3 billion users, after spending tens of billions of dollars developing the app and its underlying technology.

The AI bubble is overdue for a pop:

https://www.wheresyoured.at/measures/

When it does, it will leave behind some kind of residue – cheaper, spin-out, standalone models that will perform many useful functions:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Those standalone models were released as toys by the companies pumping tens of billions into the unsustainable "foundation models," who bet that – despite the worst unit economics of any technology in living memory – these tools would someday become economically viable, capturing a winner-take-all market with trillions of upside. That bet remains a longshot, but the littler "toy" models are beating everyone's expectations by wide margins, with no end in sight:

https://www.nature.com/articles/d41586-025-00259-0

I can easily believe that one enduring use-case for chatbots is as a kind of enhanced diary-cum-therapist. Journalling is a well-regarded therapeutic tactic:

https://www.charliehealth.com/post/cbt-journaling

And the invention of chatbots was instantly followed by ardent fans who found that the benefits of writing out their thoughts were magnified by even primitive responses:

https://en.wikipedia.org/wiki/ELIZA_effect

Which shouldn't surprise us. After all, divination tools, from the I Ching to tarot to Brian Eno and Peter Schmidt's Oblique Strategies deck have been with us for thousands of years: even random responses can make us better thinkers:

https://en.wikipedia.org/wiki/Oblique_Strategies

I make daily, extensive use of my own weird form of random divination:

https://pluralistic.net/2022/07/31/divination/

The use of chatbots as therapists is not without its risks. Chatbots can – and do – lead vulnerable people into extensive, dangerous, delusional, life-destroying ratholes:

https://www.rollingstone.com/culture/culture-features/ai-spiritual-delusions-destroying-human-relationships-1235330175/

But that's a (disturbing and tragic) minority. A journal that responds to your thoughts with bland, probing prompts would doubtless help many people with their own private reflections. The keyword here, though, is private. Zuckerberg's insatiable, all-annihilating drive to expose our private activities as an attention-harvesting spectacle is poisoning the well, and he's far from alone. The entire AI chatbot sector is so surveillance-crazed that anyone who uses an AI chatbot as a therapist needs their head examined:

https://pluralistic.net/2025/04/01/doctor-robo-blabbermouth/#fool-me-once-etc-etc

AI bosses are the latest and worst offenders in a long and bloody lineage of privacy-hating tech bros. No one should ever, ever, ever trust them with any private or sensitive information. Take Sam Altman, a man whose products routinely barf up the most ghastly privacy invasions imaginable, a completely foreseeable consequence of his totally indiscriminate scraping for training data.

Altman has proposed that conversations with chatbots should be protected with a new kind of "privilege" akin to attorney-client privilege and related forms, such as doctor-patient and confessor-penitent privilege:

https://venturebeat.com/ai/sam-altman-calls-for-ai-privilege-as-openai-clarifies-court-order-to-retain-temporary-and-deleted-chatgpt-sessions/

I'm all for adding new privacy protections for the things we key or speak into information-retrieval services of all types. But Altman is (deliberately) omitting a key aspect of all forms of privilege: they immediately vanish the instant a third party is brought into the conversation. The things you tell your lawyer are privileged, unless you discuss them with anyone else, in which case, the privilege disappears.

And of course, all of Altman's products harvest all of our information. Altman is the untrusted third party in every conversation everyone has with one of his chatbots. He is the eternal Carol, forever eavesdropping on Alice and Bob:

https://en.wikipedia.org/wiki/Alice_and_Bob

Altman isn't proposing that chatbots acquire a privilege, in other words – he's proposing that he should acquire this privilege. That he (and he alone) should be able to mine your queries for new training data and other surveillance bounties.

This is like when Zuckerberg directed his lawyers to destroy NYU's "Ad Observer" project, which scraped Facebook to track the spread of paid political misinformation. Zuckerberg denied that this was being done to evade accountability, insisting (with a miraculously straight face) that it was in service to protecting Facebook users' (nonexistent) privacy:

https://pluralistic.net/2021/08/05/comprehensive-sex-ed/#quis-custodiet-ipsos-zuck

We get it, Sam and Zuck – you love privacy.

We just wish you'd share.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/06/19/privacy-invasion-by-design#bringing-home-the-beacon

300 notes

Text

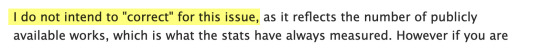

year in review - hockey rpf on ao3

hello!! the annual ao3 year in review had some friends and i thinking - wouldn't it be cool if we had a hockey rpf specific version of that. so i went ahead and collated the data below!!

i start with a broad overview, then dive deeper into the 3 most popular ships this year (with one bonus!)

if any images appear blurry, click on them to expand and they should become clear!

₊˚⊹♡ . ݁₊ ⊹ . ݁˖ . ݁𐙚 ‧₊˚ ⋅. ݁

before we jump in, some key things to highlight:
- CREDIT TO: the webscraping part of my code heavily utilized the ao3 wrapped google colab code, as lovingly created by @kyucultures on twitter, as the main skeleton. i tweaked a couple of things but having it as a reference saved me a LOT of time and effort as a first time web scraper!!! thank you stranger <3
- please do NOT, under ANY circumstances, share any part of this collation on any other website. please do not screenshot or repost to twitter, tiktok, or any other public social platform. thank u!!! T_T
- but do feel free to send requests to my inbox! if you want more info on a specific ship, tag, or you have a cool idea or wanna see a correlation between two variables, reach out and i should be able to take a look. if you want to take a deeper dive into a specific trope not mentioned here/chapter count/word counts/fic tags/ship tags/ratings/etc, shoot me an ask!

˚ . ˚ . . ✦ ˚ . ★⋆. ࿐࿔

with that all said and done... let's dive into hockey_rpf_2024_wrapped_insanity.ipynb

BIG PICTURE OVERVIEW

i scraped a total of 4266 fanfics that dated themselves as published or finished in the year 2024. of these 4000 odd fanfics, the most popular ships were:

Note: "Minor or Background Relationship(s)" clocked in at #9 with 91 fics, but I removed it as it was always a secondary tag and added no information to the chart. I did not discern between primary ship and secondary ship(s) either!
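for anyone curious how a ship tally like this can be put together, here's a minimal sketch. it is not the actual notebook code: the `fics` sample, the `EXCLUDED` set, and the `count_ships` helper are all made up for illustration, and the tag spellings are assumptions. it counts every ship tag on a fic equally (no primary vs secondary distinction) and leaves out the "Minor or Background Relationship(s)" tag, as described above.

```python
from collections import Counter

# hypothetical sample: each fic is represented only by its relationship tags
fics = [
    ["Leon Draisaitl/Matthew Tkachuk"],
    ["Sidney Crosby/Evgeni Malkin", "Minor or Background Relationship(s)"],
    ["Leon Draisaitl/Matthew Tkachuk", "Sidney Crosby/Evgeni Malkin"],
]

# tags that carry no ship information and are left out of the chart
EXCLUDED = {"Minor or Background Relationship(s)"}


def count_ships(fics, excluded=EXCLUDED):
    """Count how many fics carry each ship tag, ignoring excluded tags."""
    counts = Counter()
    for tags in fics:
        # set() so a tag repeated on one fic is only counted once;
        # primary and secondary ships are treated the same
        counts.update(set(tags) - excluded)
    return counts


ship_counts = count_ships(fics)
print(ship_counts.most_common(10))
```

`most_common(10)` gives the top ten ships for plotting; on real data you'd feed in the scraped tag lists instead of the toy sample.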

breaking down the 5 most popular ships over the course of the year, we see:

super interesting to see that HUGE jump for mattdrai in june/july for the stanley cup final. the general lull in the offseason is cool to see as well.

as for the most popular tags in all 2024 hockey rpf fic...

weee like our fluff. and our established relationships. and a little H/C never hurt no one.

i got curious here about which AUs were the most popular, so i filtered down for that. note that i only regex'd for tags that specifically start with "Alternate Universe - ", so A/B/O and some other stuff won't appear here!

idk it was cool to me.
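a rough sketch of the AU filter described above, assuming each fic is just a list of tag strings. the pattern only keeps tags that literally start with "Alternate Universe - ", which is exactly why A/B/O tags fall through. the `sample` data and the `au_counts` helper are hypothetical, not the notebook's real code.

```python
import re
from collections import Counter

# only tags that begin with this exact prefix count as AUs here
AU_PREFIX = re.compile(r"^Alternate Universe - (.+)$")


def au_counts(fics):
    """Tally the part of each tag that follows 'Alternate Universe - '."""
    counts = Counter()
    for tags in fics:
        for tag in tags:
            match = AU_PREFIX.match(tag)
            if match:
                counts[match.group(1)] += 1
    return counts


# hypothetical sample tags, for illustration only
sample = [
    ["Fluff", "Alternate Universe - College/University"],
    ["Alpha/Beta/Omega Dynamics", "Alternate Universe - Soulmates"],
    ["Alternate Universe - College/University", "Hurt/Comfort"],
]
au = au_counts(sample)
print(au.most_common())
```

note how "Alpha/Beta/Omega Dynamics" is skipped even though most people would call it an AU: it just doesn't match the prefix.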

also, here's a quick breakdown of the ratings % for works this year:

and as for the word counts, i pulled up a box plot of the top 20 most popular ships to see how the fic length distribution differed amongst ships:

mattdrai-ers you have some DEDICATION omg. respect

now for the ship by ship break down!!

₊ . ݁ ݁ . ⊹ ࣪ ˖͙͘͡★ ⊹ .

#1 MATTDRAI

most popular ship this year. peaked in june/july with the scf. so what do u people like to write about?

fun fun fun. i love that the scf is tagged there like yes actually she is also a main character

₊ . ݁ ݁ . ⊹ ࣪ ˖͙͘͡★ ⊹ .

#2 SIDGENO

(my babies) top tags for this ship are:

folks, we are a/b/o fiends and we cannot lie. thank you to all the selfless authors for feeding us good a/b/o fic this year. i hope to join your ranks soon.

(also: MPREG. omega sidney crosby. alpha geno. listen, the people have spoken, and like, i am listening.)

₊ . ݁ ݁ . ⊹ ࣪ ˖͙͘͡★ ⊹ .

#3 NICOJACK

top tags!!

it seems nice and cozy over there... room for one more?

₊ . ݁ ݁ . ⊹ ࣪ ˖͙͘͡★ ⊹ .

BONUS: JDTZ.

i wasnt gonna plot this but @marcandreyuri asked me if i could take a look and the results are so compelling i must include it. are yall ok. do u need a hug

top tags being h/c, angst, angst, TRADES, pining, open endings... T_T katie said its a "torture vortex" and i must concur

₊ . ݁ ݁ . ⊹ ࣪ ˖͙͘͡★ ⊹ .

BONUS BONUS: ALPHA/BETA/OMEGA

as an a/b/o enthusiast myself i got curious as to what the most popular ships were within that tag. if you want me to take a look at this for any other tag lmk, but for a/b/o, as expected, SID GENO ON TOP BABY!:

thats all for now!!! if you have anything else you are interested in seeing the data for, send me an ask and i'll see if i can get it to ya!

#fanfic#sidgeno#evgeni malkin#hockey rpf#sidney crosby/evgeni malkin#hockeyrpf#hrpf fic#sidgeno fic#sidney crosby#hockeyrpf wrapped 2024#leon draisaitl#matthew tkachuk#mattdrai#leon draisaitl/matthew tkachuk#nicojack#nico hischier#nico hischier/jack hughes#jack hughes#jamie drysdale#trevor zegras#jdtz#jamie drysdale/trevor zegras#pittsburgh penguins#edmonton oilers#florida panthers#new jersey devils

471 notes


Text

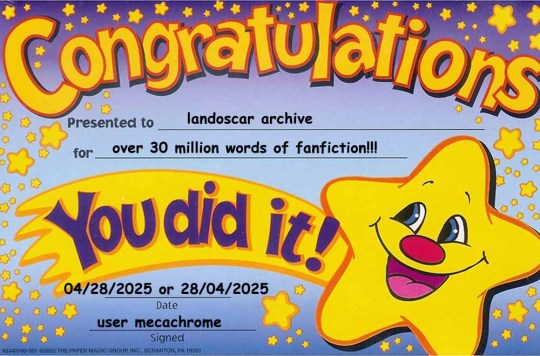

📊 LANDOSCAR AO3 STATS (may 2025)

notes

sorry this literally took 2 weeks to write... unfortunately the data was retrieved april 28 and it is now may 12.

other work: i previously wrote a stats overview that covered landoscar's fic growth and breakout in 2023 :) i've kept some of the formatting and graphs that i showed there, while other things have been removed or refined because i felt they'd become redundant or unnecessary (aka they were basically just a reflection of fandom growth in general, and not unique or interesting to landoscar as a ship specifically).

methodology: i simply scraped the metadata for every fic in the landoscar tag (until april 28, 2025) and then imported it into google sheets to clean, with most visualizations done in tableau. again, all temporal data is by date updated (not posted) unless noted otherwise. this is because the date that appears on the parent view of the ao3 archives is the updated one, so it's the only feasible datapoint to collect for 3000+ fics.

content: this post does not mention any individual authors or concern itself with kudos, hits, comments, etc. i purely describe archive growth and overall analysis of metadata like word count and tagging metrics.

cleaning: after importing my data, i standardized ship spelling, removed extra "814" or "landoscar" tags, and merged all versions of one-sided, background, implied, past, mentioned etc. into a single "(side)" modifier. i also removed one fic entirely from the dataset because the "loscar" tag was being mistakenly wrangled as landoscar, but otherwise was not actually tagged as landoscar. i also removed extra commentary tags in the ships sets that did not pertain to any ships.
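the cleaning above was done by hand in google sheets, but the same idea can be sketched in python. this is only an illustration under assumptions: the modifier spellings in `SIDE_RE`, the `DROP` set, the helper names, and the sample tags are all hypothetical and not taken from the real dataset.

```python
import re

# modifier words listed in the post; how they were spelled in the raw tags is an assumption
SIDE_RE = re.compile(r"\b(one[- ]sided|background|implied|past|mentioned)\b", re.IGNORECASE)
# redundant nickname tags to drop (compared case-insensitively)
DROP = {"814", "landoscar"}


def normalize_ship(tag):
    """Collapse every 'side' style modifier into one trailing '(side)' marker."""
    if not SIDE_RE.search(tag):
        return tag.strip()
    base = SIDE_RE.sub(" ", tag)                   # remove the modifier word itself
    base = re.sub(r"[()\[\]]", " ", base)          # remove brackets left behind
    base = re.sub(r"\s+", " ", base).strip(" -")   # tidy spaces and stray dashes
    return f"{base} (side)"


def clean_ship_tags(tags):
    """Drop redundant nickname tags, then normalize what remains."""
    return [normalize_ship(t) for t in tags if t.strip().lower() not in DROP]


# made-up raw tags for illustration
raw = [
    "Lando Norris/Oscar Piastri",
    "landoscar",
    "Past Lando Norris/Carlos Sainz Jr",
    "Lando Norris/Carlos Sainz Jr (implied)",
]
cleaned = clean_ship_tags(raw)
print(cleaned)
```

the point of funnelling everything into one "(side)" marker is that "past", "implied", and "background" versions of a ship then count as a single category instead of splitting into several tiny ones.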

overall stats

before we get into any detailed distributions, let's first look at an overview of the archive as of 2025! in their 2-and-change years as teammates, landoscar have had over 3,409 fics written for them, good enough for 3rd overall in the f1 archives (behind lestappen and maxiel).

most landoscar fics are completed one-shots (although note that a one-shot could easily be 80k words—in fact they have about 30 single-chapter fics that are at least 50k words long), and they also benefit from a lot of first-tagged fic, which is to say 82.3% of landoscar-tagged fics have them as the first ship, implying that they aren't often used as a fleeting side pairing and artificially skewing perception of their popularity. in fact, over half of landoscar fics are PURELY tagged as landoscar (aka otp: true), with no other side pairings tagged at all.

this percentage has actually gone down a bit since 2023 (65.5%), which makes sense since more lando and oscar ships have become established and grown in popularity over the years, but it's also not a very big difference yet...
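for reference, here's one way the "first-tagged" and "otp: true" percentages could be computed, assuming each fic is stored as an ordered list of ship tags. the `ship_shares` helper, the tag spellings, and the four-fic sample are hypothetical; the real numbers above came from the full scraped set.

```python
def ship_shares(fics, ship):
    """Return the % of fics tagged with `ship` where it is first, and where it is the only ship."""
    tagged = [tags for tags in fics if ship in tags]
    if not tagged:
        return {"first_pct": 0.0, "only_pct": 0.0}
    first = sum(1 for tags in tagged if tags[0] == ship)   # ship is the first tag listed
    only = sum(1 for tags in tagged if len(tags) == 1)     # no other ship tagged at all
    n = len(tagged)
    return {
        "first_pct": round(100 * first / n, 1),
        "only_pct": round(100 * only / n, 1),
    }


# tiny made-up sample: ship tags in the order they appear on each fic
SHIP = "Lando Norris/Oscar Piastri"
sample = [
    [SHIP],
    [SHIP, "Some Other Ship"],
    ["Some Other Ship", SHIP],
    [SHIP],
]
shares = ship_shares(sample, SHIP)
print(shares)
```

in this toy sample the ship is first-tagged on 3 of 4 fics and the only ship on 2 of 4.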

ship growth

of course, landoscar have grown at a frankly terrifying rate since 2023. remember this annotated graph i posted comparing their growth during the 2023 season to that of carlando and loscar, respectively their other biggest ship at the time? THIS IS HER NOW:

yes... that tiny squished down little rectangle... (wipes away stray tear) they grow up so fast. i also tried to annotate this graph to show other "big" landoscar moments in the timeline since, but i honestly struggled with this because they've just grown SO exponentially and consistently that i don't even feel like i can point to anything as a proper catalyst of production anymore. that is to say, i think landoscar are popular enough now that they have a large amount of dedicated fans/writers who will continuously work on certain drafts and stories regardless of what happens irl, so it's hard to point at certain events as inspiring a meaningful amount of work.

note also that this is all going by date updated, so it's not a true reflection of ~growth~ as a ficdom. thankfully ao3 does have a date_created filter that you can manually enter into the search, but because of this limitation i can't create graphs with the granularity and complexity that scraping an entire archive allows me. nevertheless, i picked a few big ships that landoscar have overtaken over the last 2 years and created this graph using actual date created metrics!!!

this is pretty self-explanatory of course but i think it's fun to look at... :) it's especially satisfying to see how many ships they casually crossed over before the end of 2024.

distributions

some quick graphs this time. rating distribution remains extremely similar to the 2023 graph, with explicit fic coming out on top at 28%:

last time i noted a skew in ratings between the overall f1 rpf tag and the landoscar tag (i.e. landoscar had a higher prevalence of e fic), but looking at it a second time i honestly believe this is more of a cultural shift in (f1? sports rpf? who knows) fandom at large and not specific to landoscar as a ship — filtering the f1 rpf tag to works updated from 2023 onward shows that explicit has since become the most popular rating in general, even when excluding landoscar-tagged fics. is it because fandom is getting more horny in general, or because the etiquette surrounding what constitutes t / m / e has changed, or because people are less afraid to post e fic publicly and no longer quarantine it to locked livejournal posts? or something else altogether? Well i don't know and this is a landoscar stats post so it doesn't matter but that could be something for another thought experiment. regardless because of that i feel like further graphs aren't really necessary 🤷♀️

onto word distribution:

still similar to last time, although i will note that there's a higher representation of longfic now!!! it might not seem like much, but i noted last year that 85% of landoscar fics were under 10k & 97% under 25k — these numbers are now 78% and 92% respectively, which adds up in the grand scheme of a much larger archive. you'll also notice that the prevalence of <1k fic has gone down as well.
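the "under 10k" and "under 25k" figures are just cumulative shares of the word count column. a minimal sketch, with a made-up `word_counts` list and a hypothetical `share_under` helper:

```python
def share_under(word_counts, limit):
    """Percentage of fics whose word count is strictly below `limit`."""
    if not word_counts:
        return 0.0
    return 100 * sum(wc < limit for wc in word_counts) / len(word_counts)


# made-up word counts for illustration
word_counts = [500, 3000, 9000, 12000, 30000]

under_10k = share_under(word_counts, 10_000)
under_25k = share_under(word_counts, 25_000)
print(f"under 10k: {under_10k:.0f}%, under 25k: {under_25k:.0f}%")
```

running the same function on the 2023 and 2025 datasets side by side is what makes a shift like 85% to 78% visible.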

for the fun of it here's the wc distribution but with a further rating breakdown; as previously discussed you're more likely to get G ratings in flashfic because there's less wordspace to Make The Porn Happen. of course there are nuances to this but that's just a broad overview

side ships

what other ships are landoscar shippers shipping these days??? a lot of these ships are familiar from last time, but there are two new entries in ham/ros and pia/sai overtaking nor/ric and gas/lec to enter the top 10. ships that include at least one of lando or oscar are highlighted in orange:

of course, i pulled other 814-adjacent ships, but unfortunately i've realized that a lot of them simply aren't that popular/prevalent (context: within the 814 tag specifically) so they didn't make the top 10... because of that, here's a graph with only ships that include lando or oscar and have a minimum of 10 works within the landoscar tag:

eta: other primarily includes oscar & lily and maxf & lando. lando doesn't really have that many popular pairings within landoscar shippers otherwise...

i had wanted to explore these ships further and look at their growth/do some more in depth breakdowns of their popularity, but atm they're simply not popular enough for me to really do anything here. maybe next year?!

that being said, i did make a table comparing the prevalence of side ships within the 814 tag to the global f1 archives, so as to contextualize the popularity of each ship (see 2023). as usual, maxiel is very underrepresented in the landoscar tag, with galex actually receiving quite a boost compared to before!

additional tags

so last time i only had about 400 fics to work with and i did some analysis on additional tags / essentially au tagging. however, the problem is that there are now 3000 fics in my set, and the limitations of web scraping mean that i'm not privy to the tag wrangling that happens in Da Backend of ao3. basically i'm being given all the raw versions of these au tags, whereas on ao3 "a/b/o" and "alpha/beta/omega dynamics" and "au - alpha/beta/omega" and "alternate universe - a/b/o" are all being wrangled together. because it would take way too long for me to do all of this manually and i frankly just don't want to clean that many fics after already going through all the ship tags, i've decided to not do any au analysis because i don't think it would be an accurate reflection of the data...

that being said, i had one new little experiment! as landoscar get more and more competitive, i wanted to chart how ~angsty~ they've gotten as a ship on ao3. i wanted to make a cumulative graph that shows how the overall fluff % - angst % difference has shifted over time, but ummmm... tableau and i had a disagreement. so instead here is a graph of the month-by-month angst % (so basically what percentage of the fics updated in that month specifically were tagged angst?):

the overall number is still not very drastic at all and fluff still prevails over angst in the landoscar archive. to be clear, 33.2% of fics are tagged some variation of fluff and 21.4% are tagged some variation of angst overall, so there's a fluff surplus of 11.8 percentage points. but there has definitely been a slight growth in angst metrics over the past few months!
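a small sketch of how a monthly angst % and the overall fluff surplus could be computed. big assumption here: "some variation of angst" is approximated as any tag containing the word, which is cruder than real tag wrangling. the `monthly_tag_share` helper and the sample rows are hypothetical; only the 33.2% and 21.4% figures come from the stats above.

```python
from collections import defaultdict


def monthly_tag_share(fics, keyword):
    """For each month, the % of fics with at least one tag containing `keyword`."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for month, tags in fics:
        totals[month] += 1
        # assumption: a substring match stands in for "some variation of" the tag
        if any(keyword in tag.lower() for tag in tags):
            hits[month] += 1
    return {month: 100 * hits[month] / totals[month] for month in sorted(totals)}


# made-up rows of (month updated, additional tags)
sample = [
    ("2025-01", ["Fluff"]),
    ("2025-01", ["Angst with a Happy Ending"]),
    ("2025-02", ["Light Angst"]),
    ("2025-02", ["Angst", "Fluff and Angst"]),
]
angst_by_month = monthly_tag_share(sample, "angst")
fluff_by_month = monthly_tag_share(sample, "fluff")

# overall surplus using the figures quoted in the post, in percentage points
fluff_surplus = round(33.2 - 21.4, 1)
print(angst_by_month, fluff_by_month, fluff_surplus)
```

a fic tagged "Fluff and Angst" counts toward both shares, so the two percentages don't need to add up to 100.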

—

i will leave this here for now... if there's anything specific that you're interested in lmk and i can whip it up!!! hehe ty for reading 🧡