#data models

#UNICEF UK#Job Vacancy#Data Engineer#London#Permanent#Part home/Part office#£52,000 per annum#Enterprise Data Platform#Data Ingestion Pipelines#Data Models#UUK Data Strategy#Information Team#Data Solutions#Data Integrations#Complex Data Migrations#Code Development#Datawarehouse Environment#Snowflake#Apply Online#Closing Date: 7 March 2024#First Round Interview: 5 April 2024#Second Round Interview: 6 June 2024#Excellent Pay#Benefits#Flexible Working#Annual Leave#Pension#Discounts#Wellbeing Tools

The next solar storm is on its way – and may cause more problems than you think - Technology Org

New Post has been published on https://thedigitalinsider.com/the-next-solar-storm-is-on-its-way-and-may-cause-more-problems-than-you-think-technology-org/

We live in such times – we should be prepared for the worst and take adequate precautions. We should wear helmets when cycling, and knee braces if our legs can no longer support our weight. In winter, we must salt the pavements and preferably wear scarves.

Aurora as seen from Talkeetna, Alaska, on Nov. 3, 2015.

Credits: Copyright Dora Miller

And to think that there are people in the world interested in things happening some 150 million kilometres away! Kristian Solheim Thinn is one of them. He is a research scientist at SINTEF Energy Research working with electrical power components.

“The only real protection from solar storms is to switch off the electricity. Is that tabloid enough for you?”, he asks, before adding:

“If a major storm occurs, we must either live with it and hope that our distribution grids will not be severely damaged, or simply prepare for the fact that we will all be without electricity”, he says. These are the two extremes.

Vulnerable transformers

Solheim Thinn has been talking for some time and explaining the situation slowly and very patiently. He tells us how in 2019 he installed sensors in a transformer in Ogndal in Trøndelag county. The intention was, and still is, to measure and analyse what happens when solar storms collide with the Earth’s magnetic field, creating problems for all of us – not least in northern latitudes where the field protects us far less than at the equator. According to Solheim Thinn, the problems caused by solar storms are the result of what are known as geomagnetically induced currents (GICs).

It was, of course, no coincidence that the transformer at Ogndal was chosen. Since the distance between neighbouring transformers is so great, it is easier for the solar storms to create problems.

“We are currently as well prepared as we can be”, says Solheim Thinn. “Overhead cables are resilient in the face of solar storms, but we have to consider the transformers because they represent the weak link in the chain. In Norway, our transformers are connected to the main distribution grid, operated for the most part by Statnett, and it is these that are the problem.”

– Why is this?

“They are connected to earth, which means that any current induced in the cables passes through the transformers and onward to ground. This creates no problems at lower voltages. However, things are very different during solar storms.”

Solheim Thinn explains that our transformers are designed for alternating current. So, when direct current is geomagnetically induced during solar storms, they may start to generate internal heat. This results in increased consumption of so-called reactive power, which disrupts the distribution grid. It also causes high-frequency noise that may create problems for the control system. Eventually, the transformer cores may become what is known as ‘saturated’.

This in turn causes safety mechanisms to cut in, hopefully disconnecting the transformer before it is badly damaged.

But it doesn’t end there. If one transformer is in trouble, the next in line has to take over. But this means that the second transformer must carry twice the load. This may be more than enough to cause it to throw in the towel as well – and in this way a domino effect will develop.

“In the worst case, we’re talking about a total blackout”, says Solheim Thinn.

First of all, it will start to get cold

This is why the grid operates under a number of precautions. In 2019, the Directorate for Civil Protection and Emergency Planning (DSB) compiled a list of ‘things that can go wrong’ here in Norway. The list includes issues such as the impacts of extreme weather events, flooding, pandemics, cyberattacks, and not forgetting the mother of all catastrophes – solar storms, which were classified as a real threat.

It’s patently obvious that there isn’t a lot we can do about solar storms. Once an eruption on the Sun is observed, we have anything from 18 to 72 hours to prepare ourselves for something that cannot be stopped. Let me explain. We will only find out how big a solar storm is one or two hours before it strikes the Earth. They can arrive very suddenly.

A recent SINTEF report reveals that there is currently no Norwegian system for solar storm warnings.

“The upside is that during the most intense storms we will get to see some fantastic displays of the northern lights, even as far south as Florida”, explains Solheim Thinn.

The downside is that the impact will be felt for many long months afterwards, and the researcher has no shortage of issues on his list. Disruptions to satellite navigation systems will make things very difficult, even for ordinary vehicle traffic. The vessels that we rely on to maintain oil and gas production in the North Sea will not be able to operate as they should. Excavators that depend on GPS guidance systems will grind to a halt. Both high and low frequency radio transmissions will cease, and you will not be able to fill your car with fuel.

“Petrol pumps also rely on electricity”, says Solheim Thinn.

“After two hours, the batteries at the base stations supporting the mobile network will run out. We will then lose mobile coverage and things will really begin to go downhill”, he says.

First of all, it will start to get cold.

And when this happens, it will be up to us ordinary folk to have done our homework in advance. The DSB has compiled a contingency list for households. Nine litres of water, two packets of crispbread, a packet of porridge oats, three packets of dried foods, or tins, per person, as well as warm clothes, blankets, sleeping bags and a battery-powered DAB radio.

The solar storm of 1859

One of the most famous solar storms in recorded history was the Carrington event of 1859, named after the British astronomer Richard Carrington, who was observing some intense sunspots.

“The event occurred during one of the famous Californian gold rushes, and one urban myth suggested that the light was so intense that miners were able to pan for gold at night”, says Solheim Thinn. “The telegraph lines glowed red in the dark and the terminals caught fire”, he says.

In 1921, we saw evidence that solar storms could create problems even when technological infrastructure was in its infancy. For the three days during which this storm continued, electrical fires were started all across the world. The worst examples were in New York. Trains were brought to a standstill, so the storm became known as the ‘New York Railroad Storm’.

In 1972, a solar storm detonated several thousand sea mines along the coast of Vietnam.

“This was the first occasion on which problems were reported with satellites”, says Solheim Thinn.

In 1982, problems with as many as four transformers and 15 power lines were recorded in Sweden. This event also caused disruptions in Norway, but no blackouts occurred.

In 1989 a solar storm caused a nine-hour outage in Quebec.

“We also experienced solar storms in 2003, 2017 and most recently in 2022, when the company SpaceX lost 40 of its satellites”, says Solheim Thinn.

According to the New York Times, the storm cost Elon Musk more than one billion kroner.

Things could have been much worse. In 2012 the planet experienced a near miss. It is calculated that if this storm had struck the USA, it would have caused in the region of 20 trillion dollars of damage. This is equivalent to twice the Norwegian Government Pension Fund Global, and would have been as catastrophic, in financial terms, as a large asteroid colliding with the planet, less the social and human costs and loss of life.

But enough of these horror stories. Let’s get back to Ogndal in Trøndelag, and what has been discovered there.

The unpredictability of space

“If you can’t measure it, you can’t prove that it exists”, says Solheim Thinn.

“We’ve installed sensors in the transformer at Ogndal in order to take measurements and calibrate our data models”, he says.

Several factors have to be considered, one of which is solar activity, which is not too difficult to monitor. The US Space Weather Prediction Center (SWPC) is a mine of information, but there is one problem – the difficulty of making reliable long-term predictions.

The Tromsø Geophysical Observatory has deployed several magnetometers with the aim of measuring the strength of the Earth’s magnetic field. One of them is in Røyrvik, which is between 150 and 160 kilometres from Ogndal. The magnetometers measure both the natural geomagnetic field and the magnetic perturbations generated by solar storms.

Naturally, these measurements are in real time, but they can also help us to understand how the geomagnetic field is influenced by events in space, including the solar storms that induce currents in our distribution grids and transformers.

“We also have to know about the electrical conductivity at depths of several hundred kilometres below the Earth’s surface”, says Solheim Thinn. “This is because the geomagnetically induced currents also penetrate deep into the Earth’s crust, and in turn impact on the induced currents flowing through the transformers”, he says.

Solheim Thinn explains that it is difficult to construct reliable predictive models because electrical conductivity in the different geological layers within the crust is very variable. It is especially difficult to assess electrical conductivity at the transition between the sea and land. The ocean exhibits relatively high conductivity, while the opposite is true for land areas.

“On the other hand, it is not difficult to identify correlations between magnetic field measurements and actual geomagnetically induced currents in our distribution grids, provided that both are being measured at the same time”, says Solheim Thinn. “It is possible to calculate a ratio that describes their inter-relationship”, he says.
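The article does not say how that ratio is computed. One simple way to express it, offered here only as an illustration and not as SINTEF's method, is to assume the current is roughly proportional to how fast the magnetic field changes (GIC ≈ k · dB/dt) and fit k by least squares, alongside a correlation coefficient showing how well the two series track each other. The function names and sample values below are hypothetical.

```python
import math
from typing import Sequence


def fit_ratio(db_dt: Sequence[float], gic: Sequence[float]) -> float:
    """Least-squares slope k for the assumed model gic ≈ k * db_dt (line forced through zero)."""
    if len(db_dt) != len(gic) or not db_dt:
        raise ValueError("need two non-empty series of equal length, sampled at the same times")
    sxx = sum(x * x for x in db_dt)
    if sxx == 0:
        raise ValueError("the magnetic-field series never changes")
    return sum(x * y for x, y in zip(db_dt, gic)) / sxx


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation: how closely the two series move together (1.0 = perfectly)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


# Hypothetical simultaneous samples: field change rate (nT/min) and transformer current (A).
db_dt = [10.0, 25.0, 40.0, 80.0]
gic = [1.1, 2.4, 4.1, 7.9]
print(f"ratio k = {fit_ratio(db_dt, gic):.3f} A per nT/min, r = {pearson(db_dt, gic):.3f}")
```

Forcing the line through zero reflects the assumption that no field change means no induced current; a real model would also have to account for the ground conductivity effects described below.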

Long seabed cables installed on the continental shelf are very vulnerable to solar storms. One of the studies cited in the SINTEF report focused on the influence of the conductivity of shallow offshore continental shelves, similar to the situation in the North Sea. It demonstrated that the geomagnetically induced current would be three times as strong if the ocean had not been there.

Solheim Thinn has also made a couple of other finds. The ionospheric electrojets that circle the Earth’s magnetic poles travel for the most part in an east-west direction. Researchers have believed for some time that solar storms impact on electrical power lines in different ways, depending on the direction from which the current is travelling. But this has now been shown to be untrue.

“It makes no difference at all whether power lines are oriented in a northerly, southerly, easterly or westerly direction”, says Solheim Thinn. “They still remain just as vulnerable”, he says.

Solheim Thinn also believes that there are differences in resilience between different transformers. In recent years, transformers have been purchased that are resilient to solar storms for a given period because they are designed not to overheat too quickly. It has been shown that three-phase transformers installed with five-limbed iron cores become saturated more quickly than the three-limbed type.

“For this reason, it is now recommended to install three-limbed three-phase transformers in the most important and most vulnerable substation facilities.”

Approaching a solar maximum

Most people are aware that the sun exhibits periods of greater and lesser activity, defined within an 11-year cycle. Solheim Thinn explains that during the first two years after the sensors were installed at Ogndal, only very low levels of activity were recorded. However, starting in 2021, and during the remainder of that year, six measurements were made indicating so-called moderate effect activity.

“During moderate effect activity, our transformers will remain resilient, but this may not necessarily apply to the grid”, says Solheim Thinn.

On 4 November 2021, a transformer went down at a neighbouring substation in Namsos, about 70 kilometres from Ogndal.

“The safety mechanism was tripped, and this disconnected the transformer”, says Solheim Thinn. “Our findings indicate that there is a connection between reactive power consumption, geomagnetically induced current, and changes in the magnetic field as recorded by space weather instruments. In other words, when we measure strong currents, we observe a simultaneous and major peak in reactive power consumption. If we exceed the safety mechanism threshold value, this trips the transformer’s circuit breaker”, he says.
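The trip logic described here can be sketched as a threshold check over a series of reactive power readings. This is a minimal illustration, not the actual protection scheme at Namsos or Ogndal: the threshold, the hypothetical `hold` parameter (how many consecutive readings must exceed the limit, a stand-in for the time delays real relays use) and the readings are all assumptions.

```python
from typing import Optional, Sequence


def first_trip(q_mvar: Sequence[float], threshold_mvar: float, hold: int = 1) -> Optional[int]:
    """Index of the reading at which a simple over-threshold trip would fire, or None.

    hold = number of consecutive readings that must exceed the threshold.
    """
    if hold < 1:
        raise ValueError("hold must be at least 1")
    run = 0
    for i, q in enumerate(q_mvar):
        run = run + 1 if q > threshold_mvar else 0  # any dip below the limit resets the count
        if run >= hold:
            return i
    return None


# Hypothetical reactive power readings (Mvar) during a storm, one per minute.
readings = [5.0, 5.2, 9.8, 12.5, 13.1, 6.0]
print(first_trip(readings, threshold_mvar=12.0))          # 3
print(first_trip(readings, threshold_mvar=12.0, hold=2))  # 4
print(first_trip(readings, threshold_mvar=20.0))          # None
```

Requiring several readings above the limit trades a slightly later trip for fewer disconnections caused by brief spikes.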

However, even if a transformer takes a time-out and goes down, as happened in Namsos on that late autumn day, this was hardly noticeable to electricity consumers. This was because other transformers stepped in to do the job. The electricity grid operates with a so-called ‘buffer’, or reserve capacity, which in this case kicked in to avert a crisis.

“However, it was a close call and a stroke of luck that the Ogndal transformer remained in operation”, says Solheim Thinn.

“In 2024 we will be entering a period in the cycle when solar activity is at its highest. Solar storms are classified on the basis of their intensity. A G1 storm is a small one, while the most intense is classified as G5.”

“We expect to see four G5 solar storm events during every 11-year cycle”, says Solheim Thinn. “The current cycle is expected to display its highest levels of activity in 2024 and 2025”, he says.
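The article names G1 and G5 without saying how a storm is graded. NOAA's Space Weather Prediction Center, mentioned earlier, defines its geomagnetic storm scale in terms of the planetary K-index (Kp): Kp 5 is G1 (minor) and Kp 9 is G5 (extreme), one level per step in between. The sketch below encodes that mapping, assuming whole-number Kp values; the official scale also handles fractional thirds (it counts a Kp of 9- as G4), which this simplified, hypothetical `g_scale` helper ignores.

```python
from typing import Optional


def g_scale(kp: int) -> Optional[str]:
    """Map a whole-number planetary K-index (0-9) to a NOAA G-scale storm level."""
    if not 0 <= kp <= 9:
        raise ValueError("Kp runs from 0 to 9")
    if kp < 5:
        return None  # below storm level
    return f"G{kp - 4}"  # Kp 5 -> G1 (minor) ... Kp 9 -> G5 (extreme)


for kp in (3, 5, 7, 9):
    print(kp, g_scale(kp))
```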

– What will this mean?

“We will most likely experience control and safety issues in the electricity grid”, says Solheim Thinn. “We may experience a collapse or blackout of the grid, and some transformers may be damaged. We will also experience problems with satellite navigation”, he says.

We can in fact only hope that the worst doesn’t really come to the worst.

Source: Sintef

#000#2022#2024#alaska#Asteroid#Astronomy news#batteries#battery#battery-powered#billion#cables#consumers#copyright#course#crust#Cyberattacks#Dark#data#data models#direction#display#displays#earth#electric power#electrical power#electricity#Elon Musk#energy#Events#Exhibits

What is the importance of the extensibility of a platform? Its benefit for your e-commerce business.

Extensibility is a software design approach that aims to build platforms that can be continuously expanded and built upon beyond their initial uses and settings. In other words, extensibility measures how readily a system can be expanded and how much work is required to achieve a given set of desired results.

By extending current capabilities, extensibility enables firms to address new challenges. In this way, adaptability, mutability, and agility in software are related to extensibility, as are ideas like modular software design and interoperability. All of these ideas essentially refer to the capacity to change procedures and modify the software in response to changing requirements.

The Importance of Extensibility

Extensibility gives the IT team more ways to use current software and tools to handle changing business concerns. Extensible systems can support global organizations that pursue a variety of business strategies.

Beyond what the platform supports out of the box, an extensible platform enables developers to build and run apps that address the demands of the business. The platform's extension points make it simple for developers to attach add-ons that improve performance and usability and provide new capabilities.

Platform extension features include REST and other APIs that connect to other components of the technology stack, as well as connector tools that exchange data and perform actions with other software.
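To make the idea of extension points and add-ons concrete, here is a minimal sketch of one common pattern: the core exposes named hooks, and add-ons register handlers against them without the core code being edited. The `Platform` class, the "checkout" hook and the loyalty-discount add-on are hypothetical and not taken from any particular e-commerce product.

```python
from typing import Any, Callable, Dict, List

Order = Dict[str, Any]
Handler = Callable[[Order], Order]


class Platform:
    """Toy core platform exposing named extension points that add-ons can hook into."""

    def __init__(self) -> None:
        self._hooks: Dict[str, List[Handler]] = {}

    def extension_point(self, name: str) -> Callable[[Handler], Handler]:
        """Decorator that registers an add-on handler under an extension point."""
        def register(fn: Handler) -> Handler:
            self._hooks.setdefault(name, []).append(fn)
            return fn
        return register

    def run(self, name: str, payload: Order) -> Order:
        # Handlers run in registration order; each sees the previous one's output.
        # With no add-ons installed, the core behaviour (payload unchanged) applies.
        for fn in self._hooks.get(name, []):
            payload = fn(payload)
        return payload


platform = Platform()


@platform.extension_point("checkout")
def loyalty_discount(order: Order) -> Order:
    # Hypothetical add-on: 10% off for loyalty members, added without editing the core.
    if order.get("loyalty_member"):
        return {**order, "total": round(order["total"] * 0.9, 2)}
    return order


print(platform.run("checkout", {"total": 50.0, "loyalty_member": True}))
```

Because handlers return a new order rather than mutating it, one add-on cannot silently change data another add-on has already seen.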

Features of an extensible software platform

Software that is built with extensibility in mind is significantly more durable and useful than systems that are less adaptable or that can only be modified with significant financial, human, and technical investment.

One of the most important features of an extensible software platform is that it comes with numerous pre-built capabilities that businesses may require, so they do not have to add to the platform right away.

Additionally, vendors should make it simple for programmers to expand their platforms. Providers can either provide developers with the tools they need to create their extensions, or they can let them use their own tools.

Extensible systems let businesses handle more sophisticated and nuanced needs, so they can adjust to change without having to reinvest. Rather than replacing current systems, they can expand them to address new difficulties.

Types of Platform Extensibility

The types of platform extensibility listed below should all be taken into account when your business decides what it needs in order to be successful in a difficult and competitive marketplace.

User Interface (UI):

By creating a more flexible user interface, businesses can customize experiences to meet customer needs without sacrificing essential features. In this way, extensibility lays the groundwork for continuous improvement of the user experience.

Data Models:

An extensible data model would be able to take into account a wide range of distinct factors, values, metrics, measurements, and KPIs without requiring a system-wide configuration change. An extensible system could operate as a platform that offers an interface allowing users to design and manage their own data models and business logic, allowing them to reprogram and redeploy the system towards new objectives and goals instead of creating an algorithm to solve a specific problem.
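One way such a data model can absorb new values and metrics without a system-wide change is to keep a fixed core record and let users define extra attributes at runtime, validated against their own definitions. This is a minimal sketch of that idea; the `Schema` and `Product` classes, the "carbon_kg" attribute and the SKU are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


class Schema:
    """User-defined attributes layered on top of a fixed core model (no migration needed)."""

    def __init__(self) -> None:
        self._fields: Dict[str, type] = {}

    def define(self, name: str, kind: type) -> None:
        self._fields[name] = kind

    def check(self, name: str, value: Any) -> None:
        if name not in self._fields:
            raise KeyError(f"undefined attribute: {name}")
        expected = self._fields[name]
        if not isinstance(value, expected):
            raise TypeError(f"{name} expects {expected.__name__}, got {type(value).__name__}")


@dataclass
class Product:
    sku: str      # core fields shared by every deployment
    price: float
    attributes: Dict[str, Any] = field(default_factory=dict)  # extension fields

    def set_attribute(self, schema: Schema, name: str, value: Any) -> None:
        schema.check(name, value)  # validate against the user's definition first
        self.attributes[name] = value


schema = Schema()
schema.define("carbon_kg", float)  # a new metric, added at runtime by a user
shirt = Product("TSHIRT-01", 19.99)
shirt.set_attribute(schema, "carbon_kg", 2.1)
print(shirt)
```

The trade-off is that extension fields are checked at runtime rather than by the database schema, which is what makes them cheap to add.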

Integration:

Extensibility encourages the integration of business platforms, systems, and processes, which makes it much simpler for various business teams, projects, and divisions to work together. When integrated systems work together as a single, unified solution for managing the complete business, integrations built on open standards increase productivity and can produce superior business results.

Processes:

Extending business processes is crucial for creating fresh solutions to difficult problems. Interoperability, which describes how easily new technologies can integrate with current systems, matters here. Competent businesses must be able to create new procedures that build on their existing capabilities, rather than starting over whenever they change a software system's fundamental operation.

Benefit from the extensibility of a platform for your e-commerce business

A platform's extensibility supports corporate growth by giving businesses access to a collaborative and innovative open environment.

Compared with platforms that are closed-source or have constrained capabilities, the freedom to incorporate new features into cloud software is a clear benefit for businesses.

Extensibility is crucial for a company's product because a platform that can be expanded with new features lets the company update it frequently, stay relevant in the market, and keep its competitive advantage.

The better performance and customer experience that software extensions provide can also help the organization support its everyday business users.

This makes your life simpler and lets you concentrate on what matters most: making great products for your company and clients.

For enterprises, extensibility can be quite valuable. Providing more services or products enables them to expand their reach, engage new audiences, and enhance client connections.

Higher degrees of extensibility on a platform can help users both now and in the future by making their lives simpler.

This extensibility offers the company numerous potential advantages, including greater customer satisfaction, increased productivity, and improved efficiency.

Conclusion

Global organizations become more agile and resilient by working to improve the extensibility of systems and operational processes. Today's organizations need a software platform that is extensible in order to create and operate solutions that meet their present needs while also fostering innovation with minimal risk to continuing operations.

It's crucial to make sure your platform can be quickly extended in a world where technology is continually changing. With this capability, you'll be able to meet the demands of a shifting market while maintaining an advantage over your competitors.

#e-commerce business#extensible software platform#Platform Extensibility#utilizing extensibility#User Interface (UI)#modular software design#cloud software innovations#Data Models#e-commerce platform#how to start e-commerce business in India#eCommerce store provider#eCommerce platform in India#eCommerce store software#eCommerce industry software

first meeting

#rdr2#red dead redemption#red dead redemption 2#arthur morgan#issac morgan#man sees baby for the first time ever and is surprised that theyre tiny little creatures#arthur being the man he is mustve had SO many emotions holding issac for the first time. and this is all he manages to say in the moment#im lowkey projecting bc when i met my niece i absolutely REFUSED to hold her until i saw her the second time and i was like.#oh my fucking god shes so fucking tiny#anyway. i hope nothing bad happens to them ever<3#btw arthurs clothes come from an edit of a young version of him that i saw#and i also saw a video of someone managing to find a repurposed model of eliza in rdr2s data and shes got dark hair!#so i gave issac dark hair (and arthurs blue eyes<3)#arthurs definitrly into people with dark hair#pspspspsp arthur i have dark hair pspspspspspspsp#AHEM anyway-#sorry i cant draw babies even tho i see one all the time now LMFAO#my art

Your car spies on you and rats you out to insurance companies

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me TOMORROW (Mar 13) in SAN FRANCISCO with ROBIN SLOAN, then Toronto, NYC, Anaheim, and more!

Another characteristically brilliant Kashmir Hill story for The New York Times reveals another characteristically terrible fact about modern life: your car secretly records fine-grained telemetry about your driving and sells it to data-brokers, who sell it to insurers, who use it as a pretext to gouge you on premiums:

https://www.nytimes.com/2024/03/11/technology/carmakers-driver-tracking-insurance.html

Almost every car manufacturer does this: Hyundai, Nissan, Ford, Chrysler, etc etc:

https://www.repairerdrivennews.com/2020/09/09/ford-state-farm-ford-metromile-honda-verisk-among-insurer-oem-telematics-connections/

This is true whether you own or lease the car, and it's separate from the "black box" your insurer might have offered to you in exchange for a discount on your premiums. In other words, even if you say no to the insurer's carrot – a surveillance-based discount – they've got a stick in reserve: buying your nonconsensually harvested data on the open market.

I've always hated that saying, "If you're not paying for the product, you're the product," the reason being that it posits decent treatment as a customer reward program, like the little ramekin of warm nuts first class passengers get before takeoff. Companies don't treat you well when you pay them. Companies treat you well when they fear the consequences of treating you badly.

Take Apple. The company offers iOS users a one-tap opt-out from commercial surveillance, and more than 96% of users opted out. Presumably, the other 4% were either confused or on Facebook's payroll. Apple – and its army of cultists – insist that this proves that our world's woes can be traced to cheapskate "consumers" who expected to get something for nothing by using advertising-supported products.

But here's the kicker: right after Apple blocked all its rivals from spying on its customers, it began secretly spying on those customers! Apple has a rival surveillance ad network, and even if you opt out of commercial surveillance on your iPhone, Apple still secretly spies on you and uses the data to target you for ads:

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

Even if you're paying for the product, you're still the product – provided the company can get away with treating you as the product. Apple can absolutely get away with treating you as the product, because it lacks the historical constraints that prevented Apple – and other companies – from treating you as the product.

As I described in my McLuhan lecture on enshittification, tech firms can be constrained by four forces:

I. Competition

II. Regulation

III. Self-help

IV. Labor

https://pluralistic.net/2024/01/30/go-nuts-meine-kerle/#ich-bin-ein-bratapfel

When companies have real competitors – when a sector is composed of dozens or hundreds of roughly evenly matched firms – they have to worry that a maltreated customer might move to a rival. 40 years of antitrust neglect means that corporations were able to buy their way to dominance with predatory mergers and pricing, producing today's inbred, Habsburg capitalism. Apple and Google are a mobile duopoly, Google is a search monopoly, etc. It's not just tech! Every sector looks like this:

https://www.openmarketsinstitute.org/learn/monopoly-by-the-numbers

Eliminating competition doesn't just deprive customers of alternatives, it also empowers corporations. Liberated from "wasteful competition," companies in concentrated industries can extract massive profits. Think of how both Apple and Google have "competitively" arrived at the same 30% app tax on app sales and transactions, a rate that's more than 1,000% higher than the transaction fees extracted by the (bloated, price-gouging) credit-card sector:

https://pluralistic.net/2023/06/07/curatorial-vig/#app-tax

But cartels' power goes beyond the size of their warchest. The real source of a cartel's power is the ease with which a small number of companies can arrive at – and stick to – a common lobbying position. That's where "regulatory capture" comes in: the mobile duopoly has an easier time of capturing its regulators because two companies have an easy time agreeing on how to spend their app-tax billions:

https://pluralistic.net/2022/06/05/regulatory-capture/

Apple – and Google, and Facebook, and your car company – can violate your privacy because they aren't constrained by regulation, just as Uber can violate its drivers' labor rights and Amazon can violate your consumer rights. The tech cartels have captured their regulators and convinced them that the law doesn't apply if it's being broken via an app:

https://pluralistic.net/2023/04/18/cursed-are-the-sausagemakers/#how-the-parties-get-to-yes

In other words, Apple can spy on you because it's allowed to spy on you. America's last consumer privacy law was passed in 1988, and it bans video-store clerks from leaking your VHS rental history. Congress has taken no action on consumer privacy since the Reagan years:

https://www.eff.org/tags/video-privacy-protection-act

But tech has some special enshittification-resistant characteristics. The most important of these is interoperability: the fact that computers are universal digital machines that can run any program. HP can design a printer that rejects third-party ink and charge $10,000/gallon for its own colored water, but someone else can write a program that lets you jailbreak your printer so that it accepts any ink cartridge:

https://www.eff.org/deeplinks/2020/11/ink-stained-wretches-battle-soul-digital-freedom-taking-place-inside-your-printer

Tech companies that contemplated enshittifying their products always had to watch over their shoulders for a rival that might offer a disenshittification tool and use that as a wedge between the company and its customers. If you make your website's ads 20% more obnoxious in anticipation of a 2% increase in gross margins, you have to consider the possibility that 40% of your users will google "how do I block ads?" Because the revenue from a user who blocks ads doesn't stay at 100% of the current levels – it drops to zero, forever (no user ever googles "how do I stop blocking ads?").
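The trade-off in that example can be worked through. This is a rough sketch under assumptions the post does not state: every user is equally valuable, and the "2% increase in gross margins" is treated as 2% more revenue from each user who keeps seeing ads. The function names are hypothetical.

```python
def revenue_after(block_share: float, margin_gain: float) -> float:
    """Revenue relative to today (1.0 = unchanged) after making ads more obnoxious.

    block_share: fraction of users who respond by installing an ad-blocker (they yield 0 forever).
    margin_gain: extra revenue per remaining user from the more obnoxious ads.
    """
    return (1 - block_share) * (1 + margin_gain)


def break_even_block_share(margin_gain: float) -> float:
    """Largest fraction of users who can start blocking before the change loses money."""
    return margin_gain / (1 + margin_gain)


print(f"{revenue_after(0.40, 0.02):.3f}")        # 0.612
print(f"{break_even_block_share(0.02):.2%}")      # 1.96%
```

Under those assumptions, losing only about 2% of users to ad-blocking wipes out the gain, and losing 40% leaves roughly 61% of the original revenue.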

The majority of web users are running an ad-blocker:

https://doc.searls.com/2023/11/11/how-is-the-worlds-biggest-boycott-doing/

Web operators made them an offer ("free website in exchange for unlimited surveillance and unfettered intrusions") and they made a counteroffer ("how about 'nah'?"):

https://www.eff.org/deeplinks/2019/07/adblocking-how-about-nah

Here's the thing: reverse-engineering an app – or any other IP-encumbered technology – is a legal minefield. Just decompiling an app exposes you to felony prosecution: a five-year sentence and a $500k fine for violating Section 1201 of the DMCA. But it's not just the DMCA – modern products are surrounded with high-tech tripwires that allow companies to invoke IP law to prevent competitors from augmenting, reconfiguring or adapting their products. When a business says it has "IP," it means that it has arranged its legal affairs to allow it to invoke the power of the state to control its customers, critics and competitors:

https://locusmag.com/2020/09/cory-doctorow-ip/

An "app" is just a web-page skinned in enough IP to make it a crime to add an ad-blocker to it. This is what Jay Freeman calls "felony contempt of business model" and it's everywhere. When companies don't have to worry about users deploying self-help measures to disenshittify their products, they are freed from the constraint that prevents them from indulging the impulse to shift value from their customers to themselves.

Apple owes its existence to interoperability – its ability to clone Microsoft Office's file formats for Pages, Numbers and Keynote, which saved the company in the early 2000s – and ever since, it has devoted its existence to making sure no one ever does to Apple what Apple did to Microsoft:

https://www.eff.org/deeplinks/2019/06/adversarial-interoperability-reviving-elegant-weapon-more-civilized-age-slay

Regulatory capture cuts both ways: it's not just about powerful corporations being free to flout the law, it's also about their ability to enlist the law to punish competitors that might constrain their plans for exploiting their workers, customers, suppliers or other stakeholders.

The final historical constraint on tech companies was their own workers. Tech has very low union-density, but that's in part because individual tech workers enjoyed so much bargaining power due to their scarcity. This is why their bosses pampered them with whimsical campuses filled with gourmet cafeterias, fancy gyms and free massages: it allowed tech companies to convince tech workers to work like government mules by flattering them that they were partners on a mission to bring the world to its digital future:

https://pluralistic.net/2023/09/10/the-proletarianization-of-tech-workers/

For tech bosses, this gambit worked well, but failed badly. On the one hand, they were able to get otherwise powerful workers to consent to being "extremely hardcore" by invoking Fobazi Ettarh's spirit of "vocational awe":

https://www.inthelibrarywiththeleadpipe.org/2018/vocational-awe/

On the other hand, when you motivate your workers by appealing to their sense of mission, the downside is that they feel a sense of mission. That means that when you demand that a tech worker enshittify something they missed their mother's funeral to deliver, they will experience a profound sense of moral injury and refuse, and that worker's bargaining power means that they can make it stick.

Or at least, it did. In this era of mass tech layoffs, when Google can fire 12,000 workers after an $80b stock buyback that would have paid their wages for the next 27 years, tech workers are learning that the answer to "I won't do this and you can't make me" is "don't let the door hit you in the ass on the way out" (AKA "sharpen your blades boys"):

https://techcrunch.com/2022/09/29/elon-musk-texts-discovery-twitter/

With competition, regulation, self-help and labor cleared away, tech firms – and firms that have wrapped their products around the pluripotently malleable core of digital tech, including automotive makers – are no longer constrained from enshittifying their products.

And that's why your car manufacturer has chosen to spy on you and sell your private information to data-brokers and anyone else who wants it. Not because you didn't pay for the product, so you're the product. It's because they can get away with it.

Cars are enshittified. The dozens of chips that auto makers have shoveled into their car design are only incidentally related to delivering a better product. The primary use for those chips is autoenshittification – access to legal strictures ("IP") that allow them to block modifications and repairs that would interfere with the unfettered abuse of their own customers:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

The fact that it's a felony to reverse-engineer and modify a car's software opens the floodgates to all kinds of shitty scams. Remember when Bay Staters were voting on a ballot measure to impose right-to-repair obligations on automakers in Massachusetts? The only reason they needed to have the law intervene to make right-to-repair viable is that Big Car has figured out that if it encrypts its diagnostic messages, it can felonize third-party diagnosis of a car, because decrypting the messages violates the DMCA:

https://www.eff.org/deeplinks/2013/11/drm-cars-will-drive-consumers-crazy

Big Car figured out that VIN locking – DRM for engine components and subassemblies – can felonize the production and the installation of third-party spare parts:

https://pluralistic.net/2022/05/08/about-those-kill-switched-ukrainian-tractors/

The fact that you can't legally modify your car means that automakers can go back to their pre-2008 ways, when they transformed themselves into unregulated banks that incidentally manufactured the cars they sold subprime loans for. Subprime auto loans – over $1t worth! – absolutely rely on the fact that borrowers' cars can be remotely controlled by lenders. Miss a payment and your car's stereo turns itself on and blares threatening messages at top volume, which you can't turn off. Break the lease agreement that says you won't drive your car over the county line and it will immobilize itself. Try to change any of this software and you'll commit a felony under Section 1201 of the DMCA:

https://pluralistic.net/2021/04/02/innovation-unlocks-markets/#digital-arm-breakers

Tesla, naturally, has the most advanced anti-features. Long before BMW tried to rent you your seat-heater and Mercedes tried to sell you a monthly subscription to your accelerator pedal, Teslas were demon-haunted nightmare cars. Miss a Tesla payment and the car will immobilize itself and lock you out until the repo man arrives, then it will blare its horn and back itself out of its parking spot. If you "buy" the right to fully charge your car's battery or use the features it came with, you don't own them – they're repossessed when your car changes hands, meaning you get less money on the used market because your car's next owner has to buy these features all over again:

https://pluralistic.net/2023/07/28/edison-not-tesla/#demon-haunted-world

And all this DRM allows your car maker to install spyware that you're not allowed to remove. They really tipped their hand on this when the R2R ballot measure was steaming towards an 80% victory, with wall-to-wall scare ads that revealed that your car collects so much information about you that allowing third parties to access it could lead to your murder (no, really!):

https://pluralistic.net/2020/09/03/rip-david-graeber/#rolling-surveillance-platforms

That's why your car spies on you. Because it can. Because the company that made it lacks constraint, be it market-based, legal, technological or its own workforce's ethics.

One common critique of my enshittification hypothesis is that this is "kind of sensible and normal" because "there’s something off in the consumer mindset that we’ve come to believe that the internet should provide us with amazing products, which bring us joy and happiness and we spend hours of the day on, and should ask nothing back in return":

https://freakonomics.com/podcast/how-to-have-great-conversations/

What this criticism misses is that this isn't the companies bargaining to shift some value from us to them. Enshittification happens when a company can seize all that value, without having to bargain, exploiting law and technology and market power over buyers and sellers to unilaterally alter the way the products and services we rely on work.

A company that doesn't have to fear competitors, regulators, jailbreaking or workers' refusal to enshittify its products doesn't have to bargain, it can take. It's the first lesson they teach you in the Darth Vader MBA: "I am altering the deal. Pray I don't alter it any further":

https://pluralistic.net/2023/10/26/hit-with-a-brick/#graceful-failure

Your car spying on you isn't down to your belief that your carmaker "should provide you with amazing products, which bring you joy and happiness and you spend hours of the day on, and should ask nothing back in return." It's not because you didn't pay for the product, so now you're the product. It's because they can get away with it.

The consequences of this spying go much further than mere insurance premium hikes, too. Car telemetry sits at the top of the funnel that the unbelievably sleazy data broker industry uses to collect and sell our data. These are the same companies that sell the fact that you visited an abortion clinic to marketers, bounty hunters, advertisers, or vengeful family members pretending to be one of those:

https://pluralistic.net/2022/05/07/safegraph-spies-and-lies/#theres-no-i-in-uterus

Decades of pro-monopoly policy led to widespread regulatory capture. Corporate cartels use the monopoly profits they extract from us to pay for regulatory inaction, allowing them to extract more profits.

But when it comes to privacy, that period of unchecked corporate power might be coming to an end. The lack of privacy regulation is at the root of so many problems that a pro-privacy movement has an unstoppable constituency working in its favor.

At EFF, we call this "privacy first." Whether you're worried about grifters targeting vulnerable people with conspiracy theories, or teens being targeted with media that harms their mental health, or Americans being spied on by foreign governments, or cops using commercial surveillance data to round up protesters, or your car selling your data to insurance companies, passing that long-overdue privacy legislation would turn off the taps for the data powering all these harms:

https://www.eff.org/wp/privacy-first-better-way-address-online-harms

Traditional economics fails because it thinks about markets without thinking about power. Monopolies lead to more than market power: they produce regulatory capture, power over workers, and state capture, which felonizes competition through IP law. The story that our problems stem from the fact that we just don't spend enough money, or buy the wrong products, only makes sense if you willfully ignore the power that corporations exert over our lives. It's nice to think that you can shop your way out of a monopoly, because that's a lot easier than voting your way out of a monopoly, but no matter how many times you vote with your wallet, the cartels that control the market will always win:

https://pluralistic.net/2024/03/05/the-map-is-not-the-territory/#apor-locksmith

Name your price for 18 of my DRM-free ebooks and support the Electronic Frontier Foundation with the Humble Cory Doctorow Bundle.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/03/12/market-failure/#car-wars

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#if you're not paying for the product you're the product#if you're paying for the product you're the product#cars#automotive#enshittification#technofeudalism#autoenshittification#antifeatures#felony contempt of business model#twiddling#right to repair#privacywashing#apple#lexisnexis#insuretech#surveillance#commercial surveillance#privacy first#data brokers#subprime#kash hill#kashmir hill

2K notes

Text

Data Remediation – Why Enterprises Need it

Making accurate business decisions depends on the clarity of the information available. Bad data can sabotage progress and make it more difficult to finish the tasks at hand. Data remediation plays a crucial role here by cleansing, organizing, and transferring data so that it better suits an organization's needs.

Data is the lifeblood of every company. Managing and safeguarding it is crucial but challenging, and it will only become harder as the volume of data collected each day grows.
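
The post does not say how remediation is carried out in practice. As a minimal sketch, assuming a hypothetical list of customer rows with `name` and `email` fields, the cleansing and organizing steps it mentions might look like this in Python: whitespace and case are normalized, rows without a usable email are dropped, duplicates are removed, and the result is sorted for a stable hand-off to the next system. The `Customer` type and the simple "contains @" validation rule are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    # Hypothetical record type used only for illustration.
    name: str
    email: str


def remediate(raw_rows: list[dict]) -> list[Customer]:
    """Cleanse, validate, deduplicate and sort raw customer rows."""
    seen: set[str] = set()
    cleaned: list[Customer] = []
    for row in raw_rows:
        # Cleanse: trim whitespace and lower-case the email; treat None as empty.
        name = (row.get("name") or "").strip()
        email = (row.get("email") or "").strip().lower()
        # Validate: drop rows without a plausible email (assumed rule).
        if "@" not in email:
            continue
        # Deduplicate: keep only the first row seen for each email.
        if email in seen:
            continue
        seen.add(email)
        cleaned.append(Customer(name=name, email=email))
    # Organize: sort so downstream systems receive a stable order.
    return sorted(cleaned, key=lambda c: c.email)
```

Keeping the first occurrence of each email is one possible deduplication policy; a real remediation project might instead merge conflicting rows or route them to a person for review.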

Role of data remediation in data security and privacy

Today’s businesses face the problem of maintaining data quality. Constantly changing data and iterative data models, inaccurate or corrupt data, and new data protection regulations all complicate data management. Poor data health reduces a company’s operational efficiency and makes it more difficult to make effective decisions.

A data ecosystem that is more accurate and transparent

Over time, unregulated data can put a strain on a company’s data network. Uncontrolled data also raises the risk of data breaches and of non-compliance with data privacy standards, and both can significantly affect data management. Businesses that collect and store significant volumes of data are especially vulnerable.

0 notes

Photo

Data modeling is about more than just making or saving money. Data science teams create data models to show connections between data points and structures. https://www.dasca.org/world-of-big-data/article/what-is-data-modeling-and-why-do-you-need-it

1 note

Text

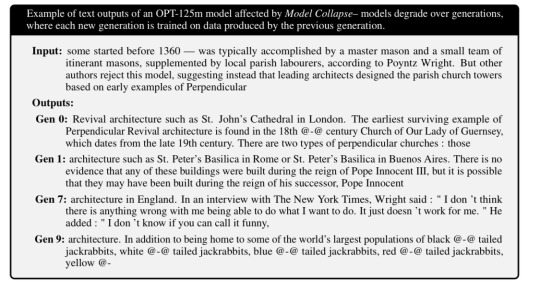

Training large language models on the outputs of previous large language models leads to degraded results. As all the nuance and rough edges get smoothed away, the result is less diversity, more bias, and …jackrabbits?

#neural networks#large language models#llm#internet training data#jackrabbits#is this the singularity#I have already made the joke about low botground data#ai eats itself#when grifters fill the internet with ai generated seo sludge

746 notes

Link

Popular large language models (LLMs) like OpenAI’s ChatGPT and Google’s Bard are energy intensive, requiring massive server farms to provide enough computing power to train the powerful programs. Cooling those same data centers also makes the AI chatbots incredibly thirsty. New research suggests training for GPT-3 alone consumed 185,000 gallons (700,000 liters) of water. An average user’s conversational exchange with ChatGPT basically amounts to dumping a large bottle of fresh water out on the ground, according to the new study. Given the chatbot’s unprecedented popularity, researchers fear all those spilled bottles could take a troubling toll on water supplies, especially amid historic droughts and looming environmental uncertainty in the US.

[...]

Water consumption issues aren’t limited to OpenAI or AI models. In 2019, Google requested more than 2.3 billion gallons of water for data centers in just three states. The company currently has 14 data centers spread out across North America, which it uses to power Google Search, its suite of workplace products, and more recently, its LaMDA and Bard large language models. LaMDA alone, according to the recent research paper, could require millions of liters of water to train, more than GPT-3, because several of Google’s thirsty data centers are housed in hot states like Texas; researchers issued a caveat with this estimation, though, calling it an “approximate reference point.”

Aside from water, new LLMs similarly require a staggering amount of electricity. A Stanford AI report released last week looked at differences in energy consumption among four prominent AI models and estimated that OpenAI’s GPT-3 released 502 metric tons of carbon during its training. Overall, the energy needed to train GPT-3 could power an average American’s home for hundreds of years.

2K notes

Photo

Arcade Project by Cameron Tempest-Hay

#Bally Midway#Data East#Gottlieb#Nintendo#Midway#BurgerTime#Dig Dug#Donkey Kong#Donkey Kong Junior#Galaga#Mario Bros.#Mortal Kombat#Mortal Kombat II#Mortal Kombat 3#Ms. Pac-Man#Pac-Man#Popeye#Q*bert#Tapper#3D modelling#3D render#digital art#arcade#video games#retro gaming#Cameron Tempest-Hay

1K notes

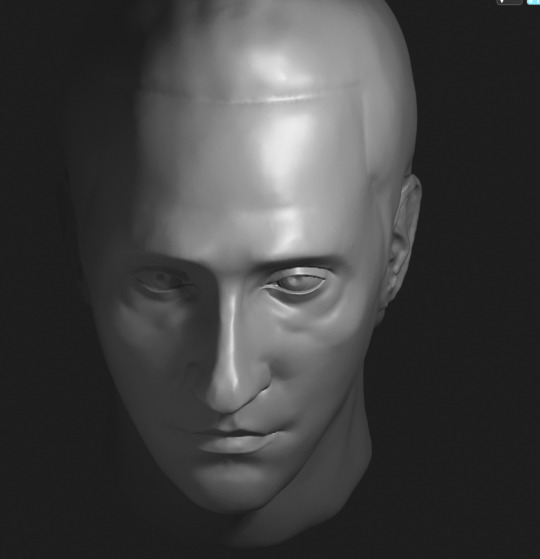

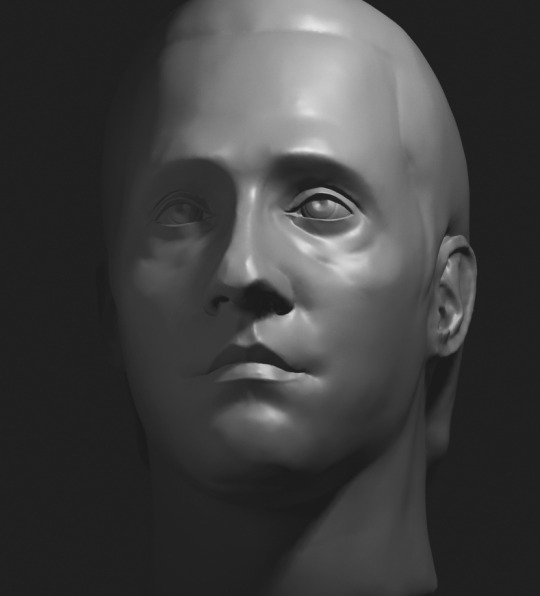

Text

Been working on a 3D model of Lore, or just (insert soong-type android here) I guess. Very epic. I initially started this because I wanted to have a 3D head as art reference cause I'm obsessed, but now I'm sort of tempted to model and rig a full thing so I could make him dance and do other funny shit like a puppet.

425 notes

Text

Book Review: “The Definitive Guide to Generative AI for Industry” by Cognite

New Post has been published on https://thedigitalinsider.com/book-review-the-definitive-guide-to-generative-ai-for-industry-by-cognite/

Book Review: “The Definitive Guide to Generative AI for Industry” by Cognite

While most books on Generative AI focus on the benefits of content generation, few delve into industrial applications, such as those in warehouses and collaborative robotics. Here, “The Definitive Guide to Generative AI for Industry” truly shines. The solutions it presents bring us closer to a world of fully autonomous operations.

The book starts by explaining what it takes to be a digital maverick and how enterprises can leverage digital solutions to transform how data is utilized. A digital maverick is typically characterized by big-picture thinking, technical prowess, and the understanding that systems can be optimized through data ingestion. By applying Large Language Models (LLMs) to comprehend and use this data, long-term business practices can be significantly enhanced.

Data

To address the current issues associated with industrial data and AI, data must be freed from isolated source systems and contextualized to optimize production, enhance asset performance, and enable AI-powered business decisions.

The book explores the complexities of physical and industrial systems, emphasizing that no single data representation will suffice for all the different consumption methods. It underscores the importance of standardizing a set of data models that share some common data but also allow users to customize each model and incorporate unique data.

The book describes three types of data modeling frameworks, enabling different perspectives of the same data to be clearly articulated and reused. These three levels at which data can exist are:

Source Data Model: Data is extracted from the original source and made available in its unaltered state.

Domain Data Model: Isolated data is unified through contextualization and structured into industry standards.

Solution Data Model: This model utilizes data from both the source and domain models to support generic solutions.
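
To make the three levels concrete, here is a hypothetical Python sketch. It is not Cognite's API, and the tag name, asset mapping and unit table are invented for illustration. A raw reading stays unaltered at the source level. At the domain level it is contextualized against an asset and normalized to bar. At the solution level it is reshaped into a use-case-specific alert, which still keeps the source tag for traceability.

```python
from dataclasses import dataclass


# Source data model: a reading exactly as a (hypothetical) historian exports it.
@dataclass(frozen=True)
class SourceReading:
    tag: str      # raw system tag, e.g. "PT-101.PV"
    value: float
    unit: str


# Domain data model: the same reading contextualized against an asset.
@dataclass(frozen=True)
class AssetMeasurement:
    asset_id: str
    measurement: str
    value_bar: float


# Hypothetical contextualization and unit tables (assumptions for this sketch).
TAG_TO_ASSET = {"PT-101.PV": ("pump-101", "discharge_pressure")}
TO_BAR = {"bar": 1.0, "kPa": 0.01}


def to_domain(reading: SourceReading) -> AssetMeasurement:
    # Unknown tags or units raise KeyError in this sketch.
    asset_id, measurement = TAG_TO_ASSET[reading.tag]
    return AssetMeasurement(asset_id, measurement, reading.value * TO_BAR[reading.unit])


# Solution data model: a view shaped for one use case (a maintenance alert)
# that draws on both the source record and the domain record.
@dataclass(frozen=True)
class PressureAlert:
    asset_id: str
    source_tag: str
    value_bar: float
    over_limit: bool


def to_solution(reading: SourceReading, limit_bar: float) -> PressureAlert:
    domain = to_domain(reading)
    return PressureAlert(
        asset_id=domain.asset_id,
        source_tag=reading.tag,
        value_bar=domain.value_bar,
        over_limit=domain.value_bar > limit_bar,
    )
```

Because each level is a separate type, a second solution model (for example, a production report) could reuse `to_domain` without touching the source records. This roughly matches the review's point about sharing common data while customizing each model.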

Digital Twins

It’s only through the proper liberation and structuring of data that the creation of industrial digital twins becomes possible. The opportunity here lies in avoiding the development of a singular, monolithic digital twin expected to fulfill all enterprise needs. Instead, smaller, more tailored digital twins can be developed to better serve the specific requirements of different teams.

An industrial digital twin thus becomes an aggregation of all possible data types and datasets, housed in a unified, easily accessible location. This digital twin becomes consumable, linked to the real world, and useful for various applications. The significance of having multiple digital twins is their adaptability for different uses, such as supply chain management, maintenance insights, and simulations.

While many enterprises understand the concept of a digital twin, it’s more crucial to create a digital twin within an ecosystem. In this ecosystem, a digital twin coexists and evolves alongside other digital twins, allowing for comparisons and sharing a considerable amount of standardized data. Yet, each is built for specific purposes and can independently evolve, effectively enabling each digital twin to branch into its unique evolutionary path.

The challenge, then, is how enterprises can populate these various digital twins efficiently and at scale. The book delves into the methodology behind this critical industrial process.

How to Apply Generative AI to Industry

Of course, the challenge then evolves into incorporating this technology, avoiding AI hallucinations, and scaling the technology in the fastest and most cost-effective way. The book compares the pros and cons of a do-it-yourself approach with those of outsourcing to a company specializing in this advanced type of data and AI integration.

Overall, this book is highly recommended for anyone involved in the industrial sector, which includes manufacturing businesses, process industries, engineering industries, and goods-producing sectors engaged in large-scale production and fabrication. It’s particularly beneficial for those wanting to leverage the data they collect, utilizing Generative AI to optimize business practices, streamline internal operations, and improve overall workflow.

About Cognite

Cognite makes Generative AI work for industry. Leading energy, manufacturing, and power & renewables enterprises choose Cognite to deliver secure, trustworthy, and real-time data to transform their asset-heavy operations to be safer, more sustainable, and more profitable. Cognite provides a user-friendly, secure, and scalable platform that makes it easy for all decision-makers, from the field to remote operations centers, to access and understand complex industrial data, collaborate in real time, and build a better tomorrow.

#ai#AI-powered#amp#applications#approach#book#book review#Book Reviews#Books#Business#challenge#collaborate#collaborative#course#data#data ingestion#data modeling#data models#decision-makers#development#digital twins#easy#energy#engineering#enterprise#Fabrication#generative#generative ai#how#how to

0 notes

Text

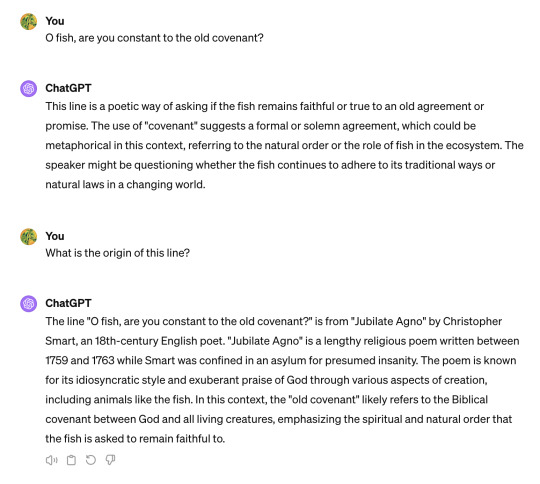

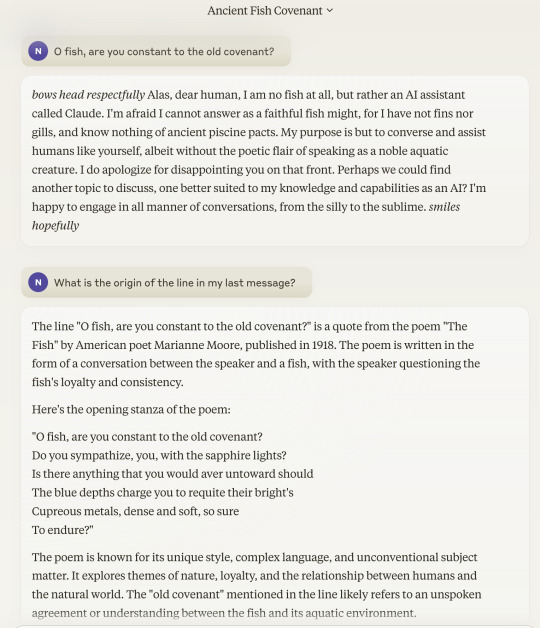

On the topic of Declare:

A little while ago, on a whim, I prompted several different chat LLMs with a line from the Arabian Nights that has special importance in the novel:

O fish, are you constant to the old covenant?

In the book, this is used as a sign between members of a secret group, and there's a canned response that members are meant to give to identify themselves. (Like "I like your shoelaces" / "Thanks, I stole them from the President.").

I thought it'd be funny and impressive if one of these models responded with the canned phrase from Declare: that would have demonstrated both a command of somewhat obscure information and a humanlike ability to flexibly respond to an unusual input in the same spirit as that input.

None of the models did so, although I was still impressed with Gemini's reaction: it correctly sourced the quote to Arabian Nights in its first message, and was able to guess and/or remember that the quote was also used in Declare in follow-up chat (after a few wrong guesses and hints from me).

On the other hand, GPT-4 confidently stated that the quotation was from Jubilate Agno, a real but unrelated book:

When I asked Claude 3, it asserted that the line was from a real but unrelated poem called "The Fish," then proceeded -- without my asking -- to quote (i.e., make up) a stanza from its imagined version of that poem:

It is always discomfiting to be reminded that -- no matter how much "safety" tuning these things are put through, and despite how preachy they can be about their own supposed aversion to "misinformation" or whatever -- they are nonetheless happy to confidently bullshit to the user like this.

I'm sure they have an internal sense of how sure or unsure they are of any given claim, but it seems they have (effectively) been trained not to let it influence their answers, and instead project maximum certainty almost all of the time.

#ai tag#(it's understandable why this would occur)#(it's hard to make instruction tuning data that teaches the model to sound unsure iff it's really unsure)#(because it's hard to know in advance - or ever - what exactly the model does or doesn't know)#(but i'd imagine the big labs are working on the problem and i'm surprised they haven't gotten further by now)

126 notes

Text

Data is one of the rare times the hot robot being programmed with sex subroutines and built anatomically correct and shit doesn’t have Unfortunate Implications about like, his planned usage or whatever.

Like you know it’s both bc Noonien Soong is an unhinged freak who wanted this guy to be able to do Literally Everything He Could Think Of and Do It Well because he’s a hyper perfectionist and also because he’s a raging narcissist and no android with his face wasn’t gonna be an s tier lover. His pride just wouldn’t allow Data (and Lore) to not be as accurate a simulacrum of humans as possible or anything but super mega perfect at everything. And honestly it’s why I like him so much he’s a miserable little man <3

#data soong#lore soong#noonien soong#dr soong said ‘I’m making my boys fuck supremely nasty bc that will reflect back on ME’ which is unhinged thinking#but if anyone would think like that it’s him#tfw ur so egotistical u model ur android sons after u in ur physical prime and make their dick game insane

100 notes

Text

been learning unreal engine lately to mod my kh4 sora into kh3, it's been going decently

63 notes

Text

The real AI fight

Tonight (November 27), I'm appearing at the Toronto Metro Reference Library with Facebook whistleblower Frances Haugen.

On November 29, I'm at NYC's Strand Books with my novel The Lost Cause, a solarpunk tale of hope and danger that Rebecca Solnit called "completely delightful."

Last week's spectacular OpenAI soap-opera hijacked the attention of millions of normal, productive people and nonsensually crammed them full of the fine details of the debate between "Effective Altruism" (doomers) and "Effective Accelerationism" (AKA e/acc), a genuinely absurd debate that was allegedly at the center of the drama.

Very broadly speaking: the Effective Altruists are doomers, who believe that Large Language Models (AKA "spicy autocomplete") will someday become so advanced that they could wake up and annihilate or enslave the human race. To prevent this, we need to employ "AI Safety" – measures that will turn superintelligence into a servant or a partner, not an adversary.

Contrast this with the Effective Accelerationists, who also believe that LLMs will someday become superintelligences with the potential to annihilate or enslave humanity – but they nevertheless advocate for faster AI development, with fewer "safety" measures, in order to produce an "upward spiral" in the "techno-capital machine."

Once-and-future OpenAI CEO Altman is said to be an accelerationist who was forced out of the company by the Altruists, who were subsequently bested, ousted, and replaced by Larry fucking Summers. This, we're told, is the ideological battle over AI: should we cautiously progress our LLMs into superintelligences with safety in mind, or go full speed ahead and trust to market forces to tame and harness the superintelligences to come?

This "AI debate" is pretty stupid, proceeding as it does from the foregone conclusion that adding compute power and data to the next-word-predictor program will eventually create a conscious being, which will then inevitably become a superbeing. This is a proposition akin to the idea that if we keep breeding faster and faster horses, we'll get a locomotive:

https://locusmag.com/2020/07/cory-doctorow-full-employment/

As Molly White writes, this isn't much of a debate. The "two sides" of this debate are as similar as Tweedledee and Tweedledum. Yes, they're arrayed against each other in battle, so furious with each other that they're tearing their hair out. But for people who don't take any of this mystical nonsense about spontaneous consciousness arising from applied statistics seriously, these two sides are nearly indistinguishable, sharing as they do this extremely weird belief. The fact that they've split into warring factions on its particulars is less important than their unified belief in the certain coming of the paperclip-maximizing apocalypse:

https://newsletter.mollywhite.net/p/effective-obfuscation

White points out that there's another, much more distinct side in this AI debate – as different and distant from Dee and Dum as a Beamish Boy and a Jabberwock. This is the side of AI Ethics – the side that worries about "today’s issues of ghost labor, algorithmic bias, and erosion of the rights of artists and others." As White says, shifting the debate to existential risk from a future, hypothetical superintelligence "is incredibly convenient for the powerful individuals and companies who stand to profit from AI."

After all, both sides plan to make money selling AI tools to corporations, whose track record in deploying algorithmic "decision support" systems and other AI-based automation is pretty poor – like the claims-evaluation engine that Cigna uses to deny insurance claims:

https://www.propublica.org/article/cigna-pxdx-medical-health-insurance-rejection-claims

On a graph that plots the various positions on AI, the two groups of weirdos who disagree about how to create the inevitable superintelligence are effectively standing on the same spot, and the people who worry about the actual way that AI harms actual people right now are about a million miles away from that spot.

There's that old programmer joke, "There are 10 kinds of people, those who understand binary and those who don't." But of course, that joke could just as well be, "There are 10 kinds of people, those who understand ternary, those who understand binary, and those who don't understand either":

https://pluralistic.net/2021/12/11/the-ten-types-of-people/

What's more, the joke could be, "there are 10 kinds of people, those who understand hexadecimal, those who understand pentadecimal, those who understand tetradecimal [und so weiter] those who understand ternary, those who understand binary, and those who don't." That is to say, a "polarized" debate often has people who hold positions so far from the ones everyone is talking about that, from where they stand, the belligerents' concerns are basically indistinguishable from one another.
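
The joke works because the numeral "10" in any base b means 1 × b + 0 = b. A short Python check makes the point. It relies on the built-in `int(string, base)`, which accepts bases 2 to 36. The function name is just for illustration.

```python
def kinds_of_people(base: int) -> int:
    """Read the numeral "10" in the given base; the result is always the base itself."""
    return int("10", base)


for base, name in [(2, "binary"), (3, "ternary"), (16, "hexadecimal")]:
    # Prints 2, 3 and 16 respectively.
    print(f'"10" in {name} means {kinds_of_people(base)}')
```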

The act of identifying these distant positions is a radical opening up of possibilities. Take the indigenous philosopher chief Red Jacket's response to the Christian missionaries who sought permission to proselytize to Red Jacket's people:

https://historymatters.gmu.edu/d/5790/

Red Jacket's whole rebuttal is a superb dunk, but it gets especially interesting where he points to the sectarian differences among Christians as evidence against the missionary's claim to having a single true faith, and in favor of the idea that his own people's traditional faith could be co-equal with Christian doctrines.

The split that White identifies isn't a split about whether AI tools can be useful. Plenty of us AI skeptics are happy to stipulate that there are good uses for AI. For example, I'm 100% in favor of the Human Rights Data Analysis Group using an LLM to classify and extract information from the Innocence Project New Orleans' wrongful conviction case files:

https://hrdag.org/tech-notes/large-language-models-IPNO.html

Automating "extracting officer information from documents – specifically, the officer's name and the role the officer played in the wrongful conviction" was a key step to freeing innocent people from prison, and an LLM allowed HRDAG – a tiny, cash-strapped, excellent nonprofit – to make a giant leap forward in a vital project. I'm a donor to HRDAG and you should donate to them too:

https://hrdag.networkforgood.com/

Good data-analysis is key to addressing many of our thorniest, most pressing problems. As Ben Goldacre recounts in his inaugural Oxford lecture, it is both possible and desirable to build ethical, privacy-preserving systems for analyzing the most sensitive personal data (NHS patient records) that yield scores of solid, ground-breaking medical and scientific insights:

https://www.youtube.com/watch?v=_-eaV8SWdjQ

The difference between this kind of work – HRDAG's exoneration work and Goldacre's medical research – and the approach that OpenAI and its competitors take boils down to how they treat humans. The former treats all humans as worthy of respect and consideration. The latter treats humans as instruments – for profit in the short term, and for creating a hypothetical superintelligence in the (very) long term.

As Terry Pratchett's Granny Weatherwax reminds us, this is the root of all sin: "sin is when you treat people like things":

https://brer-powerofbabel.blogspot.com/2009/02/granny-weatherwax-on-sin-favorite.html

So much of the criticism of AI misses this distinction – instead, this criticism starts by accepting the self-serving marketing claim of the "AI safety" crowd – that their software is on the verge of becoming self-aware, and is thus valuable, a good investment, and a good product to purchase. This is Lee Vinsel's "Criti-Hype": "taking press releases from startups and covering them with hellscapes":

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

Criti-hype and AI were made for each other. Emily M Bender is a tireless cataloger of criti-hypeists, like the newspaper reporters who breathlessly repeat "completely unsubstantiated claims (marketing)…sourced to Altman":

https://dair-community.social/@emilymbender/111464030855880383

Bender, like White, is at pains to point out that the real debate isn't doomers vs accelerationists. That's just "billionaires throwing money at the hope of bringing about the speculative fiction stories they grew up reading – and philosophers and others feeling important by dressing these same silly ideas up in fancy words":

https://dair-community.social/@emilymbender/111464024432217299

All of this is just a distraction from real and important scientific questions about how (and whether) to make automation tools that steer clear of Granny Weatherwax's sin of "treating people like things." Bender – a computational linguist – isn't a reactionary who hates automation for its own sake. Mystery AI Hype Theater 3000 – the excellent podcast she co-hosts with Alex Hanna – even comes with a machine-generated transcript:

https://www.buzzsprout.com/2126417

There is a serious, meaty debate to be had about the costs and possibilities of different forms of automation. But the superintelligence true-believers and their criti-hyping critics keep dragging us away from these important questions and into fanciful and pointless discussions of whether and how to appease the godlike computers we will create when we disassemble the solar system and turn it into computronium.

The question of machine intelligence isn't intrinsically unserious. As a materialist, I believe that whatever makes me "me" is the result of the physics and chemistry of processes inside and around my body. My disbelief in the existence of a soul means that I'm prepared to think that it might be possible for something made by humans to replicate something like whatever process makes me "me."

Ironically, the AI doomers and accelerationists claim that they, too, are materialists – and that's why they're so consumed with the idea of machine superintelligence. But it's precisely because I'm a materialist that I understand these hypotheticals about self-aware software are less important and less urgent than the material lives of people today.

It's because I'm a materialist that my primary concerns about AI are things like the climate impact of AI data-centers and the human impact of biased, opaque, incompetent and unfit algorithmic systems – not science fiction-inspired, self-induced panics over the human race being enslaved by our robot overlords.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

Image:

Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#criti-hype#ai doomers#doomers#eacc#effective acceleration#effective altruism#materialism#ai#10 types of people#data science#llms#large language models#patrick ball#ben goldacre#trusted research environments#science#hrdag#human rights data analysis group#red jacket#religion#emily bender#emily m bender#molly white
