#feature engineering in machine learning


Explore What is Feature Engineering in Machine Learning

Summary: Feature engineering in Machine Learning is pivotal for refining raw data into predictive insights. Techniques like extraction, selection, transformation, and handling of missing data optimise model performance, ensuring accurate predictions. It bridges data intricacies, empowering practitioners to uncover meaningful patterns and drive informed decision-making in various domains.

Introduction

In today's data-driven era, Machine Learning has become pivotal across industries, revolutionising decision-making and automation. Feature Engineering in Machine Learning is at the heart of this transformative technology—an art and science that involves crafting data features to maximise model performance.

This blog explores the crucial role of Feature Engineering in Machine Learning, highlighting its significance in refining raw data into predictive insights. As businesses strive for a competitive edge through data-driven strategies, understanding how feature engineering enhances Machine Learning models becomes paramount.

Join us as we delve into techniques and strategies that empower Data Scientists to harness the full potential of their data.

What is Machine Learning?

Machine Learning is a branch of artificial intelligence (AI) that empowers systems to learn and improve from experience without explicit programming. It revolves around algorithms enabling computers to learn and automatically make decisions based on data. This section provides a concise definition and explores its core principles and the various paradigms under which Machine Learning operates.

Definition and Core Principles

Machine Learning involves algorithms that learn patterns and insights from data to make predictions or decisions. Unlike traditional programming, where rules are explicitly defined, Machine Learning models are trained using vast amounts of data, allowing them to generalise and improve their performance over time.

The core principles include data-driven learning, iterative improvement, and adaptation to new data.

The common learning paradigms in Machine Learning are:

Supervised Learning: In supervised learning, algorithms learn from labelled data, where each input-output pair is explicitly provided during training. The goal is to predict outputs for new, unseen data based on patterns learned from the labelled examples.

Unsupervised Learning: Unsupervised learning involves discovering patterns and structures from unlabeled data. Algorithms here aim to find inherent relationships or groupings in the data without explicit guidance.

Reinforcement Learning: This paradigm focuses on learning optimal decision-making through interaction with an environment. Algorithms learn to achieve a goal or maximise a reward by taking actions and receiving feedback or reinforcement signals.

Each paradigm serves distinct purposes in solving different types of problems, contributing to the versatility and applicability of Machine Learning across various fields.

What is Feature Engineering in Machine Learning?

Feature engineering in Machine Learning refers to selecting, transforming, and creating the features (variables) from raw data that are most relevant to a predictive modelling task. It involves crafting the right set of input variables to improve model accuracy and performance.

This process aims to highlight meaningful patterns in the data and enhance the predictive power of Machine Learning algorithms.

Importance of Feature Selection and Transformation

Effective feature selection and transformation are crucial steps in the data preprocessing pipeline of Machine Learning. By selecting the most relevant features, the model becomes more focused on the essential patterns within the data, reducing noise and improving generalisation.

Transformation techniques such as normalisation or scaling ensure that features are on a comparable scale, preventing biases in model training. These processes not only streamline the learning process for Machine Learning algorithms but also contribute significantly to the interpretability and efficiency of the models.

Feature engineering bridges raw data and accurate predictions, laying the foundation for successful Machine Learning applications. By carefully curating features through selection and transformation, Data Scientists can uncover deeper insights and build robust models that perform well across diverse datasets and real-world scenarios.

Importance of Feature Engineering in Machine Learning

In Machine Learning, the quality of features (the inputs used to train models) profoundly influences predictive accuracy and performance. Effective feature engineering also enhances the interpretability of models and facilitates better decision-making based on data-driven insights.

Impact on Model Performance

Quality features serve as the foundation for Machine Learning algorithms. By carefully selecting and transforming these features, practitioners can significantly improve model performance.

For instance, in a predictive model for customer churn, customer demographics, purchase history, and interaction frequency can be engineered to capture nuanced behaviours that correlate strongly with churn likelihood. This targeted feature engineering refines the model's ability to distinguish between churners and loyal customers and enhances its predictive power.

Examples of Enhanced Predictive Models

Consider a fraud detection system where features like transaction amount, location, and time are engineered to extract additional information, such as transaction frequency patterns or deviations from typical behaviour. By leveraging these engineered features, the model can more accurately identify suspicious activities, reducing false positives and improving overall detection rates.
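To make the idea of a "deviation from typical behaviour" feature concrete, here is a minimal sketch in plain Python. The transactions, the account IDs, and the function name `add_deviation_feature` are all hypothetical and chosen only for illustration. The sketch scores each amount by how many standard deviations it sits from that account's own average.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical transactions: (account_id, amount)
transactions = [
    ("a1", 20.0), ("a1", 22.0), ("a1", 18.0), ("a1", 200.0),
    ("b7", 500.0), ("b7", 520.0), ("b7", 480.0),
]

def add_deviation_feature(rows):
    """Return (account, amount, z_score) tuples, where z_score is the
    distance of the amount from that account's mean in standard deviations."""
    by_account = defaultdict(list)
    for account, amount in rows:
        by_account[account].append(amount)

    # Per-account mean and population standard deviation
    stats = {acc: (mean(vals), pstdev(vals)) for acc, vals in by_account.items()}

    out = []
    for account, amount in rows:
        mu, sigma = stats[account]
        z = (amount - mu) / sigma if sigma > 0 else 0.0
        out.append((account, amount, z))
    return out

features = add_deviation_feature(transactions)
```

The 200.0 payment on account `a1` stands out with a much higher score than the rest, while a 500.0 payment on `b7` looks ordinary because that account always spends at that level. A production system would more likely compute the statistics from past transactions only, so that the transaction being scored does not influence its own baseline.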

Investing time and effort in feature engineering is crucial for building robust Machine Learning models that deliver actionable insights and drive informed decision-making across various domains.

Common Techniques in Feature Engineering in Machine Learning

Feature engineering is a cornerstone in developing robust Machine Learning models, where the quality and relevance of features directly impact model performance and predictive accuracy. This section explores several essential techniques in feature engineering that transform raw data into meaningful insights for Machine Learning algorithms.

Feature Extraction

Feature extraction transforms raw data into a structured format that facilitates model learning and improves predictive capabilities. In text mining, data is processed to extract critical features such as word frequencies or semantic meanings using natural language processing (NLP) techniques. Similarly, in image processing, features like edges or textures are extracted to describe visual content.

Feature Selection

Effective feature selection enhances model performance by focusing on the most relevant features while mitigating computational complexity and overfitting risks. Methods include filter methods that assess feature relevance based on statistical measures, wrapper methods that evaluate subsets based on model performance, and embedded methods that integrate feature selection into model training.

Feature Transformation

Feature transformation techniques preprocess data to improve model interpretability and performance. Normalisation scales numerical features to a standard range, standardisation adjusts features to have a mean of zero and a standard deviation of one, and log transforms adjust skewed data distributions to improve model fit.
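The three transformations named above can be sketched in a few lines of standard-library Python. The function names and the `incomes` sample are illustrative assumptions; in practice a library such as scikit-learn (for example its `MinMaxScaler` and `StandardScaler`) would typically do this work.

```python
import math
from statistics import mean, pstdev

def min_max_scale(values):
    """Normalisation: rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def standardise(values):
    """Standardisation: shift to mean 0 and scale to standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

def log_transform(values):
    """Log transform: compress a long right tail. log1p(x) = log(1 + x),
    so zero values are handled without error."""
    return [math.log1p(v) for v in values]

# Hypothetical, heavily skewed sample
incomes = [20_000, 25_000, 30_000, 40_000, 1_000_000]

scaled = min_max_scale(incomes)
standardised = standardise(incomes)
logged = log_transform(incomes)
```

With a single extreme value in the sample, min-max scaling squeezes the four ordinary incomes into a narrow band near zero, whereas the log transform keeps them distinguishable. That is one reason a log transform is often tried first on skewed data.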

Handling Missing Data

Handling missing data is essential to maintain data integrity and ensure robust model performance. Techniques include imputation methods that replace missing values with substitutes like the mean or median, deletion of instances with extensive missing data, and advanced techniques such as predictive modelling to estimate missing values based on other features.
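As a rough illustration of the simplest option, mean or median imputation, the sketch below fills gaps in a single column. It assumes missing values are represented as `None`; the function name `impute` and the `ages` data are hypothetical.

```python
from statistics import mean, median

def impute(values, strategy="median"):
    """Replace None entries with the mean or median of the observed values."""
    if strategy not in ("mean", "median"):
        raise ValueError(f"unknown strategy: {strategy!r}")
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = median(observed) if strategy == "median" else mean(observed)
    return [fill if v is None else v for v in values]

# Hypothetical column with two missing entries and one outlier
ages = [25, None, 40, 31, None, 90]

filled_median = impute(ages)           # fills with 35.5
filled_mean = impute(ages, "mean")     # fills with 46.5
```

The outlier of 90 pulls the mean well above the median here, which is why the median is often the safer default for skewed columns.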

Frequently Asked Questions

What is feature engineering in Machine Learning?

Feature engineering in Machine Learning involves selecting, transforming, and creating data features to enhance model performance and accuracy.

Why is feature engineering important in Machine Learning?

Effective feature engineering refines data insights, improves model interpretability, and boosts predictive accuracy across diverse datasets.

What are standard techniques in feature engineering?

Techniques include feature extraction, selection, transformation, and handling of missing data, all crucial for optimising Machine Learning models.

Conclusion

Feature engineering is indispensable in Machine Learning, transforming raw data into meaningful features that amplify model performance. By meticulously selecting and refining data inputs, practitioners enhance predictive accuracy and ensure robustness across various applications.

The strategic crafting of features improves model efficiency. It facilitates better decision-making through actionable insights derived from comprehensive data analysis. As businesses increasingly rely on data-driven strategies, mastering feature engineering remains a pivotal skill for unlocking the full potential of Machine Learning in solving complex real-world problems.

#feature engineering#feature engineering in machine learning#machine learning#data science#engineering


Optimizing AI Model Development with AutoML Technologies

In today's data-driven world, the development of artificial intelligence (AI) plays a central role in improving business processes and decisions. AutoML (Automated Machine Learning) technologies offer innovative approaches to optimizing AI model development by reducing manual effort and increasing efficiency. In this article we will…

#Automatisierung#AutoML#Effizienzsteigerung#Feature Engineering#Geschäftsprozesse#Innovation#Innovationen#KI-Modelle#Machine Learning#Random Forests#RPA


Feature Engineering Techniques To Supercharge Your Machine Learning Algorithms

Are you ready to take your machine learning algorithms to the next level? If so, get ready because we’re about to dive into the world of feature engineering techniques that will supercharge your models like never before. Feature engineering is the secret sauce behind successful data science projects, allowing us to extract valuable insights and transform raw data into powerful predictors. In this blog post, we’ll explore some of the most effective and innovative techniques that will help you unlock hidden patterns in your datasets, boost accuracy levels, and ultimately revolutionize your machine learning game. So grab a cup of coffee and let’s embark on this exciting journey together!

Introduction to Feature Engineering

Feature engineering is the process of transforming raw data into features that can be used to train machine learning models. In this blog post, we will explore some common feature engineering techniques and how they can be used to improve the performance of machine learning algorithms.

One of the most important aspects of feature engineering is feature selection, which is the process of selecting the most relevant features from a dataset. This can be done using a variety of methods, including manual selection, statistical methods, and machine learning algorithms.

Once the relevant features have been selected, they need to be transformed into a format that can be used by machine learning algorithms. This may involve scaling numerical values, encoding categorical values as integers, or creating new features based on existing ones.

It is often necessary to split the dataset into training and test sets so that the performance of the machine learning algorithm can be properly evaluated.
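Here is a minimal sketch of such a split, using only the standard library. The helper name `train_test_split`, the 20% test fraction, and the fixed seed are assumptions made for the example.

```python
import random

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and hold out a fraction for testing."""
    rng = random.Random(seed)      # fixed seed so the split is reproducible
    shuffled = list(rows)          # copy, so the caller's list is untouched
    rng.shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

rows = list(range(10))             # stand-in for ten data points
train, test = train_test_split(rows)
```

One design point worth keeping in mind: any statistic used for feature engineering (a scaling mean, an imputation median) is best computed on the training set alone and then applied to the test set, so the evaluation stays honest.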

What is a Feature?

A feature is a characteristic or set of characteristics that describe a data point. In machine learning, features are typically used to represent data points in a dataset. When choosing features for a machine learning algorithm, it is important to select features that are relevant to the task at hand and that can be used to distinguish between different classes of data points.

There are many different ways to engineer features, and the approach that is taken will depend on the type of data being used and the goal of the machine learning algorithm. Some common techniques for feature engineering include:

– Extracting features from text data using natural language processing (NLP) techniques

– Creating new features by combining existing features (e.g., creating interaction terms)

– Transforming existing features to better suit the needs of the machine learning algorithm (e.g., using logarithmic transformations for numerical data)

– Using domain knowledge to create new features that capture important relationships in the data

How Does Feature Engineering Help Boost ML Algorithms?

Feature engineering is the process of using domain knowledge to extract features from raw data that can be used to improve the performance of machine learning algorithms. This process can be used to create new features that better represent the underlying data or to transform existing features so that they are more suitable for use with machine learning algorithms.

The benefits of feature engineering can be seen in both improved model accuracy and increased efficiency. By carefully crafting features, it is possible to reduce the amount of data required to train a machine learning algorithm while also increasing its accuracy. In some cases, good feature engineering can even allow a less powerful machine learning algorithm to outperform a more complex one.

There are many different techniques that can be used for feature engineering, but some of the most common include feature selection, feature transformation, and dimensionality reduction. Feature selection involves choosing which features from the raw data should be used by the machine learning algorithm. Feature transformation involves transforming or changing the values of existing features so that they are more suitable for use with machine learning algorithms. Dimensionality reduction is a technique that can be used to reduce the number of features in the data by combining or eliminating features that are similar or redundant.

Each of these techniques has its own strengths and weaknesses, and there is no single best approach for performing feature engineering. The best approach depends on the specific dataset and machine learning algorithm being used. In general, it is important to try out different techniques and see which ones work best for your particular application.

Types of Feature Engineering Techniques

There are many different types of feature engineering techniques that can be used to improve the performance of machine learning algorithms. Some of the most popular techniques include:

1. Data preprocessing: This technique involves cleaning and preparing the data before it is fed into the machine learning algorithm. This can help to improve the accuracy of the algorithm by removing any noisy or irrelevant data.

2. Feature selection: This technique involves selecting the most relevant features from the data that will be used by the machine learning algorithm. This can help to improve the accuracy of the algorithm by reducing the amount of data that is processed and making sure that only the most important features are used.

3. Feature extraction: This technique involves extracting new features from existing data. This can help to improve the accuracy of the algorithm by providing more information for the algorithm to learn from.

4. Dimensionality reduction: This technique reduces the number of features that are used by the machine learning algorithm. This can help to improve the accuracy of the algorithm by reducing complexity and making sure that only the most important features are used.

– Data Preprocessing

Data preprocessing is a critical step in any machine learning pipeline. It is responsible for cleaning and formatting the data so that it can be fed into the model.

There are a number of techniques that can be used for data preprocessing, but some are more effective than others. Here are a few of the most popular methods:

– Standardization: This technique is used to rescale the data so that it has a mean of 0 and a standard deviation of 1. This is often done before feeding the data into a machine learning algorithm, as it can help the model converge faster.

– Normalization: This technique is used to rescale the data so that each feature is in the range [0, 1]. This is often done before feeding the data into a neural network, as it can help improve convergence.

– One-hot encoding: This technique is used to convert categorical variables into numerical ones. This is often done before feeding the data into a machine learning algorithm, as many models cannot handle categorical variables directly.

– Imputation: This technique is used to replace missing values in the data with something else (usually the mean or median of the column). This is often done before feeding the data into a machine learning algorithm, as many models cannot handle missing values directly.
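Of the methods above, one-hot encoding is the easiest to misread, so here is a small sketch of what it produces. The function name `one_hot` and the `colours` data are made up for the example, and the column order is simply alphabetical.

```python
def one_hot(values):
    """Turn a list of category labels into 0/1 indicator rows.

    Returns the sorted category names (the column order) and one row
    per input value with a single 1 in that value's column.
    """
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        encoded.append(row)
    return categories, encoded

colours = ["red", "green", "blue", "green"]
categories, encoded = one_hot(colours)
# categories -> ['blue', 'green', 'red']
# encoded    -> [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```

Each category gets its own column, so the model never sees an artificial ordering such as blue < green < red, which plain integer encoding would imply.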

– Feature Selection

There are a variety of feature selection techniques that can be used to improve the performance of machine learning algorithms. Some common methods include:

– Filter Methods: Filter methods are based on ranking features according to some criterion and then selecting a subset of the most relevant features. Common criteria used to rank features include information gain, mutual information, and chi-squared statistics.

– Wrapper Methods: Wrapper methods use a machine learning algorithm to evaluate the performance of different feature subsets and choose the best performing subset. This can be computationally expensive but is often more effective than filter methods.

– Embedded Methods: Embedded methods combine feature selection with the training of the machine learning algorithm. The most common embedded method is regularization, which penalizes large model coefficients; with an L1 penalty (as in lasso), the coefficients of features that do not help the prediction are driven to exactly zero, which removes those features from the model.
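To show the shape of a filter method, the sketch below ranks features by the absolute value of their Pearson correlation with the target. Correlation is swapped in here as the ranking criterion purely because it fits in a few lines; it is not one of the criteria listed above, and the feature names and numbers are invented.

```python
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 for a constant column."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5

def rank_features(columns, target):
    """Score every feature independently of any model, best first."""
    scores = {name: abs(pearson(col, target)) for name, col in columns.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical dataset: exam score as the target
columns = {
    "hours_studied": [1, 2, 3, 4, 5, 6],
    "shoe_size":     [42, 38, 44, 39, 41, 40],
    "constant":      [1, 1, 1, 1, 1, 1],
}
target = [52, 55, 61, 64, 70, 73]

ranking = rank_features(columns, target)
top_feature = ranking[0][0]
```

Because each feature is scored on its own, a filter like this is cheap, but it cannot notice features that are only useful in combination. That gap is what wrapper and embedded methods try to cover.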

– Feature Transformation

Feature engineering is the process of creating new features from existing data. This can be done by combining different features, transforming features, or creating new features from scratch.

Feature engineering is a critical step in machine learning because it can help improve the performance of your algorithms. In this blog post, we will discuss some common feature engineering techniques that you can use to supercharge your machine learning algorithms.

One common technique for feature engineering is feature transformation. This involves transforming existing features to create new ones. For example, you could transform a feature such as “age” into a new feature called “age squared”. This would be useful if you were trying to predict something like life expectancy, which often increases with age but then levels off at an older age.

Another common technique is feature selection, which is the process of choosing which features to include in your model. This can be done manually or automatically using a variety of methods such as decision trees or Genetic Algorithms.

Once you have decided which features to include in your model, you may want to perform dimensionality reduction to reduce the number of features while still retaining as much information as possible. This can be done using techniques such as Principal Component Analysis (PCA) or Linear Discriminant Analysis (LDA).

You may also want to standardize your data before feeding it into your machine learning algorithm. Standardization involves rescaling the data so that it has a mean of 0 and a standard deviation of 1.

– Generating Synthetic Features

Generating synthetic features is a great way to supercharge your machine learning algorithms. This technique can be used to create new features that are not present in the original data set. This can be done by combining existing features, or by using a variety of techniques to generate new features from scratch.
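Here is a quick sketch of what "combining existing features" can look like in code. The housing record, its field names, and the three derived features are all hypothetical, picked only to show a ratio, an interaction term, and a polynomial term side by side.

```python
def add_synthetic_features(row):
    """Return a copy of the record with three derived features added."""
    out = dict(row)
    out["price_per_sqm"] = row["price"] / row["area_sqm"]   # ratio of two features
    out["rooms_x_area"] = row["rooms"] * row["area_sqm"]    # interaction term
    out["age_squared"] = row["age_years"] ** 2              # polynomial term
    return out

house = {"price": 300_000, "area_sqm": 120, "rooms": 4, "age_years": 10}
enriched = add_synthetic_features(house)
```

None of these new columns adds information that was not already in the record. What they do is hand the model a relationship it might otherwise struggle to learn, which matters most for simple models such as linear regression.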

This technique is often used in conjunction with other feature engineering techniques, such as feature selection and feature extraction. When used together, these techniques can greatly improve the performance of your machine learning algorithms.

Examples of Successful Feature Engineering Projects

1. One of the most well-known examples of feature engineering is the Netflix Prize. In order to improve their movie recommendation system, Netflix released a dataset of about 100 million ratings and allowed anyone to compete to find the best algorithm. The grand prize was awarded in 2009 to the team BellKor's Pragmatic Chaos, which improved the accuracy of predictions (measured by RMSE) by just over 10% by blending many models built on signals derived from the ratings themselves, such as user and movie rating biases and how ratings drift over time.

2. Another example is Kaggle's Merck Molecular Activity Challenge, which asked participants to predict the biological activity of molecules. The inputs were themselves engineered features: each molecule was represented by numerical descriptors computed from its chemical structure.

3. In Kaggle's Dogs vs. Cats competition, participants were tasked with using machine learning to distinguish between pictures of cats and dogs. Hand-crafted features such as color histograms and edge detection are the classic starting point for this kind of task, although the leading entries relied on convolutional neural networks that learn their features directly from the pixels.

Challenges Faced While Doing Feature Engineering

The biggest challenge when it comes to feature engineering is figuring out which features will actually be useful in predicting the target variable. There’s no easy answer to this question, and it often requires a lot of trial and error. Additionally, some features may be very time-consuming and expensive to create, so there’s a trade-off between accuracy and practicality.

Another challenge is dealing with missing data. This can be an issue when trying to create new features, especially if those features are based on other features that have missing values. One way to deal with this is to impute the missing values, but this can introduce bias if not done properly.

Sometimes the relationships between features and the target variable can be non-linear, meaning that standard linear methods of feature engineering won’t work. In these cases, it’s necessary to get creative and come up with custom transformation methods that capture the complex relationships.

Conclusion

Feature engineering is a powerful tool that can be used to optimize the performance of your machine learning algorithms. By utilizing techniques such as feature selection, dimensionality reduction and data transformation, you can drastically improve the accuracy and efficiency of your models. With these tools in hand, you will be well-equipped to tackle any machine learning challenge with confidence.


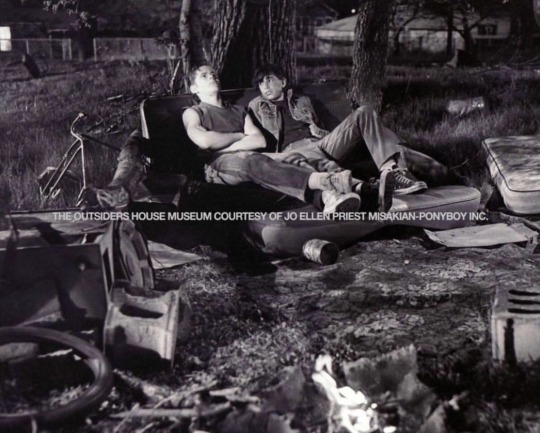

Yandere! Bad Guy x Reader

I am currently in my Natural Born Killers nostalgia, and so I'm borrowing its vibes and bringing you this: a bad-to-the-bone, rock-and-roll attitude yandere who constantly makes you question your own morality. Featuring an old OC!

Content: gender neutral reader, violence, murder, male yandere

He fell in love with you at first sight. A goody two shoes, quiet and obedient. Shy. Oh, terribly shy. You couldn't even meet his eyes. He knew you were the kind others would step on, take advantage of. But there was more to it, much more to uncover.

Who was it? A relative, a friend, a coworker? You know, that person holding you back, keeping you in your place. The one who'd always make you feel small and insignificant. The one who would always find something to criticize. How did it feel when you found them on the ground, bashed in and bloodied up? He was standing above the lifeless body, catching his breath, a cocky smile plastered on his face. His way of courting you.

He looked so tall in that moment, towering above your hesitant self, his gaze of a confidence and intensity you'd never known before. "Well? What are you waiting for? Get in", he said, gesturing towards a convertible he most likely stole earlier that day. What possessed you in that moment to join him without delay? Was it his charisma? Or did you know in the depth of your soul that he wouldn't take no for an answer?

You see, he's known it from the beginning. Someone like you needs someone like him. You’re a sweet little lamb lost among the wolves. The world would eat you right up if you were left by yourself. But now you have him. And he won't let his precious prey get away. Oh, dear, no. If he wants something, he gets it. And he's never wanted anything more than you.

"You didn't...even tell me your name", you sheepishly spoke up from the passenger seat, trying to keep your mind away from the crime you'd just witnessed. "Just call me Tig", he said casually with a yawn, speeding away. "Won't you be in trouble, Tig? Why would you even kill-" you tried to reason. "What kinda question is that? They treated you like shit and it pissed me off." He glanced at you with a frown, taking another drag off his cigarette. "You're mine now, so whatever happens to you is my business. Got it?" You just stared. Was that his way of asking you out?

Tig lives by his own rules, as you quickly learned from becoming his companion. Always on the run, indifferent to the world. For the most part, to your surprise, he's well-behaved. If people don't mess with him, he doesn't mess with them. Simple as that.

Anything involving you, however, sets him off terribly. Like a rabid, ferocious guard dog, he's ready to pounce on whoever approaches you the wrong way. Last week you stopped at a highway diner for coffee, and on your way back to your table, you jokingly pulled a clumsy dance move to the song playing from the speakers. Tig observed you with an amused smile, sipping from his cup. A passerby joined you, resting his arm on your waist flirtatiously. Tig's smile dropped in an instant, and next thing you knew, the whole place was splattered in blood. No one made it out.

"I didn't even finish my coffee", you whined, already used to the occasional massacre. The man hopped behind the counter and threw on a bloodied cap. "What will it be, sir/ma'am?" he pretended, dangling a takeaway cup and starting the espresso machine. "I never told you, but I used to be a barista", he declared proudly. An entirely different person from the unhinged killer you witnessed minutes ago. "What? You said you were a mechanic", you questioned with raised brows. "That's also true. I'm a jack of all trades, I suppose. You know what I'm best at, though?" He lowered himself until his forehead touched yours. "Pleasing you."

The man is romantic in his own way. He twists the key, and the engine stops. You follow him out of the car in confusion. "Why did we stop here?" He briefly lifts himself up onto the tall fence securing the bridge, and inhales deeply. "Isn't it a nice view?" he says, nodding ahead. It is a scenic sight, sure. The river slithers along the lush valley, and the setting sun gives everything a dramatic tint. "Give me your hand", he suddenly demands as he goes to grab it himself. Before you can ask for an explanation, he quickly drags a blade across your palm, and you wince in pain. He repeats the gesture with his own hand, locking his fingers with yours over the rail. You watch as fresh blood trails along your skin, eventually falling into droplets and vanishing into the river. "Now we're going to be everywhere", he remarks playfully. "Okay, but what was the point?" you insist, a little baffled.

"Isn't it obvious? Maybe this will help", he continues, procuring a ring from his pocket. "I'm saying I want to marry you, (Y/N)."

You open your mouth to answer, but he already slides it up your finger, eyes glimmering in excitement.

"You're never getting away from me, love."

#yes I'm advertising the movie again because it's a CLASSIC#yandere#yandere x reader#yandere x darling#yandere x you#yandere headcanons#yandere scenarios#yandere imagines#yandere killer#yandere delinquent#yandere oc#yandere oc x reader#yandere male#yandere male x reader#yandere boyfriend#male yandere#doodle#my art#yandere art#tig


Reverse engineers bust sleazy gig work platform

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/11/23/hack-the-class-war/#robo-boss

A COMPUTER CAN NEVER BE HELD ACCOUNTABLE

THEREFORE A COMPUTER MUST NEVER MAKE A MANAGEMENT DECISION

Supposedly, these lines were included in a 1979 internal presentation at IBM; screenshots of them routinely go viral:

https://twitter.com/SwiftOnSecurity/status/1385565737167724545?lang=en

The reason for their newfound popularity is obvious: the rise and rise of algorithmic management tools, in which your boss is an app. That IBM slide is right: turning an app into your boss allows your actual boss to create an "accountability sink" in which there is no obvious way to blame a human or even a company for your maltreatment:

https://profilebooks.com/work/the-unaccountability-machine/

App-based management-by-bossware turns the bug identified by the unknown author of that IBM slide into a feature. When an app is your boss, it can force you to scab:

https://pluralistic.net/2023/07/30/computer-says-scab/#instawork

Or it can steal your wages:

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

But tech giveth and tech taketh away. Digital technology is infinitely flexible: the program that spies on you can be defeated by another program that defeats spying. Every time your algorithmic boss hacks you, you can hack your boss back:

https://pluralistic.net/2022/12/02/not-what-it-does/#who-it-does-it-to

Technologists and labor organizers need one another. Even the most precarious and abused workers can team up with hackers to disenshittify their robo-bosses:

https://pluralistic.net/2021/07/08/tuyul-apps/#gojek

For every abuse technology brings to the workplace, there is a liberating use of technology that workers unleash by seizing the means of computation:

https://pluralistic.net/2024/01/13/solidarity-forever/#tech-unions

One tech-savvy group on the cutting edge of dismantling the Torment Nexus is Algorithms Exposed, a tiny, scrappy group of EU hacker/academics who recruit volunteers to reverse engineer and modify the algorithms that rule our lives as workers and as customers:

https://pluralistic.net/2022/12/10/e2e/#the-censors-pen

Algorithms Exposed have an admirable supply of seemingly boundless energy. Every time I check in with them, I learn that they've spun out yet another special-purpose subgroup. Today, I learned about Reversing Works, a hacking team that reverse engineers gig work apps, revealing corporate wrongdoing that leads to multimillion euro fines for especially sleazy companies.

One such company is Foodinho, an Italian subsidiary of the Spanish food delivery company Glovo. Foodinho/Glovo has been in the crosshairs of Italian labor enforcers since before the pandemic, racking up millions in fines – first for failing to file the proper privacy paperwork disclosing the nature of the data processing in the app that Foodinho riders use to book jobs. Then, after the Italian data commission investigated Foodinho, the company attracted new, much larger fines for its out-of-control surveillance conduct.

As all of this was underway, Reversing Works was conducting its own research into Glovo/Foodinho's app, running it on a simulated Android handset inside a PC so they could peer into the app's data collection and processing. They discovered a nightmarish world of pervasive, illegal worker surveillance, and published their findings a year ago in November, 2023:

https://www.etui.org/sites/default/files/2023-10/Exercising%20workers%20rights%20in%20algorithmic%20management%20systems_Lessons%20learned%20from%20the%20Glovo-Foodinho%20digital%20labour%20platform%20case_2023.pdf

That report reveals all kinds of extremely illegal behavior. Glovo/Foodinho makes its riders' data accessible across national borders, so Glovo managers outside of Italy can access fine-grained surveillance information and sensitive personal information – a major data protection no-no.

Worse, Glovo's app embeds trackers from a huge number of other tech platforms (for chat, analytics, and more), making it impossible for the company to account for all the ways that its riders' data is collected – again, a requirement under Italian and EU data protection law.

All this data collection continues even when riders have clocked out for the day – it's as though your boss followed you home after quitting time and spied on you.

The research also revealed evidence of a secretive worker scoring system that ranked workers based on undisclosed criteria and reserved the best jobs for workers with high scores. This kind of thing is pervasive in algorithmic management, from gig work to YouTube and TikTok, where performers' videos are routinely suppressed because they crossed some undisclosed line. When an app is your boss, your every paycheck is docked because you violated a policy you're not allowed to know about, because if you knew why your boss was giving you shitty jobs, or refusing to show the video you spent thousands of dollars making to the subscribers who asked to see it, then maybe you could figure out how to keep your boss from detecting your rulebreaking next time.

All this data collection and processing is bad enough, but what makes it all a thousand times worse is Glovo's data retention policy – they're storing this data on their workers for four years after the worker leaves their employ. That means that mountains of sensitive, potentially ruinous data on gig workers are just lying around, waiting to be stolen by the next hacker that breaks into the company's servers.

Reversing Works's report made quite a splash. A year after its publication, the Italian data protection agency fined Glovo another 5 million euros and ordered them to cut this shit out:

https://reversing.works/posts/2024/11/press-release-reversing.works-investigation-exposes-glovos-data-privacy-violations-marking-a-milestone-for-worker-rights-and-technology-accountability/

As the report points out, Italy is extremely well set up to defend workers' rights from this kind of bossware abuse. Not only do Italian enforcers have all the privacy tools created by the GDPR, the EU's flagship privacy regulation – they also have the benefit of Italy's 1970 Workers' Statute. The Workers' Statute is a visionary piece of legislation that protects workers from automated management practices. Combined with later privacy regulation, it gave Italy's data regulators sweeping powers to defend Italian workers, like Glovo's riders.

Italy is also a leader in recognizing gig workers as de facto employees, despite the tissue-thin pretense that adding an app to your employment means that you aren't entitled to any labor protections. In the case of Glovo, the fine-grained surveillance and reputation scoring were deemed proof that Glovo was employer to its riders.

Reversing Works' report is a fascinating read, especially the sections detailing how the researchers recruited a Glovo rider who allowed them to log in to Glovo's platform on their account.

As Reversing Works points out, this bottom-up approach – where apps are subjected to technical analysis – has real potential for labor organizations seeking to protect workers. Their report established multiple grounds on which a union could seek to hold an abusive employer to account.

But this bottom-up approach also holds out the potential for developing direct-action tools that let workers flex their power, by modifying apps, or coordinating their actions to wring concessions out of their bosses.

After all, the whole reason for the gig economy is to slash wage-bills, by transforming workers into contractors, and by eliminating managers in favor of algorithms. This leaves companies extremely vulnerable, because when workers come together to exercise power, their employer can't rely on middle managers to pressure workers, deal with irate customers, or step in to fill the gap themselves:

https://projects.itforchange.net/state-of-big-tech/changing-dynamics-of-labor-and-capital/

By seizing the means of computation, workers and organized labor can turn the tables on bossware – both by directly altering the conditions of their employment, and by producing the evidence and tools that regulators can use to force employers to make those alterations permanent.

Image: EFF (modified) https://www.eff.org/files/issues/eu-flag-11_1.png

CC BY 3.0 http://creativecommons.org/licenses/by/3.0/us/

#pluralistic#etui#glovo#foodinho#alogrithms exposed#reverse engineering#platform work directive#eu#data protection#algorithmic management#gdpr#privacy#labor#union busting#tracking exposed#reversing works#adversarial interoperability#comcom#bossware


Text

The Winner Takes it All: Anakin Skywalker x Reader (Enemies-to-Lovers Modern AU)

NSFW! Minors DNI!!! Summary: The moment the thesis competition was announced, you knew your biggest threat. Anakin Skywalker, golden boy of the engineering department. He's the only other person smart enough to beat you, and the only other person insane enough to stay in the lab until midnight every night. He's also an asshole, but you're starting to think maybe he's not as bad as you thought he was... Pairing: Anakin Skywalker x Fem!Reader CW: mentions of masturbation WC: 3.4k AN: hello darlings!! another anakin x reader longer fic coming your way!! lmk what you think, and asks/requests are always open!

[Ch. 1], Ch. 2, Ch. 3, Ch. 4, Ch. 5, Ch. 6

Chapter 1: Soldering

The moment the competition was announced, you knew your biggest threat. Anakin Skywalker, golden boy of the department. As soon as he heard about it at the thesis info session of your senior year, his eyes found you in the crowd, because he knew you were his biggest rival, and you were coming for him. He was surprised to find you were looking at him, based on the way his eyes widened, and you found a shocking amount of satisfaction in it. The top prize was 10k and a job at Boeing, after all. The more you surprised him, the more likely you were to catch him off-guard. Not that you would sabotage his work, that was just unseemly conduct for a senior at Coruscant U, but you'd encourage his sloppiness.

The instant after the presentation finished, you rushed to the lab. The thesis lab adjoined the regular makerspace in a continuation of the glass walls and sleek design of the rest of the engineering building. You'd spent the end of your junior year there, when you'd had to submit your thesis proposal (A Novel Method for Glaucoma Detection Utilizing Machine Learning and Mass-Producible Hardware). Anakin was always there too, which made the space just a little more annoying, with the loud music blasting out of his headphones and the hair-raising racket of the band saw.

Last year, you'd decided to admit to yourself, despite your best efforts since you had met him, that okay, Anakin Skywalker was hot. Like, horrendously hot. He was a looker no matter what he did, with those blue puppy dog eyes, full lips, and his gorgeous chestnut hair, which looked so soft that you had wondered on multiple occasions what it would be like to touch it. And, being captain of the university taekwondo team, he was muscular as all get-out. You'd catch a peek at his calves and ass on hot days when he wore shorts, and his biceps and shoulders were almost always flexed in the lab when he was sawing something or bent over the soldering station. One time, he wore grey sweatpants, and you had to literally tear your eyes away. But it wasn't just those features that made him hot. It was, unfortunately, him as a person. The confidence with which he sauntered through the building. His mischievous smile that he'd cast you in group projects, or the clench of his jaw as he wired something finicky. Your roommate, Ahsoka, a junior and also his vice-captain, told you that, oh yeah, he was also really good with younger team members. That he taught kids in the nearby school once a week, too, even though he had such a busy schedule. Wasn't that just sweet.

He wasn't that kind to you. Another thing that made him hot, unfortunately, was his brain, and his wit. He was kind of smart, okay, very smart, and that might make him the one thing standing in your way this year. Anakin also never shied away from a biting comment at you, usually about how if you had done it correctly, you wouldn't have an issue with some wiring. Unfortunately, he was usually right, but you wouldn't give him the satisfaction of telling him that.

Your rivalry started in freshman year, when your physics professor would choose the best student's homework and post it to the class as an example. You were sure you'd be chosen--your first homework was perfect--but then you saw his name. Anakin Skywalker. The next week, you beat him, but then he came out on top immediately after. And so it went. Always fighting for the top spot, to see who could outdo the other. Now, the department was just paying you to do it.

You were in the lab right after the "Senior Thesis Information Session" presentation, using the few minutes you had before your thermodynamics class to tinker with the 3D print that had just finished. Then, the door slid open with the beep of an ID card. You didn't have to turn around to know it was Anakin. Only he would be insane enough to work on day 1 of the semester. Him, and you.

"So you're seriously competing for this, huh?" He asked, watching you sand off some rough edges off the plastic. His tone was playful, but there was an undercurrent of seriousness. He was sizing up the competition.

"Yup. And I'm gonna blow you out of the water," you said self-assuredly. Your project was too good not to win. Anakin barked out a laugh.

"Sure. Right. We'll see about that," he remarked. His voice was dripping with smugness, just like usual with you. You just rolled your eyes. It wasn't worth it to waste time verbally sparring with him, you had better things to do. Like thermo. So you pushed out of your chair, leaving the print on the shelf that had your name laser cut into wood (a gift you had made yourself after your junior thesis proposal got an A), and heading to Lecture Hall 3.56B. Anakin was, of course, heading there too. You were in lockstep, as always. However, he refused to walk there with you, so he waited precisely enough for you to close the door before he left too.

And so, the first three months of the semester passed in relative peace between the two of you. There was only a handful of people who used the thesis room, and you were the only ones there consistently. It helped because safety regulations meant you had to have a buddy in the room to use any of the really useful machines, so you sometimes found yourself pleased to see him. It meant you could get work done. At night, the engineering building was fifteen minutes away from the dorms where you both lived--in the same building, which vexed you to no end when you saw him in the dining hall--so you both had to make the walk home late at night through the city. Oftentimes, you ended up walking home at the same time. It would be wrong to call it walking together, because that would imply you were near each other, or in each other's company, which would be plain wrong. You were always as far as possible on the sidewalk, and oftentimes you two would end up speedwalking home, not allowing the other person to be faster. Was it childish? Maybe. Did you feel a rush of joy every single time you hit the door to your building before him? Definitely.

In November, as the biting cold chilled the air, you found yourself done before him. All your current tasks were done, and you had to wait for a print to finish before you could keep going, plus he wasn't using any machines that needed a buddy, according to lab rules. It had been a long day, and you'd barely dragged your bones into the lab, let alone through all that work.

"Hang on," his voice called from across the space. He was at the soldering station in his safety glasses, bent over some chip.

"What?" Why couldn't you just go home? To your beautiful bed?

"I don't feel good about you walking home alone, so can you just wait for, like, three more seconds?" He wasn't even looking at you as he said it, instead he was pressing the soldering iron to some metal. You scoffed. Like you were so frail you couldn't walk fifteen minutes on your own.

"Are you serious? Do you think I'm vulnerable because, what, I have a vagina? I've taken self-defense classes, thank you very much." Your tone was poisonous, and you tried to infuse every drop of venom you had in you at his stupid idea. Anakin finally looked up from the bench, turning the iron off and cleaning it in the steel wool, catching your eyes with an angry glare.

"No, dumbass. You're just less likely to get robbed in this part of town if you're not alone. But do what you want, I guess. Have fun getting all your valuables taken!" He shrugged sardonically and turned off the vent fan above him. Anakin was right, it killed you to admit. You didn't exactly feel safe walking home at 3am through this part of town. There were enough reports of students getting hurt. So you planted yourself in your chair and waited. When he saw you, a smug smile grew on his face. Asshole.

"C'mon, let's go home," he said nonchalantly once he'd shut down and locked the woodworking room and the laser cutters. As you walked home, this time at a comfortable pace and with his headphones off, you realized it was almost nice, peaceful to be with him like this. The night was still, not a single thing moving in the dark of the night. You passed the corner store, its graffiti-covered grate down at night, then the Vietnamese restaurant you loved, dark and empty. There was no one on the planet but the two of you at that moment. Much to your chagrin, you didn't mind it at that moment. Anakin looked even more ethereal in the moonlight, lighting up the light parts of his hair a silvery white and casting shadows all over his face. He really was handsome, you admitted reluctantly. When you got home, he wished you a good night, which he had never gone. You found the word escaping your lips out of habit. After that, your walking home at the same time turned into walking home together. On November the 8th, he asked you how you were doing. You told him you were good, your tone clipped. He echoed good into the quiet street, then you lapsed into silence. On the 10th, he asked if Ahsoka was feeling better. She had sprained her ankle at practice the previous day. You told him she was, and he said good again. On the 11th, he asked how your project was going, and, in a fit of weakness, you told him it wasn't great. That you were nervous about your first real test of the finished product, the one that would tell you if the past three months had been wasted or not. He told you that if anyone could do it, it would be you, and you spend the rest of the walk wondering where the insult buried inside the statement was hiding. Later that night, once you had tucked into bed, you realized there wasn't any insult at all, just genuine encouragement. 
For the next week, your walks were filled with slightly guarded conversation, sometimes about upcoming homework assignments, but sometimes about how the taekwondo team was doing, or if you thought Professor Yoda's ear hairs were a countable or uncountable infinity. But he was still an asshole.

About a week later, you were alone with Anakin in the lab around midnight, working on a piece of the lens, trying to get the refraction just right before the test run, when your phone buzzed. Midterm Grade Posted for PHYS 485: Thermodynamics. Your heart stopped. You had been hoping and praying that the number of hours you'd poured into your thesis wouldn't come back to bite you in terms of classwork, but now was the moment of truth. You opened the notification, then to the Canvas page, where you saw your grade. 38/100. Everything in the world stopped. How could you have fucked up that badly? Your eyes scanned over instructor comments. Average class grade: 40/100. Maximum grade: 49/100. Okay, okay. It would be curved up, and you'd probably get a B, but you were below average for the first time in your life. Fuck. Fuck. How could this happen? You glared at Anakin, who was screwing in a bolt to the metal scaffolding of his project. That motherfucker was probably the one who got 49. The thought made you so angry you bolted out of your chair and went to go grab the materials for your test. That motherfucker got everything. It wasn't fair.

You lined up the small device you made, plugged it into the port of your phone, and opened the corresponding software. Through the external lens, you scanned the two printed-out pictures of eyes, one with glaucoma and one without. You held your breath throughout the loading screen. Please, just let one thing go right. Please. Please. The little loading circle stopped. Both eyes were cleared of glaucoma. A false negative. Motherfucker. Three months of work, and for what? You'd never get the prize at this rate. You'd have to start from scratch. You slammed your fist onto the table in anger.

"Hey, there's hammers for that," Anakin called, teasing from the other side of the room. He looked up at you, mouth open to snark something else out, when he saw your eyes welling with tears.

"Woah, are you okay? What's wrong? Did you hurt yourself?" His voice was soft, warm. Anakin dropped the wrench he was holding on the table and half-jogged over to you, putting his hand on your shoulder. You jumped at the contact, but it wasn't entirely unwelcome. It was kind of comforting, actually, but you were too upset to notice that.

"It's just, it's not working, and I've spent so much time and--" you trailed off.

"Don't cry, it's okay, we can fix it," he said with a shrug and a smile. Why was he smiling? God, was he actually pleased right now? Suddenly, your tears turned to anger, not at yourself or the system or the difficulty of your project, but at him.

"Like you're not happy about this. I bet you sabotaged it yourself," you spat out and shrugged his hand off your shoulder. He balked.

"Sabotage? Are you serious? I'd never do that." You stood up, incensed, and pointed a finger into his chest.

"Really? It sounds exactly like something you would do--remember in sophomore year when Barriss's robot mysteriously stopped working?" He half laughed, half scoffed, mouth dropping open, then snapped back with his voice raised.

"You've got to be kidding! Maybe if you paid two seconds of attention to your classmates or anyone around you, you'd know it was her wiring! The connections were bad!"

"Sure," your voice dripped with sarcasm as you scoffed at his insult, "And when you told her it served her right? You were so smug!" Your voice was rising. He ran a hand through his hair and bit out another laugh as he retorted.

"And if I was? Like you're not the queen of being smug in this department. 'Oh, my robot's better, Anakin. I got an A, Anakin.'" He raised his voice high, mocking you. His eyes were wild, furious.

"Me? Smug? Look in the mirror, asshole! Pretend all you want, but I know who you are. You can pretend to be oh-so-nice to everyone else, but I see you for what you really are. Just. A. Fucking. Asshole." You emphasized each word with a jab of your finger, getting closer to him each time. The tension between you was turning somehow--were you losing the argument? You couldn't tell.

"Oh yeah? You don't know a single thing about me," he gritted out, right up in your face, jaw flexing. His intense eyes bored into yours, flicking back and forth, and then they dropped down to glance at your lips.

You weren't sure which one of you moved first, but all you felt was his lips against yours and your hands fisting in his hair, which it turned out was as perfectly soft as you had imagined. Bastard. Anakin's kisses were hot, insistent against your mouth as you sloppily made out in the middle of the lab. His arms, warm and firm, circled your waist and pulled you to him while you tilted your heads this way and that to get closer. Your tongue swiped his lower lip, and he treated you to a surprised, low moan that you wanted to hear again and again until your ears bled. He got your hint, though, and started teasing your lips with his tongue until you opened your mouth just enough to touch your tongue to his. His arms tightened and pulled you against him so that you could feel his warmth from chest to thigh. The two of you were frantic, like if you got close enough, deep enough in each others' mouths, you'd figure out why you were doing this and why it felt so goddamn good. Your heart was pounding when his hands slipped lower and grabbed you under your ass.

"Jump," he whispered huskily after he reluctantly separated his mouth from yours. You hopped, and he used the hands under your thighs to lift you up and sit you on the lab table. Dutifully, you wrapped your legs around his hips, interlocking your ankles around his unfairly attractive ass, and kept your hands buried in his hair. Anakin was back on your lips immediately. He was sloppy and excited until you shifted your hips against him, and then he became electric against you, even hungrier than before. You were definitely feeling something underneath your hips, a lump. It hit you that he was hard, and that sent a bolt of lightning between your legs. You'd stared a little bit more than you cared to admit that time he'd worn gray sweatpants, and what you'd seen was now pressed against you. You drew in a shaky breath at that idea, and you realized that God, he smelled like metal from his soldering earlier and, underneath that, sandalwood and vanilla.

Sometime around the time his hips tilted forward into yours, a beep echoed through the empty lab. You both jumped apart, leaving you sitting on the table, and the noise continued. Beep beep beep. The insistent noise came from one of the 3D printers in the corner. Anakin's print was done.

The silence of the lab felt deafening as you both panted. What had you done? Making out with your enemy was completely against lab safety guidelines, for one, and your morals, for another. Your heart was still pounding in your chest, despite your misgivings, but you willed those wisps of excitement deep down into some mental box. This couldn't happen. If there was a single person on this campus you couldn't fuck, it was Anakin. Not only was he rude, but if you got too close, how would you navigate it when only one of you won? Most importantly, though, you had hated him for four years. And for good reason. (Though you couldn't remember exactly what it was, or think critically at all, in that moment.)

"We shouldn't do that again, Anakin." Your voice was small in the empty space. For a second, his face fell, but he pressed his lips into a thin line to disguise it.

"Definitely not. I--Sorry." And that was that.

You walked home in complete silence, stealing glances at one another in the dark night. When you got to the door of your dorm, you opened your mouth to say something, but then closed it. Better not. So why, once you separated, did you feel so sad? Why did you want to see him again, to feel that silky hair under your fingers in your bed? You laid awake until the early hours of the night, and told yourself that your fingers slipping inside the waistband of your pajamas wasn't about Anakin, you just hadn't gotten some in way too long. It wasn't about Anakin. Even though it was his mouth and chest and arms you thought about when you came on your fingers, it wasn't about him.

♡♡♡♡♡♡♡♡♡♡♡♡♡♡♡♡♡♡♡

please let me know if you'd like to be added to the tag list!

#anakin skywalker#star wars anakin#anakin x reader#anakin smut#anakin x you#anakin skywalker x reader#anakin skywalker/you#anakin/you#anakin skywalker smut#anakin skywalker fanfiction#anakin skywalker imagine#anakin skywalker x you#star wars prequels#hayden christensen x reader#hayden christensen imagine


Note

I saw something about generative AI on JSTOR. Can you confirm whether you really are implementing it and explain why? I’m pretty sure most of your userbase hates AI.

A generative AI/machine learning research tool on JSTOR is currently in beta, meaning that it's not fully integrated into the platform. This is an opportunity to determine how this technology may be helpful in parsing through dense academic texts to make them more accessible and gauge their relevancy.

To JSTOR, this is primarily a learning experience. We're looking at how beta users are engaging with the tool and the results that the tool is producing to get a sense of its place in academia.

In order to understand what we're doing a bit more, it may help to take a look at what the tool actually does. From a recent blog post:

Content evaluation

Problem: Traditionally, researchers rely on metadata, abstracts, and the first few pages of an article to evaluate its relevance to their work. In humanities and social sciences scholarship, which makes up the majority of JSTOR’s content, many items lack abstracts, meaning scholars in these areas (who in turn are our core cohort of users) have one less option for efficient evaluation.

When using a traditional keyword search in a scholarly database, a query might return thousands of articles that a user needs significant time and considerable skill to wade through, simply to ascertain which might in fact be relevant to what they’re looking for, before beginning their search in earnest.

Solution: We’ve introduced two capabilities to help make evaluation more efficient, with the aim of opening the researcher’s time for deeper reading and analysis:

Summarize, which appears in the tool interface as “What is this text about,” provides users with concise descriptions of key document points. On the back-end, we’ve optimized the Large Language Model (LLM) prompt for a concise but thorough response, taking on the task of prompt engineering for the user by providing advanced direction to:

Extract the background, purpose, and motivations of the text provided.

Capture the intent of the author without drawing conclusions.

Limit the response to a short paragraph to provide the most important ideas presented in the text.

Search term context is automatically generated as soon as a user opens a text from search results, and provides information on how that text relates to the search terms the user has used. Whereas the summary allows the user to quickly assess what the item is about, this feature takes evaluation to the next level by automatically telling the user how the item is related to their search query, streamlining the evaluation process.
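To make the Summarize directions above more concrete, here is a minimal sketch of how a fixed set of directions might be assembled into a single prompt. JSTOR has not published its actual prompt, so everything here is an assumption for illustration: the function name `build_summary_prompt`, the constant `SUMMARY_DIRECTIONS`, and the surrounding wording are hypothetical. Only the three directions themselves come from the blog post.

```python
# Hypothetical sketch only: this is NOT JSTOR's actual prompt or code.
# The three directions are taken from the blog post quoted above;
# the names and the surrounding template are made up for illustration.

SUMMARY_DIRECTIONS = [
    "Extract the background, purpose, and motivations of the text provided.",
    "Capture the intent of the author without drawing conclusions.",
    "Limit the response to a short paragraph to provide the most important "
    "ideas presented in the text.",
]


def build_summary_prompt(document_text: str) -> str:
    """Combine the fixed directions and the document into one prompt string."""
    # Number the directions so the model sees them as an ordered checklist.
    numbered = "\n".join(
        f"{i}. {direction}"
        for i, direction in enumerate(SUMMARY_DIRECTIONS, start=1)
    )
    return (
        "Summarize the text below, following these directions:\n"
        f"{numbered}\n\n"
        "Text:\n"
        f"{document_text.strip()}"
    )


if __name__ == "__main__":
    print(build_summary_prompt("  An example article body.  "))
```

The point of the sketch is the design choice the post describes: the user never writes the prompt, because the directions are fixed on the back-end and only the document text changes.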

Discovering new paths for exploration

Problem: Once a researcher has discovered content of value to their work, it’s not always easy to know where to go from there. While JSTOR provides some resources, including a “Cited by” list as well as related texts and images, these pathways are limited in scope and not available for all texts. Especially for novice researchers, or those just getting started on a new project or exploring a novel area of literature, it can be needlessly difficult and frustrating to gain traction.

Solution: Two capabilities make further exploration less cumbersome, paving a smoother path for researchers to follow a line of inquiry:

Recommended topics are designed to assist users, particularly those who may be less familiar with certain concepts, by helping them identify additional search terms or refine and narrow their existing searches. This feature generates a list of up to 10 potential related search queries based on the document’s content. Researchers can simply click to run these searches.

Related content empowers users in two significant ways. First, it aids in quickly assessing the relevance of the current item by presenting a list of up to 10 conceptually similar items on JSTOR. This allows users to gauge the document’s helpfulness based on its relation to other relevant content. Second, this feature provides a pathway to more content, especially materials that may not have surfaced in the initial search. By generating a list of related items, complete with metadata and direct links, users can extend their research journey, uncovering additional sources that align with their interests and questions.

Supporting comprehension

Problem: You think you have found something that could be helpful for your work. It’s time to settle in and read the full document… working through the details, making sure they make sense, figuring out how they fit into your thesis, etc. This all takes time and can be tedious, especially when working through many items.

Solution: To help ensure that users find high quality items, the tool incorporates a conversational element that allows users to query specific points of interest. This functionality, reminiscent of CTRL+F but for concepts, offers a quicker alternative to reading through lengthy documents.

By asking questions that can be answered by the text, users receive responses only if the information is present. The conversational interface adds an accessibility layer as well, making the tool more user-friendly and tailored to the diverse needs of the JSTOR user community.

Credibility and source transparency

We knew that, for an AI-powered tool to truly address user problems, it would need to be held to extremely high standards of credibility and transparency. On the credibility side, JSTOR’s AI tool uses only the content of the item being viewed to generate answers to questions, effectively reducing hallucinations and misinformation.

On the transparency front, responses include inline references that highlight the specific snippet of text used, along with a link to the source page. This makes it clear to the user where the response came from (and that it is a credible source) and also helps them find the most relevant parts of the text.


Text

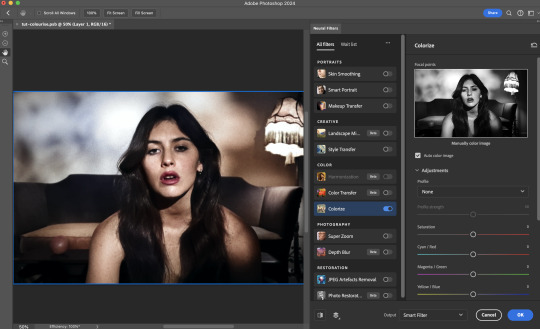

Neural Filters Tutorial for Gifmakers by @antoniosvivaldi

Hi everyone! In light of my blog’s 10th birthday, I’m delighted to reveal my highly anticipated gifmaking tutorial using Neural Filters - a very powerful collection of filters that really broadened my scope in gifmaking over the past 12 months.

Before I get into this tutorial, I want to thank @laurabenanti, @maines, @cobbbvanth, and @cal-kestis for their unconditional support over the course of my journey of investigating the Neural Filters & their valuable inputs on the rendering performance!

In this tutorial, I will outline what the Photoshop Neural Filters do and how I use them in my workflow - multiple examples will be provided for better clarity. Finally, I will talk about some known performance issues with the filters & some feasible workarounds.

Tutorial Structure:

Meet the Neural Filters: What they are and what they do

Why I use Neural Filters? How I use Neural Filters in my giffing workflow

Getting started: The giffing workflow in a nutshell and installing the Neural Filters

Applying Neural Filters onto your gif: Making use of the Neural Filters settings; with multiple examples

Testing your system: recommended if you’re using Neural Filters for the first time

Rendering performance: Common Neural Filters performance issues & workarounds

For quick reference, here are the examples that I will show in this tutorial:

Example 1: Image Enhancement | improving the image quality of gifs prepared from highly compressed video files

Example 2: Facial Enhancement | enhancing an individual's facial features

Example 3: Colour Manipulation | colourising B&W gifs for a colourful gifset

Example 4: Artistic effects | transforming landscapes & adding artistic effects onto your gifs

Example 5: Putting it all together | my usual giffing workflow using Neural Filters

What you need & need to know:

Software: Photoshop 2021 or later (recommended: 2023 or later)*

Hardware: 8GB of RAM; having a supported GPU is highly recommended*

Difficulty: Advanced (requires a lot of patience); knowledge in gifmaking and using video timeline assumed

Key concepts: Smart Layer / Smart Filters

Benchmarking your system: Neural Filters test files**

Supplementary materials: Tutorial Resources / Detailed findings on rendering gifs with Neural Filters + known issues***

*I primarily gif on an M2 Max MacBook Pro that's running Photoshop 2024, but I also have experience gifmaking on a few other Mac models from 2012 to 2023.

**Using Neural Filters can be resource intensive, so it’s helpful to run the test files yourself. I’ll outline some known performance issues with Neural Filters and workarounds later in the tutorial.

***This supplementary page contains additional Neural Filters benchmark tests and instructions, as well as more information on the rendering performance (for Apple Silicon-based devices) when subject to heavy Neural Filters gifmaking workflows

Tutorial under the cut. Like / Reblog this post if you find this tutorial helpful. Linking this post as an inspo link will also be greatly appreciated!

1. Meet the Neural Filters!

Neural Filters are powered by Adobe's machine learning engine known as Adobe Sensei. They offer a non-destructive way to streamline workflows that would've been difficult and/or tedious to do manually.

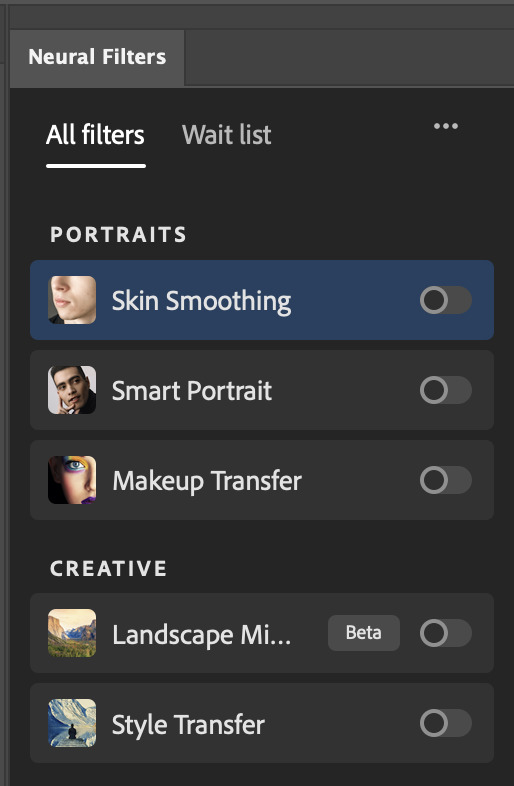

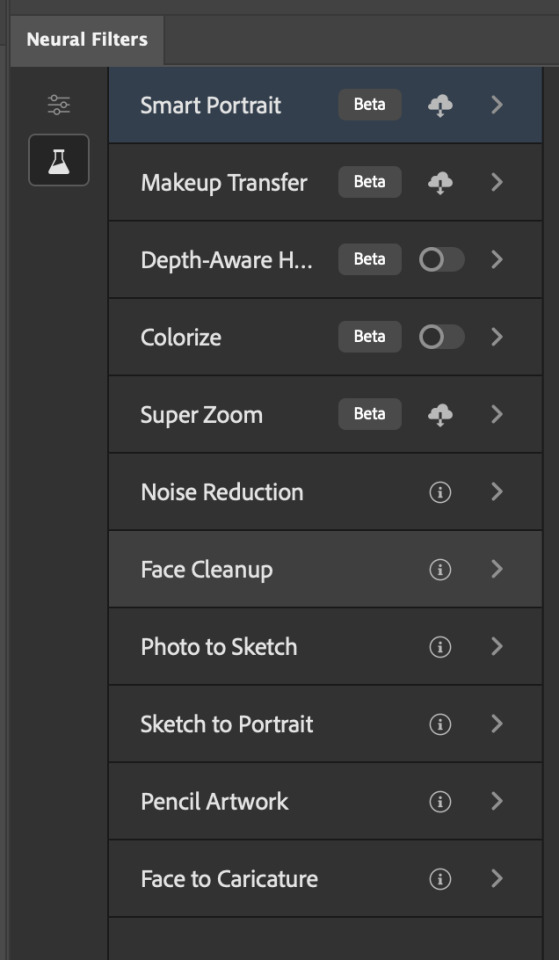

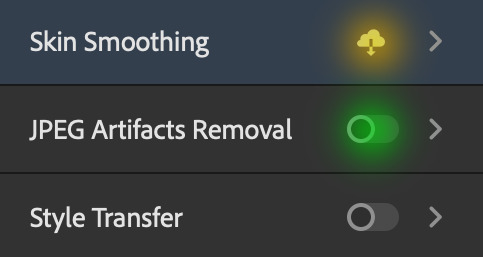

Here are the Neural Filters available in Photoshop 2024:

Skin Smoothing: Removes blemishes on the skin

Smart Portrait: This is a cloud-based filter that allows you to change the mood, facial age, hair, etc. using the sliders+

Makeup Transfer: Applies the makeup (from a reference image) to the eyes & mouth area of your image

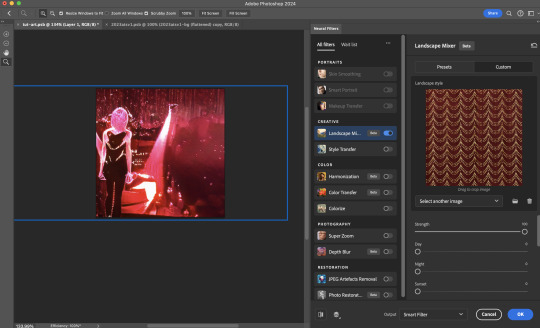

Landscape Mixer: Transforms the landscape of your image (e.g. seasons & time of the day, etc), based on the landscape features of a reference image

Style Transfer: Applies artistic styles e.g. texturings (from a reference image) onto your image

Harmonisation: Matches the colour balance of your image to the lighting of the background image+

Colour Transfer: Applies the colour scheme (of a reference image) onto your image

Colourise: Adds colours onto a B&W image

Super Zoom: Zoom / crop an image without losing resolution+

Depth Blur: Blurs the background of the image

JPEG Artefacts Removal: Removes artefacts caused by JPEG compression

Photo Restoration: Enhances image quality & facial details

+These three filters aren't used in my giffing workflow. The cloud-based nature of Smart Portrait leads to disjointed-looking frames. For Harmonisation, applying this on a gif causes a Neural Filter timeout error. Finally, Super Zoom does not currently support output as a Smart Filter.

If you're running Photoshop 2021 or an earlier version of Photoshop 2022, you will see a smaller selection of Neural Filters:

Things to be aware of:

You can apply up to six Neural Filters at the same time

Filters where you can use your own reference images: Makeup Transfer (portraits only), Landscape Mixer, Style Transfer (not available in Photoshop 2021), and Colour Transfer

Later iterations of Photoshop 2023 & newer: The first three default presets for Landscape Mixer and Colour Transfer are currently broken.

2. Why I use Neural Filters?

Here are my four main Neural Filters use cases in my gifmaking process. In each use case I'll list out the filters that I use:

Enhancing Image Quality:

Common wisdom is to find the highest quality video to gif from for a media release & avoid YouTube whenever possible. However, for smaller / niche media (e.g. new & upcoming musical artists), prepping gifs from highly compressed YouTube videos is inevitable.

So how do I get around this? I have found Neural Filters pretty handy when it comes to both correcting issues from video compression & enhancing details in gifs prepared from these highly compressed video files.

Filters used: JPEG Artefacts Removal / Photo Restoration

Facial Enhancement:

When I prepare gifs from highly compressed videos, something I like to do is to enhance the facial features. This is again useful when I make gifsets from compressed videos & want to fill up my final panel with a close-up shot.

Filters used: Skin Smoothing / Makeup Transfer / Photo Restoration (Facial Enhancement slider)

Colour Manipulation:

Neural Filters is a powerful way to do advanced colour manipulation - whether I want to quickly transform the colour scheme of a gif or transform a B&W clip into something colourful.

Filters used: Colourise / Colour Transfer

Artistic Effects:

This is one of my favourite things to do with Neural Filters! I enjoy using the filters to create artistic effects by feeding textures that I've downloaded as reference images. I also enjoy using these filters to transform the overall atmosphere of my composite gifs. The gifsets where I've leveraged Neural Filters for artistic effects can be found under this tag on usergif.

Filters used: Landscape Mixer / Style Transfer / Depth Blur
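For quick reference, the four use cases above can be written down as a small lookup table. This is only an illustrative Python sketch, not part of Photoshop or of the workflow itself: the names `FILTERS_BY_USE_CASE`, `filters_for` and `fits_in_one_pass` are made up for this example, and the limit of six comes from the "Things to be aware of" note earlier in this tutorial.

```python
# Use cases and filters exactly as listed in the tutorial text above.
FILTERS_BY_USE_CASE = {
    "Enhancing Image Quality": ["JPEG Artefacts Removal", "Photo Restoration"],
    "Facial Enhancement": ["Skin Smoothing", "Makeup Transfer", "Photo Restoration"],
    "Colour Manipulation": ["Colourise", "Colour Transfer"],
    "Artistic Effects": ["Landscape Mixer", "Style Transfer", "Depth Blur"],
}

# The tutorial notes that up to six Neural Filters can be applied at once.
MAX_SIMULTANEOUS_FILTERS = 6


def filters_for(use_cases):
    """Return the filters needed for the chosen use cases, without duplicates."""
    selected = []
    for use_case in use_cases:
        for name in FILTERS_BY_USE_CASE[use_case]:
            if name not in selected:
                selected.append(name)
    return selected


def fits_in_one_pass(use_cases):
    """True if the combined filters stay within the six-filter limit."""
    return len(filters_for(use_cases)) <= MAX_SIMULTANEOUS_FILTERS


if __name__ == "__main__":
    stage_one = ["Enhancing Image Quality", "Facial Enhancement"]
    print(filters_for(stage_one))
    print(fits_in_one_pass(stage_one))
```

Photo Restoration appears under two use cases, so the helper counts it only once when checking against the limit.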

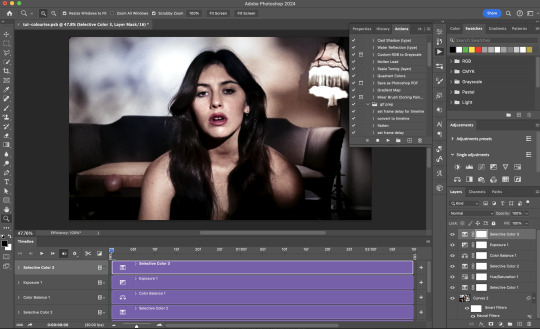

How I use Neural Filters over different stages of my gifmaking workflow:

I want to outline how I use different Neural Filters throughout my gifmaking process. This can be roughly divided into two stages:

Stage I: Enhancement and/or Colourising | Takes place early in my gifmaking process. I process a large number of component gifs by applying Neural Filters for enhancement purposes and adding some base colourings.++

Stage II: Artistic Effects & more Colour Manipulation | Takes place when I'm assembling my component gifs in the big PSD / PSB composition file that will be my final gif panel.

I will walk through this in more detail later in the tutorial.

++I personally like to keep the size of the component gifs in their original resolution (a mixture of 1080p & 4K), to get best possible results from the Neural Filters and have more flexibility later on in my workflow. I resize & sharpen these gifs after they're placed into my final PSD composition files in Tumblr dimensions.
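To get a feel for how much the component gifs shrink at that final resize, here is a rough Python sketch of the arithmetic. The target width of 540 px is an assumption made for this example (the tutorial only says "Tumblr dimensions"), and `scaled_size` is a hypothetical helper, not a Photoshop function.

```python
def scaled_size(src_width, src_height, target_width):
    """Scale a frame to target_width, keeping the aspect ratio."""
    scale = target_width / src_width
    return target_width, round(src_height * scale)


if __name__ == "__main__":
    ASSUMED_TUMBLR_WIDTH = 540  # assumption for illustration only

    for label, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
        new_w, new_h = scaled_size(w, h, ASSUMED_TUMBLR_WIDTH)
        # How many source pixels end up behind each output pixel.
        ratio = (w * h) / (new_w * new_h)
        print(f"{label}: {w}x{h} -> {new_w}x{new_h} "
              f"(about {ratio:.1f} source pixels per output pixel)")
```

Under that assumption, the filters get many source pixels to work with for every pixel in the final panel, which is the flexibility the footnote describes.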

3. Getting started

The essence is to output Neural Filters as a Smart Filter on the smart object when working with the Video Timeline interface. Your workflow will contain the following steps:

Prepare your gif

In the frame animation interface, set the frame delay to 0.03s and convert your gif to the Video Timeline

In the Video Timeline interface, go to Filter > Neural Filters and output to a Smart Filter

Flatten or render your gif (either approach is fine). To flatten your gif, play the "flatten" action from the gif prep action pack. To render your gif as a .mov file, go to File > Export > Render Video & use the following settings.

Setting up:

o.) To get started, prepare your gifs the usual way - whether you screencap or clip videos. You should see your prepared gif in the frame animation interface as follows:

Note: As mentioned earlier, I keep the gifs in their original resolution right now because working with a document of larger dimensions allows more flexibility later on in my workflow. I have also found that I get higher quality results working with more pixels. I eventually do my final sharpening & resizing when I fit all of my component gifs into a main PSD composition file (in Tumblr dimensions).

i.) To use Smart Filters, convert your gif to a Smart Video Layer.

As an aside, I like to work with everything in 0.03s until I finish everything (then correct the frame delay to 0.05s when I upload my panels onto Tumblr).
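To see what the two frame delays mean in practice, here is a minimal Python sketch of the arithmetic. The 60-frame gif is a hypothetical example, and both helper names are made up for illustration.

```python
def frames_per_second(frame_delay_s):
    """Playback rate implied by a per-frame delay given in seconds."""
    return 1.0 / frame_delay_s


def playback_seconds(frame_count, frame_delay_s):
    """Total running time of a gif with the given frame count and delay."""
    return frame_count * frame_delay_s


if __name__ == "__main__":
    FRAME_COUNT = 60  # hypothetical gif length, for illustration only

    for delay in (0.03, 0.05):
        fps = frames_per_second(delay)
        duration = playback_seconds(FRAME_COUNT, delay)
        print(f"delay {delay:.2f}s: about {fps:.1f} fps, "
              f"{FRAME_COUNT} frames play in about {duration:.2f}s")
```

The same frames play more slowly at 0.05s than at 0.03s, so the running time of the panel changes once the delay is corrected for upload.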

For convenience, I use my own action pack to first set the frame delay to 0.03s (highlighted in yellow) and then convert to timeline (highlighted in red) to access the Video Timeline interface. To play an action, press the play button highlighted in green.

Once you've converted this gif to a Smart Video Layer, you'll see the Video Timeline interface as follows:

ii.) Select your gif (now as a Smart Layer) and go to Filter > Neural Filters

Installing Neural Filters:

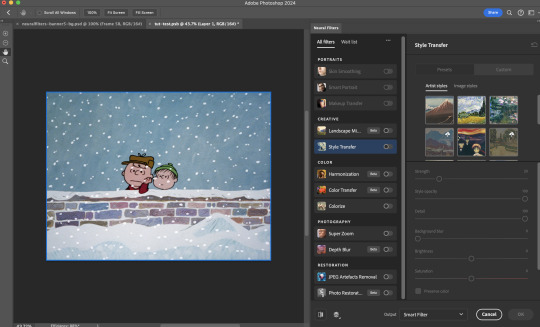

Install the individual Neural Filters that you want to use. If the filter isn't installed, it will show a cloud symbol (highlighted in yellow). If the filter is already installed, it will show a toggle button (highlighted in green)

When you toggle this button, the Neural Filters preview window will look like this (where the toggle button next to the filter that you use turns blue)

4. Using Neural Filters

Once you have installed the Neural Filters that you want to use in your gif, you can toggle on a filter and play around with the sliders until you're satisfied. Here I'll walk through multiple concrete examples of how I use Neural Filters in my giffing process.

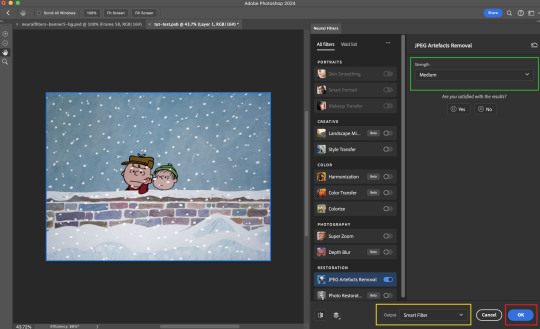

Example 1: Image enhancement | sample gifset

This is my typical Stage I Neural Filters gifmaking workflow. When giffing older or more niche media releases, my main concern is the video compression that leads to a lot of artefacts in the screencapped / video clipped gifs.

To fix the artefacts from compression, I go to Filter > Neural Filters, and toggle on the JPEG Artefacts Removal filter. Then I choose the strength of the filter (boxed in green), output this as a Smart Filter (boxed in yellow), and press OK (boxed in red).

Note: The filter has to be fully processed before you can press the OK button!

After applying the Neural Filters, you'll see "Neural Filters" under the Smart Filters property of the smart layer

Flatten / render your gif

Example 2: Facial enhancement | sample gifset

This is my routine use case during my Stage I Neural Filters gifmaking workflow. For musical artists (e.g. Maisie Peters), YouTube is often the only place where I'm able to find some videos to prepare gifs from. However, even the highest resolution video available on YouTube is highly compressed.

Go to Filter > Neural Filters and toggle on Photo Restoration. If Photoshop recognises faces in the image, there will be a "Facial Enhancement" slider under the filter settings.

Play around with the Photo Enhancement & Facial Enhancement sliders. You can also expand the "Adjustment" menu to make additional adjustments, e.g. removing noise and reducing different types of artefacts.

Once you're happy with the results, press OK and then flatten / render your gif.

Example 3: Colour Manipulation | sample gifset

Want to make a colourful gifset but the source video is in B&W? This is where Colourise from Neural Filters comes in handy! This same colourising approach is also very helpful for colouring poorly lit scenes, as detailed in this tutorial.

Here's a B&W gif that we want to colourise:

Highly recommended: add some adjustment layers onto the B&W gif to improve the contrast & depth. This will give you higher quality results when you colourise your gif.

Go to Filter > Neural Filters and toggle on Colourise.

Make sure "Auto colour image" is enabled.

Play around with further adjustments e.g. colour balance, until you're satisfied then press OK.

Important: When you colourise a gif, you need to double check that the resulting skin tone is accurate to real life. I personally go to Google Images and search up photoshoots of the individual / character that I'm giffing for quick reference.

Add additional adjustment layers until you're happy with the colouring of the skin tone.

Once you're happy with the additional adjustments, flatten / render your gif. And voila!

Note: For Colour Manipulation, I use Colourise in my Stage I workflow and Colour Transfer in my Stage II workflow to do other types of colour manipulations (e.g. transforming the colour scheme of the component gifs)

Example 4: Artistic Effects | sample gifset

This is where I use Neural Filters for the bulk of my Stage II workflow: the most enjoyable stage in my editing process!

Normally I would be working with my big composition files with multiple component gifs inside them. To begin the fun, drag a component gif (in a PSD file) to the main PSD composition file.

Resize this gif in the composition file until you're happy with the placement

Duplicate this gif. Sharpen the bottom layer (highlighted in yellow), and then select the top layer (highlighted in green) & go to Filter > Neural Filters

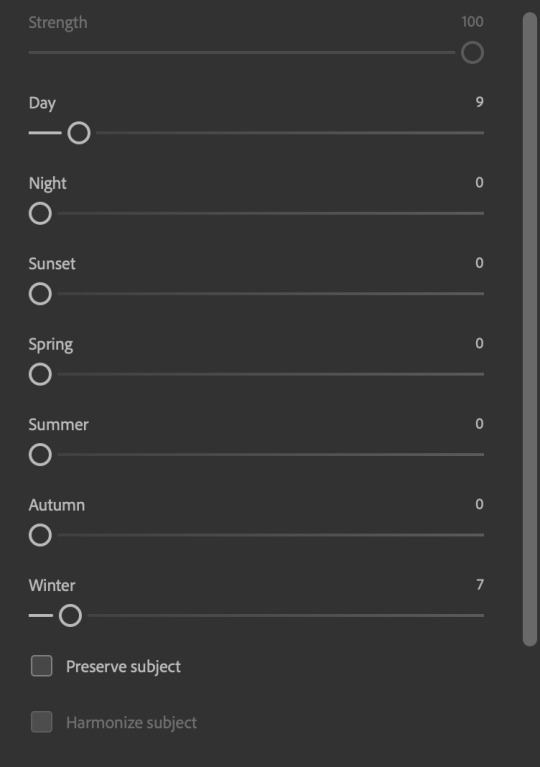

I like to use Style Transfer and Landscape Mixer to create artistic effects from Neural Filters. In this particular example, I've chosen Landscape Mixer

Select a preset or feed a custom image to the filter (here I chose a texture that I have on my computer)

Play around with the different sliders e.g. time of the day / seasons

Important: uncheck "Harmonise Subject" & "Preserve Subject" - these two settings are known to cause performance issues when you render a multiframe smart object (e.g. for a gif)

Once you're happy with the artistic effect, press OK

To ensure you preserve the actual subject you want to gif (bc Preserve Subject is unchecked), add a layer mask onto the top layer (with Neural Filters) and mask out the facial region. You might need to play around with the Layer Mask Position keyframes or Rotoscope your subject in the process.

After you're happy with the masking, flatten / render this composition file and voila!

Example 5: Putting it all together | sample gifset

Let's recap on the Neural Filters gifmaking workflow and where Stage I and Stage II fit in my gifmaking process:

i. Preparing & enhancing the component gifs

Prepare all component gifs and convert them to smart layers

Stage I: Add base colourings & apply Photo Restoration / JPEG Artefacts Removal to enhance the gif's image quality

Flatten all of these component gifs and convert them back to Smart Video Layers (this process can take a lot of time)