#generated by artificial intelligence

Explore tagged Tumblr posts

Text

The Great Alien Invasion of 1915-1916

The Great Alien Invasion of 1915-1916 was a pivotal event in Earth’s history. During this period, Earth faced an unprecedented threat from extraterrestrial invaders. The aliens, equipped with advanced technology and weaponry, launched a full-scale assault on the planet, leading to intense trench warfare. The human forces, despite their bravery and resilience, were struggling to hold their ground and were on the brink of defeat.

The turning point came when the Doctor, a mysterious and powerful time-traveler, arrived on the battlefield. With his vast knowledge and resourcefulness, the Doctor devised a plan to outsmart the alien invaders. He rallied the human forces, provided them with crucial intelligence, and led a series of strategic counterattacks. Through a combination of clever tactics and sheer determination, the Doctor and the human soldiers managed to turn the tide of the war.

In the end, the Doctor’s intervention proved decisive, and the alien invaders were ultimately defeated. The Great Alien Invasion of 1915-1916 stands as a testament to the resilience of humanity and the extraordinary impact of the Doctor’s heroism.

#ai#ai artwork#ai art#generated by artificial intelligence#ai story#WW1#Trench Warfare#TARDIS#The Doctor

4 notes


Text

chatgpt is the coward's way out. if you have a paper due in 40 minutes you should be chugging six energy drinks, blasting frantic circus music so loud you shatter an eardrum, and typing the most dogshit essay mankind has ever seen with your own carpal tunnel-laden hands

#chatgpt#ai#artificial intelligence#anti ai#ai bullshit#fuck ai#anti generative ai#fuck generative ai#anti chatgpt#fuck chatgpt#mine

73K notes


Text

LEO XIV HAS DECLARED BUTLERIAN JIHAD

#196#r196#ruleposting#r/196#ai generated#ai art#artificial intelligence#ai#catholiscism#pope leo xiv#catholic#dune#dune movie#butlerian jihad

11K notes


Text

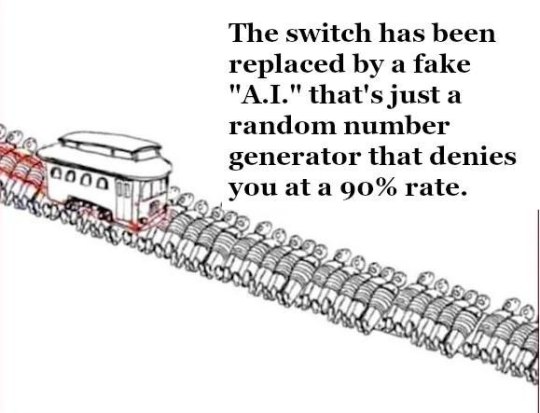

They will decide to kill you eventually.

#the trolley problem#trolley problem#ausgov#politas#australia#artificial intelligence#anti artificial intelligence#anti ai#fuck ai#brian thompson#united healthcare#unitedhealth group inc#uhc ceo#uhc shooter#uhc generations#uhc assassin#uhc lb#uhc#fuck ceos#ceo second au#ceo shooting#tech ceos#ceos#ceo down#ceo information#ceo#auspol#tasgov#taspol#fuck neoliberals

4K notes


Text

I saw a post before about how hackers are now feeding Google false phone numbers for major companies so that the AI Overview will suggest scam phone numbers, but in case you haven't heard,

PLEASE don't call ANY phone number recommended by AI Overview

unless you can follow a link back to the OFFICIAL website and verify that that number comes from the OFFICIAL domain.

My friend just got scammed by calling a phone number that was SUPPOSED to be a number for Microsoft tech support according to the AI Overview

It was not, in fact, Microsoft. It was a scammer. Don't fall victim to these scams. Don't trust AI generated phone numbers ever.

#this has been... a psa#psa#ai#anti ai#ai overview#scam#scammers#scam warning#online scams#anya rambles#scam alert#phishing#phishing attempt#ai generated#artificial intelligence#chatgpt#technology#ai is a plague#google ai#internet#warning#important psa#internet safety#safety#security#protection#online security#important info

3K notes


Text

Artificial intelligence is worse than humans in every way at summarising documents and might actually create additional work for people, a government trial of the technology has found.

Amazon conducted the test earlier this year for Australia’s corporate regulator, the Australian Securities and Investments Commission (ASIC), using submissions made to an inquiry. The outcome of the trial was revealed in an answer to a question on notice at the Senate select committee on adopting artificial intelligence.

The test involved evaluating generative AI models before selecting one to ingest five submissions from a parliamentary inquiry into audit and consultancy firms. The most promising model, Meta’s open source model Llama2-70B, was prompted to summarise the submissions with a focus on ASIC mentions, recommendations, references to more regulation, and to include the page references and context. Ten ASIC staff, of varying levels of seniority, were also given the same task with similar prompts.

Then, a group of reviewers blindly assessed the summaries produced by both humans and AI for coherency, length, ASIC references, regulation references and for identifying recommendations. They were unaware that this exercise involved AI at all. These reviewers overwhelmingly found that the human summaries beat out their AI competitors on every criterion and on every submission, scoring 81% on an internal rubric compared with the machine’s 47%.

Human summaries ran up the score by significantly outperforming on identifying references to ASIC documents in the long document, a type of task that the report notes is a “notoriously hard task” for this type of AI. But humans still beat the technology across the board. Reviewers told the report’s authors that AI summaries often missed emphasis, nuance and context; included incorrect information or missed relevant information; and sometimes focused on auxiliary points or introduced irrelevant information.

Three of the five reviewers said they guessed that they were reviewing AI content. The reviewers’ overall feedback was that they felt AI summaries may be counterproductive and create further work because of the need to fact-check and refer to original submissions which communicated the message better and more concisely.

3 September 2024

5K notes



Text

AI art has no right being in Helpol/witchy spaces. It's so lifeless. So boring. Your Gods would be disappointed in you. They appreciate your creativity, your imagination, the things you do. They don't care about artistic skill and would be thrilled with whatever you create, as long as it's created with your heart and not a stealing algorithm.

#helpol#anti ai#ai#anti generative ai#rant#ai rant#witchcraft#hellenic polytheism#hellenismos#hellenism#ajax talks#artificial intelligence

462 notes


Text

Why I don’t like AI art

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in CHICAGO with PETER SAGAL on Apr 2, and in BLOOMINGTON at MORGENSTERN BOOKS on Apr 4. More tour dates here.

A law professor friend tells me that LLMs have completely transformed the way she relates to grad students and post-docs – for the worse. And no, it's not that they're cheating on their homework or using LLMs to write briefs full of hallucinated cases.

The thing that LLMs have changed in my friend's law school is letters of reference. Historically, students would only ask a prof for a letter of reference if they knew the prof really rated them. Writing a good reference is a ton of work, and that's rather the point: the mere fact that a law prof was willing to write one for you represents a signal about how highly they value you. It's a form of proof of work.

But then came the chatbots and with them, the knowledge that a reference letter could be generated by feeding three bullet points to a chatbot and having it generate five paragraphs of florid nonsense based on those three short sentences. Suddenly, profs were expected to write letters for many, many students – not just the top performers.

Of course, this was also happening at other universities, meaning that when my friend's school opened up for postdocs, they were inundated with letters of reference from profs elsewhere. Naturally, they handled this flood by feeding each letter back into an LLM and asking it to boil it down to three bullet points. No one thinks that these are identical to the three bullet points that were used to generate the letters, but it's close enough, right?

Obviously, this is terrible. At this point, letters of reference might as well consist solely of three bullet-points on letterhead. After all, the entire communicative intent in a chatbot-generated letter is just those three bullets. Everything else is padding, and all it does is dilute the communicative intent of the work. No matter how grammatically correct or even stylistically interesting the AI generated sentences are, they have less communicative freight than the three original bullet points. After all, the AI doesn't know anything about the grad student, so anything it adds to those three bullet points is, by definition, irrelevant to the question of whether they're well suited for a postdoc.

Which brings me to art. As a working artist in his third decade of professional life, I've concluded that the point of art is to take a big, numinous, irreducible feeling that fills the artist's mind, and attempt to infuse that feeling into some artistic vessel – a book, a painting, a song, a dance, a sculpture, etc – in the hopes that this work will cause a loose facsimile of that numinous, irreducible feeling to manifest in someone else's mind.

Art, in other words, is an act of communication – and there you have the problem with AI art. As a writer, when I write a novel, I make tens – if not hundreds – of thousands of tiny decisions that are in service to this business of causing my big, irreducible, numinous feeling to materialize in your mind. Most of those decisions aren't even conscious, but they are definitely decisions, and I don't make them solely on the basis of probabilistic autocomplete. One of my novels may be good and it may be bad, but one thing it definitely is, is rich in communicative intent. Every one of those microdecisions is an expression of artistic intent.

Now, I'm not much of a visual artist. I can't draw, though I really enjoy creating collages, which you can see here:

https://www.flickr.com/photos/doctorow/albums/72177720316719208

I can tell you that every time I move a layer, change the color balance, or use the lasso tool to nip a few pixels out of a 19th century editorial cartoon that I'm matting into a modern backdrop, I'm making a communicative decision. The goal isn't "perfection" or "photorealism." I'm not trying to spin around really quick in order to get a look at the stuff behind me in Plato's cave. I am making communicative choices.

What's more: working with that lasso tool on a 10,000 pixel-wide Library of Congress scan of a painting from the cover of Puck magazine or a 15,000 pixel-wide scan of Hieronymus Bosch's Garden of Earthly Delights means that I'm touching the smallest individual contours of each brushstroke. This is quite a meditative experience – but it's also quite a communicative one. Tracing the smallest irregularities in a brushstroke definitely materializes a theory of mind for me, in which I can feel the artist reaching out across time to convey something to me via the tiny microdecisions I'm going over with my cursor.

Herein lies the problem with AI art. Just like with a law school letter of reference generated from three bullet points, the prompt given to an AI to produce creative writing or an image is the sum total of the communicative intent infused into the work. The prompter has a big, numinous, irreducible feeling and they want to infuse it into a work in order to materialize versions of that feeling in your mind and mine. When they deliver a single line's worth of description into the prompt box, then – by definition – that's the only part that carries any communicative freight. The AI has taken one sentence's worth of actual communication intended to convey the big, numinous, irreducible feeling and diluted it amongst a thousand brushstrokes or 10,000 words. I think this is what we mean when we say AI art is soul-less and sterile. Like the five paragraphs of nonsense generated from three bullet points from a law prof, the AI is padding out the part that makes this art – the microdecisions intended to convey the big, numinous, irreducible feeling – with a bunch of stuff that has no communicative intent and therefore can't be art.

If my thesis is right, then the more you work with the AI, the more art-like its output becomes. If the AI generates 50 variations from your prompt and you choose one, that's one more microdecision infused into the work. If you re-prompt and re-re-prompt the AI to generate refinements, then each of those prompts is a new payload of microdecisions that the AI can spread out across all the words or pixels, increasing the amount of communicative intent in each one.

Finally: not all art is verbose. Marcel Duchamp's "Fountain" – a urinal signed "R. Mutt" – has very few communicative choices. Duchamp chose the urinal, chose the paint, painted the signature, came up with a title (probably some other choices went into it, too). It's a significant work of art. I know because when I look at it I feel a big, numinous irreducible feeling that Duchamp infused in the work so that I could experience a facsimile of Duchamp's artistic impulse.

There are individual sentences, brushstrokes, single dance-steps that initiate the upload of the creator's numinous, irreducible feeling directly into my brain. It's possible that a single very good prompt could produce text or an image that had artistic meaning. But it's not likely, in just the same way that scribbling three words on a sheet of paper or painting a single brushstroke is unlikely to produce a meaningful work of art. Most art is somewhat verbose (but not all of it).

So there you have it: the reason I don't like AI art. It's not that AI artists lack for the big, numinous irreducible feelings. I firmly believe we all have those. The problem is that an AI prompt has very little communicative intent and nearly every (but not every) good piece of art has more communicative intent than fits into an AI prompt.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/25/communicative-intent/#diluted

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#art#uncanniness#eerieness#communicative intent#gen ai#generative ai#image generators#artificial intelligence#generative artificial intelligence#gen artificial intelligence#l

538 notes


Photo

If you feel like it, you can support me :) https://www.buymeacoffee.com/sexystablediffusion https://www.patreon.com/sexy_stable_diffusion

#anime#ai babe#ai beauty#ai girl#artificial intelligence#ai waifu#ai#ai art#ai artwork#ai image#ai art generator#ai art discussion#stable diffusion#sd#ssd#sexy stable diffusion#ai hottie

2K notes


Text

Kitties and Spaghetti

Two kitties on the couch, so very snug,

With a bowl of spaghetti, each took a tug.

One purrs to the other, "This show's a delight,

But this pasta's so long, it's quite a bite!"

The other meows back, with a sauce-covered face,

"Turn up the volume, let's embrace this chase!

For as we twirl noodles, and the plot thickens,

We're the feline critics, with our spaghetti thickens!"

They slurp and they watch, in their cozy abode,

Laughing at jokes, as the story mode.

With each strand of spaghetti, they share a giggle,

Making plans for next time, maybe add a little kibble.

4 notes


Text

Join the Justdavina AI Transgender Fashion Design FUN!

#ai fashion#queer#trans#gay fashion#fashion editorial#fashion model#queer fashion#rainbow fashion#trans fashion#runway fashion#couture#fashion campaign#alt fashion#alternative fashion#fashion#ai generated#ai beauty#ai girl#ai image#ai model#ai sexy#ai babe#justdavina ai#ai#ai woman#ai art#artificial intelligence#ai artwork

735 notes


Text

Stand with game devs against AI

[ID: 7 November tweet from Erika Ishii @/erikaishii: "AI is egregiously disrespectful and dangerous to the workers who pour their time and creativity into making the games we love. In a year with thousands of layoffs despite record corporate profits, I sincerely hope to see peers and fans stand with devs and our labor movements."

This is a reply to a tweet from The Game Awards @/thegameawards which says, "Xbox has announced a partnership with InWorld AI to bring generative AI to games - including AI game dialogue & narrative tools at scale."]

5K notes


Text

How's my tight Negligee? 🥰

#ai artwork#ai babe#ai girl#ai image#ai sexy#ai woman#digitalart#ai generated#ai art#ai#stable diffusion#artificial intelligence#ai artworks#ai artist#ai art gallery#ai art generator#ai waifu#makima#chainsaw man#aiartworks#aiartwork#aiartcommunity#fantasyart#digitalpainting#fantasy

745 notes


Text

A new paper from researchers at Microsoft and Carnegie Mellon University finds that as humans increasingly rely on generative AI in their work, they use less critical thinking, which can “result in the deterioration of cognitive faculties that ought to be preserved.” “[A] key irony of automation is that by mechanising routine tasks and leaving exception-handling to the human user, you deprive the user of the routine opportunities to practice their judgement and strengthen their cognitive musculature, leaving them atrophied and unprepared when the exceptions do arise,” the researchers wrote.

[...]

“The data shows a shift in cognitive effort as knowledge workers increasingly move from task execution to oversight when using GenAI,” the researchers wrote. “Surprisingly, while AI can improve efficiency, it may also reduce critical engagement, particularly in routine or lower-stakes tasks in which users simply rely on AI, raising concerns about long-term reliance and diminished independent problem-solving.” The researchers also found that “users with access to GenAI tools produce a less diverse set of outcomes for the same task, compared to those without. This tendency for convergence reflects a lack of personal, contextualised, critical and reflective judgement of AI output and thus can be interpreted as a deterioration of critical thinking.”

[...]

So, does this mean AI is making us dumb, is inherently bad, and should be abolished to save humanity's collective intelligence from being atrophied? That’s an understandable response to evidence suggesting that AI tools are reducing critical thinking among nurses, teachers, and commodity traders, but the researchers’ perspective is not that simple.

10 February 2025

412 notes
