#primary data collection

Text

I don't want to do this dissertation crap anymore actually

#my god i wish i couldve just done primary data collection instead of driving myself mad with document analysis#but the time frame for primary data is so tough to manage

2 notes

Text

Didn't have going around campus on a male classmate's motorcycle on my bingo card

#lmfaoo#we are under the same supervisor and we are both collecting primary data from students#so yesterday we met at campus to collect data together and he brought his motorcycle? and asked me to get on it if I didn't have problem™#at first I refused to get on his bike partly because I hadn't ridden on one since childhood and#mostly because I didn't want to be seen on a guy's bike 😭 not that the particular guy is a problem#but yeah i understand that it would be highly inefficient if I walked or something so I got on it#he actually seems pretty chill and his wife(?) is our classmate as well. there's no problem unless anyone else interprets it wrong#the circumstances are funny but I have decided not to talk about it with my uni friends so hello internet

3 notes

Text

Everyone is always saying to me ‘what’re your sources? show me the primary source you are referencing!’ and so I go back to the man i keep locked in my basement and I beat him with a stick so I can record him saying the stuff he whispers to me in my dreams, but all he does is writhe around moaning until he cums. Useless!!

1 note

Text

Data Quality in Research: Why It Matters for Accurate Insights

Explore the importance of data quality in research, its role in ensuring accuracy and reliability, and how it impacts insights and outcomes. For more detail, visit: https://www.philomathresearch.com/blog/2024/12/11/understanding-data-quality-in-research-why-it-matters-for-accurate-insights/

#AI in market research#data collection method#data quality in research#primary market research#qualitative research

0 notes

Text

What Is Market Research: Methods, Types & Examples

Learn about the fundamentals of market research, including various methods, types, and real-life examples. Discover how market research can benefit your business and gain insights into consumer behavior, trends, and preferences.

#Market research#Methods#Types#Examples#Data collection#Surveys#Interviews#Focus groups#Observation#Experimentation#Quantitative research#Qualitative research#Primary research#Secondary research#Online research#Offline research#Demographic analysis#Psychographic analysis#Geographic analysis#Market segmentation#Target market#Consumer behavior#Trends analysis#Competitor analysis#SWOT analysis#PESTLE analysis#Customer satisfaction#Brand perception#Product testing#Concept testing

0 notes

Text

Maximizing Data Quality: The Role of Outsourced Survey Programming Services

In the age of information, data reigns supreme. Organizations across industries rely on data-driven insights to make informed decisions, develop strategies, and drive innovation. In the realm of market research, the quality of data holds paramount importance, serving as the bedrock upon which accurate analyses and actionable recommendations are built. Enter Snware Research Services, a leading player in the field of outsourced survey programming services, dedicated to maximizing data quality and transforming raw information into invaluable insights.

The Imperative of Data Quality

In a world inundated with data, the old adage "garbage in, garbage out" has never been truer. The quality of insights derived from data hinges on the quality of the data itself. Poorly structured surveys, ambiguous questions, and technical glitches can compromise the integrity of collected data, rendering subsequent analyses ineffective and decision-making flawed. This is where the expertise of outsourced survey programming services comes into play.

The Role of Outsourced Survey Programming

Outsourcing survey programming to experts like Snware Research Services introduces a host of advantages that directly contribute to maximizing data quality. Let's delve into the multifaceted role that outsourced survey programming plays in this pursuit:

1. Designing Effective Surveys:

Crafting surveys that capture relevant information while maintaining respondent engagement is a delicate art. Snware Research Services excels in designing surveys that are intuitive, user-friendly, and strategically structured. By ensuring clear question wording, logical flow, and optimal response options, the chances of obtaining accurate and actionable data are significantly enhanced.

2. Ensuring Consistency and Standardization:

Inconsistent survey design and question formatting can introduce biases and inaccuracies. Outsourced survey programming services meticulously adhere to industry best practices, ensuring standardization across surveys and minimizing potential sources of error.

3. Navigating Complex Logic and Skip Patterns:

As surveys become more intricate, navigating complex logic and skip patterns becomes a challenge. Snware Research Services boasts the expertise to develop surveys with intricate branching logic, conditional skips, and advanced question routing, guaranteeing accurate and complete data capture.

4. Minimizing Errors and Technical Glitches:

Technical glitches can disrupt the survey-taking experience and compromise data integrity. Outsourced survey programming services employ rigorous testing procedures to identify and rectify errors, ensuring a seamless respondent experience and dependable data collection.

5. Optimizing Data Collection Platforms:

The choice of survey platform can impact data quality. We are well-versed in a variety of survey software, enabling us to recommend platforms that align with research objectives, participant demographics, and data security requirements.

6. Real-Time Monitoring and Quality Assurance:

The journey to data quality doesn't end with survey deployment. Outsourced survey programming services like Snware Research Services provide real-time monitoring, identifying potential issues and implementing corrective measures to maintain data accuracy throughout the data collection process.

7. Tailored Solutions for Diverse Research Objectives:

Every research project is unique, with distinct goals and requirements. Snware Research Services recognizes the importance of tailoring survey programming solutions to fit the specific needs of each project, ensuring that data collection methods align with research objectives.

8. Expertise in Data Cleaning and Preparation:

Data collected through surveys often require thorough cleaning and preparation before analysis. Outsourced survey programming services possess the expertise to clean, validate, and transform raw data into formats that facilitate meaningful analysis and interpretation.

The Snware Approach: Elevating Data Quality to a Science

Snware Research Services brings a comprehensive and meticulous approach to outsourced survey programming, transforming data quality into a scientific pursuit. Leveraging a combination of technical prowess, industry experience, and an unwavering commitment to excellence, we place data quality at the forefront of every project.

Case Study: A Journey to Enhanced Data Quality

To illustrate the tangible impact of outsourced survey programming on data quality, let's explore a hypothetical case study:

Client Background: A pharmaceutical company embarks on a study to gather feedback from healthcare professionals regarding a new medical device.

Challenge: Designing a survey that captures comprehensive insights while ensuring a seamless survey-taking experience for busy healthcare professionals.

Solution: The pharmaceutical company partners with Snware Research Services for outsourced survey programming. Snware's team collaborates with the client to design a survey that features clear and concise questions, incorporates advanced logic for targeted questioning, and is accessible across various devices.

Outcome: The survey, impeccably programmed by Snware Research Services, receives high response rates and yields rich and nuanced data. The pharmaceutical company gains insights that drive product enhancements and informed decision-making, setting the stage for successful device adoption.

Conclusion: Pioneering Data Quality Through Outsourced Survey Programming

As the landscape of market research continues to evolve, the maximization of data quality emerges as a non-negotiable imperative. Outsourced survey programming services, epitomized by the expertise and dedication of Snware Research Services, play a pivotal role in elevating data quality from a lofty goal to an attainable reality. By meticulously designing surveys, optimizing data collection processes, and maintaining rigorous quality assurance, these services empower organizations to transform raw data into actionable insights that drive success, innovation, and progress in an ever-changing world. As we look to the future, the partnership between businesses and outsourced survey programming services will undoubtedly continue to shape the landscape of data-driven decision-making, propelling industries toward excellence and unlocking the full potential of their data.

#market research#survey programming#market#research#services#primary research#data collection services

0 notes

Text

What kind of bubble is AI?

My latest column for Locus Magazine is "What Kind of Bubble is AI?" All economic bubbles are hugely destructive, but some of them leave behind wreckage that can be salvaged for useful purposes, while others leave nothing behind but ashes:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Think about some 21st-century bubbles. The dotcom bubble was a terrible tragedy, one that drained the coffers of pension funds and other institutional investors and wiped out retail investors who were gulled by Super Bowl ads. But there was a lot left behind after the dotcoms were wiped out: cheap servers, office furniture and space, but far more importantly, a generation of young people who'd been trained as web makers, leaving nontechnical degree programs to learn HTML, Perl and Python. This created a whole cohort of technologists from non-technical backgrounds, a first in technological history. Many of these people became the vanguard of a more inclusive and humane tech development movement, and they were able to make interesting and useful services and products in an environment where raw materials – compute, bandwidth, space and talent – were available at firesale prices.

Contrast this with the crypto bubble. It, too, destroyed the fortunes of institutional and individual investors through fraud and Super Bowl ads. It, too, lured in nontechnical people to learn esoteric disciplines at investor expense. But apart from a smattering of Rust programmers, the main residue of crypto is bad digital art and worse Austrian economics.

Or think of Worldcom vs Enron. Both bubbles were built on pure fraud, but Enron's fraud left nothing behind but a string of suspicious deaths. By contrast, Worldcom's fraud was a Big Store con that required laying a ton of fiber that is still in the ground to this day, and is being bought and used at pennies on the dollar.

AI is definitely a bubble. As I write in the column, if you fly into SFO and rent a car and drive north to San Francisco or south to Silicon Valley, every single billboard is advertising an "AI" startup, many of which are not even using anything that can be remotely characterized as AI. That's amazing, considering what a meaningless buzzword AI already is.

So which kind of bubble is AI? When it pops, will something useful be left behind, or will it go away altogether? To be sure, there's a legion of technologists who are learning TensorFlow and PyTorch. These nominally open source tools are bound, respectively, to Google and Facebook's AI environments:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

But if those environments go away, those programming skills become a lot less useful. Live, large-scale Big Tech AI projects are shockingly expensive to run. Some of their costs are fixed – collecting, labeling and processing training data – but the running costs for each query are prodigious. There's a massive primary energy bill for the servers, a nearly as large energy bill for the chillers, and a titanic wage bill for the specialized technical staff involved.

Once investor subsidies dry up, will the real-world, non-hyperbolic applications for AI be enough to cover these running costs? AI applications can be plotted on a 2x2 grid whose axes are "value" (how much customers will pay for them) and "risk tolerance" (how perfect the product needs to be).

Charging teenaged D&D players $10/month for an image generator that creates epic illustrations of their characters fighting monsters is low value and very risk tolerant (teenagers aren't overly worried about six-fingered swordspeople with three pupils in each eye). Charging scammy spamfarms $500/month for a text generator that spits out dull, search-algorithm-pleasing narratives to appear over recipes is likewise low-value and highly risk tolerant (your customer doesn't care if the text is nonsense). Charging visually impaired people $100/month for an app that plays a text-to-speech description of anything they point their cameras at is low-value and moderately risk tolerant ("that's your blue shirt" when it's green is not a big deal, while "the street is safe to cross" when it's not is a much bigger one).

Morgan Stanley doesn't talk about the trillions the AI industry will be worth some day because of these applications. These are just spinoffs from the main event, a collection of extremely high-value applications. Think of self-driving cars or radiology bots that analyze chest x-rays and characterize masses as cancerous or noncancerous.

These are high value – but only if they are also risk-tolerant. The pitch for self-driving cars is "fire most drivers and replace them with 'humans in the loop' who intervene at critical junctures." That's the risk-tolerant version of self-driving cars, and it's a failure. More than $100b has been incinerated chasing self-driving cars, and cars are nowhere near driving themselves:

https://pluralistic.net/2022/10/09/herbies-revenge/#100-billion-here-100-billion-there-pretty-soon-youre-talking-real-money

Quite the reverse, in fact. Cruise was just forced to quit the field after one of their cars maimed a woman – a pedestrian who had not opted into being part of a high-risk AI experiment – and dragged her body 20 feet through the streets of San Francisco. Afterwards, it emerged that Cruise had replaced the single low-waged driver who would normally be paid to operate a taxi with 1.5 high-waged skilled technicians who remotely oversaw each of its vehicles:

https://www.nytimes.com/2023/11/03/technology/cruise-general-motors-self-driving-cars.html

The self-driving pitch isn't that your car will correct your own human errors (like an alarm that sounds when you activate your turn signal while someone is in your blind-spot). Self-driving isn't about using automation to augment human skill – it's about replacing humans. There's no business case for spending hundreds of billions on better safety systems for cars (there's a human case for it, though!). The only way the price-tag justifies itself is if paid drivers can be fired and replaced with software that costs less than their wages.

What about radiologists? Radiologists certainly make mistakes from time to time, and if there's a computer vision system that makes different mistakes than the sort that humans make, it could be a cheap way of generating second opinions that trigger re-examination by a human radiologist. But no AI investor thinks their return will come from selling hospitals systems that reduce the number of X-rays each radiologist processes every day, as a second-opinion-generating system would. Rather, the value of AI radiologists comes from firing most of your human radiologists and replacing them with software whose judgments are cursorily double-checked by a human whose "automation blindness" will turn them into an OK-button-mashing automaton:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

The profit-generating pitch for high-value AI applications lies in creating "reverse centaurs": humans who serve as appendages for automation that operates at a speed and scale that is unrelated to the capacity or needs of the worker:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

But unless these high-value applications are intrinsically risk-tolerant, they are poor candidates for automation. Cruise was able to nonconsensually enlist the population of San Francisco in an experimental murderbot development program thanks to the vast sums of money sloshing around the industry. Some of this money funds the inevitabilist narrative that self-driving cars are coming, it's only a matter of when, not if, and so SF had better get in the autonomous vehicle or get run over by the forces of history.

Once the bubble pops (all bubbles pop), AI applications will have to rise or fall on their actual merits, not their promise. The odds are stacked against the long-term survival of high-value, risk-intolerant AI applications.

The problem for AI is that while there are a lot of risk-tolerant applications, they're almost all low-value; while nearly all the high-value applications are risk-intolerant. Once AI has to be profitable – once investors withdraw their subsidies from money-losing ventures – the risk-tolerant applications need to be sufficient to run those tremendously expensive servers in those brutally expensive data-centers tended by exceptionally expensive technical workers.

If they aren't, then the business case for running those servers goes away, and so do the servers – and so do all those risk-tolerant, low-value applications. It doesn't matter if helping blind people make sense of their surroundings is socially beneficial. It doesn't matter if teenaged gamers love their epic character art. It doesn't even matter how horny scammers are for generating AI nonsense SEO websites:

https://twitter.com/jakezward/status/1728032634037567509

These applications are all riding on the coattails of the big AI models that are being built and operated at a loss in the hope of eventually becoming profitable. If they remain unprofitable long enough, the private sector will no longer pay to operate them.

Now, there are smaller models, models that stand alone and run on commodity hardware. These would persist even after the AI bubble bursts, because most of their costs are setup costs that have already been borne by the well-funded companies who created them. These models are limited, of course, though the communities that have formed around them have pushed those limits in surprising ways, far beyond their original manufacturers' beliefs about their capacity. These communities will continue to push those limits for as long as they find the models useful.

These standalone, "toy" models are derived from the big models, though. When the AI bubble bursts and the private sector no longer subsidizes mass-scale model creation, it will cease to spin out more sophisticated models that run on commodity hardware (it's possible that federated learning and other techniques for spreading out the work of making large-scale models will fill the gap).

So what kind of bubble is the AI bubble? What will we salvage from its wreckage? Perhaps the communities who've invested in becoming experts in PyTorch and TensorFlow will wrestle them away from their corporate masters and make them generally useful. Certainly, a lot of people will have gained skills in applying statistical techniques.

But there will also be a lot of unsalvageable wreckage. As big AI models get integrated into the processes of the productive economy, AI becomes a source of systemic risk. The only thing worse than having an automated process that is rendered dangerous or erratic based on AI integration is to have that process fail entirely because the AI suddenly disappeared, a collapse that is too precipitous for former AI customers to engineer a soft landing for their systems.

This is a blind spot in our policymakers' debates about AI. The smart policymakers are asking questions about fairness, algorithmic bias, and fraud. The foolish policymakers are ensnared in fantasies about "AI safety," AKA "Will the chatbot become a superintelligence that turns the whole human race into paperclips?"

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

But no one is asking, "What will we do if" – when – "the AI bubble pops and most of this stuff disappears overnight?"

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/19/bubblenomics/#pop

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

tom_bullock (modified) https://www.flickr.com/photos/tombullock/25173469495/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/

4K notes

Text

By worrying endlessly about what makes men fight for their servitude as if it were their salvation, Western Marxism rigs the deck against ever becoming hegemonic. […] The celebration of transgression, so characteristic of queer theory, is incompatible with the struggle for legal sovereignty waged by movements of national liberation and people’s democratic dictatorships. […] Trans studies, infused with an ambivalence between gender-deviance and the desire to pass, cannot take up queer theory’s exaltation of transgression uncritically. Eastern Marxism simply does not valorize transgression as such, since its goal is hegemony, to function as a legitimate ruling party representative of the general interest, and the collective transgression of one norm in particular: imperialism.

— Nia Frome (2024), The Problem of Recognition in Transitional States, or Sympathy for the Monster

The author makes the case for 1) why various strains of Eastern Marxism (MLism particularly) seem so compelling to transgender people specifically, and 2) how the tension between queer theory (what Frome describes as having a general preoccupation with the ‘exaltation of transgression’) and trans studies (what Frome describes as being more preoccupied with political goals of hegemony, e.g. gender-affirming healthcare, control of administrative gender data about ourselves, etc.) is directly comparable to the West/East Marxist split, with the author firmly placing queer theory within Western Marxism’s anticommunist preoccupations and theories of state.

I think this is most compellingly argued when she points to the homonationalist (homo-imperialist?) commitments of western LGBT organisations, NGOs, thinktanks, etc. to ‘spreading democracy gay tolerance’ to the backwards Global South. One need only refer to the photo of an IOF soldier standing in a bombed street in Gaza holding up a gay pride flag to recognise the academy’s role in ‘queering’ imperial pursuits. Now obviously this doesn’t mean trans studies is exempt from this (far from it), but what I think this essay does well is demonstrate why trans studies has been famously called “queer theory’s evil twin” and why more broadly the political goals of transgender people are on some level incommensurable with queer theory’s (and downstream of this, the western queer community’s) commitment to transgression as the primary mode of resistance and action.

And, ironically, why despite this desire for eternal transgression, the headline political goal of western gays for the past few decades has been marriage equality, a desire to be folded into pre-existing hegemony (perhaps another example of its Western Marxist tendencies?), in contrast to the transsexual goal of gender liberation and eventual abolition via the pursuit of using medicine and administrative state power to make ourselves our own frankenstein monsters, both scientist and creation (a goal that also necessarily requires a transitionary state, a “monster” state that is neither full capitalism (cissexualism) nor full communism (transsexualism), but an apparatus that gets us from A -> B. This description is blatantly one of both socialism and gender transition itself, and in this comparison it is revealed why the transsexual may desire Marxism-Leninism). This also reveals why transmedicalism - the desire to uphold cissexual, psychiatric, pathological conceptions of transgenderism as a mental illness and/or sexual perversion - is a dead-end, a forfeiting of even more power to those who already have it, and fundamentally different from the goal of free HRT, surgery, name changes and gender marker changes for everyone forever amen

451 notes

Text

"The Netherlands is pulling even further ahead of its peers in the shift to a recycling-driven circular economy, new data shows.

According to the European Commission’s statistics office, 27.5% of the material resources used in the country come from recycled waste.

For context, Belgium is a distant second, with a “circularity rate” of 22.2%, while the EU average is 11.5% – a mere 0.8 percentage point increase from 2010.

“We are a frontrunner, but we have a very long way to go still, and we’re fully aware of that,” Martijn Tak, a policy advisor in the Dutch ministry of infrastructure and water management, tells The Progress Playbook.

The Netherlands aims to halve the use of primary abiotic raw materials by 2030 and run the economy entirely on recycled materials by 2050. Amsterdam, a pioneer of the “doughnut economics” concept, is behind much of the progress.

Why it matters

The world produces some 2 billion tonnes of municipal solid waste each year, and this could rise to 3.4 billion tonnes annually by 2050, according to the World Bank.

Landfills are already a major contributor to planet-heating greenhouse gases, and discarded trash takes a heavy toll on both biodiversity and human health.

“A circular economy is not the goal itself,” Tak says. “It’s a solution for societal issues like climate change, biodiversity loss, environmental pollution, and resource-security for the country.”

A fresh approach

While the Netherlands initially focused primarily on waste management, “we realised years ago that’s not good enough for a circular economy.”

In 2017, the state signed a “raw materials agreement” with municipalities, manufacturers, trade unions and environmental organisations to collaborate more closely on circular economy projects.

It followed that up with a national implementation programme, and in early 2023, published a roadmap to 2030, which includes specific targets for product groups like furniture and textiles. An English version was produced so that policymakers in other markets could learn from the Netherlands’ experiences, Tak says.

The programme is focused on reducing the volume of materials used throughout the economy partly by enhancing efficiencies, substituting raw materials for bio-based and recycled ones, extending the lifetimes of products wherever possible, and recycling.

It also aims to factor environmental damage into product prices, require a certain percentage of second-hand materials in the manufacturing process, and promote design methods that extend the lifetimes of products by making them easier to repair.

There’s also an element of subsidisation, including funding for “circular craft centres and repair cafés”.

This idea is already in play. In Amsterdam, a repair centre run by refugees, and backed by the city and outdoor clothing brand Patagonia, is helping big brands breathe new life into old clothes.

Meanwhile, government ministries aim to aid progress by prioritising the procurement of recycled or recyclable electrical equipment and construction materials, for instance.

State support is critical to levelling the playing field, analysts say...

Long Road Ahead

The government also wants manufacturers – including clothing and beverages companies – to take full responsibility for products discarded by consumers.

“Producer responsibility for textiles is already in place, but it’s work in progress to fully implement it,” Tak says.

And the household waste collection process remains a challenge considering that small city apartments aren’t conducive to having multiple bins, and sparsely populated rural areas are tougher to service.

“Getting the collection system right is a challenge, but again, it’s work in progress.”

...Nevertheless, Tak says wealthy countries should be leading the way towards a fully circular economy as they’re historically the biggest consumers of natural resources."

-via The Progress Playbook, December 13, 2023

#netherlands#dutch#circular economy#waste management#sustainable#recycle#environment#climate action#pollution#plastic pollution#landfill#good news#hope

523 notes

Text

The moon sign and processing emotions 🌕

I’m sure you’ve all heard that Capricorn moons don’t have feelings 😶‍🌫️, as well as other signs (usually everybody but water moons seems to have feelings lol), but I am here to debunk that tired myth and share a secret with y’all. 🤫

EVERY moon sign is capable of feeling. We are all feeling beings for crying out loud. The difference seen in the moon sign (as well as house, aspects, degrees, etc, but we’re just going to focus on the sign for this post), is in how we process these feelings.

Astrology is not a vacuum (where is the vacuum emoji?), where one placement works on its own.

Remember that some of these can and will overlap. I have a stellium in both Aquarius and Pisces, although my moon is in Cancer 5H, so with that being said, I also relate to how Aquarius, Leo and Pisces moons process emotions. My moon also aspects Mars in Pisces, Saturn in Aries and Venus in Capricorn, so I will have a flair of those signs in the way I process as well.

The signs:

Aries moons will process their feelings through the lens of masculinity (ik, it’s cliche but I’m just scratching the surface), they may look to men, as they are mars ruled, as examples of this. Sports, physical activity, and exerting energy are how they process feelings.

Taurus moons process their feelings through comfort. They may like to sit outside, study nature and the seasons. Gardening 👩🏾‍🌾, grounding (being barefoot 🦶🏽, hugging trees 🌳, just laying on grass), talking with plants, hugging/physical contact with others, helps them process.

Gemini moons process their feelings through information. They are more likely to want to talk with others about how they’ve processed said emotion, so they can gather data on which one feels right for them. Talking 🗣️, reading 📖 about feelings, or listening 👂🏾 to others helps them process

Cancer moons process our feelings through our family, and especially our mothers/primary feminine caregivers. Being with and around family 👩🏿‍🍼, specifically being nurtured, cared for, and taken care of by them, is how we process our feelings.

Leo moons process their feelings through their relationship with their father or children. Their loved ones are their strength. They can be child-like, needing to play or be dramatic. They may dramatize their feelings or use entertainment as a vehicle to process, such as listening to sad music or watching tear-jerking movies.

Virgo moons process their feelings through collecting data, similar to Gemini moons, but they are concerned with what has worked. For example if they had a friend breakup recently, they are going to go back to the last friend breakup they had and see what worked and didn’t in relation to how they moved on (if they did), and do that. Or, figure out what’s practical for that moment. If it’s not safe to process, they will backlog it and wait for the appropriate time.

Virgo is the best sign I can use to incorporate the other signs’ ways of processing. So, for example, let’s say last time they had a romantic break up they used the gym as a way of getting through it. This Virgo moon might have moon in the 1H, or moon aspecting mars, or Aries. Or even Venus/or 2H if this had something to do with them wanting to change their physical appearance/their self worth was affected.

Libra moons process their feelings through their partner or the person they see/are with the most. They might also process through creating (Venus is exalted in Pisces), via writing, singing, styling, shopping, etc. They also like to use music as a vehicle, like Leos; however, the person they are with the most will largely have an impact on what they do.

Scorpio moons process their feelings through (you guessed it) death and change. I’m almost led to believe if the situation doesn’t feel like a life or death situation, they really don’t care (and not in an apathetic way), it’s just that they can hold so much, so they’re very selective as to what they want to process or what isn’t even worth it. They (imo and I mean the evolved 👀 ones) will use more therapeutic/psychological approaches to processing feelings.

(This one was the hardest to think/feel into) Sagittarius moons process their feelings through not processing them (lol I’m kidding). They also look to the past, but through the lens of stories, fables and tales. Journals, too. They like to see how people of the past processed. They may like to connect the universal or cosmological system of feelings and how that shapes humans. I can see them processing feelings extremely quickly.

Capricorn moons process their feelings through practicality. Similar to Virgo moons, whatever makes sense at that moment is what they’re going to lean on. They may look to their elders and replicate how people more senior than them process. They feel like older people have figured out the best ways to live, or not.

Aquarius moons process their feelings through the group, especially friends and their community. They also process their feelings through talking and research. They are also similar to Capricorn (with same rulership) where they may look to their elders for emotional wisdom.

Pisces moons process their feelings through their dreams, so lucid dreaming is really good for them if they can’t quite process “irl.” as well as escaping/being lost and confused and “coming back to themselves.” They also process by daydreaming. This is a sign that will do well (Leo moons as well) with imagining their feelings as a place and coming up with ideas on how they’d navigate said world.

🌍 moons are more likely to process via herbs. Yes, smoking included as well as touch

💦 moons are more likely to process via crying or being near water, daydreaming, music

🔥 moons are more likely to process via activity, like dancing or even being around fire, cooking can be good

🌬️moons are more likely to process via information and affirmations. Talking with others, gathering information

Cardinal signs are more likely to react first and process “faster.” Most likely to want (key word, want) to get it out of their system.

Mutable signs are more likely to externalize their feelings and relate to them through the lens of what’s happening in their life, people around them, etc.

Fixed signs are more likely to sit with their feelings and process “longer.” Most likely to internalize.

Let me know if any of these resonate with you, especially you Sagittarius moons 🫠 and let’s allllll remember that everyone has feelings 😉

159 notes

Text

On recent far-left attacks on the Anti-Defamation League

Before we start:

- I think the ADL is wrong about Musk's salutes.

- I think the ADL's Israel advocacy sometimes comes into conflict with their mission in the diaspora. I think their methodologies for data collection and reporting need improvement.

- I think that the ADL is flawed, imperfect and does much more good than harm.

---

Christopher Hitchens put into words what academics used to live by:

"What can be asserted without evidence can also be dismissed without evidence".

The burden of proof is on those making the claim, and the claims of droptheadl.org aren't supported with primary sources or evidence.

For example:

To support its claims about the ADL and SNCC, droptheadl.org offers a link, presenting it as a citation.

This is a link to a Google Books entry. There's no actual text, no citation, no chapter, no page, just the claim that somewhere in this 300-page book exists proof of the ADL denouncing SNCC as racist.

However, that's not in the book. Chapter two talks about this incident in detail, so I read it.

In response to a SNCC newsletter (this is what a primary source looks like!) containing many factual errors about Israel,

...Morris Abram, president of the American Jewish Committee (AJC), summed up their outrage: “Anti-Semitism is anti-Semitism whether it comes from the Ku Klux Klan or from extremist Negro groups

[For those who haven't studied the era: at this point, "Negro" was still the word which the black community preferred. The transition to widespread identification as 'black' got going in the 60s and finished in the 70s. The use of the word 'Negro' here is not a slur. I state this in advance because I know how the illiberal left wields its willful ignorance]

...

Abram was also careful to echo what the ADL had said: that SNCC’s article put it in the same anti-Israeli trench as the Arab world and the Soviet Union.

That's verifiably, unquestionably true. That's the position SNCC took, because that's where they got their information.

Droptheadl.org lied. This book doesn't say what they claim it says, which is why they didn't quote it or offer a specific citation. Why let facts get in the way of the narrative which makes them feel good about themselves?

The book, which I recommend reading, isn't about the ADL. It's a scholarly examination of the relationships between the wars the Arab world launched on Israel and the US Civil Rights Movement. This requires much discussion of the impact on the complex relationships between black communities and Jewish communities in the US in the context of their views on Israel and Palestine.

It's fascinating. Here's another excerpt illustrating why many Jews saw SNCC as taking an antisemitic turn:

One day in May of 1967, [Stokely] Carmichael and [H. Rap] Brown were in Alabama chatting with Donald Jelinek, a lawyer who worked with SNCC.

Jelinek, who was Jewish, expressed his positive feelings about Israel and his concerns about the Jewish state’s situation in that tension-filled month as war clouds were on the horizon in the Middle East.

“So it was a shock to me,” Jelinek later recounted, “when my SNCC friends mildly indicated support for the Arabs.” Mildly stated or not, their sentiments prompted Jelinek to reply, “But they may wipe out and destroy Israel.”

Carmichael adroitly changed the subject with some humor, and the men began laughing.

Jelinek thereafter overheard Brown quietly singing to himself, “arms for the Arabs, sneakers for the Jews.” When Jelinek asked him what that song meant, an embarrassed Brown explained that he had learned the song as a student in Louisiana. It implied that the Israelis would need sneakers (tennis shoes) to run from the Arabs, who were armed with weapons from abroad.

My qualms with this, and my disappointment in and disagreement with both Carmichael and Brown, don't make me a racist. They don't make the AJC or the ADL racist, and they don't make Jelinek, the Jewish lawyer working with SNCC, a racist or a poor ally.

Zionism is the belief that Jews should have self-determination in their homeland.

Nazism was the belief that racially superior Aryans own the world, should be organized through fascist methods, and that the genocide of the Jewish people was explicitly required because they were the source of all evil and the obstacle to progress.

These are not the same. Suggesting they are the same, as Carmichael did, is morally and intellectually bankrupt. Pointing this out doesn't make me a racist. It makes me literate.

I still own a copy of Carmichael's book, Black Power. Carmichael (who later changed his name to Kwame Ture) was a complex person. Like every other historical figure, he was neither a saint nor a demon.

I can admire a lot about the Black Panthers without falsely claiming that nothing they ever did or said was troubling, poorly reasoned, or bigoted. The world is more complex than that.

There are no saints. Learn this important truth and use it to guide your understanding of the world around you. There are no saints.

Gandhi, for instance, was a great leader for Indian self-rule and a visionary of nonviolent protest. He was also a racist as a young man who said black people "...are troublesome, very dirty and live like animals." Read about his work in South Africa. He was also really weird about sex and slept naked with his grand niece, which we rightly recognize today as sexual abuse. He wasn't a saint or a demon, he was a person.

People are complex and flawed. If you want to understand people, history, and movements, wrap your head around this and keep it with you: People and their movements are complex and flawed.

But the depth of reasoning I see from the illiberal left is "ADL criticized SNCC, so they're Nazis."

No, child. The world is much, much more complex than that. Why did you go to college if you weren't going to learn anything there?

My 14yo is right. US leftists (not liberals, leftists) are allergic to nuance and discard the facts contradicting any narrative which makes them feel good about themselves.

Selah

Deep breath in, slow breath out.

The book really delves into some of the factors contributing to the deteriorating relationship at the time between Jewish Americans and Black Americans. It points to this essay by James Baldwin, titled "Negroes Are Anti-Semitic Because They're Anti-White." I urge you to read it; it is a fascinating artifact of its time and place.

And this:

Jews had long advocated for black liberation by, for example, playing a role in the foundation of the National Association for the Advancement of Colored People (NAACP) in 1909. Jewish support for blacks was well known; as early as February of 1942, the American Jewish Committee published a study titled “Jewish Contribution to Negro Welfare.” Having experienced the sting of anti-Semitism, many Jews believed they were fighting in the same trench against discrimination alongside African Americans. When the civil rights struggle grew to become a mass movement in the 1950s and early 1960s, Jewish moral and financial support was crucial, and Jews were disproportionately well-represented among those whites who lent their support to the cause. Jewish financial contributions to civil rights groups were also significant. Jews even were the subject of criticism from some southern whites for the high-profile role they played in helping blacks win their freedom. All this compounded a sense of betrayal by SNCC that was felt by many Jewish Americans.

It should not be surprising or taken as racist that Jews objected to SNCC's advocacy against Israel's existence and I maintain that any call for Israel to be destroyed is innately, inarguably antisemitic. No other nation endures calls for its destruction. Just the Jewish one.

There was unquestionably tension between SNCC and the entire spectrum of non-black Americans who supported SNCC when SNCC ejected non-black members. From our perspective, decades removed, I can understand both why SNCC members narrowly voted for this AND why non-black members of SNCC were hurt and disillusioned. All of those perspectives were (and are) valid.

When I was an undergrad studying African American Political Thought, we discussed these tensions head-on, using primary sources, and evaluated them dispassionately.

We concluded that there are no villains in this story. SNCC got a bunch of facts wrong about Israel, their staunch Jewish allies were profoundly disappointed, saw hypocrisy in SNCC's position, and said so.

I think that far left Americans overlaid their feelings about a domestic struggle on a foreign one where they don't fit...and then discarded the facts and the complexity which got in the way of a satisfying narrative which made them feel like the good guys instead of forcing them to grapple with an uncomfortably complex reality.

I think that's what the illiberal left still does. It doesn't like complexity, it doesn't like academic rigor; it likes stories it can tell itself about its moral purity and discards facts, complexity, or rigor which threaten its view of itself as a savior.

The world is complex. People are complex. Movements are complex. Organizations are complex. History is complex. Justice is complex.

The ADL isn't perfect, its leaders haven't been and are not saints or tzadikim, but the good they do for all Americans radically outweighs their failings and I'm going to keep supporting them while yelling at them to do better.

If you're an ADL hater and have any actual evidence and primary sources on racism from the ADL, I really want to see it, because this weak sauce from droptheadl.org doesn't make the case the illiberal left thinks it makes. And they'd know that if they had learned anything in college about how scholarship works and how arguments are constructed.

The illiberal left perhaps forgets how the ADL responded when Trump called for requiring American Muslims to register.

“If one day Muslim Americans will be forced to register their identities, then that is the day that this proud Jew will register as a Muslim.”

- ADL chief executive Jonathan Greenblatt

#illiberal left#sncc#Adl#leftist antisemitism#black panthers#jumblr#Black Power and Palestine#anti defamation league#elon musk#Nuance#History#Us history#Intellectual honesty#Intellectual integrity

231 notes

Text

Data Quality in Research: Why It Matters for Accurate Insights

In the realm of research, data quality holds a pivotal position. It serves as the backbone of insightful decision-making, offering researchers, businesses, and policymakers the confidence to act on their findings. But what exactly is data quality in research, and why is it such a critical component of the research process? In this comprehensive blog, we’ll explore the concept of data quality, its dimensions, its importance, and how to maintain it throughout the research lifecycle.

Understanding Data Quality in Research

Data quality in research refers to the accuracy, consistency, reliability, and relevance of data collected, processed, and analyzed during a study. High-quality data ensures that research outcomes are valid, reliable, and actionable. Conversely, low-quality data can lead to erroneous conclusions, misinformed strategies, and wasted resources.

Dimensions of Data Quality in Research

To evaluate data quality effectively, researchers consider several key dimensions:

1. Accuracy

Accuracy measures how closely data reflects the real-world phenomena it represents. Errors in data collection or recording, such as typos, misinterpretation, or flawed instruments, can undermine accuracy.

2. Completeness

Completeness assesses whether all required data is collected. Missing or incomplete data can skew results and compromise the integrity of the research.

3. Consistency

Consistency examines whether data remains uniform across different datasets or timeframes. Inconsistent data can result from discrepancies in collection methods, standards, or time-sensitive variations.

4. Timeliness

Timeliness reflects whether the data is up-to-date and relevant to the research context. Stale or outdated data may not accurately capture current trends or behaviors.

5. Relevance

Relevance focuses on whether the data aligns with the research objectives. Even accurate and complete data may be of little use if it doesn’t address the study’s purpose.

6. Reliability

Reliability checks whether the data collection methods and outcomes are repeatable and produce consistent results under similar conditions.

7. Integrity

Integrity examines the authenticity and trustworthiness of data sources. Fabricated or manipulated data can severely compromise research validity.
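
As a rough illustration of how a couple of these dimensions could be turned into numbers, here is a minimal Python sketch of a completeness score and a timeliness score. The record layout, the field names (`respondent_id`, `age`, `collected_on`), and the 365-day freshness window are all assumptions invented for this example; they do not describe any particular research tool or Philomath Research's own process.

```python
from datetime import date

# Hypothetical survey records; None marks a missing answer.
records = [
    {"respondent_id": 1, "age": 34, "collected_on": date(2024, 11, 2)},
    {"respondent_id": 2, "age": None, "collected_on": date(2024, 11, 5)},
    {"respondent_id": 3, "age": 51, "collected_on": date(2022, 1, 15)},
    {"respondent_id": 4, "age": 29, "collected_on": date(2024, 10, 20)},
]

# Assumed list of fields every record is expected to carry.
REQUIRED_FIELDS = ("respondent_id", "age", "collected_on")


def completeness(rows, fields=REQUIRED_FIELDS):
    """Share of required cells that are actually filled in (0.0 to 1.0)."""
    total = len(rows) * len(fields)
    if total == 0:
        return 0.0
    filled = sum(1 for row in rows for f in fields if row.get(f) is not None)
    return filled / total


def timeliness(rows, as_of, max_age_days=365):
    """Share of rows collected within max_age_days of the as_of date."""
    if not rows:
        return 0.0
    fresh = sum(
        1
        for row in rows
        if row.get("collected_on") is not None
        and (as_of - row["collected_on"]).days <= max_age_days
    )
    return fresh / len(rows)


completeness_score = completeness(records)
timeliness_score = timeliness(records, as_of=date(2024, 12, 11))
print(f"completeness: {completeness_score:.2f}")
print(f"timeliness:   {timeliness_score:.2f}")
```

Scores like these are only a starting point: what counts as "required" or "fresh" depends on the study, so the thresholds would need to be set per project.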

Why Is Data Quality Important in Research?

Data quality is not merely a technical or procedural concern; it directly impacts every stage of the research process and its eventual applications. Below are the reasons why data quality is paramount in research:

1. Ensures Validity of Findings

High-quality data ensures that research findings accurately reflect the phenomenon being studied. This validity is essential for drawing credible and actionable conclusions.

2. Supports Reliable Decision-Making

In business, healthcare, or public policy, decisions are often based on research insights. Poor data quality can lead to incorrect strategies and significant financial or social repercussions.

3. Enhances Credibility

Research organizations, such as Philomath Research, thrive on their reputation. Delivering high-quality, data-backed insights strengthens credibility and client trust.

4. Facilitates Compliance and Ethical Standards

High-quality data ensures compliance with legal and ethical guidelines, particularly in sensitive fields like healthcare, finance, and social research.

5. Optimizes Resource Utilization

Data errors or inaccuracies often require additional time and resources to correct. Ensuring quality from the outset prevents these inefficiencies.

6. Drives Innovation

Reliable data fuels innovation by providing a solid foundation for new hypotheses, product developments, and process improvements.

Common Challenges in Maintaining Data Quality

Despite its importance, achieving and maintaining data quality is not without challenges. Researchers frequently encounter the following issues:

Incomplete or missing data

Errors in data collection tools or techniques

Biased sampling methods

Inconsistencies in data standards or formats

Data redundancy

Privacy and security concerns

Strategies to Ensure Data Quality in Research

Maintaining data quality requires a systematic approach that integrates best practices at every stage of the research process. Here are key strategies for ensuring data quality:

1. Define Clear Objectives

Begin with a clear research purpose and well-defined objectives. This clarity guides the selection of relevant data and reduces irrelevant noise.

2. Use Reliable Data Collection Methods

Invest in well-designed surveys, interviews, or experiments. Ensure that tools are tested for validity and reliability before deployment.

3. Implement Data Quality Checks

Incorporate routine checks for errors, inconsistencies, and missing values during and after data collection. Use statistical tools or software to automate this process.

4. Standardize Data Collection Processes

Standardize procedures across teams and timeframes to minimize inconsistencies. Provide training to ensure everyone follows the same protocols.

5. Leverage Technology

Use advanced data collection, cleaning, and analysis tools to reduce manual errors. Technologies like AI and machine learning can also identify and address anomalies in real time.

6. Maintain Data Integrity

Verify the authenticity of data sources and prioritize transparency. Avoid any practices that could compromise the reliability of the data.

7. Document and Review

Maintain detailed records of data collection and processing. Periodically review these processes to identify potential areas of improvement.
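
The routine checks described in strategy 3 could be automated along the following lines. This is a minimal sketch in plain Python, not a definitive implementation: the `run_quality_checks` helper, the field names, and the valid age range of 18 to 99 are hypothetical choices made for illustration.

```python
from collections import Counter


def run_quality_checks(rows, id_field="respondent_id", age_range=(18, 99)):
    """Return a list of (row_index, issue) tuples for common data problems."""
    issues = []
    # Count how often each ID occurs so duplicates can be flagged.
    id_counts = Counter(row.get(id_field) for row in rows)
    low, high = age_range
    for index, row in enumerate(rows):
        # Missing values: any field left as None or as an empty string.
        for field, value in row.items():
            if value is None or value == "":
                issues.append((index, f"missing value in '{field}'"))
        # Duplicates: the same respondent ID appears more than once.
        if row.get(id_field) is not None and id_counts[row[id_field]] > 1:
            issues.append((index, f"duplicate {id_field} {row[id_field]}"))
        # Inconsistencies: numeric age outside the assumed plausible range.
        age = row.get("age")
        if isinstance(age, (int, float)) and not (low <= age <= high):
            issues.append((index, f"age {age} outside {low}-{high}"))
    return issues


# Hypothetical sample data with one problem of each kind.
survey = [
    {"respondent_id": 101, "age": 27, "region": "North"},
    {"respondent_id": 102, "age": None, "region": "South"},
    {"respondent_id": 101, "age": 45, "region": "East"},
    {"respondent_id": 103, "age": 240, "region": ""},
]

report = run_quality_checks(survey)
for index, issue in report:
    print(f"row {index}: {issue}")
```

Running a check like this during collection, and not only afterwards, makes it possible to go back to a respondent or fix an instrument while the study is still in the field.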

Case Study: Data Quality at Philomath Research

At Philomath Research, we emphasize data quality as the cornerstone of our research methodology. By employing robust data collection instruments, rigorous quality checks, and advanced analytics, we deliver insights that our clients can trust. Our commitment to data quality not only enhances the value of our findings but also ensures compliance with global research standards.

Future Trends in Data Quality for Research

As research methodologies evolve, so do the standards and technologies for ensuring data quality. Key future trends include:

AI-Driven Data Cleaning: Automation and machine learning algorithms will further enhance the ability to detect and correct data anomalies.

Real-Time Quality Monitoring: Advancements in technology will allow for real-time monitoring and adjustments during data collection.

Integration of Big Data: Ensuring quality in large-scale datasets will become a critical focus as researchers increasingly rely on big data.

Conclusion

Data quality in research is not just a technical necessity; it’s the foundation of credible, actionable, and impactful insights. For companies like Philomath Research, maintaining high data quality ensures that clients receive valuable and reliable findings. By understanding its dimensions, addressing challenges, and implementing best practices, researchers can uphold the integrity of their work and drive meaningful progress.

Would you like to learn more about how Philomath Research ensures data quality? Get in touch with us today!

FAQs

1. What is data quality in research?

Data quality in research refers to the accuracy, consistency, reliability, and relevance of data collected, processed, and analyzed during a study. High-quality data ensures that research outcomes are valid, actionable, and reliable.

2. Why is data quality important in research?

Data quality is crucial because it:

Ensures the validity of findings.

Supports reliable decision-making.

Enhances the credibility of research.

Facilitates compliance with ethical standards.

Optimizes resource utilization.

Drives innovation by providing reliable insights.

3. What are the key dimensions of data quality?

The key dimensions of data quality include:

Accuracy: Data accurately represents the real-world phenomena.

Completeness: All required data is collected.

Consistency: Uniformity across datasets or timeframes.

Timeliness: Data is up-to-date and relevant.

Relevance: Data aligns with the research objectives.

Reliability: Repeatability of data collection outcomes.

Integrity: Authenticity and trustworthiness of data sources.

4. What are common challenges in maintaining data quality?

Some common challenges include:

Missing or incomplete data.

Errors in data collection methods.

Biased sampling techniques.

Inconsistent data standards or formats.

Data redundancy.

Privacy and security concerns.

5. How can researchers ensure data quality?

Researchers can maintain data quality by:

Defining clear research objectives.

Using reliable data collection methods.

Conducting routine data quality checks.

Standardizing data collection processes.

Leveraging technology like AI for anomaly detection.

Ensuring data integrity and authenticity.

Documenting and periodically reviewing processes.

6. What role does technology play in maintaining data quality?

Technology enhances data quality by:

Automating data collection and cleaning processes.

Identifying and addressing anomalies in real-time using AI.

Standardizing data formats across large datasets.

Facilitating real-time quality monitoring during data collection.

7. Why is Philomath Research committed to data quality?

Philomath Research prioritizes data quality to ensure:

Credible and actionable insights for clients.

Compliance with global research standards.

Efficient and effective use of resources.

Through robust methodologies and advanced analytics, Philomath Research delivers high-quality data-backed findings.

8. What are future trends in data quality for research?

Emerging trends include:

AI-Driven Data Cleaning: Automating error detection and correction.

Real-Time Quality Monitoring: Immediate adjustments during data collection.

Big Data Integration: Addressing quality concerns in large-scale datasets.

9. How does data quality impact decision-making?

High-quality data provides accurate and reliable insights, enabling informed decision-making across fields like business, healthcare, and public policy. Poor data quality can lead to erroneous conclusions and adverse outcomes.

10. How can I learn more about Philomath Research’s approach to data quality?

To explore Philomath Research’s data quality practices and how they can benefit your organization, feel free to get in touch with us today!

#data quality in research#market research companies#quality research#primary market research#AI in market research#data collection method

0 notes

Text

Primary Research vs Secondary Research: Definitions, Differences, and Examples

Both primary and secondary research holds a significant place in the researcher’s toolkit. Primary research facilitates the collection of fresh, original data, while secondary research leverages existing information to provide context and insights.

#Academic research#Advantages#Analysis#Case studies#Comparative research#data analysis#data collection#Data sources#Definitions#Differences#Disadvantages#Examples#Information sources#Literature review#market xcel#primary data#primary research#qualitative research#Quantitative research#Research comparison#Research design#Research examples#research methodology#Research methods#Research process#research techniques#Research tools#Research types#Secondary data#Secondary research

0 notes

Text

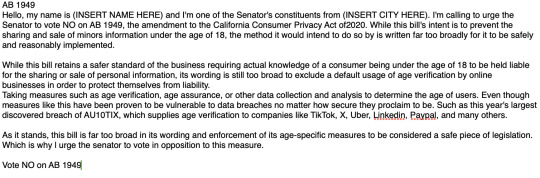

RECENT UPDATES ON THE BAD INTERNET CALIFORNIA BILLS:

Sadly, both AB1949 and SB976 passed and are now on their way to the governor's desk.

We need him to veto them so they don't become law.

If you haven't heard of the danger these bills pose to the Internet, this post explains it thoroughly:

- Post doing a deep explanation on those bills here

I CANNOT emphasize enough how these would have a global effect on the Internet, given that most websites and apps originate from California and not all of them could afford either to follow those bills or to move states.

Now, as the bills are on their way to the governor, we need Californian citizens to voice their opposition to those bills to Governor Gavin Newsom HERE

(Non California peeps, we are urging you to share this as well!!! )

Please keep in mind that calling by phone is much, much more effective.

You can also send faxes with Faxzero

Here are scripts you can use as arguments: (text/alt version below the read more)

Thank you for reading. Even if you're not from California, please spread the word anyway! Make posts, tweets, etc.

REBLOGS ENCOURAGED

TEXT VERSION :

AB 1949

Hello, my name is (INSERT NAME HERE) and I'm one of the Senator's constituents from (INSERT CITY HERE). I'm calling to urge the Senator to vote NO on AB 1949, the amendment to the California Consumer Privacy Act of 2020. While this bill's intent is to prevent the sharing and sale of the personal information of minors under the age of 18, the method by which it would do so is written far too broadly to be safely and reasonably implemented.

While this bill retains the safer standard of requiring a business to have actual knowledge that a consumer is under the age of 18 before it can be held liable for sharing or selling personal information, its wording is still too broad to rule out online businesses defaulting to age verification, age assurance, or other data collection and analysis to determine the age of users in order to protect themselves from liability. Measures like these have proven vulnerable to data breaches no matter how secure they claim to be, as shown by this year's largest discovered breach, that of AU10TIX, which supplies age verification to companies like TikTok, X, Uber, LinkedIn, PayPal, and many others.

As it stands, this bill is far too broad in its wording and enforcement of its age-specific measures to be considered a safe piece of legislation. Which is why I urge the Senator to vote in opposition to this measure.

Vote NO on AB 1949.

---------------------------

SB 976

Hello, my name is (INSERT NAME HERE) and I'm one of the Assembly member's constituents from (INSERT CITY HERE). I'm calling to urge the Assembly member to vote NO on SB 976, the Protecting Our Kids from Social Media Addiction Act. Although this bill has intent to protect the mental and emotional health of California's youth, the method this bill would intend to use could be counterproductive to that goal, or even endanger them further.

One of this bill's primary measures is requiring verifiable parental consent to allow websites to display “addictive” feeds to minor users. However, the ways to “verify” the identity and age of a responsible parent are often invasive and dangerous, especially since these methods have repeatedly proven vulnerable to data breaches that can leak sensitive information to bad actors, such as this year's largest discovered breach, that of AU10TIX, which supplies age verification to companies like TikTok, X, Uber, LinkedIn, PayPal, and many others. Determining whether this is necessary at all would also require collecting even more data on minors and non-minors alike to determine who would even require these measures. This is especially true when it would control someone's access to a website or application based on the time of day, as this bill would require in order to “reasonably determine” the user is not a minor.

The vagueness of this bill's text is dangerous as well. The broad definition it gives of “addictive internet-based service or application” could cause an unintended censorship effect, where minors and adults alike could be blocked from accessing information purely because some part of a website or application uses a “feed” that could arguably fit the bill's definition of “addictive.”

With all of this in mind, I urge the Assembly member to vote in opposition of this measure to protect the privacy and safety of California's minors and adults alike.

Vote NO on SB 976.

352 notes

Note

What materials is Biohazard made of? I guess not everything resists radiation

Indeed! No material is totally resistant to radiation; it always depends on the amount of radiation and the exposure time.

Let me get a little nerdy

I clarify and repeat: I'm not an expert on the subject. I did research for this AU in general and thus determined the right materials for the construction of Biohazard. I may be wrong. But this is sci-fi, and some things are improbable but intentional, like Biohazard's melting rays!

Endoskeleton and joints: titanium alloys, stainless steel, and aluminum reinforced with carbon fiber.

Internal components:

Microchips and components: specifically designed to withstand high doses of radiation and encased in a dense layer of ceramic material within a tungsten protective box.

Sensors made with materials resistant to radiation and high temperatures. Integrated into the endoskeleton and protected by a dense covering material.

Actuators: electric or hydraulic motors made with corrosion- and wear-resistant materials. Located within the joints and protected by the endoskeleton.

Metallic lithium-ion batteries specially designed to operate in extreme environments, housed in a tungsten protective box, away from sensitive components.

Cooling system: copper tubes and non-flammable, radiation-resistant cooling fluids integrated into the endoskeleton to dissipate heat generated by electronic components and shielding.

Protection systems:

Primary shielding: lead sheets and boron-based composite materials, 1.5 centimeters thick.

Secondary/Exterior shielding: tungsten sheets, 1 cm thick.

Biohazard has numerous limbs and components functioning as redundant systems. In the event of a failure, he can continue operating with backups.

He used to integrate cameras and sensors for remote monitoring and data collection. These are no longer operational.

Being made of very dense materials, he's extremely robust and heavy! You practically couldn't lift one of his arms if he were off!

He was very, very expensive to manufacture as well. The frustration was immense when the project "didn't work".

#long post#Biohazard oc#GC Biohazard#Gamma Code AU#Gamma Code fic#GC concepts#fnaf eclipse#fnaf sun#fnaf moon#sundrop#moondrop#fnaf dca fandom#dca community#fnaf#fnaf security breach#security breach#five nights at freddy's#beloved moot#asks

124 notes

Text

January Check In

Hello, all!

Happy update day, catfolk! We apologize for the late update, but we wanted to share the Beta dates with you in this update. We took extra time to ensure the dates were accurate without risking something not being ready in time or causing burnout for our team. We’ve done quite a bit of internal assessment on the pros and cons of letting ~2,000+ people into the game.

Our most pressing thought was how to reconcile the need to keep working on key features with getting valuable feedback and play data on all that we already have. There was a lot to consider in terms of reputation, timeline, and team morale. We did not want to give the wrong impression that we felt the game was anywhere close to done, but we have a lot of high-fidelity mechanics and playability to share which need high-population testing regardless. We’ve finally settled on rolling our sleeves up and opening to testers. At the start, we’ll provide a comprehensive breakdown of everything we’re planning to add over the months of the test, and continue our monthly check-ins on our progress. We’re ready to grind, and we hope you are too! And in many ways, it will be much nicer to get the comprehensive mass testing whenever we have something new to roll out!

We hope you will all agree that this update was worth the wait, as we have exciting news to share with you today, along with our most exciting news yet! :)

Accessory Progress

We are proud to announce that we have 47 unique accessories, with 564 color variations altogether.

We have just about reached the edge of our odyssey in the first production run of accessories. After 8 months of practice and training for our fledgling team, we’ve improved immensely at our pipeline. What previously took us months now takes a matter of weeks. We have one more backer accessory to develop, and any additions in the near future will be small, simple items in comparison to our full sets, but I’m very proud of the team for how far we’ve come, and the marvel of quality they produce in good time.

This month’s new progress includes:

Frog Friends

Illustration by Remmie, sponsored by Hag

Protogear Recolors

Recolors by Emma

New decor

We’ve also been busy with decors.

Argh, matey!

Macaws by Jersopod, cannon, barrel and newspaper stack by Giulia and Remmie

In addition, style compliant sketches of the original rose decor!

Sketches by Remmie

Archetypes

We’re here to introduce a very exciting mechanic that has been in the works since our initial overhaul. It’s one of my personal favorite concepts, and the primary motivation behind how we’ve structured the cat design system.

Introducing… cat archetypes!

An Archetype is a specific combination of traits which, when fulfilled, marks the cat’s profile with a badge and rewards the user, much like completing an achievement. (If the cat’s traits are changed, the badge will be removed. Cats may fulfill multiple Archetypes simultaneously.)

For example, this badge means the cat fulfills the Ruby archetype, a badge which requires the cat to have the color Ruby in all slots.

Examples of the Ruby archetype include:

To give an idea of the specificity, other Archetypes include “Leopard,” which must have the following to comply:

A Yellow range Leopard overcoat

Light Greyscale or Light Yellow undercoat

Yellow or Greyscale Claws

None for the second accent

Here are some Leopards!

Owning these cats will give the user rewards, sometimes completely custom to the Archetype.
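As a rough illustration of how such a trait check could work (this is not the game's actual code), here is a minimal Python sketch. The slot names (`overcoat_pattern`, `overcoat_color_range`, `undercoat`, `claws`, `second_accent`) and the `Archetype` and `badges_for` helpers are hypothetical, made up for the example; only the Leopard requirements themselves come from the list above.

```python
from dataclasses import dataclass
from typing import Dict, List, Set

# A cat is modelled as a mapping of trait slot -> value.
# Slot names are hypothetical; the real data model is not shown in the update.
Cat = Dict[str, str]


@dataclass
class Archetype:
    name: str
    # Maps a slot name to the set of values that slot is allowed to hold.
    requirements: Dict[str, Set[str]]

    def matches(self, cat: Cat) -> bool:
        # Every required slot must hold one of its allowed values.
        return all(
            cat.get(slot) in allowed
            for slot, allowed in self.requirements.items()
        )


LEOPARD = Archetype(
    name="Leopard",
    requirements={
        "overcoat_pattern": {"Leopard"},
        "overcoat_color_range": {"Yellow"},
        "undercoat": {"Light Greyscale", "Light Yellow"},
        "claws": {"Yellow", "Greyscale"},
        "second_accent": {"None"},
    },
)


def badges_for(cat: Cat, archetypes: List[Archetype]) -> List[str]:
    # Recomputed from the current traits, so a badge disappears
    # as soon as the cat no longer fulfills the Archetype.
    return [a.name for a in archetypes if a.matches(cat)]
```

Because `badges_for` returns a list, one cat can carry several badges at once, and re-running it after a trait change drops any badge the cat no longer qualifies for.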

What we hope to achieve with this system is a greater incentive to think creatively within the restrictions of our cat builder, and to reward our players for intentional play in collecting, breeding, and designing a variety of cats. After all, it is the core of the game!

And with each new addition of colors or patterns, we’ll release an onslaught of new Archetypes! We plan to introduce a healthy amount of them, some easy to get, and some harder depending on genetic obtainability and the obtainment method.

This system is already up and running on our servers, and is in its infancy. We’ll get a lot of data from testing it out!

Originally, this system was on the backburner while we focused on bigger picture mechanics, but we’ve fast tracked it so we can bolster and better encourage casual play while the Guild system is still in its preliminary beta state.

Pelt System

Perhaps the feature we are most excited to see in use and tested is the Pelt System, which we briefly introduced in the 2024 November Check In. There is a frankly insane amount of functionality behind this feature, which includes autonomous user decision and interaction every step of the way.

First, we were able to implement dynamic layering. This means that Unclipped (top layer) pelts can actually sport layers which are placed behind the cat automatically. Valuable uses for this feature include the inside of sleeves, backs of hoods, and other items which you would always want to go behind anything they’re stacked above.
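To picture the layering idea, here is a small Python sketch under stated assumptions. The `PeltLayer` class, its `behind_cat` flag, and `render_order` are all hypothetical names for illustration; the update does not describe how the real renderer is built.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PeltLayer:
    name: str
    # Hypothetical flag: True if this layer should be drawn behind the cat.
    behind_cat: bool


def render_order(cat_name: str, layers: List[PeltLayer]) -> List[str]:
    # Draw order, back to front: behind-cat layers, the cat, then the rest.
    behind = [layer.name for layer in layers if layer.behind_cat]
    front = [layer.name for layer in layers if not layer.behind_cat]
    return behind + [cat_name] + front
```

For a hood, the back of the hood would be flagged `behind_cat=True` and the front left as `False`, so the cat ends up sandwiched between the two.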

Users can view their pelts already submitted, see their submission progress, choose to submit more to the pelt, choose to print their pelt themselves, choose to list pelts for buyers to print on demand, and view their pelts on any cat.

There is also a draft system which allows users to store information they aren’t ready to submit yet.

Users can list pelts as a print-on-demand resource, and are able to control how many copies they will allow to be printed.

All prints will require a tax which will be dependent on the coverage %, calculated based on the number of pixels that cover the canvas. This means that small pelts, like a hat or a monocle, will take only a small tax!
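As a sketch of what a coverage-based tax could look like (the actual rates and formula are not given in this update), the Python below counts non-transparent pixels and scales a tax linearly with coverage. The `max_tax` value, the linear scaling, and the rounding-up rule are assumptions made purely for illustration.

```python
from typing import List, Tuple

# A pelt is modelled as rows of alpha values, where 0 means fully transparent.
AlphaMask = List[List[int]]


def count_pixels(alpha_mask: AlphaMask) -> Tuple[int, int]:
    # Returns (covered pixels, total pixels on the canvas).
    total = sum(len(row) for row in alpha_mask)
    covered = sum(1 for row in alpha_mask for alpha in row if alpha > 0)
    return covered, total


def coverage_percent(alpha_mask: AlphaMask) -> float:
    covered, total = count_pixels(alpha_mask)
    return 100.0 * covered / total if total else 0.0


def print_tax(alpha_mask: AlphaMask, max_tax: int = 100) -> int:
    # Hypothetical rule: the tax scales linearly with coverage and is
    # rounded up, so any non-empty pelt pays at least 1.
    covered, total = count_pixels(alpha_mask)
    if total == 0:
        return 0
    return -(-max_tax * covered // total)  # integer ceiling division
```

Under these assumptions, a monocle covering 1% of the canvas would pay a tax of 1, while a full-body pelt covering the whole canvas would pay the full `max_tax`.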

AND we have a rudimentary tagging system going as we experiment with this feature! Big news! Once we iron out the kinks, we’ll be able to roll out user filtering and tagging of other content, such as cats or forum posts.

And drumroll please…

As teased at the beginning of this update, we are overjoyed to announce the Closed Beta dates! Early access launches on February 3rd, with the regular Closed Beta starting on February 6th!

During the Closed Beta, you’ll have the chance to experience many new and polished gameplay features, exciting customization options, and the now refined economy! We encourage all testers to not only find any potential bugs and UI improvements, but also to provide feedback and suggestions on all of our game features and our economy!

All 253 Early Beta, 1940 Beta and Kickstarter codes have been generated, and we will begin sending them out over the next day.

In the coming week, we’ll put out writings on our expectations early on and the features roadmap that we’re currently staring at. We can’t wait to see you all in Kotemara soon!

To summarize: We shared decors, Protogear recoloring, Frog Friends, pelt system showcase, archetypes and closed Beta dates.

What to expect next month: Further asset and development updates. Check-ins on how the Closed Beta is going.

#paw borough#pet site#virtual pet#indie game#petsite#pet sim#development update#art update#pawborough#kickstarter update#closed beta#beta#beta test#kickstarter rewards

91 notes
