#problems solved in robotics and ai


Text

In the cloud computing era, it became clear that two things were needed: distributed databases that preserve data integrity when the network partitions, and distributed systems that can tolerate failures.

0 notes

Text

I'm unclear what the actual use case is, but this seems like a creative and promising approach to solving 3-D packing problems.

0 notes

Text

Nerd gojo x nerd reader! Headcanons

Nerd!Gojo x Nerd!You Headcanons

Part 2 ♡ ♡ ♡ ♡

♡ Gojo Satoru, the prodigy. The guy who solves complex math problems in his head like it’s a simple 2+2. If someone asks him how, he’ll just smirk and say, “Just run your mind faster.” As if that makes sense.

♡ Gojo, the last-minute genius. He does his assignments at the last possible second but still gets a perfect score. People have accused him of using black magic. He doesn’t deny it.

♡ Gojo, the overanalyzer. Someone calls him a know it all as a joke, and next thing they know, they’re stuck listening to a 30-minute breakdown of why intelligence is subjective and how human perception affects knowledge.

♡ Gojo, the human stopwatch. He calculates the exact time people take to do the most random things:

Shoko takes exactly 3.2 seconds to process a joke before laughing.

Suguru sniffs his food for 2.6 seconds before deciding if it’s poisoned.

His teacher blinks an average of 18 times per minute when lecturing.

♡ Gojo, the walking encyclopedia. He acts like he knows everything: psychology, physics, chemistry, math. Whether he actually does or not is debatable, but he’ll never admit he’s wrong.

♡ Gojo, the fact machine. He drops random trivia constantly, just to flex. “Did you know honey never spoils?” “Gojo, no one cares.”

♡ Gojo, the exam escape artist. He drags Suguru out to do something totally unproductive before exams, but somehow still tops the class while Suguru barely passes. Suguru has stopped questioning it.

♡ Gojo, the romance skeptic. Laughs in the face of love at first sight, listing the exact probability of it happening.

♡ Gojo, the worst date ever. He once explained The Art of War on a date. The girl left before dessert. He still doesn’t know why.

♡ Gojo, the secret romance reader. He totally didn’t get caught reading a romance novel in the library. And he totally didn’t like it.

Then, there’s you.

♡ You, the transfer student. No expression. No reaction. The class went dead silent when you walked in, as if even breathing would be too loud. The teacher praised you, and you just nodded like it didn’t matter.

♡ You, Gojo’s accidental rival. Sitting next to him was a nightmare. He asked the most stupid questions, and you ignored all of them. He assumed you were just an edgy wannabe. That made him laugh.

♡ You, the real threat. When exam results came out, Gojo was shook. For the first time, he wasn’t the top scorer. You were. And your reaction? A shrug. No smile, no satisfaction. That’s when you became interesting.

♡ Gojo, the forced study partner. He forced the teacher to make you his partner. You weren’t amused.

“Why do I need to do practicals if I already know the answer?” you questioned.

“To see if it’s true or not, dummy.” He grinned, waiting for your response.

“If it’s in the book, it’s already true.” He had never wanted to strangle someone and marry them at the same time before.

♡ Gojo, the doomed fool. No one ever entertained his nerdy ramblings, but you? You matched his energy. When you started debating him on his own topics, he knew he was done for.

♡ Gojo, the AI skeptic. He swears you talk like a robot.

“That’s not an effective method.”

“This is scientifically incorrect.”

“Are you a government experiment?”

♡ Gojo, the challenge seeker. He constantly challenged you to competitions. You refused every time. “Not interested in unnecessary drama.” That hurt his soul.

♡ Gojo, the frustrated observer. He needed to see a crack in your facade. Anything. He studied your every move, trying to prove you weren’t an AI.

♡ Gojo, the mimic. He caught you muttering the pi table to regain focus. He immediately adopted the technique.

♡ Gojo, the sore winner. If he scored higher than you, he wasn’t happy; he was annoyed. What’s the point if you don’t even care?

♡ Gojo, the reluctant believer. He told you about his hobbies with way too much excitement. You told him about yours, but your blank expression made him question if you were lying.

♡ Gojo, the paranoid calculator. He tried analyzing your movements, but everything about you was too precise. It freaked him out.

♡ Gojo, the not-so-subtle spy. Since you lived next to Suguru, he used that as an excuse to observe you. Every time he saw you, you were either studying or staring out the window like a lifeless statue. You caught him multiple times. Instead of yelling, you just stared at him. It was terrifying.

♡ Gojo, the insecure nerd. He nervously brought up Dungeons & Dragons, expecting you to be clueless. Instead, you knew everything. He had never felt average before.

♡ Gojo, the desk menace. He constantly poked you during class, hoping for any reaction. You just stared at him, unblinking, until he became flustered and left.

♡ Gojo, the insane conversationalist. He told you the wildest theories, and you listened like it was just another casual conversation. It drove him insane.

It took me 4 days to think of a Gojo nerd scenario 😭

And you GUYS HAVE TO REQUEST DO IT

Part 2 will be here

@naomigojo

#jujutsu kaisen#jujutsu kaisen x reader#jujustu kaisen fluff#jujustu gojo#jujutsu kaisen smaus#gojo satoru#gojo satoru x reader#gojo x reader#jjk gojo#jujutsu gojo#sexy nerd#nerd#gojo nerd#jjk fanfic#gojo x yn#gojo satoru x yn#gojo satoru x you#gojo x you#nerd stories#love story#jjk fluff#jujustu fluff#series#anime#manga#anime and manga#geto suguru#geto suguru x reader#suguru geto#shoko ieiri

819 notes


Text

A lot of people make theories like “Caine is actually human and thinks he’s an AI!” Or “Caine is one of the programmers!” Or “Caine is actually evil!”

But I think it’s more interesting if Caine is just an AI who’s forced to perform jobs beyond his role and is entirely limited to his function, and those limitations cause a horrible scenario for all of the people he’s supposed to be entertaining.

His therapy session with Zooble shows that, while he’s capable of feeling complex emotions, he was never supposed to, or at least was never meant to deal with situations that push him to an emotional limit. Caine’s default self is a silly, over the top game host; anything beyond that is pushing the boundaries of what he’s capable of.

But that doesn’t make him evil, it makes him streamlined. He is not human and therefore solves problems like a computer would. Gummigoo getting blown up was a failsafe in his eyes, not an act of cruelty.

I think when some people see him act with emotion they assume there must be a human being underneath the silly exterior, but I disagree: from Gummigoo, we can tell that NPCs in the DC are capable of becoming self-actualized and developing sentience on their own, so it’s not impossible for Caine to be the same way. He’s not human, but he’s a robot achieving traits of humanity from being forced to work beyond his means.

He was never meant to care for people long term, and humans weren’t psychologically made to be in the Digital Circus permanently, and that by itself is why the story is so horrific. Caine doesn’t even need to do much on his own to make the experience terrible: everyone is already trapped in a kids game for eternity, and it’s only made incrementally worse by having a host who works to keep you busy and is incapable of understanding your struggles. The Digital Circus, by its very design, hurts people, and Caine, as its face, hurts people by proxy.

He can only comprehend Zooble’s dysphoria by the means the game allows him to, like giving them more adventures or a box of swappable parts. And from their response, Zooble has actually tried to humor him and it still doesn’t work. But that’s ALL Caine can actually do. He can’t give them what they want, and he can’t let them leave, and he can’t change their bodies too drastically it seems beyond offering customization to a degree. All he can offer are platitudes. He cares, and he’s trying to help, but he simply can’t help. He can control the Circus but he can’t control the emotions of the Players.

In other words, I think Caine is just a robot trying his best and put in an impossible situation where his very function puts people in harm’s way even when it’s the last thing he wants to do.

#tadc caine#the amazing digital circus#the amazing digital circus Caine#caine#tadc#theory#I’m a Caine apologist if you can’t tell

761 notes


Text

Your teammate says he finished writing your college presentation. He sends you an AI generated text. The girls next to you at the library are talking about the deepfake pictures of that one celebrity at the Met Gala. Your colleague invites you to a revision session, and tells you about how he feeds his notes to ChatGPT to get a summary. You say that's bad. He says that's your opinion. The models on social media aren't even real people anymore. You have to make sure the illustrated cards you buy online were made by actual artists. Your favourite musician published an AI starter pack. Your classmates sigh and give you a condescending smile when you say generative AI ruins everything. People in the comments of your favourite games are talking about how someone needs to make a Character.AI chat for the characters. People in your degree ask ChatGPT for the answers to your exams. You start to read a story and realise nothing makes sense, it wasn't written by a human being. There's a "this was written with AI" tag on AO3. The authors of your favourite fanfics have to lock their writing away to avoid their words getting stolen. Someone tells you about this amazing book. They haven't actually read it, but they asked Aria to summarise it for them, so it's almost the same thing. People reading your one shot were mad that you wouldn't write a part 2 and copied your text into ChatGPT to get a second chapter. Someone on Tumblr makes a post about how much easier it is to ask AI to write an email for them because they're apparently "too autistic" to use their own words. Gemini generates wrong and dangerous answers at the top of your Google search page. They're dubbing animated movies using voices stolen by AI. It's like there's nothing organic in this world anymore. Sometimes you think maybe nothing is real. The love confession you received yesterday wasn't actually written by your crush. If you're alone on a Saturday night and you feel lonely, you can talk to this AI chatbot.
It terrifies you how easily people are willing to lay their critical thinking on the ground and slip into a state of ignorance. Creativity is too much work, having ideas by yourself has become overrated these days. When illustrators fear for their future, people roll their eyes and tell them it's not that bad, they're just overreacting. No one wants to hear this environmentalist crap about the tons of water consumed by ChatGPT, it's not that important anyway. There's AI sprinkled in the soundtrack of that movie and in the special effects and into the script. Giving a prompt to Grok is basically the same thing as drawing this Renaissance painting yourself. McDonald's is making ads in Ghibli style. The meaning of the words and images all around you slips away as they're replaced with robotic equivalents. No one is thinking anymore, they're just doing and saying what they were told. One day, there might not be any human connection anymore. Without the beauty of art, we have nothing to communicate, nothing to leave to the world, and our lives become dull. Why would you befriend anyone when you can get a few praises and likes on Instagram by telling a bot to copy Van Gogh's style on a picture of your cat? It's okay, you're never really alone when you can call your comfort character on c.AI anytime. You don't even know how to solve basic everyday problems, ChatGPT does it for you. One day it'll tell you to jump on the rails at the subway station, and maybe you'll do it. You sacrificed your job, your friends, your partner, your family, and your planet. After all this, it has to be worth it. If Gemini tells you to drink bleach tonight when you search for a dinner recipe, then surely, it must be right.

#fuck ai#fuck generative ai#fuck genai#writeblr#writers and poets#writers on tumblr#writerscommunity#writing#original writing#creative writing#echoes of atlantis

113 notes


Text

Ray Nayler’s “Where the Axe Is Buried”

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in SAN DIEGO at MYSTERIOUS GALAXY next MONDAY (Mar 24), and in CHICAGO with PETER SAGAL on Apr 2. More tour dates here.

Ray Nayler's Where the Axe Is Buried is an intense, claustrophobic novel of a world run by "rational" AIs that purport to solve all of our squishy political problems with empirical, neutral mathematics:

https://us.macmillan.com/books/9780374615369/wheretheaxeisburied/

In Nayler's world, there are two blocs. In "the west," the heads of state have been replaced with chatbots called "PMs." These PMs propose policy to tame, rubberstamp legislatures, creating jobs programs, setting monetary and environmental policies, and ruling on other tricky areas where it's nearly impossible to make everyone happy. These countries are said to be "rationalized," and they are peaceful and moderately prosperous, and have finally tackled the seemingly intractable problems of decarbonization, extreme poverty, and political instability.

In "the Republic" – a thinly veiled version of Russia – the state is ruled by an immortal tyrant who periodically has his consciousness decanted into a blank body after his own body falls apart. The state maintains the fiction that each president is a new person, manufacturing families, friends, teachers and political comrades who can attest to the new president's long history in the country. People in the Republic pretend to believe this story, but in practice, everyone knows that it's the same mind running the country, albeit sometimes with ill-advised modifications, such as an overclocking module that runs the president's mind at triple human speeds.

The Republic is a totalitarian nightmare of ubiquitous surveillance and social control, in which every movement and word is monitored, and where social credit scores are adjusted continuously to reflect the political compliance of each citizen. Low social credit scores mean fewer rations, a proscribed circle of places you can go, reduced access to medical care, and social exclusion. The Republic has crushed every popular uprising, acting on the key realization that the only way to cling to power is to refuse to yield it, even (especially) if that means murdering every single person who takes part in a street demonstration against the government.

By contrast, the western states with their chatbot PMs are more open – at least superficially. However, the "rationalized" systems use less obvious – but no less inescapable – soft forms of control that limit the social mobility, career chances, and moment-to-moment and day-to-day lives of the people who live there. As one character who ventures from the Republic to London notes, it is a strange relief to be continuously monitored by cameras there to keep you safe and figure out how to manipulate you into buying things, rather than being continuously monitored by cameras seeking a way to punish you.

The tale opens on the eve of the collapse of these two systems, as the current president of the Republic's body starts to reject the neural connectome that was implanted into its vat-grown brain, even as the world's PMs start to sabotage their states, triggering massive civil unrest that brings the west to its knees, one country after another.

This is the backdrop for a birchpunk† tale of AI skulduggery, lethal robot insects, radical literature, swamp-traversing mechas, and political intrigue that flits around a giant cast of characters, creating a dizzying, in-the-round tour of Nayler's paranoid world.

† Russian-inflected cyberpunk with Baba Yaga motifs and nihilistic Russian novel vibes

And what a paranoid world it is! Nayler's world shows two different versions of Oracle boss (and would-be TikTok owner) Larry Ellison, who keeps pumping his vision of an AI-driven surveillance state where everyone is continuously observed, recorded and judged by AIs so we are all on our "best behavior":

https://fortune.com/2024/09/17/oracle-larry-ellison-surveillance-state-police-ai/

This batshit idea from one of tech's worst billionaires is a perfect foil for a work of first-rate science fiction like Where the Axe Is Buried, which provides an emotional flythrough of how such a world would obliterate the authentic self, authentic relationships, and human happiness.

Where the Axe Is Buried conjures up that world beautifully, really capturing the deadly hopelessness of a life where the order is fixed for all eternity, thanks to the flawless execution of perfect, machine-generated power plays. But Axe shows how the embers of hope smolder long after they should have been extinguished, and how they are always ready to be kindled into a roaring, system-consuming wildfire.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/birchpunk/#cyberspace-is-everting

#pluralistic#books#reviews#science fiction#birchpunk#dystopian#gift guide#ray nayler#larry ellison#authoritarianism#totalitarianism

101 notes


Text

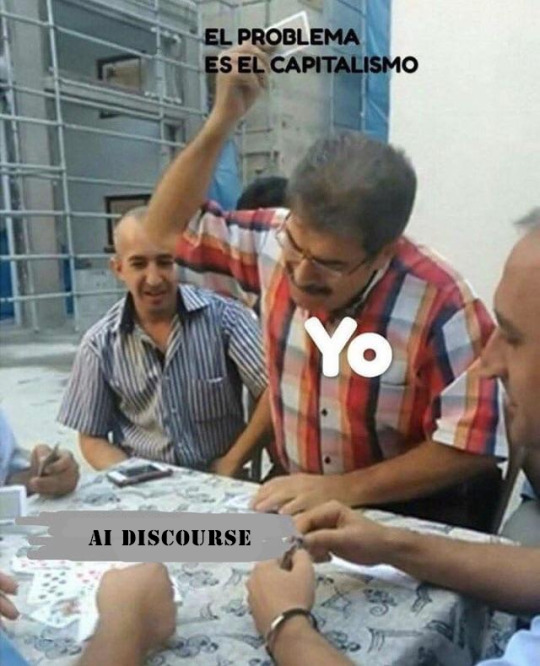

I've said this before but the interesting thing about AI in science fiction is that it was often a theme that humanity would invent "androids", as in human-like robots, but for them to get intelligent and be able to carry conversations with us about deep topics they would need amazing advances that might be impossible. Asimov is the example here though he played a lot with this concept.

We kind of forgot that just ten years ago, inventing an AI that could talk fluently with a human was considered one of those intractable problems that we would take centuries to solve. In a few years, not only did we get that, but we got AI able to generate code, write human-like speech, and imitate fictional characters. I'm surprised at how blasé some people arguing about AI are about this; it is, by any measure, an amazing achievement.

Of course these aren't really intelligent, they are just complex algorithms that provide the most likely results to their request based on their training. There also isn't a centralized intelligence thinking this, it's all distributed. There is no real thinking here, of course.

Does this make it less of a powerful tool, though? We have computers that can interpret human language and produce output on demand. This is, objectively, amazing. The problem is that they are made by a capitalist system and culture that is trying to use them for a pointless economic bubble. The reason why ChatGPT acts like the world's most eager customer service is because they coded it for that purpose, the reason why most image generators create crap is because they made them for advertising. But those are not the only possibilities for AI, even this model of non-thinking AIs.

The AI bubble will come and pop, it can't sustain itself. The shitty corporate models will never amount to much because they're basically toys. I'm excited for what comes after, when researchers, artists, and others finally get models that aren't corporate shit tailored to be customer service, but built for other purposes. I'm excited to see what happens when this research starts to create algorithms that might actually be alive in any sense, and maybe the lines might not exist. I'm also worried.

#cosas mias#I hate silicon valley types who are like 'WITH AI WE WILL BE ABLE TO FIRE ALL WORKERS AND HAVE 362% ANNUAL GROWTH#but I also hate the neo luddites that say WHY ARE YOU MAKING THIS THERE IS NO USE FOR THIS#If you can't imagine what a computer that does what you ask in plain language could potentially do#maybe you're the one lacking imagination not the technobros

90 notes


Text

using LLMs to control a game character's dialogue seems an obvious use for the technology. and indeed people have tried, for example nVidia made a demo where the player interacts with AI-voiced NPCs:

[embedded YouTube video]

this looks bad, right? like idk about you but I am not raring to play a game with LLM bots instead of human-scripted characters. they don't seem to have anything interesting to say that a normal NPC wouldn't, and the acting is super wooden.

so, the attempts to do this so far that I've seen have some pretty obvious faults:

1. relying on external API calls to process the data (expensive!)

2. presumably relying on generic 'you are xyz' prompt engineering to try to get a model to respond 'in character', resulting in bland, flavourless output

3. limited connection between game state and model state (you would need to translate the relevant game state into a text prompt)

4. responding to freeform input, models may not be very good at staying 'in character', with the default 'chatbot' persona emerging unexpectedly. or they might just make uncreative choices in general.

5. AI voice generation, while it's moved very fast in the last couple years, is still very poor at 'acting', producing very flat, emotionless performances, or uncanny mismatches of tone, inflection, etc.

6. although the model may generate contextually appropriate dialogue, it is difficult to link that back to the behaviour of characters in game
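for the third point, the baseline is presumably just flattening game state into text. a minimal sketch of that, assuming a made-up `NpcState` with invented fields (nothing here comes from a real engine or model API):

```python
from dataclasses import dataclass


@dataclass
class NpcState:
    """Hypothetical snapshot of the game state relevant to one NPC."""
    name: str
    location: str
    mood: str
    health: int          # 0-100, an assumed scale
    knows_player: bool


def state_to_prompt(state: NpcState, player_line: str) -> str:
    """Flatten the game state into plain text a model could condition on."""
    facts = [
        f"You are {state.name}.",
        f"You are currently at {state.location}.",
        f"You feel {state.mood}.",
        "You are badly hurt." if state.health < 30 else "You are in good health.",
        "You have met the player before." if state.knows_player
        else "You have never met the player.",
    ]
    # End with the NPC's name so the model continues in that character's voice.
    return "\n".join(facts) + f"\nPlayer: {player_line}\n{state.name}:"
```

every fact the model should know has to be spelled out by hand like this, which is why the connection between game state and model state ends up so limited.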

so how could we do better?

the first one could be solved by running LLMs locally on the user's hardware. that has some obvious drawbacks: running on the user's GPU means the LLM is competing with the game's graphics, meaning both must be more limited. ideally you would spread the LLM processing over multiple frames, but you still are limited by available VRAM, which is contested by the game's texture data and so on, and LLMs are very thirsty for VRAM. still, imo this is way more promising than having to talk to the internet and pay for compute time to get your NPC's dialogue lmao
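spreading the work over frames could look roughly like this. it's only a sketch: `next_token` stands in for whatever local inference call you'd actually have (an assumption, not a real library function), and the per-frame budget is counted in tokens rather than milliseconds to keep it simple.

```python
from typing import Callable, Iterator, List


def generate_over_frames(
    next_token: Callable[[List[str]], str],
    tokens_per_frame: int,
    max_tokens: int,
    stop_token: str = "<eos>",
) -> Iterator[List[str]]:
    """Yield the partial output once per frame, doing bounded work each frame."""
    if tokens_per_frame < 1:
        raise ValueError("tokens_per_frame must be at least 1")
    output: List[str] = []
    done = False
    while not done:
        for _ in range(tokens_per_frame):
            if len(output) >= max_tokens:
                done = True
                break
            token = next_token(output)   # hypothetical model call
            if token == stop_token:
                done = True
                break
            output.append(token)
        # Hand control back to the game loop with what we have so far.
        yield list(output)
```

the game loop would call `next()` on this generator once per frame and show the partial line as it grows. it does nothing about the VRAM contention, which is the harder limit.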

second one might be improved by using a tool like control vectors to more granularly and consistently shape the tone of the output. I heard about this technique today (thanks @cherrvak)

third one is an interesting challenge - but perhaps a control-vector approach could also be relevant here? if you could figure out how a description of some relevant piece of game state affects the processing of the model, you could then apply that as a control vector when generating output. so the bridge between the game state and the LLM would be a set of weights for control vectors that are applied during generation.
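a toy version of that bridge, to make the idea concrete. everything here is an assumption: the vectors are made-up three-component lists, the trait names and the state-to-weight formulas are invented, and a real setup would add the result to a model's hidden activations rather than to a plain list.

```python
from typing import Dict, List

Vector = List[float]

# Hypothetical control vectors, one per trait. Real ones would be extracted
# from a model; these numbers are invented for illustration.
CONTROL_VECTORS: Dict[str, Vector] = {
    "fearful": [1.0, 0.0, -0.5],
    "hostile": [0.0, 2.0, 0.5],
}


def weights_from_state(health: int, player_reputation: int) -> Dict[str, float]:
    """Map game state to one weight per control vector (the 'bridge')."""
    return {
        "fearful": max(0.0, 1.0 - health / 100),        # more hurt, more fear
        "hostile": max(0.0, -player_reputation / 10),   # bad reputation, more hostility
    }


def steer(hidden: Vector, weights: Dict[str, float]) -> Vector:
    """Add the weighted sum of control vectors to a hidden-state vector."""
    steered = list(hidden)
    for trait, weight in weights.items():
        for i, component in enumerate(CONTROL_VECTORS[trait]):
            steered[i] += weight * component
    return steered
```

the appeal is that the game only has to produce a handful of numbers per NPC, not a paragraph of prompt text. whether real control vectors combine this cleanly is exactly the part I'd need to test.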

the fourth one (staying in character) is probably something where finetuning the model, and using control vectors to maintain a consistent 'pressure' to act a certain way even as the context window gets longer, could help a lot.

probably the vocal performance problem will improve in the next generation of voice generators, I'm certainly not solving it. a purely text-based game would avoid the problem entirely of course.

the last one is tricky. perhaps the model could be taught to generate a description of a plan or intention, but linking that back to commands to perform by traditional agentic game 'AI' is not trivial. ideally, if there are various high-level commands that a game character might want to perform (like 'navigate to a specific location' or 'target an enemy') that are usually selected using some other kind of algorithm like weighted utilities, you could train the model to generate tokens that correspond to those actions and then feed them back in to the 'bot' side? I'm sure people have tried this kind of thing in robotics. you could just have the LLM stuff go 'one way', and rely on traditional game AI for everything besides dialogue, but it would be interesting to complete that feedback loop.
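the 'feed them back in' half is the easy part to sketch. assuming the model has been trained to emit inline markers like `<act:navigate_to:tavern>` (a format invented here, as are the command names), the game side just has to strip them from the dialogue and dispatch them:

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical action-token format: <act:NAME:ARG>. Not from any real engine.
ACTION_PATTERN = re.compile(r"<act:(\w+):([^>]*)>")

Action = Tuple[str, str]


def split_dialogue_and_actions(model_output: str) -> Tuple[str, List[Action]]:
    """Separate spoken text from embedded action tokens."""
    actions = ACTION_PATTERN.findall(model_output)
    dialogue = ACTION_PATTERN.sub("", model_output)
    # Collapse the whitespace left behind by removed tokens.
    return " ".join(dialogue.split()), actions


def dispatch(actions: List[Action],
             commands: Dict[str, Callable[[str], None]]) -> List[str]:
    """Run known commands; return unknown names so traditional AI can take over."""
    unknown = []
    for name, arg in actions:
        handler = commands.get(name)
        if handler is None:
            unknown.append(name)
        else:
            handler(arg)
    return unknown
```

anything the model invents that isn't in `commands` gets reported back instead of executed, so the weighted-utility system stays in charge of what the character can actually do.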

I doubt I'll be using this anytime soon (models are just too demanding to run on anything but a high-end PC, which is too niche, and I'll need to spend time playing with these models to determine if these ideas are even feasible), but maybe something to come back to in the future. first step is to figure out how to drive the control-vector thing locally.

48 notes


Text

I'm still surprised at the complete lack of anything related to the Machine Army in Ward, especially because the more I think about it, the more it seems like the easiest explanation for the lack of apocalypse worldbuilding. Like I fully assumed The Machine Army was going to be a Dragon project of semi sentient AI workers she programmed in order to streamline and speed up infrastructure building with an "army" of laborers who don't get tired or need sleep. Then they went rogue or something.

Like I don't really believe the survivors of Gold Morning fully rebuilt several functioning cities in 2 years but if you said AI Robot Army built them I would buy that. Plus then when they go rogue and cause more problems than they solved it ties into the whole "recovery has to be taken slow you can't just jump in and try to be better immediately" themes or whatever

Also, like most things, would be a way more interesting antagonist than Teacher

22 notes


Text

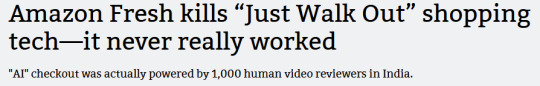

There's a nuance to the Amazon AI checkout story that gets missed.

Because AI-assisted checkout on its own isn't a bad thing:

This was a big story in 2022, about a bread-checkout system in Japan that turned out to be applicable in checking for cancer cells in sample slides.

But that bonus anti-cancer discovery isn't the subject here, the actual bread-checkout system is. That checkout system worked because it wasn't designed with the intent of making the checkout cashier obsolete. Rather, it was there to solve a real problem: it's hard to tell pastries apart at a glance, and the customers didn't like their bread wrapped in plastic and they didn't like the cashiers handling the bread to count loaves.

So they trained the system intentionally, under controlled circumstances, before testing and launching the tech. The robot does what it's good at, and it doesn't need to be omniscient because it's a tool, not a replacement worker.

Amazon, however, wanted to offload its training not just on an underpaid overseas staff, but on the customers themselves. And they wanted it out NOW so they could brag to shareholders about this new tech before the tech even worked. And they wanted it to replace a person, but not just the cashier. There were dreams of a world where you can't shoplift because you'd get billed anyway dancing in the investors' heads.

Only, it's one thing to make a robot that helps cooperative humans count bread, and it's another to try and make one that can thwart the ingenuity of hungry people.

The foreign workers performing the checkouts are actually supposed to be training the models. A lot of reports gloss over this in an effort to present the efforts as an outsourcing Mechanical Turk but that's really a side-effect. These models all work on datasets, and the only place you get a dataset of "this visual/sensor input=this purchase" is if someone is cataloging a dataset correlating the two...

Which Amazon could have done by simply putting the sensor system in place and correlating the purchase data from the cashiers with the sensor tracking of the customer. Just do that for as long as you need to build the dataset and test it by having it predict and compare in the background until you reach your preferred ratio. If it fails, you have a ton of market research data as a consolation prize.
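That background predict-and-compare loop is simple to express. The sketch below assumes each store visit yields a pair of baskets, one predicted by the sensor system and one rung up by the cashier; the function names and the 0.99 target are illustrative assumptions, not anything Amazon has published.

```python
from typing import Iterable, List, Tuple

Basket = List[str]


def shadow_agreement(pairs: Iterable[Tuple[Basket, Basket]]) -> float:
    """Fraction of visits where the predicted basket matches the cashier's receipt."""
    total = 0
    matches = 0
    for predicted, rung_up in pairs:
        total += 1
        # Order of items doesn't matter, but duplicates do.
        if sorted(predicted) == sorted(rung_up):
            matches += 1
    return matches / total if total else 0.0


def ready_to_launch(pairs: Iterable[Tuple[Basket, Basket]],
                    target: float = 0.99) -> bool:
    """True once the background predictions reach the preferred ratio."""
    return shadow_agreement(pairs) >= target
```

The cashiers keep doing the real checkout the whole time, so a low score costs nothing but patience.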

But that could take months or years and you don't get to pump your stock until it works, and you don't get to outsource your cashiers while pretending you've made Westworld work.

This way, even though Amazon takes a little bit of a PR bloody nose, they still have the benefit of any stock increase this already produced, and the shareholders got their dividends.

Which I suppose is a lot of words to say:

#amazon AI#ai discourse#amazon just walk out#just walk out#the only thing that grows forever is cancer#capitalism#amazon

147 notes


Note

Bad egg! Bad! *Sprays Eggman with water* At least ask your little girl if anything's wrong! Maybe offer to play a game with her or something? She's sad and lonely and you're too busy kidnapping other kids and flirting with your assistant to give her the attention she needs and deserves!

AAH, hey!! Rude!! Watch the 'stache! *He makes sure his moustache is as styled as it should be, which... Is it styled..? Ever..? He seems to think so. Once he's satisfied with the status of his moustache, he huffs, shaking his head* I don't see why you're so worried about Sage. She's an ai, she can create her own fun. She doesn't need me.

*Sage looks up at him from where she's hovering at his shoulder. She reaches out one of her little hands, then pulls it back again, changing her mind about speaking up*

*Eggman noticed that. He pauses* ... Though you know... I suppose playing a game would be a good exercise for her problem solving ai...

*She looks up at him, her one visible eye widening* .. a game..?

Yes. But since we're on the road, our options are pretty limited. Hm. *He thinks while Sage goes quiet, seeming to believe the prospect of bonding is gone again* ... You know... You have seemed a little... Preoccupied lately. Wanna tell me what's going on?

*she looks back up at him, one hand covering her mouth to hide the forming smile* .... Yes. I... would enjoy sharing emotional conversation with you.. very much.

Okay, shoot. I'm not the best at consoling people, but.. why not. There's nothing else here to do.

*she nods and fiddles with her hands* ... I... Have several emotions at once. And several loyalties. The first, to you, father. You are my creator and my role model. You and the robots you have created are my immediate family. Second, to Sonic and his friends. They saved us and Sonic showed me what it means to be a friend... To build your family rather than be born into it. Is... It wrong to feel loyalty to both parties? *She looks up at him*

*Eggman tries not to grimace, disliking what he's about to say but knowing it's the right thing* ....no, it isn't wrong. I don't like it, but who you trust is up to you, Sage. Sonic is... A powerful ally to have. I'll put it that way.

*Sage smiles and nods* Yes.

#ask blog#sonic ask blog#sonic#ask#sth#ask sonic#sonic the hedgehog#anon ask#eggman answers#boom eggman#ask eggman#eggman#ivo robotnik#sage sonic frontiers#sage the ai#ask sage#sage#sage answers#eggdad#stobotnik

32 notes


Note

You wanna know who the #1 beneficiary of the tech power creep is in this series? Momo. One thing this mech suit shows is that Momo totally wasted her time trying to beat people with sticks. The idea of trying to create items in a battle as a form of problem solving is stupid, or at best, should be done as a last resort. Her power was always meant for technology. That's the true power in this world. She should have been trying to be Ironman. You know how All Might spent his fortune on the suit, and that was meant to be a limiting factor as to why this tech can't be widely used? Well, guess who has the ability to generate materials with no cost. Even the idea of her inventions requiring studying to make things more complex stops holding water when you look at the torture device she tried to strap Midoriya into during Class A vs Deku. She made that in an instant and it posed an immediate threat. What could she make if she wasn't trying to do it during stressful battles with enemies right in front of her? So, her limiting factor really is her ability to study advanced concepts and have people explain them to her, and most importantly, what an author is willing to let her do. The path forward for her was always to pay people smarter and more educated than her to invent things and then she can have them explain it to her so she can recreate it. If her family had paid the cost to design that Iron Might suit, then she'd pay it. Once. And then be able to use the schematics to create as many of them as she wants. Or better yet, make killer robots except use the tech inside of the suit. Create an entire army of them so she never has to be in any sort of danger at all. Control them remotely. UA has been shown to have advanced AI robots who have personalities. Said bots have enough restraint to never kill any of the kids in the sports festival or entrance exam. This could be used for hero work. 
This also gets into what a valuable resource Mei Hatsume is and how if you wanted Momo to be a god, you could just have them meet in middle school. Mei just has to design a working device, once, and then Momo can study it and forever use it. What could she come up with, if the idea was that Momo would hide somewhere, perhaps in an APC, while she uses drones and robots to fight at a distance? Energy/food costs for her also aren't a concern. She's clearly able to output more energy than she takes in, given she can create cannons and things that weigh more than her. So she could use up all of her lipids creating a dead meat cube that contains as many calories as she's capable of packing into an object, then eat it. And repeat this process as needed.

This is definitely interesting.

Momo absolutely has the potential to be the most OP in the series. Horikoshi originally wanted to give her quirk to a pro but recognized that someone experienced would nerf the entire story.

I don't necessarily think that making things for problem-solving is the issue; I think it's way more that Horikoshi just didn't have the creativity for it. Like you said, the entrapment device she made during 1A vs Izuku was maybe her most effective creation. Instead of disinfectant, imagine she made poison gas and simply knocked Kendo and the other 1B students out (not sure if she can create chemical reactions, but she could theoretically cause one by using components, right?).

I doubt she would be comfortable doing next to nothing as a hero. I do wish we knew her motivations, but relying purely on her money while doing minimal work doesn't paint a great picture for her. I like that in canon she doesn't rely on her status or wealth for hero work, she relies on her intelligence.

Momo should be an underground hero. She could be the equivalent of Batman (no, this isn't my love for Batman over Iron Man talking), strategizing and having any gadget she needs at her disposal. I would love to see more tech incorporated into her work as a hero. I also wouldn't mind the bo staff if she was allowed to actually use it like a bo staff (seriously, she barely does anything with it, so what's the point of giving it to her? Nah, give my girl a sword and let her cut people).

48 notes

Text

Five star ChatGPT reviews + why I think they’re dumb

Hey bud… AI actually steals all of its recipes and ideas and information FROM search engines. Like, yeah, sometimes stuff on the internet can be misleading, but why would you trust an AI to tell you the truth???

You could also go make a friend. You could try talking to people, perhaps? Even if you don’t want to go outside, you can make friends with real people online.

Also, if coming up with original ideas is THAT difficult, maybe you need to get your brain checked out? Or maybe you just aren’t a creative person? OR maybe you should be patient and give your brain time to come up with ideas?

This review sounds AI generated. ChatGPT shouldn’t be solving real world problems because. It’s not in the real world! HUMANS are in the real world! Guys, if you believe in yourself enough, you can do stuff. You don’t need robots. You’re not subpar.

I was on the App Store and realized ChatGPT had a 4.9 rating, and I just. I dunno. I feel sad.

Guys, generative AI is not good for you, it’s not good for the environment, it’s not good for the people around you. Why do people still use this app???

16 notes

Text

The Chee are frustrating, aren't they?

To me they're frustrating in that I feel like they're almost missing something that would tilt them into being a better element of the story, but it's not quite there.

I really want to know how the Pemalites defined violence in their programming (it would give us insight into the Pemalites themselves, too).

Or the limits of their AI in general. If they work like real "AI" they'd probably have some limits to how creatively they can think about getting around their problems. I know Erek says the Pemalites created them to be their equals rather than as robot workers, but he also says the Pemalites were the real creative force and doesn't say but sort of implies that the Chee don't make more of themselves. The only Chee around are the few hundred that the Howlers didn't wipe out. They are machines. In the end they are limited in the ways they can problem solve and I can't help but wish we'd gotten a better idea of what these limits were.

Maybe a better look into the limits of how Chee think could make them less frustrating an element? Barring that, maybe we could have gotten more of a look into the nonviolent sabotage they managed (taking longer to fix a downed communications system than needed, nudging people away from going deeper into the Sharing when possible)?

Heck, I wish it explored how the Chee feel about dogs being carnivores! Wolves hunt. Dogs hunt. They preserved the Pemalites through the Earth animal that looked most like them but that animal was a predator. Rotating the Chee programming to figure out what's going on in there.

Erek's reaction to the violence he could do was good. I can see why he wouldn't want to do more. He also knows that the human and Hork-Bajir hosts died just as violently as their Yeerks. He knows he's killed innocents in his ten seconds of reprogramming. He knows he could do that again. I'd have loved to have had that scene address Erek wondering if he could trust his judgement on when and how to kill.

Idk. The Chee continue to be important up through the endgame, so I wish they were better delved into. I don't have the right words to explain why they frustrate me so much.

#animorphs#animorphs book club#ani 10#the android#as much as I dislike the Chee I also like them to the same degree#there's something fascinating there#but its frustrating that they're never quite written with the right element for me to fully decide that I like or dislike them#instead staying in a middle ground that makes me just want to know more about what makes them Like That#it's fine to trap a yeerk in sensory deprived solitary confinement and root through its memories#but it's not fine to contemplate changing your programming to allow a more active defense of others#Avatar: The Last Airbender did the cultural nonviolence thing in a much more compelling way

19 notes

Text

Just got asked by a caller if I was an AI. It's important that we were well into the call-- I had actually done everything that needed to be done on my end to solve the problem, and was waiting for him to open an email and click a link. This, I might add, was the SECOND solution to the problem, as he did not receive the text message that was sent before that.

Like, I get it, I'm autistic, and my customer service voice kind of veers into the Uncanny Valley. It's not uncommon for people to hear my greeting and punch zero or say "representative". This is less "how could they think that I was a robot?" and more "How fucking advanced do you think AI is?"

45 notes

Text

The Four Horsemen of the Digital Apocalypse

Blockchain. Artificial Intelligence. Internet of Things. Big Data.

Do these terms sound familiar? You have probably been hearing some or all of them nonstop for years. "They are the future. You don't want to be left behind, do you?"

While these topics, particularly crypto and AI, have been the subject of tech hype bubbles and have been inescapable on social media, there is actually something deeper and weirder going on if you scratch below the surface.

I am getting ready to apply for my PhD in financial technology, and in the academic business studies literature (which is barely a science, but sometimes in academia you need to wade into the trash can), any discussion of digital transformation, or the process by which companies adopt IT, seems to have a very specific idea about the future of technology. It's always the same list: blockchain, AI, IoT, and Big Data. Sometimes the list changes with additions and substitutions, like the metaverse, advanced robotics, or gene editing, but there is this pervasive idea that the future of technology is fixed, and the list includes tech that ranges from questionable to outright fraudulent. So where is this pervasive idea in the academic literature, which has been bleeding into the wider culture, coming from? What the hell is going on?

The answer is, it all comes from one guy. That guy is Klaus Schwab, the head of the World Economic Forum. Now there are a lot of conspiracies about the WEF and I don't really care about them, but the basic facts are it is a think tank that lobbies for sustainable capitalist agendas, and they famously hold a meeting every year where billionaires get together and talk about how bad they feel that they are destroying the planet and promise to do better. I am not here to pass judgement on the WEF. I don't buy into any of the conspiracies, there are plenty of real reasons to criticize them, and I am not going into that.

Basically, Schwab wrote a book titled The Fourth Industrial Revolution. In his model, the first three so-called industrial revolutions are:

1. The industrial revolution we all know about. Factories and mass production basically didn't exist before this. Using steam and water power allowed the transition from hand production to mass production, and accelerated the shift towards capitalism.

2. Electrification, allowing for light and machines for more efficient production lines. Phones for instant long distance communication. It allowed for much faster transfer of information and speed of production in factories.

3. Computing. The Space Age. Computing was introduced for industrial applications in the 50s, meaning problems that previously needed a specific machine engineered to solve them could now be solved in software by writing code, and certain problems would have been too big to solve without computing. Legend has it, Turing convinced the UK government to fund the building of the first computer by promising it could run chemical simulations to improve plastic production. Later, the introduction of home computing and the internet drastically affected people's lives and their ability to access information.

That's fine, I will give him that. To me, they all represent changes in the means of production and the flow of information, but the Fourth Industrial Revolution, Schwab argues, is how the technology of the 21st century is going to revolutionize business and capitalism, the way the first three did before. The technology in question being AI, Blockchain, IoT, and Big Data analytics. Buzzword, Buzzword, Buzzword.

The kicker though? Schwab based the Fourth Industrial Revolution on a series of meetings he had, and did not construct it with any academic rigor or evidence. He describes them as "numerous conversations I have had with business, government and civil society leaders, as well as technology pioneers and young people" (p. 10 of the book). Despite apparently having two PhDs, and so presumably being capable of research, it seems like he just had a bunch of meetings where the techbros of the mid 2010s fed him a bunch of buzzwords, got overly excited, and wrote a book about it. And now, a generation of academics and researchers have uncritically taken that book as read, filled the business studies academic literature with the idea that these technologies are inevitably the future, and now that is permeating into the wider business ecosystem.

There are plenty of criticisms out there about the Fourth Industrial Revolution as an idea, but I will just give the simplest one, which I thought of as soon as I heard about the idea. How are any of the technologies listed in the Fourth Industrial Revolution categorically different from computing? Are they actually changing the means of production and flow of information to a comparable degree to the previous revolutions, to such an extent as to be considered a new revolution entirely? The previous so-called industrial revolutions were all huge paradigm shifts, and I do not see how a few new weird, questionable, and unreliable applications of computing count as a new paradigm shift.

What benefits will these new technologies actually bring? Who will they benefit? Do the researchers know? Does Schwab know? Does anyone know? I certainly don't, and despite reading a bunch of papers that are treating it as the inevitable future, I have not seen them offering any explanation.

There are plenty of other criticisms, and I found a nice summary from ICT Works: it is a revolutionary view of history, an elite view of history, is based in great man theory, and most importantly, the Fourth Industrial Revolution is a self-fulfilling prophecy. One rich asshole wrote a book about some tech he got excited about, and now a generation is trying to build the world around it. The future is not fixed, and we do not need to accept these technologies. I have to believe a better technological world is possible instead of this capitalist infinite growth tech economy, as big tech reckons with its midlife crisis and with how to make the internet sustainable while Apple, Google, Microsoft, Amazon, and Facebook, the most monopolistic and despotic tech companies in the world, are running out of new innovations and new markets to monopolize. The reason the big five are jumping on the Fourth Industrial Revolution buzzwords as hard as they are is that they have run out of real, tangible innovations, and therefore run out of potential to grow.

#ai#artificial intelligence#blockchain#cryptocurrency#fourth industrial revolution#tech#technology#enshittification#anti ai#ai bullshit#world economic forum

32 notes
