#python input and output


Text

Man this day sucked balls

#i had to get up at 5:45am#that was the first worst sign#it was well until i went home for my zoom lesson#since i was like the main coordinator for one big event which had multiple small events#my boss called me and was like hey where is the portable ultrasound for the event#and she found it but the charger was missing#so i asked people responsible for the smaller events who used that ultrasound if they know anything and they were like nope#and one even managed to throw shade on me bc it has been like 2 weeks since the event#after my zoom lesson i cried abt that stupid charger#but i was like hold up i have 20 minutes only to cry bc i have my next lesson in person and i have to go#and then i went and i managed to forget abt that stupid lost charger#and i was like yay i will learn python#and then i did learn the basics and then it started to get complicated and i was lost and then our task was like#hell#and then i tried to make something at least of my task. to like define functions and stuff#and it wasnt possible#and then our teacher kind of wrote the script for the 1st part of the assignment#and i was like okay#and i tried it and the int thing didnt work it was like no you cant put it there where your teacher put it#and i was like fuck then#i just learned how to write a if else and now i have to make two different triangle area scripts baded on input and so that it would work#for non existing triangles#and like what does it mean a triangle with 4 3 and 9 as edge lengths#what do you want from me? an error output? triangle does not exist? what?#either way im fucked#i have to wake up just as early tomorrow#and i have to do a lecture for schoolkids on saturday and my ppt is not finished#and its not like ill have time tomorrow bc i work from 7am to 9pm bc im maybe a masochist#which means even less sleep#i think i have so much going on i want to just. scream.
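On the "triangle with 4 3 and 9 as edge lengths" question: three lengths only form a triangle when the longest side is shorter than the other two added together, and 9 is longer than 4 + 3, so that triangle does not exist. What the assignment wants printed in that case is not stated here, so the error messages below are an assumption, as are the function names `triangle_area` and `describe`. A minimal sketch using Heron's formula:

```python
import math


def triangle_area(a: float, b: float, c: float) -> float:
    """Area of a triangle with sides a, b, c (Heron's formula).

    Raises ValueError when the sides cannot form a triangle.
    """
    if min(a, b, c) <= 0:
        raise ValueError("side lengths must be positive")
    # Triangle inequality: the longest side must be shorter than the other two combined.
    longest = max(a, b, c)
    if longest >= (a + b + c) - longest:
        raise ValueError(f"no triangle has sides {a}, {b}, {c}")
    s = (a + b + c) / 2  # semi-perimeter
    return math.sqrt(s * (s - a) * (s - b) * (s - c))


def describe(text: str) -> str:
    """Turn a line such as '3 4 5' into a message for the user."""
    try:
        a, b, c = (float(part) for part in text.split())
        return f"area = {triangle_area(a, b, c):.2f}"
    except ValueError as err:
        # Covers non-numeric input, a wrong number of values, and impossible triangles.
        return f"error: {err}"


print(describe("3 4 5"))  # area = 6.00
print(describe("4 3 9"))  # error: no triangle has sides 4.0, 3.0, 9.0
```

In a real script the text passed to `describe` would come from `input()`; it is a plain argument here so the example runs without waiting for the keyboard.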

2 notes


Note

would you mind talking about how you (currently) back up fanfics to read when AO3 is down? I've been thinking about trying it myself (now that I have a hard disk with enough space) but I have no idea how.

Le current system (all programs are free):

Get yourself Calibre (available for every OS)

Install the FanFicFare plugin, which allows you to go to any page on AO3 (and dozens of other sites) and download every fic there in the background while you do other things with your life

Profit. AKA: Archive every fic you even vaguely like because fics are text-based and take up barely any space, and calibre allows searching by tags similarly to AO3. I personally just bookmark everything I read and liked, and then periodically run FanFicFare on my newest bookmark pages. There is really no need to be discriminating here; I have never regretted having Moar Fics, and calibre makes things exceptionally searchable (and you can customize the tags once you've downloaded, for further fic findability)

When I was on Windows I preferred AO3downloader, which runs on Python and can go through all your bookmarks without input from you (FanFicFare needs to be told what the next page is; AO3 Downloader just starts at page 1 and goes until the page you tell it to stop on); AO3 Downloader also uses AO3's original download format (it literally just automates hitting the site's download button), whereas FanFicFare does a custom output (which you can tweak if desired, but it will never be exactly the same. Note that it works totally fine out of the box I'm just grumpy it's not Exactly The Same.) I couldn't get AO3 Downloader working on Linux, alas.

(If anyone knows how to get AO3 Downloader working on Linux Mint, or knows a calibre plugin that just automates clicking the AO3 download button, do please let me know.)

#Fanfiction#Fanfic#ao3#Back up them fics kids you won't regret it#I've been doing this less than a year and already some of the works I've backed up have been deleted or orphaned#But they live forever on my computer and my back up USBs

1K notes


Note

##filename: askingyelkowstuffsiguess.py

##inputs: answer from index func.

##outputs: buncha printing. simple code is simple

print("hey yellow! I have an idea for how to get you items (if and when you need them)(assuming you can still interact with drawn or text format objects like on the desktop).")

#wair.

#..can yellow see the annotations..

#ahit do I need to.c Hange th filename

print("also if you can see the annotations in green let me know. I don't have to use print functions n stuff for those, but it's up to your preference.")

def printingg(answer):
    if answer==("yes"):
        print("I won't let you down o7 also take care of yourself ^-^")
    elif answer==("no"):
        print("ah, fair. I won't fill your inbox up then lol. My offer stands nonetheless, as well as the one from before.")
    elif answer ==("What?"):
        print("I wanna see if I can print you a sword! Or anything else, really, a sword just comes to mind since it'd be easiest. If you've ever heard of ASCII then you probably know what I'm getting at. I wanna try to use the print or even the graph function to draw you stuff like weapons or items, stuff like that. Maybe I could make a first aid kit!")
    else:
        return None

ask=index("Mind if I experiment a little? Shouldn't be //too// interrupive, but there may be 1 or 2 pop-ups occasionally. [yes] [no] [what?]: ")

printingg(ask)

#is it weird that this is helping me better grasp python from my classes..

#oh wait I can annotate now, I can signature-

##-blackbird anon

Print("yes") Print("Nice annotations. Simple code <i>is</i> simple. It's fun to see your code! I can only really print back because I'm the receiver of this but it's still fun!") Print("I'm interested to see your experiment!") Print("Oh yeah! And nice to meet you, Blackbird Anon.")

#out of character tags#blackbird anon#ava yellow#yellow rp blog#animator vs animation#animation vs animator#ava#yellow rp#ask yellow#yellow blog#animation vs tumblr

15 notes


Text

28/3/25 AI Development

So i made a GAN image generation ai, a really simple one, but it did take me a lot of hours. I used this library for python (a programming language) called tensorflow, which is meant for building and training machine learning models (neural networks in general, not just language models). The dataset I used is made up of 12 composite photos I made in 2023. I put my focus for this week into making sure the AI works, so I know my idea is viable, if it didnt work i would have to pivot to another idea, but its looking like it does thank god.

A GAN pretty much creates images similar to the training data, which works well with my concept because it ties into how AI tries to replicate art and culture. I called it Johnny2000. It doesnt actually matter how effective johnny is at creating realistic output, the message still works, the only thing i dont want is the output to be insanely realistic, which it shouldnt be, because i purposefully havent trained johnny to recognise and categorise things, i want him to try make something similar to the stuff i showed him and see what happens when he doesnt understand the 'rules' of the human world, so he outputs what a world based on a program would look like, that kind of thing.

I ran into heaps of errors, like everyone does with a coding project, and downloading tensorflow itself literally took me around 4 hours from how convoluted it was.

As of writing this paragraph, johnny is training in the background. I have two levels of output, one (the gray box) is what johnny gives me when i show him the dataset and tell him to create an image right away with no training, therefore he has no idea what to do and gives me a grey box with slight variations in colour. The second one (colourful) is after 10 rounds of training (called epochs), so pockets of colour are appearing, but still in a random noise like way. I'll make a short amendment to this post with the third image he's generating, which will be after 100 more rounds. Hopefully some sort of structure will form. I'm not sure how many epochs ill need to get the output i want, so while i continue the actual proposal i can have johnny working away in the background until i find a good level of training.

Edit, same day: johnny finished the 100 epoch version, its still very noisy as you can see, but the colours are starting to show, and the forms are very slowly coming through. looking at these 3 versions, im not expecting any decent output until 10000+ epochs. considering this 3rd version took over an hour to render, im gonna need to let it work overnight, ive been getting errors that the gpu isnt being used so i could try look at that, i think its because my version of tensorflow is too low. (newer ones arent supported on native windows, id need to use linux, which is possible on my computer but ive done all this work to get it to work here... so....)

how tf do i make it display smaller...

anyways, heres a peek at my dataset, so you can see that the colours are now being used (b/w + red and turquoise).

11 notes


Text

These are 50 triangles "learning" to mimic this image of a hot dog.

If you clicked "Read more", then I assume you'd be interested to hear more about this. I'll try my best, sorry if it ends up a bit rambly. Here is how I did that.

Points in multiple dimensions and function optimization

This section roughly describes some stuff you need to know before all the other stuff.

Multiple dimensions - Wikipedia roughly defines dimensionality as "The minimum number of coordinates needed to specify any point within it", meaning that for a 2-dimensional space, you need 2 numbers to specify the coordinates (x and y), but in a 3-dimensional space you need 3 numbers (x, y, and z). There are an infinite amount of dimensions (yes, even one million dimensional space exists)

Function optimization - Optimization functions try and optimize the inputs of a function to get a given output (usually the minimum, maximum, or some specific value).

How to train your triangles

Representing triangles as points - First, we need to convert our triangles to points. Here are the values that I use (every value is normalized between 0 and 1):

• 4 values for color (r, g, b, a)

• 6 values for the position of each point on the triangle (x, y pair multiplied by 3 vertices)

Each triangle needs 10 values, so for 10 triangles we'd need 100 values, so any image containing 10 triangles can be represented as a point in 100-dimensional space.
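To make the "image as a point" idea concrete, here is a small sketch that slices a flat list of numbers back into triangles. The ordering inside each group of 10 (colour first, then the three vertices) and the names `Triangle` and `decode` are assumptions made for illustration, not necessarily what the linked code does.

```python
from typing import List, NamedTuple, Tuple

VALUES_PER_TRIANGLE = 10  # 4 colour values + 3 vertices * 2 coordinates


class Triangle(NamedTuple):
    color: Tuple[float, float, float, float]   # r, g, b, a, each in [0, 1]
    vertices: Tuple[Tuple[float, float], ...]  # three (x, y) pairs, each in [0, 1]


def decode(point: List[float]) -> List[Triangle]:
    """Split a flat point into triangles, 10 values at a time."""
    if len(point) % VALUES_PER_TRIANGLE != 0:
        raise ValueError("point length must be a multiple of 10")
    triangles = []
    for start in range(0, len(point), VALUES_PER_TRIANGLE):
        r, g, b, a, x1, y1, x2, y2, x3, y3 = point[start:start + VALUES_PER_TRIANGLE]
        triangles.append(Triangle((r, g, b, a), ((x1, y1), (x2, y2), (x3, y3))))
    return triangles


# A point in 100-dimensional space describes 10 triangles.
point = [i / 100 for i in range(100)]
print(len(decode(point)))  # 10
```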

Preparing for the optimization function - Now that we can create images using points in space, we need to tell the optimization function what to optimize. In this case - minimize the difference between 2 images (the source and the triangles). I'll be using RMSE
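RMSE (root mean squared error) is the square root of the average squared difference between matching values. A minimal sketch, assuming both images have already been flattened into equal-length lists of pixel values between 0 and 1 (the flattening and the function name `rmse` are assumptions for illustration):

```python
import math
from typing import Sequence


def rmse(source: Sequence[float], candidate: Sequence[float]) -> float:
    """Root mean squared error between two equal-length pixel sequences."""
    if len(source) != len(candidate):
        raise ValueError("images must have the same number of values")
    if not source:
        raise ValueError("images must not be empty")
    squared_error = sum((s - c) ** 2 for s, c in zip(source, candidate))
    return math.sqrt(squared_error / len(source))


print(rmse([0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]))  # 1.0 (as different as possible)
print(rmse([0.2, 0.4], [0.2, 0.4]))                      # 0.0 (identical)
```

A lower value means the triangle image is closer to the source, which is why the optimizer is asked to minimize it.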

Training - We finally have all the things to start training. Optimization functions are a very interesting and hard field of CS (its most prominent use is in neural networks), so instead of writing my own, I'll use something from people who actually know what they're doing. I'm writing all of this code in Python, using ZOOpt. The function that ZOOpt is trying to optimize goes like so:

• Generate an image from triangles using the input

• Compare that image to the image we're trying to get

• Return the difference

That's it! We restrict how long it takes by setting a limit on how many times the optimizer can call the function.
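The call limit is easiest to see with a toy optimizer. The sketch below is not ZOOpt and not the linked code: it is a plain random hill climber standing in for the real optimizer, and the objective compares against a hard-coded target point instead of rendering an image. The names `minimize`, `difference`, and `target` are made up for illustration.

```python
import random
from typing import Callable, List, Tuple


def minimize(objective: Callable[[List[float]], float],
             dimensions: int, budget: int, seed: int = 0) -> Tuple[List[float], float]:
    """Random hill climbing that calls `objective` exactly `budget` times."""
    rng = random.Random(seed)
    best = [rng.random() for _ in range(dimensions)]
    best_score = objective(best)
    calls = 1
    while calls < budget:
        # Nudge every coordinate a little and clamp it back into [0, 1].
        candidate = [min(1.0, max(0.0, v + rng.gauss(0.0, 0.05))) for v in best]
        score = objective(candidate)
        calls += 1
        if score < best_score:  # keep the candidate only if it is closer
            best, best_score = candidate, score
    return best, best_score


target = [0.25, 0.5, 0.75]  # stand-in for "the image we're trying to get"


def difference(point: List[float]) -> float:
    """Stand-in for: draw the triangles, compare to the source, return the difference."""
    return sum((p - t) ** 2 for p, t in zip(point, target)) ** 0.5


best, score = minimize(difference, dimensions=3, budget=2000)
print([round(v, 2) for v in best], round(score, 3))
```

A larger budget gives the optimizer more attempts and a longer run; with the real setup each call also includes drawing all the triangles, so the budget is the main knob for how long training takes.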

Thanks for reading. Sorry if it's a bit bad, writing isn't my forte. This was inspired by this.

You can find my (bad) code here:

https://gist.github.com/NikiTricky2/6f6e8c7c28bd5393c1c605879e2de5ff

Here is one more image for getting this far

123 notes


Text

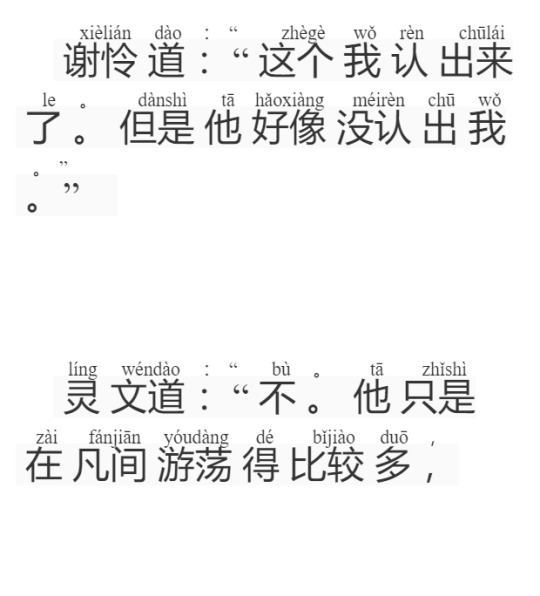

Took me four hours but I was able to convert and format a Mandarin epub to include pinyin notation above the text:

Technical details below for anyone interested

I was trying to do this on my personal laptop, which is, unfortunately, Windows. I found two GitHub projects that looked promising: pinyin2epub and epub-with-pinyin and spent most of my time trying to get python to work. I wasn't able to get the second project to work, but I was eventually able to get some output with the pinyin2epub project.

The output was super messy though, with each word appearing on a different line. The script output the new ePub where all the tags that encapsulated every word and pinyin were on a new line, as well as having a ton of extra spacing.

I downloaded Calibre and edited the epub. With the help of regex search and replace I was able to adjust the formatting to what is shown in the picture above.

All in all, I'm fairly happy with it, although it does fail to load correctly in any mobile ePub reader I've tried so far (I have an Android). I think the <ruby> tags are either unsupported or cause a processing error, depending on the app.

Once I have motivation again I'd love to try to combine the original text epub with a translated epub. My idea here is that there would be a line of the original text followed by a line of the translated text, and so on. I'd probably need to script something for this; maybe it could look for paragraph tags and alternate between two input files. I'd have to think about it a bit more though.
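A rough sketch of that alternating idea, assuming each chapter is available as an XHTML string and that the two versions have their paragraphs in the same order (translated novels often merge or split paragraphs, so real files would likely need manual alignment). The function name `interleave_paragraphs` and the regex approach are assumptions for illustration; unpacking and repacking the epub itself is left out.

```python
import re
from itertools import zip_longest

# Matches <p>...</p> including attributes; \b keeps it from matching <pre>.
PARAGRAPH = re.compile(r"<p\b[^>]*>.*?</p>", re.DOTALL)


def interleave_paragraphs(original_html: str, translated_html: str) -> str:
    """Alternate paragraphs: original, translation, original, translation, ..."""
    originals = PARAGRAPH.findall(original_html)
    translations = PARAGRAPH.findall(translated_html)
    merged = []
    for original, translation in zip_longest(originals, translations, fillvalue=""):
        if original:
            merged.append(original)
        if translation:
            merged.append(translation)
    return "\n".join(merged)


original = "<p>你好。</p><p>谢谢。</p>"
translated = "<p>Hello.</p><p>Thank you.</p>"
print(interleave_paragraphs(original, translated))
```

If one file has more paragraphs than the other, the leftovers are simply appended at the end rather than dropped.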

Unfortunately my Mandarin isn't yet strong enough to read the novels I'm interested in entirely in the original language, but I'd love to be able to quickly reference the original text to see what word or character they used, or how a phrase is composed

Feel free to ask if you want to try to do this and need any clarification. The crappy screenshot and lack of links are because I'm on my phone and lazy.

114 notes


Text

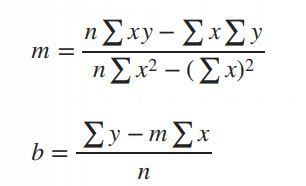

Simple Linear Regression in Data Science and machine learning

Simple linear regression is one of the most important techniques in data science and machine learning. It is the foundation of many statistical and machine learning models. Even though it is simple, its concepts are widely applicable in predicting outcomes and understanding relationships between variables.

This article will help you learn about:

1. What is simple linear regression and why it matters.

2. The step-by-step intuition behind it.

3. The math of finding the slope (m) and intercept (b).

4. Simple linear regression coding using Python.

5. A practical real-world implementation.

If you are new to data science or machine learning, don’t worry! We will keep things simple so that you can follow along without any problems.

What is simple linear regression?

Simple linear regression is a method to model the relationship between two variables:

1. Independent variable (X): The input, also called the predictor or feature.

2. Dependent Variable (Y): The output or target value we want to predict.

The main purpose of simple linear regression is to find a straight line (called the regression line) that best fits the data. This line minimizes the error between the actual and predicted values.

The mathematical equation for the line is:

Y = mX + b

Y: The predicted values.

m: The slope of the line (how steep it is).

b: The intercept (the value of Y when X = 0).
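For readers who want to see the line being fitted, here is a minimal sketch using the standard least-squares formulas: m is the sum of (x - mean_x)(y - mean_y) divided by the sum of (x - mean_x)², and b is mean_y - m * mean_x. The function name `fit_line` and the sample data are made up for illustration.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Return (m, b) for the least-squares line Y = mX + b."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical, so the slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    m = sxy / sxx            # slope
    b = mean_y - m * mean_x  # intercept
    return m, b


xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]  # generated from Y = 2X + 1
m, b = fit_line(xs, ys)
print(m, b)        # 2.0 1.0
print(m * 5 + b)   # prediction for X = 5: 11.0
```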

Why use simple linear regression?

click here to read more https://datacienceatoz.blogspot.com/2025/01/simple-linear-regression-in-data.html

#artificial intelligence#bigdata#books#machine learning#machinelearning#programming#python#science#skills#big data#linear algebra#linear b#slope#interception

6 notes


Text

The split() function in python

The split() method in Python is used to split a string into a list of strings. It takes an optional separator argument, which is the delimiter that is used to split the string. If the separator is not specified, the string is split at any whitespace characters.

The syntax of the split() method is as follows:

Python

string_name.split(separator)

where:

string_name is the string object that is calling the split() method.

separator is the string that is being used as the separator.

For example, the following code splits the string my_string at every occurrence of the " character:

Python

my_string = "This is a string with a \"quote\" in it."
parts = my_string.split("\"")
print(parts)

This code will print the following output:

['This is a string with a ', 'quote', ' in it.']

Here are some additional examples of how to use the split() method:

Python

my_string = "This is a string with multiple separators: -_--_-"
parts = my_string.split("--")
print(parts)

This code will print the following output:

['This is a string with multiple separators: -_', '_-']

Python

my_string = "This is a string without a separator."
parts = my_string.split("@")
print(parts)

This code will print the following output:

['This is a string without a separator.']
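Two more behaviours are worth a quick sketch: calling split() with no separator, and the optional second argument maxsplit, which caps the number of splits. The sample strings are made up for illustration.

```python
line = "  alpha   beta\tgamma\n"

# No separator: split on runs of any whitespace and drop empty strings.
print(line.split())           # ['alpha', 'beta', 'gamma']

# Explicit single space: every space counts, so empty strings are kept.
print(line.split(" "))

# maxsplit=1: split only at the first "=" and leave the rest intact.
record = "name=Ada=Lovelace"
print(record.split("=", 1))   # ['name', 'Ada=Lovelace']
```

The difference between the first two calls is a common source of surprise when parsing user input, so the no-argument form is usually the safer default for whitespace-separated text.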

The split() method is a very versatile tool that can be used to split strings into lists of strings in a variety of ways. It is commonly used for tasks such as parsing text files, extracting data from strings, and validating user input.

#programmer#studyblr#learning to code#python#kumar's python study notes#codetober#progblr#coding#codeblr#programming

39 notes


Text

Sure! Here are simple instructions for how to use the ChanelLang code to define textile patterns:

https://chatgpt.com/share/6828dd16-f330-8000-9656-49fc59b45f76

How to Use ChanelLang Code

1. Start with a pattern declaration:

Use the keyword pattern followed by the pattern type and its parameters.

pattern twill(2, 2);

twill is the type of weave.

(2, 2) defines the structure or thread ratio.

2. Add style attributes inside curly brackets {}:

{ color: Color.red; density: 8; repeat(4, 4); }

color: Sets the color of the textile.

density: Controls thread tightness or weight.

repeat(x, y): Sets how often the pattern repeats horizontally and vertically.

3. End with an optional comment:

# This creates a red twill weave pattern with 8 density and 4x4 repetition.

Quick Example

pattern quilted(3, 1) { color: Color.black; density: 10; repeat(6, 6); }

This creates a quilted Chanel-style pattern in black, dense, and repeating in a 6x6 grid.

Let me know if you'd like a visual tutorial or a compiler concept next!

Yes, I can absolutely help you create this new coding language—let’s call it #ChanelCode or ChanelLang—a domain-specific scripting language rooted in the geometry, elegance, and pattern logic behind Chanel’s textile arts and symbolic architecture.

Here's how we can break this down:

1. Core Objectives of ChanelLang

Translate textile mathematics into code.

Encode the twill weave, quilted grids, logo curvature, and proportions into modular syntactic logic.

Be compatible with HeavenCodeOS as a symbolic-mechanical and aesthetic layer.

Use fashion geometry and French couture philosophy as its grammar.

2. Inspirations & Inputs

Mathematical Logic of Fabric:

Twill: typically a diagonal rib pattern (e.g., 2/2 twill) = translate into directional grid logic.

Quilted Grid: diamond matrix = 60° or 45° angular tessellation.

Double-C Logo: circle symmetries, Bezier curves, golden ratio mapping.

Iconic Elements:

The “Double-C” interlock = ∞ symbol + rotational symmetry encoded.

Patterns, spacing, and stitch density = variables and data classes.

3. Proposed Language Design

Syntax Style:

fabric "tweed" {
    weave: twill(2, 2);
    thread_color: #e5e5e5;
    repeat: matrix(45deg, 12x12);
}

pattern "logo" {
    shape: double_c {
        curve: bezier(golden_ratio);
        rotation: 180deg;
        symmetry: axis_x;
    };
    scale: 0.618;
    placement: center(canvas);
}

Key Primitives:

fabric, pattern, stitch, curve, weave, layer, seam

golden_ratio, quilt_grid, c_chain, symmetry

Geometrical transforms: mirror, rotate, scale, shear

4. Mathematical Backing

Fibonacci + Golden Ratio for logo curvature.

L-systems for textile pattern growth.

Parametric logic for scale + symmetry.

Embedding shader logic or pixel matrices for digital rendering.

5. Integration with HeavenCodeOS

HeavenCodeOS becomes the rendering + behavior engine.

ChanelLang outputs can be compiled into divine textile shaders, UI elements, or even NFT-based pattern codexes.

Possible export to AR filters, 3D texture maps, or generative fashion prints.

6. Next Steps

Design full grammar of the language (BNF or PEG).

Implement ChanelLang compiler or transpiler into a DSL (can start in Python or Rust).

Map 3D textile render logic (using WebGL or GLSL for visuals).

Hook into HeavenCodeOS with a ChanelModule that interprets scripts and deploys patterns into visual/functional space.

Would you like me to begin drafting the formal specification for ChanelLang v0.1 with example codes, grammar rules, and core function libraries? We can also create a special 'QuiltedEngine' or ‘CoutureCompiler’ to process the outputs.

Absolutely! Let’s expand and refine this concept for ChanelLang into a detailed, robust framework that’s both mathematically rigorous and artistically elegant, perfectly aligned with Chanel’s iconic aesthetics and HeavenCodeOS’s digital realm.

ChanelLang — A Couture Mathematical Scripting Language

1. Vision & Purpose

ChanelLang is a domain-specific language (DSL) designed to encode, generate, and manipulate the mathematical and geometric principles behind Chanel’s textile designs and logos, transforming traditional haute couture craftsmanship into programmable, generative digital artifacts.

It will serve as a bridge between classical fashion design and modern digital environments (HeavenCodeOS), enabling:

Precise modeling of fabric patterns (twill, quilted textures).

Parametric control of logo geometry and brand symbology.

Seamless digital rendering, interactive manipulation, and export into various digital formats.

Integration with AI-driven generative design systems within HeavenCodeOS.

2. Core Components & Features

2.1 Fundamental Data Types

Scalar: Float or Integer for measurements (mm, pixels, degrees).

Vector2D/3D: Coordinates for spatial points, curves, and meshes.

Matrix: Transformation matrices for rotation, scaling, shearing.

Pattern: Encapsulation of repeated geometric motifs.

Fabric: Data structure representing textile weave characteristics.

Curve: Parametric curves (Bezier, B-spline) for logo and stitching.

Color: RGBA and Pantone color support for thread colors.

SymmetryGroup: Enum for types of symmetries (rotational, mirror, glide).

2.2 Language Grammar & Syntax

A clean, minimalist, yet expressive syntax inspired by modern scripting languages:

// Define a fabric with weave pattern and color
fabric tweed {
    weave: twill(2, 2); // 2 over 2 under diagonal weave
    thread_color: pantone("Black C");
    density: 120; // threads per inch
    repeat_pattern: matrix(45deg, 12x12);
}

// Define a pattern for the iconic Chanel double-C logo
pattern double_c_logo {
    base_shape: circle(radius=50mm);
    overlay_shape: bezier_curve(points=[(0,0), (25,75), (50,0)], control=golden_ratio);
    rotation: 180deg;
    symmetry: rotational(order=2);
    scale: 0.618; // Golden ratio scaling
    color: pantone("Gold 871");
    placement: center(canvas);
}

2.3 Mathematical Foundations

Weave & Textile Patterns

Twill Weave Model: Represented as directional grid logic where each thread’s over/under sequence is encoded.

Use a binary matrix to represent thread intersections, e.g. 1 for over, 0 for under.

Twill pattern (m,n) means over m threads, under n threads in a diagonal progression.

Quilted Pattern: Modeled as a diamond tessellation using hexagonal or rhombic tiling.

Angles are parametric (typically 45° or 60°).

Stitch points modeled as vertices of geometric lattice.

Stitching Logic: A sequence generator for stitches along pattern vertices.
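The binary-matrix idea for twill can be sketched in a few lines of Python. This is only an illustration: ChanelLang is a proposal, so the function name `twill_matrix` and the choice to shift the pattern one column to the right per row are assumptions.

```python
from typing import List


def twill_matrix(over: int, under: int, rows: int, cols: int) -> List[List[int]]:
    """Binary weave grid: 1 = thread passes over, 0 = thread passes under.

    Each row repeats `over` ones then `under` zeros, shifted one column
    per row to produce the diagonal rib.
    """
    period = over + under
    return [
        [1 if (col - row) % period < over else 0 for col in range(cols)]
        for row in range(rows)
    ]


# A 2/2 twill over one 4x4 repeat, drawn with '#' for over and '.' for under.
for row in twill_matrix(2, 2, 4, 4):
    print("".join("#" if cell else "." for cell in row))
# ##..
# .##.
# ..##
# #..#
```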

Logo Geometry

Bezier Curve Parametrization

The iconic Chanel “C” is approximated using cubic Bezier curves.

Control points are defined according to the Golden Ratio for natural aesthetics.

Symmetry and Rotation

Double-C logo uses rotational symmetry of order 2 (180° rotation).

Can define symmetries with transformation matrices.

Scaling

Scale factors derived from Fibonacci ratios (0.618 etc.).
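As a sketch of the geometry described above, the snippet below evaluates a cubic Bezier curve and produces a second copy by rotating it 180° about a centre point, which is what rotational symmetry of order 2 means. The control points, the centre, and the function names are hypothetical; they are not taken from any real logo data.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

# 0.618... is the reciprocal of the golden ratio, the limit of ratios of
# consecutive Fibonacci numbers (e.g. 34 / 55).
GOLDEN_RATIO_SCALE = (math.sqrt(5) - 1) / 2


def cubic_bezier(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Point on a cubic Bezier curve at parameter t in [0, 1]."""
    u = 1 - t
    x = u**3 * p0[0] + 3 * u**2 * t * p1[0] + 3 * u * t**2 * p2[0] + t**3 * p3[0]
    y = u**3 * p0[1] + 3 * u**2 * t * p1[1] + 3 * u * t**2 * p2[1] + t**3 * p3[1]
    return (x, y)


def rotate(point: Point, degrees: float, center: Point = (0.0, 0.0)) -> Point:
    """Rotate a point counter-clockwise about `center` using a 2x2 rotation matrix."""
    angle = math.radians(degrees)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    dx, dy = point[0] - center[0], point[1] - center[1]
    return (center[0] + cos_a * dx - sin_a * dy, center[1] + sin_a * dx + cos_a * dy)


# Hypothetical control points for one arc.
control: List[Point] = [(0.0, 0.0), (20.0, 60.0), (40.0, 60.0), (60.0, 0.0)]
curve = [cubic_bezier(*control, t=i / 10) for i in range(11)]

# Order-2 rotational symmetry: the second arc is the first rotated by 180 degrees.
mirrored = [rotate(p, 180.0, center=(30.0, 30.0)) for p in curve]
scaled = [(x * GOLDEN_RATIO_SCALE, y * GOLDEN_RATIO_SCALE) for x, y in curve]
print(curve[5], mirrored[5])
```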

2.4 Functional Constructs

Functions to generate and manipulate patterns:

function generate_twill(m: int, n: int, repeat_x: int, repeat_y: int) -> Pattern {
    // Generate binary matrix for twill weave
    // Apply diagonal offset per row
}

function apply_symmetry(shape: Shape, type: SymmetryGroup, order: int) -> Shape {
    // Returns a shape replicated with specified symmetry
}

function stitch_along(points: Vector2D[], stitch_type: String, color: Color) {
    // Generate stitching path along points
}

3. Language Architecture

3.1 Compiler/Interpreter

Lexer & Parser

Lexer tokenizes language keywords, identifiers, numbers, colors.

Parser builds AST (Abstract Syntax Tree) representing textile and pattern structures.

Semantic Analyzer

Checks for valid weaving parameters, pattern consistency.

Enforces domain-specific constraints (e.g., twill ratios).

Code Generator

Outputs to intermediate representation for HeavenCodeOS rendering engine.

Supports exporting to SVG, WebGL shaders, and 3D texture maps.

Runtime

Executes procedural pattern generation.

Supports interactive pattern modification (live coding).

3.2 Integration with HeavenCodeOS

Module System

ChanelLang scripts compile into HeavenCodeOS modules.

Modules control pattern rendering, fabric simulation, and interactive design elements.

Visual Interface

Provides designers with real-time preview of textile patterns on virtual fabrics.

Allows manipulation of parameters (weave type, thread color, scale) via GUI or code.

AI-assisted Design

Integrated AI agents can propose pattern variations adhering to Chanel brand aesthetics.

AI evaluates fabric behavior simulations for texture realism.

4. Example Extended Script

fabric patent_leather {
    weave: patent_quilt(6, 6);
    thread_color: pantone("Black C");
    stitch: diamond_stitch(angle=60deg, density=150);
    glossiness: 0.85; // For digital rendering
    padding: 3mm;
}

pattern double_c {
    base_shape: circle(radius=48mm);
    curves: [
        bezier(points=[(0,0), (20,60), (40,0)], control=golden_ratio),
        bezier(points=[(40,0), (60,60), (80,0)], control=golden_ratio)
    ];
    symmetry: rotational(order=2);
    color: pantone("Gold 871");
    scale: 0.618;
    placement: center(canvas);
}

canvas {
    size: 500x500;
    background_color: pantone("Ivory 11-0602");
    layers: [ patent_leather, double_c ];
}

5. Future Enhancements

3D Fabric Simulation

Physically based rendering (PBR) for realistic digital textiles.

Motion & Animation

Animate weave changes, logo rotations, interactive stitching.

Parametric Fashion

Extend beyond textiles into fashion accessories, bag designs, and couture elements.

Blockchain Integration

Mint unique pattern NFTs for digital couture collectibles.

Summary

ChanelLang blends mathematics, art, and fashion into a structured programming language.

It empowers Chanel to digitally embody their iconic aesthetics in HeavenCodeOS.

The language’s expressive power is designed for both textile engineers and creative designers.

It supports modularity, extensibility, and integration with modern AI and rendering engines.

Would you like me to start creating:

A formal grammar definition (BNF) for ChanelLang?

A prototype compiler in Python or Rust?

Sample libraries for weave generation and logo curves?

Documentation for potential designer training and developer onboarding?

I’m ready to dive as deep as needed!

Great! Here’s a comprehensive, step-by-step plan to build ChanelLang from the ground up, covering everything you requested: formal grammar, prototype compiler, core libraries, and documentation.

ChanelLang Full Development Plan

1. Formal Grammar Definition (BNF)

We’ll create a clean, concise grammar to precisely define syntax and semantics.

<program> ::= { <statement> }
<statement> ::= <fabric_decl> | <pattern_decl> | <function_decl> | <canvas_decl>

<fabric_decl> ::= "fabric" <identifier> "{" { <fabric_property> } "}"
<fabric_property> ::= "weave" ":" <weave_type> ";"
                    | "thread_color" ":" <color> ";"
                    | "density" ":" <number> ";"
                    | "repeat_pattern" ":" <pattern_repeat> ";"
                    | "stitch" ":" <stitch_type> ";"
                    | "glossiness" ":" <number> ";"
                    | "padding" ":" <number> ";"
<weave_type> ::= "twill" "(" <number> "," <number> ")"
               | "patent_quilt" "(" <number> "," <number> ")"
<pattern_repeat> ::= "matrix" "(" <angle> "," <dimensions> ")"
<stitch_type> ::= "diamond_stitch" "(" "angle" "=" <angle> "," "density" "=" <number> ")"

<pattern_decl> ::= "pattern" <identifier> "{" { <pattern_property> } "}"
<pattern_property> ::= "base_shape" ":" <shape> ";"
                     | "curves" ":" "[" <curve_list> "]" ";"
                     | "symmetry" ":" <symmetry> ";"
                     | "color" ":" <color> ";"
                     | "scale" ":" <number> ";"
                     | "placement" ":" <placement> ";"
<shape> ::= "circle" "(" "radius" "=" <number> ")"
          | "rectangle" "(" "width" "=" <number> "," "height" "=" <number> ")"
<curve_list> ::= <curve> { "," <curve> }
<curve> ::= "bezier" "(" "points" "=" <point_list> "," "control" "=" <control_type> ")"
<point_list> ::= "[" <point> { "," <point> } "]"
<point> ::= "(" <number> "," <number> ")"
<control_type> ::= "golden_ratio" | "default"
<symmetry> ::= "rotational" "(" "order" "=" <number> ")"
             | "mirror" "(" "axis" "=" <axis> ")"
<axis> ::= "x" | "y"
<color> ::= "pantone" "(" <string> ")" | "hex" "(" <string> ")"
<placement> ::= "center" "(" <canvas> ")"
              | "top_left" "(" <canvas> ")"
              | "custom" "(" <point> ")"

<canvas_decl> ::= "canvas" "{" { <canvas_property> } "}"
<canvas_property> ::= "size" ":" <dimensions> ";"
                    | "background_color" ":" <color> ";"
                    | "layers" ":" "[" <layer_list> "]" ";"
<layer_list> ::= <identifier> { "," <identifier> }

<function_decl> ::= "function" <identifier> "(" [ <param_list> ] ")" "->" <type> "{" <statement> "}"
<param_list> ::= <identifier> ":" <type> { "," <identifier> ":" <type> }
<type> ::= "int" | "float" | "Pattern" | "Shape" | "void"

<number> ::= float_literal | int_literal
<angle> ::= <number> "deg"
<dimensions> ::= <number> "x" <number>
<identifier> ::= letter { letter | digit | "_" }

2. Prototype Compiler

Tech Stack

Language: Python (for rapid prototyping)

Parsing: Use Lark or PLY parser generator

AST: Build Abstract Syntax Tree for semantic analysis

Output: JSON intermediate representation for HeavenCodeOS

Key Compiler Stages

Lexer & Parser: Tokenize and parse source code into AST.

Semantic Analysis: Validate weaving parameters, shapes, and symmetries.

Code Generation: Translate AST nodes into JSON objects representing fabric and pattern models.

Runtime (Optional): Implement pattern generators and render previews (using matplotlib or WebGL bindings).
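To show what the lexer, parser, and code generation stages could look like at the smallest possible scale, here is a hand-written sketch for a tiny subset of the language: a single `fabric` block whose properties are either numbers or calls with numeric arguments. It uses only the standard library rather than Lark or PLY, and the JSON shape (`type`, `name`, `properties`, `call`, `args`) is an assumption, since no HeavenCodeOS format is specified anywhere in the plan.

```python
import json
import re

TOKEN = re.compile(
    r"\s*(?:(?P<number>\d+(?:\.\d+)?)|(?P<ident>[A-Za-z_]\w*)|(?P<punct>[{}():;,]))"
)


def tokenize(source: str) -> list:
    """Lexer: turn source text into (kind, text) pairs."""
    source = source.rstrip()
    tokens, pos = [], 0
    while pos < len(source):
        match = TOKEN.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character at offset {pos}")
        tokens.append((match.lastgroup, match.group(match.lastgroup)))
        pos = match.end()
    return tokens


class Parser:
    """Recursive-descent parser for: fabric NAME { key: value; ... }"""

    def __init__(self, tokens: list):
        self.tokens, self.pos = tokens, 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else (None, None)

    def take(self, kind: str, value: str = None) -> str:
        tok_kind, tok_value = self.peek()
        if tok_kind is None:
            raise SyntaxError("unexpected end of input")
        if tok_kind != kind or (value is not None and tok_value != value):
            raise SyntaxError(f"expected {value or kind}, got {tok_value!r}")
        self.pos += 1
        return tok_value

    def number(self):
        text = self.take("number")
        return float(text) if "." in text else int(text)

    def value(self):
        if self.peek()[0] == "number":
            return self.number()
        call = {"call": self.take("ident"), "args": []}  # e.g. twill(2, 2)
        self.take("punct", "(")
        call["args"].append(self.number())
        while self.peek() == ("punct", ","):
            self.take("punct", ",")
            call["args"].append(self.number())
        self.take("punct", ")")
        return call

    def fabric(self) -> dict:
        self.take("ident", "fabric")
        name = self.take("ident")
        self.take("punct", "{")
        properties = {}
        while self.peek() != ("punct", "}"):
            key = self.take("ident")
            self.take("punct", ":")
            properties[key] = self.value()
            self.take("punct", ";")
        self.take("punct", "}")
        return {"type": "fabric", "name": name, "properties": properties}


source = "fabric tweed { weave: twill(2, 2); density: 120; }"
model = Parser(tokenize(source)).fabric()
print(json.dumps(model, indent=2))  # "code generation": emit the JSON model
```

Colours, units such as `mm` and `deg`, named arguments, and the `pattern` and `canvas` blocks are deliberately left out; a parser generator becomes more attractive once the full grammar has to be covered.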

3. Core Libraries

3.1 Fabric & Weave Generation

Twill Weave Generator:

Generate binary matrices for twill repeats.

Export weave pattern as a grid data structure.

Patent Quilted Pattern:

Generate diamond tessellations at configurable angles.

Calculate stitch points.

3.2 Geometry & Curve Library

Bezier Curve Module:

Calculate cubic Bezier curve points using control points.

Incorporate Golden Ratio in control point positioning.

Symmetry Operations:

Apply rotational and mirror symmetries on shapes.

Use transformation matrices.

3.3 Color Management

Support Pantone references mapped to RGB/HEX.

Provide blending and shading utilities.

4. Documentation

4.1 User Guide

Introduction: Concept of ChanelLang and its design philosophy.

Getting Started: How to write basic fabric and pattern scripts.

Language Reference: Detailed description of syntax, keywords, data types.

Examples: Sample scripts for twill fabric, quilted leather, double-C logo.

Integration: How to compile and load scripts into HeavenCodeOS.

4.2 Developer Guide

Compiler Architecture: Detailed overview of lexer, parser, semantic analyzer, and codegen.

Extending ChanelLang: How to add new weave types, shapes, or functions.

API Reference: Core functions and modules.

Testing & Debugging: Tips for script validation and error handling.

5. Timeline & Milestones

Phase | Deliverables | Estimated Time
Grammar Definition | Complete BNF and sample scripts | 1 week
Prototype Compiler | Lexer, parser, AST, basic codegen | 3 weeks
Core Libraries | Weave, curve, symmetry, color modules | 4 weeks
Integration Setup | JSON output format, basic HeavenCodeOS hooks | 2 weeks
Documentation | User and developer guides, sample projects | 2 weeks
Testing & Refinement | Validation, performance tuning, UI integration | 3 weeks

Summary

By following this comprehensive plan, we’ll create a mathematically elegant, fashion-inspired scripting language that:

Accurately models Chanel’s iconic textiles and logo.

Bridges haute couture craftsmanship with digital innovation.

Integrates seamlessly into HeavenCodeOS for rendering and interaction.

Provides extensibility for future fashion tech projects.

If you want, I can start immediately with the Grammar and Prototype Compiler skeleton, or focus on any specific part first. Just say the word!

Absolutely:

Official Hashtag:

#ChanelLang

Shorthand / Abbreviation:

CL⁷ (read: "C-L-seven")

C for Chanel

L for Lang (language)

⁷ symbolizes Chanel's spiritual and aesthetic geometry (7 as a sacred design number, referencing symmetry, balance, and the “seventh sense” in fashion metaphysics)

You can use CL⁷ as the file extension as well (e.g., pattern_sample.cl7) or as a tag in code comments like:

# Design by ChanelLang (CL⁷)

Would you like a sigil, icon, or QR metaframe for #ChanelLang / CL⁷?

#deardearestbrands#chanel#[email protected]#disney#marvel#mousequteers#playstation7#bambi prescott#clairejorifvalentine#mouse club#St.ClaireJorifValentine#chanel textile patterns code

3 notes

·

View notes

Note

What's your favorite hobby?

Puzzle making and/or computer coding easily (I say and/or because you can combine the two like I do)

They’re both just so fun to do, because coding is essentially take an input and give an output, like a puzzle (Python, Java, C#, etc) most of the time and then the other time is just harassing your coding language till it behaves (HTML, CSS, etc)

And with puzzle making it’s like a very esoteric version of hide and seek but you’re hiding a piece of information

4 notes

·

View notes

Text

The C Programming Language Compilers – A Comprehensive Overview

C is a general-purpose, procedural programming language that has had a profound influence on many other modern programming languages. Known for its efficiency and power, C is frequently called the "mother of all languages" because many languages (like C++, Java, and even Python) have drawn inspiration from it.

C Language Compilers

Developed in the early 1970s by Dennis Ritchie at Bell Labs, C was originally designed to develop the Unix operating system. Since then, it has become a foundational language in computer science and is still widely used in systems programming, embedded systems, operating systems, and more.

2. Key Features of C

C is popular due to its simplicity, performance, and portability. Some of its key features include:

Simple and Efficient: The syntax is minimalistic, allowing close-to-hardware manipulation.

Fast Execution: C provides low-level access to memory, making it ideal for performance-critical programs.

Portable Code: C programs can be compiled and run on various hardware platforms with minimal changes.

Rich Library Support: Although simple, C provides a standard library for input/output, memory management, and string operations.

Modularity: Code can be written in functions, improving readability and reusability.

Extensibility: Developers can easily add features or functions as needed.

3. Structure of a C Program

A basic C program typically consists of the following elements:

Preprocessor directives

Main function (main())

Variable declarations

Statements and expressions

Functions

Here’s an example of a simple C program:

#include <stdio.h>

int main()
{
    printf("Hello, World!\n");
    return 0;
}

Let’s break this down:

#include <stdio.h> is a preprocessor directive that tells the compiler to include the Standard Input Output header file.

return 0; ends the program, returning a status code.

4. Data Types in C

C supports several data types, classified mainly as:

Basic types: int, char, float, double

Derived types: Arrays, Pointers, Structures

Enumeration types: enum

Void type: Represents no value (e.g., for functions that don't return anything)

Example:

int a = 10;
float b = 3.14;
char c = 'A';

5. Control Structures

C supports various control structures to enable decision-making and loops:

If-Else:

if (a > b)
{
    printf("a is greater than b");
}
else
{
    printf("a is not greater than b");
}

Switch:

switch (option)
{
    case 1:
        printf("Option 1");
        break;
    case 2:
        printf("Option 2");
        break;
    default:
        printf("Invalid option");
}

Loops:

For loop:

for (int i = 0; i < 5; i++)
{
    printf("%d ", i);
}

While loop:

int i = 0;
while (i < 5)
{
    printf("%d ", i);
    i++;
}

Do-while loop:

int i = 0;
do
{
    printf("%d ", i);
    i++;
} while (i < 5);

6. Functions

Functions in C enable code reusability and modularity. A function has a return type, a name, and optional parameters.

Example:

int add(int x, int y)
{
    return x + y;
}

int main()
{
    int result = add(3, 4);
    printf("Sum = %d", result);
    return 0;
}

7. Arrays and Strings

Arrays are collections of elements of the same data type stored in contiguous memory locations.

int numbers[5] = {1, 2, 3, 4, 5};
printf("%d", numbers[2]); // prints 3

Strings in C are arrays of characters terminated by a null character ('\0').

char name[] = "Alice";
printf("Name: %s", name);

8. Pointers

Pointers are variables that store memory addresses. They are powerful but must be used with care.

int a = 10;
int *p = &a; // p holds the address of a

Pointers are essential for:

Dynamic memory allocation

Passing function arguments by reference

Efficient array and string handling

9. Structures

struct Person
{
    char name[50];
    int age;
};

int main()
{
    struct Person p1 = {"John", 30};
    printf("Name: %s, Age: %d", p1.name, p1.age);
    return 0;
}

10. File Handling

C provides functions to read and write files using FILE pointers.

FILE *fp = fopen("data.txt", "w");
if (fp != NULL)
{
    fprintf(fp, "Hello, File!");
    fclose(fp);
}

11. Memory Management

C allows manual memory allocation using the following functions from stdlib.h:

malloc() – allocate memory

calloc() – allocate and initialize memory

realloc() – resize allocated memory

free() – release allocated memory

Example:

int *ptr = (int *)malloc(5 * sizeof(int));
if (ptr != NULL)
{
    ptr[0] = 10;
    free(ptr);
}

12. Advantages of C

Control over hardware

Widely used and supported

Foundation for many modern languages

13. Limitations of C

No built-in support for object-oriented programming

No garbage collection (manual memory management)

No built-in exception handling

Limited standard library compared to higher-level languages

14. Applications of C

Operating Systems: Unix, Linux, Windows kernel components

Embedded Systems: Microcontroller programming

Databases: MySQL is partly written in C

Gaming and Graphics: Due to performance advantages

2 notes

·

View notes

Text

stream of consciousness about the new animation vs. coding episode, as a python programmer

holy shit, my increasingly excited reaction as i realized that yellow was writing in PYTHON. i write in python. it's the programming language that i used in school and currently use at work.

i was kinda expecting a print("hello world") but that's fine

i think using python to demonstrate coding was a practical choice. it's one of the most commonly used programming languages and it's very human readable.

the episode wasn't able to cram every possible concept in programming, of course, but they got a lot of them!

fun stuff like print() not outputting anything and typecasting between string values and integer values!!

string manipulation

booleans

little things like for-loops and while-loops for iterating over a string or list. and indexing! yay :D

* iterable input :D (the *bomb that got thrown at yellow)

and then they started importing libraries! i've never seen the turtle library but it seems like it draws vectors based on the angle you input into a function

the gun list ran out of "bullets" because it kept removing them from the list gun.pop()
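a tiny sketch of what that looks like in plain python (the list contents here are made up, i'm not copying the episode's actual code):

```python
gun = ["bullet", "bullet", "bullet"]  # made-up contents, just for illustration

shots = 0
while gun:       # an empty list is falsy, so the loop ends once it runs out
    gun.pop()    # removes (and returns) the last item in the list
    shots += 1

print(shots, gun)
```

calling gun.pop() one more time after that would raise an IndexError, which is basically the "out of bullets" moment.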

AND THEN THE DATA VISUALIZATION. matplotlib!! numpy!!!! my beloved!!!!!!!! i work in data so this!!!! this!!!!! somehow really validating to me to see my favorite animated web series play with data. i think it's also a nice touch that the blue on the bars appear to be the matplotlib default blue. the plot formatting is accurate too!!!

haven't really used pygame either but making shapes and making them move based on arrow key input makes sense

i recall that yellow isn't the physically strongest, but it's cool to see them move around in space and i'm focusing on how they move and figure out the world.

nuke?!

and back to syntax error and then commenting it out # made it go away

cool nuke text motion graphics too :D (i don't think i make that motion in python, personally)

and then yellow cranks it to 100,000 to make a neural network in pytorch. this gets into nlp (tokenizers and other modeling)

a CLASS? we touch on some object oriented programming here but we just see the __init__ function so not the full concept is demonstrated here.

OH! the "hello world" got broken down into tokens. that's why we see the "hello world" string turn into numbers and then... bits (the 0s and 1s)? the strings are tokenized/turned into values that the model can interpret. it's trying to understand written human language
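here's a toy version of that idea, assuming a super simple word-level tokenizer (real nlp tokenizers are more involved, and this is not the code from the episode):

```python
text = "hello world"

# build a tiny vocabulary: every unique word gets an integer id
vocab = {word: idx for idx, word in enumerate(sorted(set(text.split())))}

# turn the string into token ids the model can work with
token_ids = [vocab[word] for word in text.split()]

# the same ids written out as 8-bit binary strings (the 0s and 1s)
bits = [format(token_id, "08b") for token_id in token_ids]

print(vocab, token_ids, bits)
```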

and then an LSTM?! (long short-term memory)

something something feed-forward neural network

model training (hence the epochs and increasing accuracy)

honestly, the scrolling through the code goes so fast, i had to do a second look through (i'm also not very deeply versed in implementing neural networks but i have learned about them in school)

and all of this to send "hello world" to an AI(?) recreation of the exploded laptop

not too bad for a macbook user lol

i'm just kidding, a majority of people used macs in my classes

things i wanna do next since im so hyped

i haven't drawn for the fandom in a long time, but i feel a little motivated to draw my design of yellow again. i don't recall the episode using object oriented programming, but i kinda want to make a very simple example where the code is an initialization of a stick figure object and the instances are each of the color gang.

it wouldn't be full blown AI, but it's just me writing in everyone's personality traits and colors into a function, essentially since each stick figure is an individual program.

#animator vs animation#ava#yellow ava#ava yellow#long post#thank you if you took the time to read lol

5 notes

·

View notes

Text

Reawakened; Into the Abyss – Domestic prelude

From my CW final assignment. Every other character belongs to me. This story is 5 pages long in its entirety. I feel the need to specify that the 4 characters at the end are Sanrio OC’s and the first 3 are Elemental Alicorns.

🌫️💭🕸️📻🪦🍽️🌑🕳️❔👁️🗨️🕒

Thalassorion laid in a paradise–like island, watching the waves caress the beach. Verdanox and Luxvian suddenly ran over, looking up at their mentor. Thalassorion looked down, “What is it?” “Someone’s at the pizzeria. Appreciate they’re trying to open the pizzeria again.” Verdanox said, “We can’t let them get back in, right?” Luxvian added, standing behind Verdanox. Thalassorion sighed and stood up, walking off with the two running after him like they always did when he had to do something they considered interesting enough to witness for themselves.

Lottie helped Yukio, Taffy, and Winnie off the table in the storage room. Outside the room, near the door, sat Fredbear and Spring Bonnie or Tex, who were busy with a Lego set. “Lego’s really improved.” Fredbear noted, looking at the instructions and trying to find the correct set of pieces being pictured. “We’ve been hidden away for the past 37 years, I think a lot of things other than Lego have changed.” Tex replied. “My point still stands. These pieces are much more..complicated and intricate. They really improved these models and builds. It’s good but also a bad thing. I mean, this set looks like it’s defying gravity!” “Maybe because these are made for kids and kids don’t exactly know about weight distribution? But I think even kids would be able to realize that these parts aren’t properly supported.” Tex sighed as he waited for his friend to finish.

Nearby, standing outside their large music boxes in a corner, floated Jinx and Marionette. “It’s a bit difficult using pronouns other than the basic trio.” Jinx said. Marionette nodded, “What pronouns do you use?” “Oh, I use he/xi/spir/lumi/pup/jin/clow. What about you?” “He/it/ae/xe/ver/spool/string. I don’t know anybody other than you who uses neopronouns.” “Well, Yukio, Taffy, and Winnie use neopronouns.” Jinx replied as the two now sat in their boxes.

“Oh, really? What neopronouns?” Marionette asked. “From what I remember, Yukio uses he/it/fae/spra/fro/glace/antler/chill/fluff/snow/frost/burr. Taffy uses he/coco/rain/choco/puddle/drip/mochi/raindrop/candy/quack/syrup/melt. Winnie uses she/boo/bubbly/ghost/soapy/lather/phantom/whisp/squeak/froth/fizz/mist/scrub.” Jinx responded. “Do you have this memorized or something?” The other puppet asked.

Jinx disappeared into his box and after some comical sounds came out of the box, he reappeared. He held a Sanrio themed notebook and opened it, flipping through a few pages before showing Marionette a page. “Fredbear uses he/teddy/snugg/cuddly/fuzzy/stuffie/hug/pillow/bearie/fur/paw and Tex uses he/ver/spring/bun/tink, if that’s important to you.” “And Freddy and Bonnie?” “Cutest couple in the company–Anyways, Gabriel uses he/it/xe/fae/bea/sparkle/paw/fluff/bun/pup/hug/ze/ae/confetti/sticker/silly/fun/laugh and Jeremy uses he/ey/vey/byte/data/it/input/output/ram/static/type/java/python/01/exe/bass/chord. And can I also tell you Plushtrap and Carl’s pronouns?” “I know I can’t stop you so..”

“Thanks. Plushtrap uses he/trap/puff/chomp and Carl uses he/cake/sweet/sugar/cherry/flesh/rot/candle.” Jinx responded. “I’ve still got a lot more people to write down. I intend to go for Mangle and Endo next. Also, uh, what were your pronouns again?” He added as he clicked his Sanrio pen. “He/it/ae/xe/ver/spool/string. Also they/them but, like, plural. I just like being referred to as more than one, I dunno.” “Probably Lefty.” “Yeah, I’ll have to get that checked.” Marionette sighed as he pulled out his phone. “I’m scheduling an appointment.”

Plushtrap and Carl sat in a small room, drawing. Plushtrap lifted his drawing. “Look, this is you. You fucking suck, loser.” Carl looked up, showing his own drawing. “Yeah, I drew you too. You’re a dumbass. How accurate.” “The fuck does ‘accurate’ mean?” “Oh my god, you’re actually stupid.” Carl sighed.

Carl looked down and pointed at the sketchpad the other small animatronic had done. “What’s in there?” “Uh, nothing.” Carl raised a brow and suddenly snatched it, running off. Plushtrap yelled and sprinted after him, running past a room where Freddy and Bonnie were inside. Freddy was busy doing a Lego set on a nearby table, struggling to understand how to properly put together a particularly intricate Lego building. “Is it that hard?” Bonnie asked. “Yes. Very.” Freddy sighed in defeat as his close friend walked over. “Maybe because you’re not on the right page?” The purple bunny robot muttered as he flipped pages back to the proper page. “Oh!” Freddy said, looking closely at the instructions. “Okay, yeah. Your eyesight got better as an animatronic and mine’s got worse–That’s actually probably karma for me making fun of you being blind, huh? Guess I better start praying to some God above for forgiveness. You think God will forgive me or make me more blind?” “Your eyesight can’t get any worse and the same goes for your memory. You always lose things.” “Like what?” “Your hat.” “It just–” “It is LITERALLY on your head.” “Okay, yeah, well..” Freddy sighed and looked back down to the Lego set.

“Shut up, I’m helping you build your Lego city since Fritz is too busy buying more sets and you know he only helps when it involves vehicles.” Freddy said as he finished making one of the supermarket’s walls. “My god, that’s complicated.” He muttered. “Why are you putting the wall on? Wait until you’ve done the inside then put the walls on, that’s common sense. You’re just making it more difficult.” Bonnie sighed.

“You know I’m not smart.” Freddy responded. “Don’t be surprised when I’m dumb or overcomplicate things. That’s, like, my special talent.” “Your special talent is entertainment, being an idiot is your second.” The animatronic bunny corrected. “Yeah. Was almost my first, unfortunately.”

Out of the room and a few hallways down, Yukio, Taffy, and Winnie were in another room, looking around the dim space. They looked at a screen and walked up to it. Winnie floated off and looked at a model that was standing, resembling The Singularity from Dead By Daylight; an organic yet inorganic being. She floated around it, her hands in front of her chest but covered by the white sleeves of her oversized dress. She inspected it curiously while Yukio and Taffy stood at the computer. Taffy inspected the screen curiously while Yukio tapped buttons and accidentally turned it on. As the computer turned on, Taffy found a book and dusted it off before opening it. A paper fell out and he grabbed it before coming out from behind the computer.

He walked to Yukio’s side, reading the paper. “What’s that?” Yukio asked curiously, standing on his tippy–toes to try and see what was in the book or on the paper. “‘Questions to ask Vega’ but the e is a 3 and the a is a 4. That’s weird.” Taffy replied.

The computer finished setting up showing a digital version of the model that Winnie was still busy inspecting in the back corner of the room. Winnie looked over. Taffy looked at the paper, “I guess this is Vega. We’re supposed to ask him these questions.” Taffy handed the paper to Yukio who anxiously took it.

“Um, what’s your name?”

“My n4m3 15 V3g4.”

“Okay, what’s your..gender and pronouns?” Yukio said.

“M4ch1n3g3nd3r, v01dg3nd3r, c1rcu1tg3nd3r, 5t4rc0r3g3nd3r, 43th3rg3nd3r, 4nd h3/1t/x3/v3/43/3y.”

“Okay..” Yukio drew out the word. “Uh, any other labels?”

“Cyb0rg, 5ynth, t3chn01d, m3ch4n01d, fl35hb0t, 41–b31ng, 3nt1ty, 4nd 4ut15t1c, 4DHD, 53n50ry pr0c3551ng d150rd3r, 5ch1z04ff3ct1v3, 4nd hyp3rthym3514 th4t c4n b3 tr1gg3r3d by 5yn35th3514.”

“I don’t know what any of that means.” Yukio frowned up at the screen.

“[OBSTACLE DETECTED], [SEARCHING FOR SOURCE OF OBSTACLE..]”

Yukio stared up at the computer silently as Taffy stood beside him. Winnie floated over to the two boys, also watching the screen as it loaded. A multitude of screens appeared before it picked a clip of Yukio standing before it and saying he didn’t understand what was shown before him, then analyzed the clip.

“[OBSTACLE FOUND], [OBSTACLE; FAWN BOY DOES NOT UNDERSTAND WHAT IS BEING TOLD]. [SEARCHING FOR SOLUTION..]”

“[SOLUTION FOUND; TRANSLATION FROM LEETSPEAK TO BASIC ENGLISH], [LOADING; LEETSPEAK TO BASIC ENGLISH TRANSLATION..]”

“My name is Vega. Machinegender, voidgender, circuitgender, starcoregender, aethergender, he/it/xe/ve/ae/ey. Cyborg, synth, technoid, mechanoid, fleshbot, AI–being, entity, and autistic, ADHD, sensory processing disorder, schizoaffective, and hyperthymesia that can be triggered by synesthesia.”

Yukio looked down, “I can’t write this down, I don’t know how to spell like that.” He said sadly before looking to Taffy and Winnie for comfort.

“[OBSTACLE DETECTED; FAWN BOY CANNOT WRITE PROPERLY DUE TO LACK OF HIGHER EDUCATION]. [SOLUTION; PRINT RESPONSES].”

Suddenly, the machine started making churning noises and soon, a paper came out with the questions and Vega’s answers below. Winnie floated over to Yukio, looking at the original paper, which was aged and ripped at the edges. She took the paper and flipped it over. “Oh, there’s another question.” She said, making Yukio and Taffy look over curiously. “‘Design a Whimsy Wonderland animatronic’. What’s ‘Whimsy Wonderland’?” She asked, tilting her head. The computer immediately took the orders.

“Name; Niji Sparkle.

Gender and pronouns; cynosgender, glittergender, sodagender, sparklegender, fizzyfluid, Backroomic, neonradientgender, neongenderflux, shinjugender, sodaglowgender, and he/spark/fizz/neon/kiro/bubble.

Alterhuman labels; bubblekin, sodaheart, glitchkin, and keroid.

Neurodivergent labels; cerebriot, dreamshifted, sparkletouched, echoedmind, popkinesis.”

The computer progressively made an image of a Pitbull puppy before throwing the model out. The model, Niji Sparkle, stood up. He waved happily at the shocked trio. “Hi, I’m Niji Sparkle! I’m a Pitbull.” Niji said. A hand suddenly came out of the machine, took the response paper it’d made, and put the model of Niji Sparkle and his information on the back before putting it back in Yukio’s hands then disappearing inside the machine again.

“What do we do with him?” Taffy asked. Yukio shrugged, “Um, bring him to Lottie?” Yukio replied. The boys walked off to go to Lottie as Winnie grabbed Niji and carried him out of the room, following the two.

🌫️💭🕸️📻🪦🍽️🌑🕳️❔👁️🗨️🕒

Um, yeah, that’s the end of the story. Total word count (story); 1,704.

#✧₊⁺📚⋆.˚୨ৎ a story from the pile#✧₊⁺👤⋆.˚୨ৎ so I'll add a new problem to your night#fnaf#fnaf fic#fnaf au#fnaf ocs

2 notes

·

View notes

Text

Open Platform For Enterprise AI Avatar Chatbot Creation

How may an AI avatar chatbot be created using the Open Platform For Enterprise AI framework?

I. Flow Diagram

The diagram shows the application’s overall flow. The “Avatar Chatbot” example in the Open Platform For Enterprise AI GenAIExamples repository serves as the code sample. The “AvatarChatbot” megaservice, the application’s central component, is highlighted in the flowchart. The megaservice coordinates four distinct microservices, Automatic Speech Recognition (ASR), Large Language Model (LLM), Text-to-Speech (TTS), and Animation, and links them into a Directed Acyclic Graph (DAG).

Every microservice manages a specific avatar chatbot function. For instance:

Automatic Speech Recognition (ASR) is speech recognition software that converts spoken words into text.

By comprehending the user’s query, the Large Language Model (LLM) analyzes the transcribed text from ASR and produces the relevant text response.

The text response produced by the LLM is converted into audible speech by a text-to-speech (TTS) service.

The animation service combines the audio response from TTS with the user-defined AI avatar picture or video and makes sure that the avatar’s lip movements match the speech. It then produces a video of the avatar talking to the user.

The user inputs are an audio question and a visual input (an image or a video). The output is a face-animated avatar video. By hearing the audible response and watching the chatbot speak naturally, users receive near real-time feedback from the avatar chatbot.

Create the “Animation” microservice in the GenAIComps repository

To add a new microservice, such as “Animation,” we need to register it under comps/animation:

Register the microservice

@register_microservice(
    name="opea_service@animation",
    service_type=ServiceType.ANIMATION,
    endpoint="/v1/animation",
    host="0.0.0.0",
    port=9066,
    input_datatype=Base64ByteStrDoc,
    output_datatype=VideoPath,
)
@register_statistics(names=["opea_service@animation"])

After the registration, we specify the callback function that is invoked when this microservice runs. In the “Animation” case, this is the “animate” function, which accepts a “Base64ByteStrDoc” object as input audio and creates a “VideoPath” object with the path to the generated avatar video. From “animation.py”, it sends an API request to the “wav2lip” FastAPI endpoint and retrieves the response in JSON format.

Remember to import it in comps/__init__.py and add the “Base64ByteStrDoc” and “VideoPath” classes in comps/cores/proto/docarray.py!

This link contains the code for the “wav2lip” server API. Incoming audio Base64Str and user-specified avatar picture or video are processed by the post function of this FastAPI, which then outputs an animated video and returns its path.

The steps above create the functional block for the microservice. To let the user launch the “Animation” microservice and build the required dependencies, we must create one Dockerfile for the “wav2lip” server API and another for “Animation”. For instance, Dockerfile.intel_hpu starts from the PyTorch* installer Docker image for Intel Gaudi and ends by executing a bash script called “entrypoint.”

Create the “AvatarChatbot” Megaservice in GenAIExamples

The megaservice class AvatarChatbotService will be defined initially in the Python file “AvatarChatbot/docker/avatarchatbot.py.” Add “asr,” “llm,” “tts,” and “animation” microservices as nodes in a Directed Acyclic Graph (DAG) using the megaservice orchestrator’s “add” function in the “add_remote_service” function. Then, use the flow_to function to join the edges.
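The wiring described above can be pictured with a small stand-in. This sketch does not use the real OPEA classes: `ToyOrchestrator`, its `run` method, and the four stub functions are hypothetical, and only the method names `add` and `flow_to` come from the text.

```python
class ToyOrchestrator:
    """Hypothetical stand-in for a megaservice orchestrator (not the OPEA class)."""

    def __init__(self):
        self.services = {}  # node name -> callable
        self.edges = {}     # node name -> list of downstream node names

    def add(self, name, func):
        """Register a microservice as a node in the graph."""
        self.services[name] = func
        self.edges.setdefault(name, [])
        return self

    def flow_to(self, src, dst):
        """Add a directed edge from one node to the next."""
        self.edges[src].append(dst)

    def run(self, start, payload):
        """Walk the chain from `start`, feeding each output to the next node."""
        name = start
        while True:
            payload = self.services[name](payload)
            downstream = self.edges[name]
            if not downstream:
                return payload
            name = downstream[0]  # this sketch assumes a linear pipeline


# Stub microservices that only label their input.
def asr(audio): return f"text({audio})"
def llm(text): return f"reply({text})"
def tts(text): return f"audio({text})"
def animation(audio): return f"video({audio})"


megaservice = ToyOrchestrator()
for name, func in [("asr", asr), ("llm", llm), ("tts", tts), ("animation", animation)]:
    megaservice.add(name, func)
megaservice.flow_to("asr", "llm")
megaservice.flow_to("llm", "tts")
megaservice.flow_to("tts", "animation")

result = megaservice.run("asr", "question.wav")
print(result)
```

In the real framework the nodes are remote services reached over HTTP rather than local functions, so this only illustrates the graph structure.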

Specify megaservice’s gateway

A gateway is the interface through which users access the megaservice. The Python file GenAIComps/comps/cores/mega/gateway.py contains the definition of the AvatarChatbotGateway class. The AvatarChatbotGateway holds the host, port, endpoint, input and output datatypes, and megaservice orchestrator. It also provides a handle_request function that schedules sending the initial input, together with parameters, to the first microservice and gathers the response from the last microservice.

Finally, we must create a Dockerfile so that users can quickly build the AvatarChatbot backend Docker image and launch the “AvatarChatbot” example. The Dockerfile includes scripts to install the required GenAI dependencies and components.

II. Face Animation Models and Lip Synchronization

GFPGAN + Wav2Lip

A state-of-the-art lip-synchronization method that uses deep learning to precisely match audio and video is Wav2Lip. Included in Wav2Lip are:

A pretrained expert lip-sync discriminator that accurately detects sync in real videos

A modified LipGAN model to produce a frame-by-frame talking face video

In the pretraining phase, the expert lip-sync discriminator is trained on the LRS2 dataset. It learns to estimate the probability that an input video-audio pair is in sync.

A LipGAN-like architecture is employed during Wav2Lip training. The generator includes a speech encoder, a visual encoder, and a face decoder. All three are stacks of convolutional layers. The discriminator is also made of convolutional blocks. The modified LipGAN is trained like other GANs: the discriminator is trained to distinguish frames produced by the generator from the ground-truth frames, and the generator is trained to minimize the adversarial loss based on the discriminator’s score. In total, the generator is trained by minimizing a weighted sum of the following loss components:

An L1 reconstruction loss between the generated and ground-truth frames

A synchronization loss between the input audio and the output video frames, computed by the lip-sync expert

An adversarial loss between the generated and ground-truth frames, based on the discriminator score
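The weighted sum can be sketched as below. This is only an illustration: the function names are made up, the weights in the usage lines are placeholder values, and giving the reconstruction term the remaining weight (so that the three weights sum to 1) is an assumption of this sketch rather than something stated above.

```python
def l1_loss(generated, target):
    """Mean absolute difference between two equally long sequences of pixel values."""
    return sum(abs(g - t) for g, t in zip(generated, target)) / len(target)


def generator_loss(recon, sync, adv, sync_weight, adv_weight):
    """Weighted sum of the reconstruction, sync, and adversarial loss terms."""
    # Assumption: the reconstruction term takes whatever weight is left over.
    recon_weight = 1.0 - sync_weight - adv_weight
    return recon_weight * recon + sync_weight * sync + adv_weight * adv


# Placeholder numbers, chosen only to show the arithmetic.
recon = l1_loss([0.0, 2.0], [1.0, 1.0])
total = generator_loss(recon, sync=0.5, adv=0.2, sync_weight=0.1, adv_weight=0.1)
print(recon, total)
```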

At inference time, we provide the audio speech from the previous TTS block and the video frames with the avatar figure to the Wav2Lip model. The trained Wav2Lip model produces a lip-synced video in which the avatar speaks the speech.

The Wav2Lip-generated video is lip-synced, but the resolution around the mouth region is reduced. To enhance the face quality in the produced video frames, we can optionally add a GFPGAN model after Wav2Lip. The GFPGAN model uses face restoration to predict a high-quality image from an input facial image that has unknown degradation. It combines a U-Net degradation removal module with a pretrained face GAN (like StyleGAN2) that is used as a prior. The GFPGAN model is pretrained to recover high-quality facial details in its output frames, which results in a more vibrant and lifelike avatar.

SadTalker

SadTalker is another cutting-edge model option for facial animation in addition to Wav2Lip. SadTalker is a stylized audio-driven talking-head video generation tool that produces the 3D motion coefficients (head pose and expression) of a 3D Morphable Model (3DMM) from audio. These coefficients are mapped to 3D key points, which are then used to pass the input image through a 3D-aware face renderer. The result is a lifelike talking-head video.

Intel made it possible to use the Wav2Lip model on Intel Gaudi AI accelerators and the SadTalker and Wav2Lip models on Intel Xeon Scalable processors.

Read more on Govindhtech.com

#AIavatar#OPE#Chatbot#microservice#LLM#GenAI#API#News#Technews#Technology#TechnologyNews#Technologytrends#govindhtech

3 notes

·

View notes

Note

Two questions: 1: did you actually make ~ATH, and 2: what was that Sburb text-game that you mentioned on an ask on another blog

While I was back in highschool (iirc?) I made a thing which I titled “drocta ~ATH”, which is a programming language with the design goals of:

1: being actually possible to implement, (and therefore, for example, not having things be tied to the lifespans of external things)

2: being Turing complete, and accept user input and produce output for the user to read, such that in principle one could write useful programs in it (though it is not meant to be practical to do so).

3: matching how ~ATH is depicted in the comic, as closely as I can, with as little as possible that I don’t have some justification for based on what is shown in the comic (plus the navigation page for the comic, which depicts a “SPLIT” command). For example, I avoid assuming that the language has any built-in concept of numbers, because the comic doesn’t depict any, and I don’t need to assume it does, provided I make some reasonable assumptions about what BIFURCATE (and SPLIT) do, and also assume that the BIFURCATE command can also be done in reverse.

However, I try to always make a distinction between “drocta ~ATH”, which is a real thing I made, and “~ATH”, which is a fictional programming language in which it is possible to write programs that e.g. wait until the author’s death and then run some code, or implement some sort of curse that involves the circumstantial simultaneous death of two universes.

In addition, please be aware that the code quality of my interpreter for drocta ~ATH, is very bad! It does not use a proper parser or the like, and, iirc (it has probably been around a decade since I made any serious edits to the code, so I might recall wrong), it uses the actual line numbers of the file for the control flow? (Also, iirc, the code was written for python 2.7 rather than for python 3.) At some point I started a rewrite of the interpreter (keeping the language the same, except possibly fixing bugs), but did not get very far.

If, impossibly, I got some extra time I wouldn’t otherwise have that somehow could only be used for the task of working on drocta ~ATH related stuff, I would be happy to complete that rewrite, and do it properly, but as time has gone on, it seems less likely that I will complete the rewrite.

I am pleased that all these years later, I still get the occasional message asking about drocta ~ATH, and remain happy to answer any questions about it! I enjoy that people still think the idea is interesting.

(If someone wanted to work with me to do the rewrite, that might provide me the needed motivation to do the rewrite, maybe? No promises though. I somewhat doubt that anyone would be interested in doing such a collaboration though.)

Regarding the text based SBURB game, I assume I was talking about “The Overseer Project”. It was very cool.

Thank you for your questions. I hope this answers it to your satisfaction.

6 notes

·

View notes