#sql on hadoop

What is Data Science? A Comprehensive Guide for Beginners

In today’s data-driven world, the term “Data Science” has become a buzzword across industries. Whether it’s in technology, healthcare, finance, or retail, data science is transforming how businesses operate, make decisions, and understand their customers. But what exactly is data science? And why is it so crucial in the modern world? This comprehensive guide is designed to help beginners understand the fundamentals of data science, its processes, tools, and its significance in various fields.

#Data Science#Data Collection#Data Cleaning#Data Exploration#Data Visualization#Data Modeling#Model Evaluation#Deployment#Monitoring#Data Science Tools#Data Science Technologies#Python#R#SQL#PyTorch#TensorFlow#Tableau#Power BI#Hadoop#Spark#Business#Healthcare#Finance#Marketing

Short-Term vs. Long-Term Data Analytics Course in Delhi: Which One to Choose?

In today’s digital world, data is everywhere. From small businesses to large organizations, everyone uses data to make better decisions. Data analytics helps in understanding and using this data effectively. If you are interested in learning data analytics, you might wonder whether to choose a short-term or a long-term course. Both options have their benefits, and your choice depends on your goals, time, and career plans.

At Uncodemy, we offer both short-term and long-term data analytics courses in Delhi. This article will help you understand the key differences between these courses and guide you to make the right choice.

What is Data Analytics?

Data analytics is the process of examining large sets of data to find patterns, insights, and trends. It involves collecting, cleaning, analyzing, and interpreting data. Companies use data analytics to improve their services, understand customer behavior, and increase efficiency.

There are four main types of data analytics:

Descriptive Analytics: Understanding what has happened in the past.

Diagnostic Analytics: Identifying why something happened.

Predictive Analytics: Forecasting future outcomes.

Prescriptive Analytics: Suggesting actions to achieve desired outcomes.

Short-Term Data Analytics Course

A short-term data analytics course is a fast-paced program designed to teach you essential skills quickly. These courses usually last from a few weeks to a few months.

Benefits of a Short-Term Data Analytics Course

Quick Learning: You can learn the basics of data analytics in a short time.

Cost-Effective: Short-term courses are usually more affordable.

Skill Upgrade: Ideal for professionals looking to add new skills without a long commitment.

Job-Ready: Get practical knowledge and start working in less time.

Who Should Choose a Short-Term Course?

Working Professionals: If you want to upskill without leaving your job.

Students: If you want to add data analytics to your resume quickly.

Career Switchers: If you want to explore data analytics before committing to a long-term course.

What You Will Learn in a Short-Term Course

Introduction to Data Analytics

Basic Tools (Excel, SQL, Python)

Data Visualization (Tableau, Power BI)

Basic Statistics and Data Interpretation

Hands-on Projects

Long-Term Data Analytics Course

A long-term data analytics course is a comprehensive program that provides in-depth knowledge. These courses usually last from six months to two years.

Benefits of a Long-Term Data Analytics Course

Deep Knowledge: Covers advanced topics and techniques in detail.

Better Job Opportunities: Preferred by employers for specialized roles.

Practical Experience: Includes internships and real-world projects.

Certifications: You may earn industry-recognized certifications.

Who Should Choose a Long-Term Course?

Beginners: If you want to start a career in data analytics from scratch.

Career Changers: If you want to switch to a data analytics career.

Serious Learners: If you want advanced knowledge and long-term career growth.

What You Will Learn in a Long-Term Course

Advanced Data Analytics Techniques

Machine Learning and AI

Big Data Tools (Hadoop, Spark)

Data Ethics and Governance

Capstone Projects and Internships

Key Differences Between Short-Term and Long-Term Courses

| Feature | Short-Term Course | Long-Term Course |
| --- | --- | --- |
| Duration | Weeks to a few months | Six months to two years |
| Depth of Knowledge | Basic and Intermediate Concepts | Advanced and Specialized Concepts |
| Cost | More Affordable | Higher Investment |
| Learning Style | Fast-Paced | Detailed and Comprehensive |
| Career Impact | Quick Entry-Level Jobs | Better Career Growth and High-Level Jobs |
| Certification | Basic Certificate | Industry-Recognized Certifications |
| Practical Projects | Limited | Extensive and Real-World Projects |

How to Choose the Right Course for You

When deciding between a short-term and long-term data analytics course at Uncodemy, consider these factors:

Your Career Goals

If you want a quick job or basic knowledge, choose a short-term course.

If you want a long-term career in data analytics, choose a long-term course.

Time Commitment

Choose a short-term course if you have limited time.

Choose a long-term course if you can dedicate several months to learning.

Budget

Short-term courses are usually more affordable.

Long-term courses require a bigger investment but offer better returns.

Current Knowledge

If you already know some basics, a short-term course will enhance your skills.

If you are a beginner, a long-term course will provide a solid foundation.

Job Market

Short-term courses can help you get entry-level jobs quickly.

Long-term courses open doors to advanced and specialized roles.

Why Choose Uncodemy for Data Analytics Courses in Delhi?

At Uncodemy, we provide top-quality training in data analytics. Our courses are designed by industry experts to meet the latest market demands. Here’s why you should choose us:

Experienced Trainers: Learn from professionals with real-world experience.

Practical Learning: Hands-on projects and case studies.

Flexible Schedule: Choose classes that fit your timing.

Placement Assistance: We help you find the right job after course completion.

Certification: Receive a recognized certificate to boost your career.

Final Thoughts

Choosing between a short-term and long-term data analytics course depends on your goals, time, and budget. If you want quick skills and job readiness, a short-term course is ideal. If you seek in-depth knowledge and long-term career growth, a long-term course is the better choice.

At Uncodemy, we offer both options to meet your needs. Start your journey in data analytics today and open the door to exciting career opportunities. Visit our website or contact us to learn more about our Data Analytics course in Delhi.

Your future in data analytics starts here with Uncodemy!

Wielding Big Data Using PySpark

Introduction to PySpark

PySpark is the Python API for Apache Spark, a distributed computing framework designed to process large-scale data efficiently. It enables parallel data processing across multiple nodes, making it a powerful tool for handling massive datasets.

Why Use PySpark for Big Data?

Scalability: Works across clusters to process petabytes of data.

Speed: Uses in-memory computation to enhance performance.

Flexibility: Supports various data formats and integrates with other big data tools.

Ease of Use: Provides SQL-like querying and DataFrame operations for intuitive data handling.

Setting Up PySpark

To use PySpark, you need to install it and set up a Spark session. Once initialized, Spark allows users to read, process, and analyze large datasets.

Processing Data with PySpark

PySpark can handle different types of data sources such as CSV, JSON, Parquet, and databases. Once data is loaded, users can explore it by checking the schema, summary statistics, and unique values.

Common Data Processing Tasks

Viewing and summarizing datasets.

Handling missing values by dropping or replacing them.

Removing duplicate records.

Filtering, grouping, and sorting data for meaningful insights.
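The logic behind these tasks can be sketched on a small in-memory list of records. This is plain Python rather than PySpark, so it runs without a Spark installation; the sample records and the helper names (`fill_missing`, `drop_duplicates`, `total_by`) are hypothetical. In PySpark the comparable DataFrame methods are `fillna`, `dropDuplicates`, `filter`, `groupBy`, and `orderBy`.

```python
from collections import defaultdict

# Hypothetical sample records; None marks a missing value.
records = [
    {"city": "Delhi", "product": "A", "amount": 120.0},
    {"city": "Delhi", "product": "A", "amount": 120.0},   # exact duplicate
    {"city": "Chennai", "product": "B", "amount": None},  # missing amount
    {"city": "Chennai", "product": "A", "amount": 80.0},
    {"city": "Delhi", "product": "B", "amount": 40.0},
]


def fill_missing(rows, column, default):
    """Replace None in `column` with `default` (compare DataFrame.fillna)."""
    return [
        {**row, column: default if row[column] is None else row[column]}
        for row in rows
    ]


def drop_duplicates(rows):
    """Keep the first occurrence of each identical row (compare dropDuplicates)."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def total_by(rows, key, value):
    """Group by `key`, sum `value`, and sort the totals in descending order."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row[value]
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


cleaned = drop_duplicates(fill_missing(records, "amount", 0.0))
large = [row for row in cleaned if row["amount"] >= 50.0]  # filtering step
summary = total_by(cleaned, "city", "amount")              # group and sort
print(summary)
```

On a real cluster the same steps would run in parallel across partitions instead of in a single Python loop.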

Transforming Data with PySpark

Data can be transformed using SQL-like queries or DataFrame operations. Users can:

Select specific columns for analysis.

Apply conditions to filter out unwanted records.

Group data to find patterns and trends.

Add new calculated columns based on existing data.

Optimizing Performance in PySpark

When working with big data, optimizing performance is crucial. Some strategies include:

Partitioning: Distributing data across multiple partitions for parallel processing.

Caching: Storing intermediate results in memory to speed up repeated computations.

Broadcast Joins: Optimizing joins by broadcasting smaller datasets to all nodes.
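The ideas behind partitioning and broadcast joins can be illustrated without Spark. The sketch below is plain Python with hypothetical data and helper names. It hash-partitions a large table, then joins every partition against a small lookup table that is copied ("broadcast") to each one. In PySpark itself, the corresponding tools are `DataFrame.repartition`, `DataFrame.cache`, and `pyspark.sql.functions.broadcast`.

```python
import zlib


def partition_index(value, num_partitions):
    """Deterministic hash of a key, mapped onto a partition number."""
    return zlib.crc32(str(value).encode("utf-8")) % num_partitions


def hash_partition(rows, key, num_partitions):
    """Distribute rows so that equal keys always land in the same partition."""
    partitions = [[] for _ in range(num_partitions)]
    for row in rows:
        partitions[partition_index(row[key], num_partitions)].append(row)
    return partitions


def broadcast_join(partitions, small_rows, key):
    """Inner-join each partition against a small table held fully in memory."""
    lookup = {row[key]: row for row in small_rows}  # the "broadcast" copy
    joined = []
    for partition in partitions:  # on a cluster, each partition runs on its own executor
        for row in partition:
            match = lookup.get(row[key])
            if match is not None:
                joined.append({**row, **match})
    return joined


# Hypothetical data: a larger "orders" table and a small "countries" table.
orders = [
    {"order_id": 1, "country": "IN"},
    {"order_id": 2, "country": "US"},
    {"order_id": 3, "country": "IN"},
    {"order_id": 4, "country": "FR"},  # no match in the small table
]
countries = [
    {"country": "IN", "name": "India"},
    {"country": "US", "name": "United States"},
]

partitions = hash_partition(orders, "country", num_partitions=3)
joined = broadcast_join(partitions, countries, "country")
print(sorted(row["order_id"] for row in joined))
```

Because the small table travels to the data, the large table never has to be shuffled across the network, which is the point of a broadcast join.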

Machine Learning with PySpark

PySpark includes MLlib, a machine learning library for big data. It allows users to prepare data, apply machine learning models, and generate predictions. This is useful for tasks such as regression, classification, clustering, and recommendation systems.

Running PySpark on a Cluster

PySpark can run on a single machine or be deployed on a cluster using a cluster manager such as Hadoop YARN. This enables large-scale data processing with improved efficiency.

Conclusion

PySpark provides a powerful platform for handling big data efficiently. With its distributed computing capabilities, it allows users to clean, transform, and analyze large datasets while optimizing performance for scalability.

For free programming tutorials, visit https://www.tpointtech.com/

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

PolyBase – Bridging the Gap Between Relational and Non-Relational Data. We can use T-SQL queries to fetch data from external data sources, such as Hadoop or Azure Blob Storage. Let's Explore Deeply:

https://madesimplemssql.com/polybase/

Please follow us on FB: https://www.facebook.com/profile.php?id=100091338502392

OR

Join our Group: https://www.facebook.com/groups/652527240081844

Essential Skills for Aspiring Data Scientists in 2024

Welcome to another edition of Tech Insights! Today, we're diving into the essential skills that aspiring data scientists need to master in 2024. As the field of data science continues to evolve, staying updated with the latest skills and tools is crucial for success. Here are the key areas to focus on:

1. Programming Proficiency

Proficiency in programming languages like Python and R is foundational. Python, in particular, is widely used for data manipulation, analysis, and building machine learning models thanks to its rich ecosystem of libraries such as Pandas, NumPy, and Scikit-learn.

2. Statistical Analysis

A strong understanding of statistics is essential for data analysis and interpretation. Key concepts include probability distributions, hypothesis testing, and regression analysis, which help in making informed decisions based on data.

3. Machine Learning Mastery

Knowledge of machine learning algorithms and frameworks like TensorFlow, Keras, and PyTorch is critical. Understanding supervised and unsupervised learning, neural networks, and deep learning will set you apart in the field.

4. Data Wrangling Skills

The ability to clean, process, and transform data is crucial. Skills in using libraries like Pandas and tools like SQL for database management are highly valuable for preparing data for analysis.

5. Data Visualization

Effective communication of your findings through data visualization is important. Tools like Tableau, Power BI, and libraries like Matplotlib and Seaborn in Python can help you create impactful visualizations.

6. Big Data Technologies

Familiarity with big data tools like Hadoop, Spark, and NoSQL databases is beneficial, especially for handling large datasets. These tools help in processing and analyzing big data efficiently.

7. Domain Knowledge

Understanding the specific domain you are working in (e.g., finance, healthcare, e-commerce) can significantly enhance your analytical insights and make your solutions more relevant and impactful.

8. Soft Skills

Strong communication skills, problem-solving abilities, and teamwork are essential for collaborating with stakeholders and effectively conveying your findings.

Final Thoughts

The field of data science is ever-changing, and staying ahead requires continuous learning and adaptation. By focusing on these key skills, you'll be well-equipped to navigate the challenges and opportunities that 2024 brings.

If you're looking for more in-depth resources, tips, and articles on data science and machine learning, be sure to follow Tech Insights for regular updates. Let's continue to explore the fascinating world of technology together!

#artificial intelligence#programming#coding#python#success#economy#career#education#employment#opportunity#working#jobs

The Ever-Evolving Canvas of Data Science: A Comprehensive Guide

In the ever-evolving landscape of data science, the journey begins with unraveling the intricate threads that weave through vast datasets. This multidisciplinary field encompasses a diverse array of topics designed to empower professionals to extract meaningful insights from the wealth of available data. Choosing the Top Data Science Institute can further accelerate your journey into this thriving industry. This educational journey is an exploration of the many facets that make up the heart of data science education.

Let's embark on a comprehensive exploration of what one typically studies in the realm of data science.

1. Mathematics and Statistics Fundamentals: Building the Foundation

At the core of data science lies a robust understanding of mathematical and statistical principles. Professionals delve into Linear Algebra, equipping themselves with the knowledge of mathematical structures and operations crucial for manipulating and transforming data. Simultaneously, they explore Probability and Statistics, mastering concepts that are instrumental in analyzing and interpreting data patterns.

2. Programming Proficiency: The Power of Code

Programming proficiency is a cornerstone skill in data science. Learners are encouraged to acquire mastery in programming languages such as Python or R. These languages serve as powerful tools for implementing complex data science algorithms and are renowned for their versatility and extensive libraries designed specifically for data science applications.

3. Data Cleaning and Preprocessing Techniques: Refining the Raw Material

Data rarely comes in a pristine state. Hence, understanding techniques for Handling Missing Data becomes imperative. Professionals delve into strategies for managing and imputing missing data, ensuring accuracy in subsequent analyses. Additionally, they explore Normalization and Transformation techniques, preparing datasets through standardization and transformation of variables.
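As a minimal sketch of these techniques, the example below fills missing values with the mean of the observed values, then rescales the column in two common ways: z-score standardization and min-max normalization. The `ages` data and the function names are hypothetical, and mean imputation is only one of several possible strategies.

```python
from statistics import mean, pstdev


def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def standardize(values):
    """Z-score standardization: zero mean and unit (population) standard deviation.

    Assumes the values are not all identical, otherwise sigma would be zero.
    """
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def min_max(values):
    """Min-max normalization onto the range [0, 1].

    Assumes the values are not all identical, otherwise the range would be zero.
    """
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


ages = [20.0, None, 30.0, 40.0, None]  # hypothetical column with gaps
filled = impute_mean(ages)
z_scores = standardize(filled)
scaled = min_max(filled)
print(filled)
print(scaled)
```

Mean imputation keeps the column average unchanged but shrinks its variance, which is one reason practitioners also consider median or model-based imputation.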

4. Exploratory Data Analysis (EDA): Unveiling Data Patterns

Exploratory Data Analysis (EDA) is a pivotal aspect of the data science journey. Professionals leverage Visualization Tools like Matplotlib and Seaborn to create insightful graphical representations of data. Simultaneously, they employ Descriptive Statistics to summarize and interpret data distributions, gaining crucial insights into the underlying patterns.

5. Machine Learning Algorithms: Decoding the Secrets

Machine Learning is a cornerstone of data science, encompassing both supervised and unsupervised learning. Professionals delve into Supervised Learning, which includes algorithms for tasks such as regression and classification. Additionally, they explore Unsupervised Learning, delving into clustering and dimensionality reduction for uncovering hidden patterns within datasets.

6. Real-world Application and Ethical Considerations: Bridging Theory and Practice

The application of data science extends beyond theoretical knowledge to real-world problem-solving. Professionals learn to apply data science techniques to practical scenarios, making informed decisions based on empirical evidence. Furthermore, they navigate the ethical landscape, considering the implications of data usage on privacy and societal values.

7. Big Data Technologies: Navigating the Sea of Data

With the exponential growth of data, professionals delve into big data technologies. They acquaint themselves with tools like Hadoop and Spark, designed for processing and analyzing massive datasets efficiently.

8. Database Management: Organizing the Data Universe

Professionals gain proficiency in database management, encompassing both SQL and NoSQL databases. This skill set enables them to manage and query databases effectively, ensuring seamless data retrieval.

9. Advanced Topics: Pushing the Boundaries

As professionals progress, they explore advanced topics that push the boundaries of data science. Deep Learning introduces neural networks for intricate pattern recognition, while Natural Language Processing (NLP) focuses on analyzing and interpreting human language data.

10. Continuous Learning and Adaptation: Embracing the Data Revolution

Data science is a field in constant flux. Professionals embrace a mindset of continuous learning, staying updated on evolving technologies and methodologies. This proactive approach ensures they remain at the forefront of the data revolution.

In conclusion, the study of data science is a dynamic and multifaceted journey. By mastering mathematical foundations, programming languages, and ethical considerations, professionals unlock the potential of data, making data-driven decisions that impact industries across the spectrum. The comprehensive exploration of these diverse topics equips individuals with the skills needed to thrive in the dynamic world of data science. Choosing the best Data Science Courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science.

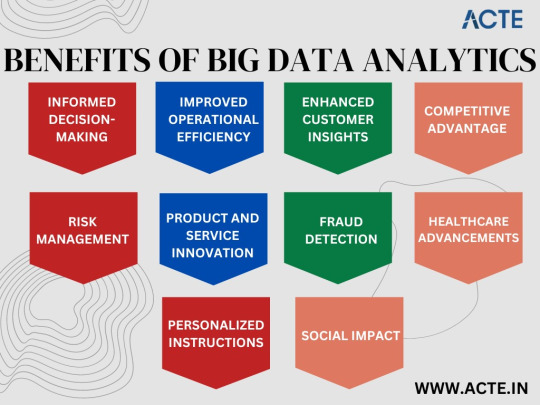

Transform Your Career with Our Big Data Analytics Course: The Future is Now

In today's rapidly evolving technological landscape, the power of data is undeniable. Big data analytics has emerged as a game-changer across industries, revolutionizing the way businesses operate and make informed decisions. By equipping yourself with the right skills and knowledge in this field, you can unlock exciting career opportunities and embark on a path to success. Our comprehensive Big Data Analytics Course is designed to empower you with the expertise needed to thrive in the data-driven world of tomorrow.

Benefits of Our Big Data Analytics Course

Stay Ahead of the Curve

With the ever-increasing amount of data generated each day, organizations seek professionals who can effectively analyze and interpret this wealth of information. By enrolling in our Big Data Analytics Course, you gain a competitive edge by staying ahead of the curve. Learn the latest techniques and tools used in the industry to extract insights from complex datasets, enabling you to make data-driven decisions that propel organizations into the future.

Highly Lucrative Opportunities

The demand for skilled big data professionals continues to skyrocket, creating a vast array of lucrative job opportunities. As more and more companies recognize the value of harnessing their data, they actively seek individuals with the ability to leverage big data analytics for strategic advantages. By completing our course, you position yourself as a sought-after professional capable of commanding an impressive salary and enjoying job security in this rapidly expanding field.

Broaden Your Career Horizon

Big data analytics transcends industry boundaries, making this skillset highly transferrable. By mastering the art of data analysis, you open doors to exciting career prospects in various sectors ranging from finance and healthcare to marketing and e-commerce. The versatility of big data analytics empowers you to shape your career trajectory according to your interests, guaranteeing a vibrant and dynamic professional journey.

Ignite Innovation and Growth

In today's digital age, data is often referred to as the new oil, and for a good reason. The ability to unlock insights from vast amounts of data enables organizations to identify trends, optimize processes, and identify new opportunities for growth. By acquiring proficiency in big data analytics through our course, you become a catalyst for innovation within your organization, driving positive change and propelling businesses towards sustainable success.

Information Provided by Our Big Data Analytics Course

Advanced Data Analytics Techniques

Our course dives deep into advanced data analytics techniques, equipping you with the knowledge and skills to handle complex datasets. From data preprocessing and data visualization to statistical analysis and predictive modeling, you will gain a comprehensive understanding of the entire data analysis pipeline. Our experienced instructors use practical examples and real-world case studies to ensure you develop proficiency in applying these techniques to solve complex business problems.

Cutting-Edge Tools and Technologies

Staying ahead in the field of big data analytics requires fluency in the latest tools and technologies. Throughout our course, you will work with industry-leading software, such as Apache Hadoop and Spark, Python, R, and SQL, which are widely used for data manipulation, analysis, and visualization. Hands-on exercises and interactive projects provide you with invaluable practical experience, enabling you to confidently apply these tools in real-world scenarios.

Ethical Considerations in Big Data

As the use of big data becomes more prevalent, ethical concerns surrounding privacy, security, and bias arise. Our course dedicates a comprehensive module to explore the ethical considerations in big data analytics. By understanding the impact of your work on individuals and society, you learn how to ensure responsible data handling and adhere to legal and ethical guidelines. By fostering a sense of responsibility, the course empowers you to embrace ethical practices and make a positive contribution to the industry.

Education and Learning Experience

Expert Instructors

Our Big Data Analytics Course is led by accomplished industry experts with a wealth of experience in the field. These instructors possess a deep understanding of big data analytics and leverage their practical knowledge to deliver engaging and insightful lessons. Their guidance and mentorship ensure you receive top-quality education that aligns with industry best practices, optimally preparing you for the challenges and opportunities that lie ahead.

Interactive and Collaborative Learning

We believe in the power of interactive and collaborative learning experiences. Our Big Data Analytics Course fosters a vibrant learning community where you can engage with fellow students, share ideas, and collaborate on projects. Through group discussions, hands-on activities, and peer feedback, you gain a comprehensive understanding of big data analytics while also developing vital teamwork and communication skills essential for success in the professional world.

Flexible Learning Options

We understand that individuals lead busy lives, juggling multiple commitments. That's why our Big Data Analytics Course offers flexible learning options to suit your schedule. Whether you prefer attending live virtual classes or learning at your own pace through recorded lectures, we provide a range of options to accommodate your needs. Our user-friendly online learning platform empowers you to access course material anytime, anywhere, making it convenient for you to balance learning with your other commitments.

The future is now, and big data analytics has the potential to transform your career. By enrolling in our Big Data Analytics Course at ACTE institute, you gain the necessary knowledge and skills to excel in this rapidly evolving field. From the incredible benefits and the wealth of information provided to the exceptional education and learning experience, our course equips you with the tools you need to thrive in the data-driven world of the future. Don't wait - take the leap and embark on an exciting journey towards a successful and fulfilling career in big data analytics.

Data Engineering Concepts, Tools, and Projects

Organizations around the world hold large amounts of data. If it is not processed and analyzed, this data does not amount to anything. Data engineers are the ones who make this data fit for analysis. Data engineering is the process of developing, operating, and maintaining software systems that collect, analyze, and store an organization's data. In modern data analytics, data engineers build data pipelines, which form the infrastructure architecture.

How to become a data engineer:

While there is no specific degree requirement for data engineering, a bachelor's or master's degree in computer science, software engineering, information systems, or a related field can provide a solid foundation. Courses in databases, programming, data structures, algorithms, and statistics are particularly beneficial. Data engineers should have strong programming skills. Focus on languages commonly used in data engineering, such as Python, SQL, and Scala. Learn the basics of data manipulation, scripting, and querying databases.

Familiarize yourself with various database systems like MySQL, PostgreSQL, and NoSQL databases such as MongoDB or Apache Cassandra. Knowledge of data warehousing concepts, including schema design, indexing, and optimization techniques, is also valuable.

Data engineering tools recommendations:

Data engineering uses a variety of languages and tools to accomplish its objectives. These tools allow data engineers to carry out tasks such as building pipelines and algorithms more easily and effectively.

1. Amazon Redshift: A widely used cloud data warehouse built by Amazon, Redshift is the go-to choice for many teams and businesses. It is a comprehensive tool that enables the setup and scaling of data warehouses, making it incredibly easy to use.

Amazon Redshift is one of the most popular tools used for business purposes and provides a powerful platform for managing large amounts of data. It allows users to quickly analyze complex datasets, build models that can be used for predictive analytics, and create visualizations that make it easier to interpret results. With its scalability and flexibility, Amazon Redshift has become one of the go-to solutions for data engineering tasks.

2. BigQuery: Like Redshift, BigQuery is a cloud data warehouse, in this case fully managed by Google. It is especially favored by companies that have experience with the Google Cloud Platform. BigQuery scales well and also has robust machine learning features that make data analysis much easier.

3. Tableau: A powerful BI tool, Tableau is the second most popular one from our survey. It helps extract and gather data stored in multiple locations and comes with an intuitive drag-and-drop interface. Tableau makes data across departments readily available for data engineers and managers to create useful dashboards.

4. Looker: An essential BI software, Looker helps visualize data more effectively. Unlike traditional BI tools, Looker has developed a LookML layer, which is a language for describing data, aggregates, calculations, and relationships in a SQL database. Spectacles is a newly released tool that assists in deploying the LookML layer, giving non-technical personnel a much simpler time when using company data.

5. Apache Spark: An open-source unified analytics engine, Apache Spark is excellent for processing large data sets. It also distributes work well and runs easily alongside other distributed computing programs, making it essential for data mining and machine learning.

6. Airflow: With Airflow, workflows can be programmed and scheduled quickly and accurately, and users can keep an eye on them through the built-in UI. It is the most used workflow solution, as 25% of data teams reported using it.

7. Apache Hive: Another data warehouse project, built on Apache Hadoop, Hive simplifies data queries and analysis with its SQL-like interface. Its query language lets MapReduce tasks be executed on Hadoop and is mainly used for data summarization, analysis, and querying.

8. Segment: An efficient and comprehensive tool, Segment assists in collecting and using data from digital properties. It transforms, sends, and archives customer data, and also makes the entire process much more manageable.

9. Snowflake: This cloud data warehouse has become very popular lately due to its capabilities in storing and computing data. Snowflake’s unique shared data architecture allows for a wide range of applications, making it an ideal choice for large-scale data storage, data engineering, and data science.

10. dbt: A command-line tool that uses SQL to transform data, dbt is a strong choice for data engineers and analysts. It streamlines the entire transformation process and is highly praised by many data engineers.

Data Engineering Projects:

Data engineering is an important process for businesses to understand and use in order to gain insights from their data. It involves designing, constructing, maintaining, and troubleshooting databases to ensure they are running optimally. There are many tools available for data engineers to use in their work, such as MySQL, SQL Server, Oracle RDBMS, OpenRefine, Trifacta, Data Ladder, Keras, Watson, and TensorFlow. Each tool has its strengths and weaknesses, so it’s important to research each one thoroughly before recommending which should be used for specific tasks or projects.

Smart IoT Infrastructure:

As the IoT continues to develop, the volume of data ingested at high velocity is growing at an alarming rate. This creates challenges for companies in storage, analysis, and visualization.

Data Ingestion:

Data ingestion is the movement of data from one or more sources to a target destination for further preparation and analysis. This destination is generally a data warehouse, a specialized database designed for efficient reporting.
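To make the idea concrete, here is a minimal sketch of an ingestion step in Python. It assumes a small CSV source and uses an in-memory SQLite database as a stand-in for the warehouse; the `orders` table, its columns, and the sample rows are hypothetical and only serve the illustration.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this could be a file, an API, or a queue.
SOURCE_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,5.00
3,alice,42.50
"""


def ingest(csv_text, conn):
    """Load CSV rows into an `orders` table and return how many rows were inserted."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    # Parse and type-convert each source row before loading it.
    rows = [
        (int(r["order_id"]), r["customer"], float(r["amount"]))
        for r in csv.DictReader(io.StringIO(csv_text))
    ]
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


# SQLite in memory stands in for the target warehouse (an assumption of this sketch).
conn = sqlite3.connect(":memory:")
inserted = ingest(SOURCE_CSV, conn)

# Once loaded, the data can be queried for reporting.
totals = conn.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()
print(inserted, totals)
```

A real pipeline would swap the CSV string for the actual source and SQLite for the warehouse of choice, but the shape stays the same: read, convert, load, then query.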

Data Quality and Testing:

Understand the importance of data quality and testing in data engineering projects. Learn about techniques and tools to ensure data accuracy and consistency.
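As a rough illustration of what such checks can look like, the sketch below tests a batch of records for completeness and uniqueness in plain Python. The function name `check_quality`, the field names, and the sample records are all hypothetical; dedicated data testing tools offer far more than this.

```python
from collections import Counter


def check_quality(rows, key, required):
    """Return a list of human-readable problems found in `rows` (a list of dicts)."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for i, row in enumerate(rows):
        for field in required:
            if row.get(field) in (None, ""):
                problems.append(f"row {i}: missing {field}")
    # Uniqueness: the key field must not repeat across rows.
    counts = Counter(row.get(key) for row in rows)
    for value, n in counts.items():
        if value is not None and n > 1:
            problems.append(f"duplicate {key}={value} ({n} rows)")
    return problems


# Hypothetical sample batch with one empty email and one repeated id.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "c@example.com"},
]
problems = check_quality(rows, key="id", required=["id", "email"])
for p in problems:
    print(p)
```

Running checks like these before loading data lets a pipeline fail early instead of passing bad records downstream.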

Streaming Data:

Familiarize yourself with real-time data processing and streaming frameworks like Apache Kafka and Apache Flink. Develop your problem-solving skills through practical exercises and challenges.
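One core idea behind stream processing is windowing: grouping events that arrive over time into fixed intervals. The sketch below shows a tumbling window count in plain Python. It does not use the Kafka or Flink APIs; the function name, the event format, and the sample events are assumptions made for the illustration.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, counts) each time a window closes.

    `events` is an iterable of (timestamp_seconds, key) pairs in timestamp order.
    """
    current_start = None
    counts = defaultdict(int)
    for ts, key in events:
        # Align the timestamp to the start of its window.
        start = ts - (ts % window_seconds)
        if current_start is None:
            current_start = start
        if start != current_start:
            # The event belongs to a later window, so emit the finished one.
            yield current_start, dict(counts)
            current_start, counts = start, defaultdict(int)
        counts[key] += 1
    if current_start is not None:
        # Emit the last, still-open window when the stream ends.
        yield current_start, dict(counts)


# Hypothetical event stream: (seconds since start, event type).
events = [(0, "click"), (3, "view"), (9, "click"), (12, "click"), (25, "view")]
windows = list(tumbling_window_counts(events, window_seconds=10))
for start, counts in windows:
    print(start, counts)
```

Real frameworks add what this sketch leaves out, such as out-of-order events, fault tolerance, and distribution across machines.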

Conclusion:

Data engineers use these tools to build data systems. Working with MySQL, SQL Server, and Oracle RDBMS involves collecting, storing, managing, transforming, and analyzing large amounts of data to gain insights. Data engineers are responsible for designing efficient solutions that can handle high volumes of data while ensuring accuracy and reliability. They use a variety of technologies, including databases, programming languages, and machine learning algorithms, to create powerful applications that help businesses make better decisions based on their collected data.


Text

Unlock the World of Data Analysis: Programming Languages for Success!

💡 When it comes to data analysis, choosing the right programming language can make all the difference. Here are some popular languages that empower professionals in this exciting field:

https://www.clinicalbiostats.com/

🐍 Python: Known for its versatility, Python offers a robust ecosystem of libraries like Pandas, NumPy, and Matplotlib. It's beginner-friendly and widely used for data manipulation, visualization, and machine learning.

📈 R: Built specifically for statistical analysis, R provides an extensive collection of packages like dplyr, ggplot2, and caret. It excels in data exploration, visualization, and advanced statistical modeling.

🔢 SQL: Structured Query Language (SQL) is essential for working with databases. It allows you to extract, manipulate, and analyze large datasets efficiently, making it a go-to language for data retrieval and management.

💻 Java: Widely used in enterprise-level applications, Java underpins big data frameworks like Apache Hadoop and is supported by Apache Spark through a Java API. It provides scalability and performance for complex data analysis tasks.

📊 MATLAB: Renowned for its mathematical and numerical computing capabilities, MATLAB is favored in academic and research settings. It excels in data visualization, signal processing, and algorithm development.

🔬 Julia: Known for its speed and ease of use, Julia is gaining popularity in scientific computing and data analysis. Its syntax resembles mathematical notation, making it intuitive for scientists and statisticians.

🌐 Scala: Scala, with its seamless integration with Apache Spark, is a valuable language for distributed data processing and big data analytics. It combines object-oriented and functional programming paradigms.

💪 The key is to choose a language that aligns with your specific goals and preferences. Embrace the power of programming and unleash your potential in the dynamic field of data analysis! 💻📈

#DataAnalysis#ProgrammingLanguages#Python#RStats#SQL#Java#MATLAB#JuliaLang#Scala#DataScience#BigData#CareerOpportunities#biostatistics#onlinelearning#lifesciences#epidemiology#genetics#pythonprogramming#clinicalbiostatistics#datavisualization#clinicaltrials


Text

BCA in Data Engineering: Learn Python, SQL, Hadoop, Spark & More

In today’s data-driven world, businesses rely heavily on data engineers to collect, store, and transform raw data into meaningful insights. The Bachelor of Computer Applications (BCA) in Data Engineering offered by Edubex is designed to equip you with both the theoretical foundation and hands-on technical skills needed to thrive in this booming field.

Through this program, you'll gain practical expertise in the most in-demand tools and technologies:

Python – The programming language of choice for automation, data manipulation, and machine learning.

SQL – The industry standard for querying and managing relational databases.

Hadoop – A powerful framework for handling and processing big data across distributed computing environments.

Apache Spark – A fast and general-purpose engine for large-scale data processing and real-time analytics.

And that’s just the beginning. You’ll also explore cloud platforms, ETL pipelines, data warehousing, and more — all designed to prepare you for real-world roles such as Data Engineer, Big Data Developer, Database Administrator, and ETL Specialist.

Whether you aim to work in finance, healthcare, e-commerce, or tech startups, this BCA program empowers you with the data skills that modern employers are looking for.


Text

What is a PGP in Data Science? A Complete Guide for Beginners

In today's data-driven world, businesses depend heavily on insights drawn from vast amounts of data. From predicting customer behavior to optimizing supply chains, data science plays a vital role in decision-making processes across industries. As the demand for skilled data scientists continues to grow, many aspiring professionals are turning to specialized programs like the PGP in Data Science to build a strong foundation and excel in this field.

If you’re curious about what a Post Graduate Program in Data Science entails and how it can benefit your career, this comprehensive guide is for you.

What is Data Science?

Data science is a multidisciplinary field that uses statistical methods, machine learning, data analysis, and computer science to extract insights from structured and unstructured data. It is used to solve real-world problems by uncovering patterns and making predictions.

The role of a data scientist is to collect, clean, analyze, and interpret large datasets to support strategic decision-making. With the growth of big data, cloud computing, and AI technologies, data science has become a highly lucrative and in-demand career path.

What is a PGP in Data Science?

A PGP in Data Science (Post Graduate Program in Data Science) is a comprehensive program designed to equip learners with both theoretical knowledge and practical skills in data science, analytics, machine learning, and related technologies. Unlike traditional degree programs, PGPs are typically more industry-focused, tailored for working professionals or graduates who want to quickly upskill or transition into the field of data science.

These programs are often offered by reputed universities, tech institutions, and online education platforms, with durations ranging from 6 months to 2 years.

Why Choose a Post Graduate Program in Data Science?

Here are some key reasons why a Post Graduate Program in Data Science is worth considering:

High Demand for Data Scientists

Data is the new oil, and businesses need professionals who can make sense of it. According to various industry reports, there is a massive talent gap in the data science field, and a PGP can help bridge this gap.

Industry-Relevant Curriculum

Unlike traditional degree programs, a PGP focuses on the tools, techniques, and real-world applications currently used in the industry.

Fast-Track Career Transition

PGP programs are structured to deliver maximum value in a shorter time frame, making them ideal for professionals looking to switch to data science.

Global Career Opportunities

Data scientists are in demand not just in India but globally. Completing a PGP in Data Science makes you a competitive candidate worldwide.

Key Components of a Post Graduate Program in Data Science

Most PGP in Data Science programs cover the following key areas:

Statistics and Probability

Python and R Programming

Data Wrangling and Visualization

Machine Learning Algorithms

Deep Learning & Neural Networks

Natural Language Processing (NLP)

Big Data Technologies (Hadoop, Spark)

SQL and NoSQL Databases

Business Analytics

Capstone Projects

Some programs include soft skills training, resume building, and interview preparation sessions to boost job readiness.

Who Should Enroll in a PGP in Data Science?

A Post Graduate Program in Data Science is suitable for:

Fresh graduates looking to enter the field of data science

IT professionals aiming to upgrade their skills

Engineers, mathematicians, and statisticians transitioning to data roles

Business analysts who want to learn data-driven decision-making

Professionals from non-technical backgrounds looking to switch careers

Whether you are a beginner or have prior knowledge, a PGP can provide the right blend of theory and hands-on learning.

Skills You Will Learn

By the end of a PGP in Data Science, you will gain expertise in:

Programming languages: Python, R

Data preprocessing and cleaning

Exploratory data analysis

Model building and evaluation

Machine learning algorithms like Linear Regression, Decision Trees, Random Forests, SVM, etc.

Deep learning frameworks like TensorFlow and Keras

SQL for data querying

Data visualization tools like Tableau or Power BI

Real-world business problem-solving

These skills make you job-ready and help you handle real-time projects with confidence.

Curriculum Overview

Here’s a general breakdown of a Post Graduate Program in Data Science curriculum:

Module 1: Introduction to Data Science

Fundamentals of data science

Tools and technologies overview

Module 2: Programming Essentials

Python programming

R programming basics

Jupyter Notebooks and IDEs

Module 3: Statistics & Probability

Descriptive and inferential statistics

Hypothesis testing

Probability distributions

Module 4: Data Manipulation and Visualization

Pandas, NumPy

Matplotlib, Seaborn

Data storytelling

Module 5: Machine Learning

Supervised and unsupervised learning

Model training and tuning

Scikit-learn

Module 6: Deep Learning and AI

Neural networks

Convolutional Neural Networks (CNN)

Recurrent Neural Networks (RNN)

Module 7: Big Data Technologies

Introduction to Hadoop ecosystem

Apache Spark

Real-time data processing

Module 8: Projects & Capstone

Industry case studies

Group projects

Capstone project on end-to-end ML pipeline

Duration and Mode of Delivery

Most PGP in Data Science programs are designed to be completed in 6 to 12 months, depending on the institution and the pace of learning (part-time or full-time). Delivery modes include:

Online (Self-paced or Instructor-led)

Hybrid (Online + Offline workshops)

Classroom-based (Less common today)

Online formats are highly popular due to flexibility, recorded sessions, and access to mentors and peer groups.

Admission Requirements

Admission criteria for a Post Graduate Program in Data Science generally include:

A bachelor’s degree (any discipline)

Basic understanding of mathematics and statistics

Programming knowledge (optional, but beneficial)

An exam or interview may be required by some institutions.

Why a Post Graduate Program in Data Science from Career Amend?

Career Amend offers a comprehensive Post Graduate Program (PGP) in Data Science designed to be completed in just one year, making it an ideal choice for professionals and graduates who wish to enter the field of data science without spending multiple years in formal education. This program has been thoughtfully curated to combine foundational theory with hands-on practical learning, ensuring that students not only understand the core principles but can also apply them to real-world data challenges.

The one-year structure of Career Amend’s PGP in Data Science is intensive yet flexible, catering to both full-time learners and working professionals. The curriculum spans various topics, including statistics, Python programming, data visualization, machine learning, deep learning, and big data tools. Learners are also introduced to key technologies and platforms like SQL, Tableau, TensorFlow, and cloud services like AWS or Azure. This practical approach helps students gain industry-relevant skills that are immediately applicable.

What sets Career Amend apart is its strong focus on industry integration. The course includes live projects, case studies, and mentorship from experienced data scientists. Learners gain exposure to real-time business problems and data sets through these components, making them job-ready upon completion. The capstone project at the end of the program allows students to showcase their comprehensive knowledge by solving a complex, practical problem, an asset they can add to their portfolios.

Additionally, Career Amend offers dedicated career support services, including resume building, mock interviews, and job placement assistance. Whether a student is looking to switch careers or upskill within their current role, this one-year PGP in Data Science opens doors to numerous high-growth roles such as data scientist, machine learning engineer, data analyst, and more.

Final Thoughts

A PGP in Data Science is an excellent option for anyone looking to enter the field of data science without committing to a full-time degree. It combines the depth of a traditional postgraduate degree with the flexibility and industry alignment of modern learning methods. Whether a recent graduate or a mid-level professional, enrolling in a Post Graduate Program in Data Science can provide the competitive edge you need in today's tech-driven job market.

So, if you're asking yourself, "Is a PGP in Data Science worth it?", the answer is yes, especially if you are serious about building a career in one of the most dynamic and high-paying domains of the future.

#PGP in Data Science#Post Graduate Program in Data Science#data science#machine learning#data analysis#data analytics#datascience


Text

Scaling Your Australian Business with AI: A CEO’s Guide to Hiring Developers

In today’s fiercely competitive digital economy, innovation isn’t a luxury—it’s a necessity. Australian businesses are increasingly recognizing the transformative power of Artificial Intelligence (AI) to streamline operations, enhance customer experiences, and unlock new revenue streams. But to fully harness this potential, one crucial element is required: expert AI developers.

Whether you’re a fast-growing fintech in Sydney or a manufacturing giant in Melbourne, if you’re looking to implement scalable AI solutions, the time has come to hire AI developers who understand both the technology and your business landscape.

In this guide, we walk CEOs, CTOs, and tech leaders through the essentials of hiring AI talent to scale operations effectively and sustainably.

Why AI is Non-Negotiable for Scaling Australian Enterprises

Australia has seen a 270% rise in AI adoption across key industries like retail, healthcare, logistics, and finance over the past three years. From predictive analytics to conversational AI and intelligent automation, AI has become central to delivering scalable, data-driven solutions.

According to Deloitte Access Economics, AI is expected to contribute AU$ 22.17 billion to the Australian economy by 2030. For CEOs and decision-makers, this isn’t just a trend—it’s a wake-up call to start investing in the right AI talent to stay relevant.

The Hidden Costs of Delaying AI Hiring

Still relying on a traditional tech team to handle AI-based initiatives? You could be leaving significant ROI on the table. Without dedicated experts, your AI projects risk:

Delayed deployments

Poorly optimized models

Security vulnerabilities

Lack of scalability

Wasted infrastructure investment

By choosing to hire AI developers, you're enabling faster time-to-market, more accurate insights, and a competitive edge in your sector.

How to Hire AI Developers: A Strategic Approach for Australian CEOs

The process of hiring AI developers is unlike standard software recruitment. You’re not just hiring a coder—you’re bringing on board an innovation partner.

Here’s what to consider:

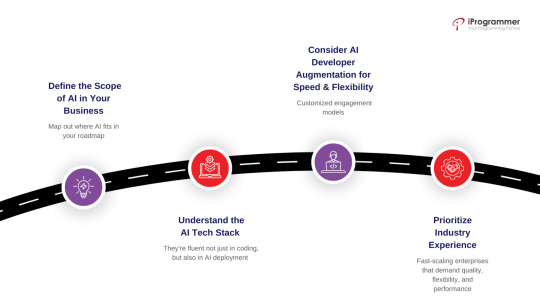

1. Define the Scope of AI in Your Business

Before hiring, map out where AI fits in your roadmap:

Are you looking for machine learning-driven forecasting?

Want to implement AI chatbots for 24/7 customer service?

Building a computer vision solution for your manufacturing line?

Once you identify the use cases, it becomes easier to hire ML developers or AI experts with the relevant domain and technical experience.

2. Understand the AI Tech Stack

A strong AI developer should be proficient in:

Python, R, TensorFlow, PyTorch

Scikit-learn, Keras, OpenCV

Data engineering with SQL, Spark, Hadoop

Deployment tools like Docker, Kubernetes, AWS SageMaker

When you hire remote AI engineers, ensure they’re fluent not just in coding, but also in AI deployment and scalability best practices.

3. Consider AI Developer Augmentation for Speed & Flexibility

Building an in-house AI team is time-consuming and expensive. That’s why AI developer staff augmentation is a smarter choice for many Australian enterprises.

With our staff augmentation services, you can:

Access pre-vetted, highly skilled AI developers

Scale up or down depending on your project phase

Save costs on infrastructure and training

Retain full control over your development process

Whether you need to hire ML developers for short-term analytics or long-term AI product development, we offer customized engagement models to suit your needs.

4. Prioritize Industry Experience

AI isn’t one-size-fits-all. Hiring developers who have experience in your specific industry—be it healthcare, fintech, ecommerce, logistics, or manufacturing—ensures faster onboarding and better results.

We’ve helped companies in Australia and across the globe integrate AI into:

Predictive maintenance systems

Smart supply chain analytics

AI-based fraud detection in banking

Personalized customer experiences in ecommerce

This hands-on experience allows our developers to deliver solutions that are relevant and ROI-driven.

Why Choose Our AI Developer Staff Augmentation Services?

At iProgrammer, we bring over a decade of experience in empowering businesses through intelligent technology solutions. Our AI developer augmentation services are designed for fast-scaling enterprises that demand quality, flexibility, and performance.

What Sets Us Apart:

AI-First Talent Pool: We don’t generalize. We specialize in AI, ML, NLP, computer vision, and data science.

Quick Deployment: Get developers onboarded and contributing in just a few days.

Cost Efficiency: Hire remote AI developers from our offshore team and reduce development costs by up to 40%.

End-to-End Support: From hiring to integration and project execution, we stay involved to ensure success.

A Case in Point: AI Developer Success in an Australian Enterprise

One of our clients, a mid-sized logistics company in Brisbane, wanted to predict delivery delays using real-time data. Within 3 weeks of engagement, we onboarded a senior ML developer who built a predictive model using historical shipment data, weather feeds, and traffic APIs. The result? A 25% reduction in customer complaints and a 15% improvement in delivery time accuracy.

This is the power of hiring the right AI developer at the right time.

Final Thoughts: CEOs Must Act Now to Stay Ahead

If you’re a CEO, CTO, or decision-maker in Australia, the question isn’t “Should I hire AI developers?” It’s “How soon can I hire the right AI developer to scale my business?”

Whether you're launching your first AI project or scaling an existing system, AI developer staff augmentation provides the technical depth and agility you need to grow fast—without the friction of long-term hiring.

Ready to Build Your AI Dream Team?

Let’s connect. Talk to our AI staffing experts today and discover how we can help you hire remote AI developers or hire ML developers who are ready to make an impact from day one.

👉 Contact Us Now | Schedule a Free Consultation


Text

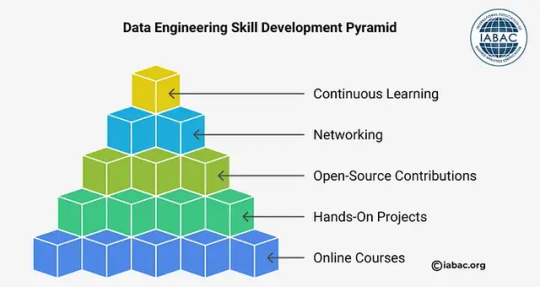

Essential data engineering skills include data modeling, SQL, Python, ETL pipeline development, cloud platforms, and knowledge of big data tools like Spark or Hadoop. These skills help manage, process, and prepare large datasets for analytics and machine learning.

To know more, Visit: www.iabac.org

#iabac#machine learning#online certification#data analytics#certification#professional certification#iabac certification#data science#hr#hr analytics


Text

Big Data Technologies: Hadoop, Spark, and Beyond

In this era where every click, transaction, or sensor emits a massive flux of information, the term "Big Data" has gone past being a mere buzzword and has become both an inherent challenge and an enormous opportunity. These are datasets so enormous, so complex, and so fast-growing that traditional data-processing applications cannot handle them. This ocean of information needs special tools, and at the forefront of this revolution are Big Data technologies: Hadoop, Spark, and beyond.

Anyone who wants to make sense of the modern digital world needs to be familiar with these technologies, whether an aspiring data professional or a business intent on extracting actionable insights from its massive data stores.

What is Big Data and Why Do We Need Special Technologies?

Big Data is commonly characterized by three Vs:

Volume: Enormous amounts of data (terabytes, petabytes, exabytes).

Velocity: Data generated and processed at incredibly high speeds (e.g., real-time stock trades, IoT sensor data).

Variety: Data coming in diverse formats (structured, semi-structured, unstructured – text, images, videos, logs).

Traditional relational databases and processing tools were not built to handle this scale, speed, or diversity. They would crash, take too long, or simply fail to process such immense volumes. This led to the emergence of distributed computing frameworks designed specifically for Big Data.

Hadoop: The Pioneer of Big Data Processing

Apache Hadoop was groundbreaking in its time. It completely changed how data is stored and processed at large scale. It provides a framework for distributed storage and processing of datasets too large to be processed on a single machine.

· Key Components:

HDFS (Hadoop Distributed File System): A distributed file system that stores data across multiple machines, making it fault-tolerant and highly scalable.

MapReduce: A programming model for processing large data sets with a parallel, distributed algorithm on a cluster. It subdivides a large problem into smaller ones that can be solved independently in parallel.
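The MapReduce idea can be sketched in a few lines of plain Python using the classic word count. This is not the Hadoop API and runs on a single machine; the function names and sample documents are hypothetical, and on a real cluster each phase would run in parallel across many nodes.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)


# Hypothetical input; each document could be mapped independently of the others.
documents = ["big data big ideas", "data beats opinions"]
mapped = chain.from_iterable(map_phase(d) for d in documents)
word_counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(word_counts)
```

Because each document is mapped on its own and each key is reduced on its own, both phases split naturally into independent tasks, which is what allows the model to scale out.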

What made it revolutionary was the fact that Hadoop enabled organizations to store and process data they previously could not, hence democratizing access to massive datasets.

Spark: The Speed Demon of Big Data Analytics

While MapReduce on Hadoop is a formidable force, its disk-based processing is slow when it comes to iterative algorithms and real-time analytics. Enter Apache Spark, a generation ahead in terms of speed and versatility.

· Key Advantages over Hadoop MapReduce:

In-Memory Processing: Spark processes data in memory, which can be 10 to 100 times faster than MapReduce for some workloads, particularly iterative algorithms (machine learning is a good example).

Versatility: Several libraries are built on top of Spark's core engine:

Spark SQL: Structured data processing using SQL.

Spark Streaming: Real-time data processing.

MLlib: Machine Learning library.

GraphX: Graph processing.

Why it matters: Spark is a leading choice for real-time analytics, complex data transformations, and machine learning on Big Data.

And Beyond: Evolving Big Data Technologies

The Big Data ecosystem grows with each passing day. While Hadoop and Spark are at the heart of the Big Data paradigm, many other technologies complement and extend their capabilities:

NoSQL Databases: (e.g., MongoDB, Cassandra, HBase) – Databases designed to handle massive volumes of unstructured or semi-structured data with greater scalability and flexibility than traditional relational databases.

Stream Processing Frameworks: (e.g., Apache Kafka, Apache Flink) – These process data as soon as it arrives (in real time), which is crucial for fraud detection, IoT analytics, and real-time dashboards.

Data Warehouses & Data Lakes: Cloud-native solutions (e.g., Amazon Redshift, Snowflake, Google BigQuery, Azure Synapse Analytics) that provide scalable, managed environments for storing and analyzing large volumes of data, often with seamless integration with Spark.

Cloud Big Data Services: Major cloud providers offer fully managed Big Data processing services (e.g., AWS EMR, Google Dataproc, Azure HDInsight) that remove much of the deployment and management overhead.

Data Governance & Security Tools: As data grows, the need to manage its quality, privacy, and security becomes paramount.

Career Opportunities in Big Data

Mastering Big Data technologies opens doors to highly sought-after roles such as:

Big Data Engineer

Data Architect

Data Scientist (often uses Spark/Hadoop for data preparation)

Business Intelligence Developer

Cloud Data Engineer

Many institutes now offer specialized Big Data courses in Ahmedabad that provide hands-on training in Hadoop, Spark, and related ecosystems, preparing you for these exciting careers.

The journey into Big Data technologies is a deep dive into the engine room of the modern digital economy. By understanding and mastering tools like Hadoop, Spark, and the array of complementary technologies, you're not just learning to code; you're learning to unlock the immense power of information, shaping the future of industries worldwide.

Contact us

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/
