#Data Science Technologies

Text

Data science technologies and trends are moving swiftly toward a landscape of hyper-automation and strong business returns. This is your chance to learn which trends will shape the future of data science. Read the latest developments here: https://bit.ly/3ZieX4i

Text

What is Data Science? A Comprehensive Guide for Beginners

In today’s data-driven world, the term “Data Science” has become a buzzword across industries. Whether it’s in technology, healthcare, finance, or retail, data science is transforming how businesses operate, make decisions, and understand their customers. But what exactly is data science? And why is it so crucial in the modern world? This comprehensive guide is designed to help beginners understand the fundamentals of data science, its processes, tools, and its significance in various fields.

#Data Science#Data Collection#Data Cleaning#Data Exploration#Data Visualization#Data Modeling#Model Evaluation#Deployment#Monitoring#Data Science Tools#Data Science Technologies#Python#R#SQL#PyTorch#TensorFlow#Tableau#Power BI#Hadoop#Spark#Business#Healthcare#Finance#Marketing

Text

The article provides a comprehensive overview of the diverse tools and technologies used in the field of data science, offering insights into the evolving landscape of this dynamic industry. It highlights the essential tools and their applications, facilitating a deeper understanding of data science practices. Read more...

Text

Cloudburst

Enshittification isn’t inevitable: under different conditions and constraints, the old, good internet could have given way to a new, good internet. Enshittification is the result of specific policy choices: encouraging monopolies; enabling high-speed, digital shell games; and blocking interoperability.

First we allowed companies to buy up their competitors. Google is the shining example here: having made one good product (search), they then fielded an essentially unbroken string of in-house flops, but it didn’t matter, because they were able to buy their way to glory: video, mobile, ad-tech, server management, docs, navigation…They’re not Willy Wonka’s idea factory, they’re Rich Uncle Pennybags, making up for their lack of invention by buying out everyone else:

https://locusmag.com/2022/03/cory-doctorow-vertically-challenged/

But this acquisition-fueled growth isn’t unique to tech. Every administration since Reagan (but not Biden! more on this later) has chipped away at antitrust enforcement, so that every sector has undergone an orgy of mergers, from athletic shoes to sea freight, eyeglasses to pro wrestling:

https://www.whitehouse.gov/cea/written-materials/2021/07/09/the-importance-of-competition-for-the-american-economy/

But tech is different, because digital is flexible in a way that analog can never be. Tech companies can “twiddle” the back-ends of their clouds to change the rules of the business from moment to moment, in a high-speed shell-game that can make it impossible to know what kind of deal you’re getting:

https://pluralistic.net/2023/02/27/knob-jockeys/#bros-be-twiddlin

To make things worse, users are banned from twiddling. The thicket of rules we call IP ensures that twiddling is only done against users, never for them. Reverse-engineering, scraping, bots: these can all be blocked with legal threats and suits and even criminal sanctions, even if they’re being done for legitimate purposes:

https://locusmag.com/2020/09/cory-doctorow-ip/

Enshittification isn’t inevitable, but if we let companies buy all their competitors, if we let them twiddle us with every hour that God sends, if we make it illegal to twiddle back in self-defense, we will get twiddled to death. When a company can operate without the discipline of competition, nor of privacy law, nor of labor law, nor of fair trading law, with the US government standing by to punish any rival who alters the logic of their service, then enshittification is the utterly foreseeable outcome.

To understand how our technology gets distorted by these policy choices, consider “The Cloud.” Once, “the cloud” was just a white-board glyph, a way to show that some part of a software’s logic would touch some commodified, fungible, interchangeable appendage of the internet. Today, “The Cloud” is a flashing warning sign, the harbinger of enshittification.

When your image-editing tools live on your computer, your files are yours. But once Adobe moves your software to The Cloud, your critical, labor-intensive, unrecreatable images are purely contingent. At any time, without notice, Adobe can twiddle the back end and literally steal the colors out of your own files:

https://pluralistic.net/2022/10/28/fade-to-black/#trust-the-process

The finance sector loves The Cloud. Add “The Cloud” to a product and profits (money you get for selling something) can turn into rents (money you get for owning something). Profits can be eroded by competition, but rents are evergreen:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

No wonder The Cloud has seeped into every corner of our lives. Remember your first iPod? Adding music to it was trivial: double click any music file to import it into iTunes, then plug in your iPod and presto, synched! Today, even sophisticated technology users struggle to “side load” files onto their mobile devices. Instead, the mobile duopoly — Apple and Google, who bought their way to mobile glory and have converged on the same rent-seeking business practices, down to the percentages they charge — want you to get your files from The Cloud, via their apps. This isn’t for technological reasons, it’s a business imperative: 30% of every transaction that involves an app gets creamed off by either Apple or Google in pure rents:

https://www.kickstarter.com/projects/doctorow/red-team-blues-another-audiobook-that-amazon-wont-sell/posts/3788112

And yet, The Cloud is undeniably useful. Having your files synch across multiple devices, including your collaborators’ devices, with built-in tools for resolving conflicting changes, is amazing. Indeed, this feat is the holy grail of networked tools, because it’s how programmers write all the software we use, including software in The Cloud.

If you want to know how good a tool can be, just look at the tools that toolsmiths use. With “source control” — the software programmers use to collaboratively write software — we get a very different vision of how The Cloud could operate. Indeed, modern source control doesn’t use The Cloud at all. Programmers’ workflow doesn’t break if they can’t access the internet, and if the company that provides their source control servers goes away, it’s simplicity itself to move onto another server provider.

This isn’t The Cloud, it’s just “the cloud” — that whiteboard glyph from the days of the old, good internet — freely interchangeable, eminently fungible, disposable and replaceable. For a tool like git, Github is just one possible synchronization point among many, all of which have a workflow whereby programmers’ computers automatically make local copies of all relevant data and periodically lob it back up to one or more servers, resolving conflicting edits through a process that is also largely automated.

There’s a name for this model: it’s called “Local First” computing, which is computing that starts from the presumption that the user and their device are the most important elements of the system. Networked servers are dumb pipes and dumb storage, a nice-to-have that fails gracefully when it’s not available.
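
To make the "fails gracefully" idea concrete, here is a minimal Python sketch of a local-first store. It is only an illustration under stated assumptions: the class name `LocalFirstNotes` and the `push` upload function are hypothetical, and no real product is claimed to work this way. The point is that writes always land in the local copy first, and a missing server merely leaves edits queued.

```python
from typing import Callable, Dict, List, Tuple


class LocalFirstNotes:
    """Toy local-first store: the local copy is authoritative, sync is optional."""

    def __init__(self, push: Callable[[str, str], None]):
        self.notes: Dict[str, str] = {}            # local data, always usable
        self.pending: List[Tuple[str, str]] = []   # edits not yet on any server
        self._push = push                          # hypothetical upload function

    def write(self, key: str, text: str) -> None:
        # Writes always succeed locally, whether or not a server is reachable.
        self.notes[key] = text
        self.pending.append((key, text))

    def sync(self) -> int:
        """Try to upload pending edits in order; return how many were sent."""
        sent = 0
        while self.pending:
            key, text = self.pending[0]
            try:
                self._push(key, text)
            except ConnectionError:
                break  # server unavailable: keep the edit queued and carry on
            self.pending.pop(0)
            sent += 1
        return sent
```

Because the server is only ever reached through `push`, swapping one provider for another means passing in a different function; the local data and the queue of pending edits are untouched.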

The data structures of source-code are among the most complicated formats we have; if we can do this for code, we can do it for spreadsheets, word-processing files, slide-decks, even edit-decision-lists for video and audio projects. If local-first computing can work for programmers writing code, it can work for the programs those programmers write.

Local-first computing is experiencing a renaissance. Writing for Wired, Gregory Barber traces the history of the movement, starting with the French computer scientist Marc Shapiro, who helped develop the theory of “Conflict-Free Replicated Data Types” (CRDTs), a way to synchronize data after multiple people edit it, two decades ago:

https://www.wired.com/story/the-cloud-is-a-prison-can-the-local-first-software-movement-set-us-free/

Shapiro and his co-author Nuno Preguiça envisioned CRDTs as the building block of a new generation of P2P collaboration tools that weren’t exactly serverless, but which also didn’t rely on servers as the lynchpin of their operation. They published a technical paper that, while exciting, was largely drowned out by the release of Google Docs (based on technology built by a company that Google bought, not something Google made in-house).
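
For readers who want a feel for how a conflict-free replicated data type lets copies converge without a central server, here is a small Python sketch of a grow-only counter, one of the simplest textbook examples. It is not the algorithm from Shapiro and Preguiça's paper or from any tool mentioned here; the class and replica names are made up for illustration.

```python
from typing import Dict


class GCounter:
    """Grow-only counter, one of the simplest conflict-free replicated data types."""

    def __init__(self, replica_id: str):
        self.replica_id = replica_id
        self.counts: Dict[str, int] = {}  # per-replica increment totals

    def increment(self, amount: int = 1) -> None:
        # Each replica only ever raises its own entry.
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def merge(self, other: "GCounter") -> None:
        # Element-wise max is commutative, associative, and idempotent,
        # so replicas converge regardless of merge order or repetition.
        for rid, n in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), n)

    def value(self) -> int:
        return sum(self.counts.values())
```

Two replicas can each call `increment` while offline and later `merge` in either direction, as many times as they like, and both end up reporting the same `value()`. Richer structures such as shared text need more elaborate types, but the merge rule has the same shape.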

Shapiro and Preguiça’s work got fresh interest with the 2019 publication of “Local-First Software: You Own Your Data, in spite of the Cloud,” a viral whitepaper-cum-manifesto from a quartet of computer scientists associated with Cambridge University and Ink and Switch, a self-described “industrial research lab”:

https://www.inkandswitch.com/local-first/static/local-first.pdf

The paper describes how its authors (Martin Kleppmann, Adam Wiggins, Peter van Hardenberg and Mark McGranaghan) prototyped and tested a bunch of simple local-first collaboration tools built on CRDT algorithms, with the goal of “network optional…seamless collaboration.” The results are impressive, if nascent. Conflicting edits were simpler to resolve than the authors anticipated, and users found URLs to be a good, intuitive way of sharing documents. The biggest hurdles are relatively minor, like managing large amounts of change-data associated with shared files.

Just as importantly, the paper makes the case for why you’d want to switch to local-first computing. The Cloud is not reliable. Companies like Evernote don’t last forever — they can disappear in an eyeblink, and take your data with them:

https://www.theverge.com/2023/7/9/23789012/evernote-layoff-us-staff-bending-spoons-note-taking-app

Google isn’t likely to disappear any time soon, but Google is a graduate of the Darth Vader MBA program (“I have altered the deal, pray I don’t alter it any further”) and notorious for shuttering its products, even beloved ones like Google Reader:

https://www.theverge.com/23778253/google-reader-death-2013-rss-social

And while the authors don’t mention it, Google is also prone to simply kicking people off all its services, costing them their phone numbers, email addresses, photos, document archives and more:

https://pluralistic.net/2022/08/22/allopathic-risk/#snitches-get-stitches

There is enormous enthusiasm among developers for local-first application design, which is only natural. After all, companies that use The Cloud go to great lengths to make it just “the cloud,” using containerization to simplify hopping from one cloud provider to another in a bid to stave off lock-in from their cloud providers and the enshittification that inevitably follows.

The nimbleness of containerization acts as a disciplining force on cloud providers when they deal with their business customers: disciplined by the threat of losing money, cloud companies are incentivized to treat those customers better. The companies we deal with as end-users know exactly how bad it gets when a tech company can impose high switching costs on you and then turn the screws until things are almost-but-not-quite so bad that you bolt for the doors. They devote fantastic effort to making sure that never happens to them — and that they can always do that to you.

Interoperability — the ability to leave one service for another — is technology’s secret weapon, the thing that ensures that users can turn The Cloud into “the cloud,” a humble whiteboard glyph that you can erase and redraw whenever it suits you. It’s the greatest hedge we have against enshittification, so small wonder that Big Tech has spent decades using interop to clobber their competitors, and lobbying to make it illegal to use interop against them:

https://locusmag.com/2019/01/cory-doctorow-disruption-for-thee-but-not-for-me/

Getting interop back is a hard slog, but it’s also our best shot at creating a new, good internet that lives up to the promise of the old, good internet. In my next book, The Internet Con: How to Seize the Means of Computation (Verso Books, Sept 5), I set out a program for disenshittifying the internet:

https://www.versobooks.com/products/3035-the-internet-con

The book is up for pre-order on Kickstarter now, along with an independent, DRM-free audiobook (DRM-free media is the content-layer equivalent of containerized services: you can move them into or out of any app you want):

http://seizethemeansofcomputation.org

Meanwhile, Lina Khan, the FTC and the DoJ Antitrust Division are taking steps to halt the economic side of enshittification, publishing new merger guidelines that will ban the kind of anticompetitive merger that let Big Tech buy its way to glory:

https://www.theatlantic.com/ideas/archive/2023/07/biden-administration-corporate-merger-antitrust-guidelines/674779/

The internet doesn’t have to be enshittified, and it’s not too late to disenshittify it. Indeed — the same forces that enshittified the internet — monopoly mergers, a privacy and labor free-for-all, prohibitions on user-side twiddling — have enshittified everything from cars to powered wheelchairs. Not only should we fight enshittification — we must.

Back my anti-enshittification Kickstarter here!

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/03/there-is-no-cloud/#only-other-peoples-computers

Image: Drahtlos (modified) https://commons.wikimedia.org/wiki/File:Motherboard_Intel_386.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

—

cdsessums (modified) https://commons.wikimedia.org/wiki/File:Monsoon_Season_Flagstaff_AZ_clouds_storm.jpg

CC BY-SA 2.0 https://creativecommons.org/licenses/by-sa/2.0/deed.en

#pluralistic#web3#darth vader mba#conflict-free replicated data#CRDT#computer science#saas#Mark McGranaghan#Adam Wiggins#evernote#git#local-first computing#the cloud#cloud computing#enshittification#technological self-determination#Martin Kleppmann#Peter van Hardenberg

Text

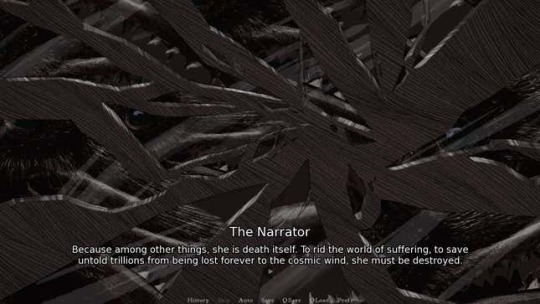

Heroes, Gods, and the Invisible Narrator

Slay the Princess as a Framework for the Cyclical Reproduction of Colonialist Narratives in Data Science & Technology

An Essay by FireflySummers

All images are captioned.

Content Warnings: Body Horror, Discussion of Racism and Colonialism

Spoilers for Slay the Princess (2023) by @abby-howard and Black Tabby Games.

If you enjoy this article, consider reading my guide to arguing against the use of AI image generators or the academic article it's based on.

Introduction: The Hero and the Princess

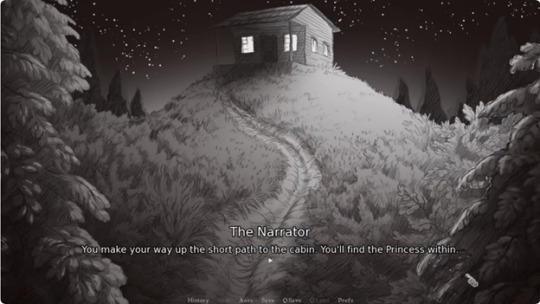

You're on a path in the woods, and at the end of that path is a cabin. And in the basement of that cabin is a Princess. You're here to slay her. If you don't, it will be the end of the world.

Slay the Princess is a 2023 indie horror game by Abby Howard and published through Black Tabby Games, with voice talent by Jonathan Sims (yes, that one) and Nichole Goodnight.

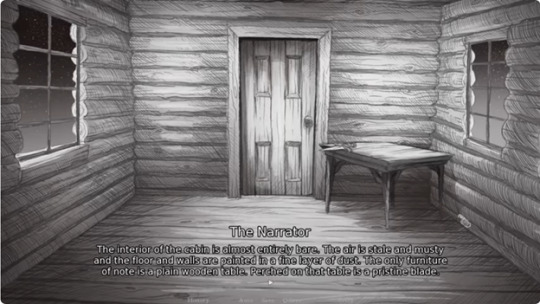

The game starts with you dropped without context in the middle of the woods. But that’s alright. The Narrator is here to guide you. You are the hero, you have your weapon, and you have a monster to slay.

From there, it's the player's choice exactly how to proceed--whether that be listening to the voice of the narrator, or attempting to subvert him. You can kill her as instructed, or sit and chat, or even free her from her chains.

It doesn't matter.

Regardless of whether you are successful in your goal, you will inevitably (and often quite violently) die.

And then...

You are once again on a path in the woods.

The cycle repeats itself, the narrator seemingly none the wiser. But the woods are different, and so is the cabin. You're different, and worse... so is she.

Based on your actions in the previous loop, the princess has... changed. Distorted.

Had you attempted a daring rescue, she is now a damsel--sweet and submissive and already fallen in love with you.

Had you previously betrayed her, she has warped into something malicious and sinister, ready to repay your kindness in full.

But once again, it doesn't matter.

Because no matter what you choose, no matter how the world around you contorts under the weight of repeated loops, it will always be you and the princess.

Why? Because that’s how the story goes.

So says the narrator.

So now that we've got that out of the way, let's talk about data.

Chapter I: Echoes and Shattered Mirrors

The problem with "data" is that we don't really think too much about it anymore. Or, at least, we think about it in the same abstract way we think about "a billion people." It's gotten so big, so seemingly impersonal that it's easy to forget that the contemporary concept of "data" in the west is a phenomenon only a couple centuries old [1].

This modern conception of the word describes the ways that we translate the world into words and numbers that can then be categorized and analyzed. As such, data has a lot of practical uses, whether that be putting a rover on Mars or tracking the outbreak of a viral contagion. However, this functionality makes it all too easy to overlook the fact that data itself is not neutral. It is gathered by people, sorted into categories designed by people, and interpreted by people. At every step, there are people involved, such that contemporary technology is embedded with systemic injustices, and not always by accident.

The reproduction of systems of oppression is most obvious from the margins. In his 2019 article As If, Ramon Amaro describes the Aspire Mirror (2016): a speculative design project by Joy Buolamwini that contended with the fact that the standard facial recognition algorithm library had been trained almost exclusively on white faces. The simplest solution was to artificially lighten darker skin-tones for the algorithm to recognize, which Amaro uses to illustrate the way that technology is developed with an assumption of whiteness [2].

This observation applies across other intersections as well, such as trans identity [3]. Automatic gender recognition has been colloquially dubbed "The Misgendering Machine" [4] for its insistence on classifying people into a strict gender binary based only on physical appearance.

This has also popped up in my own research, brought to my attention by the artist @b4kuch1n who has spoken at length with me about the connection between their Vietnamese heritage and the clothing they design in their illustrative work [5]. They call out AI image generators for reinforcing colonialism by stripping art with significant personal and cultural meaning of their context and history, using them to produce a poor facsimile to sell to the highest bidder.

All this describes an iterative cycle which defines normalcy through a white, western lens, with a limited range of acceptable diversity. Within this cycle, AI feeds on data gathered under colonialist ideology, then produces an artifact that reinforces existing systemic bias. When this data is, in turn, once again fed to the machine, that bias becomes all the more severe, and the range of acceptability narrower [2, 6].
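
The narrowing described here can be sketched as a toy loop in Python. This is my own simplification and not a model taken from any of the cited works: the "machine" is assumed to do nothing more than treat values within a fixed number of standard deviations of the mean as normal, and its output becomes the next round's input. The function names and the `tolerance` parameter are hypothetical.

```python
from statistics import mean, pstdev
from typing import List


def next_generation(data: List[float], tolerance: float = 1.5) -> List[float]:
    """Keep only values the toy 'model' treats as normal: within tolerance * std of the mean."""
    center, spread = mean(data), pstdev(data)
    return [x for x in data if abs(x - center) <= tolerance * spread]


def run_loop(data: List[float], generations: int) -> List[float]:
    """Return the width (max - min) of the data before and after each generation."""
    widths = [max(data) - min(data)]
    for _ in range(generations):
        data = next_generation(data)  # the output of one round is the input to the next
        widths.append(max(data) - min(data))
    return widths
```

Starting from 101 evenly spaced values and calling `run_loop(values, 5)`, each generation's width is smaller than the last: whatever sat at the margins is the first thing to be cut, and the definition of "normal" tightens around what remains.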

Luciana Parisi and Denise Ferreira da Silva touch on a similar point in their article Black Feminist Tools, Critique, and Techno-poethics but on a much broader scale. They call up the Greek myth of Prometheus, who was punished by the gods for his hubris for stealing fire to give to humanity. Parisi and Ferreira da Silva point to how this, and other parts of the “Western Cosmology” map to humanity’s relationship with technology [7].

However, while this story seems to celebrate the technological advancement of humanity, there are darker colonialist undertones. It frames the world in terms of the gods and man, the oppressor and the oppressed; but it provides no other way of being. So instead the story repeats itself, with so-called progress an inextricable part of these two classes of being. This doesn’t bode well for visions of the future, then–because surely, eventually, the oppressed will one day be the machines [7, 8].

It’s… depressing. But it’s only really true, if you assume that that’s the only way the story could go.

“Stories don't care who takes part in them. All that matters is that the story gets told, that the story repeats. Or, if you prefer to think of it like this: stories are a parasitical life form, warping lives in the service only of the story itself.” ― Terry Pratchett, Witches Abroad

Chapter II: The Invisible Narrator

So why does the narrator get to call the shots on how a story might go? Who even are they? What do they want? How much power do they actually have?

With the exception of first person writing, a lot of the time the narrator is invisible. This is different from an unreliable narrator. With an unreliable narrator, at some point the audience becomes aware of their presence in order for the story to function as intended. An invisible narrator is never meant to be seen.

In Slay the Princess, the narrator would very much like to be invisible. Instead, he has been dragged out into the light, because you (and the inner voices you pick up along the way), are starting to argue with him. And he doesn’t like it.

Despite his claims that the princess will lie and cheat in order to escape, as the game progresses it’s clear that the narrator is every bit as manipulative–if not more so, because he actually knows what’s going on. And, if the player tries to diverge from the path that he’s set before them, the correct path, then it rapidly becomes clear that he, at least to start, has the power to force that correct path.

While this is very much a narrative device, the act of calling attention to the narrator is important beyond that context.

The Hero’s Journey is the true monomyth, something to which all stories can be reduced. It doesn’t matter that the author, Joseph Campbell, was a raging misogynist whose framework flattened cultures and stories to fit a western lens [9, 10]. It was used in Star Wars, so clearly it’s a universal framework.

The metaverse will soon replace the real world and crypto is the future of currency! Never mind that the organizations pushing it are suspiciously pyramid shaped. Get on board or be left behind.

Generative AI is pushed as the next big thing. The harms it inflicts on creatives and the harmful stereotypes it perpetuates are just bugs in the system. Never mind that the evangelists for this technology speak over the concerns of marginalized people [5]. That’s a skill issue, you gotta keep up.

Computers will eventually, likely soon, advance so far as to replace humans altogether. The robot uprising is on the horizon [8].

Who perpetuates these stories? What do they have to gain?

Why is the only story for the future replications of unjust systems of power? Why must the hero always slay the monster?

Because so says the narrator. And so long as they are invisible, it is simple to assume that this is simply the way things are.

Chapter III: The End...?

This is the part where Slay the Princess starts feeling like a stretch, but I’ve already killed the horse so I might as well beat it until the end too.

Because what is the end result here?

According to the game… collapse. A recursive story whose biases narrow the scope of each iteration ultimately collapses in on itself. The princess becomes so sharp that she is nothing but blades to eviscerate you. The princess becomes so perfect a damsel that she is a caricature of the trope. The story whittles itself away to nothing. And then the cycle begins anew.

There’s no climactic final battle with the narrator. He created this box, set things in motion, but he is beyond the player’s reach to confront directly. The only way out is to become aware of the box itself, and the agenda of the narrator. It requires acknowledgement of the artificiality of the roles thrust upon you and the Princess, the false dichotomy of hero or villain.

Slay the Princess doesn’t actually provide an answer to what lies outside of the box, merely acknowledges it as a limit that can be overcome.

With regards to the less fanciful narratives that comprise our day-to-day lives, it’s difficult to see the boxes and dichotomies we’ve been forced into, let alone what might be beyond them. But if the limit placed is that there are no stories that can exist outside of capitalism, outside of colonialism, outside of rigid hierarchies and oppressive structures, then that limit can be broken [12].

Denouement: Doomed by the Narrative

Video games are an interesting artistic medium, due to their inherent interactivity. The commonly accepted mechanics of the medium, such as flavor text that provides in-game information and commentary, are an excellent example of an invisible narrator. Branching dialogue trees and multiple endings can help obscure this further, giving the player a sense of genuine agency… which provides an interesting opportunity to drag an invisible narrator into the light.

There are a number of games that have explored the power differential between the narrator and the player (The Stanley Parable, Little Misfortune, Undertale, Buddy.io, OneShot, etc…)

However, Slay the Princess works well here because it not only emphasizes the artificial limitations that the narrator sets on a story, but the way that these stories recursively loop in on themselves, reinforcing the fears and biases of previous iterations.

Critical data theory probably had nothing to do with the game’s development (Abby Howard if you're reading this, lmk). However, it works as a surprisingly cohesive framework for illustrating the ways that we can become ensnared by a narrative, and the importance of knowing who, exactly, is narrating the story. Although it is difficult or impossible to conceptualize what might exist beyond the artificial limits placed by even a well-intentioned narrator, calling attention to them and the box they’ve constructed is the first step in breaking out of this cycle.

“You can't go around building a better world for people. Only people can build a better world for people. Otherwise it's just a cage.” ― Terry Pratchett, Witches Abroad

Epilogue

If you've read this far, thank you for your time! This was an adaptation of my final presentation for a Critical Data Studies course. Truthfully, this course posed quite a challenge--I found the readings of philosophers such as Kant, Adorno, Foucault, etc... difficult to parse. More contemporary scholars were significantly more accessible. My only hope is that I haven't gravely misinterpreted the scholars and researchers whose work inspired this piece.

I honestly feel like this might have worked best as a video essay, but I don't know how to do those, and don't have the time to learn or the money to outsource.

Slay the Princess is available for purchase now on Steam.

Screencaps from ManBadassHero Let's Plays: [Part 1] [Part 2] [Part 3] [Part 4] [Part 5] [Part 6]

Post Dividers by @cafekitsune

Citations:

[1] Rosenberg, D. (2018). Data as word. Historical Studies in the Natural Sciences, 48(5), 557-567.

[2] Amaro, R. (2019). As If. e-flux Architecture. Becoming Digital. https://www.e-flux.com/architecture/becoming-digital/248073/as-if/

[3] What Ethical AI Really Means by PhilosophyTube

[4] Keyes, O. (2018). The misgendering machines: Trans/HCI implications of automatic gender recognition. Proceedings of the ACM on Human-Computer Interaction, 2(CSCW), 1-22.

[5] Allred, A.M., Aragon, C. (2023). Art in the Machine: Value Misalignment and AI “Art”. In: Luo, Y. (eds) Cooperative Design, Visualization, and Engineering. CDVE 2023. Lecture Notes in Computer Science, vol 14166. Springer, Cham. https://doi.org/10.1007/978-3-031-43815-8_4

[6] Amaro, R. (2019). Artificial Intelligence: warped, colorful forms and their unclear geometries.

[7] Parisi, L., Ferreira da Silva, D. Black Feminist Tools, Critique, and Techno-poethics. e-flux. Issue #123. https://www.e-flux.com/journal/123/436929/black-feminist-tools-critique-and-techno-poethics/

[8] AI - Our Shiny New Robot King | Sophie from Mars by Sophie From Mars

[9] Joseph Campbell and the Myth of the Monomyth | Part 1 by Maggie Mae Fish

[10] Joseph Campbell and the N@zis | Part 2 by Maggie Mae Fish

[11] How Barbie Cis-ified the Matrix by Jessie Gender

#slay the princess#stp spoilers#stp#stp princess#abby howard#black tabby games#academics#critical data studies#computer science#technology#hci#my academics#my writing#long post

Text

How To Learn Math for Machine Learning FAST (Even With Zero Math Background)

I dropped out of high school and managed to become an Applied Scientist at Amazon by self-learning math (and other ML skills). In this video I'll show you exactly how I did it, sharing the resources and study techniques that worked for me, along with practical advice on what math you actually need (and don't need) to break into machine learning and data science.

#How To Learn Math for Machine Learning#machine learning#free education#education#youtube#technology#educate yourselves#educate yourself#tips and tricks#software engineering#data science#artificial intelligence#data analytics#data science course#math#mathematics#Youtube

Text

If only William from HR would visit more often...

#vintage illustration#vintage computers#computers#computing#technology#electronics#vintage electronics#vintage tech#vintage technology#engineering#computer science#mainframes#mainframe computers#tape drives#magnetic tape#tape storage#magnetic tape drives#univac#data entry

Text

To be clear, AI can drive scientific breakthroughs. My concern is about their magnitude and frequency. Has AI really shown enough potential to justify such a massive shift in talent, training, time, and money away from existing research directions and towards a single paradigm?

Every field of science is experiencing AI differently, so we should be cautious about making generalizations. I’m convinced, however, that some of the lessons from my experience are broadly applicable across science:

AI adoption is exploding among scientists less because it benefits science and more because it benefits the scientists themselves.

Because AI researchers almost never publish negative results, AI-for-science is experiencing survivorship bias.

The positive results that get published tend to be overly optimistic about AI’s potential.

As a result, I’ve come to believe that AI has generally been less successful and revolutionary in science than it appears to be.
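
The survivorship-bias point can be illustrated with a small Python sketch. It is a toy under loud assumptions, not data from any real field: study outcomes are modeled as evenly spaced quantiles of a standard normal distribution centered on zero (that is, a method with no true benefit), and only results above a hypothetical `threshold` get published.

```python
from statistics import NormalDist, mean
from typing import List, Tuple


def simulate_publication(n_studies: int = 1000, threshold: float = 1.0) -> Tuple[float, float]:
    """Return (mean of all results, mean of published results) for a method with no true effect."""
    dist = NormalDist(mu=0.0, sigma=1.0)
    # Deterministic stand-in for noisy study outcomes: evenly spaced quantiles.
    results: List[float] = [dist.inv_cdf((i + 0.5) / n_studies) for i in range(n_studies)]
    # Only "impressive" results get written up; the rest stay in the drawer.
    published = [r for r in results if r > threshold]
    return mean(results), mean(published)
```

The mean over all studies comes out at essentially zero, while the mean over the published subset is necessarily above the threshold. A reader who sees only the published results would conclude the method works well even though, by construction, it does nothing.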

Ultimately, I don’t know whether AI will reverse the decades-long trend of declining scientific productivity and stagnating (or even decelerating) rates of scientific progress. I don’t think anyone does. But barring major (and in my opinion unlikely) breakthroughs in advanced AI, I expect AI to be much more a normal tool of incremental, uneven scientific progress than a revolutionary one.

Text

Aluminum scandium nitride films: Enabling next-gen ferroelectric memory devices

Imagine a thin film, just nanometers thick, that could store gigabytes of data—enough for movies, video games, and videos. This is the exciting potential of ferroelectric materials for memory storage. These materials have a unique arrangement of ions, resulting in two distinct polarization states analogous to 0 and 1 in binary code, which can be used for digital memory storage. These states are stable, meaning they can 'remember' data without power, and can be switched efficiently by applying a small electric field. This property makes them extremely energy-efficient and capable of fast read and write speeds. However, some well-known ferroelectric materials, such as Pb(Zr,Ti)O3 (PZT) and SrBi2Ta2O9, degrade and lose their polarization when exposed to heat treatment with hydrogen during fabrication.

Read more.

#Materials Science#Science#Thin films#Ferroelectric#Data storage#Aluminum#Scandium#Nitrides#Electronics#Tokyo Institute of Technology

15 notes

Text

"From Passion to Profession: Steps to Enter the Tech Industry"

How to Break into the Tech World: Your Comprehensive Guide

In today’s fast-paced digital landscape, the tech industry is thriving and full of opportunities. Whether you’re a student, a career changer, or someone passionate about technology, you may be wondering, “How do I get into the tech world?” This guide will provide you with actionable steps, resources, and insights to help you successfully navigate your journey.

Understanding the Tech Landscape

Before you start, it's essential to understand the various sectors within the tech industry. Key areas include:

Software Development: Designing and building applications and systems.

Data Science: Analyzing data to support decision-making.

Cybersecurity: Safeguarding systems and networks from digital threats.

Product Management: Overseeing the development and delivery of tech products.

User Experience (UX) Design: Focusing on the usability and overall experience of tech products.

Identifying your interests will help you choose the right path.

Step 1: Assess Your Interests and Skills

Begin your journey by evaluating your interests and existing skills. Consider the following questions:

What areas of technology excite me the most?

Do I prefer coding, data analysis, design, or project management?

What transferable skills do I already possess?

This self-assessment will help clarify your direction in the tech field.

Step 2: Gain Relevant Education and Skills

Formal Education

While a degree isn’t always necessary, it can be beneficial, especially for roles in software engineering or data science. Options include:

Computer Science Degree: Provides a strong foundation in programming and system design.

Coding Bootcamps: Intensive programs that teach practical skills quickly.

Online Courses: Platforms like Coursera, edX, and Udacity offer courses in various tech fields.

Self-Learning and Online Resources

The tech industry evolves rapidly, making self-learning crucial. Explore resources like:

FreeCodeCamp: Offers free coding tutorials and projects.

Kaggle: A platform for data science practice and competitions.

YouTube: Channels dedicated to tutorials on coding, design, and more.

Certifications

Certifications can enhance your credentials. Consider options like:

AWS Certified Solutions Architect: Valuable for cloud computing roles.

Certified Information Systems Security Professional (CISSP): Great for cybersecurity.

Google Analytics Certification: Useful for data-driven positions.

Step 3: Build a Portfolio

A strong portfolio showcases your skills and projects. Here’s how to create one:

For Developers

GitHub: Share your code and contributions to open-source projects.

Personal Website: Create a site to display your projects, skills, and resume.

For Designers

Design Portfolio: Use platforms like Behance or Dribbble to showcase your work.

Case Studies: Document your design process and outcomes.

For Data Professionals

Data Projects: Analyze public datasets and share your findings.

Blogging: Write about your data analysis and insights on a personal blog.

Step 4: Network in the Tech Community

Networking is vital for success in tech. Here are some strategies:

Attend Meetups and Conferences

Search for local tech meetups or conferences. Websites like Meetup.com and Eventbrite can help you find relevant events, providing opportunities to meet professionals and learn from experts.

Join Online Communities

Engage in online forums and communities. Use platforms like:

LinkedIn: Connect with industry professionals and share insights.

Twitter: Follow tech influencers and participate in discussions.

Reddit: Subreddits like r/learnprogramming and r/datascience offer valuable advice and support.

Seek Mentorship

Finding a mentor can greatly benefit your journey. Reach out to experienced professionals in your field and ask for guidance.

Step 5: Gain Practical Experience

Hands-on experience is often more valuable than formal education. Here’s how to gain it:

Internships

Apply for internships, even if they are unpaid. They offer exposure to real-world projects and networking opportunities.

Freelancing

Consider freelancing to build your portfolio and gain experience. Platforms like Upwork and Fiverr can connect you with clients.

Contribute to Open Source

Engaging in open-source projects can enhance your skills and visibility. Many projects on GitHub are looking for contributors.

Step 6: Prepare for Job Applications

Crafting Your Resume

Tailor your resume to highlight relevant skills and experiences. Align it with the job description for each application.

Writing a Cover Letter

A compelling cover letter can set you apart. Highlight your passion for technology and what you can contribute.

Practice Interviewing

Prepare for technical interviews by practicing coding challenges on platforms like LeetCode or HackerRank. For non-technical roles, rehearse common behavioral questions.

Step 7: Stay Updated and Keep Learning

The tech world is ever-evolving, making it crucial to stay current. Subscribe to industry newsletters, follow tech blogs, and continue learning through online courses.

Follow Industry Trends

Stay informed about emerging technologies and trends in your field. Resources like TechCrunch, Wired, and industry-specific blogs can provide valuable insights.

Continuous Learning

Dedicate time each week for learning. Whether through new courses, reading, or personal projects, ongoing education is essential for long-term success.

Conclusion

Breaking into the tech world may seem daunting, but with the right approach and commitment, it’s entirely possible. By assessing your interests, acquiring relevant skills, building a portfolio, networking, gaining practical experience, preparing for job applications, and committing to lifelong learning, you’ll be well on your way to a rewarding career in technology.

Embrace the journey, stay curious, and connect with the tech community. The tech world is vast and filled with possibilities, and your adventure is just beginning. Take that first step today and unlock the doors to your future in technology!

Contact information: Website: https://agileseen.com/how-to-get-to-tech-world/ Phone: 01722-326809 Email: [email protected]

#tech career#how to get into tech#technology jobs#software development#data science#cybersecurity#product management#UX design#tech education#networking in tech#internships#freelancing#open source contribution#tech skills#continuous learning#job application tips

9 notes

Text

Unlocking the Power of Data: Essential Skills to Become a Data Scientist

In today's data-driven world, the demand for skilled data scientists is skyrocketing. These professionals are the key to transforming raw information into actionable insights, driving innovation and shaping business strategies. But what exactly does it take to become a data scientist? It's a multidisciplinary field, requiring a unique blend of technical prowess and analytical thinking. Let's break down the essential skills you'll need to embark on this exciting career path.

1. Strong Mathematical and Statistical Foundation:

At the heart of data science lies a deep understanding of mathematics and statistics. You'll need to grasp concepts like:

Linear Algebra and Calculus: Essential for understanding machine learning algorithms and optimizing models.

Probability and Statistics: Crucial for data analysis, hypothesis testing, and drawing meaningful conclusions from data.
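As one small illustration of hypothesis testing, here is a sketch of a permutation test written with only the Python standard library. The function name `permutation_test` and both groups of measurements are made up for this example. A permutation test is just one of many possible tests, chosen here because it needs no extra packages.

```python
import random
import statistics

def permutation_test(group_a, group_b, n_permutations=5_000, seed=0):
    """Estimate a two-sided p-value for the difference in group means."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Shuffle the labels: under the null hypothesis they are exchangeable.
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        # Small tolerance guards against floating-point rounding.
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate away from exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)

# Made-up measurements for two hypothetical groups.
group_a = [12.1, 11.8, 12.5, 12.9, 12.3, 12.7]
group_b = [10.2, 10.9, 10.5, 11.1, 10.4, 10.8]
p_value = permutation_test(group_a, group_b)
print(f"estimated p-value: {p_value:.4f}")
```

A small p-value here suggests that a gap this large between the two groups would be unlikely if the group labels did not matter.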

2. Programming Proficiency (Python and/or R):

Data scientists are fluent in at least one, if not both, of the dominant programming languages in the field:

Python: Known for its readability and extensive libraries like Pandas, NumPy, Scikit-learn, and TensorFlow, making it ideal for data manipulation, analysis, and machine learning.

R: Specifically designed for statistical computing and graphics, R offers a rich ecosystem of packages for statistical modeling and visualization.

3. Data Wrangling and Preprocessing Skills:

Raw data is rarely clean and ready for analysis. A significant portion of a data scientist's time is spent on:

Data Cleaning: Handling missing values, outliers, and inconsistencies.

Data Transformation: Reshaping, merging, and aggregating data.

Feature Engineering: Creating new features from existing data to improve model performance.
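A minimal sketch of two of these steps, cleaning and feature engineering, might look like the following. It uses plain Python so that it runs without extra packages, though Pandas would be the more usual choice in practice. The records, field names, and helper functions are all invented for illustration.

```python
import statistics

# Made-up raw records; None marks a missing value.
raw = [
    {"customer": "a", "visits": 4, "spend": 120.0},
    {"customer": "b", "visits": 2, "spend": None},
    {"customer": "c", "visits": 5, "spend": 150.0},
    {"customer": "d", "visits": 0, "spend": 0.0},
    {"customer": "e", "visits": 3, "spend": 90.0},
]

def clean(records):
    """Data cleaning: fill missing spend with the median of known values."""
    known = [r["spend"] for r in records if r["spend"] is not None]
    median_spend = statistics.median(known)
    return [
        {**r, "spend": median_spend if r["spend"] is None else r["spend"]}
        for r in records
    ]

def add_features(records):
    """Feature engineering: derive spend per visit (0.0 when no visits)."""
    return [
        {**r, "spend_per_visit": r["spend"] / r["visits"] if r["visits"] else 0.0}
        for r in records
    ]

prepared = add_features(clean(raw))
for row in prepared:
    print(row)
```

Filling gaps with the median is only one possible choice. Depending on the data, dropping the row or flagging the value as missing may be more appropriate.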

4. Expertise in Databases and SQL:

Data often resides in databases. Proficiency in SQL (Structured Query Language) is essential for:

Extracting Data: Querying and retrieving data from various database systems.

Data Manipulation: Filtering, joining, and aggregating data within databases.
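To make the filtering, joining, and aggregating concrete, here is a small sketch that runs SQL against an in-memory SQLite database through Python's built-in sqlite3 module. The tables, columns, and values are hypothetical, and other database systems may differ in syntax details.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'north'), (2, 'south'), (3, 'north');
    INSERT INTO orders VALUES
        (1, 1, 40.0), (2, 1, 60.0), (3, 2, 25.0), (4, 3, 5.0), (5, 3, 200.0);
""")

# Filter small orders, join to customers, then aggregate per region.
query = """
    SELECT c.region, COUNT(*) AS n_orders, SUM(o.amount) AS total
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.amount >= ?
    GROUP BY c.region
    ORDER BY c.region
"""
rows = conn.execute(query, (10.0,)).fetchall()
conn.close()

for region, n_orders, total in rows:
    print(region, n_orders, total)
```

Passing the threshold as a `?` parameter rather than formatting it into the query string is the usual way to avoid SQL injection.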

5. Machine Learning Mastery:

Machine learning is a core component of data science, enabling you to build models that learn from data and make predictions or classifications. Key areas include:

Supervised Learning: Regression, classification algorithms.

Unsupervised Learning: Clustering, dimensionality reduction.

Model Selection and Evaluation: Choosing the right algorithms and assessing their performance.
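As a rough sketch of supervised learning and evaluation, the example below fits a straight line by ordinary least squares on part of a made-up dataset and measures the error on the held-out remainder. The data and the helper names `fit_line` and `mean_squared_error` are invented for this example. In practice a library such as Scikit-learn would normally handle this.

```python
import statistics

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def mean_squared_error(xs, ys, slope, intercept):
    """Average squared gap between predictions and actual values."""
    return statistics.fmean(
        (y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys)
    )

# Made-up data that roughly follows y = 2x + 1.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [3.1, 4.9, 7.2, 8.8, 11.1, 13.0, 14.8, 17.2, 18.9, 21.1]

# Hold out the last three points to evaluate on data the model has not seen.
train_x, test_x = xs[:7], xs[7:]
train_y, test_y = ys[:7], ys[7:]

slope, intercept = fit_line(train_x, train_y)
test_mse = mean_squared_error(test_x, test_y, slope, intercept)
print(f"slope={slope:.3f} intercept={intercept:.3f} test MSE={test_mse:.4f}")
```

Evaluating on held-out points rather than on the training data is the basic idea behind model evaluation. A random or cross-validated split is usually preferable to taking the last few rows.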

6. Data Visualization and Communication Skills:

Being able to effectively communicate your findings is just as important as the analysis itself. You'll need to:

Visualize Data: Create compelling charts and graphs to explore patterns and insights using libraries like Matplotlib, Seaborn (Python), or ggplot2 (R).

Tell Data Stories: Present your findings in a clear and concise manner that resonates with both technical and non-technical audiences.

7. Critical Thinking and Problem-Solving Abilities:

Data scientists are essentially problem solvers. You need to be able to:

Define Business Problems: Translate business challenges into data science questions.

Develop Analytical Frameworks: Structure your approach to solve complex problems.

Interpret Results: Draw meaningful conclusions and translate them into actionable recommendations.

8. Domain Knowledge (Optional but Highly Beneficial):

Having expertise in the specific industry or domain you're working in can give you a significant advantage. It helps you understand the context of the data and formulate more relevant questions.

9. Curiosity and a Growth Mindset:

The field of data science is constantly evolving. A genuine curiosity and a willingness to learn new technologies and techniques are crucial for long-term success.

10. Strong Communication and Collaboration Skills:

Data scientists often work in teams and need to collaborate effectively with engineers, business stakeholders, and other experts.

Kickstart Your Data Science Journey with Xaltius Academy's Data Science and AI Program:

Acquiring these skills can seem like a daunting task, but structured learning programs can provide a clear and effective path. Xaltius Academy's Data Science and AI Program is designed to equip you with the essential knowledge and practical experience to become a successful data scientist.

Key benefits of the program:

Comprehensive Curriculum: Covers all the core skills mentioned above, from foundational mathematics to advanced machine learning techniques.

Hands-on Projects: Provides practical experience working with real-world datasets and building a strong portfolio.

Expert Instructors: Learn from industry professionals with years of experience in data science and AI.

Career Support: Offers guidance and resources to help you launch your data science career.

Becoming a data scientist is a rewarding journey that blends technical expertise with analytical thinking. By focusing on developing these key skills and leveraging resources like Xaltius Academy's program, you can position yourself for a successful and impactful career in this in-demand field. The power of data is waiting to be unlocked – are you ready to take the challenge?

3 notes

Text

Pickl.AI offers a comprehensive approach to data science education through real-world case studies and practical projects. By working on industry-specific challenges, learners gain exposure to how data analysis, machine learning, and artificial intelligence are applied to solve business problems. The hands-on learning approach helps build technical expertise while developing critical thinking and problem-solving abilities. Pickl.AI’s programs are designed to prepare individuals for successful careers in the evolving data-driven job market, providing both theoretical knowledge and valuable project experience.

#Pickl.AI#data science#data science certification#data science case studies#machine learning#AI#artificial intelligence#data analytics#data science projects#career in data science#online education#real-world data science#data analysis#big data#technology

2 notes

Text

Liquid Crystal Art

#stract#digital art#minimalism#simple background#3D Abstract#lines#red#Plexus#technology#connection#internet#communication#backgrounds#data#geometric Shape#futuristic#spotted#business#science#computer#shape#computer Network#space#three-dimensional Shape#vector#complexity#design#pattern#illustration#concepts

3 notes

Text

doing geological mapping on a section of mars and it's like genuinely crazymaking that HiRISE and CTX give us high enough quality images that i can do it in 1:10000 resolution. on mars. you know. another planet

#1:10000 mapping is REALLY detailed for mars. hell it's fucking annoying to do on earth because there's so much at that scale#plus MRO was launched in 2005 so it's not even brand new technology#additional data are from odyssey and mex which are 2001 and 2003 respectively hello#anyway. back to craters. fuck meeeeee#huge what science is capable of but it's saurrr tedious#veni veni#there are many disadvantages to being a geologist

5 notes

Text

Read More Here: Substack 🤖

2 notes

Text

youtube

Statistics - A Full Lecture to learn Data Science (2025 Version)

Welcome to our comprehensive and free statistics tutorial (Full Lecture)! In this video, we'll explore essential tools and techniques that power data science and data analytics, helping us interpret data effectively. You'll gain a solid foundation in key statistical concepts and learn how to apply powerful statistical tests widely used in modern research and industry. From descriptive statistics to regression analysis and beyond, we'll guide you through each method's role in data-driven decision-making. Whether you're diving into machine learning, business intelligence, or academic research, this tutorial will equip you with the skills to analyze and interpret data with confidence. Let's get started!

#education#free education#technology#educate yourselves#educate yourself#data analysis#data science course#data science#data structure and algorithms#youtube#statistics for data science#statistics#economics#education system#learn data science#learn data analytics#Youtube

4 notes
