#temporal and spatial summation

Text

Let's explore the differences between temporal and spatial summation. We will discuss the mechanism, differences and comparisons of both kinds of summation to come to an understanding of which is better. If you're a medical science student, this blog will be really helpful for you. Visit My Assignment Services to read more such blogs written by top Canadian experts.

#temporal and spatial summation#temporal Vs spatial summation#Medical science assignment help#biology assignment help#neurobiology assignment help#brain science#Study of brain

0 notes

Text

The Science Research Manuscripts of S. Sunkavally, p410.

#synaptic density#IQ#cold water#temporal summation#spatial summation#threshold of firing in neurones#conversion of nitrogen to carbon monoxide#cold block#sciatic nerve#boron#neutron absorption#hydration shell#specific heat#mean free path#catalysis#temperature#rate of ageing#bacterial growth#potassium-rich medium#hyoglycemia#sleep#anaphylactic reactions#satyendra sunkavally#theoretical biology#cursive handwriting#manuscript

2 notes

Text

It occurred to me that nocturnal animals might be able to sum photons in space and time to increase sensitivity, akin to pooling pixels to create a larger and more sensitive pixel, or on a camera lengthening the exposure time. This would require neurons at some higher level in the visual system that sum the photoreceptor signals coming from small groups of neighbouring ommatidia (the optical building blocks of compound eyes, each consisting of a lens-pair and an underlying bundle of photoreceptors). Since each ommatidium is responsible for sampling a single pixel of the visual scene, this neural spatial summation would create a large ‘super ommatidium’ that samples a large ‘super pixel’. Similarly, some higher neuron, or circuit of neurons, might be responsible for lengthening the visual exposure time (which is equivalent to the visual integration time).

The downside of such a summation strategy is, however, that fewer larger pixels reduce spatial resolution, and a longer exposure time reduces temporal resolution. In other words, to improve sensitivity one would need to throw away the finer and faster details in a visual scene in order to see the coarser and slower ones a lot better. But this might be better than seeing nothing at all! With this realisation, I started to build a mathematical model to calculate the finest spatial detail that a nocturnal animal, with a given eye design, might see using such a summation strategy as light levels fell (Warrant, 1999).

The results were surprising – spatial and temporal summation should in theory allow nocturnal animals to see at light levels several orders of magnitude dimmer than would have been possible had summation not been used. But could nocturnal animals actually do this? The benefits of summation seemed obvious, and I became convinced that it must be a crucial component of nocturnal visual processing.
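The trade-off described above can be sketched with the standard photon-noise relationship: if photon arrivals are Poisson-distributed, a signal of N photons has a signal-to-noise ratio of sqrt(N). The snippet below is only an illustration under that assumption and is not the model from Warrant (1999); the function name, the photon rate and the pooling factors are all hypothetical.

```python
import math

def photon_snr(photons_per_ommatidium_per_s, pooled_ommatidia=1, integration_time_s=0.01):
    """Signal-to-noise ratio of a photon count, assuming Poisson photon noise.

    Summing over more ommatidia (spatial summation) or over a longer
    integration time (temporal summation) raises the expected count N,
    and the SNR of a Poisson count is sqrt(N).
    """
    expected_photons = (photons_per_ommatidium_per_s
                        * pooled_ommatidia
                        * integration_time_s)
    return math.sqrt(expected_photons)

# Hypothetical dim-light scene: 100 photons per second per ommatidium.
no_summation = photon_snr(100, pooled_ommatidia=1, integration_time_s=0.01)
with_summation = photon_snr(100, pooled_ommatidia=25, integration_time_s=0.04)
print(no_summation, with_summation)
```

In this sketch, pooling 25 ommatidia and quadrupling the integration time collects 100 times more photons, so the SNR improves tenfold, at the cost of coarser pixels and slower vision.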

I also realised that the same strategies could be used to improve video filmed in very dim light, and quite out of the blue, not long after I had published my model, I was contacted by the car manufacturer Toyota (who had realised the same thing). Toyota were very keen to develop an in-car camera system that could automatically monitor the road ahead at night – using only the existing natural light – and warn the driver of impending obstacles.

they are using bug vision in the cars....

49 notes

Text

Tremblay et al.: Figures and Boxes

Figure 1: Diversity, Classification, and Properties of Neocortical GABAergic INs

Neocortical GABAergic IN: GABA-releasing (likely inhibitory) interneuron (connecting between perception/motor output?) within the fetal brain

all of these express one of [PV parvalbumin (relation to general albumin carrier in the blood?), somatostatin (insulin/glucagon balance regulator), 5HT3a serotonin receptor] and can thus be categorized by those markers

further classification of different neocortical GABA-ergic INs can be obtained via morphology, targeting biases, other biomarkers, and electrophysiological/synaptic properties

tree classification: 1 of 3 markers » morphology » targeting bias » anatomy » biophysical properties of firing (firing patterns, spiking, refractory) » synaptic properties » addt'l markers

Figure 2: Laminar Distribution of IN Groups

L1, 2, 3, 4, 5a, 5b, and 6 are all layers in the neocortex

distribution of the three major GABA-ergic IN types as distinguished by PV, Sst, and 5HT3a marker expression (5HT3a separated into VIP and non-VIP expressing cells) varies with neocortical layer

significance: varying compositional make-up of L layers signifies likely variance in roles they play in fetal brain function + later development of cognition

Box 1: PV FS Basket Cells Are Specialized for Speed, Efficiency, and Temporal Precision

physical properties of PV FS cells suit them for rapid, precise firing

machinery for fast EPSPs: AMPA receptors w/ GluR1 subunits only, low membrane resistance, very active dendrites

machinery for brief and highly repetitive APs: sub-threshold Kv1 channels that allow for quick repolarization, "Na+ channels with slower inactivation and faster recovery"

these components are all concentrated at the appropriate areas of the neuron to reach high efficacy

Figure 3: Cell-Specific Connectivity and Subcellular Domains Targeted by IN Subtypes

Sst Martinotti cells synapse near the soma of the L2/3 cell

nonVIP 5HT3aR NGFCs synapse near the branches of the L2/3 dendrite/axon

in L5/6 pyramidal cells, NGFC inputs are broken up by different layers (?)

Figure 4: Circuit Motifs Involving INs

different functions of interneurons in modulating synaptic signaling are dependent on placement of IN and source of excitatory input

feedforward inhibition: distal excitation » IN and pyramidal cell; IN then also inhibits pyramidal cell to modulate the effects of the distal excitation

feedback inhibition: PC excitation » IN inhibits the original PC source of excitation to gradually modulate its signal » IN also inhibits same-layer proximal PCs to unify regional signaling pattern

disinhibition: IN » IN » PC so that the end result is the reduction of inhibition on the pyramidal cell's excitatory activity

Figure 5: Thalamocortical FFI by PV Neurons Imposes Coincidence Detection

FFI via PV neurons allows for temporal summation window in certain spaces/moments of time

this is achieved by increasing inhibition at all other time periods; now "near-synchronous inputs are required for efficient summation of EPSPs and to drive AP firing on the PC"

[I think that] NGFCs weaken the PV inhibition of PCs, allowing for a wider window of temporal summation and lateral signaling recruitment of other PCs?

FFI prevents saturation via PV cell recruitment; NGFC signaling weakens this mechanism. Thus, depending on how much FFI is needed in certain regions, the NGFC vs. PV cell population concentration ratios will vary

Figure 6: FBI and Differential Effect of PV and Sst IN-Mediated Inhibition

difference between PV and Sst IN inhibition: PVs show decreased response to repeated external inputs due to anatomical and synaptic features from Box 1; Ssts show increased response due to "opposing" or somewhat "antithetical" physical properties to PVs

thus PVs function to synchronize activity laterally across two or more pyramidal cells, i.e., spatial summation; Ssts function to amplify all inputs to a single PC, i.e., temporal summation

PVs have high permeability (low resistance) which means that EPSPs generated diffuse and disperse easily, so highly repetitive inputs do not build up and the cell is unable to undergo temporal summation; on the other hand, insensitivity to temporal summation means that the cell can 'detect' synchronized spatial summation » it's not just a LACK of temporal summation, it's that a "large amount of input at one time" must be present, which means it's uniquely suited to spatial summation

Ssts have low permeability (high resistance) which means they can essentially STORE charge and thus undergo temporal summation with highly repetitive inputs from external sources
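A rough way to see the contrast in the two notes above is a leaky membrane that receives a train of identical EPSPs. This is a minimal sketch, not anything taken from Tremblay et al.: it assumes the "low resistance" vs. "high resistance" difference can be summarised as a short vs. long membrane time constant (tau = R × C with equal capacitance), and that every EPSP has the same size. The function name and all numbers are hypothetical.

```python
import math

def peak_depolarisation(tau_ms, n_inputs=10, interval_ms=5.0, epsp_mv=1.0, dt_ms=0.1):
    """Peak voltage reached by a leaky membrane driven by a regular EPSP train.

    Each input instantly adds epsp_mv; between inputs the voltage decays
    exponentially towards rest with time constant tau_ms.
    """
    decay_per_step = math.exp(-dt_ms / tau_ms)
    steps_per_interval = int(round(interval_ms / dt_ms))
    voltage = 0.0
    peak = 0.0
    for _ in range(n_inputs):
        voltage += epsp_mv                 # EPSP arrives
        peak = max(peak, voltage)
        for _ in range(steps_per_interval):
            voltage *= decay_per_step      # charge leaks away
    return peak

leaky_cell = peak_depolarisation(tau_ms=1.0)     # PV-like: low resistance, short tau
storing_cell = peak_depolarisation(tau_ms=20.0)  # Sst-like: high resistance, long tau
print(leaky_cell, storing_cell)
```

With the short time constant each EPSP has decayed before the next one arrives, so the peak stays close to a single EPSP; with the long time constant the inputs pile up, which is the temporal summation described for Ssts.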

Figure 7: Vip IN-Mediated Disinhibition

Vip INs selectively inhibit Sst INs that are targeting a single pyramidal cell, so that when Vips fire, PCs are disinhibited

area-wide excitability is increased broadly as Vips are recruited; or, if a single Vip is activated, so too are just a few selected PCs disinhibited

12 notes

Text

Monday is such a long day and on top of that I got drenched in rain.😭😭😭

.

Talking about long, Axons.

.

FEW KEY TERMS/POINTS TO REMEMBER💭:

~Nernst equation.

~GHK equation.

~Na+ moves in - depolarization

~K+ moves out - repolarization

~Graded potentials

~Action potentials

~Spatial summation

~Temporal summation

~IPSP

~EPSP

~Ca2+ and synaptic transmission

~Cell Soma

~Axon hillock

~Axon

~PNS has 2 types of glial cells while CNS has 4 (try to remember the names of these and their functions)

~CNS has 2 main components while PNS has 2 main divisions which then branch out further (sympathetic neurons in spinal cord (CNS) interact with SNS of ANS of PNS)
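As a quick revision aid for the Nernst equation in the list above, here is a small sketch that computes equilibrium potentials for K+ and Na+. The ion concentrations are typical textbook-style values chosen for illustration (they are assumptions, not numbers from these notes), and the function name is made up.

```python
import math

R = 8.314462618   # gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol

def nernst_mv(conc_out_mm, conc_in_mm, valence=1, temp_c=37.0):
    """Equilibrium potential in millivolts: E = (RT / zF) * ln([out] / [in])."""
    temp_k = temp_c + 273.15
    return 1000.0 * (R * temp_k) / (valence * F) * math.log(conc_out_mm / conc_in_mm)

# Assumed illustrative concentrations in mM (outside, inside).
e_k = nernst_mv(5.0, 140.0)    # K+: negative, so K+ leaving the cell repolarises it
e_na = nernst_mv(145.0, 15.0)  # Na+: positive, so Na+ entering the cell depolarises it
print(round(e_k, 1), round(e_na, 1))
```

The signs line up with the Na+ in / K+ out points above: the membrane is pulled towards a positive value when Na+ channels open and back towards a negative one when K+ channels dominate.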

…

P.s. do I use too many abbreviations?🤔

.

STUDY TIP🤓:

~Make mindmaps which briefly summarise each topic chapter down to its key points. You need to really understand the subject to be able to do this, and they make great tools for quick revision.😊

#student#studentlife#study#studyblr#studyspo#unilife#university#science#stationery#study motivation#studygram#uni#lifesciences#my notes#notes#study notes#biology#motivation#notebook#stabilo#stabilopastel#studying#new studyblr#heysprouht#studylife#organised#heystudious#whatsonmydesk#sprouht_study#heyaly

4 notes

Photo

1.1 Adaptive Procedural Template = checklist of useful tools, procedures…

Use adaptive, procedural templates to help individuals join trade federations. Affiliated organizations are geo-spatially and temporally located in distributed, dispersed locations across time and space. Member organizations may join or leave in an ad hoc, agile manner to take advantage of or react to events and situations while retaining autonomy: the ability to act on one’s own behalf and control one’s own activities. The process involves agile, ad hoc joins, merges, and drops to or from the federation in lieu of formal merger and acquisition.

1.2 Scope

Trade federations form among local communities or among sovereign (First) nations. The off-site connector workflow object convention connects, mitigates, and adjusts by summation and statistical mean by aggregation among federated and non-federated groups, acting as format gateways among participating and non-participating groups.

1 note

Text

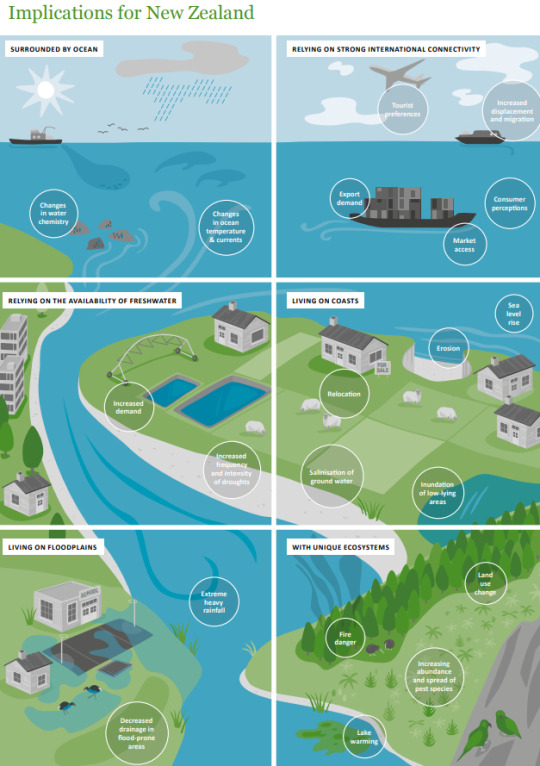

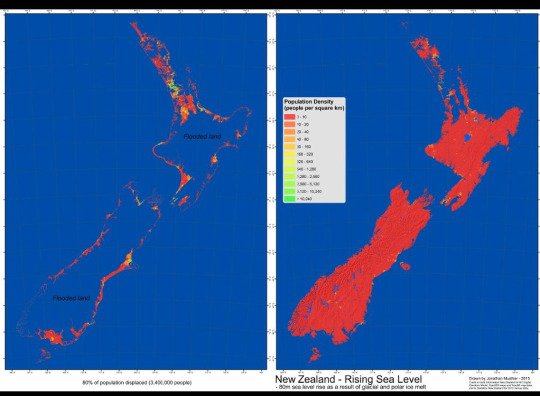

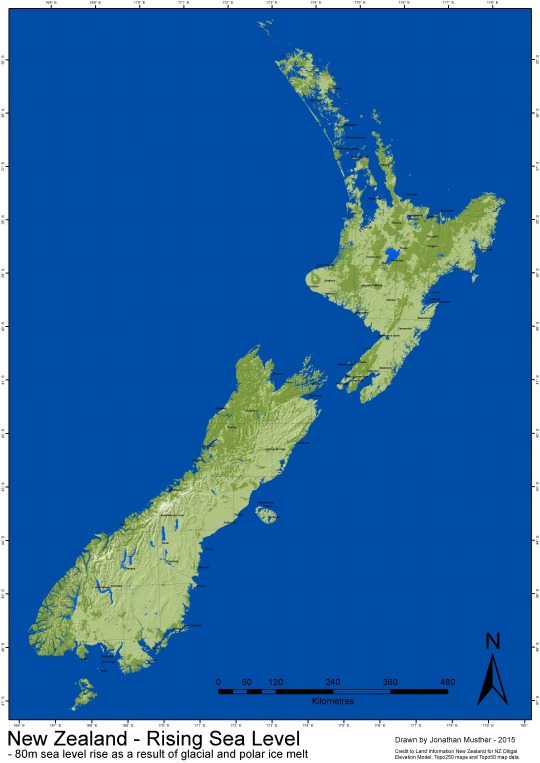

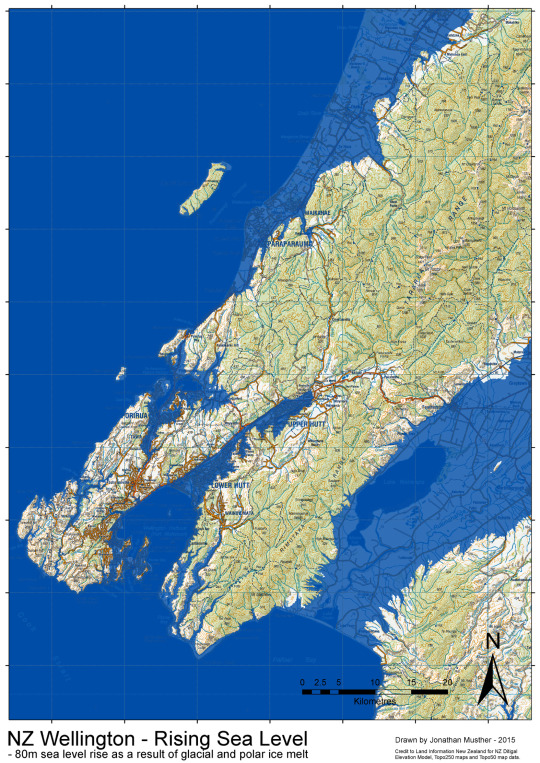

In urban settings along coastlines around the world, rising seas threaten infrastructure necessary for local jobs and regional industries. Roads, bridges, subways, water supplies, oil and gas wells, power plants, sewage treatment plants, landfills: the list is practically endless. All of us as New Zealanders are at risk from sea level rise. This is Wellington with an 80 meter water rise.

** Crazy opening pic of Wellington

Estimates of sea-level rise are extremely complex, but ongoing research and increased understanding is leading many researchers to believe that widely publicized estimates (such as those from IPCC reports*) are far too conservative.

** IPCC flooding pic IPCC - [Intergovernmental Panel on Climate Change]

Historical evidence suggests that ice sheets may respond to climate changes very rapidly, and recent data show increased rates of melting and growing instability.

** CLIMATE CHANGE

Predicting climate change is phenomenally difficult; we are now well outside the sphere of collective human experience and expertise. Sea-level rise is happening now, and its rate will likely take us by surprise.

** ST MARKS FLOODED

As the tide draws in, I start to wonder how long it will take until we have no choice but to build over or around the ocean to adapt to the steady rise. In some of my past works I have connected with the ‘More than human city’ arena of practice.

** A Bengalese kiosk in Venice, Italy, with the waters sitting at 156 centimeters. Photo: Nicola Zolin

I plan to delve into potential sites within the Wellington region that are expected to be overwhelmed by rising sea levels over the next 100 years. Rather than identifying the limitations of this, I wonder how we will adapt to survive the inevitable.

** MYANMAR

Many cities such as Venice, Italy, and Amsterdam, Holland, which are heavily reliant on the water systems and canals within their city, are already redefining new buildings to accommodate the inevitability of water levels rising due to extreme circumstances, whereas many Asiatic countries have been preparing for extremes for hundreds of years.

One of the findings comes from research published in April last year in the leading scientific journal ‘Natural Hazards and Earth System Sciences’. In the article “Spatial and temporal analysis of extreme storm-tide and skew-surge events around the coastline of New Zealand”, NIWA researchers describe how small increases in sea level are likely to drive huge increases in the frequency of coastal flooding in the next 20–30 years.

**

Kraanspoor by OTH Architecten, Amsterdam, Netherlands

Dr Stephens says: “Small sea-level rise increments of 20cm predicted to happen around the New Zealand coast in the next 20 years will drive big increases in the number of times coastal areas are likely to flood.” One hundred years from now, a one-meter rise in sea level is expected if we continue with the same carbon emissions. If we increase these emissions, this process is accelerated.

** Climate change implications for New Zealand - April 2016 Te Aparangi

Dr Stephens also says it points to the fact that sea-level rise will not necessarily manifest itself as large catastrophic events but will bring more “nuisance” flooding more often.

If this continues at this rate, however, flash flooding will destroy the land, which will eventually be reclaimed by the ocean.

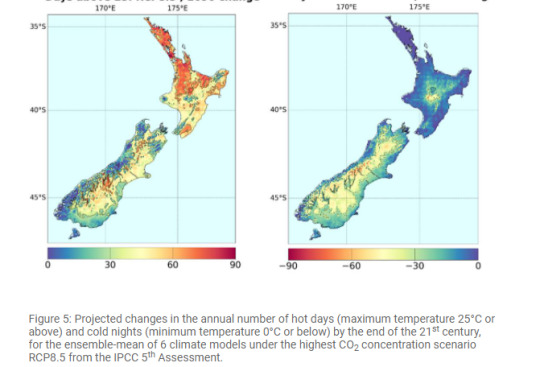

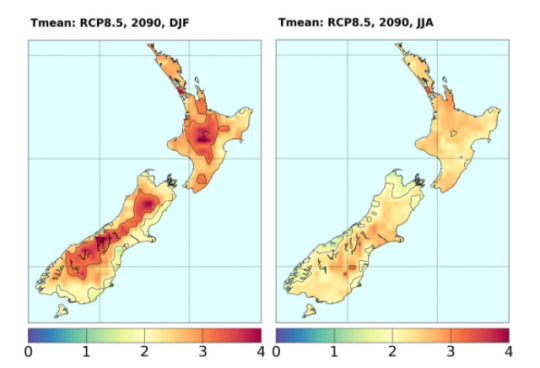

Subsequently, by 2090 this will likely result in the following;

Higher temperatures may force people to stay indoors, with greater demands for air conditioning, ergo using more electricity during summer.

** TEMPERATURE CARBON EMISSIONS

Denser populations within confined spaces as water reclaims the land, putting further pressure on the room available to civilians while the population keeps growing and space decreases.

** Population map

Increase in temperatures, rising sea levels, more extreme weather events and an increase in winter rainfall.

**

More frequent intense winter rainfalls are expected to increase the likelihood of nuisance and flash flooding in rivers and estuaries.

Sea levels rising to reclaim the land will put pressure on the space we are able to occupy, cultivate, and power. Resource depletion would eventually stand as a barrier to travelling and living within coastal cities such as Wellington, unless we adapt and overcome the inevitability of environmental consequence.

**

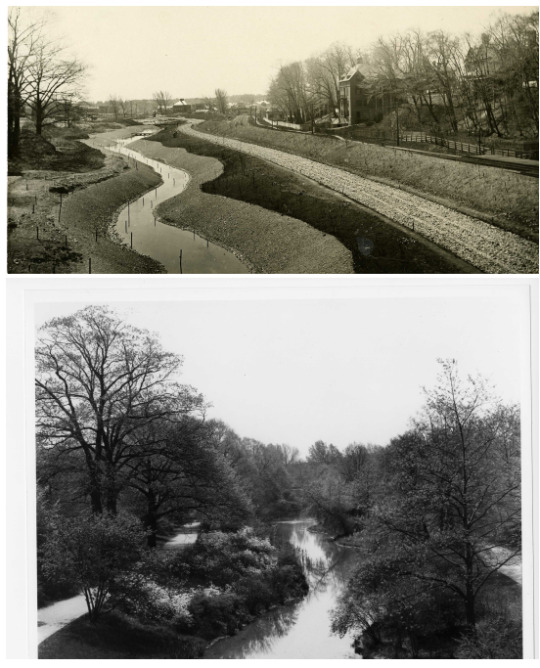

Upon reading Joel Sanders’ ‘Human Nature Landscape/Architecture Divide’, I found that there are references to the disregard for forward progression in adapting to the way that the ‘wilderness/nature’ interacts with space, which is summarized later on in the text as a blatant disregard for the environmental changes occurring around us due to architectural hubris.

The global environmental crisis underscores the imperative for design professionals— architects and landscape architects—to join forces to create integrated designs that address ecological issues.

However, architecture and landscape architecture have been professionally segregated since at least the late nineteenth century.

The historian William Cronon writes, “If we allow ourselves to believe that nature, to be true, must also be wild, then our very presence in nature represents its fall. The place where we are is the place where nature is not” (Cronon 1996: 80–1)

**

By positing that the human is entirely outside the natural, wilderness presents a fundamental paradox. As the consequences of our environmental disregard catch up to us, it is important for us to think about how nature will influence our architecture in the near future.

Frederick Law Olmsted’s work betrays the paradoxes at the heart of wilderness thinking. Olmsted fully embraced making nature accessible to urban citizens. Only thirty years later, however, a new generation of landscape architects had lost its way, its efforts stymied by the supposed incompatibility of nature and design.

This intrigues me, however, as these works were designed for harmonious purposes rather than necessity. It poses the hypothetical that had we as westerners harnessed the relationship between nature and interior earlier, rather than focusing on the segregation of the two, perhaps we would be in a different circumstance now. Sanders states:

**

“sustainable design unwittingly reinforces one root of the problem: the dualistic paradigm of the building as a discrete object spatially, socially, and ecologically divorced from its site. As a consequence, this American ideal—itself derived from wilderness thinking—inhibits designers and manufacturers from treating buildings and landscapes holistically as reciprocal systems that together impact the environment.”

**

McHarg, writing in 1969, said Olmsted’s worst predictions had been realized: rapacious capitalism aided by remarkable technological advances had tipped the precarious balance between nature and civilization, resulting in environmental casualties in America’s polluted, slum-ridden cities. McHarg compared city dwellers to “patients in mental hospitals” consigned to live in “God’s Junkyard” (McHarg 1969: 20, 23)

In summation, Sanders remarks: “Relinquishing wilderness values will allow designers to adopt the more complicated viewpoint advanced by progressive scholars and scientists: a recognition that nature and civilization, although not the same, have always been intertwined and are becoming more so. Climate change reveals that there is not a square inch of the planet that does not in some way bear the imprint of humans.”

Through this project I hope to visualize the preparations for environmental extremes in regards to long term environmental changes.

https://niwa.co.nz/news/small-sea-level-rises-to-drive-more-intense-flooding-say-scientists

Stilt Houses in Inle Lake, Myanmar / Photo: https://thevaiven.wordpress.com/2011/05/

“Curtain Wars: Architecture, Decorating and the Twentieth-Century Interior” (Sanders 2002),

Carolyn Merchant, The Death of Nature: Women, Ecology and the Scientific Revolution (1980),

Kraanspoor by OTH Architecten, Amsterdam, Netherlands

A Bengalese kiosk in Venice, Italy, with the waters sitting at 156 centimeters. Photo: Nicola Zolin

Climate change implications for New Zealand - April 2016 Te Apārangi

- New Zealand Sea-Level Rise Maps by Jonathan Musther are licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.

Pedersen Glacier Retreat Lake Expansion, Alaska Posted by Mauri Pelto

Marine Biologist Dr. Jonathan Musther Sea-level rise projections 2019

- Stephens, D. S. (2020, April 17). Small sea-level rises to drive more intense flooding, say scientists. Retrieved March 15, 2021, from https://niwa.co.nz/news/small-sea-level-rises-to-drive-more-intense-flooding-say-scientists

https://teara.govt.nz/en/diagram/7556/new-zealand-temperature-projections-to-the-2090

- TAYLOR, A. (2019, November 13). Venice Underwater: The Highest Tide in 50 Years. Retrieved from https://www.theatlantic.com/photo/2019/11/photos-of-venice-underwater-highest-tide-in-50-years/601930/

0 notes

Link

Experiments with an artificial model that mimics real neurons. "The neural simulation now includes these behaviors: A precise neuron action threshold, a precise action pulse waveform in axons, a variable action recovery (refractory) period, inhibitory neurons and synapses, rapid rise / slow drop of potential in synapses, temporal summation of pulses in synapses, spatial summation of pulses in dendrites, and long-term potentiation (LTP) and depression (LTD) in synapses."

His simulation uses neurons connected in an extremely regular, symmetric fashion, and he used a 1-pixel-per-neuron representation scheme to watch the simulations, with color codes for the various neuron states.

What has he learned?

Neurons have a precise action threshold. "This behavior is well understood and indicates that the brain is not a purely analog system. This aspect is more like a digital behavior. A neuron can only fire or not fire. There is no partial credit for an input potential just below the action threshold. It is ironic that many public ANNs (simulations on digital computers) attempt to be fully analog." That's because backpropagation, an algorithm based on the chain rule in calculus, needs for everything to be fully differentiable.
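The point about backpropagation can be illustrated with a small comparison between an all-or-nothing threshold and a smooth stand-in for it. This is only a sketch: the function names, the threshold of 1.0 and the sigmoid steepness are assumptions made for illustration and are not taken from the simulation described in the article.

```python
import math

def step(potential, threshold=1.0):
    """All-or-nothing neuron: fires (1.0) at or above threshold, otherwise 0.0."""
    return 1.0 if potential >= threshold else 0.0

def sigmoid(potential, threshold=1.0, steepness=4.0):
    """Smooth, differentiable approximation of the threshold."""
    return 1.0 / (1.0 + math.exp(-steepness * (potential - threshold)))

def slope(activation, potential, eps=1e-4):
    """Central-difference estimate of d(activation)/d(potential)."""
    return (activation(potential + eps) - activation(potential - eps)) / (2 * eps)

# Just below threshold there is "no partial credit" from the step function...
print(step(0.99), step(1.0))
# ...and away from the threshold its slope is zero, so a gradient-based
# learning rule receives no signal, while the sigmoid still has a usable slope.
print(slope(step, 0.5), slope(sigmoid, 0.5))
```

The zero slope of the step function almost everywhere is why gradient-based training usually swaps in a smooth activation, even though the hard threshold is closer to the firing behaviour quoted above.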

"The action pulse that travels through the axon also looks like a digitally generated square wave. It switches on very precisely, has a precise duration (approx. 1 msec), and then switches off very precisely -- with one exception: when the action potential switches off, it goes negative for short period. It is interesting that this is also a digital behavior."

"Once a neuron fires, it normally cannot fire again for approx. 10 msec."

"Neuroscientists have observed some neurons send excitatory (positive) pulses to other neurons and cause more activations, and some send inhibitory (negative) pulses and suppress activations."

"The complex electro-chemical processes in the synapses change the axon pulse dramatically. It is typically reduced in strength (by 95%!), slightly delayed, and no longer looks like a digital square wave."

"Time-based behavior occurs when multiple action pulses pass through a single synapse in rapid succession, close enough to overlap."

"This time-based behavior was discovered in 1966 and is still considered to be one of the main mechanisms supporting long-term memory and learning in the brain."

0 notes

Link

The exhaustive breadth of Amit Dutta's films refuses easy summation. My first encounter was with a dreamy rendition of rural life in India—the breathtaking Kramasha (2007)—and I have followed his films ever since. They traverse genres, moving effortlessly from crafted scenario to spontaneous encounter, from mindful self-reflexivity to ghostly magic. Art—literature, music, and particularly painting—permeates Dutta's work. It appears as the subject of his films, yet it is also absorbed into their very material as cinematography and soundscape—as cinema. Sudden sweeping camera pans veer left only to turn right, and surprising sounds of alarm bells and ticking clocks startle us. Like paintings, these films have surface tension. Inches behind this surface lies a cavernous, echoing space where unhurried tracking shots and distant ambiences suggest a less immediate kind of time, one that is long past. Just as such temporal and spatial registers commingle and blur in Dutta's films, so do art and nature, indeed, the man-made and the natural, until their distinctions fade and make way for the rich resonances that emerge from the gaps in between. I interviewed Amit over the course of a month on the occasion of his upcoming retrospective at the Berkeley Art Museum and Pacific Film Archive that will include a sneak preview of his latest work-in-progress, The Unknown Craftsman.

31 notes

Text

9/18/19 standing ground

This week I focus on the historical context of physical modeling.

What has been done?

What are the similarities and differences among the three commonly used approaches?

To what extent are people modeling shapes and materials?

How are people simulating resonating bodies?

Am I sure about my own PDE (partial differential equations) formulations?

How do I do sanity checks for the sounds I fabricated?

My references are “Digital Sound Synthesis by Physical Modeling Using the Functional Transformation Method” by Lutz Trautmann and Rudolf Rabenstein, and Dr. Julius O. Smith’s online book “Physical Audio Signal Processing”. The former outlines theoretical proofs and applications of FTM, and the latter focuses on another method developed at CCRMA called DWG (digital waveguide networks).

How they used to do it

Classically, physical modeling is done in roughly four ways: FDM (finite difference method), DWG (digital waveguide method), MS (modal synthesis), and the relatively newer FTM (functional transformation method), which is my approach (although not exactly, as I discovered and will explain later). FDM and DWG are time-based synthesis methods, while MS and FTM are frequency-based approaches. Both FDM and FTM rely on a formal physical formulation, while MS and DWG start from analyzing existing recorded sounds. I will briefly describe all the approaches and then point out their limitations and possible combinations.

FDM

FDM starts from one or a set of partial differential equations, with initial and boundary conditions, that describe the given structure’s vibrational mechanism. It then discretizes the temporal and spatial derivatives by Taylor expansion. This approach is straightforward, general, and works with most complex shapes, but it tends to neglect higher-order terms. Its accuracy can be improved by using a finer discretization, although this makes the computation heavier. It is often an offline method, not suitable for real-time synthesis. The solution contains the time evolution of all the points on the object in question.
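As a minimal sketch of the finite-difference idea, the snippet below steps the ideal 1-D wave equation u_tt = c² u_xx for a plucked string with fixed ends, replacing both second derivatives with central differences. It is an illustration only, not the formulation from the book: the ideal-string PDE, the triangular pluck, the grid size and the Courant number of 0.9 are all assumptions.

```python
def simulate_string(n_points=50, n_steps=200, courant=0.9):
    """Explicit finite-difference scheme for u_tt = c^2 u_xx with fixed ends.

    courant = c * dt / dx must be <= 1 for the explicit scheme to be stable.
    Returns a list of string shapes, one per time step.
    """
    r2 = courant ** 2
    mid = n_points // 2
    # Initial shape: triangular pluck at the middle, zero at both ends.
    u = [i / mid if i <= mid else (n_points - 1 - i) / (n_points - 1 - mid)
         for i in range(n_points)]
    u_prev = u[:]  # zero initial velocity (first-order approximation)
    history = [u[:]]
    for _ in range(n_steps):
        u_next = [0.0] * n_points  # end points stay clamped at zero
        for i in range(1, n_points - 1):
            u_next[i] = (2 * u[i] - u_prev[i]
                         + r2 * (u[i + 1] - 2 * u[i] + u[i - 1]))
        u_prev, u = u, u_next
        history.append(u[:])
    return history

history = simulate_string()
pickup = [shape[10] for shape in history]  # displacement over time at one point
```

Reading one grid point over time (the hypothetical `pickup` above) gives a waveform, which shows why the method yields the time evolution of every point and why finer grids quickly become expensive.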

FTM

FTM shares the same formulation as FDM but solves the equations differently. It eliminates the temporal and spatial derivatives by carrying out two transformations consecutively: the Laplace transformation and the SLT (Sturm-Liouville transformation). Together they express the entire equation in another domain parallel to time-space, going from (t,x) to (s,mu). In the alternate domain, derivatives with respect to t and x become simple-to-solve algebraic expressions in terms of s and mu. Afterwards, the solution may be written in the form of a transfer function multiplying the activation function (derived from whatever force is exerted on the object). This transfer function may be inverted back to the time-space domain to obtain a solution. The transfer function has its poles and zeros, from which we derive analytical solutions for the frequency of each partial and its decay rate. This approach is frequency-based in that the final solution is a summation of sinusoidal waves of different modes, each with its own exponential decay term. It accurately simulates the partials of the sounding object, but it reaches its limits when it comes to larger modes. It is more computationally friendly than FDM, but it is limited to simpler geometries, most of the time rectangular and circular shapes.
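The final synthesis step, a summation of sinusoids that each carry their own exponential decay, can be sketched as follows. The mode table here is made up for illustration; in FTM the frequencies and decay rates would come from the poles of the transfer function, which this sketch does not compute.

```python
import math

def synthesize_modes(modes, duration_s=1.0, sample_rate=8000):
    """Sum of exponentially decaying sinusoids.

    modes: list of (frequency_hz, decay_rate_per_s, amplitude) tuples.
    """
    n_samples = int(duration_s * sample_rate)
    samples = []
    for k in range(n_samples):
        t = k / sample_rate
        samples.append(sum(amp * math.exp(-decay * t) * math.sin(2 * math.pi * freq * t)
                           for freq, decay, amp in modes))
    return samples

# Hypothetical partials: higher modes are quieter and decay faster.
example_modes = [(220.0, 3.0, 1.0), (440.0, 5.0, 0.5), (660.0, 8.0, 0.25)]
signal = synthesize_modes(example_modes)
```

Every output sample needs one exponential and one sine per mode, which is the per-sample cost that grows with the number of partials being simulated.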

DWG

DWG analyzes a recorded sound and simulates the exact sound with bidirectional delay lines (for simulating traveling waves in both directions), digital filters, and some nonlinear elements. The method is based on the Karplus-Strong algorithm, and the filter coefficients and the impedance of the traveling waves are interpolated from the sound. This method is the most efficient but cannot describe a parametrized model. For any change in physical parameters, the sound needs to be recorded again and re-interpolated.
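Since the text names Karplus-Strong as the basis, here is a minimal sketch of that algorithm alone: a noise-filled delay line whose output is fed back through a two-point averaging filter. It is not a full digital waveguide (no bidirectional lines, fitted filters or nonlinear elements), and the damping value, sample rate and seed are assumptions.

```python
import random
from collections import deque

def karplus_strong(frequency_hz, duration_s=1.0, sample_rate=8000,
                   damping=0.996, seed=0):
    """Basic Karplus-Strong plucked-string synthesis.

    The delay-line length sets the pitch; the averaging filter in the
    feedback loop removes high frequencies a little on every pass.
    """
    rng = random.Random(seed)
    period = int(sample_rate / frequency_hz)  # delay-line length in samples
    delay_line = deque(rng.uniform(-1.0, 1.0) for _ in range(period))
    samples = []
    for _ in range(int(duration_s * sample_rate)):
        first = delay_line.popleft()
        # Low-pass feedback: average the two oldest samples, slightly damped.
        delay_line.append(damping * 0.5 * (first + delay_line[0]))
        samples.append(first)
    return samples

pluck = karplus_strong(200.0)
```

Each output sample costs one addition and two multiplications regardless of how many partials the tone contains, which is where the efficiency of delay-line methods comes from.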

MS

MS considers objects as coupled systems of substructures and characterizes each substructure with its own mode and damping coefficient data. It then synthesizes sounds by summing all weighted modes. The modal data is usually obtained by measuring the object itself rather than by deriving it from an analytical solution as in FTM, and thus MS cannot react to parameter changes in the physical model. Furthermore, since no analytical solution is derived, MS requires measurements at discretized spatial points, and accuracy is compromised as a result.

So what do we wanna use

Based on the summary from Trautmann and Rabenstein’s book I extracted:

“

frequency-based methods are generally better than time-based methods due to the frequency-based mechanisms in the human auditory system

for more complicated systems, simulation of spatial parameter variations is much easier in FDM than in FTM, since eigenvalues can only be calculated numerically in FTM.

FDM is the most general and the most complex synthesizing method. It can handle arbitrary shapes.

FTM is limited to simple spatial shapes like rectangular or circular membranes

DWG is even more limited than FTM or MS. It can only simulate systems accurately having low dispersion due to the use of low-order dispersions.

human ear seems to be more sensitive to the number of simulated partials than to inaccuracies in the partial frequencies and decay times

“

How my approach is slightly different from FTM

My approach is technically an FTM approach, but with slight modifications. FTM first formulates the physical model, performs the Laplace and SLT transforms, gets the transfer function, and then derives the impulse response by inverting the transfer function back to the time-space domain. My approach follows the same formulation but only performs the Laplace transform. At this point I assume the solution to be of sinusoidal form and calculate explicitly what the spatial derivatives are. Due to the properties of the sinusoidal form, even high-order derivatives do not escape their original forms. After that, I derive the transfer function and perform only the inverse Laplace transform to obtain the impulse response, instead of performing both the inverse Laplace transform and the inverse SLT.

Whether this approach yields utterly different results, why people would go through the trouble of performing the SLT spatial transformation, and whether it’s safe to assume the solution is of sinusoidal form are all still under investigation.
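To make the step about spatial derivatives concrete, here is a short worked example. It assumes a spatial dependence of the form sin(kx), where the wavenumber k is a symbol introduced here for illustration and is not taken from the original formulation:

$$
\frac{\partial^2}{\partial x^2}\sin(kx) = -k^2 \sin(kx), \qquad \frac{\partial^4}{\partial x^4}\sin(kx) = k^4 \sin(kx)
$$

Every even-order spatial derivative returns the same sine multiplied by a constant, so under this assumption each such derivative in the PDE reduces to an algebraic factor.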

What can be done

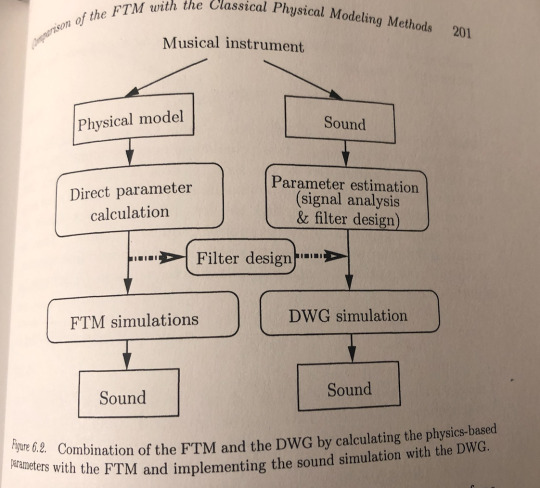

FTM+DWG

From FTM we can derive accurate modes and their decay rate coefficients; however, it costs computational power to synthesize the actual sound (summation of a multitude of modes, calculation of exponentials, sine operations, etc.).

On the other hand, in DWG we design filters based on pre-recorded sounds (so it is not flexible enough to morph between different physical parameters), but it is very computationally efficient at synthesizing the sounds.

The book mentions a promising solution of combining the two methods - use accurate modes/decay rate information obtained from FTM to design filters and leave the sound synthesis business to DWG. Next week I will explore further on the combination of these two approaches.

0 notes

Text

Describe the major events involved in image formation on the retina, and list in the correct order the components of the eye that light passes through on its way to the retina.

1. Name the type of joint, and list the movements permitted at the shoulder joint. Under each movement’s name, list the names of the muscles responsible for each of these movements along with descriptions of their bone insertion. 2. What are EPSPs and IPSPs, and how are they produced? Explain how these electrical currents are used in spatial and temporal summation to initiate or inhibit the…

0 notes

Text

Name the type of joint, and list the movements permitted at the shoulder joint.

1. Name the type of joint, and list the movements permitted at the shoulder joint. Under each movement’s name, list the names of the muscles responsible for each of these movements along with descriptions of their bone insertion. 2. What are EPSPs and IPSPs, and how are they produced? Explain how these electrical currents are used in spatial and temporal summation to initiate or inhibit the…

0 notes

Text

Describe how the central nervous system is protected from injury. List the components of a spinal reflex arc. Describe the function of each component.

1. Name the type of joint, and list the movements permitted at the shoulder joint. Under each movement’s name, list the names of the muscles responsible for each of these movements along with descriptions of their bone insertion. 2. What are EPSPs and IPSPs, and how are they produced? Explain how these electrical currents are used in spatial and temporal summation to initiate or inhibit the…

0 notes

Text

Measuring Water Stress | Fluence

According to the U.N., more than 2 billion people worldwide live in countries with “excess water stress.”

However it’s measured, water scarcity affects billions around the world

“Water stress” is a term we hear more and more, but its definition is not yet the same across organizations and national borders. California Water Sustainability, a project of the University of California, Davis, explains that one widely used tool, the Water Stress Index, is defined by the United Nations and many others as the difference between total water use and water availability. As supply and demand move closer together, stress becomes more likely, both in nature and in human systems.

According to the 2017 U.N. Report on Progress Towards the Sustainable Development Goals:

More than 2 billion people globally are living in countries with excess water stress, defined as the ratio of total freshwater withdrawn to total renewable freshwater resources above a threshold of 25 per cent.

In Northern Africa and Western Asia, water stress levels have spiked to more than 60%, presaging future water shortages.
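The ratio described in the report can be sketched in a few lines. The function names and the sample figures are hypothetical; only the 25 per cent threshold comes from the text above.

```python
def water_stress_ratio(withdrawn, renewable):
    """Ratio of total freshwater withdrawn to total renewable freshwater resources."""
    if renewable <= 0:
        raise ValueError("renewable resources must be positive")
    return withdrawn / renewable

def is_water_stressed(withdrawn, renewable, threshold=0.25):
    """True when the ratio is above the 25 per cent threshold quoted above."""
    return water_stress_ratio(withdrawn, renewable) > threshold

# Hypothetical volumes in the same unit (for example, cubic kilometres per year).
print(is_water_stressed(30, 100))
print(is_water_stressed(20, 100))
```

Both volumes must be in the same unit, since only their ratio matters.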

One of the most widely used and time-honored assessment tools is the 1989 Falkenmark Indicator, which establishes a four-point scale:

- No stress
- Stress
- Scarcity
- Absolute scarcity

Although Falkenmark is an easy-to-use, broad tool for assessing relative water stress at the national level, a number of objections have been raised over the nearly 30 years of its existence. For instance, contamination often renders a percentage of water unusable, which is not considered in the assessment method. Neither are seasonal variation or accessibility.
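The four-point scale can be sketched as a simple classifier. The article does not state the cut-offs, so this sketch assumes the commonly cited Falkenmark thresholds of 1,700, 1,000 and 500 cubic metres of renewable water per person per year; the function name and the handling of exact boundary values are also assumptions.

```python
def falkenmark_category(m3_per_capita_per_year):
    """Classify annual renewable water per person on the four-point scale.

    Thresholds (1700 / 1000 / 500 m^3) are the commonly cited values,
    assumed here because the article does not give them.
    """
    if m3_per_capita_per_year >= 1700:
        return "No stress"
    if m3_per_capita_per_year >= 1000:
        return "Stress"
    if m3_per_capita_per_year >= 500:
        return "Scarcity"
    return "Absolute scarcity"

# Hypothetical national figures, for illustration only.
for value in (2000, 1200, 700, 300):
    print(value, falkenmark_category(value))
```

A single per-capita number is all the scale looks at, so the classifier has no way to account for contamination, seasonal variation or accessibility.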

Amber Brown and Marty D. Matlock of the University of Arkansas Sustainability Consortium observe:

Simple thresholds omit crucial variations in demand among countries due to culture, lifestyle, climate, etc. […] Finally, this index appears to under-value the impact of smaller populations […].

Evolution of Water Stress Assessment

Other methods for assessing water stress that have followed Falkenmark take additional factors into account. For instance, the WaterStrategyMan Project at the University of Athens (Greece) Chemical Engineering Department lists a history of a dozen post-Falkenmark water resource assessment methods. Three of them use the term “water stress.”

One method uses “dry season flow by river basin,” taking temporal variability into account. Another, the Index of Water Scarcity, takes desalinated water into account, which Falkenmark and other schemas do not. But it fails to take into account temporal variations, spatial variations, and water quality.

The Water Poverty Index, developed by the Centre for Ecology and Hydrology in Wallingford, United Kingdom, has elicited much discussion. It attempts to show the links between water scarcity issues and socio-economic factors, ranking countries according to the provision of water. It considers five factors: resources, access, use, capacity, and environment (water stress is a subset of the environment component).

Water Stress and Civil Unrest

Although academic discussions of water stress are important for developing predictive and allocative standards for organizations and governments, they can also predict trouble. Somini Sengupta of The New York Times reported in a recent article that:

A panel of retired United States military officers warned in December that water stress […] would emerge as ‘a growing issue in the world’s hot spots and conflict regions.’

The article describes several instances of civil unrest sparked by water stress, for example in India, where terrorist insurgencies often arise in water-stressed regions.

Water stress often incites mass flight from rural areas, which are no longer able to support agriculture, to urban areas, where migrants find a new set of problems. Iran, the second most populous country in the Middle East, with more than 80 million people, has faced just such a migration crisis along with a 14-year drought. Sengupta wrote:

A former Iranian agriculture minister, Issa Kalantari, once famously said that water scarcity, if left unchecked, would make Iran so harsh that 50 million Iranians would leave the country altogether.

Decentralized Treatment Strategy

With such challenges ahead, however, new technology and new strategies are emerging. Perhaps the most important new strategy is decentralized treatment, which provides flexibility by bringing small, scalable wastewater reuse and desalination solutions to wherever they are needed.

Decentralized treatment has the immediate advantage of eliminating the need for expensive piping infrastructure. It allows water and wastewater treatment to be deployed in remote areas. And in densely populated areas, decentralized treatment can save money with its adaptability to changing needs.

From North America to the Middle East to China, Fluence has excelled at delivering cost-effective and reliable decentralized solutions. In fact, Frost & Sullivan recently honored Fluence with its 2018 Global Decentralized Water & Wastewater Treatment Company of the Year Award, citing the company’s “Visionary Innovation & Performance and Customer Impact.”

For more information about how decentralized treatment can help address both immediate and long-term water scarcity challenges, please contact Fluence.

0 notes

Video

How Mechanism Synaptic Potential and Cellular Integration Human Nervous System

Synaptic potential refers to the difference in voltage between the inside and outside of a postsynaptic neuron. In other words, it is the “incoming” signal of a neuron. Synaptic potential comes in two forms: excitatory and inhibitory. Excitatory postsynaptic potentials (EPSPs) depolarize the membrane and move it closer to the threshold for an action potential. Inhibitory postsynaptic potentials (IPSPs) hyperpolarize the membrane and move it farther away from the threshold.

In order to depolarize a neuron enough to cause an action potential, there must be enough EPSPs to both counterbalance the IPSPs and also depolarize the membrane from its resting membrane potential to its threshold. As an example, consider a neuron with a resting membrane potential of -70 mV (millivolts) and a threshold of -50 mV. It will need to be raised 20 mV in order to pass the threshold and fire an action potential. The neuron will account for all the many incoming excitatory and inhibitory signals via neural integration, and if the result is an increase of 20 mV or more, an action potential will occur.

Synaptic potentials are small, and many are needed to add up to reach the threshold. The two ways that synaptic potentials can add up to potentially form an action potential are spatial summation and temporal summation. Spatial summation refers to several excitatory stimuli from different synapses converging on the same postsynaptic neuron at the same time to reach the threshold needed for an action potential. Temporal summation refers to successive excitatory stimuli on the same location of the postsynaptic neuron. Both types of summation are the result of adding together many excitatory potentials. The difference is whether the multiple stimuli are coming from different locations (spatial) or at different times (temporal).
Summation has been referred to as a “neurotransmitter induced tug-of-war” between excitatory and inhibitory stimuli. Whether the effects are combined in space or in time, they are both additive properties that require many stimuli acting together to reach the threshold. Synaptic potentials, unlike action potentials, degrade quickly as they move away from the synapse. This is the case for both excitatory and inhibitory postsynaptic potentials.
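The -70 mV / -50 mV example can be turned into a toy calculation. This is a deliberately simplified sketch, not a physiological model: potentials are added linearly, and the exponential decay, the 10 ms time constant, the potential sizes and the function names are all assumptions made for illustration.

```python
import math

RESTING_MV = -70.0
THRESHOLD_MV = -50.0

def spatial_summation(potentials_mv):
    """Add simultaneous EPSPs (+) and IPSPs (-) arriving at different synapses."""
    membrane = RESTING_MV + sum(potentials_mv)
    return membrane, membrane >= THRESHOLD_MV

def temporal_summation(epsp_mv, arrival_times_ms, t_ms, tau_ms=10.0):
    """Add successive EPSPs at one synapse, each decaying exponentially over time."""
    total = sum(
        epsp_mv * math.exp(-(t_ms - t0) / tau_ms)
        for t0 in arrival_times_ms
        if t0 <= t_ms
    )
    membrane = RESTING_MV + total
    return membrane, membrane >= THRESHOLD_MV

# Spatial: four EPSPs and one IPSP arriving together (hypothetical sizes).
print(spatial_summation([8, 7, 6, 4, -3]))
# Temporal: two 12 mV EPSPs close together reach threshold, far apart they do not.
print(temporal_summation(12.0, [0.0, 2.0], t_ms=2.0))
print(temporal_summation(12.0, [0.0, 20.0], t_ms=20.0))
```

In the temporal case the second EPSP only helps if it arrives before the first has decayed, which is the sense in which timing matters there and location matters in the spatial case.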

0 notes

Text

Comments on Bataille’s “Disequilibrium”

Summation of the Bataillian Notion of “Disequilibrium”

Bataille’s notion of disequilibrium seems to involve, subordinately, the notion of excess. To begin with, it is in the notion of excess that Bataille equally locates both death and reproduction (Bataille 1986, 42). Bataille later on also notes, as if regarding life itself as a kind of material excess (Bataille 1986, 59):

[...] we refuse to see that only death guarantees the fresh upsurging without which life would be blind. We refuse to see that life is the trap set for the balanced order, that life is nothing but instability and disequilibrium. Life is a swelling tumult continuously at the verge of explosion.

While Bataille’s idea of disequilibrium has up to this point yet to be spelled out--although variously exemplified--these exemplifications provide a clue for what this notion implicates in Bataille’s system of thought. To say that life is always at “the verge of explosion” is to say, not only that life seems to have as its object more energy than its own materiality can contain (e.g., it cannot swallow up the entirety of available energy without its own internal destruction), but also that life is always at the verge of decomposition. To start with the latter in order that the former become more intelligible, the process of life, insofar as it is a physical process like any other, is subject to the principle of maximum entropy, which is to say simply that it submits to a tendency towards maximal energy spread, or towards thermal equilibrium. In this way, the body stands as, not just congealed energy (e.g., matter), but as an internal energy motor, specifically of the organic, metabolic sort. The body is energy both inert and in motion: “the mammalian organism is a gulf that swallows vast quantities of energy” (Bataille 1986, 60).

In this way, life stands at the precipice of death and races against it. However, this superfluous energy which becomes the object of life as such, so as to avoid the installment of death--that is, so as to deal with the reality of mortality (the contradictory presence of death in life)--must strike a bargain between the expenditure of that energy for internal or external purposes. That is, for growth or for waste. As Bataille notes in his other book, The Accursed Share: An Essay on General Economy, Vol. I (Bataille 1988, 21):

The living organism, in a situation determined by the play of energy on the surface of the globe, ordinarily receives more energy than is necessary for maintaining life; the excess energy (wealth) can be used for the growth of a system (e.g., an organism); if the system can no longer grow, or if the excess cannot be completely absorbed in its growth, it must necessarily be lost without profit; it must be spent, willingly or not, gloriously or catastrophically.

This is in its most primitive form observable precisely in, not just the possibility, but the necessity of excretory and defecative mechanisms within the body of the multicellular organism, or, not just the possibility, but the necessity of selective or semi- permeability within the unicellular organism. But death is defecation taken to its logical extreme, while sickness is excretion taken to its logical extreme, and both may paradoxically result from an overconsumption by the organism, or from a wallowing in the organism’s own surrounding and self-produced putrefaction: “the horror we feel at the thought of a corpse is akin to the feeling we have at human excreta” (Bataille 1986, 57).

This, of course, has something to do with the nascent violence of nervous impulse, or more generally of organic motion, for in that case the act of life and of the reproduction of life both disrupt, albeit at a local level, the tendency towards thermal/“energic” equilibrium, and for this reason living is a precarious condition. Being precarious, multicellular life must be willing to consume itself, and mutually so. Yet at the same time it must profess a refusal to do so in some frenzy, so that mutually assured destruction is counteracted. “There is no reason to look at a man’s corpse otherwise than an animal’s,” but it must be, for the human animal, looked upon in such a way so that it may preserve itself well enough (Bataille 1986, 57). In conclusion, a “disequilibrium” for Bataille is, broadly speaking, something which attempts to maximally capture the possibilities of a system such that it usurps the tendential distribution of those possibilities and internalizes the threat of the cessation of its own possibility of being. This “possibility” Bataille is concerned with in this case is, of course, not merely possibility proper, but possibility treated as the minimal potentiality of work (e.g., the minimal capacity to do work). That is, energy.

To the extent the previously expounded notion of technology as preceding both the taboo and work (thus preceding the existence of society in some sense) is true, then it seems necessary that technology be conceived of as the potential external expression of the disequilibrium of life--potential in that, besides a tool being something that is produced, it must also be something produced under a mode of representational thought (so the product can function as symbol for the organism). That is, a tool is both a useful or effective product and a conveyor of information. However, with the sophistication of technology consequent of the discovery of fuel (starting with humanity’s discovery of fire), the distinction between technology and life had been set into a path of increasing blurriness, presenting itself now in the problem of hard AI. But in fact, the problem of blurriness was already there in the start as long as the arrival of life itself--especially that of the rich mental life humanity has access to--remained mysterious, as there is then no clear designating line for the beginning of representational thought (again, this is not a general representationalist model of mind--it says that the mind is necessary for representation, and not that representation is necessary for mind). Nonetheless, without digression into any attempts at solving such a problem, the point is that it would seem society is a surplus or excess of environment as it is a disequilibrium that expands the harnessing of energy further afield. At least according to this treatment of equilibrium.

What is the Relationship Between Equilibrium and Systemic Instability (or “Time”)?

But there is something amiss here, for it is common knowledge in biology that living bodies are characterized by processes of homeostasis. Now, someone may likely wring their hands and point out that homeostasis is about stability while equilibrium is about balance of competition, homogeneity of force/concentration. This is true--there is a difference between them. This is not in dispute. The question instead regards yet another chicken-or-egg question (it should be apparent by now I fancy these): does equilibrium produce homeostasis, or does homeostasis produce equilibrium? Put in other terms, is there some prior stability of a system that dictates its point of equilibrium, or is it systemic equilibrium that sets the conditions for stability in a system? To warn, I will be proceeding from a perspective of some ignorance regarding the mathematical intricacies of physics in addressing this question, so most of what follows will likely be entirely sloppy.

Notwithstanding, it would seem that notions of equilibrium are related conceptually, at the very least, with the notion of geometric symmetries or conservation laws in modern physics when considering classical mechanics. The introduction of symmetry in physics as a way to speak of conserved quantities would seem to act as an a priori reformulation of the Humean “uniformity of nature” hypothesis. The reason it works better than a mere hypothesis is because such symmetries have been understood as homogeneities of structure--this is clear from the basic principle of translation (or spatial) symmetry and temporal symmetry, both of which assume “behavioral” physical system neutrality both in respect of place and of time. Further, these principles of symmetry have their expression in the behavior of physical objects to the extent that the motion of physical objects is really just a symmetry-preserving, local deformation of the occupying space or duration of mass-energy. These are not experienced as deformations, of course, because different collections of mass and energy are perceived as qualitatively different, and so their motion is perceived in terms of discrete reference-points or their creation or destruction into each other. One does not perceive these movements as deformations in a continuum of mass-energy.

There is something strange, of course, in the fact that this reformulation of the uniformity of nature seems rationalist or idealist in character; nonetheless, equilibrium seems to be, or seems to be modeled in, a function of symmetry, and notions of physical equilibrium have much of their basis in statistical probability. In sum, symmetry seems specifically to regard the homogeneity of possibilities across space and time as well as the structural relation between them, whereas actualities are treated as either final or initial conditions of those possibilities. Looked at in this way, it is not strange for the “uniformity of nature” hypothesis to be reformulated mathematically in an empirical science, if it is reformulated in terms of possibility, as empirical science is concerned precisely with contingency even as it seeks predictive power (that is, this “strangeness” is already inscribed within the practice of empirical science, in terms of the inconsistency between its backward-looking and forward-looking methodological aspects--the hermeneutic commitment to causal determinism undertaken by scientists in virtue of science’s focus on predictability is always a latent metaphysical commitment).

However, if this is so, it would seem that stability--the resistance to change--exhibited either within or by a physical system is actually not something which results from equilibrium as long as resistance to change is also resistance to alternate possibility. Yet, stability also does not ground equilibrium, in that a resistance to alternate possibility is not a resistance to possibility simpliciter. Rather, the resistance itself is already inscribed within each of the given possibilities. Thereby, the trajectory of a physical system or object is actualized precisely as a function of the mutual resistance between possibilities, originating in the internal resistance of possibility as such to its own impossibility. E.g., the resistance of possibility qua possibility, or the resistance of the possibility of possibility, to the possibility of impossibility. In this way, a physical system is actually an instability in a larger context of possibility. Nonetheless it stands as a stability within its own sphere of action (possibility for it takes the restricted range of that which “works,” and so is characterized by energy). In fact, energy merely being a special case of potentiality is something already implied within these latent metaphysical commitments of modern physics by virtue of its predictive stance, when the predictive stance is applied in retrospect (i.e., retrodiction); this comes to a head in quantum mechanics, as Žižek himself noted (Žižek 2012, 920):

[...] possibility as such possesses an actuality of its own; that is, it produces real effects-for example, the father's authority is fundamentally virtual, a threat of violence. In a similar way, in the quantum universe, the actual trajectory of a particle can only be explained if one takes into account all of its possible trajectories within its wave function. In both cases, the actualization does not simply abolish the previous panoply of possibilities: what might have happened continues to echo in what actually happens as its virtual background.

And this “echo” is possibility as probability. Ultimately, what can be said about the relationship between equilibrium and stability, then, is that stability--resistance to change--is relative, while instability is absolute. For example, resistance to changing a domestic policy, itself a possibility, is in its actuality relative to the whole field of interlocking possibilities that manifest as a deadlock for it as a possibility. However, a lack of resistance to the same does not have anything to do with the actualization or lack of actualization of some possibility by virtue of the full network of possibilities--rather, it is an expression of the whole interplay of possibility precisely as it expresses itself in or through any given actuality. The internal resistance (to impossibility) in a possibility is thus retroactive, while the lack of that resistance (to impossibility) is proactive. Within the organism, this is precisely what unifies birth and death--death is the possibility of impossibility, while birth the possibility of possibility, but birth, a resistance to death, is sustained only on condition that impossibility remain among the possibilities of Dasein.

Etalon and Equilibria

This means, however, that equilibrium can be interpreted as a continual displacement of stability. This is because symmetries are actually just products of a displacement of stability. A structurally related homogeneity of possibility already stands as an instance of a larger heterogeneous space of possibility, and it is actualized at the same moment some possibility is. The frame of reference cannot precede the actualization of possibility, except after some possibility has already been actualized (space-time follows existence, not the other way around). In fact, the strange quantum phenomenon of wave-particle duality mediated by measurement can be spoken of in exactly this way. This is why the etalon, while not the same as equilibrium, is the basis of equilibrium: the “etalon” is the limit or impossibility of the resistance uniquely characterizing some possibility/actuality. In this sense, the homeostatic processes of biological systems have their own equilibrium (and disequilibrium) insofar as they (albeit contingently) produce their own etalon. Everything is its own measure.

The assertion by Bataille, then, that life is disequilibrium seems to depend on either some underlying metaphysical assumptions, or otherwise the hermeneutic or provisional privileging of some perspective (as, of course, constrained by some metaphysical system). What is the etalon Bataille is using for this proclamation, and does it correspond with some arche he holds to? It is true in some sense that disequilibrium is fundamental, but that is because without it there would be no such thing as an equilibrium of any type or form. For example, a true vacuum, involving a state of asymmetry and responsible for mass, is the state requiring less energy input--it requires no energy at all. Meanwhile, a false vacuum with symmetry, thereby no produced mass but with a possibility of equilibrium, requires too much energy. This means that the symmetry or equilibrium of a physical system is always a consequence of deferred stability. The existence of mass is the expression of an instability in the vacuum, as an ejection of its own stability. But this does not mean the true vacuum lacks stability--it just means this stability of the vacuum is not expressed in the vacuum as such. That is, after all, the difference between the “true” and “false” vacuum. The “false” vacuum is able to continue, or to be, only by way of becoming a “true” vacuum. The unity of both types of vacuums expresses itself in the fact that stability of the vacuum relies on its destabilization.

To add, QFT and the Higgs field, results of empirical investigation in physics, seem non-coincidental from a metaphysical point of view--the same sort of relationship between the true and false vacuum holds for the relative Nothing (the Nothing which exists) and the absolute Nothing (the Nothing which does not exist) that are merely two hierarchical aspects of Nothing as a whole (i.e., of impossibility as such) within my own more philosophical studies into Nothingness. In addition, the notion of virtual particles corresponds to that of existence always being virtual, and thus entities always landing between Being and Nothing. In sum, the existence of excess does not contradict the existence of equilibrium--the equilibrium is contingent on etalon. One could then even say that there are competing equilibriums (which is to say competing chains of cooperation).

Bataille, Georges. Erotism: Death & Sensuality. Trans. Mary Dalwood. San Francisco: City Lights, 1986. Print.

Žižek, Slavoj. Less Than Nothing: Hegel and the Shadow of Dialectical Materialism. London: Verso, 2012. Print.

#possibility#actuality#philosophy#philosophy of space and time#philosophy of biology#philosophy of life#metaphysics#philosophy of science#philosophy of physics#physics#quantum field theory#vacuum#nothing#nothingness#ontology#meontology#qft#bataille#georges bataille#heidegger#martin heidegger#takato yamamoto#cern#higgs boson#cosmology#metaphysical cosmology#cosmogony#metaphysical cosmogony#equilibrium#disequilibrium

1 note
