#tensorflow api

Federated Learning and AI Help Hospitals Improve Cancer Detection

Medical facilities are using federated learning and AI to improve cancer detection. A panel of experts from leading research institutions and medical facilities in the United States is using NVIDIA-powered federated learning to assess how well federated learning and AI-assisted annotation work for training AI models for tumor segmentation.

What Is Federated Learning?

Federated learning is a method for training more accurate, broadly applicable AI models on data from several sources without compromising data security or privacy. It lets multiple organizations collaborate on building an AI model without their sensitive data ever leaving their systems.

“The only feasible way to stay ahead is to use federated learning to create and test models at numerous locations simultaneously. It is a really useful tool.”

For its most recent project, the team, which includes collaborators from Case Western, Georgetown, Mayo Clinic, the University of California, San Diego, the University of Florida, and Vanderbilt University, used NVIDIA FLARE (NVFlare). NVFlare is an open-source framework with strong security features, sophisticated privacy-protection methods, and an adaptable system architecture.

The committee received four NVIDIA RTX A5000 GPUs through the NVIDIA Academic Grant Program and distributed them across the collaborating research institutions so each could configure its workstations for federated learning. Other collaborators used NVIDIA GPUs in on-premises servers and cloud environments, demonstrating NVFlare's adaptability.

Federated Learning AI

Remote Multi-Party Collaboration

Federated learning enables the development and validation of more accurate, broadly applicable AI models from a variety of data sources while reducing the risk of compromising data security or privacy. Models are built from a group of data sources without the data ever leaving its original location.

Features

Privacy-Preserving Algorithms

NVIDIA FLARE's privacy-preserving techniques are designed to keep every update to the global model private, so that the server cannot reverse-engineer the weights that clients submit or recover any training data.

Workflows for Training and Evaluation

Built-in workflow paradigms, including the FedAvg, FedOpt, and FedProx learning algorithms, use local, decentralized data to keep models relevant at the edge.

Comprehensive Management Tools

Management tools provide orchestration through an admin portal, secure provisioning with SSL certificates, and visualization of federated learning experiments with TensorBoard.

Supports Popular ML/DL Frameworks

The SDK has an adaptable architecture and works with PyTorch, TensorFlow, and even NumPy, so federated learning can be integrated into your existing workflow.

Comprehensive API

Its comprehensive, open-source API lets researchers develop new federated workflows, learning algorithms, and privacy-preserving algorithms.

Reusable Building Blocks

NVIDIA FLARE provides reusable building blocks and example walkthroughs that make it simple to run federated learning experiments.

Breaking Federated Learning’s Code

The initiative focused on renal cell carcinoma, a type of kidney cancer, and each of the six collaborating medical institutions contributed data from about fifty medical imaging studies. In a federated learning architecture, an initial global model sends its parameters to the client servers. Each server uses these parameters to configure a local version of the model, which is then trained on that institution's confidential data.

Each local model then sends its updated parameters back, and they are combined into a new global model. The cycle repeats until the model's predictions stop improving from one training round to the next. The team experimented with model topologies and hyperparameters to optimize training speed, accuracy, and the number of imaging studies needed to train the model to the required level of precision.
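The round-based cycle described above is the core of federated averaging (FedAvg). The following is a minimal NumPy sketch under stated assumptions: a toy linear-regression model and three simulated sites stand in for the tumor-segmentation model and hospital data, and none of NVIDIA FLARE's actual APIs are used. It runs a fixed number of rounds instead of stopping when predictions stop improving, and the optional `mu` argument adds the FedProx proximal term.

```python
import numpy as np

def local_train(global_w, X, y, lr=0.1, epochs=20, mu=0.0):
    """Train a copy of the global weights on one site's private data.

    Plain gradient descent on a mean-squared-error loss. When mu > 0, the
    FedProx proximal term (mu / 2) * ||w - global_w||^2 is added, which keeps
    the local weights from drifting too far from the global model.
    """
    w = global_w.copy()
    for _ in range(epochs):
        grad = X.T @ (X @ w - y) / len(y)  # gradient of (1/2n) * ||Xw - y||^2
        grad += mu * (w - global_w)        # FedProx term (zero when mu == 0)
        w -= lr * grad
    return w

def federated_averaging(global_w, sites, rounds=50, mu=0.0):
    """Each round: send weights out, train locally, average the results."""
    sizes = np.array([len(y) for _, y in sites], dtype=float)
    for _ in range(rounds):
        local_ws = [local_train(global_w, X, y, mu=mu) for X, y in sites]
        # Weight each site's update by the number of examples it trained on.
        global_w = np.average(local_ws, axis=0, weights=sizes)
    return global_w

# Simulated sites: each dataset stays in its own tuple; only weights are shared.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
sites = []
for n in (40, 60, 50):
    X = rng.normal(size=(n, 2))
    y = X @ true_w + 0.01 * rng.normal(size=n)
    sites.append((X, y))

w_avg = federated_averaging(np.zeros(2), sites)           # FedAvg
w_prox = federated_averaging(np.zeros(2), sites, mu=0.1)  # FedProx variant
print(w_avg, w_prox)
```

In a real deployment, each `local_train` call would run on a separate institution's server, and only the weight vectors would cross the network.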

AI-Assisted Annotation with NVIDIA MONAI

In the project's first phase, the model's training set was labeled manually. Next, the team is using NVIDIA MONAI for AI-assisted annotation, to compare the model's performance when trained on data segmented with AI versus conventional annotation techniques.

“Federated learning activities are most difficult when data is not homogeneous across sites. People label their data differently, use different imaging equipment, and follow different processes,” according to Garrett. “Our aim is to determine whether adding MONAI to the federated learning model during its second training phase improves overall annotation accuracy.”

The group is using MONAI Label, an image-labeling tool that lets users build custom AI annotation applications, reducing the time and effort needed to create new datasets. Experts will verify and refine the AI-generated segmentations before they are used for model training. Flywheel, a leading medical imaging data and AI platform that has integrated NVIDIA MONAI into its services, hosts the data for both the human and AI-assisted annotation phases.

NVIDIA FLARE

NVIDIA FLARE (NVIDIA Federated Learning Application Runtime Environment) is an open-source, flexible, domain-agnostic SDK for federated learning. Platform developers can use it to build secure, privacy-preserving solutions for distributed multi-party collaboration, and researchers and data scientists can adapt existing ML/DL workflows to a federated paradigm.

Maintaining Privacy in Multi-Party Collaboration

Build and validate more accurate, broadly applicable AI models from a variety of data sources, using privacy-preserving algorithms and workflow techniques to reduce the risk to data security and privacy.

Accelerate AI Research

Enables data scientists and researchers to adapt existing ML/DL workflows (PyTorch, RAPIDS, NeMo, TensorFlow) to a federated learning paradigm.

Open-Source Framework

A general-purpose, cross-domain federated learning SDK intended to build a community of data scientists, researchers, and developers.

Read more on govindhtech.com

#FederatedLearning#CancerDetection#AImodels#AIHelpInHospital#NVIDIARTX#Tensorflow#SDK#NVIDIAMONAI#medicalimaging#NVIDIAFLARE#QuickenResearch#MultiPartyCollaboration#api#MaintainingPrivacy#NVIDIA#FederatedLearningAI#ai#technology#technews#news#govindhtech

Day 17: Hyperparameter Tuning with Keras Tuner

Hyperparameter Tuning with Keras Tuner: A Comprehensive Guide

Introduction

In the world of machine learning, the performance of your model can heavily depend on the choice of hyperparameters. Hyperparameter tuning, the process of finding the optimal settings for these parameters, can be time-consuming and complex. Keras Tuner is a powerful library that…
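Keras Tuner's `RandomSearch` tuner automates a loop like the one below: sample hyperparameter values from a search space, train and score a model for each trial, and keep the best result. This plain-Python sketch shows only that idea. `toy_validation_loss` is a hypothetical stand-in for building and fitting a Keras model, and no Keras Tuner API is used here.

```python
import random

def toy_validation_loss(params):
    """Hypothetical stand-in for training a model and returning validation loss.

    It is smallest at learning_rate=0.01 and units=64.
    """
    lr, units = params["learning_rate"], params["units"]
    return (lr - 0.01) ** 2 * 1e4 + abs(units - 64) / 64

search_space = {
    "learning_rate": [1e-4, 1e-3, 1e-2, 1e-1],
    "units": [32, 64, 128, 256],
}

def random_search(space, objective, max_trials=10, seed=0):
    """Try `max_trials` random configurations and return the best one found."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(max_trials):
        params = {name: rng.choice(values) for name, values in space.items()}
        loss = objective(params)
        if loss < best_loss:  # lower validation loss is better
            best_params, best_loss = params, loss
    return best_params, best_loss

best_params, best_loss = random_search(search_space, toy_validation_loss, max_trials=200)
print(best_params, best_loss)
```

With only 16 possible combinations, 200 random trials almost certainly hit the best one; real search spaces are far larger, which is why the number of trials matters.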

#artificial intelligence#Deep learning#functional Keras api#hyperparameter#keras Tuner#Lee as#machine learning#TensorFlow#tuner

Why Python Will Thrive: Future Trends and Applications

Python has already made a significant impact in the tech world, and its future looks even more promising. From its simplicity and versatility to its widespread use in cutting-edge technologies, Python is expected to keep thriving in the coming years. With the support of a Python course in Chennai, learning Python becomes much more enjoyable, whatever your level of experience or your reason for switching from another programming language.

Let's explore why Python will remain at the forefront of software development and what trends and applications will contribute to its ongoing dominance.

1. Artificial Intelligence and Machine Learning

Python is already the go-to language for AI and machine learning, and its role in these fields is set to expand further. With powerful libraries such as TensorFlow, PyTorch, and Scikit-learn, Python simplifies the development of machine learning models and artificial intelligence applications. As more industries integrate AI for automation, personalization, and predictive analytics, Python will remain a core language for developing intelligent systems.

2. Data Science and Big Data

Data science is one of the most significant areas where Python has excelled. Libraries like Pandas, NumPy, and Matplotlib make data manipulation and visualization simple and efficient. As companies and organizations continue to generate and analyze vast amounts of data, Python’s ability to process, clean, and visualize big data will only become more critical. Additionally, Python’s compatibility with big data platforms like Hadoop and Apache Spark ensures that it will remain a major player in data-driven decision-making.

3. Web Development

Python’s role in web development is growing thanks to frameworks like Django and Flask, which provide robust, scalable, and secure solutions for building web applications. With the increasing demand for interactive websites and APIs, Python is well-positioned to continue serving as a top language for backend development. Its integration with cloud computing platforms will also fuel its growth in building modern web applications that scale efficiently.

4. Automation and Scripting

Automation is another area where Python excels. Developers use Python to automate tasks ranging from system administration to testing and deployment. With the rise of DevOps practices and the growing demand for workflow automation, Python’s role in streamlining repetitive processes will continue to grow. Businesses across industries will rely on Python to boost productivity, reduce errors, and optimize performance. With the aid of Best Online Training & Placement Programs, which offer comprehensive training and job placement support to anyone looking to develop their talents, it’s easier to learn this tool and advance your career.

5. Cybersecurity and Ethical Hacking

With cyber threats becoming increasingly sophisticated, cybersecurity is a critical concern for businesses worldwide. Python is widely used for penetration testing, vulnerability scanning, and threat detection due to its simplicity and effectiveness. Libraries like Scapy and PyCryptodome (the maintained successor to PyCrypto) make Python an excellent choice for ethical hackers and security professionals. As the need for robust cybersecurity measures increases, Python's role in safeguarding digital assets will continue to grow.

6. Internet of Things (IoT)

Python's compatibility with microcontrollers and embedded systems makes it a strong contender in the growing field of IoT. Implementations like MicroPython and CircuitPython let developers build IoT applications efficiently, whether for home automation, smart cities, or industrial systems. As the number of connected devices continues to rise, Python will remain a dominant language for creating scalable and reliable IoT solutions.

7. Cloud Computing and Serverless Architectures

The rise of cloud computing and serverless architectures has created new opportunities for Python. Cloud platforms like AWS, Google Cloud, and Microsoft Azure all support Python, allowing developers to build scalable and cost-efficient applications. With its flexibility and integration capabilities, Python is perfectly suited for developing cloud-based applications, serverless functions, and microservices.

8. Gaming and Virtual Reality

Python has long been used in game development, with libraries such as Pygame offering simple tools to create 2D games. However, as gaming and virtual reality (VR) technologies evolve, Python’s role in developing immersive experiences will grow. The language’s ease of use and integration with game engines will make it a popular choice for building gaming platforms, VR applications, and simulations.

9. Expanding Job Market

As Python’s applications continue to grow, so does the demand for Python developers. From startups to tech giants like Google, Facebook, and Amazon, companies across industries are seeking professionals who are proficient in Python. The increasing adoption of Python in various fields, including data science, AI, cybersecurity, and cloud computing, ensures a thriving job market for Python developers in the future.

10. Constant Evolution and Community Support

Python’s open-source nature means that it’s constantly evolving with new libraries, frameworks, and features. Its vibrant community of developers contributes to its growth and ensures that Python stays relevant to emerging trends and technologies. Whether it’s a new tool for AI or a breakthrough in web development, Python’s community is always working to improve the language and make it more efficient for developers.

Conclusion

Python’s future is bright, with its presence continuing to grow in AI, data science, automation, web development, and beyond. As industries become increasingly data-driven, automated, and connected, Python’s simplicity, versatility, and strong community support make it an ideal choice for developers. Whether you are a beginner looking to start your coding journey or a seasoned professional exploring new career opportunities, learning Python offers long-term benefits in a rapidly evolving tech landscape.

#python course#python training#python#technology#tech#python programming#python online training#python online course#python online classes#python certification

What Is Python, and How Do You Learn It?

What is Python?

Python is a high-level, interpreted programming language known for its simplicity and readability. It is widely used in fields such as:

✅ Web Development (Django, Flask)

✅ Data Science & Machine Learning (Pandas, NumPy, TensorFlow)

✅ Automation & Scripting (Web scraping, File automation)

✅ Game Development (Pygame)

✅ Cybersecurity & Ethical Hacking

✅ Embedded Systems & IoT (MicroPython)

Python is beginner-friendly because of its easy-to-read syntax, large community, and vast library support.

How Long Does It Take to Learn Python?

The time required to learn Python depends on your goals and background. Here’s a general breakdown:

1. Basics of Python (1-2 months)

If you spend 1-2 hours daily, you can master:

Variables, Data Types, Operators

Loops & Conditionals

Functions & Modules

Lists, Tuples, Dictionaries

File Handling

Basic Object-Oriented Programming (OOP)
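Here is a short script that touches each topic in the list above: variables and data types, a loop and conditionals, a function, a list, tuples and a dictionary, file handling, and a basic class. The student names and grade cut-offs are made up for illustration.

```python
import tempfile
from pathlib import Path

class Student:
    """Basic OOP: a class bundles data (attributes) with behavior (methods)."""

    def __init__(self, name, scores):
        self.name = name        # str
        self.scores = scores    # list of ints

    def average(self):
        return sum(self.scores) / len(self.scores)  # float

def letter_grade(avg):
    """A function with conditionals; the cut-offs are illustrative."""
    if avg >= 90:
        return "A"
    elif avg >= 75:
        return "B"
    return "C"

students = [Student("Asha", [92, 88, 95]), Student("Ravi", [70, 81, 77])]
report = {}                                # dictionary: name -> grade
for student in students:                   # loop over a list
    report[student.name] = letter_grade(student.average())

# File handling: write the report, then read it back.
with tempfile.TemporaryDirectory() as folder:
    path = Path(folder) / "report.txt"
    with open(path, "w") as f:
        for name, grade in report.items():  # each item is a (name, grade) tuple
            f.write(f"{name},{grade}\n")
    with open(path) as f:
        lines = f.read().splitlines()

print(lines)  # ['Asha,A', 'Ravi,B']
```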

2. Intermediate Level (2-4 months)

Once comfortable with basics, focus on:

Advanced OOP concepts

Exception Handling

Working with APIs & Web Scraping

Database handling (SQL, SQLite)

Python Libraries (Requests, Pandas, NumPy)

Small real-world projects
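Exception handling and database work often meet in practice. Below is a minimal sketch using Python's built-in `sqlite3` module with an in-memory database; the `courses` table, its columns, and the `save_and_fetch` helper are hypothetical.

```python
import sqlite3

def save_and_fetch(rows):
    """Insert (name, months) rows into a fresh in-memory table and read them back.

    Returns [] if any row violates the primary key (a duplicate course name).
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE courses (name TEXT PRIMARY KEY, months INTEGER)")
        # "?" placeholders let sqlite3 pass values safely instead of string-formatting SQL.
        conn.executemany("INSERT INTO courses (name, months) VALUES (?, ?)", rows)
        conn.commit()
        return conn.execute("SELECT name, months FROM courses ORDER BY months").fetchall()
    except sqlite3.IntegrityError as exc:
        print(f"Could not save courses: {exc}")
        return []
    finally:
        conn.close()  # always runs, even after an exception

print(save_and_fetch([("Basics", 2), ("Intermediate", 4)]))
print(save_and_fetch([("Basics", 2), ("Basics", 3)]))  # duplicate key, returns []
```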

3. Advanced Python & Specialization (6+ months)

If you want to go pro, specialize in:

Data Science & Machine Learning (Matplotlib, Scikit-Learn, TensorFlow)

Web Development (Django, Flask)

Automation & Scripting

Cybersecurity & Ethical Hacking

Learning Plan Based on Your Goal

📌 Casual Learning: 3-6 months (for automation, scripting, or general knowledge)

📌 Professional Development: 6-12 months (for jobs in software, data science, etc.)

📌 Deep Mastery: 1-2 years (for AI, ML, complex projects, research)

Scope @ NareshIT:

NareshIT's Python Application Development program provides extensive hands-on training in front-end, middleware, and back-end technologies.

It builds your skills through phase-end and capstone projects based on real business scenarios.

You learn the concepts from leading industry experts, with content structured to ensure industry relevance.

Build an end-to-end application with exciting features.

Earn an industry-recognized course completion certificate.

For more details:

#classroom#python#education#learning#teaching#institute#marketing#study motivation#studying#onlinetraining

Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. A DevOps certification is a plus and can strengthen your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and tamper-resistant systems. Blockchain is not just for cryptocurrencies; it's also used to track supply chains, manage healthcare records, and even run voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotic process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity

Unlock the Power of Python Programming: A Complete Guide

Python programming has become one of the most sought-after skills in the world of technology. Its simplicity, flexibility, and vast ecosystem of libraries make it a top choice for both beginners and experienced developers. In this guide, we will explore various aspects of Python programming, from basic concepts to advanced applications like machine learning and web development.

Python Programming: A Beginner-Friendly Language

Python programming is renowned for its readability and straightforward syntax, making it ideal for beginners. Whether you are just starting to code or transitioning from another language, Python offers a smooth learning curve. Key Python programming concepts include variables, data types, and control structures, which are essential for writing functional code.

Python Data Structures: Organizing Data Efficiently

One of the core strengths of Python programming is its rich set of data structures. Lists, dictionaries, tuples, and sets help you store and manage data effectively. Understanding Python data structures allows you to create more efficient programs by organizing and manipulating data effortlessly.
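One short example per built-in structure named above; the values are arbitrary.

```python
scores = [88, 92, 75, 92]           # list: ordered, mutable, allows duplicates
scores.append(80)

point = (3, 4)                       # tuple: ordered and immutable
x, y = point                         # tuples unpack neatly into variables

ages = {"Asha": 24, "Ravi": 27}      # dict: fast lookup by key
ages["Meena"] = 22

unique_scores = set(scores)          # set: duplicates removed automatically
print(scores, point, ages, unique_scores)
print(92 in unique_scores)           # membership tests on a set are fast
```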

Functions in Python Programming: Building Reusable Code

Functions are a fundamental part of Python programming. They allow you to break down complex problems into smaller, reusable chunks of code. Python functions not only promote code reusability but also make your programs more organized and easier to maintain.
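A small reusable function with a docstring and a default parameter; `word_count` is an illustrative name, not a standard-library function.

```python
def word_count(text, ignore_case=True):
    """Return a dict mapping each word in `text` to how often it appears."""
    counts = {}
    for word in text.split():
        key = word.lower() if ignore_case else word
        counts[key] = counts.get(key, 0) + 1
    return counts

print(word_count("Python is fun and python is popular"))
print(word_count("Go go", ignore_case=False))
```

Because the counting logic lives in one place, any script that needs word frequencies can call `word_count` instead of repeating the loop.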

Loops in Python Programming: Automating Repeated Tasks

Loops are an essential feature in Python programming, allowing you to perform repeated operations efficiently. With Python loops such as for and while, you can iterate over sequences or perform tasks until a specific condition is met. Mastering loops is a key part of becoming proficient in Python.
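A minimal illustration of both loop types: a `for` loop over a sequence and a `while` loop that runs until a condition is met. The 10% growth rate is an arbitrary example value.

```python
# for loop: iterate over a sequence (here, the numbers 1 to 5)
total = 0
for n in range(1, 6):
    total += n

# while loop: repeat until a condition is met
# (years for 1000 to at least double at 10% annual growth)
balance, years = 1000.0, 0
while balance < 2000:
    balance *= 1.10
    years += 1

print(total, years)  # 15 8
```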

Object-Oriented Programming in Python: Structured Development

Python programming supports object-oriented programming (OOP), a paradigm that helps you build structured and scalable software. OOP in Python allows you to work with classes and objects, making it easier to model real-world scenarios and design complex systems in a manageable way.
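A small sketch of classes, objects, and inheritance; the `Account` and `SavingsAccount` classes are hypothetical examples, not part of any library.

```python
class Account:
    """A bank account: data (owner, balance) plus behavior (deposit)."""

    def __init__(self, owner, balance=0.0):
        self.owner = owner
        self.balance = balance

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit amount must be positive")
        self.balance += amount

class SavingsAccount(Account):
    """Inheritance: a SavingsAccount is an Account that also earns interest."""

    def __init__(self, owner, balance=0.0, rate=0.04):
        super().__init__(owner, balance)  # reuse the parent's setup
        self.rate = rate

    def add_interest(self):
        self.balance += self.balance * self.rate

acct = SavingsAccount("Asha", 100.0, rate=0.05)
acct.deposit(100.0)
acct.add_interest()
print(acct.owner, round(acct.balance, 2))
```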

Python Automation Scripts: Simplify Everyday Tasks

Python programming can be used to automate repetitive tasks, saving you time and effort. Python automation scripts can help with file management, web scraping, and even interacting with APIs. With standard-library modules like os and shutil, automation becomes a breeze.
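A minimal file-management sketch using only `os`, `shutil`, and `tempfile` from the standard library. `organize_by_extension` is a hypothetical helper that sorts files into subfolders by extension; the demo runs on a throwaway temporary folder so nothing real is moved.

```python
import os
import shutil
import tempfile

def organize_by_extension(folder):
    """Move every file in `folder` into a subfolder named after its extension.

    Returns a dict mapping each moved file name to the subfolder it went to.
    """
    moved = {}
    for name in os.listdir(folder):   # listing is taken once, before new folders appear
        src = os.path.join(folder, name)
        if not os.path.isfile(src):
            continue                  # skip subdirectories
        ext = os.path.splitext(name)[1].lstrip(".").lower() or "no_extension"
        dest_dir = os.path.join(folder, ext)
        os.makedirs(dest_dir, exist_ok=True)
        shutil.move(src, os.path.join(dest_dir, name))
        moved[name] = ext
    return moved

# Try it on a throwaway folder.
with tempfile.TemporaryDirectory() as demo:
    for fname in ("notes.txt", "photo.JPG", "report.pdf", "README"):
        open(os.path.join(demo, fname), "w").close()
    result = organize_by_extension(demo)
    layout = sorted(os.listdir(demo))

print(result)
print(layout)  # ['jpg', 'no_extension', 'pdf', 'txt']
```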

Python Web Development: Creating Dynamic Websites

Python programming is also a popular choice for web development. Frameworks like Django and Flask make it easy to build robust, scalable web applications. Whether you're developing a personal blog or an enterprise-level platform, Python web development empowers you to create dynamic and responsive websites.

APIs and Python Programming: Connecting Services

Python programming allows seamless integration with external services through APIs. Using libraries like requests, you can easily interact with third-party services, retrieve data, or send requests. This makes Python an excellent choice for building applications that rely on external data or services.

Error Handling in Python Programming: Writing Resilient Code

Python provides error-handling mechanisms that let your code deal with unexpected issues. With try-except blocks, you can manage errors gracefully and prevent your programs from crashing. Error handling is a critical aspect of writing robust and reliable Python code.
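A minimal sketch of `try`/`except` in two common situations: bad input and a missing file. `parse_ints` and `read_text` are illustrative helper names.

```python
def parse_ints(values):
    """Convert strings to ints, collecting bad entries instead of crashing."""
    good, bad = [], []
    for value in values:
        try:
            good.append(int(value))
        except ValueError:  # e.g. "abc" or "2.5"
            bad.append(value)
    return good, bad

def read_text(path, default=""):
    """Return a file's contents, or `default` if the file does not exist."""
    try:
        with open(path) as f:
            return f.read()
    except FileNotFoundError:
        return default

print(parse_ints(["10", "abc", "2.5", " 7 "]))  # ([10, 7], ['abc', '2.5'])
```

Catching a specific exception such as `ValueError` or `FileNotFoundError`, rather than a bare `except:`, keeps genuine bugs from being silently hidden.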

Python for Machine Learning: Leading the AI Revolution

Python programming plays a pivotal role in machine learning, thanks to powerful libraries like scikit-learn, TensorFlow, and PyTorch. With Python, you can build predictive models, analyze data, and develop intelligent systems. Machine learning with Python opens doors to exciting opportunities in artificial intelligence and data-driven decision-making.

Python Data Science: Turning Data Into Insights

Python programming is widely used in data science for tasks such as data analysis, visualization, and statistical modeling. Libraries like pandas, NumPy, and Matplotlib provide Python programmers with powerful tools to manipulate data and extract meaningful insights. Python data science skills are highly in demand across industries.

Python Libraries Overview: Tools for Every Task

One of the greatest advantages of Python programming is its extensive library support. Whether you're working on web development, automation, data science, or machine learning, Python has a library for almost every need. Exploring Python libraries like BeautifulSoup, NumPy, and Flask can significantly boost your productivity.

Python GUI Development: Building User Interfaces

Python programming isn't just limited to back-end or web development. With tools like Tkinter and PyQt, Python programmers can develop graphical user interfaces (GUIs) for desktop applications. Python GUI development allows you to create user-friendly software with visual elements like buttons, text fields, and images.

Conclusion: Python Programming for Every Developer

Python programming is a versatile and powerful language that can be applied in various domains, from web development and automation to machine learning and data science. Its simplicity, combined with its extensive libraries, makes it a must-learn language for developers at all levels. Whether you're new to programming or looking to advance your skills, Python offers endless possibilities.

At KR Network Cloud, we provide expert-led training to help you master Python programming and unlock your potential. Start your Python programming journey today and take the first step toward a successful career in tech!

#krnetworkcloud#python#language#programming#linux#exams#coding#software engineering#coding for beginners#careers#course#training#learning#education#technology#computing#tech news#business#security#futurism#Youtube

Can I use Python for big data analysis?

Yes, Python is a powerful tool for big data analysis. Here’s how Python handles large-scale data analysis:

Libraries for Big Data:

Pandas:

While primarily designed for datasets that fit in memory, Pandas can handle larger datasets when combined with tools like Dask, or when memory usage is optimized (for example, by processing data in chunks or choosing smaller data types).

NumPy:

Provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays.

Dask:

A parallel computing library that extends Pandas and NumPy to larger datasets. It allows you to scale Python code from a single machine to a distributed cluster.
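A small NumPy sketch of the vectorized, multi-dimensional array operations mentioned for NumPy above, plus one simple memory optimization (a smaller dtype, at some cost in precision). The sensor readings are synthetic.

```python
import numpy as np

# 3 sensors x 4 time steps of synthetic readings
readings = np.arange(12, dtype=np.float64).reshape(3, 4)

row_totals = readings.sum(axis=1)    # one total per sensor
col_means = readings.mean(axis=0)    # one mean per time step
# Broadcasting: the (4,)-shaped means and stds apply to every row, no Python loop.
standardized = (readings - col_means) / readings.std(axis=0)

# Storing values as float32 instead of float64 halves the memory footprint.
compact = readings.astype(np.float32)
print(readings.nbytes, compact.nbytes)  # 96 48
```

The same ideas carry over to larger workloads: libraries like Dask split arrays into chunks and apply these NumPy operations to each chunk.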

Distributed Computing:

PySpark:

The Python API for Apache Spark, which is designed for large-scale data processing. PySpark can handle big data by distributing tasks across a cluster of machines, making it suitable for large datasets and complex computations.

Dask:

Also provides distributed computing capabilities, allowing you to perform parallel computations on large datasets across multiple cores or nodes.

Data Storage and Access:

HDF5:

A file format and set of tools for managing complex data. Python’s h5py library provides an interface to read and write HDF5 files, which are suitable for large datasets.

Databases:

Python can interface with various big data databases like Apache Cassandra, MongoDB, and SQL-based systems. Libraries such as SQLAlchemy facilitate connections to relational databases.

Data Visualization:

Matplotlib, Seaborn, and Plotly: These libraries allow you to create visualizations of large datasets, though for extremely large datasets, tools designed for distributed environments might be more appropriate.

Machine Learning:

Scikit-learn:

While not specifically designed for big data, Scikit-learn can be used with tools like Dask to handle larger datasets.

TensorFlow and PyTorch:

These frameworks support large-scale machine learning and can be integrated with big data processing tools for training and deploying models on large datasets.

Python’s ecosystem includes a variety of tools and libraries that make it well-suited for big data analysis, providing flexibility and scalability to handle large volumes of data.

Drop a message to learn more!


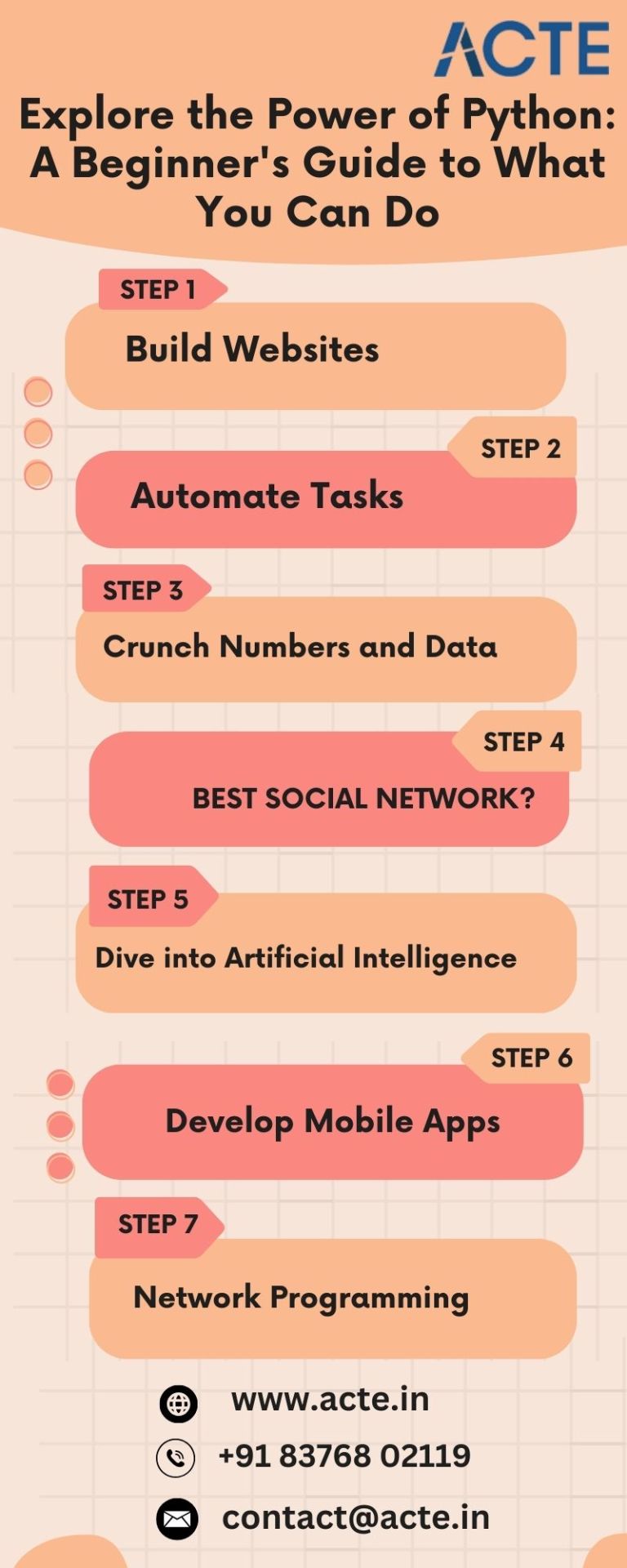

Demystifying Python: Exploring 7 Exciting Capabilities of This Coding Marvel

Greetings, aspiring coders! Are you ready to unravel the wonders of Python programming? If you're curious about the diverse possibilities that Python offers, you're in for a treat. Let's delve into seven captivating things you can achieve with Python, explained in simple terms by the best Python Training Institute.

1. Craft Dynamic Websites:

Python serves as the backbone for numerous websites you encounter daily. Utilizing robust frameworks such as Django and Flask, you can effortlessly fashion web applications and dynamic websites. Whether your ambition is to launch a personal blog or the next big social platform, Python is your reliable companion. If you want to learn Python from beginner to advanced level, I highly recommend the best Python course in Bangalore.

2. Automate Mundane Tasks:

Say goodbye to repetitive tasks! Python comes to the rescue with its automation prowess. From organizing files to sending emails and even extracting information from websites, Python's straightforward approach empowers your computer to handle these tasks autonomously.
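As a small illustration of file automation, here is a standard-library sketch that moves each file into a subfolder named after its extension. The file names are hypothetical, and the demo runs in a throwaway temporary directory. Try any script like this on a scratch folder first.

```python
import shutil
import tempfile
from pathlib import Path


def organize_by_extension(folder: Path) -> dict[str, int]:
    """Move each file in `folder` into a subfolder named after its extension."""
    moved: dict[str, int] = {}
    for item in list(folder.iterdir()):  # snapshot first, since we create folders as we go
        if not item.is_file():
            continue  # leave existing subfolders alone
        ext = item.suffix.lstrip(".").lower() or "no_extension"
        target_dir = folder / ext
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[ext] = moved.get(ext, 0) + 1
    return moved


# Demo on a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ["report.pdf", "photo.JPG", "notes.txt", "todo.txt", "README"]:
        (root / name).write_text("sample")
    summary = organize_by_extension(root)
    print(summary)
```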

3. Master Data Analysis:

For those who revel in manipulating numbers and data, Python is a game-changer. Libraries like NumPy, Pandas, and Matplotlib transform data analysis into an enjoyable and accessible endeavor. Visualize data, discern patterns, and unlock the full potential of your datasets.

4. Embark on Game Development:

Surprising as it may be, Python allows you to dip your toes into the realm of game development. Thanks to libraries like Pygame, you can bring your gaming ideas to life. While you may not be creating the next AAA blockbuster, Python provides an excellent starting point for game development enthusiasts.

5. Explore Artificial Intelligence:

Python stands out as a juggernaut in the field of artificial intelligence. Leveraging libraries such as TensorFlow and PyTorch, you can construct machine learning models. Teach your computer to recognize images, comprehend natural language, and even engage in gaming – the possibilities are limitless.

6. Craft Mobile Applications:

Yes, you read that correctly. Python empowers you to develop mobile applications through frameworks like Kivy and BeeWare. Now, you can turn your app concepts into reality without the need to learn an entirely new language for each platform.

7. Mastery in Network Programming:

Python emerges as your ally in the realm of networking. Whether you aspire to create network tools, collaborate with APIs, or automate network configurations, Python simplifies the intricacies of networking.

In essence, Python can be likened to a versatile Swiss Army knife for programmers. It's approachable for beginners, flexible, and applicable across diverse domains. Whether you're drawn to web development, data science, or AI, Python stands as the ideal companion for your coding journey. So, grab your keyboard, start coding, and witness the magic of Python unfold!


cs student: creates the most basic perceptron from the well baby-sat open source dataset people: OMG THAT'S WHAT AI FOR ✨✨✨

This is such basic shit. Her model recognizes basic signs at VERY limited speed. Moreover, the recognition works per frame. This means that her model isn't able to provide a meaningful translation for a series of signs, which results in tremendous loss of context. So the actual accuracy is going to be much lower.

Took a look at her GitHub repo. She uses:

pretrained TensorFlow model

She DIDN'T create the model from scratch by herself. She DIDN'T even perform advanced training and tuning of the pretrained model.

the entire TensorFlow Object Detection API

She DIDN'T create a single advanced function by herself.

She brings zero novelty. I give my students harder projects as homework. The hype around this girl is embarrassing.

The actual working solutions for sign language translation have existed since the early 00s (well, actually since the 80s, but you're not ready for this conversation).

A computer science student named Priyanjali Gupta, studying in her third year at Vellore Institute of Technology, has developed an AI-based model that can translate sign language into English.


Anton R Gordon’s Blueprint for Real-Time Streaming AI: Kinesis, Flink, and On-Device Deployment at Scale

In the era of intelligent automation, real-time AI is no longer a luxury—it’s a necessity. From fraud detection to supply chain optimization, organizations rely on high-throughput, low-latency systems to power decisions as data arrives. Anton R Gordon, an expert in scalable AI infrastructure and streaming architecture, has pioneered a blueprint that fuses Amazon Kinesis, Apache Flink, and on-device machine learning to deliver real-time AI performance with reliability, scalability, and security.

This article explores Gordon’s technical strategy for enabling AI-powered event processing pipelines in production, drawing on cloud-native technologies and edge deployments to meet enterprise-grade demands.

The Case for Streaming AI at Scale

Traditional batch data pipelines can’t support dynamic workloads such as fraud detection, anomaly monitoring, or recommendation engines in real-time. Anton R Gordon's architecture addresses this gap by combining:

Kinesis Data Streams for scalable, durable ingestion.

Apache Flink for complex event processing (CEP) and model inference.

Edge inference runtimes for latency-sensitive deployments (e.g., manufacturing or retail IoT).

This trio enables businesses to execute real-time AI pipelines that ingest, process, and act on data instantly, even in disconnected or bandwidth-constrained environments.

Real-Time Data Ingestion with Amazon Kinesis

At the ingestion layer, Gordon uses Amazon Kinesis Data Streams to collect data from sensors, applications, and APIs. Kinesis is chosen for:

High availability across multiple AZs.

Native integration with AWS Lambda, Firehose, and Flink.

Support for shard-based scaling—enabling millions of records per second.

Kinesis durably buffers raw data so that downstream consumers can normalize and process it. Anton emphasizes the use of data partitioning and sequencing strategies to ensure downstream applications maintain order and performance.
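AWS documents that Kinesis Data Streams maps each partition key through an MD5 hash to a 128-bit integer, then routes the record to the shard whose hash key range contains that value. Because of this, every record with a given key lands on the same shard, and ordering is preserved per key. The sketch below rests on two assumptions: that the digest is read as a big-endian unsigned integer, and that shards split the key space evenly, as they do at stream creation. The device IDs are made up.

```python
import hashlib
from collections import Counter

HASH_SPACE = 2 ** 128  # MD5 yields a 128-bit value


def shard_for_key(partition_key: str, num_shards: int) -> int:
    """Map a partition key to a shard index, assuming evenly split hash key ranges."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest, "big")
    range_size = HASH_SPACE // num_shards
    # min() guards the last shard, which absorbs any remainder of the division.
    return min(hash_value // range_size, num_shards - 1)


keys = [f"device-{i}" for i in range(1000)]
counts = Counter(shard_for_key(k, 4) for k in keys)
print(sorted(counts.items()))  # keys spread across the 4 shards
```

If a single hot key dominates traffic, it still maps to one shard. That is why partition key choice matters as much as shard count.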

Complex Stream Processing with Apache Flink

Apache Flink is the workhorse of Gordon’s streaming stack. Deployed via Amazon Kinesis Data Analytics (KDA), since renamed Amazon Managed Service for Apache Flink, or on self-managed ECS/EKS clusters, Flink allows for:

Stateful stream processing using keyed aggregations.

Windowed analytics (sliding, tumbling, session windows).

ML model inference embedded in UDFs or side-output streams.
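To make the tumbling and sliding window types listed above concrete, here is a plain-Python sketch of per-key event counts. It shows the window semantics only and is not Flink's API. The events are made up, and windows are left-closed and start at time 0 or later.

```python
from collections import defaultdict

# (timestamp_seconds, key) pairs; made-up events.
EVENTS = [(1, "a"), (3, "a"), (4, "b"), (6, "a"), (11, "b"), (12, "a")]


def tumbling_counts(events, size):
    """Count events per key in fixed, non-overlapping windows [start, start + size)."""
    counts = defaultdict(int)
    for ts, key in events:
        window_start = (ts // size) * size
        counts[(window_start, key)] += 1
    return dict(counts)


def sliding_counts(events, size, slide):
    """Count events per key in windows of length `size` that start every `slide` seconds."""
    counts = defaultdict(int)
    for ts, key in events:
        # An event belongs to every window start s (a multiple of slide) with s <= ts < s + size.
        start = (ts // slide) * slide
        while start > ts - size:
            if start >= 0:
                counts[(start, key)] += 1
            start -= slide
    return dict(counts)


print(tumbling_counts(EVENTS, size=5))
print(sliding_counts(EVENTS, size=10, slide=5))
```

Tumbling windows assign each event to exactly one window. With sliding windows, an event is counted in `size / slide` overlapping windows.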

Anton R Gordon’s implementation involves deploying TensorFlow Lite or ONNX models within Flink jobs or calling SageMaker endpoints for real-time predictions. He also uses savepoints and checkpoints for fault tolerance and performance tuning.

On-Device Deployment for Edge AI

Not all use cases can wait for roundtrips to the cloud. For industrial automation, retail, and automotive, Gordon extends the pipeline with on-device inference using NVIDIA Jetson, AWS IoT Greengrass, or Coral TPU. These edge devices:

Consume model updates via MQTT or AWS IoT.

Perform low-latency inference directly on sensor input.

Reconnect to central pipelines for data aggregation and model retraining.

Anton stresses the importance of model quantization, pruning, and conversion (e.g., TFLite or TensorRT) to deploy compact, power-efficient models on constrained devices.
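Quantization maps float weights onto 8-bit integers. Below is a minimal plain-Python sketch of asymmetric (affine) uint8 quantization with a single per-tensor scale and zero point: `scale = (max - min) / 255` and `q = round(x / scale) + zero_point`, clamped to [0, 255]. Toolchains such as TFLite and TensorRT apply their own, more elaborate schemes, and the weights here are made up.

```python
def quantize_uint8(values):
    """Affine-quantize floats to uint8; returns (codes, scale, zero_point)."""
    lo = min(min(values), 0.0)  # the range must include 0 so zero is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 if hi > lo else 1.0
    zero_point = round(-lo / scale)
    codes = [max(0, min(255, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize_uint8(codes, scale, zero_point):
    """Map uint8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in codes]


weights = [-1.2, -0.5, 0.0, 0.37, 2.4]
q, scale, zp = quantize_uint8(weights)
restored = dequantize_uint8(q, scale, zp)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, round(scale, 5), zp, round(max_error, 5))
```

Each weight now needs 1 byte instead of 4. In exchange, any value is off by at most about half a quantization step.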

Monitoring, Security & Scalability

To manage the entire lifecycle, Gordon integrates:

AWS CloudWatch and Prometheus/Grafana for observability.

IAM and KMS for secure role-based access and encryption.

Flink Autoscaling and Kinesis shard expansion to handle traffic surges.
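In provisioned mode, each Kinesis shard accepts up to 1 MB/s or 1,000 records/s of writes, so shard expansion starts with a capacity estimate. Below is a back-of-the-envelope sketch. The 20% headroom factor is an arbitrary assumption, and treating 1 MB as 10**6 bytes is a simplification.

```python
import math

# Per-shard write limits for Kinesis Data Streams in provisioned mode.
MAX_WRITE_BYTES_PER_SEC = 1_000_000  # 1 MB/s, simplified here to 10**6 bytes
MAX_WRITE_RECORDS_PER_SEC = 1_000


def shards_needed(records_per_sec: float, avg_record_bytes: float, headroom: float = 1.2) -> int:
    """Estimate the shard count for a peak write rate, with a safety margin."""
    by_records = records_per_sec / MAX_WRITE_RECORDS_PER_SEC
    by_bytes = records_per_sec * avg_record_bytes / MAX_WRITE_BYTES_PER_SEC
    # Whichever limit binds first decides the count; headroom absorbs bursts.
    return max(1, math.ceil(max(by_records, by_bytes) * headroom))


# 5,000 records/s of ~2 KB each is throughput-bound (about 10 MB/s).
print(shards_needed(5_000, 2_000, headroom=1.0))
```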

Conclusion

Anton R Gordon’s real-time streaming AI architecture is a production-ready, scalable framework for ingesting, analyzing, and acting on data in milliseconds. By combining Kinesis, Flink, and edge deployments, he enables AI applications that are not only fast but also smart, secure, and cost-efficient. This blueprint is ideal for businesses looking to modernize their data workflows and unlock the true potential of real-time intelligence.


Top AI Companies in India Driving Business Innovation in 2025

India has rapidly emerged as a global hub for technological innovation, with Artificial Intelligence (AI) at the forefront of this transformation. The AI industry in India is not only growing at an impressive pace but is also playing a crucial role in reshaping how businesses operate. From healthcare to retail, and manufacturing to logistics, AI is redefining productivity, decision-making, and customer engagement.

In 2025, businesses are increasingly turning to AI software companies to stay competitive and agile. Among these, WebSenor stands out as a leader among the top AI companies in India, offering cutting-edge solutions tailored to business needs. With the rising demand for data-driven services, machine learning, and intelligent automation, AI is becoming an indispensable tool for digital transformation.

The Rise of AI in the Indian Business Ecosystem

AI's impact on Indian industries is both broad and deep. In healthcare, AI-powered diagnostics and predictive care models are improving patient outcomes. In manufacturing, predictive maintenance and robotics are reducing downtime. Retail businesses leverage AI for demand forecasting and personalized customer experiences. Financial institutions use it for fraud detection, credit scoring, and customer service automation.

Government support through initiatives like Digital India and NITI Aayog's National Strategy for Artificial Intelligence has created a fertile environment for growth. These programs provide regulatory support, funding, and research infrastructure to boost AI innovation. The growth of AI startups and increased enterprise adoption of AI technologies underline the industry's maturity in 2025.

Criteria for Identifying Top AI Companies in India

Proven Industry Experience and Case Studies

Reliable AI development companies in India showcase a consistent track record of delivering impactful solutions across diverse industries. A dependable partner like WebSenor demonstrates real-world applications through client success stories, such as AI chatbots in eCommerce and predictive analytics tools in healthcare.

Technical Expertise and Innovation Capabilities

Top AI companies possess deep expertise in technologies like natural language processing (NLP), machine learning, deep learning, computer vision, and robotic process automation (RPA). Frameworks like TensorFlow, PyTorch, and OpenAI APIs are common in their development stack. WebSenor continually invests in R&D and stays ahead of trends by exploring emerging AI use cases and developing proprietary tools.

Scalability and Customization of Solutions

AI solutions must be scalable and customizable to fit unique business workflows. WebSenor builds flexible AI architectures that integrate seamlessly with existing enterprise systems. Their agile approach ensures each solution aligns with client objectives and is ready to scale as business needs grow.

Trust, Transparency, and Ethical AI Practices

Responsible AI development requires ethical guidelines and compliance with data privacy laws. The best AI companies in India, like WebSenor, prioritize transparent processes, explainable AI models, and adherence to both local and international data protection regulations.

Top AI Companies in India Leading Innovation in 2025

WebSenor – Delivering Real-World AI Impact

WebSenor has positioned itself as a leading artificial intelligence agency by offering AI services that solve complex business challenges. Their portfolio includes:

AI-powered analytics dashboards for real-time retail insights

Predictive maintenance platforms for manufacturing equipment

Smart chatbots and voice assistants for customer service automation

Fraud detection algorithms for financial transactions

WebSenor's blend of domain knowledge and technical expertise allows them to deliver reliable and high-performance AI applications. Their custom solutions cater to startups, SMEs, and large enterprises looking for cost-effective AI implementation.

Other Prominent AI Companies in India

While WebSenor is a key player, several other notable names are contributing to the AI landscape in India:

TCS and Infosys: Enterprise-grade AI and automation services

Haptik and Yellow.ai: Conversational AI and chatbot platforms

Fractal Analytics: Data-driven AI for decision intelligence

CureMetrix and Qure.ai: AI in healthcare imaging

These companies represent the breadth of expertise in India's artificial intelligence industry and underscore the nation's growing authority in the field.

How AI Is Driving Business Innovation in 2025

Enhancing Operational Efficiency

AI automates repetitive processes, streamlines operations, and reduces costs. In logistics, route optimization algorithms minimize delivery delays. In HR, AI tools handle resume screening and employee engagement analysis.

Empowering Data-Driven Decision Making

AI helps businesses harness data through predictive analytics and real-time dashboards. Companies are using machine learning to identify market trends, optimize inventory, and detect customer churn.

Transforming Customer Experience

AI personalizes digital experiences through recommendation engines, dynamic pricing models, and intelligent customer support. Businesses offering mobile-friendly AI interfaces see higher customer satisfaction and retention.

Enabling Smarter Products and Services

AI is embedded into mobile apps, IoT devices, and enterprise platforms, turning traditional products into intelligent solutions. WebSenor contributes by developing AI-enhanced apps that adapt to user behavior and deliver value continuously.

Why Businesses Choose WebSenor for AI Solutions

Business-Centric Approach

WebSenor begins with in-depth requirement analysis and industry research. Their team works closely with clients to align AI development with strategic goals, focusing on measurable ROI and long-term value.

Scalable, Custom AI Development

Unlike one-size-fits-all solutions, WebSenor delivers tailored AI applications designed for scale and performance. Their offerings suit a range of businesses, from startups in need of rapid MVPs to large corporations requiring full-stack AI infrastructure.

Transparent Delivery and Support

WebSenor follows agile development principles, offering regular progress updates, collaborative feedback loops, and continuous post-deployment support. Their model optimization and maintenance services ensure ongoing performance improvements.

Frequently Asked Questions (FAQ)

What industries does WebSenor serve with AI solutions?

WebSenor works across retail, healthcare, fintech, manufacturing, real estate, and logistics.

How long does it take to implement an AI project?

Depending on complexity, most AI projects range from 6 to 20 weeks from discovery to deployment.

What is the typical cost of AI development in India?

Costs vary by scope and technology used but are generally more affordable than global averages, making India a cost-effective hub for AI outsourcing.

How does WebSenor ensure ethical and secure AI development?

They implement best practices in data governance, ensure compliance with GDPR and Indian IT laws, and build explainable AI models.

Can WebSenor integrate AI with legacy enterprise systems?

Yes. WebSenor's developers are experienced in integrating AI capabilities with legacy ERP, CRM, and database systems.

Conclusion

Artificial Intelligence is not a future promise—it's a present-day business imperative. Companies across India are leveraging AI to stay competitive, cut costs, and innovate faster. Identifying a reliable AI partner is essential for success in this transformative era.

WebSenor stands out among the top AI companies in India for its deep expertise, ethical practices, and business-focused solutions. If you're looking for an artificial intelligence agency that can help your business thrive in 2025 and beyond, WebSenor is the trusted partner you need.

#TopAICompaniesIndia#AIinBusiness#ArtificialIntelligenceIndia#BestAICompanies#AIStartupsIndia#WebSenorAI#MachineLearningIndia#AIForEnterprises#AIInnovation2025


Day 13 _ What is Keras

Understanding Keras and Its Role in Deep Learning

What is Keras? Keras is an open-source software library that provides a Python interface for artificial neural networks. It serves as a high-level API, simplifying the process of building and training deep learning models. Developed by François Chollet, a researcher at Google, Keras was first…
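A minimal example of the high-level API described above, assuming TensorFlow 2.x is installed (it ships Keras as `tf.keras`). The layer sizes are arbitrary, and the model is untrained, so its predictions are not meaningful. The example only shows how few lines a full network takes.

```python
import numpy as np
from tensorflow import keras

# A tiny binary classifier over 4 input features; sizes are arbitrary.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])

# compile() attaches the optimizer, loss, and metrics that fit() will use.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.summary()

# Forward pass on two random rows: one probability per row.
x = np.random.default_rng(0).normal(size=(2, 4)).astype("float32")
probs = model.predict(x, verbose=0)
print(probs.shape)
```

Training would be a single `model.fit(features, labels, epochs=...)` call on real data.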


The Best Open-Source Tools for Data Science in 2025

Data science in 2025 is thriving, driven by a robust ecosystem of open-source tools that empower professionals to extract insights, build predictive models, and deploy data-driven solutions at scale. This year, the landscape is more dynamic than ever, with established favorites and emerging contenders shaping how data scientists work. Here’s an in-depth look at the best open-source tools that are defining data science in 2025.

1. Python: The Universal Language of Data Science

Python remains the cornerstone of data science. Its intuitive syntax, extensive libraries, and active community make it the go-to language for everything from data wrangling to deep learning. Libraries such as NumPy and Pandas streamline numerical computations and data manipulation, while scikit-learn is the gold standard for classical machine learning tasks.

NumPy: Efficient array operations and mathematical functions.

Pandas: Powerful data structures (DataFrames) for cleaning, transforming, and analyzing structured data.

scikit-learn: Comprehensive suite for classification, regression, clustering, and model evaluation.

Python’s popularity is reflected in the 2025 Stack Overflow Developer Survey, with 53% of developers using it for data projects.

2. R and RStudio: Statistical Powerhouses

R continues to shine in academia and industries where statistical rigor is paramount. The RStudio IDE enhances productivity with features for scripting, debugging, and visualization. R’s package ecosystem—especially tidyverse for data manipulation and ggplot2 for visualization—remains unmatched for statistical analysis and custom plotting.

Shiny: Build interactive web applications directly from R.

CRAN: Over 18,000 packages for every conceivable statistical need.

R is favored by 36% of users, especially for advanced analytics and research.

3. Jupyter Notebooks and JupyterLab: Interactive Exploration

Jupyter Notebooks are indispensable for prototyping, sharing, and documenting data science workflows. They support live code (Python, R, Julia, and more), visualizations, and narrative text in a single document. JupyterLab, the next-generation interface, offers enhanced collaboration and modularity.

Over 15 million notebooks hosted as of 2025, with 80% of data analysts using them regularly.

4. Apache Spark: Big Data at Lightning Speed

As data volumes grow, Apache Spark stands out for its ability to process massive datasets rapidly, both in batch and real-time. Spark’s distributed architecture, support for SQL, machine learning (MLlib), and compatibility with Python, R, Scala, and Java make it a staple for big data analytics.

65% increase in Spark adoption since 2023, reflecting its scalability and performance.

5. TensorFlow and PyTorch: Deep Learning Titans

For machine learning and AI, TensorFlow and PyTorch dominate. Both offer flexible APIs for building and training neural networks, with strong community support and integration with cloud platforms.

TensorFlow: Preferred for production-grade models and scalability; used by over 33% of ML professionals.

PyTorch: Valued for its dynamic computation graph and ease of experimentation, especially in research settings.

6. Data Visualization: Plotly, D3.js, and Apache Superset

Effective data storytelling relies on compelling visualizations:

Plotly: Python-based, supports interactive and publication-quality charts; easy for both static and dynamic visualizations.

D3.js: JavaScript library for highly customizable, web-based visualizations; ideal for specialists seeking full control.

Apache Superset: Open-source dashboarding platform for interactive, scalable visual analytics; increasingly adopted for enterprise BI.

Tableau Public, though not open-source, is also popular for sharing interactive visualizations with a broad audience.

7. Pandas: The Data Wrangling Workhorse

Pandas remains the backbone of data manipulation in Python, powering up to 90% of data wrangling tasks. Its DataFrame structure simplifies complex operations, making it essential for cleaning, transforming, and analyzing large datasets.

8. Scikit-learn: Machine Learning Made Simple

scikit-learn is the default choice for classical machine learning. Its consistent API, extensive documentation, and wide range of algorithms make it ideal for tasks such as classification, regression, clustering, and model validation.
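The consistent API is easiest to see in a pipeline, sketched here assuming scikit-learn is installed. It uses the bundled Iris dataset, so no download is needed. Every step shares `fit`/`predict`, a pipeline chains those steps into a single estimator, and `cross_val_score` handles model validation.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Scaling and classification combined into one estimator with fit/predict.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# 5-fold cross-validation refits the whole pipeline on each training split,
# so the scaler never sees the held-out fold.
scores = cross_val_score(model, X, y, cv=5)
print(f"mean accuracy: {scores.mean():.3f} (+/- {scores.std():.3f})")
```

To swap in a different algorithm, you only replace `LogisticRegression` with another estimator. The rest of the code stays the same.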

9. Apache Airflow: Workflow Orchestration

As data pipelines become more complex, Apache Airflow has emerged as the go-to tool for workflow automation and orchestration. Its user-friendly interface and scalability have driven a 35% surge in adoption among data engineers in the past year.
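Airflow describes a pipeline as a directed acyclic graph (DAG) of tasks, and each task runs once its upstream tasks have finished. The sketch below illustrates that ordering idea with the standard library's `graphlib`, not Airflow's API. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"validate"},
    "train_model": {"transform"},
    "build_report": {"transform"},
    "publish": {"train_model", "build_report"},
}

sorter = TopologicalSorter(pipeline)
sorter.prepare()
stages = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # tasks whose dependencies are all done
    stages.append(ready)                # tasks in one stage could run in parallel
    sorter.done(*ready)

for i, stage in enumerate(stages, start=1):
    print(f"stage {i}: {', '.join(stage)}")
```

An orchestrator adds scheduling, retries, and monitoring on top of this ordering.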

10. MLflow: Model Management and Experiment Tracking

MLflow streamlines the machine learning lifecycle, offering tools for experiment tracking, model packaging, and deployment. Over 60% of ML engineers use MLflow for its integration capabilities and ease of use in production environments.

11. Docker and Kubernetes: Reproducibility and Scalability

Containerization with Docker and orchestration via Kubernetes ensure that data science applications run consistently across environments. These tools are now standard for deploying models and scaling data-driven services in production.

12. Emerging Contenders: Streamlit and More

Streamlit: Rapidly build and deploy interactive data apps with minimal code, gaining popularity for internal dashboards and quick prototypes.

Redash: SQL-based visualization and dashboarding tool, ideal for teams needing quick insights from databases.

Kibana: Real-time data exploration and monitoring, especially for log analytics and anomaly detection.

Conclusion: The Open-Source Advantage in 2025

Open-source tools continue to drive innovation in data science, making advanced analytics accessible, scalable, and collaborative. Mastery of these tools is not just a technical advantage—it’s essential for staying competitive in a rapidly evolving field. Whether you’re a beginner or a seasoned professional, leveraging this ecosystem will unlock new possibilities and accelerate your journey from raw data to actionable insight.

The future of data science is open, and in 2025, these tools are your ticket to building smarter, faster, and more impactful solutions.

#python#r#rstudio#jupyternotebook#jupyterlab#apachespark#tensorflow#pytorch#plotly#d3js#apachesuperset#pandas#scikitlearn#apacheairflow#mlflow#docker#kubernetes#streamlit#redash#kibana#nschool academy#datascience


Understanding AI Architectures: A Guide by an AI Development Company in UAE

In a world where screens rule our day, Artificial Intelligence (AI) quietly drives most of the online tools we now take for granted. Whether it's Netflix recommending the next film, a smartphone assistant setting reminders, or stores guessing what shirt you might buy next, the trick behind the curtain is the framework: the architecture.

Knowing how that framework works matters to more than just coders and CTOs; it matters to any leader who dreams of putting AI to work. As a top AI company based in the UAE, we think it is time to untangle the idea of AI architecture, explain why it is important, and show how companies here can win by picking the right setup for their projects.

What Is AI Architecture?

AI architecture is simply the plan that lines up all the parts of an AI system and shows how they talk to one another. Think of it as the blueprint for a house; once the beams are in place, the system knows where to read data, learn trends, decide on an action, and respond to people or other software.

A solid architecture brings four quick wins:

speed: data is processed fast

growth: the platform scales when new tasks arrive

trust: sensitive details are kept safe

harmony: it plugs into tools the business already uses

Because goals, data amounts, and launch settings vary, every model, whether machine learning, deep learning, NLP, or something else, needs its own twist on that blueprint.

Core Layers of AI Architecture

Whether you're putting together a chatbot, a movie recommender, or a smart analytics dashboard, most projects rest on four basic layers.

1. Data Layer

Every AI starts with data, so this layer is ground zero. It handles:

Input sources, both structured tables and messy text

Storage options, from classic databases to modern data lakes

Cleaning tools that tidy and sort raw bits into usable sets

In the UAE, firms juggle Arabic, English, and several dialects across fields like finance and tourism, so keeping fast, local data clean can make or break a project.

2. Modelling Layer

Next up, the brains of the operation live here. Data scientists and engineers use this stage to craft, teach, and test their models.

Major pieces include:

Machine-learning algorithms, such as SVMs, random forests, or gradient boosting

Deep-learning networks, like CNNs for images or Transformers for text

Training platforms, with tools from TensorFlow, Keras, or PyTorch

An AI shop in Dubai or Abu Dhabi tunes this layer to local patterns, legal rules, and industry demands, whether that's AML flags for banks, fast scans for hospitals, or fair-value estimates for buyers.

3. Serving Layer

After the models finish training, they must be put into action and made available to users or business tools. This step includes:

APIs that let other software talk to the model

Places to run the model (on-site, in the cloud, or a mix)

Speed tweaks so answers come back fast

In a fast-moving market like the UAE, especially in Dubai and Abu Dhabi, a slow reply can turn customers away. That is what makes this layer so important.
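Here is a minimal sketch of the serving layer's "APIs that let other software talk to the model", written with the standard library only. The `/predict` route, the JSON shape, and the toy linear model are all hypothetical. Production systems would typically use a dedicated serving framework instead.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in model: a fixed linear score thresholded at 0."""
    weights = [0.4, -0.2, 0.1]
    score = sum(w * x for w, x in zip(weights, features))
    return {"score": round(score, 4), "label": int(score > 0)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        body = json.dumps(predict(payload["features"])).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


# Port 0 asks the OS for any free port; the server runs in a background thread.
server = HTTPServer(("127.0.0.1", 0), PredictHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

request = urllib.request.Request(
    f"http://127.0.0.1:{server.server_port}/predict",
    data=json.dumps({"features": [1.0, 2.0, 3.0]}).encode("utf-8"),
    headers={"Content-Type": "application/json"},
)
with urllib.request.urlopen(request) as response:
    result = json.loads(response.read())
print(result)

server.shutdown()
server.server_close()
```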

4. Feedback and Monitoring Layer

AI systems are not plug-and-play for life; they learn, drift, and need care. This layer keeps things fresh with:

Watching how the model performs

Collecting feedback from real-world results

Re-training and rolling out new versions

Without that routine check-up, models can grow stale, skewed, or just plain useless.
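One common way to watch for drift is the population stability index (PSI). It compares how a feature's values fall into bins in a baseline sample and in recent data: PSI = sum over bins of (a - e) * ln(a / e), where e and a are the baseline and recent bin proportions. Values above roughly 0.25 are often treated as significant drift, but that threshold is a rule of thumb, not a standard. The samples and bin edges below are made up.

```python
import bisect
import math


def bin_proportions(sample, edges):
    """Fraction of the sample in each bin; `edges` are the interior cut points."""
    counts = [0] * (len(edges) + 1)
    for value in sample:
        counts[bisect.bisect_right(edges, value)] += 1
    # A small floor avoids log(0) and division by zero for empty bins.
    return [max(c / len(sample), 1e-6) for c in counts]


def population_stability_index(baseline, current, edges):
    """PSI between a baseline sample and a current sample over shared bins."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
shifted = [0.5, 0.6, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95]
edges = [0.25, 0.5, 0.75]  # 4 bins: below 0.25, [0.25, 0.5), [0.5, 0.75), 0.75 and up

print(round(population_stability_index(baseline, baseline, edges), 4))  # no drift
print(round(population_stability_index(baseline, shifted, edges), 4))   # large drift
```

A monitoring job could compute this per feature on a schedule and trigger retraining when it crosses the chosen threshold.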

Popular AI Architectures in Practice:

Let's highlight a few AI setups that companies across the UAE already count on.

1. Client-Server AI Architecture

Perfect for small and mid-sized firms. The model sits on a server, and the client zips data back and forth through an API.

Use Case: Retail chains analyze shopper behavior to better place stock.

2. Cloud-Native AI Architecture

Built straight into big clouds such as AWS, Azure, or Google Cloud. It scales up easily and can be deployed with a few clicks.

Use Case: Fintech firms sifting through millions of records to spot fraud and score loans.

3. Edge AI Architecture

Edge AI moves brainpower right onto the gadget itself instead of sending every bit of data to faraway cloud servers. This design works well when speed is vital or when sensitive info can't leave the device.

Use Case: Think of smart cameras scanning mall hallways or airport lounges in the UAE, spotting unusual behavior while keeping footage onsite.

4. Hybrid AI Architecture

Hybrid AI blends edge smarts with cloud muscle, letting apps react quickly on a device but tap the cloud for heavy lifting when needed.

Use Case: A medical app that checks your heart rate and ECG in real time but uploads that data so doctors can run big-pattern analysis later.

Challenges to Consider While Designing AI Architectures

Building a solid AI backbone is not as simple as plug-and-play. Here are key hurdles firms in the UAE often encounter.

Data Privacy Regulations

With the UAE tightening digital-security rules, models must meet the Personal Data Protection Law or face fines.

Infrastructure Costs

Top-notch GPUs, fast storage, and chilled racks add up fast. A skilled UAE partner will size the setup wisely.

Localization and Multilingual Support

Arabic-English chatbots have to handle dialects and culture cues, which means fresh, on-the-ground training, not off-the-shelf data.

Talent Availability

Brilliant models need more than code; they rely on data engineers, AI researchers, DevOps pros, and industry insiders speaking the same language.

How Can UAE Businesses Profit from Custom AI Setups?

Across the UAE, artificial intelligence is spreading quickly, from online government services to real-estate apps and tourism chatbots. Picking or creating a custom AI setup delivers:

Faster decisions thanks to real-time data analysis

Better customer support through smart, automated replies

Lower costs via predictive maintenance and lean processes

Higher revenue by personalizing each user's journey

Partnering with a seasoned local AI firm gives you technical skill, market know-how, rule-following advice, and lasting help as your project grows.


AI Product Development: Building the Smart Solutions of Tomorrow

Artificial Intelligence (AI) is no longer a futuristic idea — it’s here, transforming how businesses operate, how users interact with products, and how industries deliver value. From automating workflows to enabling predictive insights, AI product development is now a cornerstone of modern digital innovation.

Companies across sectors are realizing that integrating AI into their digital offerings isn’t just a competitive advantage — it’s becoming a necessity. If you’re thinking about building intelligent products, this is the perfect time to act.

Let’s dive into what AI product development involves, why it matters, and how to approach it effectively.

What is AI Product Development?

AI product development is the process of designing, building, and scaling digital products powered by artificial intelligence. These products are capable of learning from data, adapting over time, and automating tasks that traditionally required human input.

Common examples include:

Personalized recommendation engines (e.g., Netflix, Amazon)

Chatbots and virtual assistants

Predictive analytics platforms

AI-driven diagnostics in healthcare

Intelligent process automation in enterprise SaaS tools

The goal is to embed intelligence into the product’s core, making it smarter, more efficient, and more valuable to users.

Why Businesses are Investing in AI Products

Here’s why AI product development is surging across every industry:

Enhanced User Experience: AI can tailor interfaces, suggestions, and features to user behavior.

Increased Efficiency: Automating repetitive tasks saves time and reduces human error.

Better Decision-Making: Predictive analytics and insights help businesses make informed choices.

Cost Savings: AI can reduce the need for large manual teams over time.

Competitive Edge: Products that adapt and evolve with users outperform static alternatives.

Incorporating AI doesn’t just make your product better — it redefines what’s possible.

Key Steps in AI Product Development

Building an AI-driven product isn’t just about coding a machine learning model. It’s a structured, iterative process that includes:

1. Problem Identification

Every great AI product starts with a real-world problem. Whether it’s automating customer support or predicting user churn, the goal must be clearly defined.

2. Data Strategy

AI runs on data. That means collecting, cleaning, labeling, and organizing datasets is critical. Without quality data, even the best algorithms fail.
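To make the cleaning step concrete, here is a minimal Python sketch. It assumes a hypothetical record format of `(user_id, text, label)` tuples, where a missing text or label is `None`. The sketch drops rows that can't be used for supervised training, normalizes the text, and removes exact duplicates. The `clean_records` name is illustrative, and real pipelines usually add validation, outlier handling, and a labeling workflow on top of this.

```python
from typing import Optional

# Hypothetical raw record: (user_id, text, label); None marks a missing value
RawRecord = tuple[str, Optional[str], Optional[int]]
CleanRecord = tuple[str, str, int]


def clean_records(records: list[RawRecord]) -> list[CleanRecord]:
    """Drop unlabeled or empty rows, normalize text, and remove duplicates."""
    seen: set[CleanRecord] = set()
    cleaned: list[CleanRecord] = []
    for user_id, text, label in records:
        if label is None or text is None:
            continue  # incomplete rows can't be used for supervised training
        norm = " ".join(text.lower().split())  # lowercase and collapse whitespace
        if not norm:
            continue  # text was only whitespace
        row = (user_id.strip(), norm, label)
        if row in seen:
            continue  # exact duplicate after normalization
        seen.add(row)
        cleaned.append(row)
    return cleaned
```

Normalizing before deduplicating matters: "  Great   Product " and "great product" only collapse into one row after the text is normalized.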

3. Model Design & Training

This step involves choosing a suitable model for the task (e.g., linear or logistic regression, decision trees, neural networks) and training it on historical data. The model must then be evaluated on held-out data for accuracy and checked for fairness and bias.
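As an illustration of training plus evaluation, the sketch below fits a logistic regression classifier with plain batch gradient descent. It uses only the Python standard library, so no framework API is assumed. It reports accuracy on held-out data, plus accuracy broken down by a hypothetical group attribute as one simple bias check. The function names, learning rate, and epoch count are illustrative assumptions. In practice you would more likely use a framework such as TensorFlow or PyTorch.

```python
import math

Vector = list[float]


def train_logistic(X: list[Vector], y: list[int], lr: float = 0.1, epochs: int = 500) -> tuple[Vector, float]:
    """Fit logistic regression weights and bias with batch gradient descent."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            z = sum(wj * xj for wj, xj in zip(w, xi)) + b
            err = 1.0 / (1.0 + math.exp(-z)) - yi  # predicted probability minus label
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step against the average gradient of the log loss
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predict(w: Vector, b: float, x: Vector) -> int:
    # sigmoid(z) >= 0.5 exactly when z >= 0, so compare the linear score to 0
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else 0


def accuracy(w: Vector, b: float, X: list[Vector], y: list[int]) -> float:
    return sum(predict(w, b, xi) == yi for xi, yi in zip(X, y)) / len(y)


def accuracy_by_group(w: Vector, b: float, X: list[Vector], y: list[int], groups: list[str]) -> dict[str, float]:
    """Accuracy per group label; large gaps between groups are a simple bias signal."""
    result: dict[str, float] = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        result[g] = accuracy(w, b, [X[i] for i in idx], [y[i] for i in idx])
    return result
```

Per-group accuracy is only a first-pass check. A gap between groups signals that the data or model deserves a closer look, but matching numbers do not prove the model is fair.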

4. Product Integration

AI doesn’t operate in isolation. It needs to be integrated into a product in a way that’s intuitive and valuable for the user — whether it's real-time suggestions or behind-the-scenes automation.

5. Testing & Iteration

AI products must be constantly tested in real-world environments and retrained as new data comes in. This ensures they remain accurate and effective over time.
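One way to decide when retraining is due is to track accuracy on recent live predictions once their true outcomes become known. The sketch below is a minimal, hypothetical `AccuracyMonitor` class. The window size and threshold are placeholder assumptions, not recommended values. Production systems often also watch for shifts in the input data itself, not just accuracy.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Rolling accuracy over the most recent labeled predictions (hypothetical helper)."""

    def __init__(self, window: int = 500, threshold: float = 0.9) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        # True = prediction matched the actual outcome; old entries fall off automatically
        self.outcomes: deque = deque(maxlen=window)
        self.threshold = threshold

    def record(self, prediction: int, actual: int) -> None:
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None  # nothing observed yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Wait until the window is full so a handful of early errors can't trigger a retrain
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.threshold
```

Using a fixed-size `deque` keeps the check focused on recent behavior. An error from months ago no longer counts once newer outcomes have pushed it out of the window.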

6. Scaling & Maintenance

Once proven, the model and infrastructure need to scale. This includes managing compute resources, optimizing APIs, and maintaining performance.

Who Should Build Your AI Product?

To succeed, businesses often partner with specialists. Whether you're building in-house or outsourcing, you’ll need to hire developers with experience in:

Machine learning (ML)

Natural Language Processing (NLP)

Data engineering

Cloud-based AI services (AWS, Azure, GCP)

Python and frameworks such as TensorFlow and PyTorch

But beyond technical expertise, your team must understand product thinking — how to align AI capabilities with user needs.

That’s why many companies turn to SaaS experts who can combine AI with a product-led growth mindset. In SaaS platforms especially, AI adds value through automation, personalization, and customer insights.

AI + Web3: A New Frontier

If you’re working at the edge of innovation, consider combining AI with decentralized technologies. A future-forward Web3 development company can help you integrate AI into blockchain-based apps.

Some exciting AI + Web3 use cases include:

Decentralized autonomous organizations (DAOs) that evolve using AI logic

AI-driven NFT pricing or authentication

Smart contracts whose parameters adapt to on-chain behavior, driven by AI models that typically run off-chain

Privacy-preserving machine learning using decentralized storage

This intersection offers businesses the ability to create trustless, intelligent systems — a true game-changer.

How AI Transforms SaaS Platforms

For SaaS companies, AI is not a feature — it’s becoming the foundation. Here’s how it changes the game:

Automated Customer Support: AI chatbots can resolve up to 80% of Tier 1 queries.

Churn Prediction: Identify at-risk users and re-engage them before it’s too late.

Dynamic Pricing: Adjust pricing based on usage, demand, or user profiles.

Smart Onboarding: AI can personalize tutorials and walkthroughs for each user.

Data-driven Feature Development: Understand what features users want before they ask.

If you’re already a SaaS provider or plan to become one, AI integration is the next logical step. Working with SaaS experts who understand AI workflows can significantly shorten your go-to-market timeline.

Real-World Examples of AI Products

Grammarly: Uses NLP to improve writing suggestions.

Spotify: Combines AI and behavioral data for music recommendations.

Notion AI: Embeds generative AI for writing, summarizing, and planning.

Zendesk: Automates customer service with AI bots and smart routing.

These companies didn’t just adopt AI — they built it into the core value of their platforms.

Final Thoughts: Build Smarter, Not Just Faster

AI isn’t just a trend—it’s the future of software. Whether you're improving internal workflows or building customer-facing platforms, AI product development helps you create experiences that are smart, scalable, and user-first.

The success of your AI journey depends not just on technology but on strategy, talent, and execution. Whether you’re launching an AI-powered SaaS tool, a decentralized app, or a smart enterprise solution, now is the time to invest in intelligent innovation.

Ready to build an AI-powered product that stands out in today’s crowded market? AI product development done right can give you that edge.


How Generative AI Training in Bengaluru Can Boost Your Tech Career

In recent years, Generative AI has emerged as one of the most disruptive technologies transforming industries across the globe. From personalized content creation to AI-driven design, code generation, and even advanced medical imaging—Generative AI is revolutionizing how we work, interact, and innovate.

And if you are a tech enthusiast or working professional based in India’s Silicon Valley, you’re in the perfect place to jump into this exciting field. Generative AI Training in Bengaluru offers a unique blend of industry exposure, expert-led education, and career acceleration opportunities. This blog will guide you through the benefits of pursuing generative AI training in Bengaluru and how it can supercharge your career in the tech domain.

What is Generative AI?

Before diving into the career benefits, let’s define what Generative AI actually is. Generative AI refers to a class of artificial intelligence models capable of generating new content—text, images, audio, video, or code—based on patterns learned from existing data.

Popular tools and models include:

ChatGPT (OpenAI) – for conversational AI and text generation

DALL·E & Midjourney – for AI-generated images

Codex & GitHub Copilot – for AI-assisted programming

Runway ML & Sora – for generative video

Stable Diffusion – for open-source image generation

Industries are actively seeking professionals who can understand, implement, and innovate with these tools. That’s where Generative AI training comes in.

Why Choose Bengaluru for Generative AI Training?

Bengaluru is more than just a city; it’s the beating heart of India’s tech ecosystem. Here’s why enrolling in a Generative AI training program in Bengaluru can be a game-changer:

1. Home to India’s Leading Tech Companies

From Infosys and Wipro to Google, Microsoft, and OpenAI-partnered startups—Bengaluru hosts a vast number of AI-focused organizations. Training in the city means you’re close to the action, with easier access to internships, workshops, and networking events.

2. Cutting-Edge Training Institutes

Bengaluru boasts some of the top AI and ML training providers in India. These institutions offer hands-on experience with real-world projects, industry mentorship, and certifications that are recognized globally.

3. Startup Ecosystem

With a thriving startup culture, Bengaluru is a breeding ground for innovation. After completing your training, you’ll find ample opportunities in early-stage ventures working on next-gen generative AI products.

4. Tech Community and Events

The city is buzzing with meetups, hackathons, AI summits, and conferences. This vibrant tech community provides a great platform to learn, collaborate, and grow.

What Does Generative AI Training in Bengaluru Include?

Most leading programs in Bengaluru offer comprehensive coverage of the following:

✅ Core AI and ML Concepts

Understanding the foundational building blocks—supervised/unsupervised learning, deep learning, and neural networks.

✅ Generative Models

Focused training on GANs (Generative Adversarial Networks), VAEs (Variational Autoencoders), and Diffusion Models.

✅ Large Language Models (LLMs)

Working with GPT-3.5, GPT-4, Claude, LLaMA, and other state-of-the-art transformer-based models.

✅ Prompt Engineering

Learning the art and science of crafting prompts to generate better, controlled outputs from AI models.
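A common prompt-engineering pattern is the few-shot prompt: a clear instruction, an explicit output format, and a couple of worked examples placed before the real input. The helper below is a hypothetical sketch that only assembles the prompt text. It does not call any model API, so it can be paired with whichever LLM client a course or project uses. The `build_prompt` name and the layout of the prompt are illustrative assumptions.

```python
def build_prompt(task: str, examples: list[tuple[str, str]], user_input: str, output_format: str) -> str:
    """Assemble a few-shot prompt: instruction, format constraint, worked examples, then the new input."""
    lines = [f"Task: {task}", f"Respond only in this format: {output_format}", ""]
    for i, (example_in, example_out) in enumerate(examples, start=1):
        lines += [f"Example {i} input: {example_in}", f"Example {i} output: {example_out}", ""]
    # End with an open "Output:" slot so the model continues in the demonstrated pattern
    lines += [f"Input: {user_input}", "Output:"]
    return "\n".join(lines)


prompt = build_prompt(
    "Classify the sentiment of a product review.",
    [("I love it", "positive"), ("Too slow", "negative")],
    "Works great",
    "one word, positive or negative",
)
```

Stating the output format and showing examples both constrain the response, which makes the output easier to parse and check in an application.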

✅ Toolkits and Platforms

Hands-on experience with tools like OpenAI APIs, Hugging Face, TensorFlow, PyTorch, GitHub Copilot, and LangChain.

✅ Capstone Projects

End-to-end implementation of real-world generative AI projects in areas like healthcare, e-commerce, finance, and creative media.

How Generative AI Training in Bengaluru Can Boost Your Tech Career

Let’s get to the heart of it—how can this training actually boost your career?

1. Future-Proof Your Skill Set

As automation and AI continue to evolve, companies are constantly seeking professionals with AI-forward skills. Generative AI is at the forefront, and training in it makes you an in-demand candidate across industries.

2. Land High-Paying Roles

According to industry data, professionals with Generative AI skills are commanding salaries 20-30% higher than those in traditional tech roles. Roles on the rise include:

AI Product Engineer

Prompt Engineer

Machine Learning Scientist

Generative AI Researcher

AI Consultant

LLM Application Developer

3. Open Doors to Global Opportunities

With Bengaluru's global tech footprint, professionals trained here can easily transition to remote roles, international placements, or work with multinational companies using generative AI.

4. Enhance Your Innovation Quotient

Generative AI unlocks creativity in code, design, storytelling, and more. Whether you're building an app, automating a workflow, or launching a startup, these skills amplify your ability to innovate.

5. Accelerate Your Freelancing or Startup Journey

Many tech professionals in Bengaluru are becoming solopreneurs and startup founders. With generative AI, you can quickly prototype MVPs, create content, or offer freelance services in writing, video creation, or coding, all powered by AI.

Who Should Enroll in a Generative AI Training Program?

A Generative AI training program in Bengaluru is ideal for:

Software Developers & Engineers – who want to transition into AI-focused roles.

Data Scientists – looking to expand their capabilities in creative and generative models.

Students & Graduates – aiming for a future-proof tech career.

Designers & Content Creators – interested in AI-assisted creation.

Entrepreneurs & Product Managers – who wish to integrate AI into their offerings.

IT Professionals – looking to reskill or upskill for better job roles.

Why Choose a Professional Institute?

While there are many online courses available, a classroom or hybrid program in Bengaluru often provides:

Mentorship from industry experts

Collaborative learning environment

Real-time feedback and doubt-solving

Placement support and career counseling

Live projects using real-world datasets

One such reputable provider is the Boston Institute of Analytics, which offers industry-relevant, project-based Generative AI training programs in Bengaluru. Their curriculum is tailored to meet evolving market demands, ensuring students graduate job-ready.

Final Thoughts

In today’s fast-evolving tech landscape, staying ahead means staying adaptable, and Generative AI is one of the best skills for future-proofing your career. Whether you want to break into AI development, build cutting-edge products, or simply enhance your tech toolkit, enrolling in a Generative AI training program in Bengaluru can set you on a path of accelerated growth and innovation.

Bengaluru’s thriving tech ecosystem, access to global companies, and expert-led training institutions make it the ideal place to begin your generative AI journey. Don’t wait for the future—start building it now with the right training, tools, and support.

