#the ethics behind ai

Text

As AI technology advances and becomes more integrated into various aspects of society, ethical considerations have become increasingly important, and concerns are being raised about its impact on society. In this video, we'll explore the ethics behind AI and discuss how we can ensure fairness and privacy in the age of AI.

#theethicsbehindai#ensuringfairnessprivacyandbias#limitlesstech#ai#artificialintelligence#aiethics#machinelearning#aitechnology#ethicsofaitechnology#ethicalartificialintelligence#aisystem

The Ethics Behind AI: Ensuring Fairness, Privacy, and Bias

#the ethics behind ai#ensuring fairness privacy and bias#ai ethics#artificial intelligence#ai#machine learning#what is ai ethics#the ethics of artificial intelligence#definition of ai ethics#ai technology#ai fairness#ai privacy#ai bias#ethics of ai technology#ethical concerns of ai#ethical artificial intelligence#LimitLess Tech 888#responsible ai#what is ai bias#the truth about ai and ethics#ai system#ethics of artificial intelligence#ai ethical issues

0 notes

Text

Artificial Intelligence has emerged as a transformative technology, revolutionizing industries and societies across the globe. From personalized recommendations to autonomous vehicles, AI systems are becoming deeply integrated into our daily lives. However, this rapid advancement also brings forth a host of ethical concerns that demand careful consideration. Among these concerns, ensuring fairness and privacy and mitigating bias are paramount.

Ensuring fairness in AI systems is a central challenge. AI algorithms, often trained on large datasets, can perpetuate and even exacerbate existing social biases. This can manifest in various ways, from biased hiring decisions in AI-driven recruitment tools to discriminatory loan approvals in automated financial systems. Achieving fairness involves developing algorithms that are not only technically proficient but also ethically sound. It requires recognizing biases, both subtle and overt, in data and implementing measures to mitigate them.
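Recognizing bias usually starts with measuring it. Below is a minimal sketch, assuming an automated system's decisions are logged as hypothetical (group, approved) pairs: it computes each group's approval rate and the gap between the highest and lowest rate, a simple check known as demographic parity. The data and function names are made up for illustration, and demographic parity is only one of several competing fairness definitions; a large gap flags something to investigate rather than proving discrimination.

```python
# A minimal fairness audit sketch: compares approval rates across groups
# (demographic parity). The records below are made-up illustrative data.
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of positive decisions for each group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is a bool produced by some automated system.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions):
    """Largest difference in approval rate between any two groups (0 = parity)."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions: (applicant group, approved?)
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(approval_rates(sample))          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(sample))  # 0.5
```

A gap of 0.5 here would be a prompt to look at the training data and features behind the decisions, which is where the mitigation work described above begins.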

AI systems often require access to vast amounts of personal data to function effectively. This raises profound privacy concerns, as the misuse or mishandling of such data can lead to surveillance, identity theft, and unauthorized access to sensitive information. Striking a balance between data collection for AI improvement and safeguarding individual privacy is a significant ethical challenge. The implementation of robust data anonymization techniques, data encryption, and the principle of data minimization are vital in ensuring that individuals' privacy rights are respected in the age of AI.
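To make data minimization concrete, here is a minimal sketch, assuming a hypothetical user record and a hypothetical allow-list of fields a model needs. It drops everything else and replaces the direct identifier with a keyed HMAC-SHA256 token using Python's standard `hmac` and `hashlib` modules. This is pseudonymization, not full anonymization: the remaining fields could still re-identify someone in combination, and encryption of stored data is a separate step not shown here.

```python
# Sketch of data minimization plus keyed pseudonymization before records
# are used for AI training. Field names and the key are hypothetical.
import hashlib
import hmac

# Only the fields the model actually needs are kept (data minimization).
ALLOWED_FIELDS = {"age_band", "region", "purchase_count"}

# In practice this secret would live in a key-management system, not in code.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymize(user_id: str) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 digest.

    Without the key, the token cannot easily be linked back to the user,
    but the same user always maps to the same token, so records still join.
    """
    return hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict) -> dict:
    """Drop every field not on the allow-list and pseudonymize the user ID."""
    cleaned = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    cleaned["user_token"] = pseudonymize(record["user_id"])
    return cleaned


raw = {
    "user_id": "alice@example.com",
    "full_name": "Alice Example",
    "home_address": "1 Main St",
    "age_band": "30-39",
    "region": "EU",
    "purchase_count": 12,
}
print(minimize(raw))
```

The name and address never reach the training set at all, which is the point of minimization: data that was never collected cannot be leaked or misused.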

The ethical underpinnings of AI demand transparency and accountability from developers and organizations. AI systems must provide clear explanations for their decisions, especially when those decisions affect individuals' lives. "Black box" AI, whose decisions come without understandable reasons, raises concerns about the potential for unchecked power and biased outcomes. Using interpretable models and explainability techniques can help build trust and ensure accountability.
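Interpretability is easiest to see with a model simple enough to explain itself. Below is a minimal sketch, assuming a hypothetical loan-scoring model with made-up weights: a linear score where each feature's contribution is reported alongside the decision, so an applicant can see which factors counted for and against them. It does not describe any particular real system; explainability tools for complex models try to approximate this kind of per-feature breakdown.

```python
# Sketch of an interpretable model: a linear score whose decision can be
# explained term by term. Weights, features, and threshold are hypothetical.

WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.5}
BIAS = -1.0
THRESHOLD = 0.0


def explain(applicant: dict):
    """Return (score, approved, contributions) for one applicant.

    Each contribution is weight * feature value, so the contributions plus
    the bias add up exactly to the score: nothing is hidden.
    """
    contributions = {f: w * applicant[f] for f, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    return score, score >= THRESHOLD, contributions


score, approved, parts = explain({"income_k": 60, "debt_ratio": 0.5, "late_payments": 2})
print(f"score={score:.2f} approved={approved}")
# List the factors from most negative to most positive effect.
for feature, value in sorted(parts.items(), key=lambda kv: kv[1]):
    print(f"  {feature}: {value:+.2f}")
```

For this made-up applicant the debt ratio is the largest factor against approval, which is exactly the kind of reason a black-box model cannot give directly.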

0 notes

Text

Interestingly enough, I think calling large language models "a.i." does too much to humanize them. Because sci-fi literature has built up a.i. as living beings with actual working thought processes, deserving of the classification of person (Bicentennial Man, etc.), a lot of people want to view a.i. as entities. And corporations pushing a.i. can take advantage of your soft feelings toward it like that. But LLMs are nowhere close to that, and tbh I don't even feel the way they learn approaches it. Word-order-guessing machines can logic their way to a regular-sounding sentence, but that's not anything approaching having a conversation with a person. Remembering what you said is just storing the information you type into it; it's not any kind of indication of existence. And yet so many people online are acting like my grandma did when she was convinced Siri was actually a lady living in her phone. I think we need to start calling large language models "LLMs" and stop giving the corps pushing them more of an in with the general public. It's marketing spin; stop falling for it.

#ai#llms#chatgpt#character ai#the fic ive seen written with it is also so sad and bland#even leaving the ethical qualms behind in the fact it's trained off uncompensated work stolen off the internet and then used to make#commercial work outside the fic sphere#it also does a bad job#please read more quality stuff so you can recognize this

112 notes

Text

examining a seemingly normal image only to slowly realize the clear signs of AI generated art.... i know what you are... you cannot hide your true nature from me... go back where you came from... out of my sight with haste, wretched and vile husk

#BEGONE!!! *wizard beam blast leaving a black smoking crater in the middle of the tumblr dashboard*#I think another downside to everyone doing everything on phone apps on shitty tiny screens nowadays is the inability to really see details#of an image and thus its easier to share BLATANTLY fake things like.. even 'good' ai art has pretty obvious tells at this point#but especially MOST of it is not even 'good' and will have details that are clearly off or lines that dont make sense/uneven (like the imag#of a house interior and in the corner there's a cabinet and it has handles as if it has doors that open but there#are no actual doors visible. or both handles are slightly different shapes. So much stuff that looks 'normal' at first glance#but then you can clearly tell it's just added details with no intention or thought behind it. a pattern that starts and then just abruptly#doesn't go anywhere. etc. etc. )#the same thing with how YEARS ago when I followed more fashion type blogs on tumblr and 'colored hair' was a cool ''''New Thing''' instead#of being the norm now basically. and people would share photos of like ombre hair designs and stuff that were CLEARLY photoshop like#you could LITERally see the coloring outside of the lines. blurs of color that extend past the hair line to the rest of the image#or etc. But people would just share them regardless and comment like 'omg i wish I could do this to my hair!' or 'hair goallzzzz!! i#wonder what salon they went to !!' which would make me want to scream and correct them everytime ( i did not lol)#hhhhhhggh... literally view the image on anything close to a full sized screen and You Will SEe#I don't know why it's such a pet peeve of mine. I think just as always I'm obsessed with the reality and truth of things. 
most of the thing#that annoy me most about people are situations in which people are misinterpreting/misunderstanding how something works or having a misconc#eption about something thats easily provable as false or etc. etc. Even if it's harmless for some random woman on facebook to believe that#this AI generated image of a cat shaped coffee machine is actually a real product she could buy somewhere ... I still urgently#wish I could be like 'IT IS ALL AN ILLUSION. YOU SEE???? ITS NOT REALL!!!!! AAAAA' hjhjnj#Like those AI shoes that went around for a while with 1000000s of comments like 'omg LOVE these where can i get them!?' and it's like YOU#CANT!!! YOU CANT GET THEM!!! THEY DONT EXIST!!! THE EYELETS DONT EVEN LINE UP THE SHOES DONT EVEN#MATCH THE PATTERNS ARE GIBBERISH!! HOW CAN YOU NOT SEE THEY ARE NOT REAL!??!!' *sobbing in the rain like in some drama movie*#Sorry I'm a pedantic hater who loves truth and accuracy of interpretation and collecting information lol#I think moreso the lacking of context? Like for example I find the enneagram interesting but I nearly ALWAYS preface any talking about it#with ''and I know this is not scientifically accurate it's just an interesting system humans invented to classify ourselves and our traits#and I find it sociologically fascinating the same way I find religion fascinating'. If someone presented personality typing information wit#out that sort of context or was purporting that enneagram types are like 100% solid scientific truth and people should be classified by the#unquestioningly in daily life or something then.. yeah fuck that. If these images had like disclaimers BIG in the image description somewh#re like 'this is not a real thing it's just an AI generated image I made up' then fine. I still largely disagree with the ethics behind AI#art but at least it's informed. It's the fact that people just post images w/o context or believe a falsehood about it.. then its aAAAAAA

14 notes

Text

Friendly reminder: If I catch you posting/reblogging AI art while following me, I'll throw you into the pits of hell.

I would rather have 1000 p0rnbots follow me than any of you leeches.

#every da yi see people defending this shit and it upsets me#ai art in its current form is not ethical nor legal#laws just lag behind when it comes to internet stuff#and even then#you see your artists having legitimate complains about it and all you do is rub their explotation into their face#disgusting#typos bcs im tired idc

22 notes

Text

i also really, really don't mind if people disagree with me, think ai art is more trouble than it's worth, or are worried about it interfering with their livelihood as artists. those are completely fair and understandable takes! i just wish the most prevalent refrain about it was less.... how it is. genuine criticism of ai based on how it's being utilized and implemented is something i enjoy engaging with just as much as seeing what interesting concepts people are able to pull off when also making use of it thoughtfully.

#ai art itself isn't very interesting to me because i'm way too finicky for it but i love when discussions about it get us talking about the#nature of art and art tools and the ethics behind new technology and how it can be used for the better

3 notes

Text

funny how most people can see the difference between a normal, legal copy and plagiarism, and between photomanipulating someone's pictures without their permission and making a collage, yet somehow it eludes them why a.i. is unethical...

#it's that easy#ai discussion#anti ai#the problem is claiming something that isn't yours as yours#and/or using someone's work without permission#the only way i can imagine ai used ethically would be crediting the artists whose works get used + listing the works that got remixed#and the ai 'artist' to be credited merely as a remixer-author of the concept behind the remix#BUT due to the very nature of the computer program it's impossible to trace down every work used which makes the entire thing unusable#it's just shameless stealing

2 notes

Text

sigh

the "ai art to paint by numbers" post to Make Us Think is lowkey annoying because i don't consider paint by numbers to be art either lol. like if anything i would even say ai art is More in-line with my definition of art than paint by numbers ???

art has so many components to it but the main thing for me is a sense of meaning. what did the person want to say and how did their control of the medium bring it into existence.

a paint by numbers says nothing. you're practicing brush stroke techniques but following someone else's outline and composition and colors. it's a fun exercise using art tools but it's not Art. you didn't put any of your own meaning into it.

ai art Can have artistic intent and an understanding of artistic principles. i still don't like it. i hate the stolen art part of it. but in SOME cases it would fit my definition of art.

ai gen can be a vehicle for art but not everything that comes out of it is art. pencils and paper can be a vehicle for art but not everything you do with a pencil is art.

i guess the definition of art rly is the problem honestly, since some people on one side probably think of it solely as a visual medium, so of course your Pretty Picture With Weird Background Merging is art to you. but the other side is debating it in terms of what makes something "art" in a meaningful way that also encompasses music etc. and some people probably think art doesn't Have to have meaning because Anything can be art. and i mean, i gravitate toward that too. there IS something to be said about how the viewer of that art can take meaning from it even if no meaning was put into it. but like, i could derive meaning from a penny on the sidewalk if i really wanted to yk.

but anyway paint by numbers is not going to elicit a new layer to ai gen discourse to me sorry. the piece you're mimicking in paint by numbers is art, but your finished product isn't. your finished product isn't transformative in the way i personally define art to be, unless you put your own spin on it. same shit applies to ai gen, or at least it could apply more cleanly if not for all the stolen fucking art it's built on.

also why is he pouring so much grease in the sink.

#txt#'is it art because it elicits a reaction from the viewer' it does not elicit anything but another layer of tiredness for me#maybe if it weren't paint by numbers it would hold more of a thought puzzle for me#like if you paint your spin on an AI gen piece#but im guessing the AI bro behind that post didn't have any investment in like.#doing the actual work it takes to become an artist in a way that contributes to the discussion#so the world may never know#to be clear im not even against ethical AI art#i think 9/10 times ai generated stuff is soulless as hell#and it would be even if it were built from an ethical library#but you can inject actual art principles and meaning into it if you have enough determination to#and there are lots of derivative techniques in the pre-existing art world too like photobashing and tracing#and there is a lot to talk about in terms of where ai could fit into it all if it weren't fucking up the lives of professional artists#like i do actually want to Be Made To Think in terms of the discussion#but rn with the whole STOLEN ART thing and lack of regulations i don't really care#and a paint by numbers Thought Experiment is too fake deep to make me care

1 note

Note

How exactly do you advance AI ethically? Considering how much of the data sets that these tools use was sourced, wouldnt you have to start from scratch?

a: i don't agree with the assertion that "using someone else's images to train an ai" is inherently unethical - ai art is demonstrably "less copy-paste-y" for lack of a better word than collage, and nobody would argue that collage is illegal or ethically shady. i mean some people might but i don't think they're correct.

b: several people have done this already - see mitsua diffusion, et al.

c: this whole argument is a red herring. it is not relevant long-term: adobe firefly is already built exclusively off images they have legal rights to. the dataset question is irrelevant to ethical ai use, because companies already have huge vaults full of media they can train on and do so effectively.

you can cheer all you want that the artist-job-eating-machine made by adobe or disney is ethically sourced, thank god! but it'll still eat everyone's jobs. that's what you need to be caring about.

the solution here obviously is unionization, fighting for increased labor rights for people who stand to be affected by ai (as the writer's guild demonstrated! they did it exactly right!), and fighting for UBI so that we can eventually decouple the act of creation from the act of survival at a fundamental level (so i can stop getting these sorts of dms).

if you're interested in actually advancing ai as a field and not devil's-advocating me, you can also participate in the FOSS (free-and-open-source) ecosystem so that adobe and disney and openai can't develop a monopoly on black-box proprietary technology, and we can have a future where anyone can create any images they want, on their computer, for free, anywhere, instead of behind a paywall they can't control.

fun fact related to that last bit: remember when getty images sued stability ai (the makers of stable diffusion) and everybody cheered? yeah anyway they're releasing their own ai generator now. crazy how literally no large company has your interests in mind.

cheers

2K notes

Text

forever tired of our voices being turned into commodity.

forever tired of thorough mediocrity in the AAC business. how that is rewarded. How it fails us as users. how not robust and only robust by small small amount communication systems always chosen by speech therapists and funded by insurance.

forever tired of profit over people.

forever tired of how companies collect data on every word we’ve ever said and sell to people.

forever tired of paying to communicate. of how uninsured disabled people just don’t get a voice many of the time. or have to rely on how AAC is brought into classrooms — which usually is managed to do in every possible wrong way.

forever tired of the branding and rebranding of how we communicate. Of this being amazing revelation over and over that nonspeakers are “in there” and should be able to say things. of how every single time this revelation comes with pre condition of leaving the rest behind, who can’t spell or type their way out of the cage of ableist oppression. or are not given chance & resources to. Of the branding being seen as revolution so many times and of these companies & practitioners making money off this “revolution.” of immersion weeks and CRP trainings that are thousands of dollars and wildly overpriced letterboards, and of that one nightmare Facebook group g-d damm it. How this all is put in language of communication freedom. 26 letters is infinite possibilities they say - but only for the richest of families and disabled people. The rest of us will have to live with fewer possibilities.

forever tired of engineer dads of AAC users who think they can revolutionize whole field of AAC with new terrible designed apps that you can’t say anything with them. of minimally useful AI features that invade every AAC app to cash in on the new moment and not as tool that if used ethically could actually help us, but as way of fixing our grammar our language our cultural syntax we built up to sound “proper” to sound normal. for a machine, a large language model to model a small language for us, turn our inhuman voices human enough.

forever tired of how that brand and marketing is never for us, never for the people who actually use it to communicate. it is always for everyone around us, our parents and teachers paras and SLPs and BCBAs and practitioners and doctors and everyone except the person who ends up stuck stuck with a bad organized bad implemented bad taught profit motivated way to talk. of it being called behavior problems low ability incompetence noncompliance when we don’t use these systems.

you all need to do better. We need to democratize our communication, put it in our own hands. (My friend & communication partner who was in Occupy Wall Street suggested phrase “Occupy AAC” and think that is perfect.) And not talking about badly made non-robust open source apps either. Yes a robust system needs money and resources to make it well. One person or community alone cannot turn a robotic voice into a human one. But our human voice should not be in hands of companies at all.

(this is about the Tobii Dynavox subscription thing. But also exploitive and capitalism practices and just lazy practices in AAC world overall. Both in high tech “mainstream” AAC and methods that are like ones I use in sense that are both super stigmatized and also super branded and marketed, Like RPM and S2C and spellers method.)

#I am not a product#you do not have to make a “spellers IPA beer ‘ about it I promise#communication liberation does not have a logo#AAC#capitalism#disability#nonspeaking#dd stuff#ouija talks#ouija rants

281 notes

Text

Been thinking recently about the goings-on with Duolingo & AI, and I do want to throw my two cents in, actually.

There are ways in which computers can help us with languages, certainly. They absolutely should not be the be-all and end-all, and particularly for any sort of professional work I am wholly in favour of actually employing qualified translators & interpreters, because there's a lot of important nuances to language and translation (e.g. context, ambiguity, implied meaning, authorial intent, target audience, etc.) that a computer generally does not handle well. But translation software has made casual communication across language barriers accessible to the average person, and that's something that is incredibly valuable to have, I think.

Duolingo, however, is not translation software. Duolingo's purpose is to teach languages. And I do not think you can be effectively taught a language by something that does not understand it itself; or rather, that does not go about comprehending and producing language in the way that a person would.

Whilst a language model might be able to use probability & statistics to put together an output that is grammatically correct and contextually appropriate, it lacks an understanding of why, beyond "statistically speaking, this element is likely to come next". There is no communicative intent behind the output it produces; its only goal is mimicking the input it has been trained on. And whilst that can produce some very natural-seeming output, it does not capture the reality of language use in the real world.
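To make the "statistically speaking, this element is likely to come next" idea concrete, here is a deliberately tiny sketch: a bigram model that predicts the next word purely from counts of which word followed which in a made-up training text. This is an illustrative assumption, not how Duolingo's or any production system works; modern LLMs are neural networks over sub-word tokens with far more context, but their output is likewise a probability distribution over what comes next.

```python
# Toy illustration of "statistically speaking, this element is likely to
# come next": a bigram model that only counts which word followed which.
# Real LLMs are neural networks over sub-word tokens, far larger than this.
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for each word, how often every other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probs(follows: dict, word: str) -> dict:
    """Turn raw follow-counts into probabilities for the next word."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


corpus = "i like tea . i like coffee . i drink tea . you like tea ."
model = train_bigrams(corpus)
probs = next_word_probs(model, "like")
print(probs)
print(max(probs, key=probs.get))
```

The model "knows" that "tea" most often follows "like" in its tiny corpus, but nothing in it represents what tea is or why a speaker would say so.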

Because language is not just a set of probabilities - there is an infinite array of other factors at play. And we do not set out only to mimic what we have seen or heard; we intend to communicate with the wider world, using the tools we have available, and that might require deviating from the realm of the expected.

Often, the most probable output is not actually what you're likely to encounter in practice. Ungrammatical or contextually inappropriate utterances can be used for dramatic or humorous effect, for example; or nonstandard linguistic styles may be used to indicate one's relationship to the community those styles are associated with. Social and cultural context might be needed to understand a reference, or a linguistic feature might seem extraneous or confusing when removed from its original environment.

To put it briefly, even without knowing exactly how the human brain processes and produces language (which we certainly don't), it's readily apparent that boiling it down to a statistical model is entirely misrepresentative of the reality of language.

And thus a statistical model is unlikely to be able to comprehend and assist with many of the difficulties of learning a language.

A statistical model might identify that a learner misuses some vocabulary more often than others; what it may not notice is that the vocabulary in question are similar in form, or in their meaning in translation. It might register that you consistently struggle with a particular grammar form; but not identify that the root cause of the struggle is that a comparable grammatical structure in your native language is either radically different or nonexistent. It might note that you have trouble recalling a common saying, but not that you lack the cultural background needed to understand why it has that meaning. And so it can identify points of weakness; but it is incapable of addressing them effectively, because it does not understand how people think.

This is all without considering the consequences of only having a singular source of very formal, very rigid input to learn from, unable to account for linguistic variation due to social factors. Without considering the errors still apparent in the output of most language models, and the biases they are prone to reproducing. Without considering the source of their data, and the ethical considerations regarding where and how such a substantial sample was collected.

I understand that Duolingo wants to introduce more interactivity and adaptability to their courses (and, I suspect, to improve their bottom line). But I genuinely think that going about it in this way is more likely to hinder than to help, and wrongfully prioritises the convenience of AI over the quality and expertise that their existing translators and course designers bring.

#alright getting off my soapbox now#apologies if this is not particularly coherent - i was very much working through my thoughts as I was writing#but yeah tl;dr i am not huge on LLMs especially for language education and am deeply disappointed by the way things are trending#anyways if anyone knows any learning tools that are similarly structured i'd appreciate the recommendation#being able to easily do a little bit of study on a regular basis helped me a lot and i've yet to find anything similar#duolingo#language#language learning

463 notes

Text

Lena could feel the weight in her hand. A little extra swing in her fist as she walked, sending a jolt up her arm as she jogged up the steps to Kara’s apartment. She’d decided to walk today, to clear her head a little as she went to see her best friend. She had a lot on her mind lately: usual Luthor stuff like defusing random death traps that Lex left behind, fending off attempts to dethrone her as CEO and challenge her status as her brother’s heir, and cures for intractable diseases and solutions for the energy crisis and thorny ethical issues around the advanced projects department’s latest AI experiments… and Kara.

Kara was on her mind. She had a way of sneaking into Lena’s mind at the most inopportune moments, like a board meeting, or a symposium, or her TED talk. It was really a TEDx talk; the organization wasn’t *quite* ready to invite Lena to the real deal, no matter how many photo ops she did with Supergirl or cancer research facilities she paid for. That didn’t stop Kara from following her around saying “thanks for listening to my Ted talk” for three weeks after the fact.

She had been thinking about Kara so much that it had finally been noticed. Sam had flown in from Metropolis earlier that week for a catch-up lunch, and as usual, after business was handled, they shared a bottle of wine and things grew informal.

“Lena,” Sam said. “I’ve been talking for five minutes and you’ve been holding that glass of rosé and staring at it for the entire time. What’s going on?”

Lena almost dropped the glass when she heard her name. “Oh, right. Yes. Wine.”

She took a sip, hoping Sam would drop the question, but Sam persisted.

“I know that look. You were miles away. What is it? Did the cure for cancer pop into your head?”

“No,” Lena said. “It’s nothing, I was just lost in thought.”

“Mmm,” said Sam. “I’m sure.”

“What?”

Sam smiled enigmatically and finished her wine. “I’d better get going. I’m taking a red-eye back to Metropolis.”

“Sam, you’re flying on a Lexcorp charter. It doesn’t work that way.”

Sam snorted and left Lena sitting there, wondering what that was about. Of course she’d been daydreaming about Kara, about her hands specifically- she’d nodded off last weekend and woke to see Kara at her ease, brow furrowed and hands moving wildly as she painted something. Lena had remained still and watched, fascinated by Kara’s hands, the skill and dexterity she showed.

It was that day that Kara had passed her the key she now carried in her hand. A key to Kara’s apartment. Unfettered access. Lena didn’t have to knock (she would anyway) and could stop by when Kara wasn’t even there. She hadn’t said anything but she’d been holding back tears the entire ride home; Lena had no problems with *access*, but trust was another matter. That was what the key was. It was a talisman of trust, Kara’s confidence in her given form.

Lena did knock before she turned the key and swung the door open. She was expected, but part of her worried that Kara wouldn’t be alone. It seemed odd to Lena that Kara hadn’t started dating again- her best friend had taken the whole Mon-El thing very poorly, and it was bizarre to begin with, so Lena understood why she’d stay single for a while, but it had been years.

Years of kindling a soft, secret hope, a desire so fragile and so brittle that Lena rarely dared think of it, afraid that the tiniest brush of longing would crumble it and with it break something inside her permanently.

The apartment smelled like cookies. Burnt cookies. Kara was in the kitchen, brow furrowed, bent in concentration over a cookbook, eyes darting to a mixing bowl. Foul-smelling failed attempts at cookies practically filled the garbage can.

“Hey,” Kara said, cheerfully. She gave Lena a soft, gentle smile that seemed only for her, and brushed a loose gold curl from her eyes. “You’re early.”

“I wanted more Kara time,” said Lena. “I was hoping to get a few minutes alone with you before the others show up. Just us.”

Kara looked at her curiously, then turned to her project.

“I can’t get this right. I cream the sugar like it says, but they keep coming out wrong.”

Lena moved closer, stopping her hand from seeking the small of Kara’s back. When she saw the carton of cream on the counter, she busted out laughing so hard she snorted.

“What?” said Kara.

“Darling, you don’t put actual cream in it. Here, let me help you.”

For the next half hour, Lena and Kara made cookie dough, laboriously, by hand. Every step brought them closer together, literally. By the time they were scooping out evenly sized blobs of it together, they were hip to hip, both floured and sugared, hands greasy with butter.

“I’ll pop them in the oven,” said Kara. “You go clean up and relax.”

“Alright,” Lena said.

She ended up on the couch. Game night would begin hours later, and Lena turned on a nature documentary. (She had her own distinct username on Kara’s Netflix.)

Lena must have dozed off, because the alarm on the oven, along with a warm, pleasant, homey smell, woke her up. She padded in her stocking feet into the kitchen to see how the cookies had come out.

Kara had already taken them out and was holding the tray, hot from the oven. Something was off. It nagged at Lena’s mind.

Then it hit her. Kara seemed to realize at the same time.

She wasn’t wearing oven mitts. No pot holder. Not even a dish towel. Kara was holding the hot tray, fresh from the oven, in her bare hands.

Lena yelped. “Kara! You’ll burn yourself!”

Kara started to move. A cry rose on her lips, then died. She stared at Lena with such softness, her eyes full of hesitation, but more than that, a kind of longing that echoed Lena’s own soul.

“I’m tired of lying to you,” Kara said, still holding the tray. “It doesn’t hurt. I can barely feel it.”

They stood for a frozen moment that lasted an eternity, the truth just on the wrong side of revealing itself. Lena already knew, but she didn’t want to acknowledge it. Say it.

“You’re Supergirl,” Lena whispered, soft and breathy.

Kara nodded, starting to choke up. She put the tray down almost violently and stepped back.

“I’ll understand if you need time, if you’re angry, if you don’t want to continue our friendship-”

She didn’t finish her ramble. Lena crossed the space between them in three quick steps, firmly took Kara’s face between her palms, and kissed her.

Pure terror gripped her. What if she was wrong? What if this was a mistake? Why wasn’t Kara moving, responding, reacting?

That question was answered when hands that could crush diamonds moved over her body with surpassing tenderness, turning the awkward kiss into something more, Kara guiding Lena as their bodies molded together and kissing her back with hopeful desperation, drawing it out as if she was afraid to let it end for fear it might never be repeated.

It was, intimately and immediately. Lena was shocked but pleased when Kara let Lena push her back against the counter, bending her back lightly, almost climbing her. Kara almost shocked Lena when her hand slid up her side and found her breast even as Lena grabbed a double handful of steely buns and squeezed.

Then someone coughed and they jerked apart.

Alex stood by the door, arms folded.

“I’m going to go ahead and text the others so they know game night is cancelled,” she said, smirking. “Next time, hang a sock on the doorknob or something.”

“This is my house,” said Kara.

Alex rolled her eyes. “I’m leaving now.”

As the door slammed shut and Alex could plainly be heard blurting, “Jesus Christ,” Lena turned back to Kara.

“Should we talk?” she said, her voice small. “What is this? What are we doing?”

Kara swallowed, hard. “What do you want it to be, Lena?”

Lena couldn’t answer. She just stared.

“I know what I want it to be,” said Kara. “I want us to be an us. I’m so tired of wanting you so bad it hurts, but being scared to touch you a certain way or look too long or too openly or be afraid I’ll say the wrong thing. I’m tired of hiding so much from you.”

Lena licked her lips.

“The truth is, I’ve wanted you for years.”

Kara’s gorgeous eyes lit up with unbridled delight, and with shocking quickness, Kara had Lena in a bridal carry. Lena instinctively curled up in her arms, practically wrapping herself around Kara’s body.

“What do you want to do now?” said Kara. “I don’t know how to do this part, Lena.”

Lena smiled. “I think what you do now is carry me back in the bedroom and cream your sugar.”

“You want to make more cookies? Why… oh.”

“Oh indeed,” said Lena.

Lena didn’t make a habit of it, but this one time, she let Kara talk her into cookies for breakfast.

#supercorp#supergirl fanfiction#supergirl#supercorp fanfic#lena luthor#kara danvers#kara x lena#karlena#supergirl fanfic#ficlet

436 notes


Text

There is no obvious path between today’s machine learning models — which mimic human creativity by predicting the next word, sound, or pixel — and an AI that can form a hostile intent or circumvent our every effort to contain it.

Regardless, it is fair to ask why Dr. Frankenstein is holding the pitchfork. Why is it that the people building, deploying, and profiting from AI are the ones leading the call to focus public attention on its existential risk? Well, I can see at least two possible reasons.

The first is that it requires far less sacrifice on their part to call attention to a hypothetical threat than to address the more immediate harms and costs that AI is already imposing on society. Today’s AI is plagued by error and replete with bias. It makes up facts and reproduces discriminatory heuristics. It empowers both government and consumer surveillance. AI is displacing labor and exacerbating income and wealth inequality. It poses an enormous and escalating threat to the environment, consuming a vast and growing amount of energy and fueling a race to extract materials from a beleaguered Earth.

These societal costs aren’t easily absorbed. Mitigating them requires a significant commitment of personnel and other resources, which doesn’t make shareholders happy — and which is why the market recently rewarded tech companies for laying off many members of their privacy, security, or ethics teams.

How much easier would life be for AI companies if the public instead fixated on speculative theories about far-off threats that may or may not actually bear out? What would action to “mitigate the risk of extinction” even look like? I submit that it would consist of vague whitepapers, a series of workshops led by speculative philosophers, and donations to computer science labs that are willing to speak the language of longtermism. This would be a pittance compared with the effort required to reverse what AI is already doing to displace labor, exacerbate inequality, and accelerate environmental degradation.

A second reason the AI community might be motivated to cast the technology as posing an existential risk could be, ironically, to reinforce the idea that AI has enormous potential. Convincing the public that AI is so powerful that it could end human existence would be a pretty effective way for AI scientists to make the case that what they are working on is important. Doomsaying is great marketing. The long-term fear may be that AI will threaten humanity, but the near-term fear, for anyone who doesn’t incorporate AI into their business, agency, or classroom, is that they will be left behind. The same goes for national policy: If AI poses existential risks, U.S. policymakers might say, we better not let China beat us to it for lack of investment or overregulation. (It is telling that Sam Altman — the CEO of OpenAI and a signatory of the Center for AI Safety statement — warned the E.U. that his company will pull out of Europe if regulations become too burdensome.)

1K notes


Text

The Fires of Raven, or Why I Side-eye anyone who claims it's the "good ending".

I keep on seeing takes about how the Fires of Raven ending is the Actual Good Ending, and not gonna lie, it makes me kind of sick. Partially because: "Wow, do you literally just believe the first thing people tell you? No wonder propaganda works as well as it does."

The other is that as far as I'm concerned, Coral is a People. Not human by any means but personhood is hardly the kind of thing that's exclusive to humanity. If it can communicate, if it has a culture, if it has agency and emotion, then it is a people. It's basically the concept of a nonhuman person. Coral is depicted as having clear sentience. And at that point I think it's essentially just a people.

It's why I think the idea of AI becoming self-aware is one that we're not prepared for, not because of paperclip factories or Skynet (which is an inane fear as far as I'm concerned) but because we will have made a person, and we're not ready for that ethical question. Not by a long shot.

The initial Fires of Ibis weren't a spontaneous Coral event. They were a deliberate act; that's why Walter brings up the story in Chapter 4, the story of the man who burned it all, who, as we come to learn from the logs, was Professor Nagai. Coral Collapse is a consequence with a scary name, but it's one that is never defined; we don't know what Coral Collapse actually entails.

This isn't a mistake on Fromsoft's part; it's a deliberate choice. That ambiguity is part of the point. The lack of a known quantity to Coral Collapse is a big driver of the fear behind it. It's a fear great enough to cause Nagai to burn the stars, in favor of the world that is.

And yet the world that is was also the one that lobotomized C4-621.

The world that is lets a company like Arquebus wage war against civilians and send them to re-education camps. If you know anything about real-world re-education camps, you know that they are an abomination, and Rubicon's are no exception.

This is a world that saw Rubicon, a planet of endless possibility and natural beauty, and built towering, continent-sized oil rigs to suck it dry.

Coral was allowed to live for eons before humanity fucking colonized it. Coral was allowed to grow, to exist, to evolve into its current form until humanity began to shove it into a container.

And Coral was allowed to live before one of the colonizers decided a people was too dangerous to let live based on a chance.

This sounds like an excuse that I have seen too many times.

#armored core 6#armored core#armored core 6 spoilers#fires of raven#ending spoilers#colonialism#space colonialism#or spolonialism#if you will

588 notes


Text

pinterest has been ruined by ai generated images. not in a moral panic way, like there's plenty of ethically dubious things going on with pinterest, however a bitch enjoys collecting jpegs in my little albums sometimes. but i hate when on first glance i am awed by some architectural feat or tridimensional art piece or interior design (what i fall for way more often than like, digital art or fake paintings, which i have more experience identifying) and it turns out it's ai generated. like what bothers me is that with art there is arguably an innate appreciation for the mind behind the object and in that way you are establishing communication with the person who made it, right, like transmitter and receiver. and yeah you could argue that you are communing with the engineers who created the technology or the user who envisioned a prompt but it's not the same... i think i could appreciate it through that lens if it was disclosed front and center, yeah? like that's why we care about the medium whether it's language or the body or oils on canvas or whathaveyou. it's the deception that makes me feel hollow. like talking to a customer service robot. like confusing the pre-recorded message for the real thing and beginning a conversation with the answering machine before it hits you with the "leave a number..!" simulacra and simulation and all that. welcome to the desert of the real i guess

193 notes


Text

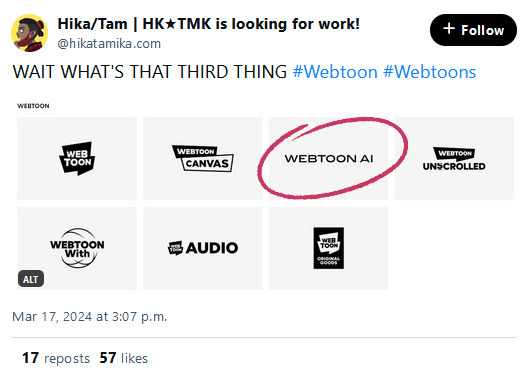

I'm sorry, but this should come as a shock to absolutely no one.

Just a little bit of 'insider info' (and by 'insider' I mean I was a part of the beta testing crew a few years ago): Webtoons has been messing with AI tools for years. You can literally playtest that very same AI tool that I beta-tested here:

Mind you, this is just an AI Painter, similar to the Clip Studio Colorize tool, but it goes to show where WT's priorities are headed. I should mention, btw, that this tool is incredibly useless for anyone not creating a Korean-style webtoon, like you can deadass tell it was trained exclusively on the imports because it can't handle any skin tone outside of white (trying to use darker colors just translates as "shadows" to the program, meaning it'll just cast some fugly ass shadows over a white-toned character no matter how hard you try) and you just know the AI wouldn't know what to do with itself if you gave it an art style that didn't exactly match with the provided samples lmao

And let's be real, can we really expect the company that regularly exploits, underpays, and overworks its creators to give a damn about the ethical concerns of AI? They're gonna take the path of least resistance to make the most money possible.

So the fact that we're now seeing AI comics popping up on Webtoons left and right - and now, an actual "Webtoon AI" branding label - should come as zero shock to anyone. Webtoons is about quantity over quality and so AI is the natural progression of that.

So yeah, if you were looking for any sign to check out other platforms outside of Webtoons, this is it. Here are some of my own recommendations:

ComicFury - Independently run, zero ads, zero subscription costs (though I def recommend supporting them on Patreon if you're able), full control over site appearance, optional hosting for only the cost of the domain name, and best of all, strictly anti-AI. Not allowed, not even with proper labelling or disclosure. Full offense to the tech bro hacks, eat shit.

GlobalComix - Very polished hosting site that offers loads of monetization tools for creators without any exclusive contracts or subscriptions needed. They do offer a subscription program, but that's purely for reading the comics on the site that are exclusively behind paywalls. Not strictly anti-AI but does require in their ToS that AI comics be properly labelled and disclosed, whether they're made partially or fully with AI, to ensure transparency for readers who want to avoid AI comics.

Neocities - If you want to create your own site the good ol'-fashioned way (i.e. HTML / CSS), this is the place. Independently run, offers a subscription plan for people who want more storage and bandwidth but it only costs $5/month so it's very inexpensive, and even without that subscription cost you won't have to deal with ads or corporate management bullshit.

Be safe out there pals, don't be afraid to set out into the unknown if it means protecting your work and keeping your control as a creator. Know your rights, know your power.

368 notes
