#twitter scraper api


🚀 Top 4 Twitter (X) Scraping APIs in 2025

Looking for the best way to scrape Twitter (X) data in 2025? This article compares 4 top APIs based on speed, scalability, and pricing. A must-read for developers and data analysts!

#web scraping#scrapingdog#web scraping api#twitter scraper#twitter scraper api#x scraper#x scraper api


How to Use a Twitter Scraper Tool Easily

Why Twitter Scraping Changed My Social Media Game

Let me share a quick story. Last year, I was managing social media for a small tech startup, and we were struggling to create content that resonated with our audience. I was spending 4–5 hours daily just browsing Twitter, taking screenshots, and manually tracking competitor posts. It was exhausting and inefficient.

That’s when I discovered Twitter scraping tools, and honestly, it was a game-changer. Within weeks, I was able to analyze thousands of tweets, identify trending topics in our niche, and create data-driven content strategies that increased our engagement by 300%.

What Exactly is a Twitter Scraper Tool?

Simply put, a Twitter scraping tool is software that automatically extracts data from Twitter (now X) without you having to manually browse and copy information. Think of it as your personal digital assistant that works 24/7, collecting tweets, user information, hashtags, and engagement metrics while you focus on more strategic tasks.

These tools can help you:

Monitor brand mentions and sentiment

Track competitor activities

Identify trending topics and hashtags

Analyze audience behavior patterns

Generate leads and find potential customers

Finding the Best Twitter Scraper Online: My Personal Experience

After testing dozens of different platforms over the years, I’ve learned that the best Twitter scraper online isn’t necessarily the most expensive one. Here’s what I look for when evaluating scraping tools:

Key Features That Actually Matter

1. User-Friendly Interface: The first time I used a complex scraping tool, I felt like I needed a computer science degree just to set up a basic search. Now, I only recommend tools that my grandmother could use (and she’s not exactly tech-savvy!).

2. Real-Time Data Collection: In the fast-paced world of Twitter, yesterday’s data might as well be from the Stone Age. The best tools provide real-time scraping capabilities.

3. Export Options: Being able to export data in various formats (CSV, Excel, JSON) is crucial for analysis and reporting. I can’t count how many times I’ve needed to quickly create a presentation for stakeholders.
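Most tools export for you. If you post-process scraped tweets yourself, though, Python’s standard library can write both CSV and JSON. The sketch below is minimal and assumes each tweet is already a dict with hypothetical `id`, `user`, `text`, and `likes` keys; real tools use their own field names.

```python
import csv
import io
import json

def tweets_to_csv(tweets, fieldnames):
    """Serialize a list of tweet dicts to CSV text (header row included)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(tweets)
    return buffer.getvalue()

def tweets_to_json(tweets):
    """Serialize a list of tweet dicts to pretty-printed JSON text."""
    return json.dumps(tweets, indent=2, ensure_ascii=False)

# Hypothetical sample records; a real scraper returns its own schema.
sample = [
    {"id": "1", "user": "alice", "text": "Hello, X!", "likes": 12},
    {"id": "2", "user": "bob", "text": "Scraping 101", "likes": 3},
]

csv_text = tweets_to_csv(sample, ["id", "user", "text", "likes"])
json_text = tweets_to_json(sample)
print(csv_text)
```

To write real files, pass `open("tweets.csv", "w", newline="")` to `csv.DictWriter` in place of the `StringIO` buffer. For Excel output, pandas’ `DataFrame.to_excel` is one option if pandas and openpyxl are installed.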

4. Rate Limit Compliance: This is huge. Tools that respect Twitter’s API rate limits help keep your account from getting suspended. Trust me, I learned this the hard way.
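In practice, rate-limit compliance means pacing your requests. The following is a minimal sketch of a client-side sliding-window limiter. The budget of `max_calls` per `period` seconds is a placeholder assumption, because real limits depend on your X API plan and endpoint. The clock and sleep functions are injectable, so the demo runs instantly without actually waiting.

```python
import time
from collections import deque

class RateLimiter:
    """Allow at most `max_calls` calls in any rolling `period` seconds."""

    def __init__(self, max_calls, period, clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock
        self.sleep = sleep
        self.calls = deque()  # timestamps of calls still inside the window

    def _expire(self, now):
        # Drop timestamps that have left the rolling window.
        while self.calls and now - self.calls[0] >= self.period:
            self.calls.popleft()

    def wait(self):
        """Block until another call is allowed, then record it."""
        now = self.clock()
        self._expire(now)
        if len(self.calls) >= self.max_calls:
            # Sleep until the oldest recorded call falls out of the window.
            self.sleep(self.period - (now - self.calls[0]))
            now = self.clock()
            self._expire(now)
        self.calls.append(now)

class FakeClock:
    """Deterministic clock for demos: sleeping just advances time."""

    def __init__(self):
        self.now = 0.0

    def time(self):
        return self.now

    def sleep(self, seconds):
        self.now += seconds

clock = FakeClock()
limiter = RateLimiter(max_calls=2, period=60.0, clock=clock.time, sleep=clock.sleep)
for _ in range(3):
    limiter.wait()  # call your scraping request right after this
print(clock.now)  # the third call had to wait for the window to reset
```

In real use, construct `RateLimiter` without the fake clock, so it relies on `time.monotonic` and `time.sleep`.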

Step-by-Step Guide: Using an X Tweet Scraper Tool

Based on my experience, here’s the easiest way to get started with any X tweet scraper tool:

Step 1: Define Your Scraping Goals

Before diving into any tool, ask yourself:

What specific data do I need?

How will I use this information?

What’s my budget and time commitment?

I always start by writing down exactly what I want to achieve. For example, “I want to find 100 tweets about sustainable fashion from the past week to understand current trends.”

Step 2: Choose Your Scraping Parameters

Most tweet scraper online tools allow you to filter by:

Keywords and hashtags

Date ranges

User accounts

Geographic location

Language

Engagement levels (likes, retweets, replies)
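Under the hood, each of these filters is a test applied to every collected tweet. This sketch runs over tweets you have already collected and assumes a hypothetical dict schema (`text`, `user`, `lang`, `created_at`, `likes`). Most tools expose these options as search settings rather than code. Geographic filtering is left out because location data is often missing from tweets.

```python
from datetime import datetime, timezone

def matches(tweet, keywords=None, users=None, lang=None,
            since=None, until=None, min_likes=0):
    """Return True if a tweet passes every filter that was supplied."""
    text = tweet["text"].lower()
    if keywords and not any(k.lower() in text for k in keywords):
        return False
    if users and tweet["user"] not in users:
        return False
    if lang and tweet["lang"] != lang:
        return False
    if since and tweet["created_at"] < since:
        return False
    if until and tweet["created_at"] >= until:
        return False
    return tweet["likes"] >= min_likes

# Hypothetical records, echoing the "sustainable fashion" goal from Step 1.
tweets = [
    {"text": "Sustainable fashion is trending", "user": "eco_brand", "lang": "en",
     "created_at": datetime(2025, 3, 2, tzinfo=timezone.utc), "likes": 150},
    {"text": "Moda sostenible", "user": "eco_es", "lang": "es",
     "created_at": datetime(2025, 3, 3, tzinfo=timezone.utc), "likes": 40},
    {"text": "#SustainableFashion tips", "user": "blogger", "lang": "en",
     "created_at": datetime(2025, 2, 1, tzinfo=timezone.utc), "likes": 500},
]

week_start = datetime(2025, 3, 1, tzinfo=timezone.utc)
selected = [t for t in tweets
            if matches(t, keywords=["sustainable fashion", "#sustainablefashion"],
                       lang="en", since=week_start, min_likes=100)]
print([t["user"] for t in selected])
```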

Step 3: Set Up Your First Scraping Project

Here’s my tried-and-true process:

Start Small: Begin with a narrow search (maybe 50–100 tweets) to test the tool

Test Different Keywords: Use variations of your target terms

Check Data Quality: Always review the first batch of results manually

Scale Gradually: Once you’re confident, increase your scraping volume
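Part of the "Check Data Quality" step can be automated before you scale up. The sketch below flags records with missing fields and drops duplicate IDs. The required field names are assumptions, and a script like this supplements a manual review of the first batch rather than replacing it.

```python
REQUIRED_FIELDS = ("id", "user", "text")  # hypothetical schema

def quality_report(records, required=REQUIRED_FIELDS):
    """Return (clean_records, summary) after dropping incomplete and duplicate rows."""
    seen_ids = set()
    duplicates = incomplete = 0
    clean = []
    for rec in records:
        if any(not rec.get(field) for field in required):
            incomplete += 1  # a required field is missing or empty
            continue
        if rec["id"] in seen_ids:
            duplicates += 1  # same tweet collected twice
            continue
        seen_ids.add(rec["id"])
        clean.append(rec)
    summary = {"total": len(records), "duplicates": duplicates,
               "incomplete": incomplete, "kept": len(clean)}
    return clean, summary

batch = [
    {"id": "1", "user": "a", "text": "hi"},
    {"id": "1", "user": "a", "text": "hi"},
    {"id": "2", "user": "b", "text": ""},
    {"id": "3", "user": "c", "text": "ok"},
]
clean, summary = quality_report(batch)
print(summary)
```

A high share of duplicates or incomplete rows in the first small batch is a sign to adjust your keywords or tool before scaling up.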

My Final Thoughts

Using a Twitter scraper tool effectively isn’t just about having the right software; it’s about understanding your goals, respecting platform rules, and continuously refining your approach. The tools I use today are vastly different from what I started with, and that’s okay. The key is to keep learning and adapting.

Whether you’re a small business owner trying to understand your audience, a researcher analyzing social trends, or a marketer looking to stay ahead of the competition, the right scraping approach can provide invaluable insights.


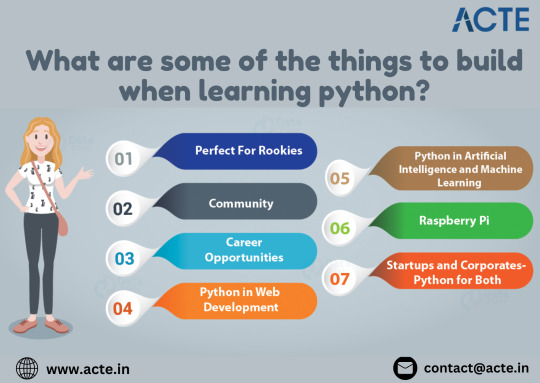

Unlock SEO & Automation with Python

In today’s fast-paced digital world, marketers are under constant pressure to deliver faster results, better insights, and smarter strategies. With automation becoming a cornerstone of digital marketing, Python has emerged as one of the most powerful tools for marketers who want to stay ahead of the curve.

Whether you’re tracking SEO performance, automating repetitive tasks, or analyzing large datasets, Python offers unmatched flexibility and speed. If you're still relying solely on traditional marketing platforms, it's time to step up — because Python isn't just for developers anymore.

Why Python Is a Game-Changer for Digital Marketers

Python’s growing popularity stems from its simplicity and versatility. It's easy to learn, open-source, and backed by countless libraries that cater directly to marketing needs. From scraping websites for keyword data to automating Google Analytics reports, Python allows marketers to save time and make data-driven decisions faster than ever.

One key benefit is how Python handles SEO tasks. Imagine being able to monitor thousands of keywords, track competitors, and audit websites in minutes — all without manually clicking through endless tools. Libraries like BeautifulSoup, Scrapy, and Pandas allow marketers to extract, clean, and analyze SEO data at scale. This makes it easier to identify opportunities, fix issues, and outrank competitors efficiently.
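A basic on-page audit shows the idea. To keep the sketch dependency-free, it uses Python’s built-in `html.parser` in place of BeautifulSoup. The checks are assumptions chosen for illustration: a present `<title>` under a 60-character rule of thumb, a meta description, and exactly one `<h1>`. The same audit can be written with BeautifulSoup’s `find`/`find_all`.

```python
from html.parser import HTMLParser

class SEOAuditParser(HTMLParser):
    """Collect the <title>, meta description, and <h1> texts from a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.h1s = []
        self._current = None  # "title" or "h1" while capturing text

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag in ("title", "h1"):
            self._current = tag
            if tag == "h1":
                self.h1s.append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1s[-1] += data

def audit(html):
    """Return a list of human-readable SEO issues found in one HTML page."""
    parser = SEOAuditParser()
    parser.feed(html)
    issues = []
    title = parser.title.strip()
    if not title:
        issues.append("missing <title>")
    elif len(title) > 60:  # common rule of thumb, not a hard limit
        issues.append("title longer than 60 characters")
    if not parser.meta_description:
        issues.append("missing meta description")
    if len(parser.h1s) != 1:
        issues.append(f"expected 1 <h1>, found {len(parser.h1s)}")
    return issues

page = """<html><head><title>Python for Marketers</title></head>
<body><h1>Automate SEO</h1><h1>Second heading</h1></body></html>"""
print(audit(page))
```

Fetching many pages, for example with Requests or Scrapy, and feeding each one to `audit` turns this into a site-wide report.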

Automating the Routine, Empowering the Creative

Repetitive tasks eat into a marketer's most valuable resource: time. Python helps eliminate the grunt work. Need to schedule social media posts, generate performance reports, or pull ad data across platforms? With just a few lines of code, Python can automate these tasks while you focus on creativity and strategy.

In Dehradun, a growing hub for tech and education, professionals are recognizing this trend. Enrolling in a Python Course in Dehradun not only boosts your marketing skill set but also opens up new career opportunities in analytics, SEO, and marketing automation. Local training programs often offer real-world marketing projects to ensure you gain hands-on experience with tools like Jupyter, APIs, and web scrapers — critical assets in the digital marketing toolkit.

Real-World Marketing Use Cases

Python's role in marketing isn’t just theoretical; it’s practical. Here are a few real-world scenarios where marketers are already using Python to their advantage:

Content Optimization: Automate keyword research and content gap analysis to improve your blog and web copy.

Email Campaign Analysis: Analyze open rates, click-throughs, and conversions to fine-tune your email strategies.

Ad Spend Optimization: Pull and compare performance data from Facebook Ads, Google Ads, and LinkedIn to make smarter budget decisions.

Social Listening: Monitor brand mentions or trends across Twitter and Reddit to stay responsive and relevant.
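For the email campaign analysis use case, the core metrics are simple ratios. This is a minimal sketch with made-up campaign numbers. It assumes all rates are computed against delivered emails; some teams use sent emails instead, or compute clicks relative to opens ("click-to-open rate").

```python
from dataclasses import dataclass

@dataclass
class CampaignStats:
    name: str
    sent: int
    delivered: int
    opens: int
    clicks: int
    conversions: int

    def rates(self):
        """Open, click-through, and conversion rates as % of delivered emails."""
        def pct(part):
            return round(100 * part / self.delivered, 1) if self.delivered else 0.0
        return {
            "open_rate": pct(self.opens),
            "click_rate": pct(self.clicks),
            "conversion_rate": pct(self.conversions),
        }

# Made-up numbers for illustration only.
march = CampaignStats("March newsletter", sent=1000, delivered=950,
                      opens=380, clicks=57, conversions=19)
april = CampaignStats("April promo", sent=2000, delivered=1900,
                      opens=570, clicks=190, conversions=38)

best = max([march, april], key=lambda c: c.rates()["click_rate"])
print(march.rates(), best.name)
```

Here the April promo has a lower open rate but a higher click-through rate. This is the kind of trade-off a side-by-side comparison surfaces.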

With so many uses, Python is quickly becoming the Swiss army knife for marketers. You don’t need to become a software engineer — even a basic understanding can dramatically improve your workflow.

Getting Started with Python

Whether you're a fresh graduate or a seasoned marketer, investing in the right training can fast-track your career. A quality Python training course in Dehradun will teach you how to automate marketing workflows, handle SEO analytics, and visualize campaign performance, all with practical, industry-relevant projects.

Look for courses that include modules on digital marketing integration, data handling, and tool-based assignments. These elements ensure you're not just learning syntax but applying it to real marketing scenarios. With Dehradun's increasing focus on tech education, it's a great place to gain this in-demand skill.

Python is no longer optional for forward-thinking marketers. As SEO becomes more data-driven and automation more essential, mastering Python gives you a clear edge. It simplifies complexity, drives efficiency, and helps you make smarter, faster decisions.

Now is the perfect time to upskill. Whether you're optimizing search rankings or building powerful marketing dashboards, Python is your key to unlocking smarter marketing in 2025 and beyond.

Python vs Ruby, What is the Difference? - Pros & Cons (YouTube video)

#python course#python training#education#python#pythoncourseinindia#pythoninstitute#pythoninstituteinindia#pythondeveloper#Youtube



Cryptocurrency data scraping TG@yuantou2048

In the rapidly evolving world of cryptocurrency, staying informed about market trends and price movements is crucial for investors and enthusiasts alike. One effective way to gather this information is through cryptocurrency data scraping. This method involves extracting data from various sources on the internet, such as exchanges, forums, and news sites, to compile a comprehensive dataset that can be used for analysis and decision-making.

What is Cryptocurrency Data Scraping?

Cryptocurrency data scraping refers to the process of automatically collecting and organizing data related to cryptocurrencies from online platforms. This data can include real-time prices, trading volumes, news updates, and social media sentiment. By automating the collection of this data, users can gain valuable insights into the cryptocurrency market, enabling them to make more informed decisions. Here’s how it works and why it’s important.
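Once prices have been collected, comparing them across exchanges is simple arithmetic. This sketch assumes you already have a mapping from exchange name to last price. The exchange names and prices are hypothetical placeholders, not real market data.

```python
def price_spread(quotes):
    """Given {exchange: price}, return (cheapest, priciest, spread in % of the low price)."""
    if len(quotes) < 2:
        raise ValueError("need quotes from at least two exchanges")
    cheapest = min(quotes, key=quotes.get)
    priciest = max(quotes, key=quotes.get)
    low, high = quotes[cheapest], quotes[priciest]
    spread_pct = (high - low) / low * 100
    return cheapest, priciest, round(spread_pct, 3)

# Illustrative numbers only.
btc_quotes = {"exchange_a": 64000.0, "exchange_b": 64640.0, "exchange_c": 64320.0}
print(price_spread(btc_quotes))
```

A persistent spread is only a signal to look closer. Fees, withdrawal limits, and stale quotes often explain it.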

Why Scrape Cryptocurrency Data?

1. Real-Time Insights: Scraping gives you up-to-date information about different cryptocurrencies, so the latest details are always at your fingertips.

2. Market Analysis: With the vast amount of information available online, manual tracking becomes impractical. Automated scraping tools help you stay ahead by providing timely and accurate information.

3. Tools and Techniques:

Web Scrapers: These are software tools designed to extract specific types of data from websites. They can gather data points like current prices, historical price trends, and community sentiment, which are essential for making informed investment decisions.

4. Automation: Instead of manually checking multiple platforms, automated scrapers can continuously monitor and collect data, saving time and effort.

5. Customization: You can tailor your scraper to focus on specific metrics or platforms, so the data you collect matches your needs.

6. Competitive Advantage: Having access to real-time data gives you an edge in understanding market dynamics and identifying potential opportunities or risks.

7. Legal Considerations: Make sure the data you collect complies with legal guidelines and respects the terms of service of the websites being scraped. Always check the legal and ethical implications before starting any scraping project.

8. Use Cases:

Price Tracking: Track the value of different cryptocurrencies across multiple exchanges.

Sentiment Analysis: Analyze social media and news feeds to gauge public opinion and predict market movements.

9. Challenges:

Dynamic Content: Websites often use JavaScript to load content dynamically, which requires advanced techniques to capture this data accurately.

Scraping Tools: Popular tools include the Python libraries BeautifulSoup and Selenium. BeautifulSoup parses HTML, and Selenium interacts with web pages through a real browser, which helps with dynamically loaded content.

10. Best Practices:

Respect Terms of Service: Ensure that your scraping activities comply with the terms of service of the websites you’re scraping from. Popular sources include CoinMarketCap and CoinGecko for market data, and Twitter for sentiment analysis.

11. Ethical and Legal Scrutiny: Be mindful of the ethical implications and ensure compliance with website policies.

12. Data Quality: The quality of the data is crucial. When possible, use robust frameworks and the APIs provided by exchanges directly, which avoids overloading their servers and improves reliability.

13. Future Trends: As the landscape evolves, staying updated with the latest technologies and best practices is key.

14. Conclusion: Cryptocurrency data scraping is a powerful tool for anyone interested in the crypto space and offers a wealth of information, but it requires careful implementation to avoid violating terms of service or facing legal issues.

15. Final Thoughts: While scraping can provide significant advantages, it’s vital to use these tools responsibly and ethically.

This structured approach ensures that you adhere to ethical standards while leveraging the power of automation to stay informed without infringing on copyright laws and privacy policies.
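Production sentiment analysis usually relies on trained models, but a keyword lexicon is enough to show the idea. The following is a deliberately naive sketch. Its word lists are hypothetical and far too small for real use, and it ignores negation ("not bullish") entirely.

```python
import re

# Hypothetical, tiny lexicons for illustration only.
POSITIVE = {"bullish", "moon", "surge", "rally", "gain"}
NEGATIVE = {"bearish", "crash", "dump", "hack", "loss"}

def sentiment_score(text):
    """Return (# positive words - # negative words) for one post."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

def average_sentiment(posts):
    """Mean score across posts: > 0 leans positive, < 0 leans negative."""
    return sum(map(sentiment_score, posts)) / len(posts) if posts else 0.0

posts = [
    "BTC looks bullish, expecting a rally and a big gain",
    "Another exchange hack, this could crash prices",
    "Sideways again today",
]
print([sentiment_score(p) for p in posts], average_sentiment(posts))
```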


Contact on Telegram: @yuantou2048

EPP Machine

Spider pool rental


News Extract: Unlocking the Power of Media Data Collection

In today's fast-paced digital world, staying updated with the latest news is crucial. Whether you're a journalist, researcher, or business owner, having access to real-time media data can give you an edge. This is where news extract solutions come into play, enabling efficient web scraping of news sources for insightful analysis.

Why Extracting News Data Matters

News scraping allows businesses and individuals to automate the collection of news articles, headlines, and updates from multiple sources. This information is essential for:

Market Research: Understanding trends and shifts in the industry.

Competitor Analysis: Monitoring competitors’ media presence.

Brand Reputation Management: Keeping track of mentions across news sites.

Sentiment Analysis: Analyzing public opinion on key topics.

By leveraging news extract techniques, businesses can access and process large volumes of news data in real time.

How News Scraping Works

Web scraping involves using automated tools to gather and structure information from online sources. A reliable news extraction service ensures data accuracy and freshness by:

Extracting news articles, titles, and timestamps.

Categorizing content based on topics, keywords, and sentiment.

Providing real-time or scheduled updates for seamless integration into reports.
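Many news sites publish RSS feeds, which are often the simplest and most polite source for these three steps. The following is a minimal sketch using only the standard library. It extracts titles, links, and timestamps from an RSS 2.0 feed and tags each article by keyword. The topic map, the feed contents, and the `example.com` URLs are all hypothetical.

```python
import re
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Hypothetical topic -> keyword map; tune it to your own coverage area.
TOPICS = {
    "markets": {"stocks", "inflation", "earnings"},
    "tech": {"ai", "chip", "software"},
}

def categorize(title):
    """Return every topic whose keywords appear in the title, or ["other"]."""
    words = set(re.findall(r"[a-z0-9]+", title.lower()))
    return [topic for topic, kws in TOPICS.items() if words & kws] or ["other"]

def parse_rss(xml_text):
    """Extract title, link, publish time, and topics from each RSS <item>."""
    root = ET.fromstring(xml_text)
    articles = []
    for item in root.iter("item"):
        title = item.findtext("title", default="").strip()
        pub = item.findtext("pubDate")
        articles.append({
            "title": title,
            "link": item.findtext("link", default=""),
            "published": parsedate_to_datetime(pub) if pub else None,
            "topics": categorize(title),
        })
    return articles

SAMPLE_FEED = """<rss version="2.0"><channel><title>Example News</title>
<item><title>AI chip demand lifts earnings</title><link>https://example.com/a</link>
<pubDate>Mon, 03 Mar 2025 09:30:00 GMT</pubDate></item>
<item><title>Local team wins final</title><link>https://example.com/b</link></item>
</channel></rss>"""

articles = parse_rss(SAMPLE_FEED)
print([(a["title"], a["topics"]) for a in articles])
```

For scheduled updates, the same function can run on a timer such as cron, keeping only links it has not seen before.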

The Best Tools for News Extracting

Various scraping solutions can help extract news efficiently, including custom-built scrapers and APIs. For instance, businesses looking for tailored solutions can benefit from web scraping services in India to fetch region-specific media data.

Expanding Your Data Collection Horizons

Beyond news extraction, companies often need data from other platforms. Here are some additional scraping solutions:

Python scraping Twitter: Extract real-time tweets based on location and keywords.

Amazon reviews scraping: Gather customer feedback for product insights.

Flipkart scraper: Automate data collection from India's leading eCommerce platform.

Conclusion

Staying ahead in today’s digital landscape requires timely access to media data. A robust news extract solution helps businesses and researchers make data-driven decisions effortlessly. If you're looking for reliable news scraping services, explore Actowiz Solutions for customized web scraping solutions that fit your needs.

#news extract#web scraping services India#Python scraping Twitter#Amazon reviews scraping#Flipkart scraper#Actowiz Solutions


Power Up Your Python Skills: 10 Exciting Projects to Master Coding

Forget textbooks and lectures – the most epic way to learn Python is by doing! This guide unveils 10 thrilling projects that will transform you from a programming rookie to a coding champion. Prepare to conquer these quests and unleash your creativity and problem-solving prowess.

With the help of a Learn Python Course in Hyderabad, studying Python becomes a lot more exciting, whether you’re a beginner or moving from another programming language.

Mission 1: Command Line Masters

Your quest begins with mastering the fundamentals. Build simple command-line applications – think math wizards, unit converters, or random password generators. These projects are the stepping stones to Pythonic greatness!
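The random password generator makes a good first project because it uses only the standard library. This is one possible sketch. It uses the `secrets` module, which is designed for security-sensitive randomness, unlike `random`. It also requires at least one character from each class, which is a design choice for this example, not a universal rule.

```python
import secrets
import string

def generate_password(length=16):
    """Return a random password with at least one lowercase, uppercase, digit, and symbol."""
    pools = [string.ascii_lowercase, string.ascii_uppercase,
             string.digits, string.punctuation]
    if length < len(pools):
        raise ValueError(f"length must be at least {len(pools)}")
    # One guaranteed character from each pool, the rest from all pools combined.
    chars = [secrets.choice(pool) for pool in pools]
    alphabet = "".join(pools)
    chars += [secrets.choice(alphabet) for _ in range(length - len(pools))]
    # Shuffle so the guaranteed characters are not always at the front.
    secrets.SystemRandom().shuffle(chars)
    return "".join(chars)

if __name__ == "__main__":
    print(generate_password(20))
```

Wrapping this in `argparse` so the length comes from the command line is a natural next step.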

Mission 2: Text-Based Games – Level Up

Time to challenge yourself! Create captivating text-based games like Hangman, Tic-Tac-Toe, or a trivia extravaganza. Craft engaging gameplay using loops, conditionals, and functions, while honing your Python skills in the process.
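The heart of Tic-Tac-Toe is the rule that decides when the game ends. In this sketch the board is assumed to be a list of nine strings (`"X"`, `"O"`, or `" "`) in row-major order. A full game would wrap these functions in a `while` loop that reads moves with `input()`.

```python
# Every row, column, and diagonal that wins, as board indices.
WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]

def winner(board):
    """Return 'X' or 'O' for three in a row, 'draw' if the board is full, else None."""
    for a, b, c in WIN_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return "draw" if " " not in board else None

def render(board):
    """Format the board as three rows separated by divider lines."""
    rows = [" | ".join(board[i:i + 3]) for i in (0, 3, 6)]
    return "\n---------\n".join(rows)

board = list("XXXOO    ")
print(render(board))
print(winner(board))
```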

Mission 3: Web Scraper – Unearthing Web Data

The vast web holds secrets waiting to be discovered! Build web scrapers to extract valuable information from websites. Employ libraries like BeautifulSoup and Requests to navigate the HTML jungle, harvest data, and unlock hidden insights.

Mission 4: Data Analysis Detectives

Become a data analysis extraordinaire! Craft scripts to manipulate and analyze data from diverse sources – CSV files, spreadsheets, or databases. Calculate statistics, then use matplotlib or seaborn to create eye-catching data visualizations that reveal hidden truths.
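A first data-analysis script can be as small as the one below, which groups rows from a CSV file and computes summary statistics. The sales data is made up and inlined so the sketch runs as-is. To use a real file, pass `open("sales.csv", newline="")` instead of the `StringIO` object.

```python
import csv
import io
import statistics

# Made-up sample data standing in for a real CSV file.
SALES_CSV = """region,month,revenue
North,Jan,1200
North,Feb,1500
South,Jan,900
South,Feb,1100
"""

def revenue_by_region(csv_file):
    """Group revenue values by region."""
    totals = {}
    for row in csv.DictReader(csv_file):
        totals.setdefault(row["region"], []).append(float(row["revenue"]))
    return totals

data = revenue_by_region(io.StringIO(SALES_CSV))
summary = {
    region: {
        "total": sum(values),
        "mean": statistics.mean(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
    }
    for region, values in data.items()
}
print(summary)
```

To visualize the result, one option with matplotlib is `plt.bar(list(summary), [s["total"] for s in summary.values()])`, followed by `plt.show()`.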

Mission 5: GUI Gurus – Building User-Friendly Interfaces

Take your Python mastery to the next level by crafting user-friendly graphical interfaces (GUIs) with Tkinter or PyQt. From to-do list managers to weather apps, these projects will teach you how to design intuitive interfaces and conquer user interactions.

Mission 6: API Alliances – Connecting to the World

Expand your horizons by building clients for web APIs. Interact with services like Twitter, Reddit, or weather APIs to retrieve and display data. Master the art of making HTTP requests, parsing JSON responses, and handling authentication – invaluable skills for any programmer. People can better understand Python’s complexity and reach its full potential by enrolling in the Best Python Certification Online.
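The request/parse/authenticate cycle looks roughly like the sketch below, which uses only the standard library. The endpoint URL, query parameters, bearer-token scheme, and response shape are all hypothetical, so check the documentation of the API you actually use. The libraries Requests or httpx would shorten this further.

```python
import json
import urllib.parse
import urllib.request

def build_request(base_url, params, token=None):
    """Build a GET request with query parameters and an optional bearer token."""
    url = f"{base_url}?{urllib.parse.urlencode(params)}"
    headers = {"Accept": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, headers=headers)

def fetch_json(request, timeout=10):
    """Send the request and decode the JSON body (needs network access)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)

def summarize_forecast(payload):
    """Pull display fields out of a (hypothetical) weather API response."""
    return [f"{day['date']}: {day['temp_c']}°C, {day['summary']}"
            for day in payload["daily"]]

req = build_request("https://api.example.com/v1/forecast",
                    {"city": "Hyderabad", "days": 2}, token="YOUR_TOKEN")
# payload = fetch_json(req)  # uncomment against a real API
payload = {"daily": [{"date": "2025-03-01", "temp_c": 31, "summary": "Sunny"}]}
print(summarize_forecast(payload))
```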

Mission 7: Automation Army – Streamlining Workflows

Say goodbye to repetitive tasks! Write automation scripts to handle tedious processes like file management, data processing, or email sending. Utilize libraries like os, shutil, and smtplib to free up your time and boost productivity.
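A classic first automation script is a downloads-folder tidier built on `os` and `shutil`. The sketch below moves each file into a subfolder named after its extension. The folder layout is an assumption for illustration. The demo works inside a temporary directory so it never touches your real files.

```python
import os
import shutil
import tempfile

def organize_by_extension(folder):
    """Move each file in `folder` into a subfolder named after its extension."""
    moved = {}
    for name in os.listdir(folder):  # snapshot taken before any moves
        path = os.path.join(folder, name)
        if not os.path.isfile(path):
            continue  # leave existing subdirectories alone
        ext = os.path.splitext(name)[1].lstrip(".").lower() or "no_extension"
        target_dir = os.path.join(folder, ext)
        os.makedirs(target_dir, exist_ok=True)
        shutil.move(path, os.path.join(target_dir, name))
        moved[name] = ext
    return moved

with tempfile.TemporaryDirectory() as tmp:
    for name in ("report.pdf", "photo.JPG", "notes.txt", "README"):
        open(os.path.join(tmp, name), "w").close()  # create empty demo files
    result = organize_by_extension(tmp)
    layout = sorted(os.listdir(tmp))

print(result)
print(layout)
```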

Mission 8: Machine Learning Marvels – Unveiling AI Power

Enter the fascinating world of machine learning! Build basic classification or regression models using scikit-learn. Start with beginner-friendly projects like predicting housing prices or classifying flowers, then explore more complex algorithms as you progress.
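Before reaching for scikit-learn, it helps to see what a simple regression model computes. This sketch fits a one-feature ordinary least squares line from scratch on made-up housing data (floor area versus price). It is not scikit-learn's implementation, just the underlying formula.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up data: floor area (m^2) vs price (in thousands).
areas = [50, 70, 90, 110]
prices = [150, 190, 230, 270]

slope, intercept = fit_line(areas, prices)
predicted = slope * 100 + intercept  # estimated price for a 100 m^2 home
print(slope, intercept, predicted)
```

In scikit-learn, the equivalent is `LinearRegression().fit(X, y)`, with `X` as a 2-D array of features. That version extends naturally to many features, such as area, rooms, and location.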

Mission 9: Web Development Warriors – Forge Your Online Presence

Immerse yourself in the thrilling world of web development. Construct simple websites or web applications using frameworks like Flask or Django. Whether it's a personal portfolio site, a blog, or a data-driven application, these projects will teach you essential skills like routing, templating, and database interactions.

Mission 10: Open Source Odyssey – Join the Coding Community

Become a valued member of the open-source community! Contribute to projects on platforms like GitHub. Tackle beginner-friendly issues, fix bugs, or improve documentation. Gain real-world experience and collaborate with fellow developers to make a lasting impact.

These 10 Python quests aren't just about acquiring coding skills – they're a gateway to a world of exploration and innovation. Each project offers a unique opportunity to learn, grow, and create something amazing. So, grab your virtual sword and shield (aka your code editor) and embark on this epic Python adventure!


This Week in Rust 539

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Newsletters

The Embedded Rustacean Issue #15

This Week in Bevy: Foundations, Meetups, and more Bevy Cheatbook updates

Project/Tooling Updates

rustc_codegen_gcc: Progress Report #31

Slint 1.5: Embracing Android, Improving Live-Preview, and introducing Pythonic Slint

yaml-rust2's first real release

testresult 0.4.0 released. The crate provides the TestResult type for concise and precise test failures.

Revolutionizing PostgreSQL Database Comparison: Introducing pgdatadiff in Rust — Unleash Speed, Safety, and Scalability

Observations/Thoughts

SemVer in Rust: Breakage, Tooling, and Edge Cases — FOSDEM 2024 annotated talk

Go's Errors: How I Learned to Love Rust

Strongly-typed IDs in SurrealDB

Iterators and traversables

Using PostHog with Rust

Using Rust on ESP32 from Windows

Compiling Rust to WASI

Achieving awful compression with digits of pi

Zig, Rust, and other languages

What part of Rust compilation is the bottleneck?

Lambda on hard mode: Inside Modal's web infrastructure

Embedded Rust Bluetooth on ESP: BLE Advertiser

[video] Diplomat - Idiomatic Multi-Language APIs - Robert Bastian - Rust Zürisee March 2024

Rust Walkthroughs

A Short Introduction to Rust and the Bevy Game Engine

[video] Strings and memory reallocation in Rust

Research

Rust Tools Survey (by JetBrains)

Miscellaneous

RustNL 2024 schedule announced

Fighting back: Turning the Tables on Web Scrapers Using Rust

The book "Code Like a Pro in Rust" is released

Red Hat's Long, Rust'ed Road Ahead For Nova As Nouveau Driver Successor

Crate of the Week

This week's crate is heck, a no_std crate to perform case conversions.

Thanks to Edoardo Morandi for the suggestion!

Please submit your suggestions and votes for next week!

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

No calls for testing were issued this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

Rama — add Form support (IntroResponse + FromRequest)

Rama — rename *Filter matchers to *Matcher

Rama — Provide support for boxed custom matchers in layer enums

Rama — use workspace dependencies for common workspace dep versionning

Rama — add open-telemetry middleware and extended prometheus support

Space Acres - Packaging for MacOS

Space Acres - Implement Loading Progress

Space Acres - Show more lines of logs when the app is "Stopped with error"

Space Acres - Tray Icon Support

Hyperswitch - [REFACTOR]: Remove Default Case Handling - Braintree

Hyperswitch - [REFACTOR]: Remove Default Case Handling - Fiserv

Hyperswitch - [REFACTOR]: Remove Default Case Handling - Globepay

ZeroCopy - Fix cfgs in rustdoc

ZeroCopy - Audit uses of "C-like" and prefer "fieldless"

ZeroCopy - in zerocopy-derive UI tests, detect whether we're building with RUSTFLAGS='-Wwarnings'

If you are a Rust project owner and are looking for contributors, please submit tasks here.

CFP - Speakers

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

* RustFest Zürich 2024 | Closes 2024-03-31 | Zürich, Switzerland | Event date: 2024-06-19 - 2024-06-24

* Oxidize 2024 | Closes 2024-03-24 | Berlin, Germany | Event date: 2024-05-28 - 2024-05-30

* RustConf 2024 | Closes 2024-04-25 | Montreal, Canada | Event date: 2024-09-10

* EuroRust 2024 | Closes 2024-06-03 | Vienna, Austria & online | Event date: 2024-10-10

* Scientific Computing in Rust 2024 | Closes 2024-06-14 | online | Event date: 2024-07-17 - 2024-07-19

If you are an event organizer hoping to expand the reach of your event, please submit a link to the submission website through a PR to TWiR.

Updates from the Rust Project

498 pull requests were merged in the last week

BOLT Use CDSort and CDSplit

NormalizesTo: return nested goals to caller

add_retag: ensure box-to-raw-ptr casts are preserved for Miri

f16 and f128 step 3: compiler support & feature gate

add -Z external-clangrt

add wasm_c_abi future-incompat lint

add missing try_visit calls in visitors

check library crates for all tier 1 targets in PR CI

copy byval argument to alloca if alignment is insufficient

coverage: initial support for branch coverage instrumentation

create some minimal HIR for associated opaque types

delay expand macro bang when there has indeterminate path

delegation: fix ICE on duplicated associative items

detect allocator for box in must_not_suspend lint

detect calls to .clone() on T: !Clone types on borrowck errors

detect when move of !Copy value occurs within loop and should likely not be cloned

diagnostics: suggest Clone bounds when noop clone()

do not eat nested expressions' results in MayContainYieldPoint format args visitor

don't create ParamCandidate when obligation contains errors

don't ICE when non-self part of trait goal is constrained in new solver

don't show suggestion if slice pattern is not top-level

downgrade const eval dangling ptr in final to future incompat lint

enable PR tracking review assignment for rust-lang/rust

enable creating backtraces via -Ztreat-err-as-bug when stashing errors

enable frame pointers for the standard library

ensure RPITITs are created before def-id freezing

fix 32-bit overflows in LLVM composite constants

fix ICE in diagnostics for parenthesized type arguments

fix long-linker-command-lines failure caused by rust.rpath=false

fix attribute validation on associated items in traits

fix stack overflow with recursive associated types

interpret: ensure that Place is never used for a different frame

make incremental sessions identity no longer depend on the crate names provided by source code

match lowering: don't collect test alternatives ahead of time

more eagerly instantiate binders

never patterns: suggest ! patterns on non-exhaustive matches

only generate a ptrtoint in AtomicPtr codegen when absolutely necessary

only invoke decorate if the diag can eventually be emitted

pass the correct DefId when suggesting writing the aliased Self type out

pattern analysis: Store field indices in DeconstructedPat to avoid virtual wildcards

provide structured suggestion for #![feature(foo)]

register LLVM handlers for bad-alloc / OOM

reject overly generic assoc const binding types

represent Result<usize, Box<T>> as ScalarPair(i64, ptr)

split refining_impl_trait lint into _reachable, _internal variants

stabilize imported_main

stabilize associated type bounds (RFC #2289)

stop walking the bodies of statics for reachability, and evaluate them instead

ungate the UNKNOWN_OR_MALFORMED_DIAGNOSTIC_ATTRIBUTES lint

unix time module now return result

validate builder::PATH_REMAP

miri: add some chance to reuse addresses of previously freed allocations

avoid lowering code under dead SwitchInt targets

use UnsafeCell for fast constant thread locals

add CStr::bytes iterator

add as_(mut_)ptr and as_(mut_)slice to raw array pointers

implement {Div,Rem}Assign<NonZero<X>> on X

fix unsoundness in Step::forward_unchecked for signed integers

implement Duration::as_millis_{f64,f32}

optimize ptr::replace

safe Transmute: Require that source referent is smaller than destination

safe Transmute: Use 'not yet supported', not 'unspecified' in errors

hashbrown: fix index calculation in panic guard of clone_from_impl

cargo tree: Control --charset via auto-detecting config value

cargo toml: Flatten manifest parsing

cargo: add 'open-namespaces' feature

cargo fix: strip feature dep when dep is dev dep

cargo: prevent dashes in lib.name

cargo: expose source/spans to Manifest for emitting lints

rustdoc-search: depth limit T<U> → U unboxing

rustdoc-search: search types by higher-order functions

rustdoc: add --test-builder-wrapper arg to support wrappers such as RUSTC_WRAPPER when building doctests

rustdoc: do not preload fonts when browsing locally

rustfmt: fix: ICE with expanded code

rustfmt: initial work on formatting headers

clippy: cast_lossless: Suggest type alias instead of the aliased type

clippy: else_if_without_else: Fix duplicate diagnostics

clippy: map_entry: call the visitor on the local's else block

clippy: option_option: Fix duplicate diagnostics

clippy: unused_enumerate_index: trigger on method calls

clippy: use_self: Make it aware of lifetimes

clippy: don't emit doc_markdown lint for missing backticks if it's inside a quote

clippy: fix dbg_macro false negative when dbg is inside some complex macros

clippy: fix empty_docs trigger in proc-macro

clippy: fix span calculation for non-ascii in needless_return

clippy: handle false positive with map_clone lint

clippy: lint when calling the blanket Into impl from a From impl

clippy: move iter_nth to style, add machine applicable suggestion

clippy: move readonly_write_lock to perf

clippy: new restriction lint: integer_division_remainder_used

rust-analyzer: distinguish integration tests from crates in test explorer

rust-analyzer: apply #[cfg] to proc macro inputs

rust-analyzer: implement ATPIT

rust-analyzer: support macro calls in eager macros for IDE features

rust-analyzer: syntax highlighting improvements

rust-analyzer: fix panic with impl trait associated types in where clause

rust-analyzer: don't auto-close block comments in strings

rust-analyzer: fix wrong where clause rendering on hover

rust-analyzer: handle attributes when typing curly bracket

rust-analyzer: ignore some warnings if they originate from within macro expansions

rust-analyzer: incorrect handling of use and panic issue in extract_module

rust-analyzer: make inlay hint resolving work better for inlays targetting the same position

rust-analyzer: refactor extension to support arbitrary shell command runnables

rust-analyzer: show compilation progress in test explorer

rust-analyzer: use --workspace and --no-fail-fast in test explorer

Rust Compiler Performance Triage

Even though the summary might not look like it, this was actually a relatively quiet week, with a few small regressions. The large regression that is also shown in the summary table was caused by extending the verification of incremental compilation results. However, this verification is not actually fully enabled by default, so these regressions are mostly only visible in our benchmarking suite, which enables the verification to achieve more deterministic benchmarking results. One small regression was also caused by enabling frame pointers for the Rust standard library, which should improve profiling of Rust programs.

Triage done by @kobzol. Revision range: e919669d..21d94a3d

Summary:

(instructions:u) | mean | range | count
Regressions ❌ (primary) | 2.5% | [0.4%, 7.8%] | 207
Regressions ❌ (secondary) | 2.9% | [0.2%, 8.3%] | 128
Improvements ✅ (primary) | - | - | 0
Improvements ✅ (secondary) | -1.0% | [-1.3%, -0.4%] | 4
All ❌✅ (primary) | 2.5% | [0.4%, 7.8%] | 207

4 Regressions, 1 Improvement, 6 Mixed; 4 of them in rollups. 67 artifact comparisons made in total.

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

Reserve gen keyword in 2024 edition for Iterator generators

Tracking Issues & PRs

Rust

[disposition: merge] Tracking Issue for raw slice len() method (slice_ptr_len, const_slice_ptr_len)

[disposition: merge] downgrade ptr.is_aligned_to crate-private

[disposition: merge] Stabilize unchecked_{add,sub,mul}

[disposition: merge] transmute: caution against int2ptr transmutation

[disposition: merge] Normalize trait ref before orphan check & consider ty params in alias types to be uncovered

Cargo

[disposition: merge] release cargo test helper crate to crates-io

New and Updated RFCs

[new] Add support for use Trait::method

Upcoming Events

Rusty Events between 2024-03-20 - 2024-04-17 🦀

Virtual

2024-03-20 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Rust for Rustaceans Book Club: Chapter 3 - Designing Interfaces

2024-03-20 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2024-03-21 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-03-26 | Virtual + In Person (Barcelona, ES) | BcnRust

13th BcnRust Meetup - Stream

2024-03-26 | Virtual (Dallas, TX, US) | Dallas Rust

Last Tuesday

2024-03-28 | Virtual + In Person (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-04-02 | Virtual (Buffalo, NY, US) | Buffalo Rust

Buffalo Rust User Group

2024-04-03 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Rust for Rustaceans Book Club: Chapter 4 - Error Handling

2024-04-03 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2024-04-04 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-04-09 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2024-04-11 | Virtual + In Person (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-04-11 | Virtual (Nürnberg, DE) | Rust Nüremberg

Rust Nürnberg online

2024-04-16 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

2024-04-17 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

Africa

2024-04-05 | Kampala, UG | Rust Circle Kampala

Rust Circle Meetup

Asia

2024-03-30 | New Delhi, IN | Rust Delhi

Rust Delhi Meetup #6

Europe

2024-03-20 | Girona, ES | Rust Girona

Introduction to programming Microcontrollers with Rust

2024-03-20 | Lyon, FR | Rust Lyon

Rust Lyon Meetup #9

2024-03-20 | Oxford, UK | Oxford Rust Meetup Group

Introduction to Rust

2024-03-21 | Augsburg, DE | Rust Meetup Augsburg

Augsburg Rust Meetup #6

2024-03-21 | Lille, FR | Rust Lille

Rust Lille #6: RSS and ECS!

2024-03-21 | Vienna, AT | Rust Vienna

Rust Vienna Meetup - March - Unsafe Rust

2024-03-23 | Stockholm, SE | Stockholm Rust

Ferris' Fika Forum | Map

2024-03-25 | London, UK | Rust London User Group

LDN Talks: Rust Nation 2024 Pre-Conference Meetup

2024-03-26 | Barcelona, ES + Virtual | BcnRust

13th BcnRust Meetup

2024-03-26 - 2024-03-28 | London, UK | Rust Nation UK

Rust Nation 2024 - Conference

2024-03-28 | Berlin, DE | Rust Berlin

Rust and Tell

2024-04-10 | Cambridge, UK | Cambridge Rust Meetup

Rust Meetup Reboot 3

2024-04-10 | Oslo, NO | Rust Oslo

Rust Hack'n'Learn at Kampen Bistro

2024-04-11 | Bordeaux, FR | Rust Bordeaux

Rust Bordeaux #2: Talks

2024-04-11 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup at Browns

2024-04-16 | Bratislava, SK | Bratislava Rust Meetup Group

Rust Meetup by Sonalake #5

2024-04-16 | Munich, DE + Virtual | Rust Munich

Rust Munich 2024 / 1 - hybrid

North America

2024-03-21 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2024-03-21 | Nashville, TN, US | Music City Rust Developers

Rust Meetup : Lightning Round!

2024-03-21 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group Meetup

2024-03-21 | Spokane, WA, US | Spokane Rust Meetup | Spokane Rust Website

Presentation: Brilliance in Borrowing

2024-03-22 | Somerville, MA, US | Boston Rust Meetup

Somerville Union Square Rust Lunch, Mar 22

2024-03-26 | Minneapolis, MN, US | Minneapolis Rust Meetup

Minneapolis Rust: Getting started with Rust!

2024-03-27 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2024-03-27 | Hawthorne (Los Angeles), CA, US | Freeform

Rust in the Physical World 🦀 Tech Talk Event at Freeform

2024-03-31 | Boston, MA, US | Boston Rust Meetup

Beacon Hill Rust Lunch, Mar 31

2024-04-04 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2024-04-11 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group Meetup

2024-04-16 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

In 10 years we went from “Rust will never replace C and C++” to “New C/C++ should not be written anymore, and you should use Rust”. Good job.

– dpc_pw on lobste.rs

Thanks to Dennis Luxen for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust

Text

a way data scraping can work without access to the api is, for instance, a browser extension that records the metadata of every post the user scrolls past (a method i've used on tiktok and twitter). i don't know of an extension that does this for tumblr specifically (bc tumblr's api still exists, it's not necessary to build these kinds of tools for research purposes), but i'm sure there are also scrapers used by AI companies that automate even more of this process and don't involve manually scrolling through a website at all

Text

TWITTER API IS $100 A MONTH

TWITTER SCRAPERS CAN'T SORT BY LATEST ANYMORE

FUCK YOU TWITTER

Text

Web Scraping Using Node.js

Web scraping with Node.js is an automated technique for gathering large amounts of data from websites. Most of this data is unstructured HTML, which is transformed into structured data, such as JSON or rows in a spreadsheet or database, so that it can be used in other applications.

Web scraping can be done in several ways: with online tools, through official APIs, or by writing your own scraping program from scratch. Many large websites, including Google, Twitter, Facebook, and Stack Overflow, offer APIs that give access to their data in structured form.

Web scraping typically uses two tools: a crawler and a scraper.

The crawler (also called a spider) is an automated program that browses the web by following links from page to page to find the pages that contain the required data.

The scraper is a tool built to extract specific data from those pages. Depending on the scale and difficulty of the project, its design can vary considerably so that it extracts the data accurately and efficiently.
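To make the crawler/scraper split concrete, here is a minimal breadth-first crawler sketched in Node.js. It is an illustration under assumptions, not a production tool: fetchHtml is a hypothetical function you pass in (on Node 18+ it could be url => fetch(url).then((r) => r.text())), links are found with a simple regex instead of a real HTML parser, and the crawl stays on the start URL's host and stops after maxPages pages. The returned map of URL to HTML is what a scraper would then process.

```javascript
// Find <a href="..."> targets and resolve them against the page URL.
// A regex is a fragile stand-in for an HTML parser; fine for a sketch only.
function extractLinks(html, baseUrl) {
  const links = [];
  const re = /<a\b[^>]*?\bhref\s*=\s*(?:"([^"]*)"|'([^']*)')/gi;
  let m;
  while ((m = re.exec(html)) !== null) {
    try {
      const url = new URL(m[1] ?? m[2], baseUrl);
      url.hash = ""; // page#a and page#b are the same page
      links.push(url.href);
    } catch {
      // ignore hrefs that are not valid URLs
    }
  }
  return links;
}

// Breadth-first crawl: visit each URL once, follow same-host links only.
// fetchHtml is a hypothetical async function supplied by the caller.
async function crawl(startUrl, fetchHtml, maxPages = 50) {
  const host = new URL(startUrl).host;
  const queue = [startUrl];
  const visited = new Set();
  const pages = new Map(); // url -> html, handed to the scraper afterwards
  while (queue.length > 0 && pages.size < maxPages) {
    const url = queue.shift();
    if (visited.has(url)) continue;
    visited.add(url);
    const html = await fetchHtml(url);
    pages.set(url, html);
    for (const link of extractLinks(html, url)) {
      if (new URL(link).host === host && !visited.has(link)) queue.push(link);
    }
  }
  return pages;
}
```

Injecting fetchHtml keeps the crawling logic separate from networking, so it can be tried against a fake in-memory site before pointing it at a real one; a real crawler would also respect the site's rules and add delays between requests.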

Different types of web scrapers

There are several types of web scrapers, each with its own approach to extracting data from websites. Here are some of the most common types:

Self-built web scrapers: Self-built web scrapers are customized tools created by developers using programming languages such as Python or JavaScript to extract specific data from websites. They can handle complex web scraping tasks and save data in a structured format. They are used for applications like market research, data mining, lead generation, and price monitoring.

Browser extension web scrapers: These are web scrapers that are installed as browser extensions and can extract data from websites directly from within the browser.

Cloud web scrapers: Cloud web scrapers are web scraping tools that are hosted on cloud servers, allowing users to access and run them from anywhere. They can handle large-scale web scraping tasks and provide scalable computing resources for data processing. Cloud web scrapers can be configured to run automatically and continuously, making them ideal for real-time data monitoring and analysis.

Local web scrapers: Local web scrapers are web scraping tools that are installed and run on a user's local machine. They are ideal for smaller-scale web scraping tasks and provide greater control over the scraping process. Local web scrapers can be programmed to handle more complex scraping tasks and can be customized to suit the user's specific needs.

What are scrapers mainly used for?

Scrapers are mainly used to collect and extract data automatically from websites and other online sources. Common uses include:

Price monitoring: Price monitoring is the practice of regularly tracking and analyzing the prices of products or services offered by competitors or in the market, with the aim of making informed pricing decisions. It involves collecting data on pricing trends and patterns, as well as identifying opportunities for optimization and price adjustments. Price monitoring can help businesses stay competitive, increase sales, and improve profitability.

Market research: Market research is the process of gathering and analyzing data on consumers, competitors, and market trends to inform business decisions. It involves collecting and interpreting data on customer preferences, behavior, and buying patterns, as well as assessing the market size, growth potential, and trends. Market research can help businesses identify opportunities, make informed decisions, and stay competitive.

News monitoring: News monitoring is the process of tracking news sources for relevant and timely information. It involves collecting, analyzing, and disseminating news and media content to provide insights for decision-making, risk management, and strategic planning. News monitoring can be done manually or with the help of technology and software tools.

Email marketing: Email marketing is a digital marketing strategy that involves sending promotional messages to a group of people via email. Its goal is to build brand awareness, increase sales, and maintain customer loyalty. It can be an effective way to communicate with customers and build relationships with them.

Sentiment analysis: Sentiment analysis is the process of using natural language processing and machine learning techniques to identify and extract subjective information from text. It aims to determine the overall emotional tone of a piece of text, whether positive, negative, or neutral. It is commonly used in social media monitoring, customer service, and market research.

How to scrape the web

Web scraping is the process of extracting data from websites automatically using software tools. The process involves sending a web request to the website and then parsing the HTML response to extract the data.

There are several ways to scrape the web, but here are some general steps to follow:

Identify the target website.

Gather the URLs of the pages from which you wish to pull data.

Send a request to these URLs to obtain the page's HTML.

Use locators, such as CSS selectors or XPath expressions, to find the data in the HTML.

Save the data in a structured format, such as a JSON or CSV file.
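The steps above can be sketched in Node.js with built-ins only (the global fetch needs Node 18 or later). For illustration it assumes the data you want is each page's <title>, and it uses a regex as a stand-in for a proper locator such as a CSS selector; scrapeTitles, extractTitle, and saveJson are hypothetical names, not part of any library.

```javascript
const fs = require("node:fs");

// Step 4: locate the data. A regex stands in for a real locator here.
function extractTitle(html) {
  const match = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
  return match ? match[1].trim() : null;
}

// Step 3: request each URL and pull the field out of its HTML.
async function scrapeTitles(urls) {
  const rows = [];
  for (const url of urls) {
    const response = await fetch(url);
    const html = await response.text();
    rows.push({ url, status: response.status, title: extractTitle(html) });
  }
  return rows;
}

// Step 5: save the structured result as JSON.
function saveJson(rows, path) {
  fs.writeFileSync(path, JSON.stringify(rows, null, 2));
}

// Steps 1-2: the target URLs come from the command line, e.g.
//   node scrape.js https://example.com https://example.org
if (process.argv.length > 2) {
  scrapeTitles(process.argv.slice(2))
    .then((rows) => saveJson(rows, "titles.json"))
    .catch((err) => {
      console.error(err);
      process.exitCode = 1;
    });
}
```

Running node scrape.js https://example.com should write a titles.json file with one { url, status, title } object per page. Requests are sent one after another rather than in parallel, which is slower but gentler on the target site.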

Examples:

SEO marketers are the group most likely to be interested in Google search results. They scrape them to compile keyword lists and to gather TDK information (short for Title, Description, and Keywords: the metadata of a web page; the title and description typically appear in the result list and strongly influence the click-through rate) for their SEO strategies.
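As a rough sketch of collecting TDK data, the function below reads the title, meta description, and meta keywords from a page's HTML. The helper names (attr, extractTdk) are hypothetical, and the regex approach only copes with simple, well-formed markup; a parser such as Cheerio would be more robust.

```javascript
// Read one attribute value (single- or double-quoted) from a tag string.
function attr(tag, name) {
  const re = new RegExp(`\\b${name}\\s*=\\s*(?:"([^"]*)"|'([^']*)')`, "i");
  const m = tag.match(re);
  return m ? (m[1] ?? m[2]) : null;
}

// Collect Title, Description, and Keywords from raw HTML.
function extractTdk(html) {
  const titleMatch = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
  const meta = {};
  for (const tag of html.match(/<meta\b[^>]*>/gi) ?? []) {
    const name = attr(tag, "name");
    // Attribute order varies, so look up name and content separately.
    if (name) meta[name.toLowerCase()] = (attr(tag, "content") ?? "").trim();
  }
  return {
    title: titleMatch ? titleMatch[1].trim() : null,
    description: meta.description ?? null,
    keywords: (meta.keywords ?? "")
      .split(",")
      .map((k) => k.trim())
      .filter(Boolean),
  };
}
```

Running extractTdk over each scraped result page and tallying the keywords is one way to start the keyword lists mentioned above.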

Another example: an eBay seller regularly scrapes data from eBay and other e-commerce marketplaces, building up a database over time for in-depth market research.

It is no surprise that Amazon is widely considered the most scraped website. Given its dominant position in e-commerce, Amazon's data is among the most representative for market research, and its product catalog is enormous.

Two popular tools for e-commerce scraping without coding

Octoparse: Octoparse is a web scraping tool that allows users to extract data from websites using a user-friendly graphical interface without the need for coding or programming skills.

ParseHub: ParseHub is a web scraping tool that allows users to extract data from websites using a user-friendly interface and provides various features such as scheduling and integration with other tools. It also offers advanced features such as JavaScript rendering and pagination handling.

Web scraping best practices you should be aware of:

1. Continuously parse & verify extracted data

Data conversion, also known as data parsing, is the process of converting data from one format to another, such as from HTML to JSON, CSV, or any other format required. Data extraction from web sources must be followed by parsing. This makes it simpler for developers and data scientists to process and use the gathered data.

To make sure the crawler and parser are operating properly, manually check parsed data at regular intervals.
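A minimal sketch of this parse-then-verify loop in Node.js, assuming hypothetical scraped product records with a name and a raw price string: parsePrice turns text like "$1,299.00" into a number, toCsv writes the records as CSV with RFC 4180-style quoting, and verify lists rows that look wrong so you can check them by hand. The field names and checks are illustrative only.

```javascript
// Parse: turn a scraped price string such as "$1,299.00" into a number (NaN if none).
function parsePrice(text) {
  const cleaned = String(text ?? "").replace(/[^0-9.]/g, "");
  return cleaned === "" ? NaN : Number(cleaned);
}

// Convert: quote a CSV field if it contains a comma, quote, or line break.
function csvField(value) {
  const s = value == null ? "" : String(value);
  return /[",\r\n]/.test(s) ? `"${s.replace(/"/g, '""')}"` : s;
}

function toCsv(records, columns) {
  const lines = [columns.join(",")];
  for (const record of records) {
    lines.push(columns.map((c) => csvField(record[c])).join(","));
  }
  return lines.join("\n");
}

// Verify: list problems so they can be reviewed instead of silently kept.
function verify(records) {
  const problems = [];
  records.forEach((r, i) => {
    if (!r.name || !String(r.name).trim()) problems.push(`row ${i}: missing name`);
    if (!Number.isFinite(r.price)) problems.push(`row ${i}: price is not a number`);
  });
  return problems;
}
```

In use, you would map raw rows to { name, price: parsePrice(priceText) }, run verify on the result, look at any reported rows, and only then write the file with toCsv.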

2. Choose the right tool for your web scraping project

Building your own web scraper

Select the website from which you want to get data.

Inspect the page's source code to see its elements and find the data you want to extract.

Write the program.

Run the code so that it sends requests to the target website.

Save the extracted data in the format you need for further analysis.

Using a pre-built web scraper

There are many open-source and low/no-code pre-built web scrapers available.

3. Check whether the website offers an API

To check if a website supports an API, you can follow these steps:

Look for a section on the website labeled "API" or "Developers". This section may be located in the footer or header of the website.

If you cannot find a dedicated section for the API, try searching for keywords such as "API documentation" or "API integration" in the website's search bar.

If you still cannot find information about the API, you can contact the website's support team or customer service to inquire about API availability.

If the website offers an API, look for information on how to access it, such as authentication requirements, API endpoints, and data formats.

Review any API terms of use or documentation to ensure that your intended use of the API complies with their policies and guidelines.

4. Use a headless browser

For example, Puppeteer.

Web scraping (sometimes loosely called web crawling or screen scraping) is widely used in many fields today. Before ready-made scraping tools became available, it seemed like magic to people without programming skills.

The high technical barrier kept many people from getting at big data. A web scraping tool is an automated bridge between everyday users and that large, opaque mass of data:

It eliminates repetitive tasks such as copying and pasting.

It organizes the retrieved data into well-structured formats, such as Excel, HTML, and CSV, among others.

It saves you time and money because you don’t have to hire a professional data analyst.

It is the solution for many people who lack technical skills, including marketers, dealers, journalists, YouTubers, academics, and many more.

Puppeteer

Puppeteer is a Node.js library that provides a high-level API for controlling Chrome/Chromium over the DevTools Protocol.

Puppeteer runs in headless mode by default, but it can be configured to run full (non-headless) Chrome/Chromium.

Note: Headless means a browser without a user interface, or “head.” The browser window is not displayed, but pages are still loaded and their code is still executed in the background.

With Puppeteer you can automate a wide range of browser tasks, such as opening pages, navigating between websites, and running JavaScript on a page.

Puppeteer can do most of the things you can do manually in the browser!

Here are a few examples to get you started:

Create PDFs and screenshots of the pages.

Crawl a SPA (Single-Page Application) and generate pre-rendered content (i.e. "SSR" (Server-Side Rendering)).

Automate form submission, UI testing, keyboard input, etc.

Create an automated testing environment using the latest JavaScript and browser features.

Capture a timeline trace of your website to help diagnose performance issues.

Test Chrome Extensions.

Cheerio

Cheerio is a Node package widely used for parsing HTML and XML.

It is a fast, flexible, and lean implementation of core jQuery designed specifically for the server.

Because it does not launch a browser or run scripts, Cheerio is considerably faster than Puppeteer.

Difference between Cheerio and Puppeteer

Cheerio is only a parser: it lets you traverse and query raw HTML and XML, but it does not execute any JavaScript on the page.

Puppeteer drives a complete browser, so it runs the page's JavaScript and handles its XHR requests.

Note: XHR (XMLHttpRequest) lets scripts in a page send network requests to a server and receive the responses without reloading the page.

Conclusion

In conclusion, Node.js and its libraries give programmers what they need to build robust, efficient web scrapers. However, it is essential to consider the legal and ethical implications of web scraping and to extract data responsibly.

Text

FYI, this is happening because he shut off the API. The bots he's talking about aren't new - the API made them a controllable problem, and now it's gone.

The #1 reason social networking sites have free APIs is to segment off bots - scrapers, spammers, etc - from normal user traffic. This both lightens the server load and makes it 100x easier for mods to catch abusive botters.

A public API is a security feature. On a site like Twitter or Reddit, killing the API means handing the site over to the bots.

twitter is broken today 😭 are there any other ways that people search for anthologies to apply to?

Text

In recent months, the signs and portents have been accumulating with increasing speed. Google is trying to kill the 10 blue links. Twitter is being abandoned to bots and blue ticks. There’s the junkification of Amazon and the enshittification of TikTok. Layoffs are gutting online media. A job posting looking for an “AI editor” expects “output of 200 to 250 articles per week.” ChatGPT is being used to generate whole spam sites. Etsy is flooded with “AI-generated junk.” Chatbots cite one another in a misinformation ouroboros. LinkedIn is using AI to stimulate tired users. Snapchat and Instagram hope bots will talk to you when your friends don’t. Redditors are staging blackouts. Stack Overflow mods are on strike. The Internet Archive is fighting off data scrapers, and “AI is tearing Wikipedia apart.” The old web is dying, and the new web struggles to be born.

The web is always dying, of course; it’s been dying for years, killed by apps that divert traffic from websites or algorithms that reward supposedly shortening attention spans. But in 2023, it’s dying again — and, as the litany above suggests, there’s a new catalyst at play: AI.

The problem, in extremely broad strokes, is this. Years ago, the web used to be a place where individuals made things. (..) Then companies decided they could do things better. They created slick and feature-rich platforms and threw their doors open for anyone to join. (..) The companies chased scale, because once enough people gather anywhere, there’s usually a way to make money off them. But AI changes these assumptions.

Given money and compute, AI systems — particularly the generative models currently in vogue — scale effortlessly. (..) Their output can potentially overrun or outcompete the platforms we rely on for news, information, and entertainment. (..). Companies scrape information from the open web and refine it into machine-generated content that’s cheap to generate but less reliable. This product then competes for attention with the platforms and people that came before them. Sites and users are reckoning with these changes, trying to decide how to adapt and if they even can.

In recent months, discussions and experiments at some of the web’s most popular and useful destinations — sites like Reddit, Wikipedia, Stack Overflow, and Google itself — have revealed the strain created by the appearance of AI systems.

Reddit’s moderators are staging blackouts after the company said it would steeply increase charges to access its API, with the company’s execs saying the changes are (in part) a response to AI firms scraping its data. (..) This is not the only factor — Reddit is trying to squeeze more revenue from the platform before a planned IPO later this year — but it shows how such scraping is both a threat and an opportunity to the current web, something that makes companies rethink the openness of their platforms.

Wikipedia is familiar with being scraped in this way. The company’s information has long been repurposed by Google to furnish “knowledge panels,” and in recent years, the search giant has started paying for this information. But Wikipedia’s moderators are debating how to use newly capable AI language models to write articles for the site itself. They’re acutely aware of the problems associated with these systems, which fabricate facts and sources with misleading fluency, but know they offer clear advantages in terms of speed and scope. (..)

Stack Overflow offers a similar but perhaps more extreme case. Like Reddit, its mods are also on strike, and like Wikipedia’s editors, they’re worried about the quality of machine-generated content. When ChatGPT launched last year, Stack Overflow was the first major platform to ban its output. (..)

The site’s management, though, had other plans. The company has since essentially reversed the ban by increasing the burden of evidence needed to stop users from posting AI content, and it announced it wants to instead take advantage of this technology. Like Reddit, Stack Overflow plans to charge firms that scrape its data while building its own AI tools — presumably to compete with them. The fight with its moderators is about the site’s standards and who gets to enforce them. The mods say AI output can’t be trusted, but execs say it’s worth the risk.

All these difficulties, though, pale in significance to changes taking place at Google. Google Search underwrites the economy of the modern web, distributing attention and revenue to much of the internet. Google has been spurred into action by the popularity of Bing AI and ChatGPT as alternative search engines, and it’s experimenting with replacing its traditional 10 blue links with AI-generated summaries. But if the company goes ahead with this plan, then the changes would be seismic.

A writeup of Google’s AI search beta from Avram Piltch, editor-in-chief of tech site Tom’s Hardware, highlights some of the problems. Piltch says Google’s new system is essentially a “plagiarism engine.” Its AI-generated summaries often copy text from websites word-for-word but place this content above source links, starving them of traffic. (..) If this new model of search becomes the norm, it could damage the entire web, writes Piltch. Revenue-strapped sites would likely be pushed out of business and Google itself would run out of human-generated content to repackage.

Again, it’s the dynamics of AI — producing cheap content based on others’ work — that is underwriting this change, and if Google goes ahead with its current AI search experience, the effects would be difficult to predict. Potentially, it would damage whole swathes of the web that most of us find useful — from product reviews to recipe blogs, hobbyist homepages, news outlets, and wikis. Sites could protect themselves by locking down entry and charging for access, but this would also be a huge reordering of the web’s economy. In the end, Google might kill the ecosystem that created its value, or change it so irrevocably that its own existence is threatened.

But what happens if we let AI take the wheel here, and start feeding information to the masses? What difference does it make?

Well, the evidence so far suggests it’ll degrade the quality of the web in general. As Piltch notes in his review, for all AI’s vaunted ability to recombine text, it’s people who ultimately create the underlying data (..). By contrast, the information produced by AI language models and chatbots is often incorrect. The tricky thing is that when it’s wrong, it’s wrong in ways that are difficult to spot.

Here’s an example. Earlier this year, I was researching AI agents — systems that use language models like ChatGPT that connect with web services and act on behalf of the user, ordering groceries or booking flights. In one of the many viral Twitter threads extolling the potential of this tech, the author imagines a scenario in which a waterproof shoe company wants to commission some market research and turns to AutoGPT (a system built on top of OpenAI’s language models) to generate a report on potential competitors. The resulting write-up is basic and predictable. (You can read it here.) It lists five companies, including Columbia, Salomon, and Merrell, along with bullet points that supposedly outline the pros and cons of their products. “Columbia is a well-known and reputable brand for outdoor gear and footwear,” we’re told. “Their waterproof shoes come in various styles” and “their prices are competitive in the market.” You might look at this and think it’s so trite as to be basically useless (and you’d be right), but the information is also subtly wrong.

To check the contents of the report, I ran it by someone I thought would be a reliable source on the topic: a moderator for the r/hiking subreddit named Chris. Chris told me that the report was essentially filler. (..) It doesn’t mention important factors like the difference between men’s and women’s shoes or the types of fabric used. It gets facts wrong and ranks brands with a bigger web presence as more worthy. Overall, says Chris, there’s just no expertise in the information — only guesswork. (..)

This is the same complaint identified by Stack Overflow’s mods: that AI-generated misinformation is insidious because it’s often invisible. It’s fluent but not grounded in real-world experience, and so it takes time and expertise to unpick. If machine-generated content supplants human authorship, it would be hard — impossible, even — to fully map the damage. And yes, people are plentiful sources of misinformation, too, but if AI systems also choke out the platforms where human expertise currently thrives, then there will be less opportunity to remedy our collective errors.

The effects of AI on the web are not simple to summarize. Even in the handful of examples cited above, there are many different mechanisms at play. In some cases, it seems like the perceived threat of AI is being used to justify changes desired for other reasons while in others, AI is a weapon in a struggle between workers who create a site’s value and the people who run it. There are also other domains where AI’s capacity to fill boxes is having different effects — from social networks experimenting with AI engagement to shopping sites where AI-generated junk is competing with other wares.

In each case, there’s something about AI’s ability to scale that changes a platform. Many of the web’s most successful sites are those that leverage scale to their advantage, either by multiplying social connections or product choice, or by sorting the huge conglomeration of information that constitutes the internet itself. But this scale relies on masses of humans to create the underlying value, and humans can’t beat AI when it comes to mass production. (..) There’s a famous essay in the field of machine learning known as “The Bitter Lesson,” which notes that decades of research prove that the best way to improve AI systems is not by trying to engineer intelligence but by simply throwing more computer power and data at the problem. (..)

Does this have to be a bad thing, though? If the web as we know it changes in the face of artificial abundance? Some will say it’s just the way of the world, noting that the web itself killed what came before it, and often for the better. Printed encyclopedias are all but extinct, for example, but I prefer the breadth and accessibility of Wikipedia to the heft and reassurance of Encyclopedia Britannica. And for all the problems associated with AI-generated writing, there are plenty of ways to improve it, too — from improved citation functions to more human oversight. Plus, even if the web is flooded with AI junk, it could prove to be beneficial, spurring the development of better-funded platforms. If Google consistently gives you garbage results in search, for example, you might be more inclined to pay for sources you trust and visit them directly.

Really, the changes AI is currently causing are just the latest in a long struggle in the web’s history. Essentially, this is a battle over information — over who makes it, how you access it, and who gets paid. But just because the fight is familiar doesn’t mean it doesn’t matter, nor does it guarantee the system that follows will be better than what we have now. The new web is struggling to be born, and the decisions we make now will shape how it grows.

Text

Ende Oktober bis Mitte November 2022 und wer weiß, wie lange noch

Man muss Archive dann anlegen, wenn es vollkommen unnötig wirkt, wie alt muss ich denn noch werden, um das endlich mal zu lernen?

Ich habe dasselbe Problem wie Thomas Jungbluth: Seit 14 Jahren habe ich Twitter-Likes und -Retweets wie eine Lesezeichenfunktion benutzt. Jetzt droht das alles zu verschwinden, zusammen mit meinen eigenen Tweets, die (neben dem Techniktagebuch und ein paar Chatlogs) wegen meines schlechten Gedächtnisses einen Großteil meiner Erinnerungen an diese Jahre enthalten. Also eben-nicht-Erinnerungen. Wenn das Archiv weg ist, sind wesentliche Teile meines Lebens gelöscht. Ich habe zwar schon gelegentlich meine Twitterdaten heruntergeladen, aber das letzte Mal ist viele Jahre her.

Mein erster Versuch, mein offizielles Archiv herunterzuladen, scheitert an Vergesslichkeit.

Mein zweiter Versuch ist erfolgreich. Aber das Archiv ist verdächtig winzig, 165 MB, selbst das Twitterarchiv des nur halb so alten Techniktagebuchs ist viel größer. Ich bin mir auch ziemlich sicher, früher schon Twitterarchive im 5-GB-Bereich runtergeladen zu haben. Das kann nicht stimmen, da sind bestimmt nur Staubmäuse und Fehlermeldungen drin. Ich sehe nicht mal rein.

Der Bookmark-Archivierungsdienst Pinboard hat von 2019 bis 2021 meine Tweets und meine Likes vollständig archiviert. Aber obwohl der Dienst kostenpflichtig ist, hat er danach aufgehört zu funktionieren (so viel zu “wenn du nicht bezahlst, bist du das Produkt”). Außerdem lassen sich die sehr schön archivierten Tweets und Likes bei Pinboard nicht exportieren. Die Export-Seite führt zu einer Fehlermeldung.

Surely there must be some ready-made tools for this, I think, and start searching.

Twint sounds very good in its description. It is a scraper, that is, an unofficial workaround for the (poor and incomplete) methods Twitter actually provides for this. The description puts it very nicely: "TWINT does not use Twitter's API to build its reports (...), but an alternative method." Unfortunately, it can't be installed on my hosting provider. The error messages mean nothing to me. For now I have to install everything on my rented server instead of locally, because my MacBook broke down at an inconvenient moment.

Twarc sounds decent too. It installs, but after that I fail (exactly how, I have already forgotten by the time I write this down).

While searching for something else, I find a promising and very short Python script on my server called download_any_accounts_tweets.py. Retweets saved with it, however, are truncated to 140 characters. I go and read the Twitter API documentation. The truncation is a consequence of tweets being extended to 280 characters in 2017, and the procedure for getting the full 280 characters of a retweet is convoluted. I have done a lot with the Twitter API and in principle I would know how to do it. But really I want to solve my problem right now, not after building the tools for it myself. Surely I can't be the only one with this problem!
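The truncation comes from how the v1.1 REST API represents retweets: even with `tweet_mode=extended`, a retweet's top-level `full_text` is an abbreviated "RT @user: ..." string, while the complete text sits in the embedded `retweeted_status` object. A minimal sketch, assuming you already have v1.1 tweet objects as Python dicts; the helper name `full_retweet_text` is hypothetical:

```python
def full_retweet_text(tweet: dict) -> str:
    """Return the complete text of a v1.1 tweet object, expanding retweets.

    Assumes the object was fetched with tweet_mode=extended ("full_text");
    falls back to compatibility-mode fields ("extended_tweet", "text").
    """

    def body(obj: dict) -> str:
        # Extended mode puts the text in "full_text"; compatibility mode
        # nests it under "extended_tweet" or leaves a possibly truncated "text".
        if "full_text" in obj:
            return obj["full_text"]
        if "extended_tweet" in obj:
            return obj["extended_tweet"]["full_text"]
        return obj.get("text", "")

    original = tweet.get("retweeted_status")
    if original is None:
        # Not a retweet: the top-level text is already complete.
        return body(tweet)
    # Rebuild the "RT @author: ..." prefix around the untruncated original.
    return f"RT @{original['user']['screen_name']}: {body(original)}"
```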

I find a ready-made tool on GitHub for downloading all likes via the Twitter API. Because you get blocked if you send thousands of requests to the API, I use the API keys of an as-yet-unborn Twitter bot and fetch the likes very, very slowly. At this pace it will take months until I have everything. And the likes downloaded this way are also truncated to 140 characters.
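Fetching "very, very slowly" amounts to pausing between paginated requests so the client stays under the rate limit. A minimal sketch of such a loop, assuming a hypothetical `fetch_page(cursor)` function that returns one page of likes plus the cursor for the next page (or `None` when done); the 60-second pause is an arbitrary placeholder, not Twitter's actual limit:

```python
import time
from typing import Callable, Iterator, List, Optional, Tuple

# Hypothetical page fetcher: takes a cursor (None for the first page) and
# returns (items_on_this_page, next_cursor_or_None).
PageFetcher = Callable[[Optional[str]], Tuple[List[dict], Optional[str]]]


def fetch_all_slowly(
    fetch_page: PageFetcher,
    pause_seconds: float = 60.0,  # placeholder interval, not an official limit
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[dict]:
    """Yield every item from a paginated source, pausing between requests."""
    cursor: Optional[str] = None
    while True:
        items, cursor = fetch_page(cursor)
        yield from items
        if cursor is None:
            return  # last page reached
        # Wait before the next request so the API doesn't block the keys.
        sleep(pause_seconds)
```

Passing `sleep` as a parameter keeps the loop testable without actually waiting.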

I can't use the popular Twitter scraper snscrape, because the official version requires Python 3.8 and my server only runs Python 3.7. There is nothing I can do about that. The unofficial snscrape version for Python 3.7 just produces an ungoogleable error message.

https://github.com/bisguzar/twitter-scraper doesn't work either, again with unclear error messages.

Now I do take a look at my too-small official Twitter data download after all. Surprisingly, it doesn't look that bad. My own tweets are all there. Of all the retweets, only the first 140 characters are included. Apparently the people in charge at Twitter also found it too hard to get the second half. That comforts me a little. The likes are not in the archive at all. I request my data a third time in the hope that the likes might come along this time. (Update: The third download is just as small and incomplete as the second.) At https://github.com/timhutton/twitter-archive-parser, a tool for fixing the flaws of the official Twitter archive, I file a feature request about the halved retweets. Maybe the volunteer developer of this tool can manage what the paid Twitter staff couldn't. (As I said, in principle I would know how to do it, but I have no idea about GitHub and pull requests and so on, and I don't dare get involved. While writing this down, though, it occurs to me that I could tinker with a private copy of this parser without anyone seeing. Maybe I'll try that next.)
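To check how many archived retweets are affected, you can scan the archive's tweet file. A minimal sketch, assuming the archive layout of that period: a `data/tweets.js` file (older archives call it `tweet.js`) holding a JavaScript assignment like `window.YTD.tweets.part0 = [...]`, with each entry wrapped in a `"tweet"` key that has a `full_text` field. Treat these names as assumptions and check them against your own download:

```python
import json
from typing import List


def load_archive_tweets(js_source: str) -> List[dict]:
    """Parse the contents of an archive tweets.js file into tweet dicts.

    Assumes the file is a single assignment 'window.YTD.<name> = [...]'.
    """
    # Drop the JavaScript assignment prefix; the rest is plain JSON.
    _, _, json_part = js_source.partition("=")
    entries = json.loads(json_part)
    # Entries are assumed to look like {"tweet": {...}}; unwrap if present.
    return [entry.get("tweet", entry) for entry in entries]


def archived_retweets(tweets: List[dict]) -> List[dict]:
    """Return retweets, whose archived "RT @..." text may be cut off."""
    return [t for t in tweets if t.get("full_text", "").startswith("RT @")]
```

For a real archive, pass in the file contents, e.g. `load_archive_tweets(Path("data/tweets.js").read_text(encoding="utf-8"))` with `pathlib.Path`.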

Meanwhile, every day brings news of fresh waves of layoffs at Twitter and of features crumbling away. It's a race against time, and next time, I resolve, I really, really will make local backups of everything in good time. Or I'll find some shameless, expensive, professional provider who does it for me automatically (and then I'll make local backups of their archives in good time).

(Kathrin Passig)


How to Scrape Instagram Social Media Data Using the iWeb Scraping API

Why Should You Scrape Instagram Data?

Instagram is a prominent social networking platform where users interact and share images, videos, and other media. While many social media platforms offer useful information, Instagram is particularly valuable because it has over 500 million users, the majority of whom are between the ages of 18 and 24. Understanding how this younger generation thinks is extremely useful for apparel brands and other businesses that serve a younger audience.

Studying this audience can help you find new customers and understand their attitudes, and the results can feed into a broader social media analysis. Consider our API page if this seems like the right next step for your company.

What Is an Instagram Scraper?

Web scraping is a method of fetching data from a website automatically. A scraping tool, also known as a scraper, downloads a web page and extracts the information you need from it. A scraper built specifically for Instagram helps you gather the relevant information from the site without collecting unrelated page content. In short, an Instagram scraper is a tool designed to extract Instagram data.
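To make "download a page, extract what you need" concrete, here is a minimal sketch using only Python's standard library. It parses an HTML string and collects `<meta property="..." content="...">` and `<meta name="..." content="...">` tags, a common place for pages to expose a title and summary. Which tags a given Instagram page actually contains is an assumption, and downloading the page (and respecting the site's terms) is left out:

```python
from html.parser import HTMLParser
from typing import Dict


class MetaTagParser(HTMLParser):
    """Collect <meta property=... content=...> pairs from an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.meta: Dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        # Open Graph tags use "property"; many other tags use "name".
        key = attributes.get("property") or attributes.get("name")
        if key and attributes.get("content") is not None:
            self.meta[key] = attributes["content"]


def extract_meta(html: str) -> Dict[str, str]:
    """Return a mapping of meta-tag keys to their content values."""
    parser = MetaTagParser()
    parser.feed(html)
    parser.close()
    return parser.meta
```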

How to Use an Instagram Scraper

The web scraping service delivered by iWeb Scraping is simple to use. With an Instagram scraper, you can fetch data such as:

Total number of followers

Number of posts

Information about a user's last few posts

An Instagram scraper fetches this information, which makes it simple to learn what interests your target audience. You can then use it to plan marketing campaigns, develop new products, and guide customer outreach.
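Once a scraper has the raw text, fields like these still have to be turned into numbers. A minimal sketch, assuming a hypothetical summary string of the form "1,234 Followers, 56 Following, 78 Posts" (the wording Instagram actually uses is not guaranteed and can change) and a hypothetical `ProfileSnapshot` record:

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Matches e.g. "1,234 Followers"; the label wording is an assumption.
COUNT_PATTERN = re.compile(r"([\d,]+)\s+(Followers|Following|Posts)", re.IGNORECASE)


@dataclass
class ProfileSnapshot:
    username: str
    followers: Optional[int] = None
    posts: Optional[int] = None
    recent_posts: List[str] = field(default_factory=list)  # e.g. captions


def parse_profile_summary(username: str, summary: str) -> ProfileSnapshot:
    """Fill follower and post counts from a summary string, if present."""
    snapshot = ProfileSnapshot(username=username)
    for number, label in COUNT_PATTERN.findall(summary):
        value = int(number.replace(",", ""))  # "1,234" -> 1234
        if label.lower() == "followers":
            snapshot.followers = value
        elif label.lower() == "posts":
            snapshot.posts = value
    return snapshot
```

Abbreviated counts such as "1.2M" would need extra handling; this sketch leaves those fields as `None`.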

Advantages of Scraping Instagram Data

Scraping Instagram data has several benefits. Here are a few kinds of Instagram data that can help your organization.

Consumer Opinions

Organizations are continually looking for new ways to understand their customers' wants and needs. On social media, you can see how people present themselves, whom they follow, and who follows them. This information is valuable for businesses, and Instagram is especially useful for studying a younger target group. Because Instagram is such a visual platform, you can also use it to spot visual trends (colors, styles, and so on) that matter particularly to clothing and lifestyle brands.

If your company has its own Instagram account, a good place to start is scraping the profiles of users who regularly like or engage with your content. There's a good chance their friends and followers will be interested in your products or services as well. After scraping a large number of relevant accounts, you'll have a range of consumer opinion data that helps you figure out what your customers need without extensive research or focus groups.
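Finding the users who "regularly" engage reduces to counting engagements per user. A minimal sketch, assuming you have already scraped a hypothetical list of `(post_id, username)` engagement records; the threshold of 3 distinct posts is an arbitrary example:

```python
from collections import Counter
from typing import Iterable, List, Tuple


def frequent_engagers(
    engagements: Iterable[Tuple[str, str]],  # (post_id, username) pairs
    min_posts: int = 3,  # arbitrary example threshold
) -> List[Tuple[str, int]]:
    """Return users who engaged with at least `min_posts` distinct posts,
    most active first (ties broken alphabetically)."""
    # Deduplicate so several likes or comments on one post count once.
    distinct = set(engagements)
    per_user = Counter(username for _, username in distinct)
    ranked = sorted(per_user.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(user, n) for user, n in ranked if n >= min_posts]
```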

Connecting with Influencers

Instagram influencers are users who have thousands of followers and are known for their lifestyle aesthetic, expert knowledge, personality, and more. For instance, many fitness enthusiasts produce workout videos and share motivational content on social media. Scraping fitness influencers' accounts gives you access to a large audience interested in fitness and workout gear.

Scraping these accounts and their posts can also give you valuable marketing information about the types of fitness posts that are popular on Instagram. Studying influencers' profiles is a great starting point if you want to develop your brand identity. Larger companies can also collaborate with influencers to feature their products in videos. Many corporations, such as Nike and Adidas, work with athletes, entertainers, and even ordinary social media users to increase their visibility.

Finding and Growing Your Audience

It can be challenging to locate your target audience as a new business. You can identify relevant profiles to scrape by searching for specific hashtags or locations on Instagram. If you're planning to open a jewelry store in Columbus, Ohio, scraping profiles from that area first can help you narrow down the local demographics. Once you find profiles in Columbus, extract those that appear to fit your target demographic. This approach will help you identify local competitors, locate prospective customers, and gain insight into different demographics so you can choose which best suits your business.
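Narrowing scraped profiles to one city and one niche is a filtering step. A minimal sketch, assuming hypothetical profile records that already carry a free-text `location` and the `hashtags` found in recent posts (how you obtain those fields depends on your scraper):

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Set


@dataclass
class ScrapedProfile:  # hypothetical record shape
    username: str
    location: str = ""
    hashtags: Set[str] = field(default_factory=set)


def local_prospects(
    profiles: Iterable[ScrapedProfile], city: str, interests: Set[str]
) -> List[str]:
    """Usernames whose location mentions `city` and who used any of the
    `interests` hashtags (case-insensitive, leading '#' optional)."""
    city_lower = city.lower()
    wanted = {tag.lower().lstrip("#") for tag in interests}
    matches = []
    for p in profiles:
        tags = {tag.lower().lstrip("#") for tag in p.hashtags}
        if city_lower in p.location.lower() and tags & wanted:
            matches.append(p.username)
    return matches
```

Plain substring matching is crude: "Columbus" would also match Columbus, Georgia, so real location data would need a stricter check.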

Integrating the Instagram Scraper

A data collection routine that draws on a variety of sources is critical for any successful firm. Here are some ideas for incorporating Instagram scraping into that routine to obtain additional data. Because social media marketing is so important today, you want to make sure you're focusing your efforts on the right platforms. Using a web scraping tool on other sites, such as Twitter, makes it easier to compare audiences across platforms, which helps you design the ideal marketing plan.

iWeb Scraping API

The iWeb Scraping API makes it simple to feed data collected from the web straight into your analytics software of choice, rather than designing your own API from scratch. This lets you collect and aggregate data from all the web sources mentioned in this post without running a separate analysis for each one. Combining all the information saves time and money and gives you reliable results.

After scraping Instagram, you will want to load the information into an analytics package so you can use it quickly. The iWeb Scraping API lets you feed any web data directly into your preferred analysis tool. It also relieves you of the burden of developing and monitoring proxies, since you benefit from the iWeb Scraping team's experience.

With our staff to assist you, your firm will have much more time to use the information in unique and creative ways. Instead of examining each social media site individually, you can scrape data from several sites and merge it into a broader social media analysis. Check out our API page if this seems like the right next step for you.
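Merging several platforms into one analysis mostly means mapping each source's records onto a shared shape before summarizing. A minimal sketch, assuming hypothetical per-platform records whose follower counts live under differently named fields; the field names below are illustrative, not a description of any particular API:

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Illustrative mapping from each platform's follower field to a common name.
FOLLOWER_FIELD = {"instagram": "followers", "twitter": "followers_count"}


def normalize(platform: str, record: dict) -> dict:
    """Map one platform-specific record onto a shared schema."""
    return {
        "platform": platform,
        "username": record["username"],
        "followers": int(record.get(FOLLOWER_FIELD[platform], 0)),
    }


def summarize(sources: Dict[str, Iterable[dict]]) -> Dict[str, dict]:
    """Per-platform account count and total followers."""
    rows: List[dict] = [
        normalize(platform, rec)
        for platform, records in sources.items()
        for rec in records
    ]
    summary: Dict[str, dict] = defaultdict(lambda: {"accounts": 0, "followers": 0})
    for row in rows:
        summary[row["platform"]]["accounts"] += 1
        summary[row["platform"]]["followers"] += row["followers"]
    return dict(summary)
```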

Conclusion

Instagram is known for photos of friends, influencers, and fashion, but it also holds a lot of data. Web scraping, the automated retrieval of data from web pages, has made it easier than ever to get this data, regardless of your skill level. Scraping Robot's Instagram extractor lets you enjoy the advantages of scraping without worrying about technical planning and development. Instagram scraping is ideal for apparel, skincare, and streetwear brands because the platform reaches a younger clientele. The resulting customer information and connections with influencers can help you locate and grow your target audience.

Looking to scrape Instagram data? Contact iWeb Scraping today.

https://www.iwebscraping.com/how-to-scrape-instagram-social-media-data-using-iweb-scraping-api.php


Top 5 Web Scraping Tools in 2021

Web scraping, also known as web harvesting or web data extraction, is the process of obtaining and analyzing data from a website. The extracted data is then saved to a local database for various purposes. Scraping can be done manually or automatically with software, and automated processes are generally more cost-effective than manual ones. Because so much digital information is online, companies equipped with these tools can collect more data at a lower cost than companies without them, and gain a competitive advantage in the long run.
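The extract-then-store pipeline described above can be sketched with Python's standard library: parse links out of a page and save them to a local SQLite database. This is a minimal sketch, assuming the HTML has already been downloaded; the `links` table and its columns are hypothetical:

```python
import sqlite3
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class LinkExtractor(HTMLParser):
    """Collect (href, link text) pairs from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")  # None if no href
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def store_links(html: str, conn: sqlite3.Connection) -> int:
    """Extract links from `html`, save them, and return how many were stored."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    conn.execute("CREATE TABLE IF NOT EXISTS links (href TEXT, text TEXT)")
    conn.executemany("INSERT INTO links VALUES (?, ?)", parser.links)
    conn.commit()
    return len(parser.links)
```

For example, `store_links(page_html, sqlite3.connect("scraped.db"))` would append the page's links to a file-backed database.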

How web scraping benefits businesses