#voxel make this noise


Text

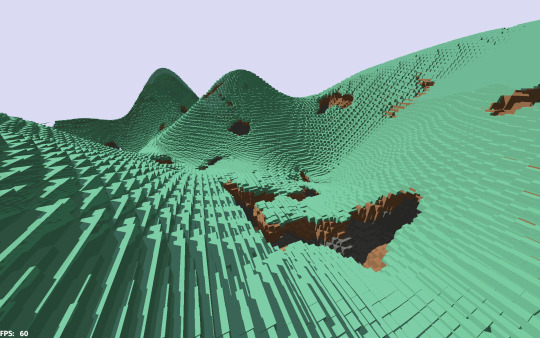

i do think like if you're not immersed in graphics and thinking about all the data flowing around between different buffers and shit that's going on inside a frame, when you see a render of a shiny box reflecting on a wall, it's like. ok and? it's a box? but there's so much nuance to the way light floods through an environment. and so much ingenuity in finding out what we can fake and precompute and approximate, to get our imaginary space to have that same gorgeous feeling of 'everything reflects off everything else'.

light is just one example, but I think all the different systems of feedback interactions in the world are just so juicy. geology is full of them. rocks bouncing up isostatically as the glaciers flow off them. spreading ridges and stripes of magnetic polarity revealing themselves. earthquakes ringing across the world. clouds forming around mountain peaks, giving rise to forests and deserts. soil being shaped by erosion, directing future water into channels. biological evolution!! I turned away from earth sciences many years ago, to pursue maths and physics and later art and now computer programming, but all of these things link up and inform the thing I'm doing at the moment.

sometimes you try to directly recreate the underlying physics, sometimes you're just finding mathematical shapes that feel similar: fractal noise that can be evaluated in parallel, pushed through various functions, assigned materials, voxellised and marching cubesed and pushed onto the gpu, shaded and offset by textures, and in the end, it looks sorta like grass. an island that will be shaped further as the players get their hands on it.
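To make the "fractal noise, pushed through functions, voxellised" chain a bit more concrete, here is a minimal sketch in plain Python. It is not the game's actual pipeline (which isn't shown in the post): the hash constants, `fbm` parameters and the `voxelise` helper are all assumptions picked for illustration. Each sample depends only on its own coordinates, which is what makes this kind of noise easy to evaluate in parallel.

```python
import math

def hash01(ix, iy, seed=0):
    """Deterministic pseudo-random value in [0, 1) for an integer lattice point."""
    h = (ix * 374761393 + iy * 668265263 + seed * 974711) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    return (h ^ (h >> 16)) / 2**32

def value_noise(x, y, seed=0):
    """Smoothly interpolated lattice noise in [0, 1)."""
    ix, iy = math.floor(x), math.floor(y)
    fx, fy = x - ix, y - iy
    sx = fx * fx * (3 - 2 * fx)  # smoothstep weights
    sy = fy * fy * (3 - 2 * fy)
    a, b = hash01(ix, iy, seed), hash01(ix + 1, iy, seed)
    c, d = hash01(ix, iy + 1, seed), hash01(ix + 1, iy + 1, seed)
    top = a + (b - a) * sx
    bottom = c + (d - c) * sx
    return top + (bottom - top) * sy

def fbm(x, y, octaves=4, lacunarity=2.0, gain=0.5, seed=0):
    """Fractal noise: sum octaves of rising frequency and falling amplitude."""
    total, norm, amplitude, frequency = 0.0, 0.0, 1.0, 1.0
    for octave in range(octaves):
        total += amplitude * value_noise(x * frequency, y * frequency, seed + octave)
        norm += amplitude
        amplitude *= gain
        frequency *= lacunarity
    return total / norm

def voxelise(size=8, max_height=6, scale=0.2, seed=0):
    """Turn the noise into columns of solid/air voxels (True means solid)."""
    columns = {}
    for x in range(size):
        for z in range(size):
            h = 1 + int(fbm(x * scale, z * scale, seed=seed) * (max_height - 1))
            h = min(h, max_height - 1)  # keep the top layer as air
            columns[(x, z)] = [y < h for y in range(max_height)]
    return columns
```

From here a real engine would run something like marching cubes over the solid/air grid to get a mesh; that step is left out of the sketch.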

it's all just a silly game about hamsters shooting missiles. and yet when you spend this long immersed in a project, so much reveals itself. nobody will probably ever think about these details as much as I have been. and yet I hope something of that comes across.

they say of the Bevy game engine that everyone's trying to make a voxel engine or tech demo instead of an actual game. but in a way, I love that. making your own personal voxel engine is a cool game, for you.

computers are for playing with

105 notes


Note

Not sure if it is anything special but I love love the style of journey and sky children of the light, do they use any shaders in specific?

did a pretty deep dive into the sparkly sand of journey but hooo boy sky children of the light

this is my shader white whale . some day i want to know how they do this. holding out for their incredibly talented graphics engineer john edwards to do a gdc talk or something on them like he did for the sand in journey. this game runs on phones!!!! its a mobile game!!!!!!!!

all i know is that journey uses something called "blobs" to do the terrain and clouds , which i imagine is some kind of SDF/voxel rendering, meaning instead of making a sphere out of triangles/quads like most games do, they would just say "hey, put a sphere here" and it is a perfect sphere because you can use math to find where the sphere starts/stops.. like here's a simplified example in 2d, where the left is what most render engines do building things out of triangles/quads (or in this case lines), and right, what sdf engines do
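A minimal sketch of that 2D comparison, assuming nothing about how Journey or Sky actually implement their "blobs": `circle_sdf` answers "how far is this point from the circle's edge" with plain math, while `polygon_circle` builds the usual straight-line approximation. The function names are made up for the example.

```python
import math

def circle_sdf(px, py, cx, cy, r):
    """Signed distance to a circle: negative inside, zero on the edge, positive outside."""
    return math.hypot(px - cx, py - cy) - r

def polygon_circle(cx, cy, r, sides):
    """Approximate the same circle with straight line segments, mesh-style."""
    return [
        (cx + r * math.cos(2 * math.pi * k / sides),
         cy + r * math.sin(2 * math.pi * k / sides))
        for k in range(sides)
    ]

def polygon_max_error(r, sides):
    """Largest gap between the polygon and the true circle (at each edge midpoint)."""
    return r - r * math.cos(math.pi / sides)
```

With 8 sides the polygon misses the true edge by about 7.6% of the radius at each edge midpoint, and adding more sides only shrinks the gap. The distance function has no gap to shrink, since the edge is wherever the distance is zero.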

so anyways having these things be SDF lets you do a ton of stuff you wouldnt be able to do like smooth noise displacement and fancy lighting calculations (though i imagine a lot of the lighting is baked ahead of time. this game runs on a phone !!!!! but also this game has a day/night/weather cycle so actually it might be realtime ?!?) if it is run ahead of time the lighting setup is probably run in a very similar way to how Actual clouds are rendered and mapped to the clouds in some way (through a texture? through the vertex color? this thing is a SDF does it even have vertex colors? maybe a point cloud generated from the SDF?) and if its realtime. then they either found a very fast way to do that lighting calculation with the SDFs or they found something that approximates it very well

and then for the surface of the clouds.. my best guesses is they are using a noise to wiggle around the surface of the SDF in a way that makes it look all fluffy and cloudy, or they are using some kind of particle system to add extra little bits of SDF to the surface of the clouds. or both!!! maybe they do one for close-up and one for far.. i dont know!!! i want to learn so bad though. it is such a pretty game
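The first guess (noise wiggling the SDF surface) could look something like the sketch below. This is purely an illustration of the general idea, not a claim about what Sky does: `wobble` is a cheap sine product standing in for a real noise function, and the amplitude and frequency defaults are arbitrary.

```python
import math

def sphere_sdf(p, centre, radius):
    """Signed distance from point p to a sphere's surface."""
    return math.dist(p, centre) - radius

def wobble(p, frequency):
    """Stand-in for a 3D noise function; always returns a value in [-1, 1]."""
    x, y, z = p
    return math.sin(x * frequency) * math.sin(y * frequency) * math.sin(z * frequency)

def fluffy_sdf(p, centre=(0.0, 0.0, 0.0), radius=1.0, amplitude=0.1, frequency=8.0):
    """Push the sphere's surface in and out by up to `amplitude`."""
    return sphere_sdf(p, centre, radius) - amplitude * wobble(p, frequency)
```

One caveat: after displacement the result is no longer an exact distance, only a bounded approximation of one, so a raymarcher stepping through it usually has to take slightly smaller steps.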

#cirivere#ask#potion of answers your question#gamedev stuff#its not Just sdf based though only the terrain and clouds#structures and stuff are meshes

239 notes


Text

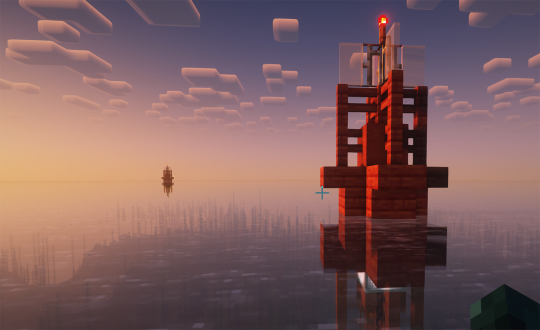

"How did you get here? Where are you heading?"

...is something I imagine you may be asking. Or maybe you already imagined an answer for. Whatever the case, to keep it simple, @andeditor7 and I (@recusantalchemist, formerly @goblingrowlart on here and @goblingrowlmods on twitter) were working on our Wilder Horizons mod for Minecraft one day, and we finally stopped to ask ourselves WHY on the gods green-yet-fading earth we were overhauling someone else's game with a completely different artistic direction than our own to such an extent when we could simply go and make our own game, and actually make it how we would like to. "Blackjack and all."

At this point we named our game "Project Blackjack". Nothing fancy, but something silly to act as a stand-in until we came up with a premise and title for our voxel-based survival crafting and building game.

We had seen others such as Hytale and Vintage Story take their own cracks at the genre, and with the reigning champion Minecraft beginning to teeter on the edge with its community, we realized maybe we had our own shot at a game, even if it only ever reaches a small audience. Maybe that's for the best anyways haha! Thus began the brainstorming for our own game and how it would stand out. And by brainstorming, I mean lots of drinking and playing @haventhegame with my wonderful partner in crime (who still needs to get a tumblr). It then dawned on me a bit. My main focus of studies outside the hellscape of the internet is on marine biology and electrical engineering. The main reason I stopped modding for a while is how frivolous it felt when thinking about our dying pale blue rock. So why not make that the focus of our game? Regrowing a dying world. A game about

Okay so technically regrowal isn't a word, it's a portmanteau of "regrown" and "growable" and "all". Sorta. ...and regrowable doesn't sound as cool. But much like our own world, it teeters on the precipice of collapse. But it is worth saving, it is regrowable. It has that potential still. Somehow. Even in this mess, there's hope amongst the ruins. Stay tuned for lots more to come. :) Also before anyone gets concerned, no we won't have N/F/T/s or m!cro-transact!ons or any other b.s. like that. nothing A/I/g3nerated either. Eff all that noise. Long live the arts. Long live modding. Long live creativity.

#regrowal#regrowalthegame#gamedev#pixel art#pixelart#blockbench#voxelart#climate#environment#survival games#minecraft#hytale#haventhegame#haven#vintage story#indiegamedev#indie games#gamemaker#screenshotsaturday#indiegameart#indiedev#game developers#game dev blog#game dev stuff#game design#game development#survival crafting game#modding#mods#games

13 notes


Text

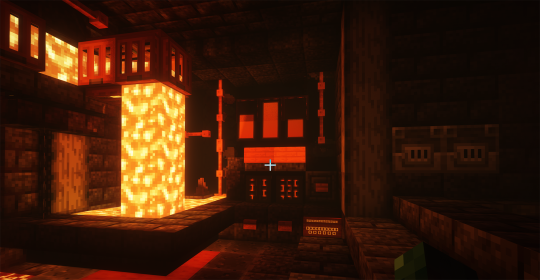

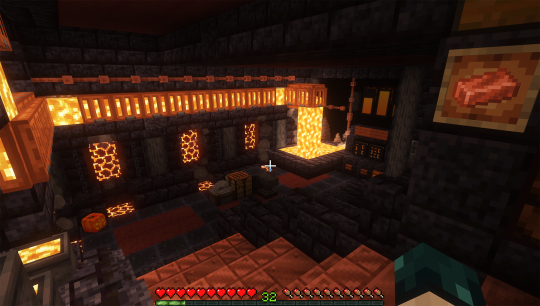

Thanks to some suggestions from the fine folks on cohost and @tinfoilsnow gently walking me thru the process of installing a modloader, I have shaders again!!! Here's some shots of smaller builds I've made in the meanwhile, more shots under the cut! The buoys have a lil secret--

I'm actually really proud of them; I took inspiration from this video but ended up fiddling with my own design in order to do some SHENANIGANS:

There's a waterlogged sculk sensor with amethyst hidden inside, so when you boat past, you get a STARTLINGLY NAUTICAL "ding ding!" noise from the amethyst resonating! IM SO ABSURDLY PROUD OF THIS DISCOVERY LMAO i made everyone come over to boat past my buoys

Here's another pic of the map room where you can really get the full Lobby vibes:

I built this under the spawn house, which I'd been using as a launch point for some exploring, and needed a place to put my maps that wasn't just Taping Them To A Dirt Wall lmao. It might get expanded (I had vague thoughts about a hallway leading to a REALLY BIG MAP display), but there's already a Big Map Room over in town that we're all collaborating on that includes linked copies of the maps I'd started, so this is just a lil bonus display now. I MIGHT STILL EXPAND IT LATER JUST FOR FUN....

The lava basement is under my house! It was built for ore storage and smelting and also I wanted a place to collect lava for smelting fuel. The autosmelter is small but IM SO PLEASED to have managed to build one! Here's what it looks like in complementary shaders, where you can actually see what's going on:

The Lava Control Panel over there in the corner is mostly aesthetic but I DID learn enough redstone to make it so that if you hit the buttons, a light behind those level indicator banners lights up! (mostly because Boo keeps pushing all my aesthetic buttons when he visits so I thought it would be funny if these ones did something)

Anyway, of course the rethinking voxels shader can't be normal about a room lit by lava so GOTTA INCLUDE SOME COOL DARK SHOTS:

i still can't play the game with shaders on but they run a LOT nicer on the setup mochi helped me with; very excited for more pretty shots.... i gotta get some more pics of lake progress soon!!

#block game liveblogging#complementary shaders#rethinking voxels#mineblr#minecraft#minecraft build#minecraft aesthetic#the jack o lantern is not there normally but tis the season#maf has been running around leaving spooky decorations everywhere its the best

17 notes


Text

Final game testing (Took place before Fog and Start + End screens)

QU1: People really liked the art by the looks of the chart.

QU2: Here we get some positive feedback mainly saying how they like the voxel art. Only issue is the scaling of some of the voxel art but it isn't really an issue.

QU3: People liked the mechanics.

QU4: People were overall quite positive about the smoothness of the gameplay, although one said it isn't very good (here we could have got some better feedback as to why). I think this could be because, as I watched the player play our game, they couldn't find the tools very easily and didn't know that the hoe was for the dirt, the fork was for poop and the bucket was for cleaning. The fact the player couldn't find the tools isn't an issue as they just need to explore the map better and more thoroughly.

QU5: For the audio, people said it was loud and that there were sounds without the necessary things in sight to make the noises, so I changed the background music and made it quieter.

QU6: People wanted us to add fog, animations and indicators of where things are. One of the aims of the game is for it to be a mental health puzzle game, so we want the player to find their way around the map to find the tools as the puzzle, so no indicators will be added. We do not have time to add tool animations sadly, but this is a good piece of feedback and would be something to add if we had time. We did add the fog, though, as it makes the map boundaries invisible to the player.

QU7: Some people didn't understand the question, but the ones who did got it fairly right: it is showing how mundane the life of a farmer can be. But even so, people later on understand the impact the game is supposed to have.

QU8: This is what I mean by people seeming to understand the impact, as all said loneliness bar one who said schizophrenia, which is just because the figures appearing gave them the wrong idea and they obviously didn't pay attention to the messages and wider surroundings.

QU9: Most people think the game is accessible.

Overall by the looks of things we have fixed most of the issues and people liked the game anyway so overall it seems to have positive feedback.

0 notes

Text

Researching Voxel art styled games

After using MagicaVoxel for a little bit I researched more into what games actually use it and how they styled it to fit their ideas for the game using 3D pixel art.

I looked at how each game uses voxels differently and how they convey different feelings to the player, whether that's a calm and relaxed environment or a more annoying and provoking feeling.

youtube

The games I looked at:

Minecraft

Teardown

Crossy Road

I watched the video above where voxels are described as basically 3D pixels which helped me sort of visualise it better and think about how different games use that to their advantage.

I firstly began by looking at Minecraft. Minecraft is probably one of the most popular games when it comes to using voxels. I researched into it and it seems they use voxels for storing terrain data, but not voxel rendering techniques, as they apparently use polygon rendering to display each voxel as a block.
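A small sketch of what "voxels for storage, polygons for rendering" can mean in practice. This is not Minecraft's actual code, just the general idea under simple assumptions: the world is a set of solid block positions, and a polygon face is only produced where a block touches air. The `exposed_faces` name is made up for this example.

```python
# The six directions a cube face can point in.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def exposed_faces(solid):
    """Return (block, direction) pairs for every face that borders air.

    `solid` is a set of (x, y, z) block positions, i.e. the stored voxel data.
    Each returned pair would become one square polygon in the rendered mesh.
    """
    faces = []
    for (x, y, z) in solid:
        for (dx, dy, dz) in NEIGHBOURS:
            if (x + dx, y + dy, z + dz) not in solid:
                faces.append(((x, y, z), (dx, dy, dz)))
    return faces
```

A single block gives 6 faces, two blocks side by side give 10 (the two touching faces are hidden, so they are skipped), and a solid 2x2x2 cube gives 24 instead of 48.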

I really like Minecraft's simple and calm theme, as it being a sandbox game means you can make whatever you want and do things at your own pace. The music they use in game is very relaxing too (except when you hear cave noises when you're mining at 2am) and gives a nostalgic feel as the tracks are so memorable.

youtube

Next I looked into Teardown. I really like how the game looks as it uses voxels really efficiently and creates a really fun theme. I really like that each level begins as a regular sandbox where you can create shortcuts and explore around first, which creates a relaxed and laid back environment, but then when you begin to collect the key cards it starts a countdown where you're on a time crunch and need to grab all the key cards before the time's up, using those shortcuts you made beforehand to get around the map quicker.

youtube

youtube

I then finally looked at Crossy Road. When looking at Crossy Road it's very obvious from its art style that it's a voxel game.

I personally really like the simplistic bright colour palette alongside how the environment looks. I like how the characters look and how they all consistently use the same bright, almost sickly neon colours throughout the whole game, which adds to the game's theme and overall final look.

0 notes

Text

fMRI Data Analysis Techniques: Exploring Methods and Tools

Functional magnetic resonance imaging (fMRI) has changed our view of the human brain. It is non-invasive and measures neural activity indirectly, through changes in blood flow. fMRI thus provides a window into the neural underpinnings of cognition and behaviour. However, the real power of fMRI comes from sophisticated analysis techniques that translate the data into meaningful insights.

A. Preprocessing:

Preprocessing in fMRI data analysis is one of the most critical steps that aim at noise and artefact reduction in the data while aligning it in a standard anatomical space. Necessary preprocessing steps include:

Motion Correction: fMRI data are sensitive to patient movement. Realignment corrects for motion by aligning each brain volume to a reference volume. Packages that implement it include SPM (Statistical Parametric Mapping) and FSL (FMRIB Software Library).

Slice Timing Correction: Since the slices of an fMRI volume are acquired at slightly different times, slice timing correction adjusts the data so that every slice in a volume appears to have been sampled at the same moment. SPM and AFNI are popular packages for doing this.

Spatial Normalisation: Data from each individual brain are mapped onto a standardised brain template, which enables group comparisons. Tools like SPM and FSL provide algorithms for precise normalisation.

Smoothing: Spatial smoothing improves the signal-to-noise ratio (SNR) by averaging the signal of neighbouring voxels. It is usually done with a Gaussian kernel in software packages such as SPM and FSL.
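As an illustration of the smoothing step, the sketch below applies a Gaussian kernel to a single row of voxel intensities in plain Python. It is a simplified one-dimensional stand-in, not the SPM or FSL implementation: real packages smooth in three dimensions and usually specify the kernel by its full width at half maximum (FWHM), which relates to the standard deviation as sigma = FWHM / (2 * sqrt(2 * ln 2)). The function names and the edge-clamping choice are assumptions made for this example.

```python
import math

def fwhm_to_sigma(fwhm):
    """Convert a kernel's full width at half maximum to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

def gaussian_kernel(sigma, radius):
    """Normalised Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def smooth(signal, sigma=1.0):
    """Replace each voxel with a Gaussian-weighted average of its neighbours."""
    radius = max(1, int(3 * sigma))
    kernel = gaussian_kernel(sigma, radius)
    n = len(signal)
    smoothed = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp indices at the edges
            acc += weight * signal[j]
        smoothed.append(acc)
    return smoothed
```

Smoothing an isolated spike spreads its intensity over the neighbouring voxels, which suppresses single-voxel noise at the cost of some spatial resolution.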

B. Statistical Modelling:

After the pre-processing stage, statistical modelling techniques are applied to data to reveal significant brain activity. The important ones are:

General Linear Model (GLM): GLM is the real workhorse of fMRI analysis. It models, among other things, experimental conditions in relation to brain activity. In SPM, FSL, and AFNI, there is a very solid implementation of the general linear model that will allow a researcher to test hypotheses about brain function.

MVPA: Unlike the GLM, which considers the activation of single voxels, multivariate pattern analysis (MVPA) considers the pattern of activity across many voxels together. This provides much power in decoding neural representations and is supported by software such as PyMVPA and PRoNTo.

Bayesian Modelling: Bayesian methods provide a probabilistic framework for interpreting fMRI within a statistical environment that includes prior information. Bayesian estimation options are integrated into SPM, permitting more subtle statistical inferences.
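To give a feel for what the GLM does, the following sketch fits a single voxel's time series with one task regressor plus a baseline using ordinary least squares. It is deliberately minimal and makes several assumptions: the design is a plain on/off boxcar (real analyses convolve it with a haemodynamic response function and add nuisance regressors), and `boxcar` and `fit_glm` are illustrative names, not functions from SPM, FSL, or AFNI.

```python
def boxcar(n_scans, block_length):
    """Alternating rest (0) and task (1) blocks, one value per scan."""
    return [1.0 if (t // block_length) % 2 == 1 else 0.0 for t in range(n_scans)]

def fit_glm(design, signal):
    """Least-squares fit of signal = beta0 + beta1 * design.

    beta0 is the baseline level; beta1 is the estimated task effect.
    """
    n = len(signal)
    mean_x = sum(design) / n
    mean_y = sum(signal) / n
    sxx = sum((x - mean_x) ** 2 for x in design)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(design, signal))
    beta1 = sxy / sxx
    beta0 = mean_y - beta1 * mean_x
    return beta0, beta1

# Synthetic voxel: baseline of 100 with a 2-unit increase during task blocks.
design = boxcar(20, 5)
voxel = [100.0 + 2.0 * x for x in design]
baseline, task_effect = fit_glm(design, voxel)
```

In a full analysis this fit is repeated for every voxel, and a statistic on the task effect is then tested against the hypothesis of no activation.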

C. Connectivity Analysis:

Connectivity analysis looks at the degree to which activity in one brain region is related to activity in other brain regions and hereby reveals the network structure of the brain. Some of the main approaches are as follows:

Functional Connectivity: It evaluates the temporal correlation between different brain regions. CONN, a MATLAB toolbox built on SPM, and FEAT in FSL can perform functional connectivity analysis.

Effective Connectivity: Whereas functional connectivity only measures correlation, effective connectivity models the causal interactions between different brain regions. Dynamic causal modelling, also offered in SPM, is one leading method for this analysis.

Graph Theory: Graph theory techniques model the brain as a network with nodes (regions) and edges (connections), thus enabling the investigation of the topological characteristics of the brain. Some critical tools available in graph theoretical analysis include the Brain Connectivity Toolbox and GRETNA.
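The link between functional connectivity and graph theory can be sketched in a few lines: correlate every pair of regional time series, then keep the strong correlations as edges. This is a toy illustration under stated assumptions, not how CONN, the Brain Connectivity Toolbox, or GRETNA are implemented; the function names, the toy data, and the 0.5 threshold are all hypothetical.

```python
import math

def pearson(a, b):
    """Pearson correlation between two equally long time series."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def connectivity_matrix(series):
    """Region-by-region correlation matrix (functional connectivity)."""
    return [[pearson(a, b) for b in series] for a in series]

def node_degrees(matrix, threshold):
    """Treat correlations at or above the threshold as edges; count edges per region."""
    n = len(matrix)
    return [
        sum(1 for j in range(n) if j != i and matrix[i][j] >= threshold)
        for i in range(n)
    ]

# Three toy regions: the first two rise together, the third moves against them.
regions = [
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [2.0, 4.0, 6.0, 8.0, 10.0],
    [5.0, 3.0, 4.0, 1.0, 2.0],
]
matrix = connectivity_matrix(regions)
degrees = node_degrees(matrix, threshold=0.5)
```

Node degree is only the simplest topological measure; the same thresholded matrix is the starting point for others, such as clustering or path length.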

D. Software for fMRI Data Analysis

A few software packages form the core of the analysis of fMRI data. Each has its strengths and areas of application:

SPM (Statistical Parametric Mapping)- a full set of tools for preprocessing, statistical analysis, and connectivity analysis.

FSL (FMRIB Software Library)- a strong set of tools for preprocessing, GLM-based analysis, and several methods of connectivity.

AFNI (Analysis of Functional NeuroImages)- a package favoured because of its flexibility and fine-grained options in preprocessing.

CONN- a functional connectivity toolbox closely linked with SPM.

BrainVoyager- a commercial package that offers a very friendly user interface and impressive visualisation.

Nilearn- a Python library using machine learning for Neuroimaging data, targeting researchers experienced with Python programming.

Conclusion

fMRI data analysis comprises a very diverse field. Preprocessing, statistical modelling, and connectivity analysis blend together to unlock the mysteries of the brain. Methods presented here, along with their associated software, build the foundation of contemporary neuroimaging research and drive improvements in understanding brain function and connectivity.

Many companies, like Kryptonite Solutions, enable healthcare centres to deliver leading-edge patient experiences. Kryptonite Solutions deploys its technology-based products from Virtual Skylights and the MRI Patient Relaxation Line to In-Bore MRI Cinema and Neuro Imaging Products. Their discoveries in MRI technology help provide comfort to patients and enhance diagnostic results, able to offer the highest level of solutions for modern healthcare needs.

Be it improving the MRI In-Bore Experience, integrating an MRI-compatible monitor, availing the fMRI monitor, or keeping updated on the latest in fMRI System and MRI Healthcare Systems, all the tools and techniques of fMRI analysis are indispensable in any modern brain research.

#MRI healthcare system#fmri monitor#in-bore mri#fmri system#mri compatible product#mri compatible transport#mri safety products

0 notes

Text

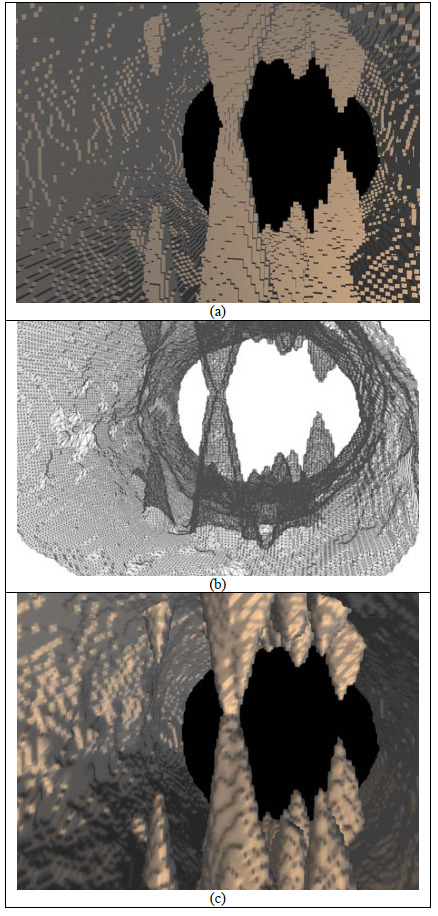

Source notes: Procedural Generation of 3D Cave Models with Stalactites and Stalagmites

(Cui, Chow, and Zhang, 2011)

Type: Journal Article

Keywords: Procedural Content Generation, Caves, Stalactite, Stalagmite

(Cui, Chow, and Zhang, 2011) tackle the underserved area of 3D cave generation head on by developing a method of producing models containing polygon configurations recognisable as stalactites and stalagmites.

The authors note the paucity of research on procedural techniques for the generation of underground and cavernous environments, despite these being natural and common settings for films and computer games. As is the aim of the Masters project, the developed method is intended to generate visually plausible 3D cave models sans the requirement that the constituent techniques be physically-based. They build upon their previous work involving the storage of 3D cave structures in voxel-based octrees by smoothing a triangle mesh representation of cave walls without introducing cracks and implementing the addition of stalactites and stalagmites.

The method works by rendering polygonal cave wall surfaces from low resolution voxels stored in a voxel-based octree that has been generated using 3D noise functions. That mesh, made up of voxel cubes, is then smoothed using a Laplacian smoothing function, effectively moving vertices closer towards adjacent vertices, rounding corners in the process. A nuance of the work by (Cui, Chow, and Zhang, 2011) is to remove cracks that result from the smoothing process (due to non-uniform polygons resulting from differing octree voxel node sizes) by subdividing polygons to cover gaps before the smoothing is executed. The smoothing work detailed in the paper influenced the development of the Masters project method because without a smoothing pass the extracted isosurface is unnaturally jagged.
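The core of the smoothing step can be sketched as follows. This is a generic Laplacian smoothing pass written for illustration, not the authors' implementation: it omits the crack-removal subdivision described above, and the `factor` and `iterations` parameters are assumptions rather than values from the paper.

```python
def laplacian_smooth(vertices, neighbours, factor=0.5, iterations=1):
    """Move each vertex part of the way towards the average of its adjacent vertices.

    vertices:   list of (x, y, z) tuples
    neighbours: neighbours[i] is a list of vertex indices adjacent to vertex i
    factor:     0 leaves the mesh unchanged, 1 moves fully to the neighbour average
    """
    for _ in range(iterations):
        updated = []
        for i, vertex in enumerate(vertices):
            adjacent = neighbours[i]
            if not adjacent:
                updated.append(vertex)
                continue
            average = tuple(
                sum(vertices[j][axis] for j in adjacent) / len(adjacent)
                for axis in range(3)
            )
            updated.append(tuple(
                vertex[axis] + factor * (average[axis] - vertex[axis])
                for axis in range(3)
            ))
        vertices = updated  # every vertex in a pass reads the previous pass's positions
    return vertices
```

For a right-angled corner such as (0, 0, 0), (1, 0, 0), (1, 1, 0), one pass with a factor of 0.5 pulls the middle vertex from (1, 0, 0) to (0.75, 0.25, 0), which is the corner-rounding effect the notes describe.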

Stalactites and stalagmites are generated using a physically-based equation approximating the relationship between the radius and length of those speleothems. It is stated that they can be randomly placed on the ceiling and floor of the cave structure, with variation achieved by altering the parameters of the equation. In order to further differentiate between stalactites and stalagmites, a scaling factor was added to the equation. The need for this detail has been factored into the Masters project method, with stalagmites being generated in a slightly different way to stalactites to make them appear stockier, mimicking the effects of gravity.

Analysis of the results partly focuses on the smoothing enhancement added to the previous work by the authors, showing the crack-free, yet still naturally rough and realistic cave walls. The generated speleothems are showcased, demonstrating the diverse shapes that can be produced by modifying the equation parameters.

The work by (Cui, Chow, and Zhang, 2011) is a significant contribution to the area of procedural methods for generating 3D caves. The models produced are recognisably cave-like, largely due to the inclusion of speleothems and rough walls. Variability of the shape of the cave environment as a whole appears low from the included results, and there is a lack of other visually interesting features (the authors note in their future work section the need to investigate techniques for generating such mineral deposit structures as columns, flowstones and scallops). User control and parametrisation of the cave model is not discussed; however, different shapes of visually plausible speleothems can be produced by altering parameters, which is a strength of the method presented.

0 notes

Text

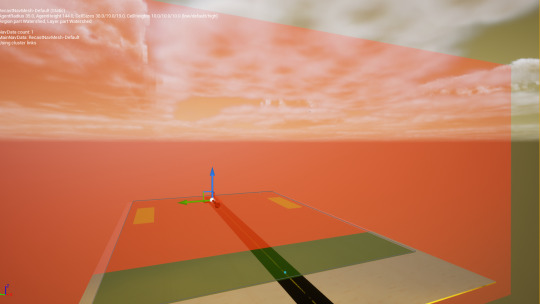

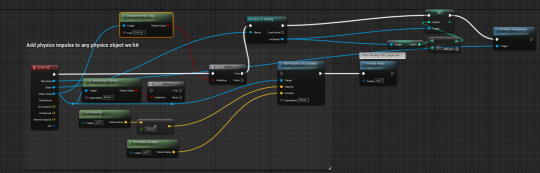

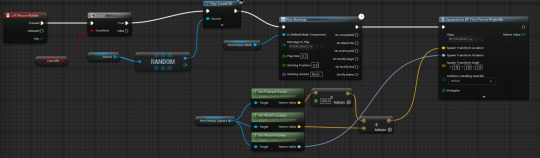

Development Post #4

I perfectly understood how camera shakes worked, which was perfect as I wanted to add a camera shake when the cars exploded to add to the action.

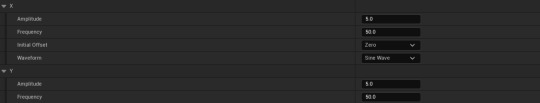

I created a new camera shake component and immediately manipulated a couple values inside it.

I increased the necessary values for some intensity and then moved onto adding it into the code where the zombie cars blow up.

I added the play world camera shake and changed the values of the inner and outer radius. It had been a while since I had used a camera shake however, so I experimented with the values of the node. I eventually was satisfied with the camera shake after dialling down the shake as I did accidentally make the camera look like there was an earthquake, which was definitely not suitable for our game. Once all was done, I made sure to place it just before the sound effect of the car blowing up was played, producing a very satisfactory result.

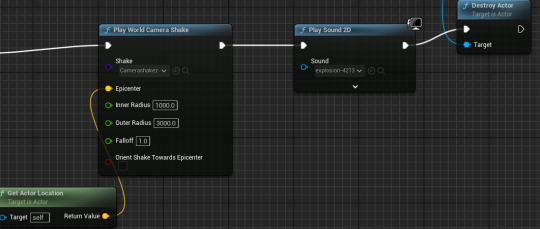

After some consideration, we decided that it would be best for Frankie to use a different gun, as a pistol surely would not blow multiple cars up, so we settled on a machine gun.

When the left mouse is clicked, a camera shake is played and the bullet actor is spawned every 0.05 seconds. This firerate was crucial to get right as the weapon needed to have the machine gun feel. As for the shake, it's a very short burst that should represent a bullet being shot which was mastered when we originally made the first Da'Car. We just reused this asset. The floats on the bottom half of the screenshot give the bullets what is referred to as 'bloom' making it so the bullets aren't just shot in a straight line, instead there being some variation as it randomises the roll, pitch, and yaw.
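The 'bloom' idea can be sketched outside of Blueprints in a few lines of Python. This is only an illustration of the logic described above, not the actual node graph: the 0.05 second interval comes from the post, but `MAX_OFFSET_DEGREES` and the function name are made-up values for the example.

```python
import random

FIRE_INTERVAL = 0.05       # seconds between bullets, as described in the post
MAX_OFFSET_DEGREES = 2.0   # hypothetical bloom amount; the real value isn't stated

def bullet_rotation(aim, rng, max_offset=MAX_OFFSET_DEGREES):
    """Return the aim rotation with a small random offset on roll, pitch and yaw.

    aim is a (roll, pitch, yaw) tuple in degrees; rng is a random.Random instance.
    """
    return tuple(angle + rng.uniform(-max_offset, max_offset) for angle in aim)

rounds_per_second = 1.0 / FIRE_INTERVAL  # works out to roughly 20 bullets per second
```

Passing in a seeded `random.Random` makes the spread repeatable for testing, while a game would normally use an unseeded generator so every burst looks different.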

And at the end of the code, I made it so the bullet shot noise plays from the same gunshot audio bank that was created for the original Da'Car. The pitch of the audio is then randomised even further than just the random bank, allowing for variation of the played audio so that it is slightly more unique than hearing the same 7 sounds over and over as the player will need to be shooting quite a lot in the third level.

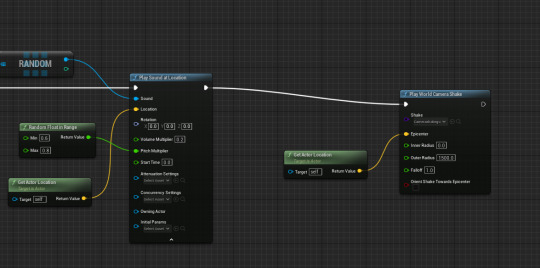

At this point I decided it was time to find a model for the gas station in the second level. I had found one; however, the assets were so highly detailed that they affected the textures on the player and the zombies, making them appear blurry. To prevent this I then modelled the gas station within MagicaVoxel as I'm not confident with proper 3D modelling.

There was an issue with Unreal Engine not being able to find the correct music for the specific level the player was on.

First I created an array that contained the music for each level, which was then plugged into a find node that would help select the correct one.

I've also got a custom event that helps load the next level, since with three levels, changing levels is no longer as simple as it used to be. This event gets the current level name, finds the level in the level array and adds one to its position in the list. There is an 'equal to 3' node that allows the menu and first level to be loaded upon completion of the third level; if the number after adding one is not equal to three, it just follows the regular code and opens the level by name. If the level's value is 1, it's the first level, if it's equal to 2, it opens the second level, and if its value is three it opens the final level, but when one more value is added, it just reopens the first.
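Written out as plain Python rather than Blueprint nodes, the music lookup and level-advance logic might look roughly like this. It is a sketch of the idea only: the level and music names are placeholders, and since the exact indexing in the Blueprint isn't clear from the description, 0-based positions are assumed here.

```python
# Placeholder names; the real level and music asset names aren't given in the post.
LEVELS = ["Level1", "Level2", "Level3"]
MUSIC = ["Music1", "Music2", "Music3"]

def music_for(level_name):
    """Find the level in the array and pick the music stored at the same position."""
    return MUSIC[LEVELS.index(level_name)]

def next_level(current_level_name):
    """Find the current level, add one, and loop back to the start after the last."""
    position = LEVELS.index(current_level_name) + 1
    if position == len(LEVELS):  # the "equal to 3" check
        return LEVELS[0]         # finished the third level: back to the first
    return LEVELS[position]      # otherwise open the next level by name
```

Keeping both arrays in the same order means a single position lookup serves both the level and its music, which is the same job the find node does.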

I had to put in a big box that wasn't visible in game as when the player's cursor was over the sky when shooting, it would just shoot at the original coordinates of the level. This proved to be an irritating feature when playing the game myself. There's no coding for this box, I put the bounds material over it so I knew it was an object that had a purpose, but was not visible in game.

Once this was done, the player is now able to shoot at the sky without the bullets redirecting to a completely different position.

0 notes

Text

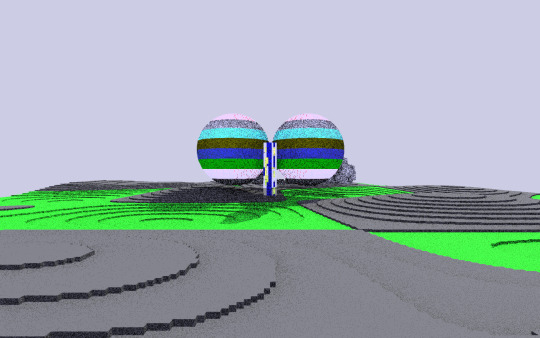

Prototypical

A few years ago I released a game on Steam. It was nothing even close to being a commercial success since I had neither the marketing budget nor the presence / community to spread the word. That and perhaps the game just wasn't good enough for larger appeal.

But despite the lack of commercial traction, I still considered the project an overwhelming success simply by virtue of not just how much it taught me, but by the fact that it was finished in totality. The only projects that fail are the ones that don't get finished. That doesn't mean you can't learn from them, though, and it also really depends on how you measure success - it's not always the case that you have to finish something to determine its value before you move on to other things. At least that's been my experience.

So what do I know heading into this new project? What have I learned from the past that might help me moving forward?

I'm confident I have enough technical knowledge to build what is in my head, but I thought it might be fun to look back at some past projects to reinforce my confidence a little bit.

Below are some screen captures of projects spanning the last 18 years or so. The last one in the list is the only project I took commercial. There's some ugly work in here, but they were all a lot of fun and educational to tinker with when I worked on them, which is the main thing.

Above: A voxel based exploration game where you could land on different planets and explore. The game never made it beyond the terrain generation stage, but I learned a lot about procedural, chunk-based world generation and blending different noise algorithms together.

Above: A concept I'd toyed around with for a long time that took place in a super low-poly environment. You had to maintain a small starship and explore the galaxy. You would have been able to land on planets, kit out machines in your ship and perform science experiments. I made a very low-poly ship environment you could walk around in, and programmed up a really neat effect you could see out of the cockpit when you warped between locations for planets.

Above: I was trying to build a game which was a lot like Delver - a roguelike dungeon crawler - but in a more science-fiction, DOOM-like setting. I never ended up building a game out of it, but I made a really neat 3D level editor where you could carve out rooms, raise and lower floors and apply textures from an in-game texture library. It would spit out an optimised level file in a format that would make it easy to share online.

Above: A cross between Ikaruga and Space Invaders made within a single day, just to see if I could. The enemies shifted left and right on the screen (but never came downwards), randomly shooting bullets of their own colour. You could change your ship's 'polarity' (colour) to absorb these projectiles which would power up your ship allowing you to shoot more projectiles yourself. If you got hit by a single opposing coloured projectile you'd be destroyed. It was pretty hardcore, and made moreso with the inclusion of an extremely difficult mode where the enemies shot huge volumes of return projectiles when hit. Managing your ship's polarity made it fun and frenetic. I was really pleased with this one, especially how quickly it came together.

Above: This 2D grow-em-up was probably the start of my obsession with automation and games that conceivably fit in the 'factory' genre. The game had a large, procedurally-generated 2D environment that stretched from the surface of the world all the way down to a kind of bedrock. In between were different layers and biomes where you could find and cultivate different flora and minerals. You'd harvest these and use them inside machines to craft different items to sell.

One useful technical skill I picked up during this project was understanding how to distribute the updating of millions of tiles over the course of several CPU logic cycles, keeping the game running smoothly and allowing it to scale up to potentially millions more tiles with no noticeable performance impact.
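A minimal sketch of that idea, assuming a simple round-robin scheme: each logic cycle updates only a fixed-size slice of the tiles and remembers where it stopped. The class name, the `growth` state and the slice size are all hypothetical; the original game's scheduler is not shown in the post.

```python
class SlicedTileUpdater:
    """Updates a fixed-size slice of tiles per logic cycle (hypothetical design)."""

    def __init__(self, tile_count, tiles_per_cycle):
        self.growth = [0] * tile_count      # stand-in per-tile state
        self.tiles_per_cycle = tiles_per_cycle
        self.cursor = 0                     # next tile index to update

    def update_tile(self, index):
        # Placeholder for real tile logic (plant growth, mineral spread, ...).
        self.growth[index] += 1

    def step(self):
        """Run one logic cycle: update the next slice, wrapping around."""
        count = min(self.tiles_per_cycle, len(self.growth))
        for _ in range(count):
            self.update_tile(self.cursor)
            self.cursor = (self.cursor + 1) % len(self.growth)


updater = SlicedTileUpdater(tile_count=10, tiles_per_cycle=4)
for _ in range(5):          # 5 cycles * 4 tiles = 20 updates = 2 full passes
    updater.step()
```

The cost per cycle depends only on `tiles_per_cycle`, so a bigger world takes longer to complete a full pass but does not slow down any individual frame.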

I had no idea at the time that the very core ideas underneath this game would form a huge part of my desired game development path moving forward.

Above: Back in early 2015 I was inspired by Desktop Dungeons to create a game that played out in single turn time instalments at your own pace. You had a dungeon to explore filled with items and monsters, but nothing happened until you moved. Every time you did, everything else in the dungeon also moved. I had the entire combat system and item system developed, it just needed some concrete objectives or procedural dungeon generation and it probably would've been a neat little game. A couple of months later in 2015 the game 'Crypt of the Necrodancer' released and gave everyone a really good take on the whole 'player moves the rest of the dungeon' concept.

Above: Before I ended up leaving Java for good, I had one last bit of fun creating a Delver-style 3D FPS game set in a procedurally generated dungeon. I learned a reasonable amount about procedural generation whilst working on this project, though it was far from perfect. I built in a roster of collectible items, added a narrative system, and created a whole swathe of poorly-designed visuals to boot. I really enjoyed the dark, moody atmosphere though, and it was neat exploring different layouts, searching for the ladder that would take you down to the next level!

Above: Around the same time I was transitioning away from Java and LWJGL, I was getting more into developing prototypes in Unity. One of the early prototypes I put together was a kind of cross between Bomberman and Power Stone - a single-screen, couch-multiplayer brawler game. Although I really liked the idea of doing a local multiplayer game, my lack of deeper Unity knowledge slowed me down and I eventually abandoned the project. I learned much about how the modelling workflow between Blender and Unity worked, though, and gained a deeper understanding of the relationship of measurement units between the two programs.

Above: I eventually came back to my idea about harvesting and processing natural resources. By this point, the idea had germinated(!) into a fairly sophisticated management / tycoon style concept where you would have to set up a whole factory complex on a randomly-generated map. You'd extract natural resources, then use factories and machines to process and combine them into new types of goods. The goal was to take on supply contracts and use a distribution launch platform to send your goods off-world to various orbiting facilities. I took this concept a long way over a good 6 months or so before taking a few months' break from it.

Above: I returned to the idea some time after, refining the terrain generation and having a much more solid understanding of the actual game loop. I spent more time on this prototype than any of the others I'd worked on, and I'd keep coming back to this one over the next 18 months.

Above: After a couple of years, I was absolutely convinced this was going to be the first 'full game' I was going to build. A large part of my conviction simply came down to how excited I was about it. At this point, my love of the automation / factory genre was cemented. I was playing a lot of other games in this space at this point, and I really wanted this to be my own spin on the genre. The visuals were much more mature in this project, and I had by now grasped and implemented concepts such as animations, complex file handling and online networking (mainly for level imports, but it was a big step up from previous projects).

The project, sadly, never reached a finish line. Scope creep and the scale of what I was trying to achieve were beyond my capabilities. I should have reduced the goal into something more manageable, and I would likely have succeeded in getting a complete game from it. My exhaustion from this project would see me taking a hiatus from game development for a good year or so.

Above: When I returned to game dev a year or so later on, I was in the midst of development fever. I had recently completed development on a 3D game for a work client, and I was buzzing to create something new. I opted for a simple idea that I could rapidly prototype levels for, and I ended up having a lot of fun with a take on the marble-run concept.

The game ended up being so much fun to work on that it eventually became my first full-release game (Spin & Roll), reaching the Steam store on PC. I even released a free DLC pack a few months after release, in which I was able to push the limits of what I'd made a little further.

I'm still extremely proud of the project, despite its low sales numbers. It brought together everything I was able to do within one package - programming, design, 3D modelling and a lot of knowledge I was picking up about marketing and managing a tangible, sellable product. I also learned much about Valve's Steam platform, integrating the Steamworks API, and how the levers and buttons of Steam's backend worked for developers.

I suppose the biggest surprise to me was just how much work comes after the development of the game. The production of marketing resources, press releases, community management and other factors consumed an enormous amount of time. I definitely picked up a lot of useful knowledge over this period of time.

So what now?

Well, aside from this post being much longer than I'd originally intended it to be, I guess I should frame my plans within the context of everything I've shown above.

The game I'm planning to make will be a 3D game, in a similar vantage point to a strategy / tycoon type of game (like Production Line or Megaquarium). I know I can already achieve the bones of things like moving a camera around a 3D environment, placing objects on it, and having those objects manage their own state and processes.

Some things I will be absolutely new to are things like conveyor belts (yes, I want to have them! I have no idea how to make them!). I'm looking forward to exploring how to get those working. I have some thoughts which I might detail in another post, but I'm going to walk before I try and run on this.

I'd also like to make sure that I include some kind of time acceleration capabilities (similar to some RTS games). One thing I appreciate as a player is the ability to move time forward a little quicker if I'm just waiting for something to finish.
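One common way to get time acceleration is to keep the simulation on a fixed logic tick and scale how much real time is fed into it. The sketch below assumes that approach; `GameClock`, the tick length and the speed values are hypothetical and not taken from any existing build of the game.

```python
class GameClock:
    """Fixed-timestep clock with a speed multiplier (hypothetical design)."""

    def __init__(self, tick_seconds=0.5):
        self.tick_seconds = tick_seconds  # simulated time per logic tick
        self.speed = 1.0                  # 1.0 = normal, 2.0 = double speed
        self.accumulator = 0.0
        self.ticks = 0

    def advance(self, real_dt):
        """Feed in real elapsed seconds; return how many ticks ran this frame."""
        self.accumulator += real_dt * self.speed
        ran = 0
        while self.accumulator >= self.tick_seconds:
            self.accumulator -= self.tick_seconds
            self.ticks += 1
            ran += 1
        return ran


clock = GameClock(tick_seconds=0.5)
normal = clock.advance(1.0)     # one real second at normal speed
clock.speed = 4.0
fast = clock.advance(1.0)       # one real second at 4x speed
```

Because machines only ever see whole ticks, their logic stays the same at every speed; fast-forward just runs more ticks per frame.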

Beyond this, I am going to try and avoid the same mistakes as my last big factory game and develop with a slightly different methodology. I'm quietly confident that this alternative style might work better for me, but we'll see.

I do try and plan things out ahead of time, but sometimes game development is a bit of an organic process. Sometimes an idea is just a lump of clay. It's rough and unrefined, and you have to poke and prod it a bit to discover other things hiding in there.

I know my game needs two main components - items coming into the factory, and items going out (into, I guess, some kind of 'goal' container or output hatch or something). If I can get those two things built first, using the development time to figure out the literal conveyor belt mechanics that link those two things together, I'll then have time to find other things to put in between to make it more fun.

I'll go into some more detail about this soon, but for now I think I'll leave this mammoth post well alone and let my brain rest for a while. Or at least until I've had another coffee.

1 note


Text

Best Techniques of Point cloud to 3D

Introduction:

Point clouds have become an integral part of modern technology, especially in fields like computer vision, robotics, and augmented reality. A point cloud is a collection of data points in three-dimensional space, captured by techniques such as LiDAR scanning or photogrammetry. One of the most exciting applications of point clouds is their conversion into detailed 3D models, and this article covers the best techniques for doing so. Point clouds matter because of the wealth of information they hold: detailed representations of the surfaces, structures, and spatial relationships of real-world environments. The journey from point cloud to 3D model begins with data acquisition and preprocessing, where the raw data is refined to eliminate noise and outliers.

Data Acquisition and Preprocessing:

The journey from point cloud to 3D masterpiece begins with data acquisition. Whether obtained from LiDAR scans, photogrammetry, or depth sensors, the raw point cloud data requires preprocessing. Noise reduction, outlier removal, and data filtering are essential steps to enhance the quality of the point cloud. Software tools like CloudCompare, Autodesk ReCap, or PDAL (Point Data Abstraction Library) are commonly used for this purpose.

Registration and Alignment:

Point clouds are often acquired from multiple scans or sensors, leading to the need for registration and alignment. This process involves merging individual point clouds into a single, cohesive model. The Iterative Closest Point (ICP) algorithm is widely employed for aligning point clouds by minimizing the distance between corresponding points. Proper registration ensures a seamless transition between different parts of the scene and lays the foundation for accurate 3D reconstruction.
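To make the ICP idea concrete, here is a minimal sketch in 2D using only the Python standard library. It assumes brute-force nearest-neighbour matching and a closed-form least-squares rotation, which is enough to show the match-then-align loop; production tools work in 3D with spatial indexes and outlier rejection. All function names are illustrative.

```python
import math


def nearest(point, cloud):
    """Return the point in `cloud` closest to `point` (brute force)."""
    return min(cloud, key=lambda q: (q[0] - point[0]) ** 2 + (q[1] - point[1]) ** 2)


def best_rigid_transform(src, dst):
    """Least-squares rotation angle and translation mapping paired src onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy          # centred source point
        bx, by = qx - dx, qy - dy          # centred target point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    angle = math.atan2(cross, dot)
    c, s = math.cos(angle), math.sin(angle)
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return angle, tx, ty


def apply_transform(points, angle, tx, ty):
    """Rotate points by `angle` about the origin, then translate by (tx, ty)."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(source, target, iterations=20):
    """Iteratively align `source` to `target`; returns the moved source points."""
    current = list(source)
    for _ in range(iterations):
        matches = [nearest(p, target) for p in current]
        angle, tx, ty = best_rigid_transform(current, matches)
        current = apply_transform(current, angle, tx, ty)
    return current


target = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0), (2.0, 5.0)]
source = apply_transform(target, 0.05, 0.1, -0.1)   # slightly rotated, shifted copy
aligned = icp_2d(source, target)
```

ICP only converges to the right answer when the scans start roughly aligned, which is why the example perturbs the copy by a small rotation and shift.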

Surface Reconstruction:

Once the point clouds are aligned, the next step is to create a surface representation. Various techniques exist for surface reconstruction, with marching cubes and Poisson surface reconstruction being prominent ones. Marching cubes operates on a voxel grid: the point cloud is first sampled into a grid of scalar values, and the algorithm then extracts a polygonal mesh from that grid. Poisson surface reconstruction, on the other hand, formulates the problem as a partial differential equation and solves for the surface. Both methods have their strengths and weaknesses, and the choice depends on factors like the density and quality of the point cloud.

Mesh Simplification and Refinement:

The generated polygonal mesh may be too complex for certain applications, leading to the need for mesh simplification. Simplifying the mesh not only reduces computational load but also makes it more suitable for real-time applications such as virtual reality or gaming. Conversely, refinement techniques aim to enhance the level of detail in the mesh. Balancing simplicity and detail is crucial, and algorithms like Quadric Edge Collapse Decimation and Loop Subdivision are commonly used for these purposes.

Texture Mapping:

Adding textures to the 3D model is essential for realistic visualization. Texture mapping involves projecting 2D images onto the 3D model, creating the illusion of surface details. UV mapping is a common technique where a 2D texture image is applied to the surface of the 3D model. This process requires careful consideration of the geometry to avoid distortions and ensure accurate texture placement.

Post-Processing and Quality Assurance:

Post-processing steps are essential to refine the final 3D model further. This involves checking for artifacts, gaps, or inconsistencies in the model and applying corrections. Quality assurance ensures that the 3D model accurately represents the real-world scene. Feedback loops with the original point cloud data may be necessary to make adjustments and improve the overall fidelity of the model.

Integration with Visualization Platforms:

The ultimate goal of converting point clouds into 3D models is often to integrate them into various visualization platforms. Compatibility with popular 3D modeling software, game engines, or virtual reality environments is crucial. Formats like STEP, STP, IFC, SAT, FBX, STL, DWF, NWC, NWD, OBJ, DWG, DGN, PLN, PLA, DXF, IGES, IGS, 3DS, and glTF are commonly used for exporting 3D models to ensure seamless integration with different applications.

Conclusion:

Transforming point clouds into 3D masterpieces is a complex yet rewarding process that involves a series of well-defined steps. From data acquisition and preprocessing to surface reconstruction, mesh simplification, texture mapping, and post-processing, each stage contributes to the creation of a visually appealing and accurate representation of the real-world environment. Rvtcad, with its cutting-edge tools and solutions, exemplifies the ongoing synergy between technology and the creative vision, playing a crucial role in shaping the future of 3D modeling across diverse fields, from architecture and urban planning to the realms of virtual reality and gaming.

0 notes

Text

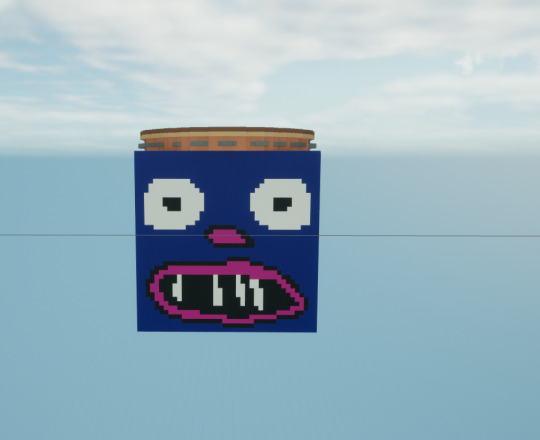

One way too big post for all the stuff I forgot to post

Would like to start things off by saying the only reason I haven't posted much of the development for my game is because I was off a lot and had to essentially speed run it to get to an actual playable game. So many original ideas had to be scrapped because of this.

Story/Plot: For this one, I'll give you a before and an after of what it was and now is.

Before: Before I had to scrap the idea and speed run my project, the game would have been a music based first person platformer. It would have been a set of trials with different sound cues telling you what you had to do. For example, a drum noise would mean jump. There were supposed to be progressively more difficult levels, some with sequences you had to remember.

After: A trial where you have to essentially do a Roman entertainment fight only this time you have a pan flute gun and the enemies have bongo hats and are cubes.

Objective: I don't think my game really has an objective. The original objective was to escape the king (Mr Tambourine) and get home. Unfortunately, this couldn't be achieved. I guess wipe out all the enemies as fast as possible?

Genre: Now my game is a first person shooter but before it was supposed to be a first person platformer.

Other games that inspired me: There weren't really any other games that inspired me. I came up with the idea when I was told about the project. Other games I looked at just didn't really contain what I wanted. The colosseum was sorta inspired by the movie Gladiator but also just a regular Roman one.

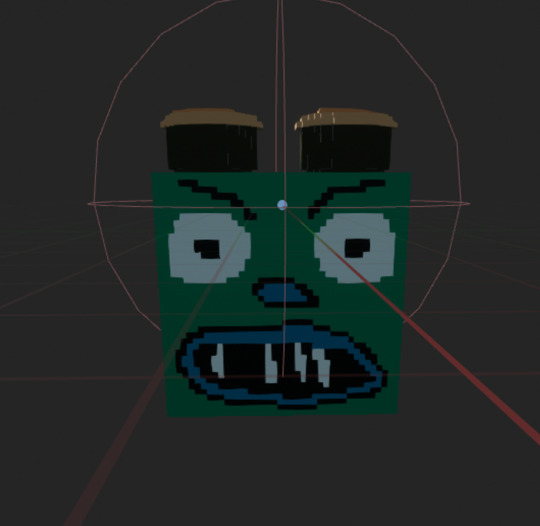

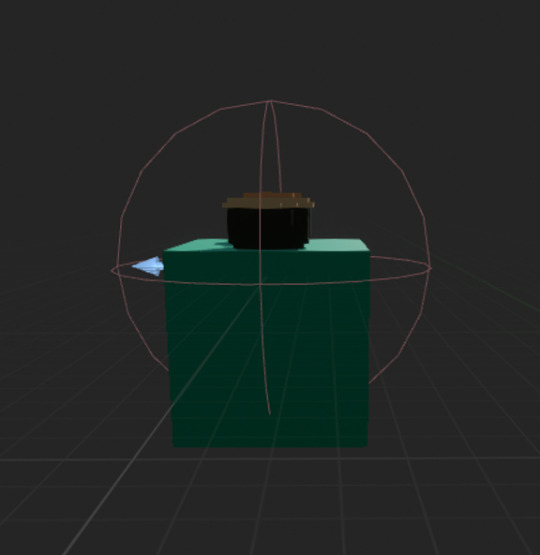

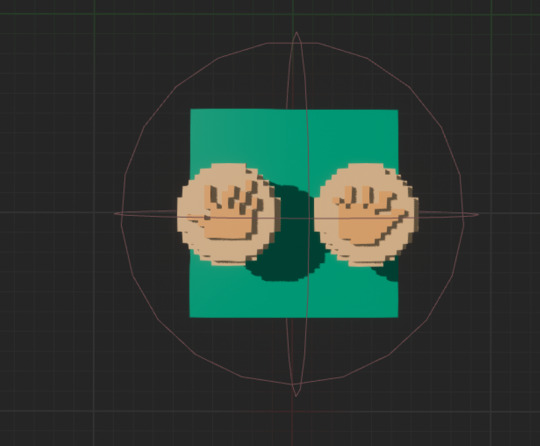

Art style: The art style I used is voxels using MagicaVoxel. This is because I find it easier to design things using pixels. Nothing has to be too detailed, it can be just a block with a face and an instrument hat like I did for both the king and the enemies.

My actual project:

This part is for all the things I did in my project.

The Gun:

This is the gun I use in my game. It's a basic 15 pipe pan flute that makes pan flute noises when you fire it.

It fires a custom projectile that looks like this:

In the projectile code, there is the hitting enemies code.

What this does is it checks if the thing the projectile is hitting has a specific tag called "Enemy". If it does it will see how much health the enemy has and take 50 away from it, destroying the actor if its health is equal to or below 0.
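The hit logic described above can be sketched in plain Python. This is not the engine code from the project: `Actor` is a hypothetical stand-in for an engine actor, and the starting health of 100 is an assumption. Only the "Enemy" tag, the damage of 50 and the destroy-at-zero rule come from the post.

```python
from dataclasses import dataclass, field


@dataclass
class Actor:
    """Hypothetical stand-in for an engine actor with tags and health."""
    health: float = 100.0
    tags: set = field(default_factory=set)
    destroyed: bool = False


PROJECTILE_DAMAGE = 50  # amount taken off per hit, as described in the post


def on_projectile_hit(other: Actor) -> None:
    """Damage actors tagged "Enemy"; destroy them at zero health or below."""
    if "Enemy" not in other.tags:
        return                      # anything without the tag is ignored
    other.health -= PROJECTILE_DAMAGE
    if other.health <= 0:
        other.destroyed = True      # the engine would remove the actor here


enemy = Actor(health=100, tags={"Enemy"})
wall = Actor(health=100)
on_projectile_hit(enemy)            # 100 -> 50, still alive
on_projectile_hit(wall)             # untouched: no "Enemy" tag
on_projectile_hit(enemy)            # 50 -> 0, destroyed
```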

The assets of the game itself:

Now we move on to the actual assets I created for the game.

The colosseum:

A basic circle shape in the middle with a much larger circle around it. I used an incredibly helpful website in order to create the circles.

It's meant for Minecraft but you can input any numbers you like and it will give you a pixel grid of it. I then built a "throne" for the king. It's more of just a platform with a red carpet on it.

The king himself/Mr Tambourine:

The king's face was totally random. I drew random circle-ish shapes and coloured them in. The Tambourine on top of his head was made with the circle generator that I used for the colosseum, I then added the little symbol things.

The Enemies:

The enemies are all the same, the king but recoloured and with bongos instead of a tambourine. Mostly self explanatory.

Sound:

I have no idea what to say for some of this. I can say that what it does is it will see if you pressed the left mouse button and if you did it will then check if you have the rifle. If you have the rifle it will then play a random pan flute sound and fire the projectile. I don't know what the location and the rotation bit does.

Now we get to the part that I can't put down as I couldn't get any screenshots of it. I couldn't change the gun to begin with, it ended up being the same mesh but the wrong way up, not my pan flute. Eventually it was fixed and works now. That's about all there was for my game. None of the code was really changed.

0 notes

Text

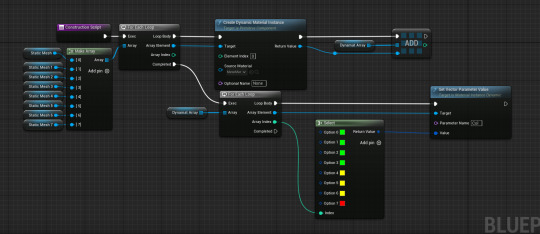

Making the project look nice.

So far my project has been coded to get the game mechanics working but now it was time to polish. With the game being called Decibel Dash I had made the theme of the game audio level bars. I modelled a voxel bar that would be used as the tile that the character runs on. However, as usual I added more than what I had originally planned to do.

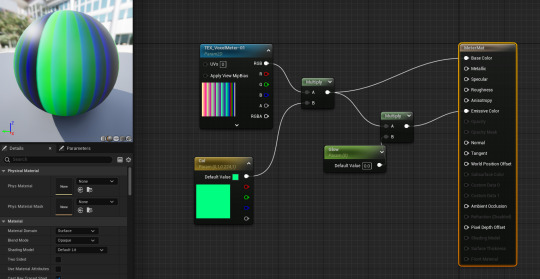

This was the finished result in MagicaVoxel, the only issue being that if I wanted the material to glow I would have to do it myself as the only drawback of using voxels is that the materials are not very tangible.

The mastertile was originally going to stay like this until I decided the game would look much better with some glowing materials. Though due to the limitations of the voxel material it wasn't as straightforward as it would be for a regular object. I had to create a sub assembly where I separated one of the bars as this would help with giving each bar a different material. These individual bars were then stacked up on each other to mimic the original model.

But after this I then decided I wanted to make the tile glow in response to volume input. Many things in this project react to my How loud? variable but this unfortunately became difficult very quickly.

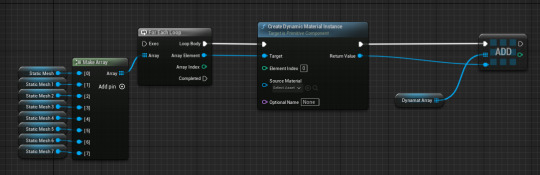

The first thing I tried was to create the dynamic material for each of the bars (which you can see from the 8 static meshes on the left). This ended up not functioning at all despite being set up with no errors, meaning that I had to try something else.

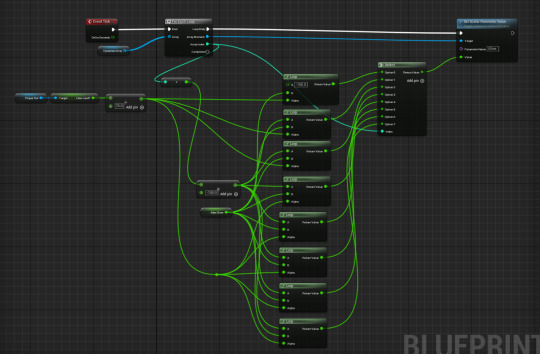

I made sure to cast the thirdperson character so that I was able to access the How loud? variable as it was the key to making things work when I wanted something to react to sound. And after a lot of trial and error the code finally works. The values could be tweaked: when I ran the game on my home PC, I found that if your microphone has a pop filter on, it is much more difficult to make the bars glow.

This could most likely be done in a cleaner way, but the way I did it was by connecting a lerp for each individual static mesh. The value was divided by 75, as this is what the max volume was due to a clamp, and then multiplied by -100. These two nodes were plugged into both A and B of the lerps, with a max glow variable (which is set to 15 so that the glow doesn't completely fill the screen) going into the Alpha. The return value was plugged into a select node where I had to create enough options for the amount of bars I would want to be glowing. Finally the return value was plugged into the value of a set scalar parameter value. This is what the scalar parameter was referencing:

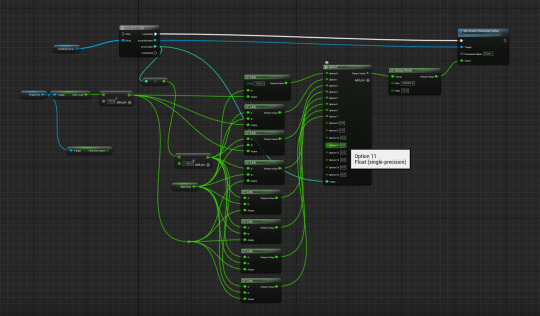

This is how it was left after last Wednesday. I came back to work on the project in the final days to discover that the audio meter was no longer working as it should be.

Whilst the debugging was in progress, the functionality of the bar had also changed so that it reacted to the progress bar that resided in the HUD as this had the most accurate audio input reading whereas before it only reacted to loud noise. This quality of life change was much needed and I'm happy with how it changed as a result of the code breaking. In this screenshot the event tick fires this code every tick meaning that it is constantly active. It multiplies the HUD bar output by 0.125 (1 divided by 8 for each of the individual bars) and then it is plugged into a float with a range between 15 and 0, 15 being the glow limit of the tiles. The select float was then plugged into a get dynamic material which is where I was able to set the material. The loop at the beginning ensured that this code would be repeated over and over.

This mess that was used above is now completely obsolete, despite it working before.

This screenshot was the primary reason why fixing this took so long. The create dynamic material instance somehow got set to none which messed up the referencing with other bits of code. It had always been a part of the code to make the glowing work but I had completely forgotten about it over the week. Once this was set to the correct meter material, the rest of the code was then fixed, and revamped.
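The Blueprint screenshots are not reproduced here, so the following is only one possible reading of the reworked meter, written as Python rather than Blueprint nodes. It assumes the HUD bar gives a level between 0 and 1, that each of the 8 bars covers a 0.125 slice of that range, and that a bar's glow scales from 0 up to the limit of 15 as its slice fills. The function name and the exact mapping are assumptions.

```python
BAR_COUNT = 8
BAR_STEP = 1 / BAR_COUNT   # 0.125, the share of the meter each bar covers
MAX_GLOW = 15.0            # glow limit of the tiles, from the post


def bar_glows(level):
    """Return a glow value per bar for a HUD level in the range 0.0 to 1.0."""
    level = max(0.0, min(1.0, level))
    glows = []
    for i in range(BAR_COUNT):
        # How far the level has filled this bar's slice, from 0.0 to 1.0.
        fill = (level - i * BAR_STEP) / BAR_STEP
        fill = max(0.0, min(1.0, fill))
        glows.append(fill * MAX_GLOW)
    return glows


quiet = bar_glows(0.0)
half = bar_glows(0.5)
loud = bar_glows(1.0)
```

In the engine, each value would be written to that bar's dynamic material instance as the scalar glow parameter on every tick.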

youtube

0 notes

Text

Okay I have two hypotheses for why Minecraft was the one that got me:

When you boot up a horror game, you know EXACTLY what you’re getting into. When you open up your voxel-based E10+ sandbox? You aren’t exactly expecting heart palpitations.

Stakes. In most horror games, your punishment is being sent a few minutes back at worst. A pretty small consequence. But in Minecraft? You’ll end up losing your unique configuration of inventory space, which may include treasured items that will take hours to be regained, if they can be reasonably regained at all, AND you’ll lose all your progress mining, which could have been from minutes to hours of progress. In a typical horror game, there’s a guarantee you’ll be able to do the exact same thing in the exact same way. In Minecraft? There’s no such promise. It’s just “good luck, figure it out, you’re on your own.”

In most horror games, there’s the safety of knowing that this was a crafted experience that you’re being guided through. Fall through the floor? That was supposed to happen. Creepy noise? Good! I’m making progress!

To me, horror games feel very safe because of those guard-railed constraints.

Minecraft is full rails-off.

I am not a brave man.

Bravery is acting in spite of being scared. I may look brave, but the truth is, I simply don’t get scared very easily.

But when I do get scared? I’ll scamper away like a meerkat.

I’ve played literally dozens of acclaimed horror games, but I’ve never felt a tinge of anxiety. Which is good, because I hate being anxious. I mostly just like to ogle the monsters and admire the imagery.

To this day, no horror game has made me feel any sort of looming dread.

Except one.

Minecraft.

FUCKING MINECRAFT.

As a horror game veteran, I have never, EVER had the controller shake in my hands since I was twelve. It’s been a good streak.

I’m not planning on going back to The Deep Dark anytime soon, that’s for sure.

395 notes


Text

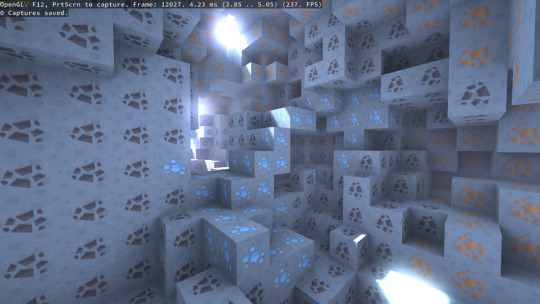

Irregularly-sized voxel raytracing.

Just made a tumblr account because peer pressure but I figure I'll post a bit about my current project here because why not?

With the advent of games like Teardown and the recent public demo of Atomontage, voxel raytracing/raymarching is rapidly entering the public eye. Inspired by these sorts of projects, I started working on voxel raytracers of my own a while back, with varying degrees of success.

These projects were satisfying due to the general graphical fidelity, but they were all things people had done before. So I decided I wanted to make something that nobody had done before. At least, not quite like this. Enter: Irregular voxel raytracing.

This technique differs from most forms of voxel raytracing in that the voxels are, well, irregular. They're not the static, solid cubes you're used to seeing. And as a result, they have a more natural, somewhat unique aesthetic.

I've been really getting into the Rust programming language lately and trying to use it for most projects, so for this I used the Bevy engine. The voxels are stored in a simple 3D texture and the rendering itself is a simple fullscreen quad with a shader for the actual rendering. It's currently just one pass, and since adding new passes and stuff to Bevy is a bit of a pain, I don't have any temporal or screen-space anti-aliasing, and also all shadows and reflections are perfectly hard.

At world generation, the landscape is generated such that each block contains two values: 8 bits for density and 8 bits for type. To calculate this, I get a Perlin heightmap and subtract the Y position of each voxel from it, and clamp the resulting value between 0.0 and 1.0. From there, I use a distance-based Worley noise to cut random spheres of gradually decreasing size from the landscape. This leads to decent cave generation that still works with the density value workflow.

The resulting value is multiplied by 255, truncated, and used as the 8-bit density value. A material value is also included, which basically just takes how deep from the heightmap the current voxel is to choose between grass, dirt, and stone. And there you go, a decent world generation!
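A rough sketch of the density and material step, assuming a stand-in sine heightmap in place of Perlin noise and leaving out the Worley cave carving. The depth thresholds for grass, dirt and stone are made up for illustration; the post does not give them.

```python
import math


def terrain_height(x, z):
    """Stand-in heightmap; the post uses Perlin noise here instead."""
    return 8.0 + 3.0 * math.sin(x * 0.3) * math.cos(z * 0.3)


def density_byte(x, y, z):
    """Heightmap minus voxel Y, clamped to [0, 1], scaled to 8 bits."""
    density = terrain_height(x, z) - y
    density = max(0.0, min(1.0, density))
    return int(density * 255)          # int() truncates, as in the post


def material_for(x, y, z):
    """Pick a material from depth below the surface (thresholds are made up)."""
    depth = terrain_height(x, z) - y
    if depth < 1.0:
        return "grass"
    if depth < 4.0:
        return "dirt"
    return "stone"
```

Voxels well below the surface clamp to a density of 255, voxels above it clamp to 0, and only the thin band around the surface gets an in-between value, which is what gives the terrain its irregular shape.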

But there's a little issue with this: An empty voxel has a density value of 0, but the type value is unused. So to compensate, I use that value as a distance variable. I simply iterate over the finished landscape a certain number of times (currently 16), each time checking the 6 neighboring voxels and increasing its value if it has no solid neighboring voxels and is equal or less than all surrounding voxels. This gives an estimate of how many DDA steps it would take to reach a solid voxel.
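The distance pass might look something like the sketch below. It assumes a double-buffered update, nested lists instead of a 3D texture, and that neighbours outside the grid are simply ignored; none of those details are stated in the post.

```python
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def build_skip_distances(solid, passes=16):
    """solid[x][y][z] is True for solid voxels; returns per-voxel skip counts."""
    sx, sy, sz = len(solid), len(solid[0]), len(solid[0][0])
    dist = [[[0] * sz for _ in range(sy)] for _ in range(sx)]

    for _ in range(passes):
        nxt = [[col[:] for col in plane] for plane in dist]
        for x in range(sx):
            for y in range(sy):
                for z in range(sz):
                    if solid[x][y][z]:
                        continue
                    grow = True
                    for dx, dy, dz in NEIGHBOURS:
                        nx, ny, nz = x + dx, y + dy, z + dz
                        if not (0 <= nx < sx and 0 <= ny < sy and 0 <= nz < sz):
                            continue   # assumption: off-grid neighbours are ignored
                        # Stop growing next to a solid or next to a smaller value.
                        if solid[nx][ny][nz] or dist[nx][ny][nz] < dist[x][y][z]:
                            grow = False
                            break
                    if grow:
                        nxt[x][y][z] = dist[x][y][z] + 1
        dist = nxt
    return dist


# A 5x1x1 strip with one solid voxel at x = 0.
strip = [[[True]], [[False]], [[False]], [[False]], [[False]]]
skips = [column[0][0] for column in build_skip_distances(strip)]
```

In this version an empty voxel ends up with one less than its step distance to the nearest solid, so skipping that many steps never jumps over a surface.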

So now that the data's been generated, I can get to actually rendering it. The shader that I use simply takes the current camera position, plus its forward, up, and right vectors, as well as the voxel texture. The ray origin is just the camera position, and the position on-screen is easily used to calculate a ray direction with the directions:

vec3 rd = normalize(screenCoord.x * cam_right + screenCoord.y * cam_up + cam_focal_length * cam_forward);
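For anyone more comfortable outside shader code, the same calculation can be written in Python. This sketch assumes screen coordinates are centred on zero (for example -1 to 1 across the view) and that the three camera vectors are unit length and perpendicular; the function names are illustrative.

```python
import math


def normalize(v):
    """Scale a 3-component vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def ray_direction(screen_x, screen_y, cam_right, cam_up, cam_forward, focal_length):
    """Mirror of the shader line: weighted sum of camera axes, then normalised."""
    d = tuple(
        screen_x * r + screen_y * u + focal_length * f
        for r, u, f in zip(cam_right, cam_up, cam_forward)
    )
    return normalize(d)


right, up, forward = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
centre_ray = ray_direction(0.0, 0.0, right, up, forward, 1.0)
edge_ray = ray_direction(1.0, 0.0, right, up, forward, 1.0)
```

A larger focal length pulls every ray closer to the forward vector, which narrows the field of view.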

In the actual voxel raymarching algorithm, I use a branchless DDA based on this shadertoy. When it hits an empty voxel, it continues to march with that DDA without sampling the voxel texture for the specified amount of steps in that voxel's second value. Otherwise, it samples the value to either test if it's a solid voxel or an empty one, and acts accordingly.
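Here is a sketch of the skipping idea using a plain, branching DDA in Python rather than the branchless shader variant. It assumes `solid` and `skip` are nested lists indexed as `[x][y][z]`, and that a skip value of k means the next k cells along any ray are guaranteed empty. The names are illustrative.

```python
import math


def dda_march(origin, direction, solid, skip, max_steps=256):
    """Return the first solid voxel (x, y, z) hit by the ray, or None."""
    size = (len(solid), len(solid[0]), len(solid[0][0]))
    cell = [math.floor(c) for c in origin]
    step = [1 if d > 0 else -1 for d in direction]
    # Ray-parameter distance between successive grid planes on each axis.
    delta = [abs(1.0 / d) if d != 0 else math.inf for d in direction]
    # Ray-parameter distance to the first grid plane on each axis.
    side = []
    for c, o, d, dl in zip(cell, origin, direction, delta):
        if d > 0:
            side.append((c + 1 - o) * dl)
        elif d < 0:
            side.append((o - c) * dl)
        else:
            side.append(math.inf)

    free_steps = 0
    for _ in range(max_steps):
        if not all(0 <= c < s for c, s in zip(cell, size)):
            return None                # ray left the grid
        if free_steps > 0:
            free_steps -= 1            # known-empty cell: no lookup needed
        else:
            x, y, z = cell
            if solid[x][y][z]:
                return (x, y, z)
            free_steps = skip[x][y][z]
        axis = side.index(min(side))   # cross the nearest grid plane
        side[axis] += delta[axis]
        cell[axis] += step[axis]
    return None


# A 5x1x1 strip: empty cells at x = 0..3, one solid voxel at x = 4.
solid = [[[False]], [[False]], [[False]], [[False]], [[True]]]
skip = [[[3]], [[2]], [[1]], [[0]], [[0]]]
hit = dda_march((0.5, 0.5, 0.5), (1.0, 0.0, 0.0), solid, skip)
miss = dda_march((0.5, 0.5, 0.5), (-1.0, 0.0, 0.0), solid, skip)
```

In the example the ray reads the grid only twice, once at the start and once at the solid voxel, instead of once per cell.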

My initial idea involved using a surface nets or marching cubes type algorithm calculated at runtime to smooth the voxels out at irregular angles. However, this proved to be more difficult and performance-intensive than it was worth. So, instead, when it hits a solid voxel, it samples the 6 neighbor voxels to see if they have any value. This results in 3 sets of values: top/bottom, right/left, and front-back. I then generate two separate lookup tables based on the density value, as follows:

float mins[4] = float[](
    0.5 - density / 2.0,
    0.0,
    1.0 - density,
    0.0
);

float maxes[4] = float[](
    0.5 + density / 2.0,
    density,
    1.0,
    1.0
);

Then, for X, Y, and Z, it uses a solid object as 1 in the negative direction and 2 in the positive direction to index into these arrays and calculate the min/max bounds of an AABB within the current voxel. I then trace that AABB using the original ray. If it hits, then the voxel's material, as well as the ray distance to the AABB and the normal it hit are all returned.
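The table lookup for a single axis can be written out as below. The sketch assumes `density` has already been converted from its 8-bit value to the range 0.0 to 1.0, and the function name is illustrative.

```python
def axis_bounds(density, solid_negative, solid_positive):
    """Min/max extent of the shaped voxel along one axis, in [0, 1] voxel space."""
    mins = [0.5 - density / 2.0, 0.0, 1.0 - density, 0.0]
    maxes = [0.5 + density / 2.0, density, 1.0, 1.0]
    # A solid neighbour adds 1 on the negative side and 2 on the positive side.
    index = (1 if solid_negative else 0) + (2 if solid_positive else 0)
    return mins[index], maxes[index]


floating = axis_bounds(0.5, False, False)   # no neighbours: centred in the voxel
on_floor = axis_bounds(0.5, True, False)    # hugs the negative face
on_ceiling = axis_bounds(0.5, False, True)  # hugs the positive face
enclosed = axis_bounds(0.5, True, True)     # spans the whole voxel
```

Running this once each for X, Y and Z gives the two corners of the box that the ray is then tested against.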

My current algorithm uses one initial ray, plus a shadow ray to the sun and one reflection+shadow ray for some nice shadows and reflections.

And that's how I made my voxel renderer! I'll likely upgrade this, or move onto other projects for voxel rendering that allow for complex global illumination and soft shadows and reflections. I'm proud of what I've managed to do here. And who knows, maybe I'll be able to use this to make something cool! Regardless, thanks for reading!

4 notes


Note

Of course his case was different than others'. Clu had seen to his repurposing personally. Trying to use that fact to gain his favor was irrational. He’d been told similar reassurances from far more trustworthy programs. ‘It won’t hurt’, ‘don’t be scared’. He wasn’t dumb enough to believe that, especially not from her. Rinzler held back the aggravated noise that threatened to escape, but couldn’t stop the confused note that slipped out as the User began speaking again. He’d said ‘Can’t’ not ‘won’t’. That was worrying, something the change in the User’s tone of voice didn't help. It simultaneously made Rinzler want to stand down and crawl out of his shell. It was confusing.

He just barely managed to keep himself from cringing away from the User’s hand, teeth bared beneath his helmet. Glad he’d survived what? The fall? It was his fault he’d fallen in the first place. As he struggled to think of an adequate response he received the series of pings, head canting as he mulled them over. She didn’t even try to strike a deal, to bargain for her runtime. So certain that she and her disc’s contents would be useful, enough so that she believed he’d want it even after he derezzed her. Rinzler couldn’t deny that he was intrigued.

[I don’t make threats.] Was all he said, because he didn’t, he simply informed his opposition what he’d do if they continued down their current decision path. That, and there were more pressing matters at the moment.

He hadn’t turned his gaze from the User even as the program pinged him, knowing to never take his gaze off the most prominent threat. Because of that he saw the minute ways that Kevin_Flynn’s face crumpled, brows drawn together and mouth pulled down at the corners. So similar to Clu, the side of the program only Rinzler had been privy to. It could’ve been those similarities that had distracted him, or the water damage still affecting him. Either way, there was a single nanosecond delay before the words spoken actually registered in his processor. Following that registration all he wanted to do was throttle the User until he was reduced to a pile of voxels beneath his hands. Rinzler held himself back from acting on that impulse, but just barely, his entire render pulling into a single line of tension.

Clu wasn’t gone. He couldn’t be. He was just in a place Rinzler couldn’t register his code signature from. Quarantined, maybe. The idea that it made him safe of all things was so absurd it tore a laugh from his throat, a sound closer to a garbled burst of static than anything resembling laughter. He was distantly aware that it was an inappropriate reaction given the circumstance, but he couldn’t help it. That was the best the User could come up with? Rinzler held the rest of his reaction at bay, trying to quickly compose himself. While he didn’t know much about the reintegration process -who did, really- he did know there wasn’t typically a survivor.

“How are you still functional? Not that I don’t believe you…” Rinzler trailed off, allowing his response to be interrupted however the User chose to see it. He had to regain his act and adding anything more would have risked giving himself away. Keeping his responses short was a better bet. Rinzler just needed to lull the User into a false sense of security, make him believe that he could be trusted not to act out. Rinzler didn’t want to play the long game, but shows of violence had gotten him as far as they could. Feigning concern was far more difficult than subservience, but the fact that the User was clearly running on reserve power helped make things a little easier. Kevin_Flynn was too exhausted to catch his slip ups.

“You should take a recharge cycle, you’ve been busy.” Keeping it worded lightly, more as a suggestion, would hopefully make the User more susceptible to the idea. If the User went offline he’d have more time to locate an energy source. Rinzler inched closer, his free hand coming to hover in the space between himself and the User like he wanted to comfort him or lead him away or something equally sentimental. He pulled back before contact could be made, looking around at the doorways of the safe house like he wasn’t aware of where they led. He’d been the one to secure them when he’d arrived with the Admin, so he knew not only the contents of the rooms, but which one was most likely Kevin_Flynn’s. He performed a scan for appearance’s sake, though he wasn’t sure if Users could pick up on such things, and headed towards the room in question. If all went well the User would agree to take a restcycle without simply deciding he’d rather just consume energy directly instead. Though, now that he thought about it, that could be useful too.

“He’s really gone? You’re positive?” Rinzler asked in a deceptively cautious tone to mask the anger threatening to drive him to…rash choices. He couldn’t hide the way his grip tightened again around his disc, his weight shifting towards the balls of his feet like he was preparing for an attack. Even though the tension he’d been carrying had seemingly dissipated, it was clear it wasn’t truly dismissed. The door responded to his prompting, lacking the security features of a typical panel. Rinzler stepped back to allow the User to pass through without feeling forced, sending a glare over to the other program behind his back, one only marginally lessened by his helmet.

[Do not touch anything.] he warned. [The only reason you're still functioning is because I’m allowing it. Do not interfere.] If his efforts were undermined now after he’d already accepted the humiliation he’d be infuriated, to say the very least. With that warning made clear Rinzler turned and followed after the User, movements silent despite his damages. The room reminded him of Clu’s quarters if the Admin’s rooms were color-inverted and considerably less sleek. The User had too many random items. “How will you proceed?” Rinzler questioned from the doorway, intending to wait the User out before he began his exploration. Kevin_Flynn had many, many options to choose from going forward. Rinzler’s disc was finally clicked into place on his back, as he was distantly aware that holding it gave him a more threatening appearance.

/*dealer's choice (prompt and muse), if you want to:*/

“Breathe. Hi, we found you, just breathe for me, okay?”

“This is going to hurt, but it will help you.”

[ FIGHT ] for receiving muse to not recognize sender or medical staff trying to help them, due to being drugged or otherwise disoriented – so they fight.

( @spaceparanoids-highestscore / @unwritten-identity-discs )

Rinzler hadn’t expected to come back online. When he’d sunk beneath the waves and slowly felt the energy drain from him, he thought he’d derez, with his voxels left to sink into the depths until they despawned. The fact that he was able to process that thought at all showed just how wrong he’d been. His external sensors were coming back online at a crawl, a pace so slow it would’ve made him paranoid if his internal system wasn’t also progressing at a similar speed. Users willing, his processing speeds were simply decreased and he wasn’t truly taking that long to come back online. How had he even ended up in the Sea? He'd just have to wait for the rest of his recent memory cache to click back into place to find out.

The first sensor to come back online was visual input, even if only partially, revealing the blurred form of a figure looming above him. Rinzler let out a startled warning sound as he scrambled away, the growl tearing at his throat. Water still clung to his suit, black residue from the Sea blocking out the orange lightlines and making him appear as a silhouette, save for the fractures running across his render. His circuits ached and he wasn’t able to orientate himself within the system, his anchors lost in the stream of data. Where was he? Who was this program? -Actually, the latter didn’t really matter. It wasn’t someone he recognized, and therefore, an enemy.

If it thought he was helpless just because he didn’t have his discs, it was sorely mistaken. He pulled himself to his feet using the wall behind him, not wanting his back to be exposed. The program in front of him was saying- something. His aural sensors were still calibrating, so while he could tell it was speaking he couldn’t make out what was being said beyond sound bits about ‘helping him’. He ignored it regardless, instead lunging for its throat. If he could get the right leverage he’d be able to snap its arm off despite the jitter in his own joints. As he wrestled it to the floor and moved to do just that, the panel across the room slid open. Rinzler gave the door a cursory glance as he wrapped his hand around the program’s arm, but paused when he saw who stood in the doorway. Clu?

Rinzler aborted the current action, just in case the program was of some use he couldn’t recall. It was a programmed response, one ingrained from working under the Admin for thousands of cycles, to always look to see if the current outcome was one the Admin wanted. He realized all too late as some of the static from his optics cleared that who he was looking at wasn’t who he’d thought it was. It was a User.

The User. Kevin_Flynn.

…That didn’t bode well for Rinzler’s continued runtime. Why hadn’t the User ever made an appearance when Rinzler would’ve actually had the means to derez him? Before he could dwell on how illogical that thought was he felt a sharp, stabbing pain in his shoulder, right through the mesh between his armor plating. Oh. How had he forgotten about the program?

His lapse in processing was going to cost him the life he’d temporarily regained, as he could already feel his subroutines shutting back down. It immobilized him as his inputs fell away, his helmet hitting the ground with a loud thud. He didn’t want to derez, let alone like this, but when did he ever get what he wanted? He’d always imagined that he’d be struck down in battle, having finally found an opponent able to best him, not on the floor with a program he didn’t know, with Clu nowhere to be seen, and the Grid’s creator lurking overhead. He could tell words were being exchanged above him, before his audio input fell away, visual soon following suit as it filtered to static before switching off completely. Rinzler himself didn’t go offline fully, which seemed like a strange oversight. Instead he was caught between being online and offline, not quite in standby, but not fully present either.

How long were they going to draw this out?
