#AI Detection Tool


Text

Can an AI Detection Tool Help Universities Combat AI-Generated Essays?

AI will keep reshaping education as long as students use tools such as AI writing assistants. However much these tools simplify students' work, they raise questions about originality and the integrity of academic performance. Universities now face the task of distinguishing a student's genuinely original work from an essay written by a neural network. Desklib presents its AI Detection Tool as a state-of-the-art answer to this challenge. Let's look at how tools like Desklib's can help institutions keep pace with advancing technology without compromising their academic standards.

The Increasing Impact of AI on Academic Writing

AI tools have transformed essay writing. For students, writing has become quick and easy; for educators, the shift is equally challenging. Here's how AI is changing the game in academic writing:

Ease of Use: AI essay tools can complete an essay in minutes, sparing students the work of researching and drafting it themselves.

Sophisticated Outputs: Advanced AI produces essays that closely resemble human writing in tone and complexity.

Personalization: Tools such as an AI Paraphraser and AI Text Rewriter let students rephrase generated content in their own voice.

Wide Accessibility: Because these tools are affordable and readily available, many students turn to them.

Ethical Issues: AI is very convenient, but it also raises questions about originality and genuine learning.

While AI offers students many advantages, it also creates serious problems for academic integrity.

Key Challenges AI-Generated Essays Pose for Universities

The widespread use of AI writing tools presents several critical issues for universities:

Human-Like Output: AI-generated essays closely mimic human writing, which makes them hard to identify.

High Submission Volume: Manually reviewing thousands of essays to determine which ones used AI is impractical for educators.

Ineffective Traditional Tools: Traditional plagiarism detectors match submissions against existing sources, so they often find nothing in freshly generated AI text.

Rapid Technological Change: AI tools are improving quickly, and universities need detection methods that improve just as fast.

Student Misconceptions: Many students use tools such as an AI Paraphraser without considering the consequences for academic integrity.

These challenges show why universities need to work out how AI detection software can help maintain originality in academic work.

How an AI Detection Tool Bridges the Gaps

Desklib's AI Detection Tool is one solution to the problem of AI-generated essays. Here's what makes it stand out:

Precise Detection: The tool identifies AI-generated content by analyzing syntax, structure, and writing patterns.

Real-Time Analysis: It processes essays quickly, so educators can grade submissions with minimal delay.

User-Friendly Design: Its interface is clean and easy to use for teachers and institutions alike.

Adaptability: It is designed to evolve alongside AI technologies so that it stays effective at catching output from newer writing tools.

Scalability: It handles large volumes of submissions, serving everything from small colleges to large universities.

This tool helps universities uphold academic standards despite the challenges AI writing presents.

AI Paraphraser: A Tool for Ethical Learning

While detection tools support educators, students need resources that encourage responsible use of technology. Desklib's AI Paraphraser is designed to support ethical learning in several ways:

Writing Enhancement: It polishes sentence structure and clarity without changing the meaning.

Encouraging Integrity: Unlike direct copying, it helps students improve their own work responsibly.

Improved Readability: Essays read as more polished and professional.

Bridging Learning Gaps: It helps students develop their ideas and express them more articulately.

Complementing Detection Tools: Paired with an AI detection tool, it helps maintain academic standards.

Responsible use of tools like the AI Text Rewriter lets students do their best work without compromising their integrity.

Balancing Technology for Students and Educators

Desklib aims to balance the impact of AI in academia with tools that serve both students and universities.

Academic Integrity Through AI Detection Software: The detection tool checks academic submissions for originality.

Ethical Enhancement: The AI Paraphraser helps students refine their essays while developing their own abilities, and Desklib educates students on the responsible use of AI tools.

Scalable Solutions: Desklib's tools address varied academic needs, from individual assignments to institutional requirements.

Empowerment of All Stakeholders: Universities retain integrity; students grow without shortcuts.

This balanced approach creates an academic environment that is productive for educators and learners alike.

Conclusion

Tools such as Desklib's AI Detection Tool and AI Paraphraser are important resources in a world where AI can write essays. Desklib aims to make its customers' lives easier with state-of-the-art detection and ethically minded writing features, positioning the platform as a credible way to foster academic integrity. Used properly, technology can help create an educational path through which everyone grows authentically.

0 notes

Text

I really do not blame students for using AI within a capitalist education system that prioritizes efficiency, productivity, and short-term memorization of profitable skills over genuine critical engagement. some skills are obviously crucial (e.g., writing for building critical thinking) but can be, and still are being, built with minor adjustments like proctoring. the research shows cheating rates have not changed and have remained high since AI has become available. among the students who do cheat, it's due to deeper systemic issues. many high school and college students are working multiple jobs, caretaking for family members, dealing with health issues, etc. and they will use whatever is available to give themselves some relief.

I get complaining about AI in passing. like I love to hate on it. but most people aren't really offering real ways to protest it other than just telling individuals they are evil for using it... which in any other area of politics we would think flawed and inadequate (re veganism, eco friendly lifestyle changes, etc).

sooo many AI-critical takes are liberal/idealist/individualist and not materialist. once again, we see individuals blamed for the profit-maximizing decisions of corporations (who are the ones responsible for the most harmful aspects of AI). instead of viewing the problems of AI as a symptom of structural issues requiring collective action, they would rather frame it as a personal character flaw of workers and students. and this individualizing of political issues closes off potential for a deeper critique, coalition building, and more opportunities for action. it's a way to both feel superior to other people and subscribe to inaction.

AI does not defy the capitalist historical pattern of labor-saving technology creation, monopolization, dependency enforcement, and exploitation. new technology increases productivity/profit expectations. these increased productivity expectations translate into pedagogy that also expects increased productivity. medical schools actively encourage regular AI use because they know doctors will now be expected to be faster and see more patients because of it - despite the fact most AI tools are not accurate enough to trust for medical information. however, this does not matter in the real capitalist world. what matters is how many patients you can see and how much money you can make for the shareholders and insurance companies.

just like all new labor-saving technology, AI decreases the bargaining power of workers and heightens capitalist contradictions: if you can't stay competitive and keep up with the pace as a worker, you risk your job and livelihood.

I am not advocating for doctors, students, and other workers to continue uncritically using AI, but to understand their part in the class war and act accordingly. under capitalism, all productivity gains from new technology will always go straight to the company owners, who would rather expect double/triple the productivity of workers rather than give the time saved back to workers or god forbid give the workers ownership over the new technology.

AI will not save us from capitalism - capitalism develops the productive forces for its replacement. we must radically organize and self-educate. activism isn't about being perfect but doing the best you can consistently without burning yourself out and this will look different for everyone.

#reminds me of liberal arguments for veganism#peoples behavior is not going to change unless their material conditions change#when peoples basic needs are not met most are only going to be concerned with their own survival#we should have high moral standards for one another but need to broaden our analysis if we want real lasting change#both militant vegans and anti-AI-ers instead will condemn ppl as irredeemably evil for using xyz capitalism death machine#individual responsibility can only go so far with highly interdependent societies#like im vegan but would never expect everyone to go vegan without significant material/cultural support#does everyone have the moral obligation to be vegan? sure.#but most people need significant support to get there and needing support isnt a bad thing re disability studies#i know im just rehashing the annoying no ethical consumption under capitalism argument but not really:#ppl should be encouraged to do as much as possible and practical while also recognizing capitalism makes evil unavoidable to a degree#we can and should work on both individual and collective action#and what complicates all of this is that some AI tools for like detecting cancers have been shown to be more accurate than human doctors#so it has the potential to save many lives but not if its owned and rationed by corporations

13 notes


Text

PSA that ai-detection tools are not yet as accurate as I wish they were. Here's hoping they improve QUICKLY, 🙏 but in the meantime, please be aware of both false positives and false negatives!

This reaffirms the importance of sharing WIPs, even though they are ugly and I don't wanna 😭😭

9 notes


Text

Learned about another, likely slightly less known, AI music tool thanks to looking into that self-described "collaborator" on one of the Church of Null songs (by "collaborator", they meant taking the CoN file and extending it without any acknowledgement from the original uploader bahaha, it's a bit weird)

So this channel uses "Bandlab" and doesn't hide it, since he shows it on streams allll the time. At first you assume, ah, he's using an actual music creator's tool, because it looks like one with all the audio pieces split into different tracks... note, he "shows it" on streams to play snippets, not "uses it" to show how he actually makes the extensions/songs

...Buuut, this tool is actually stuffed full of AI generative tools that can give the impression of detailed hand-creation at a glance. Namely, it is an AI extending tool, with tools to both generate and extend separate musical instruments. It also has a tool to take a compiled file and split it into several pieces (vocals, drums, guitar, etc) using the AI. Lastly, it also has an AI tool to "recompose" (change up) the file and "layer," which is a typical AI music generation tool that layers up different musical sounds with the AI.

Because Bandlab can take existing, hand-crafted audio files and use AI to extend them as well as "recompose" them, music extended and changed up on Bandlab may prove difficult to assess as AI. Buuut it sure does explain why this person has such an insane amount of "extended" versions of the Murder Drones OST uploaded in such a short period of time, among other extensions and "original" fan songs 🤔

#AI#murder drones#ai generated#ai detection tools#AI detection#dont mind me being a little ai generator hater again#i will probably not stop ngl#bandlab

5 notes


Text

Stop Trusting AI Detection Tools

youtube

This is just a PSA, but if you don't trust AI to give you answers because they can lie, don't trust them to tell you when something else is AI generated.

3 notes


Text

PLEASE I WROTE THIS MYSELF. I’M JUST AUTISTIC. YOU GOTTA BELIEVE ME, MAN

#ai#ai detector#ai detection tools#how did i even manage to get a 100%.#i wrote over 500 words#how are they all ai#autism#autistic#neurodivergent

6 notes


Text

Stay Ahead with Desklib’s Free AI Detection Tool – Your Secret Weapon for Authentic Writing

In a world where artificial intelligence is becoming increasingly embedded in our daily writing tasks, distinguishing between human and machine-generated content has become a challenge. Whether you're a student, academician, or content creator, ensuring your work is original and free from AI influence is essential.

That’s where Desklib steps in with its powerful AI content detection tool—designed to help users confidently detect AI-written content and maintain integrity across all forms of written communication.

Why You Need an AI-Generated Content Checker

With platforms like ChatGPT, Jasper, and other AI writers gaining popularity, it's easy for content to slip through unnoticed as AI-generated. But when it comes to academics, publishing, or professional writing, passing off AI-written material as your own can have serious consequences—from plagiarism accusations to reputational damage.

This is where Desklib’s AI text detector becomes invaluable. It acts as both an AI plagiarism checker and an originality checker, offering peace of mind that your work is truly yours—or helping you verify the authenticity of someone else’s.

How Desklib’s AI Detection Tool Works

Using Desklib’s AI detection tool is simple and fast:

Upload your document – whether it's an essay, research paper, blog post, or report.

Let the system analyze your text using advanced algorithms.

Receive a clear, downloadable report showing the percentage of AI-generated content in your file.

What sets Desklib apart is its chunking method: it breaks text into overlapping segments to better capture context and generate more accurate results. This helps ensure that even subtle signs of AI involvement don’t go unnoticed.
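The overlapping-segment idea can be sketched in a few lines of Python. This is a minimal illustration, assuming a word-based sliding window; the function name `overlapping_chunks` and the default `window` and `stride` values are hypothetical, since the post does not say how Desklib sizes or overlaps its segments.

```python
from typing import List


def overlapping_chunks(text: str, window: int = 200, stride: int = 150) -> List[str]:
    """Split text into chunks of `window` words, starting a new chunk every `stride` words.

    Consecutive chunks share `window - stride` words, so a passage that straddles
    a chunk boundary still appears intact in at least one chunk.
    The defaults are hypothetical values chosen for illustration.
    """
    if window <= 0 or stride <= 0 or stride > window:
        raise ValueError("require 0 < stride <= window")
    words = text.split()
    if len(words) <= window:
        # Short text: a single chunk (or none for empty input).
        return [" ".join(words)] if words else []
    chunks = []
    start = 0
    while True:
        chunks.append(" ".join(words[start:start + window]))
        if start + window >= len(words):
            break  # this chunk reached the end of the text
        start += stride
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in overlapping_chunks(sample, window=4, stride=2):
    print(chunk)
```

In a full detector, each chunk would then be scored by a classifier and the chunk scores combined into the document-level percentage; the post does not describe that scoring step, so it is left out here.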

Features That Make Desklib Stand Out

Free to Use: No hidden charges or subscription fees.

Supports Long Documents: Check papers up to 20,000 words long.

Multiple Detection Modes: Choose from Section Wise, Paragraph Wise, or Full Text analysis.

Private & Secure: Files are deleted after analysis—no data stored.

Who Can Benefit?

Desklib’s AI-generated content checker is ideal for:

Students: Ensure your assignments are 100% original.

Educators: Quickly screen student submissions for AI use.

Writers & Bloggers: Verify your content before publication.

Professionals: Confirm the authenticity of business reports or proposals.

Final Thoughts

As AI continues to reshape how we write, tools like Desklib’s AI content detection service are not just helpful—they’re essential. Whether you're creating content or reviewing it, having access to a reliable AI detection tool helps preserve the value of original thought and creativity.

Ready to Test Your Content?

Visit https://desklib.com/ai-content-detector/ today and start checking your documents for AI-generated content—completely free and in seconds!

#AI content detection#detect AI-written content#AI plagiarism checker#AI-generated content checker#AI text detector#originality checker#AI detection tool

0 notes

Text

My evidence that AI could never replace me.

Read ‘em and weep boys. My bullshit is one-hundo-percent human.

#i just wanted to see what would happen if i tossed some old writing of mine into ai checkers#im so fucking based#ai#ai generated#ai is stupid#ai is theft#ai is a plague#ai is bad#god i love all the ai hater tags#ai is shit#ai is bullshit#ai is plagiarism#ai is not art#ai is dangerous#ai is evil#ai tools#ai text#ai testing#human writing#ai detector#ai detection tools#ai hater#ai hate#shitpost#lmao#humanity#human

5 notes


Text

my irls do not fear technology enough why are they all using ai tools 😭 this girl just told me about an ai LinkedIn headshot that she paid $15 for

#someone else also got a professional photo out of a selfie with an ai tool#someone else did their resume with chatgpt#and this other girl most likely got her face stolen from a filter for an ai influencer bc it looks just like her it's so uncanny#just the other day my sister was telling me abt the camera in her car that detects when she's not looking at the road#BE AFRAID OF TECHNOLOGY PLEASEEEE

7 notes


Text

2 notes


Text

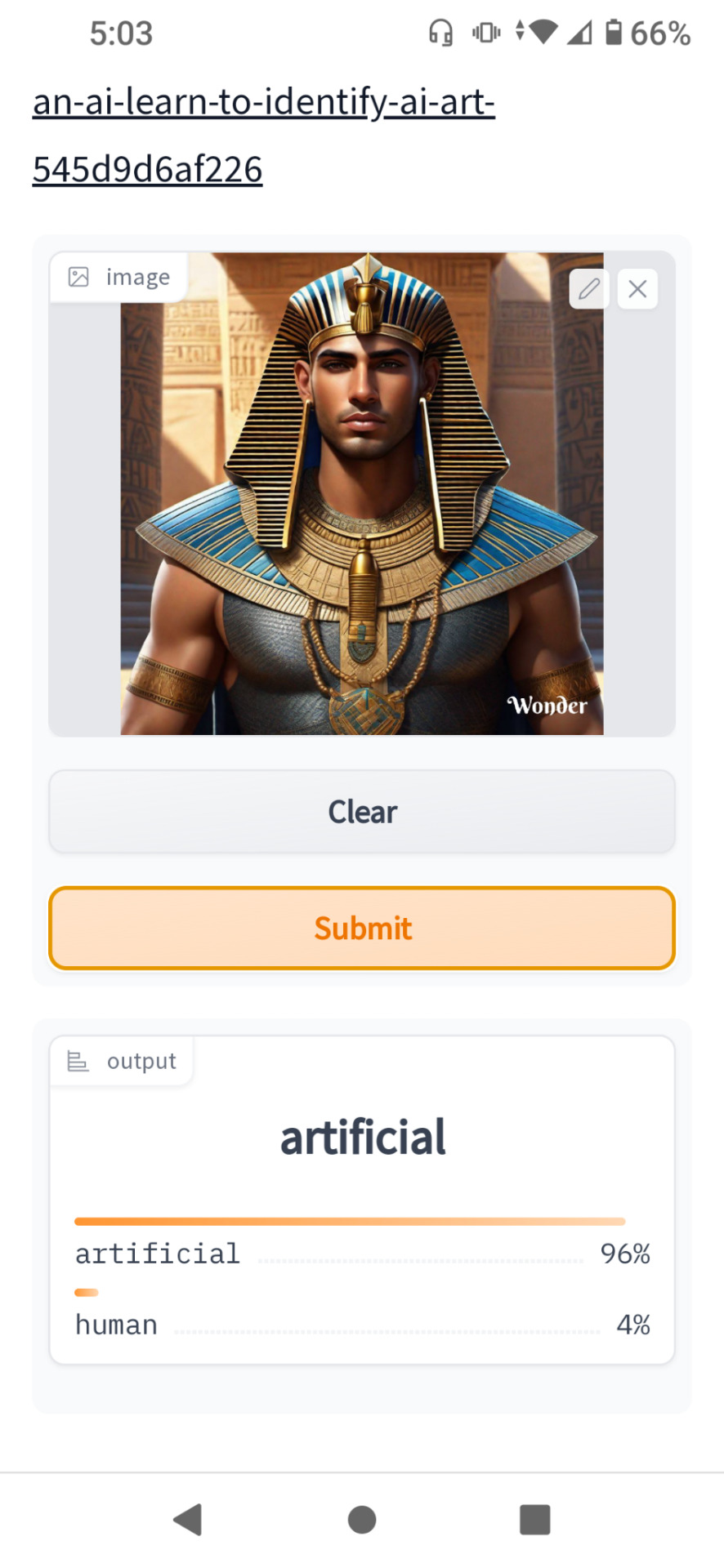

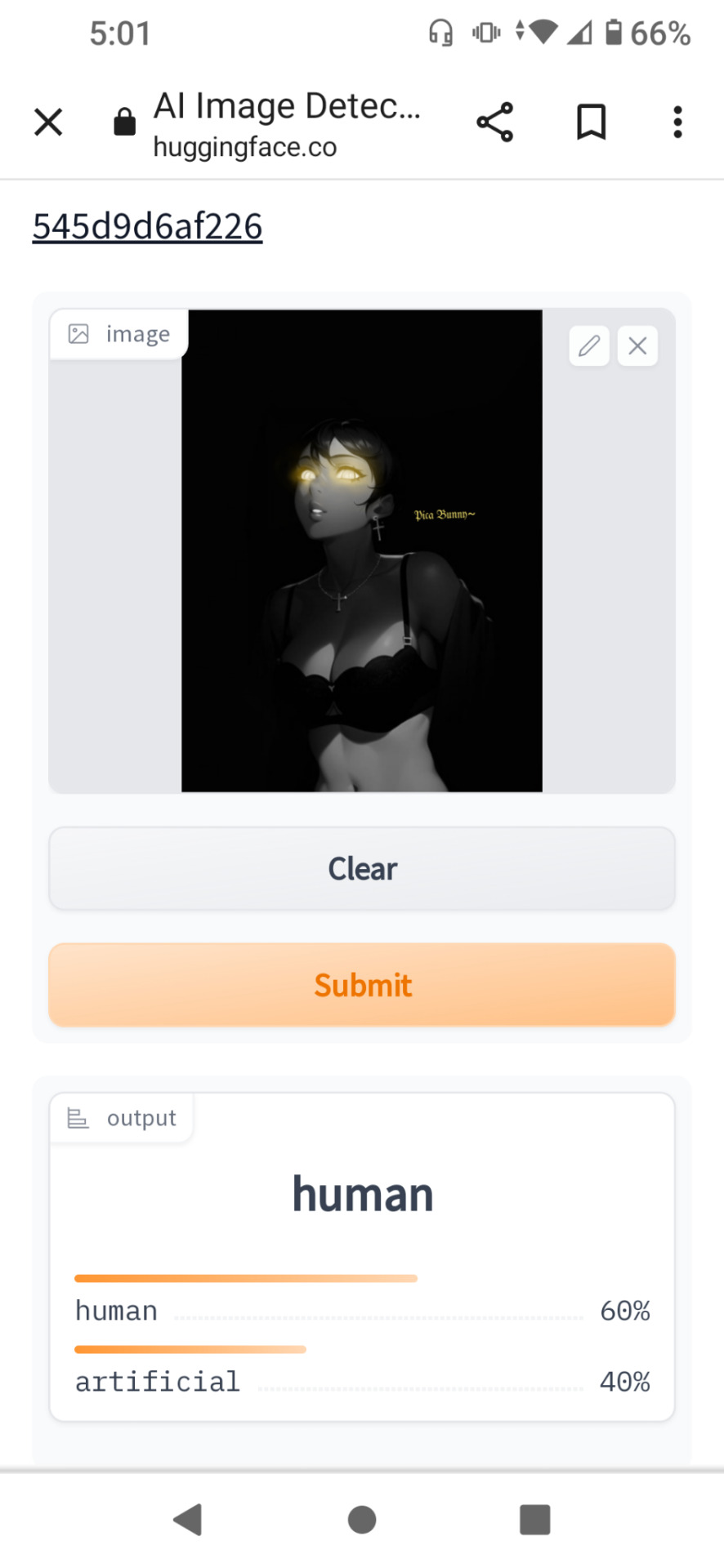

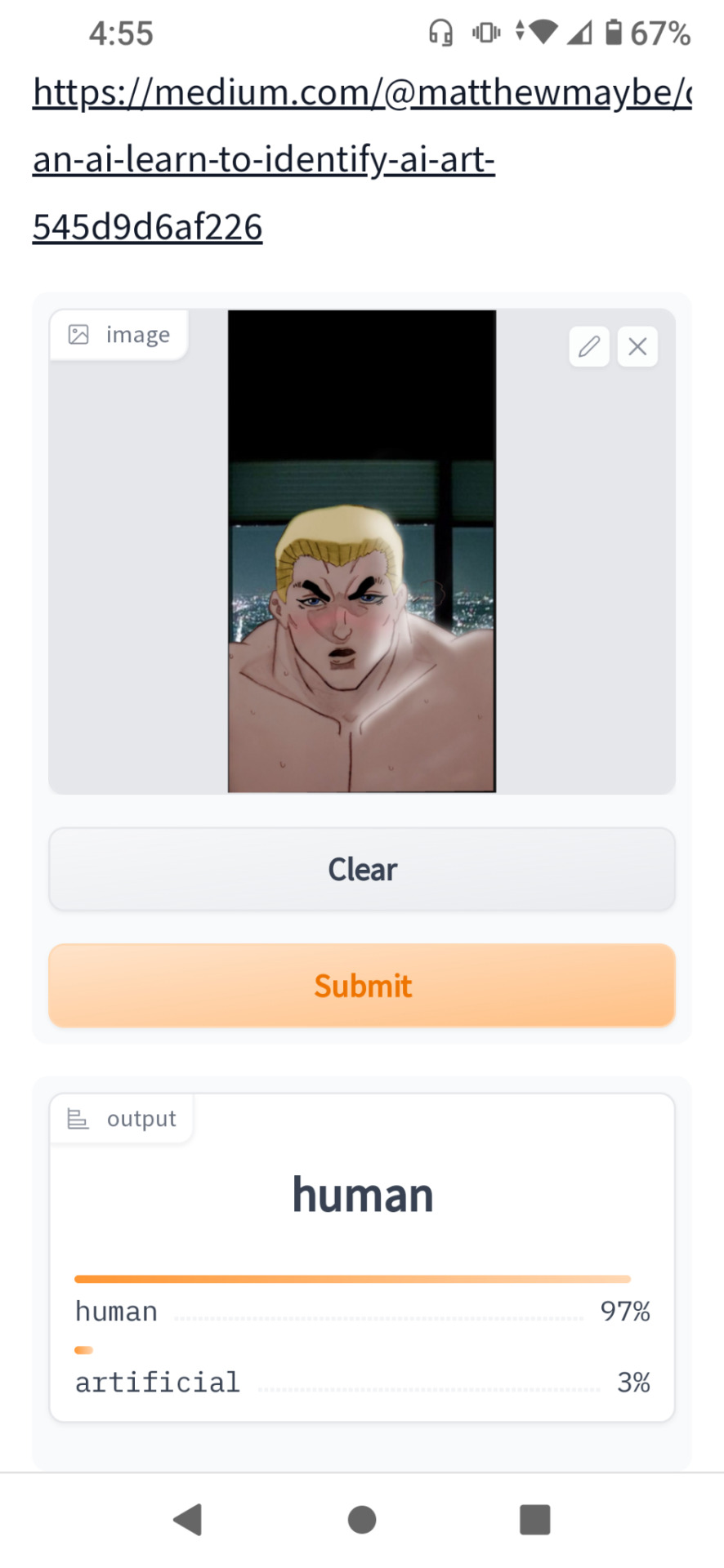

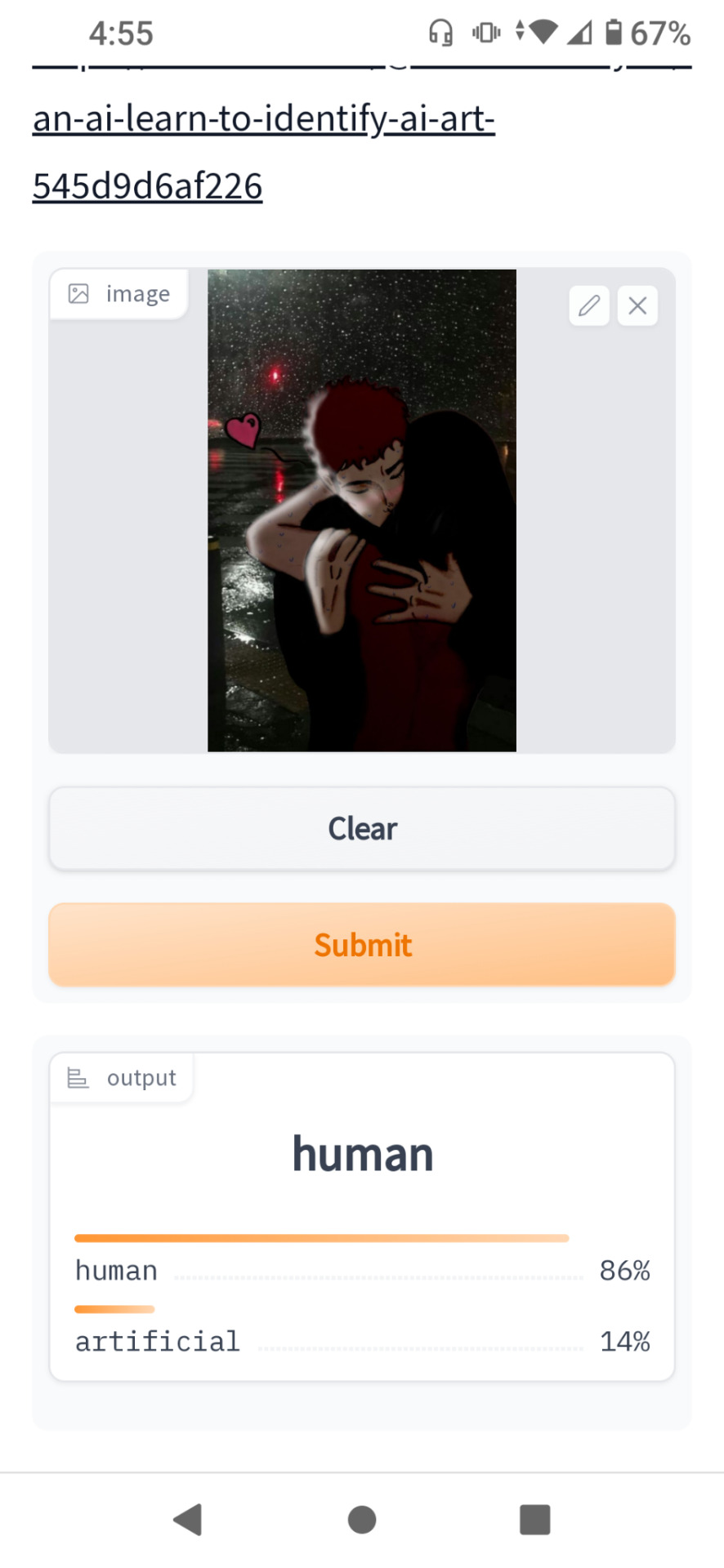

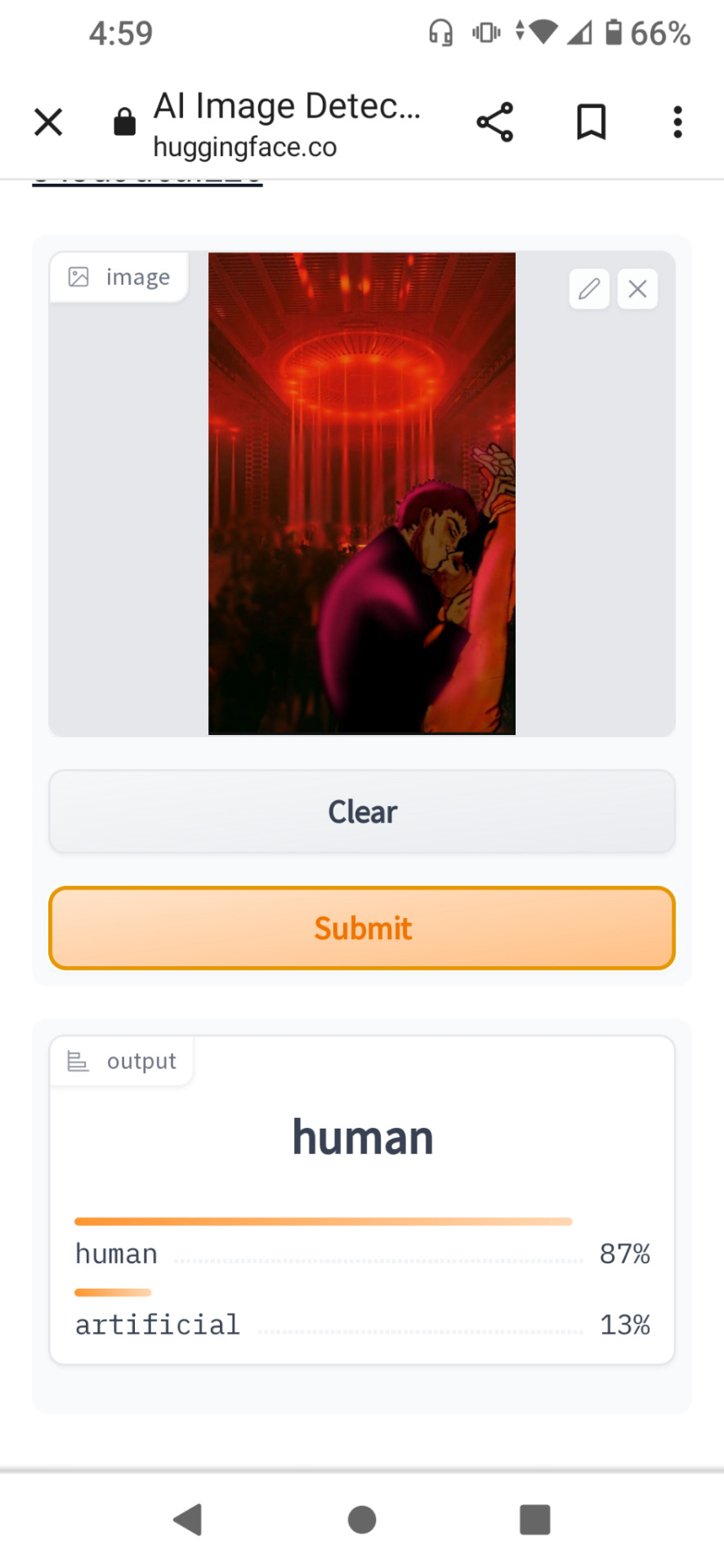

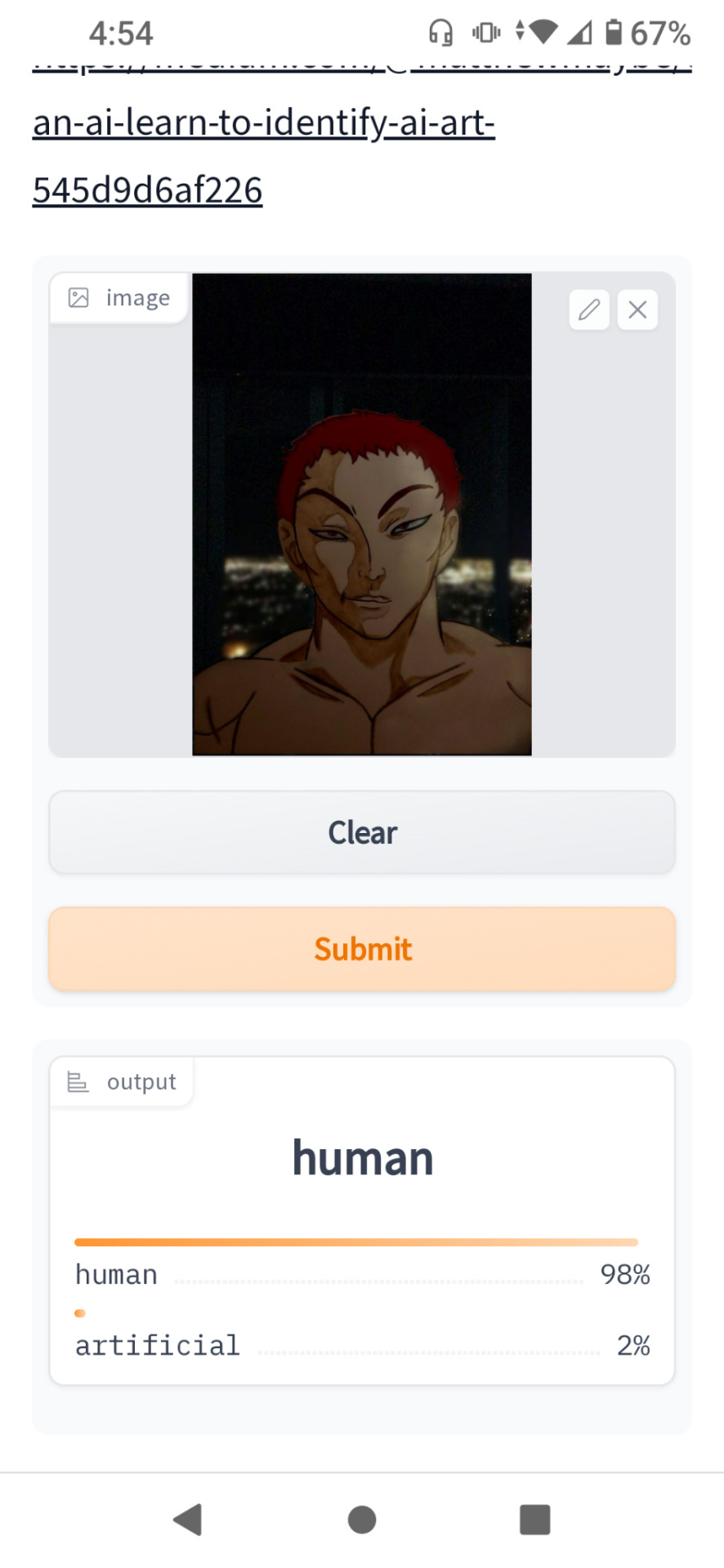

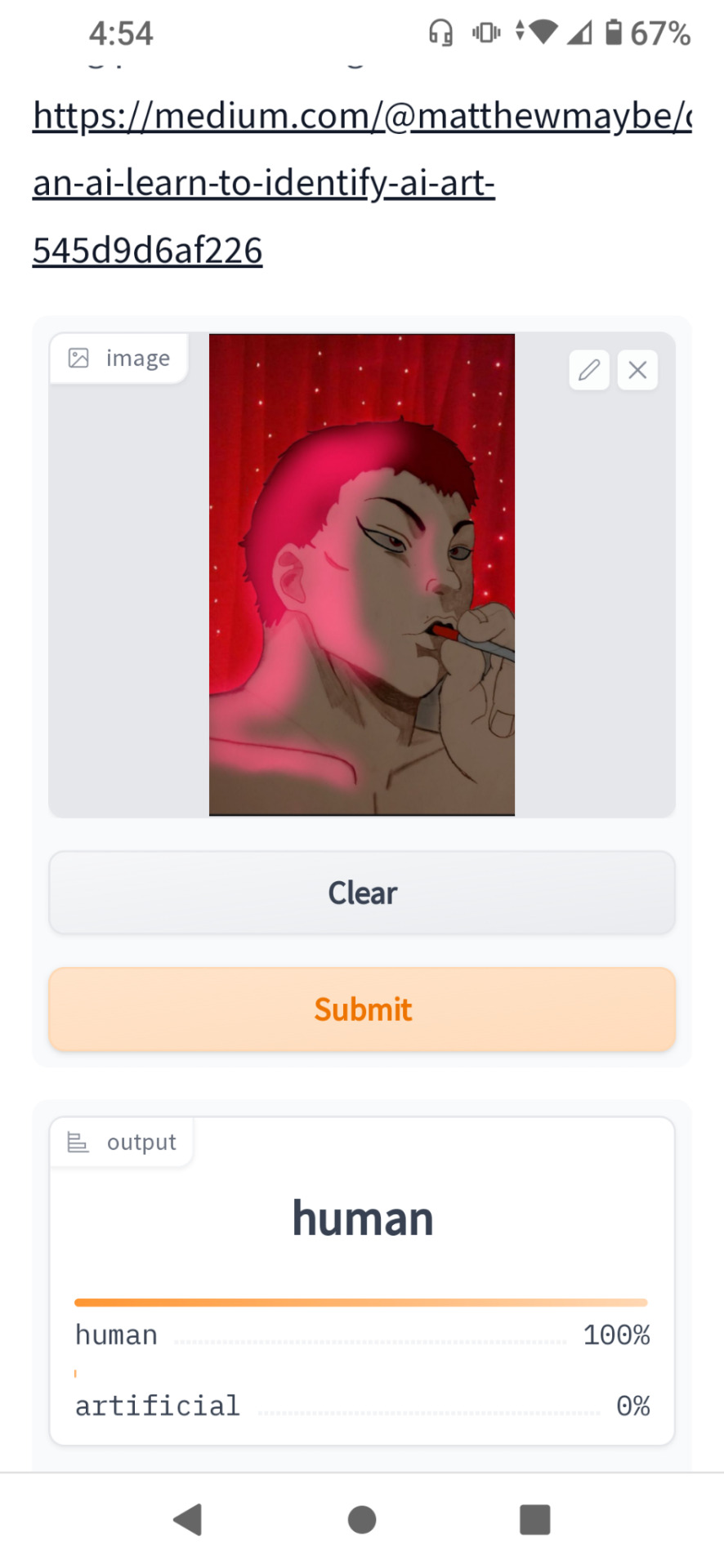

Well guys, so... I finally found an app/website that can detect whether a piece of artwork is AI or not. To test it, I screenshotted a piece by an AI artist (who specifically states in their description that they use AI) and put it through the website. This was the result:

And now I put some of my own artwork through the tester:

So far it looks like it works pretty well. I'm going to leave the link here in case anyone wants to use it. With the AI manhunts going on right now I figure this will help a lot of people.

Website here:

1 note


Text

Pass AI Content Detectors With Ethical AI Use Tips

College life is a balancing act—juggling lectures, readings, clubs, and maybe even a part-time job. AI writing tools can feel like a lifesaver until professors start using AI Content Detectors. Suddenly, that helpful chatbot feels risky. The key is to work smarter, not harder, while making sure your voice shines through and your integrity stays intact.

Why AI Can Help—and Hurt

When you’re staring at a blank screen, the night before a paper is due, AI can summarize sources in seconds, outline a tricky argument, and fix grammar. It’s like having a tutor available 24/7. But that same speed can backfire if your professor’s detector flags your work as machine-made. Then anxiety kicks in, and you might wonder if using AI is worth it at all.

Spotting the “Robot” Tone

Detectors look for stiff wording and overly predictable patterns. If your essay sounds too perfect, too logical, or flat, it raises red flags. A smarter move is to let AI do the busywork—brainstorming ideas, organizing points, fixing typos—then add your own insights, stories, and style.

How to Make AI Text Sound Like You

Start by expressing yourself. Read the AI draft out loud. If it doesn’t sound like you, change words, reorder ideas, or add personal thoughts.

Mix up sentence length—short, punchy lines with longer, thoughtful ones. Detectors notice when everything sounds too even.

Add your favorite phrases or analogies. Maybe you describe something complex as “layers of an onion” or call a key idea “the heartbeat” of your argument—keep those little quirks.

Don’t be afraid of small imperfections. People pause, use contractions, or throw in a quick aside (“honestly, I was surprised…”). These make your writing feel real.

Rewrite and expand. Don’t just swap words—rebuild sentences with your own rhythm and add examples, counterpoints, or class references.

For an extra polish, try an AI humanizer tool. Instead of generic text, it helps AI writing sound natural—more like a conversation with your study group than a computer program.

Know the Ethics Line

Using AI the right way means treating it as a helper, not a ghostwriter. It’s okay to have it brainstorm, outline, summarize, or draft something you’ll heavily revise. But copying that draft word-for-word without understanding or personalizing it crosses the line. Machine text, unedited, is still plagiarism if you pass it off as your own.

The Bottom Line

AI tools and detectors are getting smarter, but they can’t copy your experiences or thought process. Let AI do the groundwork, then rewrite in your own words, add your voice, and include real examples. You’ll avoid detector flags, learn more deeply, and turn in work that’s truly yours. And if you want a hand making stiff AI writing sound genuine, an AI humanizer can help your work sound just like you.

0 notes

Text

Cybersecurity in the Age of AI: Navigating New Threats

Understanding AI-Driven Cyber Threats and Defense Strategies

Introduction: A New Cybersecurity Landscape in the Age of AI

Artificial Intelligence (AI) has revolutionized industries worldwide by enhancing efficiency, accuracy, and innovation. From automating routine tasks to enabling predictive analytics, AI continues to unlock unprecedented opportunities. However, as AI becomes deeply embedded in our digital ecosystems, it also reshapes the cybersecurity landscape, bringing both powerful defenses and novel risks.

The rise of AI-driven cybersecurity tools is transforming how organizations detect, respond to, and prevent cyber threats. Machine learning algorithms can analyze massive datasets to identify unusual patterns, predict attacks, and automate defenses in real time. Yet the same AI advancements also equip cybercriminals with sophisticated capabilities, enabling automated phishing, intelligent malware, and adaptive intrusion techniques that are harder to detect and mitigate.

This dual-edged nature of AI demands a new approach to cyber threat intelligence, risk management, and security strategy. Organizations must stay vigilant and adopt innovative solutions to safeguard sensitive data and infrastructure against increasingly complex and automated cyberattacks.

For a deeper understanding of how AI is reshaping cybersecurity, check out NIST’s AI and Cybersecurity Framework.

How AI Is Changing Cybersecurity: Defense and Threat Evolution

Artificial Intelligence is revolutionizing cybersecurity by playing a dual role: empowering defenders while enabling more sophisticated cyberattacks. On the defense front, AI-powered cybersecurity systems use machine learning and data analytics to process enormous volumes of network traffic, user activity, and threat intelligence in real time. These systems excel at detecting anomalies and predicting potential threats far faster and more accurately than traditional signature-based methods.
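To make the contrast with signature-based methods concrete, here is a minimal Python sketch of statistical anomaly detection. It assumes the input is a series of per-minute traffic counts and uses a hypothetical z-score threshold of 3; real AI-driven systems learn from far richer features and models, so this shows only the core "learn a baseline, flag departures" idea.

```python
import statistics
from typing import List


def zscore_anomalies(baseline: List[float], observed: List[float], threshold: float = 3.0) -> List[int]:
    """Return indices of `observed` values more than `threshold` standard
    deviations from the mean of `baseline` (e.g. requests per minute).

    Nothing here describes a specific attack: the detector only models what
    "normal" looks like and flags departures from it.
    """
    mean = statistics.mean(baseline)
    stdev = statistics.pstdev(baseline)
    if stdev == 0:
        # Perfectly flat baseline: treat any deviation at all as anomalous.
        return [i for i, x in enumerate(observed) if x != mean]
    return [i for i, x in enumerate(observed) if abs(x - mean) / stdev > threshold]


normal_traffic = [100, 102, 98, 101, 99]
print(zscore_anomalies(normal_traffic, [100, 103, 250]))  # [2]
```

The spike at index 2 is flagged even though no rule ever described it, which is the property the paragraph above attributes to AI-based detection.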

For example, AI-driven tools can identify subtle patterns indicative of phishing attacks, ransomware activity, or unusual network intrusions, often flagging risks before they escalate into full-blown breaches. Automated incident response capabilities enable rapid containment, minimizing damage and reducing reliance on manual intervention.

However, cybercriminals are equally quick to adopt AI technologies to enhance their offensive tactics. By using AI-generated content, hackers craft convincing phishing emails and social engineering schemes that trick users more effectively. AI can also be used to bypass biometric systems, automate vulnerability scanning, and mimic legitimate user behaviors to avoid detection by conventional security measures. This escalating “arms race” between attackers and defenders underscores the critical need for adaptive cybersecurity strategies.

To explore the evolving interplay between AI and cyber threats, consider reviewing insights from the Cybersecurity & Infrastructure Security Agency (CISA).

Emerging AI-Powered Threats: Deepfakes, Adaptive Malware, and Automated Attacks

The cybersecurity landscape faces increasingly sophisticated challenges due to the rise of AI-powered threats. Among the most alarming is the use of deepfakes: hyper-realistic synthetic media generated by AI algorithms that can convincingly impersonate individuals. These deepfakes are weaponized for identity theft, social engineering schemes, or disinformation campaigns designed to manipulate public opinion or corporate decision-making. The growing prevalence of deepfakes adds a dangerous new dimension to phishing and fraud attempts.

In addition, AI-driven adaptive malware is evolving rapidly. Unlike traditional viruses, this malware can modify its code and behavior dynamically to evade signature-based antivirus software and intrusion detection systems. This makes infections more persistent and difficult to eradicate, posing a serious risk to personal, corporate, and government networks.
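Why code changes defeat signatures can be shown with the simplest possible signature check: an exact SHA-256 hash lookup. The sketch below is an assumption-laden simplification; the "payload" bytes are harmless placeholders, and real antivirus signatures use byte patterns and heuristics that are more tolerant of small changes than an exact hash. Even so, it shows the basic weakness that adaptive malware exploits.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Hypothetical signature database: digests of known-bad samples (placeholder bytes).
KNOWN_BAD = {sha256_hex(b"\x90\x90malicious-payload-v1")}


def signature_match(sample: bytes) -> bool:
    """Exact-hash signature check: flags only byte-for-byte copies of known samples."""
    return sha256_hex(sample) in KNOWN_BAD


original = b"\x90\x90malicious-payload-v1"
mutated = original + b"\x00"  # a trivially altered copy: one appended byte

print(signature_match(original))  # True
print(signature_match(mutated))   # False
```

A single appended byte yields a completely different digest, so the mutated copy slips past the check. Malware that rewrites itself on each infection forces defenders toward the behavior-based detection described elsewhere in this article.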

Furthermore, automated hacking tools powered by AI significantly accelerate cyberattacks. These intelligent systems can autonomously scan vast networks for vulnerabilities, execute targeted breaches, and learn from unsuccessful attempts to improve their strategies in real time. This capability enables hackers to conduct highly efficient, large-scale attacks that can quickly overwhelm human cybersecurity teams.

For more insights into the risks posed by AI-powered cyber threats and how to prepare, visit the National Institute of Standards and Technology (NIST).

Strengthening Cyber Defenses with AI: The Future of Cybersecurity

Despite the growing threat landscape driven by AI-powered attacks, artificial intelligence remains a crucial asset for cybersecurity defense. Cutting-edge security systems leverage AI technologies such as real-time threat intelligence, automated incident response, and predictive analytics to detect and neutralize cyber threats faster than ever before. By continuously analyzing vast amounts of data and learning from emerging attack patterns, AI enables organizations to anticipate and prevent breaches before they occur.

One of the most effective approaches is the integration of AI with human expertise, forming a hybrid defense model. In this setup, cybersecurity analysts harness AI-generated insights to make critical decisions, prioritize threats, and customize response strategies. This synergy balances the rapid detection capabilities of AI with the nuanced judgment of human operators, resulting in a more accurate and adaptive cybersecurity posture.
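One way to picture the hybrid model is a triage step that routes alerts by model confidence. The sketch below is hypothetical. The `Alert` type, the 0.95 auto-containment threshold, and the 0.5 review threshold are all illustrative assumptions, not values from any product. Only very confident alerts trigger automated action, the uncertain middle band goes to a human analyst, and the rest are logged.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Alert:
    host: str
    score: float  # model's estimated probability that the activity is malicious


def triage(alerts: List[Alert], auto_threshold: float = 0.95,
           review_threshold: float = 0.5) -> Tuple[List[Alert], List[Alert], List[Alert]]:
    """Split alerts into (auto_contain, analyst_review, log_only) by score."""
    auto, review, log_only = [], [], []
    for alert in alerts:
        if alert.score >= auto_threshold:
            auto.append(alert)       # AI acts alone only when very confident
        elif alert.score >= review_threshold:
            review.append(alert)     # uncertain cases go to a human
        else:
            log_only.append(alert)   # kept for later correlation
    # Show analysts the most suspicious alerts first.
    review.sort(key=lambda a: a.score, reverse=True)
    return auto, review, log_only


queue = [Alert("web-01", 0.99), Alert("db-02", 0.6), Alert("hr-laptop", 0.8), Alert("printer", 0.1)]
contain, review, logged = triage(queue)
print([a.host for a in review])  # ['hr-laptop', 'db-02']
```

Raising `auto_threshold` shifts work from automation to analysts, which is the lever for limiting the over-automation risks discussed in the ethics section.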

Organizations that adopt AI-driven security platforms can significantly reduce response times, improve threat detection accuracy, and enhance overall resilience against sophisticated attacks.

For organizations seeking to implement AI-based cybersecurity solutions, resources like the Cybersecurity and Infrastructure Security Agency (CISA) offer valuable guidance and best practices.

Ethical and Privacy Considerations in AI-Driven Cybersecurity

As organizations increasingly integrate artificial intelligence in cybersecurity, important ethical and privacy concerns arise. The process of collecting and analyzing vast datasets to identify cyber threats must be carefully balanced with safeguarding user privacy rights and sensitive information. Maintaining transparency in AI decision-making processes is crucial to build trust and accountability. Clear regulatory frameworks, such as the General Data Protection Regulation (GDPR), provide guidelines that help organizations use AI responsibly while respecting individual privacy.

Additionally, organizations face risks associated with over-automation in cybersecurity. Relying solely on AI systems without sufficient human oversight can result in missed threats, false positives, or biased decision-making. These errors may lead to security vulnerabilities or negatively impact the user experience. Therefore, a balanced approach combining AI’s speed and scale with human judgment is essential for ethical, effective cybersecurity management.

By prioritizing ethical AI use and privacy protection, businesses can foster safer digital environments while complying with legal standards and maintaining customer confidence.

Preparing for the Future of AI and Cybersecurity

As artificial intelligence continues to transform the cybersecurity landscape, organizations must proactively prepare for emerging challenges and opportunities. Investing in continuous learning and regular employee cybersecurity training ensures teams stay equipped to handle evolving AI-powered threats. Developing flexible security architectures that seamlessly integrate AI-driven tools enables faster threat detection and response, improving overall resilience.

Collaboration across industries, governments, and academic researchers is critical for creating shared cybersecurity standards, real-time threat intelligence sharing, and innovative defense strategies. Agencies such as the Cybersecurity and Infrastructure Security Agency (CISA) promote such partnerships and provide valuable resources.

For individuals, good cybersecurity hygiene is more important than ever as attackers leverage AI to launch more sophisticated attacks: use strong passwords, enable multi-factor authentication (MFA), and practice safe online behavior.

By combining organizational preparedness with individual vigilance, we can build a safer digital future in an AI-driven world.

Conclusion: Embracing AI to Navigate the New Cybersecurity Threat Landscape

Artificial Intelligence is fundamentally reshaping the cybersecurity landscape, introducing both unprecedented opportunities and significant risks. While cybercriminals increasingly use AI-driven techniques to execute sophisticated and automated attacks, cybersecurity professionals can harness AI-powered tools to create smarter, faster, and more adaptive defense systems.

The key to success lies in adopting AI thoughtfully: blending human expertise with intelligent automation and maintaining continuous vigilance against emerging threats. Organizations that invest in AI-based threat detection, real-time incident response, and ongoing employee training will be better positioned to mitigate risks and protect sensitive data.

By staying informed about evolving AI-driven cyber threats and implementing proactive cybersecurity measures, businesses and individuals alike can confidently navigate this dynamic digital frontier.

For further insights on how AI is transforming cybersecurity, explore resources from the National Institute of Standards and Technology (NIST).

FAQs

How is AI changing the cybersecurity landscape? AI is transforming cybersecurity by enabling faster threat detection, real-time response, and predictive analytics. Traditional systems rely on static rules, but AI adapts to evolving threats using machine learning. It can scan vast datasets to identify anomalies, spot patterns, and neutralize potential attacks before they spread. However, AI is also used by hackers to automate attacks, create smarter malware, and evade detection. This dual-use nature makes cybersecurity both more effective and more complex in the AI era, demanding constant innovation from defenders and responsible governance around AI deployment.

What are the biggest AI-powered cybersecurity threats today? AI can be weaponized to launch sophisticated cyberattacks like automated phishing, deepfake impersonations, and AI-driven malware that adapts in real time. Hackers use AI to scan networks for vulnerabilities faster than humans can react. They also employ natural language models to craft realistic phishing emails that bypass traditional filters. Deepfakes and synthetic identities can fool biometric security systems. These AI-enhanced threats evolve quickly and require equally intelligent defense systems. The speed, scale, and realism enabled by AI make it one of the most significant cybersecurity challenges of this decade.

How does AI improve threat detection and response? AI boosts cybersecurity by analyzing massive volumes of network traffic, user behavior, and system logs to detect anomalies and threats in real time. It identifies unusual patterns, such as logins from unfamiliar locations or sudden spikes in data transfer, and flags them before they escalate into breaches. AI can also automate responses: isolating infected devices, updating firewall rules, or sending alerts instantly. This proactive approach can sharply reduce reaction times and cut down on false positives. In large enterprises or cloud environments, where manual monitoring is nearly impossible, AI acts as a 24/7 digital watchdog.
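To make the idea of "flagging unusual patterns" concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular product works: it uses a simple z-score test against a user's historical hourly data transfer (a basic statistical baseline standing in for a trained machine-learning model), plus a check for logins from countries not seen before. The function names, the 3.0 threshold, and the sample figures are all hypothetical.

```python
from statistics import mean, stdev


def zscore_outlier(baseline, value, threshold=3.0):
    """True if value lies more than `threshold` standard deviations from the baseline mean.

    A simple statistical stand-in for a learned model of "normal" behavior.
    """
    if len(baseline) < 2:
        return False  # not enough history to judge
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return value != mu  # flat history: any change is unusual
    return abs(value - mu) / sigma > threshold


def flag_event(baseline_mb, known_countries, transfer_mb, login_country):
    """Return the reasons an event looks unusual (an empty list means no flags)."""
    reasons = []
    if login_country not in known_countries:
        reasons.append(f"login from new location: {login_country}")
    if zscore_outlier(baseline_mb, transfer_mb):
        reasons.append(f"data spike: {transfer_mb} MB")
    return reasons


# Hypothetical hourly data transfer (MB) for one user
baseline_mb = [120, 130, 125, 118, 135, 128, 122, 131]
print(flag_event(baseline_mb, {"US"}, 900, "US"))  # ['data spike: 900 MB']
```

Real AI-based systems learn far richer baselines across many signals at once, but the principle is the same: model normal behavior, then flag large deviations for review or automated response.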

Can AI prevent phishing and social engineering attacks? Yes, AI can help identify phishing attempts by scanning emails for suspicious language, links, or metadata. Natural language processing (NLP) models are trained to detect tone, urgency cues, or fake URLs often used in phishing. AI can also assess sender reputations and flag unusual communication patterns. While it can't fully prevent human error, it significantly reduces exposure by quarantining suspicious emails and alerting users to risks. As phishing tactics evolve, so does AI, constantly learning from past attacks to improve prevention accuracy.
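As a rough illustration of the kinds of cues mentioned above, here is a rule-based Python sketch that checks an email for urgency phrases and for links whose domain does not match the sender's. To be clear, this is a hand-written heuristic, not an NLP model; a trained classifier would learn such cues from labeled examples rather than rely on a fixed list. The cue list, the function name, and the example domains are hypothetical.

```python
import re
from urllib.parse import urlparse

# Hypothetical urgency phrases; a trained NLP model would learn cues like these from data.
URGENCY_CUES = ("urgent", "immediately", "verify your account", "password expires", "act now")
IPV4_PATTERN = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def phishing_signals(body, links, sender_domain):
    """Return human-readable warning signals for one email (an empty list means none found)."""
    signals = []
    lowered = body.lower()
    for cue in URGENCY_CUES:
        if cue in lowered:
            signals.append(f"urgency cue: {cue!r}")
    for link in links:
        host = (urlparse(link).hostname or "").lower()
        if IPV4_PATTERN.fullmatch(host):
            # Links to bare IP addresses are a classic phishing tell
            signals.append(f"raw IP address link: {link}")
        elif host != sender_domain and not host.endswith("." + sender_domain):
            # Link points somewhere other than the sender's domain or its subdomains
            signals.append(f"link domain differs from sender: {host}")
    return signals


print(phishing_signals("URGENT: verify your account today",
                       ["http://192.168.1.5/login"], "bank.example"))
```

A list of signals like this would typically feed a quarantine or warning decision rather than block mail outright, which fits the point that AI reduces exposure rather than eliminating human error.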

Are AI-based cybersecurity tools available for small businesses? Absolutely. Many affordable, AI-powered security tools are now available for small and mid-sized businesses. These include smart antivirus software, behavior-based threat detection, AI-driven email filters, and endpoint protection platforms that learn from each user's habits. Cloud-based solutions like Microsoft Defender, SentinelOne, and Sophos offer AI-powered features tailored for SMBs. They provide enterprise-grade security while reducing the need for a dedicated in-house security team. With cyberattacks increasingly targeting smaller firms, AI-based solutions are not just accessible; they're essential for staying protected with limited resources.

Can AI replace cybersecurity professionals? AI enhances cybersecurity but won’t replace human experts. While it automates routine tasks like threat detection, data analysis, and basic response, human oversight is still crucial for judgment, strategy, and interpreting complex risks. Cybersecurity professionals work alongside AI to investigate incidents, fine-tune models, and ensure compliance. In fact, AI allows professionals to focus on high-level security architecture, incident response, and governance rather than tedious monitoring. The future lies in a human-AI partnership where AI handles scale and speed, and humans manage context and ethical oversight.

What are some ethical concerns with using AI in cybersecurity? Ethical concerns include data privacy, surveillance overreach, and algorithmic bias. AI systems require vast amounts of data, which can lead to privacy violations if not managed properly. There’s also the risk of false positives that could unjustly flag innocent users or systems. If left unchecked, AI could reinforce existing biases in threat detection or lead to disproportionate responses. Moreover, governments and companies may use AI tools for excessive surveillance. Responsible AI in cybersecurity means transparency, data governance, user consent, and fairness in decision-making.

How do hackers use AI to their advantage? Hackers use AI to create more sophisticated and scalable attacks. For instance, AI-powered bots can probe systems for weaknesses, bypass CAPTCHAs, and execute brute-force attacks faster than humans. NLP models are used to generate realistic phishing emails, and deepfakes are used to impersonate voices. Machine learning helps malware adapt its behavior to avoid detection. These tools allow cybercriminals to attack with greater precision, volume, and deception, making AI both a powerful ally and a formidable threat on the cybersecurity battlefield.

What is AI-driven threat hunting? AI-driven threat hunting involves proactively seeking out hidden cyber threats using machine learning and behavioral analytics. Instead of waiting for alerts, AI scans systems and networks for subtle anomalies that indicate intrusion attempts, dormant malware, or lateral movement. It uses predictive modeling to anticipate attack paths and simulate threat scenarios. This proactive approach reduces the risk of long-term undetected breaches. By continuously learning from new threats, AI enables security teams to shift from reactive to predictive defense, identifying threats before they do damage.

How can organizations prepare for AI-powered cyber threats? Organizations should invest in AI-powered defenses, regularly update their threat models, and train employees on AI-enhanced risks like deepfakes and phishing. Cybersecurity teams need to adopt adaptive, layered security strategies that include AI-based detection, behavioral monitoring, and automated response. It's also crucial to perform AI-specific risk assessments and stay informed about new threat vectors. Partnering with vendors that use explainable AI (XAI) helps ensure transparency. Finally, fostering a cyber-aware culture across the organization is key, because even the smartest AI can't protect against careless human behavior.

#AI cybersecurity threats#artificial intelligence in security#AI-driven cyber attacks#cybersecurity in AI age#AI-powered threat detection#digital security and AI#AI-based malware protection#evolving cyber threats AI#AI cyber defense tools#future of cybersecurity AI

AI Content vs Human Content in 2025: Who Writes Better?

In 2025, content creation is changing fast. Thanks to advanced AI tools, it's now easier than ever to generate blogs, social media posts, emails, and even website content in seconds. But one big question remains: can AI really write better than humans?

AI Content: Fast and Affordable

Speed is AI's strongest advantage. Unlike human writers, AI tools can produce hundreds of words in seconds without breaks or rest. For small businesses on limited budgets, or for organizations that need to publish a large volume of written material (e.g., research), AI is a viable solution. AI also shortens the path to publication by saving time on drafting, rewriting, and editing. In short, AI enables organizations to produce simple, high-volume content quickly and affordably.

Human Content: Creative and Emotional

On the other hand, human writers bring creativity, emotion, and storytelling to the table. They can understand the audience, choose the right tone, and add personal touches that AI often misses. Human content is usually better when it comes to engaging blog posts, heartfelt stories, and persuasive writing. People still connect better with content that feels real and relatable.

SEO Performance: Who Ranks Higher?

Search engines can rank both AI-generated and human-written content. AI does well at basic keyword inclusion and organizing content for SEO. Nevertheless, human-written articles tend to rank better over time. Why? Because people engage with and share human-written content more than AI-generated content. Trust is also a critical factor, since search engines tend to reward content that keeps users engaged and interested.

Accuracy and Original Thinking

While AI can draw on facts from many sources, it does not always use current information or present it objectively, and its output may include outdated or incorrect content. Humans can fact-check, think critically, and offer new ideas based on their own experience. For these reasons, human-written content is more credible on expert topics and in opinion pieces.

The Best Choice? Use Both

The most effective way to work in 2025 is to combine AI with human skills. Let AI do the research, create an outline, and perhaps even write the first draft. Then have a human writer review, edit, and add personality to the piece. This blended approach makes the most efficient use of everyone's time while still producing quality writing. Many companies and organizations are now adopting this hybrid workflow to get the best of both.

Final Thoughts

So, in 2025, who writes better: AI or humans? The answer depends on your goal. If you need simple content produced quickly and efficiently, AI is an excellent tool. If you need deeper content that connects meaningfully with human readers, human writers are the better choice. For the best results, combine the two: let AI handle the "meat and potatoes" work while humans bring the "heart and soul." That is where content creation is heading.

#AI content vs human content#AI vs human content writing#Future of content writing#Google ranking AI content#AI content detection tools#Human vs AI blog writing#AI in content marketing

The Role of Generative AI in Cybersecurity: Enhancing Protection in a Threat-Filled Digital World

Generative AI in cybersecurity is transforming real-time threat detection, enhancing protection, and enabling smarter defense for global digital systems. Generative AI has emerged as a powerful force, redefining how companies detect, prevent, and respond to digital threats globally.

Understanding Generative AI's Impact on Cybersecurity

Generative AI in cybersecurity…

#ai#AI Security Tools#cyber-security#Cybersecurity#generative AI#security#technology#Threat Detection#USA Cybersecurity Trends

AI Speeds Up Cancer Diagnoses, Saving Lives One Hour at a Time

TL;DR: AI's Role in Faster Cancer Diagnosis

AI is enabling early detection of APL (acute promyelocytic leukemia), a highly aggressive but curable leukemia.

The Leukemia Smart Physician Aid can reduce diagnosis time from days to hours.

Peripheral blood smear technology, enhanced by AI, makes global deployment feasible.

Funding is critical to ensure this technology reaches clinics worldwide.

Shenderov advocates for…

#Adoption#AI#AI in healthcare#cancer diagnosis technology#cancer funding#decentralization#healthcare innovation#Johns Hopkins research#leukemia detection AI#medical AI tools#Web3