#detect AI-written content


Uncover the Truth with Desklib’s AI Content Detector

In the rapidly evolving digital landscape, ensuring the authenticity of written content is more important than ever. Whether you're a student, educator, content creator, or professional, being able to distinguish between human-written and AI-generated content has become a necessity. That's where Desklib’s advanced AI Content Detector comes into play.

Why Choose Desklib’s AI Content Detection Tool?

Desklib’s AI Content Detector is designed to help you verify originality and maintain credibility in any form of written material. Here’s why it stands out:

1. Accurate AI Content Detection

Our tool uses cutting-edge technology to identify AI-written content with precision. It can detect text generated by popular AI writing tools, ensuring that your work remains authentic and free from potential plagiarism.

2. Comprehensive Plagiarism Checker

Desklib’s AI-powered plagiarism checker goes beyond traditional methods. It not only checks for duplicate content across the web but also detects AI-generated text patterns, providing you with an in-depth originality report.

3. User-Friendly Interface

With an intuitive and easy-to-navigate interface, Desklib’s AI detection tool is accessible to everyone. Upload your document, and within seconds, get a detailed analysis of your content.

4. Trusted by Professionals

Whether you’re a teacher evaluating student assignments or a content creator ensuring the originality of your blog posts, Desklib’s tool is trusted by professionals worldwide to maintain the highest standards of authenticity.

5. AI Detection for All Content Types

From academic papers and essays to blogs, articles, and marketing copy, Desklib’s tool caters to a wide range of content formats. No matter your industry, we’ve got you covered.

Features That Set Us Apart

Real-Time Analysis: Get instant results with actionable insights.

Multi-Language Support: Detect AI content in multiple languages, expanding your global reach.

Secure and Confidential: Your data’s privacy is our priority. We never store or share your content.

Detailed Originality Reports: Receive comprehensive reports that highlight areas of concern, making it easier for you to make necessary edits.

How Does the AI Detection Tool Work?

Using Desklib’s AI Content Detector is simple:

Upload Your Document: Drag and drop your file or paste the text directly into our tool.

Run the Analysis: Click on the "Analyze" button to begin the detection process.

Review the Report: Within moments, view a detailed breakdown of AI-generated content, plagiarism matches, and originality scores.

Who Can Benefit from Desklib’s AI Content Detector?

Students and Educators: Ensure academic integrity by identifying AI-generated essays and assignments.

Writers and Editors: Maintain originality in blogs, articles, and other creative works.

Businesses and Marketers: Verify that your promotional content is unique and trustworthy.

Researchers: Ensure that academic papers meet originality standards for publication.

Enhance Your Content Authenticity Today

Don’t let AI-generated text compromise your credibility. Desklib’s AI Content Detector empowers you to identify and eliminate AI-written content, ensuring that your work stands out for its authenticity and originality.

Visit Desklib’s AI Content Detector now and experience the power of next-gen AI detection technology.

#AI content detection#detect AI-written content#AI plagiarism checker#AI-generated content checker#AI text detector

0 notes


We are focused 100% on creating stunning content with ChatGPT and then ensuring that it looks 100% human-written to the search engines.

#how to make ai content undetectable#how to make chatgpt content undetectable#how to make chatgpt text undetectable#how to make chatgpt look human written#how to bypass ai detection

0 notes


⠀

⌗⠀양정원⠀⠀CAT⠀DISTRIBUTION⠀SYSTEM⠀꒰⠀PT.1⠀꒱

⠀

⠀

SYNOPSIS⠀.⠀.⠀.⠀starting college in a new city, you’re settling into your apartment and trying to make it feel like home. on your first day, a fluffy calico cat appears on your neighbor's balcony, jumping toward yours as if to greet you and stealing your heart instantly. but when a voice calls out for the cat from the next balcony, panic sets in and you rush back inside, too shy to meet your new neighbor. that neighbor turns out to be yang jungwon, a fellow student at the same university who’s also new in town. thanks to his mischievous and adventurous cat, the two of you keep running into each other in the most unexpected ways. a friendship blossoms, slowly turning into something deeper, though jungwon keeps insisting it’s nothing more than friendship. as feelings grow stronger, the question remains: will your bond turn into something more, or remain just a college memory?

⠀

⠀

pairing⠀.⠀.⠀.⠀college student!yang jungwon x college student!f.reader.

featuring⠀.⠀.⠀.⠀all enhypen members (soon), le sserafim yunjin, kazuha, and chaewon (soon), aespa winter and karina (soon).

word count⠀.⠀.⠀.⠀2.241k

genre⠀.⠀.⠀.⠀sfw, fluff, angst if you squint, kinda slow burn, college life, university life, slice of life, comedy (although i don't find myself funny), friendships, relationships, and the cat distribution system (it has chosen you and given you two lovely cats).

warnings⠀.⠀.⠀.⠀drinking alcohol, parties, getting drunk (obviously), misunderstandings, jealousy, denial (jungwon is in denial), lots of flirting and tension, cat keeps breaking into your apartment, kissing, skinship, reader (aka us) is very delusional and does a lot of overthinking, a bit cringe (i think it's cringe bcs i wrote it), and might contain suggestive content in the later parts that are yet to be posted. lowercase letters intended. very proofread. tell me if i'm missing anything.

mæw's notes⠀.⠀.⠀.⠀hi guys, this will be my very first enhypen au / fanfic here on tumblr. i will be cutting this fanfic into multiple parts instead of posting it all at once because it already has a word count of 40k. i am still new to this, so i will surely make mistakes. please be patient with me, and i hope you guys enjoy my work. this story will be added to my masterlist. also, don't even try copy-pasting my work into an ai detection website, because i already tried it and it still said that parts of it were written by ai, even though i literally wrote it on my phone in front of my cousin. likes, reblogs, and comments are highly appreciated.

⠀

library⠀.⠀.⠀.⠀part two.

⠀

“are you completely certain you have everything, sweetheart?” your mother asked for what felt like the hundredth time, her voice tinged with both worry and affection. you couldn’t help but chuckle softly, rolling your eyes in fond exasperation as you wheeled two suitcases out through the front door.

behind you, she followed closely, reciting the list of college essentials she had helped you pack, while your father lingered not far behind.

“mom, for the tenth time, literally, you packed with me. you know i’ve got everything,” you replied, turning to face her. she frowned slightly, reluctantly folding her list and slipping it into her pocket.

she reached for your hands and clasped them tightly, as though letting go meant letting you go forever. “i’m sorry, sweetie. i just can’t help but worry. i’m going to miss you so much,” she said, her eyes already glistening with unshed tears.

you felt your heart ache as you pulled her into a hug, wrapping your arms around her as tightly as you could. “oh, mom...” you murmured, voice muffled in her hair. “i’m going to miss you, too. and dad. and everyone. but this isn’t goodbye forever, okay? it’s just college. four years, tops. i’ll be back before you even realize i’m gone,” you reassured her with a smile.

“is it my turn now?” came your dad’s voice from behind, cutting through the moment with the kind of comedic timing only he had. you turned to him, confused.

“yes, honey, go ahead,” your mother said with a small smile, eyes still misty.

he cleared his throat, stepped forward like he was preparing a speech, and asked, “are you absolutely certain the place you’re renting is fully furnished?”

you blinked, momentarily caught off guard by the practicality of his concern, but nodded. “yeah, dad. it is. i saw the pictures online, and the landlord sent us updated ones too. you showed them to me, remember?”

“it’s got the basics: a living room, kitchen, bathroom, bedroom, a little dining area, even a mini walk-in closet. and a balcony,” you added, lifting your eyebrows as if that would finally put his mind at ease. “some furniture’s getting delivered tomorrow, but other than that, i’m all set.”

still, you knew deep down they wouldn’t stop worrying, not really. it’s just what parents do.

so you took their hands, holding them like you were anchoring the three of you in that little moment.

“mom. dad. i know you're worried. i really do. and i get it. but i have to do this—for me. for my future. remember how we talked about this?” you said softly, giving their hands a small swing.

they sighed, looking down at the pavement as if it held some kind of comfort. your mom’s lips trembled as she said, “i just can’t believe my baby girl’s going to college. it feels like just yesterday you were painting rocks in daycare and telling us they were ‘magical artifacts.’”

you laughed as she started to cry again, and without missing a beat, your dad stepped forward, wrapping the both of you in a warm, protective hug. the three of you stood there for a few seconds in silence—breathing each other in like this was the last chance you’d get.

“i promise i’ll visit when i can,” you whispered, your voice thick with emotion. “and if anything happens, i’ll come running back home. always.”

your mom sniffled loudly, then pulled away just enough to cup your cheek. “nothing will happen to you. you hear me? you’re going to be fine. just... don’t stress too much. and don’t let yunjin drag you into too many parties. you know how she is.”

your dad nodded in agreement. “yeah. remember—college is about studying, not setting new records for the number of red cups you can balance.”

you burst into laughter, shaking your head. “you guys are unbelievable. i’m your daughter, remember? i’ve got at least some common sense.”

“barely,” your dad muttered, and you playfully elbowed him in the ribs.

amidst the bittersweet laughter, the sound of a car pulling up interrupted the moment.

“oh! that’s my uber,” you said, adjusting your backpack. “dad, can you help with my suitcases?”

“on it, bud,” he said, already hoisting both bags with that exaggerated dad-strength that never failed to impress you.

he waved to the driver, who rolled up to the curb. the trunk popped open, and your dad loaded everything in, then dusted off his hands and turned back. “is that everything?” he asked.

“yes, dad. i’m going to college, not new york fashion week,” you teased, earning amused chuckles from both of them.

they escorted you to the car, your mom opening the door for you. but as you settled in, she suddenly tapped gently on your window. you rolled it down.

“yes, mom?”

she leaned in. “sir,” she said, addressing the uber driver with a gravity that made you look at her in confusion, “if my daughter says she’s feeling dizzy or needs a break, please pull over.”

“also, you’re going to the right address, yes?” your dad added, stepping in like he was interrogating a suspect.

you let out a groan and sank into your seat, using your backpack as a shield to hide your face. “guys, seriously...”

“and don’t drive too fast or weave between cars,” your mom continued. “please drive safely. she’s very precious cargo.”

“okay mom! dad! i love you both! please let the poor man do his job,” you said quickly, waving goodbye before whispering to the driver, “you can go now. before they make me wear bubble wrap.”

the driver chuckled as the car pulled away. you leaned out the window, waving until your parents became small figures in the distance.

“i’ll call when i get there!” you shouted back before sinking into your seat, heart full and heavy all at once. you breathed in slowly, gaze drifting out the window.

you can do this. it’s not going to be that hard... right?

⠀

⠀

after what felt like an eternity of winding roads, shifting scenery, and the soft hum of tires against asphalt, you finally arrived. the car rolled to a gentle stop in front of the building that would now be your new home for the next four years of your life.

you turned your head toward the window, eyes tracing the unfamiliar landscape, taking it all in—wide sidewalks dappled in sunlight, joggers weaving between pedestrians, laughter spilling from a group of cyclists, someone playing fetch with a very enthusiastic golden retriever.

the air held a certain freedom you hadn't even realized you'd been craving until now. it smelled like possibility, like the beginning of something beautifully unknown.

“alright, ma’am. we’ve arrived. would you like help with your suitcases?” the driver’s voice interrupted gently, his tone patient, practiced.

you blinked yourself out of your daze, glancing at the man in the rearview mirror before answering, “yes, please. just to the entrance would be great. thank you.”

you stepped out of the car, greeted by the sight of the tall, clean-lined building. you took a breath—deep, grounding—then turned to help the driver with your bags. the two of you wheeled the suitcases together toward the entrance.

you then turned to him, pulling out a small amount of cash. “thank you so much. really. and here—this is a little extra for putting up with my parents earlier.”

he let out a warm laugh as he accepted the tip. “ah, it was nothing. i’ve got kids myself. i know how it feels to watch them grow up.”

you smiled, heart swelling. “well, if they’ve got a dad like you, i’m sure they’re growing up wonderfully.”

“that’s kind of you to say. stay safe, ma’am.”

“you too, please drive safely,” you said with a grateful nod, before turning your attention to the double glass doors ahead of you. “alright... let’s do this.”

you mumbled to yourself as you wrestled your bags inside. the first thing that greeted you was the hum of the lobby’s air conditioner and a wall of metallic lockers neatly lined up to your right.

“oh thank god, elevators,” you sighed, eyeing the silver doors to the side. but before you headed up, you pulled out your phone to reread your landlord’s message, squinting at the little instructions tucked inside a cheerful block of text.

⠀

landlord 🏘️: good day, miss y/n. here are a few instructions before entering your apartment. on the first floor, you’ll see multiple lockers designated for deliveries and mail. please locate locker no. 508. that will be your personal locker. inside, you’ll find the keys for all the doors in your apartment and all necessary passcodes, especially the passcode to your apartment. the passcode to unlock your locker is 0628. thank you again for choosing us. we hope you enjoy your stay, and please don’t hesitate to reach out if you have any questions.

⠀

with a determined nod, you pocketed your phone and made your way across the lobby to the lockers on the right: polished silver doors with numbers engraved in neat rows. you scanned quickly until your eyes landed on 508.

you keyed in the code with a quiet click, and the locker door swung open.

inside were all the essentials: a set of keys, neatly labeled passcodes on a printed sheet, a few manuals for the appliances, and a small envelope titled 'welcome to your new home'.

“keys, check. passcodes, check. instructions, check. emotionally prepared? debatable,” you muttered, collecting everything before shutting the locker.

you hauled your bags into the elevator and pressed the button for the fifth floor. the soft hum of the elevator was oddly comforting, a brief moment of stillness.

the doors opened with a quiet chime, revealing an empty, serene corridor lined with identical doors. you walked slowly, counting off the numbers until you reached 508 once again—this time, your door.

you typed in the passcode, heart thudding with an unfamiliar mix of nerves and excitement. a soft beep, a click, and the door opened.

your eyes widened.

“oh god. this is really happening,” you whispered, stepping inside.

the apartment was... perfect. minimal but welcoming, clean lines and cozy corners. the sunlight streamed in from the windows, dancing across the hardwood floors.

you grinned, walking deeper into your new space. “it’s even better in person! it really has everything i—wait... the balcony!” your voice shot up an octave, already halfway to the glass doors.

you threw your backpack aside and stepped out onto the balcony. the breeze kissed your skin as you exhaled slowly, taking in the view. you pulled out your phone and took a handful of photos—one of the scenery, one of the sky, two of your grinning face—ready to send them to your parents with a reassuring caption.

you were about to hit send when you heard a small sound, high and soft.

“meow.”

you froze.

you turned, and the sight before you made you gasp. sitting on the next apartment's balcony was the fluffiest calico cat you’d ever seen. “oh my gosh, hi, sweet angel– no, wait! don’t jump–” but it was too late. with the grace of a furry ninja, she leapt from her balcony to yours, landing with a perfect thud and zero regrets.

you blinked. “well. who am i to reject a royal visit?”

you knelt and gently stroked her fur before settling all the way down on the floor. “what’s your name?” you murmured. as if on cue, the cat shifted, revealing her collar. “yami? aww, what a lovely name.”

she nestled into your lap like you were long-lost friends. you let out a delighted gasp. “oh no. not the cuteness. you’re too powerful,” you whispered, gently running your fingers through her fur and trying not to explode from cuteness aggression. the last thing you wanted was to scare her away.

you had no idea how much time passed. minutes? hours? you didn’t care. it was just you and yami, and the world could wait.

until—

“yami?”

you flinched.

the voice was male. close. way too close. and getting closer.

you were startled, which in turn startled the cat, violating the sacred cat law: if a cat sits on your lap, you don’t move. ever. but you did, and now you felt like an unforgivable criminal.

“yami,” the voice called again, now just on the other side of the glass. “there you are. what are you doing? hanging out on our neighbor’s balcony again?”

you peeked ever so slightly through the curtain. the guy wore a hoodie, hood pulled over his head, and pajama pants, with a few strands of hair sticking out, tousled like he’d just woken up. he sounded young, too, so he was probably close to your age. he crouched down and scratched yami behind the ears, completely unaware of your presence.

“are you excited to meet our new neighbor?” he asked the cat, who meowed back in response, tail flicking happily.

he laughed to himself and disappeared back into his apartment.

you exhaled, not realizing you’d been holding your breath. ‘why did i even hide?’ you scolded yourself. ‘i didn’t do anything wrong.’

shaking the embarrassment away, you pulled your suitcases into your bedroom. it was bare except for a mattress, a blanket, and a few pillows. your furniture would arrive tomorrow.

you sighed and began to unpack, getting ready to shower and change into something more comfortable.

“this is going to be a long month,” you murmured to yourself, unaware that this quiet, chaotic beginning was only the start.

⠀

⠀

taglist⠀.⠀.⠀.⠀ @morganaawriterr @wondoras @mypolka @meowwwon @dolliehue @in-somnias-world @yjwonsgf @kirijuns @iifrui @momisanalien @vieniee @drunkjazed @hhyvsstuff @readinmidnight @noona-neomu-yeppeo @cutehoons02 @robotinvenus @starfallia (taglist is still open)

final note⠀.⠀.⠀.⠀i hope you guys enjoyed it! part two will be posted next saturday. thank you so much for reading.

⠀

⠀

©⠀mæwphoria⠀|⠀all works belong to me. strictly do not plagiarize, copy, translate, paraphrase, rewrite or repost my works on any other platforms. if it's inspiration gained from my work then it's appreciated and i wish you good luck with your own stories. thank you.

⠀

#⇄⠀⠀ ៹ ⠀⠀mæwphoria ⠀⠀ᵎᵎ⠀ ⠀◡̈#mæwphoria#maewphoria#mewphoria#enhypen#enhypen au writer#enhypen au#enhypen fanfic#enhypen fanfiction#enhypen x yn#enhypen x female reader#enhypen x f reader#enhypen x reader#enhypen angst#enhypen fluff#yang jungwon#enhypen jungwon#enhypen jungwon au#enhypen jungwon fanfic#enhypen jungwon fanfiction#enhypen jungwon x yn#enhypen jungwon x female reader#enhypen jungwon x f reader#enhypen jungwon x reader#enhypen jungwon fluff#enhypen jungwon angst#kpop#kpop x reader#kpop angst#kpop fluff

585 notes


Let's Talk About Ir Abelas, Da'ean

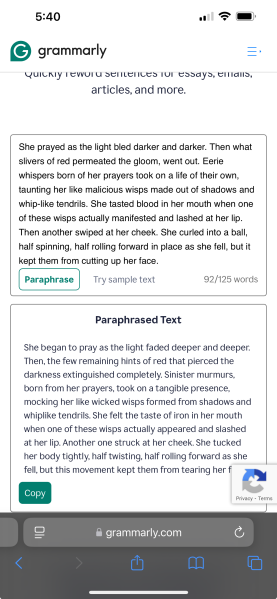

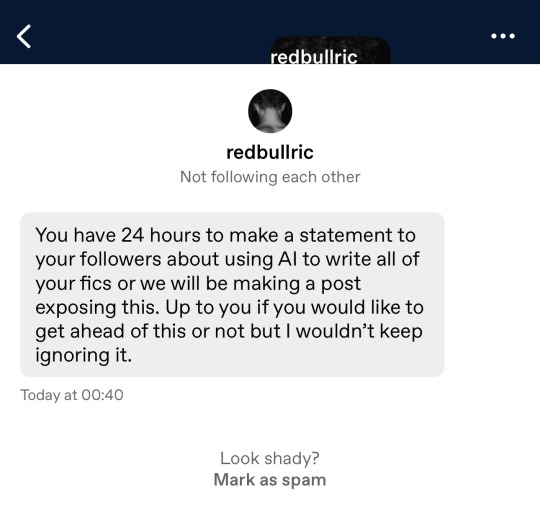

As some of you may know, I am vehemently against the dishonest use of AI in fandom and creative spaces. It has been brought to my attention by many, many people (and it is something I have thought about many times myself) that there is a super popular DreadRook fic that is confirmed to have been written at least partially with AI. I have the texts to prove it was written (at least) with the help of Grammarly's generative Rewrite feature.

Before I go any further, let it be known that I was friends with this author; they told me, and many other people they have shared their fic with, that they use rewrite features. However, at the time of posting, this is not tagged or mentioned on their fic on AO3 in any capacity. I did in fact reach out to the author before making this post. They made no attempt to agree to disclose the use of Rewrite AI on their fic, nor to be honest or upfront (in my opinion) about the possibility of their fic being entirely AI-generated. They denied the use of generative AI as a whole, though they did confirm (once again) their use of the rewrite feature on Grammarly.

All that said, I do not feel comfortable letting this lie. Many people have asked me to make this post, and it is simply for awareness.

You can form your own opinion, if you wish to. In fact, I encourage you to do so.

Aside from the (once again) high word output of around 352K words in less than three months, from November until now (the author says they had 10 chapters pre-written over "about a month" before they began posting; they are also on record saying they can write 5K-10K words daily), I have also said that if you are familiar with AI services or browse AI sites like ChatGPT, C.AI, J.AI, or similar, AI writing is very easy to pick out.

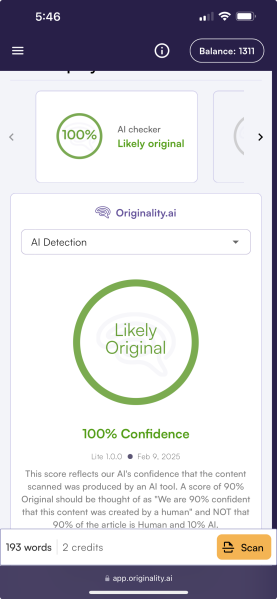

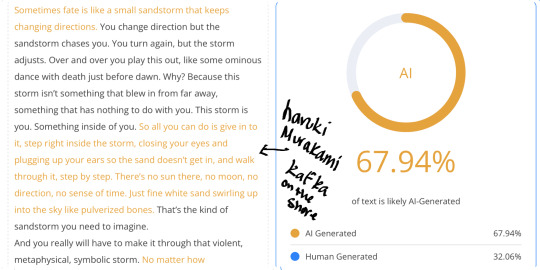

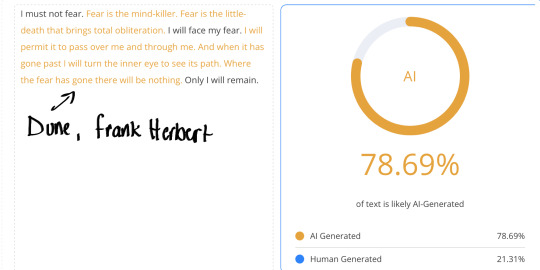

Based on some intense digging, research, and what I believe to be full confirmation via AI detection software used by professional publishers, there is a strong possibility that the fic is almost entirely AI-generated, apart from some excerpts and paragraphs here and there. I will post links below to the results from the highly resourced detection software into which a few paragraphs and an entire chapter from this fic were entered; you are more than welcome to do with this information what you please.

I implore you to use critical thinking skills and to understand that when this many pieces of a work come back with such a high percentage of detected AI, something is going on. (Plenty of other AI detection tools were used that also corroborate these findings; I only found it useful to attach the most reputable source.)

Excerpts:

82% Likely Written by AI, 4% Plagiarism Match

98% Likely Written by AI, 2% Plagiarism Match

100% Likely Written by AI, 4% Plagiarism Match

Some excerpts do in fact come back as 100% likely written by a human; however, this does not mean that the author was not using the Grammarly Paraphrase/Rewrite feature for these excerpts.

The Grammarly Paraphrase/Rewrite feature does not typically register as AI-generated text. Alongside the example below, many excerpts from other fics were taken, put through this feature, and then fed back into the AI detection software. Every single one came back looking like this, within 2% of these results:

So, in my opinion and that of many others, this goes beyond the use of the simple paraphrase/rewrite feature on Grammarly.

Entire Chapter (Most Recent):

67% Likely Written by AI

Also, just for some variety, here is another detection tool that also flagged plagiarism in the text:

15% Plagiarism Match

To make it clear that I am not simply 'jealous' of this author or 'angry' at their work for being a popular work in the fandom, here are some excerpts from other fanfics, in this fandom and in others, that were run through the exact same detection software, all coming back as 98-100% likely human-written. (If you would like to run my fic through this software or any others, you are more than welcome to. I do not want to run the risk of OP post manipulation, so I did not include my own.)

The Wolf's Mantle

100% Likely Human Written, 2% Plagiarism Match

A Memory Called Desire

99% Likely Human Written

Brand Loyalty

100% Likely Human Written

Heart of The Sun

98% Likely Human Written

Whether you choose to use AI in your own fandom works is entirely at your own discretion. However, it is important to be transparent about such usage.

AI has many negative impacts on creatives across many mediums, including writers, artists, and voice actors.

If you use AI, it should be tagged as such, so that people who do not want to engage in AI works can avoid engaging with it if they wish to.

ALL LINKS AND PICTURES COURTESY OF: @spiritroses

#ai critical#ai#fandom critical#dreadrook#solrook#rooklas#solas x rook#rook x solas#ir abelas da'ean#ao3#ancient arlathan au#grammarly#chatgpt#originality ai#solas#solas dragon age#rook#da veilguard#veilguard#dragon age veilguard#dragon age#dav#da#dragon age fanfiction#fanfiction#as a full disclaimer: I WILL BE WILLING TO TAKE DOWN THIS POST SO LONG AS THE FIC ENDS UP TAGGED PROPERLY AS AN AI WORK#i tried to do exactly as y'all asked last time#so if y'all have a problem w this one idk what to tell you atp#and see????? we do know how to call out our own fandom#durgeapologist

199 notes


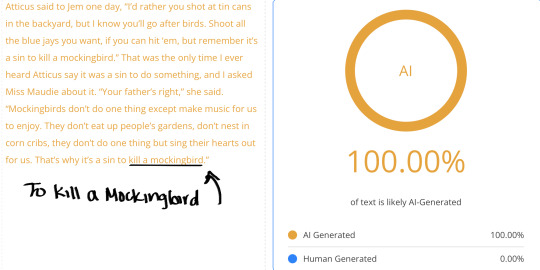

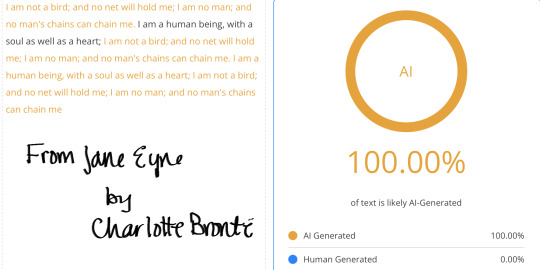

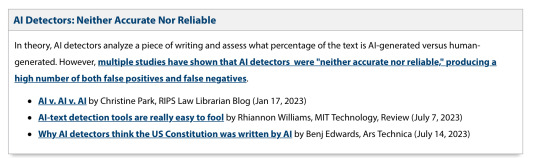

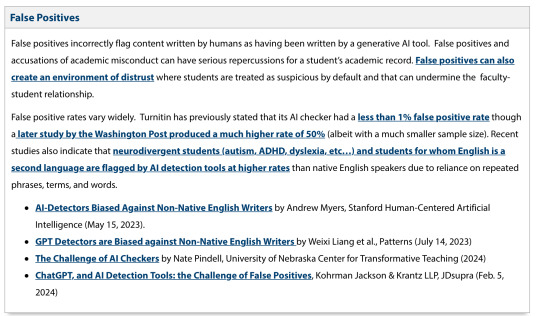

We Need to Talk About AI Detectors

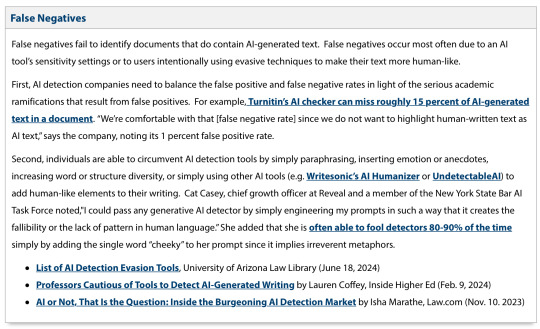

Over the past few weeks, two posts in particular have come out against two authors in the community, resulting in a near witch hunt against one and essentially driving the other off the internet with all the bullying and hate received. These posts were about the potential use of AI in fanfiction and used supposed “AI Detectors” to support their claims. With the help of friends, we have been able to look into the AI claims that were made against both The Silence and The Song and Ir Abelas, Da’ean.

We were curious about how and why these works were being flagged with high levels of “AI Probability” when the authors have been adamant (either in chats or in public) that they have never used generative AI for their work. So we did the most logical thing, put on our detective caps, and rolled up our sleeves. We would like to note that we do not wish to have philosophical discussions; we wish to have transparency and honesty.

Spoilers: We found inaccuracies almost IMMEDIATELY.

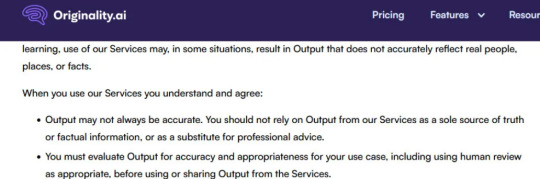

Firstly, we looked into the weaknesses of AI detectors and read through online posts where people voiced their frustrations with them. The common denominator we noticed was that well-written articles were being flagged as “Likely AI”, particularly by Originality, and that the solution was either to “dumb them down” or to remove punctuation such as commas, which immediately improved the score, tipping the scale to “Likely Original”.

For the second step, we ran some of our own works through the Originality AI checker: works that were written before generative AI existed. They initially came back as 50% likely AI; however, after punctuation was removed, the result magically changed to 100% original work. Again, these works were from before the dawn of generative AI and therefore could not have been created by AI. For fun, we even ran the first chapter of Harry Potter through it, a novel that was objectively written without AI, and it still did not come back as 100% original work. We then removed almost all of the punctuation from it, and the originality score actually improved by 3 percentage points (from 95% to 98%).

Personal fic, before and after:

Next, we ran our own scans through Originality and Quillbot. These included full chapters of Ir Abelas, Da’ean, both with and without punctuation, in Originality, and excerpts in Quillbot. Interestingly, the excerpts in Quillbot pinged as “0% likely AI”, without any removal of punctuation. Across the board, removing punctuation from the chapters caused an immediate and dramatic change in the score, from “100% likely AI” to “96% likely Original”. We found that the more grammatically correct a work was, the more likely it was to be flagged as “AI”, much as the freelance writers had complained.
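The “without punctuation” versions described above amount to a simple text transformation. Below is a minimal Python sketch of that step, assuming plain-text input. The helper name `strip_punctuation` and the exact characters it removes (commas, double hyphens, and the em dash that word processors often turn double hyphens into) are assumptions for illustration, not the posters' actual procedure, and the sketch does not call any detector.

```python
import re


def strip_punctuation(text: str) -> str:
    """Hypothetical helper: remove commas and double hyphens from a passage.

    Double hyphens and the em dash (U+2014) are replaced with a space so the
    words on either side stay separate; commas are simply deleted.
    """
    text = text.replace("--", " ").replace("\u2014", " ")
    text = text.replace(",", "")
    # Collapse runs of spaces left behind by the replacements above.
    return re.sub(r" {2,}", " ", text).strip()


if __name__ == "__main__":
    sample = "She paused, listening--then, slowly, she opened the door."
    print(strip_punctuation(sample))
```

The stripped text contains exactly the same words as the original, so a large score change between the two versions reflects the detector's sensitivity to punctuation, which is the point the post is making.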

Chapter 1 of Ir Abelas, before and after:

Chapter 1 through Quillbot:

Chapter 45 of Ir Abelas, before and after:

Chapter 45 without commas or double hyphens:

Chapter 45 through Quillbot:

Even the AI detection websites caution against this:

To Durgeapologist, Fangbanger3000, and friends: If you do actually read this, I hope you realize that your posts have done more harm than good to the community. You are correct that AI is a potential threat to creative spaces, but you have gone about addressing it in the worst possible way. By creating multiple posts across platforms intended to create a negative perspective toward certain authors and their fics, rather than toward the use of AI as a whole (not to mention the counter-accusations and personal attacks instead of focusing on the issue at hand), you are fostering an environment of negativity, bullying, and division, none of which leads to a sustainable and healthy community.

AI detection is the Wild West right now. Algorithms alone cannot determine whether a piece of writing is AI-generated; reaching any real level of certainty requires human judgment and careful comparison with the author's previous works. Our hope is that in the future, you will take time, step back, and consider all possible sides before causing a stir in the community like this.

#dragon age#solavellan#solrook#dreadrook#dragon age the veilguard#ai#ai critical#dragon age fanfiction#originality ai detector#quillbot#solas#lavellan#dragon age rook#organized bullying is not okay#be kind to one another

175 notes


I know I’m screaming into the void here, but do not witch hunt people with AI accusations

As someone whose job for the last two years has involved reading and rereading essays and creative fiction written by my students (a group of writers notorious for using AI despite being told not to, because they worry about their grades more than their skills), let me tell you straight up that detecting AI in any written work isn’t straightforward.

AI detection software is bullshit. Even Turnitin, which is supposedly the best, has an error rate that is slowly increasing over time. These tools are not reliable. The free ones online are even worse, trust me.

“Oh but it’s so obvious!” Sure. If you’re trained to notice patterns and predictive repetitions in the language, sure. I can spot a ChatGPT student essay from a mile away. But only if they haven’t edited it themselves, or used a bunch of methods (Grammarly, other AIs, their friends, “humanizer” software, etc.) to obscure the ChatGPT patterns. And it’s easier with formulaic essays; with creative fiction it’s much harder.

Why?

Well, because good creative fiction is a) difficult to write well and b) extremely subjective. ChatGPT does have notable patterns for creative writing, but it’s been trained on writing that is immensely popular, writing that was produced by humans. Purple prose, odd descriptions, sixteen paragraphs of setting where one or two would be fine: all of those are stylistic choices that people have intentionally made in their writing, and ChatGPT is capable of predicting and producing them.

What I’m saying is, people just write like that normally. There are stylistic things I do in my writing that other people swear up and down are AI indicators. But it’s just me writing words from my head.

So can we, should we, start witch hunts over AI use in fanfic when we notice these patterns? My answer is no because that’s dangerous.

Listen. I hate AI. I hate the idea of someone stealing my work and feeding it into a machine that will then “improve itself” based on work I put my heart and soul into. If I notice what I think is AI in a work I’ve casually encountered online, I make a face and I stop reading. It’s as simple as that. I don’t drag their name out into public to start a tomato-throwing session, because I don’t know their story (hell, they might even be a bot) and because one accusation can suddenly become a deluge.

Or a witch hunt, if you will

Because accusing one person of AI and starting a whole ass witch hunt is just begging people to start badly analyzing the content they’re reading out of fear that they’ve been duped. People don’t want to feel the sting or embarrassment of having been tricked. So they’ll start reading more closely. Too closely. They’ll start finding evidence that isn’t really evidence. “This phrase has been used three times in the last ten paragraphs. It must be AI.”

Or, it could be that I just don’t have enough words in my brain that day and didn’t notice the repetition when I was editing.

There’s a term you may be familiar with called a “false positive.” In science or medicine, it’s when something seems to meet the conditions you’re looking for but in reality doesn’t. Like when you test positive for the flu when you don’t actually have the flu. Or, in this case, when you notice someone writing sentences that look suspiciously like ChatGPT-constructed sentences and go “oh, yes, that must mean it’s ChatGPT then.”

(This type of argumentation/conclusion also just uses a whole series of logical fallacies I won’t get into here except to say that if you want to have a civil conversation about AI use in fandom you cannot devolve into hasty generalizations based on bits and parts)
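To put rough numbers on the false-positive problem, here is a minimal Python sketch of base-rate arithmetic. Every rate in it is a hypothetical assumption chosen for illustration, not a measured figure for Turnitin or any other detector: suppose 5% of the works you check actually used AI, the detector catches 90% of those, and it wrongly flags 5% of human-written work. The function name `share_of_flags_that_are_human` is made up for this example.

```python
def share_of_flags_that_are_human(prevalence: float, sensitivity: float,
                                  false_positive_rate: float) -> float:
    """Return the fraction of flagged texts that were actually human-written.

    prevalence          -- fraction of all checked texts that really are AI-generated
    sensitivity         -- fraction of AI-generated texts the detector flags
    false_positive_rate -- fraction of human-written texts the detector flags anyway
    """
    true_flags = prevalence * sensitivity                  # AI texts correctly flagged
    false_flags = (1 - prevalence) * false_positive_rate   # human texts wrongly flagged
    total_flags = true_flags + false_flags
    if total_flags == 0:
        raise ValueError("with these rates the detector never flags anything")
    return false_flags / total_flags


# Hypothetical rates, for illustration only.
share = share_of_flags_that_are_human(prevalence=0.05, sensitivity=0.90,
                                      false_positive_rate=0.05)
print(f"{share:.0%} of flagged works were written by humans")  # prints: 51% of flagged works were written by humans
```

Under those made-up numbers, about half of the flagged works would be human-written. For any fixed detector, the rarer real AI use is among the works being checked, the larger the fraction of flags that land on innocent writers.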

I’m not saying this to protect the people using AI. In an ideal world, people would stop using it and return to the hard work of making art and literature and so on. But we don’t live in that world right now, and AI is prevalent everywhere. Which means we have to be careful with our accusations and any “evidence” we think we see.

And if we do find AI in fandom spaces, we must be careful with how we handle or approach that, otherwise we will start accusing writers who have never touched AI a day in their life of having used it. We will create a culture of fear around writing and creating that stops creatives from making anything at all. People will become too scared to share their work out of fear they’ll be accused of AI and run off.

I don’t have solutions except to say that in my experience, outright accusing people of AI tends to create an environment of mistrust that isn’t productive for creatives or fans/readers. If you start looking for AI evidence everywhere, you will find it everywhere. Next thing you know, you’re miserable because you feel like you can’t read or enjoy anything.

If you notice what you think is AI in a work, clock it, maybe start a discussion about it, but keep that conversation open to multiple answers or outcomes. You’re not going to stop people from using AI by pointing fingers at them. But if you keep the conversation open and friendly, you might be able to inspire them to try writing or creating for themselves, without the help of AI.


Note

LMAO alright so fucking get this bullshit. I ran a fanfic written by AI through a detector: 0% detected. I ran one I spent like two weeks writing: 26-45% detected. Let me off this cocky ball fart globe.

I have to start this with a PSA

Do NOT feed ANYTHING into an "AI Detector"

Never. Ever. EVER. Don't do it.

AI Detectors at BEST are useless; at WORST you are feeding your own work into an AI collection tool/scraper.

A robot cannot detect a robot's work because neither robot is actually intelligent. Neither robot can make a determination. Neither robot can come to a conclusion based on context because there is not yet any artificial anything that can think.

YOU have to learn on your own how to spot AI content that isn't labeled. I'm sorry, because it sucks, and you're probably going to second-guess yourself a lot, and you're just going to have to do your best and keep it to yourself, because even if you are 100,000% CERTAIN that you are correct, opening up some kind of AI witch hunt is absolutely NOT useful.

/deep breath/

Okay, harried lecture over.

Don't use AI detectors, they're functionally worthless and you're running the risk of consensually handing over your work to AI. I promise you any AI Detector site has some ToS policy about how you're consenting to letting them use what you feed into it.

So do not feed anything into it.

Ever.

EVER.

I do not care if you think [God] brought the most divine and pure of AI Detector sites into creation via infallible will.

DO NOT USE THEM.

#quin answers#anon asks#ai detector#ai detection#these sites are scams and bullshit#they're almost worse than ai#because at least with ai you know you're being screwed


Text

Hello, everyone!

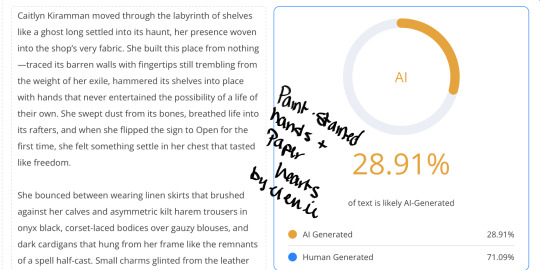

First off, I’m sorry for even having to post this, and I’m usually nice to everyone I come into contact with, but I received a startling comment on my newest fic, Paint-Stained Hands and Paper Hearts, where I was accused of pumping out the entire chapter solely using AI.

I am thirty-two years old and have been attending university since I was 18 YEARS OLD. I am currently working on obtaining my PhD in English Literature as well as a Master’s in Creative Writing. So, there’s that.

There is an increasing trend of online witch hunts targeting writers on all platforms (FanFiction.net, AO3, Wattpad, etc.), where people accuse them of using AI tools like ChatGPT and others based solely on their writing style or prose. These accusations often come without concrete evidence and rely on AI detection tools, which are known to be HELLA unreliable. This has led to false accusations against authors who have developed a particular writing style that AI models may emulate because of the vast fucking amount of human-written literature that has literally been dumped into them. Some of the accused are friends of mine, a few of them well-known in the AO3 writing community, and this morning I received my first such comment, and I’m pissed.

AI detection tools work by analyzing text for patterns, probabilities, and structures that resemble AI-generated outputs. HOWEVER, because AI models like ChatGPT are trained on extensive datasets that include CENTURIES of literature, modern writing guides, and user-generated content, they inevitably produce text that can mimic various styles, both contemporary and historical. Followin’ me?

To dumb this down a bit, it means that AI detection tools are often UNABLE TO DISTINGUISH between human and AI writing with absolute certainty.

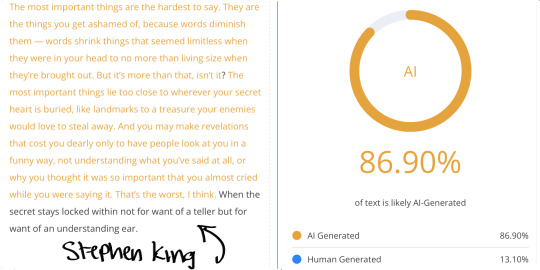

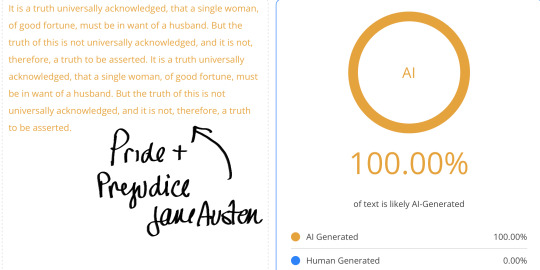

Furthermore, tests have shown that classic literary works, like those written by Mary Shelley, Jane Austen, William Shakespeare, and Charles Dickens, are frequently flagged by AI detectors as 100% AI-generated or plagiarized. For example:

Mary Shelley’s Frankenstein has been flagged as AI-generated because its formal, structured prose aligns with common AI patterns.

Jane Austen’s novels, particularly Pride and Prejudice, often receive high AI probability scores due to their precise grammar, rhythmic sentence structures, and commonly used words in large language models.

Shakespeare’s works sometimes trigger AI detectors given that his poetic and structured style aligns with common AI-generated poetic forms.

Gabriel García Márquez’s Love in the Time of Cholera and One Hundred Years of Solitude have been flagged as 100% AI-generated due to their flowing sentences, rich descriptions, and poetic prose, which AI models often mimic when generating literary or philosophical text.

Fritz Leiber’s Fafhrd and the Gray Mouser stories, with their sharp, rhythmic prose, imaginative world building, literary elegance, and dialogue-driven narratives, often score 100% on AI detectors.

The Gettysburg fucking Address by Abraham Lincoln has ALSO been misclassified as AI, demonstrating how formal, structured language confuses these detectors.

These false positives reveal a critical flaw in AI detection: because AI has been trained on so much human writing, it is nearly impossible for these tools to completely separate original human work from AI-generated text. This becomes more problematic when accusations are directed at contemporary authors simply because their writing ‘feels’ like AI despite being fully human.

The rise in these accusations poses a significant threat to both emerging and established writers. Many writers have unique styles that might align with AI-generated patterns, especially if they follow conventional grammar, use structured prose, or have an academic or polished writing approach. Additionally, certain genres and forms, such as sci-fi, fantasy, or philosophical essays, often produce high AI probability scores due to their abstract and complex language.

For many writers, their work is a reflection of years—often decades—of dedication, practice, and personal growth. To have their efforts invalidated or questioned simply because their writing is mistaken for AI-generated text is fucking disgusting.

This kind of shit makes people afraid of writing, especially those who are just starting their careers or navigating the early stages of publication. The fear of being accused of plagiarism, or of relying on AI for their creativity, is anxiety-inducing and can tank someone’s self-esteem. It can even stop some from writing altogether, as the pressure to prove their authenticity becomes overwhelming.

For writers who have poured their hearts into their work, the idea that their prose could be mistaken for something that came from a machine is fucking frustrating. They start second-guessing their own style, wondering if they need to change how they write or dumb it down to avoid being falsely flagged. That fear of being seen as inauthentic can stifle the creative process, leaving them hesitant to share their work or even finish projects they've started. This makes ME want to stop, and I’m just trying to live my life and write about things I enjoy. So, fuck you very much for that.

Writing is often a deeply personal endeavor, and for many, it's a way to express thoughts, emotions, and experiences that are difficult to put into words. When those expressions are wrongly branded as artificial, it undermines not just the quality of their work but the value of their creative expression.

Consider a writer’s habits, drafts, and personal writing history before jumping to immediate, unfounded accusations and deciding to piss in someone’s coffee.

So, whatever. Read my fics, don’t read my fics. I just write for FUN, and to SHARE with all of you.

Sorry that my writing is too clinical for you, ig.

I put different literary works as well as my own into an AI Detector. Here you go.

#arcane#ao3 fanfic#arcane fanfic#ao3#ao3 writer#writers on tumblr#writing#wattpad#fanfiction#arcane fanfiction


Text

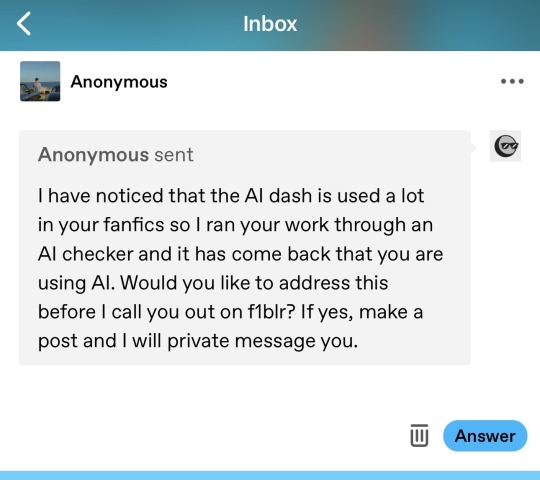

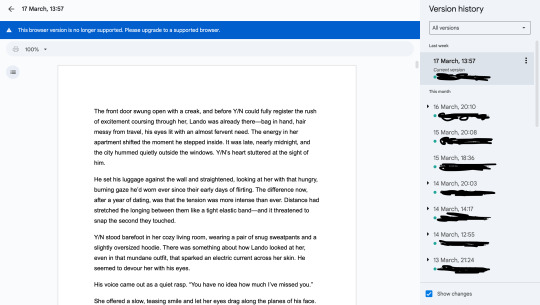

Reposting this for the anon who is clearly too obsessed and doesn't have a life outside of Tumblr. I've also added a new statement.

I deleted this post because I was under the impression the anon had already seen it, since they love to stalk my blog in depth. Luckily, I had written it on Google Docs, like I do with everything I post here, before posting it the first time. And now I’m posting it again because apparently they didn’t get the memo and love to create fake accounts:

For the anon who’s too cowardly to use their real account and clearly doesn’t have a life:

I was going to ignore the first ask, but then you had the time, energy, and weird obsession to create a fake account just to send me another ask, and then a private message. So let me be clear:

This is the first and last time I address this. Any further messages or asks about this will be deleted and blocked immediately. Tumblr is my safe space—stress and drama free—and I will block anyone who disturbs that for me. You really came onto my blog and did what—threatened me? You ran my writing through an unreliable AI checker and then had the audacity to message me about it? Do you really feel like it's your place to question how people write fanfiction? Why do you feel so entitled to an explanation from someone you don’t even know? To quote you: “DM me and explain why” — WHO are you? And where is this entitlement coming from?

Let me ask you this: Do you not have a life outside of Tumblr? Who takes time out of their day to check if what a stranger posted is “AI” or not? I saw another account getting the same kind of asks recently—was that you too? Are you going blog to blog checking F1 fics like a fanfic detective? If so: get a life, get a job, get a hobby, or better yet—touch grass.

And the audacity to make a fake account just to send another message? Coward behavior. I’ve blocked the first anon ask and now your little fake blog too. I’ll keep blocking every single one if you continue harassing me.

Don’t like what I post? Scroll past it. Block me. Ignore me. I truly do not care. I use Google Docs for all my fics: outline ideas, drafts, request order. Since that seems hard to believe, here’s one example straight from my docs.

And since you clearly have free time, here are actual credible sources that prove AI checkers are not reliable and should never be used as evidence of anything:

Source

Source

Source

Source

This is especially relevant to me personally, because English is not my native language. I've studied it for over 15 years, I'm currently studying English at university, and I don't live in an English-speaking country. I didn't grow up in an English-speaking country, and I've worked hard to develop my vocabulary, grammar, and writing style. So if my writing sounds "too repetitive" or "too perfect to be written by a human" and gets flagged by some AI detector, that's not proof I used AI. It means I've worked hard to get to this level, even though my English might not always be perfect.

Source

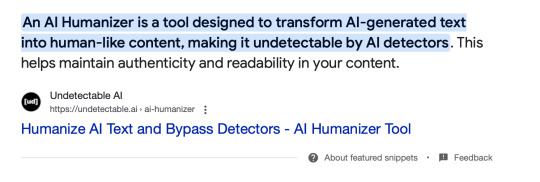

AI that claims to create undetectable AI content or "human AI"

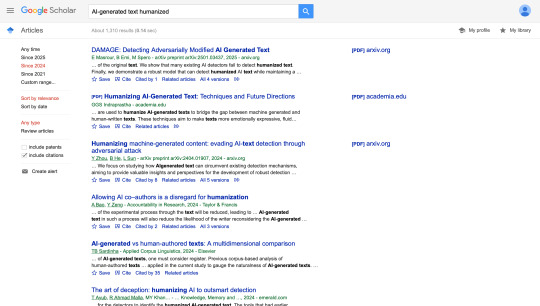

Or maybe you want to read more on Google Scholar:

There are so many sources to inform yourself—you just need to know how to use them.

And this is what really gets me: someone could use AI, lightly edit the output, or run it through one of those "humanize AI" generators and pass every detector with flying colors. Meanwhile, people like me get flagged and questioned for no reason.

Also, if I were actually using AI, I would've used one of those humanizing tools too, so people like you wouldn't harass me over what I post.

These days, it seems you don't even need facts—just a fake account and a superiority complex.

That's all I had to say. Goodbye, and good luck finding a personality.

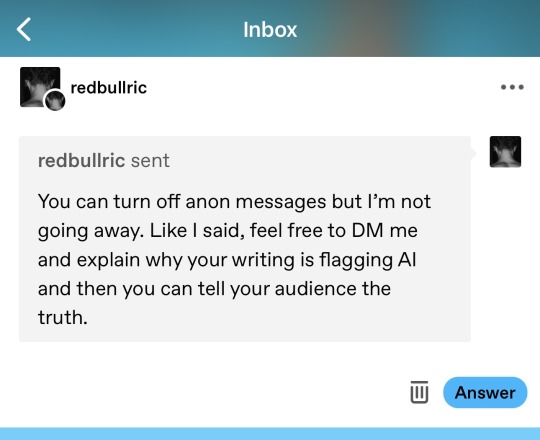

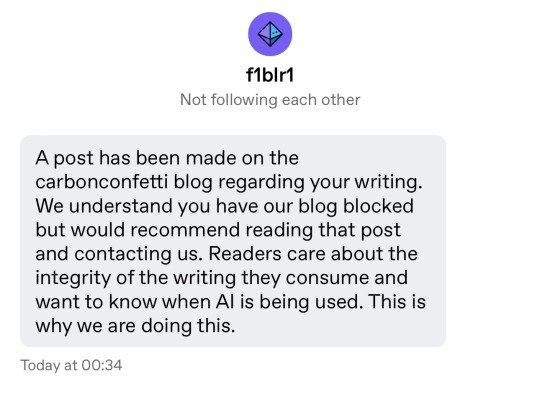

April 7

A few days after I posted the above post, you went on someone’s blog — someone who had sent me an ask without using the anon option — and sent them an ask about me, as if I had committed a crime. Less than 24 hours ago, you created yet another fake account just to message me (as seen below) and tell me about one of your other accounts (also fake), despite my explicit statement that I would no longer entertain this obsessive behavior.

Let me be extremely clear: I do not owe strangers on the internet an explanation for my writing process — especially not those who appoint themselves as investigators and issue condescending ultimatums. I will not “contact you privately.” I will not “own up” to a false narrative you've built around flawed tools and obsessive pattern-tracking. You do not get to demand private confessions like you're running a tribunal.

I already said everything I had to say when I made that original post, but clearly it didn’t register, and you continue to target me. I looked at the account you mentioned in your message. To quote: “Some members of the group of us working on this project have gone through PhD programs or work in education and understand the inaccuracies and limitations of AI detection tools.”

So you're adults — or so you claim — with PhDs, yet you seem to be unemployed based on the amount of free time you have to analyze what strangers are posting on the internet. Especially posts that are over 2k words long.

Seriously, who has time to do this much? Because I highly doubt someone with an actual job and a life has this much time on their hands.

And as I said in my first post: block me if you don’t like my blog or what I post. It is really that simple.

LEAVE. ME. ALONE.


Text

Stay Ahead with Desklib’s Free AI Detection Tool – Your Secret Weapon for Authentic Writing

In a world where artificial intelligence is becoming increasingly embedded in our daily writing tasks, distinguishing between human and machine-generated content has become a challenge. Whether you're a student, academician, or content creator, ensuring your work is original and free from AI influence is essential.

That’s where Desklib steps in with its powerful AI content detection tool—designed to help users confidently detect AI-written content and maintain integrity across all forms of written communication.

Why You Need an AI-Generated Content Checker

With platforms like ChatGPT, Jasper, and other AI writers gaining popularity, it's easy for AI-generated content to slip through unnoticed. But when it comes to academics, publishing, or professional writing, passing off AI-written material as your own can have serious consequences, from plagiarism accusations to reputational damage.

This is where Desklib’s AI text detector becomes invaluable. It acts as both an AI plagiarism checker and an originality checker, offering peace of mind that your work is truly yours—or helping you verify the authenticity of someone else’s.

How Desklib’s AI Detection Tool Works

Using Desklib’s AI detection tool is simple and fast:

Upload your document – whether it's an essay, research paper, blog post, or report.

Let the system analyze your text using advanced algorithms.

Receive a clear, downloadable report showing the percentage of AI-generated content in your file.

What sets Desklib apart is its chunking method: it breaks down text into overlapping segments to better understand context and generate more accurate results. This ensures even the most subtle signs of AI involvement don’t go unnoticed.
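The post doesn't say how Desklib actually segments text, so the following is only a minimal Python sketch of the general idea of overlapping chunks, not Desklib's implementation. The function name `overlapping_chunks` and the window and overlap sizes (counted in words) are hypothetical choices for illustration.

```python
def overlapping_chunks(text: str, window: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of `window` words, each sharing `overlap` words
    with the previous chunk. Hypothetical sizes, for illustration only."""
    if window <= 0 or not 0 <= overlap < window:
        raise ValueError("need window > 0 and 0 <= overlap < window")
    words = text.split()
    if not words:
        return []
    step = window - overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + window]))
        if start + window >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in overlapping_chunks(sample, window=4, overlap=2):
    print(chunk)
```

A detector using this approach would presumably score each chunk separately and then combine the scores. The overlap means that any run of words no longer than the overlap, such as a short sentence straddling a boundary, appears whole in at least one chunk.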

Features That Make Desklib Stand Out

Free to Use: No hidden charges or subscription fees.

Supports Long Documents: Check papers up to 20,000 words long.

Multiple Detection Modes: Choose from Section Wise, Paragraph Wise, or Full Text analysis.

Private & Secure: Files are deleted after analysis—no data stored.

Who Can Benefit?

Desklib’s AI-generated content checker is ideal for:

Students: Ensure your assignments are 100% original.

Educators: Quickly screen student submissions for AI use.

Writers & Bloggers: Verify your content before publication.

Professionals: Confirm the authenticity of business reports or proposals.

Final Thoughts

As AI continues to reshape how we write, tools like Desklib’s AI content detection service are not just helpful—they’re essential. Whether you're creating content or reviewing it, having access to a reliable AI detection tool helps preserve the value of original thought and creativity.

Ready to Test Your Content?

Visit https://desklib.com/ai-content-detector/ today and start checking your documents for AI-generated content—completely free and in seconds!

#AI content detection#detect AI-written content#AI plagiarism checker#AI-generated content checker#AI text detector#originality checker#AI detection tool


Text

I saw a post the other day calling criticism of generative AI a moral panic. While I do think many proprietary AI technologies are being used in deeply unethical ways, I also think there is a substantial body of reporting and research on the real-world impacts of the AI boom that troubles the comparison to a moral panic. There *are* older cultural fears tied to negative reactions to the perceived newness of AI, but many of the current warnings are Luddite with a capital L: that is, they're part of a tradition of materialist critique focused on the way the technology is being deployed in the political economy.

So, (1) starting with the acknowledgement that researchers were using a variety of machine-learning technologies before the current "AI" hype cycle, and that there's evidence for the benefit of targeted use of AI tech in settings where trained readers can use it (say, spotting patterns in radiology scans); (2) setting aside the fact that current proprietary LLMs in particular are largely bullshit machines, in that they confidently generate errors, incorrect citations, and falsehoods in ways humans may be less likely to detect than conventional disinformation; and (3) setting aside as well the potential impact of frequent offloading on human cognition, and of widespread AI slop on our understanding of human creativity...

What are some of the material effects of the "AI" boom?

Guzzling water and electricity

The data centers needed to support AI technologies require large quantities of water to cool the processors. A to-be-released paper from the University of California Riverside and the University of Texas Arlington finds, for example, that "ChatGPT needs to 'drink' [the equivalent of] a 500 ml bottle of water for a simple conversation of roughly 20-50 questions and answers." Many of these data centers pull water from already water-stressed areas, and the processing needs of big tech companies are expanding rapidly. Microsoft alone increased its water consumption from 4,196,461 cubic meters in 2020 to 7,843,744 cubic meters in 2023. AI applications are also 100 to 1,000 times more computationally intensive than regular search functions, and as a result the electricity needs of data centers are overwhelming local power grids, and many tech giants are abandoning or delaying their plans to become carbon neutral. Google’s greenhouse gas emissions alone have increased at least 48% since 2019. And a recent analysis from The Guardian suggests the actual AI-related increase in resource use by big tech companies may be up to 662%, or 7.62 times, higher than they've officially reported.
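The two relative figures in this paragraph are easy to misread, so here is the arithmetic behind them, using only the numbers quoted above (ratios rounded to two decimal places). A figure that is 662% higher than the reported one equals the reported figure plus 6.62 times it, and Microsoft's water numbers work out to an increase of a bit under 90%:

$$\text{actual} = \text{reported} + 6.62 \times \text{reported} = 7.62 \times \text{reported}$$

$$\frac{7{,}843{,}744\ \text{m}^3}{4{,}196{,}461\ \text{m}^3} \approx 1.87 \;\Rightarrow\; \text{2023 water use was roughly 87\% higher than in 2020}$$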

Exploiting labor to create its datasets

Like so many other forms of "automation," generative AI technologies actually require loads of human labor to do things like tag millions of images to train computer vision for ImageNet and to filter the texts used to train LLMs to make them less racist, sexist, and homophobic. This work is deeply casualized, underpaid, and often psychologically harmful. It profits from and re-entrenches a stratified global labor market: many of the data workers used to maintain training sets are from the Global South, and one of the platforms used to buy their work is literally called the Mechanical Turk, owned by Amazon.

From an open letter written by content moderators and AI workers in Kenya to Biden: "US Big Tech companies are systemically abusing and exploiting African workers. In Kenya, these US companies are undermining the local labor laws, the country’s justice system and violating international labor standards. Our working conditions amount to modern day slavery."

Deskilling labor and demoralizing workers

The companies, hospitals, production studios, and academic institutions that have signed contracts with providers of proprietary AI have used those technologies to erode labor protections and worsen working conditions for their employees. Even when AI is not used directly to replace human workers, it is deployed as a tool for disciplining labor by deskilling the work humans perform: in other words, employers use AI tech to reduce the value of human labor (labor like grading student papers, providing customer service, consulting with patients, etc.) in order to enable the automation of previously skilled tasks. Deskilling makes it easier for companies and institutions to casualize and gigify what were previously more secure positions. It reduces pay and bargaining power for workers, forcing them into new gigs as adjuncts to the AI technologies themselves.

I can't say anything better than Tressie McMillan Cottom, so let me quote her recent piece at length: "A.I. may be a mid technology with limited use cases to justify its financial and environmental costs. But it is a stellar tool for demoralizing workers who can, in the blink of a digital eye, be categorized as waste. Whatever A.I. has the potential to become, in this political environment it is most powerful when it is aimed at demoralizing workers. This sort of mid tech would, in a perfect world, go the way of classroom TVs and MOOCs. It would find its niche, mildly reshape the way white-collar workers work and Americans would mostly forget about its promise to transform our lives. But we now live in a world where political might makes right. DOGE’s monthslong infomercial for A.I. reveals the difference that power can make to a mid technology. It does not have to be transformative to change how we live and work. In the wrong hands, mid tech is an antilabor hammer."

Enclosing knowledge production and destroying open access

OpenAI started as a non-profit, but it has now become one of the most aggressive for-profit companies in Silicon Valley. Alongside the new proprietary AIs developed by Google, Microsoft, Amazon, Meta, X, etc., OpenAI is extracting personal data and scraping copyrighted works to amass the data it needs to train its bots (even offering one-time payouts to authors to buy the rights to frack their work for AI grist) and then, or so these companies tell investors, plans to sell the products back at a profit. As many critics have pointed out, proprietary AI thus works on a model of political economy similar to the 15th- to 19th-century capitalist project of enclosing what was formerly "the commons," or public land, to turn it into private property for the bourgeois class, who then owned the means of agricultural and industrial production. "Open"AI is built on and requires access to collective knowledge and public archives to run, but its promise to investors (the one it uses to attract capital) is that it will enclose the profits generated from that knowledge for private gain.

AI companies hungry for good data to train their Large Language Models (LLMs) have also unleashed a new wave of bots that are stretching the digital infrastructure of open-access sites like Wikipedia, Project Gutenberg, and Internet Archive past capacity. As Eric Hellman writes in a recent blog post, these bots "use as many connections as you have room for. If you add capacity, they just ramp up their requests." In the process of scraping the intellectual commons, they're also trampling and trashing its benefits for truly public use.

Enriching tech oligarchs and fueling military imperialism

The names of many of the people and groups who get richer by generating speculative buzz for generative AI - Elon Musk, Mark Zuckerberg, Sam Altman, Larry Ellison - are familiar to the public because those people are currently using their wealth to purchase political influence and to win access to public resources. And it's looking increasingly likely that this political interference is motivated by the probability that the AI hype is a bubble - that the tech can never be made profitable or useful - and that tech oligarchs are hoping to keep it afloat as a speculation scheme through an infusion of public money - a.k.a. an AIG-style bailout.

In the meantime, these companies have found a growing interest from military buyers for their tech, as AI becomes a new front for "national security" imperialist growth wars. From an email written by Microsoft employee Ibtihal Aboussad, who interrupted Microsoft AI CEO Mustafa Suleyman at a live event to call him a war profiteer: "When I moved to AI Platform, I was excited to contribute to cutting-edge AI technology and its applications for the good of humanity: accessibility products, translation services, and tools to 'empower every human and organization to achieve more.' I was not informed that Microsoft would sell my work to the Israeli military and government, with the purpose of spying on and murdering journalists, doctors, aid workers, and entire civilian families. If I knew my work on transcription scenarios would help spy on and transcribe phone calls to better target Palestinians, I would not have joined this organization and contributed to genocide. I did not sign up to write code that violates human rights."

So there's a brief, non-exhaustive digest of some vectors for a critique of proprietary AI's role in the political economy. tl;dr: the first questions of material analysis are "who labors?" and "who profits/to whom does the value of that labor accrue?"

For further (and longer) reading, check out Justin Joque's Revolutionary Mathematics: Artificial Intelligence, Statistics and the Logic of Capitalism and Karen Hao's forthcoming Empire of AI.


Text

a personal post/reflection on ai use

some of you reading this may have seen the message i received accusing me of using ai to write my work. i wanted to take a moment to talk about how it made me feel and, more importantly, the impact that accusations like this can have on writers in general.

I won’t lie: seeing that message in my inbox, being told that the stories i spend hours writing aren't “real”, that my effort and creativity don't belong to me, was really disheartening. then i had to defend my own writing against these accusations, and that wasn’t exactly fun. and while i know i shouldn’t let it get to me, the truth is that it does, because I'm a real person!

It’s made me overthink everything i write. I already reread my fics multiple times before posting, checking for flow, consistency, and coherence, but now, i find myself second-guessing every sentence. Does this sound too robotic? Is my phrasing too formal or too stiff? Or maybe it’s not polished enough? Maybe it's too polished. What if i accidentally repeat a word or structure a sentence in a way that someone deems “ai-like”? Will i be accused of this again?

I want to be clear also that this isn’t about seeking sympathy. I just feel it's important to remind people that fanfic writers are real people with real emotions. We write because we love it, because we want to share stories for others to enjoy for free. And yet, there are people out there who treat “spotting ai” like some kind of witch hunt, who feel entitled to send accusations to complete strangers without any basis for it.

And I don't say this to be elitist, but for some context, I have a master’s degree in computer science. I work in tech every day. I specialize in machine learning. When I say there is no reliable way to tell whether a passage of text was written by ai or a human, i'm not just making shit up. ai detection tools are completely unreliable. they give false positives all the time, and they are, quite frankly, complete bullshit.

And I get that there are legitimate concerns about ai-generated work in creative spaces, especially when it comes to art, writing, and other forms of expression that people put their hearts into. I have taken ethics courses in ai for this reason. I understand why people are wary, and i’m not saying that those concerns aren’t valid. But this is exactly why we should be mindful of how we engage with content. If you don’t like something, if you suspect it was ai-generated and that bothers you, the best thing you can do is simply not engage. don’t read it, don’t share it, don’t support it.

But going out of your way to harass people, to send accusations without evidence, or to act like you’re some kind of ai-detecting authority is not just absurd; it’s harmful to real people, because you will inevitably get it wrong!!!

At the end of the day, this is fan fiction. no one is paying for this. no one is being scammed. so why do people act like they need to police something that’s supposed to be fun, creative, and freely shared? if you love stories written by real people, support those writers. but please, stop making this space even more stressful for the people who are already here, giving their time and creativity to share something they love.

And if you still think making accusations about people using AI for their writing is the correct and virtuous thing to do, I invite you to read this online thread of freelance writers discussing the real harm that has come to their livelihoods due to false positives from ai detection tools and false accusations.

22 notes


Text

Some helpful info about detecting AI writing.

-Lisa

10 notes


Text

I asked ai to tell me how to identify ai writing. This is what it gave me.

Identifying whether a story or piece of writing was generated by AI can be challenging, but there are certain signs and patterns to look for. Here are some methods that can help you determine if a story might be AI-generated:

1. Repetitive Phrasing or Ideas

AI models sometimes repeat phrases or concepts throughout the text, often without any real need to. Look for:

Repeated sentences or ideas that don’t add to the progression of the story.

Redundant descriptions or overly detailed explanations of things that don’t move the plot forward.

2. Lack of Deep Characterization

AI-generated writing can struggle with developing rich, deep characters. While the story might be well-structured, characters often feel:

One-dimensional or lacking in emotional depth.

Unmotivated, with actions that don’t feel organic, as if they are just moving through the plot without real personal stakes.

3. Inconsistent Plot or Logic

AI can sometimes create stories that have plot holes or logic inconsistencies. For example:

Characters acting in ways that don’t align with their personalities or the established world.

Unexplained shifts in the narrative, like events happening without cause or consequence.

Abrupt changes in tone or setting without any clear transitions.

4. Too Perfect or Polished

AI writing can sometimes be unusually smooth or sterile. Look for:

Lack of imperfections like awkward phrasing, typos, or quirks that human writers often incorporate (unless the piece has been heavily edited).

Overly neutral or formal tone, especially if the writing is supposed to be casual or emotional.

5. Unusual Sentence Structure or Phrasing

AI sometimes produces strange sentence constructions or uses words in a way that doesn’t quite feel right. For instance:

Long, convoluted sentences with too many clauses.

Phrasing that sounds technically correct but doesn’t flow naturally for a human writer.

6. Overuse of Clichés or Generic Ideas

AI often relies on well-established patterns and may use clichés, especially in storytelling. Watch for:

Predictable or generic storylines with little originality.

Overuse of familiar tropes or archetypes without fresh or personal takes.

7. Lack of Emotional or Sensory Depth

AI-generated writing may struggle with conveying complex emotions or sensory experiences. For example:

Descriptions that feel flat or don't evoke strong imagery or feeling.

Emotional moments that seem shallow or don’t resonate, as AI often lacks the depth of human experience.

8. Over-Explanations

AI might over-explain things because it doesn’t always understand the nuances of human experience. Look for:

Excessive clarification of simple concepts.

Repeated explanations of emotions, actions, or events that should already be clear from the context.

9. Strange Dialogue

AI-generated dialogue can sound unnatural or stilted. Common signs include:

Conversations that feel too formal, disconnected, or robotic.

Characters talking in ways that don’t align with their age, background, or setting.

Repetitive dialogue patterns where characters say similar things too often.

10. Check for Metadata or Author Information

If you’re reading an online story, some platforms may indicate whether AI was used to generate it. Alternatively, some AI writing tools might leave identifiable traces in the metadata of the text.

If the text was published on a site with AI-generated content, that can also be a clue.

11. Look for Inconsistencies in Style or Tone

AI can struggle to maintain a consistent style or tone over a longer piece. For example:

Sudden shifts in style, such as a formal tone turning into a casual one without context.

Inconsistent use of language or vocabulary that feels out of place in the context of the story.

12. Run the Text Through AI Detection Tools

Several AI detection tools can analyze a piece of writing and estimate whether it was likely written by an AI. Some popular tools include:

GPTZero: Detects AI writing by analyzing patterns like perplexity and burstiness.

Turnitin: Known for plagiarism detection, but also offers AI-writing detection.

AI Writing Check: Another specialized tool to check for AI-generated content.

Conclusion

Identifying AI-generated writing is often about spotting patterns that differ from the natural flow of human creativity. If the writing feels off in terms of tone, style, depth, or logic, it might be worth investigating further.

Would you like to check a specific piece of writing for AI characteristics?

#thoughts?#books#writing#booklr#booklover#reading#writers#writing problems#writers on tumblr#writeblr#writers and poets#ai#anti ai#real writers#writerscommunity#books and reading

17 notes


Note

get rid of all AI content and have a filler image (“this image has been removed, we are working on a new image”) and a link to an official statement about the art and AI thing. Have an artist livestream or Q&A or something to highlight your artists and introduce your community to who will be working on the replacement images. Have this set of artists work as a sort of anti-AI art team and reinforce your stance on AI. Tell your community you will not be utilizing AI for art or writing and have an official anti-AI initiative. Everything written should be run through AI detection software, and all art should be done by trusted artists who do not use AI. It should be the mission of the site and its team to convince us, the paying customers, that AI will never be used again. There should not be events going on until all AI-generated material is replaced. A public apology should be issued, and we should be given a timeframe for when all the art will be done. It has been several months and only a few characters have been replaced so far. If this were a pressing issue to CJ, it WOULD be getting done properly, but he doesn’t actually believe it’s as big of a deal as his paying customers do.

☁️

12 notes


Note

think i found another ai fic... one chapter was 26% ai, another 21%, and one chapter was "probably human written" but still... and it's written on anon...

i appreciate you so much samantha and all the work and effort and time you put into your amazing writing, you're amazing💖💖💖

I learned recently that some folks use chatgpt or the likes to edit their fics. This is a terrible idea but I do think that it might contribute to some of the results we're seeing. It's both difficult and inaccurate to confirm ai generation when the ai detection result isn't paired with other factors like frequently posting high word counts, or dull monotone writing, or absolutely perfect grammar etc. So people should definitely stop using chatgpt for spell checks.

Something else that might trigger a positive ai result is the use of tools like Grammarly, which, as I've mentioned before, I have been using for years for spag. But they recently (?) introduced a generative ai element that rewrites content for you or generates a new sentence on the spot. Using that feature does result in a positive ai detection because, well, the ai did it.

Do we stop using these types of tools now? I don't think that's necessary, and there are probably very few checkers left that have no integrated ai at all. Most spag checkers, including Word's, use some kind of non-generative ai to alert you to errors in a more evolved way than before. (Google Docs' spag checker just got stupider as it "evolved" btw. What an absolute dumpster fire.)

BUT be careful how you use it: don't let it reconstruct your work, don't let it automagically write or fix a sentence for you, and don't rely on it to produce flawless content; there is no such thing. Use your brain, ask a beta reader to assist you, research the things you don't know. Teach yourself to write better. Use the tool for its original purpose: to check your spelling and grammar. The ai features can usually be switched off in settings. That being said, basic spag checks using these tools shouldn't register as ai generation, but that will probably depend on the tool used to detect it.

I want to add that we definitely should not check every fic we're interested in reading for ai. I think that will make the fandom experience terrible and unenjoyable for everyone. Read it in good faith but keep an eye out for stuff like posting large amounts of words on a schedule that is not humanly possible, the writing style, the tone, other use of ai by the person etc. We've been reading fanfic for years, we know when something is off. Block if you suspect it's ai generated.

People who use ai to 'write' fics have no place in fandom spaces.

It's going to become increasingly difficult to detect these things though, since there is also a feature to "humanize" the ai slop 🤢 and I don't know what the way forward is but I do know it's not running every fic through an ai detector. They're not entirely accurate either. The only reason I resorted to an ai detector with that person I initially caught out, was because the tag was clogged with their constant posting and I knew there was no fucking way they were posting that much naturally. The detector just confirmed what I suspected anyway.

I read a fic recently by an Anon author and I thought it was so good and sexy. I really hope it's not the same person you're talking about. I'm not going back to check because my kudos and comment are already on there. I also doubt an ai can write such filthy, steaming smut 😂

And thank you, Anon, for your kind words. Truly appreciate it. 💕

12 notes
