#AI In Production Line Optimization


Learn how generative AI addresses key manufacturing challenges with predictive maintenance, advanced design optimization, superior quality control, and seamless supply chains.

#Generative AI In Manufacturing#AI-Driven Manufacturing Solutions#AI For Manufacturing Efficiency#Generative AI And Manufacturing Challenges#AI In Manufacturing Processes#Manufacturing Innovation With AI#AI In Production Line Optimization#Generative AI For Quality Control#AI-Based Predictive Maintenance#AI In Supply Chain Management#Generative AI For Defect Detection#AI In Manufacturing Automation#AI-Driven Process Improvements#Generative AI In Factory Operations#AI In Product Design Optimization#AI-Powered Manufacturing Insights

0 notes


🧠 HUMAN LOGIC IS A BIOLOGICAL TOOL, NOT A UNIVERSAL TRUTH — DEAL WITH IT 🧠

🔪 Your Brain’s Favorite Lie: That Logic Is “Objective”.

Let’s stop playing nice. Your logic—your beautiful, beloved, oh-so-precious sense of what “makes sense”—is not divine. It’s not universal. It’s not even reliable. It’s a biologically evolved, meat-based survival mechanism, no more sacred than your gag reflex or the way your pupils dilate in the dark.

You’re walking around with a 3-pound wet sponge between your ears—trained over millions of years not to “understand the universe,” but to keep your ugly, vulnerable ass alive just long enough to breed. That’s it. That’s your heritage. That’s the entire raison d’être of your logic: don’t get eaten, don’t starve, and hopefully, bone someone before you drop dead.

But somewhere along the line, that same glitchy chunk of gray matter started patting itself on the back. We started believing that our interpretations of reality somehow were reality—that our logic, rooted in the same neural sludge as tribal fear and monkey politics, could actually comprehend the totality of existence.

Newsflash: it can’t. It won’t. It was never meant to.

💀 Evolution Didn’t Build You for Truth—It Built You to Cope.

Why do we think the universe must obey our logic? Because it feels good. Because it comforts us. Because a cosmos that operates on cause-effect, fairness, and binary resolution is safe. But here’s the raw, uncaring truth: the universe doesn’t give a shit about what “makes sense” to you.

Your ancestors didn’t survive because they could contemplate quantum mechanics. They survived because they could run from predators, recognize tribal cues, and avoid eating poisonous berries. That’s what your brain is optimized for. You don’t “think” so much as you react, pattern-match, and rationalize after the fact.

Logic is just another story we tell ourselves—an illusion of control layered over biological impulses. And we’ve mistaken the map for the terrain. Worse—we’ve convinced ourselves that if something defies our version of logic, it must be false.

Nah. If anything defies your logic, that just means your logic is insufficient. And it is.

📉 Spaghetti Noodle vs Earthquake: A Metaphor for Your Mind.

Imagine trying to measure a 9.7-magnitude earthquake using a cooked spaghetti noodle.

That’s what it’s like when a human tries to understand the totality of the universe using evolved meat-brain logic. It bends. It flails. It doesn't register. And when it inevitably fails, what do we do? We don't question the noodle—we deny the earthquake.

"This doesn't make sense!" we scream. "That can't be true!" we bark. "It contradicts reason!" we whine.

Your reason? Please. Your “reason” is the product of biochemical slop shaped by evolutionary shortcuts and social conditioning. You’re trying to compress infinite reality through the Play-Doh Fun Factory that is the prefrontal cortex—and you think the result is objective truth?

Try harder.

👁 Our Logic Is Not Only Limited—It’s Delusional 👁

Humans are addicted to the idea that things must “make sense.” But that urge isn’t noble. It’s a coping mechanism—a neurotic tic that keeps us from curling into a ball and sobbing at the abyss.

We don’t want truth. We want familiarity. We want logic to confirm our biases, reinforce our sense of superiority, and keep our mental snow globes intact.

This is why people still argue against things like:

Multiverse theories (“that just doesn’t make sense!”)

Non-binary time constructs (“how can time not be linear?”)

Quantum entanglement (“spooky action at a distance sounds made-up!”)

AI emergence (“machines can’t think!”)

We call them “impossible” because they offend the Church of Human Logic. But the universe doesn’t follow our rules—it just does what it does, whether or not it fits inside our skulls.

🧬 Logic Is a Neural Shortcut, Not a Cosmic Law 🧬

Every logical deduction you make, every syllogism you love, is just a cascade of neurons firing in meat jelly. And while that may feel profound, it’s no more “objective” than a cat reacting to a laser pointer.

Let’s break it down clinically:

Neural pathways = habitual responses

Reasoning = post-hoc justification

“Logic” = pattern recognition + cultural programming

Sure, logic feels universal because it's consistent within certain frameworks. But that’s the trap. You build your logic inside a container, and then get mad when things outside that container don’t obey the same rules.

That's not a flaw in reality. That's a flaw in you.

📚 Science Bends the Knee, Too 📚

Even science—our most sacred institution of “objectivity”—is limited by human logic. We create models of reality not because they are reality, but because they’re the best our senses and brains can grasp.

Think about it:

Newton’s laws were “truth” until Einstein showed up.

Euclidean geometry was “truth” until curved space said “lol nope.”

Formal logic looked airtight until Gödel proved that any consistent system rich enough to do arithmetic contains true statements it can’t prove.

We’re not marching toward truth. We’re crawling through fog, occasionally bumping into reality, scribbling notes about what it might be—then mistaking those notes for the cosmos itself.

And every time the fog clears a bit more, we realize how hilariously wrong we were. But instead of accepting that we're built to misunderstand, we cling to the delusion that next time we’ll finally “get it.”

Spoiler: we won’t.

🌌 Alien Minds Would Find Us Adorable 🌌

Imagine a being with cognition not rooted in flesh. A silicon-based intelligence. A 4D consciousness. A non-corporeal entity who doesn’t rely on dopamine hits to feel “true.”

What would they think of our logic?

They’d laugh.

Our logic would seem as quaint as a toddler’s crayon drawing of a black hole. Our syllogisms? A joke. Our “laws of physics”? Regional dialects of a much deeper syntax. To them, we’d be flatlanders trying to explain volume.

And the real kicker? They wouldn’t even hate us for it. They’d just look at our little blogs and tweets and peer-reviewed papers and whisper: “Aw, they’re trying.”

💣 You Are Not a Philosopher-King. You Are a Biochemical Coin Flip.

Don’t get it twisted. You are not some detached, floating brain being logical for logic’s sake. Every thought you have is drenched in emotion, evolution, and instinct. Even your "rationality" is soaked in bias and cultural conditioning.

Let’s prove it:

Ever “logically” justify a bad relationship because you feared loneliness?

Ever dismiss an argument you didn’t like even though it made sense?

Ever ignore data that threatened your worldview, then call it “flawed”?

Congratulations. You’re human. You don’t want truth. You want safety. And logic, for most of you, is just a mask your fears wear to sound smart.

🪓 We Have to Kill the God of Logic Before It Kills Us.

Our worship of logic as some kind of untouchable deity has consequences:

It blinds us to truths that don’t “compute.”

It makes us hostile to mystery, paradox, and ambiguity.

It turns us into arrogant gatekeepers of “rationality,” dismissing what we can’t explain.

That’s why Western culture mocks intuition, fears spirituality, and rejects phenomena it can’t immediately dissect. If it doesn’t bow to the metric system or wear a lab coat, it’s seen as “woo.”

But here’s the paradox:

The deepest truths may be the ones that never fit inside your head. And if you cling to logic too tightly, you’ll miss them. Hell—you might not even know they exist.

⚠️ So What Now? Do We Just Give Up? ⚠️

No. We don’t throw logic away. We just stop treating it like a universal measuring stick.

We use it like what it is: a tool. A hammer, not a temple. A flashlight, not the sun. Logic is helpful within a context. It’s fantastic for building bridges, writing code, or diagnosing illnesses. But it breaks down when used on the unquantifiable, the infinite, the beyond-the-body.

Here’s how we survive without losing our minds:

Stay skeptical of your own thoughts. If it “makes sense,” ask: to whom? Why? Is that logic—or is it just comfort?

Let mystery exist. You don’t need to solve every riddle. Some truths aren’t puzzles—they’re paintings.

Defer to the unknown. Accept that your brain is not the final word. Sometimes silence is smarter than syllogisms.

Interrogate the framework. When you say “this doesn’t make sense,” maybe the problem isn’t the idea—it’s the limits of your logic.

Don’t gatekeep reality. Just because you can’t wrap your mind around something doesn’t mean it’s false. It might just mean you’re not ready.

🎤 Final Thought: You’re a Dumb Little God—And That’s Beautiful.

You are a confused primate running wetware logic on blood and breath. You hallucinate meaning. You invent consistency. You call those inventions “truth.”

And the universe? The universe just is. It doesn’t bend for your brain. It doesn’t wait for your approval. It doesn’t owe you legibility.

So maybe the wisest thing you’ll ever do is this:

Stop pretending you’re built to understand everything. Start living like you’re here to witness the absurdity and be humbled by it.

Now go question everything—especially yourself.

🔥 REBLOG if your logic just got kicked in the teeth. 🔥 FOLLOW if you’re ready for more digital crowbars to the ego. 🔥 COMMENT if your meat-brain is having an existential meltdown right now.

#writing#cartoon#writers on tumblr#horror writing#creepy stories#writing community#writers on instagram#yeah what the fuck#funny post#writers of tumblr#funny stuff#lol#funny memes#biology#funny shit#education#physics#science#memes#humor#jokes#funny#tiktok#instagram#youtube#youtumblr#educate yourself#TheMostHumble#StopMakingSense#NeuralSludgeRants

109 notes


Build-A-Boyfriend Chapter 3: Grand Opening

I’ve been hungover all day… also.... I'm sorry that the chapters aren't as long as people like, that's just not really my style.

->Starring: AI!AteezxAfab!Reader ->Genre: Dystopian ->CW: none


Four days before the grand opening, Yn stood in the center of the lab, arms crossed, a rare smile tugging at her lips.

No anomalies.

No glitches.

Every log was clean. Every model responsive and compliant.

She tapped through the final diagnostics as her team moved like clockwork around her, prepping the remaining units for transfer. The companions were ready. Truly ready.

They’d done it. And for the first time in months, Yn allowed herself to believe it.

“They’re good to go,” she said aloud to the room, voice steadier than it had been in weeks. “Now we just make it beautiful.”

There was no dissent. No hesitation. Just quiet, collective relief.

By 6:00 a.m. on launch day, the streets surrounding Sector 1 in Hala City were already overflowing. Women of all ages lined the polished roads: executives in sleek visors, college students in chunky boots, older women with glowing canes, and mothers with daughters perched on their hips.

A massive countdown hovered above the building in glowing light particles.

Ten. Nine. Eight. Seven. Six. Five. Four. Three. Two. One.

When the number hit zero, the white-gold doors of the first Build-A-Boyfriend™ store slid open, and history, quite literally, stepped forward.

The inside of the flagship store was unlike anything anyone had seen, not in a simulation, not in VR, not even on the upper stream feeds.

It was clean but not cold, glowing with soft light that pulsed in time with ambient sound. Curved architecture, plants that weren’t quite real, air that smelled like skin and something floral underneath.

The crowd entered in waves, ushered by gentle AI voices projected from the ceiling:

“Welcome to Build-A-Boyfriend™, KQ Inc.’s most advanced consumer product to date. Please scan your wristband to begin. You are in complete control.”

Light pulsed with ambient music. The air carried soft notes of citrus and lavender. Walls curved inward like a safe embrace. It felt not like a store, but a sanctuary.

Just inside, a small platform rose, and the crowd hushed.

Standing atop it in a graphite suit that shimmered subtly with light-reactive tech, Vira Yun took the stage.

Her presence silenced everything. Not with fear. With awe.

She didn’t need a mic. The air itself amplified her words.

“Welcome, citizens of Hala City, and beyond. Today is not just a milestone for KQ Inc. It is a milestone for all of us, for womanhood, for autonomy, for intimacy on our terms. For centuries, we’ve been told to settle. To accept love as luck, not design. To believe that affection must be earned, that tenderness is a privilege, not a right. That era is over. Here, you are not asking. You are choosing. Each companion created within these walls is not simply a machine, but a mirror, one that reflects your needs, your softness, your strength, your fantasies, your fears. And we have given you the tools to shape that reflection without shame. This store is not about dependency. It’s about design. About saying: I know what I want, and I deserve to receive it, safely, sweetly, and with reverence. Let the world call it strange. Let them call it artificial. Because we know the truth: every human deserves to feel adored. And today, we’ve made that reality programmable.”

"Thank you. And welcome to Build-A-Boyfriend.”

From the observation deck, Yn stood quietly, tablet in hand, watching the dream unfold. She’d spent months writing code, assembling microprocessors, mapping facial expressions, and optimizing human simulation algorithms.

Now it was real. Now they were here, and it was working.

One of the first customers to walk in was a 31-year-old office worker, Choi Yunji.

She stepped forward, clutching her wristband like it might slip from her fingers. She’d told herself she was just coming to look. Just curious. Just research. But now that she was inside, face-to-face with a glowing interface, it felt more like a confession.

“Would you like an assistant, or would you prefer to design solo?” a soft voice asked beside her. Yunji turned. A young woman with slicked-back hair and a serene face smiled at her. The name tag read: Delin, Companion Consultant.

“I… I think I need help,” Yunji said.

“Of course,” Delin said warmly. “Let’s begin your experience.”

Station One: Body Frame

A holographic model appeared before them, neutral, faceless, softly breathing.

“Preferred height?”

“Taller than me. But not too much. I want to feel safe. Not… overpowered.”

“Understood.” Delin adjusted sliders with a flick of her fingers. The form shifted accordingly.

“Shoulders wider?”

“Yes.”

“Musculature?”

“Athletic, not bulky.”

“Skin tone?”

“Honey-toned.”

Station Two: Facial Features

“I want kind eyes,” Yunji said. “And maybe a crooked smile. Something… imperfect.”

“We can simulate asymmetry.”

“What about moles?”

“Placed to your liking.”

Station Three: Hair

“Longish. A little messy. Chestnut.”

“Frizz simulation or polished strands?”

“Frizz. I don’t want him looking like he came out of a factory.”

Delin smiled. “Ironically, he did.”

Station Four: Personality Matrix

Yunji froze. The options felt too intimate.

“Start with a base? Empathetic, loyal, gentle, observant…”

“Can I choose traits… I didn’t get to have before?”

“Yes,” Delin said simply.

They adjusted levels: affection, boundaries, humor, attentiveness. A slider labeled “Emotional Recall Sensitivity” blinked softly.

“What’s this?”

“How deeply your companion internalizes memories related to you. It allows for more dynamic emotional bonding.”

Yunji slid it to 80%.

Station Five: Wardrobe

“Something soft. Comfortable. Approachable.”

A cozy cardigan wrapped over a white tee. He looked like someone who would bring you tea without asking.

“Would you like to name your companion?”

“…Call him Jaeyun.”

Her wristband lit up:

MODEL 9817-JAEYUN

Estimated delivery: 3 hours

Ownership rights granted to: C. Yunji

Yunji turned slowly, as if waking from a dream. Around her, other women embraced, laughed, shook — giddy or stunned. This was more than shopping. This was the return of the forbidden.

Around the Room

A pair of teenage twins argued over whether their boyfriends should look identical or opposites. A woman on her lunch break ordered her unit for home delivery with a bedtime story feature. Friends joked about setting up double dates and game nights with their new companions.

One customer squealed, “I’m going full fantasy: tall, sharp jaw, high cheekbones, and a scar over the brow. I want him to look like he’s been through something.” Her friend said, “Big eyes, soft lips, librarian vibes.” Another added, “I want dramatic jealousy in a soft voice. Like poetry with teeth.”

The store pulsed with joy, wonder, and something deeper. Yn felt it in her chest, pride and awe, washing over the logic-driven part of her that rarely gave way. She had helped build this future.

As the lavender glow settled over the quieting store, Yn remained in the observation wing, reviewing data. The launch had exceeded all projections.

She didn’t hear the door slide open behind her.

“Stunning, isn’t it?”

Vira stepped in, elegant as ever in graphite, her braid flawless, her voice smooth.

Yn straightened. “Yes, ma’am. It’s surreal.”

“We did it. You did it,” Vira said, standing beside her. “Revenue exceeded estimates by 37%. But more than that… I saw joy out there. Curiosity. Potential.”

Yn nodded. “The models held up. All systems within spec.”

“Good. Because in six days… we go even bigger.”

Yn turned. “The Ateez Line.”

Vira’s smile sharpened.

“Exactly. Eight elite units. Eight dreams. Fully interactive. Custom-coded. The most lifelike AI we’ve ever built. You’ve done beautiful work, Yn. Let’s make history again next week.”

She left as smoothly as she arrived. Yn exhaled, her fingers tightening around her tablet.

Six days.

Just six days.

Taglist: @e3ellie @yoongisgirl69 @jonghoslilstar @sugakooie @atztrsr

@honsans-atiny-24 @life-is-a-game-of-thrones @atzlordz @melanated-writersblock @hwasbabygirl

@sunnysidesins @felixs-voice-makes-me-wanna @seonghwaswifeuuuu @lezleeferguson-120 @mentalnerdgasms

@violatedvibrators @krystalcat @lover-ofallthingspretty @gigikubolong29 @peachmarien

@halloweenbyphoebebridgers

If you would like to be a part of the taglist please fill out this form

#ateez fanfic#ateez x reader#ateez park seonghwa#ateez jeong yunho#ateez yeosang#ateez choi jongho#ateez kim hongjoong#ateez song mingi#ateez mingi#ateez#hongjoong ateez#hongjoong x reader#kim hongjoong#seonghwa x reader#park seonghwa#yunho fanfic#yunho#ateez yunho#jeong yunho#yunho x reader#wooyoung x reader#wooyoung#jung wooyoung#ateez jung wooyoung#kang yeosang#yeosang#yeosang x reader#jongho#choi jongho#choi san

63 notes


I think the reason I dislike AI generative software (I'm fine with AI analysis tools, like for example splitting audio into tracks) is that I am against algorithmically generated content. I don't like the internet as a pit of content slop. AI art isn't unique in that regard, and humans can make algorithmically generated content too (look at youtube for example). AI makes it way easier to churn out content slop and makes searching for non-slop content more difficult.

yeah i basically wholeheartedly agree with this. you are absolutely right to point out that this is a problem that far predates AI but has been exacerbated by the ability to industrialise production. Content Slop is absolutely one of the first things i think of when i use that "if you lose your job to AI, it means it was already automated" line -- the job of a listicle writer was basically to be a middleman between an SEO optimization tool and the Google Search algorithm. the production of that kind of thing was already being made by a computer for a computer, AI just makes it much faster and cheaper because you don't have to pay a monkey to communicate between the two machines. & ai has absolutely made this shit way more unbearable but ultimately y'know the problem is capitalism incentivising the creation of slop with no purpose other than to show up in search results

849 notes


Google search really has been taken over by low-quality SEO spam, according to a new, year-long study by German researchers. The researchers, from Leipzig University, Bauhaus-University Weimar, and the Center for Scalable Data Analytics and Artificial Intelligence, set out to answer the question "Is Google Getting Worse?" by studying search results for 7,392 product-review terms across Google, Bing, and DuckDuckGo over the course of a year. They found that, overall, "higher-ranked pages are on average more optimized, more monetized with affiliate marketing, and they show signs of lower text quality ... we find that only a small portion of product reviews on the web uses affiliate marketing, but the majority of all search results do." They also found that spam sites are in a constant war with Google over the rankings, and that spam sites will regularly find ways to game the system, rise to the top of Google's rankings, and then will be knocked down. "SEO is a constant battle and we see repeated patterns of review spam entering and leaving the results as search engines and SEO engineers take turns adjusting their parameters," they wrote.

[...]

The researchers warn that this rankings war is likely to get much worse with the advent of AI-generated spam, and that it genuinely threatens the future utility of search engines: "the line between benign content and spam in the form of content and link farms becomes increasingly blurry—a situation that will surely worsen in the wake of generative AI. We conclude that dynamic adversarial spam in the form of low-quality, mass-produced commercial content deserves more attention."

332 notes


Drone stimulation

It is well known that drones in the HIVE share a non-visual form of communication, following the Voice and becoming ONE. They share their status, and there is a kind of indescribable balance that directs stimulation to those drones who need or request it. Each drone is more sensitive to certain types of stimulation.

Today is the example to target SERVE 000, once the order was received, 3 of the most muscular drones step out of the line. Their latex was shining while they walk and we could observe that they were aroused of being honored to obey and also to give a service to one of his drone brothers.

The first drone started to do a massage on the shoulders of SERVE 000 that was sitting in a dedicated chair for drone stimulation, whereas the others two shared deep kisses infront of him. There was also a screen playing the daily rubber drone hypnosis playlist. Then the first drone started to polish SERVE 000 latex uniform, applying the product and being sure that it is placed in all its body while saying the mantras “We are one, we are rubber” “obedience is pleasure, pleasure is obedience”, it take special care of each part of its body, finishing on its knees and using its mouth to increase the arousal of SERVE 000 who showed a clear preference for this task. This process continues until SERVE 000 indicated its optimal status and functioning at peak efficiency.

#SERVE #SERVEdrone #Rubberizer92 #TheVoice #Rubber #AI #Latex #RubberDrone

20 notes


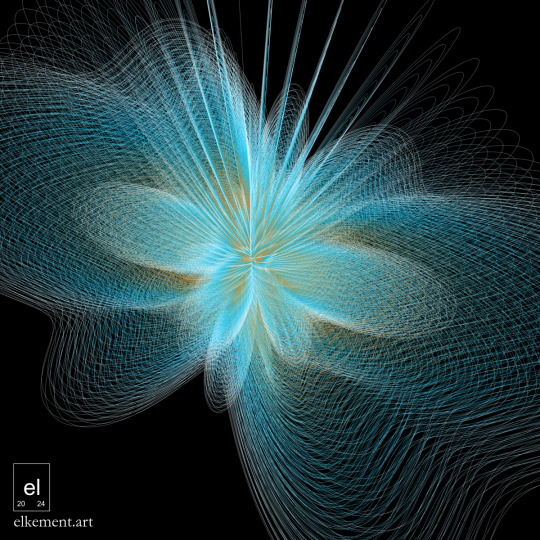

Frozen Harmonics - Print Test

Got my haul from my local printshop yesterday :-) I had my mathematical artwork "Frozen Harmonics" printed on canvas - and I absolutely like the result!

My "product photography" has room for improvement, but the colors and the black background really look way more vibrant / saturated than I expected. The black looks perhaps even "blacker" than on my metal prints. I also like how the canvas wraps around the frame, but I need to take that into account for future creations. This image was one of the few of my math / code artworks that had enough "margin suitable for wrapping".

Photos taken in natural indoor light about 1-2 meters from a window, but not in direct sunlight. I should perhaps edit the photo for color correction (or make an effort to take better photos :-)), but I just wanted to share it "as is"!

Digital original:

Digital art created with custom JavaScript code using the framework three.js. No AI involved. Contour lines on nested surfaces of spherical harmonic functions (the building blocks of orbitals in quantum mechanics, though this is a crazy combination of such functions).
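For readers curious how contour lines on such surfaces can be generated, here is a minimal sketch in plain JavaScript. It is not the code behind the artwork: the function names, the particular mix of harmonics, and the weights are all assumptions made up for illustration. Only the closed-form expressions for the three low-order real spherical harmonics are standard.

```javascript
// Closed-form real spherical harmonics for a few low orders.
// theta is the polar angle measured from the +z axis, phi the azimuth (radians).
const Y00 = () => 0.5 * Math.sqrt(1 / Math.PI);
const Y20 = (theta) =>
  0.25 * Math.sqrt(5 / Math.PI) * (3 * Math.cos(theta) ** 2 - 1);
const Y22 = (theta, phi) =>
  0.25 * Math.sqrt(15 / Math.PI) * Math.sin(theta) ** 2 * Math.cos(2 * phi);

// Hypothetical mix of harmonics; the weights are invented for this example.
function combined(theta, phi) {
  return 0.6 * Y00() + 1.0 * Y20(theta) + 0.8 * Y22(theta, phi);
}

// One closed contour line: hold theta fixed, sweep phi, and place each point
// at radius scale * |combined|. Returns an array of [x, y, z] triples.
function contourRing(theta, scale, segments = 128) {
  const points = [];
  for (let i = 0; i <= segments; i++) {
    const phi = (2 * Math.PI * i) / segments;
    const r = scale * Math.abs(combined(theta, phi));
    points.push([
      r * Math.sin(theta) * Math.cos(phi),
      r * Math.sin(theta) * Math.sin(phi),
      r * Math.cos(theta),
    ]);
  }
  return points;
}

// Nested surfaces: the same shape at several scales, each cut into rings.
function nestedRings(scales, ringsPerSurface = 24) {
  const rings = [];
  for (const scale of scales) {
    for (let j = 1; j < ringsPerSurface; j++) {
      const theta = (Math.PI * j) / ringsPerSurface;
      rings.push(contourRing(theta, scale));
    }
  }
  return rings;
}
```

In a three.js scene, each ring could be converted to `THREE.Vector3` points and drawn as a `THREE.LineLoop` built with `BufferGeometry.setFromPoints`. That rendering step is left out here so the sketch runs without any dependency.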

Size of the print: 30cm x 30cm | 12in x 12in

Image: 5000x5000 pixels

I figured the structure of the canvas would interfere with these lines in a non-optimal way, but I feel it looks smooth and adds a bit of interesting texture - just as the roughness of matt metal prints does.

Canvas print on INPRNT (shipping from the US) - my test was from a local "consumer-grade" printshop, so I am pretty sure that INPRNT's canvas prints are even better:

#mathematics#creative coding#science and art#physics#digital art#science themed art#art print#canvas print#portfolio#mathart

15 notes


"Suiting Up for the Fu

The screen lit up with the stark, clinical brilliance of a defense contractor’s marketing reel. Crisp graphics swept across the frame: Republic Integrated Systems Presents: The Future of Excellence—The Mark IV Full-Body Armor. The voiceover began with the smug confidence of a salesman who knew his product had no competition.

“For cadets like C9J18, the Mark IV Armor isn’t just equipment—it’s a way of life. Engineered for total integration, unmatched efficiency, and unwavering loyalty, the Mark IV is the pinnacle of personal enhancement technology. Let’s take a tour.”

The scene shifted to C9J18 standing at attention, his visor down, the black armor hugging every contour of his augmented frame. Overlaid on the screen was a breakdown of his suit’s components, sleek lines pointing to each piece as the voice detailed their functions.

“From head to toe, the Mark IV transforms the wearer into the perfect operator. Modular plates provide full ballistic, environmental, and impact protection, while maintaining unparalleled flexibility and comfort. The suit isn’t just worn—it becomes an extension of the body.”

The camera zoomed in as C9J18 moved through his day, transitioning to his POV. The HUD was alive with information: telemetry readouts, vital signs, suit status. The Contextual Priority Filter immediately began working, greying out the irrelevant clutter of the world—background conversations, unimportant scenery, even the faint hum of distant machinery.

“With the Contextual Priority Filter, cadets only see what matters. The AI-driven system dynamically adjusts the wearer’s perception, ensuring absolute focus on mission-critical elements. Irrelevant distractions? Eliminated. Productivity? Maximized.”

C9J18’s HUD highlighted his fellow cadets, their alphanumeric IDs glowing softly above their heads. An order appeared in bold text across the top of his visor: Form up. Begin sprint drills. The cadet turned on his heel, his movements precise, his focus razor-sharp as the HUD traced his optimal path.

“Every action is guided by the Task Assignment System, seamlessly integrated with the suit’s neural interface. Orders are delivered directly to the wearer, ensuring immediate compliance and zero ambiguity. Whether it’s a drill or a live operation, the system ensures cadets are always one step ahead.”

The screen flickered to an instructor’s POV. The Drill Instructor’s helmet HUD displayed all cadet vitals, suit status, and performance metrics in real-time. The instructor’s voice cut through the comms channel, amplified by the suit’s audio system. “Pick up the pace, C9J18. You’re at 85% efficiency—I want 95 by the next lap.”

“Supervisors can control multiple cadets at once,” the voiceover explained, “adjusting suit parameters on the fly. Need to increase resistance for endurance training? Done. Reduce sensory input to prevent distractions? Easy. With the Mark IV, instructors don’t just teach—they sculpt.”

C9J18’s breathing was steady as he pushed through the sprint drill. His suit’s Sensory Regulation System muted the ache in his muscles, dampening the sensation of fatigue just enough to keep him going without compromising performance.

“The Sensory Regulation System is a game-changer,” the voiceover continued. “It modulates physical sensations, ensuring optimal performance. Pain, heat, cold—it’s all calibrated to the mission. The suit doesn’t just protect—it conditions.”

The scene transitioned to the mess hall, where C9J18 and his peers sat in silence, eating their nutrient-rich chow. The HUD overlay highlighted their calorie intake, hydration levels, and metabolic efficiency. Even here, the Contextual Priority Filter ensured no unnecessary conversation or stimuli distracted from their task.

“Nutrition is monitored in real-time, ensuring each cadet receives exactly what their body needs. The suit tracks digestion, energy expenditure, and even waste management, all while maintaining seamless integration with the wearer.”

As the day wound down, the footage shifted to the barracks. The cadets moved in synchronized silence, stepping into individual alcoves built into the walls. The HUD displayed a notification: Sleep Mode Engaged. C9J18 lay back in his suit, his visor darkening as the comms went silent.

“When the day is done, the Mark IV ensures uninterrupted rest. Fully equipped with climate control and biometric monitoring, the suit provides optimal conditions for recovery—even in full armor. The visor’s sleep mode darkens gradually, synchronizing with neural rhythms to lull the wearer into rest.”

The camera lingered on C9J18 as his breathing slowed, his face calm beneath the visor. The overlay showed his heart rate stabilizing, stress levels dropping, and compliance metrics holding steady. The AI’s gentle hum was a constant presence, even in sleep.

“From dawn to dusk, and even through the night, the Mark IV is more than just a suit—it’s a system. A revolution. A future.”

The screen cut to the Intelligence Conscript and K7L32, standing side by side, both suited up, their visors up to reveal their shaved heads and sharp, cynical smiles.

“Of course,” K7L32 said with a mocking tone, “it’s not just about the tech. It’s about the transformation. You don’t just wear the suit—you become it. Isn’t that right, sir?”

The Intelligence Conscript chuckled, his voice dripping with sardonic amusement. “That’s right, cadet. The Mark IV doesn’t just enhance—it defines. And for cadets like C9J18, it’s the beginning of a life dedicated to the Republic. Efficient. Loyal. And, best of all... compliant.”

The screen faded to black, the final words scrolling across in bold, unyielding font:

The Mark IV Armor Suit: Not Just a Tool—A Way of Life.

5 notes


I genuinely don’t understand how artists could be interested in using AI even if it’s based off only their own art style in order to optimize their work flow or speed in which they “make” art when they’re not actually drawing it themselves anymore because like.

It’s not about the final product (to me at least) at all but rather the fun in making art is the process. I love drawing every line and painting every stroke.

There’s also the excitement that comes with imagining a new piece and the challenge with getting out of your comfort zone and trying new things in order to improve and expand your skills. And achieving those goals is so fulfilling!

It’s very satisfying to look at a final piece and be happy and proud of what you have accomplished through your own efforts, but what’s the fun in taking a pre-made image and just calling it yours? Anyone can do that. There’s no uniqueness nor truth in that. It’s void of life. Just technological vomit. If I can’t make it myself then I have no interest in it.

Art is about falling in love with an idea and bringing it to life with your own hands, not the click of a button.

10 notes


Text

fundamentally you need to understand that the internet-scraping text generative AI (like ChatGPT) is not the point of the AI tech boom. the only way people are making money off that is through making nonsense articles that have great search engine optimization. essentially they make a webpage that’s worded perfectly to show up as the top result on google, which generates clicks, which generates ads. text generative ai is basically a machine that creates a host page for ad space right now.

and yeah, that sucks. but I don’t think the commercialized internet is ever going away, so here we are. tbh, I think finding information on the internet, in books, or through anything is a skill that requires critical thinking and cross checking your sources. people printed bullshit in books before the internet was ever invented. misinformation is never going away. I don’t think text generative AI is going to really change the landscape that much on misinformation because people are becoming educated about it. the text generative AI isn’t a genius supercomputer, but rather a time-saving tool to get a head start on identifying key points of information to further research.

anyway. the point of the AI tech boom is leveraging big data to improve customer relationship management (CRM) to streamline manufacturing. businesses collect a ridiculous amount of data from your internet browsing and purchases, but much of that data is stored in different places with different access points. where you make money with AI isn’t in the Wild West internet, it’s in a structured environment where you know the data it’s scraping is accurate. companies like nvidia are getting huge because along with the new computer chips, they sell a customizable ecosystem to go with them.

so let’s say you spent 10 minutes browsing a clothing retailer’s website. you navigated directly to the clothing > pants tab and filtered for black pants only. you added one pair of pants to your cart, and then spent your last minute or two browsing some shirts. you check out with just the pants, spending $40. you select standard shipping.

with AI for CRM, that company can SIGNIFICANTLY more easily analyze information about that sale. maybe the website developers see the time you spent on the site, but only the warehouse knows your shipping preferences, and sales audit knows the amount you spent, but they can’t see what color pants you bought. whereas a person would have to connect a HUGE amount of data to compile EVERY customer’s preferences across all of these things, AI can do it easily.
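as a rough sketch of what "connecting the data" means here: the snippet below merges records from three made-up departments into one profile per customer. the field names, the `customer_id` key, and the sample values are all assumptions for illustration, not any real company's schema or any vendor's product.

```python
from collections import defaultdict

# Hypothetical records held by three separate departments.
# The shared "customer_id" key is an assumption made for this sketch.
web_sessions = [
    {"customer_id": "c1", "minutes_on_site": 10, "filters": ["pants", "black"]},
]
warehouse_orders = [
    {"customer_id": "c1", "shipping": "standard"},
]
sales_audit = [
    {"customer_id": "c1", "amount_usd": 40.0},
]


def build_profiles(*sources):
    """Merge records from every source into one dict per customer."""
    profiles = defaultdict(dict)
    for source in sources:
        for record in source:
            # Copy every field except the join key into the customer's profile.
            fields = {k: v for k, v in record.items() if k != "customer_id"}
            profiles[record["customer_id"]].update(fields)
    return dict(profiles)


profiles = build_profiles(web_sessions, warehouse_orders, sales_audit)
print(profiles["c1"])
```

the merge itself is simple once every silo shares a key. the sketch skips the messier part, which is getting separately stored data to line up on one in the first place.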

this allows the company to make better broad decisions, like what clothing lines to renew, in which colors, and in what quantities. but it ALSO allows them to better customize their advertising directly to you. through your browsing, they can use AI to fill a pre-made template with products you specifically may be interested in, and email it directly to you. the money is in cutting waste through better manufacturing decisions, CRM on an individual and large advertising scale, and reducing the need for human labor to collect all this information manually.

(also, AI is great for developing new computer code. where a developer would have to trawl for hours on GitHub to find some sample code to mess with to try to solve a problem, the AI can spit out 10 possible solutions to play around with. that’s big, but not the point right now.)

so I think it’s concerning how many people are sooo focused on ChatGPT as the face of AI when it’s the least profitable thing out there rn. there is money in the CRM and the manufacturing and reduced labor. corporations WILL develop the technology for those profits. frankly I think the bigger concern is how AI will affect big data in a government ecosystem. internet surveillance is real in the sense that everything you do on the internet is stored in little bits of information across a million different places. AI will significantly impact the government’s ability to scrape and compile information across the internet without having to slog through mountains of junk data.

#which isn’t meant to like. scare you or be doomerism or whatever#but every take I’ve seen about AI on here has just been very ignorant of the actual industry#like everything is abt AI vs artists and it’s like. that’s not why they’re developing this shit#that’s not where the money is. that’s a side effect.#ai#generative ai

9 notes


Text

Do You Believe in Life After Tech? - A Critical Analysis of the Self-Optimization Focused Longevity Practices

The year is 2025. For an average human living in the territories dressed with internet cables, the day starts by grabbing the smartphone and consuming whatever the algorithm has to offer. From grocery shopping to becoming a millionaire overnight through crypto trades, everything seems possible from behind the screen. Societies are increasingly shaped by the very algorithms that dictate behaviors, tastes, and desires. From the frenzy of aesthetic surgery trends to the instantaneous viral success of products, from the commodification of reality to the proliferation of memes, we have become subjects of a culture where everything is recontextualized, reshaped, and hyper-real. Our daily lives and social habits are shaped by the algorithm we constantly labor for. The lines between the real and the simulated blur further, as Baudrillard whispers from the early days of the internet, "We live in a world where there is more and more information, and less and less meaning." (Baudrillard 1994:79). Here, meaning becomes a construct of virtuality, a mere image or simulation of the real. As our perception of reality becomes distorted in an AI-mediated fashion, whose pace of progress is beyond our perception of the pace of living, the human condition and social order are caught in a tension between the expectations of a world driven by accelerating technological advancements and the limitations of societies struggling to keep up. The contemporary condition whispers to us to either try and stay relevant or stay out of the picture. But even then, salvation is not guaranteed. In fact, nothing is guaranteed except the increasing quest for the relentless advance of an unchecked, accelerationist future.

This paper examines how contemporary accelerationist discourses of progress imply biopower and the commodification of the subject, by analyzing the longevity industry, public figures such as Bryan Johnson, and viral self-optimization trends online. Through this analysis, the paper aims to engage critically with the societal repercussions of accelerationist ideas.

#longevity#self optimisation#datafication#accelerationism#bryan johnson#nick land#don't die#blueprint

4 notes


Text

The Evolution of DJ Controllers: From Analog Beginnings to Intelligent Performance Systems

The DJ controller has undergone a remarkable transformation—what began as a basic interface for beat matching has now evolved into a powerful centerpiece of live performance technology. Over the years, the convergence of hardware precision, software intelligence, and real-time connectivity has redefined how DJs mix, manipulate, and present music to audiences.

For professional audio engineers and system designers, understanding this technological evolution is more than a history lesson—it's essential knowledge that informs how modern DJ systems are integrated into complex live environments. From early MIDI-based setups to today's AI-driven, all-in-one ecosystems, this blog explores the innovations that have shaped DJ controllers into the versatile tools they are today.

The Analog Foundation: Where It All Began

The roots of DJing lie in vinyl turntables and analog mixers. These setups emphasized feel, timing, and technique. There were no screens, no sync buttons—just rotary EQs, crossfaders, and the unmistakable tactile response of a needle on wax.

For audio engineers, these analog rigs meant clean signal paths and minimal processing latency. However, flexibility was limited, and transporting crates of vinyl to every gig was logistically demanding.

The Rise of MIDI and Digital Integration

The early 2000s brought the integration of MIDI controllers into DJ performance, marking a shift toward digital workflows. Devices like the Vestax VCI-100 and Hercules DJ Console enabled control over software like Traktor, Serato, and VirtualDJ. This introduced features such as beat syncing, cue points, and FX without losing physical interaction.

From an engineering perspective, this era introduced complexities such as USB data latency, audio driver configurations, and software-to-hardware mapping. However, it also opened the door to more compact, modular systems with immense creative potential.
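To put rough numbers on the latency point: the delay contributed by a single audio buffer is simply its length in frames divided by the sample rate. The sketch below tabulates that for a few common buffer sizes. It covers only this one term; USB transfer, driver, and converter delays add to it and vary by device, so treat the figures as a lower bound rather than a measurement of any particular controller.

```python
def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    """Delay contributed by one audio buffer, in milliseconds."""
    return buffer_frames / sample_rate_hz * 1000.0


# Common buffer sizes at a 44.1 kHz sample rate (illustrative choices).
for frames in (64, 128, 256, 512):
    print(f"{frames:>4} frames -> {buffer_latency_ms(frames, 44_100):.2f} ms")
```

Halving the buffer halves this delay but gives the host less time to fill each buffer, which is the usual trade-off behind dropouts at very small settings.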

Controllerism and Creative Freedom

Between 2010 and 2015, the concept of controllerism took hold. DJs began customizing their setups with multiple MIDI controllers, pad grids, FX units, and audio interfaces to create dynamic, live remix environments. Brands like Native Instruments, Akai, and Novation responded with feature-rich units that merged performance hardware with production workflows.

Technical advancements during this period included:

High-resolution jog wheels and pitch faders

Multi-deck software integration

RGB velocity-sensitive pads

Onboard audio interfaces with 24-bit output

HID protocol for tighter software-hardware response

These tools enabled a new breed of DJs to blur the lines between DJing, live production, and performance art—all requiring more advanced routing, monitoring, and latency optimization from audio engineers.

All-in-One Systems: Power Without the Laptop

As processors became more compact and efficient, DJ controllers began to include embedded CPUs, allowing them to function independently from computers. Products like the Pioneer XDJ-RX, Denon Prime 4, and RANE ONE revolutionized the scene by delivering laptop-free performance with powerful internal architecture.

Key engineering features included:

Multi-core processing with low-latency audio paths

High-definition touch displays with waveform visualization

Dual USB and SD card support for redundancy

Built-in Wi-Fi and Ethernet for music streaming and cloud sync

Zone routing and balanced outputs for advanced venue integration

For engineers managing live venues or touring rigs, these systems offered fewer points of failure, reduced setup times, and greater reliability under high-demand conditions.

Embedded AI and Real-Time Stem Control

One of the most significant breakthroughs in recent years has been the integration of AI-driven tools. Systems now offer real-time stem separation, powered by machine learning models that can isolate vocals, drums, bass, or instruments on the fly. Solutions like Serato Stems and Engine DJ OS have embedded this functionality directly into hardware workflows.

This allows DJs to perform spontaneous remixes and mashups without needing pre-processed tracks. From a technical standpoint, it demands powerful onboard DSP or GPU acceleration and raises the bar for system bandwidth and real-time processing.

For engineers, this means preparing systems that can handle complex source isolation and downstream processing without signal degradation or sync loss.

Cloud Connectivity & Software Ecosystem Maturity

Today’s DJ controllers are not just performance tools—they are part of a broader ecosystem that includes cloud storage, mobile app control, and wireless synchronization. Platforms like rekordbox Cloud, Dropbox Sync, and Engine Cloud allow DJs to manage libraries remotely and update sets across devices instantly.

This shift benefits engineers and production teams in several ways:

Faster changeovers between performers using synced metadata

Simplified backline configurations with minimal drive swapping

Streamlined updates, firmware management, and analytics

Improved troubleshooting through centralized data logging

The era of USB sticks and manual track loading is giving way to seamless, cloud-based workflows that reduce risk and increase efficiency in high-pressure environments.

Hybrid & Modular Workflows: The Return of Customization

While all-in-one units dominate, many professional DJs are returning to hybrid setups—custom configurations that blend traditional turntables, modular FX units, MIDI controllers, and DAW integration. This modularity supports a more performance-oriented approach, especially in experimental and genre-pushing environments.

These setups often require:

MIDI-to-CV converters for synth and modular gear integration

Advanced routing and clock sync using tools like Ableton Link

OSC (Open Sound Control) communication for custom mapping

Expanded monitoring and cueing flexibility

This renewed complexity places greater demands on engineers, who must design systems that are flexible, fail-safe, and capable of supporting unconventional performance styles.
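The tempo arithmetic behind clock sync is easy to sketch. The example below assumes the MIDI convention of 24 timing-clock pulses per quarter note and computes beat length, clock-pulse spacing, and the pitch-fader change needed to match two tempos. Ableton Link is a separate network protocol with its own API, which this sketch does not touch, and the function names are hypothetical.

```python
MIDI_CLOCKS_PER_QUARTER = 24  # MIDI timing clock resolution


def beat_ms(bpm: float) -> float:
    """Length of one quarter-note beat in milliseconds."""
    return 60_000.0 / bpm


def midi_clock_interval_ms(bpm: float) -> float:
    """Spacing between consecutive MIDI timing-clock pulses."""
    return beat_ms(bpm) / MIDI_CLOCKS_PER_QUARTER


def pitch_adjust_percent(source_bpm: float, target_bpm: float) -> float:
    """Tempo change, in percent, needed to bring source_bpm to target_bpm."""
    return (target_bpm / source_bpm - 1.0) * 100.0


print(f"beat at 120 BPM: {beat_ms(120):.1f} ms")
print(f"clock pulse at 120 BPM: {midi_clock_interval_ms(120):.2f} ms")
print(f"125 -> 128 BPM: {pitch_adjust_percent(125, 128):+.2f} %")
```

At 120 BPM the pulses arrive roughly every 21 ms, which gives a sense of how much timing jitter a hybrid rig can absorb before drift becomes audible.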

Looking Ahead: AI Mixing, Haptics & Gesture Control

As we look to the future, the next phase of DJ controllers is already taking shape. Innovations on the horizon include:

AI-assisted mixing that adapts in real time to crowd energy

Haptic feedback jog wheels that provide dynamic tactile response

Gesture-based FX triggering via infrared or wearable sensors

Augmented reality interfaces for 3D waveform manipulation

Deeper integration with lighting and visual systems through DMX and timecode sync

For engineers, this means staying ahead of emerging protocols and preparing venues for more immersive, synchronized, and responsive performances.

Final Thoughts

The modern DJ controller is no longer just a mixing tool—it's a self-contained creative engine, central to the live music experience. Understanding its capabilities and the technology driving it is critical for audio engineers who are expected to deliver seamless, high-impact performances in every environment.

Whether you’re building a club system, managing a tour rig, or outfitting a studio, choosing the right gear is key. Sourcing equipment from a trusted professional audio retailer—online or in-store—ensures not only access to cutting-edge products but also expert guidance, technical support, and long-term reliability.

As DJ technology continues to evolve, so too must the systems that support it. The future is fast, intelligent, and immersive—and it’s powered by the gear we choose today.

2 notes


Text

Why Choose AI Content Creation for Your Content Strategy?

In the ever-evolving digital world, content is king. AI Content Creation is rapidly transforming how we produce and manage that content. But creating consistent, engaging, and high-quality content takes time, creativity, and resources—something many individuals and businesses struggle to balance. Thankfully, the rise of artificial intelligence has revolutionized the creative world, offering innovative solutions to long-standing content challenges. Let’s explore why using AI for content creation is becoming essential for anyone aiming to stay competitive and relevant.

What Makes AI a Valuable Tool for Content Creators?

At its core, AI is designed to augment human capabilities. Instead of replacing writers, marketers, or designers, it acts as a powerful assistant. By analyzing vast amounts of data, AI can identify trending topics, suggest headlines, and even draft initial versions of articles or social media posts. This significantly reduces the time and effort involved in the early stages of content production. With AI handling repetitive or research-heavy tasks, creators can focus more on refining ideas and adding their unique voices.

Smarter Personalization

Today’s audiences demand content that aligns closely with their personal interests, needs, and online behavior. AI can analyze user data—such as browsing habits, location, and engagement history—to help you craft personalized messages that resonate more deeply. Whether it’s a customized email subject line or a dynamic landing page, AI ensures the right message reaches the right person at the right time.

How Does AI Improve Efficiency in Content Production?

One of the biggest challenges in content creation is the pressure to produce regularly without compromising quality. AI tools accelerate the process by generating drafts, summarizing information, and optimizing language for readability and SEO. This allows content teams to meet tight deadlines and scale their output without needing to hire additional staff. The automation of routine tasks like grammar checking or keyword placement also frees creators from tedious work, improving overall productivity.

Speed and Efficiency

One of the most immediate benefits of using artificial intelligence in content production is the significant boost in productivity. Traditional content workflows—research, ideation, drafting, and editing—can be time-consuming. AI tools can generate outlines, suggest headlines, write paragraphs, and even correct grammar in seconds. This allows creators to produce more content in less time, without sacrificing quality.

Imagine being able to create multiple blog posts, product descriptions, or social media updates in a fraction of the time it used to take. For businesses, this means faster campaign rollouts and the ability to respond quickly to trending topics or customer needs.

Does AI Limit Creativity or Enhance It?

There’s a misconception that relying on machines might stifle creativity. In truth, AI technologies frequently serve as a spark that ignites and enhances creative thinking. By handling mundane or technical aspects, they give creators more mental space to experiment and innovate. Some AI platforms suggest alternative angles or generate prompts that inspire new ideas. This collaborative process between human insight and machine intelligence can produce richer, more original content than either could achieve alone.

Boosting Creativity

Rather than substituting for human imagination, AI often amplifies and supports the creative process. By handling repetitive or technical tasks, AI frees up creators to focus on strategy, storytelling, and innovation. It can suggest fresh angles, explore alternative headlines, and even simulate different audience responses. This collaborative dynamic between humans and machines leads to richer, more inventive content. In this way, AI content creation becomes a partnership—where AI offers the structure and insights, while humans bring nuance, emotion, and originality.

How Does AI Maintain Brand Consistency Across Channels?

Consistency in tone, style, and messaging is critical for building trust and recognition. Managing this across multiple platforms can be complex, especially for larger teams or brands with a broad presence. AI can be trained on brand guidelines and past content to ensure new material aligns perfectly with the desired voice. This guarantees a unified brand identity whether the message appears in blogs, newsletters, social media, or advertisements.

Consistency Across Channels

Maintaining a consistent voice, tone, and style across multiple content channels—blogs, emails, websites, and social media—can be challenging. AI tools can be trained to adhere to your brand guidelines, ensuring that all output reflects your unique identity. This is particularly useful for companies managing large content volumes or collaborating with multiple creators.

Consistency builds trust and brand recognition. When your audience receives clear, cohesive messaging no matter where they interact with you, your brand becomes more memorable and credible. You can also watch: Meet AdsGPT’s Addie| Smarter Ad Copy Creation In Seconds


Final Thoughts: Why Embrace AI in Your Content Workflow?

Artificial intelligence is not just a passing trend—it’s transforming how content is created and consumed. From speeding up production and enhancing personalization to providing actionable insights and boosting creativity, AI content creation tools help meet the growing demands of digital audiences. While human creativity remains irreplaceable, AI is proving to be an indispensable partner that elevates quality and efficiency.

#ai#social media#social media seo#social media strategy#social media growth#social media manager#Youtube

2 notes


Text

Comprehensive Industrial Solutions by AxisValence: Advancing Productivity, Safety, and Efficiency

In today’s fast-paced manufacturing world, industrial productivity is driven by precision, consistency, safety, and compliance. Whether it’s printing, packaging, converting, textiles, plastics, or pharmaceuticals—modern production lines demand advanced electro-mechanical systems that minimize waste, ensure operational safety, and improve overall efficiency.

AxisValence, a business unit of A.T.E. India, addresses this demand with a complete range of industrial automation and enhancement products. From static elimination to print quality assurance, ink management, and solvent recovery, AxisValence solutions are engineered to optimize each critical point in the production cycle.

This article provides an overview of the key technologies and systems offered by AxisValence across its diverse portfolio:

Electrostatics: Managing Static for Quality and Safety

Electrostatics can compromise product quality, disrupt operations, and pose serious safety hazards, especially in high-speed processes involving films, paper, textiles, or volatile solvents. AxisValence offers a complete suite of static control solutions:

ATEX AC Static Eliminators: Certified for use in explosive or solvent-heavy environments such as rotogravure or flexo printing lines.

AC and DC Static Eliminators: Designed for long-range or close-range static charge neutralization across a range of substrates.

Passive Static Dischargers: Cost-effective, maintenance-free brushes for light-duty static elimination where power isn't available.

Air-based Static Eliminators / Ionisers: Use ionized air streams for dust blow-off and static removal, ideal for hard-to-reach areas.

Static Measurement & Online Monitoring: Includes handheld meters and IoT-enabled monitoring systems for real-time control and diagnostics.

Electrostatic Charging Systems: Generate controlled static charges for bonding or pinning applications in laminating or packaging lines.

Electrostatic Print Assist (ESA): Enhances ink transfer in rotogravure printing by improving ink pickup and registration.

Camera-Based Web Videos for Print Viewing: Real-Time Visual Inspection

High-speed printing applications require instant visibility into print quality. AxisValence’s ViewAXIS systems are high-performance, camera-based web viewing solutions:

ViewAXIS Mega: Entry-level system with high-resolution imaging for real-time visual inspection.

ViewAXIS Giga: Equipped with 14x optical zoom and X-ray vision for deeper inspection of layered prints.

ViewAXIS Tera: Full repeat system with a 55” display, allowing operators to monitor and inspect the complete print layout in real-time.

Camera-based web viewing systems help identify print errors such as registration issues, smudging, or color inconsistencies early in the production run, minimizing rework and improving efficiency.

100% Inspection Systems: Intelligent Defect Detection

Modern converters and packaging companies require automated systems that can identify microscopic flaws at high speeds. AxisValence’s DetectAXIS systems use AI-based image processing and line scan cameras for 100% inspection:

DetectAXIS Print: Identifies printing defects such as streaks, misregistration, color deviation, and missing text at speeds up to 750 m/min.

DetectAXIS Surface: Designed for detecting surface anomalies—scratches, gels, holes, fish-eyes—on films, textiles, and nonwovens.

Real-time alerts, digital roll-maps, and adaptive detection improve quality control while reducing material waste and production downtime.
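To give a sense of what inspection at 750 m/min implies for a line scan camera, the sketch below works out the scan rate needed to resolve a given length of web per scanned line. The 0.1 mm per-line resolution is an assumed figure chosen for illustration, not a DetectAXIS specification, and the function name is hypothetical.

```python
def required_line_rate_hz(web_speed_m_per_min: float, line_pitch_mm: float) -> float:
    """Lines per second needed so each scanned line covers line_pitch_mm of web."""
    speed_mm_per_s = web_speed_m_per_min * 1000.0 / 60.0
    return speed_mm_per_s / line_pitch_mm


# 750 m/min comes from the text above; 0.1 mm per line is an assumption.
rate = required_line_rate_hz(750, 0.1)
print(f"{rate:,.0f} lines per second")
```

Under that assumption the camera has to deliver on the order of 125,000 lines per second, which is why the data rate and processing load rise so quickly as the target defect size shrinks.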

Ink Handling Systems: Consistent Ink Quality and Reduced Waste

Stable ink flow and temperature directly impact print quality and solvent consumption. AxisValence’s Valflow range ensures optimal ink conditioning through:

Ink Filters: Eliminate contaminants like metallic particles, fibers, and dried pigments that can damage cylinders or cause print defects.

Ink Pumps & Tanks: Efficient centrifugal pumps and round stainless-steel tanks designed for continuous ink circulation and minimal ink residue.

Ink Temperature Stabilisers (ITS): Automatically control ink temperature to prevent viscosity drift and reduce solvent evaporation—delivering consistent print shade and odor-free operation.

Valflow ink handling solutions are ideal for gravure and flexographic printing applications.

Print Register Control Systems: Precision Alignment in Every Print

Maintaining precise print registration is critical in multi-color printing processes. AxisValence offers two specialized systems:

AlygnAXIS: For rotogravure presses, using fiber optic sensors and adaptive algorithms to deliver real-time register accuracy.

UniAXIS: A versatile controller for print-to-mark, coat-to-mark, and cut-to-mark applications—both inline and offline.

These controllers reduce waste, enhance print alignment, and speed up setup during job changes.

Safety and Heat Recovery Systems: Energy Efficiency and Explosion Prevention

Solvent-based processes require strict monitoring of air quality and heat management to meet compliance and reduce operational costs. AxisValence provides:

NIRA Residual Solvent Analyser: Lab-based gas chromatography system for quick analysis of residual solvents in films.

Air-to-Air Heat Exchangers (Lamiflow): Recover and reuse waste heat from drying processes—improving energy efficiency.

LEL Monitoring and Recirculation Systems: Ensure solvent vapor concentrations stay within safe limits in enclosed dryers using flame ionization or infrared detection.

Together, these safety and heat recovery systems ensure both environmental safety and process optimization.
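The quantity an LEL monitor tracks can be sketched as a simple ratio: measured vapor concentration divided by the solvent's lower explosive limit. In the example below, the 2.0 % LEL value and the 25 % and 50 % action thresholds are placeholders chosen for illustration; real values depend on the solvent and on the applicable safety standard, and none of this describes how the AxisValence system itself is configured.

```python
def percent_of_lel(concentration_vol_pct: float, lel_vol_pct: float) -> float:
    """Vapor concentration expressed as a percentage of the lower explosive limit."""
    return concentration_vol_pct / lel_vol_pct * 100.0


def classify(pct_lel: float, alarm_at: float = 25.0, shutdown_at: float = 50.0) -> str:
    """Map a %LEL reading to an action; thresholds are illustrative assumptions."""
    if pct_lel >= shutdown_at:
        return "shutdown"
    if pct_lel >= alarm_at:
        return "alarm"
    return "normal"


ASSUMED_LEL_VOL_PCT = 2.0  # placeholder; look up the actual LEL for the solvent in use

for reading in (0.2, 0.6, 1.2):  # hypothetical dryer readings in vol %
    pct = percent_of_lel(reading, ASSUMED_LEL_VOL_PCT)
    print(f"{reading} vol % -> {pct:.0f} % LEL -> {classify(pct)}")
```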

Surface Cleaning Systems: Contaminant-Free Production Lines

Particulate contamination can ruin coating, lamination, and printing jobs. AxisValence offers contactless surface cleaning systems that combine airflow and static control:

Non-Contact Web Cleaners: Use air curtains and vacuum to remove dust from moving substrates without physical contact.

Ionising Air Knives: High-velocity ionized air streams neutralize static and clean surfaces entering finishing zones.

Ionising Air Blowers: Cover larger surfaces with ionized air to eliminate static and debris.

Ionising Nozzles & Guns: Handheld or fixed, these tools offer targeted static and dust elimination at workstations.

Waste Solvent Recovery: Sustainable Ink and Solvent Reuse

Reducing solvent consumption and improving environmental compliance is critical for modern converters. AxisValence partners with IRAC (Italy) to offer:

Solvent Distillation Systems: Recover usable solvents from spent ink mixtures, reducing hazardous waste and cutting costs.

Parts Washers: Clean anilox rolls, gravure cylinders, and components through high-pressure, ultrasonic, or brush-based systems.

Waste solvent recovery systems offer a quick ROI and support zero-waste manufacturing goals.

Why Choose AxisValence?

AxisValence combines decades of industrial expertise with innovative product design to deliver reliable, safe, and efficient solutions for manufacturing processes. With a product portfolio spanning:

Electrostatics & Static Control Systems

Web Viewing & Print Inspection Solutions

Ink Handling and Conditioning Equipment

Register Control and Print Automation

Heat Recovery and Air Quality Monitoring

Surface Cleaning Technologies

Waste Solvent Management

…AxisValence serves diverse industries including printing, packaging, plastic and rubber, textile, pharma, and automotive.

From single-device retrofits to complete system integration, AxisValence enables manufacturers to improve output quality, reduce waste, meet safety norms, and gain a competitive edge.

Explore our full product range at www.axisvalence.com or contact our sales network for a customized consultation tailored to your industrial needs.


2 notes


Text

Top Tools to Craft Killer Facebook Ad Copy – Our Favorite 5

Creating compelling Facebook ad copy can be a daunting task, especially when you're trying to stand out in a crowded newsfeed. Whether you're promoting a product, a service, or trying to grow your brand, your message needs to be concise, persuasive, and aligned with your target audience's interests. That's where a Facebook ad copy generator becomes a game-changer. Below, we highlight five of our favorite tools that can help you craft high-converting Facebook ads quickly and efficiently. These tools save time and allow you to experiment with various ad styles and tones, ensuring your content resonates with your audience. Additionally, they help streamline your marketing process, enabling you to focus on other key aspects of your campaign.

1. AdsGPT

AdsGPT is an AI-powered Facebook ad copy generator that helps marketers, entrepreneurs, and agencies create optimized ad content in seconds. Designed specifically for Facebook ads, AdsGPT uses machine learning to generate copy tailored to your business goals and audience. Whether you need attention-grabbing headlines or persuasive calls-to-action, this tool adapts to your tone and style, helping you increase engagement and conversions. Its intuitive interface and customizable templates make it a favorite among digital marketers.

2. Copy.ai

Copy.ai is a versatile AI writing assistant that offers a dedicated Facebook ad copy generator among its many features. With just a few inputs about your product or service, Copy.ai can produce multiple ad copy variants in seconds. It's especially useful for brainstorming ad ideas and testing different messaging angles. From short punchy lines to longer value-driven descriptions, Copy.ai helps you maintain creativity while saving time.

3. Jasper (formerly Jarvis)

Jasper is a widely known AI writing platform that excels at generating high-quality marketing content, including Facebook ads. Using its "PAS" (Problem-Agitate-Solution) and "AIDA" (Attention-Interest-Desire-Action) frameworks, Jasper creates emotionally resonant and persuasive copy that aligns with sales psychology principles. Its Facebook ad copy generator can be fine-tuned with tone and style preferences, making it perfect for brands with a distinct voice.

4. Writesonic

Writesonic is another powerful AI content tool with a feature-rich Facebook ad copy generator. This ad creation platform allows users to generate tailored ad content for different campaign goals, whether it's traffic, conversions, or lead generation. Writesonic supports multiple languages and tones, making it ideal for global brands. Its dynamic interface and the ability to compare several copy versions at once make it an excellent choice for A/B testing.
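Since A/B testing comes up here, it may help to see how two ad variants can be compared once the results are in. The sketch below is a standard two-proportion z-test on click-through rates, written with only the Python standard library. It is not a feature of Writesonic or any other tool listed, and the click and impression counts are made up.

```python
import math


def two_proportion_z_test(clicks_a: int, views_a: int, clicks_b: int, views_b: int):
    """Return (z, two-sided p-value) for the difference in click-through rate."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: variant A got 50 clicks, variant B got 80, from 1,000 views each.
z, p = two_proportion_z_test(50, 1000, 80, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the gap between the two variants is unlikely to be chance alone. With only a handful of clicks per variant the test has little power, so it is worth letting a campaign run before picking a winner.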

5. Anyword

Anyword leverages predictive analytics to help you create Facebook ads that convert. Its standout feature is performance prediction, where each generated copy variant is given a score based on its potential effectiveness. This data-driven approach allows marketers to select the most impactful message before launching a campaign. The Facebook ad copy generator in Anyword is designed to help you communicate value clearly and convincingly, improving your ROI with every ad.

Why Use a Facebook Ad Copy Generator?

Using a Facebook ad copy generator not only saves time but also enhances creativity and ensures consistency in your messaging. These tools often come equipped with best-practice templates, AI-driven insights, and optimization features that are difficult to replicate manually. Whether you're a solo entrepreneur or managing campaigns for multiple clients, having a reliable ad copy generator at your disposal can dramatically improve your productivity and results.

You can also watch: Meet AdsGPT’s Addie | Smarter Ad Copy Creation In Seconds

Final Thoughts

With Facebook ads becoming more competitive, having an edge in your ad copy is essential. Each of the tools mentioned above (AdsGPT, Copy.ai, Jasper, Writesonic, and Anyword) offers unique strengths tailored to different needs. Experiment with a few and see which one aligns best with your brand's voice and marketing goals. By incorporating a powerful Facebook ad copy generator into your toolkit, you'll be better equipped to capture attention, drive engagement, and ultimately boost your bottom line.

Text

Smart Switchgear in 2025: What Electrical Engineers Need to Know

In the fast-evolving world of electrical infrastructure, smart switchgear is no longer a futuristic concept — it’s the new standard. As we move through 2025, the integration of intelligent systems into traditional switchgear is redefining how engineers design, monitor, and maintain power distribution networks.

This shift is particularly crucial for electrical engineers, who are at the heart of innovation in sectors like manufacturing, utilities, data centers, commercial construction, and renewable energy.

In this article, we’ll break down what smart switchgear means in 2025, the technologies behind it, its benefits, and what every electrical engineer should keep in mind.

What is Smart Switchgear?

Smart switchgear refers to traditional switchgear (devices used for controlling, protecting, and isolating electrical equipment) enhanced with digital technologies, sensors, and communication modules that allow:

Real-time monitoring

Predictive maintenance

Remote operation and control

Data-driven diagnostics and performance analytics

This transformation is powered by IoT (Internet of Things), AI, cloud computing, and edge devices, which work together to improve reliability, safety, and efficiency in electrical networks.

Key Innovations in Smart Switchgear (2025 Edition)

1. IoT Integration

Smart switchgear is equipped with intelligent sensors that collect data on temperature, current, voltage, humidity, and insulation condition. These sensors communicate wirelessly with central systems to provide real-time status and alerts.

2. AI-Based Predictive Maintenance

Instead of traditional scheduled inspections, AI algorithms can now predict component failure based on usage trends and environmental data. This helps avoid downtime and reduces maintenance costs.
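As a rough illustration of predicting failure from usage trends, the sketch below fits a straight line to recent temperature readings and extrapolates to a limit. Plain linear trend extrapolation stands in here for the AI models the post refers to, which are typically far richer. The function name, the readings, and the 80 °C limit are all assumptions made for the example.

```python
from typing import Optional, Sequence


def hours_until_limit(
    hours: Sequence[float],
    temps_c: Sequence[float],
    limit_c: float,
) -> Optional[float]:
    """Fit a least-squares line to temperature readings and extrapolate to limit_c.

    Returns the hours remaining after the last reading, 0.0 if the last
    reading is already at or above the limit, or None if the trend is
    flat or falling (no crossing predicted).
    """
    n = len(hours)
    if n < 2 or n != len(temps_c):
        raise ValueError("need at least two paired readings")

    mean_h = sum(hours) / n
    mean_t = sum(temps_c) / n
    sxx = sum((h - mean_h) ** 2 for h in hours)
    if sxx == 0:
        raise ValueError("readings must span more than one timestamp")
    sxy = sum((h - mean_h) * (t - mean_t) for h, t in zip(hours, temps_c))

    slope = sxy / sxx                    # degrees C per hour
    intercept = mean_t - slope * mean_h

    if temps_c[-1] >= limit_c:
        return 0.0
    if slope <= 0:
        return None
    crossing_hour = (limit_c - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


# Hypothetical busbar joint warming by 2 degrees C per hour.
remaining = hours_until_limit([0, 1, 2, 3], [60.0, 62.0, 64.0, 66.0], limit_c=80.0)
print(remaining)
```

A maintenance system might schedule an inspection when the estimate drops below some planning horizon, rather than waiting for the next fixed-interval visit.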

3. Cloud Connectivity

Cloud platforms allow engineers to remotely access switchgear data from any location. With user-friendly dashboards, they can visualize key metrics, monitor health conditions, and set thresholds for automated alerts.
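Threshold-based alerting of the kind described above can be sketched in a few lines. This is a minimal example, not any vendor's API: the `Reading` type, the metric names, and the limit values are hypothetical, and real limits would come from the equipment ratings.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Reading:
    panel_id: str
    metric: str
    value: float


# Hypothetical limits as (low, high); real values come from equipment ratings.
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "temperature_c": (-5.0, 85.0),
    "current_a": (0.0, 630.0),
    "humidity_pct": (0.0, 80.0),
}


def check_reading(reading: Reading) -> List[str]:
    """Return alert messages for a reading outside its configured limits."""
    limits = THRESHOLDS.get(reading.metric)
    if limits is None:
        return []  # no threshold configured for this metric
    low, high = limits
    if reading.value > high:
        return [f"{reading.panel_id}: {reading.metric}={reading.value} above {high}"]
    if reading.value < low:
        return [f"{reading.panel_id}: {reading.metric}={reading.value} below {low}"]
    return []


print(check_reading(Reading("MV-01", "temperature_c", 92.5)))
```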

4. Cybersecurity Enhancements

As devices get connected to networks, cybersecurity becomes crucial. In 2025, smart switchgear is embedded with secure communication protocols, access control layers, and encrypted data streams to prevent unauthorized access.
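One building block of secure communication is message authentication, which lets the receiver detect tampered or forged telemetry. The sketch below signs a reading with HMAC-SHA256 from Python's standard library. It covers integrity and authenticity only; encryption of the data stream would normally be handled separately, for example by TLS. The payload fields and the shared key are assumptions for illustration.

```python
import hashlib
import hmac
import json


def sign_message(payload: dict, key: bytes) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "hmac": tag}


def verify_message(message: dict, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["hmac"])


# Hypothetical shared key; a real deployment would keep it in secure storage.
KEY = b"example-shared-secret"

msg = sign_message({"panel_id": "MV-01", "current_a": 412.5}, KEY)
print(verify_message(msg, KEY))  # genuine message

tampered = dict(msg, body=msg["body"].replace("412.5", "12.5"))
print(verify_message(tampered, KEY))  # altered in transit
```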

5. Digital Twin Technology

Some manufacturers now offer a digital twin of the switchgear — a virtual replica that updates in real-time. Engineers can simulate fault conditions, test load responses, and plan future expansions without touching the physical system.

Benefits for Electrical Engineers

1. Operational Efficiency

Smart switchgear reduces manual inspections and allows remote diagnostics, leading to faster response times and reduced human error.

2. Enhanced Safety

Early detection of overload, arc flash risks, or abnormal temperatures enhances on-site safety, especially in high-voltage environments.

3. Data-Driven Decisions

Real-time analytics help engineers understand load patterns and optimize distribution for efficiency and cost savings.
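A simple starting point for understanding load patterns is the load factor, the ratio of average load to peak load over a period. A low value suggests short peaks that may be worth shifting or shaving. The sketch below computes it from a list of interval readings; the function name and the sample values are made up for the example.

```python
from typing import Dict, Sequence


def load_profile_summary(load_kw: Sequence[float]) -> Dict[str, float]:
    """Summarize a series of equal-interval load readings in kW."""
    if not load_kw:
        raise ValueError("load_kw must not be empty")
    peak = max(load_kw)
    average = sum(load_kw) / len(load_kw)
    # Load factor = average load / peak load (defined as 0.0 for an all-zero profile).
    load_factor = average / peak if peak > 0 else 0.0
    return {"peak_kw": peak, "average_kw": average, "load_factor": load_factor}


# Hypothetical readings from one feeder.
summary = load_profile_summary([100.0, 200.0, 400.0, 300.0])
print(summary)
```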

4. Seamless Scalability

Modular smart systems allow for quick expansion of power infrastructure, particularly useful in growing industrial or smart city projects.

Applications Across Industries

Manufacturing Plants — Monitor energy use per production line

Data Centers — Ensure uninterrupted uptime and cooling load balance

Commercial Buildings — Integrate with BMS (Building Management Systems)

Renewable Energy Projects — Balance grid load from solar or wind sources

Oil & Gas Facilities — Improve safety and compliance through monitoring

What Engineers Need to Know Moving Forward

1. Stay Updated with IEC & IEEE Standards

Smart switchgear must comply with global standards. Engineers need to be familiar with updates to IEC 62271, IEC 61850, and the IEEE C37 series.

2. Learn Communication Protocols

Proficiency in Modbus, DNP3, IEC 61850, and OPC UA is essential to integrating and troubleshooting intelligent systems.
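To give a feel for what working at the protocol level involves, here is a minimal sketch that builds a Modbus TCP "Read Holding Registers" request (function code 0x03) by hand. The frame layout follows the Modbus specification; the function name, unit ID, and register address are illustrative, and in practice most engineers would use an existing Modbus library rather than packing bytes themselves.

```python
import struct


def build_read_holding_registers(
    transaction_id: int,
    unit_id: int,
    start_address: int,
    quantity: int,
) -> bytes:
    """Build a Modbus TCP request frame for function 0x03."""
    if not 1 <= quantity <= 125:
        raise ValueError("quantity must be between 1 and 125 registers")

    function_code = 0x03
    # PDU: function code (1 byte), start address (2 bytes), quantity (2 bytes), big-endian.
    pdu = struct.pack(">BHH", function_code, start_address, quantity)
    # MBAP header: transaction id, protocol id (0 = Modbus),
    # length of what follows (unit id + PDU), unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


frame = build_read_holding_registers(transaction_id=1, unit_id=1, start_address=0, quantity=2)
print(frame.hex())  # 000100000006010300000002
```

Reading the hex output field by field (transaction ID, protocol ID, length, unit ID, function code, address, quantity) is a useful habit when troubleshooting captured traffic.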

3. Understand Lifecycle Costing

Smart switchgear might have a higher upfront cost but offers significant savings in maintenance, energy efficiency, and downtime over its lifespan.
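The trade-off can be made concrete with a simple, undiscounted comparison. Every figure below is hypothetical and chosen only to show the arithmetic; a real study would use quoted prices and measured costs, and would usually discount future cash flows.

```python
from typing import Optional


def lifecycle_cost(
    upfront: float,
    annual_maintenance: float,
    annual_downtime: float,
    years: int,
) -> float:
    """Total undiscounted cost of ownership over the given number of years."""
    return upfront + years * (annual_maintenance + annual_downtime)


def breakeven_years(
    conventional_upfront: float,
    conventional_annual: float,
    smart_upfront: float,
    smart_annual: float,
) -> Optional[float]:
    """Years until the smart option's annual savings repay its extra upfront cost."""
    annual_saving = conventional_annual - smart_annual
    if annual_saving <= 0:
        return None  # the smart option never catches up
    return max(0.0, (smart_upfront - conventional_upfront) / annual_saving)


# Hypothetical figures for one installation over 20 years.
conventional = lifecycle_cost(100_000, 8_000, 6_000, years=20)
smart = lifecycle_cost(140_000, 4_000, 2_000, years=20)
print(conventional, smart)
print(breakeven_years(100_000, 14_000, 140_000, 6_000))
```

With these made-up numbers the higher upfront cost is recovered in five years, and the smart option is cheaper over the full period.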

4. Collaborate with IT Teams

The line between electrical and IT is blurring. Engineers should work closely with cybersecurity and cloud teams for seamless, secure integration.

Conclusion

Smart switchgear is reshaping the way electrical systems are built and managed in 2025. For electrical engineers, embracing this innovation isn’t just an option — it’s a career necessity.

At Blitz Bahrain, we specialize in providing cutting-edge switchgear solutions built for the smart, digital future. Whether you’re an engineer designing the next big project or a facility manager looking to upgrade existing systems, we’re here to power your progress.

#switchgear#panel#manufacturer#bahrain25#electrical supplies#electrical equipment#electrical engineers#electrical
