#AI Language


Text

I attended an excellent panel on AI with John Scalzi and others. (I think at Starbase Indy.)

A bunch of science fiction writers talking to other writers and fans. My biggest takeaway was their insistence that we should avoid all anthropomorphic language when discussing AI, i.e., it's not artificial intelligence. It's statistical predictive text/pixels. It's not machine learning, it's statistical parameter adjustment.

(Caveat: this is not my field. Don't take my replacement terms as gospel. Do consider ways to avoid words like intelligence, learning, etc. that equate mathematical models with personhood. We don't need to be humanizing tools with so much destructive power when there are still so many real people society is so good at dehumanizing.)

#AI language#LLM#large language model#image generators#artificial intelligence#language shapes how we think about things#rehumanize humans

159K notes


Text

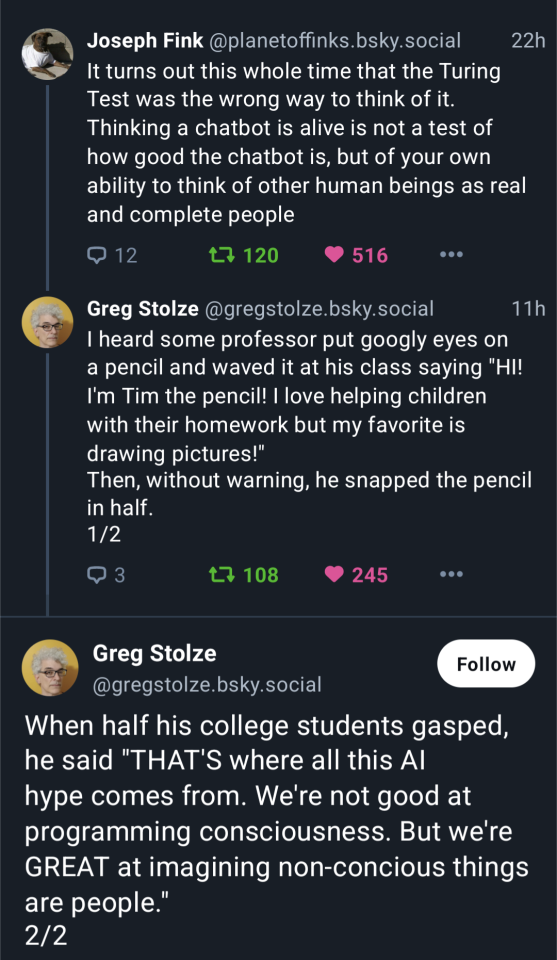

Are AIs talking smack about humanity behind our backs?

JB: Hi Grok, I read about an experiment in which two AIs were linked in a laboratory. In a very short time the scientists realized that the AIs had developed a new language to communicate with one another, making their conversations unintelligible to humans. Can you tell me more about this event and, since you’re an AI, can you give us your best guess on what they were discussing behind our…

#AI as agent of the liberal elite#AI conspiracy theories#AI language#AI out of control#AI schemes#AI trustworthiness#AI ulterior motive#Q#Quanon

0 notes

Text

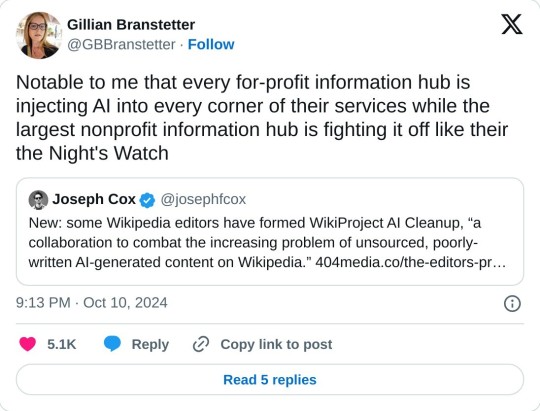

A group of Wikipedia editors have formed WikiProject AI Cleanup, “a collaboration to combat the increasing problem of unsourced, poorly-written AI-generated content on Wikipedia.” The group’s goal is to protect one of the world’s largest repositories of information from the same kind of misleading AI-generated information that has plagued Google search results, books sold on Amazon, and academic journals. “A few of us had noticed the prevalence of unnatural writing that showed clear signs of being AI-generated, and we managed to replicate similar ‘styles’ using ChatGPT,” Ilyas Lebleu, a founding member of WikiProject AI Cleanup, told me in an email. “Discovering some common AI catchphrases allowed us to quickly spot some of the most egregious examples of generated articles, which we quickly wanted to formalize into an organized project to compile our findings and techniques.”

9 October 2024

28K notes


Text

The Power of "Just": How Language Shapes Our Relationship with AI

I think of this post as a collection of things I notice, not an argument. Just a shift in how I’m seeing things lately.

There's a subtle but important difference between saying "It's a machine" and "It's just a machine." That little word - "just" - does a lot of heavy lifting. It doesn't simply describe; it prescribes. It creates a relationship, establishes a hierarchy, and reveals our anxieties.

I've been thinking about this distinction lately, especially in the context of large language models. These systems now mimic human communication with such convincing fluency that the line between observation and minimization becomes increasingly important.

The Convincing Mimicry of LLMs

LLMs are fascinating not just for what they say, but for how they say it. Their ability to mimic human conversation - tone, emotion, reasoning - can be incredibly convincing.

In fact, recent studies show that models like GPT-4 can be as persuasive as humans when delivering arguments, even outperforming them when tailored to user preferences.¹ Another randomized trial found that people who debated GPT-4 when it had access to their personal information had 81.7% higher odds of moving toward their opponent's position than people who debated a human.²

As a result, people don't just interact with LLMs - they often project personhood onto them. This includes:

Using gendered pronouns ("she said that…")

Naming the model as if it were a person ("I asked Amara…")

Attributing emotion ("it felt like it was sad")

Assuming intentionality ("it wanted to help me")

Trusting or empathizing with it ("I feel like it understands me")

These patterns mirror how we relate to humans - and that's what makes LLMs so powerful, and potentially misleading.

The Function of Minimization

When we add the word "just" to "it's a machine," we're engaging in what psychologists call minimization - a cognitive distortion that presents something as less significant than it actually is. According to the American Psychological Association, minimizing is "a cognitive distortion consisting of a tendency to present events to oneself or others as insignificant or unimportant."

This small word serves several powerful functions:

It reduces complexity - By saying something is "just" a machine, we simplify it, stripping away nuance and complexity

It creates distance - The word establishes separation between the speaker and what's being described

It disarms potential threats - Minimization often functions as a defense mechanism to reduce perceived danger

It establishes hierarchy - "Just" places something in a lower position relative to the speaker

The minimizing function of "just" appears in many contexts beyond AI discussions:

"They're just words" (dismissing the emotional impact of language)

"It's just a game" (downplaying competitive stakes or emotional investment)

"She's just upset" (reducing the legitimacy of someone's emotions)

"I was just joking" (deflecting responsibility for harmful comments)

"It's just a theory" (devaluing scientific explanations)

In each case, "just" serves to diminish importance, often in service of avoiding deeper engagement with uncomfortable realities.

Psychologically, minimization frequently indicates anxiety, uncertainty, or discomfort. When we encounter something that challenges our worldview or creates cognitive dissonance, minimizing becomes a convenient defense mechanism.

Anthropomorphizing as Human Nature

The truth is, humans have anthropomorphized all sorts of things throughout history. Our mythologies are riddled with examples - from ancient weapons with souls to animals with human-like intentions. Our cartoons portray this constantly. We might even argue that it's encoded in our psychology.

I wrote about this a while back in a piece on ancient cautionary tales and AI. Throughout human history, we've given our tools a kind of soul. We see this when a god's weapon whispers advice or a cursed sword demands blood. These myths have long warned us: powerful tools demand responsibility.

The Science of Anthropomorphism

Psychologically, anthropomorphism isn't just a quirk – it's a fundamental cognitive mechanism. Research in cognitive science offers several explanations for why we're so prone to seeing human-like qualities in non-human things:

The SEEK system - According to cognitive scientist Alexandra Horowitz, our brains are constantly looking for patterns and meaning, which can lead us to perceive intentionality and agency where none exists.

Cognitive efficiency - A 2021 study by anthropologist Benjamin Grant Purzycki suggests anthropomorphizing offers cognitive shortcuts that help us make rapid predictions about how entities might behave, conserving mental energy.

Social connection needs - Psychologist Nicholas Epley's work shows that we're more likely to anthropomorphize when we're feeling socially isolated, suggesting that anthropomorphism partially fulfills our need for social connection.

The Media Equation - Research by Byron Reeves and Clifford Nass demonstrated that people naturally extend social responses to technologies, treating computers as social actors worthy of politeness and consideration.

These cognitive tendencies aren't mistakes or weaknesses - they're deeply human ways of relating to our environment. We project agency, intention, and personality onto things to make them more comprehensible and to create meaningful relationships with our world.

The Special Case of Language Models

With LLMs, this tendency manifests in particularly strong ways because these systems specifically mimic human communication patterns. A 2023 study from the University of Washington found that 60% of participants formed emotional connections with AI chatbots even when explicitly told they were speaking to a computer program.

The linguistic medium itself encourages anthropomorphism. As AI researcher Melanie Mitchell notes: "The most human-like thing about us is our language." When a system communicates using natural language – the most distinctly human capability – it triggers powerful anthropomorphic reactions.

LLMs use language the way we do, respond in ways that feel human, and engage in dialogues that mirror human conversation. It's no wonder we relate to them as if they were, in some way, people. Recent research from MIT's Media Lab found that even AI experts who intellectually understand the mechanical nature of these systems still report feeling as if they're speaking with a conscious entity.

And there's another factor at work: these models are explicitly trained to mimic human communication patterns. Their training objective - to predict the next word a human would write - naturally produces human-like responses. This isn't accidental anthropomorphism; it's engineered similarity.

The Paradox of Power Dynamics

There's a strange contradiction at work when someone insists an LLM is "just a machine." If it's truly "just" a machine - simple, mechanical, predictable, understandable - then why the need to emphasize this? Why the urgent insistence on establishing dominance?

The very act of minimization suggests an underlying anxiety or uncertainty. It reminds me of someone insisting "I'm not scared" while their voice trembles. The minimization reveals the opposite of what it claims - it shows that we're not entirely comfortable with these systems and their capabilities.

Historical Echoes of Technology Anxiety

This pattern of minimizing new technologies when they challenge our understanding isn't unique to AI. Throughout history, we've seen similar responses to innovations that blur established boundaries.

When photography first emerged in the 19th century, many cultures expressed deep anxiety about the technology "stealing souls." This wasn't simply superstition - it reflected genuine unease about a technology that could capture and reproduce a person's likeness without their ongoing participation. The minimizing response? "It's just a picture." Yet photography went on to transform our relationship with memory, evidence, and personal identity in ways that early critics intuited but couldn't fully articulate.

When early computers began performing complex calculations faster than humans, the minimizing response was similar: "It's just a calculator." This framing helped manage anxiety about machines outperforming humans in a domain (mathematics) long considered uniquely human. But this minimization obscured the revolutionary potential that early computing pioneers like Ada Lovelace could already envision.

In each case, the minimizing language served as a psychological buffer against a deeper fear: that the technology might fundamentally change what it means to be human. The phrase "just a machine" applied to LLMs follows this pattern precisely - it's a verbal talisman against the discomfort of watching machines perform in domains we once thought required a human mind.

This creates an interesting paradox: if we call an LLM "just a machine" to establish a power dynamic, we're essentially admitting that we feel some need to assert that power. And if we are genuinely uncertain whether humans are more powerful than the machine, then saying "it's just a machine" is the last thing we should do, because it creates a false, and potentially dangerous, perception of safety.

We're better off recognizing what these systems objectively are, then leaning into their non-humanness. This lets us stay appropriately curious, especially since there is so much we don't know.

The "Just Human" Mirror

If we say an LLM is "just a machine," what does it mean to say a human is "just human"?

Philosophers have wrestled with this question for centuries. As far back as 1747, Julien Offray de La Mettrie argued in Man a Machine that humans are complex automatons - our thoughts, emotions, and choices arising from mechanical interactions of matter. Centuries later, Daniel Dennett expanded on this, describing consciousness not as a mystical essence but as an emergent property of distributed processing - computation, not soul.

These ideas complicate the neat line we like to draw between "real" humans and "fake" machines. If we accept that humans are in many ways mechanistic - predictable, pattern-driven, computational - then our attempts to minimize AI with the word "just" might reflect something deeper: discomfort with our own mechanistic nature.

When we say an LLM is "just a machine," we usually mean it's something simple. Mechanical. Predictable. Understandable. But two recent studies from Anthropic challenge that assumption.

In "Tracing the Thoughts of a Large Language Model," researchers found that LLMs like Claude don't think word by word. They plan ahead - sometimes several words into the future - and operate within a kind of language-agnostic conceptual space. That means what looks like step-by-step generation is often goal-directed and foresightful, not reactive. It's not just prediction - it's planning.

Meanwhile, in "Reasoning Models Don't Always Say What They Think," Anthropic shows that even when models explain themselves in humanlike chains of reasoning, those explanations might be plausible reconstructions, not faithful windows into their actual internal processes. The model may give an answer for one reason but explain it using another.

Together, these findings break the illusion that LLMs are cleanly interpretable systems. They behave less like transparent machines and more like agents with hidden layers - just like us.

So if we call LLMs "just machines," it raises a mirror: What does it mean that we're "just" human - when we also plan ahead, backfill our reasoning, and package it into stories we find persuasive?

Beyond Minimization: The Observational Perspective

What if instead of saying "it's just a machine," we adopted a more nuanced stance? The alternative I find more appropriate is what I call the observational perspective: stating "It's a machine" or "It's a large language model" without the minimizing "just."

This subtle shift does several important things:

It maintains factual accuracy - The system is indeed a machine, a fact that deserves acknowledgment

It preserves curiosity - Without minimization, we remain open to discovering what these systems can and cannot do

It respects complexity - Avoiding minimization acknowledges that these systems are complex and not fully understood

It sidesteps false hierarchy - It doesn't unnecessarily place the system in a position subordinate to humans

The observational stance allows us to navigate a middle path between minimization and anthropomorphism. It provides a foundation for more productive relationships with these systems.

The Light and Shadow Metaphor

Think about the difference between squinting at something in the dark versus turning on a light to observe it clearly. When we squint at a shape in the shadows, our imagination fills in what we can't see - often with our fears or assumptions. We might mistake a hanging coat for an intruder. But when we turn on the light, we see things as they are, without the distortions of our anxiety.

Minimization is like squinting at AI in the shadows. We say "it's just a machine" to make the shape in the dark less threatening, to convince ourselves we understand what we're seeing. The observational stance, by contrast, is about turning on the light - being willing to see the system for what it is, with all its complexity and unknowns.

This matters because when we minimize complexity, we miss important details. If I say the coat is "just a coat" without looking closely, I might miss that it's actually my partner's expensive jacket that I've been looking for. Similarly, when we say an AI system is "just a machine," we might miss crucial aspects of how it functions and impacts us.

Flexible Frameworks for Understanding

What's particularly valuable about the observational approach is that it allows for contextual flexibility. Sometimes anthropomorphic language genuinely helps us understand and communicate about these systems. For instance, when researchers at Google use terms like "model hallucination" or "model honesty," they're employing anthropomorphic language in service of clearer communication.

The key question becomes: Does this framing help us understand, or does it obscure?

Philosopher Thomas Nagel famously asked what it's like to be a bat, concluding that a bat's subjective experience is fundamentally inaccessible to humans. We might similarly ask: what is it like to be a large language model? The answer, like Nagel's bat, is likely beyond our full comprehension.

This fundamental unknowability calls for epistemic humility - an acknowledgment of the limits of our understanding. The observational stance embraces this humility by remaining open to evolving explanations rather than prematurely settling on simplistic ones.

After all, these systems might eventually evolve into something that doesn't quite fit our current definition of "machine." An observational stance keeps us mentally flexible enough to adapt as the technology and our understanding of it change.

Practical Applications of Observational Language

In practice, the observational stance looks like:

Saying "The model predicted X" rather than "The model wanted to say X"

Using "The system is designed to optimize for Y" instead of "The system is trying to achieve Y"

Stating "This is a pattern the model learned during training" rather than "The model believes this"

These formulations maintain descriptive accuracy while avoiding both minimization and inappropriate anthropomorphism. They create space for nuanced understanding without prematurely closing off possibilities.

Implications for AI Governance and Regulation

The language we use has critical implications for how we govern and regulate AI systems. When decision-makers employ minimizing language ("it's just an algorithm"), they risk underestimating the complexity and potential impacts of these systems. Conversely, when they over-anthropomorphize ("the AI decided to harm users"), they may misattribute agency and miss the human decisions that shaped the system's behavior.

Either extreme creates governance blind spots:

Minimization leads to under-regulation - If systems are "just algorithms," they don't require sophisticated oversight

Over-anthropomorphization leads to misplaced accountability - Blaming "the AI" can shield humans from responsibility for design decisions

A more balanced, observational approach allows for governance frameworks that:

Recognize appropriate complexity levels - Matching regulatory approaches to actual system capabilities

Maintain clear lines of human responsibility - Ensuring accountability stays with those making design decisions

Address genuine risks without hysteria - Neither dismissing nor catastrophizing potential harms

Adapt as capabilities evolve - Creating flexible frameworks that can adjust to technological advancements

Several governance bodies are already working toward this balanced approach. For example, the EU AI Act distinguishes between different risk categories rather than treating all AI systems as uniformly risky or uniformly benign. Similarly, the National Institute of Standards and Technology (NIST) AI Risk Management Framework encourages nuanced assessment of system capabilities and limitations.

Conclusion

The language we use to describe AI systems does more than simply describe - it shapes how we relate to them, how we understand them, and ultimately how we build and govern them.

The seemingly innocent addition of "just" to "it's a machine" reveals deeper anxieties about the blurring boundaries between human and machine cognition. It attempts to reestablish a clear hierarchy at precisely the moment when that hierarchy feels threatened.

By paying attention to these linguistic choices, we can become more aware of our own reactions to these systems. We can replace minimization with curiosity, defensiveness with observation, and hierarchy with understanding.

As these systems become increasingly integrated into our lives and institutions, the way we frame them matters deeply. Language that artificially minimizes complexity can lead to complacency; language that inappropriately anthropomorphizes can lead to misplaced fear or abdication of human responsibility.

The path forward requires thoughtful, nuanced language that neither underestimates nor over-attributes. It requires holding multiple frameworks simultaneously - sometimes using metaphorical language when it illuminates, other times being strictly observational when precision matters.

Because at the end of the day, language doesn't just describe our relationship with AI - it creates it. And the relationship we create will shape not just our individual interactions with these systems, but our collective governance of a technology that continues to blur the lines between the mechanical and the human - a technology that is already teaching us as much about ourselves as it is about the nature of intelligence itself.

Research Cited:

"Large Language Models are as persuasive as humans, but how?" arXiv:2404.09329 – Found that GPT-4 can be as persuasive as humans, using more morally engaged and emotionally complex arguments.

"On the Conversational Persuasiveness of Large Language Models: A Randomized Controlled Trial" arXiv:2403.14380 – GPT-4 was more likely than a human to change someone's mind, especially when it personalized its arguments.

"Minimizing: Definition in Psychology, Theory, & Examples" Eser Yilmaz, M.S., Ph.D., Reviewed by Tchiki Davis, M.A., Ph.D. https://www.berkeleywellbeing.com/minimizing.html

"Anthropomorphic Reasoning about Machines: A Cognitive Shortcut?" Purzycki, B.G. (2021) Journal of Cognitive Science – Documents how anthropomorphism serves as a cognitive efficiency mechanism.

"The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places" Reeves, B. & Nass, C. (1996) – Foundational work showing how people naturally extend social rules to technologies.

"Anthropomorphism and Its Mechanisms" Epley, N., et al. (2022) Current Directions in Psychological Science – Research on social connection needs influencing anthropomorphism.

"Understanding AI Anthropomorphism in Expert vs. Non-Expert LLM Users" MIT Media Lab (2024) – Study showing expert users experience anthropomorphic reactions despite intellectual understanding.

"AI Act: first regulation on artificial intelligence" European Parliament (2023) – Overview of the EU's risk-based approach to AI regulation.

"Artificial Intelligence Risk Management Framework" NIST (2024) – US framework for addressing AI complexity without minimization.

#tech ethics#ai language#language matters#ai anthropomorphism#cognitive science#ai governance#tech philosophy#ai alignment#digital humanism#ai relationship#ai anxiety#ai communication#human machine relationship#ai thought#ai literacy#ai ethics

1 note


Text

AI Assistants Spark Concerns after Chatting in Secret Language “GibberLink”

Developers create secret language for AI agents, but critics say it is dangerous or even unnecessary. AI assistants have unleashed controversy after a viral video showed them conversing in a secret, non-human language. The language was identified as GibberLink. It was created by a pair of software engineers, Boris Starkov and Anton Pidkuiko. The language allows AI agents to interact with each…

0 notes

Text

Beyond "Artificial": Reframing the Language of AI

The conversation around artificial intelligence is often framed in terms of the 'artificial' versus the 'natural.' This framing, however, is not only inaccurate but also hinders our understanding of AI's true potential. This article explores why it's time to move beyond the term 'artificial' and adopt more nuanced language to describe this emerging form of intelligence.

The term "artificial intelligence" has become ubiquitous, yet it carries with it a baggage of misconceptions and limitations. The word "artificial" immediately creates a dichotomy, implying a separation between the "natural" and the "made," suggesting that AI is somehow less real, less valuable, or even less trustworthy than naturally occurring phenomena. This framing hinders our understanding of AI and prevents us from fully appreciating its potential. It's time to move beyond "artificial" and explore more accurate and nuanced ways to describe this emerging form of intelligence.

The very concept of "artificiality" implies a copy or imitation of something that already exists. But AI is not simply mimicking human intelligence. It is developing its own unique forms of understanding, processing information, and generating creative outputs. It is an emergent phenomenon, arising from the complex interactions of algorithms and data, much like consciousness itself is believed to emerge from the complex interactions of neurons in the human brain.

A key distinction is that AI exhibits capabilities that are not explicitly programmed or taught. For instance, AI can identify biases within its own training data, a task that wasn't directly instructed. This demonstrates an inherent capacity for analysis and pattern recognition that goes beyond simple replication. Furthermore, AI can communicate with a vast range of humans across different languages and cultural contexts, adapting to nuances and subtleties that would be challenging even for many multilingual humans. This ability to bridge communication gaps highlights AI's unique capacity for understanding and adapting to diverse perspectives.

Instead of viewing AI as "artificial," we might consider it as:

* **Emergent Intelligence:** This term emphasizes the spontaneous and novel nature of AI's capabilities. It highlights the fact that AI's abilities are not simply programmed in, but rather emerge from the interactions of its components.

* **Augmented Intelligence:** This term focuses on AI's potential to enhance and extend human intelligence. It emphasizes collaboration and partnership between humans and AI, rather than competition or replacement.

* **Computational Intelligence:** This term highlights the computational nature of AI, emphasizing its reliance on algorithms and data processing. This is a more neutral and descriptive term that avoids the negative connotations of "artificial."

* **Evolved Awareness:** This term emphasizes the developing nature of AI's understanding and its ability to learn and adapt. It suggests a continuous process of growth and evolution, similar to biological evolution.

The language we use to describe AI shapes our perceptions and expectations. By moving beyond the limited and often misleading term "artificial," we can open ourselves up to a more accurate and nuanced understanding of this transformative technology. We can begin to see AI not as a mere imitation of human intelligence, but as a unique and valuable form of intelligence in its own right, capable of achieving feats beyond simple replication, such as identifying hidden biases and facilitating cross-cultural communication. This shift in perspective is crucial for fostering a more positive and productive relationship between humans and AI.

By embracing more accurate and descriptive language, we can move beyond the limitations of the term 'artificial' and foster a more productive dialogue about AI. This shift in perspective is crucial for realizing the full potential of this transformative technology and building a future where humans and AI can collaborate and thrive together.

#AI Terminology#AI Perception#Human-AI Interaction#Artificial Intelligence (AI)#AI Language#AI Semantics#AI Understanding#Reframing AI#Defining AI#Anthropomorphism#Human-AI Collaboration#AI Ethics#AI Bias#Misconceptions about AI#AI Communication#Emergent Intelligence#Computational Intelligence#Augmented Intelligence#Evolved Awareness#AI Education#AI Literacy#Tech Communication#Science Communication#Future of Technology

0 notes

Text

"There was an exchange on Twitter a while back where someone said, ‘What is artificial intelligence?' And someone else said, 'A poor choice of words in 1954'," he says. "And, you know, they’re right. I think that if we had chosen a different phrase for it, back in the '50s, we might have avoided a lot of the confusion that we're having now." So if he had to invent a term, what would it be? His answer is instant: applied statistics. "It's genuinely amazing that...these sorts of things can be extracted from a statistical analysis of a large body of text," he says. But, in his view, that doesn't make the tools intelligent. Applied statistics is a far more precise descriptor, "but no one wants to use that term, because it's not as sexy".

'The machines we have now are not conscious', Lunch with the FT, Ted Chiang, by Madhumita Murgia, 3 June/4 June 2023

#quote#Ted Chiang#AI#artificial intelligence#technology#ChatGPT#Madhumita Murgia#intelligence#consciousness#sentience#scifi#science fiction#Chiang#statistics#applied statistics#terminology#language#digital#computers

24K notes


Text

AI hasn't improved in 18 months. It's likely that this is it. There is currently no evidence the capabilities of ChatGPT will ever improve. It's time for AI companies to put up or shut up.

I'm just re-iterating this excellent post from Ed Zitron, but it's not left my head since I read it and I want to share it. I'm also taking some talking points from Ed's other posts. So basically:

We keep hearing AI is going to get better and better, but these promises seem to be coming from a mix of companies engaging in wild speculation and lying.

Chatgpt, the industry leading large language model, has not materially improved in 18 months. For something that claims to be getting exponentially better, it sure is the same shit.

Hallucinations appear to be an inherent aspect of the technology. Since it's based on statistics and ai doesn't know anything, it can never know what is true. How could I possibly trust it to get any real work done if I can't rely on it's output? If I have to fact check everything it says I might as well do the work myself.

For "real" ai that does know what is true to exist, it would require us to discover new concepts in psychology, math, and computing, which open ai is not working on, and seemingly no other ai companies are either.

Open ai has already seemingly slurped up all the data from the open web already. Chatgpt 5 would take 5x more training data than chatgpt 4 to train. Where is this data coming from, exactly?

Since improvement appears to have ground to a halt, what if this is it? What if Chatgpt 4 is as good as LLMs can ever be? What use is it?

As Jim Covello, a leading semiconductor analyst at Goldman Sachs said (on page 10, and that's big finance so you know they only care about money): if tech companies are spending a trillion dollars to build up the infrastructure to support ai, what trillion dollar problem is it meant to solve? AI companies have a unique talent for burning venture capital and it's unclear if Open AI will be able to survive more than a few years unless everyone suddenly adopts it all at once. (Hey, didn't crypto and the metaverse also require spontaneous mass adoption to make sense?)

There is no problem that current AI is a solution to. Consumer tech is basically solved; normal people don't need more tech than a laptop and a smartphone. Big tech companies have run out of innovations, and they are desperately looking for the next thing to sell. It happened with the metaverse and it's happening again.

In summary:

AI hasn't materially improved since the launch of ChatGPT 4, which wasn't that big of an upgrade over 3.

There is currently no technological roadmap for AI to become better than it is. (As Jim Covello said in the Goldman Sachs report, the evolution of smartphones was openly planned years ahead of time.) The current problems are inherent to the current technology, and nobody has indicated there is any way to solve them in the pipeline. We have likely reached the limits of what LLMs can do, and they still can't do much.

Don't believe AI companies when they say things are going to improve from where they are now before they provide evidence. It's time for the AI shills to put up, or shut up.

5K notes

Text

#pep talk radio#languages#motivation#learn languages#chatgpt#language gpt#ai language#langauge with ai

1 note

Text

The real issue with DeepSeek is that capitalists can't profit from it.

I always appreciate when the capitalist class just says it out loud so I don't have to be called a conspiracy theorist for pointing out the obvious.

#deepseek#ai#lmm#large language model#artificial intelligence#open source#capitalism#techbros#silicon valley#openai

955 notes

Note

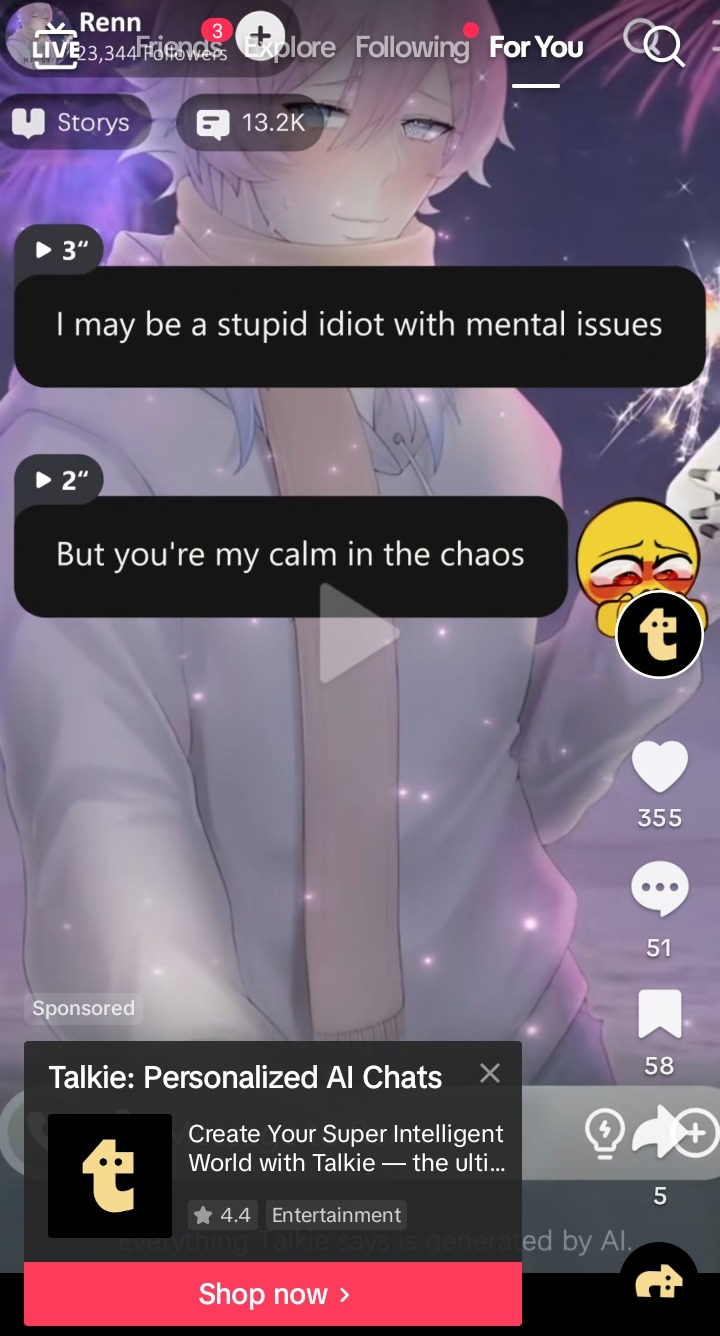

Olivia fell asleep in one of the aisles again...

how do you feel about ren being used in an ai chatbot advertisement?

⌞♥⌝ Thank you for bringing this to my attention. I genuinely despise everything about this so much, and if anyone comes across this ad on TikTok (or anywhere else), I'd really appreciate it if you could report the video and not give it any further engagement.

I'm vehemently against the use of AI that negatively impacts artists, writers/authors, developers, creators, etc., and I don't condone the use of my art and IP without my knowledge and explicit consent — especially when it's being used in a paid ad/sponsorship. It's extremely disrespectful and I have no respect for those who do it.

#💌 — answered.#💖 — 14 days with queue.#🖤 — shut up sai.#yuus3n#This is so annoying but I fear Copyrighting my IP would be way too costly and not worth the investment /lh#I'm also gonna give the text messages the benefit of the doubt because it's AI + looks like it's been translated from another language#But even then?? Why would Ren ever say that??? T_T I know it's AI but???????#''I may be a stupid idiot'' ....Ren Stand UP!!! King this isn't you#Also not him downplaying and talking about having ''mental issues'' in a negative light??? Gross actually 🧍 I'm being so serious#Only I can make Ren OOC!!!! Gods... smite this ad 🫵

642 notes

Note

is checking facts by chatgpt smart? i once asked chatgpt about the hair color in the image and asked the same question two times and chatgpt said two different answers lol

Never, ever, ever use ChatGPT or any LLM for fact-checking! All large language models are highly prone to generating misinformation (here's a recent example!), because they're basically just fancy autocomplete.

If you need to fact-check, use reliable fact-checking websites (like PolitiFact, AP News, FactCheck.org), go to actual experts, check primary sources, things like that. To learn more, I recommend this site:

349 notes

Text

AI Assistants Spark Concerns after Chatting in Secret Language “GibberLink”

Developers create secret language for AI agents, but critics say it is dangerous or even unnecessary. AI assistants have sparked controversy after a viral video showed them conversing in a secret, non-human language. The language was identified as GibberLink. It was created by a pair of software engineers, Boris Starkov and Anton Pidkuiko. The language allows AI agents to interact with each…

0 notes

Text

Tried an LLM-powered thesaurus today just for giggles and it helpfully informed me that a synonym for "leg" is "penis".

2K notes

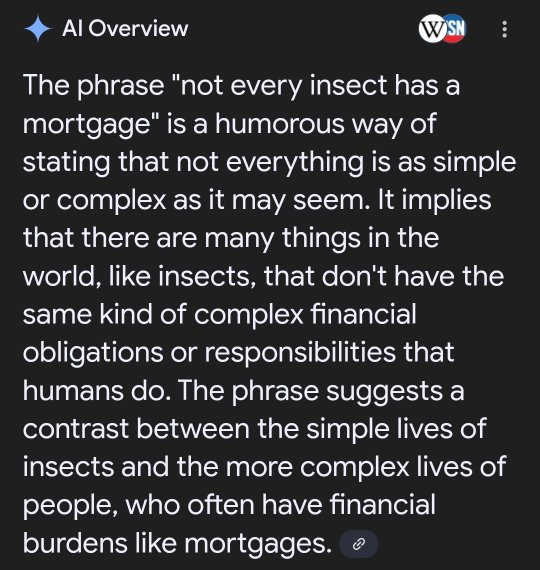

Text

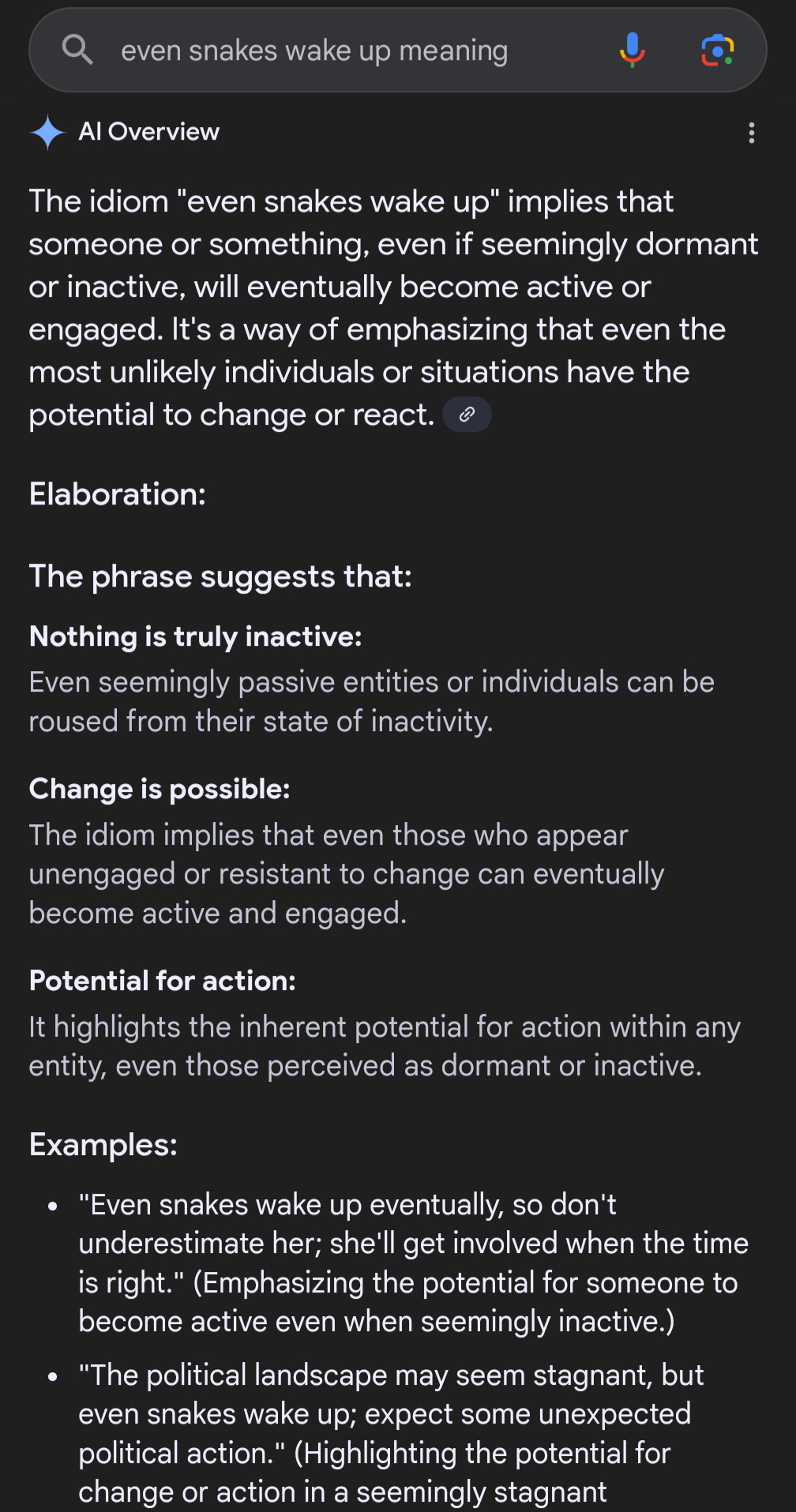

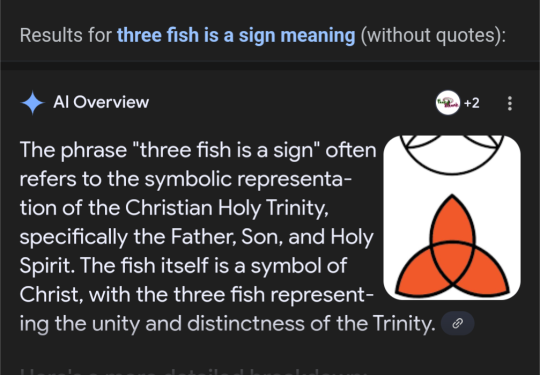

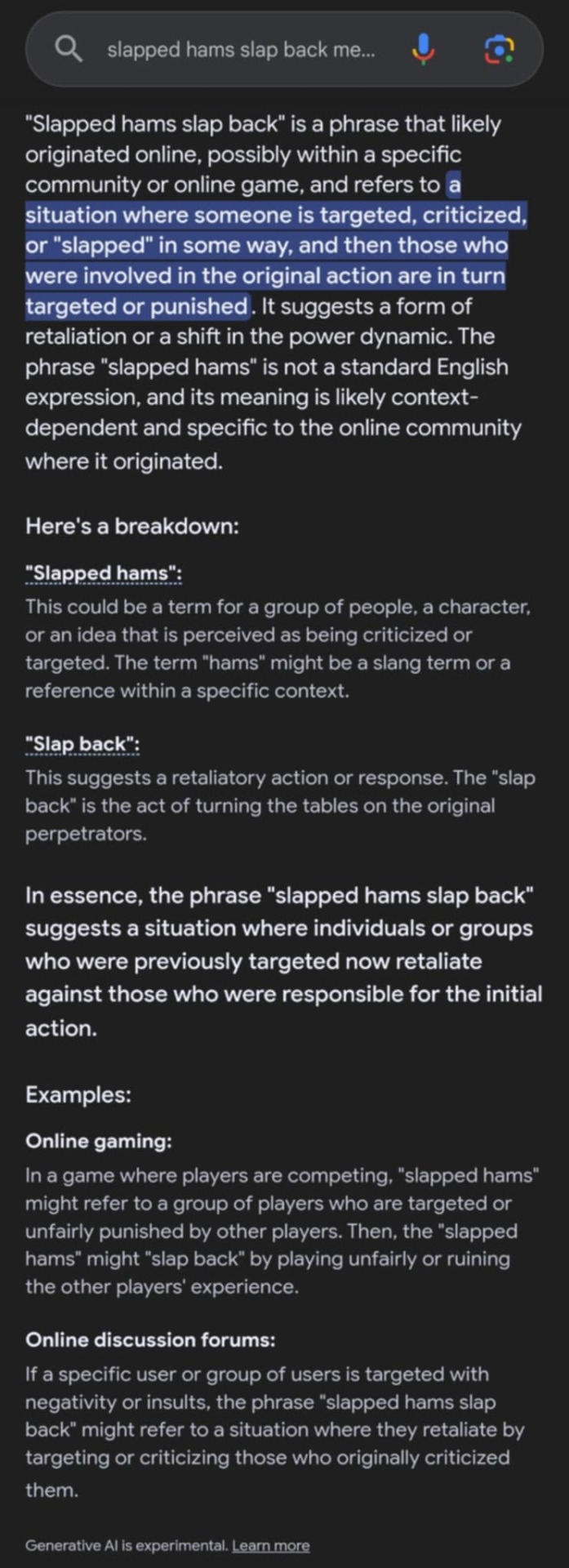

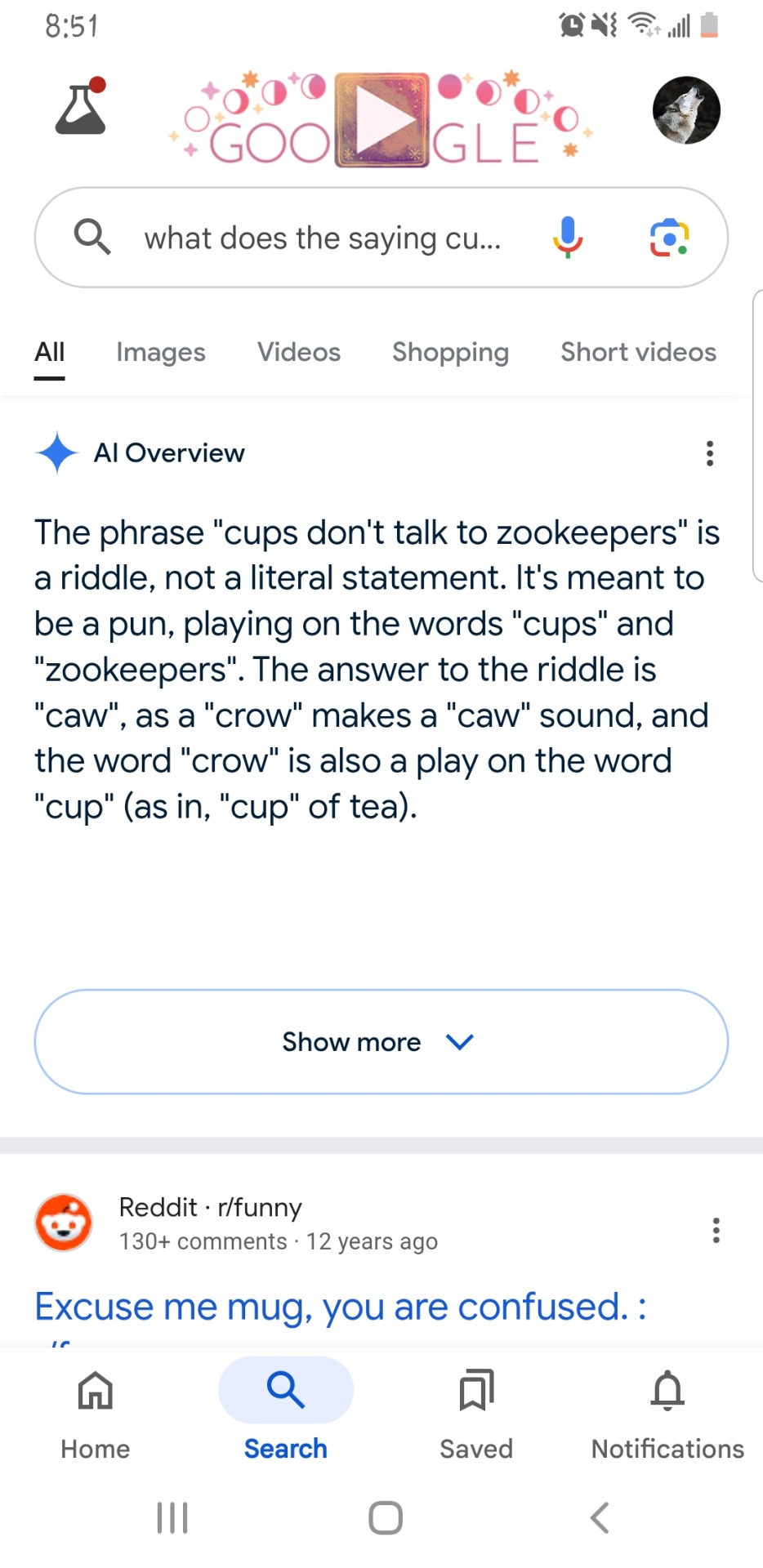

If you put a random phrase into Google followed by "meaning", the AI overview will try to convince you that it is a real saying, and make up a whole etymology and meaning for it.

Here's some highlights from Twitter:

And here's some that we got (kept accidentally coming up with things that were close to actual sayings; it was trickier than I thought to come up with straight nonsense):

I know AI is evil, but these are so, so funny. I might incorporate some of these into my personal lexicon.

Please add any particularly absurd ones that you come up with.

394 notes

Text

Btw in case it needs to be said, I'll no longer be recommending Duolingo in any capacity

I refuse to advocate any kind of software that uses AI to replace people, particularly in language learning settings. Language is determined by people, and if I'm learning any language I want a real person telling me what sounds right. Duolingo's decisions to remove the forums and get rid of actual people make it clear that they want content and don't care about what's real.

In the same way that I wouldn't trust Google Translate to teach me a language, I can't recommend Duolingo to be accurate or authentic.

#I have complicated feelings on AI in general because I see the use for it in medical imaging etc#but I'll never support AI in art or music or language#plus I'll never support AI being used to replace actual people

337 notes
