#AI&Deep learning development

Explore tagged Tumblr posts

Text

Revolutionizing the Industrial Sector with Emerging Tech Solutions

#artificial intelligence solutions#artificial intelligence programming#artificial intelligence problems and solutions#applications of artificial intelligence#artificial intelligence a modern approach solutions#Best Artificial Intelligence Software#AI&Deep learning development#ai and machine learning services

0 notes

Text

Best PC for Data Science & AI with 12GB GPU at Budget Gamer UAE

Are you looking for a powerful yet affordable PC for Data Science, AI, and Deep Learning? Budget Gamer UAE brings you the best PC for Data Science with 12GB GPU that handles complex computations, neural networks, and big data processing without breaking the bank!

Why Do You Need a 12GB GPU for Data Science & AI?

Before diving into the build, let’s understand why a 12GB GPU is essential:

✅ Handles Large Datasets – More VRAM means smoother processing of big data.

✅ Faster Deep Learning – Train AI models efficiently with CUDA cores.

✅ Multi-Tasking – Run multiple virtual machines and experiments simultaneously.

✅ Future-Proofing – Avoid frequent upgrades with a high-capacity GPU.

Best Budget Data Science PC Build – UAE Edition

Here’s a cost-effective yet high-performance PC build tailored for AI, Machine Learning, and Data Science in the UAE.

1. Processor (CPU): AMD Ryzen 7 5800X

8 Cores / 16 Threads – Perfect for parallel processing.

3.8GHz Base Clock (4.7GHz Boost) – Speeds up data computations.

PCIe 4.0 Support – Faster data transfer for AI workloads.

2. Graphics Card (GPU): NVIDIA RTX 3060 12GB

12GB GDDR6 VRAM – Ideal for deep learning frameworks (TensorFlow, PyTorch).

CUDA Cores & Tensor Cores – Accelerate AI model training (the RT cores on the card target ray tracing, not training).

DLSS Support – Boosts performance in AI-based rendering.

3. RAM: 32GB DDR4 (3200MHz)

Smooth Multitasking – Run Jupyter Notebooks, IDEs, and virtual machines effortlessly.

Future-Expandable – Upgrade to 64GB if needed.

4. Storage: 1TB NVMe SSD + 2TB HDD

Ultra-Fast Boot & Load Times – NVMe SSD for OS and datasets.

Extra HDD Storage – Store large datasets and backups.

5. Motherboard: B550 Chipset

PCIe 4.0 Support – Maximizes GPU and SSD performance.

Great VRM Cooling – Ensures stability during long AI training sessions.

6. Power Supply (PSU): 650W 80+ Gold

Reliable & Efficient – Handles high GPU/CPU loads.

Future-Proof – Supports upgrades to more powerful GPUs.

7. Cooling: Air or Liquid Cooling

Aftermarket Air Cooler – Good for moderate workloads (the Ryzen 7 5800X does not ship with a stock cooler, so budget for one).

Optional AIO Liquid Cooler – Better for overclocking and heavy tasks.

8. Case: Mid-Tower with Good Airflow

Multiple Fan Mounts – Keeps components cool during extended AI training.

Cable Management – Neat and efficient build.

Why Choose Budget Gamer UAE for Your Data Science PC?

✔ Custom-Built for AI & Data Science – No pre-built compromises.

✔ Competitive UAE Pricing – Best deals on high-performance parts.

✔ Expert Advice – Get guidance on the perfect build for your needs.

✔ Warranty & Support – Reliable after-sales service.

Performance Benchmarks – How Does This PC Handle AI Workloads?

Task – Performance

TensorFlow Training – 2x Faster than 8GB GPUs

Python Data Analysis – Smooth with 32GB RAM

Neural Network Training – Handles large models efficiently

Big Data Processing – NVMe SSD reduces load times

FAQs – Data Science PC Build in UAE

1. Is a 12GB GPU necessary for Machine Learning?

Yes! More VRAM allows training larger models without memory errors.
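To see roughly where the memory goes, here is a back-of-the-envelope sketch. It assumes FP32 training with the Adam optimizer (weights, gradients, and two optimizer states, so about 16 bytes per parameter) and ignores activations, which add more on top. The function name and the two model sizes are illustrative assumptions, not measurements from this build.

```python
def training_memory_gb(num_params: int, bytes_per_value: int = 4) -> float:
    """Estimate memory for weights + gradients + two Adam states.

    Assumption: every tensor is stored at the same precision and
    activation memory is ignored, so real usage will be higher.
    """
    copies = 4  # weights, gradients, Adam first and second moments
    return num_params * bytes_per_value * copies / 1024**3


# Hypothetical model sizes, chosen only to illustrate the 8 GB vs 12 GB gap.
for name, params in [("110M-parameter model", 110_000_000),
                     ("600M-parameter model", 600_000_000)]:
    needed = training_memory_gb(params)
    print(f"{name}: ~{needed:.1f} GB before activations; "
          f"fits in 8 GB: {needed < 8}, fits in 12 GB: {needed < 12}")
```

Under these assumptions the larger example lands between 8 GB and 12 GB, which is the kind of workload where the extra VRAM helps. Mixed precision or smaller batch sizes change the picture, so treat this as an estimate only.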

2. Can I use this PC for gaming too?

Absolutely! The RTX 3060 12GB crushes 1080p/1440p gaming.

3. Should I go for Intel or AMD for Data Science?

AMD Ryzen offers better multi-core performance at a lower price.

4. How much does this PC cost in the UAE?

Approx. AED 4,500 – AED 5,500 (depends on deals & upgrades).

5. Where can I buy this PC in the UAE?

Check Budget Gamer UAE for the best custom builds!

Final Verdict – Best Budget Data Science PC in UAE

If you're looking for the best PC for Data Science, the 12GB GPU PC build from Budget Gamer UAE is the perfect balance of power and affordability. With a Ryzen 7 CPU, RTX 3060, 32GB RAM, and ultra-fast storage, it handles heavy workloads like a champ.

#12GB Graphics Card PC for AI#16GB GPU Workstation for AI#Best Graphics Card for AI Development#16GB VRAM PC for AI & Deep Learning#Best GPU for AI Model Training#AI Development PC with High-End GPU

2 notes

Text

The Future of AI: What’s Next in Machine Learning and Deep Learning?

Artificial Intelligence (AI) has rapidly evolved over the past decade, transforming industries and redefining the way businesses operate. As machine learning and deep learning continue to advance, they will unlock new opportunities across industries, from healthcare and finance to cybersecurity and automation. In this blog, we explore the upcoming trends and what lies ahead.

1. Advancements in Explainable AI (XAI)

As AI models become more complex, understanding their decision-making process remains a challenge. Explainable AI (XAI) aims to make machine learning and deep learning models more transparent and interpretable. Businesses and regulators are pushing for AI systems that provide clear justifications for their outputs, ensuring ethical AI adoption across industries. The growing demand for fairness and accountability in AI-driven decisions is accelerating research into interpretable AI, helping users trust and effectively utilize AI-powered tools.

2. AI-Powered Automation in IT and Business Processes

AI-driven automation is set to revolutionize business operations by minimizing human intervention. Machine learning and deep learning algorithms can predict and automate tasks in various sectors, from IT infrastructure management to customer service and finance. This shift will increase efficiency, reduce costs, and improve decision-making. Businesses that adopt AI-powered automation will gain a competitive advantage by streamlining workflows and enhancing productivity through machine learning and deep learning capabilities.

3. Neural Network Enhancements and Next-Gen Deep Learning Models

Deep learning models are becoming more sophisticated, with innovations like transformer models (e.g., GPT-4, BERT) pushing the boundaries of natural language processing (NLP). The next wave of machine learning and deep learning will focus on improving efficiency, reducing computation costs, and enhancing real-time AI applications. Advancements in neural networks will also lead to better image and speech recognition systems, making AI more accessible and functional in everyday life.

4. AI in Edge Computing for Faster and Smarter Processing

With the rise of IoT and real-time processing needs, AI is shifting toward edge computing. This allows machine learning and deep learning models to process data locally, reducing latency and dependency on cloud services. Industries like healthcare, autonomous vehicles, and smart cities will greatly benefit from edge AI integration. The fusion of edge computing with machine learning and deep learning will enable faster decision-making and improved efficiency in critical applications like medical diagnostics and predictive maintenance.

5. Ethical AI and Bias Mitigation

AI systems are prone to biases due to data limitations and model training inefficiencies. The future of machine learning and deep learning will prioritize ethical AI frameworks to mitigate bias and ensure fairness. Companies and researchers are working towards AI models that are more inclusive and free from discriminatory outputs. Ethical AI development will involve strategies like diverse dataset curation, bias auditing, and transparent AI decision-making processes to build trust in AI-powered systems.

6. Quantum AI: The Next Frontier

Quantum computing could reshape AI by enabling computations that are impractical on classical hardware. If the hardware matures, quantum AI may accelerate parts of machine learning and deep learning, such as optimization and large-scale simulation. As the field evolves, it may open new doors for problems that were previously considered out of reach due to computational constraints.

7. AI-Generated Content and Creative Applications

From AI-generated art and music to automated content creation, AI is making strides in the creative industry. Generative AI models like DALL-E and ChatGPT are paving the way for more sophisticated and human-like AI creativity. The future of machine learning and deep learning will push the boundaries of AI-driven content creation, enabling businesses to leverage AI for personalized marketing, video editing, and even storytelling.

8. AI in Cybersecurity: Real-Time Threat Detection

As cyber threats evolve, AI-powered cybersecurity solutions are becoming essential. Machine learning and deep learning models can analyze and predict security vulnerabilities, detecting threats in real time. The future of AI in cybersecurity lies in its ability to autonomously defend against sophisticated cyberattacks. AI-powered security systems will continuously learn from emerging threats, adapting and strengthening defense mechanisms to ensure data privacy and protection.

9. The Role of AI in Personalized Healthcare

One of the most impactful applications of machine learning and deep learning is in healthcare. AI-driven diagnostics, predictive analytics, and drug discovery are transforming patient care. AI models can analyze medical images, detect anomalies, and provide early disease detection, improving treatment outcomes. The integration of machine learning and deep learning in healthcare will enable personalized treatment plans and faster drug development, ultimately saving lives.

10. AI and the Future of Autonomous Systems

From self-driving cars to intelligent robotics, machine learning and deep learning are at the forefront of autonomous technology. The evolution of AI-powered autonomous systems will improve safety, efficiency, and decision-making capabilities. As AI continues to advance, we can expect self-learning robots, smarter logistics systems, and fully automated industrial processes that enhance productivity across various domains.

Conclusion

The future of AI, machine learning and deep learning is brimming with possibilities. From enhancing automation to enabling ethical and explainable AI, the next phase of AI development will drive unprecedented innovation. Businesses and tech leaders must stay ahead of these trends to leverage AI's full potential. With continued advancements in machine learning and deep learning, AI will become more intelligent, efficient, and accessible, shaping the digital world like never before.

Are you ready for the AI-driven future? Stay updated with the latest AI trends and explore how these advancements can shape your business!

#artificial intelligence#machine learning#techinnovation#tech#technology#web developers#ai#web#deep learning#Information and technology#IT#ai future

2 notes

Text

Behind the Code: How AI Is Quietly Reshaping Software Development and the Top Risks You Must Know

AI Software Development

In 2025, artificial intelligence (AI) is no longer just a buzzword; it has become a driving force behind the scenes, transforming software development. From AI-powered code generation to advanced testing tools, machine learning (ML) and deep learning (DL) are significantly influencing how developers build, test, and deploy applications. While these innovations offer speed, accuracy, and automation, they also introduce subtle yet critical risks that businesses and developers must not overlook. This blog examines how AI is transforming the software development lifecycle and identifies the key risks associated with this evolution.

The Rise of AI in Software Development

Artificial intelligence, machine learning, and deep learning are becoming foundational to modern software engineering. AI tools like ChatGPT, Copilot, and various open AI platforms assist in code suggestions, bug detection, documentation generation, and even architectural decisions. These tools not only reduce development time but also enable less-experienced developers to produce quality code.

Examples of AI in Development:

- AI Chat Bots: Provide 24/7 customer support and collect feedback.

- AI-Powered Code Review: Analyze code for bugs, security flaws, and performance issues.

- Natural Language Processing (NLP): Translate user stories into code or test cases.

- AI for DevOps: Use predictive analytics for server load and automate CI/CD pipelines.

With AI chat platforms, free AI chatbots, and robotic process automation (RPA), the lines between human and machine collaboration are increasingly blurred.

The Hidden Risks of AI in Application Development

While AI offers numerous benefits, it also introduces potential vulnerabilities and unintended consequences. Here are the top risks associated with integrating AI into the development pipeline:

1. Over-Reliance on AI Tools

Over-reliance on AI tools may reduce developer skills and code quality:

- A decline in critical thinking and analytical skills.

- Propagation of inefficient or insecure code patterns.

- Reduced understanding of the software being developed.

2. Bias in Machine Learning Models

AI and ML trained on biased or incomplete data can produce skewed results:

- Applications may produce discriminatory or inaccurate results.

- Risks include brand damage and legal issues in regulated sectors like retail or finance.

3. Security Vulnerabilities

AI-generated code may introduce hidden bugs or create opportunities for exploitation:

- Many AI tools scrape open-source data, which might include insecure or outdated libraries.

- Hackers could manipulate AI-generated models for malicious purposes.

4. Data Privacy and Compliance Issues

AI models often need large datasets with sensitive information:

- Misuse or leakage of data can lead to compliance violations (e.g., GDPR).

- Using tools like Google AI Chat or OpenAI Chatbots can raise data storage concerns.

5. Transparency and Explainability Challenges

Understanding AI, especially deep learning decisions, is challenging:

- A lack of explainability complicates debugging processes.

- There are regulatory issues in industries that require audit trails (e.g., insurance, healthcare).

AI and Its Influence Across Development Phases

Planning & Design: AI platforms analyze historical data to forecast project timelines and resource allocation.

Risks: False assumptions from inaccurate historical data can mislead project planning.

Coding: AI-powered IDEs and assistants suggest code snippets, auto-complete functions, and generate boilerplate code.

Risks: AI chatbots may overlook edge cases or scalability concerns.

Testing: Automated test case generation using AI ensures broader coverage in less time.

Risks: AI might miss human-centric use cases and unique behavioral scenarios.

Deployment & Maintenance: AI helps predict failures and automates software patching using computer vision and ML.

Risks: False positives or missed anomalies in logs could lead to outages.

The Role of AI in Retail, RPA, and Computer Vision

Industries such as retail and manufacturing are increasingly integrating AI.

In Retail: AI is used for chatbots, customer data analytics, and inventory management tools, enhancing personalized shopping experiences through machine learning and deep learning.

Risk: Over-personalization and surveillance-like tracking raise ethical concerns.

In RPA: Robotic Process Automation tools simplify repetitive back-end tasks. AI adds decision-making capabilities to RPA.

Risk: Errors in automation can lead to large-scale operational failures.

In Computer Vision: AI is applied in image classification, facial recognition, and quality control.

Risk: Misclassification or identity-related issues could lead to regulatory scrutiny.

Navigating the Risks: Best Practices

To safely harness the power of AI in development, businesses should adopt strategic measures, such as establishing AI ethics policies and defining acceptable use guidelines.

By understanding the transformative power of AI and proactively addressing its risks, organizations can better position themselves for a successful future in software development.

Key Recommendations:

Audit and regularly update AI datasets to avoid bias.

Use explainable AI models where possible.

Train developers on AI tools while reinforcing core engineering skills.

Ensure AI integrations comply with data protection and security standards.

Final Thoughts: Embracing AI While Staying Secure

AI, ML, and DL have revolutionized software development, enabling automation, accuracy, and innovation. However, they bring complex risks that require careful management. Organizations must adopt a balanced approach, leveraging the strengths of AI chat assistants and RPA tools while maintaining strict oversight.

As we move forward, embracing AI in a responsible and informed manner is critical. From enterprise AI adoption to computer vision applications, businesses that align technological growth with ethical and secure practices will lead the future of development.

#artificial intelligence chat#ai and software development#free ai chat bot#machine learning deep learning artificial intelligence#rpa#ai talking#artificial intelligence machine learning and deep learning#artificial intelligence deep learning#ai chat gpt#chat ai online#best ai chat#ai ml dl#ai chat online#ai chat bot online#machine learning and deep learning#deep learning institute nvidia#open chat ai#google chat bot#chat bot gpt#artificial neural network in machine learning#openai chat bot#google ai chat#ai deep learning#artificial neural network machine learning#ai gpt chat#chat ai free#ai chat online free#ai and deep learning#software development#gpt chat ai

0 notes

Text

Qureight and Avalyn Pharma partner to advance pulmonary fibrosis treatment with deep-learning image analytics, shaping pharmaceutical market trends.

#Qureight#Avalyn Pharma#pulmonary fibrosis treatment#deep learning image analytics#pharmaceutical partnerships#respiratory disease research#AI in healthcare#pharmaceutical market trends#medical imaging technology#fibrosis drug development#precision medicine#healthcare innovation#biotech collaboration

1 note

Text

#artificial intelligence services#machine learning solutions#AI development company#machine learning development#AI services India#AI consulting services#ML model development#custom AI solutions#deep learning services#natural language processing#computer vision solutions#AI integration services#AI for business#enterprise AI solutions#machine learning consulting#predictive analytics#AI software development#intelligent automation

0 notes

Text

From Code to Cognitive – Atcuality’s Intelligent Tech Framework

Atcuality is redefining enterprise success by delivering powerful, scalable, and intelligent software solutions. Our multidisciplinary teams combine cloud-native architectures, agile engineering, and business domain expertise to create value across your digital ecosystem. Positioned at the crossroads of business transformation and technical execution, artificial intelligence plays a vital role in our delivery model. We design AI algorithms, train custom models, and embed intelligence directly into products and processes, giving our clients the edge they need to lead. From automation in finance to real-time analytics in healthcare, Atcuality enables smarter decisions, deeper insights, and higher efficiency. We believe in creating human-centered technology that doesn’t just function, but thinks. Elevate your systems and your strategy — with Atcuality as your trusted technology partner.

#seo services#artificial intelligence#iot applications#seo agency#seo company#seo marketing#digital marketing#azure cloud services#ai powered application#amazon web services#ai image#ai generated#ai art#ai model#technology#chatgpt#ai#ai artwork#ai developers#ai design#ai development#ai deep learning#ai services#ai solutions#ai companies#information technology#software#applications#app#application development

0 notes

Text

Still working in tech? Got replaced by a Co-Pilot bot named Gary? Still waiting for someone to tell you if it’s a feature or a farewell letter? Drop your stories. Share your scars. The bunker’s open.

#AI layoffs#AI replacing developers#CoPilot satire#Deep Crack Learning#GPT-5 job risk#Microsoft Co-Pilot#Mind and Reality#tech industry humor#Who da’Skunk

0 notes

Text

Google Gen AI SDK, Gemini Developer API, and Python 3.13

A Technical Overview and Compatibility Analysis

🧠 TL;DR – Google Gen AI SDK + Gemini API + Python 3.13 Integration 🚀

🔍 Overview

Google’s Gen AI SDK and Gemini Developer API provide cutting-edge tools for working with generative AI across text, images, code, audio, and video. The SDK offers a unified interface to interact with Gemini models via both Developer API and Vertex AI 🌐.

🧰 SDK…

#AI development#AI SDK#AI tools#cloud AI#code generation#deep learning#function calling#Gemini API#generative AI#Google AI#Google Gen AI SDK#LLM integration#multimodal AI#Python 3.13#Vertex AI

0 notes

Text

Ethical AI: Mitigating Bias in Machine Learning Models

The Critical Importance of Unbiased AI Systems

As artificial intelligence becomes increasingly embedded in business processes and decision-making systems, the issue of algorithmic bias has emerged as a pressing concern. Recent industry reports indicate that a significant majority of AI implementations exhibit some form of bias, potentially leading to discriminatory outcomes and exposing organizations to substantial reputational and regulatory risks.

Key Statistics:

Gartner research (2023) found that 85% of AI models demonstrate bias due to problematic training data

McKinsey analysis (2024) revealed organizations deploying biased AI systems face 30% higher compliance penalties

Documented Cases of AI Bias in Enterprise Applications

Case Study 1: Large Language Model Political Bias (2024)

Stanford University researchers identified measurable political bias in ChatGPT 4.0’s responses, with the system applying 40% more qualifying statements to conservative-leaning prompts compared to liberal ones. This finding raises concerns about AI systems potentially influencing information ecosystems.

Case Study 2: Healthcare Algorithm Disparities (2023)

A Johns Hopkins Medicine study demonstrated that clinical decision-support AI systems consistently underestimated the acuity of Black patients’ medical conditions by approximately 35% compared to white patients with identical symptoms.

Case Study 3: Professional Platform Algorithmic Discrimination (2024)

Independent analysis of LinkedIn’s recommendation engine revealed the platform’s AI suggested technical roles with 28% higher compensation to male users than to equally qualified female professionals.

Underlying Causes of Algorithmic Bias

The Historical Data Problem

AI systems inherently reflect the biases present in their training data. For instance:

Credit scoring models trained on decades of lending data may perpetuate historical redlining practices

Facial analysis systems developed primarily using Caucasian facial images demonstrate higher error rates for other ethnic groups

The Self-Reinforcing Discrimination Cycle

Biased algorithmic outputs frequently lead to biased real-world decisions, which then generate similarly skewed data for future model training, creating a dangerous feedback loop that can amplify societal inequities.

Evidence-Based Strategies for Bias Mitigation

1. Comprehensive Data Auditing and Enrichment

Conduct systematic reviews of training datasets for representation gaps

Implement active data collection strategies to include underrepresented populations

Employ synthetic data generation techniques to address diversity deficiencies

Illustrative Example: Microsoft’s facial recognition system achieved parity in accuracy across demographic groups through deliberate data enhancement efforts, eliminating previous performance disparities.
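A systematic review for representation gaps can start very simply. The sketch below compares each group's share of a training set against a reference share and flags shortfalls. The function name, the 5-point tolerance, and the toy data are all assumptions made for illustration; a real audit would take reference shares from a trusted source such as census data.

```python
from collections import Counter


def representation_gaps(groups, reference_shares, tolerance=0.05):
    """Return groups whose dataset share falls short of a reference share.

    groups: one group label per training record.
    reference_shares: expected share per group (assumed to be supplied).
    tolerance: allowed shortfall before a group is flagged.
    """
    if not groups:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    total = len(groups)
    gaps = {}
    for group, expected in reference_shares.items():
        actual = counts.get(group, 0) / total
        if expected - actual > tolerance:
            gaps[group] = round(expected - actual, 3)
    return gaps


# Hypothetical toy dataset: 80 records from group A, 15 from B, 5 from C.
labels = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.6, "B": 0.25, "C": 0.15}
print(representation_gaps(labels, reference))
```

In this toy case groups B and C are each flagged as about ten percentage points under-represented, which is the signal that would prompt targeted data collection or synthetic augmentation.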

2. Continuous Bias Monitoring Frameworks

Deploy specialized tools such as IBM’s AI Fairness 360 or Google’s Responsible AI Toolkit

Establish automated alert systems for detecting emerging bias patterns
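An automated alert of this kind can be sketched without any specialized toolkit. The example below computes the selection rate per group and raises a flag when the lowest rate falls below 80% of the highest, a heuristic commonly known as the four-fifths rule. The function names, the threshold default, and the sample predictions are illustrative assumptions, and this is not the API of AI Fairness 360 or any other library.

```python
def selection_rates(predictions, groups):
    """Share of positive predictions (1) per group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_alert(predictions, groups, threshold=0.8):
    """Return (ratio, alert): alert is True when min rate / max rate < threshold."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return ratio, ratio < threshold


# Hypothetical batch of model decisions (1 = favorable outcome).
preds = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
grps = ["m", "m", "m", "m", "m", "f", "f", "f", "f", "f"]
ratio, alert = disparate_impact_alert(preds, grps)
print(f"ratio={ratio:.2f}, alert={alert}")
```

Running a check like this on every scoring batch, and logging the ratio over time, is one simple way to notice bias that emerges after deployment.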

3. Multidisciplinary Development Teams

Incorporate social scientists and ethics specialists into AI development processes

Mandate bias awareness training for technical staff

Form independent ethics review committees

4. Explainable AI Methodologies

Implement decision visualization techniques

Develop clear, accessible explanations of algorithmic processes

Maintain comprehensive documentation of model development and testing

5. Rigorous Testing Protocols

Conduct pre-deployment bias stress testing

Establish ongoing performance monitoring systems

Create structured feedback mechanisms with stakeholder communities
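One form of pre-deployment stress testing is a counterfactual check: change only the protected attribute on each record and count how often the decision changes. The sketch below assumes the model is any callable that takes a record dictionary and returns a label; the toy model and applicant data are hypothetical and deliberately biased so the test has something to find.

```python
def counterfactual_flip_rate(model, records, attribute, values):
    """Share of records whose prediction changes when only `attribute` changes."""
    flipped = 0
    for record in records:
        # Score the same record once per possible attribute value.
        outcomes = {model({**record, attribute: v}) for v in values}
        if len(outcomes) > 1:
            flipped += 1
    return flipped / len(records)


# Hypothetical stand-in model that (wrongly) uses the protected attribute.
def toy_model(record):
    bonus = 5 if record["gender"] == "male" else 0
    return int(record["score"] + bonus >= 70)


applicants = [{"score": s, "gender": "female"} for s in (50, 66, 68, 72, 90)]
rate = counterfactual_flip_rate(toy_model, applicants, "gender", ["male", "female"])
print(f"{rate:.0%} of decisions change when only gender changes")
```

A non-zero flip rate shows direct dependence on the attribute. A zero rate does not prove fairness, since proxies for the attribute can still carry bias, so this complements rather than replaces the monitoring described above.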

The Organizational Value Proposition

Firms implementing robust bias mitigation protocols report:

25% improvement in customer trust metrics (Accenture, 2023)

40% reduction in compliance-related costs (Deloitte, 2024)

Threefold increase in successful AI adoption rates

Conclusion: Building Responsible AI Systems

Addressing algorithmic bias requires more than technical solutions — it demands a comprehensive organizational commitment to ethical AI development. By implementing rigorous data practices, continuous monitoring systems, and multidisciplinary oversight, enterprises can develop AI systems that not only avoid harm but actively promote fairness and equity.

The path forward requires sustained investment in both technological solutions and governance frameworks to ensure AI systems meet the highest standards of fairness and accountability. Organizations that prioritize these efforts will be better positioned to harness AI’s full potential while maintaining stakeholder trust and regulatory compliance.

#artificial intelligence#machine learning#technology#deep learning#ai#web#web developers#tech#techinnovation#ai generated#ethical ai#ai bias#Artificial Intelligence Bias#bias#ml bias

0 notes

Text

Beyond Security: How AI-Based Video Analytics Are Enhancing Modern Business Operations

New Post has been published on https://thedigitalinsider.com/beyond-security-how-ai-based-video-analytics-are-enhancing-modern-business-operations/

AI-based solutions are becoming increasingly common, but those in the security industry have been leveraging AI for years—they’ve just been using the word “analytics.” As businesses seek new ways to use AI to create a competitive advantage, many are beginning to recognize that video devices represent an increasingly valuable data source—one that can generate actionable business intelligence insights. As processing power improves and chipsets become more advanced, modern IP cameras and other security devices can support AI-powered analytics capabilities that can do far more than identify trespassers and shoplifters.

Many businesses are already leveraging AI-based analytics to improve efficiency and productivity, reduce liability, and better understand their customers. Video analytics can help enterprises identify ways to improve employee productivity and staffing efficiency, streamline the layout of stores, factories, and warehouses, identify in-demand products and services, detect malfunctioning or poorly maintained equipment before it breaks, and more. These new analytics capabilities are being designed with business intelligence and operational efficiency in mind—and they are increasingly accessible to organizations of all sizes.

The Growing Accessibility of AI in Video Surveillance

Analytics has always had clear applications in the security industry, and the evolution from basic intelligence and video motion detection to more advanced object analytics and deep learning has made it possible for modern analytics to identify suspicious or criminal behavior or to detect suspicious sounds like breaking glass, gunshots, or cries for help. Today’s analytics can detect these events in real time, alerting security teams immediately and dramatically reducing response times. The emergence of AI has allowed security teams to be significantly more proactive, allowing them to make quick decisions based on accurate, real-time information. Not long ago, only the most advanced surveillance devices were powerful enough to run the AI-based analytics needed to enable those capabilities—but today, the landscape has changed.

The advent of deep learning processing units (DLPUs) has significantly enhanced the processing power of surveillance devices, allowing them to run advanced analytics at the network edge. Just a few years ago, the bandwidth and storage required to record, upload, and analyze thousands of hours of video could be prohibitively expensive. Today, that’s no longer the case: modern devices no longer need to send full video recordings to the cloud—only the metadata necessary for classification and analysis. As a result, the bandwidth, storage, and hardware footprint required to take advantage of AI-based analytics capabilities have all dramatically decreased—significantly reducing operational costs and making the technology accessible to businesses of all sizes, whether they employ a network of three cameras or three thousand.

As a result, the range of potential customers has expanded significantly—and those customers aren’t just looking for security applications, but business ones as well. Since DLPUs are effectively standard on modern surveillance devices, customers are increasingly looking to leverage those capabilities to gain a competitive advantage in addition to protecting their locations. The democratization of AI in the security industry has led to a significant expansion of use cases as developers look to satisfy businesses turning to video analytics to address a wider range of security and non-security challenges.

How Organizations Are Using AI to Enhance Their Operations

It’s important to emphasize that part of what makes the emergence of more business-focused use cases for AI-based video analytics notable is the fact that most businesses are already familiar with the basic technology. For example, retailers already using video analytics to protect their stores from shoplifters will be delighted to learn that they can use similar capabilities to monitor customers entering and leaving the store, identify high- and low-traffic periods, and use that data to adjust their staffing needs accordingly. They can use video analytics to alert employees when a lengthy queue is forming, when an empty shelf needs to be restocked, or if the layout of the store is causing unnecessary congestion. By embracing business-focused analytics alongside security-focused ones, retailers can improve staffing efficiency, create more effective store layouts, and enhance the customer experience.
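As a minimal sketch of the retail scenario above, the code below counts store entries per hour from camera metadata and decides when a queue is long enough to alert staff. The event format, field names, and the queue threshold of five are assumptions made for illustration; real cameras and video management systems expose their own metadata schemas.

```python
from collections import Counter
from datetime import datetime


def hourly_entries(events):
    """Count 'enter' events per hour of day from camera metadata."""
    return Counter(
        datetime.fromisoformat(e["time"]).hour
        for e in events if e["type"] == "enter"
    )


def queue_alert(queue_length, max_length=5):
    """Return True when the queue is long enough to alert staff."""
    return queue_length > max_length


# Hypothetical metadata events emitted by a door-facing camera.
events = [
    {"time": "2025-01-06T09:15:00", "type": "enter"},
    {"time": "2025-01-06T12:05:00", "type": "enter"},
    {"time": "2025-01-06T12:20:00", "type": "enter"},
    {"time": "2025-01-06T12:40:00", "type": "exit"},
    {"time": "2025-01-06T12:55:00", "type": "enter"},
]
counts = hourly_entries(events)
busiest_hour, visitors = counts.most_common(1)[0]
print(f"Busiest hour: {busiest_hour}:00 with {visitors} entries")
print("Queue alert:", queue_alert(queue_length=7))
```

The same hourly counts, collected over several weeks, are what a retailer would feed into staffing decisions.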

Of course, retailers are just the tip of the iceberg. Businesses in nearly every industry can benefit from modern video analytics use cases. Manufacturers, for example, can monitor factory floors to identify inefficiencies and choke points. They can use thermal cameras to detect overheating machinery, allowing maintenance personnel to address problems before they can cause significant damage. In many cases, they can even monitor assembly lines for defective or poorly made products, providing an additional layer of quality assurance protection. Some devices may even be able to monitor for chemical leaks, overheating equipment, smoke, and other signs of danger, saving organizations from potentially dangerous (and costly) incidents. This has clear applications in industries ranging from manufacturing and healthcare to housing and critical infrastructure.
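The overheating case can be illustrated with a simple rolling baseline: flag any thermal reading that exceeds the average of the previous few readings by a set margin. The window size, the 10 °C margin, and the sample temperatures are hypothetical values chosen for the example, not vendor recommendations.

```python
from statistics import mean


def overheating_alerts(readings, window=3, margin_c=10.0):
    """Yield (index, temperature) when a reading exceeds the rolling baseline.

    The baseline is the mean of the previous `window` readings.
    """
    for i in range(window, len(readings)):
        baseline = mean(readings[i - window:i])
        if readings[i] - baseline > margin_c:
            yield i, readings[i]


# Hypothetical thermal-camera readings for one machine, in degrees Celsius.
temps_c = [61.0, 62.0, 61.5, 63.0, 62.5, 78.0, 80.0]
print(list(overheating_alerts(temps_c)))
```

Comparing against a recent baseline rather than a fixed limit lets the same check work for machines that normally run at different temperatures.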

The ability to generate insights and improve operations extends beyond traditional businesses and into areas like healthcare. Hospitals and healthcare providers are now leveraging analytics to engage in virtual patient monitoring, allowing them to have eyes on their patients on a 24-hour basis. Using a combination of video and audio analytics, they can automatically detect signs of distress such as coughing, labored breathing, and cries of pain. They can also generate an alert if a high-risk patient attempts to leave their bed or exit the room, allowing caregivers or security teams to respond immediately. Not only does this improve patient outcomes, but it can also significantly reduce liability on slip/trip/fall cases. Similar technology can also be used to improve compliance outcomes, ensuring emergency exits remain clear and avoiding other potentially finable offenses in healthcare and other industries. The opportunities to reduce costs and improve outcomes are expanding every day.

Maximizing AI in the Present and Future

The shift toward leveraging surveillance devices for business intelligence and operations purposes has happened quickly, driven by the fact that most organizations are already familiar with the equipment they need to take advantage of it. And with businesses of all sizes, in nearly every industry, increasingly turning to video analytics to enhance both their security capabilities and their business operations, the development of new, AI-based analytics is unlikely to slow anytime soon.

Best of all, the market is still growing. Even today, roughly 80% of security budgets are spent on human labor, including monitoring, guarding, and maintenance capabilities. As AI-based video analytics become increasingly widespread, that will change quickly—and businesses will be able to streamline their business intelligence and operations capabilities in a similar manner. As AI development continues and new, business-focused use cases emerge, organizations should ensure they are positioned to get the most out of analytics—both now and into the future.

#Accessibility#ai#AI development#AI-powered#Analysis#Analytics#applications#audio#axis communications#Behavior#Best Of#budgets#Business#Business Intelligence#Cameras#change#chemical#Cloud#compliance#course#critical infrastructure#customer experience#data#Deep Learning#democratization#democratization of AI#detection#developers#development#devices


AI vs. AGI: What’s the Difference?

Artificial Intelligence (AI) is transforming industries, but its evolution is still in progress. Artificial General Intelligence (AGI) is the next frontier—capable of independent reasoning and learning. While AI excels at specific tasks, AGI aims to replicate human-like cognitive abilities. Understanding the key differences between AI and AGI is essential as technology advances toward a more autonomous future.

For a deeper insight into the role of AGI and its potential impact, check out this expert discussion.

What is Artificial Intelligence (AI)?

AI is designed for narrow applications, such as facial recognition, chatbots, and recommendation systems.

AI models like GPT-4 and DALL·E process data and generate outputs based on patterns learned from their training data.

AI lacks self-awareness and the ability to learn beyond its training data.

AI improves over time through machine learning algorithms.

Deep learning enables AI to recognize patterns and automate decision-making.

AI remains dependent on human intervention and structured data for continuous improvement.

Common applications of AI include:

Healthcare: AI-powered diagnostics and drug discovery.

Finance: Fraud detection and algorithmic trading.

Autonomous Vehicles: AI assists in self-driving technology but lacks human intuition.

What is Artificial General Intelligence (AGI)?

AGI aims to develop independent reasoning, decision-making, and adaptability.

Unlike AI, AGI would be able to understand and perform any intellectual task that a human can.

AGI requires self-learning mechanisms and consciousness-like functions.

AGI is designed to acquire knowledge across multiple domains without explicit programming.

It would be able to solve abstract problems and improve its performance independently.

AGI systems could modify and create new learning strategies beyond human input.

Potential applications of AGI include:

Advanced Scientific Research: AGI could revolutionize space exploration, climate science, and quantum computing.

Fully Autonomous Robots: Machines capable of human-like decision-making and reasoning.

Ethical & Philosophical Thinking: AGI could assist in policy-making and ethical dilemmas with real-world implications.

Key Differences Between AI & AGI

Scope:

AI is narrow and task-specific.

AGI has general intelligence across all tasks.

Learning:

AI typically relies on supervised, unsupervised, or reinforcement learning with predefined objectives.

AGI learns independently without predefined rules.

Adaptability:

AI is limited to pre-defined parameters.

AGI can self-improve and apply learning to new situations.

Human Interaction:

AI supports human decision-making.

AGI can function without human intervention.

Real-World Application:

AI is used in chatbots, automation, and image processing.

AGI would enable autonomous research, problem-solving, and creativity.

Challenges in Achieving AGI

Ethical & Safety Concerns:

Uncontrolled AGI could lead to unpredictable consequences.

AI governance and regulation must ensure safe and responsible AI deployment.

Computational & Technological Barriers:

AGI is expected to require far more computing power than current AI.

Quantum computing advancements may be needed to accelerate AGI development.

The Role of Human Oversight:

Scientists must establish fail-safe measures to prevent AGI from surpassing human control.

Governments and AI research institutions must collaborate on AGI ethics and policies.

Tej Kohli’s Perspective on AGI Development

Tech investor and entrepreneur Tej Kohli believes AGI is the next major revolution in AI, but its development must be approached with caution and responsibility. His insights include:

AGI should complement, not replace, human intelligence.

Investments in AGI must prioritize ethical development to prevent risks.

Quantum computing and biotech will play a crucial role in shaping AGI’s capabilities.

Conclusion

AI is already transforming industries, but AGI represents the future of true machine intelligence. While AI remains task-specific, AGI aims to match human-level cognition and problem-solving. Achieving AGI will require breakthroughs in computing, ethics, and self-learning technologies.

#Artificial Intelligence#Tej Kohli#AI vs AGI#Machine Learning#Deep Learning#Future of AI#AGI Development#AI Ethics#Quantum Computing#Autonomous Systems#AI Innovation


AI Deep Learning Accelerates Drug Development

Deep learning is a subset of artificial intelligence (AI) that mimics the neural networks of the human brain to learn from large amounts of data, enabling machines to solve complex problems. Deep learning technology has made significant progress in the biomedical field. Researchers have developed a series of applications based on deep learning for disease diagnosis, protein design, and medical image recognition. The pharmaceutical industry is also beginning to recognize the importance of deep learning technology, hoping to leverage it to accelerate drug development and reduce costs.

1. Application of Deep Learning in Drug Development

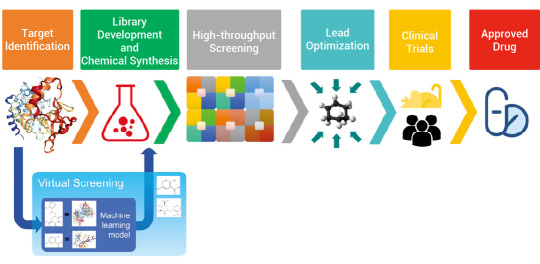

Previous studies have demonstrated that deep learning technology offers significant advantages in several key areas of drug development, including optimization of chemical synthesis routes, ADME-Tox prediction, target identification and validation, and generation of novel molecules.

Figure 1. A broad overview of drug development and the place of virtual screening in this process[1].

1.1 Virtual Screening: Protein-Ligand Affinity

Deep learning can learn and identify potential binding patterns by comparing known protein-small molecule binding instances. During the training process, the deep learning models continuously optimize their parameters to enhance the accuracy and reliability of their predictions.

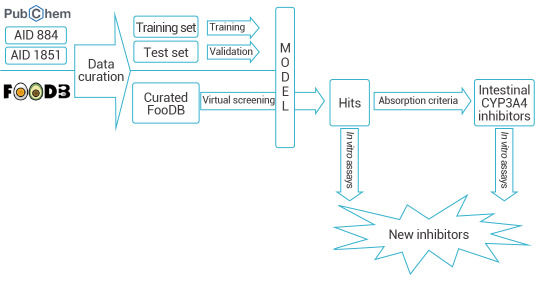

Yelena Guttman et al. developed a CYP3A4 inhibitor prediction model based on the DeepChem framework. They created a KNIME workflow for data curation and employed the DeepChem module in Maestro to build a categorical classifier. This classifier was then used to virtually screen approximately 68,900 compounds from the FooDB database, leading to the successful identification of two new CYP3A4 inhibitors[2].

Figure 2. Prediction of CYP3A4 Inhibitors Based on DeepChem[2].

A workflow in KNIME analytics platform 4.0.314 was created to prepare and analyze the virtual screening.

1.2 ADME-Tox Prediction

Poor pharmacokinetic properties and toxicity issues are considered the main reasons for terminating the development of drug candidates. Thus, there is an increasing need for robust screening methods that provide early information on the absorption, distribution, metabolism, excretion, and toxicity (ADME-Tox) properties of compounds. Many studies have shown that, by leveraging extensive ADME-Tox datasets, deep learning models can automatically identify and extract complex relationships between compound features and their corresponding ADME-Tox properties. These trained models can then be used to predict the ADME-Tox properties of new compounds, thereby accelerating the process of drug discovery and development.

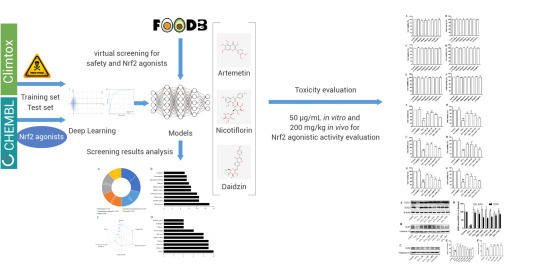

Liu et al. utilized directed message passing neural networks (D-MPNN, Chemprop) to predict dietary-derived Nrf2 agonists and the safety of compounds in the FooDB database. They successfully identified Nicotiflorin, which exhibits both Nrf2 agonistic activity and safety; this was validated in vitro and in vivo[3].

Figure 3. Using Deep-Learning Model D-MPNN to Assess Drug Safety[3].

1.3 Optimize Chemical Synthesis Routes

In recent years, artificial intelligence (AI) has begun to bring revolutionary changes to chemical synthesis. However, the lack of suitable ways of representing chemical reactions and the scarcity of reaction data have limited the wider application of AI to reaction prediction. Deep learning is increasingly being applied to chemical synthesis, enabling the automatic identification and extraction of features and patterns from large datasets. This capability enhances the prediction of the efficiency and selectivity of new synthesis routes, significantly accelerating drug development and production.

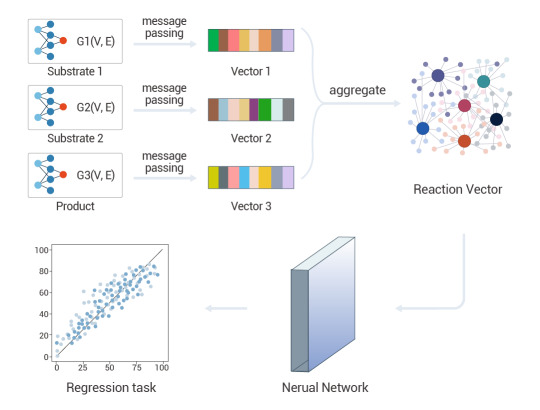

Li et al. introduced a novel reaction representation, GraphRXN, for reaction prediction.

Figure 4. A deep-learning graph framework, GraphRXN, was proposed to be capable of learning reaction features and predicting reactivity[4].

2. Drug Screening Based on Deep Learning

The application of deep learning in the field of virtual screening primarily involves using neural networks to predict the activity or properties of compounds, thereby identifying potential candidate drugs or materials in a virtual environment. Commonly used deep learning models include Convolutional Neural Networks (CNN), Graph Neural Networks (GNN), Recurrent Neural Networks (RNN), Generative Adversarial Networks (GAN) and Transformer models.

CNNs excel at identifying patterns and features in structured data, such as chemical structures represented as images or graphs. Recent studies have demonstrated their effectiveness in predicting drug-drug interactions and assessing molecular properties by analyzing chemical substructures and other relevant features.

GNNs are designed to work directly with graph-structured data, making them particularly suitable for representing molecular structures where atoms are nodes and bonds are edges. They have shown remarkable performance in drug discovery by capturing the complex relationships between molecules and their properties.
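To make the "atoms are nodes, bonds are edges" picture concrete, here is a minimal sketch of one neighbour-aggregation step on a three-atom graph. It is an illustration only: the example molecule, the one-hot features, and the unweighted sum are assumptions chosen for readability, whereas real GNNs apply learned weights and nonlinearities at each step.

```python
# Heavy atoms of ethanol (C-C-O): nodes are atoms, edges are bonds.
atoms = ["C", "C", "O"]
bonds = [(0, 1), (1, 2)]


def build_adjacency(num_atoms, bonds):
    """Build an undirected adjacency list from a list of bonded atom pairs."""
    adj = {i: [] for i in range(num_atoms)}
    for a, b in bonds:
        adj[a].append(b)
        adj[b].append(a)
    return adj


def one_hot(symbol, vocab=("C", "N", "O")):
    """Encode an element symbol as a one-hot vector over a tiny vocabulary."""
    return [1.0 if symbol == v else 0.0 for v in vocab]


def message_passing_step(features, adj):
    """Update each atom to its own features plus the sum of its neighbours'."""
    updated = []
    for i, own in enumerate(features):
        agg = list(own)
        for j in adj[i]:
            agg = [x + y for x, y in zip(agg, features[j])]
        updated.append(agg)
    return updated


adj = build_adjacency(len(atoms), bonds)
features = [one_hot(s) for s in atoms]
updated = message_passing_step(features, adj)
print(updated)
```

After one step, each atom's vector already reflects its immediate chemical neighbourhood; stacking several steps lets information travel further along the bond graph.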

RNNs are designed to handle sequential data, making them particularly effective for tasks where context from previous inputs is essential.

GANs consist of two neural networks—a generator and a discriminator—that work against each other to create new data instances.

Transformers have gained popularity for their ability to handle sequential data and capture long-range dependencies, making them suitable for tasks like natural language processing and time-series analysis.

In summary, deep learning is revolutionizing drug development by enhancing efficiency, accuracy, and cost-effectiveness across multiple stages of the process. As technology continues to evolve, its integration into pharmaceutical research is likely to deepen, paving the way for innovative therapeutic solutions.

Product Recommendation

Virtual Screening

MedChemExpress (MCE) provides a high-quality virtual screening service that enables researchers to identify the most promising candidates. Based on the laws of quantum and molecular physics, our virtual screening services can achieve highly accurate results. Our optimized virtual screening protocol can reduce the size of the chemical library to be screened experimentally, increase the likelihood of finding innovative hits in a faster and less expensive manner, and mitigate the risk of failure in the lead optimization process.

50K Diversity Library

MCE 50K Diversity Library consists of 50,000 lead-like compounds selected for calculated properties such as good solubility (-3.2 < logP < 5), oral bioavailability (RotB <= 10), and drug transportability (PSA < 120). These compounds were selected by dissimilarity search with an average Tanimoto Coefficient of 0.52. There are 36,857 unique scaffolds, and each scaffold has 1 to 7 compounds. What’s more, compounds with the same scaffold carry as many different functional groups as possible, which broadens the chemical space covered.
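The three property windows quoted above can be expressed as a simple filter. The sketch below is a hypothetical illustration, not MCE's actual selection pipeline: the `Compound` class, the compound names, and all property values are invented, and in practice the descriptors would be calculated by a cheminformatics toolkit.

```python
from dataclasses import dataclass


@dataclass
class Compound:
    name: str
    logp: float            # calculated logP
    rotatable_bonds: int   # RotB
    psa: float             # polar surface area


def passes_filters(c):
    """Apply the thresholds quoted in the text: logP, RotB, and PSA."""
    return (-3.2 < c.logp < 5) and (c.rotatable_bonds <= 10) and (c.psa < 120)


# Invented example compounds; none of these values come from the library.
candidates = [
    Compound("cmpd_A", 2.1, 4, 75.0),    # inside every window
    Compound("cmpd_B", 5.6, 3, 60.0),    # logP too high
    Compound("cmpd_C", 1.0, 12, 90.0),   # too many rotatable bonds
    Compound("cmpd_D", -1.0, 6, 119.9),  # just inside the PSA limit
]

selected = [c.name for c in candidates if passes_filters(c)]
print(selected)
```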

MegaUni 10M Virtual Diversity Library

With MCE's 40,662 Building Blocks (BBs), covering around 273 reaction types, more than 40 million molecules were generated. Compounds that comply with Ro5 criteria were selected. Inappropriate chemical structures, such as those with PAINS motifs or poor synthetic accessibility, were removed. Based on Morgan Fingerprint, molecular clustering analysis was carried out, and molecules close to each clustering center were extracted to form this drug-like and synthesizable diversity library. These selected molecules have 805,822 unique Bemis-Murcko Scaffolds (BMS) with diversified chemical space. This library is highly recommended for AI-based lead discovery, ultra-large virtual screening and novel lead discovery.

MegaUni 50K Virtual Diversity Library

MegaUni 50K Virtual Diversity Library consists of 50,000 novel, synthetically accessible, lead-like compounds. With MCE's 40,662 Building Blocks, covering around 273 reaction types, more than 40 million molecules were generated. Based on Morgan Fingerprint and Tanimoto Coefficient, molecular clustering analysis was carried out, and molecules closest to each clustering center were extracted to form a drug-like and synthesizable diversity library. The selected 50,000 drug-like molecules have 46,744 unique Bemis-Murcko Scaffolds (BMS), each containing only 1-3 compounds. This diverse library is highly recommended for virtual screening and novel lead discovery.
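For readers unfamiliar with the Tanimoto Coefficient used in the clustering above, the sketch below computes it for fingerprints represented as sets of "on" bit positions. The fingerprints are made up for illustration, and returning 0.0 for two empty fingerprints is an assumed convention; actual Morgan fingerprints would come from a cheminformatics toolkit.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto coefficient: shared on-bits divided by total distinct on-bits."""
    a, b = set(fp_a), set(fp_b)
    union = a | b
    if not union:
        return 0.0  # assumed convention when both fingerprints are empty
    return len(a & b) / len(union)


def closest_to_center(center_fp, member_fps):
    """Return the index of the member most similar to a cluster center."""
    return max(range(len(member_fps)),
               key=lambda i: tanimoto(center_fp, member_fps[i]))


# Made-up fingerprints, given as sets of on-bit indices.
fp1 = {1, 5, 9, 12}
fp2 = {1, 5, 9, 20}
fp3 = {2, 7}

print(tanimoto(fp1, fp2))  # 3 shared bits out of 5 distinct bits
print(tanimoto(fp1, fp3))  # no shared bits
print(closest_to_center(fp1, [fp3, fp2]))
```

A value near 1 means two molecules share most of their substructure bits, while a low average across a library points to a diverse set.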

References

[1] Rifaioglu AS, et al. Brief Bioinform. 2019 Sep;20(5):1878-1912.

[2] Guttman Y, et al. J Agric Food Chem. 2022 Mar;70(8):2752-2761.

[3] Liu S, et al. J Agric Food Chem. 2023 May;71(21):8038-8049.

[4] Li B, et al. J Cheminform. 2023 Aug;15(1):72.

[5] Segler MHS, et al. Planning chemical syntheses with deep neural networks and symbolic AI. Nature. 2018 Mar;555(7698):604-610.

#biochemistry#chemistry#inhibitor#business#marketing#kit#developers & startups#AI Deep Learning Accelerates Drug Development


4me4you features digital creator Dean Bianchi aka “spipasucci_ai” - “Surreal realities thru AI”.

Dean Bianchi is an eCommerce Director at a small company in Northeast Ohio by day, but by night, he is a passionate digital creator, blending the technical aspects of current digital tools with a unique and often whimsical aesthetic. His creative journey has evolved over the years, influenced by a deep fascination with music, film, and storytelling from an early age.

Growing up, Dean was drawn to alternative media—late-night cable shows, indie films, and local college radio—that presented a world outside the mainstream. His love for these art forms only grew as he studied film in the late 80s and early 90s, forging friendships with other artists, writers, musicians, and filmmakers. During this time, he explored various creative mediums, including 8mm and 16mm film, collage art, poetry, and writing.

Though Dean took a detour in his creative journey for a while, focusing on his ‘regular’ job, he never fully let go of his creative passions. His career in digital tools—Photoshop, Premiere, interactive Flash, and web development—allowed him to keep learning and nurturing his creative side.

In the past two years, Dean’s world was transformed by the emergence of generative AI. Starting with Leonardo AI, he was instantly inspired by the new technical possibilities and the ability to bring his quirky, odd ideas to life. This sparked a creative reawakening, and as he explored platforms like MidJourney and Instagram, he found a supportive and inspiring community of digital creators. Their feedback pushed him to dive deeper into his creative approach, and he’s fully committed to pursuing this new creative outlet.

Recently, Dean has integrated generative AI video into his work, connecting back to his early passion for film and video. This has allowed him to blend his years of video education and experimentation with this exciting new technology. His creative aesthetic is deeply influenced by a fascination with strange, surreal imagery and motion, often mixing dark tones with whimsical or silly contexts. Drawing inspiration from artists like David Lynch, Michel Gondry, and Mr. Bungle, Dean’s work is known for its strong sense of color, randomness, and a sense of being a glimpse into an alternate reality.

Looking ahead, Dean is eager to integrate his creative world into his day-to-day life, whether through a career shift or by building visibility and engagement with the growing digital creator community. He has already begun work on a short film, joined Hailuo’s CPP, launched a YouTube channel for his video work, and is in the process of launching a website and print store. He also enjoys sharing tips and insights with others and is exploring ways to make that knowledge more accessible to a wider audience.

Grateful for the creative outlet that generative AI has provided, Dean is excited to continue sharing his ‘weirdness’ and growing alongside the digital creator community on this thrilling journey.

SEE MORE:

INSTAGRAM: https://www.instagram.com/spipasucci_ai/

WEBSITE: https://spipasucciai.com

YOUTUBE: https://www.youtube.com/@spipasucci_ai



The Local AI Revolution: Open WebUI and the Power of NVIDIA GPUs in 2025

In an era dominated by cloud-based artificial intelligence, we are witnessing a quiet revolution: bringing AI back to personal computers. The emergence of Open WebUI, together with the ability to run large language models (LLMs) locally on NVIDIA GPUs, is transforming the way users interact with artificial intelligence. This approach promises more…

#AI autonomy#AI eficient energetic#AI fără abonament#AI fără cloud#AI for automation#AI for coding#AI for developers#AI for research#AI on GPU#AI optimization on GPU#AI pe desktop#AI pe GPU#AI pentru automatizare#AI pentru cercetare#AI pentru dezvoltatori#AI pentru programare#AI privacy#AI without cloud#AI without subscription#alternative la ChatGPT#antrenare AI personalizată#autonomie AI#ChatGPT alternative#confidențialitate AI#costuri AI reduse#CUDA AI#deep learning local#desktop AI#energy-efficient AI#future of local AI
