#AIaccelerators


Text

Amazon SageMaker HyperPod Presents Amazon EKS Support

Amazon SageMaker HyperPod

Cut the training duration of foundation models by up to 40% and scale effectively across over a thousand AI accelerators.

We are happy to announce today that Amazon SageMaker HyperPod, a purpose-built infrastructure with resilience at its core, now supports Amazon Elastic Kubernetes Service (EKS) for foundation model (FM) development. With this new feature, users can use EKS to orchestrate HyperPod clusters, combining the strength of Kubernetes with the resilient environment of Amazon SageMaker HyperPod, which is designed for training large models. By scaling efficiently across more than a thousand artificial intelligence (AI) accelerators, Amazon SageMaker HyperPod can reduce training time by up to 40%.


SageMaker HyperPod: What is it?

Amazon SageMaker HyperPod eliminates the undifferentiated heavy lifting associated with building and optimizing machine learning (ML) infrastructure. It is pre-configured with SageMaker’s distributed training libraries, which automatically split training workloads across more than a thousand AI accelerators so that workloads can be processed in parallel for better model performance. SageMaker HyperPod also saves checkpoints periodically so that your FM training can continue uninterrupted.

You no longer need to actively oversee this process because it automatically recognizes hardware failure when it occurs, fixes or replaces the problematic instance, and continues training from the most recent checkpoint that was saved. Up to 40% less training time is required thanks to the robust environment, which enables you to train models in a distributed context without interruption for weeks or months at a time. The high degree of customization offered by SageMaker HyperPod enables you to share compute capacity amongst various workloads, from large-scale training to inference, and to run and scale FM tasks effectively.
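The checkpoint-and-resume behavior described above can be illustrated with a minimal sketch. This is not HyperPod's implementation or API: the file format, the `CHECKPOINT_EVERY` interval, the toy loss update, and every function name here are assumptions made up for illustration.

```python
import json
import os

CHECKPOINT_EVERY = 3  # hypothetical interval, in training steps


def save_checkpoint(path, step, state):
    """Write the checkpoint atomically so a crash never leaves a half-written file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return (step, state) from the last checkpoint, or a fresh start if none exists."""
    if not os.path.exists(path):
        return 0, {"loss": 1.0}
    with open(path) as f:
        data = json.load(f)
    return data["step"], data["state"]


def train(path, total_steps, fail_at=None):
    """Run a toy training loop that starts from the latest checkpoint."""
    step, state = load_checkpoint(path)
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated hardware failure at step {step}")
        state["loss"] *= 0.9  # stand-in for one real optimizer step
        step += 1
        if step % CHECKPOINT_EVERY == 0:
            save_checkpoint(path, step, state)
    return step, state


def run_with_auto_resume(path, total_steps, fail_at):
    """Restart training after a failure, picking up at the last saved checkpoint."""
    try:
        return train(path, total_steps, fail_at=fail_at)
    except RuntimeError:
        # A real system would repair or replace the faulty instance here.
        return train(path, total_steps)
```

Only the steps completed since the last checkpoint are repeated after a failure, which is why a shorter checkpoint interval trades extra I/O for less lost work.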

Advantages of the Amazon SageMaker HyperPod

Distributed training with a focus on efficiency for big training clusters

Because Amazon SageMaker HyperPod comes preconfigured with Amazon SageMaker distributed training libraries, you can expand training workloads more effectively by automatically dividing your models and training datasets across AWS cluster instances.

Optimum use of the cluster’s memory, processing power, and networking infrastructure

Using two strategies, data parallelism and model parallelism, Amazon SageMaker distributed training library optimizes your training task for AWS network architecture and cluster topology. Model parallelism divides models that are too big to fit on one GPU into smaller pieces, which are then divided among several GPUs for training. To increase training speed, data parallelism divides huge datasets into smaller ones for concurrent training.
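As a rough illustration of the two strategies, the sketch below splits a dataset across workers (data parallelism) and splits a model's layers across devices (model parallelism). It is a toy, not the SageMaker distributed training library: the function names, the interleaved sharding scheme, and the layer names are all assumptions.

```python
def shard_dataset(samples, num_workers):
    """Data parallelism: give each worker an interleaved slice of the dataset."""
    return [samples[rank::num_workers] for rank in range(num_workers)]


def partition_layers(layers, num_devices):
    """Model parallelism: assign a contiguous group of layers to each device."""
    base, extra = divmod(len(layers), num_devices)
    stages, start = [], 0
    for device in range(num_devices):
        # The first `extra` devices take one additional layer each.
        size = base + (1 if device < extra else 0)
        stages.append(layers[start:start + size])
        start += size
    return stages


samples = list(range(10))
layers = ["embed", "block1", "block2", "block3", "head"]  # hypothetical model

print(shard_dataset(samples, 4))
print(partition_layers(layers, 2))
```

In practice the two are combined: each model-parallel group holds one copy of the partitioned model, and several such groups train on different data shards at the same time.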


Robust training environment with no disruptions

You can train FMs continuously for months on end with SageMaker HyperPod because it automatically detects, diagnoses, and recovers from problems, creating a more resilient training environment.

Customers can now use a Kubernetes-based interface to manage their clusters with Amazon SageMaker HyperPod. This integration makes it easy to switch between Slurm and Amazon EKS in order to optimize different workloads, including inference, experimentation, training, and fine-tuning. The CloudWatch Observability EKS add-on provides comprehensive monitoring capabilities, offering insights into low-level node metrics such as CPU, network, and disk on a single dashboard. This improved observability covers container-specific usage, node-level metrics, pod-level performance, and resource utilization for the entire cluster, which makes troubleshooting and optimization more effective.

Since its launch at re:Invent 2023, Amazon SageMaker HyperPod has established itself as the go-to option for businesses and startups using AI to effectively train and implement large-scale models. The distributed training libraries from SageMaker, which include Model Parallel and Data Parallel software optimizations to assist cut training time by up to 20%, are compatible with it. With SageMaker HyperPod, data scientists may train models for weeks or months at a time without interruption since it automatically identifies, fixes, or replaces malfunctioning instances. This frees up data scientists to concentrate on developing models instead of overseeing infrastructure.

Because of its scalability and abundance of open-source tooling, Kubernetes has gained popularity for machine learning (ML) workloads. The integration of Amazon EKS with Amazon SageMaker HyperPod builds on these benefits. When developing applications, including those needed for generative AI use cases, organizations frequently rely on Kubernetes because it enables the reuse of capabilities across environments while adhering to compliance and governance standards. With today’s announcement, customers can scale and maximize resource utilization across more than a thousand AI accelerators. This flexibility improves the developer experience, containerized application management, and the workflows for FM training and inference.

With comprehensive health checks, automated node recovery, and job auto-resume features, Amazon EKS support in Amazon SageMaker HyperPod strengthens resilience and keeps training running without interruption for large-scale and/or long-running jobs. Although customers can use their own CLI tools, the optional HyperPod CLI, built for Kubernetes environments, can streamline job management. Integration with Amazon CloudWatch Container Insights enables advanced observability, offering deeper insights into the health, utilization, and performance of clusters. Furthermore, data scientists can automate machine learning workflows with tools like Kubeflow. The integration also includes Amazon SageMaker managed MLflow, which provides a reliable solution for experiment tracking and model management.

In summary, the Amazon SageMaker HyperPod cluster is created and fully managed by the HyperPod service, eliminating the undifferentiated heavy lifting of building and optimizing machine learning infrastructure. The cloud admin creates this cluster via the HyperPod cluster API. Amazon EKS orchestrates the HyperPod nodes much as Slurm does, giving users a familiar Kubernetes-based administrator experience.

Important information

The following are some essential details regarding Amazon EKS support in Amazon SageMaker HyperPod:

Resilient Environment: With comprehensive health checks, automated node recovery, and job auto-resume, this integration offers a more resilient training environment. With SageMaker HyperPod, you can train foundation models continuously for weeks or months at a time without interruption, since it automatically detects, diagnoses, and recovers from faults. This can reduce training time by up to 40%.

Improved GPU Observability: Your containerized apps and microservices can benefit from comprehensive metrics and logs from Amazon CloudWatch Container Insights. This makes it possible to monitor cluster health and performance in great detail.

Scientist-Friendly Tools: This release includes integration with SageMaker managed MLflow for experiment tracking, a customized HyperPod CLI for job management, Kubeflow Training Operators for distributed training, and Kueue for scheduling. It is also compatible with the distributed training libraries offered by SageMaker, which provide data parallel and model parallel optimizations to significantly reduce training time. Together with auto-resumption of jobs, these libraries make large model training efficient and continuous.

Flexible Resource Utilization: This integration improves the scalability of FM workloads and the developer experience. Computational resources can be effectively shared by data scientists for both training and inference operations. You can use your own tools for job submission, queuing, and monitoring, and you can use your current Amazon EKS clusters or build new ones and tie them to HyperPod compute.

Read more on govindhtech.com

#AmazonSageMaker#HyperPodPresents#AmazonEKSSupport#foundationmodel#artificialintelligence#AI#machinelearning#ML#AIaccelerators#AmazonCloudWatch#AmazonEKS#technology#technews#news#govindhtech


Text

Battling Bakeries in an AI Arms Race! Inside the High-Tech Doughnut Feud

#AI#TechSavvy#commercialwar#AIAccelerated#EdgeAnalytic#CloudComputing#DeepLearning#NeuralNetwork#AICardUpgradeCycle#FutureProof#ComputerVision#ModelTraining#artificialintelligence#ai#Supergirl#Batman#DC Official#Home of DCU#Kara Zor-El#Superman#Lois Lane#Clark Kent#Jimmy Olsen#My Adventures With Superman


Text

How Does AI Generate Human-Like Voices? 2025

Artificial Intelligence (AI) has made incredible advancements in speech synthesis. AI-generated voices now sound almost indistinguishable from real human speech. But how does this technology work? What makes AI-generated voices so natural, expressive, and lifelike? In this deep dive, we’ll explore:

✔ The core technologies behind AI voice generation.
✔ How AI learns to mimic human speech patterns.
✔ Applications and real-world use cases.
✔ The future of AI-generated voices in 2025 and beyond.

Understanding AI Voice Generation

At its core, AI-generated speech relies on deep learning models that analyze human speech and generate realistic voices. These models use vast amounts of data, phonetics, and linguistic patterns to synthesize speech that mimics the tone, emotion, and natural flow of a real human voice.

1. Text-to-Speech (TTS) Systems

Traditional text-to-speech (TTS) systems used rule-based models. However, these sounded robotic and unnatural because they couldn't capture the rhythm, tone, and emotion of real human speech. Modern AI-powered TTS uses deep learning and neural networks to generate much more human-like voices. These advanced models process:

✔ Phonetics (how words sound).
✔ Prosody (intonation, rhythm, stress).
✔ Contextual awareness (understanding sentence structure).

💡 Example: AI can now pause, emphasize words, and mimic real human speech patterns instead of sounding monotone.

2. Deep Learning & Neural Networks

AI speech synthesis is driven by deep neural networks (DNNs), which work like a human brain. These networks analyze thousands of real human voice recordings and learn:

✔ How humans naturally pronounce words.
✔ The pitch, tone, and emphasis of speech.
✔ How emotions impact voice (anger, happiness, sadness, etc.).

Some of the most powerful deep learning models include:

WaveNet (Google DeepMind)

Developed by Google DeepMind, WaveNet uses a deep neural network that analyzes raw audio waveforms. It produces natural-sounding speech with realistic tones, inflections, and even breathing patterns.

Tacotron & Tacotron 2

Tacotron models, developed by Google AI, focus on improving:

✔ Natural pronunciation of words.
✔ Pauses and speech flow to match human speech patterns.
✔ Voice modulation for realistic expression.

3. Voice Cloning & Deepfake Voices

One of the biggest breakthroughs in AI voice synthesis is voice cloning. This technology allows AI to:

✔ Copy a person’s voice with just a few minutes of recorded audio.
✔ Generate speech in that person’s exact tone and style.
✔ Mimic emotions, pitch, and speech variations.

💡 Example: If an AI listens to 5 minutes of Elon Musk’s voice, it can generate full speeches in his exact tone and speech style. This is called deepfake voice technology.

🔴 Ethical Concern: This technology can be used for fraud and misinformation, like creating fake political speeches or scam calls that sound real.

How AI Learns to Speak Like Humans

AI voice synthesis follows three major steps:

Step 1: Data Collection & Training

AI systems collect millions of human speech recordings to learn:

✔ Pronunciation of words in different accents.
✔ Pitch, tone, and emotional expression.
✔ How people emphasize words naturally.

💡 Example: AI listens to how people say "I love this product!" and learns how different emotions change the way it sounds.

Step 2: Neural Network Processing

AI breaks down voice data into small sound units (phonemes) and reconstructs them into natural-sounding speech. It then:

✔ Creates realistic sentence structures.
✔ Adds human-like pauses, stresses, and tonal changes.
✔ Removes robotic or unnatural elements.

Step 3: Speech Synthesis Output

After processing, AI generates speech that sounds fluid, emotional, and human-like. Modern AI can now:

✔ Imitate accents and speech styles.
✔ Adjust pitch and tone in real time.
✔ Change emotional expressions (happy, sad, excited).
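To make the phoneme and prosody steps more concrete, here is a toy sketch of the front half of a speech pipeline: text is mapped to phonemes, then each word is annotated with stress and pitch. Real systems learn all of this from recorded speech with neural networks; the tiny `LEXICON`, the stress and pitch rules, and the function names below are hypothetical and exist only for illustration.

```python
# Hypothetical mini-lexicon; real systems learn pronunciations from recorded speech.
LEXICON = {
    "i": ["AY"],
    "love": ["L", "AH", "V"],
    "this": ["DH", "IH", "S"],
    "product": ["P", "R", "AA", "D", "AH", "K", "T"],
}


def to_phonemes(text):
    """Map each known word to its phonemes; unknown words are spelled out letter by letter."""
    words = text.lower().strip("!?.").split()
    return [(w, LEXICON.get(w, list(w.upper()))) for w in words]


def add_prosody(phoneme_words, text, emphasize=None):
    """Attach toy prosody marks: stress on one word, rising or falling pitch on the last word."""
    final_pitch = "rise" if text.strip().endswith("?") else "fall"
    last = len(phoneme_words) - 1
    plan = []
    for i, (word, phones) in enumerate(phoneme_words):
        plan.append({
            "word": word,
            "phonemes": phones,
            "stress": word == emphasize,
            "pitch": final_pitch if i == last else "level",
        })
    return plan


text = "I love this product!"
for unit in add_prosody(to_phonemes(text), text, emphasize="love"):
    print(unit)
```

A neural synthesizer would take an annotated plan like this (or learn an equivalent internal representation) and turn it into an audio waveform, which is the step this sketch leaves out.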

Real-World Applications of AI-Generated Voices

AI-generated voices are transforming multiple industries:

1. Voice Assistants (Alexa, Siri, Google Assistant)

AI voice assistants now sound more natural, conversational, and human-like than ever before. They can:

✔ Understand context and respond naturally.
✔ Adjust tone based on conversation flow.
✔ Speak in different accents and languages.

2. Audiobooks & Voiceovers

Instead of hiring voice actors, AI-generated voices can now:

✔ Narrate entire audiobooks in human-like voices.
✔ Adjust voice tone based on story emotion.
✔ Sound different for each character in a book.

💡 Example: AI-generated voices are now used for animated movies, YouTube videos, and podcasts.

3. Customer Service & Call Centers

Companies use AI voices for automated customer support, reducing costs and improving efficiency. AI voice systems:

✔ Respond naturally to customer questions.
✔ Understand emotional tone in conversations.
✔ Adjust voice tone based on urgency.

💡 Example: Banks use AI voice bots for automated fraud detection calls.

4. AI-Generated Speech for Disabled Individuals

AI voice synthesis is helping people who have lost their voice due to medical conditions. AI-generated speech allows them to:

✔ Type text and have AI speak for them.
✔ Use their own cloned voice for communication.
✔ Improve accessibility for those with speech impairments.

💡 Example: AI helped Stephen Hawking communicate using a computer-generated voice.

The Future of AI-Generated Voices in 2025 & Beyond

AI-generated speech is evolving fast. Here’s what’s next:

1. Fully Realistic Conversational AI

By 2025, AI voices will sound completely human, making robots and AI assistants indistinguishable from real humans.

2. Real-Time AI Voice Translation

AI will soon allow real-time speech translation in different languages while keeping the original speaker’s voice and tone.

💡 Example: A Japanese speaker’s voice can be translated into English, but still sound like their real voice.

3. AI Voice in the Metaverse & Virtual Worlds

AI-generated voices will power realistic avatars in virtual worlds, enabling:

✔ AI-powered characters with human-like speech.
✔ AI-generated narrators in VR experiences.
✔ Fully voiced AI NPCs in video games.

Final Thoughts

AI-generated voices have reached an incredible level of realism. From voice assistants to deepfake voice cloning, AI is revolutionizing how we interact with technology. However, ethical concerns remain. With the ability to clone voices and create deepfake speech, AI-generated voices must be used responsibly. In the future, AI will likely replace human voice actors, power next-gen customer service, and enable lifelike AI assistants. But one thing is clear: AI-generated voices are becoming indistinguishable from real humans.

Read Our Past Blog: What If We Could Live Inside a Black Hole? 2025


#1#2#2025-01-01t00:00:00.000+00:00#3#4#5#accent(sociolinguistics)#accessibility#aiaccelerator#amazonalexa#anger#animation#artificialintelligence#audiodeepfake#audiobook#avatar(computing)#blackhole#blog#brain#chatbot#cloning#communication#computer-generatedimagery#conversation#customer#customerservice#customersupport#data#datacollection#deeplearning


Text

Announcing the new Forlinx FET-MX95xx-C SoM! Based on the NXP i.MX95 series flagship processor, it integrates 6 Cortex-A55 cores, Cortex-M7 and Cortex-M33 cores. With a powerful 2TOPS NPU, it offers excellent AI computing capability, ideal for edge computing, smart cockpits, Industry 4.0 and more.

The FET-MX95xx-C features rich interfaces including 5x CAN-FD, 1x 10GbE, 2x GbE, 2x PCIe Gen3, and an embedded ISP supporting 4K@30fps video capture with powerful GPU acceleration. It also has strong safety features compliant with ASIL-B and SIL-2 standards.

Want to learn more? Check out the Forlinx website

Let's explore Forlinx's latest technology together and power up your products!

#ForlinxEmbedded#NXPIMX95#EdgeComputing#IndustrialIoT#SmartCockpit#ComputerOnModule#AIAcceleration#FunctionalSafety#SoM


Text

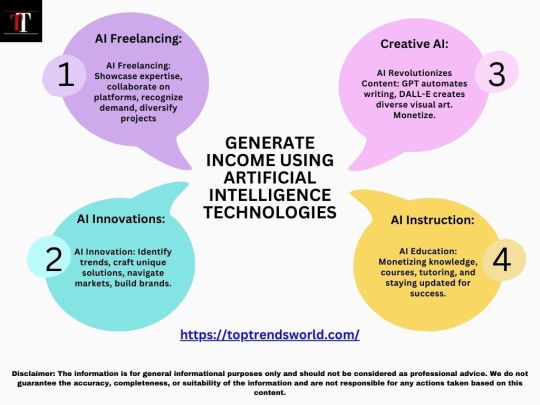

Artificial Intelligence (AI) Updates

Top Trends LLC (DBA "Top Trends") is a dynamic and information-rich web platform that empowers its readers with a broad spectrum of knowledge, insights, and data-driven trends. Our professional writers, industry experts, and enthusiasts dive deep into Artificial Intelligence, Finance, Startups, SEO and Backlinks.

#AIAdvancements#AIBreakthroughs#AIFrontiers#AIAcceleration#AIInnovationWave#AIDiscoveries#AIEvolutions#AIRevolution#AITrends2024#AIBeyondLimits


Text

Our AI-based performance testing accelerator automates the scripting process, reducing scripting time to just 24 hours. This is a drastic improvement over the conventional weeks-long scripting process. Unlike manual scripting, our accelerator addresses diverse testing scenarios within minutes, which speeds up testing and keeps it adaptable to varied scenarios.

Talk to our performance experts at https://rtctek.com/contact-us/. Visit https://rtctek.com/performance-testing-services to learn more about our services.

#rtctek#roundtheclocktechnologies#performancetesting#performance#aiaccelerator#ptaccelerator#testingaccelerator


Link

#AD#ADAS#advanceddriver-assistancesystems#AIaccelerator#AIprocessing#AIvisionprocessor#autonomousdriving#CES2024#edgeAI#Futurride#Gunsens#Hailo#Hailo-15#Hailo-8#iMotion#Renesas#smartcamera#sustainablemobility#TierIV#Truen#TTControl#Velo.ai#videoanalytics


Photo

🚀 Ready to revolutionize AI acceleration? UALink 1.0 is here to redefine connectivity! 🌐 Discover how UALink empowers companies like AMD, Broadcom, and Intel to rival Nvidia's NVLink by leveraging an open, industry-standard technology. The UALink 1.0 spec provides a high-speed, low-latency interconnect, supporting up to 1,024 GPUs with a staggering 200 GT/s bandwidth per processor. It's a game-changer for AI and HPC, promising a competitive edge through scalability and cost-effectiveness. The UALink Consortium's dedication to an open ecosystem ensures a future where diverse AI solutions can thrive. As more companies adopt this innovative technology, AI applications will skyrocket in performance and accessibility. Think your data center is ready for UALink transformation? 🤔 Tell us how you envision UALink changing the game in AI acceleration. Comment below! ⬇️ #UALink #AIAcceleration #HPC #TechnologyRevolution #GameChanger #Nvidia #Innovation #TechUpdates #DataCenters #GroovyComputers 🌟


Text

GPU-as-a-Service = Cloud Power Level: OVER 9000! ⚙️☁️ From $5.6B → $28.4B by 2034 #GPUaaS #NextGenComputing

GPU as a Service (GPUaaS) is revolutionizing computing by providing scalable, high-performance GPU resources through the cloud. This model enables businesses, developers, and researchers to access powerful graphics processing units without investing in expensive hardware. From AI model training and deep learning to 3D rendering, gaming, and video processing, GPUaaS delivers unmatched speed and efficiency.

To Request Sample Report: https://www.globalinsightservices.com/request-sample/?id=GIS24347&utm_source=SnehaPatil&utm_medium=Article

Its flexibility allows users to scale resources based on workload demands, making it ideal for startups, enterprises, and institutions pursuing innovation. With seamless integration, global access, and pay-as-you-go pricing, GPUaaS fuels faster development cycles and reduces time to market. As demand for compute-intensive tasks grows across industries like healthcare, automotive, fintech, and entertainment, GPUaaS is set to be the cornerstone of next-gen digital infrastructure.
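The headline figures ($5.6B growing to $28.4B by 2034) imply a compound annual growth rate that can be checked with a few lines of arithmetic. The post does not state the base year, so the ten-year span below (2024 to 2034) is an assumption; change `YEARS` if the report uses a different base year.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


START_BILLIONS = 5.6   # from the headline
END_BILLIONS = 28.4    # from the headline
YEARS = 10             # assumption: base year 2024, target year 2034

rate = cagr(START_BILLIONS, END_BILLIONS, YEARS)
print(f"Implied CAGR: {rate:.1%}")
```

The formula is the standard one: the end value divided by the start value, raised to the power of one over the number of years, minus one.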

#gpuservice #gpuaas #cloudgpu #highperformancecomputing #aiacceleration #deeplearninggpu #renderingincloud #3dgraphicscloud #cloudgaming #machinelearningpower #datacentergpu #remotegpuresources #gputraining #computeintensive #cloudinfrastructure #gpuoncloud #payasyougpu #techacceleration #innovationaservice #aidevelopmenttools #gpurendering #videoprocessingcloud #scalablegpu #gpubasedai #virtualgpu #edgecomputinggpu #startupsincloud #gpuforml #scientificcomputing #medicalimaginggpu #enterpriseai #nextgentech #gpuinfrastructure #cloudinnovation #gpucloudservices #smartcomputing

Research Scope:

· Estimates and forecast the overall market size for the total market, across type, application, and region

· Detailed information and key takeaways on qualitative and quantitative trends, dynamics, business framework, competitive landscape, and company profiling

· Identify factors influencing market growth and challenges, opportunities, drivers, and restraints

· Identify factors that could limit company participation in identified international markets to help properly calibrate market share expectations and growth rates

· Trace and evaluate key development strategies like acquisitions, product launches, mergers, collaborations, business expansions, agreements, partnerships, and R&D activities

About Us:

Global Insight Services (GIS) is a leading multi-industry market research firm headquartered in Delaware, US. We are committed to providing our clients with the highest-quality data, analysis, and tools to meet all their market research needs. With GIS, you can be assured of high-quality deliverables, a robust and transparent research methodology, and superior service.

Contact Us:

Global Insight Services LLC 16192, Coastal Highway, Lewes DE 19958 E-mail: [email protected] Phone: +1–833–761–1700 Website: https://www.globalinsightservices.com/


Text

AI Chips = The Future! Market Skyrocketing to $230B by 2034 🚀

Artificial Intelligence (AI) Chip Market focuses on high-performance semiconductor chips tailored for AI computations, including machine learning, deep learning, and predictive analytics. AI chips — such as GPUs, TPUs, ASICs, and FPGAs — enhance processing efficiency, enabling autonomous systems, intelligent automation, and real-time analytics across industries.

To Request Sample Report: https://www.globalinsightservices.com/request-sample/?id=GIS25086&utm_source=SnehaPatil&utm_medium=Article

Market Trends & Growth:

GPUs (45% market share) lead, driven by parallel processing capabilities for AI workloads.

ASICs (30%) gain traction for customized AI applications and energy efficiency.

FPGAs (25%) are increasingly used for flexible AI model acceleration.

Inference chips dominate, optimizing real-time AI decision-making at the edge and cloud.

Regional Insights:

North America dominates the AI chip market, with strong R&D and tech leadership.

Asia-Pacific follows, led by China’s semiconductor growth and India’s emerging AI ecosystem.

Europe invests in AI chips for automotive, robotics, and edge computing applications.

Future Outlook:

With advancements in 7nm and 5nm fabrication technologies, AI-driven cloud computing, and edge AI innovations, the AI chip market is set for exponential expansion. Key players like NVIDIA, Intel, AMD, and Qualcomm are shaping the future with next-gen AI architectures and strategic collaborations.

#aichips #artificialintelligence #machinelearning #deeplearning #neuralnetworks #gpus #cpus #fpgas #asics #npus #tpus #edgeai #cloudai #computervision #speechrecognition #predictiveanalytics #autonomoussystems #aiinhealthcare #aiinautomotive #aiinfinance #semiconductors #highperformancecomputing #waferfabrication #chipdesign #7nmtechnology #10nmtechnology #siliconchips #galliumnitride #siliconcarbide #inferenceengines #trainingchips #cloudcomputing #edgecomputing #aiprocessors #quantumcomputing #neuromorphiccomputing #iotai #aiacceleration #hardwareoptimization #smartdevices #bigdataanalytics #robotics #aiintelecom


Text

IBM And Intel Introduce Gaudi 3 AI Accelerators On IBM Cloud

Cloud-Based Enterprise AI from Intel and IBM. To assist businesses in scaling AI, Intel and IBM will implement Gaudi 3 AI accelerators on IBM Cloud.

Gaudi 3 AI Accelerator

IBM and Intel have announced a global collaboration to deploy Intel Gaudi 3 AI accelerators as a service on IBM Cloud. Expected to be available in early 2025, the offering aims to help enterprises scale AI more cost-effectively and to foster innovation underpinned by security and resilience.

This partnership will also enable support for Gaudi 3 within IBM’s Watsonx AI and data platform. IBM Cloud is the first cloud service provider (CSP) to adopt Gaudi 3, and the offering will be available for both hybrid and on-premises environments.

Intel and IBM

“AI’s true potential requires an open, cooperative environment that gives customers choice and accessible solutions. We are generating new AI capabilities and satisfying the need for reasonably priced, secure, and cutting-edge AI computing solutions by integrating Xeon CPUs and Gaudi 3 AI accelerators with IBM Cloud.”

Why This Is Important: Although generative AI may speed up transformation, the amount of computational power needed highlights how important it is for businesses to prioritize availability, performance, cost, energy efficiency, and security. By working together, Intel and IBM want to improve performance while reducing the total cost of ownership for using and scaling AI.

Gaudi 3

Gaudi 3’s integration with 5th generation Xeon simplifies workload and application management by supporting corporate AI workloads in data centers and the cloud. It also gives clients insight and control over their software stack. Performance, security, and resilience are given first priority as clients expand corporate AI workloads more affordably with the aid of IBM Cloud and Gaudi 3.

IBM’s Watsonx AI and data platform will support Gaudi 3 to improve model inferencing price/performance. This will give Watsonx clients access to extra AI infrastructure resources for scaling their AI workloads across hybrid cloud environments.

“IBM is dedicated to supporting our clients in driving innovation in AI and hybrid cloud by providing solutions that address their business demands. Our commitment to security and resilience with IBM Cloud has helped fuel IBM’s hybrid cloud and AI strategy for our enterprise clients,” said Alan Peacock, general manager of IBM Cloud.

Intel Gaudi 3 AI Accelerator

“Our clients will have access to a flexible enterprise AI solution that aims to optimize cost performance by utilizing Intel’s Gaudi 3 accelerators on IBM Cloud. We are opening up potential new AI business opportunities so that clients can test, develop, and implement AI inferencing solutions more affordably.”

IBM and Intel

How It Works: IBM and Intel are working together to provide customers using AI a Gaudi 3 service capability. IBM and Intel want to use IBM Cloud’s security and compliance features to assist customers in a variety of sectors, including highly regulated ones.

Scalability and Flexibility: Clients may modify computing resources as required with the help of scalable and flexible solutions from IBM Cloud and Intel, which may result in cost savings and improved operational effectiveness.

Improved Security and Performance: By integrating Gaudi 3 with IBM Cloud Virtual Servers for VPC, x86-based businesses will be able to execute applications more securely and quickly than they could have before, which will improve user experiences.

What’s Next: Intel and IBM have a long history of working together, from the IBM PC to enterprise AI solutions with Gaudi 3. General availability of Gaudi 3 on IBM Cloud is scheduled for early 2025. Stay tuned for additional developments from IBM and Intel in the coming months.

Intel Gaudi 3: The Distinguishing AI

Introducing your new, high-performing choice for every kind of enterprise AI workload.

An Improved Method for Using Enterprise AI

The Intel Gaudi 3 AI accelerators are built to handle demanding training and inference workloads. They are based on the high-efficiency Intel Gaudi platform, which has demonstrated its performance on MLPerf benchmarks.

Support AI workloads from a single node to a mega cluster, in your data center or in the cloud, all running on Ethernet equipment you probably already own. Whether you need one accelerator or hundreds, Intel Gaudi 3 can play a crucial role in the success of your AI project.

Developed to Meet AI’s Real-World Needs

With the help of industry-standard Ethernet networking and open, community-based software, you can grow systems more flexibly thanks to the Intel Gaudi 3 AI accelerators.

Adopt Easily

Whether you are beginning from scratch, optimizing pre-made models, or switching from a GPU-based method, using Intel Gaudi 3 AI accelerators is easy.

Designed with developers in mind: Get up to speed quickly with developer resources and software tools.

Support for Both New and Existing Models: Use open source tools, such as Hugging Face resources, to modify reference models, create new ones, or migrate existing ones.

PyTorch Included: Continue using the library that your team is already familiar with.

Simple Migration of GPU-Based Models: Quickly port your current solutions with the help of Intel’s purpose-built software tools.

Ease Development from Start to Finish

Take less time to get from proof of concept to production. Intel Gaudi 3 AI Accelerators are backed by a robust suite of software tools, resources, and training, from migration to deployment. Find out what resources are available to make your AI endeavors easier.

Scale Without Effort: Integrate AI into everyday life. The goal of the Intel Gaudi 3 AI Accelerators is to provide even the biggest and most complicated installations with straightforward, affordable AI scaling.

Increased I/O: Benefit from 33 percent greater I/O connectivity per accelerator than the H100, allowing for massive scale-up and scale-out while maintaining optimal cost effectiveness.

Built for Ethernet: Utilize the networking infrastructure you already have and use conventional Ethernet gear to accommodate growing demands.

Open: Steer clear of risky investments in proprietary, locked-in technologies like NVSwitch, InfiniBand, and NVLink.

Boost Your AI Use Case: Realize the extraordinary on any scale. Modern generative AI and LLMs are supported by Intel Gaudi 3 AI accelerators in the data center. These accelerators work in tandem with Intel Xeon processors, the preferred host CPU for cutting-edge AI systems, to provide enterprise performance and dependability.

Read more on govindhtech.com

#IBM#IntroduceGaudi3#AIAccelerators#IBMCloud#IBMWatsonxAI#ibm#intel#AIcomputing#NVLink#AIworkloads#hybridcloud#IntelGaudiplatform#MLPerfbenchmark#IntelXeonprocessors#technology#technews#news#govindhtech


"AI + Semiconductors = The Ultimate Power Duo for Predicting the Future (2025-2033)"

The AI for Predictive Semiconductor Trends market is reshaping the semiconductor industry by using artificial intelligence to forecast trends, optimize production, and streamline supply chain management. AI-powered demand forecasting, yield optimization, and predictive maintenance enable semiconductor manufacturers, designers, and suppliers to enhance efficiency, cut costs, and stay ahead of market shifts.

To Request Sample Report: https://www.globalinsightservices.com/request-sample/?id=GIS32975 &utm_source=SnehaPatil&utm_medium=Article

The automotive sector leads the market, integrating AI for autonomous driving and advanced safety systems. Consumer electronics follows closely, with rising AI adoption in smart home devices and personal gadgets. Additionally, telecommunications benefits from AI-driven 5G network optimization. North America dominates the market due to its strong AI research ecosystem and semiconductor innovation. Europe ranks second, with major investments in AI-powered semiconductor advancements, particularly in Germany and the UK. Meanwhile, Asia-Pacific is emerging as a key player, as China, South Korea, and Taiwan invest in AI-enhanced semiconductor manufacturing.

The market is poised for exponential growth, fueled by cloud AI, edge computing, and hybrid AI models. As AI continues to reshape semiconductor manufacturing, predictive analytics will drive greater efficiency, sustainability, and innovation in the industry.

#aiinsemiconductors #predictiveanalytics #machinelearning #deeplearning #aiinnovation #smartmanufacturing #semiconductortrends #yieldoptimization #supplychainai #techforecasting #cloudai #edgecomputing #autonomousdriving #5gnetworks #artificialintelligence #bigdataanalytics #chipdesign #aiaccelerators #quantumcomputing #smartfactories #digitaltransformation #computervision #naturalanguageprocessing #automation #semiconductorindustry #aioptimization #iot #processautomation #datascience #techdisruption #futuretech


In-Memory Computing Chips: The Next Big Thing? Market to Hit $12.4B by 2034

The in-memory computing chips market is experiencing rapid growth as industries demand faster data processing, real-time analytics, and energy-efficient computing. Unlike traditional architectures, in-memory computing chips store and process data in the same location, which cuts the latency of moving data between memory and processor and can substantially improve performance. This technology is transforming industries such as AI, big data, edge computing, healthcare, finance, and autonomous systems.

To Request Sample Report: https://www.globalinsightservices.com/request-sample/?id=GIS10637 &utm_source=SnehaPatil&utm_medium=Article

Why In-Memory Computing Chips?

✅ Accelerate AI & machine learning applications

✅ Enable real-time big data analytics

✅ Reduce power consumption & latency

✅ Optimize cloud computing & edge AI

Market Growth Drivers:

📈 Growing demand for AI-driven computing & deep learning

📈 Expansion of IoT, 5G, and high-performance computing (HPC)

📈 Rising need for energy-efficient data centers & cloud infrastructure

📈 Advancements in neuromorphic and resistive RAM (ReRAM) technologies

The global in-memory computing chips market is set to expand, with major tech giants and startups investing in AI accelerators, neuromorphic computing, and next-gen memory architectures. As AI, blockchain, and real-time analytics continue to evolve, in-memory computing is emerging as a critical enabler of high-speed, low-latency processing.

With quantum computing and edge AI pushing the limits of traditional computing, in-memory computing chips are paving the way for a faster, smarter, and more efficient digital future.

What are your thoughts on in-memory computing? Let’s discuss! 👇

#InMemoryComputing #AIAccelerators #EdgeAI #HighPerformanceComputing #BigData #RealTimeAnalytics #CloudComputing #MachineLearning #ArtificialIntelligence #NeuromorphicComputing #NextGenChips #TechInnovation #AIChips #DataProcessing #DeepLearning #IoT #5G #QuantumComputing #SmartComputing #ChipTechnology #EnergyEfficientTech #HPC #DataCenters #ReRAM #CloudAI #AIHardware #FutureOfComputing #FastProcessing #LowLatency #NextGenMemory 🚀

Research Scope:

· Estimate and forecast the overall market size for the total market, across type, application, and region

· Detailed information and key takeaways on qualitative and quantitative trends, dynamics, business framework, competitive landscape, and company profiling

· Identify factors influencing market growth and challenges, opportunities, drivers, and restraints

· Identify factors that could limit company participation in identified international markets to help properly calibrate market share expectations and growth rates

· Trace and evaluate key development strategies like acquisitions, product launches, mergers, collaborations, business expansions, agreements, partnerships, and R&D activities

About Us:

Global Insight Services (GIS) is a leading multi-industry market research firm headquartered in Delaware, US. We are committed to providing our clients with the highest-quality data, analysis, and tools to meet all their market research needs. With GIS, you can be assured of the quality of the deliverables, a robust and transparent research methodology, and superior service.

Contact Us:

Global Insight Services LLC 16192, Coastal Highway, Lewes DE 19958 E-mail: [email protected] Phone: +1–833–761–1700 Website: https://www.globalinsightservices.com/


🟢🙏💙 THE UNCENSORED TRUTH About What's REALLY Going ON! Geoffrey Hoppe Channels Adamus Saint Germain! (Tone: 353)

🌍 Democracy is shifting. The world isn’t ending—it’s evolving. AI, governance, & self-love are redefining the future. What’s next? A new Earth by 2044. Are you ready? 🚀 #ConsciousnessShift #AIAcceleration #FuturePredictions

Posted on Feb 13th, 2025 by @inspirenation

Compelling Summary: In this thought-provoking conversation, Michael Sandler interviews Geoffrey Hoppe, the channeler of Adamus Saint Germain, to explore the massive global changes unfolding today. The discussion dives into the potential end of democracy, the rapid evolution of human consciousness, and how humanity is preparing for an unprecedented…

#2032 shift#2044 vision#Adamus Saint Germain#AI acceleration#ascension#awakening humanity#consciousness evolution#consciousness shift#Crimson Circle#democracy transition#energy work#future predictions#governance evolution#Heaven’s Cross#Jeffrey Hoppe#leadership transformation#lightworkers#Michael Sandler#New Earth#self-love#Spiritual Awakening


RISE Launches the First AI Accelerator Program in Southeast Asia

RISE, the corporate innovation accelerator, has launched an AI Accelerator program, the first of its kind in Southeast Asia, to build artificial intelligence know-how that leads to business prototypes across a variety of services. The program speeds up the adoption of artificial intelligence (AI) in organizations, focusing on tangible business results that meet the needs of leading organizations in Southeast Asia.

RISE.AI is an AI innovation accelerator program for corporations. RISE works with a partner network that spans Southeast Asia and the rest of the world, applying its expertise in using AI for corporate innovation to deliver concrete, practical results by connecting innovative ideas with applicable practices for accelerating AI development. The RISE.AI program aims to bring together startups with the best AI innovations from around the world and AI experts to co-develop pilot projects with leading companies in sectors such as finance and banking, insurance, energy, and clean technology.

Leading organizations in Thailand taking part in the program include PTT Exploration and Production Public Company Limited (PTTEP), AI and Robotics Ventures Company Limited, Bank of Ayudhya Public Company Limited, and the Digital Economy Promotion Agency (depa). The program runs from April to September 2019.

Nattapat Thanesvorakul, Head of Ventures at RISE (Regional Corporate Innovation Accelerator), said that AI will be a key driver of overall GDP growth in Thailand and Southeast Asia. Establishing a data-driven culture in business organizations will help organizations across the region adjust their business strategies and upgrade their products and services, leading to sustainable economic growth.

The AI industry is growing and having a major impact on both business and society. The rising use of AI is bringing many changes and developments across industries, leading organizations to change how they do business, reform their methods, and transform their environments so that they can run their businesses and compete in the global economy. Businesses therefore need to adopt new technologies to cope with rapid technological change and create new business opportunities, which strengthens their competitiveness and raises revenue.

In addition, McKinsey research indicates that adopting AI will substantially increase business profits in every sector by 2035, especially in education, accommodation and food services, and construction, where profits are expected to rise by more than 70%. The use of AI in wholesale and retail, agriculture, forestry, fisheries, and healthcare is also expected to raise profits by more than 50%. Given the advantage of bringing AI to market ahead of competitors, businesses are now eager to develop their own AI capabilities to gain that competitive edge.

"However, adopting AI takes a long time and is costly. Most companies in Southeast Asia lack the resources to develop AI technology in-house and also have no access to AI developers worldwide. These conditions are why the RISE.AI program is designed to connect organizations with qualified AI developers from around the world, so they can work together on high-potential projects and stay competitive in a changing global economy," Nattapat said.

Thana Slanvetpan, Senior Manager of Technology and Knowledge Management at PTTEP, said: "PTTEP plans to apply AI technology in several key parts of the organization to upgrade its business operations. We are excited to become one of the official participating partners of the RISE.AI program, which will help PTTEP find the best startups from around the world to drive corporate AI innovation."

RISE.AI is an AI accelerator program that gives organizations access to the global AI community. The program selects startups based on their ability to solve the problem statements provided by each organization. All AI startups selected for the program will have the opportunity to join a nine-week camp to co-develop pilot projects with RISE's leading corporate partners and to receive one-on-one mentoring from AI experts at New York University Tandon Future Labs, to ensure that projects built within the program's timeframe have international potential. In addition, with strategic assessment and advisory programs from RISE.AI's expert partners, RISE.AI is positioned as a promising platform for successful corporate AI development in Southeast Asia. The program officially launched in April 2019, with roadshows planned in 10 major cities across Asia: Bangkok, Singapore, Tokyo, Ho Chi Minh City, Beijing, Hangzhou, Shenzhen, Hong Kong, Seoul, and Taipei.

Mina Salib, program director at New York University Tandon Future Labs, one of RISE.AI's strategic partners, said: "The RISE.AI program is the best opportunity for startups that want to expand and grow their business in Southeast Asia, because businesses in this region are investing heavily in AI technology. I therefore encourage AI startups from around the world to join RISE.AI, the first corporate AI accelerator in Southeast Asia."

Related link: www.riseaccel.com


Benefits of the new IBM z16 Mainframe

• Accelerated AI: Accelerated AI insights to create new value for business

• Cyber resiliency: Protect against current and future threats by securing your data

• Modernization: Speed up modernization of workloads and integrate them seamlessly across the hybrid cloud

Source: IBM

Contact us for Mainframe Services
