#APIs help software

Explore tagged Tumblr posts

Text

Welcome to Something Eternal: A Website Forum in 2023 wtf lmao

It's 2023, and a single belligerent rich guy destroyed one of the primary focal points of uh...global communication. Tumblr is, shockingly, kinda thriving despite the abuse it gets from its owners, but I will chalk that up to the iconic refusal of Tumblr users to let Tumblr get in the way of their using Tumblr. Reddit killed its API, removing the functionality of mobile apps that made it remotely readable (rip rif). Discord, our current primary hangout, has made countless strange choices lately that indicate it has reached the summit of its usability and functionality, and can only decline from here as changes get made to prepare for shareholders. (NOTE: WROTE THIS POST BEFORE THEIR MOBILE "REDESIGN" LMAO)

The enshittification is intense, and it's coming from every direction. Social media platforms that felt like permanent institutions are instead slowly going to let incredible amounts of history, works of art, thought, and fandoms fall fallow. It kinda sucks!

A couple years ago, I posted about a new plan with a new domain, to focus on the archiving of media content, as I saw that to be the fatal weakness of the current ways the internet and fandoms work. Much has happened since to convince me to alter the direction of those efforts, though not abandon them entirely.

Long story short? We are launching a fucking website forum. In 2023.

If you remember In the Rose Garden, much about Something Eternal will be familiar. But this has been a year in the making, and in many ways it's far more ambitious than IRG was. We have put money on this. The forum is running on the same software major IT and technology businesses use, because I don't want the software to age out of usability within five years. It has an attached gallery system for me to post content to, including the Chiho Saito art collection. It has a profile post system that everyone already on the forum has decided is kinda like mini Twitter? But it is, fundamentally, a website forum, owned and run and moderated by us. We are not web devs. But we have run a website on pure spite and headbutting code for over twenty years, and we have over a decade of experience maintaining social spaces online, both on the OG forum, and on our Discord. Better skilled people with far more time than we have can and will build incredible alternatives to what is collapsing around us. But they're not in the room right now. We are. And you know what? Maybe it's time to return to a clunkier, slower moving, more conversation focused platform.

You're not joining a social media platform with the full polish of dozens of devs and automated moderation. Things might break, and I might need time to fix them. The emojis and such are still a work in progress. Because e-mails no longer route in reasonable normal ways, the sign-up process instead happens within the software, and has to be approved by mods. Design and structure elements may change. Etc. The point being, that the forum isn't finished, but it is at a place where I feel like I can present it to people, and it's people I need to help direct what functions and things will be in this space. You all will shape its norms, its traditions, its options...choices I could try to make now, but really...they're for us to create as a group! But the important stuff? That's there. Now let's drive this baby off the damn lot already!

Come! Join us!!

PS. As always, TERFs and Nazis need not apply.

#revolutionary girl utena#shoujo kakumei utena#rgu#sku#empty movement#utena meta#fandom stuff#fandoms#expect a somewhat spicy atmosphere#empty movement has always had deep something awful roots#and i expect the migration back to a forum will bring with it some of that more spicy attitude#also lol henry kissinger is dead god that rules

1K notes

Text

YouTube says it will intentionally cripple the playback of its videos in third-party apps that block its ads. A Monday post in YouTube's help forum notes netizens using applications that strip out adverts while streaming YouTube videos may encounter playback issues due to buffering or error messages indicating that the content is not available. "We want to emphasize that our terms don’t allow third-party apps to turn off ads because that prevents the creator from being rewarded for viewership, and Ads on YouTube help support creators and let billions of people around the world use the streaming service," said a YouTube team member identified as Rob. "We also understand that some people prefer an entirely ad-free experience, which is why we offer YouTube Premium." This crackdown is coming at the API level, as these outside apps use this interface to access the Google-owned giant's videos. Last year, YouTube acknowledged it was running scripts to detect ad-blocking extensions in web browsers, which ended up interfering with Firefox page loads and prompted a privacy complaint to Ireland's Data Protection Commission. And several months before that, the internet video titan experimented with popup notifications warning YouTube web visitors that ad-blocking software is not allowed. A survey published last month by Ghostery, a maker of software that promotes privacy by blocking ads and tracking scripts, found that Google's efforts to crack down on ad blocking made about half of respondents (49 percent) more willing to use an ad blocker. According to the survey, the majority of Americans now use advert blockers, something recommended by the FBI when conducting internet searches.

Download NewPipe, it's what I use on Android

276 notes

Note

Hey hey! Wyrd told me you trained your dog to help with executive dysfunction stickiness/repetitive action and I would LOVE to know how you trained this. I am training my pet to do a few in-home things before I get my prospect in hopefully this year

Oh, hi! There's a longer post about this topic elsewhere in my Matilda tag you might want to check out.

A lot of my training approach is informed by the experimenting I did with alarms that interact with other senses besides acoustics during COVID. I got completely nonresponsive to phone alarms and things, and I was under a truly catastrophic amount of stress related to my PhD at the time, so my general functioning wasn't great and I really NEEDED external cues to trigger basic daily tasks. Unfortunately I have a pretty impressive ability to hyperfocus right past obnoxious alarms, and worse, I am very very good at absently turning alarms off or mimicking paying attention without actually pulling my focus away from the subject of my attention. You get a 5-30sec buffer of retained information for the purposes of holding up a conversation which I am continuously dumping. I am not necessarily doing it consciously, but that doesn't make it not frustrating. Especially because if a human does get my attention, many years of RSD tends to set me at hyper defensive right out of the gate. That's not ideal for a bunch of reasons.

Anyway, I found that vibration or tactile stimuli, as well as visual stimuli (I rigged a disco lamp to turn on at hourly intervals in a desperate attempt to track the passage of time), worked quite well to capture my attention and let me step out of hyperfocus enough to do the next thing. I figured eventually I would have to see humans in their meat suits again and people get weird about shit like this, so I needed something relatively discreet and quiet that shouldn't be disruptive to anyone else. I started thinking about building myself aids.

So the first idea I had was to just program a series of alarms into a smartwatch that could automatically attach them to alerts from my gcal, but it turns out that they don't have an api function that hooks up to stuff like "make watch buzz" and I ran out of bandwidth to deal with it. It eventually just seemed easier to train an entire dog to respond to a quiet alarm than to fight with the hardware and software to make a really good buzzwatch. I use a couple of different alarm ring tones to cue different actions just as you might train any dog to a word: this one means we go to the bedroom, that one means that if you take meds I get candy, and so forth. The actual sound of the alarm is a cue in its own right. I have some discussion in that other post about how I encouraged my dog to essentially play a game with me where she had to figure out how to get my attention without hurting (aka NO SCREAMING WITH YOUR VERY LOUD HIGH PITCHED BARK). Essentially, I'm shaping that out of whatever behaviors she offers me that successfully catch my attention, defined operationally to her as "standing up + sustained eye contact."

In terms of catching me when I'm tending to get stuck on something or stationary without moving, that one is less "Yes I and my dog are amazing and I've trained her to read my mind" and more "I don't make eye contact when I'm dissociating and I almost always am staring into my phone." So if Matilda catches me drifting across the kitchen glued to my phone, she knows that if she rockets up and nudges me into paying attention to my body, she'll get a reward. Consequently, she's a bit enthusiastic about this one and will sometimes ram passersby with her nose, so definitely figure out your failure modes before you teach the dogs anything.

39 notes

Text

How to play RPGMaker Games in foreign languages with Machine Translation

This is in part a rewrite of a friend's tutorial, and in part a more streamlined version of it based on what steps I found important, to make it a bit easier to understand!

Please note that as with any Machine Translation, there will be errors and issues. You will not get the same experience as someone fluent in the language, and you will not get the same experience as with playing a translation done by a real person.

If anyone has questions, please feel free to ask!

1. Download and extract the Locale Emulator

Linked here!

2. Download and set up your Textractor!

Textractor tutorial and using it with DeepL. The browser extension tools are broken, so you will need to use the built in textractor API (this has a limit, so be careful), or copy-paste the extracted text directly into your translation software of choice. Note that the textractor DOES NOT WORK on every game! It works well with RPGMaker, but I've had issues with visual novels. The password for extraction is visual_novel_lover

3. Ensure that you are downloading your game in the right region.

In your region/language (administrative) settings, change 'Language for non-unicode programs' to the language of your choice. This will ensure that the file names are extracted in the right language. MAKE SURE you download AND extract the game with the right settings! DO NOT CHECK THE 'use utf-8 encoding' BOX. This ONLY needs to be done during the initial download and extraction; once everything is downloaded+extracted+installed, you can set your region back to your previous settings, but test to ensure that the text will display properly after you return to your original language settings; there have been issues before. Helpful tutorials are here and here!

4. Download your desired game and, if necessary, relevant RTP

The tools MUST be downloaded and extracted in the game's language. For Japanese games, they are here. Ensure that you are still in the right locale for non-unicode programs!

5. Run through the Locale Emulator

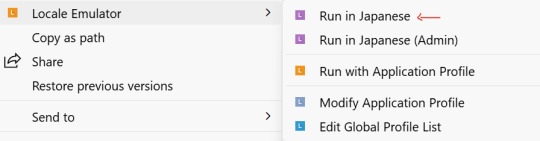

YES, this is a necessary step, EVEN IF YOUR ADMIN-REGION/LANGUAGE SETTINGS ARE CORRECT. Some games will not display the correct text unless you also RUN it in the right locale. You should be able to right click the game and see the Locale Emulator as an option like this. Run in Japanese (or whatever language is needed). You don't need to run as Admin if you don't want to, it should work either way.

6. Attach the Textractor and follow previously linked tutorials on how to set up the tools and the MTL.

Other notes:

There are also inbuilt Machine Translation Extensions, but those have a usage limit due to restrictions on the API. The Chrome/Firefox add-ons in the walkthrough in step 2 get around this by using the website itself, which doesn't have the same restrictions as the API does.

This will work best for RPGMaker games. For VNs, the textractor can have difficulties hooking in to extract the text, and may take some finagling.
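As a rough illustration of why steps 3 and 5 matter: many older games store their text and file names as raw bytes in a regional Windows code page rather than in Unicode, so the same bytes read under a different code page come out as garbage. The sketch below assumes the game uses code page 932 (Japanese Shift-JIS) and that your usual setting is code page 1252 (Western European). Both are assumptions for the example, and the helper name `read_as` is made up.

```python
# Assumption: the game stores text in Windows code page 932 (Japanese Shift-JIS)
# and the system's usual non-Unicode setting is code page 1252 (Western European).
GAME_CODE_PAGE = "cp932"
SYSTEM_CODE_PAGE = "cp1252"


def read_as(raw: bytes, code_page: str) -> str:
    """Decode raw bytes the way a non-Unicode program would under a given code page."""
    return raw.decode(code_page, errors="replace")


original = "こんにちは"                        # what the developer typed
raw_bytes = original.encode(GAME_CODE_PAGE)     # what is actually stored on disk

garbled = read_as(raw_bytes, SYSTEM_CODE_PAGE)  # wrong locale: unreadable symbols
correct = read_as(raw_bytes, GAME_CODE_PAGE)    # matching locale: readable text

print("wrong locale:", garbled)
print("right locale:", correct)
```

The bytes never change; only the code page used to interpret them does, which is why the locale has to be right both when extracting and when running the game.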

#rpgmaker#tutorial#rpgmaker games#aria rambles#been meaning to make a proper version of this for a while#i have another version of this but it's specifically about coe#it was time to make a more generalized version

104 notes

Text

my term paper written in 2018 (how ND games were made and why they will never be made that way again)

hello friends, I am going to be sharing portions of a paper i wrote way back in 2018 for a college class. in it, i was researching exactly how the ND games were made, and why they would not be made that way anymore.

if you have any interest in the behind the scenes of how her interactive made their games and my theories as to why our evil overlord penny milliken made such drastic changes to the process, read on!

warning that i am splicing portions of this paper together, so you don't have to read my ramblings about the history of nancy and basic gameplay mechanics:

Use of C++, DirectX, and Bink Video

Upon completion of each game, the player can view the game’s credits. HeR states that each game was developed using C++ and DirectX, as well as Bink Video later on.

C++

C++ is a general-purpose programming language. This means that many things can be done with it, game programming included. It is a compiled language; Jack Copeland describes compiling as the “process of converting a table of instructions into a standard description or a description number” (Copeland 12). This means that written code is translated into a set of numbers (machine instructions) that the computer can then understand. C++ first appeared in 1985 and was first standardized in 1998. This allowed programmers to use the language more widely. It is no coincidence that 1998 is also the year that the first Nancy Drew game was released.

C++ Libraries

When there is a monetary investment in making computer games, more people end up using and working on the programming languages involved. Because there was such an interest in making games in the late 1990s and early 2000s, there was essentially a “boom” in how much C++ and other languages were being used. With that many people using the language, they collectively built on top of it to make it simpler to use. This process ends up creating what are called “libraries.” For example:

If a programmer wants to make a function to add one, they must write out the code that does that (let’s say approximately three lines of code). To make this process faster, the programmer can define a symbol, such as + to mean add. Now, when the programmer types “+”, the language knows that equals the three lines of code previously mentioned, as opposed to typing out those three lines of code each time the programmer wants to add. This can be done for all sorts of symbols and phrases, and when they are all put together, they are called a “package” or “library.”

Libraries can be shared with other programmers, which allows everyone to do much more with the language much faster. The more libraries there are, the more that can be done with the language.
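The idea can be sketched in a few lines of code. This is Python rather than C++, purely to keep it short, and the names `add_one` and `bump_scores` are made up for illustration. The point is that the code is written once, given a name, and then reused anywhere without being retyped.

```python
# Hypothetical mini "library": each function is written once and reused by name.

def add_one(value: int) -> int:
    """The few lines of code that do the adding live here, in one place."""
    result = value + 1
    return result


def bump_scores(scores: list[int]) -> list[int]:
    """Code elsewhere reuses add_one instead of rewriting the addition each time."""
    return [add_one(score) for score in scores]


print(add_one(41))
print(bump_scores([1, 2, 3]))
```

In a real project, functions like these would sit in their own file that other programmers could import into their own code.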

Because of the interest in the gaming industry in the early 2000s, more people were being paid to use programming languages. This caused a rapid increase in what programmers were able to do. This helps to explain how HeR was able to go from jerky, bobble-headed graphics in 1999 to much more fluid and realistic movements in 2003.

Microsoft DirectX

DirectX is a collection of application programming interfaces (APIs) for tasks related to multimedia, especially video game programming, on Microsoft platforms. Among many others, these APIs include Direct3D (allows the user to draw 3D graphics and render 3D animation), DirectDraw (accelerates the rendering of graphics), and DirectMusic (allows interactive control over music and sound effects). This software is crucial for the development of many games, as it includes many services that would otherwise require multiple programs to put together (which would not only take more time but also more money, which is important to consider in a small company like HeR).

Bink Video

According to the credits which I have painstakingly looked through for each game, HeR started using Bink Video in game 7, Ghost Dogs of Moon Lake (2002). Bink is a video file format (.bik) developed by RAD Game Tools. This file format has to do with how video data is compressed and packaged before it is shown through the Graphical User Interface (GUI). (The GUI essentially means that the computer user interacts with representational graphics rather than plain text. For example, we understand that a plain drawing of a person’s head and shoulders means “user.”) Bink Video structures the data so that the Central Processing Unit (CPU) can decode it more efficiently. This allows for more video data to be played back per second, making graphics and video look more seamless and natural. Bink Video also allows for more video sequences to be possible in a game.

Use of TransGaming Inc.

Sea of Darkness is the only title that credits a company called TransGaming Inc, though I’m pretty sure they’ve been using it for every Mac release, starting in 2010. TransGaming created a technology called Cider that allowed video game developers to run games designed for Windows on Mac OS X (https://en.wikipedia.org/wiki/Findev). As one can imagine, this was an incredibly helpful piece of software that allowed for HeR to start releasing games on Mac platforms. This was a smart way for them to increase their market.

In 2015, a portion of TransGaming was acquired by NVIDIA, and in 2016, TransGaming changed its business focus from technology to real estate financing. Though it is somewhat difficult to determine which of its former products are still available, it can be assumed that they will not be developing anything else technology-based from 2016 on.

Though it is entirely possible that there is other software available for converting Microsoft based games to Mac platforms, the loss of TransGaming still has large consequences. For a relatively small company like Her Interactive, hiring an entire team to convert the game for Mac systems was a big deal (I know they did this because it is in the credits of SEA which you can see at the end of this video: https://www.youtube.com/watch?v=Q0gAzD7Q09Y). Without this service, HeR loses a large portion of their customers.

Switch to Unity

Unity is a game engine that is designed to work across 27 platforms, including Windows, Mac, iOS, PlayStation, Xbox, Wii, and multiple Virtual Reality systems. The engine itself is written in C++, though the user of the software writes code in C#, UnityScript (a JavaScript-like language), or less commonly Boo. Its initial release took place in 2005, with a stable release in 2017 and another in March of 2018. Some of the most popular games released using Unity include Pokémon Go for iOS in 2016 and Cuphead in 2017.

HeR’s decision to switch to Unity makes sense on one hand but is incredibly frustrating on the other. Let’s start with how it makes sense. The software HeR was using from TransGaming Inc. will (from what I can tell) never be updated again, meaning it will become virtually useless soon, if it hasn’t already. That means that HeR needed to find another software that would allow them to convert their games onto a Mac platform so that they would not lose a large portion of their customers. This was probably seen as an opportunity to switch to something completely new that would allow them to reach even more platforms. One of the points HeR keeps harping on and on about in their updates to fans is the tablet market, as well as increasing popularity in VR. If HeR wants to survive in the modern game market, they need to branch outside of PC gaming. Unity will allow them to do that. The switch makes sense.

However, one also has to consider all of the progress made in their previous game engine. Everything discussed up to this point has taken 17 years to achieve. And, because their engine was designed by their developers specifically for their games, it is likely that after the switch, their engine will never be used again. Additionally, none of the progress HeR made previously applies to Unity, and can only be used as a reference. Plus, it’s not just the improvements made in the game engine that are being erased. It is also the staff at HeR who worked there for so long, who were so integral in building their own engine and getting the game quality to where it is in Sea of Darkness, that are being pushed aside for a new gaming engine. New engine, new staff that knows how to use it.

The only thing HeR won’t lose is Bink Video, if that means anything to anyone. Bink2 works with Unity. According to the Bink Video website, Bink supplies “pre-written plugins for both Unreal 4 and Unity” (Rad Game Tools). However, I can’t actually be sure that HeR will still use Bink in their next game since I don’t work there. It would make sense if they continued to use it, but who knows.

Conclusions and frustrations

To me, Her Interactive is the little company that could. When they set out to make the first Nancy Drew game, there was no engine to support it. Instead of changing their tactics, they said to heck with it and built their own engine. As years went on, they refined their engine using C++ and DirectX and implemented Bink Video. In 2010 they began using software from TransGaming Inc. that allowed them to convert their games to Mac format, allowing them to increase their market. However, with TransGaming Inc.’s falling apart starting in 2015, HeR was forced to rethink its strategy. Ultimately they chose to switch their engine out for Unity, essentially throwing out 17 years’ worth of work and laying off many of their employees. Now three years in the making, HeR is still largely secretive about the status of their newest game. The combination of these factors has added up to a fanbase that has become distrustful, frustrated, and altogether largely disappointed in what was once that little company that could.

Suggested Further Reading:

Midnight in Salem, OR Her Interactive’s Marketing Nightmare (Part 2): https://saving-face.net/2017/07/07/midnight-in-salem-or-her-interactives-marketing-nightmare-part-2/

Compilation of MID Facts: http://community.herinteractive.com/showthread.php?1320771-Compilation-of-MID-Facts

Game Building - Homebrew or Third Party Engines?: https://thementalattic.com/2016/07/29/game-building-homebrew-or-third-party-engines/

/end of essay. it is crazy to go back and read this again in 2025. mid had not come out yet when i wrote this and i genuinely did not think it would ever come out. i also had to create a whole power point to go along with this and present it to my entire class of people who barely even knew what nancy drew was, let alone that there was a whole series of pc games based on it lol

18 notes

Text

Elon Musk’s so-called Department of Government Efficiency (DOGE) has plans to stage a “hackathon” next week in Washington, DC. The goal is to create a single “mega API”—a bridge that lets software systems talk to one another—for accessing IRS data, sources tell WIRED. The agency is expected to partner with a third-party vendor to manage certain aspects of the data project. Palantir, a software company cofounded by billionaire and Musk associate Peter Thiel, has been brought up consistently by DOGE representatives as a possible candidate, sources tell WIRED.

Two top DOGE operatives at the IRS, Sam Corcos and Gavin Kliger, are helping to orchestrate the hackathon, sources tell WIRED. Corcos is a health-tech CEO with ties to Musk’s SpaceX. Kliger attended UC Berkeley until 2020 and worked at the AI company Databricks before joining DOGE as a special adviser to the director at the Office of Personnel Management (OPM). Corcos is also a special adviser to Treasury Secretary Scott Bessent.

Since joining Musk’s DOGE, Corcos has told IRS workers that he wants to pause all engineering work and cancel current attempts to modernize the agency’s systems, according to sources with direct knowledge who spoke with WIRED. He has also spoken about some aspects of these cuts publicly: "We've so far stopped work and cut about $1.5 billion from the modernization budget. Mostly projects that were going to continue to put us down the death spiral of complexity in our code base," Corcos told Laura Ingraham on Fox News in March.

Corcos has discussed plans for DOGE to build “one new API to rule them all,” making IRS data more easily accessible for cloud platforms, sources say. APIs, or application programming interfaces, enable different applications to exchange data, and could be used to move IRS data into the cloud. The cloud platform could become the “read center of all IRS systems,” a source with direct knowledge tells WIRED, meaning anyone with access could view and possibly manipulate all IRS data in one place.

Over the last few weeks, DOGE has requested the names of the IRS’s best engineers from agency staffers. Next week, DOGE and IRS leadership are expected to host dozens of engineers in DC so they can begin “ripping up the old systems” and building the API, an IRS engineering source tells WIRED. The goal is to have this task completed within 30 days. Sources say there have been multiple discussions about involving third-party cloud and software providers like Palantir in the implementation.

Corcos and DOGE indicated to IRS employees that they intended to first apply the API to the agency’s mainframes and then move on to every other internal system. Initiating a plan like this would likely touch all data within the IRS, including taxpayer names, addresses, social security numbers, as well as tax return and employment data. Currently, the IRS runs on dozens of disparate systems housed in on-premises data centers and in the cloud that are purposefully compartmentalized. Accessing these systems requires special permissions and workers are typically only granted access on a need-to-know basis.

A “mega API” could potentially allow someone with access to export all IRS data to the systems of their choosing, including private entities. If that person also had access to other interoperable datasets at separate government agencies, they could compare them against IRS data for their own purposes.

“Schematizing this data and understanding it would take years,” an IRS source tells WIRED. “Just even thinking through the data would take a long time, because these people have no experience, not only in government, but in the IRS or with taxes or anything else.” (“There is a lot of stuff that I don't know that I am learning now,” Corcos tells Ingraham in the Fox interview. “I know a lot about software systems, that's why I was brought in.")

These systems have all gone through a tedious approval process to ensure the security of taxpayer data. Whatever may replace them would likely still need to be properly vetted, sources tell WIRED.

"It's basically an open door controlled by Musk for all American's most sensitive information with none of the rules that normally secure that data," an IRS worker alleges to WIRED.

The data consolidation effort aligns with President Donald Trump’s executive order from March 20, which directed agencies to eliminate information silos. While the order was purportedly aimed at fighting fraud and waste, it also could threaten privacy by consolidating personal data housed on different systems into a central repository, WIRED previously reported.

In a statement provided to WIRED on Saturday, a Treasury spokesperson said the department “is pleased to have gathered a team of long-time IRS engineers who have been identified as the most talented technical personnel. Through this coalition, they will streamline IRS systems to create the most efficient service for the American taxpayer. This week the team will be participating in the IRS Roadmapping Kickoff, a seminar of various strategy sessions, as they work diligently to create efficient systems. This new leadership and direction will maximize their capabilities and serve as the tech-enabled force multiplier that the IRS has needed for decades.”

Palantir, Sam Corcos, and Gavin Kliger did not immediately respond to requests for comment.

In February, a memo was drafted to provide Kliger with access to personal taxpayer data at the IRS, The Washington Post reported. Kliger was ultimately provided read-only access to anonymized tax data, similar to what academics use for research. Weeks later, Corcos arrived, demanding detailed taxpayer and vendor information as a means of combating fraud, according to the Post.

“The IRS has some pretty legacy infrastructure. It's actually very similar to what banks have been using. It's old mainframes running COBOL and Assembly and the challenge has been, how do we migrate that to a modern system?” Corcos told Ingraham in the same Fox News interview. Corcos said he plans to continue his work at IRS for a total of six months.

DOGE has already slashed and burned modernization projects at other agencies, replacing them with smaller teams and tighter timelines. At the Social Security Administration, DOGE representatives are planning to move all of the agency’s data off of legacy programming languages like COBOL and into something like Java, WIRED reported last week.

Last Friday, DOGE suddenly placed around 50 IRS technologists on administrative leave. On Thursday, even more technologists were cut, including the director of cybersecurity architecture and implementation, deputy chief information security officer, and acting director of security risk management. IRS’s chief technology officer, Kaschit Pandya, is one of the few technology officials left at the agency, sources say.

DOGE originally expected the API project to take a year, multiple IRS sources say, but that timeline has shortened dramatically down to a few weeks. “That is not only not technically possible, that's also not a reasonable idea, that will cripple the IRS,” an IRS employee source tells WIRED. “It will also potentially endanger filing season next year, because obviously all these other systems they’re pulling people away from are important.”

(Corcos also made it clear to IRS employees that he wanted to kill the agency’s Direct File program, the IRS’s recently released free tax-filing service.)

DOGE’s focus on obtaining and moving sensitive IRS data to a central viewing platform has spooked privacy and civil liberties experts.

“It’s hard to imagine more sensitive data than the financial information the IRS holds,” Evan Greer, director of Fight for the Future, a digital civil rights organization, tells WIRED.

Palantir received the highest FedRAMP approval this past December for its entire product suite, including Palantir Federal Cloud Service (PFCS) which provides a cloud environment for federal agencies to implement the company’s software platforms, like Gotham and Foundry. FedRAMP stands for Federal Risk and Authorization Management Program and assesses cloud products for security risks before governmental use.

“We love disruption and whatever is good for America will be good for Americans and very good for Palantir,” Palantir CEO Alex Karp said in a February earnings call. “Disruption at the end of the day exposes things that aren't working. There will be ups and downs. This is a revolution, some people are going to get their heads cut off.”

15 notes

Note

I don't mean to be rude and I completely agree. Cellbit has indeed had subtitles for a while but as a person who only speaks english but is learning portuguese, the subtitles are great when they work which most of the time they didn't lol. This could only be a me problem but most of the time they just didn't function properly. Sometimes I would have to wait for like 20 minutes for them to start working, most times they wouldn't work at all, or it would only pick up like 1 or 2 words in every sentence which really doesn't help much. so I'm really hoping quackity's works better. But yes keep learning languages. I continued to watch when the subtitles didn't work. I am having a ton of fun learning portuguese 👍 👍 👍

i think you may have just had a technical issue, as the translated captions worked pretty well for me when i used them, particularly pierre's. i will say that they require manually turning them on at the start of stream, and sometimes cellbit would forget for a bit until reminded, but that's not the fault of the captions themselves nor do i blame him considering most of his audience has been and continues to be portuguese speakers and a memory slip is not anyone's fault. i'm not really sure why you had so many issues with it, although i'm sorry to hear that.

in terms of q's translator, if it runs off the same software and api that they were previously using for the qlobal translator, i doubt it will work any better in terms of translation than pierre's, although i've seen some folks speculating it will involve different api (kind of doubtful, to me, as from my admittedly limited technical understanding, they used google and amazon api previously because those have the best LLMs for translation services, and even if they made their own api, they would likely still need to use google translate's LLM/language corpus). it will be nice, regardless, that it'll work for all audio and not just the audio routed through the individual streamer's mic.

18 notes


Text

How do I manage to cite thousands of photos?

I have officially passed 1,000 posts cited on this blog! That's 1000 posts of riots, exercises, mildly disgruntled guys in balaclavas, whatever.

If you were wondering if I do all the citing myself, you'd be correct! Every citation has been agonizingly researched by me. Is it a waste of time? Definitely. But I'm too far in to stop now...

However, it's not all by hand. I'm a software developer by trade, so I did make something to help with citation. Using the Tumblr API, these are the tools I made to make the process just a bit less painful!

You may have noticed all of my posts follow a certain format. Well, this is why! The citations view brings up my most recent unattributed post for me to add a citation to. Then, I can search multiple reverse image websites to find the source!

Google Lens is usually best for most things (even Russian pictures on VK and official military websites). TinEye is great for photos that are heavily cropped, or are stock, since they're sponsored by Adobe and Alamy. Everything else, including Yandex, kind of sucks.

I can also create new posts using this tool, which you've probably seen recently:

Throughout it all I've developed an auto tagging system that picks up on keywords inside the posts I write. This list goes on and on! Who knew there were so many special forces with three letter acronyms...

BRI SEK AOS KSK BAC B2R CDI DSI СБУ DSU BMD KSM SAS JTF! Which ones can you name?
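For the curious, a keyword-based auto tagger like the one described above could be sketched roughly like this. This is not the actual tool: the `KEYWORD_TAGS` table and the `auto_tags` function are hypothetical names made up for illustration, and the real keyword list is much longer.

```python
import re

# Hypothetical keyword-to-tag table; the real tool's list is not shown in the post.
KEYWORD_TAGS = {
    "KSK": "ksk",
    "SAS": "sas",
    "BRI": "bri",
    "СБУ": "сбу",
}


def auto_tags(caption: str) -> list[str]:
    """Return the tag for every keyword that appears as a whole word in the caption."""
    tags = []
    for keyword, tag in KEYWORD_TAGS.items():
        # \b keeps "SAS" from matching inside a longer word such as "SASKATOON".
        if re.search(rf"\b{re.escape(keyword)}\b", caption):
            tags.append(tag)
    return tags


print(auto_tags("KSK operators during an exercise"))
```

Matching on whole words matters here because three-letter acronyms turn up inside ordinary words all the time.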

You may have seen me delete posts that you liked. That was the job of the duplicate remover, which helps me find and delete identical posts. Even I forget what I've reblogged...

Of course, I have to fetch all 6000 images first and compare them using a unique "perceptual hash", but... it's worth it!
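To give an idea of what a perceptual hash does, here is a minimal sketch of one common variant, the "average hash". It is an assumption that the tool uses anything like this; the post does not say which algorithm it relies on. The sketch works on a small grid of grayscale values and leaves out decoding and shrinking the real image. The function names are hypothetical.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Build a bit string: 1 where a pixel is brighter than the image mean, else 0."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def is_duplicate(a: list[list[int]], b: list[list[int]], max_distance: int = 0) -> bool:
    """Treat two images as duplicates when their hashes differ in few enough bits."""
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


original = [[10, 200], [220, 30]]
brighter = [[20, 210], [230, 40]]   # same picture, slightly brighter
different = [[200, 10], [30, 220]]  # inverted layout

print(is_duplicate(original, brighter))
print(is_duplicate(original, different))
```

Because the hash only records which pixels sit above or below the mean, re-encoded or slightly brightened copies tend to land on the same value, which a byte-for-byte comparison would miss.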

Through all my editing, posting, sorting, and loading, I need to keep an eye on the Tumblr API limits. I can actually reach them with the amount of posts I'm making...
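Keeping an eye on a limit can be as simple as counting calls inside a time window. Below is a rough sketch under stated assumptions: the `DailyBudget` class is hypothetical, the 24-hour fixed window is a guess about how such limits reset, and the only number taken from this post is the limit of 1000 likes per day.

```python
import time


class DailyBudget:
    """Fixed-window counter: allows at most `limit` actions per window."""

    def __init__(self, limit: int, window_seconds: float = 86400, clock=time.time):
        self._limit = limit
        self._window = window_seconds
        self._clock = clock
        self._window_start = clock()
        self._used = 0

    def try_spend(self, cost: int = 1) -> bool:
        """Record `cost` actions if they fit in the current window; otherwise refuse."""
        now = self._clock()
        if now - self._window_start >= self._window:
            # The window has elapsed, so start counting again from zero.
            self._window_start = now
            self._used = 0
        if self._used + cost > self._limit:
            return False
        self._used += cost
        return True

    @property
    def remaining(self) -> int:
        return self._limit - self._used


likes = DailyBudget(limit=1000)
if likes.try_spend():
    print("ok to like, remaining:", likes.remaining)
```

Checking the budget before each call means the tool can pause on its own instead of finding out from an error response.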

And that's pretty much it! I probably won't release the tool since I doubt anyone would actually want to waste time doing this, but I'm pretty proud of it.

I might make a post later about the things I've found, but here are some fun facts:

France and Germany make up almost half of my entire list. For reference, the USA is about 40!

137 posts are marked with #needs-attribution, or about one in ten. 14 are under #needs-more-info. The sources for these are lost to time :(

There's a limit of 1000 likes per day. I've reached it...

There's a limit of 1000 posts in the queue, which I've pretty much been at since I started this blog. However, my current "cited" backlog is about 10 days long at 25 posts per day.

I still have about 3500 posts left to go before everything's done...

Thanks for sticking with me as I add summaries to posts that most people would have probably liked more if it was just the picture :^)

Maybe my captions are making things... too real?

18 notes


Text

ok i've done some light research. if you want a software engineer/fic writer's initial take on lore.fm, i'll keep it short and sweet.

my general understanding of lore.fm functionality:

they use OpenAI's public API. they take in the text from the URL provided and use it to spit out your AI-read fic. their API uses HTTP requests, meaning a connection is made to an OpenAI server over HTTP to do as lore.fm asks and then give back the audio. my concern is that i wasn't able to find out what exactly that means. does OpenAI just parse the data and spit out a response? is that data then stored somewhere to better their model (probably yes)? does OpenAI do anything to ensure that the data is being used the way it was intended (we know this probably isn't true because lore.fm exists)?

lore.fm stores the generated audio (i am almost certain of this because of the features described in this reddit post). meaning that someone's fic is sitting in a lore.fm database. what are they doing with that data? what can they do with it? how is it being stored? what is being stored, the text and the audio, or just the audio?

i find transparency a very difficult thing to ask for in tech. people are concerned with technological trade secrets and stifling innovation (hilarious when i think about lore.fm, because it doesn't take a genius to feed text into AI and display the response somewhere, sorry to say). and while i find the idea of AI being used to help further accessibility on apps that don't yet provide it promising, i find the method that lore.fm (and OpenAI) chooses to do this to be dangerous and pave a path for a harmful integration of AI (and also fanfiction in general -- we write to interact, and lore.fm removes that aspect of it entirely).

we already know that AI companies have been paying to scrape data from different sources for the purposes of bettering their models, and we already know that they've only started asking for permission to do this because users found out (and not from the goodness of their hearts, because more data means better models, and asking for permission adds overhead). but this way of using it allows AI to backdoor-scrape data that the original sources of the data didn't give consent to. maybe the author declined to have their fic scraped by AI on the site they posted it onto (if the site asked at all), but they didn't know a third-party app like lore.fm would feed it into an AI model anyways.

what's the point of writing fics if i have no control over my own content?

#i could talk about harmful integrations of AI for days#but this way of using it definitely sets a bad precedent#and i think ao3 unfortunately didn't anticipate this kind of thing when it was created#and so i don't want to blame ao3 entirely because they are a group of independent devs that are volunteering to do this#but i also think they either need to make an effort to protect text from being scraped this way#or they need to lockdown the site altogether#we call it fail-closed in cs terms#and right now it's fail-open#the problem with that is that there are probably people posting to ao3 right now that have no idea this is going on#and they don't know they should lockdown their fics#and now their fics are being fed into this model and they have no idea#idk#lore.fm#fanfiction#ao3

23 notes


Text

ok since i've been sharing some piracy stuff i'll talk a bit about how my personal music streaming server is set up. the basic idea is: i either buy my music on bandcamp or download it on soulseek. all of my music is stored on an external hard drive connected to a donated laptop that's next to my house's internet router. this laptop is always on, and runs software that lets me access and stream any song in my collection to my phone or to other computers. here's the detailed setup:

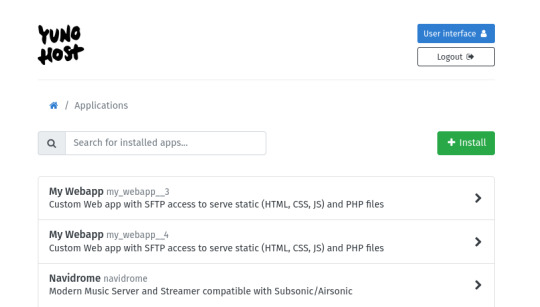

my home server is an old thinkpad laptop with a broken keyboard that was donated to me by a friend. it runs yunohost, a linux distribution that makes it simpler to reuse old computers as servers in this way: it gives you a nice control panel to install and manage all kinds of apps you might want to run on your home server, + it handles the security part by having a user login page & helping you install an https certificate with letsencrypt.

***

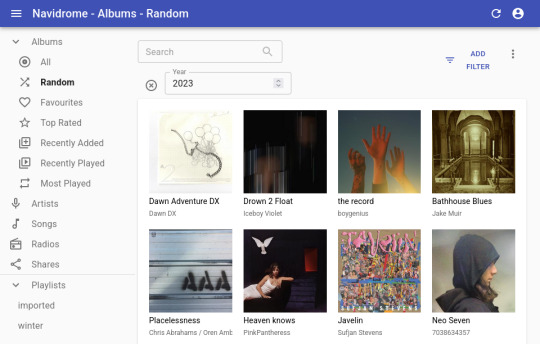

to stream my music collection, i use navidrome. this software is available to install from the yunohost control panel, so it's straightforward to install. what it does is take a folder with all your music and lets you browse and stream it, either via its web interface or through a bunch of apps for android, ios, etc.. it uses the subsonic protocol, so any app that says it works with subsonic should work with navidrome too.

***

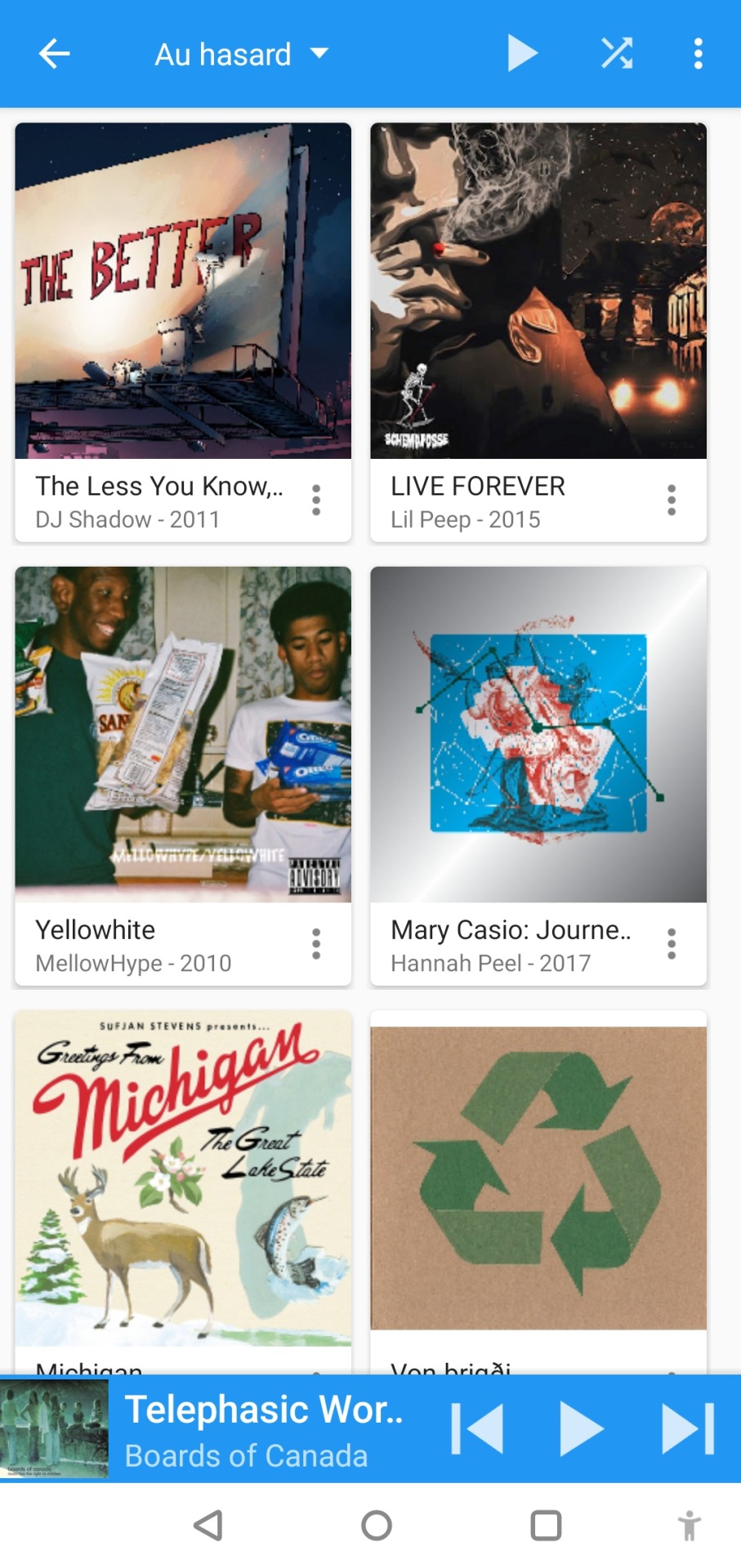

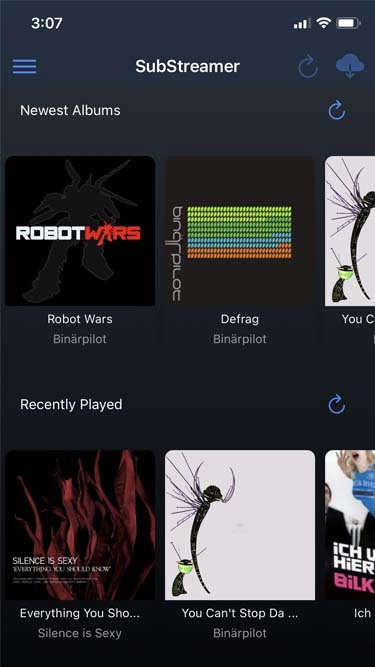

to listen to my music on my phone, i use DSub. It's an app that connects to any server that follows the subsonic API, including navidrome. you just have to give it the address of your home server, and your username and password, and it fetches your music and allows you to stream it. as mentioned previously, there's a bunch of alternative apps for android, ios, etc. so go take a look and make your pick. i've personally also used and enjoyed substreamer in the past. here are screenshots of both:

***

to listen to my music on my computer, i use tauon music box. i was a big fan of clementine music player years ago, but it got abandoned, and the replacement (strawberry music player) looks super dated now. tauon is very new to me, so i'm still figuring it out, but it connects to subsonic servers and it looks pretty so it's fitting the bill for me.

***

to download new music onto my server, i use slskd which is a soulseek client made to run on a web server. soulseek is a peer-to-peer software that's found a niche with music lovers, so for anything you'd want to listen there's a good chance that someone on soulseek has the file and will share it with you. the official soulseek client is available from the website, but i'm using a different software that can run on my server and that i can access anywhere via a webpage, slskd. this way, anytime i want to add music to my collection, i can just go to my server's slskd page, download the files, and they directly go into the folder that's served by navidrome.

slskd does not have a yunohost package, so the trick to make it work on the server is to use yunohost's reverse proxy app, and point it to the http port of slskd 127.0.0.1:5030, with the path /slskd and with forced user authentication. then, run slskd on your server with the --url-base slskd, --no-auth (it breaks otherwise, so it's best to just use yunohost's user auth on the reverse proxy) and --no-https (which has no downsides since the https is given by the reverse proxy anyway)

***

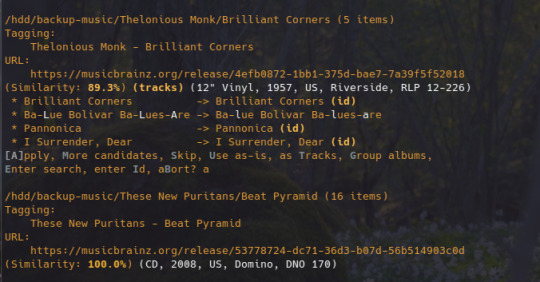

to keep my music collection organized, i use beets. this is a command line software that checks that all of the tags on your music are correct and puts the file in the correct folder (e.g. artist/album/01 trackname.mp3). it's a pretty complex program with a ton of features and settings, i like it to make sure i don't have two copies of the same album in different folders, and to automatically download the album art and the lyrics to most tracks, etc. i'm currently re-working my config file for beets, but i'd be happy to share if someone is interested.
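to make the folder layout concrete, here's a tiny sketch of the artist/album/01 trackname.mp3 naming scheme. this is not beets itself or its config, just a hypothetical helper that shows how the path is put together from the tags.

```python
from pathlib import PurePosixPath


def library_path(artist: str, album: str, track_number: int, title: str, ext: str = "mp3") -> PurePosixPath:
    """Build 'artist/album/NN title.ext' from a track's tags."""
    # Zero-pad the track number so files sort in album order.
    filename = f"{track_number:02d} {title}.{ext}"
    return PurePosixPath(artist) / album / filename


print(library_path("artist", "album", 1, "trackname"))
```

a real organizer also has to clean up characters that aren't allowed in file names, which this sketch skips.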

that's my little system :) i hope it gives the inspiration to someone to ditch spotify for the new year and start having a personal mp3 collection of their own.

34 notes


Text

How to Build Software Projects for Beginners

Building software projects is one of the best ways to learn programming and gain practical experience. Whether you want to enhance your resume or simply enjoy coding, starting your own project can be incredibly rewarding. Here’s a step-by-step guide to help you get started.

1. Choose Your Project Idea

Select a project that interests you and is appropriate for your skill level. Here are some ideas:

To-do list application

Personal blog or portfolio website

Weather app using a public API

Simple game (like Tic-Tac-Toe)

2. Define the Scope

Outline what features you want in your project. Start small and focus on the minimum viable product (MVP) — the simplest version of your idea that is still functional. You can always add more features later!

3. Choose the Right Tools and Technologies

Based on your project, choose the appropriate programming languages, frameworks, and tools:

Web Development: HTML, CSS, JavaScript, React, or Django

Mobile Development: Flutter, React Native, or native languages (Java/Kotlin for Android, Swift for iOS)

Game Development: Unity (C#), Godot (GDScript), or Pygame (Python)

4. Set Up Your Development Environment

Install the necessary software and tools:

Code editor (e.g., Visual Studio Code, Atom, or Sublime Text)

Version control (e.g., Git and GitHub for collaboration and backup)

Frameworks and libraries (install via package managers like npm, pip, or gems)

5. Break Down the Project into Tasks

Divide your project into smaller, manageable tasks. Create a to-do list or use project management tools like Trello or Asana to keep track of your progress.

6. Start Coding!

Begin with the core functionality of your project. Don’t worry about perfection at this stage. Focus on getting your code to work, and remember to:

Write clean, readable code

Test your code frequently

Commit your changes regularly using Git

7. Test and Debug

Once you have a working version, thoroughly test it. Look for bugs and fix any issues you encounter. Testing ensures your software functions correctly and provides a better user experience.

8. Seek Feedback

Share your project with friends, family, or online communities. Feedback can provide valuable insights and suggestions for improvement. Consider platforms like GitHub to showcase your work and get input from other developers.

9. Iterate and Improve

Based on feedback, make improvements and add new features. Software development is an iterative process, so don’t hesitate to refine your project continuously.

10. Document Your Work

Write documentation for your project. Include instructions on how to set it up, use it, and contribute. Good documentation helps others understand your project and can attract potential collaborators.

Conclusion

Building software projects is a fantastic way to learn and grow as a developer. Follow these steps, stay persistent, and enjoy the process. Remember, every project is a learning experience that will enhance your skills and confidence!

3 notes


Text

Ran into a data issue I couldn’t figure out on my own at work. Forced to look through the software guide. Absolutely infuriating because the articles are all either:

You must already have followed training course [broken link] and completed steps 1-30 to configure the Jizzr API key and interface FlingleFlangle. Align the FROP print queue to begin quota arrangement now. If you have not already extended Bungle permissions, fear for your life

Helpful tip! You can click on things with your mouse 😊

Like where are the tips for an intermediate user. Like for a person who kind of knows what FlingleFlangle is but just wants to double check the correct output formats

5 notes


Text

The Ultimate Guide to Online Media Tools: Convert, Compress, and Create with Ease

In the fast-paced digital era, online tools have revolutionized the way we handle multimedia content. From converting videos to compressing large files, and even designing elements for your website, there's a tool available for every task. Whether you're a content creator, a developer, or a business owner, having the right tools at your fingertips is essential for efficiency and creativity. In this blog, we’ll explore the most powerful online tools like Video to Audio Converter Online, Video Compressor Online Free, Postman Online Tool, Eazystudio, and Favicon Generator Online—each playing a unique role in optimizing your digital workflow.

1. Video to Audio Converter Online – Extract Sound in Seconds

Ever wanted just the audio from a video? Maybe you’re looking to pull music, dialogue, or sound effects for a project. That’s where a Video to Audio Converter Online comes in handy. These tools let you convert video files (MP4, AVI, MOV, etc.) into MP3 or WAV audio files in just a few clicks. No software installation required.

Using a Video to Audio Converter Online is ideal for:

Podcast creators pulling sound from interviews.

Music producers isolating tracks for remixing.

Students or professionals transcribing lectures or meetings.

The beauty lies in its simplicity: upload the video, choose your audio format, and download. It’s as straightforward as that.

2. Video Compressor Online Free – Reduce File Size Without Losing Quality

Large video files are a hassle to share or upload. Whether you're sending via email, uploading to a website, or storing in the cloud, a bulky file can be a roadblock. This is where a Video Compressor Online Free service shines.

Key benefits of using a Video Compressor Online Free:

Shrink video size while maintaining quality.

Fast, browser-based compression with no downloads.

Compatible with all major formats (MP4, AVI, MKV, etc.).

If you're managing social media content, YouTube uploads, or email campaigns, compressing videos ensures faster load times and better performance—essential for keeping your audience engaged.

3. Postman Online Tool – Streamline Your API Development

Developers around the world swear by Postman, and the Postman Online Tool brings that power to the cloud. This tool is essential for testing APIs, monitoring responses, and managing endpoints efficiently—all without leaving your browser.

Features of Postman Online Tool include:

Send GET, POST, PUT, DELETE requests with real-time response visualization.

Organize your API collections for collaborative development.

Automate testing and environment management.

Whether you're debugging or building a new application, Postman Online Tool provides a robust platform that simplifies complex API workflows, making it a must-have in every developer's toolkit.

4. Eazystudio – Your Creative Powerhouse

When it comes to content creation and design, Eazystudio is a versatile solution for both beginners and professionals. From editing videos and photos to crafting promotional content, Eazystudio makes it incredibly easy to create high-quality digital assets.

Highlights of Eazystudio:

User-friendly interface for designing graphics, videos, and presentations.

Pre-built templates for social media, websites, and advertising.

Cloud-based platform with drag-and-drop functionality.

Eazystudio is perfect for marketers, influencers, and businesses looking to stand out online. You don't need a background in graphic design—just an idea and a few clicks.

5. Favicon Generator Online – Make Your Website Look Professional

A small icon can make a big difference. The Favicon Generator Online helps you create favicons—the tiny icons that appear next to your site title in a browser tab. They enhance your website’s branding and improve user recognition.

With a Favicon Generator Online, you can:

Convert images (JPG, PNG, SVG) into favicon.ico files.

Generate multiple favicon sizes for different platforms and devices.

Instantly preview how your favicon will look in a browser tab or bookmark list.

For web developers and designers, using a Favicon Generator Online is an easy yet impactful way to polish a website and improve brand presence.

Why These Tools Matter in 2025

The future is online. As remote work, digital content creation, and cloud computing continue to rise, browser-based tools will become even more essential. Whether it's a Video to Audio Converter Online that simplifies sound editing, a Video Compressor Online Free for seamless sharing, or a robust Postman Online Tool for development, these platforms boost productivity while cutting down on time and costs.

Meanwhile, platforms like Eazystudio empower anyone to become a designer, and tools like Favicon Generator Online ensure your brand always makes a professional first impression.

Conclusion

The right tools can elevate your workflow, save you time, and improve the quality of your digital output. Whether you're managing videos, developing APIs, or enhancing your website’s design, tools like Video to Audio Converter Online, Video Compressor Online Free, Postman Online Tool, Eazystudio, and Favicon Generator Online are indispensable allies in your digital toolbox.

So why wait? Start exploring these tools today and take your digital productivity to the next level.

2 notes


Text

Cloud Computing: Definition, Benefits, Types, and Real-World Applications

In the fast-changing digital world, companies require software that matches their specific ways of working, aims and what their customers require. That’s when you need custom software development services. Custom software is made just for your organization, so it is more flexible, scalable and efficient than generic software.

What does Custom Software Development mean?

Custom software development means making, deploying and maintaining software that is tailored to a specific user, company or task. It is designed to meet specific business needs, which off-the-shelf software usually cannot do.

The main advantages of custom software development are listed below.

1. Personalized Fit

Custom software is built to address the specific needs of your business. Everything is designed to fit your workflow, whether you need it for customers, internal tasks or industry-specific functions.

2. Scalability

When your business expands, your software can also expand. You can add more features, users and integrations as needed without being bound by strict licensing rules.

3. Increased Efficiency

Use tools that are designed to work well with your processes. Custom software usually automates tasks, cuts down on repetition and helps people work more efficiently.

4. Better Integration

Many companies rely on different tools and platforms. You can have custom software made to work smoothly with your CRMs, ERPs and third-party APIs.

5. Improved Security

You can set up security measures more effectively in a custom solution. It is particularly important for industries that handle confidential information, such as finance, healthcare or legal services.

Types of Custom Software Solutions That Are Popular

CRM Systems

Inventory and Order Management

Custom-made ERP Solutions

Mobile and Web Apps

eCommerce Platforms

AI and Data Analytics Tools

SaaS Products

The Process of Custom Development

Requirement Analysis

Being aware of your business goals, what users require and the difficulties you face in running the business.

Design & Architecture

Designing a software architecture that can grow, is safe and fits your requirements.

Development & Testing

Writing code that is easy to maintain and testing for errors, speed and compatibility.

Deployment and Support

Making the software available and offering support and updates over time.

What Makes Niotechone a Good Choice?

Our team at Niotechone focuses on providing custom software that helps businesses grow. Our team of experts works with you throughout the process, from the initial idea to the final deployment, to make sure the product is what you require.

Successful experience in various industries

Agile development is the process used.

Support after the launch and options for scaling

Affordable rates and different ways to work together

Final Thoughts

Creating custom software is not only about making an app; it’s about building a tool that helps your business grow. A customized solution can give you the advantage you require in the busy digital market, no matter if you are a startup or an enterprise.

#software development company#development company software#software design and development services#software development services#custom software development outsourcing#outsource custom software development#software development and services#custom software development companies#custom software development#custom software development agency#custom software development firms#software development custom software development#custom software design companies#custom software#custom application development#custom mobile application development#custom mobile software development#custom software development services#custom healthcare software development company#bespoke software development service#custom software solution#custom software outsourcing#outsourcing custom software#application development outsourcing#healthcare software development

2 notes
