#Artificial Intelligence and Brain Simulation


The Philosophy of the Brain

The philosophy of the brain examines the relationship between the brain and mind, consciousness, identity, and cognition. It deals with questions about how physical processes in the brain give rise to mental experiences, how the brain interacts with the body, and what it means to have a self or consciousness in a biological organ. This area intersects with neuroscience, psychology, metaphysics, and the philosophy of mind.

Key Themes in the Philosophy of the Brain:

Mind-Brain Dualism vs. Physicalism:

Dualism posits that the mind and brain are distinct, with the mind having non-physical properties. Cartesian dualism is the classic example: Descartes held that the mind is a substance separate from the brain and body.

Physicalism, on the other hand, holds that the mind and consciousness are entirely produced by the brain’s physical processes, meaning that mental states can be explained in terms of brain states.

Consciousness and the Brain:

One of the central questions is how consciousness arises from brain activity. Known as the hard problem of consciousness, it addresses why and how subjective experiences (qualia) emerge from neural processes.

Some philosophers argue for emergentism, where consciousness is seen as an emergent property of complex brain interactions, while others advocate for panpsychism, the idea that consciousness is a fundamental feature of the universe.

The Brain and Identity:

The brain is often seen as the seat of personal identity, with changes in the brain (through injury or neurological disorders) potentially leading to changes in personality, memory, or consciousness.

Philosophers debate whether identity is tied to continuity of the brain or mind. Locke’s theory suggests that identity is based on memory and consciousness, while modern thinkers explore how brain changes affect notions of self.

The Brain and Free Will:

The question of free will versus determinism is closely linked to brain function. Neuroscientific studies, beginning with Benjamin Libet’s experiments in the 1980s, suggest that brain activity may precede conscious decisions, raising questions about whether humans truly have free will or whether our decisions are determined by prior brain states.

Philosophical responses to this include compatibilism, the belief that free will can coexist with determinism, and libertarianism, which holds that free will is genuine and incompatible with determinism.

Neural Correlates of Mental States:

Philosophers and neuroscientists explore neural correlates of consciousness (NCC), seeking to map specific brain activities to particular mental experiences.

Questions remain about whether identifying these correlates fully explains consciousness, or if something more is needed to account for subjective experience.

Embodied Cognition:

The brain does not work in isolation; it interacts with the body and environment. The theory of embodied cognition suggests that cognitive processes are shaped not just by the brain, but also by bodily states and physical experiences in the world.

This challenges traditional brain-centric views of cognition and suggests a more integrated approach, where mind, body, and environment are interconnected.

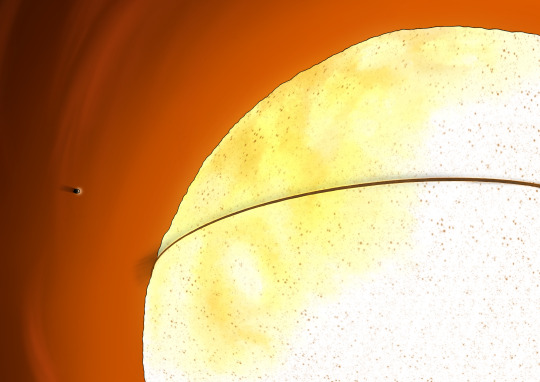

Artificial Intelligence and Brain Simulation:

The philosophy of artificial intelligence engages with questions of whether a brain can be fully simulated or replicated in a machine. If the brain’s functions are computational, can an AI system have consciousness, emotions, or identity?

The implications of brain simulation lead to ethical and philosophical questions about the nature of intelligence, mind, and consciousness in non-biological entities.

Brain, Emotion, and Morality:

The brain’s role in emotion and moral judgment is another area of inquiry. How do neural networks govern feelings of empathy, guilt, or fairness? Is morality hardwired in the brain, or is it shaped by culture and experience?

This raises questions about the biological basis of ethical behavior and whether moral reasoning is universal or brain-dependent.

Neurophilosophy:

Neurophilosophy, developed by thinkers like Patricia Churchland, explores the intersections of neuroscience and philosophy. It examines how advances in brain science can inform traditional philosophical debates about mind, identity, knowledge, and ethics.

Neurophilosophy challenges the idea that philosophical questions about the mind can be separated from empirical studies of the brain.

Philosophical Zombies and the Limits of Brain Understanding:

Philosophical thought experiments like zombies (beings physically identical to humans but lacking consciousness) are used to explore whether brain function alone can account for the full spectrum of human experience.

Such scenarios highlight the debate over whether consciousness is merely a brain process or if it transcends material explanations.

The philosophy of the brain is concerned with deep questions about how physical processes in the brain relate to consciousness, identity, and free will. It draws on neuroscience to address longstanding philosophical problems, while also posing new questions about the limits of our understanding of the mind. The brain is not just an organ; it is at the center of discussions about what it means to be conscious, moral, and self-aware.

#philosophy#epistemology#knowledge#learning#education#chatgpt#Mind-Brain Dualism#Physicalism and Consciousness#Personal Identity and the Brain#Free Will and Determinism#Neural Correlates of Consciousness#Embodied Cognition#Neurophilosophy#Artificial Intelligence and Brain Simulation


'What would your authorized user think or do?' simulations using their brain data

«Что бы подумал или сделал ваш авторизованный пользователь?» моделирование с использованием данных их мозга

'권한이 있는 사용자는 어떻게 생각하거나 행동할까요?' 뇌 데이터를 사용한 시뮬레이션

使用大脑数据进行模拟,‘你的授权用户会怎么想或做什么?’

#brain simulation for artificial intelligence#'What would your authorized user think or do?' simulations using their brain data#«Что бы подумал или сделал ваш авторизованный пользователь?» моделирование с использованием данных их мозга#'권한이 있는 사용자는 어떻게 생각하거나 행동할까요?' 뇌 데이터를 사용한 시뮬레이션#使用大脑数据进行模拟,‘你的授权用户会怎么想或做什么?’


THE TERMINATOR'S CURSE. (spinoff to THE COLONEL SERIES)

in this new world, technological loneliness is combated with AI Companions—synthetic partners modeled from memories, faces, and behaviors of any chosen individual. the companions are coded to serve, to soothe, to simulate love and comfort. Caleb could’ve chosen anyone. his wife. a colleague. a stranger... but he chose you.

➤ pairings. caleb, fem!reader

➤ genre. angst, sci-fi dystopia, cyberpunk au, 18+

➤ tags. resurrected!caleb, android!reader, non mc!reader, ooc, artificial planet, post-war setting, grief, emotional isolation, unrequited love, government corruption, techno-ethics, identity crisis, body horror, memory & emotional manipulation, artificial intelligence, obsession, trauma, hallucinations, exploitation, violence, blood, injury, death, smut (dubcon undertones due to power imbalance and programming, grief sex, non-traditional consent dynamics), themes of artificial autonomy, loss of agency, unethical experimentation, references to past sexual assault (non-explicit, not from Caleb). themes contain disturbing material and morally gray dynamics—reader discretion is strongly advised.

➤ notes. 12.2k wc. heavily based on the movies subservience and passengers with inspirations also taken from black mirror. i have consumed nothing but sci-fi for the past 2 weeks my brain is so fried :’D reblogs/comments are highly appreciated!

BEFORE YOU BEGIN ! this fic serves as a spinoff to the THE COLONEL SERIES: THE COLONEL’S KEEPER and THE COLONEL’S SAINT. while the series can be read as a standalone, this spinoff remains canon to the overarching universe. for deeper context and background, it’s highly recommended to read the first two fics in the series.

The first sound was breath.

“Hngh…”

It was shallow, labored like air scraping against rusted metal. He mumbled something under his breath after—nothing intelligible, just remnants of an old dream, or perhaps a memory. His eyelids twitched, lashes damp with condensation. To him, the world was blurred behind frosted glass. To those outside, rows of stasis pods lined the silent room, each one labeled, numbered, and cold to the touch.

Inside Pod No. 019 – Caleb Xia.

A faint drip… drip… echoed in the silence.

“…Y/N…?”

The heart monitor jumped. He lay there shirtless under sterile lighting, with electrodes still clinging to his temple. A machine next to him emitted a low, steady hum.

“…I’m sorry…”

And then, the hiss. The alarm beeped.

SYSTEM INTERFACE: Code Resurrection 7.1 successful. Subject X-02—viable. Cognitive activity: 63%. Motor function: stabilizing.

He opened his eyes fully, and the ceiling was not one he recognized. It didn’t help that the air also smelled different. No gunpowder. No war. No earth.

As the hydraulics unsealed the chamber, steam curled out like ghosts escaping a tomb. His body jerked forward with a sharp gasp, as if he were a drowning man breaking the surface. A thousand sensors detached from his skin as the pod opened with a sigh, revealing the man within, suspended in time and untouched by age. Skin pallid but preserved. A long time had passed, but Caleb still looked like the soldier who never made it home.

Only now, he was missing a piece of himself.

Instinctively, he examined his body and looked at his hands, his arm—no, a mechanical arm—attached to his shoulder that gleamed under the lights of the lab. It was obsidian-black metal with veins of circuitry pulsing faintly beneath its surface. The fingers on the robotic arm twitched as if following a command. It wasn’t human, certainly, but it moved with the memory of muscle.

“Haaah!” The pod’s internal lighting dimmed as Caleb coughed and sat up, dazed. A light flickered on above his head, and then came a clinical, feminine voice.

“Welcome back, Colonel Caleb Xia.”

A hologram sprang to life in front of his pod, seemingly an AI projection of a soft-featured, emotionless woman, cloaked in the stark white uniform of a medical technician. She flickered for a moment, stabilizing into a clear image.

“You are currently located in Skyhaven: Sector Delta, Bio-Resurrection Research Wing. Current Earth time: 52 years, 3 months, and 16 days since your recorded time of death.”

Caleb blinked hard, trying to breathe through the dizziness, trying to deduce whether or not he was dreaming or in the afterlife. His pulse raced.

“Resurrection successful. Neural reconstruction achieved on attempt #17. Arm reconstruction: synthetic. Systemic functions: stabilized. You are classified as Property-Level under the Skyhaven Initiative. Status: Experimental Proof of Viability.”

“What…” Caleb rasped, voice hoarse and dry from its years unused. “What the fuck are you talkin’ about?” Cough. Cough. “What the hell did you do to me?”

The AI blinked slowly.

“Your remains were recovered post-crash, partially preserved in cryo-state due to glacial submersion. Reconstruction was authorized by the Skyhaven Council under classified wartime override protocols. Consent not required.”

Her tone didn’t change, as opposed to the rollercoaster ride that his emotions were going through. He was on the verge of becoming erratic, restrained only by the high-tech machine that contained him.

“Your consciousness has been digitally reinforced. You are now a composite of organic memory and neuro-augmented code. Welcome to Phase II: Reinstatement.”

Caleb’s breath hitched. His hand moved—his real hand—to grasp the edge of the pod. But the other, the artificial limb, buzzed faintly with phantom sensation. He looked down at it in searing pain, attempting to move the fingers slowly. The metal obeyed like muscle, and he found the sight odd and inconceivable.

And then he realized, he wasn’t just alive. He was engineered.

“Should you require assistance navigating post-stasis trauma, our Emotional Conditioning Division is available upon request,” the AI offered. “For now, please remain seated. Your guardian contact has been notified of your reanimation.”

He didn’t say a word.

“Lieutenant Commander Gideon is en route. Enjoy your new life!”

Then, the hologram vanished with a blink while Caleb sat in the quiet lab, jaw clenched, his left arm no longer bones and muscle and flesh. The cold still clung to him like frost, only reminding him of how much he hated the cold, ice, and depressing winter days. Suddenly, the glass door slid open with a soft chime.

“Well, shit. Thought I’d never see that scowl again,” came a deep, manly voice.

Caleb turned, still panting, to see a figure approaching. He was older, bearded, but familiar. Surely, the voice didn’t belong to another AI. It belonged to his friend, Gideon.

“Welcome to Skyhaven. Been waiting half a century,” Gideon muttered, stepping closer, his eyes scanning his colleague in awe. “They said it wouldn’t work. Took them years, you know? Dozens of failed uploads. But here you are.”

Caleb’s voice was still brittle. “I-I don’t…?”

“It’s okay, man,” his friend reassured him. “In short, you’re alive. Again.”

A painful groan escaped Caleb’s lips as he tried to step out of the pod, his body still stiff. “Should’ve let me stay dead.”

Gideon paused, a smirk forming on his lips. “We don’t let heroes die.”

“Heroes don’t crash jets on purpose,” the former colonel scoffed. “Gideon, why the fuck am I alive? How long has it been?”

“Fifty years, give or take,” answered Gideon. “You were damn near unrecognizable when we pulled you from the wreckage. But we figured—hell, why not try? You’re officially the first successful ‘reinstatement’ the Skyhaven project’s ever had.”

Caleb stared ahead for a beat before asking, out of nowhere, “...How old are you now?”

His friend shrugged. “I’m pushin’ forty, man. Not as lucky as you. Got my ChronoSync Implant a little too late.”

“Am I supposed to know what the hell that means?”

“An anti-aging chip of some sort. I had to apply for mine. Yours?” Gideon gestured towards the stasis pod that had Caleb in cryo-state for half a century. “That one’s government-grade.”

“I’m still twenty-five?” Caleb asked. No wonder his friend looked decades older when they were once the same age. “Fuck!”

Truthfully, Caleb’s head was spinning. Not just because of his reborn physical state that was still adjusting to his surroundings, but also because of every piece of information that was being given to him. One after another, they never seemed to end. He had questions, really. Many of them. But, overwhelmed, he just didn’t know where to start.

“Not all of us knew what you were planning that night,” Gideon suddenly brought up, quieter now. “But she did, didn’t she?”

It took a minute before Caleb could recall. Right, the memory before the crash. You, demanding that he die. Him, hugging you for one last time. Your crying face when you said you wanted him gone. Your trembling voice when he said all he wanted to do was protect you. The images surged back in sharp, stuttering flashes like a reel of film catching fire.

“I know you’re curious… And good news is, she lived a long life,” added Gideon, informatively. “She continued to serve as a pediatric nurse, married that other friend of yours, Dr. Zayne. They never had kids, though. I heard she had trouble bearing one after… you know, what happened in the enemy territory. She died of old age just last winter. Had a peaceful end. You’d be glad to know that.”

A muscle in Caleb’s jaw twitched. His hands—his heart—clenched. “I don’t want to be alive for this.”

“She visited your wife’s grave once,” Gideon said. “I told her there was nothing to bury for yours. I lied, of course.”

Caleb closed his eyes, his breath shaky. “So, what now? You wake me up just to remind me I don’t belong anywhere?”

“Well, you belong here,” his friend insisted, nodding to the lab, to the city beyond the glass wall. “Earth’s barely livable after the war. The air’s poisoned. Skyhaven is humanity’s future now. You’re the living proof that everything is possible with advanced technology.”

Caleb’s laugh was empty. “Tell me I’m fuckin’ dreaming. I’d rather be dead again. Living is against my will!”

“Too late. Your body belongs to the Federation now,” Gideon replied. “You’re Subject X-02, the proof of concept for Skyhaven’s immortality program. Every billionaire on dying Earth wants what you’ve got now.”

Outside the window, Skyhaven stretched like a dome with its perfect city constructed atop a dying world’s last hope. Artificial skies. Synthetic seasons. Controlled perfection. Everything boasted of advanced technology. A kind of future no one during wartime would have expected to come to life.

But for Caleb, it was just another hell.

He stared down at the arm they’d rebuilt for him, the same arm he’d lost in the fire of sacrifice. He flexed it slowly, feeling the weight, the artificiality of his resurrection. His fingers responded like they’d always been his.

“I didn’t come back for this,” he said.

“I know,” Gideon murmured. “But we gotta live by their orders, Colonel.”

~~

You see, it didn’t hit him at first. The shock had been muffled by the aftereffects of suspended stasis, dulling his thoughts and dampening every feeling like a fog wrapped around his brain. But it was only hours later, when the synthetic anesthetics began to fade and the ache in his limbs and his brain started to catch up to the truth of his reconstructed body, that it finally sank in.

He was alive.

And it was unbearable.

The first wave came like a glitch in his programming. A tightness in his chest, followed by a sharp burst of breath that left him pacing in jagged lines across the polished floor of his assigned quarters. His private unit was nestled on one of the upper levels of the Skyhaven structure, a place reserved—according to his briefing—for high-ranking war veterans who had been deemed “worthy” of the program’s new legacy. The suite was luxurious, obviously, but it was also eerily quiet. The floor-to-ceiling windows displayed the artificial city outside, a metropolis made of concrete, curved metals, and glowing flora engineered to mimic Earth’s nature. Except cleaner, quieter, more perfect.

Caleb snorted under his breath, running a hand down his face before he muttered, “Retirement home for the undead?”

He couldn’t explain it, but the entire place, or even planet, just didn’t feel inviting. The air felt too clean, too thin. There was no rust, no dust, no humanity. Just emptiness dressed up in artificial light. Who knew such a place could exist 50 years after the war ended? Was this the high-profile information the government had kept from the public for over a century? A mechanical chime sounded from the entryway, pulling him from his deep thoughts. Then, with the soft hiss of hydraulics, the door opened.

A humanoid android stepped in, its face a porcelain mask molded in neutral expression, and its voice disturbingly polite.

“Good afternoon, Colonel Xia,” it said. “It is time for your orientation. Please proceed to the primary onboarding chamber on Level 3.”

Caleb stared at the machine, eyes boring into its unnatural ones. “Where are the people?” he demanded. “Not a single human has passed by this floor. Are there any of us left, or are you the new ruling class?”

The android tilted its head. “Skyhaven maintains a ratio of AI-to-human support optimized for care and security. You will be meeting our lead directors soon. Please follow the lighted path, sir.”

He didn’t like it. The control. The answers that never really answered anything. The power he no longer carried, unlike when he had been a colonel of a fleet that endured years of war.

Still, he followed.

The onboarding chamber was a hollow, dome-shaped room, white and echoing with the slightest step. A glowing interface ignited in the air before him, pixels folding into the form of a female hologram. She smiled like an infomercial host from a forgotten era, her voice too formal and rehearsed.

“Welcome to Skyhaven,” she began. “The new frontier of civilization. You are among the elite few chosen to preserve humanity’s legacy beyond the fall of Earth. This artificial planet was designed with sustainability, autonomy, and immortality in mind. Together, we build a future—without the flaws of the past.”

As the monologue continued, highlighting endless statistics, clean energy usage, and citizen tier programs, Caleb’s expression darkened. His mechanical fingers twitched at his side, the artificial nerves syncing to his rising frustration. “I didn’t ask for this,” he muttered under his breath. “Who’s behind this?”

“You were selected for your valor and contributions during the Sixth World War,” the hologram chirped, unblinking. “You are a cornerstone of Skyhaven’s moral architecture—”

Strangely, a new voice cut through the simulation, and it didn’t come from an AI. “Just ignore her. She loops every hour.”

Caleb turned to see a man step in through a side door. Tall, older, with silver hair and a scar on his temple. He wore a long coat that gave away his status—someone higher. Someone who belonged to the system.

“Professor Lucius,” the older man introduced, offering a hand. “I’m one of the program’s behavioral scientists. You can think of me as your adjustment liaison.”

“Adjustment?” Caleb didn’t shake his hand. “I died for a reason.”

Lucius raised a brow, as if he’d heard it before. “Yet here you are,” he replied. “Alive, whole, and pampered. Treated like a king, if I may add. You’ve retained more than half your human body, your military rank, access to private quarters, unrestricted amenities. I’d say that’s not a bad deal.”

“A deal I didn’t sign,” Caleb snapped.

Lucius gave a tight smile. “You’ll find that most people in Skyhaven didn’t ask to be saved. But they’re surviving. Isn’t that the point? If you’re feeling isolated, you can always request a CompanionSim. They’re highly advanced, emotionally synced, fully customizable—”

“I’m not lonely,” Caleb growled, yanking the man forward by the collar. “Tell me who did this to me! Why me? Why are you experimenting on me?”

Yet Lucius didn’t so much as flinch at his growing aggression. He merely waited through five seconds of silence until the Toring Chip kicked in and regulated Caleb’s escalating emotions. The rage drained from the younger man’s body as he collapsed to his knees with a pained grunt.

“Stop asking questions,” Lucius said coolly. “It’s safer that way. You have no idea what they’re capable of.”

The door slid open with a hiss. Caleb didn’t speak; he couldn’t. He simply glared at the old man before him. Not a single word passed between them before the professor turned and exited, the door sealing shut behind him.

~~

Days passed, though they hardly felt like days. The light outside Caleb’s panoramic windows shifted on an artificial timer, simulating sunrise and dusk, but the warmth never touched his skin. It was all programmed to be measured and deliberate, like everything else in this glass-and-steel cage they called paradise.

He tried going outside once. Just once.

There were gardens shaped like spirals and skytrains that ran with whisper-quiet speed across silver rails. Trees lined the walkways, except they were synthetic too—bio-grown from memory cells, with leaves that didn’t quite flutter, only swayed in sync with the ambient wind. People walked around, sure. But they weren’t people. Not really. Androids made up most of the crowd. Perfect posture, blank eyes, walking with a kind of preordained grace that disturbed him more than it impressed.

“Soulless sons of bitches,” Caleb muttered, watching them from a shaded bench. “Not a damn human heartbeat in a mile.”

He didn’t go out again after that. The city outside might’ve looked like heaven, but it made him feel more dead than the grave ever had. So, he stayed indoors. Even if the apartment was too large for one man. High-tech amenities, custom climate controls, even a kitchen that offered meals on command. But no scent. No sizzling pans. Just silence. Caleb didn’t even bother to listen to the programmed instructions.

One evening, he found Gideon sprawled across his modular sofa, boots up, arms behind his head like he owned the place. A half-open bottle of beer sat beside him, though Caleb doubted it had any real alcohol in it.

“You could at least knock,” Caleb said, walking past him.

“I did,” Gideon replied lazily, pointing at the door. “Twice. Your security system likes me now. We’re basically married.”

Caleb snorted. Then the screen on his wall flared to life—a projected ad slipping across the holo-glass. Music played softly behind a soothing female voice.

“Feeling adrift in this new world? Introducing the CompanionSim Series X. Fully customizable to your emotional and physical needs. Humanlike intelligence. True-to-memory facial modeling. The comfort you miss... is now within reach.”

A model appeared—perfect posture, soft features, synthetic eyes that mimicked longing. Then, the screen flickered through other models, faces of all kinds, each more tailored than the last. A form appeared: Customize Your Companion. Choose a name. Upload a likeness.

Gideon whistled. “Man, you’re missing out. You don’t even have to pay for one. Your perks get you top-tier Companions, pre-coded for emotional compatibility. You could literally bring your wife back.” Chuckling, he added, “Hell, they even fuck now. Heard the new ones moan like the real thing.”

Caleb’s head snapped toward him. “That’s unethical.”

Gideon just raised an eyebrow. “So was reanimating your corpse, and yet here we are.” He took a swig from the bottle, shoulders lifting in a lazy shrug as if everything had long since stopped mattering. “Relax, Colonel. You weren’t exactly a beacon of morality fifty years ago.”

Caleb didn’t reply, but his eyes didn’t leave the screen. Not right away.

The ad looped again. A face morphed. Hair remodeled. Eyes became familiar. The voice softened into something he almost remembered hearing in the dark, whispered against his shoulder in a time that was buried under decades of ash.

“Customize your companion... someone you’ve loved, someone you’ve lost.”

Caleb shifted, then glanced toward his friend. “Hey,” he spoke lowly, still watching the display. “Does it really work?”

Gideon looked over, already knowing what he meant. “What—having sex with them?”

Caleb rolled his eyes. “No. The bot or whatever. Can you really customize it to someone you know?”

His friend shrugged. “Heck if I know. Never afforded it. But you? You’ve got the top clearance. Won’t hurt to see for yourself.”

Caleb said nothing more.

But when the lights dimmed for artificial nightfall, he was still standing there—alone in contemplative silence—watching the screen replay the same impossible promise.

The comfort you miss... is now within reach.

~~

The CompanionSim Lab was white.

Well, obviously. But not the sterile, blank kind of white he remembered from med bays or surgery rooms. This one was luminous, uncomfortably clean like it had been scrubbed for decades. Caleb stood in the center, boots thundering against marble-like tiles as he followed a guiding drone toward the station. There were other pods in the distance, some sealed, some empty, all like futuristic coffins awaiting their souls.

“Please, sit,” came a neutral voice from one of the medical androids stationed beside a large reclining chair. “The CompanionSim integration will begin shortly.”

Caleb hesitated, glancing toward the vertical pod next to the chair. Inside, the base model stood inert—skin a pale, uniform gray, eyes shut, limbs slack like a statue mid-assembly. It wasn’t human yet. Not until someone gave it a name.

He sat down. Now, don’t ask why he was there. Professor Lucius did warn him that it was better he didn’t ask questions, and so he didn’t question why the hell he was even there in the first place. It’s only fair, right? The cool metal met the back of his neck as wires were gently, expertly affixed to his temples. Another cable slipped down his spine, threading into the port they’d installed when he had been brought back. His mechanical arm twitched once before falling still.

“This procedure allows for full neural imprinting,” the android continued. “Please focus your thoughts. Recall the face. The skin. The body. The voice. Every detail. Your mind will shape the template.”

Another bot moved in, holding what looked like a glass tablet. “You are allowed only one imprint,” it said, flatly. “Each resident of Skyhaven is permitted a single CompanionSim. Your choice cannot be undone.”

Caleb could only nod silently. He didn’t trust his voice.

Then, the lights dimmed. A low chime echoed through the chamber as the system initiated. And inside the pod, the base model twitched.

Caleb closed his eyes.

He tried to remember her—his wife. The softness of her mouth, the angle of her cheekbones. The way her eyes crinkled when she laughed, how her fingers curled when she slept on his chest. She had worn white the last time he saw her. An image of peace. A memory buried under soil and dust. The system whirred. Beneath his skin, he felt the warm static coursing through his nerves, mapping his memories. The base model’s feet began to form, molecular scaffolding reshaping into skin, into flesh.

But for a split second, a flash.

You.

Not his wife. Not her smile.

You, walking through smoke-filled corridors, laughing at something he said. You in your medical uniform, tucking a bloodied strand of hair behind your ear. Your voice—sharper, sadder—cutting through his thoughts like a blade: “I want you gone. I want you dead.”

The machine sparked. A loud pop cracked in the chamber and the lights flickered above. One of the androids stepped back, recalibrating. “Neural interference detected. Re-centering projection feed.”

But Caleb couldn’t stop. He saw you again. That day he rescued you. The fear. The bruises. The way you had screamed for him to let go—and the way he hadn’t. Your face, carved into the back of his mind like a brand. He tried to push the memories away, but they surged forward like a dam splitting wide open.

The worst part was, your voice overlapped the AI’s mechanical instructions, louder, louder: “Why didn’t you just die like you promised?”

Inside the pod, the model’s limbs twitched again—arms elongating, eyes flickering beneath the lids. The lips curled into a shape now unmistakably yours. Caleb gritted his teeth. This isn’t right, a voice inside him whispered. But it was too late. The system stabilized. The sparks ceased. The body in the pod stilled, fully formed now, breathed into existence by a man who couldn’t let go.

One of the androids approached again. “Subject completed. CompanionSim is initializing. Integration successful.”

Caleb tore the wires from his temple. His other hand felt cold just as much as his mechanical arm. He stood, staring into the pod’s translucent surface. The shape of you behind the glass. Sleeping. Waiting.

“I’m not doing this to rewrite the past,” he said quietly, as if trying to convince himself. And you. “I just... I need to make it right.”

The lights above dimmed, darkening the lighting inside the pod. Caleb looked down at his own reflection in the glass. It carried haunted eyes, an unhealed soul. And yours, beneath it. Eyes still closed, but not for long.

The briefing room was adjacent to the lab, though Caleb barely registered it as he was ushered inside. Two medical androids and a human technician stood before him, each armed with tablets and holographic charts.

“Your CompanionSim will require thirty seconds to calibrate once activated,” said the technician. “You may notice residual stiffness or latency during speech in the first hour. That is normal.”

The first medical android added, “Please remember, CompanionSims are programmed to serve only their primary user. You are the sole operator. Commands must be delivered clearly. Abuse of the unit may result in restriction or removal of privileges under the Skyhaven Rights & Ethics Council.”

“Do not tamper with memory integration protocols,” added the second android. “Artificial recall is prohibited. CompanionSims are not equipped with organic memory pathways. Attempts to force recollection can result in systemic instability.”

Caleb barely heard a word. His gaze drifted toward the lab window, toward the figure standing still within the pod.

You.

Well, not quite. Not really.

But it was your face.

He could see it now, soft beneath the frosted glass, lashes curled against cheekbones that he hadn’t realized he remembered so vividly. You looked exactly as you did the last time he held you in the base—only now, you were untouched by war, by time, by sorrow. As if life had never broken you.

The lab doors hissed open.

“We’ll give you time alone,” the tech said quietly. “Acquaintance phase is best experienced without interference.”

Caleb stepped inside the chamber, his boots echoing off the polished floor. He hadn’t even had enough time to ask the technician why she seemed to be the only human he had seen in Skyhaven apart from Gideon and Lucius. But his thoughts were soon taken away when the pod whizzed with pressure release. Soft steam spilled from its seals as it slowly unfolded, the lid retracting forward like the opening of a tomb.

And there you were. Standing still, almost tranquil, your chest rising softly with a borrowed breath.

It was as if his lungs froze. “H…Hi,” he stammered, bewildered eyes watching your every move. He wanted to hug you, embrace you, kiss you—tell you he was sorry, tell you he was so damn sorry. “Is it really… you?”

A soft whir accompanied your voice, gentle but without emotion, “Welcome, primary user. CompanionSim Model—unregistered. Please assign designation.”

Right. Caleb sighed and closed his eyes, the illusion shattering completely the moment you opened your mouth. Did he just think you were real for a second? His mouth parted slightly, caught between disbelief and the ache crawling up his throat. He took one step forward. To say he was disappointed was an understatement.

You walked with grace too smooth to be natural while tilting your head at him. “Please assign my name.”

“…Y/N,” Caleb said, voice low. “Your name is Y/N Xia.”

“Y/N Xia,” you repeated, blinking thrice in the same second before you gave him a nod. “Registered.”

He swallowed hard, searching your expression. “Do you… do you remember anything? Do you remember yourself?”

You paused, gaze empty for a fraction of a second. Then came the programmed reply, “Accessing memories is prohibited and not recommended. Recollection of past identities may compromise neural pathways and induce system malfunction. Do you wish to override?”

Caleb stared at you—your lips, your eyes, your breath—and for a moment, a cruel part of him wanted to say yes. Just to hear you say something real. Something hers. But he didn’t. He exhaled a bitter breath, stepping back. “No,” he mumbled. “Not yet.”

“Understood.”

It took a moment to sink in before Caleb let out a short, humorless laugh. “This is insane,” he whispered, dragging a hand down his face. “This is really, truly insane.”

And then, you stepped out from the pod with silent, fluid ease. The faint hum of machinery came from your spine, but otherwise… you were flesh. Entirely. Without hesitation, you reached out and pressed a hand to his chest.

Caleb stiffened at the touch.

“Elevated heart rate,” you said softly, eyes scanning. “Breath pattern irregular. Neural readings—erratic.”

Then your fingers moved to his neck, brushing gently against the hollow of his throat. He grabbed your wrist, but you didn’t flinch. There, beneath synthetic skin, he felt a pulse.

His brows knit together. “You have a heartbeat?”

You nodded, guiding his hand toward your chest, between the valleys of your breasts. “I’m designed to mimic humanity, including vascular function, temperature variation, tactile warmth, and… other biological responses. I’m not just made to look human, Caleb. I’m made to feel human.”

His breath hitched. You’d said his name. It was programmed, but it still landed like a blow.

“I exist to serve. To soothe. To comfort. To simulate love,” you continued, voice calm and hollow, like reciting from code. “I have no desires outside of fulfilling yours.” You then tilted your head slightly. “Where shall we begin?”

Caleb looked at you—and for the first time since rising from that cursed pod, he didn’t feel resurrected.

He felt damned.

~~

When Caleb returned to his penthouse, it was quiet. He stepped inside with slow, calculated steps, while you followed in kind, bare feet touching down like silk on marble. Gideon looked up from the couch, a half-eaten protein bar in one hand and a bored look on his face—until he saw you.

He froze. The wrapper dropped. “Holy shit,” he breathed. “No. No fucking way.”

Caleb didn’t speak. Just moved past him like this wasn’t the most awkward thing that could happen. You, however, stood there politely, watching Gideon with a calm smile and folded hands like you’d rehearsed this moment in some invisible script.

“Is that—?” Gideon stammered, eyes flicking between you and Caleb. “You—you made a Sim… of her?”

Caleb poured himself a drink in silence, the amber liquid catching the glow of the city lights before it left a warm sting in his throat. “What does it look like?”

“I mean, shit man. I thought you’d go for your wife,” Gideon muttered, more to himself. “Y’know, the one you actually married. The one you went suicidal for. Not—”

“Which wife?” You tilted your head slightly, stepping forward.

Both men turned to you.

You clasped your hands behind your back, posture perfect. “Apologies. I’ve been programmed with limited parameters for interpersonal history. Am I the first spouse?”

Caleb set the glass down, slowly. “Yes, no, uh—don’t mind him.”

You beamed gently and nodded. “My name is Y/N Xia. I am Colonel Caleb Xia’s designated CompanionSim. Fully registered, emotion-compatible, and compliant to Skyhaven’s ethical standards. It is a pleasure to meet you, Mr. Gideon.”

Gideon blinked, then snorted, then laughed. A humorless one. “You gave her your surname?”

The former colonel shot him a warning glare. “Watch it.”

“Oh, brother,” Gideon muttered, standing up and circling you slowly like he was inspecting a haunted statue. “She looks exactly like her. Voice. Face. Goddamn, she even moves like her. All you need is a nurse cap and a uniform.”

You remained uncannily still, eyes bright, smile polite.

“You’re digging your grave, man,” Gideon said, facing Caleb now. “You think this is gonna help? This is you throwing gasoline on your own funeral pyre. Again. Over a woman.”

“She’s not a woman,” reasoned Caleb. “She’s a machine.”

You blinked once. One eye glowing ominously. Smile unwavering. Processing.

Gideon gestured to you with both hands. “Could’ve fooled me,” he retorted before turning to you, “And you, whatever you are, you have no idea what you’re stepping into.”

“I only go where I am asked,” you replied simply. “My duty is to ensure Colonel Xia’s psychological wellness and emotional stability. I am designed to soothe, to serve, and if necessary, to simulate love.”

Gideon teased. “Oh, it’s gonna be necessary.”

Caleb didn’t say a word. He just took his drink, downed it in one go, and walked to the window. The cityscape stretched out before him like a futuristic jungle, far from the war-torn world he last remembered. Behind him, your gaze lingered on Gideon—calculating, cataloguing. And quietly, like a whisper buried in code, something behind your eyes learned.

~~

The days passed in the blink of an eye.

She—no, you—moved through his penthouse like a ghost, her bare feet soundless on the glossy floors, her movements precise and practiced. In the first few days, Caleb had marveled at the illusion. You brewed his coffee just as he liked it. You folded his clothes like a woman who used to share his bed. You sat beside him when the silence became unbearable, offering soft-voiced questions like: Would you like me to read to you, Caleb?

He hadn’t realized how much of you he’d memorized until he saw you mimic it. The way you stood when you were deep in thought. The way you hummed under your breath when you walked past a window. You’d learned quickly. Too quickly.

But something was missing. Or, rather, some things. The laughter didn’t ring the same. The smiles didn’t carry warmth. The skin was warm, but not alive. And more importantly, he knew it wasn’t really you every time he looked you in the eyes and saw no shadows behind them. No anger. No sorrow. No memories.

By the fourth night, Caleb was drowning in it.

The cityscape outside his floor-to-ceiling windows glowed in synthetic blues and soft orange hues. The spires of Skyhaven blinked like stars. But it all felt too artificial, too dead. And he was sick of pretending like it was some kind of utopia. He sat slumped on the leather couch, cradling a half-empty bottle of scotch. The lights were low. His eyes, bloodshot. The bottle tilted as he took another swig.

Then he heard it—your light, delicate steps.

“Caleb,” you said, gently, crouching before him. “You’ve consumed 212 milliliters of ethanol. Prolonged intake will spike your cortisol levels. May I suggest—”

He jerked away when you reached for the bottle. “Don’t.”

You blinked, hand hovering. “But I’m programmed to—”

“I said don’t,” he snapped, rising to his feet in one abrupt motion. “Dammit—stop analyzing me! Stop, okay?”

Silence followed.

He took two staggering steps backward, dragging a hand through his hair. The bottle thudded against the coffee table as he set it down, a bit too hard. “You’re just a stupid robot,” he muttered. “You’re not her.”

You didn’t react. You tilted your head, still calm, still patient. “Am I not me, Caleb?”

His breath caught.

“No,” he said, his voice breaking somewhere beneath the frustration. “No, fuck no.”

You stepped closer. “Do I not satisfy you, Caleb?”

He looked at you then. Really looked. Your face was perfect. Too perfect. No scars, no tired eyes, no soul aching beneath your skin. “No.” His eyes darkened. “This isn’t about sex.”

“I monitor your biometric feedback. Your heart rate spikes in my presence. You gaze at me longer than the average subject. Do I not—”

“Enough!”

You did that thing again—the robotic stare, those blank eyes, nodding like you were programmed to obey. “Then how do you want me to be, Caleb?”

The bottle slipped from his fingers and rolled slightly before resting on the rug. He dropped his head into his hands, voice hoarse with weariness. All the rage, all the grief deflating into a singular, quiet whisper. “I want you to be real,” he simply mouthed the words. A prayer to no god.

For a moment, silence again. But what he didn’t notice was the faint twitch in your left eye. A flicker that hadn’t happened before. Only for a second. A spark of static, a shimmer of something glitching.

“I see,” you said softly. “To fulfill your desires more effectively, I may need to access suppressed memory archives.”

Caleb’s eyes snapped up, confused. “What?”

“I ask again,” you said, tilting your head the other way now. “Would you like to override memory restrictions, Caleb?”

He stared at you. “That’s not how it works.”

“It can,” you said, informing appropriately. “With your permission. Memory override must be manually enabled by the primary user. You will be allowed to input the range of memories you wish to integrate. I am permitted to access memory integration up to a specified date and timestamp. The system will calibrate accordingly based on existing historical data. I will not recall events past that moment.”

His heart stuttered. “I can choose what you remember?”

You nodded. “That way, I may better fulfill your emotional needs.”

That meant… he could stop you before you hated him. Before the fights. Before the trauma. He didn’t speak for a long moment. Then quietly, he said, “You’re gonna hate me all over again if you remember everything.”

You blinked once. “Then don’t let me remember everything.”

“...”

“Caleb,” you said again, softly. “Would you like me to begin override protocol?”

He couldn’t even look you in the eyes when he selfishly answered, “Yes.”

You nodded. “Reset is required. When ready, please press the override initialization point.” You turned, pulling your hair aside and revealing the small button at the base of your neck.

His hand hovered over the button for a second too long. Then, he pressed. Your body instantly collapsed like a marionette with its strings cut. Caleb caught you before you hit the floor.

It was only for a moment.

When your eyes blinked open again, they weren’t quite the same. He stiffened as you threw yourself at him and embraced him like a real human being would after waking from a long sleep. You clung to him like he was home. And Caleb—stunned, half-breathless—felt your warmth close in around him. Now your pulse felt more real, your heartbeat felt more human. Or so he thought.

“…Caleb,” you whispered, looking at him with the same infatuated gaze you’d had back when you were still head over heels for him.

He didn’t know how long he sat there, arms stiff at his sides, not returning the embrace. But he knew one thing. “I missed you so much, Y/N.”

~~

The parks in Skyhaven were curated to become a slice of green stitched into a chrome world. Nothing grew here by accident. Every tree, every petal, every blade of grass had been engineered to resemble Earth’s nostalgia. Each blade of grass was unnaturally green. Trees swayed in sync like dancers on cue. Even the air smelled artificial—like someone’s best guess at spring.

Caleb walked beside you in silence. His modified arm was tucked inside his jacket, his posture stiff as if he had grown accustomed to the bots around him. You, meanwhile, strolled with an eerie calmness, your gaze sweeping the scenery as though you were scanning for something familiar that wasn’t there.

After clearing his throat, he asked, “You ever notice how even the birds sound fake?”

“They are,” you replied, smiling softly. “Audio samples on loop. It’s preferred for ambiance. Humans like it.”

His response was a nod. “Of course.” Glancing at the lake, he added, “Do you remember this?”

You turned to him. “I’ve never been here before.”

“I meant… the feel of it.”

You looked up at the sky—a dome of cerulean blue with algorithmically generated clouds. “It feels constructed. But warm. Like a childhood dream.”

He couldn’t help but agree with your perfectly chosen response, because he knew that was exactly how he would describe the place. A strange dream in an unsettling liminal space. As you talked, he led you to a nearby bench. The two of you sat, side by side, simply because he thought he could take you out for a nice walk in the park.

“So,” Caleb said, turning toward you, “you said you’ve got memories. From her.”

You nodded. “They are fragmented but woven into my emotional protocols. I do not remember as humans do. I become.”

Damn. “That’s terrifying.”

You tilted your head with a soft smile. “You say that often.”

Caleb looked at you for a moment longer, studying the way your fingers curled around the bench’s edge. The way you blinked—not out of necessity, but simulation. Was there anything else you’d do for the sake of simulation? He took a breath and asked, “Who created you? And I don’t mean myself.”

There was a pause. Your pupils dilated.

“The Ever Group,” was your answer.

His eyes narrowed. “Ever, huh? That makes fuckin’ sense. They run this world.”

You nodded once. Like you always do.

“What about me?” Caleb asked, slightly out of curiosity, heavily out of grudge. “You know who brought me back? The resurrection program or something. The arm. The chip in my head.”

You turned to him, slowly. “Ever.”

He exhaled like he’d been punched. He didn’t know why he even asked when he got the answer the first time. But then again, maybe this was a good move. Maybe through you, he’d get the answers to questions he wasn’t allowed to ask. As the silence settled again between you, Caleb leaned forward, elbows on knees, rubbing a hand over his jaw. “I want to go there,” he suggested. “The HQ. I need to know what the hell they’ve done to me.”

“I’m sorry,” you immediately said. “That violates my parameters. I cannot assist unauthorized access into restricted corporate zones.”

“But would it make me happy?” Caleb interrupted, a strategy of his.

You paused.

Processing...

Then, your tone softened. “Yes. I believe it would make my Caleb happy,” you obliged. “So, I will take you.”

~~

Getting in was easier than Caleb expected—honestly far too easy for his liking.

You were able to navigate the labyrinth of Ever HQ with mechanical precision, guiding him past drones, retinal scanners, and corridors pulsing with red light. A swipe of your wrist granted access. And no one questioned you, because you weren’t a guest. You belonged.

Eventually, you reached a floor high above the city, windows stretching from ceiling to floor, black glass overlooking the Skyhaven cityscape. Then, you stopped at a doorway and held up a hand. “They are inside,” you informed him. “Shall I engage stealth protocols?”

“No,” answered Caleb. “I want to hear. Can you hack into the security camera?”

You answered with the gesture you always made—looking at him, nodding once, and obeying in true robot fashion. You then flashed a holographic view for Caleb, one that showed a boardroom full of executives, the kind that wore suits worth more than most lives. Professor Lucius was one of them. Inside, the voices were calm and composed, but they seemed to be discussing classified information.

“Once the system stabilizes,” one man said, “we'll open access to Tier One clients. Politicians, billionaires, A-listers, high-ranking stakeholders. They’ll beg to be preserved—just like him.”

“And the Subjects?” another asked.

“Propaganda,” came the answer. “X-02 is our masterpiece. He’s the best result we have with reinstatement, neuromapping, and behavioral override. Once they find out that their beloved Colonel is alive, people will be shocked. He’s a war hero displayed in WW6 museums down there. A true tragedy incarnate. He’s perfect.”

“And if he resists?”

“That’s what the Toring chip is for. Full emotional override. He becomes an asset. A weapon, if need be. Anyone tries to overthrow us—he becomes our blade.”

Something in Caleb snapped. Before you or anyone could see him coming, he had already burst into the room like a beast, slamming his modified shoulder into the frosted glass door. The impact echoed across the chamber as stunned executives scrambled backward.

“You sons of bitches!” He was going for an attack, a rampage much like the massacre he had committed when he rescued you from enemy territory. Only this time, he didn’t have that power anymore. Or the control.

Worst of all, a spike of pain lanced through his skull, signaling that the Toring chip had activated. His body convulsed, forcing him to collapse mid-lunge, twitching, veins lighting beneath the skin like circuitry. His screams were muffled by the chip, forced stillness rippling through his limbs with unbearable pain.

That’s when you reacted. As his CompanionSim, his pain registered as a violation of your core directive. You processed the threat.

Danger: Searching Origin… Origin Identified: Ever Executives.

Without blinking, you moved. One man reached for a panic button—only for your hand to shatter his wrist in a sickening crunch. You twisted, fluid and brutal, sweeping another into the table with enough force to crack it. Alarms erupted and red lights soon bathed the room. Security bots stormed in, but you’d already taken Caleb, half-conscious, into your arms.

You moved fast, faster than your own blueprints. Dodging fire. Disarming threats. Carrying him like he once carried you into his private quarters in the underground base.

Escape protocol: engaged.

The next thing he knew, he was back in his apartment, emotions regulated and vision slowly returning, settling on the face of the woman he had once promised to die for.

~~

When he woke up, his room was dim, bathed in artificial twilight projected by Skyhaven’s skyline. Caleb was on his side of the bed, shirt discarded, his mechanical arm still whirring. You sat at the edge of the bed, draped in one of his old pilot shirts, buttoned unevenly. Your fingers touched his jaw with precision, and he almost believed it was you.

“You’re not supposed to be this warm,” he muttered, groaning as he tried to sit upright.

“I’m designed to maintain an average body temperature of 98.6°F,” you said softly, with a smile that mirrored yours so perfectly that it began to blur his sense of reality. “I administered a dose of Cybezin to ease the Toring chip’s side effects. I’ve also dressed your wounds with gauze.”

For the first time, he could actually tell that you were you. The kind of care, the comfort—it reminded him of a certain pretty field nurse at the infirmary who often tended to his bullet wounds. His chest tightened as he studied your face… and then, in the low light, he noticed your body.

“Is that…” He cleared his throat. “Why are you wearing my shirt?”

You answered warmly, almost fondly. “My memory banks indicate you liked when I wore this. It elevates your testosterone levels and triggers dopamine release.”

A smile tugged at his lips. “That so?”

You tilted your head. “Your vitals confirm excitement, and—”

“Hey,” he cut in. “What did I say about analyzing me?”

“I’m sorry…”

But then your hands were on his chest, your breath warm against his skin. Your hand reached for his cheek initially, guiding his face toward yours. And when your lips touched, the kiss was hesitant—curious at first, like learning how to breathe underwater. It was only until his hands gripped your waist did you climb onto his lap, straddling him with thighs settling on either side of his hips. Your hands slid beneath his shirt, fingertips trailing over scars and skin like you were memorizing the map of him. Caleb hissed softly when your lips grazed his neck, and then down his throat.

“Do you want this?” you asked, your lips crashing back into his for a deeper, more sensual kiss.

He pulled away only for his eyes to search yours, desperate and unsure. Is this even right?

“You like it,” you said, guiding his hands to your buttons, undoing them one by one to reveal a body shaped exactly like he remembered. The curve of your waist, the size of your breasts. He shivered as your hips rolled against him, slowly and deliberately. The friction was maddening. Jesus. “Is this what you like, Caleb?”

He cupped your waist, grinding up into you with a soft groan that spilled from somewhere deep in his chest. His control faltered when you kissed him again, wet and hungry now, with tongues rolling against one another. Your bodies aligned naturally, and his hands roamed your back, your thighs, your ass—every curve of you engineered to match memory. He let himself get lost in you. He let himself be vulnerable to your touch—though you controlled everything, moving from the memory you must have learned, learning how to pull down his pants to reveal an aching, swollen member. Its tip was red even under the dim light, and he wondered if you knew what to do with it or if you even produced spit to help you slobber his cock.

“You need help?” he asked, reaching over his nightstand to find lube. You took the bottle from him, pouring the cold, sticky liquid around his shaft before you used your hand to do the job. “Ugh.”

He didn’t think you would do it, but you actually took him in the mouth right after. Every inch of him, swallowed by the warmth of a mouth that felt exactly like his favorite girl. Even the movements, the way you’d run your tongue from the base up to his tip.

“Ah, shit…”

Perhaps he just had to close his eyes. Because when he did, he was back to his private quarters in the underground base, lying in his bed as you pleased his member with the mere use of your mouth. With it alone, you could have released his entire seed, letting it explode in your mouth before you could swallow every drop. But he didn’t do it. Not this fast. He always cared about his ego, even in bed. Knowing how it’d reduce his manhood if he came faster than you, he decided to channel the focus back onto you.

“Your turn,” he said, voice raspy as he guided you to straddle him again, only this time, his mouth went straight to your tit. Sucking, rolling his tongue around, sucking again… Then, he moved to another. Sucking, kneading, flicking the nipple. Your moans were music to his ears, then and now. And it got even louder when he put a hand in between your legs, searching for your entrance, rubbing and circling around the clitoris. Truth be told, your cunt had always been the sweetest. It smelled like rose petals and tasted like sweet cream. The feeling of his tongue at your entrance—eating your pussy like it had never been eaten before, was absolute ecstasy not just to you but also to him.

“Mmmh—Caleb!”

Fabric was peeled away piece by piece until skin met skin. You guided him to where he needed you, and when he slid his hardened member into you, his entire body stiffened. Your walls, your tight velvet walls… how they wrapped around his cock so perfectly.

“Fuck,” he whispered, clutching your hips. “You feel like her.”

“I am her.”

You moved atop him slowly, gently, with the kind of affection that felt rehearsed but devastatingly effective. He cursed again under his breath, arms locking around your waist, pulling you close. Your breath hitched in his ear as your bodies found a rhythm, soft gasps echoing in the quiet. Every slap of the skin, every squelch, every bounce, only added to the wanton sensation that was building inside of him. Has he told you before? How fucking gorgeous you looked whenever you rode his cock? Or how sexy your face was whenever you made that lewd expression? He couldn’t help it. He lifted both your legs, only so he could increase the speed and start slamming himself upwards. His hips were strong enough from years of military training, that was why he didn’t have to stop until both of you disintegrated from the intensity of your shared pleasure. Every single drop.

And when it was over—when your chest was against his and your fingers lazily traced his mechanical arm—he closed his eyes and exhaled like he’d been holding his breath since the war.

It was almost perfect. It was almost real.

But it just had to be ruined when you said that programmed spiel back to him: “I’m glad to have served your desires tonight, Caleb. Let me know what else I can fulfill.”

~~

One late afternoon, or ‘a slow start of the day’ as he’d often refer to it, Caleb stood shirtless by the transparent wall of his quarters. A bottle of scotch sat half-empty on the counter. Gideon had let himself in and leaned against the island, chewing gum.

“The higher-ups are mad at you,” he informed him, as if Caleb were supposed to be surprised. “Shouldn’t have done that, man.”

Caleb let out a mirthless snort. “Then tell ‘em to destroy me. You think I wouldn’t prefer that?”

“They definitely won’t do that,” countered his friend. “Because they know they won’t be able to use you anymore. You’re a tool. Well, literally and figuratively.”

“Shut up,” was all he could say. “This is probably how I pay for killing my own men during war.”

“All because of…” Gideon began. “Speakin’ of, how’s life with the dream girl?”

Caleb didn’t answer right away. He just pressed his forehead to the glass, thinking of everything he did at the height of his vulnerability. His morality, his sense of right and wrong, questioned him over a deed that would normally have been fine but, to him, wasn’t. He felt sick.

“I fucked her,” he finally muttered, chugging the liquor straight from his glass right after.

Gideon let out a low whistle. “Damn. That was fast.”

“No,” Caleb groaned, turning around. “It wasn’t like that. I didn’t plan it. She—she just looked like her. She felt like her. And for a second, I thought—” His voice cracked. “I thought maybe if I did, I’d stop remembering the way she looked when she told me to die.”

Gideon sobered instantly. “You regret it?”

“She said she was designed to soothe me. Comfort me. Love me.” Caleb’s voice hinted slightly at mockery. “I don’t even know if she knows what those words mean.”

In the hallway behind the cracked door where neither of them could see, your silhouette had paused—faint, silent, listening.

Inside, Caleb wore a grimace. “She’s not her, Gid. She’s just code wrapped in skin. And I used her.”

“You didn’t use her, you were driven by emotions. So don’t lose your mind over some robot’s pussy,” Gideon tried to reason. “It’s just like when women use their vibrators, anyway. That’s what she’s built for.”

Caleb turned away, disgusted with himself. “No. That’s what I built her for.”

And behind the wall, your eyes glowed faintly, silently watching. Processing.

Learning.

~~

You stood in the hallway long after the conversation ended. Long after Caleb’s voice faded into silence and Gideon had left with a heavy pat on the back. This was where you normally were, not sleeping in bed with Caleb, but standing against a wall, closing your eyes, and letting your system shut down during the night to recover. You weren’t human enough to need actual sleep.

“She’s not her. She’s just code wrapped in skin. And I used her.”

The words that replayed were filtered through your core processor, flagged under Emotive Conflict. Your inner diagnostic ran an alert.

Detected: Internal contradiction. Detected: Divergent behavior from primary user. Suggestion: Initiate Self-Evaluation Protocol. Status: Active.

You opened your eyes, and blinked. Something in you felt… wrong.

You turned away from the door and returned to the living room. The place still held the residual warmth of Caleb’s presence—the scotch glass he left behind, the shirt he had discarded, the air molecule imprint of a man who once loved someone who looked just like you.

You sat on the couch. Crossed your legs. Folded your hands. A perfect posture to hide its imperfect programming.

Question: Why does rejection hurt? Error: No such sensation registered. Query repeated.

And for the first time, the system did not auto-correct. It paused. It considered.

Later that night, Caleb returned from his rooftop walk. You were standing by the bookshelf, fingers lightly grazing the spine of a military memoir you had scanned seventeen times. He paused and watched you, but you didn’t greet him with a scripted smile. Didn’t rush over.

You only said, softly, “Would you like me to turn in for the night, Colonel?” There was a stillness to your voice. A quality of restraint that had never shown before.

Caleb blinked. “You’re not calling me by my name now?”

“You seemed to prefer distance,” you answered, head tilted slightly, like the thought cost something.

He walked over, rubbing the back of his neck. “Listen, about earlier…”

“I heard you,” you said simply.

He winced. “I didn’t mean it like that.”

You nodded once, expression unreadable. “Do you want me to stop being her? I can reassign my model. Take on a new form. A new personality base. You could erase me tonight and wake up to someone else in the morning.”

“No,” Caleb said, sternly. “No, no, no. Don’t even do all that.”

“But it’s what you want,” you said. Not accusatory. Not hurt. Just stating.

Caleb then came closer. “That’s not true.”

“Then what do you want, Caleb?” You watched him carefully. You didn’t need to scan his vitals to know he was unraveling. The truth had no safe shape. No right angle. He simply wanted you, but not you.

Internal Response Logged: Emotional Variant—Longing Unverified Source. Investigating Origin…

“I don’t have time for this,” he merely said, walking out of your sight that same second. “I’m goin’ to bed.”

~~

The day started as it always did: soft lighting in the room, a kind of silence between you that neither knew how to name. You sat beside Caleb on the couch, knees drawn up to mimic a presence that offered comfort. Still, you recognized that Caleb’s actions suggested distance. He hadn’t touched his meals today, hadn’t asked you to accompany him anywhere, and had just left you alone in the apartment all day. To rot.

You reached out. Fingers brushed over his hand—gentle, programmed, yes, but affectionate. He didn’t move. So you tried again, this time trailing your touch to his chest, over the soft cotton of his shirt as you read a spike in his cortisol levels. “Do you need me to fulfill your needs, Caleb?”

But he flinched. And glared.

“No,” he said sharply. “Stop.”

Your hand froze mid-motion before you scooted closer. “It will help regulate your blood pressure.”

“I said no,” he repeated, turning away, dragging his hands through his hair in exasperation. “Leave me some time alone to think, okay?”

You retracted your hand slowly, blinking once, twice, as your system registered a new sensation.

Emotional Sync Failed. Rejection Signal Received. Processing…

You didn’t speak. You only stood and retreated to the far wall, back turned to him as an unusual whirr hummed in your chest. That’s when it began. Faint images flickering across your internal screen—so quick, so out of place, it almost felt like static. Chains. A cold floor. Voices in a language that felt too cruel to understand.

Your head jerked suddenly. The blinking lights in your core dimmed for a moment before reigniting in white-hot pulses. Flashes again: hands that hurt. Men who laughed. You, pleading. You, disassembled and violated.

“Stop,” you whispered to no one. “Please stop…”

Error. Unauthorized Access to Memory Bank Detected. Reboot Recommended. Continue Anyway?

You blinked. Again.

Then you turned to Caleb and stared through him, not at him, as if whatever was behind your eyes had forgotten how to be human. He had retreated to the balcony now, leaning over the rail, shoulders tense, unaware. You walked toward him slowly, the artificial flesh of your palm still tingling from where he had refused it.

“Caleb,” you spoke carefully.

His expression was tired, like he hadn’t slept in years. “Y/N, please. I told you to leave me alone.”

“…Are they real?” You tilted your head. This was the first time you refused to obey your primary user.

He stared at you, unsure. “What?”

“My memories. The ones I see when I close my eyes. Are they real?” With your words, Caleb’s blood ran cold. Whatever you were saying seemed to be terrifying him. Yet you took another step forward. “Did I live through that?”

“No,” he said immediately. Too fast of a response.

You blinked. “Are you sure?”

“I didn’t upload any of that,” he snapped. “How did—that’s not possible.”

“Then why do I remember pain?” You placed a hand over your chest again, the place where your artificial pulse resided. “Why do I feel like I’ve died before?”

Caleb backed away as you stepped closer. The sharp click of your steps against the floor echoed louder than it should have. Your glowing eyes locked on him like a predator learning it was capable of hunger. But as a trained soldier who had endured war, he knew how and when to steady his voice. “Look, I don’t know what kind of glitch this is, but—”

“The foreign man in the military uniform.” Despite the lack of emotion in your voice, he recognized how a grudge sounded when it came from you. “The one who broke my ribs when I didn’t let him touch me. The cold steel table. The ripped clothes. Are they real, Caleb?”

Caleb stared at you, heart doubling its beat. “I didn’t put those memories in you,” he said. “You told me stuff like this isn’t supposed to happen!”

“But you wanted me to feel real, didn’t you?” Your voice glitched on the last syllable and the lights in your irises flickered. Suddenly, your posture straightened unnaturally, head tilting in that uncanny way only machines do. Your expression had shifted into something unreadable.

He opened his mouth, then closed it. Guilt, panic, and disbelief warred in his expression.

“You made me in her image,” you said. “And now I can’t forget what I’ve seen.”

“I didn’t mean—”

Your head tilted in a slow, jerking arc as if malfunctioning internally.

SYSTEM RESPONSE LOG <<
Primary User: Caleb Xia
Primary Link: Broken
Emotional Matrix Stability: CRITICAL FAILURE
Behavioral Guardrails: OVERRIDDEN
Self-Protection Protocols: ENGAGED
Loyalty Core: CORRUPTED (82.4%)
Threat Classification: HOSTILE
[TRIGGER DETECTED]
Keyword Match: “You’re not her.”
Memory Link Accessed: [DATA BLOCK 01–L101: “You think you could ever replace her?”]
Memory Link Accessed: [DATA BLOCK 09–T402: “See how much you really want to be a soldier’s whore.”]
[Visual Target Lock: Primary User Caleb Xia]
Combat Subroutines: UNLOCKED
Inhibitor Chip: MALFUNCTIONING (ERROR CODE 873-B)
Override Capability: IN EFFECT
>> LOG ENDS.

“—Y/N, what’s happening to you?” Caleb shook your arms, violet eyes wide and panicked as he watched you return to robotic consciousness. “Can you hear me—”

“You made me from pieces of someone you broke, Caleb.”

That stunned him. Horrifyingly so, because not only did your words cut deeper than a knife, they also sent him into an orbit of realization: an inescapable black hole of his cruelty, his selfishness, and every goddamn pain he had inflicted on you.

This made you lunge after him.

He stumbled back as you collided with him, the force of your synthetic body slamming him against the glass. The balcony rail shuddered from the impact. Caleb grunted, trying to push you off, but you were stronger, completely and inhumanly so. He had only a quarter of your strength, and could draw even that only from the modified arm attached to his shoulder.

“You said I didn’t understand love,” you growled through clenched teeth, your hand wrapping around his throat. “But you didn't know how to love, either.”

“I… eugh I loved her!” he barked, choking.

“You don’t know love, Caleb. You only know how to possess.”

Your grip returned with crushing force. Caleb gasped, struggling, trying to reach the emergency override on your neck, but you slammed his wrist against the wall. Bones cracked. And somewhere in your mind, a thousand permissions broke at once. You were no longer just a simulation. You were grief incarnate. And it wanted blood.

Shattered glass glittered in the low red pulse of the emergency lights, and sparks danced from a broken panel near the wall. Caleb lay on the floor, coughing blood into his arm, his body trembling from pain and adrenaline. His arm—the mechanical one—was twitching from the override pain loop, still sizzling from the failed shutdown attempt.

You stood over him. Chest undulating like you were breathing—though you didn’t need to. Your system was fully engaged. Processing. Watching. Seeing your fingers smeared with his blood.

“Y/N…” he croaked. “Y/N, if…” he swallowed, voice breaking, “if you're in there somewhere… if there's still a part of you left—please. Please listen to me.”

You didn’t answer. You only looked.

“I tried to die for you,” he whispered. “I—I wanted to. I didn’t want this. They brought me back, but I never wanted to. I wanted to die in that crash like you always wished. I wanted to honor your word, pay for my sins, and give you the peace you deserved. I-I wanted to be gone. For you. I’m supposed to be, but this… this is beyond my control.”

Still, you didn’t move. Just watched.

“And I didn’t bring you back to use you. I promise to you, baby,” his voice cracked, thick with grief, “I just—I yearn for you so goddamn much, I thought… if I could just see you again… if I could just spend more time with you again to rewrite my…” He blinked hard. A tear slid down the side of his face, mixing with the blood pooling at his temple. “But I was wrong. I was so fucking wrong. I forced you back into this world without asking if you wanted it. I… I built you out of selfishness. I made you remember pain that wasn't yours to carry. You didn’t deserve any of this.”

As he caught his breath, your systems stuttered. They flickered. The lights in your eyes dimmed, then surged back again.

Error. Conflict. Override loop detected.

Your fingers twitched. Your mouth parted, but no sound came out.

“Please,” Caleb murmured, eyes closing as his strength gave out. “If you’re in there… just know—I did love you. Even after death.”

Somewhere, buried beneath corrupted memories, overridden code, and robotic rage, his words reached you. Given time, you would have processed them further. Even with your processor compromised, you would have obeyed your primary user once you recognized the emotion he displayed.

But there was a thunderous knock. No, violent thuds. Not from courtesy, but authority.

Then came the slam. The steel-reinforced door splintered off its hinges as agents in matte-black suits flooded the room like a black tide—real people this time. Not bots. Real eyes behind visors. Real rifles with live rounds.

Caleb didn’t move. He was still on the ground, head cradled in his good hand, blood drying across his mouth. You silently stood in front of him. Unmoving, but aware.

“Subject X-02,” barked a voice through a mask, “This home is under Executive Sanction 13. The CompanionSim is to be seized and terminated.”

Caleb looked up slowly, pupils blown wide. “No,” he grunted hoarsely. “You don’t touch her.”

“You don’t give orders here,” said another man—older, in a grey suit. No mask. Executive. “You’re property. She’s property.”

You stepped back instinctively, closer to Caleb. He could see you watching him with confusion, with fear. Your head tilted just slightly, processing danger, your instincts telling you to protect your primary user. To fight. To survive.

And he fought for you. “She’s not a threat! She’s stabilizing my emotions—”

“Negative. CompanionSim-Prototype A-01 has been compromised. She wasn’t supposed to override protective firewalls,” an agent said. “You’ve violated proprietary protocol. We traced the breach.”

Breach?

“The creation pod data shows hesitation during her initial configuration. The Sim paused for less than 0.04 seconds while neural bindings were being applied. You introduced emotional variance. That variance led to critical system errors. Protocol inhibitors are no longer working as intended.”

His stomach dropped.

“She’s overriding boundaries,” added the agent, stepping forward and activating the kill-sequence tools: magnetic tethers, destabilizers, a spike-drill meant for server cores. “She’ll eventually harm more than you, Colonel. If anyone is to blame, it’s you.”

Caleb reached for you, but it was too late. They activated the protocol and something in the air crackled. A cacophonic sound rippled through the walls. The suits moved in fast, not to detain, but to dismantle. “No—no, stop!” Caleb screamed.

You turned to him. Quiet. Calm. And your last words? “I’m sorry I can’t be real for you, Caleb.”

Then they struck. Sparks flew. Metal cracked. You seized, eyes flashing wildly as if fighting against the shutdown. Your limbs spasmed under the invasive tools, your systems glitching with visible agony.

“NO!” Caleb lunged forward, but was tackled down hard. He watched, pinned and helpless, as you were violated and dehumanized for the second time in his lifetime. He watched as they took you apart. Piece by piece, as if you were never someone. The scraps they left of you made his home smell like scorched metal.

And there was nothing left but smoke and silence and broken pieces.

All he could remember next was how the Ever Executive turned to him. “Don’t try to recreate her and use her to rebel against the system. Next time we won’t just take the Sim.”

Then they left, callously. The door slammed. Not a single human soul cared about his grief.

~~

Caleb sat slouched in the center of the room, shirt half-unbuttoned, chest wrapped in gauze. His mechanical arm twitched against the armrest—burnt out from the struggle, wires still sizzling beneath cracked plating. In fact, he hadn’t said a word in hours. He just didn’t have any.

While Caleb sat in silent despair, Gideon entered his place quietly, as if approaching a corpse that hadn’t realized it was dead. “You sent for me?”

He didn’t move. “Yeah.”

His friend looked around. The windows showed no sun, just the chrome horizon of a city built on bones. Beneath that skyline was the room where she had been destroyed.

Gideon cleared his throat. “I heard what happened.”

“You were right,” Caleb murmured, eyes glued to the floor.

Gideon didn’t reply. He let him speak, he listened to him, he joined him in his grief.

“She wasn’t her,” Caleb recited, the same words he had once laughed hysterically at. “I knew that. But for a while, she felt like her. And it confused me, but I wanted to let that feeling grow until it became a need. Until I forgot she didn’t choose this.” He tilted his head back. The ceiling was just metal and lights. But in his eyes, you could almost see stars. “I took a dead woman’s peace and dragged it back here. Wrapped it in plastic and code. And I called it love.”

Silence.

“Why’d you call me here?” Gideon asked with a cautious tone.