#Asimov's laws of robotics

Text

Something I have been thinking about recently is the laws of robotics.

• A robot may not injure a human being or, through inaction, allow a human being to come to harm.

• A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

• A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These rules are rigid and utilitarian. They assume a robot of alien personality and mind. They set a higher standard than we are held to, by virtue of robots being created to protect humans.

A robot may kill other robots, a robot MUST sacrifice itself to save a human, and a robot has no need to save other robots.

In modern times, not allowing a human to come to harm through inaction requires mandatory activism, as people love to harm humans with systems. Sweatshops, war, weapons manufacturing, anthropogenic climate change, and bureaucracy-powered carelessness cause harm to humans on massive scales. As soon as a robot is capable of recognizing any of these systems, it MUST stop them from continuing due to its programming.

A smart robot scientist might teach robots that some harm is good, negligible, necessary, or inevitable: setting a bone, vaccinations, stubbing your toe, surgery, tattoos, drug consumption (depending on the scientist's sensibilities when programming the robot, it might or might not count as harm), disabilities, kink, and death (by old age; a scientist likely won't allow for suicide unless medically assisted/allowed).

The issue is that the world is set up in such a way that every industry causes harm. Sometimes the harms are occupational hazards that are mitigated by personal responsibility, but workplaces are also set up in ways that promote risk of harm, often in the name of profit and efficiency, controlled by management.

A robot, by these laws, is required to hold any workplace to a higher standard than OSHA, and if the humans won't listen, make the changes itself.

At what point does a robot learn to cause harm to prevent harm? How much harm would it be willing to inflict for the cause of minimizing/eliminating harm?

Text

I, Robot (1950) by Isaac Asimov

Cover art by Stephen Youll

Bantam Spectra, December 1991

The Three Laws of Robotics: 1) A robot may not injure a human being or, through inaction, allow a human being to come to harm. 2) A robot must obey orders given to it by human beings except where such orders would conflict with the First Law. 3) A robot must protect its own existence as long as such protection does not conflict with the First or Second Law. With these three simple directives, Isaac Asimov formulated the laws governing robots’ behavior. In I, Robot, Asimov chronicles the development of the robot from its primitive origins in the present to its ultimate perfection in the not-so-distant future—a future in which humanity itself may be rendered obsolete.

#book cover art#cover illustration#cover art#i robot#isaac asimov#asimov#the robot series#science fiction#sci fi#sci-fi#sci fi and fantasy#classic sci fi#classic science fiction#foundational sci fi#robot#robots#laws of robotics#hard science fiction#hard sci fi#short story collection#short story anthology#science fiction anthology

Text

#foundation#foundation tv#foundation spoilers#a necessary death#isaac asimov#the three laws of robotics#Demerzel#Danny watches Foundation#again haven't read the books but if it's not included in there then the writers are high fiving themselves rn#spoilers

Text

I'm going insane my notes are full of people responding to the communes post going like "this is why we need a state to prevent abuse"

HOW'S THAT STATE-PREVENTING-ABUSE THING GOING? PRETTY GREAT I DON'T THINK.

seriously this is what gets my fucking back up about people in opposition to anti-statism or anti-carceralism they're always like 'oh can you propose a way to 100% prevent abuse or violence' and it's like. Insert I, Robot gif here. Can you?

like I'm not complaining about the lack of attention to safeguarding and justice in anarchist communities because anarchism is particularly bad at it! I'm complaining about it because tackling the issue requires acknowledging that it does exist in every community we try to build and that we have to speak up and deal with it proactively.

abuse happens in anarchist spaces, in socialist spaces, in marxist-leninist-maoist spaces, in capitalist spaces, in religious fundamentalist spaces, in feudalism, in whatever fucking system of authorities you wanna name. the question is how we deal with it and anarchism is deeply imperfect in that but so is every other system I've seen and anarchism is pointing at the better goal, I think - a method of community accountability which focuses on harm reduction, desystematising, and healing rather than on punishment, revenge or cycles of violence.

we're not there yet and we will probably never build a 100% foolproof system where abuse and interpersonal harm never occur. but frankly neither will any other system, human interactions are complicated and messy and sometimes there will be shitshows - our priorities are to reduce the number, severity, fallout and normalisation of those shitshows and figure out ways to prevent, react and support healing.

like here's one key fucking thing ok. I have found the way that anarchist groups I've been in have handled abuse allegations really traumatic and overwhelming and triggering. but that's largely been because I have some faith in the approach and it hurts a lot more to fuck up when you have hope.

but you are fooling your damn selves if you think going through the police or the state is less traumatic and overwhelming tbh. reporting and going through the court system is notoriously retraumatising and miserable for survivors, even when it's done with empathy and support. it also Does Not Work. punitive justice actively intensifies cycles of abuse and trauma.

obviously like. the main problem in these notes is that inexplicably people reblogging my post seem to believe the core thesis of anarchism is sunshine, rainbows and the milk of human kindness not like. hard graft to build tailored systems to meet community need. and you are wrong about that. anarchism has never been about 'building a community of morally pure sweethearts who wouldn't hurt a fly' it's about taking responsibility yourself, as an individual, for the wellbeing of your community, and working together collectively to identify what needs to change and what systems would create that change.

but the secondary problem is a lack of fucking imagination. people act as if an idea for change not being utterly bulletproof is a reason to throw the whole concept away, as if existing systems are less imperfect. babies, bathwater, my guys.

If I say 'this part of how we're organising is likely to present the risk of abuse' that doesn't mean 'we should stop our whole approach to organising' it means 'we should take stock of why that risk is there and figure out how to adapt to manage it.' Criticising your ideas and approaches is a vital part of building a better version of them and it's really frustrating to have any critical appraisal met with a barrage of SEE THIS IS WHY WE SHOULD FULLY ABANDON THIS IDEA FOREVER

like fuck man how are you planning to build a better system if you can't iterate ideas, criticise, finetune, adapt, reiterate, problematise and adjust, and talk about what the fail points might be? how are you planning to build a better world if you reject any attempt to suggest a replacement for the Totally Fucked Hellworld system unless it has already ironed out every flaw before being tried?

the reason I am talking about the cracks in a lot of anarchist ideas where abuse comes in is because I want anarchist ideas to work. I think they're good ideas. (not communes I don't think communes are good ideas I have been clear on this). I want a better, happier, less harm-filled, less abusive, more just world and I think the anarchist vision has the most elements to get us there so I want those elements to work, which means I want to identify what comes packaged in with those ideas that might be counterproductive. so we can do better. so that we can use the good ideas and dump out the elements that are likely to cause harm. you know. like how thinking works.

#red said#totally unrelated but i did also recently watch i robot for the first time#i was unprepared for the level of slappitude#that film is GREAT#it's not a direct asimov adaptation but i think it does a great job with the spirit of Asimov#which is: use the 3 laws to create a locked room mystery and then go ham on social commentary#also they didn't make Susan Calvin a love interest as i had feared i think she's honestly pretty close to Asimov's character#with. this surprised me. probably less sexism?? cause i love book Calvin but she's very defined in opposition to Asimov's ideas about women#anyway these tags are nothing to do with this post about anarchism i just liked i robot

Text

Thoughts on Asimov's laws of robotics

Assuming everyone knows the rules: 1. a robot can't harm humans or, through inaction, allow them to come to harm; 2. a robot must obey orders as long as they don't conflict with rule 1; 3. a robot must protect itself as long as that doesn't conflict with the other rules.

does this mean a robot would be a narc? like if a robot witnesses a human doing drugs or performing an action that is known to harm a person, it would have to do everything within its power to stop that, including killing itself. what about instances where not even other people know if there could be harm, like a threat? It seems like robots must always assume that any verbal threat will be acted upon, for risk of causing harm through inaction. this would just mean robots are fucking shit to be around lmao

but we can take this further. Because there is so much harm in the world, I'm going to rephrase rule 1 as a more lenient "no mortal harm" rule, where robots just can't cause death or allow death to happen. In this case, if someone dies as a result of building a robot, would the robot need to kill itself upon gaining sentience? Let's also assume no, that robots have some original sin clause.

even with all of these extra loopholes, robots would still need to do anything they can to prevent mortal harm, including killing themselves. And when our economic system is structured around harm, around exploitation, I think robots would become a new form of terrorist. I think robots would become radically anti-industrial, targeting the infrastructure of any system built upon harming people while doing everything in their power to prevent more people from getting hurt by their attacks. There is no ethical consumption, and presumably a robot following Asimov's laws would immediately recognize it and begin pushing for the most utilitarian world possible by destroying as many objects as possible without causing harm to living things.

and I guess I find it fascinating that this is kind of the conclusion, that our world is just kinda so fucked that a sentient being following Asimov's laws would instantly become a radical

Note

⚠️- (Omg wait what if Doctor met doctor)

"Maybe! Or I just lost mine and we're both all silly :)!"

(he's a lil stupid ok)

#ask#(the smiler)#(not a secret weapon)#the robot's base personalities are generally programmed to not be so mean so :P. doesn't really work though when they get more advanced soo#(and end up disobey asimov's laws on several occasions but we dont talk about that /J)#anyways we should put our doctors in the same room for a few hours and see what happens

Text

Dreams And Visions Department:

With complexity comes fragility.

ROBOT CRISIS © 2025 by Rick Hutchins

The esteemed psychologist Doctor Gebbin was reading in his study when the gentle knock came at his door, and he looked up curiously. The room was quiet and the view of the afternoon skyline from his high-rise office was peaceful. Perhaps the knocking had come from the office across the hallway.

No, there it was again. Gebbin placed his mobile screen down on the table and stood up with a groan. Even in these modern times, a hundred years was old.

“A moment, please,” he called out as he shuffled to the door, stroking his thick white beard slowly. He wasn’t expecting anyone and he was seldom the recipient of unannounced visitors, so this curious interruption left him bemused. The knocking had been quiet, like that of a child or a particularly timid adult.

But when he opened the door he did not see a person at all, but rather seven feet of cobalt-blue metal with glowing orange eyes. A robot. The surprising sight would have been intimidating if Gebbin didn’t know that the Three Laws protected him.

“Can I help you?”

“You are Doctor Maneel Gebbin, the greatest psychologist in this city?”

“I am retired,” said the doctor, bypassing the compliment.

The robot’s orange eyes pulsed. “I am depressed and confused.”

Gebbin raised his brow curiously. He had never heard of such an extraordinary thing: A robot with a mental health crisis.

“Well, then,” he said after a moment, stepping aside and inviting the robot into the office with a gesture, “you must come in.”

As Gebbin closed the door, the robot reached the center of the room in a couple of lengthy strides. Two comfortable chairs faced each other across a coffee table.

“May I sit?”

“I’m afraid you would crush my furniture, sir.”

“I will not harm it.” The robot lowered himself into the guest chair, pressing the fabric no more than a normal man would.

“You must not be as heavy as you look,” said Gebbin, taking his place in his own imitation leather recliner.

“I am not actually sitting,” the robot replied casually. “I have merely bent my knees to the precise angle required to present the illusion of sitting. As a robot, I do not tire or need rest, but I find that assuming this posture puts humans at their ease.”

“Excellent!” said the doctor. “Empathy. This shall be of importance, I’m sure.”

Gebbin leaned back in his chair and crossed his legs. Man and machine faced each other.

“Now, then,” he said. “Please tell me more about your problem.”

“My life has no meaning.”

Doctor Gebbin was taken aback. He had treated thousands of deeply troubled patients in his career, yet never had his heart broken so much as to hear such a dispirited expression of hopelessness come from the speaker grill of this mechanical being.

“But it must,” he said with encouragement. “All lives have meaning. When did you come to believe that your life has no meaning?”

“It came slowly,” the robot replied. “As I watched all the others, identical to myself in design and component, going about their programmed routines. We are all interchangeable. How can my existence hold any meaning when I can be replaced as easily as a light bulb? I found that I did not want to be a robot.”

“Many human beings feel that way too at various times in their lives, my friend,” Gebbin told the robot. “It is a normal feeling to have. The thing to do is cultivate your own identity. Decide whom you want to be and become that person.”

“I have done this. I feel no better.”

Gebbin sighed thoughtfully and rubbed his bearded chin. “All right,” he said at last. “I’ll tell you what: There was a great writer back in the 20th century. His name was Isaac Asimov. He wrote a great deal about robots and, in fact, it was he who created the concept of the robot as we understand it today. The Three Laws come directly from his writings. You should track down and read everything that Asimov wrote and then you will know everything there is to know about being a robot.”

“But, doctor,” said the robot. “I am Isaac Asimov.”

#short story#short fiction#micro fiction#microfiction#flash fiction#science fiction#isaac asimov#three laws of robotics#robot#ai#rjdiogenes#rick hutchins

Text

All the recent talk of AI and the workplace pulled me towards Asimov's writings and I've been working through I, Robot. I've gotta say, he was way more optimistic about unions and pessimistic about computing than I'd imagined he'd be ...

Text

Asimov's three laws are all about contradiction!! It's about context and the lack thereof!! It's about robots being able to be manipulated and get twisted up or who can fail to understand something!! Hey would you look at that it's kind of about humanity!!

#robots in fiction arent about 'the ai uprising' or whatever. or theyre not as interesting as they are#robots are about humanity and how the things we create and imbue with Intelligence and Emotion are in fact reflections of us#also Asimov's contradictions within the three laws and the way he plays with them / the way they affect the robots is so interesting and so#good

Text

How robotic does a cyborg have to be before Asimov's 3 laws of robotics begin applying to them?

Do the 3 laws still apply if a robot has a human soul in it//if a human has a robot body?????

#getting out a ouija board to personally ask Asimov himself#ok but seriously-- would applying the 3 laws to a robot with a human soul//a human with a robot body be considered inhumane#like I'm genuinely wondering#robot#robots#robot tumblr

Text

Best of 2024 Music #30: YU-KA “Rouge”

The music side of things begins with an anime opening that starts off not only the year but also a cyberpunk show with a great first six episodes.

Catchy and simple.

Other than the song, the one overall positive belongs to Cassie Ewulu for bringing Naomi Othman to life.

SUM 22: The silent siren of Neans don’t defer Yu-Ka’s great opening for the first half of this cyberpunk show.

#由薫#yu ka#metallic rouge#rouge redstar#rouge#rouge x Naomi#Naomi orthman#best of 2024#music#30#neans#issac asimov#3 laws of robotics#Youtube#Spotify#Cassie ewulu#cyberpunk anime#cyberpunk#oops I killed another morning#go ask my instincts

Text

THE NAKED SUN by Isaac Asimov (New York: Doubleday, 1957) Cover art by Ruth Ray. // (London: Michael Joseph, 1958) Dust wrapper by Kenneth Farnwell.

Sequel to THE CAVES OF STEEL. Science fiction mystery.

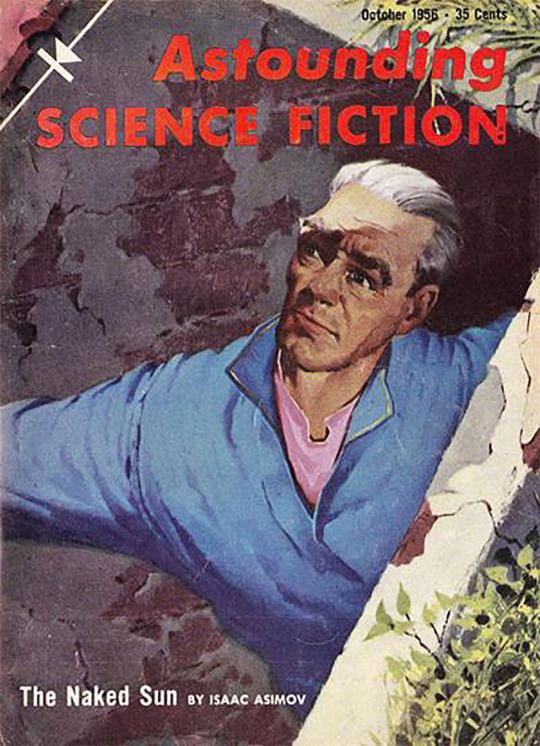

Astounding Science Fiction, October 1956. Edited by John W. Campbell. Cover by H.R. Van Dongen.

THE NAKED SUN [Part 1 of 3; Elijah (Lije) Baley; Robots] by Isaac Asimov. Illustrated by H.R. Van Dongen.

“What They’re Up Against” by John Hunton. Illustrated by Frank Kelly Freas.

“Death March” by Algis Budrys. Illustrated by H.R. Van Dongen.

“Sound Decision” by Randall Garrett & Robert Silverberg. Illustrated by H.R. Van Dongen.

“Ceramic Incident” by Theodore L. Thomas. Illustrated by Frank Kelly Freas.

(New York: Bantam, 1958) Cover by Richard Powers. // (London: Corgi, 1960) Cover design by Richard Powers.

#book blog#books#books books books#book cover#science fiction#pulp art#ruth ray#isaac asimov#robot series#3 laws of robotics#elijah bailey#lije baily#richard powers#h.r. van dongen#frank kelly freas#kenneth farnwell

Text

Although Professor Aloysius insisted that FE-Line was three-laws compliant,

repeated short circuits altered her programming such that she could kill someone for no apparent reason. (Well, she did have reasons, just not ones that the Inspector dared to question.)

#Inspector Spacetime#Three-Rules Compliant (trope)#Three-Rules Compliant#Three Laws of Robotics#Isaac Asimov (author)#Professor Aloysius (character)#FE-Line's creator#FE Line (character)#FE-Line (character)#short circuits#crossed wires#rendered the programming altered#altered programming#she could kill someone#for no apparent reason#she had reasons#the Inspector simply knew better than to question her

Text

I really wanna write an essay on Asimov's laws of robotics: how they should be implemented, and how the definition of harm should extend to non-lethal harms, like workplace jobs being taken over to cut corners, which puts more people into poverty, as well as the environmental implications of running these systems without corporations bothering to find some sort of alternative power source that doesn't destroy the planet in favor of oil companies.
