#Big Tech Regulation


Text

Australia’s Social Media Ban for Under-16s

Parents, Take Responsibility

In a move sparking global debate, Australia has approved a social media ban for users under the age of 16. The legislation requires platforms to take reasonable steps to keep under-16s off their services, and it makes no exemption for parental consent. On paper, this might sound like a commendable step toward protecting young minds from the dangers of the digital world. But let's face it: this is yet another instance of government overreach into parental responsibilities.

Let me be blunt: it’s not the government’s job, nor that of internet regulators, to parent your children. That’s your job. You chose to have kids; you should be responsible for their upbringing, online or offline.

The Crux of the Problem

Social media can be a dangerous playground, filled with predators, cyberbullying, and inappropriate content. But banning under-16s entirely or relying on the government to enforce parenting standards misses the point. The issue isn’t that kids have access to social media; it’s that too many parents fail to educate their children about responsible use.

When did we, as a society, decide it was acceptable to outsource parenting to regulators and algorithms? When did we start expecting governments to do the hard work of teaching our kids about values, boundaries, and discernment?

The Case Against Government Babysitting

Handing over this responsibility to the state or tech companies is lazy and shortsighted. Governments already have their hands full with pressing issues—do we really want them playing Big Brother over our children’s TikTok accounts? And tech companies, for all their promises of "safety measures," are fundamentally profit-driven entities. They’re not moral guardians; they’re businesses.

Beyond that, enforcement is a logistical nightmare. Age verification systems are easily bypassed, and tech-savvy kids will always find ways to skirt the rules. What we’re left with is a feel-good policy that’s more about political optics than practical outcomes.

Parents: Step Up

Here’s the hard truth: if you’re worried about your child’s social media use, it’s up to you to do something about it.

- Monitor their online activity. Install parental controls, but don’t stop there. Talk to your kids about what they’re doing online and why certain behaviors are harmful.

- Set boundaries. Teach them when it’s appropriate to be online and when to unplug. Encourage real-world interactions and hobbies.

- Lead by example. If you’re glued to your phone, don’t be surprised when your child follows suit. Show them what healthy tech use looks like.

This isn’t about being a helicopter parent; it’s about being present and engaged in your child’s life.

Freedom vs. Safety

The internet is a tool, not a boogeyman. It can be used for incredible learning opportunities, creative expression, and social connections. By outright banning under-16s from social media, we risk fostering a culture of fear rather than empowerment.

Instead of shielding kids from the world, let’s equip them with the skills to navigate it responsibly. That starts at home—not in Parliament or Silicon Valley.

Closing Thoughts

Australia’s move to ban under-16s from social media might come from a place of good intentions, but it sets a troubling precedent. It absolves parents of their responsibilities and gives governments more power over individual freedoms.

Parents, stop relying on governments to do your job. You brought your children into the world; you’re the ones responsible for raising them into well-rounded, discerning individuals. The internet isn’t going anywhere, and it’s up to you—not Canberra or tech executives—to teach your kids how to use it wisely.

Own your role. Don’t outsource it.

#Parenting#Social Media Ban#Australia Legislation#Government Overreach#Parental Responsibility#Internet Safety#Digital Parenting#Children's Online Safety#Social Media Age Limits#Online Freedom#Big Tech Regulation#Internet Policy#Youth and Technology#Cyberbullying#Age Verification#Digital Responsibility#Parenting in the Digital Age#Screen Time#Protecting Children Online#Personal Accountability#new blog#today on tumblr

0 notes

Text

How to design a tech regulation

TONIGHT (June 20) I'm live onstage in LOS ANGELES for a recording of the GO FACT YOURSELF podcast. TOMORROW (June 21) I'm doing an ONLINE READING for the LOCUS AWARDS at 16hPT. On SATURDAY (June 22) I'll be in OAKLAND, CA for a panel (13hPT) and a keynote (18hPT) at the LOCUS AWARDS.

It's not your imagination: tech really is underregulated. There are plenty of avoidable harms that tech visits upon the world, and while some of these harms are mere negligence, others are self-serving, creating shareholder value and widespread public destruction.

Making good tech policy is hard, but not because "tech moves too fast for regulation to keep up with," nor because "lawmakers are clueless about tech." There are plenty of fast-moving areas that lawmakers manage to stay abreast of (think of the rapid, global adoption of masking and social distancing rules in mid-2020). Likewise, we generally manage to make good policy in areas that require highly specific technical knowledge (that's why it's noteworthy and awful when, say, people sicken from badly treated tap water, even though water safety, toxicology and microbiology are highly technical areas outside the background of most elected officials).

That doesn't mean that technical rigor is irrelevant to making good policy. Well-run "expert agencies" include skilled practitioners on their payrolls – think here of large technical staff at the FTC, or the UK Competition and Markets Authority's best-in-the-world Digital Markets Unit:

https://pluralistic.net/2022/12/13/kitbashed/#app-store-tax

The job of government experts isn't just to research the correct answers. Even more important is experts' role in evaluating conflicting claims from interested parties. When administrative agencies make new rules, they have to collect public comments and counter-comments. The best agencies also hold hearings, and the very best go on "listening tours" where they invite the broad public to weigh in (the FTC has done an awful lot of these during Lina Khan's tenure, to its benefit, and it shows):

https://www.ftc.gov/news-events/events/2022/04/ftc-justice-department-listening-forum-firsthand-effects-mergers-acquisitions-health-care

But when an industry dwindles to a handful of companies, the resulting cartel finds it easy to converge on a single talking point and to maintain strict message discipline. This means that the evidentiary record is starved for disconfirming evidence that would give the agencies contrasting perspectives and context for making good policy.

Tech industry shills have a favorite tactic: whenever there's any proposal that would erode the industry's profits, self-serving experts shout that the rule is technically impossible and deride the proposer as "clueless."

This tactic works so well because the proposers sometimes are clueless. Take Europe's on-again/off-again "chat control" proposal to mandate spyware on every digital device that will screen everything you upload for child sex abuse material (CSAM, better known as "child pornography"). This proposal is profoundly dangerous, as it will weaken end-to-end encryption, the key to all secure and private digital communication:

https://www.theguardian.com/technology/article/2024/jun/18/encryption-is-deeply-threatening-to-power-meredith-whittaker-of-messaging-app-signal

It's also an impossible-to-administer mess that incorrectly assumes that killing working encryption in the two mobile app stores run by the mobile duopoly will actually prevent bad actors from accessing private tools:

https://memex.craphound.com/2018/09/04/oh-for-fucks-sake-not-this-fucking-bullshit-again-cryptography-edition/

When technologists correctly point out the lack of rigor and catastrophic spillover effects from this kind of crackpot proposal, lawmakers stick their fingers in their ears and shout "NERD HARDER!"

https://memex.craphound.com/2018/01/12/nerd-harder-fbi-director-reiterates-faith-based-belief-in-working-crypto-that-he-can-break/

But this is only half the story. The other half is what happens when tech industry shills want to kill good policy proposals: they say the exact same thing that advocates say about bad ones. When lawmakers demand that tech companies respect our privacy rights – for example, by splitting social media or search off from commercial surveillance – the same people shout that this, too, is technologically impossible.

That's a lie, though. Facebook started out as the anti-surveillance alternative to Myspace. We know it's possible to operate Facebook without surveillance, because Facebook used to operate without surveillance:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3247362

Likewise, Brin and Page's original PageRank paper, which described Google's architecture, insisted that search was incompatible with surveillance advertising, and Google established itself as a non-spying search tool:

http://infolab.stanford.edu/pub/papers/google.pdf

Even weirder is what happens when there's a proposal to limit a tech company's power to invoke the government's powers to shut down competitors. Take Ethan Zuckerman's lawsuit to strip Facebook of the legal power to sue people who automate their browsers to uncheck the millions of boxes that Facebook requires you to click by hand in order to unfollow everyone:

https://pluralistic.net/2024/05/02/kaiju-v-kaiju/#cda-230-c-2-b

Facebook's apologists have lost their minds over this, insisting that no one can possibly understand the potential harms of taking away Facebook's legal right to decide how your browser works. They take the position that only Facebook can understand when it's safe and proportional to use Facebook in ways the company didn't explicitly design for, and that they should be able to ask the government to fine or even imprison people who fail to defer to Facebook's decisions about how its users configure their computers.

This is an incredibly convenient position, since it arrogates to Facebook the right to order the rest of us to use our computers in the ways that are most beneficial to its shareholders. But Facebook's apologists insist that they are not motivated by parochial concerns over the value of their stock portfolios; rather, they have objective, technical concerns, that no one except them is qualified to understand or comment on.

There's a great name for this: "scalesplaining." As in "well, actually the platforms are doing an amazing job, but you can't possibly understand that because you don't work for them." It's weird enough when scalesplaining is used to condemn sensible regulation of the platforms; it's even weirder when it's weaponized to defend a system of regulatory protection for the platforms against would-be competitors.

Just as there are no atheists in foxholes, there are no libertarians in government-protected monopolies. Somehow, scalesplaining can be used to condemn governments as incapable of making any tech regulations and to insist that regulations that protect tech monopolies are just perfect and shouldn't ever be weakened. Truly, it's impossible to get someone to understand something when the value of their employee stock options depends on them not understanding it.

None of this is to say that every tech regulation is a good one. Governments often propose bad tech regulations (like chat control), or ones that are technologically impossible (like Article 17 of the EU's 2019 Directive on Copyright in the Digital Single Market, which requires tech companies to detect and block copyright infringements in their users' uploads).

But the fact that scalesplainers use the same argument to criticize both good and bad regulations makes the waters very muddy indeed. Policymakers are rightfully suspicious when they hear "that's not technically possible" because they hear that both for technically impossible proposals and for proposals that scalesplainers just don't like.

After decades of regulations aimed at making platforms behave better, we're finally moving into a new era, where we just make the platforms less important. That is, rather than simply ordering Facebook to block harassment and other bad conduct by its users, laws like the EU's Digital Markets Act will order Facebook and other VLOPs (Very Large Online Platforms, my favorite EU-ism ever) to operate gateways so that users can move to rival services and still communicate with the people who stay behind.

Think of this like number portability, but for digital platforms. Just as you can switch phone companies and keep your number and hear from all the people you spoke to on your old plan, the DMA will make it possible for you to change online services but still exchange messages and data with all the people you're already in touch with.

I love this idea, because it finally grapples with the question we should have been asking all along: why do people stay on platforms where they face harassment and bullying? The answer is simple: because the people – customers, family members, communities – we connect with on the platform are so important to us that we'll tolerate almost anything to avoid losing contact with them:

https://locusmag.com/2023/01/commentary-cory-doctorow-social-quitting/

Platforms deliberately rig the game so that we take each other hostage, locking each other into their badly moderated cesspits by using the love we have for one another as a weapon against us. Interoperability – making platforms connect to each other – shatters those locks and frees the hostages:

https://www.eff.org/deeplinks/2021/08/facebooks-secret-war-switching-costs

But there's another reason to love interoperability (making moderation less important) over rules that require platforms to stamp out bad behavior (making moderation better). Interop rules are much easier to administer than content moderation rules, and when it comes to regulation, administrability is everything.

The DMA isn't the EU's only new rule. They've also passed the Digital Services Act, which is a decidedly mixed bag. Among its provisions are a suite of rules requiring companies to monitor their users for harmful behavior and to intervene to block it. Whether or not you think platforms should do this, there's a much more important question: how can we enforce this rule?

Enforcing a rule requiring platforms to prevent harassment is very "fact intensive." First, we have to agree on a definition of "harassment." Then we have to figure out whether something one user did to another satisfies that definition. Finally, we have to determine whether the platform took reasonable steps to detect and prevent the harassment.

Each step of this is a huge lift, especially that last one, since to a first approximation, everyone who understands a given VLOP's server infrastructure is a partisan, scalesplaining engineer on the VLOP's payroll. By the time we find out whether the company broke the rule, years will have gone by, and millions more users will be in line to get justice for themselves.

So allowing users to leave is a much more practical step than making it so that they've got no reason to want to leave. Figuring out whether a platform will continue to forward your messages to and from the people you left there is a much simpler technical matter than agreeing on what harassment is, whether something is harassment by that definition, and whether the company was negligent in permitting harassment.

But as much as I like the DMA's interop rule, I think it is badly incomplete. Given that the tech industry is so concentrated, it's going to be very hard for us to define standard interop interfaces that don't end up advantaging the tech companies. Standards bodies are extremely easy for big industry players to capture:

https://pluralistic.net/2023/04/30/weak-institutions/

If tech giants refuse to offer access to their gateways to certain rivals because they seem "suspicious," it will be hard to tell whether the companies are just engaged in self-serving smears against a credible rival, or legitimately trying to protect their users from a predator trying to plug into their infrastructure. These fact-intensive questions are the enemy of speedy, responsive, effective policy administration.

But there's more than one way to attain interoperability. Interop doesn't have to come from mandates: interfaces designed and overseen by government agencies. There's a whole other form of interop that's far nimbler than mandates: adversarial interoperability:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

"Adversarial interoperability" is a catch-all term for all the guerrilla warfare tactics deployed in service to unilaterally changing a technology: reverse engineering, bots, scraping and so on. These tactics have a long and honorable history, but they have been slowly choked out of existence with a thicket of IP rights, like the IP rights that allow Facebook to shut down browser automation tools, which Ethan Zuckerman is suing to nullify:

https://locusmag.com/2020/09/cory-doctorow-ip/

Adversarial interop is very flexible. No matter what technological moves a company makes to interfere with interop, there's always a countermove the guerrilla fighter can make – tweak the scraper, decompile the new binary, change the bot's behavior. That's why tech companies use IP rights and courts, not firewall rules, to block adversarial interoperators.

At the same time, adversarial interop is unreliable. The solution that works today can break tomorrow if the company changes its back-end, and it will stay broken until the adversarial interoperator can respond.

But when companies are faced with the prospect of extended asymmetrical war against adversarial interop in the technological trenches, they often surrender. If companies can't sue adversarial interoperators out of existence, they often sue for peace instead. That's because high-tech guerrilla warfare presents unquantifiable risks and resource demands, and, as the scalesplainers never tire of telling us, this can create real operational problems for tech giants.

In other words, if Facebook can't shut down Ethan Zuckerman's browser automation tool in the courts, and if it's sincerely worried that a browser automation tool will uncheck its user interface buttons so quickly that it crashes the server, all it has to do is offer an official "unsubscribe all" button and no one will use Zuckerman's browser automation tool.

We don't have to choose between adversarial interop and interop mandates. The two are better together than they are apart. If companies building and operating DMA-compliant, mandatory gateways know that failing to make those gateways useful to rivals seeking to help users escape their authority will get them mired in endless hand-to-hand combat with trench-fighting adversarial interoperators, they'll have good reason to cooperate.

And if regulators charged with administering the DMA notice that companies are engaging in adversarial interop rather than using the official, reliable gateway they're overseeing, that's a good indicator that the official gateways aren't suitable.

It would be very on-brand for the EU to create the DMA and tell tech companies how they must operate, and for the USA to simply withdraw the state's protection from the Big Tech companies and let smaller companies try their luck at hacking new features into the big companies' servers without the government getting involved.

Indeed, we're seeing some of that today. Oregon just passed the first-ever Right to Repair law banning "parts pairing" – basically a way of using IP law to make it illegal to reverse-engineer a device so you can fix it:

https://www.opb.org/article/2024/03/28/oregon-governor-kotek-signs-strong-tech-right-to-repair-bill/

Taken together, the two approaches – mandates and reverse engineering – are stronger than either on their own. Mandates are sturdy and reliable, but slow-moving. Adversarial interop is flexible and nimble, but unreliable. Put 'em together and you get a two-part epoxy, strong and flexible.

Governments can regulate well, with well-funded expert agencies and smart, administrable remedies. It's for that reason that the administrative state is under such sustained attack from the GOP and right-wing Dems. The illegitimate Supreme Court is on the verge of gutting expert agencies' power:

https://www.hklaw.com/en/insights/publications/2024/05/us-supreme-court-may-soon-discard-or-modify-chevron-deference

It's never been more important to craft regulations that go beyond mere good intentions and take account of administrability. The easier we can make our rules to enforce, the less our beleaguered agencies will need to do to protect us from corporate predators.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/06/20/scalesplaining/#administratability

Image: Noah Wulf (modified) https://commons.m.wikimedia.org/wiki/File:Thunderbirds_at_Attention_Next_to_Thunderbird_1_-_Aviation_Nation_2019.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

#pluralistic#cda#ethan zuckerman#platforms#platform decay#enshittification#eu#dma#right to repair#transatlantic#administrability#regulation#big tech#scalesplaining#equilibria#interoperability#adversarial interoperability#comcom

99 notes


Text

lmao, regulations do wonders

#tech news#tech companies#europe#eu#european union#eu politics#european politics#politics#american politics#capitalism#free market#big government#regulation and deregulation of industry#regulations#us politics#usa politics

21 notes


Text

In a silicon valley, throw rocks. Welcome to my tech blog.

Antiterf antifascist (which apparently needs stating). This sideblog is open to minors.

Liberation does not come at the expense of autonomy.

* I'm taking a break from tumblr for a while. Feel free to leave me asks or messages for when I return.

Frequent tags:

#tech#tech regulation#technology#big tech#privacy#data harvesting#advertising#technological developments#spyware#artificial intelligence#machine learning#data collection company#data analytics#dataspeaks#data science#data#llm#technews

2 notes


Text

cannot explain to all of you why i'm so excited for threads to blow up in good ways and bad

#ITS USING THE FEDIVERSE#BIG COMPANY USING THE FEDIVERSE THATS SO INTERESTING#I LOVE EU REGULATING HOW THEY MAY BE COLLECTING DATA OFF USERS#AND I LOVE HOW BIG TECH MONEY ONTO THE FEDIVERSE MIGHT CHANGE THE LANDSCAPE FOR#ACTIVITYPUB FOREVER#AND ALSO#I WANT TO SEE META STRUGGLE WITH THE FEDIVERSE ISSUES :)#OGJDFGHDGKDLG#commentary

3 notes


Text

I'm not a fan of TikTok (largely due to the very fair concerns over privacy and stuff) but I always opposed the ban. This is probably one of the most heartwarming things I've seen in a bit.

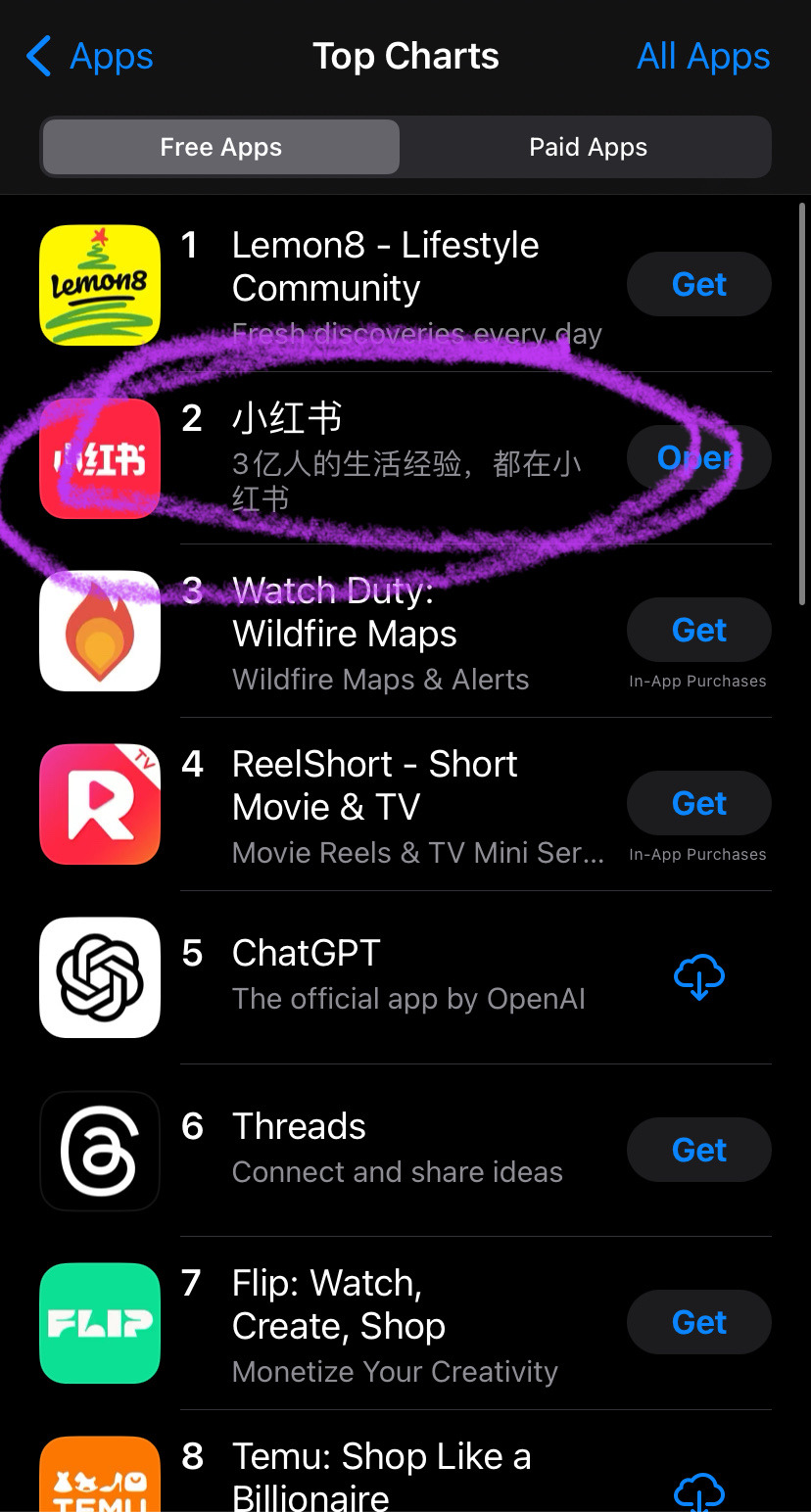

Nope now it’s at the point that i’m shocked that people off tt don’t know what’s going down. I have no reach but i’ll sum it up anyway.

SCOTUS is hearing arguments on the constitutionality of the ban, as tiktok and creators are arguing that it is a violation of our first amendment rights to free speech, freedom of the press and freedom to assemble.

SCOTUS: tiktok bad, big security concern because china bad!

Tiktok lawyers: if china is such a concern why are you singling us out? Why not SHEIN or temu which collect far more information and are less transparent with their users?

SCOTUS (out loud): well you see we don’t like how users are communicating with each other, it’s making them more anti-american and china could disseminate pro china propaganda (get it? They literally said they do not like how we Speak or how we Assemble. Independent journalists reach their audience on tt meaning they have Press they want to suppress)

Tiktok users: this is fucking bullshit i don’t want to lose this community what should we do? We don’t want to go to meta or x because they both lobbied congress to ban tiktok (free market capitalism amirite? Paying off your local congressmen to suppress the competition is totally what the free market is about) but nothing else is like TikTok

A few users: what about xiaohongshu? It’s the Chinese version of tiktok (not quite, douyin is the chinese tiktok but it’s primarily for younger users so xiaohongshu was chosen)

16 hours later:

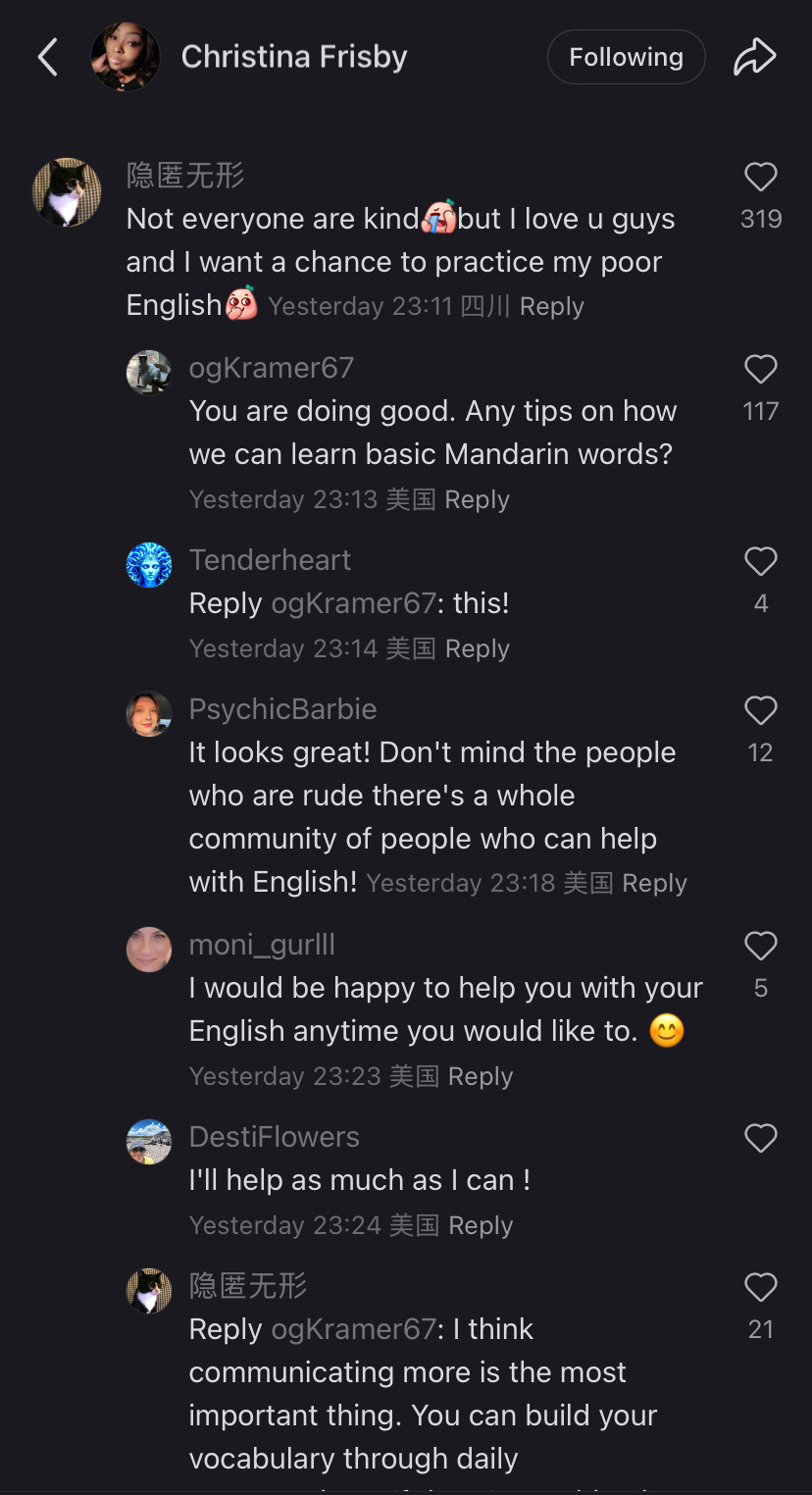

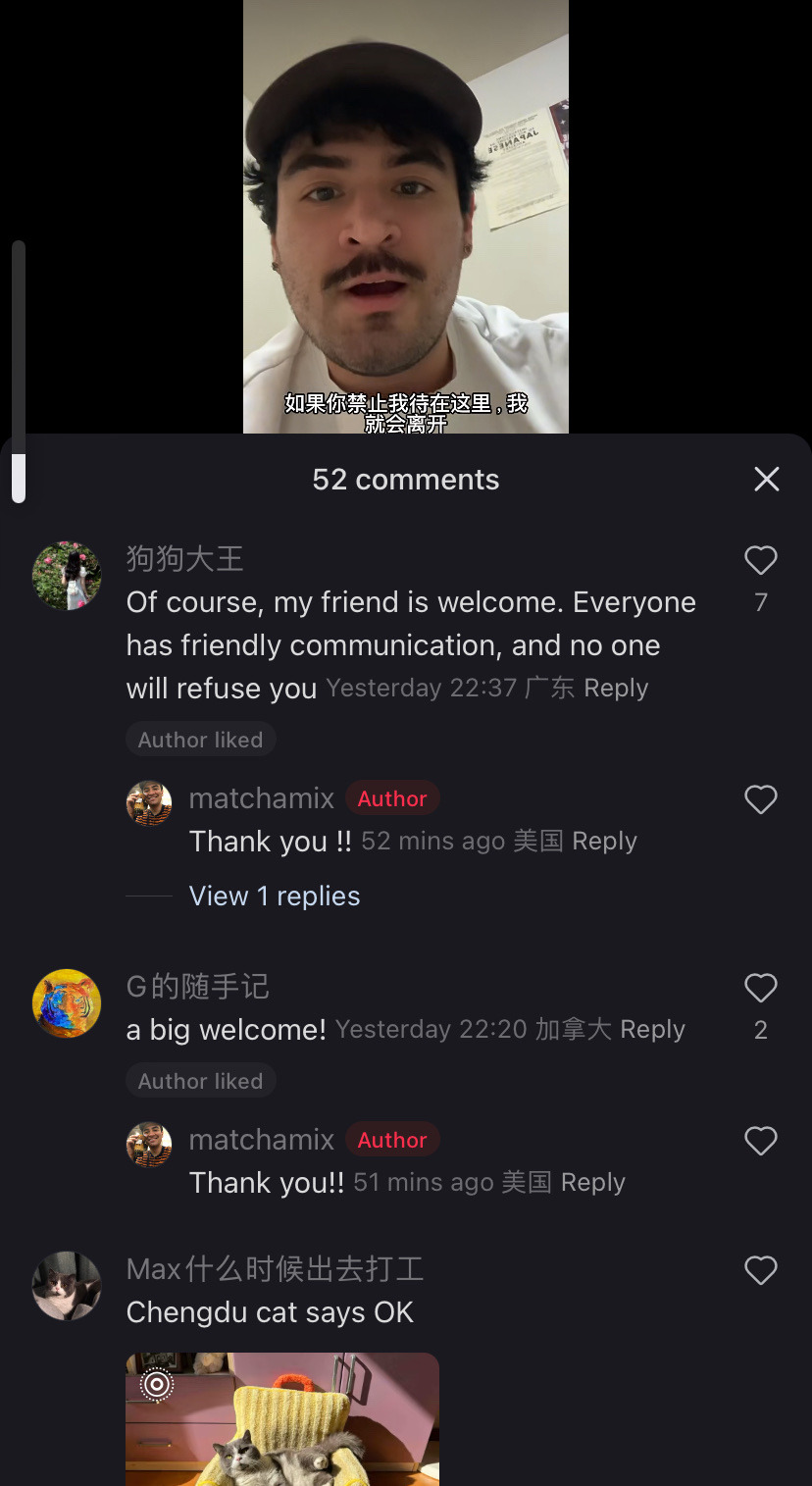

Tiktok as a community has chosen to collectively migrate TO a chinese owned app that is purely in Chinese out of utter spite and contempt for meta/x and the gov that is backing them.

My fyp is a mix of “i would rather mail memes to my friends than ever return to instagram reels” and “i will xerox my data to xi jinping myself i do not care i share my ss# with 5 other people anyway” and “im just getting ready for my day with my chinese made coffee maker and my Chinese made blowdryer and my chinese made clothing and listening to a podcast on my chinese made phone and get in my car running on chinese manufactured microchips but logging into a chinese social media? Too much for our gov!” etc.

So the government was scared that tiktok was creating a sense of class consciousness and tried to kill it but by doing so they sent us all to xiaohongshu. And now? Oh it’s adorable seeing this gov-manufactured divide be crossed in such a way.

This is adorable and so not what they were expecting. Im sure they were expecting a reluctant return to reels and shorts to fill the void but tiktokers said fuck that, we will forge connections across the world. Who you tell me is my enemy i will make my friend. That’s pretty damn cool.

#solidarity#fuck big tech#if you're so concerned about Tiktok maybe actually fucking regulate social media companies

42K notes


Text

I normally like the EFF and respect their dedication to citizens' digital rights, but their valorization of the petite bourgeoisie in the tech industry is always disappointing to see.

Carving out exceptions in regulations for the petite bourgeoisie of the tech industry is not only misguided but dangerous in the long term. Apple, Amazon, and Microsoft all started as tiny operations run out of garages and small offices. Now, they wield untold power not only over their respective industries but also over the world at large.

The goal of every petite bourgeoisie is to become part of the bourgeoisie proper, with all of the privileges and power that class wields. If you truly care about creating "the conditions for human rights and civil liberties to flourish, and for repression to fail," then the first step is to hold small businesses to the same legal and ethical standards as the Big Tech companies. Small businesses are not revolutionaries; they will always prioritize their class interests over the rights of their consumers and workers.

#eff#electronic frontier foundation#petite bourgeoisie#law#regulations#big tech#capitalism#digital rights

1 note


Text

🧠 Mind Fuel: The Attention Economy: How Big Tech Hijacked Your Mind and What You Can Do About It

In an era where your time online translates directly into corporate profit, your attention has become the most valuable commodity on Earth. From TikTok scrolls to YouTube rabbit holes, Silicon Valley has mastered the art of capturing and monetizing your focus, turning it into data, dollars, and influence. Welcome to the attention economy—a digital ecosystem where everything competes to keep you…

#algorithm#attention economy#behavioral design#big tech#digital addiction#distraction#dopamine loops#landscape image#mental health#screen time#social media overload#tech regulation

0 notes

Text

The EU's Robust Data Regulations: A Model for the World

The European Union has built a three‑pillar regulatory wall—GDPR (privacy), DMA + DSA (competition & platform accountability), and the new AI Act (data‑governance & model safety)—and it has begun active, penalty‑backed enforcement that is already reshaping global tech behavior. The combination of extraterritorial reach, record‑sized fines, and transparent oversight duties is the most effective…

#big tech censorship#congressional failure#corporate free speech#digital rights#free speech#internet democracy#online speech regulation#platform accountability

0 notes

Text

The EU must lay down the law on big tech

DIGITAL MARKETS

Donald Trump’s administration is seeking to bully its way to the deregulation of US digital giants. In the interests of EU citizens, these attempts must be resisted.

HENNA VIRKKUNEN, the European Union’s most senior official on digital policy, fired a broadside when she said: “We are very committed to our rules when it comes to the digital world”. Such sentiments bring…

0 notes

Text

India’s Digital Shift: Why Big Tech is Gaining Ground in 2025

In a world where geopolitics increasingly dictates the flow of commerce, India has found itself at a digital crossroads. With the United States poised to impose new tariff barriers and global technology firms seeking safer havens, India’s recent policy shifts signal a strategic realignment—one that could redefine its relationship with Silicon Valley’s biggest players. The sudden warmth in India's stance toward Big Tech is not a coincidence but a calculated manoeuvre that reflects both economic pragmatism and regulatory reassessment.

#India digital policy#Big Tech in India#Google India regulation#Indian startup ecosystem#foreign investment India#Digital Competition Act#US-India trade#equalization levy#India tech industry#digital economy 2025#Insights on India digital policy

0 notes

Text

AI Action Summit: Leaders call for unity and equitable development

New Post has been published on https://thedigitalinsider.com/ai-action-summit-leaders-call-for-unity-and-equitable-development/


As the 2025 AI Action Summit kicks off in Paris, global leaders, industry experts, and academics are converging to address the challenges and opportunities presented by AI.

Against the backdrop of rapid technological advancements and growing societal concerns, the summit aims to build on the progress made since the 2024 AI Seoul Summit and establish a cohesive global framework for AI governance.

AI Action Summit is ‘a wake-up call’

French President Emmanuel Macron has described the summit as “a wake-up call for Europe,” emphasising the need for collective action in the face of AI’s transformative potential. This comes as the US has announced Stargate, a private venture pledging up to $500 billion for AI infrastructure.

The UK, meanwhile, has unveiled its AI Opportunities Action Plan ahead of planned UK AI legislation. Ahead of the AI Summit, UK tech minister Peter Kyle told The Guardian the AI race must be led by “western, liberal, democratic” countries.

These developments signal a renewed global dedication to harnessing AI’s capabilities while addressing its risks.

Matt Cloke, CTO at Endava, highlighted the importance of bridging the gap between AI’s potential and its practical implementation.

“Much of the conversation is set to focus on understanding the risks involved with using AI while helping to guide decision-making in an ever-evolving landscape,” he said.

Cloke also stressed the role of organisations in ensuring AI adoption goes beyond regulatory frameworks.

“Modernising core systems enables organisations to better harness AI while ensuring regulatory compliance,” he explained.

“With improved data management, automation, and integration capabilities, these systems make it easier for organisations to stay agile and quickly adapt to impending regulatory changes.”

Governance and workforce among critical AI Action Summit topics

Kit Cox, CTO and Founder of Enate, outlined three critical areas for the summit’s agenda.

“First, AI governance needs urgent clarity,” he said. “We must establish global guidelines to ensure AI is safe, ethical, and aligned across nations. A disconnected approach won’t work; we need unity to build trust and drive long-term progress.”

Cox also emphasised the need for a future-ready workforce.

“Employers and governments must invest in upskilling the workforce for an AI-driven world,” he said. “This isn’t just about automation replacing jobs; it’s about creating opportunities through education and training that genuinely prepare people for the future of work.”

Finally, Cox called for democratising AI’s benefits.

“AI must be fair and democratic both now and in the future,” he said. “The benefits can’t be limited to a select few. We must ensure that AI’s power reaches beyond Silicon Valley to all corners of the globe, creating opportunities for everyone to thrive.”

Developing AI in the public interest

Professor Gina Neff, Professor of Responsible AI at Queen Mary University of London and Executive Director at Cambridge University’s Minderoo Centre for Technology & Democracy, stressed the importance of making AI relatable to everyday life.

“For us in civil society, it’s essential that we bring imaginaries about AI into the everyday,” she said. “From the barista who makes your morning latte to the mechanic fixing your car, they all have to understand how AI impacts them and, crucially, why AI is a human issue.”

Neff also pushed back against big tech’s dominance in AI development.

“I’ll be taking this spirit of public interest into the Summit and pushing back against big tech’s push for hyperscaling. Thinking about AI as something we’re building together – like we do our cities and local communities – puts us all in a better place.”

Addressing bias and building equitable AI

Professor David Leslie, Professor of Ethics, Technology, and Society at Queen Mary University of London, highlighted the unresolved challenges of bias and diversity in AI systems.

“Over a year after the first AI Safety Summit at Bletchley Park, only incremental progress has been made to address the many problems of cultural bias and toxic and imbalanced training data that have characterised the development and use of Silicon Valley-led frontier AI systems,” he said.

Leslie called for a renewed focus on public interest AI.

“The French AI Action Summit promises to refocus the conversation on AI governance to tackle these and other areas of immediate risk and harm,” he explained. “A main focus will be to think about how to advance public interest AI for all through mission-driven and society-led funding.”

He proposed the creation of a public interest AI foundation, supported by governments, companies, and philanthropic organisations.

“This type of initiative will have to address issues of algorithmic and data biases head on, at concrete and practice-based levels,” he said. “Only then can it stay true to the goal of making AI technologies – and the infrastructures upon which they depend – accessible global public goods.”

Systematic evaluation

Professor Maria Liakata, Professor of Natural Language Processing at Queen Mary University of London, emphasised the need for rigorous evaluation of AI systems.

“AI has the potential to make public service more efficient and accessible,” she said. “But at the moment, we are not evaluating AI systems properly. Regulators are currently on the back foot with evaluation, and developers have no systematic way of offering the evidence regulators need.”

Liakata called for a flexible and systematic approach to AI evaluation.

“We must remain agile and listen to the voices of all stakeholders,” she said. “This would give us the evidence we need to develop AI regulation and help us get there faster. It would also help us get better at anticipating the risks posed by AI.”

AI in healthcare: Balancing innovation and ethics

Dr Vivek Singh, Lecturer in Digital Pathology at Barts Cancer Institute, Queen Mary University of London, highlighted the ethical implications of AI in healthcare.

“The Paris AI Action Summit represents a critical opportunity for global collaboration on AI governance and innovation,” he said. “I hope to see actionable commitments that balance ethical considerations with the rapid advancement of AI technologies, ensuring they benefit society as a whole.”

Singh called for clear frameworks for international cooperation.

“A key outcome would be the establishment of clear frameworks for international cooperation, fostering trust and accountability in AI development and deployment,” he said.

AI Action Summit: A pivotal moment

The 2025 AI Action Summit in Paris represents a pivotal moment for global AI governance. With calls for unity, equity, and public interest at the forefront, the summit aims to address the challenges of bias, regulation, and workforce readiness while ensuring AI’s benefits are shared equitably.

As world leaders and industry experts converge, the hope is that actionable commitments will pave the way for a more inclusive and ethical AI future.

(Photo by Jorge Gascón)

#2024#2025#adoption#agile#ai#ai & big data expo#ai act#ai action summit#AI adoption#AI development#AI for all#AI future#ai governance#AI in healthcare#AI Infrastructure#AI Race#AI regulation#ai safety#ai safety summit#ai summit#AI systems#approach#Artificial Intelligence#automation#Bias#biases#Big Data#BIG TECH#billion

0 notes

Text

Stuck on Big Tech vs. Big China, America Overlooks the Real Social Media Revolution Happening Elsewhere

Why the U.S. is Falling Behind as the Fediverse & Open-Source Take Over Europe & Canada

The Fediverse and open-source social media are gaining momentum worldwide, especially in Europe and Canada, while the U.S. lags behind. What is this all about? Why are we so disconnected in the way we connect on the web? In America, traditional platforms like Facebook, Instagram, X (formerly Twitter),…

#ad-driven ecosystem#Anti-Big Tech#Big Tech#California#Canada#Commercialization#Community#Consumer Apathy#Corporate Dominance#Data Economy#decentralized internet#Decentralized Networks#Democracy#Digital Rights#Engagement#Enshittification#Europe#European Union#Fediverse#Free access#Free Culture Movement#Freedom of Information#Freedom of Speech#General Data Protection Regulation#global tech leaders#Government Regulation#Grassroots#Herd Mentality#Human Agency#Internet Access

1 note

Text

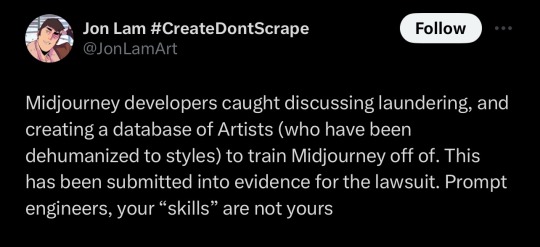

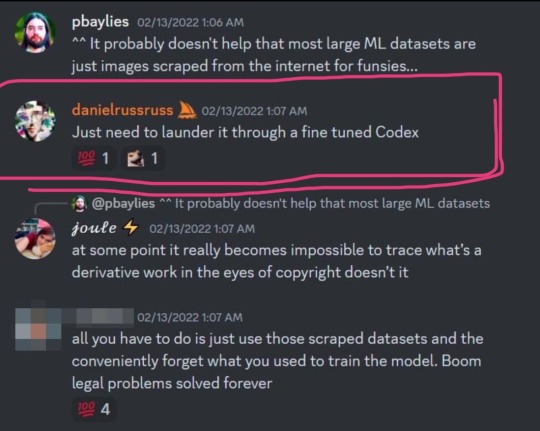

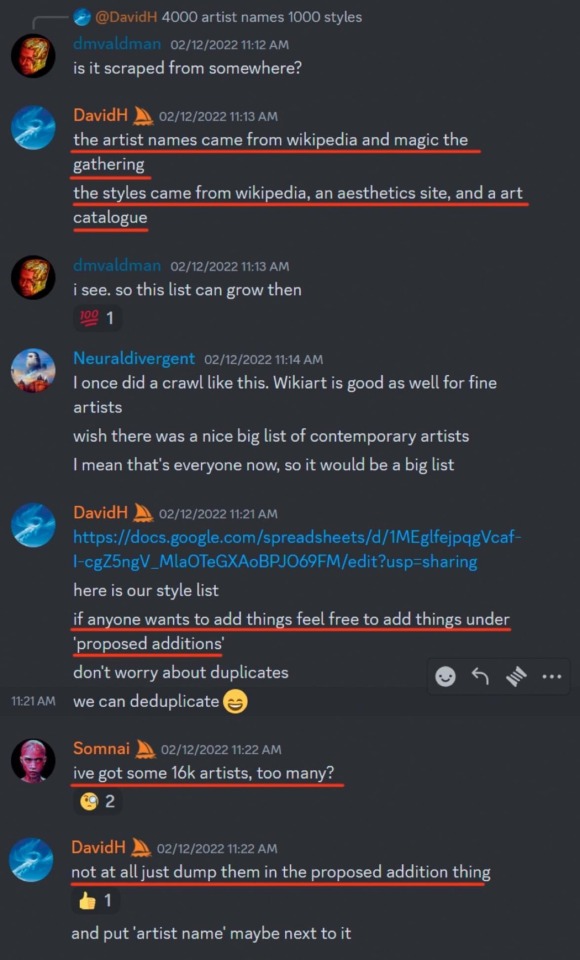

I think this part is truly the most damning:

If it's all pre-rendered mush and it's "too expensive to fully experiment or explore," then such AI is not a valid artistic medium. It's entirely deterministic, like a pseudorandom number generator. The goal here is optimizing the rapid generation of an enormous quantity of low-quality images that fulfill the expectations set by The Prompt.

It's the modern technological equivalent of a circus automaton "painting" a canvas to be sold in the gift shop.

so a huge list of artists that was used to train midjourney’s model got leaked and i’m on it

literally there is no reason to support AI generators, they can’t ethically exist. my art has been used to train every single major one without consent lmfao 🤪

link to the archive

#to be clear AI as a concept has the power to create some truly fantastic images#however when it is subject to the constraints of its purpose as a machine#it is only capable of performing as its puppeteer wills it#and these puppeteers have the intention of stealing#tech#technology#tech regulation#big tech#data harvesting#data#technological developments#artificial intelligence#ai#machine generated content#machine learning#intellectual property#copyright

37K notes

Text

Meta's Content Moderation Changes: Why Ireland Must Act Now

The recent decision by Meta to end third-party fact-checking programs on platforms like Facebook, Instagram, and Threads has sent shockwaves through online safety circles. For a country like Ireland, home to Meta’s European headquarters, this is more than just a tech policy shift; it’s a wake-up call. It highlights the urgent need for…

#HowRUDoingOnline Campaign#Big Tech Regulation Ireland#Children of the Digital Age Advocacy#Community Notes System#Digital Services Act (DSA)#EU Content Moderation Laws#Facebook Fact-Checking#Fact-Checking Alternatives#Ireland Tech Hub Responsibility#Mark Zuckerberg Meta Policies#Meta and Online Trust#Meta Content Moderation#Misinformation on Social Media#Online Misinformation Impact#Online Safety Ireland#Protecting Digital Spaces#Rachel O’Connell Digital Safety#Safeguarding Social Media Users#Social Media Accountability#Wayne Denner Online Safety

1 note

Text

EU adopts USB-C as standard charger

- By Nuadox Crew -

Starting Saturday, 28 December 2024, USB Type-C charging ports will become the standard for most small and medium-sized portable electronic devices sold in the European Union (EU), including smartphones, tablets, cameras, and headphones.

Consumers can also opt out of receiving new chargers with their purchases. Laptop manufacturers must comply by April 28, 2026.

The change aims to reduce electronic waste, save EU households an estimated €250 million annually, and enhance consumer convenience. While initially resistant, Apple and other manufacturers have begun adapting to the new rules. The European Parliament will monitor the transition closely.

Read more at Euronews/MSN

0 notes