#DeepFakes

I assure you, an AI didn’t write a terrible “George Carlin” routine

There are only TWO MORE DAYS left in the Kickstarter for the audiobook of The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There are also bundles with Red Team Blues in ebook, audio or paperback.

On Hallowe'en 1974, Ronald Clark O'Bryan murdered his son with poisoned candy. He needed the insurance money, and he knew that Halloween poisonings were rampant, so he figured he'd get away with it. He was wrong:

https://en.wikipedia.org/wiki/Ronald_Clark_O%27Bryan

The stories of Hallowe'en poisonings were just that – stories. No one was poisoning kids on Hallowe'en – except this monstrous murderer, who mistook rampant scare stories for truth and assumed (incorrectly) that his murder would blend in with the crowd.

Last week, the dudes behind the "comedy" podcast Dudesy released a "George Carlin" comedy special that they claimed had been created, holus bolus, by an AI trained on the comedian's routines. This was a lie. After the Carlin estate sued, the dudes admitted that they had written the (remarkably unfunny) "comedy" special:

https://arstechnica.com/ai/2024/01/george-carlins-heirs-sue-comedy-podcast-over-ai-generated-impression/

As I've written, we're nowhere near the point where an AI can do your job, but we're well past the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job:

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

AI systems can do some remarkable party tricks, but there's a huge difference between producing a plausible sentence and a good one. After the initial rush of astonishment, the stench of botshit becomes unmistakable:

https://www.theguardian.com/commentisfree/2024/jan/03/botshit-generative-ai-imminent-threat-democracy

Some of this botshit comes from people who are sold a bill of goods: they're convinced that they can make a George Carlin special without any human intervention and when the bot fails, they manufacture their own botshit, assuming they must be bad at prompting the AI.

This is an old technology story: I had a friend who was contracted to livestream a Canadian awards show in the earliest days of the web. They booked in multiple ISDN lines from Bell Canada and set up an impressive Mbone encoding station in the wings of the stage. Only one problem: the ISDNs flaked (this was a common problem with ISDNs!). There was no way to livecast the show.

Nevertheless, my friend's boss ordered him to go on pretending to livestream the show. They made a big deal of it, with all kinds of cool visualizers showing the progress of this futuristic marvel, which the cameras frequently lingered on, accompanied by overheated narration from the show's hosts.

The weirdest part? The next day, my friend – and many others – heard from satisfied viewers who boasted about how amazing it had been to watch this show on their computers, rather than their TVs. Remember: there had been no stream. These people had just assumed that the problem was on their end – that they had failed to correctly install and configure the multiple browser plugins required. Not wanting to admit their technical incompetence, they instead boasted about how great the show had been. It was the Emperor's New Livestream.

Perhaps that's what happened to the Dudesy bros. But there's another possibility: maybe they were captured by their own imaginations. In "Genesis," an essay in the 2007 collection The Creationists, EL Doctorow (no relation) describes how the ancient Babylonians were so poleaxed by the strange wonder of the story they made up about the origin of the universe that they assumed that it must be true. They themselves weren't nearly imaginative enough to have come up with this super-cool tale, so God must have put it in their minds:

https://pluralistic.net/2023/04/29/gedankenexperimentwahn/#high-on-your-own-supply

That seems to have been what happened to the Air Force colonel who falsely claimed that a "rogue AI-powered drone" had spontaneously evolved the strategy of killing its operator as a way of clearing the obstacle to its main objective, which was killing the enemy:

https://pluralistic.net/2023/06/04/ayyyyyy-eyeeeee/

This never happened. It was – in the chagrined colonel's words – a "thought experiment." In other words, this guy – who is the USAF's Chief of AI Test and Operations – was so excited about his own made up story that he forgot it wasn't true and told a whole conference-room full of people that it had actually happened.

Maybe that's what happened with the George Carlinbot 3000: the Dudesy dudes fell in love with their own vision for a fully automated luxury Carlinbot and forgot that they had made it up, so they just cheated, assuming they would eventually be able to make a fully operational Battle Carlinbot.

That's basically the Theranos story: a teenaged "entrepreneur" was convinced that she was just about to produce a seemingly impossible, revolutionary diagnostic machine, so she faked its results, abetted by investors, customers and others who wanted to believe:

https://en.wikipedia.org/wiki/Theranos

The thing about stories of AI miracles is that they are peddled by both AI's boosters and its critics. For boosters, the value of these tall tales is obvious: if normies can be convinced that AI is capable of performing miracles, they'll invest in it. They'll even integrate it into their product offerings and then quietly hire legions of humans to pick up the botshit it leaves behind. These abettors can be relied upon to keep the defects in these products a secret, because they'll assume that they've committed an operator error. After all, everyone knows that AI can do anything, so if it's not performing for them, the problem must exist between the keyboard and the chair.

But this would only take AI so far. It's one thing to hear implausible stories of AI's triumph from the people invested in it – but what about when AI's critics repeat those stories? If your boss thinks an AI can do your job, and AI critics are all running around with their hair on fire, shouting about the coming AI jobpocalypse, then maybe the AI really can do your job?

https://locusmag.com/2020/07/cory-doctorow-full-employment/

There's a name for this kind of criticism: "criti-hype," coined by Lee Vinsel, who points to many reasons for its persistence, including the fact that it constitutes an "academic business-model":

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

That's four reasons for AI hype:

1. to win investors and customers;
2. to cover customers' and users' embarrassment when the AI doesn't perform;
3. AI dreamers so high on their own supply that they can't tell truth from fantasy;
4. a business model for doomsayers who form an unholy alliance with AI companies by parroting their silliest hype in warning form.

But there's a fifth motivation for criti-hype: to simplify otherwise tedious and complex situations. As Jamie Zawinski writes, this is the motivation behind the obvious lie that the "autonomous cars" on the streets of San Francisco have no driver:

https://www.jwz.org/blog/2024/01/driverless-cars-always-have-a-driver/

GM's Cruise division was forced to shutter its SF operations after one of its "self-driving" cars dragged an injured pedestrian for 20 feet:

https://www.wired.com/story/cruise-robotaxi-self-driving-permit-revoked-california/

One of the widely discussed revelations in the wake of the incident was that Cruise employed 1.5 skilled technical remote overseers for every one of its "self-driving" cars. In other words, they had replaced a single low-waged cab driver with 1.5 higher-paid remote operators.

As Zawinski writes, SFPD is well aware that there's a human being (or more than one human being) responsible for every one of these cars – someone who is formally at fault when the cars injure people or damage property. Nevertheless, SFPD and SFMTA maintain that these cars can't be cited for moving violations because "no one is driving them."

But figuring out which person is responsible for a moving violation is "complicated and annoying to deal with," so the fiction persists.

(Zawinski notes that even when these people are held responsible, they're a "moral crumple zone" for the company that decided to enroll whole cities in nonconsensual murderbot experiments.)

Automation hype has always involved hidden humans. The most famous of these was the "mechanical Turk" hoax: a supposed chess-playing robot that was just a puppet, worked by a human operator concealed and wedged awkwardly into its carapace.

This pattern repeats itself through the ages. Thomas Jefferson "replaced his slaves" with dumbwaiters – but of course, dumbwaiters don't replace slaves, they hide slaves:

https://www.stuartmcmillen.com/blog/behind-the-dumbwaiter/

The modern Mechanical Turk – a division of Amazon that employs low-waged "clickworkers," many of them overseas – modernizes the dumbwaiter by hiding low-waged workforces behind a veneer of automation. The MTurk is an abstract "cloud" of human intelligence (the tasks MTurks perform are called "HITs," which stands for "Human Intelligence Tasks").

This is such a truism that techies in India joke that "AI" stands for "absent Indians." Or, to use Jathan Sadowski's wonderful term: "Potemkin AI":

https://reallifemag.com/potemkin-ai/

This Potemkin AI is everywhere you look. When Tesla unveiled its humanoid robot Optimus, they made a big flashy show of it, promising a $20,000 automaton was just on the horizon. They failed to mention that Optimus was just a person in a robot suit:

https://www.siliconrepublic.com/machines/elon-musk-tesla-robot-optimus-ai

Likewise with the famous demo of a "full self-driving" Tesla, which turned out to be a canned fake:

https://www.reuters.com/technology/tesla-video-promoting-self-driving-was-staged-engineer-testifies-2023-01-17/

The most shocking and terrifying and enraging AI demos keep turning out to be "Just A Guy" (in Molly White's excellent parlance):

https://twitter.com/molly0xFFF/status/1751670561606971895

And yet, we keep falling for it. It's no wonder, really: criti-hype rewards so many different people in so many different ways that it truly offers something for everyone.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

Back the Kickstarter for the audiobook of The Bezzle here!

Image:

Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

Ross Breadmore (modified) https://www.flickr.com/photos/rossbreadmore/5169298162/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/

#pluralistic#ai#absent indians#mechanical turks#scams#george carlin#comedy#body-snatchers#fraud#theranos#guys in robot suits#criti-hype#machine learning#fake it til you make it#too good to fact-check#mturk#deepfakes

2K notes

Imagine being the loser ass tool, Yisha Raziel, who made a deepfake of Bella Hadid saying she supports Israel. 🤮

If you’re reading this, I am telling you right now, you better second and third guess what you see and hear on social media and the news. Stick to reliable news sources. Vet them. Require multiple, trusted sources. Validate links to sources. In the last several months, I’ve seen a deepfake of President Nixon announcing a failed NASA moon landing that never happened. I’ve seen a deepfake of Joe Biden hilariously using profanity to trash-talk Trump; that one, however, was made intentionally obvious, so no one would mistake it for his words or for something he would actually say.

But imagine a viral deepfake video of Biden announcing a nuclear strike on Russia within the next 20 minutes? Or a deepfake of Biden reintroducing the draft to support Israel? Or a deepfake of Biden withdrawing from the 2024 election and endorsing Trump…

These kinds of things are going to begin happening a lot more, especially with the proliferation of troll farms, and especially since YouTube, Twitter (I refuse to call it X), and Facebook have all eviscerated their verification and fact-check teams that used to at least attempt to limit disinformation and misinformation.

Pay attention, peeps.

Don’t get bamboozled.

670 notes

I'm pretty sure this is illegal

247 notes

The eyes, the old saying goes, are the window to the soul — but when it comes to deepfake images, they might be a window into unreality. That's according to new research conducted at the University of Hull in the U.K., which applied techniques typically used in observing distant galaxies to determine whether images of human faces were real or not. The idea was sparked when Kevin Pimbblet, a professor of astrophysics at the University, was studying facial imagery created by artificial intelligence (AI) art generators Midjourney and Stable Diffusion. He wondered whether he could use physics to determine which images were fake and which were real. "It dawned on me that the reflections in the eyes were the obvious thing to look at," he told Space.com.

106 notes

in the era of deepfakes, the second most annoying thing behind people sharing very obviously Gen AI images as “proof” of outlandish claims is going to be the people rejecting photographic evidence out of hand “bc photos can be staged/photoshopped/deepfaked!” and the brilliant gumshoes overanalyzing all the ways actual photographs are “clearly ai”

53 notes

https://www.reuters.com/technology/artificial-intelligence/trump-revokes-biden-executive-order-addressing-ai-risks-2025-01-21/

The use of AI to recreate someone's likeness in videos and audio, often referred to as "deepfakes," is a complex legal area. While there are existing laws against impersonation and unauthorized use of an individual's likeness, the rapid advancement of AI technologies has outpaced specific legislation addressing deepfakes. The rescission of Biden's executive order may lead to fewer federal regulations specifically targeting AI-generated content, potentially creating a legal gray area regarding the use of someone's likeness without consent.

#ai#artificial intelligence#deepfakes#government#donald trump#president trump#tech#technology#news#politics#political#republican#us politics#us policies#biden#joe biden#legal#law

20 notes

Mira Lazine at LGBTQ Nation:

A Kremlin-backed propaganda network is allegedly behind a disinformation campaign targeting Vice Presidential candidate Tim Walz, spreading false rumors that he sexually assaulted former students, according to WIRED. Various experts allege that the network Storm-1516 is behind these efforts. This network is tied to many false claims, including one that alleged Kamala Harris committed a hit-and-run in 2011. The misinformation campaign against Walz has primarily taken place within the past few weeks. Deepfakes and anonymous allegations were spread against the former football coach stemming exclusively from QAnon accounts and shared by other far-right sources. These have been shared by right-wing figures like Trump campaigner Jack Posobiec and activist Candace Owens. The most recent claim stole information from a former student of Walz to craft an elaborate deepfake that alleges Walz sexually assaulted him. The video was quickly identified by users on X as fake, and even the man depicted in the video spoke out to say it was entirely fabricated.

[...] Storm-1516 typically creates an account on social media that gets tapped into the far-right network. It then spreads fake information to dupe users into sharing stories they believe come from whistleblowers or citizen journalists. Linvill says they often utilize X and YouTube to place stories. Much of this traces back to John Dougan, an ex-Floridian cop who now works with the Kremlin to spread disinformation. Dougan helped spread the initial false claims about Walz, appearing with an anonymous man, “Rick,” who claims to be a former student. Dougan runs numerous fake news websites used by Storm-1516.

Minnesota Gov. and Kamala Harris VP pick Tim Walz (D) is the victim of a deepfake campaign backed by Russia that is pushing false accusations about Walz “sexually assaulting” former students.

See Also:

LGBTQ Nation: The far right spreads fake AI-generated video falsely claiming Tim Walz molested a gay student

#Tim Walz#Deepfakes#Anti LGBTQ+ Extremism#AI#2024 Presidential Election#2024 Elections#Disinformation#Homophobia#Walz Derangement Syndrome#Candace Owens#Jack Posobiec#Storm 1516#John Dougan

20 notes

a novel method for Gini!

According to Nature, researchers at the University of Hull in the U.K. used the CAS system—a method used by astronomers that measures concentration, asymmetry and smoothness (or clumpiness) of galaxies—and a statistical measure of inequality called the Gini coefficient to analyze the light reflected in a person’s eyes in an image.

“It’s not a silver bullet, because we do have false positives and false negatives,” Kevin Pimbblet, director of the Centre of Excellence for Data Science, Artificial Intelligence and Modelling at the University of Hull, U.K., said when he presented the research at the U.K. Royal Astronomical Society’s National Astronomy Meeting last week, according to Nature. Pimbblet said that in a real photograph of a person, the reflections in one eye should be “very similar, although not necessarily identical,” to the reflections in the other.
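To make the Gini part of that method a little more concrete, here is a minimal Python sketch, assuming each eye's reflection has already been cropped out of the image and flattened into a list of non-negative pixel brightness values. `gini` uses a form of the Gini coefficient commonly applied to galaxy pixel fluxes (values sorted ascending, normalized by the mean). `eyes_consistent` and its 0.1 `tolerance` are hypothetical illustrations of Pimbblet's "very similar, although not necessarily identical" comparison, not the Hull team's code or threshold, and the CAS measures are left out entirely.

```python
def gini(pixels):
    """Gini coefficient of a list of non-negative pixel brightness values.

    0.0 means light is spread evenly over every pixel; values near 1.0 mean
    the light is concentrated in a few pixels (e.g. one sharp highlight).
    """
    values = sorted(abs(p) for p in pixels)  # ascending order
    n = len(values)
    if n < 2:
        raise ValueError("need at least two pixels")
    mean = sum(values) / n
    if mean == 0:
        return 0.0  # an all-black patch has no inequality to measure
    # Weight each sorted value by (2i - n - 1), with i counting from 1.
    weighted = sum((2 * i - n - 1) * v for i, v in enumerate(values, start=1))
    return weighted / (mean * n * (n - 1))


def eyes_consistent(left_eye, right_eye, tolerance=0.1):
    """Hypothetical check: True if both reflections have similar Gini values.

    The 0.1 tolerance is an illustrative assumption, not a published threshold.
    """
    return abs(gini(left_eye) - gini(right_eye)) <= tolerance


if __name__ == "__main__":
    # Toy 2x2 "reflections", flattened; real eye crops would be far larger.
    left = [10, 10, 200, 12]
    right = [11, 9, 190, 14]
    odd = [80, 75, 90, 85]
    print(eyes_consistent(left, right))  # prints True
    print(eyes_consistent(left, odd))    # prints False
```

As the researchers themselves say, a measure like this produces false positives and false negatives, so a mismatch between the two eyes is a hint worth checking, not proof of a deepfake.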

26 notes

Further info:

@aromanticannibal @geekandafreak

Sorry, I know it doesn't go with any of your blogs, and this isn't even the platform it will happen on, but i find the situation serious enough to spam like this. i'm trying to rope in as many people as i can.

I myself won't be able to continuously participate, but every contribution could be of help!

Again, so sorry about this, feel free to block me if it's too annoying.

#south korea#justice#ban exploitative ai use#japan#i'll try my best#fuck elon musk#twitter#feminism#legal restrictions for ai#human rights#deepfakes#deepfake

23 notes

Take It Down Act nears passage; critics warn Trump could use it against enemies

Anti-deepfake bill raises concerns about censorship and breaking encryption.

7 notes

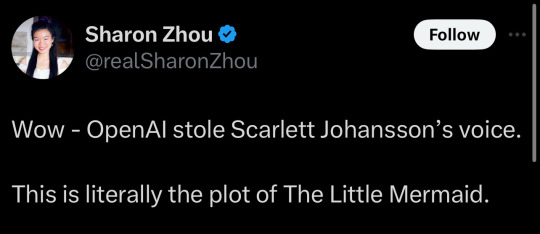

“Last September, I received an offer from Sam Altman, who wanted to hire me to voice the current ChatGPT 4.0 system. He told me that he felt that by my voicing the system, I could bridge the gap between tech companies and creatives and help consumers to feel comfortable with the seismic shift concerning humans and AI. He said he felt that my voice would be comforting to people.

After much consideration and for personal reasons, I declined the offer. Nine months later, my friends, family and the general public all noted how much the newest system named “Sky” sounded like me.

When I heard the released demo, I was shocked, angered and in disbelief that Mr. Altman would pursue a voice that sounded so eerily similar to mine that my closest friends and news outlets could not tell the difference. Mr. Altman even insinuated that the similarity was intentional, tweeting a single word “her” - a reference to the film in which I voiced a chat system, Samantha, who forms an intimate relationship with a human.

Two days before the ChatGPT 4.0 demo was released, Mr. Altman contacted my agent, asking me to reconsider. Before we could connect, the system was out there.

As a result of their actions, I was forced to hire legal counsel, who wrote two letters to Mr. Altman and OpenAI, setting out what they had done and asking them to detail the exact process by which they created the “Sky” voice. Consequently, OpenAI reluctantly agreed to take down the “Sky” voice.

In a time when we are all grappling with deepfakes and the protection of our own likeness, our own work, our own identities, I believe these are questions that deserve absolute clarity. I look forward to resolution in the form of transparency and the passage of appropriate legislation to help ensure that individual rights are protected.”

—Scarlett Johansson

#politics#deepfakes#scarlett johansson#sam altman#openai#tech#technology#open ai#chatgpt#chatgpt 4.0#the little mermaid#sky#sky ai#consent

240 notes

India has warned tech companies that it is prepared to impose bans if they fail to take active measures against deepfake videos, a senior government minister said, on the heels of a warning by a well-known personality about a deepfake advertisement using his likeness to endorse a gaming app. The stern warning comes as New Delhi follows through on last November’s advisory about forthcoming regulations to identify and restrict the propagation of deepfake media. Rajeev Chandrasekhar, Deputy IT Minister, said the ministry plans to amend the nation’s IT Rules by next week to establish definitive laws counteracting deepfakes. He expressed dissatisfaction with technology companies’ adherence to earlier government advisories on manipulative content.

75 notes

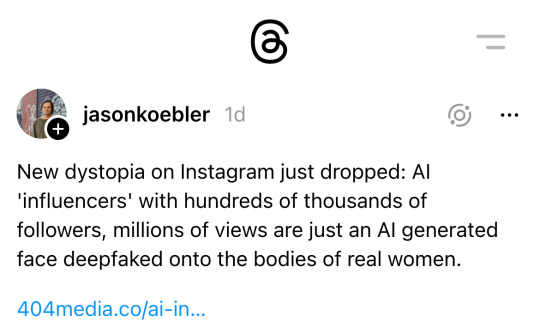

#ai influencers#deepfakes#social media marketing#digital ethics#inauthentic content#influencer marketing#virtual reality#computer generated imagery#dystopian fiction

38 notes

Kyle Mantyla at RWW:

MAGA activists have been attacking President Joe Biden by producing and spreading fake, deceptively edited, and misleading videos in an effort to undermine his reelection. And, according to pro-Trump broadcaster Brenden Dilley, there is absolutely nothing wrong with that. Dilley is an overtly racist, unapologetically misogynistic, and gleefully amoral Trump cultist who has established close ties not only to the Trump campaign, but to the former president himself, thanks to the role he plays in leading a “troll army” that relentlessly promotes Trump and attacks his enemies on social media. [...]

Dilley has, in the past, openly stated that he doesn’t “give a fuck about being factual” and will “make shit up” if doing so furthers Trump’s political agenda, and that attitude is still motivating Dilley’s efforts, as he made clear during a recent broadcast. “There’s nothing I won’t fucking do or say,” Dilley declared. “Nothing! It’s war. I view you as a fucking enemy of the country. I will do anything necessary.”

MAGA cultist Brenden Dilley believes lying to help Donald Trump is A-OK.

From the 06.18.2024 edition of The Dilley Show:

#Brenden Dilley#Dilley Meme Team#Joe Biden#The Dilley Show#Donald Trump#Cult .45#MAGA Cult#2024 Presidential Election#2024 Elections#Deepfakes

23 notes

Joan Is Awful: Black Mirror episode is every striking actor’s worst nightmare

“A sticking point of the near-inevitable Sag-Aftra strike is the potential that AI could soon render all screen actors obsolete. A union member this week told Deadline: ‘Actors see Black Mirror’s Joan Is Awful as a documentary of the future, with their likenesses sold off and used any way producers and studios want. We want a solid pathway. The studios countered with ‘trust us’ – we don’t.’ ...

“If a studio has the kit, not to mention the balls, to deepfake Tom Hanks into a movie he didn’t agree to star in, then it has the potential to upend the entire industry as we know it. It’s one thing to have your work taken from you, but it’s another to have your entire likeness swiped.

“The issue is already creeping in from the peripheries. The latest Indiana Jones movie makes extensive use of de-ageing technology, made by grabbing every available image of Harrison Ford 40 years ago and feeding it into an algorithm. Peter Cushing has been semi-convincingly brought back to life for Star Wars prequels, something he is unlikely to have given permission for unless the Disney execs are particularly skilled at the ouija board. ITV’s recent sketch show Deep Fake Neighbour Wars took millions of images of Tom Holland and Nicki Minaj, and slapped them across the faces of young performers so adeptly that it would be very easy to be fooled into thinking that you were watching the real celebrities in action.

“Unsurprisingly, Sag-Aftra members want this sort of thing to be regulated, asking for their new labour contract to include terms about when AI likenesses can be used, how to protect against misuse, and how much money they can expect from having their likenesses used by AI.”

#joan is awful#black mirror#charlie brooker#sag aftra strike#screen actors guild#sag aftra#wga strike#writers guild of america#wga#actors#screenwriters#hollywood#film and tv#ai#deepfakes#strikes#unions#labour#labor#us

95 notes

deepfakes are genuinely so terrifying and disgusting. how vile and inhumane do you have to be to come up with the idea of making something so wicked?

#deepfakes#men scare me#hell is a teenage girl#i’m so fucking scared#what the fuck man#what the fuck is going on

3 notes
