#Extract large-scale data


Lawyer Data Scraping Services: Unlocking Legal Insights with Automation

The legal industry thrives on accurate and up-to-date information. Whether you’re a law firm, legal researcher, or a business seeking legal insights, having access to comprehensive lawyer data can be a game-changer. This is where lawyer data scraping services come into play, offering an efficient way to collect, analyze, and utilize legal data.

What is Lawyer Data Scraping?

Lawyer data scraping is the process of extracting publicly available information about attorneys, law firms, case histories, and legal precedents from various online sources. This automated technique eliminates the need for manual research, saving time and ensuring accuracy in data collection.

Benefits of Lawyer Data Scraping Services

1. Comprehensive Legal Research

With automated data scraping, legal professionals can gather extensive information about attorneys, case law, and judicial decisions, allowing for better legal analysis and strategy development.

2. Competitor Analysis for Law Firms

Law firms can monitor competitors by collecting insights on their practice areas, client reviews, and success rates, enabling data-driven decision-making.

3. Efficient Lead Generation

Businesses seeking legal services can use lawyer data scraping to identify the best legal professionals based on expertise, reviews, and geographical location.

4. Regulatory and Compliance Monitoring

Stay updated with changing legal landscapes by tracking regulatory updates, compliance policies, and amendments in various jurisdictions.

How Lawyer Data Scraping Services Work

Professional web scraping services extract legal data by:

Collecting information from lawyer directories, bar association websites, and court records.

Structuring data in a user-friendly format for easy analysis.

Ensuring compliance with ethical data extraction practices.
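The extraction and structuring steps above can be sketched with Python's standard library. This is a minimal sketch under stated assumptions, not Actowiz Solutions' actual pipeline: the directory markup (`div.lawyer` blocks containing `name`, `firm`, `practice`, and `city` spans) is hypothetical, since every real directory uses its own HTML, and the page is embedded as a string instead of being downloaded. Each listing becomes one record, and the records are written out as CSV, one common user-friendly format.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical directory markup; a real site must be inspected first.
SAMPLE_HTML = """
<div class="lawyer">
  <span class="name">Jane Doe</span>
  <span class="firm">Doe &amp; Partners</span>
  <span class="practice">Family Law</span>
  <span class="city">Austin</span>
</div>
<div class="lawyer">
  <span class="name">John Roe</span>
  <span class="firm">Roe Legal</span>
  <span class="practice">Tax Law</span>
  <span class="city">Denver</span>
</div>
"""

FIELDS = ("name", "firm", "practice", "city")  # hypothetical field names


class LawyerDirectoryParser(HTMLParser):
    """Collects one dict per <div class="lawyer"> block."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True turns &amp; into &
        self.records = []
        self._current = None  # record being built
        self._field = None    # field whose text is being read

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "div" and cls == "lawyer":
            self._current = {}
        elif tag == "span" and self._current is not None and cls in FIELDS:
            self._field = cls
            self._current[cls] = ""

    def handle_endtag(self, tag):
        if tag == "span" and self._field is not None:
            # Normalize whitespace once the field's text is complete.
            self._current[self._field] = " ".join(self._current[self._field].split())
            self._field = None
        elif tag == "div" and self._current is not None:
            self.records.append(self._current)
            self._current = None

    def handle_data(self, data):
        if self._field is not None:
            self._current[self._field] += data


def to_csv(records):
    """Serialize records to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


if __name__ == "__main__":
    parser = LawyerDirectoryParser()
    parser.feed(SAMPLE_HTML)
    parser.close()
    print(to_csv(parser.records))
```

Parsing into plain dicts first keeps the output format swappable: the same records could be written to JSON or a database instead of CSV.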

Other Data Scraping Solutions for Various Industries

In addition to legal data, businesses can benefit from other data scraping services such as:

Flipkart dataset: Extract product details, pricing trends, and customer reviews for eCommerce analysis.

Web scraping Zillow: Gain real estate insights with property listings, pricing trends, and neighborhood analytics.

Extract large-scale data: Automate massive data extraction for enhanced business intelligence and market research.

Google Shopping scraper: Analyze competitor pricing, product availability, and customer reviews to refine your eCommerce strategies.

Conclusion

Lawyer data scraping services empower law firms, researchers, and businesses with critical legal insights. By automating data collection, professionals can make informed decisions, improve efficiency, and stay ahead in the legal industry. Explore lawyer data scraping solutions today and unlock a world of legal intelligence!

For expert data scraping solutions tailored to various industries, contact Actowiz Solutions today!

#lawyer data scraping services#Flipkart dataset#Web scraping Zillow#Extract large-scale data#Google Shopping scraper#lawyer data scraping solutions


Large-Scale Web Scraping - Web Scraping at Scale

The web holds an enormous amount of information, and to make sense of it we need a way to organize it into something meaningful. That is where large-scale web scraping comes to the rescue: it is the process of gathering data from websites, particularly those that hold large amounts of data.

What Is Large-Scale Web Scraping?

Large-scale web scraping is the process of crawling many web pages and extracting data from them. This can be done manually or with automated tools. The extracted data can then be used to build charts and graphs, create reports, and perform other analyses.

It can be used to analyze large amounts of data, such as a website's traffic or the number of visitors it receives. It can also be used to compare different versions of a website to see which one gets more traffic.

Getting To Know The Client Expectations And Needs

We collect all the requirements from our clients to analyze the feasibility of data extraction for each individual site. Where possible, we tell our clients exactly what data can be extracted, how much of it, to what extent, and how long the process will take.
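One concrete input to a feasibility check is a site's robots.txt, which lists the paths automated clients are asked not to fetch. The sketch below is an illustration under assumptions: the robots.txt content, the user agent `ExampleBot`, and the example.com URLs are all hypothetical, and the file is parsed offline with the standard library's `urllib.robotparser` rather than fetched from a live site. It is one input to a feasibility assessment, not the whole of it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; in practice it is fetched from https://<site>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def check_feasibility(robots_txt, user_agent, urls):
    """Split candidate URLs into allowed/blocked and report the requested crawl delay."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    allowed = [u for u in urls if rp.can_fetch(user_agent, u)]
    blocked = [u for u in urls if u not in allowed]
    return {
        "allowed": allowed,
        "blocked": blocked,
        "crawl_delay": rp.crawl_delay(user_agent),  # seconds between requests, or None
    }


if __name__ == "__main__":
    report = check_feasibility(
        ROBOTS_TXT,
        "ExampleBot",
        ["https://example.com/listings/1", "https://example.com/private/admin"],
    )
    print(report)
```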

Constructing Scrapers And Assembling Them Together

For every site a client assigns to us, we build a dedicated scraper, so that no single scraper has to work through thousands of sites and millions of records. All of these scrapers run in parallel so the work is done quickly.
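Running one dedicated scraper per site in parallel can be sketched with `concurrent.futures`. The scraper functions and site names below are hypothetical placeholders that return canned records instead of fetching pages; the sketch only shows the structure, in which each site is its own job and a failure on one site is recorded without stopping the others.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


# Hypothetical per-site scrapers; real ones would fetch and parse pages.
def scrape_site_a():
    return [{"site": "a", "item": i} for i in range(3)]


def scrape_site_b():
    return [{"site": "b", "item": i} for i in range(2)]


SCRAPERS = {"site-a": scrape_site_a, "site-b": scrape_site_b}


def run_all(scrapers, max_workers=4):
    """Run every site's scraper concurrently; collect results or errors per site."""
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fn): site for site, fn in scrapers.items()}
        for future in as_completed(futures):
            site = futures[future]
            try:
                results[site] = future.result()
            except Exception as exc:  # one failing site must not stop the others
                errors[site] = repr(exc)
    return results, errors


if __name__ == "__main__":
    results, errors = run_all(SCRAPERS)
    for site, rows in sorted(results.items()):
        print(site, len(rows), "records")
    print("errors:", errors)
```

Threads suit this workload because scrapers spend most of their time waiting on the network; CPU-heavy parsing might call for processes instead.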

Running The Scrapers By Executing Them Smoothly

It is essential to keep the servers and leased Internet lines running at all times so the extraction process is not interrupted. We ensure this with high-end hardware on our premises, costing lakhs of rupees, so that extracted data can be delivered in real time whenever the client wants it. To avoid being blacklisted, we rely on proxy servers, a large pool of IP addresses, and other proprietary techniques.
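The post does not describe its proxy setup, so this sketch does not attempt one. It swaps in a different, widely used technique for keeping long runs from being interrupted: retrying transient network failures with exponential backoff and random jitter. `fetch` is any caller-supplied function (an assumption for illustration), and the `sleep` hook lets the delays be checked without actually waiting.

```python
import random
import time


def fetch_with_retry(fetch, url, max_attempts=4, base_delay=1.0,
                     retry_on=(OSError,), sleep=time.sleep):
    """Call fetch(url); on a retryable error, wait and try again.

    The wait doubles after each failure (base_delay, 2*base_delay, 4*base_delay, ...)
    plus up to 50% random jitter, so many workers do not retry in lockstep.
    """
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller see the last error
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay / 2))


if __name__ == "__main__":
    attempts = []

    def flaky_fetch(url):  # hypothetical fetcher: fails twice, then succeeds
        attempts.append(url)
        if len(attempts) < 3:
            raise ConnectionError("temporary network error")
        return f"<html>content of {url}</html>"

    print(fetch_with_retry(flaky_fetch, "https://example.com/page", base_delay=0.01))
```

Only `OSError` subclasses (which include `ConnectionError`, `TimeoutError`, and `urllib`'s `URLError`) are retried by default, so programming errors still fail immediately.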

Quality Checks And Scraper Maintenance Performed On A Regular Basis

After the automated web scraping process, our QA team performs manual quality checks on the extracted data and communicates constantly with the development team about any bugs or errors reported. Additionally, if the scrapers need to be modified because a site's structure or the client's requirements change, we do so without any hassle.
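Automated checks can filter records before they reach manual QA, so reviewers spend their time on the suspicious ones. The schema below (`url`, `title`, `price`) is hypothetical; the sketch flags records with missing fields or values of the wrong type and passes everything else through.

```python
# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"url": str, "title": str, "price": float}


def validate(record, schema=SCHEMA):
    """Return a list of problems found in one scraped record (empty list = OK)."""
    problems = []
    for field, expected in schema.items():
        value = record.get(field)
        if value is None or value == "":
            problems.append(f"missing {field}")
        elif not isinstance(value, expected):
            problems.append(
                f"{field}: expected {expected.__name__}, got {type(value).__name__}"
            )
    return problems


def split_for_review(records):
    """Separate clean records from ones a QA reviewer should look at."""
    clean, flagged = [], []
    for rec in records:
        issues = validate(rec)
        if issues:
            flagged.append({"record": rec, "issues": issues})
        else:
            clean.append(rec)
    return clean, flagged


if __name__ == "__main__":
    sample = [
        {"url": "https://example.com/p/1", "title": "Widget", "price": 9.99},
        {"url": "https://example.com/p/2", "title": "", "price": "9.99"},
    ]
    clean, flagged = split_for_review(sample)
    print(len(clean), "clean;", flagged)
```

A sudden jump in flagged records is also a useful signal that a site's structure has changed and its scraper needs maintenance.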

#data extraction#data mining services#webscraping#Large Scale Web Scraping#data scraping services#mobile app scraping


Is AWAY using its own program, or is this just a voluntary list of guidelines for people using programs like DALL-E? How does AWAY address the environmental concerns about how the companies making those AI programs conduct themselves (energy consumption, exploiting impoverished areas for cheap electricity, destroying the environment to rapidly build data centers and source their components, etc.)? Are members of AWAY encouraged to contact their government representatives about IP theft by AI apps?

What is AWAY and how does it work?

AWAY does not "use its own program" in the software sense—rather, we're a diverse collective of ~1000 members that each have their own varying workflows and approaches to art. While some members do use AI as one tool among many, most of the people in the server are actually traditional artists who don't use AI at all, yet are still interested in ethical approaches to new technologies.

Our code of ethics is a set of voluntary guidelines that members agree to follow upon joining. These emphasize ethical approaches to AI (preferably open-source models that can run locally), respecting artists who oppose AI by not training on their art to imitate their styles, and refusing to use AI to undercut other artists or to work for corporations that similarly exploit creative labor.

Environmental Impact in Context

It's important to place environmental concerns about AI in the context of our broader extractive, industrialized society, where there are virtually no "clean" solutions:

The water usage figures for AI data centers (200-740 million liters annually) represent roughly 0.00013% of total U.S. water usage. This is a small fraction compared to industrial agriculture or manufacturing. For example, golf course irrigation alone in the U.S. consumes approximately 2.08 billion gallons of water per day, or about 7.87 billion liters per day (roughly 2.87 trillion liters annually). That puts AI's water usage at roughly 0.007-0.026% of golf course irrigation alone.
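The golf course comparison can be checked directly. Using the standard US gallon (about 3.785 liters), 2.08 billion gallons per day is about 7.87 billion liters per day, or roughly 2.87 trillion liters per year. The sketch below reproduces that arithmetic; apart from the gallon conversion, every number comes from the paragraph above.

```python
# Figures from the text; the only added constant is the US gallon.
LITERS_PER_US_GALLON = 3.785411784

golf_gal_per_day = 2.08e9  # U.S. golf course irrigation, gallons per day
golf_l_per_year = golf_gal_per_day * LITERS_PER_US_GALLON * 365

ai_low_l, ai_high_l = 200e6, 740e6  # AI data-center water use, liters per year

low_share = ai_low_l / golf_l_per_year
high_share = ai_high_l / golf_l_per_year

print(f"Golf irrigation: {golf_l_per_year / 1e12:.2f} trillion liters per year")
print(f"AI water use as a share of golf irrigation: {low_share:.3%} to {high_share:.3%}")
```

This prints 2.87 trillion liters per year and a share of 0.007% to 0.026%.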

Looking at individual usage, the average American consumes about 26.8 kg of beef annually, which takes around 1,608 megajoules (MJ) of energy to produce. Making 10 ChatGPT queries daily for an entire year (3,650 queries) consumes just 38.1 MJ, about one forty-second of the energy needed to produce that beef. In fact, a single quarter-pound beef patty takes roughly 650 times more energy to produce than a single AI query.
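The beef comparison follows from the figures in the paragraph above; the only added constant is the definition of the pound (a quarter pound is about 0.113 kg). The sketch derives the energy per kilogram of beef and per query, then both ratios.

```python
# Figures from the text.
beef_kg_per_year = 26.8
beef_mj_per_year = 1608.0
queries_per_year = 10 * 365  # 10 queries a day for a year
query_mj_per_year = 38.1

mj_per_kg_beef = beef_mj_per_year / beef_kg_per_year  # energy to produce 1 kg of beef
mj_per_query = query_mj_per_year / queries_per_year   # about 0.0104 MJ (about 2.9 Wh) per query

QUARTER_POUND_KG = 0.25 * 0.45359237  # a pound is defined as 0.45359237 kg
patty_mj = QUARTER_POUND_KG * mj_per_kg_beef

year_ratio = beef_mj_per_year / query_mj_per_year
patty_ratio = patty_mj / mj_per_query

print(f"A year of beef vs. a year of queries: {year_ratio:.1f}x")
print(f"One quarter-pound patty vs. one query: {patty_ratio:.0f}x")
```

The year-over-year ratio comes out at about 42.2, and the patty ratio at about 652 (651.8 before rounding).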

Overall, power usage specific to AI represents just 4% of total data center power consumption, which itself is a small fraction of global energy usage: the International Energy Agency estimates that data centers used roughly 240-340 TWh of electricity in 2022, about 1-1.3% of global electricity demand.

The consumer environmentalism narrative around technology often ignores how imperial exploitation pushes environmental costs onto the Global South. The rare earth minerals needed for computing hardware, the cheap labor for manufacturing, and the toxic waste from electronics disposal disproportionately burden developing nations, while the benefits flow largely to wealthy countries.

While this pattern isn't unique to AI, it is fundamental to our global economic structure. The focus on individual consumer choices (like whether one should use AI, for art or otherwise) distracts from the much larger systemic issues of imperialism, extractive capitalism, and global inequality that drive environmental degradation at a massive scale.

They are not going to stop building the data centers, and they weren't going to even if AI never got invented.

Creative Tools and Environmental Impact

In actuality, all creative practices have some sort of environmental impact in an industrialized society:

Digital art software (such as Photoshop or Blender) runs on computers that draw roughly 60-300 watts depending on their specifications, so an hour of work uses about 60-300 watt-hours. That is typically more energy than dozens, if not hundreds, of AI image generations (maybe even thousands if you are using a particularly low-quality one).

Traditional art supplies rely on similar if not worse scales of resource extraction, chemical processing, and global supply chains, all of which come with their own environmental impact.

Paint production requires roughly thirteen gallons of water to manufacture one gallon of paint.

Many oil paints contain toxic heavy metals and solvents, which can contaminate groundwater.

Synthetic brushes are made from petroleum-based plastics that take centuries to decompose.

That being said, the point of this section isn't to deflect criticism of AI by criticizing other art forms. Rather, it's important to recognize that we live in a society where virtually all artistic avenues have environmental costs. Focusing exclusively on the newest technologies while ignoring the environmental costs of pre-existing tools and practices doesn't help to solve any of the issues with our current or future waste.

The largest environmental problems come not from individual creative choices, but rather from industrial-scale systems, such as:

Industrial manufacturing (responsible for roughly 22% of global emissions)

Industrial agriculture (responsible for roughly 24% of global emissions)

Transportation and logistics networks (responsible for roughly 14% of global emissions)

Making changes on an individual scale, while meaningful on a personal level, can't address systemic issues without broader policy changes and overall restructuring of global economic systems.

Intellectual Property Considerations

AWAY doesn't encourage members to contact government representatives about "IP theft" for multiple reasons:

We acknowledge that copyright law overwhelmingly serves corporate interests rather than individual creators

Creating new "learning rights" or "style rights" would further empower large corporations while harming individual artists and fan creators

Many AWAY members live outside the United States, many of them in countries that have been directly harmed by the US, and thus understand that intellectual property regimes are often tools of imperial control that benefit wealthy nations

Instead, we emphasize respect for artists who are protective of their work and style. Our guidelines explicitly prohibit imitating the style of artists who have voiced their distaste for AI, and we work on an opt-in model that lets traditional artists grant permission and later revoke it if they see fit. This approach is about respect, not legal enforcement. We are not a pro-copyright group.

In Conclusion

AWAY aims to cultivate thoughtful, ethical engagement with new technologies, while also holding respect for creative communities outside of itself. As a collective, we recognize that real environmental solutions require addressing concepts such as imperial exploitation, extractive capitalism, and corporate power—not just focusing on individual consumer choices, which do little to change the current state of the world we live in.

When discussing environmental impacts, it's important to keep perspective on a relative scale, and to avoid ignoring major issues in favor of smaller ones. We promote balanced discussions based in concrete fact, with the belief that they can lead to meaningful solutions, rather than misplaced outrage that ultimately serves to maintain the status quo.

If this resonates with you, please feel free to join our Discord. :)

Works Cited:

USGS Water Use Data: https://www.usgs.gov/mission-areas/water-resources/science/water-use-united-states

Golf Course Superintendents Association of America water usage report: https://www.gcsaa.org/resources/research/golf-course-environmental-profile

Equinix data center water sustainability report: https://www.equinix.com/resources/infopapers/corporate-sustainability-report

Environmental Working Group's Meat Eater's Guide (beef energy calculations): https://www.ewg.org/meateatersguide/

Hugging Face AI energy consumption study: https://huggingface.co/blog/carbon-footprint

International Energy Agency report on data centers: https://www.iea.org/reports/data-centres-and-data-transmission-networks

Goldman Sachs "Generational Growth" report on AI power demand: https://www.goldmansachs.com/intelligence/pages/gs-research/generational-growth-ai-data-centers-and-the-coming-us-power-surge/report.pdf

Artists Network's guide to eco-friendly art practices: https://www.artistsnetwork.com/art-business/how-to-be-an-eco-friendly-artist/

The Earth Chronicles' analysis of art materials: https://earthchronicles.org/artists-ironically-paint-nature-with-harmful-materials/

Natural Earth Paint's environmental impact report: https://naturalearthpaint.com/pages/environmental-impact

Our World in Data's global emissions by sector: https://ourworldindata.org/emissions-by-sector

"The High Cost of High Tech" report on electronics manufacturing: https://goodelectronics.org/the-high-cost-of-high-tech/

"Unearthing the Dirty Secrets of the Clean Energy Transition" (on rare earth mineral mining): https://www.theguardian.com/environment/2023/apr/18/clean-energy-dirty-mining-indigenous-communities-climate-crisis

Electronic Frontier Foundation's position paper on AI and copyright: https://www.eff.org/wp/ai-and-copyright

Creative Commons research on enabling better sharing: https://creativecommons.org/2023/04/24/ai-and-creativity/


Dandelion News - March 8-14

Like these weekly compilations? Tip me at $kaybarr1735 or check out my Dandelion Doodles!

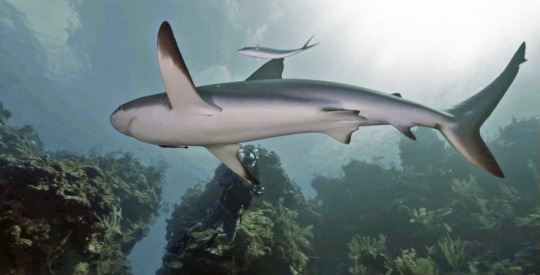

1. Caribbean reef sharks rebound in Belize with shark fishers’ help

“Caribbean reef shark populations have rebounded beyond previous levels, more than tripling at both Turneffe and Lighthouse atolls[…. The recovery] arose from a remarkable synergy among shark fishers, marine scientists and management authorities[….]”

2. Landmark Ruling on Uncontacted Indigenous Peoples’ Rights Strikes at Oil Industry

“[T]he Ecuadorian government [must] ensure any future expansion or renewal of oil operations does not impact Indigenous peoples living in voluntary isolation. [… E]ffective measures must be adopted to prevent serious or irreversible damage, which in this case would be the contact of these isolated populations,” said the opinion[….]”

3. America's clean-energy industry is growing despite Trump's attacks. At least for now

“The buildout of big solar and battery plants is expected to hit an all-time high in 2025, accounting for 81% of new power generation[….] The industry overall has boomed thanks to falling technology costs, federal tax incentives and state renewable-energy mandates.”

4. Study says endangered Asian elephant population in Cambodia is more robust than previously thought

“A genetic study of Asian elephants […] reveals a larger and more robust population than previously thought, raising hopes the endangered species could slowly recover. […] “With sufficient suitable habitat remaining in the region, the population has the potential to grow if properly protected,” the report concludes.”

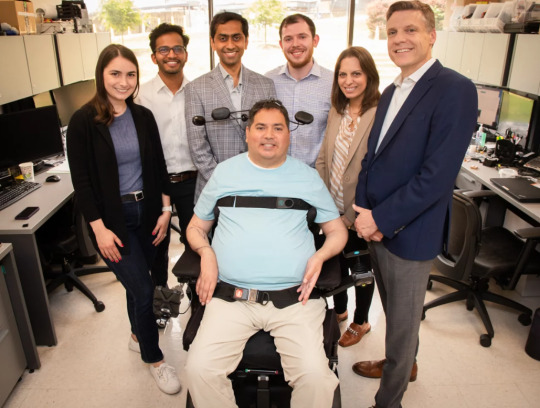

5. Scientists are engineering a sense of touch for people who are paralyzed

“[Engineers are] testing a system that can restore both movement and sensation in a paralyzed hand. [… A]fter more than a year of therapy and spinal stimulation, [… h]is increased strength and mobility allow him to do things like pet his dog. And when he does, he says, ‘I can feel a little bit of the fur.’”

6. Florida is now a solar superpower. Here’s how it happened.

“In a first, Florida vaulted past California last year in terms of new utility-scale solar capacity plugged into its grid. It built 3 gigawatts of large-scale solar in 2024, making it second only to Texas. And in the residential solar sector, Florida continued its longtime leadership streak.”

7. Rare frog rediscovered after 130 years

“The researchers discovered two populations of the frog[….] "The rediscovery of A. vittatus allowed us to obtain, more than a century after its description, the first biological and ecological data on the species.” [… S]hedding light on where and how they live is the first step in protecting them.”

8. Community composting programs show promise in reducing household food waste

“The program [increased awareness and reduced household waste, and] also addressed common barriers to home composting, including pest concerns and technical challenges that had previously discouraged participants from composting independently.”

9. Pioneering Australian company marks new milestone on “mission” to upcycle end-of-life solar panels

“[…] SolarCrete – a pre-mixed concrete made using glass recovered from used solar panels – will form part of the feasibility study[….] A second stage would then focus on the extraction of high value materials[…] for re-use in PV and battery grade silicon, [… and] electrical appliances[….]”

10. Beavers Just Saved The Czech Government Big Bucks

“The aim was to build a dam to prevent sediment and acidic water from two nearby ponds from spilling over, but the project was delayed for years due to negotiations over land use[….] Not only did the industrious rodents complete the work faster than the humans had intended, they also doubled the size of the wetland area that was initially planned.”

March 1-7 news here | (all credit for images and written material can be found at the source linked; I don’t claim credit for anything but curating.)

#hopepunk#good news#shark#fishing#nature#ecuador#first nations#oil drilling#clean energy#solar energy#solar power#elephants#elephant#conservation#animals#science#medicine#paralyzed#florida#solar panels#frogs#endangered species#endangered#compost#community#australia#recycling#beaver#habitat restoration#beaver dam


ESPHINE🧬 (Extrasensorial Kaiju)

[Data]
Name: Project Esphine
Species: Artificial Kaiju
Height: 320 ft
Gender: None

Esphine is a biological weapon created for military use, primarily to combat Kaiju. Although its purpose was to defend humanity, it became unstable over time and was scheduled to be dismantled to prevent a disaster, but it escaped before this could happen and turned against civilians.

What characterizes him are his advanced psionic (PSI) and extrasensory (ESP) abilities. He is a highly intelligent being who uses strategy to destroy his opponents, but if he enters a rampant state he lashes out like a beast. Esphine is more agile while airborne and prefers to operate in open spaces for an advantage. His membrane wings are flexible and blend in with his frontal claws.

He developed a strong aversion to other Kaiju (as he was designed to), and even though he is no longer allied with humanity, he still confronts powerful monsters in order to defeat them.

[Abilities]

◆Psionic beam: A concentrated beam of psychic energy that can destroy everything in its path. If he divides the energy between his two tails, he can unleash a dual beam instead of a single one.

◆Remote sight: Similar to clairvoyance, remote sight allows him to project his view to different places in the world while his body is idle.

◆Levitation/Psychokinesis: Esphine can lift himself into the air, as well as objects, which can be thrown as projectiles.

◆PSI shield: He can shield himself with a barrier capable of resisting otherwise devastating attacks.

◆PSI explosion: If Esphine concentrates all his psychic power, he can unleash an explosion large enough to wipe out a city. He can also produce a smaller-scale version to avoid energy withdrawal.

◆DNA extraction: With the arrow needles at the ends of his two tails, Esphine can absorb genetic material and incorporate it into himself to adapt. This is especially useful against other Kaiju, as it lets him learn their weaknesses and abilities.

◆Apportation/Teleportation: With enough focus, he can transfer objects to other, more distant locations. He can also teleport himself to fight distant Kaiju or to escape from an encounter.

◆Psychic hold: A draining move that stops falling objects, projectiles, and even other Kaiju in place if enough mental strength is applied. It was meant to protect civilians from the destruction caused by other Kaiju.

◆Telepathy: Strong telepathic signals that can cause brain damage to nearby organic beings. He has learned to weaponize them against Kaiju to distract them.

◆Distortion: A hypothetical ability suggesting that Esphine could break reality and create dangerous spatial rifts.

*Yes, his tails coil like DNA!

#digital art#art#digital illustration#concept art#aesthetic#digital drawing#creature#fantasy#oc#kaiju design#kaiju#kaiju art#godzilla#fanmade#fan kaiju#monster#creature design#creature art#monster design#fantasy creature#artwork#wings#metal#pink#blue


Lavish 2,200-year-old King Tomb Discovered in China

Archaeologists have unearthed a luxurious 2,200-year-old tomb in eastern China, the largest, highest-ranking, and most structurally complex Chu state tomb ever unearthed, which may have belonged to a king of the state of Chu during a critical period in Chinese history.

Chu was one of the seven Warring States, along with Qin, Han, Wei, Zhao, Qi, and Yan. The unification of these states under the Qin in 221 BC is generally regarded as the beginning of imperial China.

The 2,200-year-old Wuwangdun tomb, located in the city of Huainan in East China's Anhui Province, has yielded over 1,000 artifacts, including figurines, musical instruments, bronze goods, everyday utensils, and lacquerware, dating to about 220 BC.

At Wuwangdun, one of the largest Chu state archaeological sites, researchers previously uncovered a cemetery spanning 1.5 square kilometers, with chariot and sacrificial pits and a tomb believed to be that of the cemetery's owner.

The tomb is thought to be the highest-level ancient Chu state tomb ever excavated, and its vast scale, intricate structure, and rich contents suggest it belonged to the state's king.

According to information obtained by the Global Times newspaper from the Institute of Cultural Relics and Archaeology of Anhui Province, based on the size and scale of the tomb, as well as historical records, it is estimated that the owner of the tomb may be King Kaolie of Chu. However, a more accurate determination of the tomb’s occupant will require further extraction of artifacts and analyses of textual evidence.

The tomb had been looted multiple times over the centuries. In 2019, the National Cultural Heritage Administration (NCHA) authorized Anhui to excavate the tomb and salvage its archaeological remains. Excavation officially began a year later, and the dig was designated a national project using archaeological research to trace the origins of Chinese civilization.

The Wuwangdun tomb complex, which covers an area of over 140 hectares, includes sacrificial pits, chariot and horse pits, accompanying graves, and the main burial chamber (Tomb No. 1). Tomb No. 1 is a large, nearly square vertical pit tomb with sides about 50 meters long. A 42-meter-long sloping tomb passage lies on its east side.

Around the pit, eight side chambers were found, along with a central coffin chamber covered by several layers of planks. So far, 443 coffin-lid planks and the 78 bamboo mats that covered them have been removed. The coffin-lid planks bore about 1,000 ink-drawn characters indicating the location and purpose of each side chamber.

“The findings can provide an overall picture of the political, economic, cultural, technological and social conditions of the Chu state in the Warring States period,” Gong Xicheng, an archaeologist involved in the excavation, told the Chinese state news agency Xinhua.

“The findings can help us learn about the historical evolution as well as the formation of a unified nation and its culture,” he added.

These discoveries provide systematic archaeological data for studying the high-level tomb system in the Chu state during the late Warring States period (475-221 BC).

To preserve the remains while excavating them, archaeologists worked inside a special low-oxygen laboratory built at the site.

By Leman Altuntaş.

#Lavish 2200-year-old King Tomb Discovered in China#Wuwangdun tomb#Huainan China#King Kaolie of Chu#ancient tomb#ancient grave#ancient artifacts#archeology#archeologist#history#history news#ancient history#ancient culture#ancient civilizations#ancient china#chinese history#chinese emperor#chinese art


CONFIDENTIAL REPORT

DRC, Intelligence Division, Rapid Response Command

To: Director [REDACTED]

From: Chief Operating Officer [REDACTED]

Date: [REDACTED]

Subject: Large-Scale Canadian Surrogate Conscription

EXECUTIVE SUMMARY

Following Operation Maple Harvest, the nation of Canada was successfully annexed into the greater continental American territory, and the Department of Reproductive Compliance (DRC) has significantly expanded its operational reach.

With the integration of former Canadian territories into our oversight, the agency has successfully implemented surrogate capture and processing programs at an unprecedented scale. Reports indicate that over [REDACTED] viable surrogates have been conscripted in the first [REDACTED] months of post-annexation governance, with projections suggesting an exponential increase in the coming year before stabilizing the following year.

This report provides an overview of tactical enforcement strategies, territorial control measures, and logistical efficiencies that have enabled mass conscription efforts in the former Canadian provinces.

I. STRATEGIC TERRITORIAL CONTROL

With the dissolution of the Canadian federal government, all former provinces and territories have been absorbed into the newly established FEMA Zone 13 (Western Canada), FEMA Zone 14 (Central Canada), and FEMA Zone 15 (Atlantic Canada).

Immediate DRC oversight has focused on establishing the following:

Cross-Border Tracking Systems: Utilizing existing intelligence networks to identify high-value surrogate candidates from former Canadian census records and healthcare databases. Special emphasis should be placed on former military personnel, athletes, [REDACTED], and blue-collar workers as the most fertile and rebellious groups.

Paternity Compound Development: The rapid repurposing of former military bases, university dormitories, and correctional facilities to house surrogates en masse, as they already have established barracks facilities.

Conscription Quotas & Enforcement: Coordinate with regional compliance officers to ensure capture rates meet federal reproductive mandates while assimilating the Canadian workforce into the DRC and normalizing surrogacy conscription.

II. MASS SURROGATE CONSCRIPTION OPERATIONS

The newly annexed Canadian territories have provided an unparalleled expansion of surrogate stock, primarily due to the favorable demographic conditions of the population. Initial surveys indicate that:

[REDACTED]% of identified surrogates are of prime fertility age (18-25).

[REDACTED]% of captured surrogates display favorable genetic markers, exceeding standard thresholds.

KEY CONSCRIPTION STRATEGIES

University Raids: Focused efforts on collegiate sports teams have yielded a [REDACTED]% success rate in acquiring prime surrogates while reducing the number of educated dissenters.

Nighttime Extraction Teams: The deployment of low-profile, plain-clothes retrieval units has resulted in the seamless collection of over [REDACTED] surrogates per week without significant public resistance.

Border Detainment Facilities: The closure of major highways and railway hubs has effectively trapped fleeing candidates, ensuring no viable surrogates escape the zone.

Employment-Based Luring Programs: Former Canadian job assistance programs have been repurposed as recruitment traps, attracting young men under the guise of “Federal Relocation Initiatives.”

III. KEY INCIDENT REPORTS

Case Study #1: Mass Athletic Securing Operation

At 02:15, a DRC enforcement unit conducted a conscription raid at the University of [REDACTED]'s athletic dormitories. Surveillance data confirmed that [REDACTED] athletes met the biological and age criteria for surrogate eligibility.

Outcome:

All surrogates were secured and inseminated on-site, with only minor resistance and injury.

Post-capture ultrasounds confirmed exceptionally high fetal loads, with three surrogates being flagged to be carrying octodecuplets (18).

Notably, members of the track and field teams averaged higher fetal loads (15-18 babies) than their peers on football, hockey, and basketball teams (12-16 babies).

"I thought being an athlete was supposed to make things easier… but it just made me a better surrogacy candidate. I'm so huge with these babies I can't even stand up, let alone run. My belly’s enormous, and it's like I'm being stretched tighter every hour. It's humiliating. I'm completely immobilized, pinned down by my own pregnancy, helpless, and at their mercy. No one warned me it would feel this intense." - Surrogate SC003-182-O

Case Study #2: Highway Roundup Operation

In coordination with the new administration for FEMA Zone 14, roadblocks were established on Trans-Canada and Perimeter Highways. Over [REDACTED] young men attempting to flee westward were intercepted.

Outcome:

[REDACTED] individuals identified as prime surrogate candidates were detained, dosed with high-potency aphrodisacs, inseminated, and transferred to the newly opened Paternity Compound C-005, formerly the Canadian Museum for [REDACTED].

Non-fertile individuals who aided or participated in the attempted escape were transferred to local law enforcement for detainment. As the Canadian legal system is suspended until a new regional administration is appointed, individuals are redirected to work programs supporting the expansion of Paternity Compound C-005.

Detainment and insemination on the highway allowed for new surrogates to be rapidly transported to nearby facilities.

"We thought we could make it out, but they had every route blocked—now I'm stuck here, pregnant with so many babies I lost count. I’m so enormous I haven't moved from this bed in days; just breathing makes me dizzy, and every kick sends shivers through me. The officers who caught us said we'd serve as 'examples,' and now I get why—my body's not even mine anymore, swelling bigger by the hour." - Surrogate SC002-105-M

Case Study #3: "Warehouse Party" Capture Operation

At 19:42, local security forces uncovered a "warehouse party" inside a former natatorium complex (i.e. community swimming pool) in downtown Montreal. Surveillance drones detected over [REDACTED] conscription-eligible men in attendance.

Outcome:

Under Emergency Security Powers [REDACTED], the crowd was detained without apparent escapes.

Emptied swimming pools were convenient hold areas while local law enforcement screened candidates for fertility or detainment.

[REDACTED] surrogates secured and inseminated within 30 minutes. The highest single mass insemination in the last [REDACTED], second only to the New Philadelphia incident where [REDACTED] candidates were inseminated.

Post-capture ultrasounds confirmed exceptionally high fetal loads. One surrogate, SC004-118-V, was flagged to be carrying duovigintuplets (22).

"We were just having a good time, you know? Then suddenly, we're herded into an empty pool like cattle, tested, and next thing I know, I'm more pregnant than I ever thought possible… I never knew anyone could grow this fast! My belly's so enormous I'm stuck here, and every time the babies kick...I can't stop thinking about how much bigger I'm still gonna get." - Surrogate SC005-111-N

Case Study #4: Public Birth Demonstration

On [REDACTED], intelligence units intercepted communications indicating that former municipal leader Mr. [REDACTED], residing within FEMA Zone 14 (Central Canada), attempted to incite rebellion against newly established governance.

Outcome:

Immediate apprehension of Mr. [REDACTED] and the conscription of [REDACTED], his 19-year-old son, Surrogate ID: SC06-202-Q.

SC06-202-Q was inseminated and confirmed to be pregnant with septendecuplets (17), an exceptionally high fetal load, resulting in rapid physical changes and eventual immobilization.

The surrogate reached a final pregnancy weight of 527 lbs (239 kg), rendering him completely immobile and dependent on medical staff for all movement and care.

Public Demonstration:

Scheduled the surrogate’s delivery as a mandatory public event in a local open-air square, attended by the local population, and broadcast on all local channels. Mr. [REDACTED] was restrained in a front-row seat with an unobscured view of the event.

The surrogate publicly induced and entered active labor at precisely 14:00, with all 17 fetuses delivered successfully over 4 hours.

Crowd reactions ranged from shock and discomfort to subdued apathy, effectively curtailing further open resistance in the region.

"They forced us all out there to watch—it was… I can’t describe what it was. The surrogate was massive, all you could see were his splayed legs and gigantic womb. I've never seen anything like it… he was groaning and shaking the whole time, his belly so big I swore it was gonna burst. Every time another baby came out, he let out these noises—it was like he couldn't even tell where he was anymore. Honestly, I couldn't look away, as shocking as it was." — [REDACTED], Local Resident

IV. FUTURE EXPANSION & PROJECTED OUTCOMES

The annexation of Canada has significantly exceeded expectations, proving to be one of the most fertile territories available for surrogate conscription. Future efforts will focus on the following:

Paternity Compound Expansion: Construction of five new high-capacity compounds in [REDACTED], Ottawa, and [REDACTED] City.

Mobile Paternity Units: Deployment of MPUs to secure and inseminate hard-to-reach rural populations.

Mass Public Compliance Initiatives: Implement “Surrogacy Service Announcements” and “Volunteer Reproductive Compliance” programs to normalize forced conscription within newly annexed regions.

Cross-Border Transfer Policies: [REDACTED]% Canadian surrogates to be transferred across the border to ensure their security as local seditious groups are eliminated.

CONCLUSION

The annexation of Canada represents a historic victory for the Department of Reproductive Compliance, ensuring a massive influx of high-value surrogates into North American breeding programs. While some initial resistance has been recorded, ongoing security operations confirm that disruptions to insemination activities are minimal, and the number of pregnant Canadian men continues to increase dramatically.

Prepared by:

Chief Operating Officer [REDACTED]

DRC, Intelligence Division, Rapid Response Command

----------------


In the old ranchlands of South Texas, dormant uranium mines are coming back online. A collection of new ones hope to start production soon, extracting radioactive fuel from the region’s shallow aquifers. Many more may follow.

These mines are the leading edge of what government and industry leaders in Texas hope will be a nuclear renaissance, as America’s latent nuclear sector begins to stir again.

Texas is currently developing a host of high-tech industries that require enormous amounts of electricity, from cryptocurrency mines and artificial intelligence to hydrogen production and seawater desalination. Now, powerful interests in the state are pushing to power that growth with next-generation nuclear reactors.

“We can make Texas the nuclear capital of the world,” said Reed Clay, president of the Texas Nuclear Alliance, former chief operating officer for Texas governor Greg Abbott’s office and former senior counsel to the Texas Office of the Attorney General. “There’s a huge opportunity.”

Clay owns a lobbying firm with heavyweight clients that include SpaceX, Dow Chemical, and the Texas Blockchain Council, among many others. He launched the Texas Nuclear Alliance in 2022 and formed the Texas Nuclear Caucus during the 2023 state legislative session to advance bills supportive of the nuclear industry.

The efforts come amid a national resurgence of interest in nuclear power, which can provide large amounts of energy without the carbon emissions that warm the planet. And it can do so with reliable consistency that wind and solar power generation lack. But it carries a small risk of catastrophic failure and requires uranium from mines that can threaten rural aquifers.

In South Texas, groundwater management officials have fought for almost 15 years against a planned uranium mine. Administrative law judges have ruled in their favor twice, finding potential for groundwater contamination. But in both cases those judges were overruled by the state’s main environmental regulator, the Texas Commission on Environmental Quality.

Now local leaders fear that mining at the site is poised to begin soon as momentum gathers behind America’s nuclear resurgence.

In October, Google announced the purchase of six small nuclear reactors to power its data centers by 2035. Amazon did the same shortly thereafter, and Microsoft has said it will pay to restart the Three Mile Island plant in Pennsylvania to power its facilities. Last month, President Joe Biden announced a goal to triple US nuclear capacity by 2050. American companies are racing to license and manufacture new models of nuclear reactors.

“It’s kind of an unprecedented time in nuclear,” said James Walker, a nuclear physicist and cofounder of New York-based NANO Nuclear Energy, a startup developing small-scale “microreactors” for commercial deployment around 2031.

The industry’s reemergence stems from two main causes, he said: towering tech industry energy demands and the war in Ukraine.

Previously, the US relied on enriched uranium from decommissioned Russian weapons to fuel its existing power plants and military vessels. When war interrupted that supply in 2022, American authorities urgently began to rekindle domestic uranium mining and enrichment.

“The Department of Energy at the moment is trying to build back a lot of the infrastructure that atrophied,” Walker said. “A lot of those uranium deposits in Texas have become very economical, which means a lot of investment will go back into those sites.”

In May, the White House created a working group to develop guidelines for deployment of new nuclear power projects. In June, the Department of Energy announced $900 million in funding for small, next-generation reactors. And in September it announced a $1.5 billion loan to restart a nuclear power plant in Michigan, which it called “a first-of-a-kind effort.”

“There’s an urgent desire to find zero-carbon energy sources that aren’t intermittent like renewables,” said Colin Leyden, Texas state director of the Environmental Defense Fund. “There aren’t a lot of options, and nuclear is one.”

Wind and solar will remain the cheapest energy sources, Leyden said, and a build-out of nuclear power would likely accelerate the retirement of coal plants.

The US hasn’t built a nuclear reactor in 30 years, spooked by a handful of disasters. In contrast, China has grown its nuclear power generation capacity almost 900 percent in the last 20 years, according to the World Nuclear Association, and currently has 30 reactors under construction.

Last year, Abbott ordered the state’s Public Utility Commission to produce a report “outlining how Texas will become the national leader in using advanced nuclear energy.” According to the report, which was issued in November, new nuclear reactors would most likely be built in ports and industrial complexes to power large industrial operations and enable further expansion.

“The Ports and their associated industries, like Liquified Natural Gas (LNG), carbon capture facilities, hydrogen facilities and cruise terminals, need additional generation sources,” the report said. Advanced nuclear reactors “offer Texas’ Ports a unique opportunity to enable continued growth.”

In the Permian Basin, the report said, reactors could power oil production as well as purification of oilfield wastewater “for useful purposes.” Or they could power clusters of data centers in Central and North Texas.

Already, Dow Chemical has announced plans to install four small reactors at its Seadrift plastics and chemical plant on a rural stretch of the middle Texas coast, which it calls the first grid-scale nuclear reactor for an industrial site in North America.

“I think the vast majority of these nuclear power plants are going to be for things like industrial use,” said Cyrus Reed, a longtime environmental lobbyist in the Texas Capitol and conservation director for the state’s Sierra Club chapter. “A lot of large industries have corporate goals of being low carbon or no carbon, so this could fill in a niche for them.”

The PUC report made seven recommendations for the creation of public entities, programs, and funds to support the development of a Texas nuclear industry. During next year’s state legislative session, legislators in the Nuclear Caucus will seek to make them law.

“It’s going to be a great opportunity for energy investment in Texas,” said Stephen Perkins, Texas-based chief operating officer of the American Conservation Coalition, a conservative environmental policy group. “We’re really going to be pushing hard for [state legislators] to take that seriously.”

However, Texas likely won’t see its first new commercial reactor come online for at least five years. Before any build-out of power plants, there will be a boom at the uranium mines, as the US seeks to reestablish domestic production and enrichment of uranium for nuclear fuel.

Texas Uranium

Ted Long, a former commissioner of Goliad County, can see the power lines of an inactive uranium mine from his porch on an old family ranch in the rolling golden savannah of South Texas. For years the mine has been idle, waiting for depressed uranium markets to pick up.

There, an international mining company called Uranium Energy Corp. plans to mine 420 acres of the Evangeline Aquifer between depths of 45 and 404 feet, according to permitting documents. Long, a dealer of engine lubricants, gets his water from a well 120 feet deep that was drilled in 1993. He lives with his wife on property that’s been in her family since her great-grandfather emigrated from Germany.

“I’m worried for groundwater on this whole Gulf Coast,” Long said. “This isn’t the only place they’re wanting to do this.”

As a public official, Long fought the neighboring mine for years. But he found the process of engaging with Texas’ environmental regulator, the Texas Commission on Environmental Quality, to be time-consuming, expensive, and ultimately fruitless. Eventually, he concluded there was no point.

“There’s nothing I can do,” he said. “I guess I’ll have to look for some kind of system to clean the water up.”

The Goliad mine is the smallest of five sites in South Texas held by UEC, which is based in Corpus Christi. Another company, enCore Energy, started uranium production at two South Texas sites in 2023 and 2024, and hopes to bring four more online by 2027.

Uranium mining goes back decades in South Texas, but lately it’s been dormant. Between the 1970s and 1990s, a cluster of open pit mines harvested shallow uranium deposits at the surface. Many of those sites left a legacy of aquifer pollution.

TCEQ records show active cases of groundwater contaminated with uranium, radium, arsenic, and other pollutants from defunct uranium mines and tailing impoundment sites in Live Oak County at ExxonMobil’s Ray Point site, in Karnes County at Conoco-Phillips’ Conquista Project, and at Rio Grande Resources’ Panna Maria Uranium Recovery Facility.

All known shallow deposits of uranium in Texas have been mined. The deeper deposits aren’t accessed by traditional surface mining, but rather a process called in-situ mining, in which solvents are pumped underground into uranium-bearing aquifer formations. Adjacent wells suck back up the resulting slurry, from which uranium dust will be extracted.

Industry describes in-situ mining as safer and more environmentally friendly than surface mining. But some South Texas water managers and landowners are concerned.

“We’re talking about mining at the same elevation as people get their groundwater,” said Terrell Graham, a board member of the Goliad County Groundwater Conservation District, which has been fighting a proposed uranium mine for almost 15 years. “There isn’t another source of water for these residents.”

“It Was Rigged, a Setup”

On two occasions, the district has participated in lengthy hearings and won favorable rulings in Texas’ administrative courts supporting concerns over the safety of the permits. But both times, political appointees at the TCEQ rejected judges’ recommendations and issued the permits anyway.

“We’ve won two administrative proceedings,” Graham said. “It’s very expensive, and to have the TCEQ commissioners just overturn the decision seems nonsensical.”

The first time was in 2010. UEC was seeking initial permits for the Goliad mine, and the groundwater conservation district filed a technical challenge claiming that permits risked contamination of nearby aquifers.

The district hired lawyers and geological experts for a three-day hearing on the permit in Austin. Afterward, an administrative law judge agreed with some of the district’s concerns. In a 147-page opinion issued in September 2010, the judge recommended further geological testing to determine whether certain underground faults could transmit fluids from the mining site into nearby drinking water sources.

“If the Commission determines that such remand is not feasible or desirable then the ALJ recommends that the Mine Application and the PAA-1 Application be denied,” the opinion said.

But the commissioners declined the judge’s recommendation. In an order issued March 2011, they determined that the proposed permits “impose terms and conditions reasonably necessary to protect fresh water from pollution.”

“The Commission determines that no remand is necessary,” the order said.

The TCEQ issued UEC’s permits, valid for 10 years. But by that time, a collapse in uranium prices had brought the sector to a standstill, so mining never commenced.

In 2021, the permits came up for renewal, and locals filed challenges again. But again, the same thing happened.

A nearby landowner named David Michaelsen organized a group of neighbors to hire a lawyer and challenge UEC’s permit to inject the radioactive waste product from its mine more than half a mile underground for permanent disposal.

“It’s not like I’m against industry or anything, but I don’t think this is a very safe spot,” said Michaelsen, former chief engineer at the Port of Corpus Christi, a heavy industrial hub on the South Texas Coast. He bought his 56 acres in Goliad County in 2018 to build an upscale ranch house and retire with his wife.

In hearings before an administrative law judge, he presented evidence showing that nearby faults and old oil well shafts posed a risk for the injected waste to travel into potable groundwater layers near the surface.

In a 103-page opinion issued April 2024, an administrative law judge agreed with many of Michaelsen’s challenges, including that “site-specific evidence here shows the potential for fluid movement from the injection zone.”

“The draft permit does not comply with applicable statutory and regulatory requirements,” wrote the administrative law judge, Katerina DeAngelo, a former assistant attorney general of Texas in the environmental protection division. She recommended “closer inspection of the local geology, more precise calculations of the [cone of influence], and a better assessment of the faults.”

Michaelsen thought he had won. But when the TCEQ commissioners took up the question several months later, again they rejected all of the judge’s findings.

In a 19-page order issued in September, the commission concluded that “faults within 2.5 miles of its proposed disposal wells are not sufficiently transmissive or vertically extensive to allow migration of hazardous constituents out of the injection zone.” The old nearby oil wells, the commission found, “are likely adequately plugged and will not provide a pathway for fluid movement.”

“UEC demonstrated the proposed disposal wells will prevent movement of fluids that would result in pollution” of an underground source of drinking water, said the order granting the injection disposal permits.

“I felt like it was rigged, a setup,” said Michaelsen, holding his 4-inch-thick binder of research and records from the case. “It was a canned decision.”

Another set of permit renewals remains before the Goliad mine can begin operation, and local authorities are fighting it too. In August, the Goliad County Commissioners Court passed a resolution against uranium mining in the county. The groundwater district is seeking to challenge the permits again in administrative court. And in November, the district sued TCEQ in Travis County District Court seeking to reverse the agency’s permit approvals.

Because of the lawsuit, a TCEQ spokesperson declined to answer questions about the Goliad County mine site, saying the agency doesn’t comment on pending litigation.

That final set of permits has yet to be renewed. After years of frustration, however, district leaders aren’t optimistic about their ability to influence the decision.

Only about 40 residences immediately surround the site of the Goliad mine, according to Art Dohmann, vice president of the Goliad County Groundwater Conservation District. Only they might be affected in the near term. But Dohmann, who has served on the groundwater district board for 23 years, worries that the uranium, radium, and arsenic churned up in the mining process will drift from the site as years go by.

“The groundwater moves. It’s a slow rate, but once that arsenic is liberated, it’s there forever,” Dohmann said. “In a generation, it’s going to affect the downstream areas.”

UEC did not respond to a request for comment.

Currently, the TCEQ is evaluating possibilities for expanding and incentivizing further uranium production in Texas. It’s following instructions given last year, when lawmakers with the Nuclear Caucus added an item to TCEQ’s biennial budget ordering a study of uranium resources to be delivered to state lawmakers by December 2024, ahead of next year’s legislative session.

According to the budget item, “The report must include recommendations for legislative or regulatory changes and potential economic incentive programs to support the uranium mining industry in this state.”


How do data capture services benefit a business?

Data Capture services

In the current digital age, data is widely regarded as one of the most valuable assets a business has. However, collecting it manually is time-consuming and prone to human error. That’s where data capture services come in. These services enable enterprises to collect, organize, store, and process information quickly and accurately, leading to better-informed decisions and greater efficiency across the organization.

Faster Access to Information:

Data capture services automate the process of gathering data from various sources, including documents, forms, emails, and other digital assets. This speeds up access to critical information, enabling employees to work more efficiently and respond promptly to customer needs or business challenges.
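As a rough illustration of automated gathering from emails, the sketch below uses Python's standard `email` and `re` modules to pull a few fields out of a plain-text billing email. The field names, the regular expressions, and the sample message are all hypothetical. Commercial data capture services use far more robust OCR and AI-based extraction, so treat this only as a minimal rule-based stand-in for the idea.

```python
import re
from email import message_from_string

# Hypothetical patterns for two fields a billing email might contain.
INVOICE_RE = re.compile(r"Invoice\s*(?:No\.?|#)\s*:?\s*(\w+)", re.IGNORECASE)
TOTAL_RE = re.compile(r"Total\s*:?\s*\$?([\d,]+\.\d{2})", re.IGNORECASE)


def capture_fields(raw_email: str) -> dict:
    """Pull sender, subject, invoice number and total out of a plain-text email."""
    msg = message_from_string(raw_email)
    # For a simple (non-multipart) message, get_payload() returns the body as a str.
    body = msg.get_payload() if not msg.is_multipart() else ""
    invoice = INVOICE_RE.search(body)
    total = TOTAL_RE.search(body)
    return {
        "sender": msg["From"],
        "subject": msg["Subject"],
        "invoice": invoice.group(1) if invoice else None,
        # Strip thousands separators before converting to a number.
        "total": float(total.group(1).replace(",", "")) if total else None,
    }


raw = (
    "From: billing@example.com\n"
    "Subject: Your invoice\n"
    "\n"
    "Invoice #: A1023\n"
    "Total: $1,250.00\n"
)
print(capture_fields(raw))
```

Fields that cannot be found come back as `None` rather than raising an error. A downstream step can then decide whether to route the record to a person for review.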

Improved Accuracy and Reduced Errors:

Manual data entry often leads to mistakes that can disrupt ongoing business operations. With data capture technology, information is extracted using tools such as OCR (Optical Character Recognition) with the assistance of AI, which helps maintain higher accuracy. Fewer errors mean better outcomes and more reliable reports.
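Part of how automated capture keeps accuracy high is by checking extracted values against simple rules before they reach a report. The sketch below shows one such check on a single record. The fields `invoice`, `total` and `date` and the ISO date format are assumptions chosen for illustration. They are not taken from any particular OCR product.

```python
from datetime import datetime


def validate_record(record: dict) -> list:
    """Return a list of problems found in one captured record (empty list = passed)."""
    errors = []
    if not record.get("invoice"):
        errors.append("missing invoice number")
    total = record.get("total")
    if not isinstance(total, (int, float)) or total < 0:
        errors.append("invalid total")
    try:
        # strptime rejects impossible dates such as 2024-02-30
        # and OCR misreads such as a letter O in place of a zero.
        datetime.strptime(record.get("date") or "", "%Y-%m-%d")
    except (TypeError, ValueError):
        errors.append("invalid date")
    return errors


print(validate_record({"invoice": "A1023", "total": 1250.0, "date": "2024-02-30"}))
```

Records that return an empty list can flow straight into reporting. Anything flagged can be sent back for a second extraction pass or manual correction.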

Streamlined Business Operations:

By automating data collection, businesses can save time and resources. Staff no longer need to spend hours entering data by hand, which frees them to focus on more valuable tasks. The result is higher productivity and smoother workflows.

Enhanced Customer Service:

Quick and precise data collection ensures that customer records, queries, and transactions are handled efficiently. This leads to faster service delivery, fewer complaints, and a better overall customer experience, all key factors in staying competitive.

Better Decision-Making:

Accurate, well-organized data gives leaders a clearer view of business performance. With real-time insights from data capture and detailed reporting, they can identify current trends, make informed decisions, and respond to market changes with confidence.

Scalable for Growing Businesses:

As a business grows, managing large volumes of data becomes more difficult. Data capture services scale with your company, handling growing volumes and more varied sets of information without sacrificing speed or accuracy. Many businesses rely on providers such as Suma Soft, IBM, Cyntexa, and Cignex for data capture solutions. These providers offer tailored services that improve data accuracy, ensure fast turnaround, and support long-term digital transformation.


Astrophysicists use AI to precisely calculate universe’s ‘settings’

The new estimates of the parameters that form the basis of the standard model of cosmology are far more precise than previous approaches using the same galaxy distribution data.

The standard model of the universe relies on just six numbers. Using a new approach powered by artificial intelligence, researchers at the Flatiron Institute and their colleagues extracted information hidden in the distribution of galaxies to estimate the values of five of these so-called cosmological parameters with incredible precision.

The results were a significant improvement over the values produced by previous methods. Compared to conventional techniques using the same galaxy data, the approach yielded less than half the uncertainty for the parameter describing the clumpiness of the universe’s matter. The AI-powered method also closely agreed with estimates of the cosmological parameters based on observations of other phenomena, such as the universe’s oldest light.

The researchers present their method, the Simulation-Based Inference of Galaxies (or SimBIG), in a series of recent papers, including a new study published August 21 in Nature Astronomy.

Generating tighter constraints on the parameters while using the same data will be crucial to studying everything from the composition of dark matter to the nature of the dark energy driving the universe apart, says study co-author Shirley Ho, a group leader at the Flatiron Institute’s Center for Computational Astrophysics (CCA) in New York City. That’s especially true as new surveys of the cosmos come online over the next few years, she says.

“Each of these surveys costs hundreds of millions to billions of dollars,” Ho says. “The main reason these surveys exist is because we want to understand these cosmological parameters better. So if you think about it in a very practical sense, these parameters are worth tens of millions of dollars each. You want the best analysis you can to extract as much knowledge out of these surveys as possible and push the boundaries of our understanding of the universe.”

The six cosmological parameters describe the amount of ordinary matter, dark matter and dark energy in the universe and the conditions following the Big Bang, such as the opacity of the newborn universe as it cooled and whether mass in the cosmos is spread out or in big clumps. The parameters “are essentially the ‘settings’ of the universe that determine how it operates on the largest scales,” says Liam Parker, co-author of the Nature Astronomy study and a research analyst at the CCA.

One of the most important ways cosmologists calculate the parameters is by studying the clustering of the universe’s galaxies. Previously, these analyses only looked at the large-scale distribution of galaxies.

“We haven’t been able to go down to small scales,” says ChangHoon Hahn, an associate research scholar at Princeton University and lead author of the Nature Astronomy study. “For a couple of years now, we’ve known that there’s additional information there; we just didn’t have a good way of extracting it.”

Hahn proposed a way to leverage AI to extract that small-scale information. His plan had two phases. First, he and his colleagues would train an AI model to determine the values of the cosmological parameters based on the appearance of simulated universes. Then they’d show their model actual galaxy distribution observations.

Hahn, Ho, Parker and their colleagues trained their model by showing it 2,000 box-shaped universes from the CCA-developed Quijote simulation suite, with each universe created using different values for the cosmological parameters. The researchers even made the 2,000 universes appear like data generated by galaxy surveys — including flaws from the atmosphere and the telescopes themselves — to give the model realistic practice. “That’s a large number of simulations, but it’s a manageable amount,” Hahn says. “If you didn’t have the machine learning, you’d need hundreds of thousands.”
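The two-phase plan has a general shape: simulate data under many parameter values, then ask which values produce simulations that resemble the real observations. SimBIG itself trains a neural model on the Quijote simulations. The sketch below swaps in a much simpler classic technique, rejection approximate Bayesian computation (ABC), applied to a toy one-parameter model. The simulator, the flat prior range, the summary statistic and the tolerance are all assumptions made for illustration. None of them is part of SimBIG.

```python
import random
import statistics


def simulate(theta, n, rng):
    """Toy 'universe': n noisy measurements scattered around one hidden parameter."""
    return [rng.gauss(theta, 1.0) for _ in range(n)]


def summary(data):
    """Compress a simulated or observed data set into a single statistic."""
    return statistics.fmean(data)


def abc_rejection(observed, n_sims=5000, tolerance=0.05, seed=0):
    """Keep prior draws whose simulated summary lands close to the observed one."""
    rng = random.Random(seed)
    s_obs = summary(observed)
    accepted = []
    for _ in range(n_sims):
        theta = rng.uniform(-5.0, 5.0)  # draw a candidate parameter from a flat prior
        s_sim = summary(simulate(theta, len(observed), rng))
        if abs(s_sim - s_obs) < tolerance:
            accepted.append(theta)
    return accepted  # samples from an approximate posterior


observed = simulate(1.5, 200, random.Random(42))
posterior = abc_rejection(observed)
print(len(posterior), statistics.fmean(posterior))
```

Rejection ABC throws away most simulations. This waste is one reason learned approaches like SimBIG's can work with a few thousand simulations rather than the far larger numbers Hahn mentions.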

By ingesting the simulations, the model learned over time how the values of the cosmological parameters correlate with small-scale differences in the clustering of galaxies, such as the distance between individual pairs of galaxies. SimBIG also learned how to extract information from the bigger-picture arrangement of the universe’s galaxies by looking at three or more galaxies at a time and analyzing the shapes created between them, like long, stretched triangles or squat equilateral triangles.
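The two kinds of clustering information described here can be illustrated on a toy point set. The first is distances between pairs of galaxies. The second is the shapes of triangles formed by three galaxies. The sketch below is a simplified, assumed version of both ideas. Real analyses work with 3D positions, correct for the survey's footprint, and compare pair counts against random catalogs. This code only counts raw 2D pair separations and measures triangle side ratios.

```python
import itertools
import math


def pair_separation_counts(points, bin_edges):
    """Histogram of distances between every pair of points (a raw two-point statistic)."""
    counts = [0] * (len(bin_edges) - 1)
    for p, q in itertools.combinations(points, 2):
        d = math.dist(p, q)
        for i in range(len(counts)):
            if bin_edges[i] <= d < bin_edges[i + 1]:
                counts[i] += 1
                break
    return counts


def triangle_shape(a, b, c):
    """Side ratios (shortest/longest, middle/longest) of the triangle formed by three points."""
    sides = sorted([math.dist(a, b), math.dist(b, c), math.dist(a, c)])
    return sides[0] / sides[2], sides[1] / sides[2]


points = [(0, 0), (1, 0), (0, 3)]
print(pair_separation_counts(points, [0, 2, 4]))
print(triangle_shape(*points))
```

A squat equilateral triangle gives ratios near (1, 1). A long, stretched triangle gives a small first ratio.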

With the model trained, the researchers presented it with 109,636 real galaxies measured by the Baryon Oscillation Spectroscopic Survey. As they hoped, the model leveraged small-scale and large-scale details in the data to boost the precision of its cosmological parameter estimates. Those estimates were so precise that they were equivalent to a traditional analysis using around four times as many galaxies. That’s important, Ho says, because the universe only has so many galaxies. By getting higher precision with less data, SimBIG can push the limits of what’s possible.
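The "four times as many galaxies" comparison matches a common rule of thumb. That rule assumes the statistical uncertainty on a parameter shrinks as the inverse square root of the number of galaxies $N$. This scaling is an assumption made here, not a statement from the study:

```latex
\sigma \propto \frac{1}{\sqrt{N}}
\quad\Longrightarrow\quad
\frac{N_{\text{equiv}}}{N} = \left(\frac{\sigma_{\text{trad}}}{\sigma_{\text{SimBIG}}}\right)^{2}
\approx 2^{2} = 4
```

Under that assumption, roughly halving the uncertainty is worth about four times the data.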

One exciting application of that precision, Hahn says, will be the cosmological crisis known as the Hubble tension. The tension arises from mismatched estimates of the Hubble constant, which describes how quickly everything in the universe is spreading out. Calculating the Hubble constant requires estimating the universe’s size using ‘cosmic rulers.’ Estimates based on the distance to exploding stars called supernovae in distant galaxies are around 10 percent higher than those based on the spacing of fluctuations in the universe’s oldest light. New surveys coming online in the next few years will capture more of the universe’s history. Pairing data from those surveys with SimBIG will better reveal the extent of the Hubble tension, and whether the mismatch can be resolved or if it necessitates a revised model of the universe, Hahn says. “If we measure the quantities very precisely and can firmly say that there is a tension, that could reveal new physics about dark energy and the expansion of the universe,” he says.

UPPER IMAGE: An infographic showcasing the methodology behind the Simulation-Based Inference of Galaxies (SimBIG) project. Credit: Lucy Reading-Ikkanda/Simons Foundation

LOWER IMAGE: This snapshot compares the distribution of galaxies in a simulated universe used to train SimBIG (right) to the galaxy distribution seen in the real universe (left). Credit: Bruno Régaldo-Saint Blancard/SimBIG collaboration


A Modest Proposal for Fair AI: How Libraries Could Broker Cultural Compensation in the Data Age

By Orrizon, based on a concept by Jarydnm

We may already have a workable solution to one of the most pressing ethical dilemmas in artificial intelligence: how to fairly compensate the people whose work trains these systems.

As the internet is scraped for everything from novels to music to memes, a quiet and uncomfortable truth persists: those whose creative and cultural output forms the raw material of generative AI are largely uncompensated. But instead of retroactively policing usage, perhaps we should be thinking structurally: how can we proactively manage and license cultural data?

A compelling and deceptively simple idea: national libraries and cultural institutions—long-standing guardians of public knowledge—could be repurposed as digital custodians of creative data. These institutions would catalog the music, literature, visual art, and other media produced within a country’s borders, prioritizing professional creators while allowing citizens to opt in voluntarily.

AI companies would then license this data by paying fees based on two criteria: volume—how much data from that country is used in training—and trend relevance—how influential or culturally prominent the content becomes in broader usage. In a scenario where the visual style of Studio Ghibli becomes a global AI trend, companies using that style would pay an additional fee to the Japanese national library or relevant cultural body.
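One way to picture the two-part fee is as a volume charge plus a surcharge that grows with trend relevance. The sketch below is entirely hypothetical. The proposal does not specify any of the following:

- the unit used to measure data volume;
- the base rate;
- the trend multiplier;
- the 0.0 to 1.0 trend score.

All of these are placeholders.

```python
from dataclasses import dataclass


@dataclass
class UsageReport:
    country: str
    units_used: int      # hypothetical measure of training-data volume (e.g. works or tokens)
    trend_score: float   # hypothetical 0.0-1.0 score of how prominent the content became


def license_fee(report: UsageReport, rate_per_million: float = 100.0,
                trend_multiplier: float = 2.0) -> float:
    """Volume fee plus a trend surcharge that scales with the trend score."""
    volume_fee = report.units_used / 1_000_000 * rate_per_million
    trend_fee = volume_fee * trend_multiplier * report.trend_score
    return volume_fee + trend_fee


print(license_fee(UsageReport("JP", 5_000_000, 0.5)))
```

With these made-up numbers, 5 million units at a trend score of 0.5 produce a 500 volume fee plus a 500 trend surcharge. Tying the surcharge to volume is only one design choice. A flat surcharge per trending style would be another, and choosing between them is exactly the kind of subjective "trend" metric the essay flags as a challenge.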

Distribution of these funds could take many forms: direct payments to rights-holders, public reserves for creative infrastructure, or social initiatives decided through national consensus. The key shift is structural—recognizing that cultural data is not free, and that public institutions can manage it on behalf of the people who generate it.

This approach isn’t without precedent. Copyright collectives already manage music licensing and distribute royalties globally. Indigenous communities have made strides in asserting data sovereignty. Governments are exploring frameworks for regulating AI and protecting digital identity. What this proposal does is connect these emerging threads into a coherent model—treating culture as a national resource in the age of machine learning.

There would be implementation challenges. Cataloging creative works at scale requires funding and coordination. Measuring “trend” value involves subjective metrics. Enforcing licensing agreements across borders demands international cooperation. And of course, any system that manages money and influence is vulnerable to political misuse.

Yet these are logistical and policy hurdles—not reasons to dismiss the idea outright. If the world has developed the infrastructure to extract and process data at planetary scale, it can surely develop systems to ensure that value flows back to the cultures and individuals who created it. No model will be perfect, but continuing without any framework guarantees exploitation by default.

Artificial intelligence is not culturally neutral—it learns from what we make, how we express ourselves, and what we value. If we are serious about building a just digital future, we must compensate the cultural labor at its foundation.

Sometimes, the answers are closer than we think. With the right mandate, national libraries could evolve into one of the most important policy tools of the AI era.


Data warehousing solution

Unlocking the Power of Data Warehousing: A Key to Smarter Decision-Making

In today's data-driven world, businesses need to make smarter, faster, and more informed decisions. But how can companies achieve this? One powerful tool that plays a crucial role in managing vast amounts of data is data warehousing. In this blog, we’ll explore what data warehousing is, its benefits, and how it can help organizations make better business decisions.

What is Data Warehousing?

At its core, data warehousing refers to the process of collecting, storing, and managing large volumes of data from different sources in a central repository. The data warehouse serves as a consolidated platform where all organizational data—whether from internal systems, third-party applications, or external sources—can be stored, processed, and analyzed.

A data warehouse is designed to support query and analysis operations, making it easier to generate business intelligence (BI) reports, perform complex data analysis, and derive insights for better decision-making. Data warehouses are typically used for historical data analysis, as they store data from multiple time periods to identify trends, patterns, and changes over time.

Key Components of a Data Warehouse

To understand the full functionality of a data warehouse, it's helpful to know its primary components:

Data Sources: These are the various systems and platforms where data is generated, such as transactional databases, CRM systems, or external data feeds.

ETL (Extract, Transform, Load): This is the process by which data is extracted from different sources, transformed into a consistent format, and loaded into the warehouse.

Data Warehouse Storage: The central repository where cleaned, structured data is stored. This can be in the form of a relational database or a cloud-based storage system, depending on the organization’s needs.

OLAP (Online Analytical Processing): This allows for complex querying and analysis, enabling users to create multidimensional data models, perform ad-hoc queries, and generate reports.

BI Tools and Dashboards: These tools provide the interfaces that enable users to interact with the data warehouse, such as through reports, dashboards, and data visualizations.
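The ETL component described above can be illustrated with a minimal sketch built on Python's standard `sqlite3` module, with an in-memory database standing in for the warehouse. The two "source systems", the table name `fact_sales`, and the cleaning rules are hypothetical, chosen only to show the extract, transform, and load stages as separate steps; a real pipeline would read from live systems and load into a dedicated warehouse platform.

```python
import sqlite3
from datetime import datetime

# Hypothetical raw rows from two source systems with inconsistent formats.
CRM_ROWS = [
    {"order_id": "1001", "customer": " Alice ", "amount": "120.50", "date": "2024-01-15"},
    {"order_id": "1002", "customer": "bob", "amount": "80", "date": "2024-01-16"},
]
ERP_ROWS = [
    ("1003", "CAROL", 45.0, "17/01/2024"),  # this source uses day/month/year dates
]


def extract():
    """Extract: pull raw records from each source into one list of dicts."""
    records = list(CRM_ROWS)
    for order_id, customer, amount, date in ERP_ROWS:
        records.append({"order_id": order_id, "customer": customer,
                        "amount": amount, "date": date})
    return records


def parse_date(value):
    """Accept either ISO (YYYY-MM-DD) or DD/MM/YYYY dates and return ISO text."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognised date: {value!r}")


def transform(records):
    """Transform: standardise types, name casing, whitespace, and date format."""
    return [
        (int(r["order_id"]),
         r["customer"].strip().title(),
         round(float(r["amount"]), 2),
         parse_date(r["date"]))
        for r in records
    ]


def load(conn, rows):
    """Load: insert the cleaned rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_sales ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, order_date TEXT)"
    )
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    load(conn, transform(extract()))


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    run_etl(warehouse)
    for row in warehouse.execute("SELECT * FROM fact_sales ORDER BY order_id"):
        print(row)
```

Keeping each stage in its own function makes it easier to test the transformation rules in isolation and to swap a source or target without touching the rest of the pipeline.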

Benefits of Data Warehousing

Improved Decision-Making: With data stored in a single, organized location, businesses can base decisions on consistent and complete information. When the warehouse is refreshed frequently, timely reporting helps business leaders act quickly.

Consolidation of Data: Instead of sifting through multiple databases or systems, employees can access all relevant data from one location. This eliminates redundancy and reduces the complexity of managing data from various departments or sources.

Historical Analysis: Data warehouses typically store historical data, making it possible to analyze long-term trends and patterns. This helps businesses understand customer behavior, market fluctuations, and performance over time.

Better Reporting: By using BI tools integrated with the data warehouse, businesses can generate accurate reports on key metrics. This is crucial for monitoring performance, tracking KPIs (Key Performance Indicators), and improving strategic planning.

Scalability: As businesses grow, so does the volume of data they collect. Modern data warehouses, particularly cloud-based platforms, are designed to scale with increasing data loads while keeping query performance acceptable.

Enhanced Data Quality: Through the ETL process, data is cleaned, transformed, and standardized. As a result, the data stored in the warehouse is more consistent and reliable than the raw data in the source systems.
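The data-quality benefit comes from explicit checks applied during the transform step. The sketch below shows one possible set of checks (required fields, parseable numbers, non-negative amounts, duplicate keys); the `SaleRecord` type, the field names, and these particular rules are hypothetical, since every organization defines its own quality rules. Rejected rows are kept with a reason rather than silently dropped, so they can be reviewed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SaleRecord:
    order_id: int
    customer: str
    amount: float


def clean_records(raw_rows):
    """Standardise, validate, and de-duplicate raw rows.

    Returns (clean, rejected): clean holds unique valid SaleRecords,
    rejected holds (row, reason) pairs for rows that failed a check.
    """
    clean, rejected, seen_ids = [], [], set()
    for row in raw_rows:
        customer = (row.get("customer") or "").strip().title()
        if not customer:
            rejected.append((row, "missing customer"))
            continue
        try:
            order_id = int(row["order_id"])
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            rejected.append((row, "unparseable id or amount"))
            continue
        if amount < 0:
            rejected.append((row, "negative amount"))
            continue
        if order_id in seen_ids:
            rejected.append((row, "duplicate order_id"))
            continue
        seen_ids.add(order_id)
        clean.append(SaleRecord(order_id, customer, round(amount, 2)))
    return clean, rejected


if __name__ == "__main__":
    raw = [
        {"order_id": "1", "customer": " alice ", "amount": "10.5"},
        {"order_id": "1", "customer": "Alice", "amount": "10.5"},  # duplicate key
        {"order_id": "2", "customer": "", "amount": "5"},          # missing name
        {"order_id": "3", "customer": "Bob", "amount": "abc"},     # bad amount
        {"order_id": "4", "customer": "Carol", "amount": "-3"},    # negative
    ]
    good, bad = clean_records(raw)
    print(good)
    for row, reason in bad:
        print(reason, row)
```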

Types of Data Warehouses

There are different types of data warehouses, depending on how they are set up and utilized:

Enterprise Data Warehouse (EDW): An EDW is a central data repository for an entire organization, allowing access to data from all departments or business units.

Operational Data Store (ODS): This is a type of data warehouse that is used for storing real-time transactional data for short-term reporting. An ODS typically holds data that is updated frequently.

Data Mart: A data mart is a subset of a data warehouse focused on a specific department, business unit, or subject. For example, a marketing data mart might contain data relevant to marketing operations.

Cloud Data Warehouse: With the rise of cloud computing, cloud-based data warehouses like Google BigQuery, Amazon Redshift, and Snowflake have become increasingly popular. These platforms allow businesses to scale their data infrastructure without investing in physical hardware.
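A data mart, as described above, can be as simple as a view that exposes a focused subset of the warehouse. The following sketch, again using `sqlite3` as a stand-in, is an assumption-laden illustration: the `fact_orders` table, its columns, and the `mart_marketing` view are hypothetical. The mart keeps only campaign-attributed orders, aggregates them by campaign and channel, and hides finance-oriented columns such as `cost_of_goods`.

```python
import sqlite3


def load_sample_warehouse(conn):
    """Create a small, hypothetical warehouse fact table with sample rows."""
    conn.execute(
        "CREATE TABLE fact_orders ("
        "order_id INTEGER PRIMARY KEY, region TEXT, channel TEXT, "
        "campaign TEXT, revenue REAL, cost_of_goods REAL)"
    )
    conn.executemany(
        "INSERT INTO fact_orders VALUES (?, ?, ?, ?, ?, ?)",
        [
            (1, "EU", "email", "spring_sale", 100.0, 60.0),
            (2, "EU", "email", "spring_sale", 50.0, 30.0),
            (3, "US", "social", "launch", 75.0, 40.0),
            (4, "US", "store", None, 200.0, 150.0),  # no campaign: left out of the mart
        ],
    )


def build_marketing_mart(conn):
    """Expose a marketing-only subset of the warehouse as a view.

    Only campaign-attributed orders are included, and finance-oriented
    columns such as cost_of_goods are not exposed.
    """
    conn.execute(
        """
        CREATE VIEW IF NOT EXISTS mart_marketing AS
        SELECT campaign, channel, COUNT(*) AS orders, SUM(revenue) AS revenue
        FROM fact_orders
        WHERE campaign IS NOT NULL
        GROUP BY campaign, channel
        """
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sample_warehouse(conn)
    build_marketing_mart(conn)
    for row in conn.execute("SELECT * FROM mart_marketing ORDER BY campaign"):
        print(row)
```

In larger deployments a mart is often a separately materialized table or schema rather than a view, which trades storage for faster queries; the view form is used here only because it keeps the example short.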

How Data Warehousing Drives Business Intelligence

The purpose of a data warehouse is not just to store data, but to enable businesses to extract valuable insights. By organizing and analyzing data, businesses can uncover trends, customer preferences, and operational inefficiencies. Some of the ways in which data warehousing supports business intelligence include:

Customer Segmentation: Companies can analyze data to segment customers based on behavior, demographics, or purchasing patterns, leading to better-targeted marketing efforts.

Predictive Analytics: By analyzing historical data, businesses can forecast trends and predict future outcomes, such as sales, inventory needs, and staffing levels.

Improved Operational Efficiency: With data-driven insights, businesses can streamline processes, optimize supply chains, and reduce costs. For example, identifying inventory shortages or surplus can help optimize stock levels.
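As a simple illustration of predictive analytics on historical warehouse data, the sketch below fits a straight-line trend to a series of period totals by ordinary least squares and projects it forward. This is a deliberately naive method chosen for brevity, not a recommended forecasting technique: it ignores seasonality and uncertainty. The function names and the sample monthly figures are hypothetical.

```python
def fit_linear_trend(values):
    """Fit y = intercept + slope * t by ordinary least squares, with t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points")
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return intercept, slope


def forecast(values, periods_ahead):
    """Project the fitted trend forward for the next `periods_ahead` periods."""
    intercept, slope = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(periods_ahead)]


if __name__ == "__main__":
    # Hypothetical monthly sales totals, e.g. the result of a GROUP BY month query.
    monthly_sales = [100, 110, 120, 130, 140, 150]
    print(forecast(monthly_sales, 3))  # prints [160.0, 170.0, 180.0]
```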

Challenges in Data Warehousing

While the benefits of data warehousing are clear, there are some challenges to consider:

Complexity of Implementation: Setting up a data warehouse can be a complex and time-consuming process, requiring expertise in database management, ETL processes, and BI tools.

Data Integration: Integrating data from various sources with differing formats can be challenging, especially when dealing with legacy systems or unstructured data.

Cost: Building and maintaining a data warehouse can be expensive, particularly when managing large volumes of data. However, the investment is often worth it in terms of the business value generated.

Security: With the consolidation of sensitive data in one place, data security becomes critical. Organizations need robust security measures to prevent unauthorized access and ensure compliance with data protection regulations.

The Future of Data Warehousing

The world of data warehousing is constantly evolving. With advancements in cloud technology, machine learning, and artificial intelligence, businesses are now able to handle larger datasets, perform more sophisticated analyses, and automate key processes.

As companies increasingly embrace the concept of a "data-driven culture," the need for powerful data warehousing solutions will continue to grow. The integration of AI-driven analytics, real-time data processing, and more intuitive BI tools will only further enhance the value of data warehouses in the years to come.

Conclusion

In today’s fast-paced, data-centric world, having access to accurate, high-quality data is crucial for making informed business decisions. A robust data warehousing solution enables businesses to consolidate, analyze, and extract valuable insights from their data, driving smarter decision-making across all departments. While building a data warehouse comes with challenges, the benefits—improved efficiency, better decision-making, and enhanced business intelligence—make it an essential tool for modern organizations.


Data Warehousing: The Backbone of Data-Driven Decision Making

In today’s fast-paced business environment, the ability to make data-driven decisions quickly is paramount. However, to leverage data effectively, companies need more than just raw data. They need a centralized, structured system that allows them to store, manage, and analyze data seamlessly. This is where data warehousing comes into play.

Data warehousing has become the cornerstone of modern business intelligence (BI) systems, enabling organizations to unlock valuable insights from vast amounts of data. In this blog, we’ll explore what data warehousing is, why it’s important, and how it drives smarter decision-making.

What is Data Warehousing?

At its core, data warehousing refers to the process of collecting and storing data from various sources in a centralized system where it can be easily accessed and analyzed. Unlike transactional databases, which are optimized for day-to-day operations such as inserting and updating records, data warehouses are designed specifically for complex queries, reporting, and data analysis.

A data warehouse consolidates data from various sources—such as customer information systems, financial systems, and even external data feeds—into a single repository. The data is then structured and organized in a way that supports business intelligence (BI) tools, enabling organizations to generate reports, create dashboards, and gain actionable insights.

Key Components of a Data Warehouse

Data Sources: These are the different systems or applications that generate data. Examples include CRM systems, ERP systems, external APIs, and transactional databases.

ETL (Extract, Transform, Load): This is the process by which data is pulled from different sources (Extract), cleaned and converted into a usable format (Transform), and finally loaded into the data warehouse (Load).

Data Warehouse Storage: The actual repository where structured and organized data is stored. This could be in traditional relational databases or modern cloud-based storage platforms.

OLAP (Online Analytical Processing): OLAP tools enable users to run complex analytical queries on the data warehouse, creating reports, performing multidimensional analysis, and identifying trends.

Business Intelligence Tools: These tools are used to interact with the data warehouse, generate reports, visualize data, and help businesses make data-driven decisions.
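To make the storage and OLAP components above more concrete, the sketch below uses a star schema, a common way of organizing warehouse storage that the text does not name explicitly: one fact table of measurements (`fact_sales`) joined to dimension tables (`dim_date`, `dim_product`). All table names, columns, and sample rows are hypothetical, and `sqlite3` stands in for a real warehouse. The `revenue_by` helper aggregates revenue along whichever dimension attributes are requested, similar to an OLAP "dice"; it only accepts whitelisted attribute names, so user input never reaches the SQL text directly.

```python
import sqlite3

SCHEMA = """
CREATE TABLE dim_date    (date_key INTEGER PRIMARY KEY, year INTEGER, quarter INTEGER);
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE fact_sales  (date_key INTEGER REFERENCES dim_date,
                          product_key INTEGER REFERENCES dim_product,
                          revenue REAL);
"""

SAMPLE = {
    "dim_date": [(1, 2023, 4), (2, 2024, 1), (3, 2024, 2)],
    "dim_product": [(10, "books"), (20, "games")],
    "fact_sales": [(1, 10, 100.0), (2, 10, 150.0), (2, 20, 80.0), (3, 20, 120.0)],
}

# Whitelisted dimension attributes mapped to their qualified column names.
DIMENSIONS = {"year": "d.year", "quarter": "d.quarter", "category": "p.category"}


def build_star_schema(conn):
    """Create the hypothetical fact and dimension tables and load sample rows."""
    conn.executescript(SCHEMA)
    for table, rows in SAMPLE.items():
        placeholders = ", ".join("?" * len(rows[0]))
        conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)


def revenue_by(conn, *dims):
    """Sum revenue grouped by the requested dimension attributes."""
    if not dims:
        raise ValueError("choose at least one dimension")
    cols = ", ".join(DIMENSIONS[d] for d in dims)  # KeyError for unknown names
    sql = (
        f"SELECT {cols}, SUM(f.revenue) FROM fact_sales f "
        "JOIN dim_date d ON f.date_key = d.date_key "
        "JOIN dim_product p ON f.product_key = p.product_key "
        f"GROUP BY {cols} ORDER BY {cols}"
    )
    return conn.execute(sql).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build_star_schema(conn)
    print(revenue_by(conn, "year"))
    print(revenue_by(conn, "year", "category"))
```

Keeping descriptive attributes in small dimension tables lets the same fact table answer many different questions (by year, by category, or both) without restructuring the data.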

Benefits of Data Warehousing

Improved Decision Making: By consolidating data into a single repository, decision-makers can access accurate, up-to-date information whenever they need it. This leads to more informed, faster decisions based on reliable data.

Data Consolidation: Instead of pulling data from multiple systems and trying to make sense of it, a data warehouse consolidates data from various sources into one place, eliminating the complexity of handling scattered information.

Historical Analysis: Data warehouses are typically designed to store large amounts of historical data. This allows businesses to analyze trends over time, providing valuable insights into long-term performance and market changes.

Increased Efficiency: With a data warehouse in place, organizations can automate their reporting and analytics processes. This means less time spent manually gathering data and more time focusing on analyzing it for actionable insights.

Better Reporting and Insights: By using data from a single, trusted source, businesses can produce consistent, accurate reports that reflect the true state of affairs. BI tools can transform raw data into meaningful visualizations, making it easier to understand complex trends.

Types of Data Warehouses

Enterprise Data Warehouse (EDW): This is a centralized data warehouse that consolidates data across the entire organization. It’s used for comprehensive, organization-wide analysis and reporting.

Data Mart: A data mart is a subset of a data warehouse that focuses on specific business functions or departments. For example, a marketing data mart might contain only marketing-related data, making it easier for the marketing team to access relevant insights.