#Forward kinematics


Text

Rigging Animation Techniques

Inverse Kinematics & Forward Kinematics

Once a modeller finishes building a character, it has to be rigged before it can be animated. Rigging is the process of binding the character to skeletal joints and controllers that let us pose and move it.

IK (inverse kinematics) and FK (forward kinematics) are the two core rigging techniques. As an animator, you need to know the most efficient way to accomplish an animation, so let’s take a simple look at what IK and FK are.
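To make the difference concrete, here is a minimal sketch for a planar two-bone chain (think upper arm and forearm). It is not taken from any particular animation package: the function names, the unit bone lengths, and the choice of returning only one of the two possible elbow solutions are all assumptions made for illustration. FK goes from joint angles to the position of the tip; IK goes from a target position back to joint angles.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """FK: joint angles (radians) -> tip position of a planar two-bone chain."""
    elbow_x = l1 * math.cos(theta1)
    elbow_y = l1 * math.sin(theta1)
    # The second bone's angle is relative to the first bone.
    tip_x = elbow_x + l2 * math.cos(theta1 + theta2)
    tip_y = elbow_y + l2 * math.sin(theta1 + theta2)
    return tip_x, tip_y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """IK: target position -> one pair of joint angles (the one with theta2 >= 0)."""
    d2 = x * x + y * y
    # Law of cosines gives the bend at the elbow.
    cos_t2 = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_t2) > 1.0 + 1e-9:
        raise ValueError("target is out of reach")
    cos_t2 = max(-1.0, min(1.0, cos_t2))  # guard against rounding error
    theta2 = math.acos(cos_t2)
    theta1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2
```

Feeding the angles returned by `inverse_kinematics` back into `forward_kinematics` should land on the original target, which is a handy sanity check when building a rig by hand.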

#Animation#Animation Course#Animation Course in Ahmedabad#Rigging Animation Techniques#Animation Institute#Animation Techniques#Inverse Kinematics#Forward Kinematics

0 notes

Text

Matra P41, 1991. A futuristic monobox city car prototype that featured a double-kinematic door system, a Matra patent that was put into production on the Renault Avantime. The system pushes the door forward as it opens, providing a wider opening than a conventionally hinged door.

#Matra#Matra P41#concept#prototype#city car#futuristic#1991#design study#double-kinematic door#monobox#1990s#patent

159 notes


Note

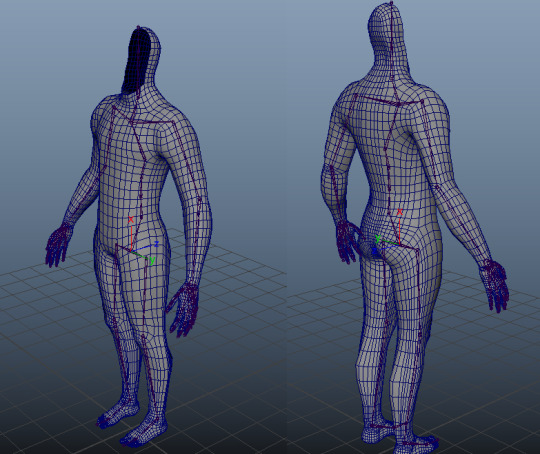

Learning blender 3D low poly guy here. I haven't delved into animating yet (scary) but I'd love to learn. Any tips/recommendations?

Of course! Don't be scared of animation, and try different techniques and styles until you find which one clicks with you and which you have the most fun with!

I like using simple IK setups I make myself, but other people use forward kinematics or addons that create complex rigs for them. :)

7 notes


Text

Day 9 - Aphrodisiacs - Robots with Dicks

"Oh fuck, yes, yes," Carla panted.

She heard Felix scribble something on his clipboard. For an AI postdoc, he was oddly attached to pen and paper.

The Mk1's chassis had been completed weeks ago. Carla had stared at it, stood in the corner of their basement workshop, waiting for Felix to finish training the AI, until finally she couldn't take it anymore.

"Yes, harder, faster." Carla moaned as the robot's control loop interpreted the commands and thrust into her with greater intensity.

Every inch of it was as good as she could make it, artificial filament muscles covered in variably translucent silicone to visually measure performance, hydraulic actuators in the torso visible behind 3D printed transparent aluminium. Strength was about twice what the strongest human could achieve without modification or drugs, dexterity on par with the best industrial robots from ten years ago, but on a fully mobile base. It was the peak of humanoid robotics, right at the very bleeding edge of technology. Their research paper was going to omit certain additions she'd made to it, though she had been half tempted to see if they could win a Nobel and Ig Nobel for the same project.

Felix looked up long enough from his clipboard to stroke her hair.

"Feel good?"

"Oh fuck yes, I'm close."

Even the penis attachments were works of art. Integrated in a special modular pelvis, she'd created two prototypes. The one that was rocking her world right now was a basic steel shaft with an internal set of ducting keeping it at body temperature, and a separate network of microtubules dispensing lubrication along the entire length. Pressure transducers and temperature sensors fed back into the control loop, letting the robot respond to her physiological responses as well as her voice commands.

She was saving the other prototype for the full AI integration test. The basic functionality worked the same as a mammalian penis. Silicone stood in for flesh, with a body safe hydraulic fluid for blood, filling corpora cavernosa made of custom designed aerogel. It even had realistic skin that slid along the basic structure. The sensors were also inspired by biological systems, with increased density in the tip. The piece de resistance was a realistic set of testicles, weighted properly, that contained most of the operating mechanics and a fully functional ejaculation system tuned to mimic anything from a pathetic little dribble to a pressure and volume any porn star would sign away their immortal soul for.

She already had plans for another, more futuristic attachment with a direct magnetic nerve stimulator for the clit and g-spot.

"Fuck YES!" Carla screamed as she came.

The sensors in the robot's dick tripped the control loop into a new regime, keeping the same pace perfectly, matching her thrashing movements, letting her focus on nothing but her own pleasure. The impassive face, silicon lips pressed tightly together, eyes scanning her face mechanically, pulled her out of the moment a little but the perfect fucking it was delivering got her close to the edge again right on the heels of her first orgasm. Just before her pleasure peaked, the robot pulled back out of her completely and sat back on its heels between her legs.

"CONTROL LOOP FAILURE, SAFETY MODE ENGAGED."

She screamed her frustration at the abrupt feeling of emptiness and ruined orgasm so tight on the heels of such a good one.

"Fuck, that sucked." Carla tried to catch her breath. "Mk1, go stand in the corner."

"COMMAND UNCLEAR, PLEASE RESTATE."

She pushed it off the bed with her foot, the basic inverse kinematics keeping it stable as it shifted to the floor. At least that was still working.

"Walk forward four paces, turn forty-five degrees clockwise, walk two more paces, then go into standby mode."

Carla pulled at Felix's shirt, trying to get it off over his head while he tried to hold on to his clipboard.

"Are you going to take notes, or are you going to fuck me? The Mk1 clearly isn't up to the task yet."

He froze, then tossed his clipboard aside. They kissed as he fumbled his pants off. He was inside of her seconds later, rock hard. They'd fantasized together about being with other people, but never wanted to make it a reality. The fantasy was hot, real people was too far for both of them. When they'd been working on the Mk1 together, Carla had suggested a little side project. Felix clearly really got off on seeing her with it, he was rock hard.

"Yes, fuck me, fuck me." Carla rocked her hips against him, meeting him thrust for thrust. She held his gaze, urging him on. Within minutes, he had her back at the edge, years of being together had taught him exactly what she liked. She held herself there, holding back, waiting for him.

"Cum in me, fill me, yes, YES!" Carla felt Felix stiffen inside of her and then warmth flooded her. She let go and screamed his name as she came, "Felix, Felix, Felix!"

When he collapsed on top of her, she stroked his back. He was still inside of her, and she could still feel the occasional twitch of his cock.

"Of course! There was no path from the 'partner orgasm occurring' state into the 'partner orgasm starting' state! God damnit, I forgot to account for multiple orgasms in quick succession. Fuck."

Felix kissed her, muffling her last word. He pushed up on his hands, hovering over her, still inside. "The tensor farm should be done testing the latest model by 8pm. If this one is all green, we can probably have it installed by 10 and give it another shot with the AI this time."

"I'll rewire the state machine for the control loop in case there's any red tests still."

This had been their sex life for the last couple of months, since they started the project to build the ultimate sex bot. After, often with Felix still inside of her, they'd discuss ideas about what they could change, or features they had to have. This was the first time after a field test though.

"Did you like watching me with it?"

"Oh yes. God damn, that was hot."

"Would you ever want to try it? Both cocks are self lubricating, you know."

"Mmm, maybe. I want to see if we can get a threesome mode working first though."

After dinner they guided the bot back onto its stand in the basement workshop with a dozen cables leading to various parts to extract telemetry, recharge, and provide data connections for reprogramming. Carla was getting distracted trying to rewire the state machine, each possible transition suddenly causing both real and imagined sense memories. Felix looked tastier and tastier as she worked. He was futzing with parameters, rerunning partial tests on subsystems. The tests had all been green, but he'd had ideas to get everything optimized before their first live test.

By 2am, they had the first version of Felix's AI uploaded to the Mk1. She and Felix had curated a lot of videos from Pornhub over the last couple of months, finding performances they liked. Lots of hotwife scenes and threesomes, some bisexual stuff, but mostly relatively vanilla scenes. Carla had added some scenes where the male performer was a bit more rough than Felix was comfortable with doing himself, spanking and pinning wrists above heads. For vocal interactions, they'd retrained a large language model on erotica and textual descriptions of the scenes in the porn videos, generated by an off the shelf accessibility AI.

There wasn't any actual universal intelligence in the robot of course. This was a sexy version of an AI chat bot that most phones had built in now, combined with a convoluted control loop for its physical interactions. Simply a very clever way of giving the impression that something was smart, when really all it was doing was basic pattern recognition based on a predefined dataset.

"Want to give it a shot?" Felix asked, but Carla shook her head.

"I want you, not the bot."

Shutting the bot down for the night, Carla drew Felix upstairs back to their bedroom. As they made love, they teased each other with all the amazing things they'd do with the robot tomorrow and in the weeks to come.

The next weekend, Carla really had to admit Felix had been right. Her control loop version of the robot's software was good. It got her off just fine, but it was impersonal. As its designer, she had a hard time focusing on herself as she felt it roll into new control regimes. The AI felt much more human. He looked at you, used his hands for more than balance, and even showed some imperfections in his motions. He got (artificially) winded, slipped out, fell over, all the things a real human partner would do. The experience was so much more realistic, she sometimes forgot it was a robot fucking her if she couldn't see him.

She sat at her desk in their upstairs office now, working on the more serious portion of her research. They had run a series of strength and dexterity tests that afternoon, characterizing the robot's ability to maintain precision while exerting force at different levels, and she was processing the data. Felix was downstairs in the lab, tinkering with parameters and adjusting the training data for the next version of the AI.

Carla heard the neighbor plug in his bass guitar, the amp turned way up. She muttered under her breath about people not respecting their tools. Didn't he know he could damage the speaker like that? The noise wasn't too bad, but listening to Seven Nation Army played by a spirited amateur over and over again didn't really appeal either. Her noise cancelling headphones were in the basement with Felix though, so for now she'd just suffer through.

Her phone beeped halfway through the neighbor's warmup.

Felix: Robot reacting to bass music. Carla: "music" Felix: He's getting better. Anyway:

The next message was the robot's dick, the biomorphic one, clearly at half mast.

Carla: Is he on? Felix: in standby Carla: Odd. Sensors recording? Felix: Yup, caught it before the buffer flushed. AI parameter log too. Carla: nice

Before she could really get back into her work, the neighbor finished Seven Nation Army. The next tune he played was the Pornhub sting. She almost spat out her drink. He did a pretty good version, though the lack of drums made it not quite perfect.

Before she could get back into her work, Felix yelled from down in the basement.

"Carla, come take a look at this!"

The Mk1 was standing in its alcove, still docked to all the various wires and cables. Felix was standing in front of it, studying the biomorphic cock. It was throbbing like a real one would.

"Remember how it was at half mast during the first song the neighbor was playing? Despite it being in standby? I think I figured out the reason."

The neighbor, who had just finished House of the Rising Sun, chose that moment to play another couple of Pornhub opening stingers. The Mk1 responded, humping the air slightly, his cock throbbing.

"You didn't cut out the intros on the training data so—"

"— now every time it hears bass music, and the Pornhub riff in particular, it gets aroused. It's still in standby, it's barely drawing current, but there's enough residual charge in the artificial muscle fibers for, well, this." He gestured at the robot's midsection, still rocking back and forth.

"Aren't the tensor cores supposed to be off?" Carla watched a slow drop of lubricant fall from the tip of the twitching robot cock.

Felix shook his head, "Some stay on to parse voice commands."

She reached out, touching the silicone cock. It was slick, the lubricant dispensers clearly activated. It was interesting to see that it was apparently simulating precum as well, despite that not necessarily being the focus of their training data. The artificial dick twitched at her touch, and she grasped it firmly, stroking up and down. A slow trickle of fake cum was leaking out the tip now, covering her hand.

"So we're thinking bass guitar is a robo-aphrodisiac then? Because you trained it on videos with Pornhub intros?"

"Mm-hmm."

"That's hilarious."

"And means I have to remove the intro from over fifteen hundred videos, and then retrain and retest the entire model." Felix sighed heavily. "Again."

"There's an ffmpeg command for that, surely."

"The trimming, sure."

Carla kept stroking the robot's cock, watching the artificial foreskin slide back and forth over the head.

"Seems like a shame to waste this though. It really shouldn't be erect out in the open air for too long, it's designed with the idea of at least some counter pressure. Also, it would be a shame to not gather some extra data. It might be interesting to have a robo-aphrodisiac function, though maybe something more specific that won't just trigger if someone forgets to unplug their Bluetooth speaker when they're going to rub one out."

Felix grinned at her, then nodded.

Carla pulled her sweat pants and top off, standing naked in front of the mechanical man. "Mk1, wake up."

The Mk1 went through his wakeup sequence, part mandated by technology, part for show because they were both massive nerds. The cables, mostly plugged in along his arms and back, ejected and retracted into the alcove like Neo waking up in the real world for the first time. The sound effect of Seven of Nine's alcove powering down at the end of her regeneration cycle played, and Mk1 took a single step forward.

"Hello Carla, nice to see you again. What would you like to do today?"

Felix had campaigned long and hard for the robot to say "Please state the nature of the sexual emergency" but eventually she had put her foot down. The chances of that ending up in a version they showed off at their defense were too high, and while Robert Picardo could get it, the Doctor was a bit too acerbic for her tastes.

She walked over to the mattress they kept in the basement for quick tests, standing at the foot. She was in the mood for something a bit more rough than just the vanilla stuff they'd tried with the bot so far, and this heightened state it was operating in seemed to be a perfect opportunity to try that out.

"Take me. Be a little rough."

Before, he'd always asked for confirmation before initiating anything sexual. It hadn't been hardwired, but the AI training data was heavily incentivized towards asking consent first. This time though, with three long, powerful strides he was inches away from her. The intensity of his movements was a little scary, but she had the utmost faith in her and Felix's work. Still, she took a half step back reflexively.

"Are you sure this is a good idea?" Felix asked.

Carla stood staring at the Mk1, transfixed by his gaze. She knew it was just servos and cameras and tensor cores running a neural network, there was nothing there, but she still couldn't look away.

"I need this," Carla whispered.

With that, Mk1 took one more step, pushing her over and onto the mattress. He guided her down as they tumbled, cushioning her fall a little and making sure her head didn't hit the ground, but it was still an intense experience.

Decided to fight a little, she tried to push him off. He gathered her wrists in one hand and effortlessly pinned her arms above her head.

"Pause," Carla said.

Immediately, the Mk1 froze. He still held her, but the pressure on her wrists was lower, and he held all his weight off of her.

"Good, that still works just fine. Resume."

The intensity the Mk1 showed was unreal. She'd enjoyed him before, but with this added level of robotic arousal added on top, she could finally completely lose herself in the act. There was no room for thinking about kinematics and control loops, muscle fiber force limits, defects, or additions to the training data. There was no worry about her partner's pleasure, no anxiety for her own performance. All that was there was her own pleasure, pure and uncomplicated.

She fantasized about a future where a Mk2 and Mk3 could join in with the Mk1, taking turns getting her off, letting them recharge and refuel in shifts as they spent an entire day teasing her from orgasm to orgasm.

Mk1's synthesized voice, indistinguishable from human despite being produced by a speaker rather than a voice box, let her know how good this felt for him. All artificial of course, but so necessary for a realistic experience. Soft moans, grunts, little gasps. Even simulated breathing growing shorter as he exerted himself. It had still sounded artificial to her previously, but now it just went straight to the pleasure center of her brain, letting her enjoy the moment even more. She came, crying out as he whispered her name in her ear.

Just as her wrists were beginning to hurt, he shifted, pulling her legs up against his chest. The new position let him reach new and interesting places inside of her, the intentional curve she'd put on his cock letting him hit her g-spot. As she approached her second orgasm of the afternoon, he started moaning louder, grunting. When she came, so did he. The twitching of his cock was entirely lifelike, his orgasm forceful enough she could feel it deep inside of her.

She lay there panting, and he emulated her, letting her bask in the moment. Felix had sat next to her on the mattress, watching her closely. She could see his erection clearly in his sweats.

"That looked intense," he said when she looked over to him.

"Oh yes. We definitely need this feature."

"Would you like to continue?" the Mk1 asked.

Carla flicked her gaze down to Felix's sweats then looked him in the eyes. "Join us?"

Felix grinned and started pulling his shirt off.

#krakentober#kinktober#robot smut#m/f#science fiction#ns/fw#abrupt ending#original smut#original fiction

78 notes


Text

Check out what I did!

I didn’t make the model itself, rather I learned rigging in blender to pose it! The model started as a T-pose, and this is the pose I settled on. That, and I gave the model custom guns. They started off as box-fed boltguns, but I scraped off a wing from a faction and added a shield for the gun to stay loyal to the inspired model, the inceptor.

Very proud of my work, learning how to rig was a pain because I started the hard way with inverse kinematic only to realize forward kinematic was what I needed lol.

10 notes


Text

Physics-Informed Neural Networks

Literature Reviews

Physics-Informed Neural Networks (PINNs)

Raissi, Maziar, Paris Perdikaris, and George Em Karniadakis. "Physics Informed Deep Learning (Part I): Data-driven Solutions of Nonlinear Partial Differential Equations." arXiv preprint arXiv:1711.10561 (2017). link

Raissi, Maziar, Paris Perdikaris, and George Em Karniadakis. "Physics Informed Deep Learning (Part II): Data-driven Discovery of Nonlinear Partial Differential Equations." arXiv preprint arXiv:1711.10566 (2017). link

Raissi, Maziar, Paris Perdikaris, and George E. Karniadakis. "Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations." Journal of Computational Physics 378 (2019): 686-707. link

Thais, S., Calafiura, P., Chachamis, G., DeZoort, G., Duarte, J., Ganguly, S., ... & Terao, K. (2022). Graph neural networks in particle physics: Implementations, innovations, and challenges. arXiv preprint arXiv:2203.12852. link

Daw, A., Karpatne, A., Watkins, W. D., Read, J. S., & Kumar, V. (2022). Physics-guided neural networks (pgnn): An application in lake temperature modeling. In Knowledge Guided Machine Learning (pp. 353-372). Chapman and Hall/CRC. link

Chen, Yanlai, and Shawn Koohy. "Gpt-pinn: Generative pre-trained physics-informed neural networks toward non-intrusive meta-learning of parametric pdes." Finite Elements in Analysis and Design 228 (2024): 104047. link

Ni, R., & Qureshi, A. H. (2024). Physics-informed Neural Motion Planning on Constraint Manifolds. arXiv preprint arXiv:2403.05765. link

Its contributions are threefold:

Introduces physics-informed neural networks to Neural Motion Planners (NMPs).

Formulates a physics-driven objective function.

Reflects the physics-driven objective function to directly parameterize the Eikonal equation and generate time fields for different scenarios, including a 6-DOF manipulator space, under collision-avoidance and other kinematic constraints.

Limitations:

Only generalizes to the new start and goal configurations in given environments.

Reliance on specific PDE formulations to train physics-informed NMPs.

solve motion planning and control under dynamic constraints.
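For readers unfamiliar with the Eikonal equation mentioned above, its generic form is |∇T(x)| = 1/S(x), where T is the arrival time of a wavefront and S is the local speed. The sketch below is not the formulation used by Ni and Qureshi; it only assumes a constant speed and a single source point, in which case T is distance divided by speed, and checks with finite differences that this field satisfies the equation. The names `arrival_time` and `eikonal_residual` are made up for this note.

```python
import math


def arrival_time(x, y, source=(0.0, 0.0), speed=2.0):
    """Closed-form time field for a constant speed and a point source (assumed setup)."""
    return math.hypot(x - source[0], y - source[1]) / speed


def eikonal_residual(time_field, x, y, speed, h=1e-5):
    """Return |grad T| - 1/S at (x, y), using central finite differences."""
    dT_dx = (time_field(x + h, y) - time_field(x - h, y)) / (2.0 * h)
    dT_dy = (time_field(x, y + h) - time_field(x, y - h)) / (2.0 * h)
    return math.hypot(dT_dx, dT_dy) - 1.0 / speed


# A residual close to zero means the field satisfies the Eikonal equation there.
residual = eikonal_residual(arrival_time, 0.7, -0.3, speed=2.0)
```

A physics-informed planner would, roughly speaking, train a network so that a residual of this kind is small everywhere, with the speed dropping near obstacles.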

Rigid Body Dynamics Inferring

Bhat, Kiran S., et al. "Computing the physical parameters of rigid-body motion from video." Computer Vision—ECCV 2002: 7th European Conference on Computer Vision Copenhagen, Denmark, May 28–31, 2002 Proceedings, Part I 7. Springer Berlin Heidelberg, 2002. link

Heiden, Eric, et al. "Inferring articulated rigid body dynamics from rgbd video." 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022. link

3D LLMs

To enable physics-aware embodied abilities in LLMs, 3D perception is vital:

Hong, Y., Zhen, H., Chen, P., Zheng, S., Du, Y., Chen, Z., & Gan, C. (2023). 3d-llm: Injecting the 3d world into large language models. Advances in Neural Information Processing Systems, 36, 20482-20494. link Several tasks are included (image extracted from Hong et al. (2023)).

Zhen, H., Qiu, X., Chen, P., Yang, J., Yan, X., Du, Y., ... & Gan, C. (2024). 3d-vla: A 3d vision-language-action generative world model. arXiv preprint arXiv:2403.09631. link This work is based on Hong et al. (2023).

Video Language Planning

Du, Y., Yang, M., Florence, P., Xia, F., Wahid, A., Ichter, B., ... & Tompson, J. (2023). link

Neural Symbolics

Hsu, J., Mao, J., Tenenbaum, J., & Wu, J. (2024). What’s left? concept grounding with logic-enhanced foundation models. Advances in Neural Information Processing Systems, 36. link

Liu, W., Chen, G., Hsu, J., Mao, J., & Wu, J. (2024). Learning Planning Abstractions from Language. arXiv preprint arXiv:2405.03864. link

Feng, C., Hsu, J., Liu, W., & Wu, J. (2024). Naturally supervised 3d visual grounding with language-regularized concept learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 13269-13278). link

Hsu, J., Mao, J., & Wu, J. (2023). Ns3d: Neuro-symbolic grounding of 3d objects and relations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 2614-2623). link

Energy-Based Models

Du, Y., Mao, J., & Tenenbaum, J. B. Learning Iterative Reasoning through Energy Diffusion. In Forty-first International Conference on Machine Learning. link

Du, Yilun, et al. "Learning iterative reasoning through energy minimization." International Conference on Machine Learning. PMLR, 2022. link They propose Energy-Based Models as a methodology for reasoning, by learning an energy landscape.

Real World Simulators
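As a rough illustration of reasoning as energy minimization, the toy below treats the answer to a problem as the value that minimizes an energy function, and finds it by repeated gradient steps. Everything here is an assumption for illustration: the energy is hand-written for the task of adding two numbers, whereas the paper learns the energy with a neural network, and the function names are hypothetical.

```python
def energy(inputs, y):
    """Hand-written stand-in for a learned energy: lowest when y equals a + b."""
    a, b = inputs
    return (y - (a + b)) ** 2


def energy_grad(inputs, y, h=1e-6):
    """Gradient of the energy with respect to the candidate answer y."""
    return (energy(inputs, y + h) - energy(inputs, y - h)) / (2.0 * h)


def reason_by_minimization(inputs, steps, lr=0.1, y0=0.0):
    """Start from a guess and descend the energy landscape for a fixed number of steps."""
    y = y0
    for _ in range(steps):
        y -= lr * energy_grad(inputs, y)
    return y
```

Running more steps brings the candidate answer closer to the minimum, which mirrors the idea that harder problems can be given more computation at inference time.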

Yang, M., Du, Y., Ghasemipour, K., Tompson, J., Schuurmans, D., & Abbeel, P. (2023). Learning interactive real-world simulators. arXiv preprint arXiv:2310.06114. link

Compositional Generative Modeling

Du, Y., & Kaelbling, L. (2024). Compositional Generative Modeling: A Single Model is Not All You Need. arXiv preprint arXiv:2402.01103. link

Universal Policies

Du, Y., Yang, S., Dai, B., Dai, H., Nachum, O., Tenenbaum, J., ... & Abbeel, P. (2024). Learning universal policies via text-guided video generation. Advances in Neural Information Processing Systems, 36. link

Lectures

Physics-informed machine learning – Hype or new trend in computational engineering?

(PINNs) Physics Informed Machine Learning

Media & Web Resources

Alexander Leschik. Forecasting with Physics Informed Machine Learning (PIML)

Milestones

Hybrid Models Combining Physics and Data-Driven Approaches (2017)

Physics-Guided Neural Networks (PGNNs): PGNNs incorporate physical laws directly into the training process, reducing the need for large datasets and enhancing model interpretability.

Differentiable Physics: This approach integrates differentiable physics solvers with neural networks, allowing models to learn physical dynamics more effectively.
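The idea of putting a physical law into the training objective can be sketched in a few lines. This is a deliberately tiny stand-in, not a real PGNN or PINN: the "model" is a one-parameter function exp(-k x) instead of a neural network, derivatives come from finite differences instead of automatic differentiation, and the physical law is assumed to be the ODE u' + u = 0. The loss adds a data-fit term on a couple of observations to a penalty on the ODE residual at collocation points.

```python
import math


def model(x, k):
    """One-parameter stand-in for a neural network."""
    return math.exp(-k * x)


def physics_informed_loss(k, data, collocation, weight=1.0, h=1e-4):
    """Data misfit plus a penalty on the residual of the assumed law u' + u = 0."""
    data_loss = sum((model(x, k) - u) ** 2 for x, u in data) / len(data)
    residuals = []
    for x in collocation:
        du_dx = (model(x + h, k) - model(x - h, k)) / (2.0 * h)
        residuals.append(du_dx + model(x, k))
    physics_loss = sum(r * r for r in residuals) / len(residuals)
    return data_loss + weight * physics_loss


# Two observations of the true solution exp(-x) and a handful of collocation points.
data = [(0.0, 1.0), (1.0, math.exp(-1.0))]
collocation = [i / 10 for i in range(11)]

# A grid search stands in for gradient-based training.
candidates = [i / 100 for i in range(50, 151)]
best_k = min(candidates, key=lambda k: physics_informed_loss(k, data, collocation))
```

Because the physics term constrains the model between and beyond the observations, only two data points are needed here, which is the sense in which such losses reduce the need for large datasets.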

Physics-Informed Neural Networks (PINNs) (2019)

Improved Training Algorithms: Advances in optimization techniques, such as adaptive learning rates and physics-based regularization methods, have significantly improved the training efficiency of PINNs.

Multi-Scale and Multi-Physics Problems: Recent work has extended PINNs to handle complex multi-scale and multi-physics problems, making them applicable to a wider range of scientific and engineering challenges.

Graph Neural Networks (GNNs) for Physical Systems (2022)

Graph-based Representations: GNNs have been utilized to model the interactions within physical systems, such as molecular dynamics and material science, by representing systems as graphs where nodes correspond to particles or atoms and edges represent interactions.

Scalable and Efficient Computations: Improvements in GNN architectures and training methods have enabled the scalable and efficient simulation of large physical systems.
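The graph view of a physical system described above can be sketched as a single round of message passing. In this illustration, which is an assumption and not any specific published architecture, nodes are particles with 2D positions, edges are interacting pairs, and the message on each edge is a hand-written spring force. A learned GNN would replace that fixed force law with trainable message and update functions.

```python
def message_passing_step(positions, edges, stiffness=1.0, rest_length=1.0):
    """Aggregate one spring-force message per edge onto both endpoint nodes."""
    forces = [[0.0, 0.0] for _ in positions]
    for i, j in edges:
        dx = positions[j][0] - positions[i][0]
        dy = positions[j][1] - positions[i][1]
        dist = (dx * dx + dy * dy) ** 0.5
        if dist == 0.0:
            continue  # coincident particles: direction undefined, skip the edge
        magnitude = stiffness * (dist - rest_length)
        fx = magnitude * dx / dist
        fy = magnitude * dy / dist
        # Equal and opposite contributions on the two endpoints.
        forces[i][0] += fx
        forces[i][1] += fy
        forces[j][0] -= fx
        forces[j][1] -= fy
    return forces


# Three particles in a chain; the first edge is stretched, the second is at rest length.
positions = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0)]
edges = [(0, 1), (1, 2)]
forces = message_passing_step(positions, edges)
```

The cost of one step grows with the number of edges rather than the number of particle pairs, which is one reason sparse graph representations scale to large systems.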

Challenges and Future Directions

Scalability and Generalization

Scalability: Ensuring that physics-informed LLMs can scale to handle large and complex systems remains a significant challenge.

Generalization: Developing models that generalize well across different physical systems and conditions is crucial for broader applicability.

Interdisciplinary Collaboration

Collaboration: Continued progress will require close collaboration between domain experts in physics, machine learning, and high-performance computing.

Model Interpretability

Interpretability: Enhancing the interpretability of physics-informed LLMs is essential for gaining insights into physical phenomena and for their acceptance in scientific and engineering communities.

Conclusion

The field of physics-informed large language models is rapidly advancing, driven by innovations in model architectures, training techniques, and applications across various scientific domains. These models hold great promise for accelerating scientific discovery and engineering innovation by providing powerful tools that blend the strengths of machine learning with the rigor of physical laws.

As research continues, addressing challenges related to scalability, generalization, and interpretability will be key to unlocking the full potential of physics-informed LLMs. The future of this interdisciplinary field looks promising, with exciting opportunities for breakthroughs in both fundamental science and practical applications.

2 notes


Text

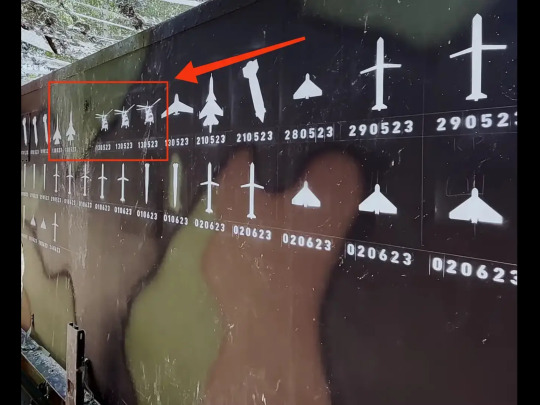

U.S. Army Officer Confirms Russian A-50 Radar Jet Was Shot Down With Patriot Missile

The U.S. Army colonel described how Ukrainian Patriot operators staged a “SAMbush” to bring down the A-50 in January of this year.

Thomas Newdick, published Jun 10, 2024 6:55 PM EDT

The Beriev A-50U ‘Mainstay’ airborne warning and control system (AWACS) aircraft based on the Ilyushin Il-76 transport aircraft belonging to Russian Air Force in the air. ‘U’ designation stands for extended range and advanced digital radio systems. This aircraft was named after Sergey Atayants – Beriev’s chief designer. (Photo by: aviation-images.com/Universal Images Group via Getty Images).

A U.S.-made Patriot air defense system was responsible for shooting down a Russian A-50 Mainstay airborne early warning and control (AEW&C) aircraft over the Sea of Azov on January 14, according to a U.S. Army officer. The high-value aircraft, one of only a handful immediately available to Russia, was the first of two brought down in the space of five weeks. Previously, a Ukrainian official confirmed to TWZ that the second A-50 was brought down by a Soviet-era S-200 (SA-5 Gammon) long-range surface-to-air missile.

Speaking on a panel at the United States Field Artillery Association’s Fires Symposium 2024 last week, Col. Rosanna Clemente, Assistant Chief of Staff at the 10th Army Air and Missile Defense Command, confirmed that the first A-50 fell to a German-provided Patriot system, in what she described as a “SAMbush,” or surface-to-air missile ambush.

“They have probably about a battalion of Patriots operating in Ukraine right now,” Col. Clemente explained. “Some of it’s being used to protect static sites and critical national infrastructure. Others are being moved around and doing some really, really historic things that I’ve haven’t seen in 22 years of being an air defender, and one of them is a SAMbush … they’re doing that with extremely mobile Patriot systems that were donated by the Germans, because the systems are all mounted on the trucks. So they’re moving around and they’re using these types of systems, bringing them close to the plot … and stretching the very, very edges of the kinematic capabilities of that system to engage the first A-50 C2 [command and control] system back in January.”

Fifteen crew members were reportedly killed aboard the A-50.

Col. Clemente also provided some other interesting details of how the Ukrainians worked up their capabilities with these particular systems, which included a period of validation training involving the U.S. Army in Poland in April 2023.

Elements of a German Patriot air defense system stand on a snow-covered field in Miaczyn, southeastern Poland, in April 2023. Photo by Sebastian Kahnert/picture alliance via Getty Images

According to Col. Clemente, the German soldiers tasked with training the Ukrainians on the mobile Patriot systems woke up the Ukrainian battery in the middle of the night, marched them to a location where they fought a simulated air battle, and then made them march again. “I was like, ‘Huh, wonder why they did that?’ And it was a month later, they conducted some of their first ambushes where they’re shooting down Russian Su-27s along the Russian border.”

As we reported at the time, the use of Patriot to engage the radar plane over the Sea of Azov seemed likely, especially as it followed the pattern of an anti-access counter-air campaign that Ukraine was already waging against Russian military aircraft using the same air defense system.

Accordingly, in May 2023, Ukraine began pushing forward Patriot batteries to reach deep into Russian-controlled airspace. Most dramatically, a string of Russian aircraft was downed over Russian territory that borders northeastern Ukraine. Among them may have been the Su-27s (or perhaps another Flanker-variant aircraft) that Col. Clemente mentioned.

A screen capture from a Ukrainian Air Force video shows images of three Russian helicopters and two Russian fighters painted on the side of a Patriot air defense system. The three helicopter and two jet images bear the date May 13, 2023. Defense Industry of Ukraine

While the use of German-supplied weapons within Russian territory previously led to friction between Berlin and Kyiv, German officials more recently approved the use of Patriot to target aircraft in Russian airspace.

In December 2023, similar tactics were used against tactical jets flying over the northwestern Black Sea.

These kinds of highly mobile operations were then further proven with the destruction of the first A-50, on the night of January 14.

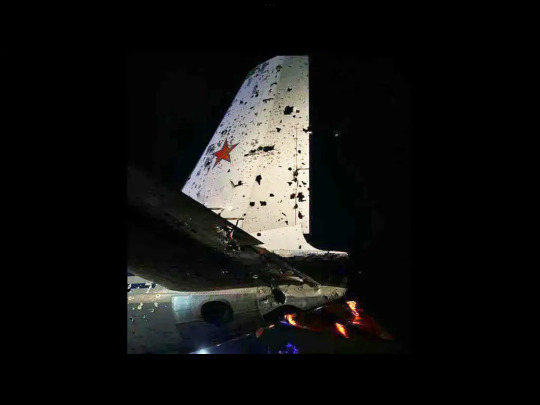

A Russian Il-22M radio-relay aircraft was also apparently engaged by Ukrainian air defenses the same night, confirmed by photo evidence of the aircraft after it had made it back to a Russian air base. It’s not clear whether the Patriot system was also responsible for inflicting damage on this aircraft, but it’s certainly a probable explanation.

A photo of the Il-22M which purportedly made an emergency landing in Anapa, in the Krasnodar region of western Russia. via X

Both incidents appear to have taken place in the western part of the Azov Sea and, as we discussed at the time, the distances involved suggested that, if Patriot was used, it was likely at the very limits of its engagement envelope.

Based on Col. Clemente’s account, it seems likely that the Patriot system in question was not only being pushed to the limits of its capabilities but was likely being deployed very far forward in an especially bold tactical move.

As we wrote at the time: “Considering risking a Patriot system or even a remote launcher right at the front is unlikely, and these airborne assets were likely orbiting at least some ways out over the water, this shot was more likely to have been around 100 miles, give or take a couple dozen miles.”

Of course, all this also depends on exactly where the targeted aircraft were at the time of the engagement.

A map showing the Sea of Azov as well as Robotyne, which is really the closest Ukraine regularly operates to that body of water, a distance of roughly 55 miles. Google Earth

Once again, the A-50 shootdown may be the most important single victory achieved so far by Ukrainian-operated Patriot systems, but it was part of a highly targeted campaign waged against the Russian Aerospace Forces which has seemingly included multiple long-range downings of tactical aircraft.

The Ukrainian tactics first found success in pushing back Russian airpower and degrading its ability to launch direct attacks and even those using standoff glide bombs, which have wreaked havoc on Ukrainian towns.

The same anti-access tactics extended to Russia’s small yet vital AEW&C fleet have arguably had an even greater effect. After all, these aircraft offer a unique look-down air picture that extends deep into Ukrainian-controlled territory. As well as spotting incoming cruise missile and drone attacks, and low-flying fighter sorties, they provide command and control and situational awareness for Russian fighters and air defense batteries. According to Ukrainian officials, the radar planes are also used to direct Russian cruise missile and drone strikes.

Dmitry Terekhov/Wikimedia Commons

The importance of these force-multipliers has seen earlier efforts to disable them, with A-50s in Belarus having been targeted by forces allied with Ukraine.

The recent appearance of a photo showing a Ukrainian S-300PS (SA-10 Grumble) air defense system marked with an A-50 symbol also indicates that previous attempts were made to bring these aircraft down using this Soviet-era surface-to-air missile, too.

With all this in mind, it’s not surprising that Ukraine’s highly valued, long-reaching Patriot air defense system was tasked against the A-50.

In demonstrating the vulnerability of Russian aircraft patrolling over the Sea of Azov, the January 14 shootdown might have been expected to push these assets back. That may have happened, but another example was then shot down at an even greater distance from the front line, on February 23. The fact that the second A-50 came down over the Krasnodar region fueled speculation that it may have been a ‘friendly fire’ incident.

However, Lt. Gen. Kyrylo Budanov, the head of the Ukrainian Ministry of Defense’s Main Directorate of Intelligence (GUR), subsequently confirmed to TWZ that the second A-50 — as well as a Tu-22M3 Backfire bomber, in a separate incident — were brought down by the Soviet-era S-200 long-range surface-to-air missile system.

Undoubtedly, there are more details still to emerge about the shootdowns of the two A-50s, not to mention other engagements that the Ukrainian Patriot has been involved in.

However, Col. Rosanna Clemente’s comments confirm that the Ukrainian Air Force is using these critical systems in a sometimes-daring manner, using limited numbers of assets not only to protect key static infrastructure but also to maraud closer to the front lines and bring down high-profile Russian aerial targets. Not only does this force Russia to adapt its airpower tactics for its own survival, reducing its effectiveness, but it also provides another means for Ukraine to fight back against numerical odds that are stacked against it.

18 notes

Note

🐶💙: Hey Shifty, I brought Sniffles for you. HEHEHEHEHE

🩵🧪: Hey Shifty! I have a problem for you to solve:

Problem:

A delivery truck with a mass of 4500 kg is initially at rest. The driver applies the accelerator, and the truck experiences a net force of 9000 N in the forward direction.

Questions:

a) What is the acceleration of the truck?

b) After 5 seconds, what will be the velocity of the truck? (Assume the acceleration is constant)

Think about:

* Newton's Second Law of Motion: Force (F) equals mass (m) times acceleration (a) (F = ma)

* Kinematics Equation: Velocity (v) equals initial velocity (v₀) plus acceleration (a) times time (t) (v = v₀ + at)

🩵🧪: Good luck Shifty!

🐶💙: Yea. Good luck. HAHA

I AINT DOIN THATTTTTTTTT!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! GET OUTTA HERE BUG BOY!!!!!!!!!!!! IDK HOWWWWWW
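For anyone who does want to try it, the two hints in the ask are enough. Below is a minimal sketch of the arithmetic, assuming the net force stays constant for the full 5 seconds; the variable names are illustrative and not from the original ask.

```python
# Part a: Newton's second law, F = m * a, rearranged to a = F / m.
mass_kg = 4500.0          # mass of the truck
net_force_n = 9000.0      # net forward force
acceleration = net_force_n / mass_kg

# Part b: constant-acceleration kinematics, v = v0 + a * t.
initial_velocity = 0.0    # the truck starts at rest
elapsed_s = 5.0
velocity = initial_velocity + acceleration * elapsed_s

print(f"a = {acceleration} m/s^2")   # a = 2.0 m/s^2
print(f"v = {velocity} m/s")         # v = 10.0 m/s
```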

4 notes

Text

10 people I'd like to get to know better

thanks @lonewolflupe for the tag!

Last Song: "Thought Crime" by Yorushika

Favorite Color: blue! but a dark blue like the night sky or the stratosphere

Last Book: Iron Widow by Xiran Jay Zhao; i don't think my textbooks count so this was the last book. also this was the first book i finished in years!

Last Movie: Mission: Impossible - Dead Reckoning Part One; i'm super excited for part 2 this year :D

Last Show: Doctor Who; specifically "Time of the Doctor," "The Doctor's Wife," and "Into the Dalek" though i've seen all of series 1-10 of the revived era :3

Sweet/spicy/savory: man, that's hard. i like both sweet and spicy food in moderation

Relationship status: single

Last thing I googled: "kinematic equations;" i was playing a ttrpg with my friends last night, and they wanted to know what the acceleration of something floating down a river would be given some arbitrary values for initial velocity, distance, and time. it was .225 ft/s/s btw

Current obsession: clone wars and the clones. i won't shut up about them. i love the silly little boys so much. i thought i'd find something new by now. NO! MORE CLONES!!!

I look forward to telling you: i got so many ideas kicking around. i got oc ideas sitting in sketches. i got an entire bad batch au i've been workshopping with one of my closest friends for months. there's also my overall art plans for the year. for being so inarticulate, i won't shut up XD

npt: @eobe

2 notes

Text

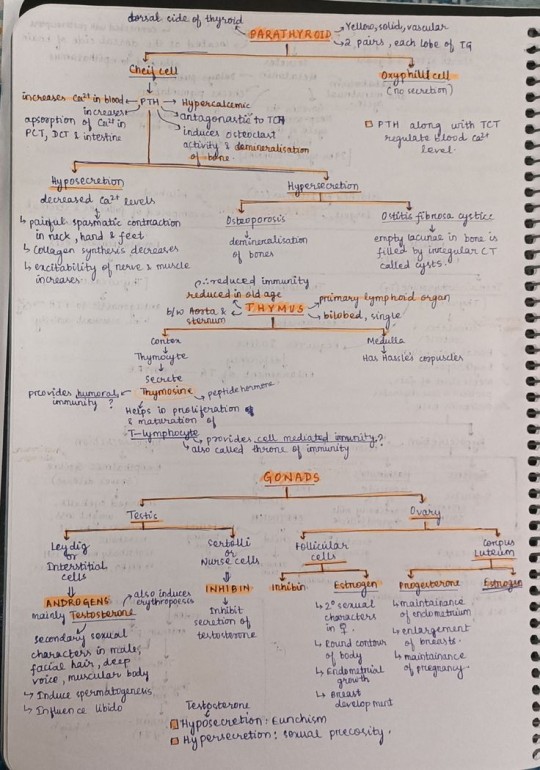

130 Day Productivity Challenge!

30 April, 2024 - Day 130

And with this post, I have achieved 130 days of productive work and continuous study.

I wrote a mock test in the morning to check my spirits. It went fine, I noted down my doubts in it and surely aim to resolve them in time.

I revised some basic maths, Units and Dimensions, Kinematics, Morphology in flowering plants, Ecology, Plant Growth, Evolution and Plant Families before my test today.

I'm getting 677/720 in today's test, a good improvement from yesterday's score. And today's paper was of a medium-difficult level.

I updated my mistake book and went through the topics in NCERT.

I revised NCERT of Phy book 1 through summaries and points to ponder.

Hope you had a good day💛

⭐✨⭐✨⭐

This productivity streak has been successfully completed and I find it exciting to move forward and work further. NEET 2024 is 4 days away and for the first time, I'm excited.

My ask box and messages are always open to students and friends interested in mutual topics and easy conversation. All the very best to you all! It was such a treat being on this journey 🌈💛

13 notes

Text

3D rigging is giving me a headache

I hate wrists. I hate ankles. I hate necks. I hate torsos. I hate inverse kinematics. I hate forward kinematics. I hate hips. I hate hands.

If anyone has advice I am begging for it. Please. God.

2 notes

Note

Just gonna imagine the guy as Sigurd until we get the full version because DANG that Sakura tiddy

I have tried so hard to get Sigurd into Koikatsu and I am losing my mind. No dragon head props for characters, sculpting a dragon head ends up with weird fucking mouths (in that they have a human mouth at the end of a snout). The only half decent dragon or lizard man in Studio is Forward Kinematic only and I am not patient enough to rig up an Inverse Kinematic rig for it or pose it in FK. I have tried so hard to get this man into Koikatsu and I am failing.

3 notes

Text

Time from a, r, and ω [Ex. 1]

A car decelerates at 6.6 m/s². The tires have a radius of 0.26 m and rotate in the positive direction during forward motion. If the initial angular velocity is 94 rad/s, how long does it take the car to fully stop?

-- Given values:
-> a = 6.6 m/s²
-> r = 0.26 m
-> ωi = 94 rad/s
-> ωf = 0 rad/s

-- Use one of the kinematic equations
1. ωf = ωi + αt
2. Δθ = ωi·t + (1/2)αt²
3. ωf² = ωi² + 2αΔθ

-- Use Equation 1

-- Missing α, which is angular acceleration

-- Solve for α

α = a/r
α = 6.6 / 0.26
α = 25.385 rad/s²

-- Should be negative, because the car is decelerating

α = -25.385 rad/s²

-- Now solve for t using Equation 1

ωf = ωi + αt
0 = 94 + (-25.385)t
0 = 94 - 25.385t
-94 = -25.385t
94 = 25.385t
94 / 25.385 = t
3.7 = t

-- It takes the car 3.7 seconds to stop
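-- Quick check of the same steps in code. This is only a sketch: the function name and argument names are made up for illustration, and it assumes the tires roll without slipping so that α = a/r holds.

```python
def stopping_time(linear_decel, wheel_radius, omega_initial):
    """Time for a rolling wheel to reach zero angular velocity.

    linear_decel  -- magnitude of the car's deceleration in m/s^2
    wheel_radius  -- tire radius in m
    omega_initial -- initial angular velocity in rad/s
    """
    # Angular acceleration; negative because the wheel is slowing down.
    alpha = -linear_decel / wheel_radius
    # Equation 1 with omega_final = 0:  0 = omega_initial + alpha * t
    return (0.0 - omega_initial) / alpha


t = stopping_time(6.6, 0.26, 94.0)
print(round(t, 1))  # 3.7
```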

#studyblr#notes#my notes#physics example#physics problems#worked physics problems#worked physics example#physics#physics notes#physics vocabulary#physics vocab#science#introductory physics#physics 1#physics 2#physics I#physics II#physics terminology#scientific terminology#physics concepts#concepts in physics#deceleration#angular acceleration#acceleration

3 notes

Text

Admit One Dev Blog: Update 46 - Rigging (Part 2)

This week we have another quick rigging update:

The basic skeleton is now complete! It may need a little polish here and there but it's in a place where I'll be comfortable with making controls for it as well as setting up the Inverse/Forward Kinematics. While the mesh isn't final at the moment, I'd like to get to a place where I can start making custom animations for both the Player and human-like enemies. New animations will allow me to make a lot more progress when it comes to improving the moment to moment gameplay and hopefully I'll be able to retain these animations when I update both the Player and enemy models later on. Thanks for reading, I'll catch you next week!

#survival horror#admit one#horror#video games#soulslike#roguelite#roguelike#game development#game dev blog#resident evil#resident evil 4#indie games#indiedev#gamedev#indiegamedev#unreal engine#solodev#indie game dev

4 notes

Note

What are some of your current blender projects? Or just things you like abt the program and hobby?

Hi sorry I meant to answer this several days ago but I kept forgetting until right before bedtime and I knew this would not be short... Thank you for the ask!

Ok so I don't know what your definition of "current projects" is but there's nothing I'm actively working on right now, I'm just playing Minecraft all day, but I have many wips in various degrees of being abandoned, most recent being an alternative to https://minetrim.com/ that would run in Blender and be controlled by a geometry nodes modifier. I do hope I get around to finishing it, since the online tool as it currently exists is lacking in many aspects and can be a little buggy. It probably wouldn't be all that useful for most players since you'd need to install Blender and understand a few basics of how to use it, but it would at least help me plan my armor trims (if you don't know, Minecraft recently added a system for cosmetic customization of the player's armor combining colors and patterns, and the website and my tool are meant to simulate user-selected combinations to see what it'd look like).

And that is one of my favorite things to do in Blender, create little tools with geometry nodes, which is basically a visual simplistic programming language interacting with many of the things Blender does. I can create a customizable banana with randomized shapes and spot locations with a slider for age, length, curviness, thickness, you name it, and produce a photorealistic banana (this is one abandoned project), or I could make a regular polyhedron generator taking only a Schläfli symbol as input (another abandoned project, here's a great video that inspired me to try, I highly recommend it if you have no idea what I just said), or, as mentioned, an armor trim simulator.

But Blender can do so much and I can't talk about it without mentioning that it is Completely Free And Open Source and it's good for so much more than just 3D modeling. You can of course add materials to the model, defining exactly how the surface interacts with light, defaulting to what is physically possible but not limiting to this, allowing you to create every possible and a wide range of impossible materials. And then of course you can render that, with several rendering engines to choose from depending on the look you want and the computing power and time you're willing to invest, but I usually use the ray tracing engine (simulating rays of light for most realistic result, which you shouldn't do in video games much because games have to render in real time please don't conflate my use with the crimes of AAA games).

But why stop at a still image? You can animate the model, and the material if you want, and you can animate basically any property, and of course to make animation more interesting you can rig it to a skeleton (usually how characters are animated) (includes inverse and forward kinematics of course) or do physics simulation including fluid/smoke/fire simulation, soft and rigid body simulation, and cloth simulation. They've made some changes to simulation and hair since I last looked at those aspects so I'm not totally in the loop on the details but it's good and only getting better.

Ok cool you've got your little animation and you can render it to a little video, neat, but it's just in a void? Do you have to model the whole background too? Well, you can, or (I'm gonna oversimplify and gloss over a lot of differences and unique challenges of each method here) you could use an HDRI (high dynamic range image, meaning it contains A Lot more information about lighting from a much wider range of values than a normal image... you know how you point your phone camera at a light source and the image goes dark? That's because your phone camera has a lower dynamic range than human eyes, it can only see a small range of light or dark values at a time), or, hey, if you do have a video camera, you can try something really fun... camera tracking.

If you film a video of real life, with the camera moving around, you can plug that video into Blender and with some help it can figure out exactly how the camera is moving through space! This means, you can make the virtual camera inside Blender move the exact same way around the animation you made, and you can then render that video and lay it over the footage you took and BAM your 3D object is now in that scene! It might still look off, of course, if it doesn't cast a shadow on the ground or if it's reflective and clearly not reflecting its surroundings, but there are solutions to all these issues and if you're like me it'll turn into a fun little puzzle.

And that's just. Just scratching the surface ok? I just. Love Blender. I love that it's free and open source. If you have a computer and time you can just. Make a movie with amazing special effects and yes yes we love practical effects but trust me digital effects are not evil they're just overused because it's not unionized so it's cheaper labor but you can't tell me it isn't cool that you could make a photorealistic video of a dragon landing on your own rooftop without paying for software or putting up with ads or risking malware with piracy it's legal it's free it's fun. I will not tell you it's not time consuming but I will tell you it's cool and free*

*I recognize not everyone has free access to a video camera, internet, a computer, and enough electricity to run it

Anyway that's my summary of why I love Blender. "That's not a summary, Maws." Trust me. This is the short version.

2 notes

Note

Henry help me my robotics assignment has a question i need help with.

Inverse Kinematics is defined as given the position and orientation of the end effector or last frame find the - and it gives options

position and orientation of the remaining frames

joint angles/lengths

joint rates

joint positions

Well, chump, Inverse Kinematics is totes about determining the joint parameters for like... angles, orientation, or linear displacements and stuff. You know... to position and orient the end effector in the pose you need.

Tl;dr: The calculation of the variables of the set of joints and linkages connected to an end effector by knowing the position and orientation of that end effector

Forward = You know the link and joint variables but not the position and orientation of the End Effector

Inverse = You know the position and orientation of the End Effector but not the link and joint variables.

Take from that what you will.
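If it helps to see the forward/inverse split in action, here is a rough sketch for a two-link planar arm. Everything in it is an assumption for illustration: the function names, the unit link lengths, and the choice to return only one of the two possible elbow solutions. It is not taken from the assignment.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Forward: joint angles (radians) in, end-effector position out."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """Inverse: end-effector position in, joint angles (radians) out.

    Returns the solution with theta2 in [0, pi]; the mirrored elbow
    solution (theta2 negated) is not returned by this sketch.
    """
    # Law of cosines gives the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0 + 1e-9:
        raise ValueError("target is out of reach for these link lengths")
    c2 = max(-1.0, min(1.0, c2))  # guard against rounding just past +/-1
    theta2 = math.acos(c2)
    # Shoulder angle: direction to the target minus the offset from link 2.
    theta1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2


# Round trip: pick joint angles, compute the pose, then recover the angles.
x, y = forward_kinematics(0.3, 0.8)
theta1, theta2 = inverse_kinematics(x, y)
```

So the answer to the multiple-choice question is the option about joint angles/lengths: the inverse function takes the end-effector pose and hands back joint variables, which is exactly what the forward function takes as input.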

1 note
