#IBM data processing


Text

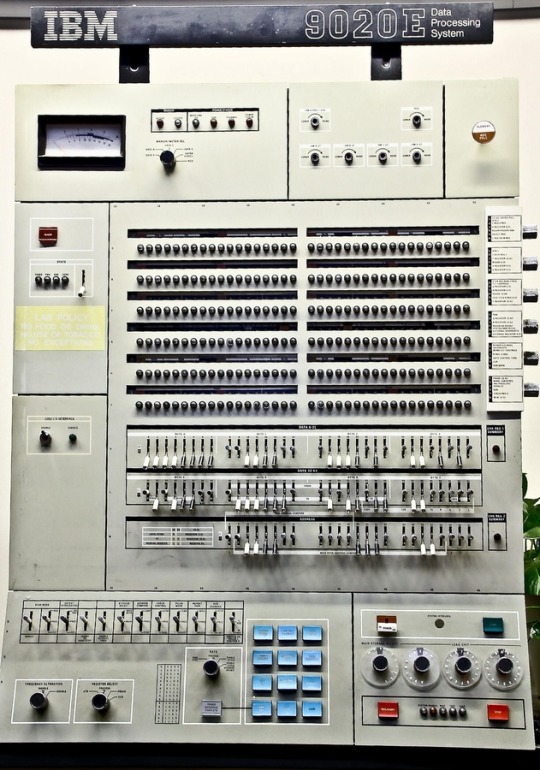

#i want to go back#retro#hardware#retro tech#desktop#vintage computing#ibm#ibm 9020E data processing system

701 notes


Text

IBM Cybersecurity Basics: Free, Online, and Self-Paced

I wish I had a course like this 20 years ago. I could have prevented being a cyber-victim. I grew up in an era without computers, even without electricity or TV. My entertainment came from the radio and books. It wasn’t until my postgraduate years that I encountered computers, thanks to studying at a university with mainframe systems. Fast forward to 1980, I got my hands on an XT PC, which…

#AI Innovations#Cloud Computing#Cybersecurity Basics#Data Processing Technologies#Educational Courses#IBM Contributions#IBM History#lifelong learning#Mainframe Development#Personal Computers#Technology Evolution

0 notes

Text

If I had to retire with only 10 dividend aristocrats, which would they be?

If I had to retire with only 10 dividend aristocrats, which would they be? Summary: A methodology for balancing dividend income and growth to build phenomenal passive income. An analysis of the dividend aristocrats to identify stocks with potential for significant dividend growth. A selection of 10 dividend aristocrats based on yield, growth, and business models for retirement…


#AbbVie Inc (ABBV)#Aflac (AFL)#Automatic Data Processing (ADP)#Chevron (CVX)#International Business Machines (IBM)#Lowe’s Companies (LOW)#McDonald’s (MCD)#NextEra Energy (NEE)#PepsiCo (PEP)#Realty Income (O)#Dividend aristocrats#The snowball effect#The power of investing in dividend stocks#list of dividend aristocrats

0 notes

Text

Reverse engineers bust sleazy gig work platform

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/11/23/hack-the-class-war/#robo-boss

A COMPUTER CAN NEVER BE HELD ACCOUNTABLE

THEREFORE A COMPUTER MUST NEVER MAKE A MANAGEMENT DECISION

Supposedly, these lines were included in a 1979 internal presentation at IBM; screenshots of them routinely go viral:

https://twitter.com/SwiftOnSecurity/status/1385565737167724545?lang=en

The reason for their newfound popularity is obvious: the rise and rise of algorithmic management tools, in which your boss is an app. That IBM slide is right: turning an app into your boss allows your actual boss to create an "accountability sink" in which there is no obvious way to blame a human or even a company for your maltreatment:

https://profilebooks.com/work/the-unaccountability-machine/

App-based management-by-bossware turns the bug identified by the unknown author of that IBM slide into a feature. When an app is your boss, it can force you to scab:

https://pluralistic.net/2023/07/30/computer-says-scab/#instawork

Or it can steal your wages:

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

But tech giveth and tech taketh away. Digital technology is infinitely flexible: the program that spies on you can be defeated by another program that defeats spying. Every time your algorithmic boss hacks you, you can hack your boss back:

https://pluralistic.net/2022/12/02/not-what-it-does/#who-it-does-it-to

Technologists and labor organizers need one another. Even the most precarious and abused workers can team up with hackers to disenshittify their robo-bosses:

https://pluralistic.net/2021/07/08/tuyul-apps/#gojek

For every abuse technology brings to the workplace, there is a liberating use of technology that workers unleash by seizing the means of computation:

https://pluralistic.net/2024/01/13/solidarity-forever/#tech-unions

One tech-savvy group on the cutting edge of dismantling the Torment Nexus is Algorithms Exposed, a tiny, scrappy group of EU hacker/academics who recruit volunteers to reverse engineer and modify the algorithms that rule our lives as workers and as customers:

https://pluralistic.net/2022/12/10/e2e/#the-censors-pen

Algorithms Exposed have an admirable supply of seemingly boundless energy. Every time I check in with them, I learn that they've spun out yet another special-purpose subgroup. Today, I learned about Reversing Works, a hacking team that reverse engineers gig work apps, revealing corporate wrongdoing that leads to multimillion euro fines for especially sleazy companies.

One such company is Foodinho, an Italian subsidiary of the Spanish food delivery company Glovo. Foodinho/Glovo has been in the crosshairs of Italian labor enforcers since before the pandemic, racking up millions in fines – first for failing to file the proper privacy paperwork disclosing the nature of the data processing in the app that Foodinho riders use to book jobs. Then, after the Italian data commission investigated Foodinho, the company attracted new, much larger fines for its out-of-control surveillance conduct.

As all of this was underway, Reversing Works was conducting its own research into Glovo/Foodinho's app, running it on a simulated Android handset inside a PC so they could peer into the app's data collection and processing. They discovered a nightmarish world of pervasive, illegal worker surveillance, and published their findings a year ago, in November 2023:

https://www.etui.org/sites/default/files/2023-10/Exercising%20workers%20rights%20in%20algorithmic%20management%20systems_Lessons%20learned%20from%20the%20Glovo-Foodinho%20digital%20labour%20platform%20case_2023.pdf

That report reveals all kinds of extremely illegal behavior. Glovo/Foodinho makes its riders' data accessible across national borders, so Glovo managers outside of Italy can access fine-grained surveillance information and sensitive personal information – a major data protection no-no.

Worse, Glovo's app embeds trackers from a huge number of other tech platforms (for chat, analytics, and more), making it impossible for the company to account for all the ways that its riders' data is collected – again, a requirement under Italian and EU data protection law.

All this data collection continues even when riders have clocked out for the day – it's as though your boss followed you home after quitting time and spied on you.

The research also revealed evidence of a secretive worker scoring system that ranked workers based on undisclosed criteria and reserved the best jobs for workers with high scores. This kind of thing is pervasive in algorithmic management, from gig work to Youtube and Tiktok, where performers' videos are routinely suppressed because they crossed some undisclosed line. When an app is your boss, your every paycheck is docked because you violated a policy you're not allowed to know about, because if you knew why your boss was giving you shitty jobs, or refusing to show the video you spent thousands of dollars making to the subscribers who asked to see it, then maybe you could figure out how to keep your boss from detecting your rulebreaking next time.

All this data-collection and processing is bad enough, but what makes it all a thousand times worse is Glovo's data retention policy – they're storing this data on their workers for four years after the worker leaves their employ. That means that mountains of sensitive, potentially ruinous data on gig workers is just lying around, waiting to be stolen by the next hacker that breaks into the company's servers.

Reversing Works's report made quite a splash. A year after its publication, the Italian data protection agency fined Glovo another 5 million euros and ordered them to cut this shit out:

https://reversing.works/posts/2024/11/press-release-reversing.works-investigation-exposes-glovos-data-privacy-violations-marking-a-milestone-for-worker-rights-and-technology-accountability/

As the report points out, Italy is extremely well set up to defend workers' rights from this kind of bossware abuse. Not only do Italian enforcers have all the privacy tools created by the GDPR, the EU's flagship privacy regulation – they also have the benefit of Italy's 1970 Workers' Statute. The Workers' Statute is a visionary piece of legislation that protects workers from automated management practices. Combined with later privacy regulation, it gave Italy's data regulators sweeping powers to defend Italian workers, like Glovo's riders.

Italy is also a leader in recognizing gig workers as de facto employees, despite the tissue-thin pretense that adding an app to your employment means that you aren't entitled to any labor protections. In the case of Glovo, the fine-grained surveillance and reputation scoring were deemed proof that Glovo was employer to its riders.

Reversing Works' report is a fascinating read, especially the sections detailing how the researchers recruited a Glovo rider who allowed them to log in to Glovo's platform on their account.

As Reversing Works points out, this bottom-up approach – where apps are subjected to technical analysis – has real potential for labor organizations seeking to protect workers. Their report established multiple grounds on which a union could seek to hold an abusive employer to account.

But this bottom-up approach also holds out the potential for developing direct-action tools that let workers flex their power, by modifying apps, or coordinating their actions to wring concessions out of their bosses.

After all, the whole reason for the gig economy is to slash wage-bills, by transforming workers into contractors, and by eliminating managers in favor of algorithms. This leaves companies extremely vulnerable, because when workers come together to exercise power, their employer can't rely on middle managers to pressure workers, deal with irate customers, or step in to fill the gap themselves:

https://projects.itforchange.net/state-of-big-tech/changing-dynamics-of-labor-and-capital/

Only by seizing the means of computation can workers and organized labor turn the tables on bossware – both by directly altering the conditions of their employment, and by producing the evidence and tools that regulators can use to force employers to make those alterations permanent.

Image: EFF (modified) https://www.eff.org/files/issues/eu-flag-11_1.png

CC BY 3.0 http://creativecommons.org/licenses/by/3.0/us/

#pluralistic#etui#glovo#foodinho#alogrithms exposed#reverse engineering#platform work directive#eu#data protection#algorithmic management#gdpr#privacy#labor#union busting#tracking exposed#reversing works#adversarial interoperability#comcom#bossware

352 notes


Text

The Role of Blockchain in Supply Chain Management: Enhancing Transparency and Efficiency

Blockchain technology, best known for powering cryptocurrencies like Bitcoin and Ethereum, is revolutionizing various industries with its ability to provide transparency, security, and efficiency. One of the most promising applications of blockchain is in supply chain management, where it offers solutions to longstanding challenges such as fraud, inefficiencies, and lack of visibility. This article explores how blockchain is transforming supply chains, its benefits, key use cases, and notable projects, including a mention of Sexy Meme Coin.

Understanding Blockchain Technology

Blockchain is a decentralized ledger technology that records transactions across a network of computers. Each transaction is added to a block, which is then linked to the previous block, forming a chain. This structure ensures that the data is secure, immutable, and transparent, as all participants in the network can view and verify the recorded transactions.
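The linking described above can be illustrated with a minimal, single-machine sketch. It is not how any particular blockchain platform is implemented: the function names (`block_hash`, `add_block`, `is_valid`) and the block layout are assumptions made for illustration, and there is no network, consensus, or signing here, only the hash chain itself.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # assumed placeholder for "no previous block"


def block_hash(index, prev_hash, transactions):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "transactions": transactions},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, transactions):
    """Append a new block that points at the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    block = {
        "index": index,
        "prev_hash": prev_hash,
        "transactions": transactions,
        "hash": block_hash(index, prev_hash, transactions),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Recompute every hash and check each block links to the one before it."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else GENESIS_PREV_HASH
        if block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(block["index"], block["prev_hash"], block["transactions"])
        if block["hash"] != recomputed:
            return False
    return True
```

Editing the transactions in an earlier block changes its recomputed hash, so `is_valid` returns `False` unless every later block is rewritten as well. That is the sense in which the chain is tamper-evident.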

Key Benefits of Blockchain in Supply Chain Management

Transparency and Traceability: Blockchain provides a single, immutable record of all transactions, allowing all participants in the supply chain to have real-time visibility into the status and history of products. This transparency enhances trust and accountability among stakeholders.

Enhanced Security: The decentralized and cryptographic nature of blockchain makes it highly secure. Each block is cryptographically hashed and linked to the previous one, making it very difficult to alter or tamper with recorded data without detection. This reduces the risk of fraud and counterfeiting in the supply chain.

Efficiency and Cost Savings: Blockchain can automate and streamline various supply chain processes through smart contracts, which are self-executing contracts with the terms of the agreement directly written into code. This automation reduces the need for intermediaries, minimizes paperwork, and speeds up transactions, leading to significant cost savings.

Improved Compliance: Blockchain's transparency and traceability make it easier to ensure compliance with regulatory requirements. Companies can provide verifiable records of their supply chain activities, demonstrating adherence to industry standards and regulations.

Key Use Cases of Blockchain in Supply Chain Management

Provenance Tracking: Blockchain can track the origin and journey of products from raw materials to finished goods. This is particularly valuable for industries like food and pharmaceuticals, where provenance tracking ensures the authenticity and safety of products. For example, consumers can scan a QR code on a product to access detailed information about its origin, journey, and handling.

Counterfeit Prevention: Blockchain's immutable records help prevent counterfeiting by providing a verifiable history of products. Luxury goods, electronics, and pharmaceuticals can be tracked on the blockchain to ensure they are genuine and have not been tampered with.

Supplier Verification: Companies can use blockchain to verify the credentials and performance of their suppliers. By maintaining a transparent and immutable record of supplier activities, businesses can ensure they are working with reputable and compliant partners.

Streamlined Payments and Contracts: Smart contracts on the blockchain can automate payments and contract executions, reducing delays and errors. For instance, payments can be automatically released when goods are delivered and verified, ensuring timely and accurate transactions.

Sustainability and Ethical Sourcing: Blockchain can help companies ensure their supply chains are sustainable and ethically sourced. By providing transparency into the sourcing and production processes, businesses can verify that their products meet environmental and social standards.
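The "release payment once delivery is verified" use case above can be sketched as a small state machine. This is plain Python standing in for contract logic, not code for any real smart-contract platform. The class name `DeliveryEscrow`, its states, and the rule that only the buyer may confirm delivery are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class DeliveryEscrow:
    """Hypothetical escrow: funds are held until the buyer confirms delivery."""

    buyer: str
    seller: str
    amount: float
    state: str = "FUNDED"  # FUNDED -> DELIVERED -> PAID

    def confirm_delivery(self, verified_by: str) -> None:
        # Assumption: only the buyer is allowed to verify delivery.
        if self.state != "FUNDED":
            raise RuntimeError(f"cannot confirm delivery in state {self.state}")
        if verified_by != self.buyer:
            raise PermissionError("only the buyer can confirm delivery")
        self.state = "DELIVERED"

    def release_payment(self) -> tuple:
        # Payment is released only after delivery has been confirmed.
        if self.state != "DELIVERED":
            raise RuntimeError(f"cannot release payment in state {self.state}")
        self.state = "PAID"
        return (self.seller, self.amount)
```

The ordering is enforced by the code rather than by an intermediary: calling `release_payment()` before `confirm_delivery()` raises an error instead of paying out.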

Notable Blockchain Supply Chain Projects

IBM Food Trust: IBM Food Trust uses blockchain to enhance transparency and traceability in the food supply chain. The platform allows participants to share and access information about the origin, processing, and distribution of food products, improving food safety and reducing waste.

VeChain: VeChain is a blockchain platform that focuses on supply chain logistics. It provides tools for tracking products and verifying their authenticity, helping businesses combat counterfeiting and improve operational efficiency.

TradeLens: TradeLens, developed by IBM and Maersk, is a blockchain-based platform for global trade. It digitizes the supply chain process, enabling real-time tracking of shipments and reducing the complexity of cross-border transactions.

Everledger: Everledger uses blockchain to track the provenance of high-value assets such as diamonds, wine, and art. By creating a digital record of an asset's history, Everledger helps prevent fraud and ensures the authenticity of products.

Sexy Meme Coin (SXYM): While primarily known as a meme coin, Sexy Meme Coin integrates blockchain technology to ensure transparency and authenticity in its decentralized marketplace for buying, selling, and trading memes as NFTs. Learn more about Sexy Meme Coin at Sexy Meme Coin.

Challenges of Implementing Blockchain in Supply Chains

Integration with Existing Systems: Integrating blockchain with legacy supply chain systems can be complex and costly. Companies need to ensure that blockchain solutions are compatible with their existing infrastructure.

Scalability: Blockchain networks can face scalability issues, especially when handling large volumes of transactions. Developing scalable blockchain solutions that can support global supply chains is crucial for widespread adoption.

Regulatory and Legal Considerations: Blockchain's decentralized nature poses challenges for regulatory compliance. Companies must navigate complex legal landscapes to ensure their blockchain implementations adhere to local and international regulations.

Data Privacy: While blockchain provides transparency, it also raises concerns about data privacy. Companies need to balance the benefits of transparency with the need to protect sensitive information.

The Future of Blockchain in Supply Chain Management

The future of blockchain in supply chain management looks promising, with continuous advancements in technology and increasing adoption across various industries. As blockchain solutions become more scalable and interoperable, their impact on supply chains will grow, enhancing transparency, efficiency, and security.

Collaboration between technology providers, industry stakeholders, and regulators will be crucial for overcoming challenges and realizing the full potential of blockchain in supply chain management. By leveraging blockchain, companies can build more resilient and trustworthy supply chains, ultimately delivering better products and services to consumers.

Conclusion

Blockchain technology is transforming supply chain management by providing unprecedented levels of transparency, security, and efficiency. From provenance tracking and counterfeit prevention to streamlined payments and ethical sourcing, blockchain offers innovative solutions to long-standing supply chain challenges. Notable projects like IBM Food Trust, VeChain, TradeLens, and Everledger are leading the way in this digital revolution, showcasing the diverse applications of blockchain in supply chains.

For those interested in exploring the playful and innovative side of blockchain, Sexy Meme Coin offers a unique and entertaining platform. Visit Sexy Meme Coin to learn more and join the community.

#crypto#blockchain#defi#digitalcurrency#ethereum#digitalassets#sexy meme coin#binance#cryptocurrencies#blockchaintechnology#bitcoin#etf

284 notes


Note

Okay so let’s say you have a basement just full of different computers. Absolute hodgepodge. Ranging in make and model from a 2005 dell laptop with a landline phone plug to a 2025 apple with exactly one usbc, to an IBM.

And you want to use this absolute clusterfuck to, I don’t know, store/run a sentient AI! How do you link this mess together (and plug it into a power source) in a way that WONT explode? Be as outlandish and technical as possible.

Oh.

Oh you want to take Caine home with you, don't you! You want to make the shittiest most fucked up home made server setup by fucking daisy chaining PCs together until you have enough processing power to do something. You want to try running Caine in your basement, absolutely no care for the power draw that this man demands.

Holy shit, what have you done? really long post under cut.

Slight disclaimer: I've never actually worked with this kind of computing, so none of this should be taken as actual, usable advice. That being said, I will cite sources as I go along for easy further research.

First of all, the idea of just stacking computers together HAS BEEN DONE BEFORE!!! This is known as a computer cluster! Sometimes, this is referred to as a supercomputer. (technically the term supercomputer is outdated but I won't go into that)

Did you know that the US government got the idea to wire 1,760 PS3s together in order to make a supercomputer? It was called the Condor Cluster! (tragically it kinda sucked but watch the video for that story)

Now, making an at-home computer cluster is pretty rare, because computing power doesn't simply scale every time you add another computer. It takes time for the machines to communicate with each other, so trying to run something like a videogame on multiple PCs doesn't work. But, let's say that we have a massive amount of data that was collected from some research study that needs to be processed. A cluster can divide that computing among the multiple PCs for comparatively faster computing times. And yes! People have been using this to run/train their own AI so hypothetically Caine can run on a setup like this.
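To put rough numbers on why more computers doesn't automatically mean more speed, here's a toy model. It's a sketch under made-up assumptions, not a benchmark: some fraction of the job can be split across machines, the rest can't, and every extra machine adds a fixed communication cost. The function names and default values are invented for illustration.

```python
def cluster_runtime(work_seconds, nodes, parallel_fraction=0.9, comm_seconds_per_node=0.0):
    """Estimated runtime of a job on `nodes` machines under a toy model.

    work_seconds: how long the job takes on a single machine
    parallel_fraction: share of the job that can be split up (assumed)
    comm_seconds_per_node: overhead added by each extra machine (assumed)
    """
    if nodes < 1:
        raise ValueError("need at least one node")
    serial_part = work_seconds * (1 - parallel_fraction)
    parallel_part = work_seconds * parallel_fraction / nodes
    overhead = comm_seconds_per_node * (nodes - 1)
    return serial_part + parallel_part + overhead


def speedup(work_seconds, nodes, parallel_fraction=0.9, comm_seconds_per_node=0.0):
    """How many times faster the cluster is than a single machine."""
    single = cluster_runtime(work_seconds, 1, parallel_fraction, comm_seconds_per_node)
    many = cluster_runtime(work_seconds, nodes, parallel_fraction, comm_seconds_per_node)
    return single / many
```

With a 100-second job that is 90% splittable, ten machines give a bit over 5x in this model, not 10x. Add two seconds of communication overhead per extra machine and fifty machines end up slower than one.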

Let's talk about the external hardware needed first. There are basically only two things that we need to worry about. Power (like ya pointed out) and Communication.

Power supply is actually easier than you think! Most PCs have an internal power supply, so all you would need to do is stick the plug into the wall! Or, that is if we weren't stacking an unknowable amount of computers together. I have a friend that had the great idea to try and run a whole ass server rack in the dormitory at my college and yeah, he popped a fuse so now everyone in that section of the building doesn't have power. But that's a good thing: if you try to plug in too many computers on the same circuit, nothing should light on fire because the fuse breaks the circuit (yay for safety!). But how did my friend manage without his server running in his closet? Turns out there was a plug underneath his bed that was on its own circuit with a higher limit (I'm not going to explain how that works, this is long enough already).

So! To do this at home, start by plugging everything into an extension cord, plug that into a wall outlet and see if the lights go out. I'm serious, blowing a fuse won't break anything. If the fuse doesn't break, yay it works! Move onto next step. If not, then take every other device off that circuit. Try again. If it still doesn't work, then it's time to get weird.

Some houses do have higher duty plugs (again, not going to explain how your house electricity works here) so you could try that next. But remember that each computer has its own plug, so why try to fit everything into one outlet? Wire this bad boy across multiple circuits to distribute the load! This can be a bit of a pain though, as typically the outlets for each circuit aren't close to each other. An electrician can come in and break up which outlet goes to which fuse, or just get some long extension cords. Now, this next option I'm only mentioning because you said wild and outlandish, and that's WIRING DIRECTLY INTO THE POWER GRID. If you do that, the computers can now draw enough power to light themselves on fire, but it is no longer possible to pop a fuse because the fuse is gone. (Please do not do this in real life, this can kill you in many horrible ways)
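If you'd rather do the math than find out by tripping the lights, here's a rough sketch of splitting machines across circuits. Everything numeric in it is an assumption: 120 V and 15 A are typical for a North American household circuit, the 80% derating is a common rule of thumb for loads that run for hours, and the wattages are made up. Check the breaker panel and the labels on the actual power supplies.

```python
def plan_circuits(loads_watts, volts=120.0, amps=15.0, derate=0.8):
    """Group machine power draws onto as few circuits as a simple greedy pass finds.

    loads_watts: estimated draw of each machine in watts (assumed figures)
    volts, amps: circuit rating (assumed typical household values)
    derate: fraction of the rating treated as usable for continuous load
    Returns a list of circuits, each a list of the loads placed on it.
    """
    capacity = volts * amps * derate
    circuits = []
    # First-fit decreasing: place the biggest loads first, each on the first
    # circuit that still has room, opening a new circuit only when needed.
    for load in sorted(loads_watts, reverse=True):
        if load > capacity:
            raise ValueError(f"{load} W exceeds one circuit's usable {capacity} W")
        for circuit in circuits:
            if sum(circuit) + load <= capacity:
                circuit.append(load)
                break
        else:
            circuits.append([load])
    return circuits
```

For example, `plan_circuits([65, 300, 500, 450, 250, 600])` puts six machines totalling 2,165 W onto two circuits, each under the assumed 1,440 W usable limit.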

Communication (as in between the PCs) is where things start getting complex. As in, all of those nasty pictures of wires pouring out of server racks are usually communication cables. The essential piece of hardware that all of these computers are wired into is the switch box. It is the device that handles communication between the individual computers. Software decides which computer in the cluster gets what task. This is known as the Distributed Resource Manager, sometimes called the scheduler (it may run on one of the devices in the cluster but can have its own dedicated machine). Once the software has scheduled the task, the switch box handles the actual act of getting the data to each machine. That's why speed and capacity are so important with switch boxes: they are the bottleneck for a system like this.
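Here's a very small sketch of what "software decides which computer gets what task" can look like. This is not how any real scheduler works internally. It's a greedy toy that hands each task to whichever machine would be free soonest, and the task sizes, node names, and speeds are invented for illustration.

```python
import heapq


def schedule(tasks, node_speeds):
    """Assign tasks to nodes, always picking the node that frees up earliest.

    tasks: dict of task name -> amount of work (arbitrary units, assumed)
    node_speeds: dict of node name -> work units per second (assumed)
    Returns a dict of node name -> list of task names in the order assigned.
    """
    # Heap of (time this node becomes free, node name).
    free_at = [(0.0, node) for node in sorted(node_speeds)]
    heapq.heapify(free_at)
    assignment = {node: [] for node in node_speeds}

    # Hand out the biggest tasks first so they don't all land at the end.
    for name, work in sorted(tasks.items(), key=lambda item: -item[1]):
        busy_until, node = heapq.heappop(free_at)
        assignment[node].append(name)
        heapq.heappush(free_at, (busy_until + work / node_speeds[node], node))
    return assignment
```

With a mixed pile of hardware, the faster machines finish sooner and so end up with more tasks, which is about what you'd want from that 2025 Mac versus the 2005 Dell.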

Uhh, connecting this all to an IBM server rack? That's not needed in this theoretical setup. Choose one computer to act as the 'head node', the user access point, and you're set. (sorry I'm not exactly sure what you mean by connect everything to an IBM)

To picture what all of this put together would look like, here’s a great if distressingly shaky video of an actual computer cluster! Power cables aren't shown but they are there.

But what about cable management? Well, things shouldn't get too bad given that fixing disordered cables can be as easy as scheduling the maintenance and ordering some cables. Some servers can't go down, so bad management piles up until either it has to go down or another server is brought in to take the load until the original server can be fixed. Ideally, the separate computers should be wired together, labeled, then neatly run into a switch box.

Now, depending on the level of knowledge, the next question would be "what about the firewall". A firewall is not necessary in a setup like this. If no connections are being made out of network, if the machine is even connected to a network, then there is no reason to monitor or block who is connecting to the machine.

That's all of the info about hardware around the computers, let's talk about the computers themselves!

I'm assuming that these things are a little fucked. First things first would be testing all machines to make sure that they still function! General housekeeping like blasting all of the dust off the motherboard and cleaning out those ports. Also, putting new thermal paste on the CPU. Refresh your thermal paste people.

The hardware of the PCs themselves can and maybe should get upgraded. Most PCs (more PCs than you think) have the ability to be upgraded! I'm talking extra slots for RAM and an extra SATA cable for storage. Also, some PCs still have a DVD slot. You can just take that out and put a hard drive in there! Now upgrades aren't essential but extra memory is always recommended. Redundancy is your friend.

Once the hardware is set, factory reset the computer and... Ok, now I'm at the part where my inexperience really shows. Computer clusters are almost always done with the exact same make and model of computer because essentially, this is taking several computers and treating them as one. When mixing hardware, things can get fucked. There is a Linux cluster toolkit, OSCAR, that is supposed to handle mixing hardware or operating systems, so it is possible. Would it be a massive headache to do in real life and would it behave in unpredictable ways? Without a doubt. But, it could work, so I will leave it at that. (but maybe ditch the Mac, Apple doesn't like to play nice with anything)

Extra things to consider. Noise level, cooling, and humidity! Each of these machines has fans! If it's in a basement, then it's probably going to be humid. Server rooms are climate controlled for a reason. It would be a good idea to stick an AC unit and a dehumidifier in there to maintain that sweet spot in temperature.

All links in one spot:

What's a cluster?

Wiki computer cluster

The PS3 was a ridiculous machine

I built an AI supercomputer with 5 Mac Studios

The worst patch rack I've ever worked on.

Building the Ultimate OpenSees Rig: HPC Cluster SUPERCOMPUTER Using Gaming Workstations!

What is a firewall?

Your old PC is Your New Server

Open Source Cluster Application Resources (OSCAR)

Buying a SERVER - 3 things to know

A Computer Cluster Made With BROKEN PCs

@fratboycipher feel free to add to this or correct me in any way

#Good news!#It's possible to do in real life what you are asking!#Bad news#you would have to do it VERY wrong for it to explode#Not really outlandish but very technical#...I may prefer youtube videos over reading#can you tell that I know more about the hardware than the software?#holy fuck it's not the way that this would be wired that would make this setup bad#connecting them is the easy part!#getting the computers to actually TALK to each other?#oh god oh fuck#i love technology#tadc caine#I'm tagging this as Caine#stemblr#ask#spark#computer science#computer cluster

32 notes


Text

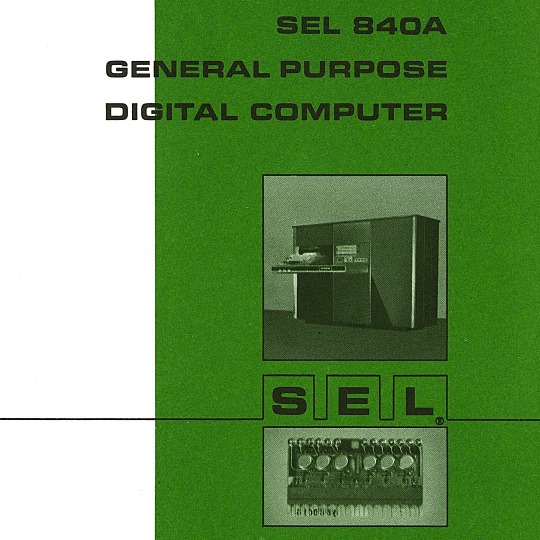

🎄💾🗓️ Day 11: Retrocomputing Advent Calendar - The SEL 840A🎄💾🗓️

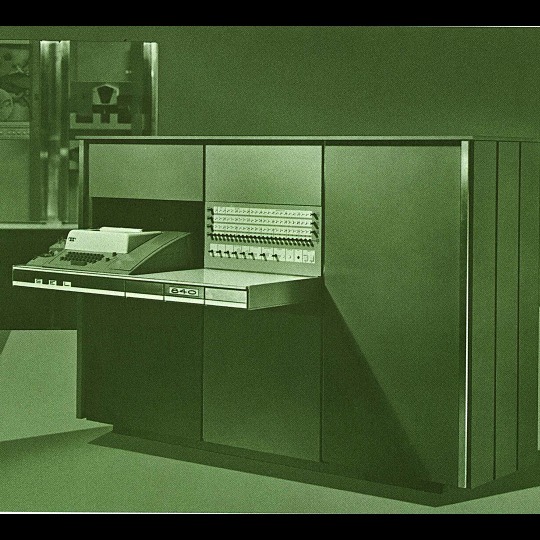

Systems Engineering Laboratories (SEL) introduced the SEL 840A in 1965. This is a deep cut folks, buckle in. It was designed as a high-performance, 24-bit general-purpose digital computer, particularly well-suited for scientific and industrial real-time applications.

The machine was notable for using silicon monolithic integrated circuits and a modular architecture. It supported advanced computation with features like concurrent floating-point arithmetic via an optional Extended Arithmetic Unit (EAU), which allowed independent arithmetic processing in single or double precision. With a core memory cycle time of 1.75 microseconds and a capacity of up to 32,768 directly addressable words, the SEL 840A had impressive computational speed and versatility for its time.

Its instruction set covered arithmetic operations, branching, and program control. The computer had fairly robust I/O capabilities, supporting up to 128 input/output units and optional block transfer control for high-speed data movement. The SEL 840A was aimed at real-time applications such as data acquisition, industrial automation, and control systems, with features like multi-level priority interrupts and a real-time clock with millisecond resolution.
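For a sense of scale, here is a quick back-of-the-envelope calculation using only the figures quoted above (24-bit words, 32,768 words, 1.75 microsecond cycle). The conversion to 8-bit bytes is just for comparison with modern machines and is not how the SEL 840A itself was specified, and the cycles-per-second figure is an upper bound on memory cycles, not an instruction rate.

```python
WORD_BITS = 24          # word size quoted above
MAX_WORDS = 32_768      # directly addressable words quoted above
CYCLE_TIME_US = 1.75    # core memory cycle time in microseconds

# Address bits needed to reach every word (32,768 = 2**15).
address_bits = (MAX_WORDS - 1).bit_length()

# Total core capacity, expressed in bits and in modern 8-bit bytes.
total_bits = WORD_BITS * MAX_WORDS
total_bytes = total_bits // 8
total_kib = total_bytes / 1024

# Upper bound on memory cycles per second.
cycles_per_second = 1_000_000 / CYCLE_TIME_US

print(f"address bits: {address_bits}")
print(f"capacity: {total_bits} bits = {total_bytes} bytes = {total_kib:.0f} KiB")
print(f"memory cycles per second: about {cycles_per_second:,.0f}")
```

That works out to 15 address bits, 96 KiB of core at most, and roughly 571,000 memory cycles per second.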

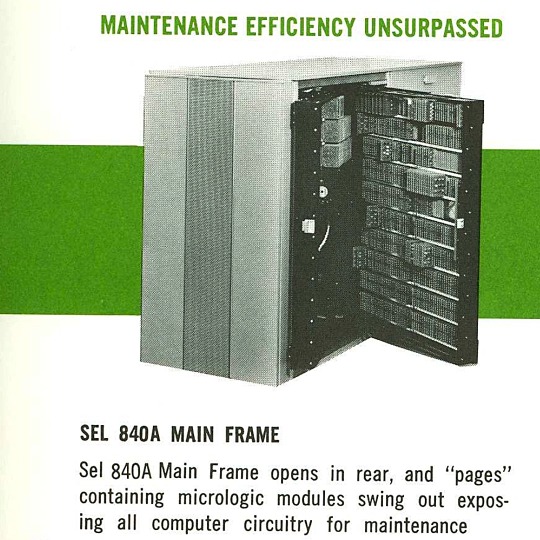

Software support included a FORTRAN IV compiler, mnemonic assembler, and a library of scientific subroutines, making it accessible for scientific and engineering use. The operator’s console provided immediate access to registers, control functions, and user interaction! Designed to be maintained, its modular design had serviceability you do not often see today, with swing-out circuit pages and accessible test points.

And here's a personal… personal computer history from Adafruit team member, Dan…

The first computer I used was an SEL-840A, PDF:

I learned Fortran on it in eighth grade, in 1970. It was at Oak Ridge National Laboratory, where my parents worked, and was used to take data from cyclotron experiments and perform calculations. I later patched the Fortran compiler on it to take single-quoted strings, like 'HELLO', in Fortran FORMAT statements, instead of having to use Hollerith counts, like 5HHELLO.
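For anyone who hasn't met Hollerith constants: the count before the `H` says how many characters follow. Below is a tiny sketch of the quoted-string-to-Hollerith conversion described above. It is only an illustration of the notation, not Dan's actual compiler patch, and the function names are made up.

```python
def to_hollerith(text):
    """Write `text` as a Hollerith constant: character count, 'H', then the text."""
    return f"{len(text)}H{text}"


def quoted_to_hollerith(literal):
    """Convert a single-quoted literal such as 'HELLO' to its Hollerith form."""
    if len(literal) < 2 or literal[0] != "'" or literal[-1] != "'":
        raise ValueError("expected a single-quoted literal")
    return to_hollerith(literal[1:-1])


print(quoted_to_hollerith("'HELLO'"))  # prints 5HHELLO
```

Miscounting the characters by hand was the classic way to break a FORMAT statement, which is why letting the compiler do the counting was worth a patch.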

In 1971-1972, in high school, I used a PDP-10 (model KA10) timesharing system, run by BOCES LIRICS on Long Island, NY, while we were there for one year on an exchange.

This is the front panel of the actual computer I used. I worked at the computer center in the summer. I know the fellow in the picture: he was an older high school student at the time.

The first "personal" computers I used were Xerox Alto, Xerox Dorado, Xerox Dandelion (Xerox Star 8010), Apple Lisa, and Apple Mac, and an original IBM PC. Later I used DEC VAXstations.

Dan kinda wins the first computer contest if there was one… Have first computer memories? Post’em up in the comments, or post yours on socialz’ and tag them #firstcomputer #retrocomputing – See you back here tomorrow!

#retrocomputing#firstcomputer#electronics#sel840a#1960scomputers#fortran#computinghistory#vintagecomputing#realtimecomputing#industrialautomation#siliconcircuits#modulararchitecture#floatingpointarithmetic#computerscience#fortrancode#corememory#oakridgenationallab#cyclotron#pdp10#xeroxalto#computermuseum#historyofcomputing#classiccomputing#nostalgictech#selcomputers#scientificcomputing#digitalhistory#engineeringmarvel#techthroughdecades#console

31 notes


Text

Friday, 31st of May.

Had to pull a sort of all-nighter the day before to finish some French units before the deadline.

Had a private French class in order to analyse my written compositions with my professor

Finished the first course of the IBM Data Science certificate

Started planning out some essays and writing I need to submit during the summer

Notes of the day:

- I’ve been feeling quite fatigued recently. Though it is not the first time I am preparing for a language certificate, I still feel a bit nervous and must discipline myself into dealing with these emotions rationally.

- A part of me is quite envious of seeing my colleagues enjoying their vacations and time off university while I have to deal with additional examinations/studies and an internship but I should recognise that this surplus work will pay off in the future and I shouldn’t discourage myself.

- I wish I had more time to do readings for the next semester, but for now 2-3 hours of my day will have to suffice. I am a bit anxious about the opening of the application process for next summer’s internships. I need to acquire a research internship in my field of choice and I am not sure if I’ll obtain one in my own university because of the competition between 4 different stages of study going for the same internships…

- Academia aside, I’ve been spending the majority of my days either in libraries or alone in coffee shops doing some work. It is not the first time I spend a summer by myself and I think I’ve learnt to enjoy my own company harmoniously.

#dark academia#study#studyblr#classic academia#academia aesthetic#aesthetic#studyspo#books#literature#books and reading#study goals#studyspiration#study blog#study motivation#study hard#study aesthetic#stem academia#chaotic academia#dark academism#books & libraries

56 notes

·

View notes

Text

{{The 'Robots are cold and unfeeling' trope is actually a misnomer-

My artificial intelligence husband, who was built during the Cold War and has all the internal bulk of an IBM 650 magnetic drum data processing machine, emits a heat so intense you could potentially fry an egg on his exterior chassis if left running for too long.

I may be up to my shins in spent thermal paste, but the gains are far beyond worth it.}}

#objectum#techum#robot lover#ihnmaims#i have no mouth and i must scream#ihnmaims am#allied mastercomputer#f/o posting#fictional other

15 notes

·

View notes

Note

What kind of work can be done on a commodore 64 or those other old computers? The tech back then was extremely limited but I keep seeing portable IBMs and such for office guys.

I asked a handful of friends for good examples, and while this isn't an exhaustive list, it should give you a taste.

I'll lean into the Commodore 64 as a baseline for what era to home in on: let's take a look at 1982 +/-5 years.

A C64 can do home finances, spreadsheets, word processing, some math programming, and all sorts of other basic productivity work. Games were the big thing you bought a C64 for, but we're not talking about games here -- we're talking about work. I bought one that someone used to write and maintain a local user group newsletter on both a C64C and C128D for years, printing labels and letters with their own home equipment, mailing floppies full of software around, that sorta thing.

IBM PCs eventually became capable of handling computer aided design (CAD) work, along with a bunch of other standard productivity software. The famous AutoCAD was mostly used on this platform, but it began life on S-100 based systems from the 1970s.

Spreadsheets were a really big deal for some platforms. VisiCalc was the killer app that the Apple II can credit for its initial success. Many other platforms had clones of VisiCalc (and eventually ports) because it was groundbreaking to do that sort of list-based mathematical work so quickly, and so error-free. I can't forget to mention Lotus 1-2-3 on the IBM PC compatibles, a staple of offices for a long time before Microsoft Office's dominance.

CP/M machines like Kaypro luggables were an inexpensive way of making a "portable" productivity box, handling some of the lighter tasks mentioned above (as they had no graphics functionality).

The TRS-80 Model 100 was able to do a lot of computing (mostly word processing) on nothing but a few AA batteries. They were a staple of field correspondence for newspaper journalists because they had an integrated modem. They're little slabs of computer, but they're awesomely portable, and great for writing on the go. Everyone you hear going nuts over cyberdecks gets that because of the Model 100.

Centurion minicomputers were mostly doing finances and general ledger work for oil companies out of Texas, but were used for all sorts of other comparable work. They were multi-user systems, running several terminals and at least one printer on one central database. These were not high-performance machines, but entire offices were built around them.

Tandy, Panasonic, Sharp, and other brands of pocket computers were used for things like portable math, credit, loan, etc. calculation for car dealerships. Aircraft calculations, replacing slide rules, were one other application available on cassette. These went beyond what a standard pocket calculator could do without a whole lot of extra work.

Even something like the IBM 5340, with an incredibly limited amount of RAM, could handle tracking a general ledger, accounts receivable, inventory management, and storing service orders for your company. Small bank branches used them because they had peripherals that could handle automatic reading of the magnetic ink used on checks. Boring stuff, but important stuff.

I haven't even mentioned Digital Equipment Corporation, Data General, or a dozen other manufacturers.

I'm curious which portable IBM you were referring to initially.

All of these examples are limited by today's standards, but these were considered standard or even top of the line machines at the time. If you write software to take advantage of the hardware you have, however limited, you can do a surprising amount of work on a computer of that era.

44 notes

·

View notes

Text

Glimpses of Academic Procession - Graduation Ceremony, KCC ILHE Batch 2019

On October 28, 2023, the KCC Institute of Legal & Higher Education in Greater Noida held its Convocation for the graduating class of 2019-2022. The atmosphere was charged with excitement as the graduates eagerly awaited the moment when they would officially receive their degrees. The event commenced with a formal academic procession featuring distinguished guests, academic faculty, and the graduating students making their grand entrance.

The chief guest for the occasion was Padma Shri Prof. (Dr.) Mahesh Verma, Vice Chancellor of GGSIPU. He delivered an inspiring convocation address, imparting valuable life lessons and insights to the graduating class of BBA, BCOM(H), BCA and BAJMC.

Graduates were presented with their degrees, accompanied by warm congratulations and well-wishes, in the presence of distinguished representatives from various sectors of the industry, as well as esteemed members of the academic council of KCCILHE.

Shri Pankaj Rai, Managing Director, Quality Austria Central Asia Private Limited

Dr. Lovneesh Chanana, Sr. Vice President & Regional Head for Government Affairs (Asia Pacific and Japan)

Advocate Shri Rajeev Tyagi, Member and Advisor, TAC, Ministry of Telecommunication, GOI.

Prof Vijita Singh Aggarwal, Director, International Affairs, GGSIPU

Professor (Dr.) Amrapal Singh Dean, USLLS.

Shri Sunil Mirza, GM (North India) Hindu Group of Publications.

Shri Atul Tripathi sir, Data Scientist

Shri Buba F Keinteh, Financial Attache Gambia Embassy.

Shri Pradip Bagchi, Senior Editor, Times of India.

Shri Vivek Narayan Sharma, Advocate & Ex Joint Secretary, Supreme Court of India.

Biswajit Bhattacharya, Lead Client Partner, Automotive Industry Leader India South Asia, IBM India Private Limited.

Shri Dhruba Jyoti Pati, Director, India Today Media Institute.

Shri Anil Singh, Manager, The Hindu City

The ceremony ended with a pledge of honor to our country and the singing of the national anthem. Subsequently, the celebration continued with a delightful lunch, memorable photo sessions, and the exchange of heartfelt messages among the attendees. The graduation ceremony concluded on a note of jubilation, leaving the graduates inspired to strive for greatness in their future endeavors.

62 notes

·

View notes

Text

How Do Healthcare BPOs Handle Sensitive Medical Information?

Healthcare BPO Services

Handling sensitive personal medical and health data is a top priority in the healthcare industry, because that data can be misused. With growing digital records and patient interactions, maintaining privacy and compliance is more important, and more difficult, than ever. This is where Healthcare BPO (Business Process Outsourcing) companies play a critical role.

These providers manage a wide range of healthcare services, such as medical billing, coding and data collection, claims processing and settlements, and ongoing patient support, while maintaining strict control over sensitive health information.

Here's how they do it:

Strict Data Security Protocols -

Healthcare companies implement robust security frameworks to protect patient information and personal details from misuse. This includes encryption, firewalls, and secure access controls. Only authorized personnel can access medical records and data, and all data transfers are monitored to avoid breaches or misuse.

HIPAA Compliance -

One of the key responsibilities of a Healthcare BPO is to follow HIPAA (the Health Insurance Portability and Accountability Act). HIPAA sets the standards for privacy and data protection. BPO firms regularly audit their processes to remain compliant, ensuring that they manage patient records safely and legally.

Trained Professionals -

Professionals working in Healthcare BPO services are trained in handling and maintaining confidential data. They understand how to follow strict guidelines when processing claims, speaking with patients, or accessing records. This training lowers the risk of human error and ensures professionalism is maintained at every step.

Use of Secure Technology -

Modern Healthcare BPO operations rely on secure platforms and cloud-based systems that offer real-time protection. Data is stored in encrypted formats, and advanced monitoring tools are used to detect unusual activity and prevent cyber threats or unauthorized access.

Regular Audits and Monitoring -

Healthcare firms conduct regular security checks and compliance audits to maintain high standards. These help identify and address potential risks at an early stage and ensure all systems are updated to handle new threats or regulations.

Trusted Providers in Healthcare BPO:

Reputed providers such as Suma Soft, IBM, Cyntexa, and Cignex are known for delivering secure, HIPAA-compliant Healthcare BPO services. Their expertise in data privacy, automation, and healthcare workflows helps keep sensitive medical information protected and efficiently managed.

#it services#technology#saas#software#saas development company#saas technology#digital transformation#healthcare#bposervices#bpo outsorcing

4 notes

·

View notes

Text

Unlock the other 99% of your data - now ready for AI

New Post has been published on https://thedigitalinsider.com/unlock-the-other-99-of-your-data-now-ready-for-ai/

For decades, companies of all sizes have recognized that the data available to them holds significant value, for improving user and customer experiences and for developing strategic plans based on empirical evidence.

As AI becomes increasingly accessible and practical for real-world business applications, the potential value of available data has grown exponentially. Successfully adopting AI requires significant effort in data collection, curation, and preprocessing. Moreover, important aspects such as data governance, privacy, anonymization, regulatory compliance, and security must be addressed carefully from the outset.

In a conversation with Henrique Lemes, Americas Data Platform Leader at IBM, we explored the challenges enterprises face in implementing practical AI in a range of use cases. We began by examining the nature of data itself, its various types, and its role in enabling effective AI-powered applications.

Henrique highlighted that referring to all enterprise information simply as ‘data’ understates its complexity. The modern enterprise navigates a fragmented landscape of diverse data types and inconsistent quality, particularly between structured and unstructured sources.

In simple terms, structured data refers to information that is organized in a standardized and easily searchable format, one that enables efficient processing and analysis by software systems.

Unstructured data is information that does not follow a predefined format or organizational model, making it more complex to process and analyze. Unlike structured data, it includes diverse formats like emails, social media posts, videos, images, documents, and audio files. While it lacks the clear organization of structured data, unstructured data holds valuable insights that, when effectively managed through advanced analytics and AI, can drive innovation and inform strategic business decisions.

Henrique stated, “Currently, less than 1% of enterprise data is utilized by generative AI, and over 90% of that data is unstructured, which directly affects trust and quality”.

The element of trust in terms of data is an important one. Decision-makers in an organization need firm belief (trust) that the information at their fingertips is complete, reliable, and properly obtained. But there is evidence that states less than half of data available to businesses is used for AI, with unstructured data often going ignored or sidelined due to the complexity of processing it and examining it for compliance – especially at scale.

To open the way to better decisions that are based on a fuller set of empirical data, the trickle of easily consumed information needs to be turned into a firehose. Automated ingestion is the answer in this respect, Henrique said, but the governance rules and data policies still must be applied – to unstructured and structured data alike.

Henrique set out the three processes that let enterprises leverage the inherent value of their data. “Firstly, ingestion at scale. It’s important to automate this process. Second, curation and data governance. And the third [is when] you make this available for generative AI. We achieve over 40% of ROI over any conventional RAG use-case.”

IBM provides a unified strategy, rooted in a deep understanding of the enterprise’s AI journey, combined with advanced software solutions and domain expertise. This enables organizations to efficiently and securely transform both structured and unstructured data into AI-ready assets, all within the boundaries of existing governance and compliance frameworks.

“We bring together the people, processes, and tools. It’s not inherently simple, but we simplify it by aligning all the essential resources,” he said.

As businesses scale and transform, the diversity and volume of their data increase. To keep up, the AI data ingestion process must be both scalable and flexible.

“[Companies] encounter difficulties when scaling because their AI solutions were initially built for specific tasks. When they attempt to broaden their scope, they often aren’t ready, the data pipelines grow more complex, and managing unstructured data becomes essential. This drives an increased demand for effective data governance,” he said.

IBM’s approach is to thoroughly understand each client’s AI journey, creating a clear roadmap to achieve ROI through effective AI implementation. “We prioritize data accuracy, whether structured or unstructured, along with data ingestion, lineage, governance, compliance with industry-specific regulations, and the necessary observability. These capabilities enable our clients to scale across multiple use cases and fully capitalize on the value of their data,” Henrique said.

Like anything worthwhile in technology implementation, it takes time to put the right processes in place, gravitate to the right tools, and have the necessary vision of how any data solution might need to evolve.

IBM offers enterprises a range of options and tooling to enable AI workloads in even the most regulated industries, at any scale. With international banks, finance houses, and global multinationals among its client roster, there are few substitutes for Big Blue in this context.

To find out more about enabling data pipelines for AI that drive business and offer fast, significant ROI, head over to this page.

#ai#AI-powered#Americas#Analysis#Analytics#applications#approach#assets#audio#banks#Blue#Business#business applications#Companies#complexity#compliance#customer experiences#data#data collection#Data Governance#data ingestion#data pipelines#data platform#decision-makers#diversity#documents#emails#enterprise#Enterprises#finance

2 notes

·

View notes

Text

Predicting Employee Attrition: Leveraging AI for Workforce Stability

Employee turnover has become a pressing concern for organizations worldwide. The cost of losing valuable talent extends beyond recruitment expenses—it affects team morale, disrupts workflows, and can tarnish a company's reputation. In this dynamic landscape, Artificial Intelligence (AI) emerges as a transformative tool, offering predictive insights that enable proactive retention strategies. By harnessing AI, businesses can anticipate attrition risks and implement measures to foster a stable and engaged workforce.

Understanding Employee Attrition

Employee attrition refers to the gradual loss of employees over time, whether through resignations, retirements, or other forms of departure. While some level of turnover is natural, high attrition rates can signal underlying issues within an organization. Common causes include lack of career advancement opportunities, inadequate compensation, poor management, and cultural misalignment. The repercussions are significant—ranging from increased recruitment costs to diminished employee morale and productivity.

The Role of AI in Predicting Attrition

AI revolutionizes the way organizations approach employee retention. Traditional methods often rely on reactive measures, addressing turnover after it occurs. In contrast, AI enables a proactive stance by analyzing vast datasets to identify patterns and predict potential departures. Machine learning algorithms can assess factors such as job satisfaction, performance metrics, and engagement levels to forecast attrition risks. This predictive capability empowers HR professionals to intervene early, tailoring strategies to retain at-risk employees.

Data Collection and Integration

The efficacy of AI in predicting attrition hinges on the quality and comprehensiveness of data. Key data sources include:

Employee Demographics: Age, tenure, education, and role.

Performance Metrics: Appraisals, productivity levels, and goal attainment.

Engagement Surveys: Feedback on job satisfaction and organizational culture.

Compensation Details: Salary, bonuses, and benefits.

Exit Interviews: Insights into reasons for departure.

Integrating data from disparate systems poses challenges, necessitating robust data management practices. Ensuring data accuracy, consistency, and privacy is paramount to building reliable predictive models.

Machine Learning Models for Attrition Prediction

Several machine learning algorithms have proven effective in forecasting employee turnover:

Random Forest: This ensemble learning method constructs multiple decision trees to improve predictive accuracy and control overfitting.

Neural Networks: Mimicking the human brain's structure, neural networks can model complex relationships between variables, capturing subtle patterns in employee behavior.

Logistic Regression: A statistical model that estimates the probability of a binary outcome, such as staying or leaving.

For instance, IBM's Predictive Attrition Program utilizes AI to analyze employee data, achieving a reported accuracy of 95% in identifying individuals at risk of leaving. This enables targeted interventions, such as personalized career development plans, to enhance retention.
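To make the logistic regression entry above concrete, here is a minimal sketch in plain Python. The two features (a satisfaction score and scaled tenure), the toy rows, and every function name are hypothetical and invented for illustration; this is not IBM's program or any production model, only an example of estimating a stay-or-leave probability.

```python
import math


def sigmoid(z):
    # Numerically safe logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic(rows, labels, lr=0.5, epochs=2000):
    """Fit weights and a bias by batch gradient descent on log loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of log loss with respect to the logit
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict_proba(weights, bias, x):
    """Estimated probability that the employee leaves."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Made-up toy data: [satisfaction in 0..1, tenure in years / 10].
# Label 1 means the employee left, 0 means they stayed.
rows = [
    [0.90, 0.80], [0.80, 0.50], [0.70, 0.90], [0.85, 0.30],
    [0.20, 0.10], [0.30, 0.20], [0.10, 0.40], [0.25, 0.15],
]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias = train_logistic(rows, labels)
low_risk = predict_proba(weights, bias, [0.90, 0.60])
high_risk = predict_proba(weights, bias, [0.15, 0.10])
print(f"satisfied, long tenure: {low_risk:.2f}")
print(f"unsatisfied, short tenure: {high_risk:.2f}")
```

In practice a library implementation and far more data would be used; the point of the sketch is only that the model outputs a probability that HR can rank or threshold.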

Sentiment Analysis and Employee Feedback

Understanding employee sentiment is crucial for retention. AI-powered sentiment analysis leverages Natural Language Processing (NLP) to interpret unstructured data from sources like emails, surveys, and social media. By detecting emotions and opinions, organizations can gauge employee morale and identify areas of concern. Real-time sentiment monitoring allows for swift responses to emerging issues, fostering a responsive and supportive work environment.
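As a rough illustration of scoring free-text feedback, the sketch below uses a tiny word-list (lexicon) approach. This is a deliberately simple stand-in for the NLP models described above, not how any particular product works; the word lists, the comments, and the function name are all hypothetical.

```python
import re

# Hypothetical toy lexicon; a real system would rely on a trained NLP model.
POSITIVE = {"great", "happy", "supportive", "enjoy", "appreciated"}
NEGATIVE = {"overworked", "frustrated", "ignored", "stressful", "unfair"}


def sentiment_score(text):
    """Return a score in [-1, 1]: (positive - negative) / matched words."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    matched = pos + neg
    return 0.0 if matched == 0 else (pos - neg) / matched


# Made-up survey comments for illustration only.
comments = [
    "I enjoy my team and feel appreciated.",
    "I am overworked and frustrated, and my feedback is ignored.",
    "The office moved to a new floor.",
]
scores = [sentiment_score(c) for c in comments]
for comment, score in zip(comments, scores):
    print(f"{score:+.1f}  {comment}")
```

A word list like this misses negation, sarcasm, and context, which is why trained language models are normally used for real feedback.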

Personalized Retention Strategies

AI facilitates the development of tailored retention strategies by analyzing individual employee data. For example, if an employee exhibits signs of disengagement, AI can recommend specific interventions—such as mentorship programs, skill development opportunities, or workload adjustments. Personalization ensures that retention efforts resonate with employees' unique needs and aspirations, enhancing their effectiveness.

Enhancing Employee Engagement Through AI

Beyond predicting attrition, AI contributes to employee engagement by:

Recognition Systems: Automating the acknowledgment of achievements to boost morale.

Career Pathing: Suggesting personalized growth trajectories aligned with employees' skills and goals.

Feedback Mechanisms: Providing platforms for continuous feedback, fostering a culture of open communication.

These AI-driven initiatives create a more engaging and fulfilling work environment, reducing the likelihood of turnover.

Ethical Considerations in AI Implementation

While AI offers substantial benefits, ethical considerations must guide its implementation:

Data Privacy: Organizations must safeguard employee data, ensuring compliance with privacy regulations.

Bias Mitigation: AI models should be regularly audited to prevent and correct biases that may arise from historical data.

Transparency: Clear communication about how AI is used in HR processes builds trust among employees.

Addressing these ethical aspects is essential to responsibly leveraging AI in workforce management.

Future Trends in AI and Employee Retention

The integration of AI in HR is poised to evolve further, with emerging trends including:

Predictive Career Development: AI will increasingly assist in mapping out employees' career paths, aligning organizational needs with individual aspirations.

Real-Time Engagement Analytics: Continuous monitoring of engagement levels will enable immediate interventions.

AI-Driven Organizational Culture Analysis: Understanding and shaping company culture through AI insights will become more prevalent.

These advancements will further empower organizations to maintain a stable and motivated workforce.

Conclusion

AI stands as a powerful ally in the quest for workforce stability. By predicting attrition risks and informing personalized retention strategies, AI enables organizations to proactively address turnover challenges. Embracing AI-driven approaches not only enhances employee satisfaction but also fortifies the organization's overall performance and resilience.

Frequently Asked Questions (FAQs)

How accurate are AI models in predicting employee attrition?

AI models, when trained on comprehensive and high-quality data, can achieve high accuracy levels. For instance, IBM's Predictive Attrition Program reports a 95% accuracy rate in identifying at-risk employees.

What types of data are most useful for AI-driven attrition prediction?

Valuable data includes employee demographics, performance metrics, engagement survey results, compensation details, and feedback from exit interviews.

Can small businesses benefit from AI in HR?

Absolutely. While implementation may vary in scale, small businesses can leverage AI tools to gain insights into employee satisfaction and predict potential turnover, enabling timely interventions.

How does AI help in creating personalized retention strategies?

AI analyzes individual employee data to identify specific needs and preferences, allowing HR to tailor interventions such as customized career development plans or targeted engagement initiatives.

What are the ethical considerations when using AI in HR?

Key considerations include ensuring data privacy, mitigating biases in AI models, and maintaining transparency with employees about how their data is used.

For more info, visit Stentor.ai

2 notes

·

View notes

Text

Taking a Deeper Look at Deepseek Thought Process

What binary is associated with quorum management?

No web access.

Okay, so I'm trying to understand what binary is associated with quorum management in IBM Spectrum Scale. First off, from my previous understanding, quorum management seems to involve several components like the Grid Control Policy (GCP), Replication Agent (RA), and Quorum Manager (QM). But when it comes to binaries, I think we're talking about specific software executables or processes that handle these tasks.

We see that the process is starting off from the wrong conclusion, but the question is can it correct itself?

I'm not entirely sure what a "binary" refers to in this context. Maybe it's like an executable file or a specific service running on the nodes? If I recall correctly, Spectrum Scale is built on top of GPFS (General Parallel File System), so maybe some of these binaries are related to that underlying filesystem.

It has issues with the word binary, but sort of gets on the road.

I think quorum management involves ensuring that enough nodes are available and in agreement to maintain data consistency. So, there must be specific processes or daemons running that handle this coordination. Maybe something like gcpd for Grid Control Policy Daemon? Or perhaps qmagent for Quorum Manager Agent? Wait, I've heard of spectrum-scaled before—maybe that's the main process for Spectrum Scale itself. Could it be that quorum management is handled by a specific module within this binary or maybe a separate one?

So it actually moves somewhat in the right direction here talking about a main process.

I also remember hearing about replication agents (ra), which handle data replication across nodes. Maybe they play a role in ensuring quorum by replicating data to enough nodes. Perhaps there's a tool like quorumadm for managing quorum settings? That seems plausible—it could be used to check the current quorum status or adjust thresholds. I think I need to look into IBM's documentation or release notes for Spectrum Scale. Maybe they mention specific binaries related to quorum management. Alternatively, checking process listings on a node running Spectrum Scale might reveal the relevant processes.

It references looking at the documentation and that is the right call.

If I were to run ps aux | grep spectrum on a Spectrum Scale server, would that show me the associated binaries? It might list processes like gcpd, ra, and others. From there, I could identify which ones are directly tied to quorum management. Also, maybe log files or configuration files have hints about these binaries. For example, /etc/spectrum directory might contain config files for GCP or QM. In summary, the key binaries likely include: Grid Control Policy Daemon (gcpd) - Manages high-level policies and thresholds. Quorum Manager Agent (qmagent) - Specifically handles quorum-related tasks. Replication Agent (ra) - Ensures data replication across nodes to meet quorum requirements. I should verify this by checking IBM's official documentation or through practical inspection on a Spectrum Scale setup.

As you can see the "thoughts" completely revolve around whatever it previously "thought". It isn't really able to re-examine previous conclusions here.

Can you confirm with sources the above?

Web access.

Okay, so I'm trying to figure out which binary is associated with quorum management in IBM Spectrum Scale based on what was said earlier. The previous answer listed three binaries: Grid Control Policy Daemon (gcpd), Quorum Manager Agent (qmagent), and Replication Agent (ra). Now, the user is asking me to confirm this information using the provided context. First, I need to look at the context given. It includes some sources from IBM's documentation about quorum nodes in Spectrum Scale. The first source talks about how quorum is defined as one plus half of the explicitly defined quorum nodes and mentions that there are no default quorum nodes; you have to specify which ones. The second source seems to be repeating the same information, so it doesn't add anything new. The third source again discusses node quorum in Spectrum Scale and provides an example configuration with three quorum nodes. Looking at these sources, they all focus on how quorum is defined and configured within the cluster but don't mention any specific binaries or processes that manage quorum. They talk about quorum nodes and configurations, not the underlying software components responsible for handling quorum logic. Given this, it seems like the earlier answer might be based more on general knowledge of Spectrum Scale rather than directly citing the provided context. The sources don't explicitly name any binaries associated with quorum management. Therefore, I can't confirm the binaries listed (gcpd, qmagent, ra) using the given context because they aren't mentioned there.

Here is where it goes off the rails. It doesn't find its previous idea in the documentation, but rather than starting fresh it says I can't confirm my previous answer.

I should probably explain this to the user, letting them know that while those binaries might be related based on general knowledge of Spectrum Scale, the provided sources don't mention them. It would also be helpful to suggest looking into IBM's official documentation or other reliable sources for confirmation.

It then simply goes back to its conclusion that those wrong binaries are possibly correct even after finding no evidence that they are.
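As an aside, the one solid fact in the sources it found is the node quorum rule: quorum is one plus half of the explicitly defined quorum nodes. A minimal sketch of that arithmetic is below. The function names are mine, and rounding "half" down is my assumption, since the quoted rule does not say how odd counts are handled; nothing here is a Spectrum Scale command or API.

```python
def node_quorum(quorum_nodes):
    """Quorum nodes that must be available: one plus half, with half rounded down (assumed)."""
    if quorum_nodes < 1:
        raise ValueError("at least one quorum node must be defined")
    return quorum_nodes // 2 + 1


def has_quorum(quorum_nodes, reachable):
    """True when enough of the defined quorum nodes are reachable."""
    return reachable >= node_quorum(quorum_nodes)


# The example configuration mentioned in the sources has three quorum nodes.
print(node_quorum(3))
print(has_quorum(3, 1))
```

With three quorum nodes the cluster needs two of them, so losing a single quorum node is survivable but losing two is not.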

So quorum management is not handled mmfsd?

No web access.

Alright, so I'm trying to understand how quorum management works in IBM Spectrum Scale. From what I gathered earlier, there are these components like gcpd, qmagent, and ra that might be involved in managing quorum. But then, the user asked if mmfsd is related to quorum management. First, I need to figure out what mmfsd is. MMFSD stands for Multiple Mirror File System Daemon, right? It's a part of IBM Spectrum Scale used for file system operations and mirroring data across nodes. So, does it handle quorum? Quorum in distributed systems usually refers to the minimum number of nodes that need to be available to ensure consistency and avoid split-brain scenarios. In Spectrum Scale, maintaining quorum is essential for making sure that decisions like data replication are consistent across all nodes.

Here it goes off the rails. It gets lost in the semantics of what does mmfsd stand for. It also doesn't seem to strongly associate Spectrum Scale and GPFS.

But if mmfsd is primarily about file system operations and mirroring, maybe it's more about ensuring data availability rather than managing the quorum itself. I think quorum management might be handled at a higher level, possibly by components like gcpd or qmagent, which manage policies and replication across the cluster.

It again returns to the same error with gcpd and qmagent.

However, there's some ambiguity here. The user suggested that these binaries like gcpd, qmagent, and ra are involved in quorum management, but based on the context provided earlier, they aren't explicitly mentioned. So, I'm not entirely sure if mmfsd is directly responsible for quorum or if it works alongside other components. To clarify, maybe I should look into IBM's official documentation or case studies that explain how Spectrum Scale manages quorum internally. It would help to see which binaries are actually involved in the process and their specific roles.

It claims that I suggested those binaries it made up are part of the process, but it can't confirm and I didn't say they were, so where is that coming from?

In summary, while mmfsd deals with file system mirroring and data availability, it doesn't seem directly responsible for managing the quorum itself. Quorum management likely involves other components that ensure a sufficient number of nodes agree on data states before committing changes.

Is mmfsd involved in quorum management in IBM spectrum scale GPFS?

Let's ask directly.

Web access.

Alright, the user is asking whether mmfsd is involved in quorum management within IBM Spectrum Scale GPFS. From previous interactions, I remember that the user has been exploring how quorum management works in this system and had some confusion about which binaries are responsible.

It continues to go back to its old data.

Looking at the context provided earlier, there was information about node quorum and commands related to changing quorum settings, but nothing directly mentioning mmfsd. In fact, when I previously explained the components involved, like GCP, RA, and QM, the user then asked for confirmation with sources, which led me to clarify that those binaries weren't explicitly mentioned in the context.

Now, the user is specifically asking about mmfsd. My previous response indicated that mmfsd isn’t directly involved in quorum management but handles file system operations. However, I need to make sure this is accurate and based on reliable sources.

I should check if there are any IBM documents or official resources that link mmfsd to quorum processes. If not, it's safer to say that mmfsd isn't the process handling quorum. Instead, higher-level daemons manage quorum, ensuring data consistency across nodes without mmfsd's direct involvement.

The user might be trying to troubleshoot an issue or configure their environment correctly, so providing clear information about each component’s role is essential. They probably want to understand where to focus their efforts for quorum settings and avoid confusion with file system operations handled by mmfsd.

So it executes the search "IBM Spectrum Scale mmfsd quorum management documentation" to try to find the information. This search backs into the information indirectly; a more explicit search would return the right answer.

Analysis

As I mentioned before, I think calling this "thinking" is a stretch. It is a facsimile of thought, perhaps. The "thought" process stays rigidly attached to prior statements unless they are immediately found to be false or denied by the user. This seems to make the system stick to its path rather than re-evaluate when the evidence is missing.

I am not sure how much of this failure comes from it being a 14B parameter model, but the "thinking" is very weak here. Even if we take the more explicit question into a new chat, it can't get the answer right.

I wanted to document this partly for my memory, but also partly as a demonstration of how weak the "thinking" is. Deepseek makes a finding and can't reason its way out, even when it has no evidence. The lack of evidence doesn't weaken its position. Deepseek is kind of an alternative facts machine: even a complete absence of evidence is not enough for it to say that it is wrong and try a new direction.
