#Interpolation Techniques Assignment Help


A structured way to learn JavaScript.

I came across a post on Twitter that I thought would be helpful to share with those who are struggling to find a structured way to learn JavaScript on their own. Personally, I wish I had access to this information when I first started learning in January. However, I am grateful for my learning journey so far, as I have covered most topics, albeit in a less structured manner.

N/B: Not everyone learns in the same way; it's important to find what works for you. This is a guide, not a rulebook.

EASY

What is JavaScript and its role in web development?

Brief history and evolution of JavaScript.

Basic syntax and structure of JavaScript code.

Understanding variables, constants, and their declaration.

Data types: numbers, strings, boolean, and null/undefined.

Arithmetic, assignment, comparison, and logical operators.

Combining operators to create expressions.

Conditional statements (if, else if, else) for decision making.

Loops (for, while) for repetitive tasks.

Switch statements for multiple conditional cases.

MEDIUM

Defining functions, including parameters and return values.

Function scope, closures, and their practical applications.

Creating and manipulating arrays.

Working with objects, properties, and methods.

Iterating through arrays and objects.

Understanding the Document Object Model (DOM).

Selecting and modifying HTML elements with JavaScript.

Handling events (click, submit, etc.) with event listeners.

Using try-catch blocks to handle exceptions.

Common error types and debugging techniques.

HARD

Callback functions and their limitations.

Dealing with asynchronous operations, such as AJAX requests.

Promises for handling asynchronous operations.

Async/await for cleaner asynchronous code.

Arrow functions for concise function syntax.

Template literals for flexible string interpolation.

Destructuring for unpacking values from arrays and objects.

Spread/rest operators.

Design Patterns.

Writing unit tests with testing frameworks.

Code optimization techniques.

That's it I guess!


Data Matters: How to Curate and Process Information for Your Private LLM

In the era of artificial intelligence, data is the lifeblood of any large language model (LLM). Whether you are building a private LLM for business intelligence, customer service, research, or any other application, the quality and structure of the data you provide significantly influence its accuracy and performance. Unlike publicly trained models, a private LLM requires careful curation and processing of data to ensure relevance, security, and efficiency.

This blog explores the best practices for curating and processing information for your private LLM, from data collection and cleaning to structuring and fine-tuning for optimal results.

Understanding Data Curation

Importance of Data Curation

Data curation involves the selection, organization, and maintenance of data to ensure it is accurate, relevant, and useful. Poorly curated data can lead to biased, irrelevant, or even harmful responses from an LLM. Effective curation helps improve model accuracy, reduce biases, enhance relevance and domain specificity, and strengthen security and compliance with regulations.

Identifying Relevant Data Sources

The first step in data curation is sourcing high-quality information. Depending on your use case, your data sources may include:

Internal Documents: Business reports, customer interactions, support tickets, and proprietary research.

Publicly Available Data: Open-access academic papers, government databases, and reputable news sources.

Structured Databases: Financial records, CRM data, and industry-specific repositories.

Unstructured Data: Emails, social media interactions, transcripts, and chat logs.

Before integrating any dataset, assess its credibility, relevance, and potential biases.

Filtering and Cleaning Data

Once you have identified data sources, the next step is cleaning and preprocessing. Raw data can contain errors, duplicates, and irrelevant information that can degrade model performance. Key cleaning steps include removing duplicates to ensure unique entries, correcting errors such as typos and incorrect formatting, handling missing data through interpolation techniques or removal, and eliminating noise such as spam, ads, and irrelevant content.
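Two of the cleaning steps above, removing duplicates and filling missing values by interpolation, can be sketched roughly as follows. This is only a minimal illustration: the function names `dedupe` and `interpolateMissing` are made up for this example, duplicates are assumed to be exact copies, and missing numeric values are assumed to be stored as `null`.

```javascript
// Remove exact duplicate records. Records are compared by their JSON form,
// so two objects with the same keys in a different order are NOT treated as equal.
function dedupe(records) {
  const seen = new Set();
  return records.filter((record) => {
    const key = JSON.stringify(record);
    if (seen.has(key)) return false;
    seen.add(key);
    return true;
  });
}

// Fill null gaps in a numeric series by linear interpolation between the
// nearest known neighbours. Gaps at the very start or end are left as null.
function interpolateMissing(values) {
  const result = values.slice();
  let lastKnown = -1; // index of the most recent non-null value
  for (let i = 0; i < result.length; i++) {
    if (result[i] === null) continue;
    if (lastKnown >= 0 && i - lastKnown > 1) {
      const step = (result[i] - result[lastKnown]) / (i - lastKnown);
      for (let j = lastKnown + 1; j < i; j++) {
        result[j] = result[lastKnown] + step * (j - lastKnown);
      }
    }
    lastKnown = i;
  }
  return result;
}

console.log(dedupe([{ id: 1 }, { id: 1 }, { id: 2 }])); // two records remain
console.log(interpolateMissing([10, null, null, 16, null])); // [10, 12, 14, 16, null]
```

Whether interpolation or removal is the better choice depends on the data; interpolation only makes sense for ordered numeric series such as time series.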

Data Structuring for LLM Training

Formatting and Tokenization

Data fed into an LLM should be in a structured format. This includes standardizing text formats by converting different document formats (PDFs, Word files, CSVs) into machine-readable text, tokenization to break down text into smaller units (words, subwords, or characters) for easier processing, and normalization by lowercasing text, removing special characters, and converting numbers and dates into standardized formats.

Labeling and Annotating Data

For supervised fine-tuning, labeled data is crucial. This involves categorizing text with metadata, such as entity recognition (identifying names, locations, dates), sentiment analysis (classifying text as positive, negative, or neutral), topic tagging (assigning categories based on content themes), and intent classification (recognizing user intent in chatbot applications). Annotation tools like Prodigy, Labelbox, or Doccano can facilitate this process.

Structuring Large Datasets

To improve retrieval and model efficiency, data should be stored in a structured form such as vector databases (using embeddings and vector search for fast retrieval, with tools like Pinecone, FAISS, or Weaviate), relational databases (storing structured data in SQL-based systems), or NoSQL databases (storing semi-structured data in systems like MongoDB or Elasticsearch). Using a hybrid approach can help balance flexibility and speed for different query types.

Processing Data for Model Training

Preprocessing Techniques

Before feeding data into an LLM, preprocessing is essential to ensure consistency and efficiency. This includes data augmentation (expanding datasets using paraphrasing, back-translation, and synthetic data generation), stopword removal (eliminating common but uninformative words like "the," "is"), stemming and lemmatization (reducing words to their base forms like "running" → "run"), and encoding and embedding (transforming text into numerical representations for model ingestion).

Splitting Data for Training

For effective training, data should be split into a training set (80%) used for model learning, a validation set (10%) used for tuning hyperparameters, and a test set (10%) used for final evaluation. Proper splitting ensures that the model generalizes well without overfitting.
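As a rough sketch of the 80/10/10 split described above, the snippet below shuffles a list of examples and slices it into three parts. The names `shuffle` and `splitDataset` and the placeholder example strings are assumptions for illustration only; real pipelines often also fix a random seed and stratify by label, which this sketch does not do.

```javascript
// Fisher-Yates shuffle on a copy, so the caller's array is left untouched.
function shuffle(items) {
  const copy = items.slice();
  for (let i = copy.length - 1; i > 0; i--) {
    const j = Math.floor(Math.random() * (i + 1));
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy;
}

// Split into train / validation / test; whatever is left after the first
// two slices becomes the test set.
function splitDataset(items, trainFraction = 0.8, validationFraction = 0.1) {
  const shuffled = shuffle(items);
  const trainEnd = Math.floor(shuffled.length * trainFraction);
  const validationEnd = trainEnd + Math.floor(shuffled.length * validationFraction);
  return {
    train: shuffled.slice(0, trainEnd),
    validation: shuffled.slice(trainEnd, validationEnd),
    test: shuffled.slice(validationEnd),
  };
}

const examples = Array.from({ length: 100 }, (_, i) => `example-${i}`);
const parts = splitDataset(examples);
console.log(parts.train.length, parts.validation.length, parts.test.length); // 80 10 10
```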

Handling Bias and Ethical Considerations

Bias in training data can lead to unfair or inaccurate model predictions. To mitigate bias, ensure diverse data sources that provide a variety of perspectives and demographics, use bias detection tools such as IBM AI Fairness 360, and integrate human-in-the-loop review to manually assess model outputs for biases. Ethical AI principles should guide dataset selection and model training.

Fine-Tuning and Evaluating the Model

Transfer Learning and Fine-Tuning

Rather than training from scratch, private LLMs are often fine-tuned on top of pre-trained models (e.g., GPT, Llama, Mistral). Fine-tuning involves selecting a base model that aligns with your needs, using domain-specific data to specialize the model, and training with hyperparameter optimization by tweaking learning rates, batch sizes, and dropout rates.

Model Evaluation Metrics

Once the model is trained, its performance must be evaluated using metrics such as perplexity (measuring how well the model predicts the next word), BLEU/ROUGE scores (evaluating text generation quality), and human evaluation (assessing outputs for coherence, factual accuracy, and relevance). Continuous iteration and improvement are crucial for maintaining model quality.
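To make the perplexity metric a little more concrete: it is the exponential of the average negative log-probability the model assigned to each actual next token. The sketch below assumes you already have those per-token probabilities as plain numbers; the function name `perplexity` and the sample values are illustrative only.

```javascript
// Perplexity = exp( -(1/N) * sum(ln p_i) ), where p_i is the probability
// the model gave to the i-th actual token. Lower is better.
function perplexity(tokenProbabilities) {
  const totalLogProb = tokenProbabilities.reduce((sum, p) => sum + Math.log(p), 0);
  return Math.exp(-totalLogProb / tokenProbabilities.length);
}

// A model that always gives the correct token a 1-in-4 chance behaves as if
// it were choosing uniformly among 4 options, so its perplexity is about 4.
console.log(perplexity([0.25, 0.25, 0.25, 0.25]).toFixed(2)); // "4.00"
```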

Deployment and Maintenance

Deploying the Model

Once the LLM is fine-tuned, deployment considerations include choosing between cloud vs. on-premise hosting depending on data sensitivity, ensuring scalability to handle query loads, and integrating the LLM into applications via REST or GraphQL APIs.

Monitoring and Updating

Ongoing maintenance is necessary to keep the model effective. This includes continuous learning by regularly updating with new data, model drift detection to identify and correct performance degradation, and user feedback integration to use feedback loops to refine responses. A proactive approach to monitoring ensures sustained accuracy and reliability.

Conclusion

Curating and processing information for a private LLM is a meticulous yet rewarding endeavor. By carefully selecting, cleaning, structuring, and fine-tuning data, you can build a robust and efficient AI system tailored to your needs. Whether for business intelligence, customer support, or research, a well-trained private LLM can offer unparalleled insights and automation, transforming the way you interact with data.

Invest in quality data, and your model will yield quality results.

#ai#blockchain#crypto#ai generated#dex#cryptocurrency#blockchain app factory#ico#ido#blockchainappfactory


Video Annotation: Transforming Motion into AI-Ready Insights

AI and ML have changed the way people interact with digital content, and video annotation is at the center of that transformation. It underpins everything from pedestrian detection in autonomous vehicles to real-time threat detection in surveillance systems, because an AI system's ability to interpret movement depends heavily on high-quality annotated video data.

In simple terms, it is labeling video frames to add context for AI models. Annotated videos teach AI systems more effectively to identify underlying patterns, behaviors, and environments by marking objects, tracking movement, and defining interactions. Thus, they become smart, fast, and reliable decision-makers.

Why Video Annotation Matters in AI Development

AI models learn from data, and video carries much richer, more dynamic information than static images. It provides motion, depth, and continuity, allowing AI to:

Understand movement and object tracking in real-world environments.

Analyze complex interactions between people, objects, and the environment.

Improve decision-making in real-time AI applications.

Enhance accuracy by considering multiple frames rather than isolated snapshots.

These capabilities make video annotation essential for applications ranging from self-driving cars to sports analytics and healthcare diagnostics.

Types of Video Annotation Techniques

The efficiency of any AI model depends on the detail and number of techniques used to annotate the video. Each method caters to a particular type of AI, allowing the model to get exactly the type of data required in its learning process.

Bounding Box Annotation: This method involves drawing rectangular boxes around the objects identified in every frame. Bounding boxes provide an intuitive and effective training method for AI models to detect and recognize objects in the scene.

Semantic Segmentation: Semantic segmentation is a more complex technique that assigns a category label to every pixel in a video frame. This gives the AI a much finer-grained understanding of video content, so it can recognize objects and their boundaries more reliably.

Keypoint Annotation: This method marks important points on an object, for applications ranging from human pose analysis to face recognition. By mapping joints and other landmark points, it helps the AI understand body language and gestures.

Optical Flow Annotation: The optical flow technique tracks motion in terms of pixel displacement between two adjacent frames. It allows AI models to analyze past trajectories and estimate the likely future motion of objects.

3D Cuboid Annotation: Unlike bounding boxes, 3D cuboids give depth perception, enabling AI to recognize object dimensions and spatial relationships. This annotation type ensures that AI understands object placement in three-dimensional space.

Challenges in Video Annotation

Despite its tremendous advantages, video annotation comes with challenges that can compromise AI model training further down the line.

Huge Volume of Data: Videos contain thousands of frames, making annotation a large-scale, labor-intensive job. Streamlining the workflow with automated or AI-assisted annotation can help here.

Motion Blur and Occlusion: Moving objects are notoriously difficult to annotate, mainly because they can be occluded by other objects or blurred by their own movement. Frame interpolation methods can help improve annotation accuracy.

Data Privacy and Compliance: By its very nature, video content may contain a lot of sensitive data, so it is subject to regulations such as GDPR and CCPA. Proper anonymization methods support ethical AI development in practice.

Annotation Consistency: Labeling must be consistent throughout frames and datasets. High variability leads to machine learning bias, so rigorous quality control is necessary.
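One common way interpolation reduces the manual workload is keyframe interpolation: an annotator draws a box on two keyframes and the frames in between are filled in automatically. The sketch below assumes simple linear motion and a made-up box shape (`frame`, `x`, `y`, `width`, `height`); it is not taken from any particular annotation tool.

```javascript
// Linearly interpolate a bounding box between two annotated keyframes.
// Assumes startKeyframe.frame < endKeyframe.frame and that `frame` lies between them.
function interpolateBox(startKeyframe, endKeyframe, frame) {
  const span = endKeyframe.frame - startKeyframe.frame;
  const t = (frame - startKeyframe.frame) / span; // 0 at the start keyframe, 1 at the end
  const lerp = (from, to) => from + (to - from) * t;
  return {
    frame,
    x: lerp(startKeyframe.x, endKeyframe.x),
    y: lerp(startKeyframe.y, endKeyframe.y),
    width: lerp(startKeyframe.width, endKeyframe.width),
    height: lerp(startKeyframe.height, endKeyframe.height),
  };
}

const first = { frame: 0, x: 100, y: 50, width: 40, height: 80 };
const last = { frame: 10, x: 200, y: 60, width: 50, height: 80 };
console.log(interpolateBox(first, last, 5));
// { frame: 5, x: 150, y: 55, width: 45, height: 80 }
```

Linear interpolation breaks down when an object accelerates, turns, or is occluded, so interpolated frames still need a human review pass.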

The Future of Video Annotation in AI

As AI improves, video annotation techniques will change with it. Emerging trends include:

AI-assisted annotation: AI labels objects automatically, reducing manual effort.

Self-supervised learning: AI learns from unlabeled video data.

Real-time annotation: Annotation is applied to live video feeds as they stream.

Federated learning: AI models are trained collaboratively across many client and edge sources while preserving privacy.

These developments should increase the accuracy of AI, shorten training time, and improve the efficiency of AI applications.

Conclusion

Video annotation is the backbone of AI-powered motion analysis, as it provides the training data required by machine learning models. With objects, movements, and interactions labeled correctly, AI systems can interpret and understand reality with improved accuracy.

From autonomous vehicles and healthcare to sports analytics and retail, video annotation is transforming industries and driving the path to AI innovation for the future. As annotation techniques develop, AI will continue to learn, adapt, and revolutionize the way we interact with visual data.

Visit Globose Technology Solutions to see how the team can speed up your video annotation projects.


Pure Mathematics. Topic Numerical Techniques.

Since I have a test on Monday I thought I would use this blog for its intended purpose.

When the normal techniques do not work, we use numerical techniques to find the root of a function. The normal techniques are factorization, completing the square, and using the quadratic formula x = (-b ± √(b^2 - 4ac)) / (2a).

Firstly, we NEED to establish that there is a root. To do so we use the intermediate value theorem (IMVT). The formal statement of the IMVT is:

IF y = f(x) is a continuous function on the interval a ≤ x ≤ b and “k” is a number lying between f(a) and f(b), i.e. f(a) < k < f(b) or f(b) < k < f(a), then there exists at least one number “c” with a < c < b such that f(c) = k.

BUT there is no need to commit the definition to memory

Going back to establishing the root.

Eg. Show that x^2 -3x - 1 = 0 has a root in [3,4]

The [3,4] in this case means interval, 3 < root < 4 or the root lies in between 3 and 4.

For a root to exist there MUST be a change in sign either (-ve to +ve) or (+ve to -ve).

f(3) = 3^2 - 3(3) -1

= -1

f(4) = 4^2 - 3(4) - 1

= 3

What I just did is called substitution. I replaced the value of x with the values in the given interval.

Now we write a STATEMENT, the change in sign indicates that there is a root, and a statement is needed.

Since f(x) is a continuous function in [3,4] and f(3) x f(4) < 0, then by IMVT there exists a root such that 3 < root < 4.

3 < root < 4 means that the root lies inside the interval, between 3 and 4.

f(3) x f(4) < 0 means that the two values multiplied together give a negative number (less than 0), which only happens when they have opposite signs.

This needs to be memorised.
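If you want to check your substitution with code, the sign-change test above can be sketched like this. The function name `hasSignChange` is just made up for this example, and the code assumes f is continuous on the interval (the code cannot check that for you).

```javascript
// The example from above: f(x) = x^2 - 3x - 1 on [3, 4].
const f = (x) => x ** 2 - 3 * x - 1;

// A root is guaranteed in (a, b) by the IMVT when f is continuous
// and f(a) x f(b) < 0, i.e. the two values have opposite signs.
function hasSignChange(func, a, b) {
  return func(a) * func(b) < 0;
}

console.log(f(3)); // -1
console.log(f(4)); // 3
console.log(hasSignChange(f, 3, 4)); // true, so 3 < root < 4
```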

There are four methods in this topic:

Interval bisection

Linear Interpolation

Newton-Raphson

and

Fixed Pt Iteration

In this post, I'll be covering Interval Bisection and Linear Interpolation.

Interval Bisection

First Prove that there is a root

f(x) = x^2 - 4x + 1, solving f(x) = 0 on [3,4]

f(3) = (3)^2 - 4(3) + 1

= -2

f(4) = 1

Since f(x) is a continuous function in [3,4] and f(3) x f(4) <0 then by IMVT there exists a root such that 3 < root < 4.

Now using the interval bisection method

WE FIND THE MIDPOINT

(3 + 4)/2 = 7/2 = 3.5

Consider f(3.5) = (3.5)^2 - 4(3.5) + 1

f(3.5)= -0.75

Now we change the interval

Since f(3.5) x f(4) <0

Then 3.5 < root < 4 or [3.5, 4]

Keep in mind that the root EXISTS where there is a change of sign

(3.5 + 4)/2 = 3.75

Consider f(3.75)= 0.0625

Since f(3.5) x f(3.75) < 0

Then 3.5 < root < 3.75 or [3.5, 3.75]

(3.5 + 3.75)/2 = 3.625

Consider f(3.625) = -0.359

Since f(3.625) x f(3.75) < 0

Then 3.625 < root < 3.75

After a few more rounds you should get

3.719 < root < 3.734 identical to 1 decimal place

As you can see this method can be lengthy

A question you might have is How do you know when to stop?

Well 2 ways:

When they are identical to the asked decimal places

eg. 3.7 < root < 3.7 which is identical to 1 decimal place

or after how many iterations they ask you for.

eg. Do interval bisection 3 times. With reference to the example we worked out above the answer here would be 3.625 < root < 3.75
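The interval bisection steps worked out above can be written as a short loop. This is only a sketch of the same procedure; the function name `bisect` is made up for this example, and it stops after a fixed number of iterations, like the "do interval bisection 3 times" style of question.

```javascript
// Halve the interval [a, b] the given number of times, always keeping the
// half where the sign of f changes. Requires f(a) x f(b) < 0 to start.
function bisect(func, a, b, iterations) {
  if (func(a) * func(b) >= 0) {
    throw new Error("f(a) and f(b) must have opposite signs");
  }
  for (let i = 0; i < iterations; i++) {
    const mid = (a + b) / 2;
    const fMid = func(mid);
    if (fMid === 0) return [mid, mid]; // landed exactly on the root
    if (func(a) * fMid < 0) {
      b = mid; // sign change is in the left half
    } else {
      a = mid; // sign change is in the right half
    }
  }
  return [a, b];
}

const g = (x) => x ** 2 - 4 * x + 1;
console.log(bisect(g, 3, 4, 3)); // [3.625, 3.75]
console.log(bisect(g, 3, 4, 6)); // [3.71875, 3.734375]
```

The second call matches the 3.719 < root < 3.734 interval quoted above once the bounds are rounded to 3 decimal places.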

LINEAR INTERPOLATION

There are two proofs included in this method that I’ll attach a picture of below. You must memorise these proofs

In this method, we assign the interval values as a and b: a is normally used for the smaller interval value and b for the larger interval value.

We then find f(a) and f(b) and then substitute into any one of the formulas in the above proof. An example of this is the picture below

There was no need to continue since 0.2 < root < 0.2

As stated we only need to put the statement: since f(a) x f(b)< 0, a< root< b if we are doing another interpolation which is why there is an absence of that statement after working out x2.

Again we stop either when the interval is identical to the stated number of decimal places or after the number of iterations asked for.
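Since the pictures carry the actual formulas, here is a rough code sketch of the method using one commonly taught form of the linear interpolation formula, x = (a f(b) - b f(a)) / (f(b) - f(a)). That form is an assumption on my part and may be written differently in the proofs pictured; the function name `linearInterpolation` is also made up. The example reuses x^2 - 4x + 1 on [3,4] from the bisection section rather than the question in the picture.

```javascript
// Repeated linear interpolation (false position): join (a, f(a)) and
// (b, f(b)) with a straight line, take where it crosses the x-axis as the
// next estimate, then keep the sub-interval that still has a sign change.
function linearInterpolation(func, a, b, iterations) {
  const estimates = [];
  for (let i = 0; i < iterations; i++) {
    const fa = func(a);
    const fb = func(b);
    const x = (a * fb - b * fa) / (fb - fa);
    estimates.push(x);
    const fx = func(x);
    if (fx === 0) break; // exact root found
    if (fa * fx < 0) {
      b = x; // root lies between a and x
    } else {
      a = x; // root lies between x and b
    }
  }
  return estimates;
}

const h = (x) => x ** 2 - 4 * x + 1;
const [x1, x2] = linearInterpolation(h, 3, 4, 2);
console.log(x1.toFixed(4)); // "3.6667"
console.log(x2.toFixed(4)); // "3.7273"
```

Notice how two rounds already get closer to the root than three rounds of interval bisection did.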

Hope you found this helpful, I'll post the second part... sometime😅 but for now I have to revise those other two methods for my test tomorrow.


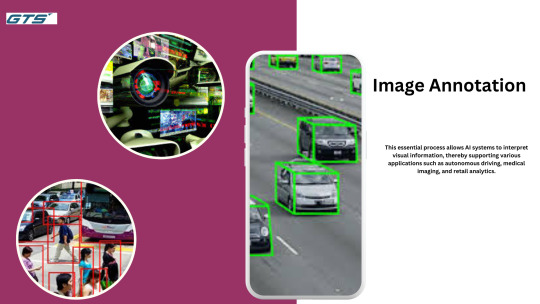

Image Annotation in Healthcare, Retail, and Autonomous Driving

Introduction:

Image Annotation plays a crucial role in the fields of artificial intelligence (AI) and machine learning (ML), as it involves the careful labeling of images to train models for effective object recognition and scene analysis. This essential process allows AI systems to interpret visual information, thereby supporting various applications such as autonomous driving, medical imaging, and retail analytics.

Categories of Image Annotation:

Bounding Box Annotation: This method entails drawing rectangular boxes around objects in an image, aiding AI models in detecting and classifying different elements. It is commonly utilized in scenarios such as object detection for self-driving cars and surveillance systems.

Semantic Segmentation: In this approach, each pixel in an image is assigned a specific class label, which facilitates a comprehensive understanding of the scene. This technique is vital in medical imaging for differentiating between various tissue types and in autonomous driving for recognizing roadways versus sidewalks.

Polygon Annotation: For objects with non-standard shapes, polygon annotation allows for accurate contour mapping, improving the model's capability to identify complex structures. This technique is particularly beneficial in agricultural technology for recognizing different plant species.

Key Point Annotation: This method involves marking significant points on objects, such as facial features or joint locations, which helps models comprehend object orientation and movement. It is critical in applications like facial recognition and pose estimation.

Cuboid Annotation: Expanding beyond two dimensions, cuboid annotation provides three-dimensional labeling, offering depth information to models. This is essential in robotics and autonomous navigation for understanding spatial relationships.

Industry Applications:

Autonomous Technology: Comprehensive image annotations are vital for self-driving vehicles to accurately perceive and react to their surroundings, thereby ensuring safety and operational efficiency.

Healthcare: In the realm of medical diagnostics, accurate annotations of medical images such as MRIs and CT scans support AI models in identifying various conditions.

GTS's Proficiency in Image Annotation:

At GTS, we excel in delivering extensive image and video annotation services designed to meet the specific requirements of various industries. Our dedicated team meticulously annotates each image, ensuring that even the smallest details are captured to facilitate the development of advanced machine learning models. Utilizing cutting-edge tools and methodologies, we provide data that is not only accurately labeled but also meaningful and actionable.

Our offerings encompass bounding box annotation, semantic segmentation, cuboid annotation, image classification, polygon annotation, key points annotation, lane annotation, custom annotation, and 3D point cloud annotation. We serve a wide range of sectors, including autonomous technology, healthcare, government, retail, finance, and technology.

By collaborating with GTS, organizations can significantly improve the performance of their AI models, resulting in more intelligent and responsive applications across multiple fields.

For further details regarding our image and video annotation services, please visit our website.

Tools and Services for Image Annotation

To address these challenges, various tools and services have been developed to streamline the annotation process:

Computer Vision Annotation Tool (CVAT): An open-source, web-based tool designed for annotating digital images and videos. CVAT supports tasks such as object detection, image classification, and image segmentation, offering features like interpolation of shapes between keyframes and semi-automatic annotation using deep learning models.

Super Annotate: Ranked as a leading data labeling platform, Super Annotate provides an end-to-end data solution with an integrated service marketplace, facilitating efficient and accurate annotation processes.

Anolytics : Offers data annotation services for machine learning, specializing in image annotation to make objects recognizable to computer vision models.

GTS.ai: Provides comprehensive image and video annotation services, employing techniques such as bounding box annotation, semantic segmentation, cuboid annotation, and more to enhance AI algorithms across various industries.

Future of Image Annotation

Advancements in AI are leading towards automated annotation methods, reducing the reliance on manual efforts. Techniques such as automatic image annotation, where systems assign metadata to images without human intervention, are being developed to enhance efficiency and scalability.

Conclusion

Image annotation is a cornerstone of computer vision and AI, transforming raw visual data into structured information that machines can comprehend. As AI continues to evolve, the methods and tools for image annotation will advance, further expanding the potential applications and impact of intelligent systems across various sectors.


Tech talk with Arcoiris Logics #javascript #mobileappdevelopment #codin...

Title: Unlocking JavaScript Secrets: Hidden Tech Tips Every Developer Should Know

JavaScript continues to reign as one of the most powerful and widely used programming languages. From creating interactive websites to building complex applications, JavaScript powers the web in ways many developers are yet to fully understand. In this post, we’re diving into some hidden tech tips that can help you improve your JavaScript coding skills and efficiency.

Here are some JavaScript tips for developers that will take your development game to the next level:

1. Use Destructuring for Cleaner Code

JavaScript’s destructuring assignment allows you to extract values from arrays or objects into variables in a cleaner and more readable way. It’s perfect for dealing with complex data structures and improves the clarity of your code.

```javascript
const user = { name: "John", age: 30, location: "New York" };
const { name, age } = user;
console.log(name, age); // John 30
```

2. Master Arrow Functions

Arrow functions provide a shorter syntax for writing functions and fix some common issues with the this keyword. They are especially useful in callback functions and higher-order functions.

```javascript
const greet = (name) => `Hello, ${name}!`;
console.log(greet("Alice")); // Hello, Alice!
```

3. Leverage Default Parameters

Default parameters allow you to set default values for function parameters when no value is passed. This feature can help prevent errors and make your code more reliable.

```javascript
function greet(name = "Guest") {
  return `Hello, ${name}!`;
}
console.log(greet()); // Hello, Guest!
```

4. Mastering Promises and Async/Await

Promises and async/await are essential for handling asynchronous operations in JavaScript. While callbacks were once the go-to solution, promises provide a more manageable way to handle complex asynchronous code.

```javascript
const fetchData = async () => {
  try {
    const response = await fetch("https://api.example.com");
    const data = await response.json();
    console.log(data);
  } catch (error) {
    console.error(error);
  }
};
fetchData();
```

5. Use Template Literals for Dynamic Strings

Template literals make string interpolation easy and more readable. They also support multi-line strings and expression evaluation directly within the string.

```javascript
const user = "Alice";
const message = `Hello, ${user}! Welcome to JavaScript tips.`;
console.log(message);
```

6. Avoid Global Variables with IIFE (Immediately Invoked Function Expressions)

Global variables can be a source of bugs, especially in large applications. An IIFE helps by creating a local scope for your variables, preventing them from polluting the global scope.

```javascript
(function() {
  const temp = "I am local!";
  console.log(temp);
})();
```

7. Array Methods You Should Know

JavaScript offers powerful array methods such as map(), filter(), reduce(), and forEach(). These methods allow for more functional programming techniques, making code concise and easier to maintain.

```javascript
const numbers = [1, 2, 3, 4];
const doubled = numbers.map(num => num * 2);
console.log(doubled); // [2, 4, 6, 8]
```

8. Take Advantage of Spread Operator

The spread operator (...) can be used to copy elements from arrays or properties from objects. It’s a game changer when it comes to cloning data or merging multiple arrays and objects.

```javascript
const arr1 = [1, 2, 3];
const arr2 = [...arr1, 4, 5];
console.log(arr2); // [1, 2, 3, 4, 5]
```

9. Debugging with console.table()

When working with complex data structures, console.table() allows you to output an object or array in a neat table format, making debugging much easier.

```javascript
const users = [{ name: "John", age: 25 }, { name: "Alice", age: 30 }];
console.table(users);
```

10. Use Strict Mode for Cleaner Code

Activating strict mode in JavaScript helps eliminate some silent errors by making them throw exceptions. It’s especially useful when debugging code or working in larger teams.

```javascript
"use strict";
let x = 3.14;
```

Conclusion

JavaScript offers a wealth of features and hidden gems that, when mastered, can drastically improve your coding skills. By incorporating these tips into your everyday coding practices, you'll write cleaner, more efficient code and stay ahead of the curve in JavaScript development.

As technology continues to evolve, staying updated with JavaScript tips and best practices will ensure you're always on top of your development game. Happy coding!

#JavaScript #WebDevelopment #TechTips #Coding #DeveloperLife #WebDevTips #JSDevelopment #JavaScriptTips #FrontendDevelopment #ProgrammerLife #JavaScriptForDevelopers #TechCommunity #WebDev


Data Labeling Strategies for Cutting-Edge Segmentation Projects

Deep learning has been very successful when working with images as data and is currently at a stage where it works better than humans on multiple use-cases. The most important problems that humans have been interested in solving with computer vision are image classification, object detection and segmentation in the increasing order of their difficulty.

In the plain old task of image classification, we are just interested in getting the labels of all the objects that are present in an image. In object detection, we go further and try to find, along with which objects are present in an image, where they are located, with the help of bounding boxes. Image segmentation takes this to a new level by trying to find the exact boundary of the objects in the image.

What is image segmentation?

We know an image is nothing but a collection of pixels. Image segmentation is the process of classifying each pixel in an image as belonging to a certain class, and hence can be thought of as a per-pixel classification problem. There are two types of segmentation techniques:

Semantic segmentation: Semantic segmentation is the process of classifying each pixel as belonging to a particular label. It doesn’t differentiate between different instances of the same object. For example, if there are 2 cats in an image, semantic segmentation gives the same label to all the pixels of both cats.

Instance segmentation: Instance segmentation differs from semantic segmentation in the sense that it gives a unique label to every instance of a particular object in the image. As can be seen in the image above, all 3 dogs are assigned different colors, i.e. different labels. With semantic segmentation all of them would have been assigned the same color.

There are numerous advances in Segmentation algorithms and open-source datasets. But to solve a particular problem in your domain, you will still need human labeled images or human based verification. In this article, we will go through some of the nuances in segmentation task labeling and how human based workforce can work in tandem with machine learning based approaches.

To train your machine learning model, you need high quality labels. A successful data labeling project for segmentation depends on three key ingredients:

Labeling Tools

Training

Quality Management

Labeling Tools

There are many open source and commercially available tools on the market. At Objectways, we train our workforce using Open CVAT, which provides a polygon tool with interpolation and assistive tooling that gives 4x better speed at labeling, and then we use a tool that fits the use case.

Here are the leading tools that we recommend for labeling. For efficient labeling, prefer a tool that allows pre-labeling and assistive labeling using techniques like Deep Extreme Cut or Grab cut and good review capabilities such as per label opacity controls.

Workforce training

While it is easier to train a resource to perform simple image tasks such as classification or bounding boxes, segmentation tasks require more training, as they involve multiple mechanisms to optimize time, increase efficiency and reduce worker fatigue. Here are some simple training techniques:

Utilize Assistive Tooling: An annotator may start with a simple brush or polygon tool, which they find easy to pick up. But at volume, these tools tend to induce muscle fatigue, hence it is important to make use of assistive tooling.

Gradually introduce complex tasks: Annotators become more efficient at a task as they repeat it, and the training program should build on this. At Objectways, we tend to start training by introducing annotators to simple images with relatively easy shapes (cars, buses, roads) and then migrate them to complex shapes such as vegetation and barriers.

Use a variety of available open-source pre-labeled datasets: It is also important to train the workforce using different datasets. We use Pascal VOC, COCO, Cityscapes, LiTS, CCP, Pratheepan and Inria Aerial Image Labeling.

Provide Feedback: It is also important to provide timely feedback to annotators about their work. We use the golden set technique: a golden set is created by our senior annotators with 99.99% accuracy and is used to give annotators feedback during training.

Quality Management

In Machine Learning, there are different techniques to understand and evaluate the results of a model.

Pixel accuracy: Pixel accuracy is the most basic metric that can be used to validate the results. Accuracy is the ratio of correctly classified pixels to the total number of pixels.

Intersection over Union: IOU is defined as the ratio of the intersection of the ground truth and predicted segmentation outputs over their union. If we are calculating for multiple classes, the IOU of each class is calculated and their mean is taken. It is a better metric than pixel accuracy: in a 2-class image where 90% of the pixels are background, predicting every pixel as background gives an IOU of (90/100+0/100)/2, i.e. 45%, which represents the result better than the 90% pixel accuracy does.

F1 Score: The F1 score, a metric popular in classification, can also be used for segmentation tasks to deal with class imbalance.
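The three metrics above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: the masks are flat lists of integer labels, the function names are made up for this example, and treating a class with an empty union as IOU 0 is an assumption. The sample data reproduces the 2-class case where every pixel is predicted as background.

```python
def pixel_accuracy(truth, pred):
    """Fraction of pixels whose predicted label equals the ground-truth label."""
    correct = sum(t == p for t, p in zip(truth, pred))
    return correct / len(truth)


def class_iou(truth, pred, label):
    """Intersection over union for a single class label."""
    inter = sum(t == label and p == label for t, p in zip(truth, pred))
    union = sum(t == label or p == label for t, p in zip(truth, pred))
    return inter / union if union else 0.0  # assumption: empty union counts as 0


def mean_iou(truth, pred, labels):
    """Per-class IOU averaged over all labels."""
    return sum(class_iou(truth, pred, c) for c in labels) / len(labels)


def class_f1(truth, pred, label):
    """F1 score for one class: 2TP / (2TP + FP + FN)."""
    tp = sum(t == label and p == label for t, p in zip(truth, pred))
    fp = sum(t != label and p == label for t, p in zip(truth, pred))
    fn = sum(t == label and p != label for t, p in zip(truth, pred))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# 100 pixels: 90 background (0), 10 foreground (1); the model predicts all background.
truth = [0] * 90 + [1] * 10
pred = [0] * 100

accuracy = pixel_accuracy(truth, pred)
miou = mean_iou(truth, pred, labels=[0, 1])
foreground_f1 = class_f1(truth, pred, label=1)
```

Here the pixel accuracy looks high, while the mean IOU and the foreground F1 score both expose that the foreground class was missed entirely.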

If you have a labeled dataset, you can introduce a golden set in the labeling pipeline and use one of the scores to compare labels against your own ground truth. We focus on the following aspects to improve the quality of labeling:

Understand labeling instructions: Never underestimate the importance of good labeling instructions. Typically, instructions are authored by data scientists who are good at expressing what they want with examples. The human brain has a natural tendency to give weight to (and remember) negative experiences or interactions more than positive ones — they stand out more. So, it is important to provide bad labeling examples. Reading instructions carefully often weeds out many systemic errors across tasks.

Provide timely feedback: While many teams use a tiered workforce in which level 1 annotators are less experienced than the quality control team, it is important to give level 1 annotators timely feedback so that they understand their unintentional labeling errors and do not repeat them in future tasks.

Rigorous Quality audits: Many tools provide nice metrics to track label addition, deletion or change over time. Just as algorithms should converge and reduce the loss function, the time to QC a particular task and the number of suggested changes should shrink, with the error rate converging to less than 0.01%. At Objectways, we have dedicated QC and super QC teams with a consistent track record of achieving over 99% accuracy.

Summary

We have discussed best practices to manage complex large scale segmentation projects and provided guidance for tooling, workforce upskilling and quality management. Please contact [email protected] to provide feedback or if you have any questions.


MMD FX file reading for shaders: a translation by ryuu

The following tutorial is an English translation of the original one in Japanese by Dance Intervention P.

This English documentation was requested by Chestnutscoop on DeviantArt, as it’ll be useful to the MME modding community and help MMD become open-source for updates. It’s going to be an extensive one, so take it easy.

Disclaimer: coding isn’t my area, not even close to my actual career and job (writing/health). I have little idea of what’s going on here and I’m relying on my IT friends to help me with this one.

Content Index:

Introduction

Overall Flow

Parameter Declaration

Outline Drawing

Non-Self-shadow Rendering

Drawing Objects When Self-shadow is Disabled

Z-value Plot For Self-shadow Determination

Drawing Objects in Self-shadowing

Final Notes

1. INTRODUCTION

This documentation contains the roots of .fx file reading for MME as well as information on DirectX and programmable shaders while reading full.fx version 1.3. In other words, how to use HLSL for MMD shaders. Everything in this tutorial will try to stay as faithful as possible to the original text in Japanese.

It was translated from Japanese to English by ryuu. As I don’t know how to contact Dance Intervention P for permission to translate and publish it here, the original author is free to ask me to take it down. The translation was done with the aid of the online translator DeepL and my friends’ help. This documentation is not intended to replace the original author’s.

Any coding line starting with “// [Japanese text]” is the author’s comments. If the coding isn’t properly formatted on Tumblr, you can visit the original document to check it. The original titles of each section were added for ease of use.

2. OVERALL FLOW (全体の流れ)

Applicable technique → pass → VertexShader → PixelShader

• Technique: processing of annotations that fall under <>.

• Pass: processing unit.

• VertexShader: convert vertices in local coordinates to projective coordinates.

• PixelShader: sets the color of a pixel.

3. PARAMETER DECLARATION (パラメータ宣言)

// site-specific transformation matrix

float4x4 WorldViewProjMatrix : WORLDVIEWPROJECTION;

float4x4 WorldMatrix : WORLD;

float4x4 ViewMatrix : VIEW;

float4x4 LightWorldViewProjMatrix : WORLDVIEWPROJECTION < string Object = "Light"; >;

• float4x4: a matrix of 32-bit floating point values with 4 rows and 4 columns.

• WorldViewProjMatrix: a matrix that can transform vertices in local coordinates to projective coordinates with the camera as the viewpoint in a single step.

• WorldMatrix: a matrix that can transform vertices in local coordinates into world coordinates.

• ViewMatrix: a matrix that can convert world coordinate vertices to view coordinates with the camera as the viewpoint.

• LightWorldViewProjMatrix: a matrix that can transform vertices in local coordinates to projective coordinates with the light as a viewpoint in a single step.

• Local coordinate system: coordinates to represent the positional relationship of vertices in the model.

• World coordinate: coordinates to show the positional relationship between models.

• View coordinate: coordinates to represent the positional relationship with the camera.

• Projection Coordinates: coordinates used to represent the depth in the camera. There are two types: perspective projection and orthographic projection.

• Perspective projection: distant objects are shown smaller and nearby objects are shown larger.

• Orthographic projection: the size of the image does not change with depth.
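The chain of coordinate systems above can be illustrated with a small Python sketch. Everything in it is a simplified assumption rather than what MMD actually supplies: the world matrix only translates, the view matrix is skipped (treated as identity), and the two projection matrices are toy versions chosen so the arithmetic is easy to follow. The `mul` helper mirrors the operand order of HLSL's `mul(vector, matrix)`.

```python
def mul(v, m):
    """Row vector times 4x4 matrix, the same operand order as HLSL mul(v, M)."""
    return [sum(v[i] * m[i][j] for i in range(4)) for j in range(4)]


def translation(tx, ty, tz):
    """Hypothetical world matrix that only moves the model."""
    return [[1, 0, 0, 0],
            [0, 1, 0, 0],
            [0, 0, 1, 0],
            [tx, ty, tz, 1]]


# Toy perspective projection: copies z into w, so dividing by w shrinks far points.
PERSPECTIVE = [[1, 0, 0, 0],
               [0, 1, 0, 0],
               [0, 0, 1, 1],
               [0, 0, 0, 0]]

# Toy orthographic projection: w stays 1, so depth does not change the size.
ORTHOGRAPHIC = [[1, 0, 0, 0],
                [0, 1, 0, 0],
                [0, 0, 1, 0],
                [0, 0, 0, 1]]


def to_screen(projected):
    """Divide x and y by w to get the position on the screen plane."""
    return [projected[0] / projected[3], projected[1] / projected[3]]


local = [1.0, 1.0, 2.0, 1.0]               # vertex in local coordinates
near = mul(local, translation(0, 0, 0))    # model left at the origin
far = mul(local, translation(0, 0, 2))     # same model pushed 2 units deeper

near_persp = to_screen(mul(near, PERSPECTIVE))
far_persp = to_screen(mul(far, PERSPECTIVE))
far_ortho = to_screen(mul(far, ORTHOGRAPHIC))
```

With the perspective matrix the deeper copy of the vertex lands closer to the screen center (it looks smaller), while with the orthographic matrix its screen position does not depend on depth.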

float3 LightDirection : DIRECTION < string Object = "Light"; >;

float3 CameraPosition : POSITION < string Object = "Camera"; >;

• LightDirection: light direction vector.

• CameraPosition: world coordinates of the camera.

// material color

float4 MaterialDiffuse : DIFFUSE < string Object = "Geometry"; >;

float3 MaterialAmbient : AMBIENT < string Object = "Geometry"; >;

float3 MaterialEmmisive : EMISSIVE < string Object = "Geometry"; >;

float3 MaterialSpecular : SPECULAR < string Object = "Geometry"; >;

float SpecularPower : SPECULARPOWER < string Object = "Geometry"; >;

float3 MaterialToon : TOONCOLOR;

float4 EdgeColor : EDGECOLOR;

• float3: no alpha value.

• MaterialDiffuse: diffuse light color of material, Diffuse+A (alpha value) in PMD.

• MaterialAmbient: ambient light color of the material; Diffuse of PMD?

• MaterialEmmisive: light emitting color of the material, Ambient in PMD.

• MaterialSpecular: specular light color of the material; PMD’s Specular.

• SpecularPower: specular strength. PMD Shininess.

• MaterialToon: shade toon color of the material, lower left corner of the one specified by the PMD toon texture.

• EdgeColor: outline color, as specified by MMD’s edge color.

// light color

float3 LightDiffuse : DIFFUSE < string Object = "Light"; >;

float3 LightAmbient : AMBIENT < string Object = "Light"; >;

float3 LightSpecular : SPECULAR < string Object = "Light"; >;

static float4 DiffuseColor = MaterialDiffuse * float4(LightDiffuse, 1.0f);

static float3 AmbientColor = saturate(MaterialAmbient * LightAmbient + MaterialEmmisive);

static float3 SpecularColor = MaterialSpecular * LightSpecular;

• LightDiffuse: black (float3(0,0,0))?

• LightAmbient: MMD lighting operation values.

• LightSpecular: MMD lighting operation values.

• DiffuseColor: black by multiplication in LightDiffuse?

• AmbientColor: does the common color of Diffuse in PMD become a little stronger in the value of lighting manipulation in MMD?

• SpecularColor: does it feel like PMD’s Specular is a little stronger than MMD’s Lighting Manipulation value?
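To make the three static color formulas concrete, here is a minimal Python sketch of the same arithmetic. The material and light values are invented for illustration, and the black LightDiffuse follows the tutorial's own unconfirmed guess, so treat the numbers as assumptions.

```python
def saturate(x):
    """Clamp a value to the 0-1 range, like HLSL saturate()."""
    return max(0.0, min(1.0, x))


def precompute_colors(material, light):
    """Mirror the DiffuseColor, AmbientColor and SpecularColor expressions."""
    # MaterialDiffuse * float4(LightDiffuse, 1.0f): alpha passes through unchanged.
    diffuse = [m * l for m, l in zip(material["diffuse"], light["diffuse"] + [1.0])]
    # saturate(MaterialAmbient * LightAmbient + MaterialEmmisive)
    ambient = [saturate(a * la + e)
               for a, la, e in zip(material["ambient"], light["ambient"], material["emissive"])]
    # MaterialSpecular * LightSpecular
    specular = [s * ls for s, ls in zip(material["specular"], light["specular"])]
    return diffuse, ambient, specular


# Hypothetical values, chosen only to show the clamping and the black diffuse term.
material = {
    "diffuse": [0.8, 0.6, 0.4, 1.0],
    "ambient": [0.5, 0.5, 0.5],
    "emissive": [0.8, 0.2, 0.0],
    "specular": [1.0, 1.0, 1.0],
}
light = {
    "diffuse": [0.0, 0.0, 0.0],   # assumed black, as the notes above suspect
    "ambient": [0.6, 0.6, 0.6],
    "specular": [0.6, 0.6, 0.6],
}

diffuse_color, ambient_color, specular_color = precompute_colors(material, light)
```

If LightDiffuse really is black, the diffuse RGB collapses to zero and only its alpha survives, which is why the notes above wonder whether AmbientColor ends up carrying the visible material color.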

bool parthf; // perspective flags

bool transp; // semi-transparent flag

bool spadd; // sphere map additive synthesis flag

#define SKII1 1500

#define SKII2 8000

#define Toon 3

• parthf: true for self-shadow distance setting mode2.

• transp: semi-transparent flag; true when translucency is enabled.

• spadd: true when the sphere map file is an additive .spa file.

• SKII1: a constant used in self-shadow mode1. The larger the value, the stranger the shadow will look, and the smaller the value, the weaker the shadow will be.

• SKII2: a constant used in self-shadow mode2. If it is too large, the self-shadow will look strange, and if it is too small, it will be too thin.

• Toon: weaken the shade in the direction of the light with a close range shade toon.

// object textures

texture ObjectTexture: MATERIALTEXTURE;

sampler ObjTexSampler = sampler_state {

texture = <ObjectTexture>;

MINFILTER = LINEAR;

MAGFILTER = LINEAR;

};

• ObjectTexture: texture set in the material.

• ObjTexSampler: setting the conditions for acquiring material textures.

• MINFILTER: conditions for shrinking textures.

• MAGFILTER: conditions for enlarging a texture.

• LINEAR: use linear interpolation.

// sphere map textures

texture ObjectSphereMap: MATERIALSPHEREMAP;

sampler ObjSphareSampler = sampler_state {

texture = <ObjectSphereMap>;

MINFILTER = LINEAR;

MAGFILTER = LINEAR;

};

• ObjectSphereMap: sphere map texture set in the material.

• ObjSphareSampler: setting the conditions for obtaining a sphere map texture.

// this is a description to avoid overwriting the original MMD sampler. Cannot be deleted.

sampler MMDSamp0 : register(s0);

sampler MMDSamp1 : register(s1);

sampler MMDSamp2 : register(s2);

• register: assign shader variables to specific registers.

• s0: sampler type register 0.

4. OUTLINE DRAWING (輪郭描画)

Used for drawing model contours; not applied to accessories.

// vertex shader

float4 ColorRender_VS(float4 Pos : POSITION) : POSITION

{

// world-view projection transformation of camera viewpoint.

return mul( Pos, WorldViewProjMatrix );

}

Return the vertex coordinates of the camera viewpoint after the world view projection transformation.

Parameters

• Pos: local coordinates of the vertex.

• POSITION (input): semantic indicating the vertex position in the object space.

• POSITION (output): semantic indicating the position of a vertex in a homogeneous space.

• mul (x,y): perform matrix multiplication of x and y.

Return value

Vertex coordinates in projective space; compute screen coordinate position by dividing by w.

• Semantics: communicating information about the intended use of parameters.

// pixel shader

float4 ColorRender_PS() : COLOR

{

// fill with outline color

return EdgeColor;

}

Returns the contour color of the corresponding input vertex.

Return value

Output color

• COLOR: output color semantic.

// contouring techniques

technique EdgeTec < string MMDPass = "edge"; > {

pass DrawEdge {

AlphaBlendEnable = FALSE;

AlphaTestEnable = FALSE;

VertexShader = compile vs_2_0 ColorRender_VS();

PixelShader = compile ps_2_0 ColorRender_PS();

}

}

Processing for contour drawing.

• MMDPass: specify the drawing target to apply.

• “edge”: contours of the PMD model.

• AlphaBlendEnable: set the value to enable alpha blending transparency. Blend surface colors, materials, and textures with transparency information to overlay on another surface.

• AlphaTestEnable: per-pixel alpha test setting. If passed, the pixel will be processed by the framebuffer. Otherwise, all framebuffer processing of pixels will be skipped.

• VertexShader: shader variable representing the compiled vertex shader.

• PixelShader: shader variable representing the compiled pixel shader.

• vs_2_0: vertex shader profile for shader model 2.

• ps_2_0: pixel shader profile for shader model 2.

• Frame buffer: memory that holds the data for one frame until it is displayed on the screen.

5. NON-SELF-SHADOW SHADOW RENDERING (非セルフシャドウ影描画)

Drawing shadows falling on the ground in MMD, switching between showing and hiding them in MMD's ground shadow display.

// vertex shader

float4 Shadow_VS(float4 Pos : POSITION) : POSITION

{

// world-view projection transformation of camera viewpoint.

return mul( Pos, WorldViewProjMatrix );

}

Returns the vertex coordinates of the source vertex of the shadow display after the world-view projection transformation of the camera viewpoint.

Parameters

• Pos: local coordinates of the vertex from which the shadow will be displayed.

Return value

Vertex coordinates in projective space.

// pixel shader

float4 Shadow_PS() : COLOR

{

// fill with ambient color

return float4(AmbientColor.rgb, 0.65f);

}

Returns the shadow color to be drawn. The alpha value will be reflected when MMD's display shadow color transparency is enabled.

Return value

Output color

// techniques for shadow drawing

technique ShadowTec < string MMDPass = "shadow"; > {

pass DrawShadow {

VertexShader = compile vs_2_0 Shadow_VS();

PixelShader = compile ps_2_0 Shadow_PS();

}

}

Processing for non-self-shadow shadow drawing.

• “shadow”: simple ground shadow.

6. DRAWING OBJECTS WHEN SELF-SHADOW IS DISABLED (セルフシャドウ無効時オブジェクト描画)

Drawing objects when self-shadowing is disabled. Also used when editing model values.

struct VS_OUTPUT {

float4 Pos : POSITION; // projective transformation coordinates

float2 Tex : TEXCOORD1; // texture

float3 Normal : TEXCOORD2; // normal vector

float3 Eye : TEXCOORD3; // position relative to camera

float2 SpTex : TEXCOORD4; // sphere map texture coordinates

float4 Color : COLOR0; // diffuse color

};

A structure for passing multiple return values between shader stages. The final data to be passed must specify semantics.

Parameters

• Pos: the position of the vertex in projective coordinates, output with the POSITION semantic (position in homogeneous space).

• Tex: the UV coordinates of the vertex, output with the TEXCOORD1 semantic.

• Normal: the vertex normal vector, output with the TEXCOORD2 semantic.

• Eye: the eye vector (reversed?), output with the TEXCOORD3 semantic.

• SpTex: the sphere map UV coordinates of the vertex, output with the TEXCOORD4 semantic.

• Color: the diffuse light color of the vertex, output with the COLOR0 semantic.

// vertex shader

VS_OUTPUT Basic_VS(float4 Pos : POSITION, float3 Normal : NORMAL, float2 Tex : TEXCOORD0, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon)

{

Converts local coordinates of vertices to projective coordinates. Sets the value to pass to the pixel shader, which returns the VS_OUTPUT structure.

Parameters

• Pos: local coordinates of the vertex.

• Normal: normals in local coordinates of vertices.

• Tex: UV coordinates of the vertices.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of sphere map usage, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

• uniform: marks variables with data that are always constant during shader execution.

Return value

VS_OUTPUT, a structure passed to the pixel shader.

VS_OUTPUT Out = (VS_OUTPUT)0;

Initialize structure members with 0. Error if return member is undefined.

// world-view projection transformation of camera viewpoint.

Out.Pos = mul( Pos, WorldViewProjMatrix );

Convert local coordinates of vertices to projective coordinates.

// position relative to camera

Out.Eye = CameraPosition - mul( Pos, WorldMatrix );

Calculate the reversed eye vector (the vector from the vertex toward the camera)?

// vertex normal

Out.Normal = normalize( mul( Normal, (float3x3)WorldMatrix ) );

Compute normalized normal vectors in the vertex world space.

• normalize (x): normalize a floating-point vector based on x/length(x).

• length (x): returns the length of a floating-point number vector.

// Diffuse color + Ambient color calculation

Out.Color.rgb = AmbientColor;

if ( !useToon ) {

Out.Color.rgb += max(0,dot( Out.Normal, -LightDirection )) * DiffuseColor.rgb;

The inner product of the vertex normal and the reversed light vector gives the influence of the light (0-1). The diffuse light color scaled by that influence is added to the ambient light color. DiffuseColor is black because LightDiffuse is black, and AmbientColor is the diffuse light of the material. Confirmation required.

• dot (x,y): return the inner product of the x and y vectors.

• max (x,y): choose the value of x or y, whichever is greater.

}

Out.Color.a = DiffuseColor.a;

Out.Color = saturate( Out.Color );

• saturate (x): clamp x to the range 0-1. Values below 0 become 0 and values above 1 become 1.
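The diffuse and ambient calculation in the lines above can be sketched in Python as follows. The vectors and colors are made-up inputs, and `vertex_color` is a hypothetical name that only mirrors the arithmetic of this part of Basic_VS; it is not MMD or MME code.

```python
def dot(a, b):
    """Inner product of two vectors, like HLSL dot()."""
    return sum(x * y for x, y in zip(a, b))


def saturate(x):
    """Clamp a value to the 0-1 range, like HLSL saturate()."""
    return max(0.0, min(1.0, x))


def vertex_color(normal, light_dir, ambient, diffuse_rgba, use_toon):
    """Ambient color plus, for non-toon materials, the light-weighted diffuse color."""
    rgb = list(ambient)
    if not use_toon:
        to_light = [-c for c in light_dir]          # reversed light vector
        influence = max(0.0, dot(normal, to_light))  # 0 when facing away from the light
        rgb = [c + influence * d for c, d in zip(rgb, diffuse_rgba[:3])]
    return [saturate(c) for c in rgb] + [saturate(diffuse_rgba[3])]


ambient = [0.2, 0.2, 0.2]
diffuse = [0.5, 0.5, 1.0, 1.0]
light_dir = [0.0, -1.0, 0.0]   # light shining straight down

facing = vertex_color([0.0, 1.0, 0.0], light_dir, ambient, diffuse, use_toon=False)
away = vertex_color([0.0, -1.0, 0.0], light_dir, ambient, diffuse, use_toon=False)
toon = vertex_color([0.0, 1.0, 0.0], light_dir, ambient, diffuse, use_toon=True)
```

A surface facing the light receives the full diffuse contribution (with the blue channel clamped at 1), while a surface facing away, or any toon-shaded material, keeps only the ambient color at this stage.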

// texture coordinates

Out.Tex = Tex;

if ( useSphereMap ) {

// sphere map texture coordinates

float2 NormalWV = mul( Out.Normal, (float3x3)ViewMatrix );

X and Y coordinates of vertex normals in view space.

Out.SpTex.x = NormalWV.x * 0.5f + 0.5f;

Out.SpTex.y = NormalWV.y * -0.5f + 0.5f;

}

Converts view coordinate values of vertex normals to texture coordinate values. This is a common idiom.
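As a quick check of that idiom, the mapping from a view-space normal to sphere map texture coordinates can be written out in Python. This is only a sketch of the two assignments above; `sphere_map_uv` is a hypothetical helper name.

```python
def sphere_map_uv(normal_view):
    """Map the x/y of a unit normal in view space (-1..1) to UV space (0..1)."""
    u = normal_view[0] * 0.5 + 0.5
    v = normal_view[1] * -0.5 + 0.5   # y is flipped because V grows downward
    return (u, v)


center = sphere_map_uv([0.0, 0.0, 1.0])   # normal pointing along the view axis
right = sphere_map_uv([1.0, 0.0, 0.0])    # normal pointing to the right
up = sphere_map_uv([0.0, 1.0, 0.0])       # normal pointing up
```

A normal pointing along the view axis samples the middle of the sphere map, while normals pointing right or up sample its right edge or top edge.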

return Out;

}

Return the structure you set.

// pixel shader

float4 Basic_PS(VS_OUTPUT IN, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon) : COLOR0

{

Specify the color of pixels to be displayed on the screen.

Parameters

• IN: VS_OUTPUT structure received from the vertex shader.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of using sphere map, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

Output value

Output color

// specular color calculation

float3 HalfVector = normalize( normalize(IN.Eye) + -LightDirection );

Find the half vector from the inverse vector of the line of sight and the inverse vector of the light.

• Half vector: a vector that is the middle (addition) of two vectors. Used instead of calculating the reflection vector.

float3 Specular = pow( max(0,dot( HalfVector, normalize(IN.Normal) )), SpecularPower ) * SpecularColor;

From the half vector and the vertex normal, find the influence of the reflection. Raise the influence to the power of the specular strength, then multiply by the specular light color to get the specular.

• pow (x,y): raise x to the power y.
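The half-vector specular calculation can be sketched in Python like this. The input vectors and the power are illustrative assumptions, and the sketch does not guard against the degenerate case where the eye vector and the reversed light vector cancel out.

```python
import math


def dot(a, b):
    """Inner product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Scale a vector to length 1 (undefined for a zero vector, as in HLSL)."""
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def specular_term(eye, normal, light_dir, power, specular_color):
    """Half-vector specular: pow(max(0, dot(H, N)), power) * specular color."""
    to_light = [-c for c in light_dir]
    half = normalize([e + l for e, l in zip(normalize(eye), to_light)])
    influence = max(0.0, dot(half, normalize(normal))) ** power
    return [influence * c for c in specular_color]


eye = [0.0, 0.0, 2.0]          # from the surface toward the camera
light_dir = [0.0, 0.0, -1.0]   # light travelling toward the surface
color = [1.0, 0.5, 0.25]

head_on = specular_term(eye, [0.0, 0.0, 1.0], light_dir, 5.0, color)
grazing = specular_term(eye, [1.0, 0.0, 0.0], light_dir, 5.0, color)
```

When the normal lines up with the half vector the full specular color comes through, and when it is perpendicular the highlight vanishes; a larger power narrows the range of angles in between that still produce a visible highlight.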

float4 Color = IN.Color;

if ( useTexture ) {

// apply texture

Color *= tex2D( ObjTexSampler, IN.Tex );

}

If a texture is set, extract the color of the texture coordinates and multiply it by the base color.

• tex2D (sampler, tex): extract the color of the tex coordinates from the 2D texture in the sampler settings.

if ( useSphereMap ) {

// apply sphere map

if(spadd) Color += tex2D(ObjSphareSampler,IN.SpTex);

else Color *= tex2D(ObjSphareSampler,IN.SpTex);

}

If a sphere map is set, extract the color of the sphere map texture coordinates and add it to the base color if it is an additive sphere map file, otherwise multiply it.

if ( useToon ) {

// toon application

float LightNormal = dot( IN.Normal, -LightDirection );

Color.rgb *= lerp(MaterialToon, float3(1,1,1), saturate(LightNormal * 16 + 0.5));

}

In the case of the PMD model, determine the influence of the light from the normal vector of the vertex and the reversed light vector. Scale and offset the influence (multiply by 16, add 0.5), clamp it to 0-1, and darken the base color toward the toon color for lower influence levels.

• lerp (x,y,s): linear interpolation based on x + s(y - x). s=0 gives x, s=1 gives y.
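To show how the toon step behaves, here is a minimal Python sketch of the same expression. The toon color and vectors are invented for the example, and `apply_toon` is a hypothetical name.

```python
def dot(a, b):
    """Inner product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def saturate(x):
    """Clamp a value to the 0-1 range."""
    return max(0.0, min(1.0, x))


def lerp(x, y, s):
    """Linear interpolation: s=0 gives x, s=1 gives y."""
    return x + s * (y - x)


def apply_toon(rgb, toon_rgb, normal, light_dir):
    """Multiply the color by a blend between the toon color and white."""
    light_normal = dot(normal, [-c for c in light_dir])
    s = saturate(light_normal * 16 + 0.5)   # steep ramp around the terminator
    return [c * lerp(t, 1.0, s) for c, t in zip(rgb, toon_rgb)]


white = [1.0, 1.0, 1.0]
toon_color = [0.5, 0.5, 0.5]
normal = [0.0, 1.0, 0.0]

lit = apply_toon(white, toon_color, normal, [0.0, -1.0, 0.0])     # facing the light
edge = apply_toon(white, toon_color, normal, [1.0, 0.0, 0.0])     # light at 90 degrees
shaded = apply_toon(white, toon_color, normal, [0.0, 1.0, 0.0])   # facing away
```

Because the influence is multiplied by 16 before clamping, the blend jumps from the toon color to full brightness within a narrow band of angles, which gives the hard-edged shading typical of toon rendering.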

// specular application

Color.rgb += Specular;

return Color;

}

Add the obtained specular to the base color and return the output color.

technique MainTec0 < string MMDPass = "object"; bool UseTexture = false; bool UseSphereMap = false; bool UseToon = false; > {

pass DrawObject {

VertexShader = compile vs_2_0 Basic_VS(false, false, false);

PixelShader = compile ps_2_0 Basic_PS(false, false, false);

}

}

Technique performed on a subset of accessories (materials) that don’t use texture or sphere maps when self-shadow is disabled.

• “object”: object when self-shadow is disabled.

• UseTexture: true for texture usage subset.

• UseSphereMap: true for sphere map usage subset.

• UseToon: true for PMD model.

7. Z-VALUE PLOT FOR SELF-SHADOW DETERMINATION (セルフシャドウ判定用Z値プロット)

Create a boundary value to be used for determining the self-shadow.

struct VS_ZValuePlot_OUTPUT {

float4 Pos : POSITION; // projective transformation coordinates

float4 ShadowMapTex : TEXCOORD0; // z-buffer texture

};

A structure for passing multiple return values between shader stages.

Parameters

• Pos: the position of the vertex in projective coordinates, output with the POSITION semantic (position in homogeneous space).

• ShadowMapTex: texture coordinates that let the hardware interpolate the z and w values, output with the TEXCOORD0 semantic.

• w: scaling factor of the view frustum (which widens with depth) in projective space.

// vertex shader

VS_ZValuePlot_OUTPUT ZValuePlot_VS( float4 Pos : POSITION )

{

VS_ZValuePlot_OUTPUT Out = (VS_ZValuePlot_OUTPUT)0;

// do a world-view projection transformation with the eyes of the light.

Out.Pos = mul( Pos, LightWorldViewProjMatrix );

Conversion of local coordinates of a vertex to projective coordinates with respect to a light.

// align texture coordinates to vertices.

Out.ShadowMapTex = Out.Pos;

return Out;

}

Assign to texture coordinates to let the hardware calculate z, w interpolation values for vertex coordinates, and return the structure.

// pixel shader

float4 ZValuePlot_PS( float4 ShadowMapTex : TEXCOORD0 ) : COLOR

{

// record z-values for R color components

return float4(ShadowMapTex.z/ShadowMapTex.w,0,0,1);

}

Divide the z-value in projective space by the magnification factor w, calculate the z-value in screen coordinates, assign to r-value and return (internal MMD processing?).
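In Python terms, the pixel shader above does nothing more than the following. This is a sketch with a made-up input; how MMD stores and later reads the resulting buffer is not covered here.

```python
def z_value_plot(shadow_map_tex):
    """Return an RGBA color whose red channel holds depth z divided by w."""
    x, y, z, w = shadow_map_tex
    return (z / w, 0.0, 0.0, 1.0)


# Hypothetical interpolated position as seen from the light.
depth_color = z_value_plot((0.0, 0.0, 5.0, 10.0))
```

The red channel therefore records how far the nearest drawn surface is from the light, which the self-shadow pass later compares against.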

// techniques for Z-value mapping

technique ZplotTec < string MMDPass = "zplot"; > {

pass ZValuePlot {

AlphaBlendEnable = FALSE;

VertexShader = compile vs_2_0 ZValuePlot_VS();

PixelShader = compile ps_2_0 ZValuePlot_PS();

}

}

Technique to be performed when calculating the z-value for self-shadow determination.

• “zplot”: Z-value plot for self-shadow.

8. DRAWING OBJECTS IN SELF-SHADOWING (セルフシャドウ時オブジェクト描画)

Drawing an object with self-shadow.

// sampler for the shadow buffer. "register(s0)" because MMD uses s0

sampler DefSampler : register(s0);

Assign sampler register 0 to DefSampler. It is unclear how this relates to MMDSamp0, which was bound to the same register earlier. It cannot be replaced.

struct BufferShadow_OUTPUT {

float4 Pos : POSITION; // projective transformation coordinates

float4 ZCalcTex : TEXCOORD0; // z value

float2 Tex : TEXCOORD1; // texture

float3 Normal : TEXCOORD2; // normal vector

float3 Eye : TEXCOORD3; // position relative to camera

float2 SpTex : TEXCOORD4; // sphere map texture coordinates

float4 Color : COLOR0; // diffuse color

};

VS_OUTPUT with ZCalcTex added.

• ZCalcTex: texture coordinates used to interpolate the z and w values of vertices in screen coordinates, output with the TEXCOORD0 semantic.

// vertex shader

BufferShadow_OUTPUT BufferShadow_VS(float4 Pos : POSITION, float3 Normal : NORMAL, float2 Tex : TEXCOORD0, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon)

{

Converts local coordinates of vertices to projective coordinates. Set the value to pass to the pixel shader, returning the BufferShadow_OUTPUT structure.

Parameters

• Pos: local coordinates of the vertex.

• Normal: normals in local coordinates of vertices.

• Tex: UV coordinates of the vertices.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of sphere map usage, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

Return value

BufferShadow_OUTPUT.

BufferShadow_OUTPUT Out = (BufferShadow_OUTPUT)0;

Initializing the structure.

// world-view projection transformation of camera viewpoint.

Out.Pos = mul( Pos, WorldViewProjMatrix );

Convert local coordinates of vertices to projective coordinates.

// position relative to camera

Out.Eye = CameraPosition - mul( Pos, WorldMatrix );

Calculate the inverse vector of the line of sight.

// vertex normal

Out.Normal = normalize( mul( Normal, (float3x3)WorldMatrix ) );

Compute normalized normal vectors in the vertex world space.

// world-view projection transformation from the light's viewpoint

Out.ZCalcTex = mul( Pos, LightWorldViewProjMatrix );

Convert local coordinates of vertices to projective coordinates with respect to the light, and let the hardware calculate z and w interpolation values.

// Diffuse color + Ambient color calculation

Out.Color.rgb = AmbientColor;

if ( !useToon ) {

Out.Color.rgb += max(0,dot( Out.Normal, -LightDirection )) * DiffuseColor.rgb;

}

Out.Color.a = DiffuseColor.a;

Out.Color = saturate( Out.Color );

Set the base color. For accessories, add a diffuse color to the base color based on the light influence, and clamp each component to 0-1.

// texture coordinates

Out.Tex = Tex;

Assign the UV coordinates of the vertex as they are.

if ( useSphereMap ) {

// sphere map texture coordinates

float2 NormalWV = mul( Out.Normal, (float3x3)ViewMatrix );

Convert vertex normal vectors to x and y components in view space coordinates when using sphere maps.

Out.SpTex.x = NormalWV.x * 0.5f + 0.5f;

Out.SpTex.y = NormalWV.y * -0.5f + 0.5f;

}

return Out;

}

Convert view space coordinates to texture coordinates and return the structure.

// pixel shader

float4 BufferShadow_PS(BufferShadow_OUTPUT IN, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon) : COLOR

{

Specify the color of pixels to be displayed on the screen.

Parameters

• IN: BufferShadow_OUTPUT structure received from vertex shader.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of sphere map usage, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

Output value

Output color

// specular color calculation

float3 HalfVector = normalize( normalize(IN.Eye) + -LightDirection );

float3 Specular = pow( max(0,dot( HalfVector, normalize(IN.Normal) )), SpecularPower ) * SpecularColor;

Same specular calculation as Basic_PS.

float4 Color = IN.Color;

float4 ShadowColor = float4(AmbientColor, Color.a); // shadow’s color

Base color and self-shadow base color.

if ( useTexture ) {

// apply texture

float4 TexColor = tex2D( ObjTexSampler, IN.Tex );

Color *= TexColor;

ShadowColor *= TexColor;

}

When using a texture, extract the color of the texture coordinates from the set texture and multiply it by the base color and self-shadow color respectively.

if ( useSphereMap ) {

// apply sphere map

float4 TexColor = tex2D(ObjSphareSampler,IN.SpTex);

if(spadd) {

Color += TexColor;

ShadowColor += TexColor;

} else {

Color *= TexColor;

ShadowColor *= TexColor;

}

}

As with Basic_PS, when using a sphere map, add or multiply the corresponding colors.

// specular application

Color.rgb += Specular;

Apply specular to the base color.

// convert to texture coordinates

IN.ZCalcTex /= IN.ZCalcTex.w;

Divide the z-value in projective space by the scaling factor w and convert to screen coordinates.

float2 TransTexCoord;

TransTexCoord.x = (1.0f + IN.ZCalcTex.x)*0.5f;

TransTexCoord.y = (1.0f - IN.ZCalcTex.y)*0.5f;

Convert screen coordinates to texture coordinates.

if( any( saturate(TransTexCoord) != TransTexCoord ) ) {

// external shadow buffer

return Color;

Return the base color if the vertex coordinates aren’t in the 0-1 range of the texture coordinates.

} else {

float comp;

if(parthf) {

// self-shadow mode2

comp=1-saturate(max(IN.ZCalcTex.z-tex2D(DefSampler,TransTexCoord).r , 0.0f)*SKII2*TransTexCoord.y-0.3f);

In self-shadow mode2, take the Z value from the shadow buffer sampler and compare it with the Z value of the vertex. If the Z of the vertex is smaller, it isn't in shadow. If the difference is small (close to where the shadow begins), the shadow is heavily corrected. (Is the correction weaker toward the top of the screen?) The base color is only weakly corrected.

} else {

// self-shadow mode1

comp=1-saturate(max(IN.ZCalcTex.z-tex2D(DefSampler,TransTexCoord).r , 0.0f)*SKII1-0.3f);

}

Do the same for self-shadow mode1.

if ( useToon ) {

// toon application

comp = min(saturate(dot(IN.Normal,-LightDirection)*Toon),comp);

In the case of MMD models, compare the degree of influence of the shade caused by the light with the degree of influence caused by the self-shadow, and choose the smaller one as the degree of influence of the shadow.

• min (x,y): select the smaller value of x and y.

ShadowColor.rgb *= MaterialToon;

}

Multiply the self-shadow color by the toon shadow color.

float4 ans = lerp(ShadowColor, Color, comp);

Linearly interpolate between the self-shadow color and the base color depending on the influence of the shadow.

if( transp ) ans.a = 0.5f;

return ans;

}

}

If translucency is enabled, set the transparency of the display color to 50% and return the composite color.
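The shadow decision at the end of BufferShadow_PS can be summarized with a Python sketch. Several things here are simplifying assumptions: the depth stored in the shadow buffer is passed in as a plain number instead of being sampled from a texture, the toon adjustment and the translucency flag are left out, and the function names are invented for this example.

```python
SKII1 = 1500   # mode1 constant from the parameter declarations
SKII2 = 8000   # mode2 constant from the parameter declarations


def saturate(x):
    """Clamp a value to the 0-1 range."""
    return max(0.0, min(1.0, x))


def lerp(x, y, s):
    """Linear interpolation: s=0 gives x, s=1 gives y."""
    return x + s * (y - x)


def shadow_tex_coord(zcalc):
    """Divide by w, then map x/y from -1..1 to shadow buffer coordinates 0..1."""
    x, y, z, w = zcalc
    x, y, z = x / w, y / w, z / w
    return (1.0 + x) * 0.5, (1.0 - y) * 0.5, z


def lit_amount(depth, stored_depth, v, mode2):
    """1 means fully lit, 0 means fully in self-shadow."""
    diff = max(depth - stored_depth, 0.0)
    if mode2:
        return 1.0 - saturate(diff * SKII2 * v - 0.3)
    return 1.0 - saturate(diff * SKII1 - 0.3)


def shade(color, shadow_color, zcalc, stored_depth, mode2=False):
    """Blend between the shadow color and the base color."""
    u, v, depth = shadow_tex_coord(zcalc)
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        return list(color)   # outside the shadow buffer: keep the base color
    comp = lit_amount(depth, stored_depth, v, mode2)
    return [lerp(s, c, comp) for s, c in zip(shadow_color, color)]


base = [1.0, 0.8, 0.6]
dark = [0.2, 0.2, 0.2]

lit = shade(base, dark, (0.0, 0.0, 0.5, 1.0), stored_depth=0.5)       # nothing in front
shadowed = shade(base, dark, (0.0, 0.0, 0.5, 1.0), stored_depth=0.4)  # occluder is closer
outside = shade(base, dark, (4.0, 0.0, 0.5, 1.0), stored_depth=0.0)   # off the buffer
```

When the pixel's depth matches the stored depth, nothing lies between it and the light and the base color is kept; when the stored depth is clearly smaller, something closer to the light blocks it and the shadow color is used. The large SKII constants make that transition very steep.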

// techniques for drawing objects (for accessories)

technique MainTecBS0 < string MMDPass = "object_ss"; bool UseTexture = false; bool UseSphereMap = false; bool UseToon = false; > {

pass DrawObject {

VertexShader = compile vs_3_0 BufferShadow_VS(false, false, false);

PixelShader = compile ps_3_0 BufferShadow_PS(false, false, false);

}

}

Technique applied to the subset of accessories (materials) that don’t use a texture or sphere map, when self-shadow is enabled.

• “object_ss”: object drawing pass when self-shadow is enabled.

• UseTexture: true for texture usage subset.

• UseSphereMap: true for sphere map usage subset.

• UseToon: true for PMD model.

9. FINAL NOTES

For further reading on HLSL coding, please visit Microsoft’s official English reference documentation.


𝑊𝑎𝑟 𝑂𝑓 𝐻𝑒𝑎𝑟𝑡𝑠 - 𝐶𝑟𝑖𝑚𝑖𝑛𝑎𝑙 𝑀𝑖𝑛𝑑𝑠, 𝑆𝑝𝑒𝑛𝑐𝑒𝑟 𝑅𝑒𝑖𝑑 𝑥 𝑂𝐶 - 𝐶ℎ𝑎𝑝𝑡𝑒𝑟 1 : 𝑉𝑎𝑙𝑒𝑛𝑜

Masterlist

Rating: Mature

Summary: 𝐴𝑙𝑖𝑐𝑒 𝑛𝑒𝑣𝑒𝑟 𝑖𝑚𝑎𝑔𝑖𝑛𝑒𝑑 ℎ𝑒𝑟𝑠𝑒𝑙𝑓 𝑤𝑜𝑟𝑘𝑖𝑛𝑔 𝑖𝑛 𝑖𝑛𝑡𝑒𝑙𝑙𝑖𝑔𝑒𝑛𝑐𝑒, 𝑏𝑢𝑡 ℎ𝑒𝑟 𝑑𝑒𝑠𝑖𝑟𝑒 𝑡𝑜 ℎ𝑒𝑙𝑝 𝑜𝑡ℎ𝑒𝑟𝑠 𝑟𝑒𝑠𝑢𝑙𝑡𝑒𝑑 𝑖𝑛 𝑡𝑟𝑜𝑢𝑏𝑙𝑒 𝑡ℎ𝑎𝑡 𝑙𝑒𝑓𝑡 ℎ𝑒𝑟 𝑤𝑖𝑡ℎ 𝑙𝑖𝑡𝑡𝑙𝑒 𝑜𝑝𝑡𝑖𝑜𝑛𝑠. 𝑊𝑖𝑡ℎ 𝑡ℎ𝑒 𝑠𝑢𝑝𝑝𝑜𝑟𝑡 𝑜𝑓 𝑡ℎ𝑒 𝐵𝐴𝑈 𝑓𝑎𝑚𝑖𝑙𝑦, 𝑚𝑎𝑦𝑏𝑒 𝑠ℎ𝑒 𝑐𝑎𝑛 𝑓𝑖𝑛𝑎𝑙𝑙𝑦 𝑏𝑒𝑔𝑖𝑛 𝑡𝑜 ℎ𝑒𝑎𝑙 𝑡ℎ𝑒 𝑤𝑜𝑢𝑛𝑑𝑠 𝑜𝑓 𝑡ℎ𝑒 𝑝𝑎𝑠𝑡.

Fandom: Criminal Minds

Pairing: Spencer Reid x OC

Status: Ongoing

LONG TERM ONGOING PROJECT :)

My writing is entirely fuelled by coffee! If you enjoy my work, feel free to donate toward my caffeine dependency: will work for coffee

𝑾𝒂𝒓𝒏𝒊𝒏𝒈𝒔: 𝐺𝑒𝑛𝑒𝑟𝑎𝑙𝑙𝑦 𝑎𝑑𝑢𝑙𝑡 𝑐𝑜𝑛𝑡𝑒𝑛𝑡, 𝑤𝑖𝑡ℎ 𝑠𝑜𝑚𝑒 𝑡𝑟𝑖𝑔𝑔𝑒𝑟𝑖𝑛𝑔 𝑡ℎ𝑒𝑚𝑒𝑠 𝑎𝑠 𝑤𝑖𝑡ℎ 𝑡ℎ𝑒 𝑠ℎ𝑜𝑤. 𝑃𝑙𝑒𝑎𝑠𝑒 𝑏𝑒 𝑎𝑤𝑎𝑟𝑒 𝑡ℎ𝑖𝑠 𝑑𝑜𝑒𝑠 𝑚𝑒𝑎𝑛 𝑐𝑜𝑣𝑒𝑟𝑖𝑛𝑔 𝑐𝑎𝑠𝑒𝑠 𝑜𝑓 𝑚𝑢𝑟𝑑𝑒𝑟, 𝑐ℎ𝑖𝑙𝑑 𝑎𝑏𝑑𝑢𝑐𝑡𝑖𝑜𝑛 & 𝑠𝑒𝑥𝑢𝑎𝑙 𝑎𝑏𝑢𝑠𝑒 𝑎𝑠 𝑡ℎ𝑖𝑠 𝑖𝑠 𝑡ℎ𝑒 𝑛𝑎𝑡𝑢𝑟𝑒 𝑜𝑓 𝑡ℎ𝑒 𝐵𝐴𝑈'𝑠 𝑤𝑜𝑟𝑘. 𝐼𝑡 𝑖𝑠 𝑚𝑦 𝑖𝑛𝑡𝑒𝑛𝑡𝑖𝑜𝑛 𝑡𝑜 ℎ𝑎𝑛𝑑𝑙𝑒 𝑡ℎ𝑒𝑠𝑒 𝑖𝑠𝑠𝑢𝑒𝑠 𝑎𝑠 𝑐𝑎𝑟𝑒𝑓𝑢𝑙𝑙𝑦 𝑎𝑠 𝑝𝑜𝑠𝑠𝑖𝑏𝑙𝑒, 𝑏𝑢𝑡 𝑖𝑓 𝑡ℎ𝑒𝑟𝑒 𝑖𝑠 𝑎𝑛𝑦𝑡ℎ𝑖𝑛𝑔 𝑡ℎ𝑎𝑡 𝑦𝑜𝑢 𝑓𝑒𝑒𝑙 𝑐𝑜𝑢𝑙𝑑 𝑏𝑒 𝑖𝑚𝑝𝑟𝑜𝑣𝑒𝑑 𝑤𝑖𝑡ℎ ℎ𝑜𝑤 𝑡ℎ𝑒𝑠𝑒 𝑎𝑟𝑒 𝑚𝑎𝑛𝑎𝑔𝑒𝑑, 𝑝𝑙𝑒𝑎𝑠𝑒 𝑙𝑒𝑡 𝑚𝑒 𝑘𝑛𝑜𝑤.

Eᴘɪsᴏᴅᴇ: Pʀᴇ Sᴇᴀsᴏɴ 1

Chapter One

The smell of stale coffee was overpowering, almost more so than the lack of daylight in the room. Overworking was common practice here and as such, caffeine addiction was deeply ingrained into our culture. I was relieved to have my own office to allow me to focus away from the highly strung teams that depended on me. Topping up my teacup from the pot, I continued to scan my eyes through the various databases in search of something to provide us with a lead.

My eyelids were heavy from exhaustion as I battled to hold my concentration, and I almost jumped out of my chair as a shrill phone rang from the back of the desk. It was a secure line that I’d set up a while ago, but seldom used, and I quickly got to my feet to close the door before picking up the handset.

“Wonderland.” The careful voice announced a code that prompted a smile to fill my lips and I was immediately flooded with a warm sense of appreciation as I recognised them.

“Well, it’s been some time since I heard that.” I muttered curtly, settling back into my seat and a small satisfied sound on the other end of the line seemed to indicate that she was relieved to have confirmed my identity. “You’re certainly working late, aren’t you?”

“You know how it is. Monsters to catch.” Penelope sighed, allowing me the chance to catch the fatigue in her voice that likely would have been easily missed by anyone else. “Speaking of which, I have a problem.” She confessed, causing me to lean forward with riveted interest.

“We’ve got one of your boys causing mayhem over here. Your agents are keeping suspiciously schtum on it, territorial as ever. My team wants to stick with the case. I can’t ask for official permission to access Interpol files when we’ve been told to drop it, but if I could just get five minutes in the system…” She trailed off suggestively and I chuckled lightly to myself.

“You’re losing your touch, My Queen. I would have expected you to have found your way in already.” I teased, referring to the name that I’d once known her by as I set to work on bringing up the files of the case. I didn’t need specifics to clarify, I knew exactly which criminal my team was after that had required them to fly to the States to investigate and I heard her sigh.

“Any other time, you know that I would hack first and deny knowledge later, but I can’t plead ignorance on something that we’ve already been clearly told to stay away from.” She groaned, her frustration obvious even over the phone and I began typing rapidly as a plan formulated in my mind.

“I can’t allow access to a foreign agency, my dear. The other techs would rat me out before you’d even found anything.” I explained, chewing on my lip as I entered line after line of code in an attempt to outsmart my colleagues with techniques that would never be used in an official capacity. “However, if a back door was accidentally left open during maintenance, I couldn’t be held responsible for anything that might creep in.” I thought aloud as I sped through screens with a wicked smile, knowing that I could easily avoid any blame this way.

“I’ve assigned all of the correct credentials. Anything that you access will appear as if it’s me doing admin on the case, but be careful. It’s a very limited disguise that won’t last long if anyone starts to dig at it. I can keep our techs busy for a little while, but you know that subtlety isn’t my strong suit. Get out before you’re noticed.” I instructed as I entered the last few commands and could already hear her making preparations for the task.

“Oh, sweetheart. I will be gone before they even know what hit them.” She breezed, her voice filled with a confidence that was contagious and I took a deep breath as I allowed it to pass onto me.

“Launching now.” I confirmed, as I entered the final key and my screen began filling with pages of data. “Make it worthwhile. Catch the bastard.”

--⥈--

“You think it’s an inside agent?”

My sector chief stared me down with an intensely disbelieving expression and I struggled to keep my nervousness from showing. It was incredibly nerve wracking to suggest this in a team where I was already the odd one out, but I couldn’t allow my own insecurities to prevent us from getting justice. Ever since I had started here, I’d had the deep rooted feeling that none of my colleagues approved of the decision to recruit someone who should have been arrested and the disdain always seemed most powerful when addressing the man before me.

“That is my belief, Sir.” I answered, hoping that he wouldn’t notice my false confidence and I forced myself to hold his gaze as he crossed his arms. “Valeno has successfully dodged every digital trap that I’ve laid. He’s avoided multiple agencies now and essentially vanished without a trace. It wouldn’t be possible to achieve that without inside knowledge of the measures that we are taking.” I explained, carefully presenting my theory and he remained unmoved as he studied me, causing me to gulp in discomfort.

“Find me proof, Hawthorne. I can’t call a witch hunt based on your bitterness against this team.” Shepard responded coldly, turning his back on me without another word to take a seat at his desk and I gulped down the anger that I felt at this unfair accusation. When I remained rooted to the spot in confusion, he simply gestured for me to show myself out.

I stomped back to my office with my cheeks burning in humiliation and closed the door behind me so that I could sink down against it with my face in my hands. It was endlessly frustrating to have invested so much time into pursuing this trafficking ring, only to have them continuously avoid capture. I was exhausted by this case, unable to remember my last day off since I was assigned to it and I could feel that I was running out of ideas, as I faced a heart breaking lack of support.

A loud alert on my computer pulled me from my wallowing and I rushed into my seat with interest. Flashing on my screen was a warning that made my stomach lurch and I stared at it with wide eyes. After months of silence, someone had finally accessed one of the booby trapped files.

I jumped into action, entering commands at lightning speed and became determined to capture whomever it was that had been defeating me for many long months. They were quick, evading my tactics with ease and I found myself engaged in a maddening game of digital hide and seek in the various systems. It was immediately clear that I was dealing with a professional and I cursed under my breath as I strained to keep up with them, setting fresh traps as I worked in the hope of preventing their escape.

“Oh no you don’t, you little bugger! Not today!” I hissed under my breath, feeling sweat on my brow as I fretted that one wrong move could lose them forever.

As I watched them evade my tracking, then jump straight into blocking me out with expert knowledge, a memory stirred in the back of my mind. The theory rapidly took hold and I wasted no time in throwing out a manoeuvre that I knew would cause a bolt of familiarity if the culprit was who I suspected. Barely moments later, my screen fizzled in error, before displaying a bizarre Tetris overlay that could only be the signature of one person and I gasped, reaching for the phone in a fluster. I could hardly dial the number properly with my shaking hands and stumbled over my words as they answered hurriedly.

“Tell me that you’re the person flooding my system with Tetris right now!” I spat, cutting her off before she could even speak and I heard the noisy typing on the other end rapidly stop.

“I knew it! You’re the only hacker I know that would use Alice in Wonderland riddles to throw me off!” Penelope gasped with excitement and I released a relieved breath that I didn’t even know I had been holding. It had been almost six months since I last heard her voice and although I would usually be pleased to speak to her, I couldn’t help a wave of disappointment at the realisation that I hadn’t caught anyone connected to the case.

“Well done, Reid! I just almost hacked our best chance of cracking your sicko lady thief. Thank god for your crazy book memory.” I heard her chatting to someone in the background and cleared my throat to regain her attention.

“I’d recognise those moves anywhere. You’re the only person that aggressive in code. What are you doing in my case?” I asked, rubbing at my temples in confusion and though I could hear someone barraging her with questions about who she was talking to, she remained obediently focused on me.

“Your case? I’m researching an abduction for the team.” She revealed, sounding as if she hadn’t even realised what was happening yet and I felt my eyes widen in horror. “Wait a second. Interpol is on this?” She breathed, causing sounds of shock to echo from whoever was in her background and I leapt to my feet as my thoughts bounced around in my mind.

“Penelope, I need you to send me everything you have. Right now! I think you’ve got a much bigger problem on your hands than you even know.” I ordered, flicking through the case files that covered my desk in a fluster and without a moment of hesitation, the details began to pop up on my screen. As I acknowledged the matching signature to the photos on my desk, I held a hand to my mouth in shock.

“Valeno.” I whispered as adrenaline shot through my entire body and I grabbed my phone as I began marching through the halls of the office. “Get your Unit Chief ready for a call. I’m taking this to the chief now.” I instructed, before hanging up and striding confidently back toward the office that I’d been so rudely dismissed from a short while ago.

Without awaiting permission, I barged inside and switched on the monitor on the wall. I flicked it to the channel that connected to my station and displayed the crime scene photos that were sent by Penelope. At first, Shepard seemed irritated by the brashness of my approach, but as I flicked through the photos with a determined energy, understanding dawned on his features.