#Modern Codebase


Text

#Magento 2.0#Ecommerce Features#Improved Checkout#Full-Page Caching#Modern Codebase#Backend Enhancements#Database Optimization#Performance Improvement#Enterprise Edition#Open Source Development

0 notes

Text

At my last job, we sold lots of hobbyist electronics stuff, including microcontrollers.

This turned out to be a little more complicated than selling, like, light bulbs. Oh how I yearned for the simplicity of a product you could plug in and have work.

Background: A microcontroller is the smallest useful computer. An ATtiny10 has a kilobyte of program memory. If you buy a thousand at a time, they cost 44 cents each.

As you'd imagine, the smallest computer has not great specs. The RAM is 32 bytes. Not gigabytes, not megabytes, not kilobytes. Individual bytes. Microcontrollers have the absolute minimum amount of hardware needed to accomplish their task, and nothing more.

This includes programming the thing. Any given MCU is programmed once, at the start of its life, and then spends the next 30 years blinking an LED on a refrigerator. Since they aren’t meant to be reflashed in the field, and modern PCs no longer expose the fast, bit-bangable ports hobbyists once used, MCUs usually need a third-party programming tool.

But you could just use that tool to install a bootloader, which then listens for a magic number on the serial bus. Then you can reprogram the chip as many times as you want without the expensive programming hardware.

There is an immediate bifurcation here. Only hobbyists will use the bootloader version. With 1024 bytes of program memory, there is, even more than usual, nothing to spare.

Consumer electronics development is a funny gig. It, more than many other businesses, requires you to be good at everything. A startup making the next Furby requires a rare omniexpertise. Your company has to write software, design hardware, create a production plan, craft a marketing scheme, and still do the boring logistics tasks of putting products in boxes and mailing them out. If you want to turn a profit, you do this with the absolute minimum number of people. Ideally, one.

Proving out a brand new product requires cutting corners. You make the prototype using off-the-shelf hobbyist electronics. You make the next ten units with the same stuff, because there's no point in rewriting the entire codebase just for low rate initial production. You use the legacy code for the next thousand units because you're desperately busy putting out a hundred fires and hiring dozens of people to handle the tsunami of new customers. For the next ten thousand customers...

Rather by accident, my former employer found itself fulfilling the needs of the missing middle. We were an official distributor of PICAXE chips for North America. Our target market was schools, but as a sideline, we sold individual PICAXE chips, which were literally PIC chips flashed with a bootloader and a BASIC interpreter at a 200% markup. As a gag, we offered volume discounts on the chips up to a thousand units. Shortly after, we found ourselves filling multi-thousand unit orders.

We had blundered into a market niche too stupid for anyone else to fill. Our customers were tiny companies who sold prototypes hacked together from dev boards. And every time I cashed a ten thousand dollar check from these guys, I was consumed with guilt. We were selling to willing buyers at the current fair market price, but they shouldn't have been buying these products at all! Since they were using bootloaders, they had to hand program each chip individually, all while PIC would sell you programmed chips at the volume we were selling them for just ten cents extra per unit! We shouldn't have been involved at all!

But they were stuck. Translating a program from the soft and cuddly memory-managed education-oriented languages to the hardcore embedded byte counting low level languages was a rather esoteric skill. If everyone in-house is just barely keeping their heads above water responding to customer emails, and there's no budget to spend $50,000 on a consultant to rewrite your program, what do you do? Well, you keep buying hobbyist chips, that's what you do.

And I talked to these guys. All the time! They were real, functional, profitable businesses, who were giving thousands of dollars to us for no real reason. And the worst thing. The worst thing was... they didn't really care? Once every few months they would talk to their chip guy, who would make vague noises about "bootloaders" and "programming services", while they were busy solving actual problems. (How to more accurately detect deer using a trail camera with 44 cents of onboard compute) What I considered the scandal of the century was barely even perceived by my customers.

In the end my employer was killed by the pandemic, and my customers seamlessly switched to buying overpriced chips straight from the source. The end! No moral.

358 notes


Text

Welcome back, coding enthusiasts! Today we'll talk about Git & GitHub, the must-know duo for any modern developer. Whether you're just starting out or need a refresher, this guide will walk you through everything from setup to intermediate-level use. Let’s jump in!

What is Git?

Git is a version control system. It helps you as a developer:

Track changes in your codebase, so if anything breaks, you can go back to a previous version. (Trust me, this happens more often than you’d think!)

Collaborate with others: whether you're working on a team project or contributing to an open-source repo, Git helps manage multiple versions of a project.

In short, Git allows you to work smarter, not harder. Developers who aren't familiar with the basics of Git? Let’s just say they’re missing a key tool in their toolkit.

What is GitHub?

GitHub is a web-based platform that uses Git for version control and collaboration. It provides an interface to manage your repositories, track bugs, request new features, and much more. Think of it as a place where your Git repositories live, and where real teamwork happens. You can collaborate, share your code, and contribute to other projects, all while keeping everything well-organized.

Git & GitHub: not the same thing!

Git is the tool you use to create repositories and manage code on your local machine, while GitHub is the platform where you host those repositories and collaborate with others. You can also host Git repositories on other platforms like GitLab and Bitbucket, but GitHub is the most popular.

Installing Git (Windows, Linux, and macOS Users)

You can go ahead and download Git for your platform from git-scm.com.

Using Git

You can use Git either through the command line (Terminal) or through a GUI. However, as a developer, it’s highly recommended to learn the terminal approach. Why? Because it’s more efficient, and understanding the commands will give you a better grasp of how Git works under the hood.

Git Workflow

Git operates in several key areas:

Working directory (on your local machine)

Staging area (where changes are prepared to be committed)

Local repository (stored in the hidden .git directory in your project)

Remote repository (the version of the project stored on GitHub or other hosting platforms)

Let’s look at the basic commands that move code between these areas:

git init: Initializes a Git repository in your project directory, creating the .git folder.

git add: Adds your files to the staging area, where they’re prepared for committing.

git commit: Commits your staged files to your local repository.

git log: Shows the history of commits.

git push: Pushes your changes to the remote repository (like GitHub).

git pull: Fetches changes from the remote repository and merges them into your current branch, updating your working directory.

git clone: Clones a remote repository to your local machine, maintaining the connection to the remote repo.

Branching and merging

When working in a team, it’s important to never mess up the main branch (often called master or main). This is the core of your project, and it's essential to keep it stable.

To do this, we branch out for new features or bug fixes. This way, you can make changes without affecting the main project until you’re ready to merge. Only merge your work back into the main branch once you're confident that it’s ready to go.
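The branch-then-merge flow described above can be sketched in a throwaway repository. The branch name feature/greeting, the file app.txt, and the identity values are all hypothetical; git checkout is used for compatibility with older Git versions (git switch does the same job on newer ones).

```shell
# Throwaway repository so the demo does not touch a real project.
demo="$(mktemp -d)"
cd "$demo"
git init -q
git config user.name "Your Name"          # placeholder identity, this repo only
git config user.email "you@example.com"

echo "hello" > app.txt
git add app.txt
git commit -q -m "Initial commit"
git branch -M main                        # make sure the stable branch is called main

# Branch out, do the work, and commit it on the feature branch.
git checkout -q -b feature/greeting
echo "hello, world" > app.txt
git commit -q -am "Improve greeting"

# Back on main, the file is unchanged until we merge.
git checkout -q main
cat app.txt                               # prints "hello"
git merge feature/greeting                # fast-forwards main to the feature commit
cat app.txt                               # prints "hello, world"
```

Until the merge runs, main never sees the half-finished work, which is the whole point of branching.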

Getting Started: From Installation to Intermediate

Now, let’s go step-by-step through the process of using Git and GitHub from installation to pushing your first project.

Configuring Git

After installing Git, you’ll need to tell Git your name and email. This helps Git keep track of who made each change. To do this, run:
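A minimal sketch of that configuration step. The name and email are placeholders to replace with your own; --global stores them for every repository on your machine.

```shell
# Placeholders: swap in your own name and email.
git config --global user.name "Your Name"
git config --global user.email "you@example.com"

# Read the values back to confirm they were saved.
git config --global user.name
git config --global user.email
```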

Master vs. Main Branch

By default, Git used to name the default branch master, but GitHub switched it to main for inclusivity reasons. To avoid confusion, check your default branch:
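One way to check this, as a sketch: the init.defaultBranch setting controls the name Git gives the first branch of a new repository (it needs Git 2.28 or newer to take effect). Setting it to main is optional and shown here as an assumption about what you want.

```shell
# Print the configured default branch name, if any.
# When nothing is configured, Git falls back to its built-in default.
git config --global --get init.defaultBranch || echo "init.defaultBranch is not set"

# Optional: make new repositories start on main.
git config --global init.defaultBranch main

# Inside an existing repository, this prints the branch you are on:
# git branch --show-current
```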

Pushing Changes to GitHub

Let’s go through an example of pushing your changes to GitHub.

First, initialize Git in your project directory with git init.

Then, to see the ‘untracked files’ (the files we haven’t added to the staging area yet), we run git status.

Now, as you’ve probably guessed, we’re going to run the git add command. You can add files individually with git add followed by the file name, or all at once with git add . (note the dot).

And finally, it's time to commit our files to the local repository with git commit -m followed by a short message in quotes.

Now, create a new repository on GitHub (it’s easy, just follow these instructions along with me).

Assuming you've already created your GitHub account, go to this link, replacing username with your actual username: https://github.com/username?tab=repositories , then follow these instructions:

You can add a name and choose whether your repo is public or private; forget about everything else for now.

Once your repository is created on GitHub, you’ll get this:

As you might’ve noticed, we’ve already run most of these commands. All that’s left for us to do is push our files from our local repository to our remote repository, so let’s go ahead and do that.

And just like this we have successfully pushed our files to the remote repository
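For reference, here is the whole sequence from the steps above as one runnable sketch. It cannot reach your GitHub account, so a local bare repository stands in for the remote; on GitHub you would use the URL shown on your new repository's page instead. The project name, file, and identity values are hypothetical.

```shell
# Work in a throwaway directory. The bare repository stands in for GitHub.
work="$(mktemp -d)"
git init -q --bare "$work/remote.git"

mkdir "$work/my-project"
cd "$work/my-project"
echo "console.log('hi');" > index.js      # hypothetical project file

git init -q                               # create the .git folder
git config user.name "Your Name"          # placeholder identity, this repo only
git config user.email "you@example.com"
git status                                # index.js is listed as untracked
git add .                                 # stage everything (or: git add index.js)
git commit -q -m "Initial commit"         # record the staged files locally

git branch -M main                        # name the branch main
# On GitHub the URL would look like https://github.com/username/repository.git
git remote add origin "$work/remote.git"
git push -u origin main                   # upload and remember origin/main as upstream
```

The -u flag only matters the first time: it links your local main to origin/main, so later a plain git push or git pull knows where to go.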

Here, you can see the default branch main, the total number of branches, your latest commit message along with how long ago it was made, and the number of commits you've made on that branch.

Now, what is a README file?

A README file is a markdown file where you can add any relevant information about your code or the specific functionality in a particular branch—since each branch can have its own README.

It also serves as a guide for anyone who clones your repository, showing them exactly how to use it.

You can add a README from this button:

Or, you can create it using a command and push it manually:

But for the sake of demonstrating how to pull content from a remote repository, we’re going with the first option:

Once that’s done, it gets added to the repository just like any other file—with a commit message and timestamp.

However, the README file isn’t on my local machine yet, so I’ll run the git pull command:

Now everything is up to date. And this is just the tiniest example of how you can pull content from your remote repository.
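The same round trip can be sketched offline. In this demo a local bare repository plays GitHub, and a second clone (called web here, a made-up name) plays GitHub's web editor. That second clone also shows the command-line way to create a README and push it.

```shell
work="$(mktemp -d)"
git init -q --bare "$work/remote.git"     # stand-in for the GitHub repository

# Local repository with one commit already pushed.
git init -q "$work/laptop"
cd "$work/laptop"
git config user.name "Your Name"          # placeholder identity
git config user.email "you@example.com"
echo "console.log('hi');" > index.js
git add . && git commit -q -m "Initial commit"
git branch -M main
git remote add origin "$work/remote.git"
git push -q -u origin main

# A second clone plays the role of GitHub's web editor adding README.md.
git clone -q --branch main "$work/remote.git" "$work/web"
cd "$work/web"
git config user.name "Your Name"
git config user.email "you@example.com"
echo "# my-project" > README.md
git add README.md
git commit -q -m "Add README"
git push -q origin main

# Back on the local machine: README.md is missing until we pull.
cd "$work/laptop"
ls                                        # index.js only
git pull                                  # fetch the new commit and fast-forward main
ls                                        # README.md and index.js
```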

What is a .gitignore file?

Sometimes, you don’t want to push everything to GitHub—especially sensitive files like environment variables or API keys. These shouldn’t be shared publicly. In fact, GitHub might even send you a warning email if you do:

To avoid this, you should create a .gitignore file, like this:
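A small sketch of such a file, written from the command line in a throwaway repository. The entries are illustrative assumptions (a .env file, key files, and two common build folders); yours will depend on your project.

```shell
demo="$(mktemp -d)"
cd "$demo"
git init -q

# Write a small .gitignore in the project root.
cat > .gitignore <<'EOF'
# Secrets and local configuration
.env
*.key

# Dependencies and build output
node_modules/
dist/
EOF

echo "API_KEY=not-a-real-key" > .env      # a file we never want to commit
git status --short                        # lists .gitignore but not .env
git check-ignore -v .env                  # shows which rule matched
```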

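Files matching the patterns in .gitignore are left out of git add and commits, so they are never pushed to GitHub. This only applies to files Git isn't already tracking: a file you committed earlier has to be removed from tracking with git rm --cached before it is ignored. After that, you’re all set!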

Cloning

When you want to copy a GitHub repository to your local machine (aka "clone" it), you have two main options:

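Clone using HTTPS: This is the most straightforward method. You just copy the HTTPS link from GitHub and run git clone followed by that link, for example git clone https://github.com/username/repository.git (username and repository are placeholders).

It's simple, doesn’t require extra setup, and works well for most users. But when you push or pull, GitHub may ask for your username and a personal access token (account passwords are no longer accepted for Git operations over HTTPS), unless a credential helper has stored them for you.

But if you want to clone using SSH, you’ll need to know a bit more about SSH keys, so let’s talk about that.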

Clone using SSH (Secure Shell): This method uses SSH keys for authentication. Once set up, it’s more secure and doesn't prompt you for credentials every time. Here's how it works:

So what is an SSH key, actually?

Think of SSH keys as a digital handshake between your computer and GitHub.

Your computer generates a key pair:

A private key (stored safely on your machine)

A public key (shared with GitHub)

When you try to access GitHub via SSH, your machine proves that it holds the private key matching the public key you've registered, without ever sending the private key itself.

If they match, you're in — no password prompts needed.

Steps to set up SSH with GitHub:

1. Generate your SSH key:

2. Start the SSH agent and add your key:

3. Copy your public key:

Then copy the output to your clipboard.

4. Add it to your GitHub account:

Go to GitHub → Settings → SSH and GPG keys

Click New SSH key

Paste your public key and save.

5. Now you'll be able to clone using SSH like this:
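The command-line side of steps 1 to 3 and 5 can be sketched as follows. To stay safe, this demo writes the key pair to a throwaway directory with an empty passphrase; that is an assumption of the sketch, not a recommendation. The email and the repository address are placeholders.

```shell
# Demo only: throwaway directory and empty passphrase (-N "").
# In real use, drop -f and -N, accept the default ~/.ssh/id_ed25519 path,
# and choose a passphrase when prompted.
keydir="$(mktemp -d)"
ssh-keygen -q -t ed25519 -C "you@example.com" -N "" -f "$keydir/id_ed25519"

# Start the agent for this shell session and load the private key.
eval "$(ssh-agent -s)"
ssh-add "$keydir/id_ed25519"

# Print the public half; this is the text to paste into GitHub.
cat "$keydir/id_ed25519.pub"

# After the key is registered on GitHub, cloning over SSH looks like:
# git clone git@github.com:username/repository.git
```

Only the .pub file ever leaves your machine; the file without the extension is the private key and should stay where it is.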

From now on, any interaction with GitHub over SSH will just work — no password typing, just smooth encrypted magic.

And there you have it! Until next time — happy coding, and may your merges always be conflict-free! ✨👩‍💻👨‍💻

#code#codeblr#css#html#javascript#java development company#python#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#webdev#website#tech#html css#learn to code#github

94 notes


Text

Development Update - December 2024

Happy New Year, everyone! We're so excited to be able to start off 2025 with our biggest news yet: we have a planned closed beta launch window of Q1 2026 for Mythaura!

Read on for a recap of 2024, more information about our closed beta period, Ryu expressions, January astrology, and Ko-fi Winter Quarter reward concepts!

2024 Year in Review

Creative

This year, the creative team worked on adding new features, introducing imaginative designs, and refining lore/worldbuilding to enrich the overall experience.

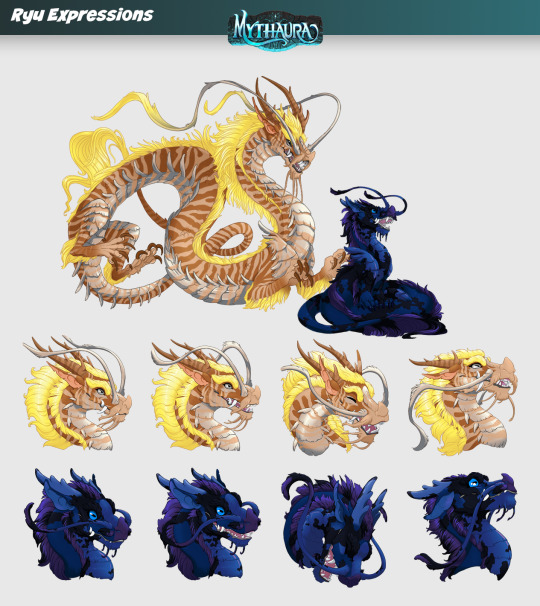

New Beasts and Expressions: All 9 beast expression bases completed for both young and adult with finalized specials for Dragons, Unicorns, Griffins, Hippogriffs, and Ryu.

Mutations, Supers and Specials: Introduced the Celestial mutation as well as new Specials Banding & Merle, and the Super Prismatic.

New Artist: Welcomed Sourdeer to the creative team.

Collaboration and Sponsorship: Sponsored several new companions from our Ko-Fi sponsors—Amaru, Inkminks, Somnowl, Torchlight Python, Belligerent Capygora, and the Fruit-Footed Gecko.

New Colors: Revealed two eye-catching colors, Canyon (a contest winner) and Porphyry (a surprise bonus), giving players even more variety for their Beasts.

Classes and Gear: Unveiled distinct classes, each with its own themed equipment and companions, to provide deeper roleplay and strategic depth.

Items and Worldbuilding: Created a range of new items—from soulshift coins to potions, rations, and over a dozen fishable species—enriching Mythaura’s economy and interactions.

Star Signs & Astrology: Continued to elaborate on the zodiac-like system, connecting each Beast’s fate to celestial alignments.

Questing & Story Outline: Laid the groundwork for the intro quest pipeline and overarching narrative, ensuring that players’ journey unfolds with purposeful progression.

Code

This year, the development team worked diligently on refining and expanding the codebase to support new features, enhance performance, and improve gameplay experiences. A total of 429,000 lines of code changed across both the backend and frontend, reflecting:

New Features: Implementation of systems like skill trees, inventory management, community forums, elite enemies, NPC & quest systems, and advanced customization options for Beasts.

Optimizations and Refactoring: Significant cleanup and streamlining of backend systems, such as game state management, passive effects, damage algorithms, and map data structures, ensuring better performance and maintainability.

Map Builder: a tool that allows us to build bespoke maps

Regular updates to ensure compatibility with modern tools and frameworks.

It’s worth noting that line changes alone don’t capture the complexity of programming work. For example:

A single line of efficient code can replace multiple lines of legacy logic.

Optimizing backend systems often involves removing redundant or outdated code without adding new functionality.

Things like added dependencies can add many lines of code without adding much bespoke functionality.

Mythaura Closed Beta

We are so beyond excited to share this information with you here first: Mythaura closed beta is targeted for Q1 2026!

On behalf of the whole team, thank you all so, so much for all of the support for Mythaura over the years. Whether you’ve been around since the Patreon days or joined us after Koa and Sark took over…it’s your support that has gotten this project to where it is. We are so grateful for the faith and trust placed in us, and the opportunity to create something we hope people will truly love and enjoy. This has truly been a collaborative effort with you and we are constantly humbled by all of the thoughtful insights, engaging discussions, and great ideas to come out of this amazing community of supporters.

So: thank you again, it’s been an emotional and amazing journey for the dev team and we’re delighted to join you on your journeys through Mythaura.

Miyazaki Full-Time

Hey everyone, Koa here!

We’re thrilled to share some news about Mythaura’s development! Starting in 2025, Miya will be officially dedicating herself full-time to Mythaura. Her focus will be on bringing even more depth and wonder to the world of Mythaura through content creation, worldbuilding, and building up the brand. It’s a huge step forward, and we’re so excited for the impact her passion and creativity will have on the project!

In addition, I’ve secured 4-day weeks and will be working full-time each Friday to dive deeper into development. This extra push is going to allow us to keep moving steadily forward on both the art and code fronts, and with Miya’s expanded role, the next year of development is looking really promising.

Thank you all for being here and supporting Mythaura every step of the way. We can’t wait to share more as things progress!

Closed Beta FAQ

In the interest of keeping all of the information about our Closed Beta in one place and updating it as needed, we have added as much information as possible to the FAQ page.

If you have any questions that you can think of, please feel free to reach out to us through our contact form or on Discord!

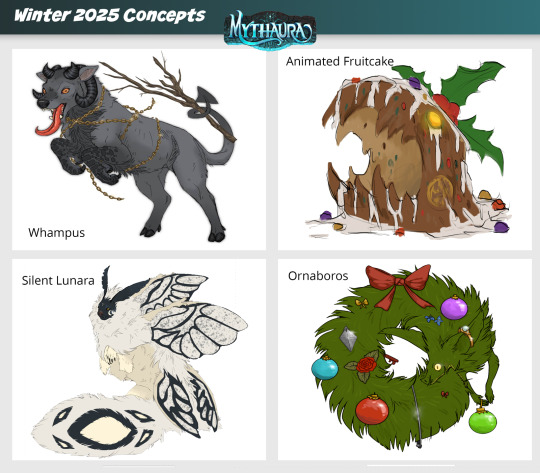

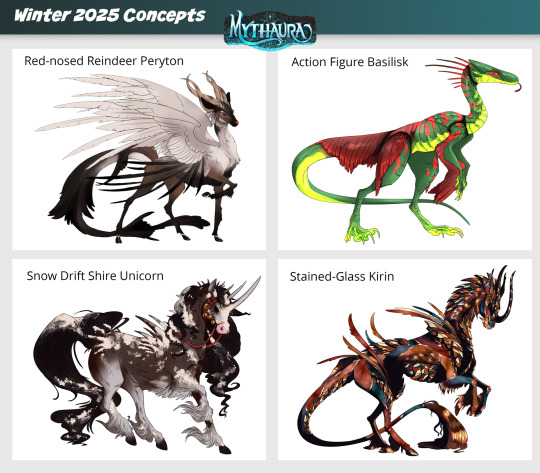

Winter Quarter (2025) Concepts

It’s the first day of Winter Quarter 2025, which means we’ve got new Quarterly Rewards for Sponsors to vote on over on our Ko-fi page!

Which concepts would you like to see made into official site items? Sponsors of Bronze level or higher have a vote in deciding. Please check out the Companion post and the Glamour post on Ko-fi to cast your vote for the winning concepts!

Votes must be posted by January 29, 2025 at 11:59pm PDT in order to be considered.

All Fall 2024 Rewards are now listed in our Ko-fi Shop for individual purchase for all Sponsor levels at $5 USD flat rate per unit. As a reminder, please remember that no more than 3 units of any given item can be purchased. If you purchase more than 3 units of any given item, your entire purchase will be refunded and you will need to place your order again, this time with no more than 3 units of any given item.

Fall 2024 Glamour: Diaphonized Ryu

Fall 2024 Companion: Inhabited Skull

Fall 2024 Solid Gold Glamour: Hippogriff (Young)

NOTE: As covered in the FAQ, the Ko-fi shop will be closing at the end of the year. These will be the last Winter Quarter rewards for Mythaura!

New Super: Zebra

We've added our first new Super to the site since last year's Prismatic: Zebra, which has a chance to occur when parents have the Wildebeest and Banding Specials!

Zebra is now live in our Beast Creator--we're excited to see what you all create with it!

New Expressions: Ryu

The Water-element Ryu has had expressions completed for both the adult and young models. Expressions have been a huge, time-intensive project for the art team to undertake, but the result is always worth it!

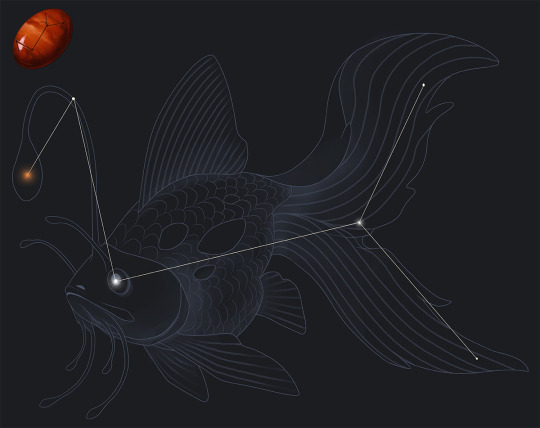

Mythauran Astrology: January

The month of January is referred to as Hearth's Embrace, representing the fireplaces kept lit for the entirety of the coldest month of the year. This month is also associated with the constellation of the Glassblower and the carnelian stone.

Mythaura v0.35

Refactored "Beast Parties" into "User Parties," allowing non-beast entities like NPCs to be added to your party. NPCs added to your party will follow you in the overworld, cannot be made your leader, and will make their own decisions in combat.

Checkpoint floor functionality ironed out, allowing pre-built maps to appear at specific floor intervals.

The ability to set spawn and end coordinates in the map builder was added to allow staff to build checkpoint floors.

Various cleanups and refactors to improve performance and reduce the number of queries needed to run certain operations.

Added location events, which power interactable objects in the overworld, such as a lootable chest or a pickable bush.

Thank You!

Thanks for sticking through to the end of the post, we always look forward to sharing our month's work with all of you--thank you for taking the time to read. We'll see you around the Discord.

#mythaura#indie game#indie game dev#game dev#dev update#unicorn#dragon#griffin#peryton#ryu#basilisk#quetzal#hippogriff#kirin#petsite#pet site#virtual pet site#closed beta launch#flight rising#neopets

93 notes


Text

Here is an observation of common attitudes I see in tech-adjacent spaces (mostly online).

The thing about programming/tech is, at its base, it's historically and culturally contingent. There are of course many fundamental (physical and mathematical) limitations on what a computer can and cannot do, how fast it can do things, and so on. But at least as much of the modern tech landscape is the product of choices made by people about how these machines will work, choices that very much could have been made differently. And modern computing technology is a huge tower of these choices, each resting on and grappling with the ones below it. If you're, say, a web developer writing a web app, the sheer height of this tower of contingent human decisions that your work rests on is almost incomprehensible. And by and large, programmers know this.

I am not dispensing some secret wisdom that I think tech workers don't have. On the contrary, I think the vast contingency of it all is blindingly obvious to anyone who has tried to make a computer do anything. But tech is also, well, technical, and do you know what else is technical? Science. I think this has led to a sort of cultural false affinity, where tech is perceived, both from within and without, as more similar to science than it is to the humanities. Certainly, there are certain kinds of intellectual labor that tech shares with the sciences. But there are also, as described above, certain kinds of intellectual labor that tech shares to a much greater degree with the humanities, namely (in the broadest terms): grappling with other people's choices.

From without, I think this misplaced affinity leads people to believe that technology is less contingent than it actually is. But I think this belief would be completely untenable from within; it just cannot contend with reality. I've never met a tech worker or enthusiast who seems to think this way. Rather, I feel there is a persistent perception among tech-inclined people that science is more contingent than it actually is. I don't think this misperception rises to the level of a belief, rather I think it is more of an intuition. I think tech people have very much trained themselves (rightly, in their native context) to look at complex systems and go "how could this be reworked, improved, done differently?" I think this impulse is very sensible in computing but very out of place in, say, biology. And I suppose my conjecture (this whole post is purely conjectural, based on a gut sense that might not be worth anything) is that this is one of the main reasons for the popularity of transhumanism in, you know, the Bay. And whatnot.

I'm not saying transhumanism is actually, physically impossible. Of course it's not! The technology will, I strongly suspect, exist some day. But if you're living in 2024, I think the engineering mindset is more-or-less unambiguously the wrong one to bring to biology, at least macrobiology. This post is not about the limits of what is physically possible, it's about the attitudes that I sometimes see tech people bring to other endeavors that I think sometimes lead them to fall on their face. If you come to biology thinking about it as this contingent thing that you must grapple with, as you grapple with a novel or a codebase or anything else made by humans, I think it will make you like biology less and understand it less well.

When I was younger and a lot more naive, as a young teenager who knew a little bit about programming and nothing about linguistics, I wanted to create a "logical language" that could replace natural languages (with all their irregularities and perceived inefficiencies) for the purpose of human communication. This is part of how I initially got into conlanging. Now, with an actual linguistics background, I view this as... again, perhaps not per se impossible, but extremely unlikely to work or even to be desirable to attempt in any foreseeable future, for a whole host of rather fundamental reasons. I don't feel that this desire can survive very well upon confrontation with what we actually know (and crucially also, what we don't know) about human language.

I mean, if you want to try, you can try. I won't stop you.

Anyway, I feel that holding onto this sort of mindset too intensely does not really permit engagement with nature and the sciences. It's the same way I think a lot of per se humanities people fudge engagement with the sciences, where they insist on mounting some kind of social critique even when it is not appropriate (to be clear, I think critique of scientific practices/institutions is sometimes appropriate, but I think people whose professional training gives them an instinct to critique often take it too far).

So like, I guess that's my thesis. Coding is a humanity in disguise, and I wish that people who are used to dealing with human-made things would adopt a more native scientific or naturalist mindset when dealing with science and nature.

115 notes


Text

i have complex feelings about the new ZA trailer.

basically im a LITTLE disappointed because it's set in modern day, i was hoping it would be set in the past like PLA cuz I actually really enjoyed the historical setting of that game.

i am also worried that its going to feel really cramped? the smaller spaces worry me a bit, especially since part of the reason why i liked PLA so much was because it was so open. I feel like there are going to be much more forced pokemon battles as a result. I liked being able to avoid pokemon and observe them from afar in PLA.

I am really excited for the real time battle system, but i certainly hope there's an auto battle mode for wild pokemon encounters cuz I am not entirely sure how fleeing will work in this scenario.

I also hope that we get different final evos for the starters like we did in PLA. I like tepig and totodile but their final evolutions are kind of rough (for me personally! i get the appeal!! i just prefer cuteness)

i also hope we get new mega evos for the main 3 but also for other pokemon!

i also hope we get the XY legendaries like we did in PLA. I do like the look of the trainers we've seen so far and i am OBSESSED with the hotel. I love overgrown buildings and everything.

I also like the ability to run on the rooftops that brings me a lot of joy. That's exciting for me personally!

It seems like it's built off of the SV PLA codebase so expect some performance issues ^-^

also the trainers starting with fedoras is fucking CRAZY holy shit

TL;DR - cramped spaces bad. npcs cute. starters rough. expect frame drops. will buy, will enjoy. i might prefer PLA

43 notes


Text

Hire Svelte Developers for Fast Web Apps

If your website or app is slow or bulky, chances are people won’t stick around. These days, speed matters. That’s where Svelte can make a big difference and why it’s a smart choice for modern web development.

At Capital Compute, we work with businesses that want to build fast, lightweight apps that just work, with no extra bloat and no unnecessary delays. Svelte helps us do exactly that. Unlike frameworks that ship a large runtime to the browser, Svelte compiles your components at build time, so most of the work is done before the code ever reaches the browser, which makes everything feel faster and smoother.

We’ve helped startups and growing teams build:

MVPs that are quick to launch

Scalable web apps that handle real traffic

Clean, modular components for existing projects

Our team focuses on writing solid code that performs well, loads quickly, and can grow with your business. You won’t get a messy codebase or clunky design, and we build for real users and real results.

Here’s why teams choose us:

✅ Apps that load fast and run smoothly

✅ Code that’s easy to maintain and scale

✅ Great user experience on all devices

✅ Friendly, skilled developers who understand your goals

If you're looking to build a better frontend or even just test an idea, we can help. Let’s build something fast, simple, and reliable.

Talk to our Svelte developers at Capital Compute

#Hire Svelte developers#Svelte development company#Capital Compute Svelte developers#Hire a Svelte developer for your startup#Best frontend developers for Svelte

2 notes


Text

Static Typing in Dynamic Languages: A Modern Safety Net

Traditionally, dynamic languages like Python and JavaScript traded compile-time type safety for development speed and flexibility. But today, optional static typing, via tools like TypeScript for JavaScript or Python’s typing module, brings the best of both worlds.

Static types improve code readability, tooling (like autocompletion), and catch potential errors early. They also make refactoring safer and large-scale collaboration easier.

TypeScript’s popularity showcases how adding types to JavaScript empowers developers to manage growing codebases with greater confidence. Similarly, using Python’s type hints with tools like mypy can improve code robustness without sacrificing Python’s simplicity.

For teams prioritizing long-term maintainability, adopting static typing early pays dividends. Organizations, including Software Development, advocate for using typing disciplines to future-proof projects without overcomplicating development.

Static typing is not about perfection; it’s about increasing predictability and easing future changes.

Start by adding types to critical parts of your codebase—public APIs, core data models, and utility libraries—before expanding to the entire project.

3 notes


Text

So I made an app for PROTO. Written in Kotlin and runs on Android.

Next, I want to upgrade it with a controller mode. It should work so that I simply plug a wired Xbox controller into my phone with a USB OTG adaptor… and bam, the phone does all the complex wireless communication and is a battery. Meaning that besides the controller, you only need the app and… any phone. Which anyone is rather likely to have. Done.

Now THAT is convenient!

( Warning, the rest of the post turned into... a few rants. ) Why Android? Well I dislike Android less than IOS

So it is it better to be crawling in front of the alter of "We are making the apocalypse happen" Google than "5 Chinese child workers died while you read this" Apple?

Not much…

I really should switch over to a better open source Linux distribution… But I do not have the willpower to research which one... So on Android I stay.

Kotlin is meant to be "Java, but better/more modern/more functional programming style" (Everyone realized a few years back that the 100% object-oriented programming paradigm is stupid as hell. And we already knew that about the functional programming paradigm. The best is a mix of everything, each used when it is the best option.) And for the most part, it succeeds. Java/Kotlin compiles its code down to "bytecode", which is essentially assembler but for the Java virtual machine. The virtual machine then runs the program. Like how JavaScript has the browser run it instead of compiling it to the specific machine you want it to run on… It makes them easy to port…

Except in the case of Kotlin on Android... there is not a snowflake's chance in hell that you can take your entire codebase and just run it on another Linux distribution, Windows or iOS…

So... you do it for the performance right? The upside of compiling directly to the machine is that it does not waste power on middle management layers… This is why C and C++ are so fast!

Except… Android is… Clunky… It relies on design ideas that require EVERY SINGLE PROGRAM AND APP ON YOUR PHONE to behave nicely (Lots of "This system only works if every single app uses it sparingly and does not screw the others over" paradigms.). And many distributions, from Motorola like mine for example, come with software YOU ARE NOT ALLOWED TO UNINSTALL... meaning that software on your phone is ALWAYS behaving badly. Because not a single person actually owns an Android phone. You own a brick of electronics that is worthless without its OS, and Google does not sell that to you or even gift it to you. You are renting it for free, forever. Same with Motorola, which added a few extra modifications onto Google's Android and then gave it to me.

That way, Google does not have to give any rights to its customers. So I cannot completely control what my phone does. Because it is not my phone. It is Google's phone.

That I am allowed to use. By the good graces of our corporate god emperors.

"Moose stares blankly into space trying to stop being permanently angry at how everyone is choosing to run the world"

… Ok that turned dark… Anywho. TLDR There is a better option for 95% of apps (Which is "A GUI that interfaces with a database") "Just write a single HTML document with internal CSS and Javascript" Usually simpler, MUCH easier and smaller… And now your app works on any computer with a browser. Meaning all of them…

I made a GUI for my parents recently that works exactly like that. Soo this post:

It was frankly a mistake of me to learn Kotlin… Even more so since it is an… awful language… Clearly good ideas then ruined by marketing department people yelling "SUPPORT EVERYTHING! AND USE ALL THE BUZZWORD TECHNOLOGY!" Like… If your language FORCES you to use exceptions for normal runtime behavior "Stares at CancellationException"... dear god that is horrible...

Made EVEN WORSE by being a really complicated way to re-invent the GOTO statement… You know... The thing every programmer is taught will eat your feet if you ever think about using it because it is SO dangerous, and SO bad form to use it? Yeah. It is that, hidden in a COMPLETELY WRONG WAY to use exceptions…

goodie… I swear to Christ, every page or two of my Kotlin notes has me ranting about how I learned how something works, and that it is terrible... Blaaa. But anyway, now that I know it, I try to keep it fresh in my mind and use it from time to time. Might as well. It IS possible to run certain things more efficiently than a web page, and you can work much more directly with the file system. It is... hard-ish to get a webpage to "load" a file automatically... But believe me, it is good that this is the case.

Anywho. How does the app work and what is the next version going to do?

PROTO is meant to be a platform I test OTHER systems on, so he is optimized for simplicity. So how you control him is by sending an HTTP/1.1 message of type text/plain… (This is a VERY fancy sounding way of saying "A string" in network speak). The string is 6 comma-separated numbers. Linear movement XYZ and angular movement XYZ.

The app is simply 5 buttons that each send an HTTP PUT request with fixed values. Specifically:

0.5/-0.5 meter/second linear (drive back or forward)
0.2/-0.2 radians/second angular (turn right or turn left)
Or all 0 for stop

(Yes, I just formatted normal text as code to make it more readable... I think I might be more infected by programming so much than I thought...)
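For readers who want to see that message format spelled out, here is a minimal sketch in Python (not the Kotlin from the actual app). Several things are assumptions rather than details from the post: the address `PROTO_URL` is made up, and so is the mapping of "forward" to positive linear X and "left" to positive angular Z. The code only builds the request; it does not send anything.

```python
from urllib.request import Request

# Hypothetical address: the post does not say where PROTO listens.
PROTO_URL = "http://192.168.0.10/"


def build_command(linear=(0.0, 0.0, 0.0), angular=(0.0, 0.0, 0.0)) -> str:
    """Return the 6 comma-separated numbers: linear XYZ, then angular XYZ."""
    values = [*linear, *angular]
    if len(values) != 6:
        raise ValueError("expected 3 linear and 3 angular values")
    return ",".join(str(float(v)) for v in values)


def build_request(body: str, url: str = PROTO_URL) -> Request:
    """Wrap the command string in an HTTP PUT request of type text/plain."""
    return Request(
        url,
        data=body.encode("ascii"),
        method="PUT",
        headers={"Content-Type": "text/plain"},
    )


# The five fixed button presets. Axis and sign choices are assumptions.
BUTTONS = {
    "forward": build_command(linear=(0.5, 0, 0)),
    "back": build_command(linear=(-0.5, 0, 0)),
    "left": build_command(angular=(0, 0, 0.2)),
    "right": build_command(angular=(0, 0, -0.2)),
    "stop": build_command(),
}
```

Sending a preset would then be something like `urllib.request.urlopen(build_request(BUTTONS["stop"]), timeout=2)`, with the real address swapped in.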

Aaaaaanywho. That must be enough ranting. Time to make the app

31 notes

Text

wikipedia has clearly been a huge success: corpus of information is huge, truly encyclopedia-like, it's a fairly reliable source of first-reference information, etc.

and yet, it feels like the dream of wikipedia never materialized: like so many of the projects built on the same ideas of collaborative, crowd-sourced work, it has not been able to overcome the fact that most people are not looking to contribute, be that because they are unwilling, unable, or just not used to the idea of this kind of collaboration.

just like open-source software or openstreetmap, it has become the domain of the few, which as it turns out comes with some pretty big risks.

and while programming is pretty clearly out of reach of most people given how much mental space you need to devote to all the moving pieces in a modern codebase, adding a bit to wikipedia feels like something most people should be able to do. After all, there are at the very least numerous examples of semi-public figures creating their own wikipedia page.

4 notes

Note

How do you cope with package management? I mostly use python where it's literally just a single command. It took me over an hour to work out in c++

I'm going to be really honest and say the language package/build pipeline ecosystem is the single worst thing about C++. Memory safety isn't that big of a deal in well managed projects that utilize modern C++, pointer arithmetic ain't that hard once you take your time to actually understand it, but the fucking mess that is the bizarre scene of package managers, build system setups and different ways of handling that between different codebases that rely on each other? Nightmarish, unsolvable, literally not possible to work around (see https://xkcd.com/927/).

What I'd recommend - look around at different repos for software you use and look how they structure their codebases, what buildsystems they use and see how it works for you! There isn't one-size-fits-all answer, unfortunately.

The way I program my own lil side projects, I use mainly CMake + CPM.cmake (a lil script that allows me to download github repos into my own source tree at a specific version and configure them for building) and make HEAVY use of CMake Presets.

As for what I'd recommend you try as a good starting point: Meson. It's great at getting you to a working program pretty fast and without much boilerplate.

Best of luck. There's a world of performant OOP goodness waiting.

3 notes

Note

ruo: modern c++

it's really not as bad as the reputation has it if you're in a codebase that uses things like lambdas, auto, smart pointers, and that avoids trying to be "clever" with templates

2 notes

Text

08.10.24

I downloaded Linux Mint 22 with the 'MATE' Desktop today.

https://www.linuxmint.com/download.php

Using Linux Mint Cinnamon's built-in USB writer, I wrote the ISO file to my 16 GB USB stick. The file size is 2.8 gigabytes for MATE.

Once this had completed, I then restarted the computer and from the boot screen, selected 'Start Linux Mint 22 MATE.'

The laptop booted into the live USB environment of Mint, where I could play around with it and at a later time choose to install it onto one of the hard disk partitions.

Linux Mint 22 MATE like the Cinnamon and XFCE desktop choices is based on Ubuntu 24.04, and features the MATE desktop 1.28 (2024).

https://mate-desktop.org/

MATE is based on the classic GNOME 2 codebase, which was a very stable and popular desktop environment between 2002 and 2011.

The screenshot shows how the panels can be re-arranged to match the 'traditional' Gnome 2 layout seen on Ubuntu and other Linux desktops. XViewer is the default image viewer on all Mint desktops.

The operating system also comes with a selection of modern and classic colourful themes.

Underneath is a screen capture of Linux Mint 7 featuring GNOME 2, which was released in 2009!

I will install it alongside Linux Mint 22 Cinnamon and Ubuntu 24.04!

4 notes

Text

Gemini Code Assist Enterprise: AI App Development Tool

Introducing Gemini Code Assist Enterprise’s AI-powered app development tool that allows for code customisation.

The modern economy is driven by software development. Unfortunately, due to a lack of skilled developers, a growing number of integrations, vendors, and abstraction levels, developing effective apps across the tech stack is difficult.

To expedite application delivery and stay competitive, IT leaders must provide their teams with AI-powered solutions that assist developers in navigating complexity.

Google Cloud thinks that the best way to address development challenges is to offer an AI-powered application development solution that works across the tech stack, along with enterprise-grade security guarantees, better contextual suggestions, and cloud integrations that let developers work more quickly and flexibly with a wider range of services.

Google Cloud is presenting Gemini Code Assist Enterprise, the next generation of application development capabilities.

Gemini Code Assist Enterprise goes beyond AI-powered coding assistance in the IDE. This is application development support at the enterprise level. Gemini’s large token context window supports deep local codebase awareness. With a wide context window, it can consider the details of your local codebase and ongoing development session, allowing you to generate or transform code that is better suited to your application.

With code customization, Code Assist Enterprise not only comprehends your local codebase but also provides code recommendations based on internal libraries and best practices within your company. As a result, Code Assist can produce personalized code recommendations that are more precise and pertinent to your company. Developers can complete difficult tasks like updating the Java version across a whole repository, remain in the flow state for longer, and bring more insights directly into their IDEs. Because of this, developers can concentrate on coming up with original solutions to problems, which increases job satisfaction and gives them a competitive advantage. You can also come to market more quickly.

GitLab.com and GitHub.com repos can be indexed by Gemini Code Assist Enterprise code customisation; support for self-hosted, on-premise repos and other source control systems will be added in early 2025.

Yet IDEs are not the only tool used to construct apps. Code Assist Enterprise integrates coding support into many of Google Cloud’s services to help specialist coders become more adaptable builders. A code assistant significantly decreases the time required to transition to new technologies, and it also integrates the subtleties of an organization’s coding standards into its recommendations. The more services it covers, the faster your builders can create and deliver applications. To meet developers where they are, Code Assist Enterprise provides coding assistance in Firebase, Databases, BigQuery, Colab Enterprise, Apigee, and Application Integration. Furthermore, each Gemini Code Assist Enterprise user can access these products’ features; they are not separate purchases.

Gemini Code Assist Enterprise users working in BigQuery can benefit from SQL and Python code assistance. With the creation of pre-validated, ready-to-run queries (data insights) and a natural language-based interface for data exploration, curation, wrangling, analysis, and visualization (data canvas), they can enhance their data journeys beyond editor-based code assistance and speed up their analytics workflows.

Furthermore, Code Assist Enterprise does not use the proprietary data from your firm to train the Gemini model, since security and privacy are of utmost importance to any business. Source code that is stored for use in code customization is kept in a Google Cloud-managed project, isolated for each customer’s organization. Clients are in complete control of which source repositories to utilize for customization, and they can delete all data at any moment.

Your company and data are safeguarded by Google Cloud’s dedication to enterprise readiness, data governance, and security. This is demonstrated by projects like software supply chain security, Mandiant research, and purpose-built infrastructure, as well as by generative AI indemnification.

Google Cloud provides you with the greatest tools for AI coding support so that your engineers may work happily and effectively. The market is also paying attention. Because of its ability to execute and completeness of vision, Google Cloud has been ranked as a Leader in the Gartner Magic Quadrant for AI Code Assistants for 2024.

Gemini Code Assist Enterprise Costs

In general, Gemini Code Assist Enterprise costs $45 per month per user; however, a one-year membership that ends on March 31, 2025, will only cost $19 per month per user.

Read more on Govindhtech.com

#Gemini#GeminiCodeAssist#AIApp#AI#AICodeAssistants#CodeAssistEnterprise#BigQuery#Geminimodel#News#Technews#TechnologyNews#Technologytrends#Govindhtech#technology

3 notes

Text

Front-End Development: Building the Interface of the Future

Front-end development is at the heart of creating user-friendly and visually appealing websites. It involves translating designs into code and ensuring that web applications are responsive and interactive. In this article, we explore the key aspects of front-end development, essential skills, and emerging trends in the field.

What is Front-End Development?

Front-end development focuses on the user interface (UI) and user experience (UX) aspects of web development. It involves creating the part of the website that users see and interact with, using a combination of HTML, CSS, and JavaScript.

Core Technologies

HTML (HyperText Markup Language): HTML is the foundation of web pages, defining the structure and content, such as headings, paragraphs, and images.

CSS (Cascading Style Sheets): CSS is used to style and layout web pages, controlling aspects like colors, fonts, and spacing to create an attractive and consistent look.

JavaScript: JavaScript adds interactivity and dynamic content to web pages, enabling features like form validation, animations, and user input handling.

Popular Frameworks and Libraries

React: A JavaScript library for building fast and dynamic user interfaces, particularly single-page applications.

Angular: A comprehensive framework for building large-scale applications with a structured and modular approach.

Vue.js: A flexible framework that is easy to integrate into projects and focuses on the view layer of applications.

The Role of a Front-End Developer

Turning Designs into Code

Front-end developers take designs created by UI/UX designers and turn them into code. This involves creating HTML for structure, CSS for styling, and JavaScript for functionality, ensuring the design is faithfully implemented and functional across various devices and browsers.

Ensuring Responsiveness

With the growing use of mobile devices, it’s crucial that websites work well on screens of all sizes. Front-end developers ensure that web applications are responsive, meaning they adapt smoothly to different screen resolutions and orientations.

Optimizing Performance

Performance optimization is key in front-end development. Developers reduce file sizes, minimize load times, and implement lazy loading for images and videos to enhance the user experience.

Maintaining Cross-Browser Compatibility

A successful front-end developer ensures that web applications work consistently across different browsers. This involves testing and resolving compatibility issues to provide a uniform experience.

Implementing Accessibility

Making web content accessible to people with disabilities is a critical aspect of front-end development. Developers adhere to accessibility standards and best practices to ensure that everyone can use the website effectively.

Essential Skills for Front-End Developers

Mastery of Core Technologies

Proficiency in HTML, CSS, and JavaScript is fundamental. Front-end developers must be able to write clean, efficient code that is both maintainable and scalable.

Familiarity with Modern Frameworks

Knowledge of modern frameworks like React, Angular, and Vue.js is crucial for building contemporary web applications. These tools facilitate the creation of complex, dynamic interfaces.

Version Control with Git

Version control systems like Git are essential for tracking changes in the codebase and collaborating with other developers. Mastery of Git allows for efficient project management and collaboration.

Understanding of UX/UI Design

An understanding of UX/UI principles helps developers create user-friendly and aesthetically pleasing interfaces. This includes knowledge of user behavior, usability testing, and design basics.

Problem-Solving and Debugging

Front-end development often involves troubleshooting issues related to layout, functionality, and performance. Strong problem-solving skills are essential to identify and resolve these challenges efficiently.

Emerging Trends in Front-End Development

Progressive Web Apps (PWAs)

PWAs combine the best features of web and mobile applications, offering fast loading times, offline capabilities, and push notifications. They provide a native app-like experience within the browser.

WebAssembly

WebAssembly allows developers to run high-performance code in web browsers. It enables complex applications like games and video editors to run efficiently on the web, expanding the possibilities of front-end development.

Server-Side Rendering (SSR)

Server-side rendering improves the loading speed of web pages and enhances SEO. Frameworks like Next.js (for React) facilitate SSR, making it easier to build fast and search-friendly applications.

Single Page Applications (SPAs)

SPAs load a single HTML page and dynamically update the content as users interact with the application. This approach provides a smoother user experience, similar to that of a desktop application.

Component-Based Development

Modern frameworks emphasize component-based architecture, where UI elements are built as reusable components. This modular approach enhances maintainability and scalability.

AI and Machine Learning Integration

Integrating AI and machine learning into front-end development enables the creation of smarter, more personalized applications. Features like chatbots, recommendation engines, and voice recognition can significantly enhance user engagement.

#FrontEndDevelopment#WebDevelopment#UIUXDesign#HTML#CSS#JavaScript#ReactJS#Angular#VueJS#ResponsiveDesign#WebDesign#UserExperience#WebPerformance#WebAccessibility#SinglePageApplication#ProgressiveWebApp#WebDevelopmentTrends#ModernWebDev#FrontendFrameworks#CodeNewbie#LearnToCode#WebDevCommunity#CodingLife#TechTrends#WebComponents#WebAssembly#ServerSideRendering#DigitalDesign#UIComponents#WebOptimization

3 notes

Text

The Role of Microservices In Modern Software Architecture

Are you ready to dive into the exciting world of microservices and discover how they are revolutionizing modern software architecture? In today’s rapidly evolving digital landscape, businesses are constantly seeking ways to build more scalable, flexible, and resilient applications. Enter microservices – a groundbreaking approach that allows developers to break down monolithic systems into smaller, independent components. Join us as we unravel the role of microservices in shaping the future of software design and explore their immense potential for transforming your organization’s technology stack. Buckle up for an enlightening journey through the intricacies of this game-changing architectural style!

Introduction To Microservices And Software Architecture

In today’s rapidly evolving technological landscape, software architecture has become a crucial aspect for businesses looking to stay competitive. As companies strive for faster delivery of high-quality software, the traditional monolithic architecture has proved to be limiting and inefficient. This is where microservices come into play.

Microservices are an architectural approach that involves breaking down large, complex applications into smaller, independent services that can communicate with each other through APIs. These services are self-contained and can be deployed and updated independently without affecting the entire application.

Software architecture, on the other hand, refers to the overall design of a software system, including its components, the relationships between them, and their interactions. It provides a blueprint for building scalable, maintainable and robust applications.

So how do microservices fit into the world of software architecture? Let’s delve deeper into this topic by understanding the fundamentals of both microservices and software architecture.

As mentioned earlier, microservices are small independent services that work together to form a larger application. Each service performs a specific business function and runs as an autonomous process. These services can be developed in different programming languages or frameworks based on what best suits their purpose.

The concept of microservices originated from Service-Oriented Architecture (SOA). However, unlike SOA which tends to have larger services with complex interconnections, microservices follow the principle of single responsibility – meaning each service should only perform one task or function.

Evolution Of Software Architecture: From Monolithic To Microservices

Software architecture has evolved significantly over the years, from traditional monolithic architectures to more modern and agile microservices architectures. This evolution has been driven by the need for more flexible, scalable, and efficient software systems. In this section, we will explore the journey of software architecture from monolithic to microservices and how it has transformed the way modern software is built.

Monolithic Architecture:

In a monolithic architecture, all components of an application are tightly coupled together into a single codebase. This means that any changes made to one part of the code can potentially impact other parts of the application. Monolithic applications are usually large and complex, making them difficult to maintain and scale.

One of the main drawbacks of monolithic architecture is its lack of flexibility. The entire application needs to be redeployed whenever a change or update is made, which can result in downtime and disruption for users. This makes it challenging for businesses to respond quickly to changing market needs.

The Rise of Microservices:

To overcome these limitations, software architects started exploring new ways of building applications that were more flexible and scalable. Microservices emerged as a solution to these challenges in software development.

Microservices architecture decomposes an application into smaller independent services that communicate with each other through well-defined APIs. Each service is responsible for a specific business function or feature and can be developed, deployed, and scaled independently without affecting other services.

Advantages Of Using Microservices In Modern Software Development

Microservices have gained immense popularity in recent years, and for good reason. They offer numerous advantages over traditional monolithic software development approaches, making them a highly sought-after approach in modern software architecture.

1. Scalability: One of the key advantages of using microservices is their ability to scale independently. In a monolithic system, any changes or updates made to one component can potentially affect the entire application, making it difficult to scale specific functionalities as needed. However, with microservices, each service is developed and deployed independently, allowing for easier scalability and flexibility.

2. Improved Fault Isolation: In a monolithic architecture, a single error or bug can bring down the entire system. This makes troubleshooting and debugging a time-consuming and challenging process. With microservices, each service operates independently from others, which means that if one service fails or experiences issues, it will not impact the functioning of other services. This enables developers to quickly identify and resolve issues without affecting the overall system.

3. Faster Development: Microservices promote faster development cycles because they allow developers to work on different services concurrently without disrupting each other’s work. Moreover, since services are smaller in size compared to monoliths, they are easier to understand and maintain which results in reduced development time.

4. Technology Diversity: Monolithic systems often rely on a single technology stack for all components of the application. This can be limiting when new technologies emerge or when certain functionalities require specialized tools or languages that may not be compatible with the existing stack. In contrast, microservices allow for a diverse range of technologies to be used for different services, providing more flexibility and adaptability.

5. Easy Deployment: Microservices are designed to be deployed independently, which means that updates or changes to one service can be rolled out without affecting the entire system. This makes deployments faster and less risky compared to monolithic architectures, where any changes require the entire application to be redeployed.

6. Better Fault Tolerance: In a monolithic architecture, a single point of failure can bring down the entire system. With microservices, failures are isolated to individual services, which means that even if one service fails, the rest of the system can continue functioning. This improves overall fault tolerance in the application.

7. Improved Team Productivity: Microservices promote a modular approach to software development, allowing teams to work on specific services without needing to understand every aspect of the application. This leads to improved productivity as developers can focus on their areas of expertise and make independent decisions about their service without worrying about how it will affect other parts of the system.

Challenges And Limitations Of Microservices

As with any technology or approach, there are both challenges and limitations to implementing microservices in modern software architecture. While the benefits of this architectural style are numerous, it is important to be aware of these potential obstacles in order to effectively navigate them.

1. Complexity: One of the main challenges of microservices is their inherent complexity. When a system is broken down into smaller, independent services, it becomes more difficult to manage and understand as a whole. This can lead to increased overhead and maintenance costs, as well as potential performance issues if not properly designed and implemented.

2. Distributed Systems Management: Microservices by nature are distributed systems, meaning that each service may be running on different servers or even in different geographical locations. This introduces new challenges for managing and monitoring the system as a whole. It also adds an extra layer of complexity when troubleshooting issues that span multiple services.

3. Communication Between Services: In order for microservices to function effectively, they must be able to communicate with one another seamlessly. This requires robust communication protocols and mechanisms such as APIs or messaging systems. However, setting up and maintaining these connections can be time-consuming and error-prone.

4. Data Consistency: In a traditional monolithic architecture, data consistency is relatively straightforward since all components access the same database instance. In contrast, microservices often have their own databases which can lead to data consistency issues if not carefully managed through proper synchronization techniques.

Best Practices For Implementing Microservices In Your Project

Implementing microservices in your project can bring a multitude of benefits, such as increased scalability, flexibility and faster development cycles. However, it is also important to ensure that the implementation is done correctly in order to fully reap these benefits. In this section, we will discuss some best practices for implementing microservices in your project.

1. Define clear boundaries and responsibilities: One of the key principles of microservices architecture is the idea of breaking down a larger application into smaller independent services. It is crucial to clearly define the boundaries and responsibilities of each service to avoid overlap or duplication of functionality. This can be achieved by using techniques like domain-driven design or event storming to identify distinct business domains and their respective services.

2. Choose appropriate communication protocols: Microservices communicate with each other through APIs, so it is important to carefully consider which protocols to use for these interactions. RESTful APIs are popular due to their simplicity and compatibility with different programming languages. Alternatively, you may choose messaging-based options such as AMQP brokers or Apache Kafka for asynchronous communication between services.

3. Ensure fault tolerance: In a distributed system like microservices architecture, failures are inevitable. Therefore, it is important to design for fault tolerance by implementing strategies such as circuit breakers and retries. These mechanisms help prevent cascading failures and improve overall system resilience.
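To make the circuit breaker and retry ideas from the last point more concrete, here is a minimal Python sketch. It is an illustration under simplifying assumptions (single-threaded use, a fixed failure threshold, one trial call after the cool-down), not a production implementation; the names `CircuitBreaker`, `CircuitOpenError`, and `call_with_retries` are made up for this example.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker refuses a call without trying the service."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit is open")
            # Cool-down elapsed: let one trial call through (half-open).
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result


def call_with_retries(breaker: CircuitBreaker, func: Callable[[], T],
                      attempts: int = 3, base_delay: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry with exponential backoff, but stop as soon as the circuit opens."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            raise  # do not keep hammering a service the breaker gave up on
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")
```

The retry loop deliberately gives up once the breaker opens: retries handle brief glitches, while the breaker stops a struggling service from being flooded, which is how the two mechanisms together limit cascading failures.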

Real-Life Examples Of Successful Implementation Of Microservices

Microservices have gained immense popularity in recent years due to their ability to improve the scalability, flexibility, and agility of software systems. Many organizations across various industries have successfully implemented microservices architecture in their applications, resulting in significant benefits. In this section, we will explore real-life examples of successful implementation of microservices and how they have revolutionized modern software architecture.

1. Netflix: Netflix is a leading streaming service that has disrupted the entertainment industry with its vast collection of movies and TV shows. The company’s success can be attributed to its adoption of microservices architecture. Initially, Netflix had a monolithic application that was becoming difficult to scale and maintain as the user base grew rapidly. To overcome these challenges, they broke down their application into smaller independent services following the microservices approach.

Each service at Netflix has a specific function such as search, recommendations, or video playback. These services can be developed independently, enabling faster deployment and updates without affecting other parts of the system. This also allows for easier scaling based on demand by adding more instances of the required services. With microservices, Netflix has improved its uptime and performance while keeping costs low.

The Future Of Microservices In Software Architecture

The concept of microservices has been gaining traction in the world of software architecture in recent years. This approach to building applications involves breaking down a monolithic system into smaller, independent services that communicate with each other through well-defined APIs. The benefits of this architecture include increased flexibility, scalability, and resilience.

But what does the future hold for microservices? In this section, we will explore some potential developments and trends that could shape the future of microservices in software architecture.

1. Rise of Serverless Architecture

As organizations continue to move towards cloud-based solutions, serverless architecture is becoming increasingly popular. The code still runs on servers, but the cloud provider provisions, scales, and maintains them, so developers can deploy their code directly onto a platform such as Amazon Web Services (AWS) or Microsoft Azure without managing infrastructure themselves.

Microservices are a natural fit for serverless architecture as they already follow a distributed model. With serverless, each microservice can be deployed independently, making it easier to scale individual components without affecting the entire system. As serverless continues to grow in popularity, we can expect to see more widespread adoption of microservices.
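As a rough sketch of what one independently deployed serverless microservice might look like, the function below follows the `handler(event, context)` shape used by AWS Lambda's Python runtime and assumes an API Gateway proxy-style event. The recommendations data, the `user` query parameter, and the service itself are hypothetical and exist only for illustration.

```python
import json

# Hypothetical in-memory data; a real service would own its own datastore.
RECOMMENDATIONS = {"alice": ["Title A", "Title B"]}


def handler(event, context):
    """Entry point for a single-purpose 'recommendations' service (assumed name)."""
    # Proxy-style events carry query parameters here; the value may be None.
    params = event.get("queryStringParameters") or {}
    user = params.get("user")
    if not user:
        return {
            "statusCode": 400,
            "body": json.dumps({"error": "missing 'user' parameter"}),
        }
    items = RECOMMENDATIONS.get(user, [])
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"user": user, "items": items}),
    }
```

Because the whole service is one small function with its own deployment, the platform can scale it separately from, say, search or playback, which is the property the paragraph above describes.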

2. Increased Adoption of Containerization

Containerization technology such as Docker has revolutionized how applications are deployed and managed. Containers provide an isolated environment for each service, making it easier to package and deploy them anywhere without worrying about compatibility issues.

Conclusion

As we have seen throughout this article, microservices offer a number of benefits in terms of scalability, flexibility, and efficiency in modern software architecture. However, it is important to consider carefully whether microservices are right for your specific project.

First and foremost, it is crucial to understand the complexity that comes with implementing a microservices architecture. While it offers many advantages, it also introduces new challenges such as increased communication overhead and the need for specialized tools and processes. Therefore, if your project does not require a high level of scalability or if you do not have a team with sufficient expertise to manage these complexities, using a monolithic architecture may be more suitable.

